Fireground Recognition and Spatio-Temporal Scalability Research Based on ICESat-2/ATLAS Vertical Structure Parameters

Abstract

1. Introduction

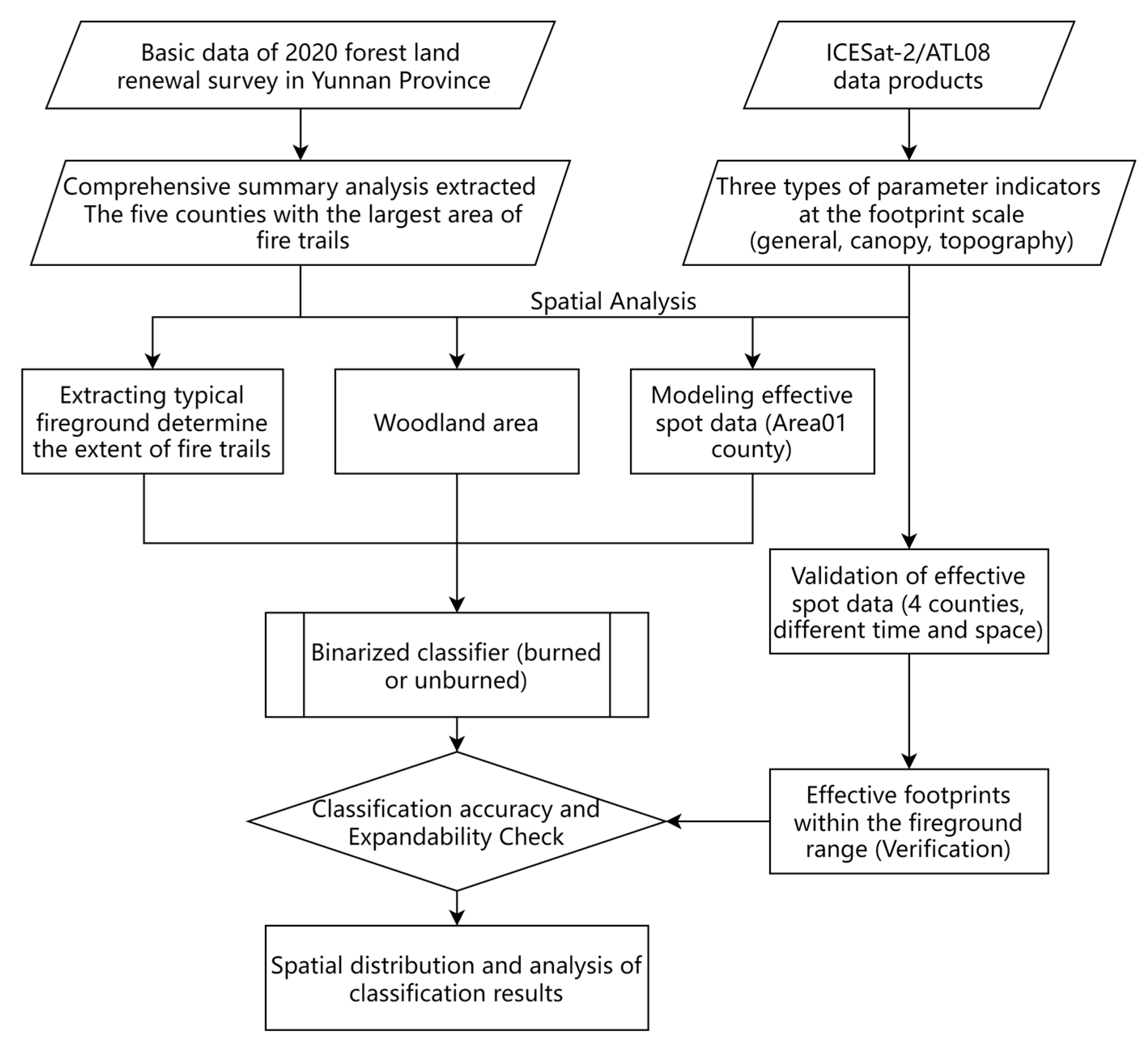

2. Materials and Methods

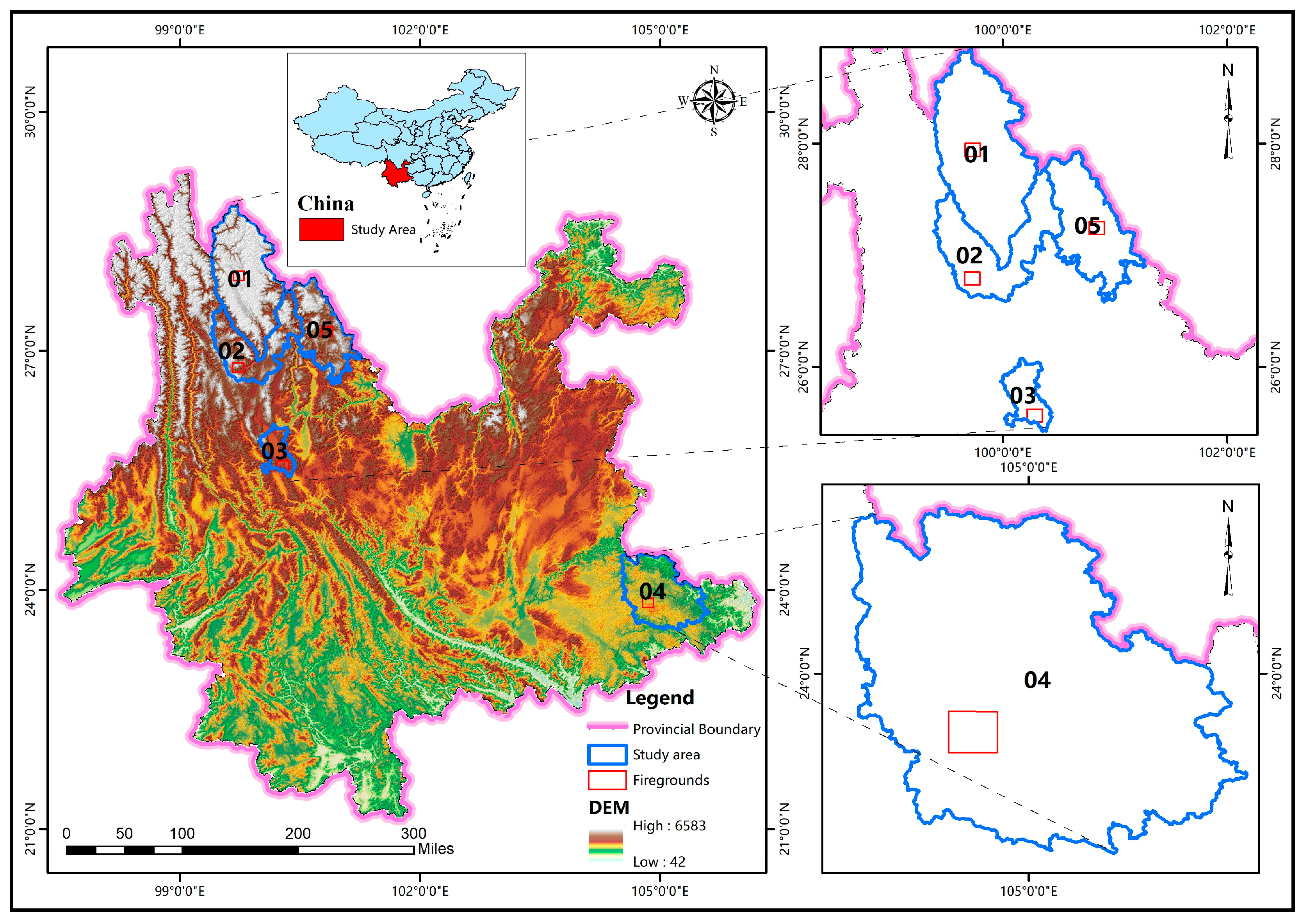

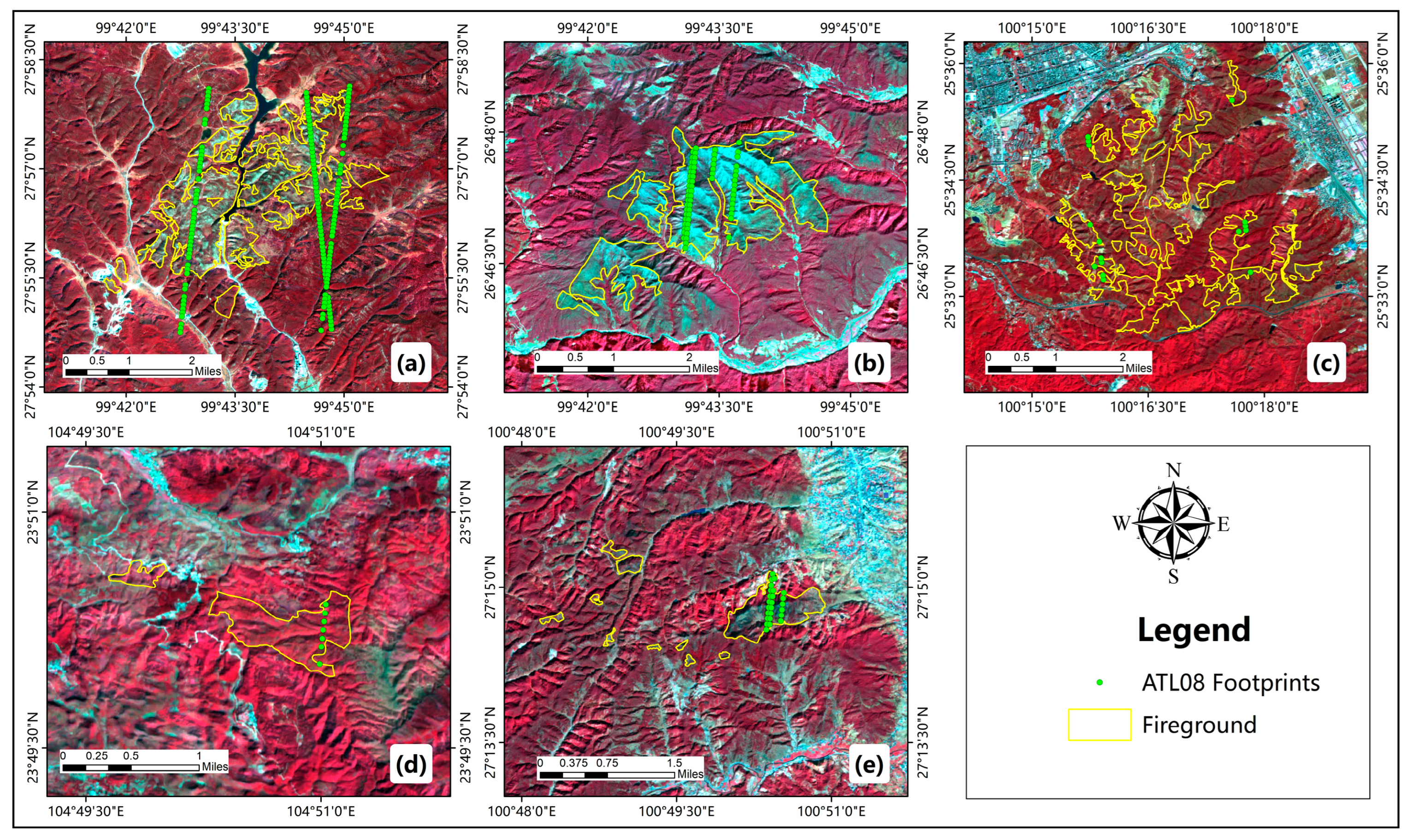

2.1. Study Area

2.2. Data

2.2.1. ICESat-2 Data Products

2.2.2. Sentinel-2 MSI

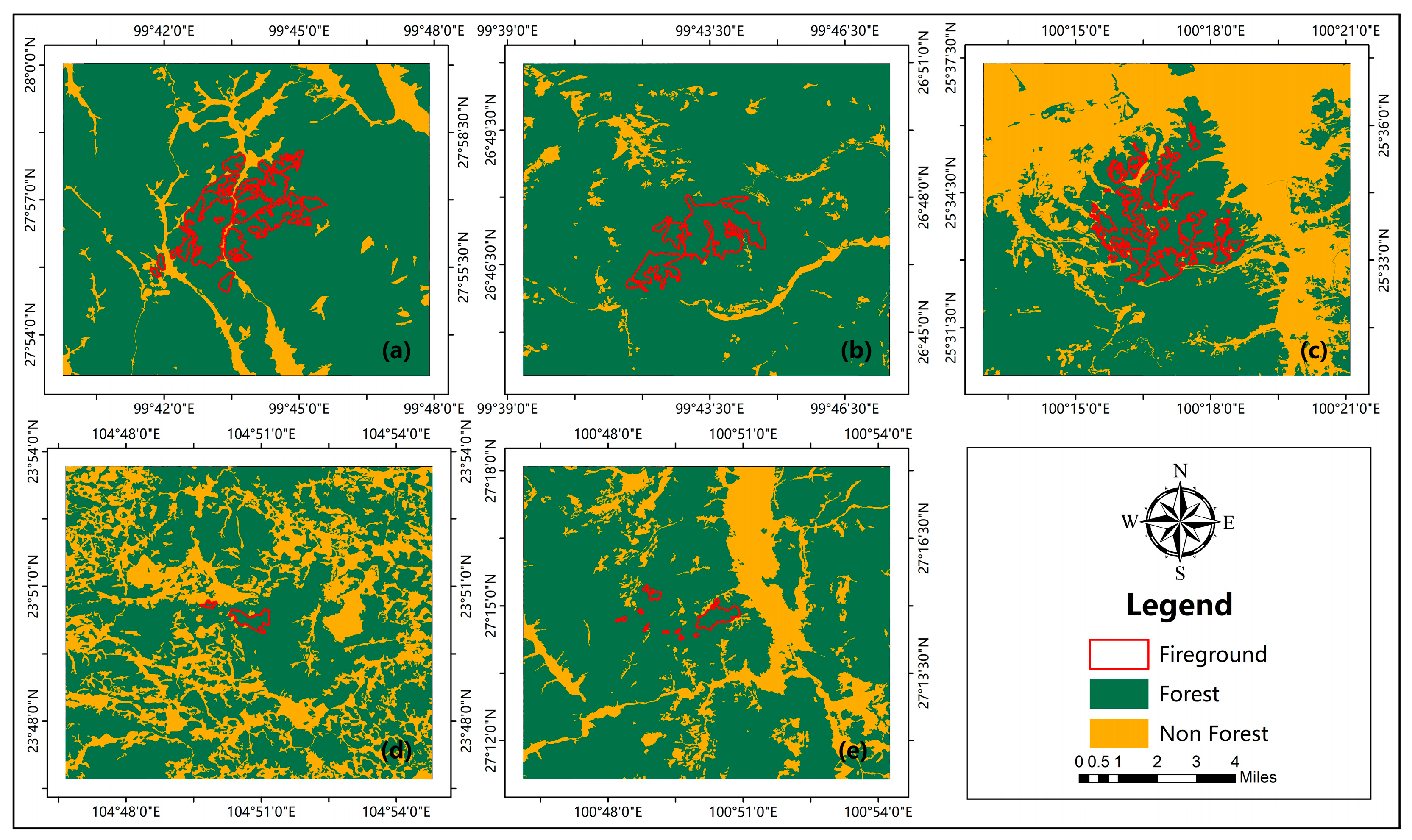

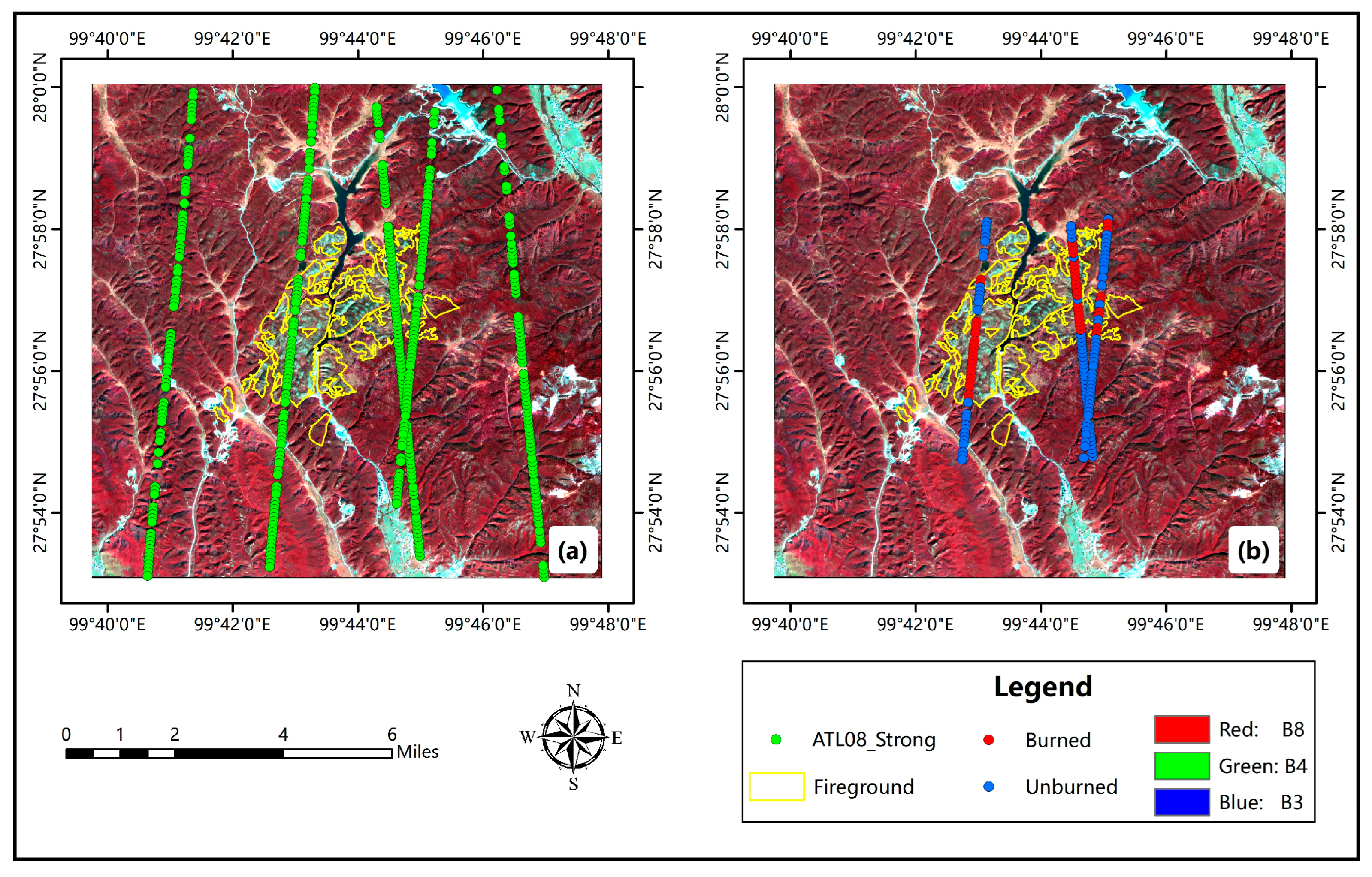

2.3. Determine the Burned in Woodland Based on ATLAS Photon Spots

2.4. ATLAS Fireground Recognition Classifier Construction

2.4.1. RF Algorithm Classifier

2.4.2. XGBoost Algorithm Classifier

2.4.3. Accuracy Assessment Criteria

3. Results

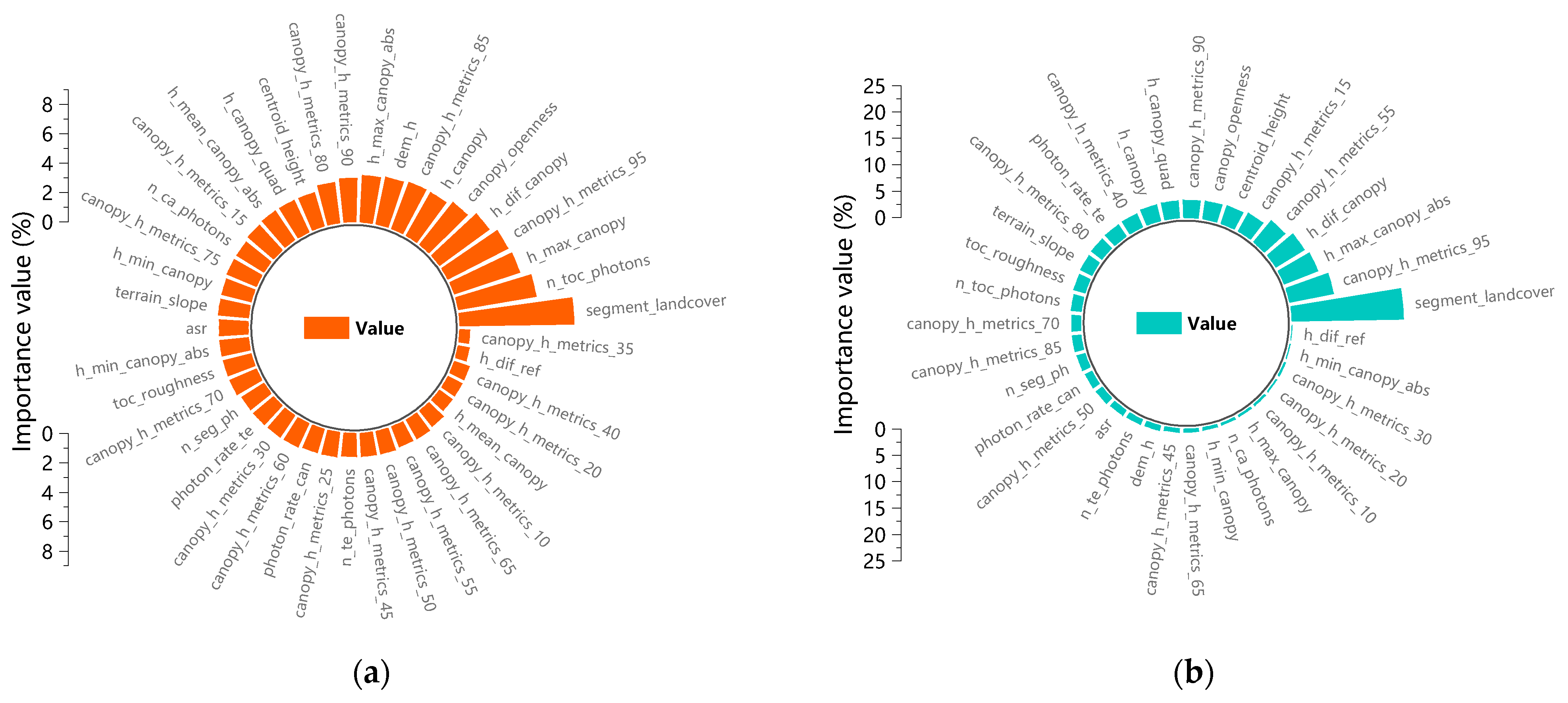

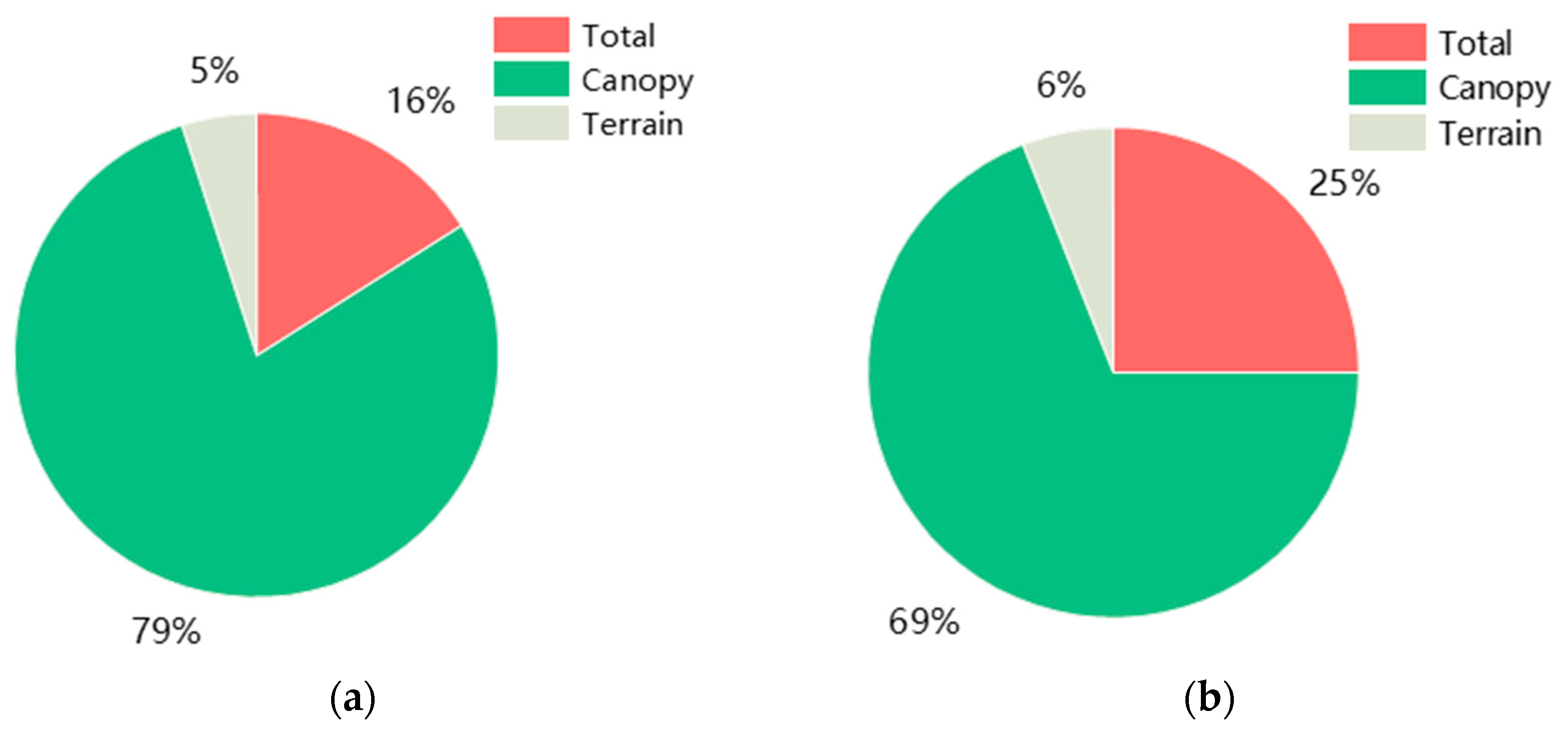

3.1. Analysis of the Contribution of ATLAS Parameters

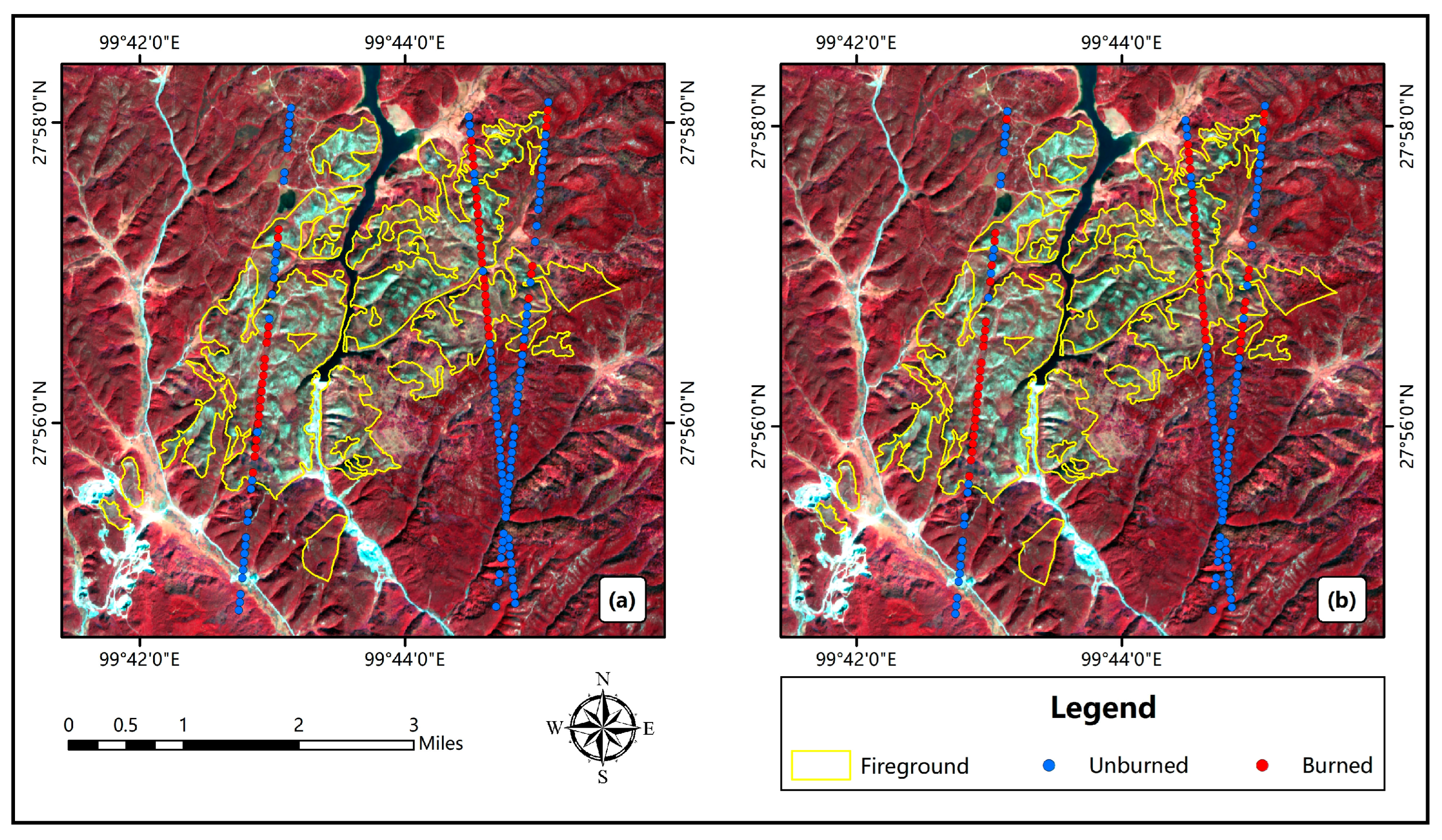

3.2. Analysis of Recognition Capabilities of ATLAS

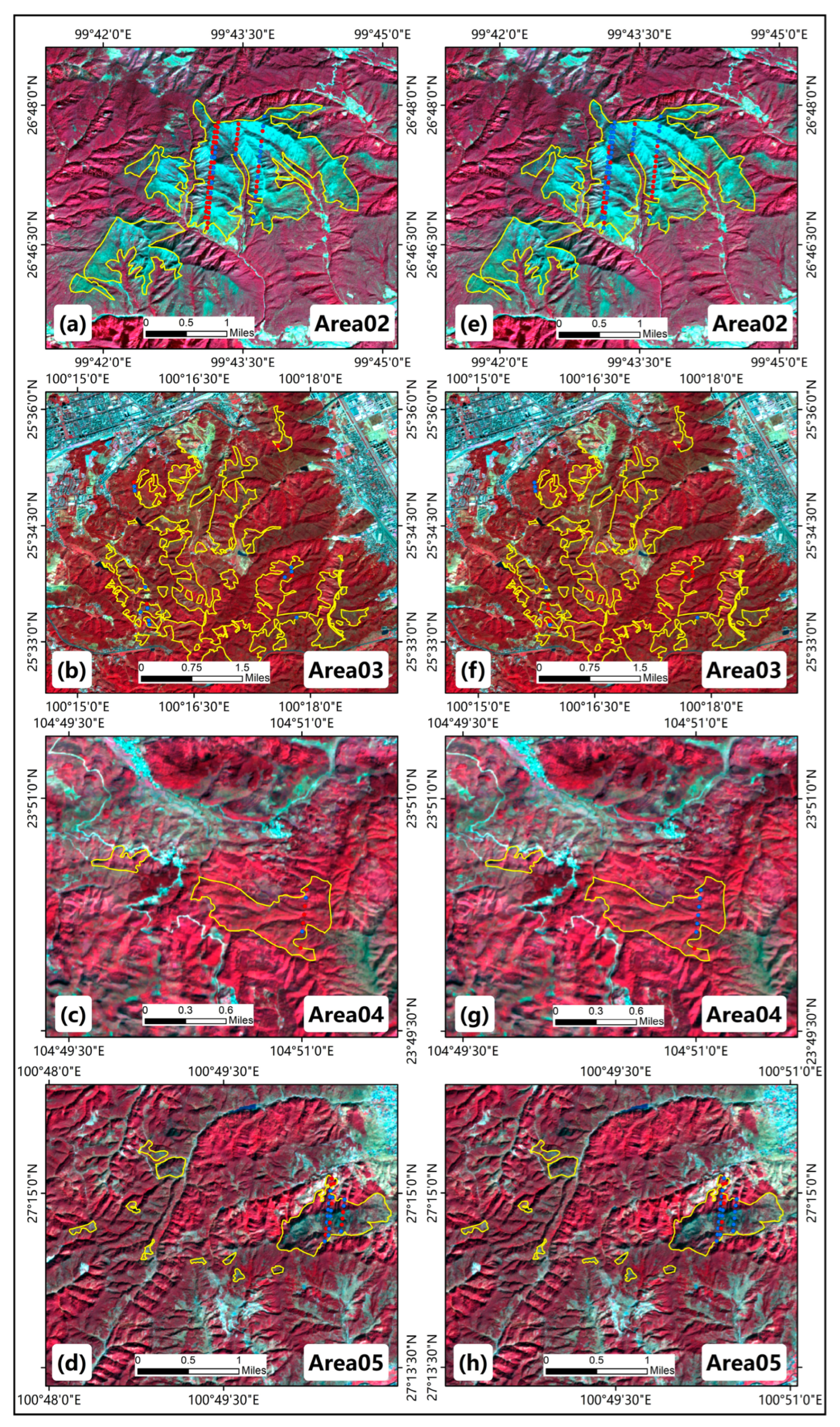

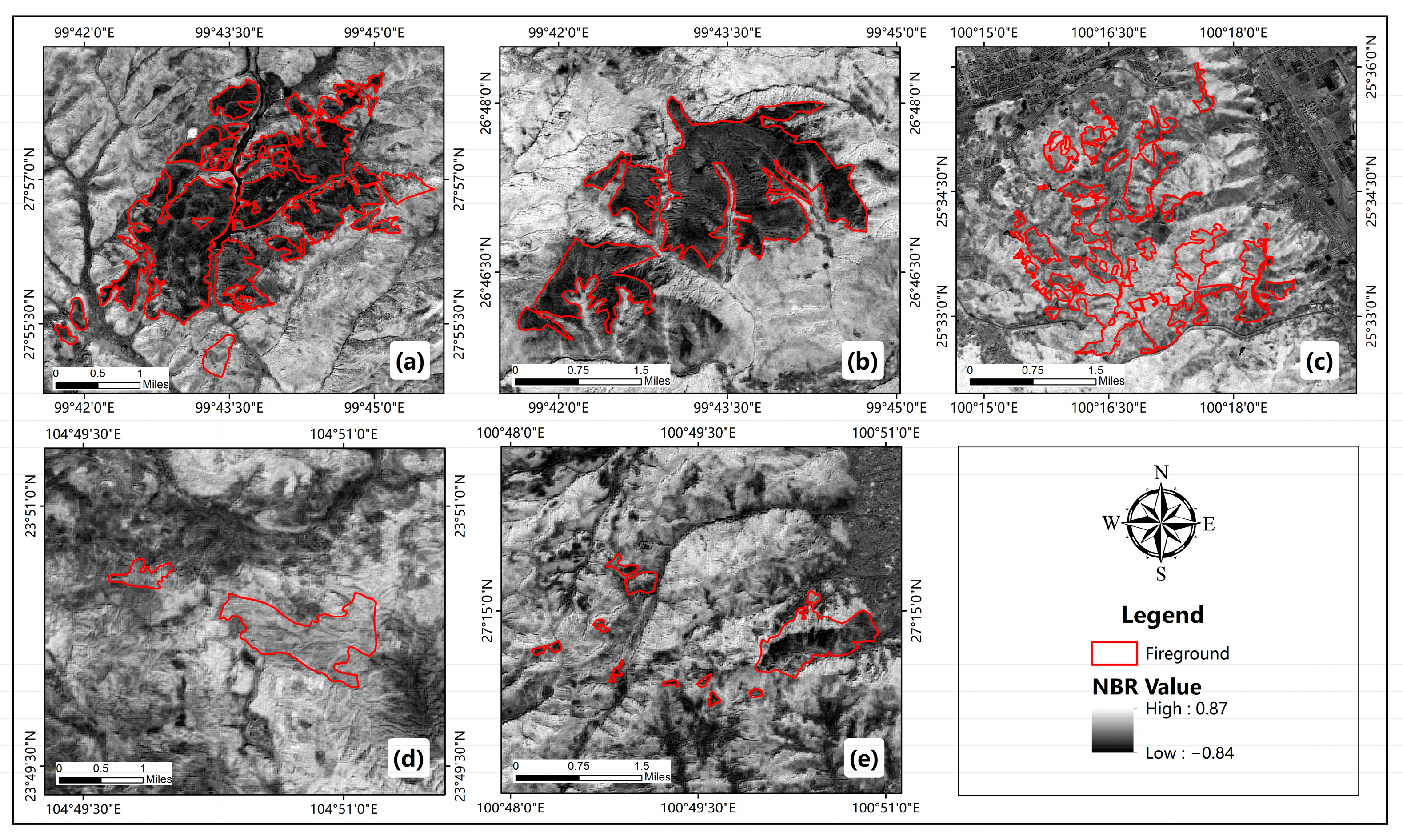

3.3. Analysis of the Spatial and Temporal Scalability of ATLAS Recognition of Firegrounds

4. Discussion

4.1. Effect of Different Burn Levels on Classification Accuracy

4.2. Limitations and Prospects

5. Conclusions

- (1) The parameter segment_landcover contributed the most to both the RF and XGBoost classifiers built on data from the satellite-based photon-counting LiDAR ATLAS, with contribution rates of 7.8% and 21.2%, respectively. Parameters of the canopy type, such as the canopy photon count, canopy openness, and 95% quantile canopy height, had relatively high contribution rates in both classifiers, whereas parameters of the terrain and total types contributed relatively little. Identifying firegrounds from ICESat-2/ATLAS vertical structure parameters is therefore feasible.

- (2) Under 10-fold cross-validation, the XGBoost classifier built on ATLAS vertical structure parameters in Shangri-La City achieved a higher overall recognition accuracy than the RF classifier (cross-validation accuracy of 0.830 versus 0.728). When tested with independent test samples, both classifiers scored above 0.8 on all evaluation metrics, so both can be used for fireground recognition. The misclassified spots are mainly concentrated in gully areas with complex terrain, which suggests that terrain may affect the photon-counting LiDAR measurements.

- (3) Across firegrounds in different locations and periods, the RF classifier based on ATLAS vertical structure parameters generally outperforms the XGBoost classifier, and its recognition performance is more stable. For areas with moderate fire severity, the two classifiers can be combined to complement each other. For lightly burned areas, the XGBoost classifier significantly outperforms the RF classifier.
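The 10-fold cross-validation mentioned in conclusion (2) can be illustrated with a minimal, library-free sketch. It is not the authors' implementation: the function names `k_fold_indices` and `cross_validate`, the shuffling seed, and the `fit`/`score` callables are all hypothetical and stand in for whichever classifier (RF or XGBoost) and metric are actually used.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, val_idx) index lists for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once, reproducibly
    # Spread the remainder so that fold sizes differ by at most one sample.
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


def cross_validate(fit, score, X, y, k=10, seed=0):
    """Return the mean validation score over k folds.

    fit(X_train, y_train) -> model and score(model, X_val, y_val) -> float
    are hypothetical callables supplied by the caller.
    """
    scores = []
    for train_idx, val_idx in k_fold_indices(len(X), k, seed):
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        scores.append(score(model,
                            [X[i] for i in val_idx],
                            [y[i] for i in val_idx]))
    return sum(scores) / len(scores)
```

Each sample is used for validation exactly once, so the averaged score reflects performance on data the model did not see during fitting, unlike the training-set score.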

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Type | Name | Description | Path |
|---|---|---|---|
| Total | dem_h | Reference DEM elevation. | */dem_h |
| Total | h_dif_ref | Difference between h_te_median and dem_h. | */h_dif_ref |
| Total | latitude | Latitude of each received photon. | */lat_ph |
| Total | longitude | Longitude of each received photon. | */lon_ph |
| Total | n_seg_ph | Number of photons within each land segment. | */n_seg_ph |
| Total | segment_landcover | Reference landcover for the segment, derived from the best global landcover product available. | */segment_landcover |
| Total | asr | Apparent surface reflectance. | */asr |
| Canopy | canopy_openness | STD of relative heights for all photons classified as canopy photons within the segment, providing an inference of canopy openness. | */canopy/canopy_openness |
| Canopy | canopy_h_metrics | Relative canopy height metrics calculated at percentiles from 10% to 95% in 5% steps. | */canopy/canopy_h_metrics |
| Canopy | centroid_height | Absolute height above the reference ellipsoid associated with the centroid of all signal photons. | */canopy/centroid_height |
| Canopy | h_canopy | 98% height of all the individual relative canopy heights. | */canopy/h_canopy |
| Canopy | h_canopy_quad | Quadratic mean canopy height. | */canopy/h_canopy_quad |
| Canopy | h_dif_canopy | Difference between h_canopy and canopy_h_metrics. | */canopy/h_dif_canopy |
| Canopy | h_max_canopy | Maximum of individual relative canopy heights within the segment. | */canopy/h_max_canopy |
| Canopy | h_max_canopy_abs | Maximum of individual absolute canopy heights within the segment. | */canopy/h_max_canopy_abs |
| Canopy | h_mean_canopy | Mean of individual relative canopy heights within the segment. | */canopy/h_mean_canopy |
| Canopy | h_mean_canopy_abs | Mean of individual absolute canopy heights within the segment. | */canopy/h_mean_canopy_abs |
| Canopy | h_min_canopy | Minimum of individual relative canopy heights within the segment. | */canopy/h_min_canopy |
| Canopy | h_min_canopy_abs | Minimum of individual absolute canopy heights within the segment. | */canopy/h_min_canopy_abs |
| Canopy | n_ca_photons | Number of canopy photons within the segment. | */canopy/n_ca_photons |
| Canopy | n_toc_photons | Number of top-of-canopy photons within the segment. | */canopy/n_toc_photons |
| Canopy | photon_rate_can | Photon rate of canopy photons within each segment. | */canopy/photon_rate_can |
| Canopy | toc_roughness | STD of relative heights of all photons classified as top of canopy within the segment. | */canopy/toc_roughness |
| Terrain | n_te_photons | Number of classified terrain photons in the segment. | */terrain/n_te_photons |
| Terrain | photon_rate_te | Calculated photon rate for ground photons within each segment. | */terrain/photon_rate_te |
| Terrain | terrain_slope | Slope of terrain within the segment. | */terrain/terrain_slope |
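The `*/` prefix in the Path column is a placeholder. The sketch below shows one way such a pattern could be expanded into concrete HDF5 dataset paths, under two assumptions that the table itself does not state: that `*` stands for one of the six ICESat-2 ground-track groups (`gt1l` to `gt3r`), and that the parameters sit under a `land_segments` group as in the ATL08 file layout. The helper name `expand_path` is hypothetical, and the group is left as an argument because photon-level names such as `lat_ph` and `lon_ph` may live under a different group.

```python
# Ground-track group names used in ICESat-2 granules (three beam pairs).
BEAMS = ("gt1l", "gt1r", "gt2l", "gt2r", "gt3l", "gt3r")


def expand_path(pattern, beams=BEAMS, group="land_segments"):
    """Expand a '*/...' table path into one dataset path per beam.

    The '/<beam>/<group>/' prefix is an assumption about the file layout,
    not something stated in the parameter table.
    """
    if not pattern.startswith("*/"):
        raise ValueError("pattern must start with '*/'")
    tail = pattern[2:]
    return [f"/{beam}/{group}/{tail}" for beam in beams]


if __name__ == "__main__":
    for path in expand_path("*/canopy/h_canopy"):
        print(path)
```

The resulting strings could then be passed to an HDF5 reader of choice; no specific reader is assumed here.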

| Sentinel-2 MSI Band | Resolution | Center Wavelength | Wavelength Range (Lower–Upper) |
|---|---|---|---|
| B2 | 10 m | 496.6 nm (S2A)/492.1 nm (S2B) | 0.439–0.535 µm |
| B3 | 10 m | 560.0 nm (S2A)/559.0 nm (S2B) | 0.537–0.582 µm |
| B4 | 10 m | 664.5 nm (S2A)/665.0 nm (S2B) | 0.646–0.685 µm |
| B8 | 10 m | 835.1 nm (S2A)/833.0 nm (S2B) | 0.767–0.908 µm |
| B11 | 20 m | 1613.7 nm (S2A)/1610.4 nm (S2B) | 1.568–1.658 µm |

| Model | Dataset | Accuracy | Recall | Precision | F1-Measure |
|---|---|---|---|---|---|
| RF | Training sets | 1.000 | 1.000 | 1.000 | 1.000 |
| RF | Cross-validation sets | 0.728 | 0.728 | 0.773 | 0.712 |
| RF | Test sets | 0.833 | 0.833 | 0.844 | 0.821 |
| XGBoost | Training sets | 1.000 | 1.000 | 1.000 | 1.000 |
| XGBoost | Cross-validation sets | 0.830 | 0.830 | 0.867 | 0.827 |
| XGBoost | Test sets | 0.812 | 0.812 | 0.832 | 0.819 |
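In every row of the table above, Accuracy and Recall are identical. One reading, offered here as an assumption because the averaging scheme is not stated in this section, is that the per-class metrics were averaged with weights proportional to class support, in which case the weighted recall is mathematically equal to the overall accuracy. The sketch below demonstrates this on a hypothetical two-class confusion matrix; the counts are illustrative only and are not taken from the study.

```python
def weighted_metrics(confusion):
    """Support-weighted metrics from a square confusion matrix.

    confusion[i][j] is the number of samples of true class i
    that were predicted as class j.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    accuracy = sum(confusion[c][c] for c in range(n_classes)) / total
    w_recall = w_precision = w_f1 = 0.0
    for c in range(n_classes):
        tp = confusion[c][c]
        support = sum(confusion[c])                               # true class c
        predicted = sum(confusion[r][c] for r in range(n_classes))  # predicted c
        recall = tp / support if support else 0.0
        precision = tp / predicted if predicted else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        weight = support / total
        w_recall += weight * recall
        w_precision += weight * precision
        w_f1 += weight * f1
    return {"accuracy": accuracy, "recall": w_recall,
            "precision": w_precision, "f1": w_f1}


if __name__ == "__main__":
    # Hypothetical counts: rows are true classes, columns are predictions.
    print(weighted_metrics([[30, 2], [10, 18]]))
```

With weighted averaging the F1-measure is not bound to lie between precision and recall, which would also be consistent with rows where F1 is the smallest of the three values.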

| Model | Area | Accuracy | Recall | Precision | F1-Measure | Error |
|---|---|---|---|---|---|---|
| RF | Area2 | 0.719 | 0.719 | 1.000 | 0.836 | 0.281 |
| RF | Area3 | 0.313 | 0.313 | 1.000 | 0.476 | 0.688 |
| RF | Area4 | 0.714 | 0.714 | 1.000 | 0.833 | 0.286 |
| RF | Area5 | 0.400 | 0.400 | 1.000 | 0.571 | 0.600 |
| XGBoost | Area2 | 0.391 | 0.391 | 1.000 | 0.562 | 0.609 |
| XGBoost | Area3 | 0.625 | 0.625 | 1.000 | 0.769 | 0.375 |
| XGBoost | Area4 | 0.143 | 0.143 | 1.000 | 0.250 | 0.857 |
| XGBoost | Area5 | 0.240 | 0.240 | 1.000 | 0.387 | 0.760 |
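The columns of the table above are tied together by two standard identities: the F1-measure is the harmonic mean of precision and recall, and the error is one minus the accuracy. The short check below recomputes both from the tabulated values; the tolerance of 0.002 is an assumption chosen to absorb the three-decimal rounding. A precision of 1.000 combined with recall equal to accuracy would be consistent with test areas that contain only burned samples, although that is an inference and not something stated in this section.

```python
# (model, area, accuracy, recall, precision, f1, error) copied from the table.
ROWS = [
    ("RF", "Area2", 0.719, 0.719, 1.000, 0.836, 0.281),
    ("RF", "Area3", 0.313, 0.313, 1.000, 0.476, 0.688),
    ("RF", "Area4", 0.714, 0.714, 1.000, 0.833, 0.286),
    ("RF", "Area5", 0.400, 0.400, 1.000, 0.571, 0.600),
    ("XGBoost", "Area2", 0.391, 0.391, 1.000, 0.562, 0.609),
    ("XGBoost", "Area3", 0.625, 0.625, 1.000, 0.769, 0.375),
    ("XGBoost", "Area4", 0.143, 0.143, 1.000, 0.250, 0.857),
    ("XGBoost", "Area5", 0.240, 0.240, 1.000, 0.387, 0.760),
]


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0 if both are 0)."""
    denom = precision + recall
    return 2 * precision * recall / denom if denom else 0.0


def check_rows(rows, tol=0.002):
    """Recompute F1 and error per row and flag rows within tolerance."""
    results = []
    for model, area, acc, rec, prec, f1, err in rows:
        f1_calc = f1_score(prec, rec)
        err_calc = 1.0 - acc
        ok = abs(f1_calc - f1) <= tol and abs(err_calc - err) <= tol
        results.append((model, area, round(f1_calc, 3), round(err_calc, 3), ok))
    return results


if __name__ == "__main__":
    for row in check_rows(ROWS):
        print(row)
```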

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cao, G.; Wei, X.; Ye, J. Fireground Recognition and Spatio-Temporal Scalability Research Based on ICESat-2/ATLAS Vertical Structure Parameters. Forests 2024, 15, 1597. https://doi.org/10.3390/f15091597
