Sensorial Network Framework Embedded in Ubiquitous Mobile Devices

Abstract

1. Introduction

1.1. Trends and Standards

1.2. Problem Definition

2. Related Works

- (FTW) Feel the World, a mobile framework for participatory sensing [30];

- (MobiSens), a versatile mobile sensing platform for real-world applications [31];

- (SensLoc), sensing everyday places and paths using less energy [32];

- (ODK) Open Data Kit Sensors, a sensor integration framework for Android at the application level [33].

2.1. Feel the World Framework (FTW)

2.2. MobiSens Platform

2.3. SensLoc Location Service

2.4. Open Data Kit Framework

2.5. Discussion

3. Sensorial Network Framework Embedded in Ubiquitous Mobile Devices

3.1. Goals, Requirements, and User Stories

- To gather any possible sensorial information from mobile devices;

- To provide a visualization of gathered sensor data in a comprehensive form for end users;

- To add prediction models to the consolidated sensorial data.

3.2. System Architecture

3.3. Activity and Flow Model

3.4. State Models

3.5. Implementation

3.5.1. Frontend Application

3.5.2. Backend Application

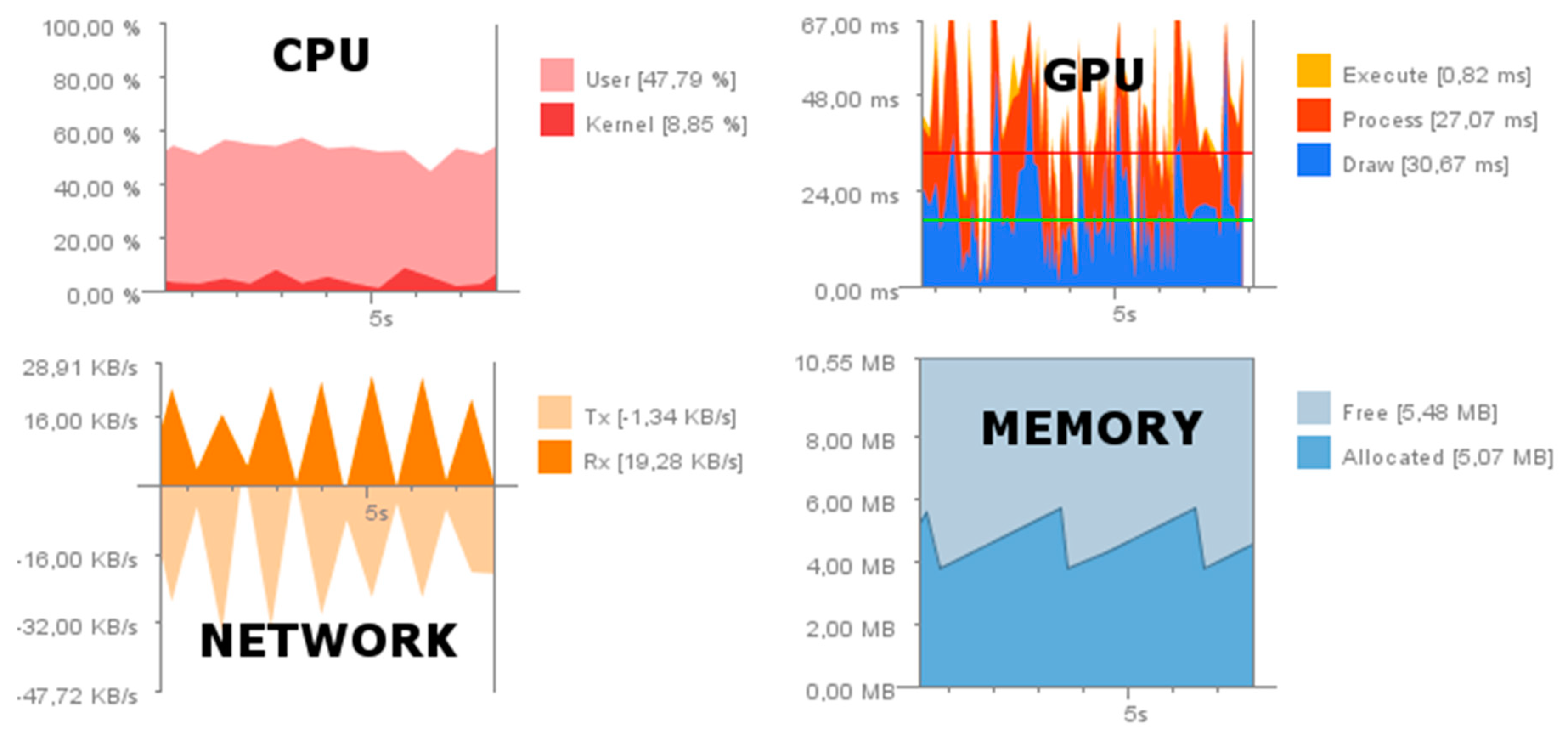

4. Evaluation and Discussions

4.1. Tuning and Testing of the Developed Solution

4.2. Discussion of Results

5. Conclusions and Future Works

- Measuring the radio capabilities of networks, for instance, WiFi, 3G, or LTE. Global knowledge of a network map can help a community with open communication find a free WiFi connection, or a better connection, when one is required;

- Measuring sensors, such as a microphone and its volume level or a camera and its noise level. Such an application enables the identification of a surrounding area;

- Measuring health sensors embedded in smart watches, connected to a mobile device via Bluetooth. Measuring the heartbeat or body temperature of a user can identify their behavior more precisely.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Machaj, J.; Brida, P. Optimization of Rank Based Fingerprinting Localization Algorithm. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sydney, Australia, 13–15 November 2012.

- Hii, P.C.; Chung, W.Y. A comprehensive ubiquitous healthcare solution on an android (TM) mobile device. Sensors 2011, 11, 6799–6815.

- Bellavista, P.; Cardone, G.; Corradi, A.; Foschini, L. The Future Internet convergence of IMS and ubiquitous smart environments: An IMS-based solution for energy efficiency. J. Netw. Comput. Appl. 2012, 35, 1203–1209.

- Velandia, D.M.S.; Kaur, N.; Whittow, W.G.; Conway, P.P.; West, A.A. Towards industrial internet of things: Crankshaft monitoring, traceability and tracking using RFID. Robot. Comput. Integr. Manuf. 2016, 41, 66–77.

- Li, F.; Han, Y.; Jin, C.H. Practical access control for sensor networks in the context of the Internet of Things. Comput. Commun. 2016, 89, 154–164.

- Condry, M.W.; Nelson, C.B. Using Smart Edge IoT Devices for Safer, Rapid Response With Industry IoT Control Operations. Proc. IEEE 2016, 104, 938–946.

- Salehi, S.; Selamat, A.; Fujita, H. Systematic mapping study on granular computing. Knowl. Based Syst. 2015, 80, 78–97.

- Phithakkitnukoon, S.; Horanont, T.; Witayangkurn, A.; Siri, R.; Sekimoto, Y.; Shibasaki, R. Understanding tourist behavior using large-scale mobile sensing approach: A case study of mobile phone users in Japan. Pervasive Mob. Comput. 2015, 18, 18–39.

- Android Operating System. Available online: https://en.wikipedia.org/wiki/Android_(operating_system) (accessed on 14 October 2019).

- Győrbíró, N.; Fábián, A.; Hományi, G. An activity recognition system for mobile phones. Mob. Netw. Appl. 2009, 14, 82–91.

- Schirmer, M.; Höpfner, H. SenST*: Approaches for reducing the energy consumption of smartphone-based context recognition. In Proceedings of the International and Interdisciplinary Conference on Modeling and Using Context, Karlsruhe, Germany, 26–30 September 2011.

- Efstathiades, H.; Pa, G.; Theophilos, P. Feel the World: A Mobile Framework for Participatory Sensing. In Proceedings of the International Conference on Mobile Web and Information Systems, Paphos, Cyprus, 26–29 August 2013.

- Klepeis, N.E.; Nelson, W.C.; Ott, W.R.; Robinson, J.P.; Tsang, A.M.; Switzer, P.; Behar, J.V.; Hern, S.C.; Engelmann, W.H. The National Human Activity Pattern Survey (NHAPS): A resource for assessing exposure to environmental pollutants. J. Expo. Sci. Environ. Epidemiol. 2001, 11, 231–252.

- Spring, Spring Framework—spring.io. Available online: http://docs.spring.io/spring-data/data-document/docs/current/reference/html/#mapping-usage-annotations (accessed on 14 October 2019).

- Kim, D.H.; Kim, Y.; Estrin, D.; Srivastava, M.B. SensLoc: Sensing everyday places and paths using less energy. In Proceedings of the 8th ACM Conference on Embedded Networked Sensor Systems (SenSys ’10), Zürich, Switzerland, 3–5 November 2010.

- Huseth, S.; Kolavennu, S. Localization in Wireless Sensor Networks. In Wireless Networking Based Control; Springer: New York, NY, USA, 2011; pp. 153–174.

- Lin, P.-J.; Chen, S.-C.; Yeh, C.-H.; Chang, W.-C. Implementation of a smartphone sensing system with social networks: A location-aware mobile application. Multimed. Tools Appl. 2015, 74, 8313–8324.

- Gil, G.B.; Berlanga, A.; Molina, J.M. InContexto: Multisensor architecture to obtain people context from smartphones. Int. J. Distrib. Sens. Netw. 2012, 8.

- Woerndl, W.; Manhardt, A.; Schulze, F.; Prinz, V. Logging user activities and sensor data on mobile devices. In International Workshop on Modeling Social Media; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6904, pp. 1–19.

- Brunette, W.; Sodt, R.; Chaudhri, R.; Goel, M.; Falcone, M.; Orden, J.V.; Borriello, G. Open data kit sensors: A sensor integration framework for android at the application-level. In Proceedings of the 10th International Conference on Mobile Systems, Applications, and Services, Low Wood Bay, Lake District, UK, 25–29 June 2012.

- Wu, P.; Zhu, J.; Zhang, J.Y. MobiSens: A Versatile Mobile Sensing Platform for Real-World Applications. Mob. Netw. Appl. 2013, 18, 60–80.

- Behan, M.; Krejcar, O. Smart Home Point as Sustainable Intelligent House Concept. In Proceedings of the 12th IFAC Conference on Programmable Devices and Embedded Systems, Ostrava, Czech Republic, 25–27 September 2013.

- Tarkoma, S.; Siekkinen, M.; Lagerspetz, E.; Xiao, Y. Smartphone Energy Consumption: Modeling and Optimization; Cambridge University Press: Cambridge, UK, 2014.

- Behan, M.; Krejcar, O. Modern smart device-based concept of sensoric networks. EURASIP J. Wirel. Commun. Netw. 2013, 2013, 155.

- Brida, P.; Benikovsky, J.; Machaj, J. Performance Investigation of WifiLOC Positioning System. In Proceedings of the 34th International Conference on Telecommunications and Signal Processing, Budapest, Hungary, 18–20 August 2011.

- Benikovsky, J.; Brida, P.; Machaj, J. Proposal of User Adaptive Modular Localization System for Ubiquitous Positioning. In Proceedings of the 4th Asian Conference on Intelligent Information and Database Systems, Kaohsiung, Taiwan, 19–21 March 2012.

- Machaj, J.; Brida, P. Performance Comparison of Similarity Measurements for Database Correlation Localization Method. In Proceedings of the 3rd Asian Conference on Intelligent Information and Database Systems, Daegu, Korea, 20–22 April 2011.

- Brida, P.; Machaj, J.; Gaborik, F.; Majer, N. Performance Analysis of Positioning in Wireless Sensor Networks. Przegląd Elektrotechniczny 2011, 87, 257–260.

- González, F.; Villegas, O.; Ramírez, D.; Sánchez, V.; Domínguez, H. Smart Multi-Level Tool for Remote Patient Monitoring Based on a Wireless Sensor Network and Mobile Augmented Reality. Sensors 2014, 14, 17212–17234.

- Mao, L. Evaluating the Combined Effectiveness of Influenza Control Strategies and Human Preventive Behavior. PLoS ONE 2011, 6, e24706.

- Cuzzocrea, A. Intelligent knowledge-based models and methodologies for complex information systems. Inf. Sci. 2012, 194, 1–3.

- Lang, G.; Li, Q.; Cai, M.; Yang, T. Characteristic matrixes-based knowledge reduction in dynamic covering decision information systems. Knowl. Based Syst. 2015, 85, 1–26.

- Hempelmann, C.F.; Sakoglu, U.; Gurupur, V.P.; Jampana, S. An entropy-based evaluation method for knowledge bases of medical information systems. Expert Syst. Appl. 2016, 45, 262–273.

- Cavalcante, E.; Pereira, J.; Alves, M.P.; Maia, P.; Moura, R.; Batista, T.; Delicato, F.C.; Pires, P.F. On the interplay of Internet of Things and Cloud Computing: A systematic mapping study. Comput. Commun. 2016, 89, 17–33.

- Ma, H.; Liu, L.; Zhou, A.; Zhao, D. On Networking of Internet of Things: Explorations and Challenges. IEEE Internet Things J. 2016, 3, 441–452.

- Barbon, G.; Margolis, M.; Palumbo, F.; Raimondi, F.; Weldin, N. Taking Arduino to the Internet of Things: The ASIP programming model. Comput. Commun. 2016, 89, 128–140.

- Mineraud, J.; Mazhelis, O.; Su, X.; Tarkoma, S. A gap analysis of Internet-of-Things platforms. Comput. Commun. 2016, 89, 5–16.

- Ronglong, S.; Arpnikanondt, C. Signal: An open-source cross-platform universal messaging system with feedback support. J. Syst. Softw. 2016, 117, 30–54.

- Krejcar, O. Threading Possibilities of Smart Devices Platforms for Future User Adaptive Systems. In Proceedings of the Asian Conference on Intelligent Information and Database Systems, Kaohsiung, Taiwan, 19–21 March 2012.

- Wang, S.; Wan, J.; Zhang, D.; Li, D.; Zhang, C. Towards smart factory for industry 4.0: A self-organized multi-agent system with big data based feedback and coordination. Comput. Netw. 2016, 101, 158–168.

- Bangemann, T.; Riedl, M.; Thron, M.; Diedrich, C. Integration of Classical Components into Industrial Cyber-Physical Systems. Proc. IEEE 2016, 104, 947–959.

- Pfeiffer, T.; Hellmers, J.; Schön, E.M.; Thomaschewski, J. Empowering User Interfaces for Industrie 4.0. Proc. IEEE 2016, 104, 986–996.

- Blind, K.; Mangelsdorf, A. Motives to standardize: Empirical evidence from Germany. Technovation 2016, 48, 13–24.

- Schleipen, M.; Lüder, A.; Sauer, O.; Flatt, H.; Jasperneite, J. Requirements and concept for Plug-and-Work Adaptivity in the context of Industry 4.0. Automatisierungstechnik 2015, 63, 801–820.

- Schuh, G.; Potente, T.; Varandani, R.; Schmitz, T. Global Footprint Design based on genetic algorithms: An “Industry 4.0” perspective. CIRP Ann. 2014, 63, 433–436.

- Xie, Z.; Hall, J.; McCarthy, I.P.; Skitmore, M.; Shen, L. Standardization efforts: The relationship between knowledge dimensions, search processes and innovation outcomes. Technovation 2016, 48, 69–78.

- Gomez, C.; Chessa, S.; Fleury, A.; Roussos, G.; Preuveneers, D. Internet of Things for enabling smart environments: A technology-centric perspective. J. Ambient Intell. Smart Environ. 2019, 11, 23–43.

| Sensor | Sampling [mJ] | Idle [mJ] | Switch ON/OFF [mJ] |
|---|---|---|---|
| accelerometer | 21 | - | -/- |
| gravity | 25 | - | -/- |
| magnetometer | 48 | 20 | -/- |
| gyroscope | 130 | 22 | -/- |
| microphone | 101 | - | 123/36 |
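
As a rough illustration of how the sampling figures above could feed an energy budget, the following Python sketch sums the sampling cost of a planned set of readings. It assumes each table value is the cost of a single sample in the table's energy unit and ignores idle and switch ON/OFF costs; the function name `sampling_energy` and the example plan are hypothetical and not part of the framework.

```python
# Sampling energy per sensor, copied from the table above (table units).
SAMPLING_ENERGY = {
    "accelerometer": 21,
    "gravity": 25,
    "magnetometer": 48,
    "gyroscope": 130,
    "microphone": 101,
}


def sampling_energy(plan):
    """Return the total sampling energy for a plan of {sensor: sample_count}.

    Assumption: each table value is the cost of one sample; idle and
    switch ON/OFF costs are not included.
    """
    return sum(SAMPLING_ENERGY[sensor] * count for sensor, count in plan.items())


# Hypothetical plan: 10 accelerometer samples and 2 gyroscope samples.
total = sampling_energy({"accelerometer": 10, "gyroscope": 2})
print(total)  # 10 * 21 + 2 * 130 = 470
```

Under this reading, a single gyroscope sample costs roughly as much as six accelerometer samples, which is the kind of trade-off a dynamic sampling policy would weigh.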

| Core Features | FTW [30] | MobiSens [31] | SensLoc [32] | ODK [35] | Required |
|---|---|---|---|---|---|
| embedded sensors | ALL | ALL | WiFi, accelerometer, GPS | ALL | ALL |
| external sensors | YES | NO | NO | YES | NO |
| server data sync | YES | YES | NO | NO | YES |
| dynamic sampling | YES | YES | NO | NO | YES |
| decision module | YES | YES | NO | NO | YES |
| security | NO | NO | NO | NO | YES |
| third parties | YES | YES | NO | YES | YES |
| social connectors | NO | NO | NO | NO | YES |
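
The comparison above can be read as a gap analysis: for each existing framework, which required features does it lack? The Python sketch below encodes only the YES/NO rows of the table (the "embedded sensors" row is left out because it is not boolean) and lists the missing required features per framework. The names `FEATURES` and `missing_features` are illustrative assumptions.

```python
FRAMEWORKS = ["FTW", "MobiSens", "SensLoc", "ODK"]

# feature -> (support per framework, in FRAMEWORKS order; required by the proposed framework)
FEATURES = {
    "external sensors": ([True, False, False, True], False),
    "server data sync": ([True, True, False, False], True),
    "dynamic sampling": ([True, True, False, False], True),
    "decision module": ([True, True, False, False], True),
    "security": ([False, False, False, False], True),
    "third parties": ([True, True, False, True], True),
    "social connectors": ([False, False, False, False], True),
}


def missing_features(framework):
    """Return the required features that the given framework does not provide."""
    idx = FRAMEWORKS.index(framework)
    return [
        name
        for name, (support, required) in FEATURES.items()
        if required and not support[idx]
    ]


for fw in FRAMEWORKS:
    print(fw, "->", missing_features(fw))
```

Read this way, none of the four frameworks covers security or social connectors, which are both marked as required.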

| User Story Identifier (USI) | Content of User Stories | Related to |
|---|---|---|
| USI-1-1 | As a user, I want to register with a sensorial framework using specific credentials, such as an email and password | Main |
| USI-1-2 | As a user, I want to log in to a sensorial framework using defined credentials | Main |
| USI-1-3-1 | As a user, I want to change my password via an email channel when I forget it | Main |
| USI-1-3-2 | As a user, I want to change my password when I am logged in | Main/Authorized |
| USI-1-3-3 | As a user, I want to change my email when I am logged in | Main/Authorized |
| USI-1-4 | As a user, I want to log out | Main/Security/Authorized |
| USI-2-1 | As a user, I want to connect my device to a sensorial framework by installing the application on the device, using the same login credentials, and providing a basic description, such as a name | Devices/Authorized |
| USI-2-2 | As a user, I want to disconnect my device | Devices/Authorized |
| USI-2-3 | As a user, I want to modify the name of my device | Devices/Authorized |
| USI-2-4-1 | As a user, I want to see all of the devices connected to a sensorial framework in the device list, with a basic description, such as the name, type, and connection status of each device | Devices/Authorized |
| USI-2-4-2 | As a user, I want to see all details of a device by selecting it from the device list | Devices/Authorized |
| USI-3-1 | As a user, I want to see a list of the available sensors of the device, with the name, type, periodicity/action and data counters | Device/Authorized |
| USI-3-2 | As a user, I want to see the details of a device sensor, where I can customize settings such as the period of monitoring, type of action, etc. | Sensor/Authorized |
| USI-3-3 | As a user, I want to see historical data on sensors in timeline charts | Sensor/Authorized |
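
To make the device and sensor stories (USI-2-4-1 and USI-3-1) more concrete, here is a minimal Python sketch of the data they imply: a device with a name, type, and connection status, and sensors with a name, type, period, and data counter. All class, field, and function names are hypothetical; the user stories do not prescribe a data model, and the period unit (milliseconds) is an assumption.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Sensor:
    name: str
    sensor_type: str
    period_ms: int       # assumed unit for the "period of monitoring"
    data_count: int = 0  # number of readings collected so far


@dataclass
class Device:
    name: str
    device_type: str
    connected: bool
    sensors: List[Sensor] = field(default_factory=list)


def device_list_row(device):
    """One line of the device list (USI-2-4-1): name, type, connection status."""
    status = "connected" if device.connected else "disconnected"
    return f"{device.name} ({device.device_type}): {status}"


def sensor_list_rows(device):
    """Lines of the sensor list (USI-3-1): name, type, period, data counter."""
    return [
        f"{s.name} [{s.sensor_type}] every {s.period_ms} ms, {s.data_count} readings"
        for s in device.sensors
    ]


# Hypothetical example device with one sensor.
phone = Device("My phone", "smartphone", True,
               [Sensor("accelerometer", "motion", 1000, 42)])
print(device_list_row(phone))
print(sensor_list_rows(phone))
```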

| Component | Time *** [ms] | Description |
|---|---|---|
| sensor proximity * | - | interactive |
| sensor accelerometer * | 12 | tested on Sony ZT3-blade |
| sensor magnetic field * | 46 | tested on Sony ZT3-blade |
| sensor orientation * | 69 | tested on Sony ZT3-blade |
| network rtt tcp ** | 30 | tested on WLAN (single router); 332 B HTTP REST header, 116 B JSON payload |
| network rtt udp * | 15 | tested on WLAN (single router); 66 B UDP header, 547 B Java serializable payload |
| mongo database | 57 | <3000 records |
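
For a rough sense of end-to-end latency, the component times above can be added along a path from sensor to database. The Python sketch below does this under the assumption that the stages run strictly one after another and that the measured times simply add up; neither assumption is stated in the table, and the function name `pipeline_ms` is hypothetical.

```python
# Component times in ms, copied from the table above.
COMPONENT_MS = {
    "sensor accelerometer": 12,
    "sensor magnetic field": 46,
    "sensor orientation": 69,
    "network rtt tcp": 30,
    "network rtt udp": 15,
    "mongo database": 57,
}


def pipeline_ms(stages):
    """Sum the measured times of the given stages, assuming sequential execution."""
    return sum(COMPONENT_MS[stage] for stage in stages)


tcp_path = pipeline_ms(["sensor accelerometer", "network rtt tcp", "mongo database"])
udp_path = pipeline_ms(["sensor accelerometer", "network rtt udp", "mongo database"])
print(tcp_path, udp_path)  # 12 + 30 + 57 = 99 and 12 + 15 + 57 = 84
```

Under these assumptions, switching the transport from TCP to UDP saves 15 ms per accelerometer reading, while the database write remains the largest single contribution.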

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Behan, M.; Krejcar, O.; Sabbah, T.; Selamat, A. Sensorial Network Framework Embedded in Ubiquitous Mobile Devices. Future Internet 2019, 11, 215. https://doi.org/10.3390/fi11100215
