Abstract

User experience with intuitive and flexible digital platforms can be enjoyable and satisfying. A strategy to deliver such an experience is to place the users at the center of the design process and analyze their beliefs and perceptions to add appropriate platform features. This study used focus groups as a qualitative method of data collection to investigate users’ preferences and to develop a new landing page for institutional repositories with attractive functionalities based on their information-structural rules. The research question was: What are the motivations and experiences of users in an academic community when publishing scientific information in an institutional repository? The focus group technique used in this study had three sessions. Results showed that 50% of the participants knew neither the functionalities of the institutional repository nor its benefits. Users’ perceptions of platforms such as ResearchGate or Google Scholar that provide academic production were also identified. The findings showed that motivating an academic community to use an institutional repository requires technological functions, user guidelines that identify what can or cannot be published in open access, and training programs for open access publication practices and institutional repository use. These measures align with global strategies to strengthen the digital identities of scientific communities and thus benefit open science.

1. Introduction: Institutional Repositories

Confinement and social distancing brought about by the COVID-19 pandemic have necessitated incorporating technologies to continue professional, educational, and social activities. Thus, university information systems must urgently evolve to the next generation to support the digital transformation of society. In 2016, the Confederation of Open Access Repositories (COAR) [] released the best practice framework for the next generation of repositories. It includes functionalities to create web-friendly architecture, repositories embedded in researchers’ workflow, open peer review, and content quality assessment. It prescribes better impact and usage measures for discovery, access, reuse, integrity, and authenticity. According to COAR, the specific vision for Next Generation Repositories is “to position repositories as the foundation for a distributed, globally-networked infrastructure for scholarly communication, on top of which layers of value-added services will be deployed, thereby transforming the system, making it more research-centric, open to and supportive of innovation, while also collectively managed by the scholarly community” []. The development of open access and open science policies requires solid technological infrastructures that allow free access to scientific information, serve as a means of communicating research results, and allow their impact to be measured. Institutional repositories and journals are vital elements needed to execute open access mandates and implement open science policies. Institutional research repositories are assessed primarily through internal audits to improve their quality per best practices. They are guided by the Trust Principles for Digital Repositories, the requirements for next-generation COAR repositories, and Plan S. Certifiers of repositories include Core Trust Seal, Go-FAIR, and PLOS [].

Institutional repositories need technological platforms to store and publish scientific information in an open format. Knowing the experience of researchers in using the repository will reveal whether they are unaware of the benefits of open access practices or why they do not find the institutional repository system attractive and usable. Initially, institutional repositories were platforms to provide access to scientific information and digital documentation for the public, whether to read, download, copy, print, or distribute []. These repositories were designed from the perspective of librarians, catalogers, or systems engineers. However, if the academic community is expected to use them, a good practice would be to know and understand the end-user experience and create a design that allows users to effectively utilize the functionalities of the repository’s website. In human-computer interaction studies, system usability is related to how well a system works when used to its fullest by users so that all system capabilities can be brought to bear []. It would also be ideal to measure the repositories’ user satisfaction and acceptance and to improve their interfaces [] and utilization rates by providing greater satisfaction. In addition, the support of technologists, managers, and interface designers who make these types of sites that support open science “user-friendly” is necessary. Through three focus groups, this study investigated the repository users’ experience when performing tasks such as searching and entering information into the repository or performing the manual process of self-archiving open access scientific papers. The paper begins with a theoretical framework that outlines the crucial points of four user experience categories when interacting with institutional repositories: (1) perceived usefulness, (2) perceived ease of search, (3) management of digital identity 2.0, and (4) user interface design. Next, the context where the study was applied is described with the methodology of the focus groups, and finally the results, discussion, conclusions, and future research are presented.

2. Related Work

2.1. Perceived Usefulness Indicator of the Institutional Repository

Although the reasons for having an institutional repository (IR) may be different for each educational institution, the willingness of the academic community to add content to it must be considered. The impact of available and accessible research and educational resources through an IR is related to the attitudes and perceptions of librarians, teachers, researchers, and students. The technology acceptance model (TAM), an information technology framework for understanding users’ adoption and use of emerging technologies, proposes two significant variables that affect user intention: (a) ease of use and (b) perceived usefulness. These variables help determine the factors (internal beliefs and attitudes) that affect the usage of information systems []. Perceived usefulness is defined as the degree to which a person believes that using a particular system would enhance his or her job performance []. Simply put, users are more likely to adopt a new technology with high-quality UX design (i.e., usable, useful, desirable, and credible) []. The TAM and UX views of experience can be compared to see where they intersect, as it has been shown that experimentally manipulating independent variables, such as levels of ease of use, is a much-needed way to further investigate technology adoption and use []. The needs of users who search the repository or perform the manual process of self-archiving open access scientific papers are identified by examining their informational behavior, that is, the competencies they bring to accessing and using repositories, together with their browsing behavior.

Adding automated self-archiving processes in the repository will make scientific papers more visible and therefore more cited, and analyzing how repository users self-archive educational resources can lead to reduced times and streamlined flows. Ref. [] points out that the factors that motivate or inhibit the self-archiving of papers in open access range from age and concern about the copyright of their works to the additional time and effort required for self-archiving. Ref. [] examines social and individual motivation factors affecting researchers’ article-sharing intentions through their use of an institutional repository or ResearchGate. The study employed a theoretical framework that integrated the theory of planned behavior based on Ajzen (1991), and its findings demonstrate the need to develop different types of support and article-sharing policies for users to facilitate or increase their article-sharing behaviors. Ref. [] shows that authors often do not have enough information to know which version of a paper they can self-archive. Existing scholarly communication practices in the digital realm therefore deserve attention: it is the discipline-based norms and practices that determine self-archiving behavior, not the terms of copyright transfer agreements. The social appropriation of knowledge implies the democratization of access and use of scientific knowledge, as a strategy for its adequate transmission and use among the different social actors, which will result in the improvement of the quality of life of communities and their members []. To further promote best practices, librarians should adopt a role to disseminate, train, plan marketing, and convince faculty to self-archive their scientific papers in the institutional repository, because researchers who know the benefits of publishing their scientific papers in the institutional repository will be more willing to spend the time to self-archive.

Institutional repositories are now required to preserve scientific production and improve the institutions’ and researchers’ rankings [] and impact, measured by the number of article citations and the quality of the journals in which the article appears, with additional metrics such as the number of persons reading or downloading an article []. Ref. [] mentions that institutional repositories must identify user profiles and tasks to attain greater scientific visibility through this platform and evolve toward collaboration that facilitates the social interactions of academic networks by incorporating communication tools such as email or private text messages. To help automate processes by eliminating bottlenecks in massive manual processes and simplifying self-archiving tasks, [] emphasizes that an internal layer based on artificial intelligence is necessary to introduce non-human users and to perform data mining and machine learning. Therefore, studies lean towards recommending increasing the appropriation of institutional repositories by the academic community, placing the user at the center of the process, and considering the developer as a facilitator and mediator to redesign the repository’s interface. A modern digital information repository with expert classification systems such as ontologies and powered by modern semantic technology should move towards a knowledge organization system (KOS) which empowers end users to quickly and efficiently retrieve information needed for knowledge propagation []. The challenge for universities is to review their open access policies to increase the scientific visibility of their researchers in the rankings and to develop strategies for communicating policies and promoting the digital transformation of the processes involved in both search and self-archiving, and thus evolve towards digital platforms to promote education 4.0.

2.2. Perceived Findability from Search Engines Indicator of the Institutional Repository

“Findability”, an aspect of usability, is an important component in researchers’ perceptions of, or satisfaction with, how easily and quickly scientific papers published in the institutional repository are found in the various search engines. It may increase their interest in using open access channels and, in turn, the adoption of open practices. Peter Morville [] defines findability as (a) the quality of being locatable or navigable, (b) the degree to which a particular object is easy to discover or locate, and (c) the degree to which a system or environment supports navigation and retrieval. The platforms hosting repositories must have interfaces for finding educational resources simply and understandably with an integrated discovery tool []. Therefore, it is necessary to provide a search tool that centralizes the resources and retrieves all the collections from a single interface. In 2014, the NISO Open Discovery Initiative (ODI) defined discovery services as a central index that enables searches of all the library resources and provides results management, reference management, indexing by metadata categories, and statistical reports of searches by user, document, number of visitors, and how many times a user has downloaded a document, among others [] (p. 87).

Information search is one of the most common tasks in several sectors, including academia, the government, the private sector, and society. However, search efficiency is not ensured in many digital spaces []. The personalization of metadata to refine searches and a user-friendly navigation interface must be considered to increase the quality of the most relevant documents found []. For [], the development of repository platforms requires an escalation of open software through a complex negotiation with commercial brands that own cutting-edge technologies. Information architecture is the critical factor in the information retrieval process: a poor architecture could prevent a user from finding the information searched for in the repository, thereby reducing visibility and negatively impacting citation rates and university rankings []. Additionally, it is considered necessary to investigate in the near future whether to incorporate reusable data in combination with other datasets via the Web [].

2.3. Management of the Digital Identity 2.0 Indicator of the Institutional Repository

Digital identity and scientific visibility, although they go hand in hand, have different orientations. Scientific visibility allows the scientific production of a given author to be present and accessible to their target audience. By being careful with their digital identity, a researcher helps construct the digital identity of the institution to which he or she belongs [], which is what ends up being used by various rankings that measure the quality of universities and research centers []. Institutional repositories could be used to manage the researcher’s digital identity and personal profile, including name, photo, professional experience, ideas, capabilities, number of citations, and downloads of his or her articles, thereby cultivating an online researcher identity and professional reputation [].

Science 2.0 and Open Science, even if they do not always converge, express new forms of dissemination of knowledge, where open access to scientific production is integrated with traditional transmission systems of scientific information, and new research workflows are developed []. Science 2.0 facilitates collaboration with peers or others through social research networks, such as ResearchGate and Academia.edu. The evolution of open science brought innovative practices that, if properly used, can boost a country’s development, especially if the myths associated with the open knowledge movement have been uprooted []. An institution may consider its repository a technological tool to manage and store open science and facilitate Science 2.0.

Several aspects should be considered for adequate management of scientific information, such as citation reports, calculations of the impact of research, evaluation of candidates for employment, promotion or tenure, reports of disciplinary research trends, and calculations of h-indexes, among others []. Search engine optimization (SEO) constitutes the set of methods designed to increase the visibility of, and the number of visits to, a web page by means of its ranking on the search engine results pages []. Academic social networks, such as ResearchGate.net or Academia.edu, promote scientific dissemination but not from an institutional perspective that highlights the researcher’s visibility. Therefore, the institutional repository should be used as a tool for communication, collaboration, and interaction []. This new way of communicating science has been called Research 2.0. Technology has supported creating new networks of academic collaboration []. Institutional repositories can acquire the functionalities of an academic social network to become a platform to share, connect, establish communication with peers, and carry out local, national, international, and inter-institutional projects in the areas of subscriber interest. Science communication occurs through social networks because they are a flexible means to significantly impact publications and achieve greater academic influence.

2.4. User Interface Design Indicator of the Institutional Repository

Information design is defined as the art and science of preparing information to be used by human beings efficiently and effectively []. Its primary objectives are:

- To develop documents that are comprehensible and easy to translate for effective action.

- To use technology to design interactions that are easy, natural, and as pleasant as possible.

- To enable people to find their way in three-dimensional space with comfort and ease, especially in urban and virtual spaces.

Interactive design involves designing interactive products and services with a focus beyond the item being developed to consider the way users will interact with it []. Thus, the scrutiny of users’ needs, limitations, and contexts empowers designers to customize the output to suit precise demands. User experience is defined as the perceptions and responses of a person using or anticipating a product, system, or service []. Authors such as [] view users at the center of their experiences. Thus, it is vital to know users’ feelings when interacting with the system and address subjective qualities such as motivation and user expectations. This contrasts with the concept of usability, which focuses on task performance and metrics such as the time of task execution and the number of clicks or errors. To propose improvements, designers should systematically identify the types of problems faced by the users of repository interfaces when searching for information and uploading it []. Ref. [] focused on a study where the users evaluated the system and established various categories to assess repositories, which resulted in the search engine, metadata, and content being considered the most valued aspects. Ref. [] explains that the user experience defines users’ informational behavior, skills, and needs. On the other hand, Ref. [] proposed a mental model to understand users’ requirements and thus know how users think, an essential factor for proper design. Therefore, it is necessary to regularly examine and evaluate the interaction of users with technologies to identify points of conflict and to update repository interfaces with the latest functionalities and trends.

The dimensions are the aspects a designer considers when designing interactions []:

- Words (1D) encompass text, which helps give users the right amount of information. They can include content and button labels.

- Visual representations (2D) are graphical elements such as images, typography, and icons that aid user interaction.

- Physical objects/space (3D) refers to the physical media that give users access to the product or service, for instance, a laptop via a mouse or a mobile phone via fingers.

- Time (4D) relates to media that changes with time, such as animations, videos, and sounds.

- Behavior (5D) is concerned with how the previous four dimensions define the interactions afforded by a product, for instance, how users perform actions on a website or operate a car. Behavior also refers to how the product reacts to the users’ inputs and provides feedback.

3. Materials and Methods

User motivations and expectations were explored using focus groups as a qualitative method of data collection to investigate users’ preferences. Refs. [,] recommended that the focus group participants share a homogeneous profile, in that they have common characteristics, and point out that the number of participants per focus group may be between 4 and 12 people. Moreover, the participants should be informed of the specific topic(s) to be addressed in advance to focus their opinions. The guiding questions must be carefully sequenced and relevant to the study topic. The focus groups should enjoy a comfortable and relaxed environment with a friendly and approachable moderator to inspire confidence, encouraging the participants to share their views and experience with the topic. This study had 16 participants divided into three group sessions.

3.1. Selection Criteria of the Participants

An e-mail invitation was sent to researchers and doctoral students who were participating in the Binational Laboratory project and who would deposit their open access scientific production in the university’s institutional repository. Acceptance to attend was confirmed via e-mail, and demographic data were collected through a survey in Google Forms.

Three sessions were scheduled. The first focus group session had five participants, the second eight, and the third three. There were 16 participants in total. Each group session had the same moderator. The focus group sessions were recorded in a Gesell chamber.

3.2. Validity and Reliability

Ref. [] indicated the validity criteria that should be present in a research study: construct validity, internal validity, external validity, reliability, and triangulation. The types of validity that were used in this study are described below:

Construct validity: A pilot focus group was conducted first to assess the guide questions based on a series of indicators collected from the literature review.

Internal validity: Two or more researchers receive a set of previously generated constructs, relate them to the data in the same way other researchers did, and make field notes []. In this study, two researchers observed how the focus group was conducted and evaluated it to identify the indicators’ relevance to the focus group’s objective. Once they issued their assessment, corrections were made, and more theoretical information was sought on the research’s objective to relate it to the observers’ interpretations and, thus, enrich the constructs.

External validity: External reliability addresses the issue of whether other independent researchers would discover the same truth or generate the same constructions in the same or a similar environment []. In this study, we sent the new construction of indicators and the guide questions to other independent repository researchers at an anonymous institution.

The reliability of the research was based on triangulation with information sources. Triangulation is a complex interpretation system familiar to the researcher. It shows the consistency and logic that emerge from each step and sector of the data, conjectures, and results []. The reliability of this study, based on triangulation, was tested once the indicators were established with a search for information sources and a systematic literature review. The indicators were linked with the observing researchers’ interpretations for the ordered construction of the first version of the sections to be researched. A pilot focus group was conducted to assess the validity and reliability of the aspects that could be improved, i.e., location, moderator, topics to be addressed, and guide questions drafted after a literature review.

3.3. Ethical Processes

Ethics is one of the primary considerations of quantitative or qualitative paradigms; it prevents studies from being used for unrelated purposes []. For [], research ethics is no longer limited to defending the integrity and welfare of the participants to protect them against possible bad practices. Although this is still a critical aspect, research ethics also defines a complete framework for action. Therefore, standards and good scientific practice were applied in this study, safeguarding personal information, requesting prior authorization from the participants, and emphasizing the benefits gained from participation to improve acceptance and familiarity using the repository.

In this research, ethics were observed by using the participants’ data only in this study. The participation status was anonymous in the study results. Anonymity was observed both in the transcripts and the information collected for the analysis. Anonymous data were used to obtain truthfulness about their motivations, attitudes, and ways of interacting with the institutional repository. Authorization and acceptance letters were obtained from the professors and students, who were assured that their data would be used only for academic purposes. Letters of authorization from the Dean of Graduate Programs of the School of Humanities and Education and leader of the Openergy network of the Binational Laboratory project were also procured [].

3.4. Guide Questions with Coding Indicators

The final design of a survey was carried out according to the indicators, guiding questions, and descriptions, as shown in Table 1. The indicators were: (1) perceived usefulness, (2) perceived findability from search engines, (3) management of digital identity 2.0, and (4) user interface design.

Table 1.

Indicator guide: description and questions.

4. Results

This section describes the results from the three focus groups for each of the indicators designed. With these data, obtained from the qualitative instruments, we delved deeper into the object of study, arranged the main findings into conceptual maps, and presented the principal findings in each indicator in tables. The demographic data of the sample are presented in graphs made with tools such as Excel and Tableau.

4.1. Demographic Information

According to the demographic data collected when the participants confirmed their attendance via e-mail, 56.25% were women and 43.75% were men; 31.25% had a Ph.D. degree, 56.25% had a master’s degree, and 12.5% had a bachelor’s degree. The average age was 37 years. All belonged to the anonymous institution community; 68.75% were Ph.D. students, and 31.25% were researchers. These data were collected through the invitation survey (see Figure 1).

Figure 1.

Demographic information of the focus group participants: gender and education level. (Own elaboration).

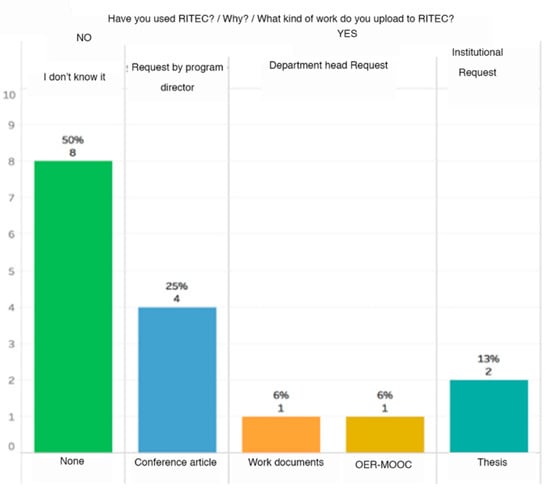
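The demographic percentages can be cross-checked against the sample size with a short calculation. The sketch below assumes head counts back-calculated from the reported shares of the 16 participants; the counts and the `percent` helper are illustrative assumptions and are not reported in the study itself.

```python
from fractions import Fraction

TOTAL = 16  # number of participants reported in the study

# Hypothetical head counts, inferred from the reported percentages
# (assumption: the study reports only the percentages, not these counts).
counts = {
    "women": 9,
    "men": 7,
    "phd_degree": 5,
    "masters_degree": 9,
    "bachelors_degree": 2,
    "phd_students": 11,
    "researchers": 5,
}


def percent(count, total=TOTAL):
    """Return count/total as an exact percentage (float)."""
    return float(Fraction(count, total) * 100)


shares = {name: percent(n) for name, n in counts.items()}

for name, share in shares.items():
    print(f"{name}: {share}%")
```

Under these assumed counts, each grouping (gender, degree, and role) adds up to all 16 participants, and the role split of 11 Ph.D. students and 5 researchers corresponds to 68.75% and 31.25%.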

To identify the level of repository use, the survey asked, “Have you used the repository?” 50% of the participants chose the answer, “I do not know of the existence of an institutional repository and its purpose.” 25% chose, “I have uploaded papers at the request of my academic program director,” 12% chose the answer, “I have uploaded OER by request of my department head,” and 13% chose, “I have uploaded my thesis by institutional request” (see Figure 2).

Figure 2.

Results of repository use by participants of the focus groups. (own elaboration).

4.2. Perceived Usefulness Indicator

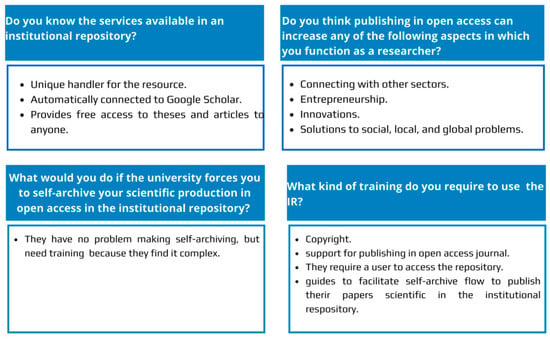

Some of the most relevant answers to the question, “Do you know the services available in an institutional repository?” revealed that participants had not received training to use the repository. However, they knew some of its advantages, such as that it assigns a unique handle to the resource, is automatically connected to Google Scholar, and provides free access to theses and articles. When asked, “Do you think that publishing in open access can impact other areas in which you function as a researcher?”, participants answered that they believe that using the repository would allow access to their work through a digital space that belongs to their institution but allows their profile to be accessed. When asked, “What would you do if the university forces you to self-archive your scientific production in open access in the institutional repository?”, they commented that they would have no problem in making the deposit but that more training is required because the process seems very complex to them. For the question “What kind of training do you require to use the IR?”, the participants mentioned copyright, publishing in open access in journals, and the requirement of a user account to access the repository and use the flow to publish their scientific papers in open access (see Figure 3).

Figure 3.

Results of the perceived usefulness indicator. (Own elaboration).

4.3. Perceived Findability from Search Engines Indicator

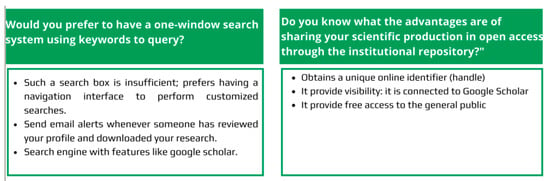

To the question, “Would you prefer to have a one-window search system where you can make any query by typing in keywords?”, participants answered that a search box is not enough. They prefer to have a navigation interface to perform personalized searches. They also mentioned that the repository should have other features such as author recommendation by topic, resource prioritization according to search history, the ability to add comments to other authors’ works, and email alerts whenever someone has reviewed their profile and downloaded their research. For the question “Do you know what the advantages are of sharing your scientific production in open access through the institutional repository?”, they answered that one advantage is that the educational resource uploaded to the repository obtains a unique online identifier (handle). Another is that the repository is a digital and institutional space to gain visibility: it is connected to Google Scholar, allows finding grouped trends in publications by their colleagues and specialists on the same subject, and links with other sectors. Finally, and most importantly, IRs provide free access to society (see Figure 4).

Figure 4.

Results of the perceived ease of search. (Own elaboration).

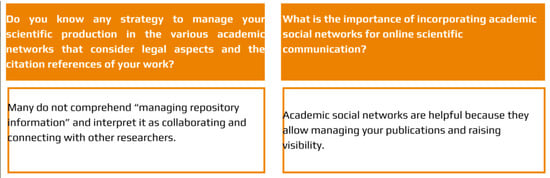

4.4. Management of Digital Identity 2.0

When asked the question, “Do you know any strategy to manage your scientific production in the different academic networks that take into account legal aspects and the registration of citations of your work,” the participants admitted that they were not familiar with the concept of managing scientific production in a repository (see Figure 5).

Figure 5.

Results of the management of digital identity 2.0. (Own elaboration).

When asked the question “What is the importance of incorporating academic social networks for online scientific communication?”, the participants admitted that academic social networks are helpful because they allow for managing publications and raising visibility and impact in society (see Figure 5).

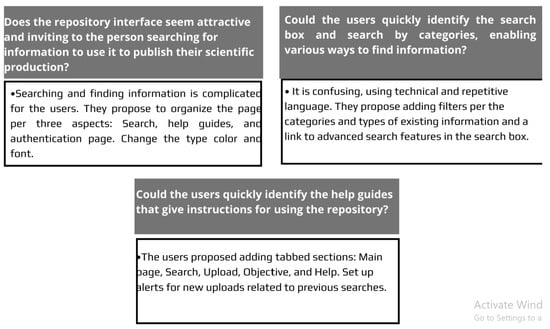

4.5. User Interface Design

For this indicator, participants were first invited to explore the main page of the institutional repository, version 3.2. When the researchers were asked their feelings about information organization, they commented that it was not appropriately ordered, proposing “to eliminate the left-side menu because it makes it look very crowded”; “to describe what is the main purpose of the repository and its benefits”; “to place three main sections: (a) guides or help, (b) file upload, and (c) search”; and “to display the search box and underneath include options to search by type of document (article, book, conference)” (see Figure 6).

Figure 6.

Results of user interface design. (Own elaboration).

The most relevant information for this indicator was the participants’ perception of the repository’s main page interface and their suggestions for improvement. They proposed to organize the page based on three aspects:

- Eliminate the left-side menu, as it makes the page look overly crowded.

- Indicate on the main page the purpose of the repository and how the academic community can use it.

- Incorporate three main sections:

  - Search: This section includes the search box and an advanced search drop-down menu that allows searching by type of document (article, book, conference), author, year, and other filters.

  - Help and guides: This section includes manuals and interactive guides, but also indicates the purpose of the repository. A list of resources should be provided for authors to help them identify if it is feasible to publish their resources in an open or restricted manner. A way to review copyright policies should be provided for publishers and uploaded material, e.g., a link to the Sherpa Romeo portal: (https://v2.sherpa.ac.uk/romeo/, accessed on 20 October 2021).

  - Authentication page: The participants also suggested adding a link to the authentication page using username and password with institutional credentials. They recommended describing the process for self-archiving educational resources and any requirements for the process.

5. Discussion

The better the academic community knows the benefits of an institutional repository, the more it will demand personalized functionalities and services. In Figure 2, the results of repository use show that 50% of the participants were unaware of the repository. In the focus group sessions, participants commented that they had not used it and had not internalized its existence and were therefore unaware of its dissemination processes. However, they showed a high interest in knowing more about it. Ref. [] points out that, initially, institutional repositories were intended for cataloging and storing scientific production through digital collections. However, their possibilities have since extended beyond that, so it is imperative to identify user profiles and tasks to give greater scientific visibility to this platform. Educational institutions must adopt new strategies to face changing demands, form multidisciplinary teams, and develop cutting-edge technological infrastructure, policies, and guidelines.

5.1. Perceived Usefulness Indicator

Effective appropriation of the institutional repository and the concept of “open access” requires fostering a culture of open access publication practices so that users perceive its usefulness and ease of use positively. Figure 3 shows the most relevant results of the perceived usefulness indicator collected from the focus groups. Social appropriation implies the democratization of access to and use of scientific knowledge as a strategy for its adequate transmission and use among the different social actors, which will improve the quality of life of communities and their members []. A researcher must be aware that not everyone in society can access the high-quality knowledge generated by universities. Often, the high cost of access to databases with research results, some of which have been financed with public funds, limits technological progress and the quality of life in developing countries. Therefore, users in those countries must be able to find research results in open access. Twenty-first-century researchers have a new vision of how to disseminate their scientific production and make it known to the world, so educational institutions must have technology platforms that lead them to an effective digital transformation.

Furthermore, the visibility of resources positively affects how often researchers’ works are cited [] and increases the number of citations received []. It is necessary to promote institutional repositories so that society can take advantage of the knowledge in their resources []. Researchers must find it beneficial to publish in the institutional repository, for example, by knowing that it links with other sectors. Therefore, the institution must promote the platform, including training on how to access scientific production internally and externally. Regarding the question “Do you think publishing in open access can increase any of the following aspects in which you function as a researcher?”, the researchers considered that the repository’s links with other sectors were ultimately the most impactful for solving local and international societal problems.

5.2. Perceived Findability from Search Engines Indicator

If researchers know the benefits of uploading to the institutional repository, they will invest the time needed to upload their research and search for educational resources, and thereby demonstrate a better attitude toward the platform. Figure 4 shows the most relevant results of the perceived findability from search engines indicator obtained from the focus groups. For this indicator, the participants noted that a single window into the institutional repository for finding scientific information can save time, given the massification of information and the implementation of machine learning tools, which allow search preferences to be categorized and managed easily. According to [], the development of repository platforms requires scaling open-source software, which involves complex negotiation with the commercial brands that own cutting-edge technologies. However, repositories have technology that is interoperable with other repositories and with services known by users, such as Google Scholar and Scopus, and other features can be added through a discovery tool []. The academic community perceives that the technology used for repositories is lagging behind that of commercial platforms. Furthermore, the high cost of implementing new features influences whether repositories remain cutting-edge. For that reason, it is essential to emphasize the functionalities and services offered by the institutional repository.

There is a need to develop different types of support and article-sharing policies for users to facilitate or increase their article-sharing behaviors []. As Ref. [] notes, it should be considered that researcher-authors often do not have enough information to know which version they can self-archive and are concerned about the copyright of their works and the additional time and effort required for self-archiving. The results show that motivating authors and researchers requires not only smart technologies but also their integration with institutional policies, researchers’ awareness and knowledge, and user-friendly processes for publishing in open access. The culture of open access must therefore go beyond user-friendly technology, and universities must encourage open access practices for their scientific research.

5.3. Management of Digital Identity 2.0

The management of scientific information involves the need for researchers in an academic community to develop skills to use new information systems, preserve and disseminate their scientific production, and create peer networks to publicize what they do. As a result, their indicators in world indexes, such as Scopus or Google Scholar, can increase significantly. Figure 5 shows the most relevant information management results (Digital Identity 2.0 indicators) obtained from the focus groups; the participants’ answers reflected what they thought the term refers to, such as collaborating and connecting with other researchers. Ref. [] mentions that proper scientific information management involves knowing how to make citation reports, being aware of the research’s impact, identifying trends in one’s disciplinary research field, and calculating the h-index, among others.

Additionally, researchers should know how to publish openly in quality journals and share their publications in the repository []. Although collaborating and connecting with other researchers will help increase citations and impact indicators on various platforms, scientific management refers to evaluating and deliberately using all possible digital media to increase these indicators.
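Since the h-index is mentioned above as one of the calculations a researcher should be able to obtain, a minimal sketch of its standard definition may be useful: the h-index is the largest number h such that h of the author’s publications have at least h citations each. The function name and the citation counts below are hypothetical and only illustrate the calculation; they are not data from this study.

```python
def h_index(citations):
    """Return the largest h such that at least h papers have >= h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the paper at this rank still has enough citations
        else:
            break  # every later paper has fewer citations than its rank
    return h


# Hypothetical citation counts for one researcher's publications.
example_citations = [10, 8, 5, 4, 3, 0]
print(h_index(example_citations))  # prints 4
```

In this hypothetical example, four publications have at least four citations each, while the fifth has only three, so the h-index is 4.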

5.4. User Interface Design

Beyond good graphic design, a web portal involves information design, web programming, and interaction design, all of which comprise information architecture. Ref. [] focused on a study where users evaluated the system and established various categories to assess repositories. The study showed that the search engine, metadata, and content were the elements most valued by users, which is why the researchers inquired about interaction and information design in the focus groups (see Figure 6). According to [], the search box is easy to understand and use without previous experience but restricts the user to keywords, while advanced search requires more knowledge of the content and sophisticated search skills. Ref. [] explains that the user experience encompasses users’ informational behavior, competencies, and needs. Therefore, the interface design must be oriented to facilitate the search for digital resources to significantly improve user satisfaction [].

As part of the user-centered design methodology, the information architecture is built during the requirements and design phases and validated by the user through prototypes and tests []. Thus, it is necessary to design and organize the repository navigation and then configure a basic search engine that invites exploration at first sight. It is also necessary to place a help section with various communication methods for questions or comments. Institutional repositories observe standards for cataloging information through metadata based on the Dublin Core standard and protocols such as OAI-PMH. These make interoperability and indexing possible with Google Scholar, among other functionalities. Open access presents multiple challenges []. For academic libraries, these include developing models to support research data management, assisting with data management plans, expanding library staff qualifications to include data science literacy, and integrating library services into the education and research process through research grants, among others.
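To illustrate how the Dublin Core metadata and the OAI-PMH protocol mentioned above support interoperability, the following minimal sketch builds an OAI-PMH `ListRecords` request for the `oai_dc` metadata format and reads the Dublin Core fields from a response. The base URL, the record identifier, and the sample record are hypothetical and do not describe the repository studied here; the sample response is embedded as a string so that no network access is needed.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlencode

# XML namespaces defined by OAI-PMH 2.0 and Dublin Core.
OAI_NS = "http://www.openarchives.org/OAI/2.0/"
DC_NS = "http://purl.org/dc/elements/1.1/"


def build_list_records_url(base_url, set_spec=None):
    """Build an OAI-PMH ListRecords request URL for unqualified Dublin Core."""
    params = {"verb": "ListRecords", "metadataPrefix": "oai_dc"}
    if set_spec:
        params["set"] = set_spec  # optional: restrict harvesting to one set
    return base_url + "?" + urlencode(params)


def parse_dublin_core(xml_text):
    """Extract the identifier and Dublin Core fields of each harvested record."""
    root = ET.fromstring(xml_text)
    records = []
    for record in root.iter(f"{{{OAI_NS}}}record"):
        identifier = record.findtext(f"{{{OAI_NS}}}header/{{{OAI_NS}}}identifier")
        fields = {}
        for element in record.iter():
            if element.tag.startswith(f"{{{DC_NS}}}"):
                name = element.tag.split("}", 1)[1]  # e.g. "title", "creator"
                fields.setdefault(name, []).append((element.text or "").strip())
        records.append({"identifier": identifier, "fields": fields})
    return records


# Hypothetical response containing a single record (illustration only).
SAMPLE_RESPONSE = """
<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/">
  <ListRecords>
    <record>
      <header><identifier>oai:repository.example.edu:123/456</identifier></header>
      <metadata>
        <oai_dc:dc xmlns:oai_dc="http://www.openarchives.org/OAI/2.0/oai_dc/"
                   xmlns:dc="http://purl.org/dc/elements/1.1/">
          <dc:title>Sample open access article</dc:title>
          <dc:creator>Doe, Jane</dc:creator>
          <dc:type>article</dc:type>
          <dc:date>2021</dc:date>
        </oai_dc:dc>
      </metadata>
    </record>
  </ListRecords>
</OAI-PMH>
"""

print(build_list_records_url("https://repository.example.edu/oai/request"))
for item in parse_dublin_core(SAMPLE_RESPONSE):
    print(item["identifier"], item["fields"]["title"], item["fields"]["type"])
```

A harvester or discovery service would send the generated URL to the repository’s OAI-PMH endpoint and process the returned records in this way; the `dc:type` field is also the kind of metadata that a search filter by document type could rely on.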

6. Conclusions

The objective of this study was to ascertain researchers’ perceptions of the usefulness of an institutional repository, their motivations to use it, its perceived usability for uploading open access resources, and its findability. A descriptive qualitative approach was used to understand the users’ perception of the interface and the user experience of the institutional repository. This study revealed that not all researchers in an academic community know what a repository is and therefore do not use it. Given the results obtained, efforts should be directed towards disseminating the use of the repository as an open educational practice (OEP) that accomplishes the scientific purpose of social appropriation of society’s knowledge. It is important for librarians to use techniques to investigate the user experience of the academic community and thereby enhance the technical, ontological, and organizational components of the institutional repository (IR). It is worth mentioning that in the focus groups, librarians were invited as observers of the users’ comments. The number of researchers and students recruited for the focus groups was validated by the method used. The participants recruited represented a research group that promotes open access practices in an educational institution through the binational energy project in Mexico.

The dissemination of scientific knowledge through open access journals and institutional repositories is required for universities to provide alternatives to the high subscription costs of databases with restricted-access knowledge. This study presents revealing findings obtained from focus groups and the design of an instrument to conduct these focus groups. The latter was helpful for producing training strategies and assessing the acceptance of an institutional repository in a higher education institution in Mexico. To answer the research question in this study, “What are the motivations and experiences of users in an academic community when publishing scientific information in an institutional repository?”, we found that it is necessary to promote the institutional repository through campaigns and training that raise its visibility and make the academic community aware of the digital platforms that support their teaching, research practices, and dissemination.

Promoting the institutional repository through dissemination campaigns also implies training researchers on the digital platforms that facilitate their teaching and research practices. Digital platforms must be designed to increase motivation and technological appropriation by providing a solution to the end-user, which is why the information architecture of these platforms is based on thesauri and knowledge organization systems (KOS).

We used focus groups and other user-centered techniques to discover participants’ motivations regarding the technologies they use. These led us to explore adding new functionalities to information systems and user interactions and, importantly, to know how participants were feeling when using the repository technology and if they had a positive experience. One unexpected finding that emerged from the focus groups was that 50% of the participants were not aware of the institutional repository. Those who did know it had received instruction from a professor to upload their scientific resources. This finding was significant because it resulted in creating a virtual workshop-course, responding to the need to publicize the repository and the Open Educational Movement, and emphasizing the impact these have on an academic community. Additionally, we discovered that if users do not know the impact that their scientific production has on the repository, they will not use it again unless they are intrinsically motivated by the benefits that visibility has in their academic environments. Observing the participants’ operational difficulties with the repository’s search and self-archive functionalities, we created other instruments to measure the usability and impact if the user takes a virtual course and immediately performs these tasks.

A focus group is a data collection technique that allows opinions and experiences on a topic to be collected through carefully sequenced questions that explicitly focus on analyzing a product or service. Therefore, creating a comfortable and open environment is necessary to know if the product fulfills the participants’ expectations regarding its use, presentation, and characteristics. However, in this type of study, it is also necessary to verify the participants’ assertions and validate that they will use the product once their expectations are met. Another critical consideration is that participants may not mention errors that would deter them from using it.

Future studies should identify the functionalities of the next generation of institutional repositories, their integration with a CRIS (Current Research Information System), and the information architecture that will sustain both digital platforms. In line with Education 4.0, functionalities should facilitate collaboration and user interactions. They should include profile management, a discovery tool, and machine learning algorithms to provide statistics and recommendations to researchers and teachers. These functionalities could be analyzed, designed, programmed, and configured by technical support staff and designers according to the DSpace configuration.

Three essential aspects should be considered in future studies:

(1) Information search and the implementation of a discovery tool for institutional repositories: It is possible to provide searches that use filters and a system of alerts and that connect with other repositories, as well as to create a repository information architecture based on semantic web technologies. Machine learning can use this architecture to produce better predictions by exploiting the semantic links in knowledge graphs and linked datasets, moving towards a knowledge organization system (KOS) model.

(2) Management of digital identity: Components should be added to the repository so that researchers have the functionalities of a social network such as ResearchGate and can share their resources with the scientific community from there. In this way, each researcher’s identity is linked to the institution to which they belong, and their metrics are centralized when searching for their citation indexes. A streamlined repository workflow should be designed for the user, standardizing mandatory metadata, so that the user only enters mandatory fields and the rest is entered by the cataloguer authorizing the resource.

(3) Relevant indicators: Indicators should allow authors to autonomously access a space where they can identify the number of downloads of their documents, from where they are downloaded, the number of times their documents are accessed, and whether they have been cited by others.
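The indicators described in the third aspect can be pictured as a small per-document record. The sketch below is only one possible way to organize them, under stated assumptions: the class name, field names, and sample values are hypothetical and are not part of the repository studied or of DSpace.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class DocumentIndicators:
    """Hypothetical per-document indicators an author could consult."""
    title: str
    views: int = 0  # number of times the document is accessed
    downloads_by_country: Counter = field(default_factory=Counter)
    cited_by: list = field(default_factory=list)  # works citing the document

    def record_view(self):
        self.views += 1

    def record_download(self, country):
        self.downloads_by_country[country] += 1

    def record_citation(self, citing_work):
        self.cited_by.append(citing_work)

    def summary(self):
        """Return the indicators as a plain dictionary for display."""
        return {
            "title": self.title,
            "views": self.views,
            "downloads": sum(self.downloads_by_country.values()),
            "downloads_by_country": dict(self.downloads_by_country),
            "citations": len(self.cited_by),
        }


# Hypothetical usage events for a single document.
indicators = DocumentIndicators(title="Sample open access article")
indicators.record_view()
indicators.record_view()
indicators.record_download("Mexico")
indicators.record_download("Mexico")
indicators.record_download("Spain")
indicators.record_citation("Hypothetical citing article")
print(indicators.summary())
```

Keeping downloads grouped by origin makes it possible to report both the total number of downloads and where they come from using the same stored data.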

The challenge for universities is to implement their open access policies in intelligent digital platforms, i.e., platforms with artificial intelligence, machine learning, and open data interoperability, in order to attract researchers to publish in open access journals. However, universities should first focus on comprehensive training, covering strategies for how to conduct research, how to manage research data, and how to move towards open science. Self-archiving (deposit) is a good practice as long as the publishing researcher has the knowledge and skills regarding copyright, open access, embargo policies, where to publish, and issues related to open science. It is essential that institutions have a strategy to encourage these practices and provide training to their staff, as well as support staff who are constantly trained in international guidelines, metadata and publishing technologies, journals, and repositories.

Author Contributions

Conceptualization, L.I.G.-P., M.S.R.-M. and F.J.G.-P.; methodology, L.I.G.-P., M.S.R.-M. and F.J.G.-P.; formal analysis, L.I.G.-P., M.S.R.-M. and F.J.G.-P.; investigation, L.I.G.-P., M.S.R.-M. and F.J.G.-P.; resources, L.I.G.-P., M.S.R.-M. and F.J.G.-P.; data curation, M.S.R.-M.; writing-original draft preparation, L.I.G.-P.; writing-review and editing, L.I.G.-P.; visualization, L.I.G.-P.; supervision, M.S.R.-M. and F.J.G.-P.; project administration, M.S.R.-M. and F.J.G.-P.; funding acquisition, M.S.R.-M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the “Binational Laboratory on Smart Sustainable Energy Management and Technology Training” project, supported by the CONACYT SENER fund for Energy Sustainability (S0019-2013). The APC was funded by Tecnologico de Monterrey.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Acknowledgments

This study was part of the “Binational Laboratory on Smart Sustainable Energy Management and Technology Training” project, funded by the CONACYT SENER fund for Energy Sustainability (S0019-2013). The study was also part of the project “Increase of repository visibility improving the user experience and interoperability with the national repository,” supported by CONACYT de México. It was also part of the project of the Ministry of Economy and Competitiveness of Spain, DEFINES (a Digital Ecosystem Framework for an Interoperable Network-based Society; Ref. TIN2016-80172-R). The authors acknowledge the technical support of Writing Lab, Institute for the Future of Education, Tecnologico de Monterrey, Mexico, in the production of this work.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Confederation of Open Access Repositories, COAR. 2016. Available online: https://www.coar-repositories.org/ (accessed on 31 August 2021).

- Zervas, M.; Kounoudes, A.; Artemi, P.; Giannoulakis, S. Next Generation Institutional Repositories: The Case of the CUT Institutional Repository KTISIS. Procedia Comput. Sci. 2019, 146, 84–93.

- Barrueco Cruz, J.M.; Rico-Castro, P.; Bonora Eve, L.V.; Azorín Millaruelo, C.; Bernal, I.; Gómez Castaño, J.; Guzmán Pérez, C.; Losada Yáñez, M.; Marín del Campo, R.; Martínez Galindo, F.J.; et al. Guía Para La Evaluación de Repositorios Institucionales de Investigación. 2021. Available online: https://riunet.upv.es/handle/10251/166115 (accessed on 31 August 2021).

- García-Peñalvo, F.J.; De Figuerola, C.G.; Merlo, J.A. Open Knowledge: Challenges and Facts. Online Inf. Rev. 2010, 34, 520–539.

- Subiyakto, A.; Rahmi, Y.; Kumaladewi, N.; Huda, M.Q.; Hasanati, N.; Haryanto, T. Investigating Quality of Institutional Repository Website Design Using Usability Testing Framework. In AIP Conference Proceedings; AIP Publishing LLC: Jakarta, Indonesia, 2021; Volume 2331, p. 060016.

- Clements, K.; Pawlowski, J.; Manouselis, N. Open Educational Resources Repositories Literature Review–Towards a Comprehensive Quality Approaches Framework. Comput. Hum. Behav. 2015, 51, 1098–1106.

- Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. User Acceptance of Computer Technology: A Comparison of Two Theoretical Models. Manag. Sci. 1989, 35, 982–1003.

- Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340.

- Portz, J.D.; Bayliss, E.A.; Bull, S.; Boxer, R.S.; Bekelman, D.B.; Gleason, K.; Czaja, S. Using the Technology Acceptance Model to Explore User Experience, Intent to Use, and Use Behavior of a Patient Portal Among Older Adults With Multiple Chronic Conditions: Descriptive Qualitative Study. J. Med. Int. Res. 2019, 21, e11604.

- Hornbæk, K.; Hertzum, M. Technology acceptance and user experience: A review of the experiential component in HCI. ACM Trans. Comput.-Hum. Interact. 2017, 24, 1–30.

- Kim, J. Faculty self-archiving: Motivations and barriers. J. Am. Soc. Inf. Sci. Technol. 2010, 61, 1909–1922.

- Kim, Y.; Oh, J.S. Researchers’ article sharing through institutional repositories and ResearchGate: A comparison study. J. Librariansh. Inf. Sci. 2021, 53, 475–487.

- Antelman, K. Self-archiving practice and the influence of publisher policies in the social sciences. Learn. Publ. 2006, 19, 85–95.

- Moreno, W.E.C. Importancia de La Apropiación Social y El Acceso Abierto al Conocimiento Especializado En Ciencias Agrarias. Rev. Fac. Nac. Agron. Medellín 2017, 70, 8234–8236.

- Ramirez-Montoya, M.S.; Ceballos-Cancino, H.G. Institutional Repositories. In Research Analytics: Boosting University Productivity and Competitiveness through Scientometrics; CRC Press: Monterrey, Mexico, 2017.

- Meishar-Tal, H.; Pieterse, E. Why Do Academics Use Academic Social Networking Sites? Int. Rev. Res. Open Distrib. Learn. 2017, 18, 1–22.

- Kutay, S. Advancing Digital Repository Services for Faculty Primary Research Assets: An Exploratory Study. J. Acad. Librariansh. 2014, 40, 642–649.

- García-Peñalvo, F.J. The Future of Institutional Repositories. Educ. Knowl. Soc. 2017, 18, 7–19.

- Hakopov, Z.N. Digital Repository as Instrument for Knowledge Management (INIS-XA--16M5558); International Atomic Energy Agency (IAEA): Vienna, Austria, 2016.

- Gall, J.E. Ambient Findability. Peter Morville. (2005). O’Reilly Media. 188 Pp. $29.95 (Soft Cover). ISBN: 0-596-00765-5. Educ. Tech. Res. Dev. 2006, 54, 623–626.

- Chickering, F.W.; Yang, S.Q. Evaluation and Comparison of Discovery Tools: An Update. Inf. Technol. Libr. 2014, 33, 5.

- Walker, J. The NISO Open Discovery Initiative: Promoting Transparency in Discovery. Insights 2015, 28, 85–90.

- Borromeo, C.D.; Schleyer, T.K.; Becich, M.J.; Hochheiser, H. Finding Collaborators: Toward Interactive Discovery Tools for Research Network Systems. J. Med. Int. Res. 2014, 16, e244.

- González-Pérez, L.I.; Ramírez-Montoya, M.S.; García-Peñalvo, F.J. Discovery Tools for Open Access Repositories: A Literature Mapping. In Proceedings of the Fourth International Conference on Technological Ecosystems for Enhancing Multiculturality, Salamanca, Spain, 2–4 November 2016; pp. 299–305.

- Breeding, M. Tendencias Actuales y Futuras En Tecnologías de La Información Para Unidades de Información. Prof. Inform. 2012, 21, 9–15.

- Fernández-Luna, A.; Pérez-Montoro, M.; Guallar, J. Metodología Para La Mejora Arquitectónica de Repositorios Universitarios. In Anales de Documentación; Facultad de Comunicación y Documentación y Servicio de Publicaciones de la Universidad de Murcia: Murcia, Spain, 2019; Volume 22.

- Teets, M.; Goldner, M. Libraries’ Role in Curating and Exposing Big Data. Future Internet 2013, 5, 429–438.

- García-Peñalvo, F.J. Digital identity as researchers. The evidence and transparency of scientific production. Educ. Knowl. Soc. 2018, 19, 7–28.

- García, N.R.; López-Cozar, E.D.; Torres-Salinas, D. Cómo Comunicar y Diseminar Información Científica En Internet Para Obtener Mayor Visibilidad e Impacto. Aula Abierta 2011, 39, 41–50. Available online: https://dialnet.unirioja.es/servlet/articulo?codigo=3691479 (accessed on 31 August 2021).

- Barbour, K.; Marshall, P. The Academic Online: Constructing Persona through the World Wide Web. First Monday 2012, 17, 1–12.

- Melero, R.; Hernández San Miguel, F.J. Acceso Abierto a Los Datos de Investigación, Una Vía Hacia La Colaboración Científica. Rev. Española Doc. Científica 2014, 37.

- Ramírez, M.-S.; García-Peñalvo, F.J. Co-Creación e Innovación Abierta: Revisión Sistemática de Literatura = Co-Creation and Open Innovation: Systematic Literature Review. Comunicar 2018, 54, 9–18.

- Priem, J.; Hemminger, B.H. Scientometrics 2.0: New Metrics of Scholarly Impact on the Social Web. First Monday 2010, 15.

- Rovira, C.; Codina, L.; Guerrero-Solé, F.; Lopezosa, C. Ranking by Relevance and Citation Counts, a Comparative Study: Google Scholar, Microsoft Academic, WoS and Scopus. Future Internet 2019, 11, 202.

- Freire, F.C.; Rogel, D.E.R.; Hidalgo, C.V.R. La Presencia e Impacto de Las Universidades de Los Países Andinos En Las Redes Sociales Digitales. Rev. Lat. Comun. Soc. 2014, 69, 571–592.

- Alonso-Arévalo, J. Alfabetización En Comunicación Científica: Acreditación, OA, Redes Sociales, Altmetrics, Bibliotecarios Incrustados y Gestión de La Identidad Digital; Presented at the Alfabetización Informacional; Reflexiones y Experiencias: Lima, Peru, 2014.

- Jacobson, R.E.; Jacobson, R. Information Design; MIT Press: Cambridge, MA, USA, 2000; Available online: https://mitpress.mit.edu/books/information-design (accessed on 31 August 2021).

- Saffer, D. Designing for Interaction: Creating Innovative Applications and Devices; New Riders: London, UK, 2010; Available online: https://www.oreilly.com/library/view/designing-for-interaction/9780321679406/ (accessed on 31 August 2021).

- Iso, D.I.S. 9241–210: 2010: Ergonomics of Human-System Interaction—Part 210: Human-Centred Design for Interactive Systems (Formerly Known as 13407). Switz. Int. Stand. Organ. 2010, 1. Available online: https://www.iso.org/standard/52075.html (accessed on 31 August 2021).

- Vermeeren, A.P.; Law, E.L.-C.; Roto, V.; Obrist, M.; Hoonhout, J.; Väänänen-Vainio-Mattila, K. User Experience Evaluation Methods: Current State and Development Needs. In Proceedings of the 6th Nordic Conference on Human-Computer Interaction: Extending Boundaries, Reykjavik, Iceland, 16–20 October 2010; pp. 521–530.

- González-Pérez, L.I.; Ramírez-Montoya, M.-S.; García-Peñalvo, F.J. User Experience in Institutional Repositories: A Systematic Literature Review. In Digital Libraries and Institutional Repositories: Breakthroughs in Research and Practice; Information Resources Management Association: Hershey, PA, USA, 2020; pp. 423–440.

- Khoo, M.; Kusunoki, D.; MacDonald, C. Finding Problems: When Digital Library Users Act as Usability Evaluators. In Proceedings of the 2012 45th Hawaii International Conference on System Sciences, Maui, HI, USA, 4–7 January 2012; pp. 1615–1624.

- Ferran, N.; Guerrero-Roldán, A.-E.; Mor, E.; Minguillón, J. User Centered Design of a Learning Object Repository. In International Conference on Human Centered Design; Springer: Berlin/Heidelberg, Germany, 2009; pp. 679–688.

- Buchan, J. An empirical cognitive model of the development of shared understanding of requirements. In Requirements Engineering; Springer: Berlin/Heidelberg, Germany, 2014; pp. 165–179.

- Silver, K. What Puts the Design in Interaction Design. UX Matters 2007. Available online: https://www.uxmatters.com/mt/archives/2007/07/what-puts-the-design-in-interaction-design.php (accessed on 31 August 2021).

- Krueger, R.A.; Casey, M.A. Focus Group Interviewing. Handb. Pract. Program Eval. 2010, 3, 378–403. Available online: http://ebookcentral.proquest.com/lib/umanitoba/detail.action?docID=2144898 (accessed on 31 August 2021).

- Piercy, F.P.; Hertlein, K.M. Focus Groups in Family Therapy Research. In Research Methods in Family Therapy; The Guilford Press: New York, NY, USA, 2005; pp. 85–99. Available online: https://psycnet.apa.org/record/2005-08638-005 (accessed on 31 August 2021).

- Yin, R.K. Design and Methods. Case Study Res. 2003, 3. Available online: https://doc1.bibliothek.li/acc/flmf044149.pdf (accessed on 31 August 2021).

- Lakshmi, S.; Mohideen, M.A. Issues in Reliability and Validity of Research. Int. J. Manag. Res. Rev. 2013, 3, 2752. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.1065.6043&rep=rep1&type=pdf (accessed on 31 August 2021).

- Cortés-Camarillo, G. Confiabilidad y validez en estudios cualitativos. Educ. Y Cienc. 1997, 1, 77–82. Available online: http://educacionyciencia.org/index.php/educacionyciencia/article/view/111 (accessed on 31 August 2021).

- Lincoln, Y.; Guba, E. Criterios de Rigor Metodológico En Investigación Cualitativa 1985. Available online: https://us.sagepub.com/en-us/nam/naturalistic-inquiry/book842 (accessed on 31 August 2021).

- Amador, M.G. Ética de La Investigación. Rev. Iberoam. De Educ. 2010, 54, 1–2.

- Antón Ares, P. Red Openergy. Training and Research Experiences for the Accessible Instructional Design. Educ. Knowl. Soc. 2018, 19, 31–51.

- Andrés, J.S.; Viguera, C. Análisis: Factor de Impacto y Comunicación Científica. Rev. De Neurol. 2009, 49, 57.

- Harnad, S.; Brody, T.; Vallières, F.; Carr, L.; Hitchcock, S.; Gingras, Y.; Oppenheim, C.; Stamerjohanns, H.; Hilf, E.R. The Access/Impact Problem and the Green and Gold Roads to Open Access. Ser. Rev. 2004, 30, 310–314.

- Seo, J.-W.; Chung, H.; Seo, T.-S.; Jung, Y.; Hwang, E.S.; Yun, C.-H.; Kim, H. Equality, Equity, and Reality of Open Access on Scholarly Information. 2017. Available online: https://hdl.handle.net/10371/139236 (accessed on 31 August 2021).

- Montenegro Marin, C.E.; Garcia-Gaona, P.A.; Gaona-Garcia, E.E. Agentes inteligentes para el acceso a material bibliotecario a partir de dispositivos móviles. Ing. Amazon. 2014, 7, 16–26. Available online: http://www.uniamazonia.edu.co/revistas/index.php/ingenierias-y-amazonia/article/view/1085/1342 (accessed on 31 August 2021).

- Shneiderman, B. Research Agenda: Visual Overviews for Exploratory Search. Inf. Seek. Support Syst. 2008, 11. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.206.1390&rep=rep1&type=pdf#page=96 (accessed on 31 August 2021).

- Rosenfeld, L.; Morville, P. Information Architecture for the World Wide Web; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2002; Available online: https://www.oreilly.com/library/view/information-architecture-for/0596527349/ (accessed on 31 August 2021).

- Tzanova, S. Changes in Academic Libraries in the Era of Open Science. Educ. Inf. 2020, 36, 281–299.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).