Abstract

Using technology or engaging with research or medical treatment typically requires user consent: agreeing to terms of use with technology or services, or providing informed consent for research participation, for clinical trials and medical intervention, or as one legal basis for processing personal data. Introducing AI technologies, where explainability and trustworthiness are focus items for both government guidelines and responsible technologists, poses additional challenges. Understanding enough of the technology to be able to make an informed decision, or consent, is essential but involves an acceptance of uncertain outcomes. Further, the contribution of AI-enabled technologies, not least during the COVID-19 pandemic, raises ethical concerns about the governance associated with their development and deployment. Using three typical scenarios (contact tracing, big data analytics and research during public emergencies), this paper explores a trust-based alternative to consent. Unlike existing consent-based mechanisms, this approach sees consent as a typical behavioural response to perceived contextual characteristics. Decisions to engage derive from the assumption that all relevant stakeholders, including research participants, will negotiate on an ongoing basis. Accepting dynamic negotiation between the main stakeholders as proposed here introduces a specifically socio-psychological perspective into the debate about human responses to artificial intelligence. This trust-based consent process leads to a set of recommendations for the ethical use of advanced technologies as well as for the ethical review of applied research projects.

1. Introduction

Although confusion over informed consent is not specific to a public health emergency, the COVID-19 pandemic has brought into focus issues with consent across multiple areas often affecting different stakeholders. Consent, or Terms of Use for technology artefacts including online services, is intended to record the voluntary willingness to engage. Further, it is assumed to be informed: that individuals understand what is being asked of them or that they have read and understood the Terms of Use. It is often unclear, however, what this entails. For the user, how voluntary is such consent, and for providers, how much of their technology can they represent to their users? As an example from health and social care, contact tracing—a method to track transmission and help combat COVID-19—illustrates some of the confusion. Regardless of the socio-political implications of non-use, signing up for the app would imply a contract between the user and the service provider based on appropriate use of the app and limiting the liability of the provider. However, since it would typically involve the processing of personal data, there may also be a request for the user (now a data subject) to agree to that processing. In the latter case, consent is one possible legal basis under data protection law for the collection and exploitation of personal data. In addition, though, the service provider may collaborate with researchers and wish to share app usage and user data with them. This too is referred to as (research) consent, that is the willingness to take part in research. Finally, in response to an indication that the app user has been close to someone carrying the virus, they may be invited for a test; they would need to provide (clinical) consent for the clinical intervention, namely undergoing the test. It is unclear whether individuals are aware of these different, though common, meanings of consent, or of the implications of each. 
Added to that, there may be a societal imperative for processing data about individual citizens, which implies that there is a balance to be struck between individual and community rights.

Such examples emerge in other domains as well. Big Tech companies, for instance, may request user consent to process their personal data under data protection law. They may, however, intend to share that data with third parties to target advertising. This involves some degree of profiling, which is only permitted under European data protection regulation in specific circumstances. Although legally responsible for the appropriate treatment of their users’ data, the service provider may not understand enough of the technology to meet their obligations. Irrespective of technology, the user too may struggle to identify which purpose or purposes they are providing consent for. With social media platforms, the platform provider must similarly request data protection consent to store and process their users’ personal data. They may also offer researchers access to the content generated on their platform or to digital behaviour traces for research purposes. This would come under research consent rather than specifically data protection consent. In these two cases, the user must first identify the different purposes for which their (service) data may be used under the same consent. Secondly, they may need to review different types of consent: one covering their data as used to provide the service, and another covering the content they generate or the activities they engage in as used for research.

In this paper, I will explore the confusions around consent in terms of common social scientific models. This provides a specifically behavioural conception of the dialogue associated with consent contextualised within an ecologically valid presentation of the underlying mechanisms. As such, it complements and extends the discussion on explainable artificial intelligence (AI). Instead of focusing on specific AI technology, though, this discussion centres on the interaction of users with technologies from a perspective of engagement and trust rather than specifically focusing on explainability.

Overview of the Discussion

The discussion is organised as follows. Section 2 provides an overview of responsible and understandable AI as perceived by specific users, and in related government attempts to guide the development of advanced technologies. In Section 3, I introduce behavioural models describing general user decision forming and action. Section 4 covers informed consent, including how it applies in research ethics in Section 4.1. (For the purpose of this article, ethics is used as an individual, subjective notion of right and wrong; moral, by contrast, would refer to more widely held beliefs of what is acceptable versus what is not [1].) Specific issues with consent in other areas are described in Section 4.2, including technology acceptance (Section 4.3). Section 4 finishes with an introduction to trust in Section 4.4, which I develop into an alternative to existing Informed Consent mechanisms.

Having presented different contexts for consent, Section 5 considers a trust-based approach applied to three different scenarios: Contact Tracing, Big Data Analytics and Public Health Emergencies. These scenarios are explored to demonstrate trust as an explanatory mechanism to account for the decision to engage with a service, share personal data or participate in research. As such, unlike existing consent-based mechanisms, a trust-based approach introduces an ecologically sound alternative to Informed Consent free from any associated confusion, and one derived from an ongoing negotiated agreement between parties.

2. Responsible and Explainable AI

Technological advances have seen AI components introduced across multiple domains such as transportation, healthcare, finance, the military and legal assessment [2]. At the same time, user rights to interrogate and control their personal data as processed by these technologies (Art. 18, 21 and 22 [3]) call for a move away from a black-box implementation [2,4] to greater transparency and explainability (i.a., [5]). Explainable AI as a concept has been around at least since the beginning of the millennium [2] and has been formalised in programs such as that run by DARPA in the USA [6]. In this section, I will consider some of the research and regulatory aspects as they relate to the current discussion.

The DARPA program defines explainable AI in terms of:

"… systems that can explain their rationale to a human user, characterize their strengths and weaknesses, and convey an understanding of how they will behave in the future"[6]

Anthropomorphising technology in this way has implications for technology acceptance (see [7]; and Section 4.3 below). Surveys by Adadi and Berrada [2] and Arrieta and his colleagues [5] focus primarily on mapping out the domain from recent research. Although both conclude there is a lack of consistency, common factors include explainability, transparency, fairness and accountability. Whilst they recognise those affected by the outcomes, those using technology for decision support, regulators and managers all as important stakeholders, Arrieta et al. focus ultimately on developers and technologists with a call to produce “Responsible AI” [5]. Much of this was found previously in our own 2018 Delphi consultation with domain experts. In confirming accountability in technology development and use, however, experts also called for a new type of ethics and encouraged ethical debate [8]. Adadi and Berrada meanwhile emphasise instead the different motivations and types of explainability: for control, to justify outcomes, to enable improvement, and finally to provide insights into human behaviours (control to discover) [2]. Došilović and his colleagues highlight a need for formalised measurement of subjective responses to explainability and interpretability [9], whereas Samek et al. propose a formalised, objective method to evaluate at least some aspects of algorithm performance [4]. Meanwhile, Khrais sought to investigate the research understanding of explainability, discovering not only terms like explanation, model and use which might be expected, but also more human-centric concepts like emotion, interpret and control [10].

Looking beyond the interpretability of AI technologies, other studies seek to explore the implications of explainability for stakeholders, and especially for those dependent on its output (for instance, patients and clinicians using an AI-enabled medical decision-support system). The DARPA program seeks to support “explanation-informed acceptance” via an understanding of the socio-cognitive context of explanation [6]. Picking up on such a human-mediated approach, Weitz and her colleagues demonstrate how even simple methods, in their case the use of an avatar-like component, encourage and enhance perceptions of understanding the technology [7]. Taking this further and following [9] on trust, Israelsen and Ahmed focus on trust-enhancing “algorithmic assurances”, which echo traditional constructs such as trustworthiness indicators in the trust literature (see Section 4.4) [11]. All of this comes together in positioning AI explainability as a co-construction of understanding between explainer (the advanced AI-enabled technology) and explainee (the user) [12]. This ongoing negotiation around explainability echoes my own trust-based alternative to the dialogue around informed consent below (Section 4.4).

Much of the research above makes explicit a link between the motivation towards explainable or responsible AI with regulation and data subject rights [2,4,5,9,11,12]. With specific regard to big data, the Toronto Declaration puts the onus on data scientists and to some degree governance structures to protect individual rights [13]. However, human rights conventions often balance individual rights with what is right for the community. For example, although the first paragraph of Art. 8 on privacy upholds individual rights and expectations, the second provides for exceptions where required by the community [14]. Individual versus community rights are significant for contact tracing and similar initiatives associated with the pandemic. While calling upon technologists for transparency and fairness in their use of data, the UK Government Digital Services guidance also tries to balance community needs with individual human rights [15]. The UK Department of Health and Social Care introduces the idea that both clinicians and patients, that is multiple stakeholders, need to be involved and to understand the technology [16]. Similarly, the EU stresses that developers, organisations deploying a given technology, and end-users should all share some responsibility in specifying and managing AI-enabled technologies, without considering how such technologies might disrupt existing relationships [17].

The focus on transparency and explainability within the (explainable) AI literature is relevant to the idea that consent should be informed. Although the focus is often on technologists [5,8], this assumes that all stakeholders—those affected by the output of the AI component, those using it for decision support, and those developing it (cf. [5])—share responsibility for the consent process. Even where studies have focused on stakeholder interactions and the co-construction of explainability [12], there is an evident need to consider the practicalities of the negotiation between parties to that process. For contact tracing, for example, who is responsible to the app user for the use and perhaps sharing of their data? Initially, the service provider would assume this role and make available appropriate terms of use, a privacy notice and privacy policy. However, surely the data scientist providing algorithms or models for such a system at least needs to explain the technology? A Service Level Agreement (SLA) for a machine-learning component would not typically involve detail about how a model was generated or its longer term performance. If it is not clear what stakeholders are responsible for, it becomes problematic to identify who should be informing the app user or participant of what to expect. Further, with advanced, AI-enabled technologies, not all stakeholders may be able to explain the technology. A clinician, for instance, is focused on care for their patients; they would not necessarily know how a machine-learning model had been generated or what the implications would be. There would have to be a paradigm shift perhaps before they consider trying to understand AI-technologies.

Leading on from studies which situate explainable AI within a behavioural context [6,11,12], I take the more general discussion about the use and effects of advanced technologies into the context of planned behaviour (in Section 3) and extend discussions of trust [9,11] into the practical consideration of informed consent in a number of different domains. Starting with contact tracing and similar applications of AI technologies (Section 5), this discussion seeks to explore the confusion around consent in a practical context, evaluate the feasibility of transparency, and review responsible stakeholders for different scenarios. Consent to engage with advanced technologies therefore highlights the impact of AI rather than specifically how explainable the technology might be. Focusing on a new kind of ethics, this leads to the proposal for a trust-based alternative to consent.

3. Behaviour and Causal Models

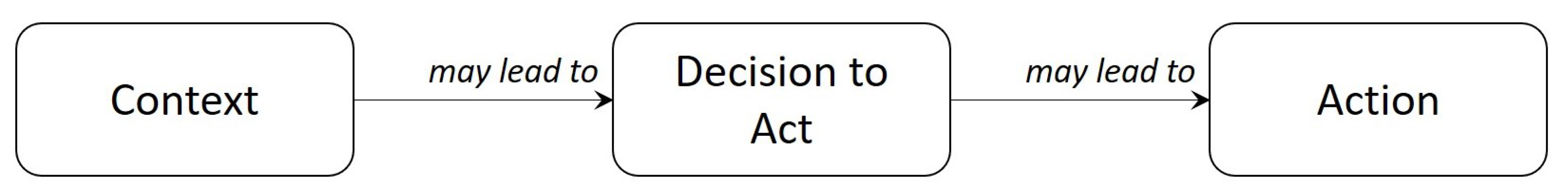

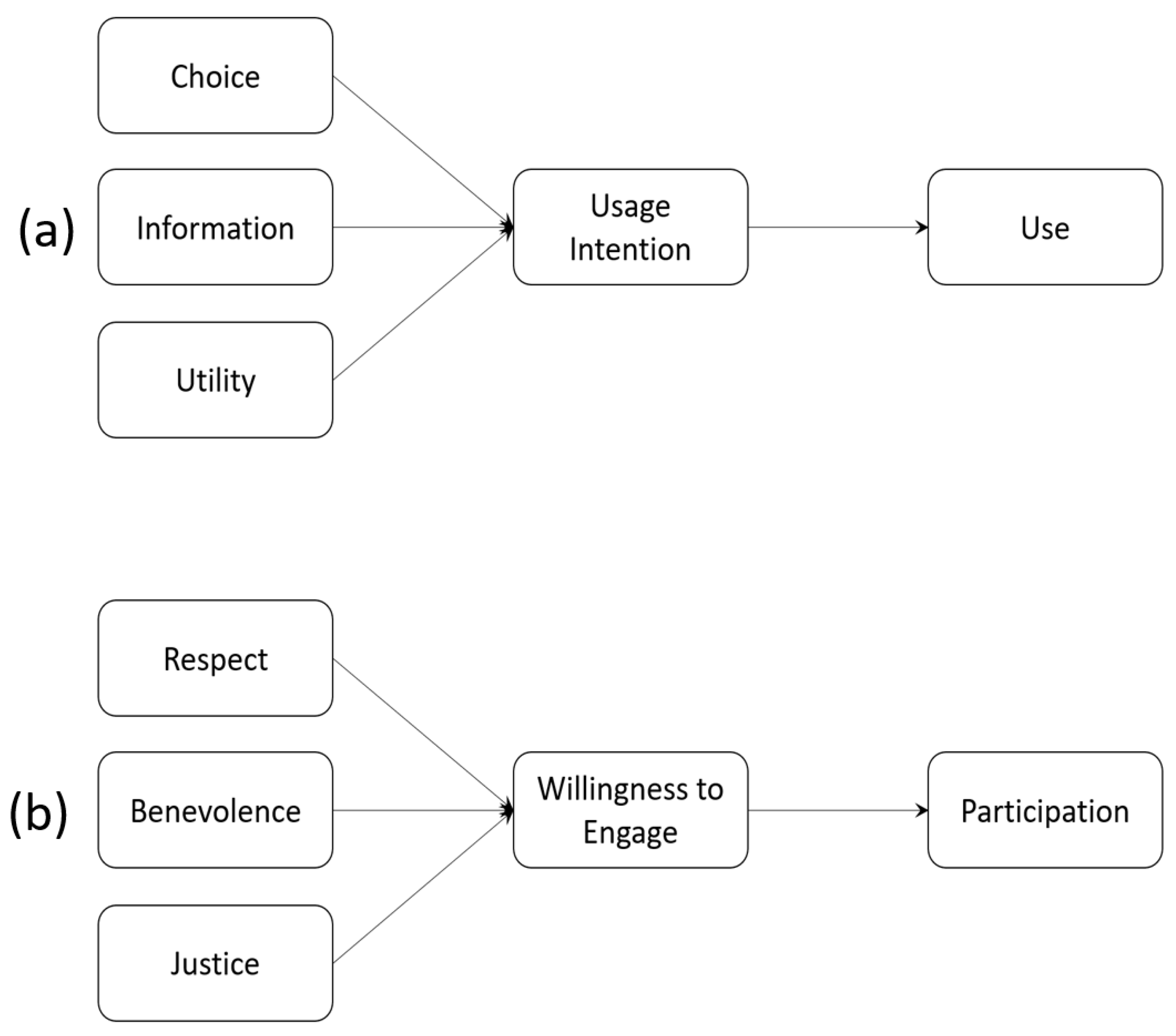

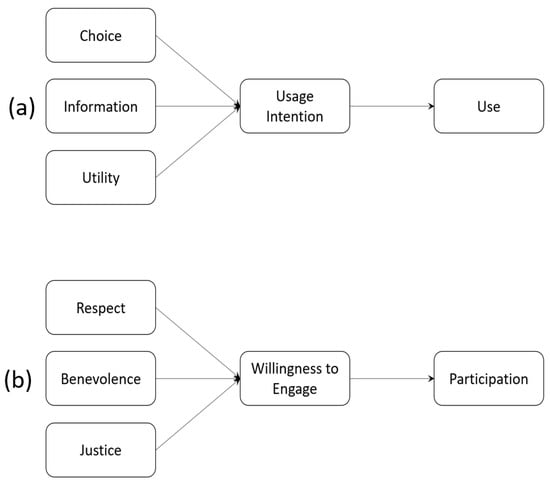

The Theory of Planned Behavior (TPB) assumes that a decision to act precedes the action or the actual activity itself. The separation between a decision to act and the action itself is important: we may decide to do something, but not actually do it. The decision to act is the result of a response to a given context. This is summarised in Figure 1. The context construct may include characteristics of the individual, of the situation itself, of the activity they are evaluating or of any other background factors. For instance, Figure 2 provides interpretations of Terms of Use ((a) the upper half of the figure) and Research Consent ((b) in the lower half) as behavioural responses.

Figure 1. Schematic representation of the Theory of Planned Behavior [18].

Figure 2. TPB Interpretation of Behaviours associated with (a) Terms of Use and (b) Research Consent.

Someone wishing to sign up to an online service, for instance, would be presented with the Choice to use the service or not, which may depend on the Information they are provided about the service provider and the perceived Utility they might derive from using the service. The context for Terms of Use therefore comprises Choice, Information and Utility. By contrast, a potential research participant would decide whether or not to take part (develop a Willingness to Engage) based on Respect shown to them by the researcher, whether the researcher is well disposed towards them (Benevolence), and that research outcomes will be shared equitably across the community (Justice).
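The structure described above can be made concrete with a short sketch. This is purely illustrative and not part of the TPB literature: the names `CONTEXTS`, `Outcome` and `respond` are hypothetical, and the rule that a decision forms only when every context construct is perceived as satisfied is a simplifying assumption introduced here. What it does show is the separation between the decision to act and the action itself, and the two sets of context constructs from Figure 2.

```python
from dataclasses import dataclass
from typing import Iterable

# Context constructs named in the text for each scenario (Figure 2).
# The dictionary layout itself is illustrative only.
CONTEXTS = {
    "terms_of_use": ("Choice", "Information", "Utility"),
    "research_consent": ("Respect", "Benevolence", "Justice"),
}


@dataclass
class Outcome:
    decided: bool  # the decision (or willingness) to engage
    acted: bool    # whether the action actually followed


def respond(scenario: str, perceived: Iterable[str], follows_through: bool) -> Outcome:
    """Toy behavioural response to a perceived context.

    Assumption (not from TPB): a decision forms only if every context
    construct for the scenario is perceived as satisfied.
    """
    perceived_set = set(perceived)
    decided = all(construct in perceived_set for construct in CONTEXTS[scenario])
    # Decision and action are kept separate: deciding does not guarantee acting.
    return Outcome(decided=decided, acted=decided and follows_through)


if __name__ == "__main__":
    # A user who decides to sign up but never actually does so.
    print(respond("terms_of_use", ["Choice", "Information", "Utility"], follows_through=False))
    # A potential participant who perceives Respect only.
    print(respond("research_consent", ["Respect"], follows_through=True))
```

In the first call a decision is formed but no action follows; in the second, no decision forms at all because Benevolence and Justice are not perceived. A fuller model would weight the constructs rather than require all of them.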

4. Informed Consent

Although the concept can be traced back historically [19], the definition of informed consent was formalised more recently after World War II in the Nuremberg Code [20] and the Helsinki Declaration [21]. The emphasis is on:

“Voluntary agreement to or acquiescence in what another proposes or desires; compliance, concurrence, permission.”(https://www.oed.com/oed2/00047775) (accessed on 12 May 2021)

For technology, terms of use focus on an agreement between user and provider, defining usage and limiting liability:

“[The] circumstances under which buyers of software or visitors to a public Web site can make use of that software or site”.[22] as part of a “binding contractual agreement” [23]

Indeed, Luger and her colleagues [24] and subsequently Richards and Hartzog make explicit the link between terms of use and:

“Consent [which] permeates both our law and our lives-particularly in the digital context”.(The term consent as used here will therefore include terms of use) [23]

In a medical or clinical context, and to counter the paternalism of earlier medical practice, the definition makes explicit who the main parties to the agreement are:

“[the] process of informed consent occurs when communication between a patient and physician results in the patient’s authorization or agreement to undergo a specific medical intervention”.(https://www.ama-assn.org/delivering-care/ethics/informed-consent) (accessed on 12 May 2021)

There are other concerns, though. For clinical treatment, the patient is reliant on the clinician to improve their well-being, and there is no guarantee that they will understand the implications of the treatment proposed. Further, concerned about their health or general prognosis, they may not be emotionally fit to think objectively about the information that they have been given. Additionally, during a public health emergency, there may be a legal or moral obligation to disclose data, such as infection status. This implies a balance to be struck between individual rights and the common good.

In research terms, consent is seen as integral to respect for the autonomy of the research participant:

“Respect for persons requires that subjects, to the degree that they are capable, be given the opportunity to choose what shall or shall not happen to them. This opportunity is provided when adequate standards for informed consent are satisfied.”(Part C (1), [25])

Latterly and with the emphasis on the right to privacy (Art. 8 [14]), consent is defined in data protection legislation as:

“any freely given, specific, informed and unambiguous indication of the data subject’s wishes by which he or she, by a statement or by a clear affirmative action, signifies agreement to the processing of personal data relating to him or her”.(Art. 4(11), [3])

Irrespective of context, all definitions assume the prerequisite characteristics of being voluntary (freely given) and informed (understanding what another proposes or desires). It is not always clear, however, if these basic requirements are met or even possible. In a clinical context, apart from the assumption that patients are emotionally objective rather than directly reliant on the expertise of the clinician, the stakeholder relationship is unequal. Notwithstanding the right to religious tolerance [19], assuming it is based on true autonomy [26], clinical judgement is subordinate to uninformed or emotionally charged patient preference [27]. Putting clinician and patient on a more equal footing may be preferable and preserve the interests of all parties [28].

The legal context, that is the requirements governing data protection and clinical practice, limits what can be done in practical terms to resolve confounding issues associated with informed consent. Any such legislation will tend to be jurisdiction-specific and, like the COPI Regulations [29] requiring the sharing of medical data, may be time- and domain-specific. It is also worth remembering, in the context of data protection, that there are different requirements depending on the nature of the data themselves (Art. 6, 9 and 10 [3]). These imply different legal bases, or justifications, to allow personal data to be processed (Art. 6(1)(a)–(f), Art. 9(2)(a)–(j) [3]). Consent (Art. 6(1)(a), Art. 9(2)(a) [3]) is only one such legal basis, but has implications such as the data subject’s right to object to processing or to withdraw the data (Chapter 3 [3]). In a research study involving the processing of personal data, consent to participate may be different from the lawful basis covering the collection of the personal data (for instance, Art. 89, i.a., [3]). Consequently, conflating data protection and research ethics consent may confuse the data subject/participant as well as restrict what a researcher can do with the data they collect, or worse still, undermine the researcher/participant relationship. Further, an institutional Research Ethics Committee (REC; also known as Institutional Review Board, IRB) or indeed a data protection review would need to consider whether consent refers to research participation only (clinical trial or other academic research studies), or a legal basis for data protection purposes, or both. By contrast, consent for technology use (terms of use) may be weighted against the user and in favour of the supplier. This may result from a failure to read or be able to read the conditions [24], to differentiate technology-use contexts [30], or even from a de facto assumption that app usage implies consent [22].
Notwithstanding these specific issues of the type of consent, the following sections consider first the governance of research ethics, before moving on to the various challenges regarding voluntary and informed consent from the research participant’s perspective, and subsequently to implications for technology acceptance and adoption. The final subsection will give a definition of trust and describe how it applies to various different contexts where consent would normally be expected.

4.1. Applied Research Ethics

Research ethics relates to more general applied ethical principles, which helps contextualise issues pertaining to informed consent. Research ethics review is often based on recommendations from the Belmont Report [25], which echo similar values in medical ethics [31], and assumes that activity is research [32] rather than service evaluation, for instance. The primary focus includes:

- Participant Autonomy or Respect for the Individual: a guarantee to protect the dignity of the participant as well as respecting their willingness or otherwise to take part. This assumes that the researcher (including those involved with clinical research) has some expectation of outcomes and can articulate them to the potential participant. Deception is possible under appropriate circumstances [33], including the use of placebos. Big data requires careful thought, since it shifts the emphasis away from individual perspectives [34]. In so doing, a more societally focused view may be more appropriate [35]. Originally, autonomy related directly to the informed consent process. However, the potential for big data and AI-enabled approaches suggests that this may need rethinking.

- Beneficence and non-malevolence: ensuring that the research will treat the participant well and avoid harm. This principle, most obvious for medical ethics, puts the onus on the researcher (or data scientist) to understand and control outcomes (see also [15,17]). Although there is no suggestion that the researcher would deliberately wish to cause harm, unsupervised learning may impact expected outcomes. Calls for transparency and the human-in-the-loop to intervene if necessary [8,17] imply a recognition that predictions may not be fixed in advance. Once again, the informed nature of consent might be difficult to satisfy.

- Justice: to ensure the fair distribution of benefits. The final principle highlights a number of issues. The CARE Principles [36], originally conceived in regard to indigenous populations, stress the importance of ensuring that all stakeholders within research at least are treated equitably. For all its limitations, the trolley-car dilemma [37] calls into question the assessment of justice. During a public health emergency, and inherent in contact tracing, the issue is whether justice is better served by protecting the rights of the individual, especially privacy, over a societal imperative.

In general ethics terms, autonomy, beneficence/non-malevolence, and justice reflect a Kantian or deontological stance: they represent the rules and obligations which govern good research practice (for a useful summary on applied ethical theories, see [38]). Utilitarianism—justifying the means on the basis of potential benefits—is subordinate to such obligations. However, Rawls’ justice theory and different outcomes to the trolley-car dilemma motivate a re-evaluation of a simple deontological versus utilitarian perspective. Further, the socially isolating effect of the COVID-19 pandemic raises the question as to whether a move away from focusing on the individual and considering instead the individual as defined by the collective community is more appropriate (see [39]). Indeed, the challenge comes when applying ethical principles in specific research environments [40,41], or medical situations [27]. Ethics review must therefore balance the potentially competing interests and expectations of research participants, researchers and the potential benefit to the community at large in determining what is ethically acceptable. At the same time, it is essential to consider whether the researcher or any other stakeholder can truly assess what the potential outcomes might be. In either case—individual versus community benefit, and the overall knowledge and transparency of the research involved—there needs to be an ongoing negotiation amongst stakeholders to agree on an acceptable approach.

4.2. Issues with Consent

Leading on from the earlier discussion about confusions arising from consent, in this section I return to some specific issues with consent identified in the literature. As part of the consent process, a potential participant is provided with detailed information covering what the research is about, what they as participant will be expected to do, and any potential risks or benefits to them. They are typically given an opportunity to discuss this with the researcher, or indeed anyone else, for clarification. Ultimately, it is assumed that this will provide all the detail the research participant needs to make an informed decision about participation. Although there is some evidence to suggest both researchers and their participants are satisfied with the informed consent process [42], this raises the question as to whether participants are able to make a balanced decision based on that information (namely, their competence), but also whether they do in fact feel that they are free to make whatever decision they choose.

Researchers must therefore consider the ability of their potential participants to assimilate and understand the information provided when requesting participation. Difficulties may be due to the capacity of the participants themselves, but also to their understanding of the implications of the research for them and for others like them [43]. There are also indications that the amount of information provided [44], and the coverage of issues such as potential risks and benefits, may not be satisfactory in some contexts [45,46]. At the same time, researchers tend to be concerned about regulatory compliance as opposed to balancing what the research goals are and how to manage risks and challenges [47]. Ultimately, though, participants may simply fail to understand the implications of what they are being asked to agree to [48] or be unable to engage with the information provided [49]. Participants do, however, appreciate that researchers must balance multiple aspects of the proposed research and so would be prepared to negotiate on those terms with the researchers rather than be part of a regulatory compliance exercise [47]. Finally, it is not always clear whether researchers themselves are fully aware of the implications of what they are asking when they seek patient or participant willingness to engage [50].

Just as Biros et al. [43] highlight concerns about the broader, community implications of research, there are other social dimensions which should be considered. Nijhawan et al. [51] and Kumar [52], for instance, maintain that consent is really a Western construct. In their studies in India, they also stress that an independently made decision to participate could be influenced by institutional regard: patients may be influenced by an implicit trust in healthcare services, for example [51]. The cultural aspects here echo traditional differences between individualist and collectivist societies [53,54]. Only the former would be used to putting their own wishes and needs above those of the community at large. Indeed, for some cultures, the concept of self exists only as it is part of and dependent on a collective group [39]. This may not be as simple as individualism versus collectivism though: European data protection legislation, for example, puts the rights of the individual above those of the collective, whereas the opposite is true in the USA [40].

Nijhawan and his colleagues [51] highlight a more general concern about the informed consent process: if there is implicit trust in an institution influencing the consent decision, then there are emotional issues which need consideration (see also [55] on privacy; and [56] on trust). Ethics review in the behavioural sciences was introduced to provide additional oversight for what may be seen as unnecessarily stressful for participants [57,58]. However, this misses the point that potential participants may comply, and give their consent, because of a perceived power dynamic [59,60]: the participant may feel obliged to do as they are told to please the researcher. Alternatively, they may simply trust that the researcher is competent and means well which in turn encourages them to trust the researcher [47,48]. Indeed, there is anecdotal evidence that participants would prefer just to get on with the research [48]; and in areas like clinical treatment, consent is almost irrelevant [61]. Whether or not the consent process really reflects true autonomy is not always clear [26].

Like others, Nijhawan et al. [51] make a distinction between consent (the formal agreement to engage based on participant competence) and assent (a less formal indication of such agreement). So, while a parent or guardian must provide legally based consent for their child to take part, the child themselves should provide assent. The latter is not legally required, but without it there is no monitoring of continuing motivation and willingness to carry on with participation. Assent, then, is an informal agreement which should be in place to preserve the quality of the research itself, though it is not required by law. Irrespective of whether consent or assent is at issue, there is an argument that it should be re-negotiated throughout a given research study [48,62]. Otherwise, and learning from considerations around biobanks and the ongoing exploitation of samples, informed consent may become too broad to be effective [63] and need continued review [64].

Finally, perhaps most fundamentally, there is the question of whether consent based on full disclosure of information is practical (see Section 2 above) or even desirable. There are contexts within which deliberate deception can be justified [33], and where traditional informed consent is actually undermining research progress [65]. Similarly, as well as competence and the emotional implications of illness or duress discussed above, there are always cases where full disclosure may not be possible. One such example, which will be explored below, concerns public health emergencies and vaccination programs, as well as inadvertent third-party disclosures [66]. Even within a clinical context, O’Neill suggests informed consent be replaced with an informed request: extending that to research, the participant would effectively respect the researcher’s competence and agree on that basis to proceed.

Given the nature and extent of issues raised in the literature, it is important to reconsider the purpose of the relationship between research participant and researcher in light of generalised deontological obligations such as autonomy (respect for the individual), benevolence and non-malevolence, and justice. In legal terms alone, problems with informed consent have been summarised thus [23]: consent may be unwitting (or unintentional) as in cases where acceptance is assumed by default [22], non-voluntary or coerced, and incapacitated in that a full understanding is not possible [23]. With that in mind, empirical research is ultimately a negotiation between the researcher and participant, or indirectly between researcher and the data they can access. So, it is unclear whether the informed consent process is adequate to capture what is needed for regulatory compliance or even the reality of empirical research. A research participant is effectively prepared to expose themselves to the vulnerability that the research protocol may not provide the expected outcomes, but believes that the researcher respects them and their input; researchers, in turn, will do what they can to support participants throughout the research lifecycle and consider the implications of eventual publication. The willingness to be vulnerable in this way has been discussed repeatedly in the trust literature within the social sciences for many years. After a review of implications for technology acceptance in the next section, discussion subsequently turns to the potential benefit of a trust-based approach to research participation.

4.3. Technology Acceptance

Any account of how users decide to engage with technology, especially for services like contact tracing, needs to consider issues of consent. This is not always the case (see [23]). Traditionally, causal models predicting technology adoption have focused particularly on features of the technology itself, such as perceived ease of use and perceived usefulness [67]. The Context (see Section 3) derives solely from the technology. However, other variables associated with technology uptake, such as the demographics of potential adopters, any facilitating context and social influence, have been identified as moderators [68]. McKnight, Thatcher and their colleagues go further and combine these technology-based approaches with models of trust [69,70,71]. In so doing, they extend the influence of social norms identified by Venkatesh [68] to include a trust relationship with relevant human agents in the overall context within which a particular technology is used.

For the COVID-19 Public Health Emergency (PHE), contact tracing was seen as an effective tool in managing the pandemic. Early on in the pandemic, it was identified as one method among many which would be of use, given the balance between individual rights and societal benefit [72]. Concerns around privacy simply need careful management [73]. Later empirical studies have demonstrated a willingness to engage with the technology: perceived usefulness in managing personal risk outweighs both effort and privacy concerns [74,75]. Perceived ease of use as predicted by standard causal models for technology acceptance [67], therefore, was not seen as an issue.

By contrast the overall context for the introduction of contact tracing needs to be considered. The perceived failure in France, for instance, was due in part to a lack of trust in the government and how it was encouraging uptake [76]. Indeed, Rowe and his colleagues attribute this failure to a lack of cross-disciplinary planning and knowledge sharing, with attempts to force public acceptance of contact tracing perceived as coercive and therefore likely to lead to public distrust [76]. Introducing trust here is entirely consistent with McKnight and Thatcher’s work (see above, refs. [69,70,71]). Without trust in the main stakeholders, as Rowe et al. found, adoption will be compromised.

Examining trust in the context of contact tracing usage brings the discussion back to consent. Given the shortcomings of consent across multiple contexts including terms of use, Richards and Hartzog [23] argue for an approach which does not ignore basic ethical principles such as autonomy but rather empowers stakeholders to engage appropriately. They conclude that existing consent approaches should be replaced with a legally-based trust process. This would, they claim, allow for obligations to protect and be discreet with participants and their data, and to avoid manipulative practices. The present discussion takes this idea one stage further. In the next section, I review a common social psychological definition of trust as a continuous socially constructed agreement between parties. After that, this is applied to specific technology scenarios relevant to contact tracing, AI-enabled technologies and PHE.

4.4. Trust

Many of the issues outlined above imply that there is a negotiation between different actors in research, or other activities like clinical treatment. While O’Neill discourages replacing the informed consent process with a “ritual of trust” [66], and notwithstanding the importance of general trust in healthcare in making consent decisions [51], it is unclear how consent and trust relate to one another. Roache positions consent as an important part of encouraging good practice in order to introduce a debate on trust and consent [77]. Eyal, however, rejects an unqualified assumption that informed consent promotes trust in medical care in general [78]. He questions [79] the utilitarian approach proposed by Tännsjö [80] and the social good arguments of Bok [81]. However, and regardless of the relative strength of the arguments in this exchange, both Bok and Tännsjö contextualise informed consent within a social negotiation between actors: in their case, patient and clinician. In a research study, researcher and participant may similarly not be on equal footing in terms of competence and understanding. Yet they continue to engage [47,48].

Defining trust can be problematic [82,83]. However, and with specific reference to the inferred vulnerability of the research participant, for the present discussion, one helpful definition was offered by Mayer and his colleagues:

“… the willingness of a party to be vulnerable to the actions of another party based on the expectation that the other will perform a particular action important to the trustor, irrespective of the ability to monitor or control that other party.” [84]

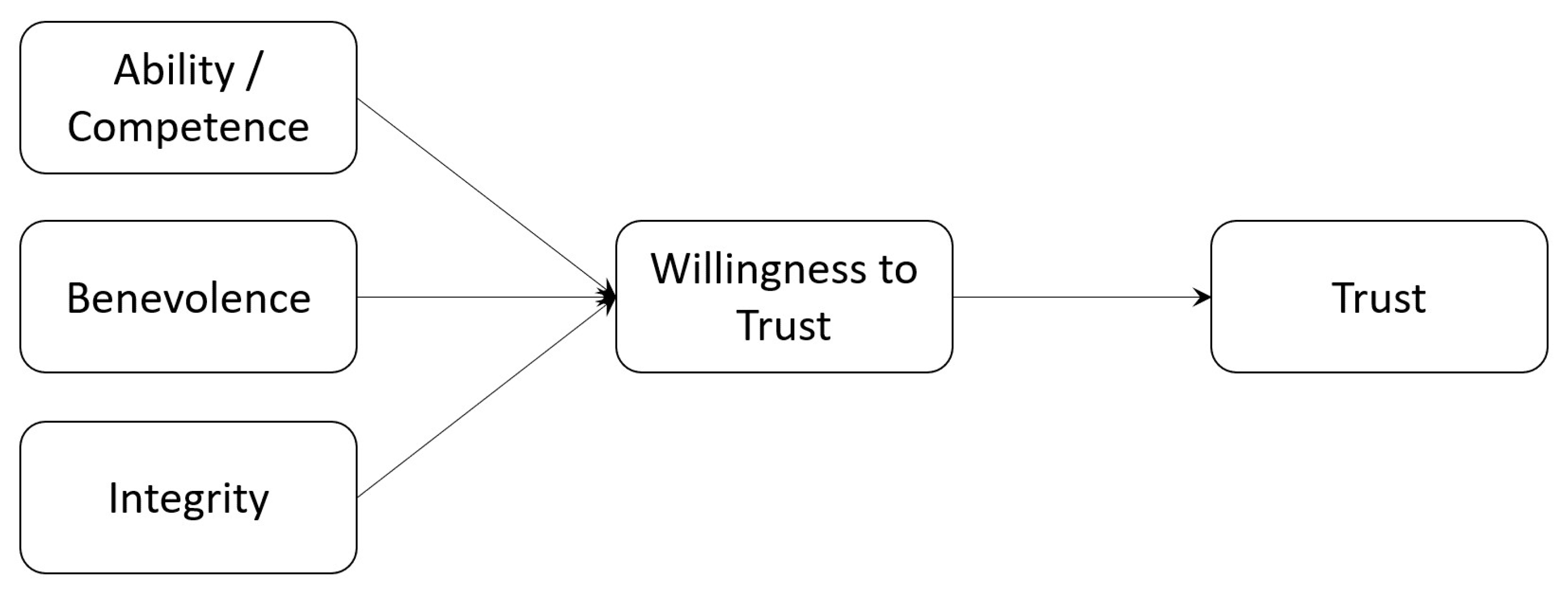

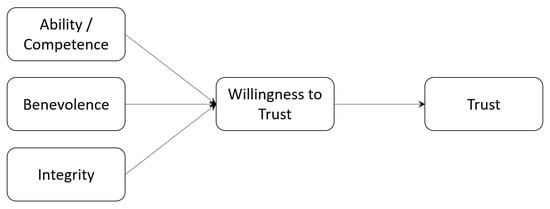

Trust is therefore the response by a trustor to perceived trustworthiness indicators of benevolence, competence (or ability) and integrity in the trustee.

This is summarised in Figure 3. Like the TPB-based visualisations for Terms of Use and Research Consent in Figure 2 in Section 3 above, the assumption is that a Willingness to Trust in Mayer et al.’s conception [84] is a response to trustworthiness indicators as context. The separation between Willingness to Trust and Trust itself is important. Once the trustor actually trusts the trustee, they may still continue to reassess the trustworthiness indicators. In so doing, their willingness may be undermined. They lose trust, and the trustee must now act in order to rebuild the lost trust. Trust becomes a constant negotiation, therefore.

Figure 3. A Schematic Representation of Trust Behaviours.

To expand on this, trust is socially constructed [85] as an ongoing dialogue between exposure to risk and an evaluation of the behaviours of others [86]. Undermining any one of the individual trustworthiness indicators leads to a loss of trust, even distrust, and a need to repair the original trust response [87] if the relationship is to continue. Trust repair depends on a willingness to identify issues, take responsibility to address them and contextualise behaviours within a narrative that makes sense to the trustor [88,89]. As such, I maintain, this behavioural perspective on trust reflects well Bok’s call for one party to accept the potential limitations of another whilst continuing to evaluate and re-evaluate their behaviour [81].
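Purely as an illustration, the cycle of trust, loss of trust and repair described above can be sketched as a toy model. This is not a formalisation taken from Mayer et al. [84] or from the trust-repair literature [88,89]: treating each trustworthiness indicator as simply intact or undermined, and the names `TrustRelationship`, `undermine` and `repair`, are assumptions made for this sketch only.

```python
from dataclasses import dataclass, field

# The three trustworthiness indicators named in the text.
INDICATORS = ("benevolence", "competence", "integrity")

# The three elements of trust repair named in the text (labels are hypothetical).
REPAIR_STEPS = ("identify_issue", "take_responsibility", "contextualise")


@dataclass
class TrustRelationship:
    """Toy model: the trustor perceives each indicator as intact (True) or not."""

    perceived: dict = field(
        default_factory=lambda: {name: True for name in INDICATORS}
    )

    def willing_to_trust(self) -> bool:
        # Undermining any one indicator is enough to lose trust.
        return all(self.perceived.values())

    def undermine(self, indicator: str) -> None:
        if indicator not in self.perceived:
            raise ValueError(f"unknown indicator: {indicator}")
        self.perceived[indicator] = False

    def repair(self, indicator: str, steps_taken: set) -> bool:
        """Restore an indicator only if every repair step has been taken."""
        if indicator not in self.perceived:
            raise ValueError(f"unknown indicator: {indicator}")
        if set(REPAIR_STEPS) <= set(steps_taken):
            self.perceived[indicator] = True
            return True
        return False


relationship = TrustRelationship()
relationship.undermine("integrity")
# A partial repair attempt does not restore the trustor's willingness.
relationship.repair("integrity", {"identify_issue"})
partially_repaired = relationship.willing_to_trust()
# Completing all three steps does.
relationship.repair("integrity", set(REPAIR_STEPS))
fully_repaired = relationship.willing_to_trust()
```

The binary indicators are a deliberate simplification: in practice perceptions of trustworthiness are graded and are reassessed continuously, which is the point of treating trust as an ongoing negotiation.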

In a research context, basing the agreement to participate on the assumed trust between researcher and participant can account for the observed pragmatic approach to informed consent [48]. Over time, a trust relationship in a research study will depend on the maintenance by the researcher of their reputation for integrity, competence and benevolence towards the research participant [88,89,90,91,92]. However, there is empirical evidence for a willingness to compromise: technology adoption would not be possible without it [70,71,93,94]. Further, trust may generalise across related areas, which would be beneficial for a research study itself and its context [95,96,97]. Leaving aside the specific issue of whether this is simply a different definition or approach to informed consent, a trust-based approach may well describe what happens in decisions to engage with research more closely than anticipated by regulatory control or governance frameworks [47]. Adopting a trust-based perspective derived from Mayer et al.’s definition [84] would need to consider how to demonstrate the trustworthiness indicators of integrity, benevolence and competence in pertinent research activities. This will be termed trust-based consent in the discussion below.

5. Scenarios

Notwithstanding any legal obligations under clinical practice and data protection regulations, to evaluate the concept of trust-based research consent, this section considers three different scenarios with specific relevance to the COVID-19 pandemic and beyond. Throughout the discussion above, I have used contact tracing as a starting point. Identifying the transmission paths of contagious diseases with such technology has been around as a concept for some time [98]. However, from a consent perspective, this has potential implications for privacy, including the inadvertent and unconsented disclosure of third-party data. It has been noted that human rights instruments make provision for when community imperatives supersede individual rights (Art. 8 §2, [14]); and trust in government has had implications for the acceptance and success of tracing applications, for instance [76,99]. For representative research behaviours, therefore, it is important to consider the implications of current informed consent procedures in research ethics as well as from the perspective of trust-based consent introduced above.

- Contact tracing: During the COVID-19 pandemic, there has been some discussion about the technical implementation [100] and how tracing fits within a larger socio-technical context [101]. Introduction of such applications is not without controversy in socio-political terms [76,102]. At the same time, there is a balance to be struck between individual rights and the public good [103]; in the case of the COVID-19 pandemic, the social implications of the disease are almost as important as its impact on public and individual health [104]. Major challenges include:
  - Public Opinion;
  - Inadvertent disclosure of third party data;
  - Public/Individual responses to alerts.

- Big Data Analytics: this includes exploiting the vast amounts of data available typically via the Internet to attempt to understand behavioural and other patterns [105,106]. Such approaches have already shown much promise in healthcare [107], and with varying degrees of success for tracing the COVID-19 pandemic [108]. There are, however, some concerns about the impact of big data on individuals and society [109,110]. Major challenges include:
  - Identification of key actors;
  - Mutual understanding between those actors;
  - Influence of those actors on processing (and results).

- Public Health Emergency Research: multidisciplinary efforts to understand, inform and ultimately control the transmission and proliferation of disease (see for instance [111]) as well as social impacts [99,104], and to consider the long-term implications of the COVID-19 pandemic and other PHEs [112]. Major challenges include:
  - Changes in research focus;
  - Changes introduced as research outcomes become available;
  - Respect for all potential groups;
  - Balancing individual and community rights;
  - Unpredicted benefits of research data and outcomes (e.g., in future).

Table A1 in Appendix A summarises perspectives relating to informed consent and trust-based consent relating to the three related activities: contact tracing, big data and issues pertinent to research during a PHE as described above. Each of these scenarios needs to be contextualised within different perspectives: the broader socio-political context, the wider delivery ecosystem, and historical and community-benefit aspects, respectively. Traditional informed consent for research would be problematic for different reasons in each case as summarised. If run in connection with or as part of data protection informed consent, any risk of research participants stopping their participation may result in withdrawal of research data unless a different legal basis for processing can be found.

In all three cases, it is apparent that a simple exchange between researcher and research participant is not possible. There are other contextual factors which must be taken into account and which may well introduce additional stakeholders. There are also external factors—contemporary context, a relevant underlying ecosystem setting expectations, and a dynamic and historical perspective which may introduce both types of factors from the other two scenarios—which would indicate at the very least that each contextualised agreement must be re-validated, and that the consent cannot be assumed to remain stable as external factors influence the underlying perceptions of the actors involved. Trust would allow for such contextualisation and implies a continuous negotiation.

6. Discussion and Recommendations

The existing informed consent process clearly poses several problems, not least the potential to confuse research participants about what they are agreeing to: use of an app, the processing of their personal data, undergoing treatment, or taking part in a research study. This situation would be exacerbated where several such activities co-occur. Indeed, it is not unusual for research studies to include collection of personal data as part of the research protocol. However, there are more challenging issues. Where the researcher is unable to describe exactly what should happen, what the outcomes might be, and how data or participant engagement will be used, then it is impossible to provide sufficient information for any consent to be fully informed. The literature in this area provides some evidence too that research participants may well wish to engage without being overwhelmed with detail they do not want or may not understand. There is an additional complication where multiple stakeholders, not just the researcher, may be involved in handling and interpreting research outcomes. Any such stakeholders should be involved in or at least represented as part of the discussion with the research participant. All of this suggests that there needs to be some willingness to accept risk: participants must trust researchers and their intentions.

6.1. Recommendations for Research Ethics Review

Such a trust-based approach would, however, affect how RECs/IRBs review research submissions. Most importantly, reviewers need to consider the main actors involved in any research and their expectations. This suggests a number of main considerations during review:

- The research proposal should first describe in some detail the trustworthiness basis for the research engagement. I have used characteristics from the literature—integrity, benevolence, and competence—though others may be more appropriate such as reputation and evidence of participant reactions in related work.

- The context of the proposed research should be disclosed, including the identification of the types of contextual effects which might be expected. These may include the general socio-political environment, existing relationships that the research participant might be expected to be aware of (such as clinician–patient), and any dynamic effects, such as implications for other cohorts, including future cohorts. Any such contextual factors should be explained, justified and appropriately managed by the researcher.

- The proposed dialogue between researcher and research participant should be described, how it will be conducted, what it will cover, and how frequently the dialogue will be repeated. This may depend, for example, on when results start to become available. The frequency and delivery channel of this dialogue should be simple for the potential research participant. This must be justified, and the timescales realistic. This part of the trust-based consent process might also include how the researcher will manage research participant withdrawal.

The intention with such an approach would be to move away from the burdensome governance described in the literature (see [47,48], for instance), instead focusing on what is of practical importance to enter into a trust relationship and what might encourage a more natural and familiar communicative exchange with participants. Traditional information such as the assumed benefits of the research outcomes should be confined to the research ethics approval submission; it may not be clear to a potential research participant how relevant that may be for them to make a decision to engage. Review ultimately must consider the Context (see Section 3 above) within which a participant develops a Willingness to Engage.

The ethics review process thereby becomes not only a consideration of the typical cost–benefit to the research participant, but also an evaluation of how researcher and research participant are likely to engage with one another to collaborate effectively on an equal footing, sharing the risks of failure. The participant then becomes a genuine actor within the research protocol rather than simply a subject of observation.

6.2. Recommendations for the Ethical Use of Advanced Technologies

Official guidance tends to focus on data governance [13,15] or on the obligations of technologists to provide robust, reliable and transparent operation [16,17]. However, I have emphasised in the previous discussion that it is essential to consider the entire ecosystem where advanced, AI-enabled technologies are deployed. These technologies are an integral part of a broader socio-technical system.

The data scientist providing the technology to a service provider and the service provider themselves must take into account a number of factors:

- Understand who the main actors are. Each domain (healthcare, eCommerce, social media, and so forth) will often be regulated with specific obligations. More importantly though, I maintain, would be the interaction between end user and provider, and the reliance of the provider on the data scientist or technologist. These actors would all influence the trust context. So how they contribute needs to be understood.

- Understand what their expectations are. Once the main actors have been identified, their individual expectations will influence how they view their own responsibilities and how they believe the other actors will behave. This will contextualise what each expects from the service or interaction, and from one another.

- Reinforce competence, integrity and benevolence (from [84]). As the defining characteristics of a trust relationship outlined above, each of the actors has a responsibility to support that relationship, and to avoid actions which would affect trust. Inadvertent or unavoidable problems can be dealt with ([88,89]). Further, occasional (though infrequent [23]) re-affirmation of the relationship is advantageous. So, ongoing communication between the main actors is important in maintaining trust (see also [12]).

Just as a trust-based approach is proposed as an alternative to the regulatory constraint of existing deontological consent processes, I suggest that the main actors share a responsibility to invest in a relationship. In ethical terms, this is more consistent with Floridi’s concept of entropy [113]: each actor engages with the high-level interaction (e.g., contact tracing) in support of common beliefs. Rather than trying to balance individual rights and the common good, this assumes engagement by the main actors willing to expose themselves to vulnerability (because outcomes are not necessarily predictable at the outset) and therefore invest jointly towards the success of the engagement.

7. Future Research Directions

Based on existing research across multiple domains, I have presented here a trust-based approach to consent. This assumes an ongoing dialogue between trustor (data subject, service user, research participant, patient) and trustee (data controller, service provider, researcher, clinician). To a large extent, this echoes what Rohlfing and her colleagues describe as a co-constructed negotiation around explainability in AI between explainer and explainee [12]. However, my trust-based approach derives from social psychological terms and therefore accepts vulnerability. None of the stakeholders are assumed to be infallible. Any risk to the engagement is shared across them all. This would now benefit from empirical validation.

Firstly, and following some of the initial work by Wiles and her colleagues [47], trustors of different and representative categories could provide at least two different types of responses: their attitudes and perceptions of current consent processes, backed up with ethnographic observation of how they engage with those processes currently. Secondly, expanding on proposals by Richards and Hartzog [23] as applied not only in the US but also in other jurisdictions, service providers, researchers and clinicians could be asked to provide their perspective on how they currently use the consent process and what a trust-based negotiation would mean to them in offering the services or engaging with trustors as described here. Thirdly, it is important to compare the co-construction of explainability for AI technologies (which assumes understanding is enough for acceptability) and the negotiation of shared risk implied by a trust-based approach to consent. If understanding the technology alone proves insufficient, then informed consent to formalise the voluntary agreement to engage is not enough either.

Synthesising these findings would provide concrete proposals for policy makers, as well as a basis to critically evaluate existing guidance on data sharing and the development and deployment of advanced technologies.

8. Conclusions

In this paper, I have suggested a different approach to negotiating ongoing consent (including terms of use) from the traditional process of informed consent or unwitting acceptance of terms of use, based on the definition of trust from the social psychology literature pertaining to person-to-person interactions. This was motivated by four sets of observations. Firstly, informed consent has different implications in different situations such as data protection, clinical trials or interventions, or research, and there are known issues with terms of use for online services. Secondly, the research literature highlights multiple cases where the assumptions relating to informed consent do not hold, and terms of use are typically imposed rather than informed and freely given. Thirdly, there may be contexts which are more complex than a simple exchange between two actors: researcher and research participant, or service user and service provider. Finally, even explainability for AI technologies may rely on a co-constructed understanding of outputs between the main stakeholders. Reviewing common activities during the COVID-19 pandemic, but also relevant to any Public Health Emergency, I have stressed that the broader socio-political context, the socio-technical environment within which big data analytics are implemented, and the historical relevance of PHE research complicate a straightforward informed consent process. Further, researchers may simply not be in a position to predict or guarantee expected research outcomes, making fully informed consent problematic. I suggest that this might better be served by a trust-based approach. Trust, in traditional definitions in the behavioural sciences, is based on an acceptance of vulnerability to unknown outcomes and a shared responsibility for those outcomes. In consequence, a more dynamic trust-based negotiation in response to situational changes over time is called for.
This, I suggest, could be handled with a much more communication-focused approach, with implications for research ethics review, as well as AI-enhanced services. Moving forward, there needs to be discussion with relevant stakeholders, especially potential research participants and researchers themselves, to understand their expectations and thereby validate the arguments presented here exploring how a trust-based consent process might meet their requirements. Finally, although I have contextualised the discussion here against the background of the coronavirus pandemic, other test scenarios need to be explored to evaluate whether the same factors apply.

Funding

This work was funded in part by the European Union’s Horizon 2020 research and innovation programme under grant agreement No 780495 (project BigMedilytics). Disclaimer: Any dissemination of results here presented reflects only the author’s view. The Commission is not responsible for any use that may be made of the information it contains. It was also supported, in part, by the Bill & Melinda Gates Foundation [INV-001309]. Under the grant conditions of the Foundation, a Creative Commons Attribution 4.0 Generic License has already been assigned to the Author Accepted Manuscript version that might arise from this submission.

Data Availability Statement

Not Applicable, the study does not report any data.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A

Table A1. Summary of Issues across Domains.


| Domain | Challenges | Informed Consent | Trust-Based Consent |
|---|---|---|---|
| Contact Tracing | The socio-political context within which the app is used or research is carried out. Media reporting, including fake news, can influence public confidence | One-off consent on research engagement or upon app download may not be sufficient as context changes. Retention may be challenging depending on trustworthiness perceptions of public authorities and responses to media reports leading to app/research study abandonment (i.e., the impact and relevance of context which may have nothing to do with the actual app/research) | Researchers (app developers) may need to demonstrate integrity and benevolence on an ongoing basis, and specifically when needed in response to any public concerns around data protection, and to any misuse or unforeseen additional use of data. Researchers must therefore communicate their own trustworthiness and position themselves appropriately within a wider socio-political context for which they may feel they have no responsibility. It is their responsibility, however, to maintain the relationship with relevant stakeholders, i.e., to develop and maintain trust. |
| Big Data Analytics | The potential disruption to an existing ecosystem—e.g., the actors who are important for delivery of service, such as patient and clinician for healthcare, or research participant and researcher for Internet-based research. Technology may therefore be disruptive to any such existing relationship. Further, unless the main actors are identified, it would be difficult to engage with traditional approaches to consent. | Researcher (data scientist) may not be able to disclose all information necessary to make a fully informed decision, not least because they may only be able to describe expected outcomes (and how data will be used) in general terms. The implications of supervised and unsupervised learning may not be understood. Not all beneficiaries can engage with an informed consent process (e.g., clinicians would not be asked to consent formally to data analytics carried out on their behalf; for Internet-based research, it may be impractical or ill-advised for researchers to contact potential research participants). | Data scientists need to engage in the first instance with domain experts in other fields who will use their results (e.g., clinicians in healthcare; web scientists etc. for Internet-based modelling; etc.) to understand each other’s expectations and any limitations. For a clinician or other researcher dependent on the data scientist, this will affect the perception of their own competence. This will also form part of trust-based engagement with a potential research participant. Ongoing communication between participants, data scientists and the other relevant domain experts should continue to maintain perceptions of benevolence and integrity. |
| Public Health Emergency | The difficulty in identifying the scope of research (in terms of what is required and who will benefit now, and especially in the future) and therefore identify the main stakeholders, not just participants providing (clinical) data directly | The COVID-19 pandemic has demonstrated that research understanding changed significantly over time: the research community, including clinicians, had to adapt. Policy decisions struggled to keep pace with the results. Informed consent would need constant review and may be undermined if research outcomes/policy decisions are not consistent. In the latter case, this may result in withdrawal of research participants. Further, research from previous pandemics was not available to inform current research activities | A PHE highlights the need to balance individual rights and the imperatives for the community (the common good). As well as the effects of fake news, changes in policy based on research outcomes may lead to concern about competence: do the researchers know what they are doing? However, there needs to be an understanding of how the research is being conducted and why things do change. So, there will also be a need for ongoing communication around integrity and benevolence. This may advantageously extend existing public engagement practices, but would also need to consider future generations and who might represent their interests. There is a clear need for an ongoing dialogue including participants where possible, but also other groups with a vested interest in the research data and any associated outcomes, including those who may have nothing to do with the original data collection or circumstances. |

References

- Walker, P.; Lovat, T. You Say Morals, I Say Ethics—What’s the Difference? In The Conversation; IMDb: Seattle, WA, USA, 2014.

- Adadi, A.; Berrada, M. Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI). IEEE Access 2018, 6, 52138–52160.

- European Commission. Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016, 2016; European Commission: Brussels, Belgium, 2016.

- Samek, W.; Wiegand, T.; Müller, K.R. Explainable Artificial Intelligence: Understanding, Visualizing and Interpreting Deep Learning Models. arXiv 2017, arXiv:1708.08296.

- Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

- Gunning, D.; Aha, D.W. DAPRA’s Explainable Artificial Intelligence Program. AI Mag. 2019, 40, 44–58. [Google Scholar]

- Weitz, K.; Schiller, D.; Schlagowski, R.; Huber, T.; André, E. “Do you trust me?”: Increasing User-Trust by Integrating Virtual Agents in Explainable AI Interaction Design. In Proceedings of the IVA ’19: 19th ACM International Conference on Intelligent Virtual Agents, Paris, France, 2–5 July 2019; ACM: New York, NY, USA, 2019; pp. 7–9. [Google Scholar] [CrossRef]

- Taylor, S.; Pickering, B.; Boniface, M.; Anderson, M.; Danks, D.; Følstad, A.; Leese, M.; Müller, V.; Sorell, T.; Winfield, A.; et al. Responsible AI—Key Themes, Concerns & Recommendations For European Research and Innovation; HUB4NGI Consortium: Zürich, Switzerland, 2018. [Google Scholar] [CrossRef]

- Došilović, F.K.; Brčić, M.; Hlupić, N. Explainable Artificial Intelligence: A Survey. In Proceedings of the 2018 41st International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 21–25 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 0210–0215. [Google Scholar] [CrossRef]

- Khrais, L.T. Role of Artificial Intelligence in Shaping Consumer Demand in E-Commerce. Future Internet 2020, 12, 226. [Google Scholar] [CrossRef]

- Israelsen, B.W.; Ahmed, N.R. “Dave …I can assure you …that it’s going to be all right …” A Definition, Case for, and Survey of Algorithmic Assurances in Human-Autonomy Trust Relationships. ACM Comput. Surv. 2019, 51, 113. [Google Scholar] [CrossRef]

- Rohlfing, K.J.; Cimiano, P.; Scharlau, I.; Matzner, T.; Buhl, H.M.; Buschmeier, H.; Eposito, E.; Grimminger, A.; Hammer, B.; Häb-Umbach, R.; et al. Explanation as a social practice: Toward a conceptual framework for the social design of AI systems. IEEE Trans. Cogn. Dev. Syst. 2020. [Google Scholar] [CrossRef]

- Amnesty Internationl and AccessNow. The Toronto Declaration: Protecting the Right to Equality and Non-Discrimination in Machine Learning Systems. 2018. Available online: https://www.accessnow.org/the-toronto-declaration-protecting-the-rights-to-equality-and-non-discrimination-in-machine-learning-systems/ (accessed on 14 May 2021).

- Council of Europe. European Convention for the Protection of Human Rights and Fundamental Freedoms, as Amended by Protocols Nos. 11 and 14; Council of Europe: Strasbourg, France, 2010. [Google Scholar]

- UK Government Digital Services. Data Ethics Framework. 2020. Available online: https://www.gov.uk/government/publications/data-ethics-framework (accessed on 14 May 2021).

- Department of Health and Social Care. Digital and Data-Driven Health and Care Technology; Department of Health and Social Care: London, UK, 2021.

- European Commission. Ethics Guidelines for Trustworthy AI; European Commission: Brussels, Belgium, 2019. [Google Scholar]

- Ajzen, I. The theory of planned behavior. Organ. Behav. Hum. Decis. Process. 1991, 50, 179–211. [Google Scholar] [CrossRef]

- Murray, P.M. The History of Informed Consent. Iowa Orthop. J. 1990, 10, 104–109. [Google Scholar]

- USA v Brandt Court. The Nuremberg Code (1947). Br. Med. J. 1996, 313, 1448. [Google Scholar] [CrossRef]

- World Medical Association. WMA Declaration of Helsinki—Ethical Principles for Medical Research Involving Human Subjects; World Medical Association: Ferney-Voltaire, France, 2018. [Google Scholar]

- Lemley, M.A. Terms of Use. Minn. Law Rev. 2006, 91, 459–483. [Google Scholar]

- Richards, N.M.; Hartzog, W. The Pathologies of Digital Consent. Wash. Univ. Law Rev. 2019, 96, 1461–1504. [Google Scholar]

- Luger, E.; Moran, S.; Rodden, T. Consent for all: Revealing the hidden complexity of terms and conditions. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Paris, France, 27 April–2 May 2013; pp. 2687–2696. [Google Scholar]

- Belmont. The Belmont Report: Ethical Principles and Guidelines for The Protection of Human Subjects of Research; American College of Dentists: Gaithersburg, MD, USA, 1979. [Google Scholar]

- Beauchamp, T.L. History and Theory in “Applied Ethics”. Kennedy Inst. Ethics J. 2007, 17, 55–64. [Google Scholar] [CrossRef] [PubMed]

- Muirhead, W. When four principles are too many: Bloodgate, integrity and an action-guiding model of ethical decision making in clinical practice. Clin. Ethics 2011, 38, 195–196. [Google Scholar] [CrossRef]

- Rubin, M.A. The Collaborative Autonomy Model of Medical Decision-Making. Neuro. Care 2014, 20, 311–318. [Google Scholar] [CrossRef]

- The Health Service (Control of Patient Information) Regulations 2002. 2002. Available online: https://www.legislation.gov.uk/uksi/2002/1438/contents/made (accessed on 14 May 2021).

- Hartzog, W. The New Price to Play: Are Passive Online Media Users Bound By Terms of Use? Commun. Law Policy 2010, 15, 405–433. [Google Scholar] [CrossRef]

- Beauchamp, T.L.; Childress, J.F. Principles of Biomedical Ethics, 8th ed.; Oxford University Press: Oxford, UK, 2019. [Google Scholar]

- OECD. Frascati Manual 2015; OECD: Paris, France, 2015. [Google Scholar] [CrossRef]

- BPS. Code of Human Research Ethics; BPS: Leicester, UK, 2014. [Google Scholar]

- Herschel, R.; Miori, V.M. Ethics & Big Data. Technol. Soc. 2017, 49, 31–36. [Google Scholar] [CrossRef]

- Floridi, L.; Taddeo, M. What is data ethics? Philos. Trans. R. Soc. 2016. [Google Scholar] [CrossRef]

- Carroll, S.R.; Garba, I.; Figueroa-Rodríguez, O.L.; Holbrook, J.; Lovett, R.; Materechera, S.; Parsons, M.; Raseroka, K.; Rodriguez-Lonebear, D.; Rowe, R.; et al. The CARE Principles for Indigenous Data Governance. Data Sci. J. 2020, 19, 1–12. [Google Scholar] [CrossRef]

- Thomson, J.J. The Trolley Problem. Yale Law J. 1985, 94, 1395–1415. [Google Scholar] [CrossRef]

- Parsons, T.D. Ethical Challenges in Digital Psychology and Cyberpsychology; Cambridge University Press: Cambridge, UK, 2019. [Google Scholar]

- Murove, M.F. Ubuntu. Diogenes 2014, 59, 36–47. [Google Scholar] [CrossRef]

- Ess, C. Ethical Decision-Making and Internet Research: Recommendations from the AoIR Ethics Working Committee; IGI Global: Hershey, PA, USA, 2002. [Google Scholar]

- Markham, A.; Buchanan, E. Ethical Decision-Making and Internet Research: Recommendations from the Aoir Ethics Working Committee (Version 2.0). 2002. Available online: https://aoir.org/reports/ethics2.pdf (accessed on 14 May 2021).

- Sugarman, J.; Lavori, P.W.; Boeger, M.; Cain, C.; Edson, R.; Morrison, V.; Yeh, S.S. Evaluating the quality of informed consent. Clin. Trials 2005, 2, 34–41. [Google Scholar] [CrossRef]

- Biros, M. Capacity, Vulnerability, and Informed Consent for Research. J. Law Med. Ethics 2018, 46, 72–78. [Google Scholar] [CrossRef]

- Tam, N.T.; Huy, N.T.; Thoa, L.T.B.; Long, N.P.; Trang, N.T.H.; Hirayama, K.; Karbwang, J. Participants’ understanding of informed consent in clinical trials over three decades: Systematic review and meta-analysis. Bull. World Health Organ. 2015, 93, 186H–198H. [Google Scholar] [CrossRef] [PubMed]

- Falagas, M.E.; Korbila, I.P.; Giannopoulou, K.P.; Kondilis, B.K.; Peppas, G. Informed consent: How much and what do patients understand? Am. J. Surg. 2009, 198, 420–435. [Google Scholar] [CrossRef] [PubMed]

- Nusbaum, L.; Douglas, B.; Damus, K.; Paasche-Orlow, M.; Estrella-Luna, N. Communicating Risks and Benefits in Informed Consent for Research: A Qualitative Study. Glob. Qual. Nurs. Res. 2017, 4. [Google Scholar] [CrossRef]

- Wiles, R.; Crow, G.; Charles, V.; Heath, S. Informed Consent and the Research Process: Following Rules or Striking Balances? Sociol. Res. Online 2007, 12. [Google Scholar] [CrossRef]

- Wiles, R.; Charles, V.; Crow, G.; Heath, S. Researching researchers: Lessons for research ethics. Qual. Res. 2006, 6, 283–299. [Google Scholar] [CrossRef]

- Naarden, A.L.; Cissik, J. Informed Consent. Am. J. Med. 2006, 119, 194–197. [Google Scholar] [CrossRef] [PubMed]

- Al Mahmoud, T.; Hashim, M.J.; Almahmoud, R.; Branicki, F.; Elzubeir, M. Informed consent learning: Needs and preferences in medical clerkship environments. PLoS ONE 2018, 13, e0202466. [Google Scholar] [CrossRef]

- Nijhawan, L.P.; Janodia, M.D.; Muddukrishna, B.S.; Bhat, K.M.; Bairy, K.L.; Udupa, N.; Musmade, P.B. Informed consent: Issues and challenges. J. Adv. Phram. Technol. Res. 2013, 4, 134–140. [Google Scholar] [CrossRef]

- Kumar, N.K. Informed consent: Past and present. Perspect. Clin. Res. 2013, 4, 21–25. [Google Scholar] [CrossRef]

- Hofstede, G. Cultural Dimensions. 2003. Available online: www.geerthofstede.com (accessed on 12 May 2021).

- Hofstede, G.; Hofstede, J.G.; Minkov, M. Cultures and Organizations: Software of the Mind, 3rd ed.; McGraw-Hill: New York, NY, USA, 2010. [Google Scholar]

- Acquisti, A.; Brandimarte, L.; Loewenstein, G. Privacy and human behavior in the age of information. Science 2015, 347, 509–514. [Google Scholar] [CrossRef] [PubMed]

- McEvily, B.; Perrone, V.; Zaheer, A. Trust as an Organizing Principle. Organ. Sci. 2003, 14, 91–103. [Google Scholar] [CrossRef]

- Milgram, S. Behavioral study of obedience. J. Abnorm. Soc. Psychol. 1963, 67, 371–378. [Google Scholar] [CrossRef] [PubMed]

- Haney, C.; Banks, C.; Zimbardo, P. Interpersonal Dynamics in a Simulated Prison; Wiley: New Youk, NY, USA, 1972. [Google Scholar]

- Reicher, S.; Haslam, S.A. Rethinking the psychology of tyranny: The BBC prison study. Br. J. Soc. Psychol. 2006, 45, 1–40. [Google Scholar] [CrossRef] [PubMed]

- Reicher, S.; Haslam, S.A. After shock? Towards a social identity explanation of the Milgram ’obedience’ studies. Br. J. Soc. Psychol. 2011, 50, 163–169. [Google Scholar] [CrossRef]

- Beauchamp, T.L. Informed Consent: Its History, Meaning, and Present Challenges. Camb. Q. Healthc. Ethics 2011, 20, 515–523. [Google Scholar] [CrossRef]

- Ferreira, C.M.; Serpa, S. Informed Consent in Social Sciences Research: Ethical Challenges. Int. J. Soc. Sci. Stud. 2018, 6, 13–23. [Google Scholar] [CrossRef]

- Hofmann, B. Broadening consent - and diluting ethics? J. Med Ethics 2009, 35, 125–129. [Google Scholar] [CrossRef]

- Steinsbekk, K.S.; Myskja, B.K.; Solberg, B. Broad consent versus dynamic consent in biobank research: Is passive participation an ethical problem? Eur. J. Hum. Genet. 2013, 21, 897–902. [Google Scholar] [CrossRef] [PubMed]

- Sreenivasan, G. Does informed consent to research require comprehension? Lancet 2003, 362, 2016–2018. [Google Scholar] [CrossRef]

- O’Neill, O. Some limits of informed consent. J. Med. Ethics 2003, 29, 4–7. [Google Scholar] [CrossRef] [PubMed]

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 319–340. [Google Scholar] [CrossRef]

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User acceptance of information technology: Toward a unified view. MIS Q. 2003, 27, 425–478. [Google Scholar] [CrossRef]

- McKnight, H.; Carter, M.; Clay, P. Trust in technology: Development of a set of constructs and measures. In Proceedings of the Digit, Phoenix, AZ, USA, 15–18 December 2009. [Google Scholar]

- McKnight, H.; Carter, M.; Thatcher, J.B.; Clay, P.F. Trust in a specific technology: An investigation of its components and measures. ACM Trans. Manag. Inf. Syst. (TMIS) 2011, 2, 12. [Google Scholar] [CrossRef]

- Thatcher, J.B.; McKnight, D.H.; Baker, E.W.; Arsal, R.E.; Roberts, N.H. The Role of Trust in Postadoption IT Exploration: An Empirical Examination of Knowledge Management Systems. IEEE Trans. Eng. Manag. 2011, 58, 56–70. [Google Scholar] [CrossRef]

- Hinch, R.; Probert, W.; Nurtay, A.; Kendall, M.; Wymant, C.; Hall, M.; Lythgoe, K.; Cruz, A.B.; Zhao, L.; Stewart, A. Effective Configurations of a Digital Contact Tracing App: A Report to NHSX. 2020. Available online: https://cdn.theconversation.com/static_files/files/1009/Report_-_Effective_App_Configurations.pdf (accessed on 14 May 2021).

- Parker, M.J.; Fraser, C.; Abeler-Dörner, L.; Bonsall, D. Ethics of instantaneous contact tracing using mobile phone apps in the control of the COVID-19 pandemic. J. Med. Ethics 2020, 46, 427–431. [Google Scholar] [CrossRef]

- Walrave, M.; Waeterloos, C.; Ponnet, K. Ready or Not for Contact Tracing? Investigating the Adoption Intention of COVID-19 Contact-Tracing Technology Using an Extended Unified Theory of Acceptance and Use of Technology Model. Cyberpsychol. Behav. Soc. Netw. 2020. [Google Scholar] [CrossRef]

- Velicia-Martin, F.; Cabrera-Sanchez, J.-P.; Gil-Cordero, E.; Palos-Sanchez, P.R. Researching COVID-19 tracing app acceptance: Incorporating theory from the technological acceptance model. PeerJ Comput. Sci. 2021, 7, e316. [Google Scholar] [CrossRef]

- Rowe, F.; Ngwenyama, O.; Richet, J.-L. Contact-tracing apps and alienation in the age of COVID-19. Eur. J. Inf. Syst. 2020, 29, 545–562. [Google Scholar] [CrossRef]

- Roache, R. Why is informed consent important? J. Med. Ethics 2014, 40, 435–436. [Google Scholar] [CrossRef] [PubMed]

- Eyal, N. Using informed consent to save trust. J. Med. Ethics 2014, 40, 437–444. [Google Scholar] [CrossRef]

- Eyal, N. Informed consent, the value of trust, and hedons. J. Med. Ethics 2014, 40, 447. [Google Scholar] [CrossRef] [PubMed]

- Tännsjö, T. Utilitarinism and informed consent. J. Med. Ethics 2013, 40, 445. [Google Scholar] [CrossRef] [PubMed]

- Bok, S. Trust but verify. J. Med. Ethics 2014, 40, 446. [Google Scholar] [CrossRef]

- Rousseau, D.M.; Sitkin, S.B.; Burt, R.S.; Camerer, C. Not so different after all: A cross-discipline view of trust. Acad. Manag. Rev. 1998, 23, 393–404. [Google Scholar] [CrossRef]

- Robbins, B.G. What is Trust? A Multidisciplinary Review, Critique, and Synthesis. Sociol. Compass 2016, 10, 972–986. [Google Scholar] [CrossRef]