Application of Artificial Intelligence for EV Charging and Discharging Scheduling and Dynamic Pricing: A Review

Abstract

1. Introduction

2. EV Charging/Discharging and Battery Degradation

2.1. EV Charging and Discharging Techniques

2.2. Vehicle to Grid (V2G) Concept

2.3. Battery Degradation and Charging Efficiency

3. Artificial Intelligence-Based Forecasting Model

3.1. Supervised Learning Methods

3.2. Gated Recurrent Units (GRUs)

3.3. Long Short-Term Memory (LSTM)

3.4. Hybrid and Ensemble

4. Artificial Intelligence-Based Scheduling

4.1. Heuristic Algorithms

4.2. Fuzzy Logic

4.3. Q-Learning and Deep Reinforcement Learning (DRL)

5. Dynamic Pricing and Peer-to-Peer for EV Charging/Discharging

5.1. Time of Use (ToU)

5.2. Real-Time Pricing (RTP)

5.2.1. Application of RTP

5.2.2. RTP Classification

5.3. Peer-to-Peer (P2P)

6. Discussion

| Algorithm | Applications | Advantages | Disadvantages | Possible enhancement |
|---|---|---|---|---|
| Supervised Learning [20,22,54,55,56,57,59,60,61,62,63,64,65,66,67,68,72,73,103,107,109] | | | | |
| Reinforcement Learning [5,20,21,22,23,24,25,26,27,28,29,30,51,55,56,58,71,125,128,129] | | | | |
| Dynamic Pricing [27,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51] | | | | |

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| A3C | Asynchronous Advantage Actor Critic |
| ABC | Artificial Bee Colony |
| AC-DC | Alternating Current-Direct Current |
| ANN | Artificial Neural Network |
| CNN | Convolutional Neural Network |
| CPP | Critical Peak Pricing |
| DBN | Deep Belief Network |
| DCNN | Deep Convolutional Neural Network |
| DDPG | Deep Deterministic Policy Gradient |
| DE | Differential Evolution |
| DOD | Depth of Discharge |
| DP | Dynamic Programming |
| DQN | Deep Q-Network |
| DRL | Deep Reinforcement Learning |
| DSM | Demand-Side Management |
| DT | Decision Tree |
| EOL | End-of-Life |
| EV | Electric Vehicle |
| EVCS | EV Charging Station |
| GA | Genetic Algorithm |
| GP | Gaussian Processes |
| GRU | Gated Recurrent Unit |
| KNN | K-Nearest Neighbor |
| LFP | Lithium Ferro-Phosphate |
| LR | Linear Regression |
| LSTM | Long Short-Term Memory |
| MAPE | Mean Absolute Percentage Error |
| MDP | Markov Decision Process |
| MILP | Mixed-Integer Linear Programming |
| NCA | Nickel Cobalt Aluminium Oxides |
| P2P | Peer-to-Peer |
| PBIL | Population-Based Incremental Learning |
| PDDPG | Prioritized Deep Deterministic Policy Gradient |
| PHEV | Plug-in Hybrid Electric Vehicle |
| PSO | Particle Swarm Optimization |
| PTR | Peak Time Rebates |
| RF | Random Forest |
| RNN | Recurrent Neural Network |
| RTP | Real-Time Pricing |
| SAC | Soft Actor-Critic |
| SDR | Supply and Demand Ratio |
| SOC | State of Charge |
| SVM | Support Vector Machine |
| ToU | Time of Use |
| V2G | Vehicle-to-Grid |

References

- Sun, X.; Li, Z.; Wang, X.; Li, C. Technology development of electric vehicles: A review. Energies 2020, 13, 90.

- IEA. Global EV Outlook 2022. IEA 2022. May 2022. Available online: https://www.iea.org/reports/global-ev-outlook-2022 (accessed on 5 October 2022).

- Wang, Z.; Wang, S. Grid power peak shaving and valley filling using vehicle-to-grid systems. IEEE Trans. Power Deliv. 2013, 28, 1822–1829.

- Limmer, S. Dynamic pricing for electric vehicle charging—A literature review. Energies 2019, 12, 3574.

- Rotering, N.; Ilic, M. Optimal charge control of plug-in hybrid electric vehicles in deregulated electricity markets. IEEE Trans. Power Syst. 2011, 26, 1021–1029.

- Mohammad, A.; Zamora, R.; Lie, T.T. Integration of electric vehicles in the distribution network: A review of PV based electric vehicle modelling. Energies 2020, 13, 4541.

- Kempton, W.; Tomic, J. Vehicle-to-grid power implementation: From stabilizing the grid to supporting large-scale renewable energy. J. Power Sources 2005, 144, 280–294.

- Kempton, W.; Letendre, S.E. Electric vehicles as a new power source for electric utilities. Transp. Res. Part D Transp. Environ. 1996, 2, 157–175.

- Ravi, S.S.; Aziz, M. Utilization of electric vehicles for vehicle-to-grid services: Progress and perspectives. Energies 2022, 15, 589.

- Scott, C.; Ahsan, M.; Albarbar, A. Machine learning based vehicle to grid strategy for improving the energy performance of public buildings. Sustainability 2021, 13, 4003.

- Lund, H.; Kempton, W. Integration of renewable energy into the transport and electricity sectors through V2G. Energy Policy 2008, 36, 3578–3587.

- Al-Awami, A.T.; Sortomme, E. Coordinating vehicle-to-grid services with energy trading. IEEE Trans. Smart Grid 2012, 3, 453–462.

- Sovacool, B.K.; Axsen, J.; Kempton, W. The future promise of vehicle-to-grid (V2G) integration: A sociotechnical review and research agenda. Annu. Rev. Environ. Resour. 2017, 42, 377–406.

- V2G Hub Insights. Available online: https://www.v2g-hub.com/insights (accessed on 4 November 2022).

- Kern, T.; Dossow, P.; von Roon, S. Integrating bidirectionally chargeable electric vehicles into the electricity markets. Energies 2020, 13, 5812.

- Sovacool, B.K.; Noel, L.; Axsen, J.; Kempton, W. The neglected social dimensions to a vehicle-to-grid (V2G) transition: A critical and systematic review. Environ. Res. Lett. 2018, 13, 013001.

- Cao, Y.; Tang, S.; Li, C.; Zhang, P.; Tan, Y.; Zhang, Z.; Li, J. An optimized EV charging model considering TOU price and SOC curve. IEEE Trans. Smart Grid 2012, 3, 388–393.

- Lee, Z.J.; Pang, J.Z.F.; Low, S.H. Pricing EV charging service with demand charge. Electr. Power Syst. Res. 2020, 189, 106694.

- Wang, B.; Wang, Y.; Nazaripouya, H.; Qiu, C.; Chu, C.; Gadh, R. Predictive scheduling framework for electric vehicles with uncertainties of user behaviors. IEEE Internet Things J. 2016, 4, 52–63.

- Wan, Z.; Li, H.; He, H.; Prokhorov, D. Model-free real-time EV charging scheduling based on deep reinforcement learning. IEEE Trans. Smart Grid 2019, 10, 5246–5257.

- Lee, J.; Lee, E.; Kim, J. Electric vehicle charging and discharging algorithm based on reinforcement learning with data-driven approach in dynamic pricing scheme. Energies 2020, 13, 1950.

- Zhang, F.; Yang, Q.; An, D. CDDPG: A deep-reinforcement-learning-based approach for electric vehicle charging control. IEEE Internet Things J. 2021, 8, 3075–3087.

- Ding, T.; Zeng, Z.; Bai, J.; Qin, B.; Yang, Y.; Shahidehpour, M. Optimal electric vehicle charging strategy with Markov decision process and reinforcement learning technique. IEEE Trans. Ind. Appl. 2020, 56, 5811–5823.

- Liu, D.; Wang, W.; Wang, L.; Jia, H.; Shi, M. Deep deterministic policy gradient (DDPG) base reinforcement learning algorithm. IEEE Access 2021, 9, 21556–21566.

- Li, S.; Hu, W.; Cao, D.; Dragičević, T.; Huang, Q.; Chen, Z.; Blaabjerg, F. Electric vehicle charging management based on deep reinforcement learning. J. Mod. Power Syst. Clean Energy 2022, 10, 719–730.

- Wang, K.; Wang, H.; Yang, J.; Feng, J.; Li, Y.; Zhang, S.; Okoye, M.O. Electric vehicle clusters scheduling strategy considering real-time electricity prices based on deep reinforcement learning. In Proceedings of the International Conference on New Energy and Power Engineering (ICNEPE 2021), Sanya, China, 19–21 November 2021.

- Lee, S.; Choi, D.H. Dynamic pricing and energy management for profit maximization in multiple smart electric vehicle charging stations: A privacy-preserving deep reinforcement learning approach. Appl. Energy 2021, 304, 117754.

- Kiaee, F. Integration of electric vehicles in smart grid using deep reinforcement learning. In Proceedings of the 11th International Conference on Information and Knowledge Technology (IKT), Tehran, Iran, 22–23 December 2020.

- Lee, S.; Choi, D.H. Reinforcement learning-based energy management of smart home with rooftop solar photovoltaic system, energy storage system, and home appliances. Sensors 2019, 19, 3937.

- Qiu, D.; Ye, Y.; Papadaskalopoulos, D.; Strbac, G. A deep reinforcement learning method for pricing electric vehicles with discrete charging levels. IEEE Trans. Ind. Appl. 2020, 56, 5901–5912.

- Al-Ogaili, A.S.; Hashim, T.J.T.; Rahmat, N.A.; Ramasamy, A.K.; Marsadek, M.B.; Faisal, M.; Hannan, M.A. Review on scheduling, clustering, and forecasting strategies for controlling electric vehicle charging: Challenges and recommendations. IEEE Access 2019, 7, 128353–128371.

- Amin, A.; Tareen, W.U.K.; Usman, M.; Ali, H.; Bari, I.; Horan, B.; Mekhilef, S.; Asif, M.; Ahmed, S.; Mahmood, A. A review of optimal charging strategy for electric vehicles under dynamic pricing schemes in the distribution charging network. Sustainability 2020, 12, 10160.

- Parsons, G.R.; Hidrue, M.K.; Kempton, W.; Gardner, M.P. Willingness to pay for vehicle-to-grid (V2G) electric vehicles and their contract terms. Energy Econ. 2014, 42, 313–324.

- Shariff, S.M.; Iqbal, D.; Alam, M.S.; Ahmad, F. A state of the art review of electric vehicle to grid (V2G) technology. IOP Conf. Ser. Mater. Sci. Eng. 2019, 561, 012103.

- Shao, S.; Zhang, T.; Pipattanasomporn, M.; Rahman, S. Impact of TOU rates on distribution load shapes in a smart grid with PHEV penetration. In Proceedings of the IEEE PES T&D 2010, New Orleans, LA, USA, 19–22 April 2010.

- Xu, X.; Niu, D.; Li, Y.; Sun, L. Optimal pricing strategy of electric vehicle charging station for promoting green behavior based on time and space dimensions. J. Adv. Transp. 2020, 2020, 8890233.

- Lu, Z.; Qi, J.; Zhang, J.; He, L.; Zhao, H. Modelling dynamic demand response for plug-in hybrid electric vehicles based on real-time charging pricing. IET Gener. Transm. Distrib. 2017, 11, 228–235.

- Mao, T.; Lau, W.H.; Shum, C.; Chung, H.S.H.; Tsang, K.F.; Tse, N.C.F. A regulation policy of EV discharging price for demand scheduling. IEEE Trans. Power Syst. 2018, 33, 1275–1288.

- Chekired, D.A.E.; Dhaou, S.; Khoukhi, L.; Mouftah, H.T. Dynamic pricing model for EV charging-discharging service based on cloud computing scheduling. In Proceedings of the 13th International Wireless Communications and Mobile Computing Conference, Valencia, Spain, 26–30 June 2017.

- Shakya, S.; Kern, M.; Owusu, G.; Chin, C.M. Neural network demand models and evolutionary optimisers for dynamic pricing. Knowl. Based Syst. 2012, 29, 44–53.

- Kim, B.G.; Zhang, Y.; van der Schaar, M.; Lee, J.W. Dynamic pricing and energy consumption scheduling with reinforcement learning. IEEE Trans. Smart Grid 2016, 7, 2187–2198.

- Cedillo, M.H.; Sun, H.; Jiang, J.; Cao, Y. Dynamic pricing and control for EV charging stations with solar generation. Appl. Energy 2022, 326, 119920.

- Moghaddam, V.; Yazdani, A.; Wang, H.; Parlevliet, D.; Shahnia, F. An online reinforcement learning approach for dynamic pricing of electric vehicle charging stations. IEEE Access 2020, 8, 130305–130313.

- Bitencourt, L.D.A.; Borba, B.S.M.C.; Maciel, R.S.; Fortes, M.Z.; Ferreira, V.H. Optimal EV charging and discharging control considering dynamic pricing. In Proceedings of the 2017 IEEE Manchester PowerTech, Manchester, UK, 18–22 June 2017.

- Ban, D.; Michailidis, G.; Devetsikiotis, M. Demand response control for PHEV charging stations by dynamic price adjustments. In Proceedings of the 2012 IEEE PES Innovative Smart Grid Technologies (ISGT), Washington, DC, USA, 16–20 January 2012.

- Xu, P.; Sun, X.; Wang, J.; Li, J.; Zheng, W.; Liu, H. Dynamic pricing at electric vehicle charging stations for waiting time reduction. In Proceedings of the 4th International Conference on Communication and Information Processing, Qingdao, China, 2–4 November 2018.

- Erdinc, O.; Paterakis, N.G.; Mendes, T.D.P.; Bakirtzis, A.G.; Catalão, J.P.S. Smart household operation considering bi-directional EV and ESS utilization by real-time pricing-based DR. IEEE Trans. Smart Grid 2015, 6, 1281–1291.

- Luo, C.; Huang, Y.F.; Gupta, V. Dynamic pricing and energy management strategy for EV charging stations under uncertainties. In Proceedings of the International Conference on Vehicle Technology and Intelligent Transport Systems—VEHITS, Rome, Italy, 23–24 April 2016.

- Guo, Y.; Xiong, J.; Xu, S.; Su, W. Two-stage economic operation of microgrid-like electric vehicle parking deck. IEEE Trans. Smart Grid 2016, 7, 1703–1712.

- Wang, B.; Hu, Y.; Xiao, Y.; Li, Y. An EV charging scheduling mechanism based on price negotiation. Future Internet 2018, 10, 40.

- Maestre, R.; Duque, J.; Rubio, A.; Arevalo, J. Reinforcement learning for fair dynamic pricing. In Proceedings of the Intelligent Systems Conference, London, UK, 6–7 September 2018.

- Ahmed, M.; Zheng, Y.; Amine, A.; Fathiannasab, H.; Chen, Z. The role of artificial intelligence in the mass adoption of electric vehicles. Joule 2021, 5, 2296–2322.

- Shahriar, S.; Al-Ali, A.R.; Osman, A.H.; Dahou, S.; Nijim, M. Machine learning approaches for EV charging behavior: A review. IEEE Access 2020, 8, 168980–168993.

- Erol-Kantarci, M.; Mouftah, H.T. Prediction-based charging of PHEVs from the smart grid with dynamic pricing. In Proceedings of the IEEE Local Computer Network Conference, Denver, CO, USA, 10–14 October 2010.

- Wang, F.; Gao, J.; Li, M.; Zhao, L. Autonomous PEV charging scheduling using Dyna-Q reinforcement learning. IEEE Trans. Veh. Technol. 2020, 69, 12609–12620.

- Dang, Q.; Wu, D.; Boulet, B. EV charging management with ANN-based electricity price forecasting. In Proceedings of the 2020 IEEE Transportation Electrification Conference & Expo (ITEC), Chicago, IL, USA, 23–26 June 2020.

- Lu, Y.; Gu, J.; Xie, D.; Li, Y. Integrated route planning algorithm based on spot price and classified travel objectives for EV users. IEEE Access 2019, 7, 122238–122250.

- Iversen, E.B.; Morales, J.M.; Madsen, H. Optimal charging of an electric vehicle using a Markov decision process. Appl. Energy 2014, 123, 1–12.

- Shang, Y.; Li, Z.; Shao, Z.; Jian, L. Distributed V2G dispatching via LSTM network within cloud-edge collaboration framework. In Proceedings of the 2021 IEEE/IAS Industrial and Commercial Power System Asia (I&CPS Asia), Chengdu, China, 18–21 July 2021.

- Li, S.; Gu, C.; Li, J.; Wang, H.; Yang, Q. Boosting grid efficiency and resiliency by releasing V2G potentiality through a novel rolling prediction-decision framework and deep-LSTM algorithm. IEEE Syst. J. 2021, 15, 2562–2570.

- Nogay, H.S. Estimating the aggregated available capacity for vehicle to grid services using deep learning and Nonlinear Autoregressive Neural Network. Sustain. Energy Grids Netw. 2022, 29, 100590.

- Shipman, R.; Roberts, R.; Waldron, J.; Naylor, S.; Pinchin, J.; Rodrigues, L.; Gillotta, M. We got the power: Predicting available capacity for vehicle-to-grid services using a deep recurrent neural network. Energy 2021, 221, 119813.

- Gautam, A.; Verma, A.K.; Srivastava, M. A novel algorithm for scheduling of electric vehicle using adaptive load forecasting with vehicle-to-grid integration. In Proceedings of the 2019 8th International Conference on Power Systems (ICPS), Jaipur, India, 20–22 December 2019.

- Kriekinge, G.V.; Cauwer, C.D.; Sapountzoglou, N.; Coosemans, T.; Messagie, M. Peak shaving and cost minimization using model predictive control for uni- and bi-directional charging of electric vehicles. Energy Rep. 2021, 7, 8760–8771.

- Zhang, X.; Chan, K.W.; Li, H.; Wang, H.; Qiu, J.; Wang, G. Deep-Learning-Based Probabilistic Forecasting of Electric Vehicle Charging Load With a Novel Queuing Model. IEEE Trans. Cybern. 2021, 51, 3157–3170.

- Zhong, J.; Xiong, X. An orderly EV charging scheduling method based on deep learning in cloud-edge collaborative environment. Adv. Civ. Eng. 2021, 2021, 6690610.

- Kriekinge, G.V.; Cauwer, C.D.; Sapountzoglou, N.; Coosemans, T.; Messagie, M. Day-ahead forecast of electric vehicle charging demand with deep neural networks. World Electr. Veh. J. 2021, 12, 178.

- Sun, D.; Ou, Q.; Yao, X.; Gao, S.; Wang, Z.; Ma, W.; Li, W. Integrated human-machine intelligence for EV charging prediction in 5G smart grid. EURASIP J. Wirel. Commun. Netw. 2020, 2020, 139.

- Wang, K.; Gu, L.; He, X.; Guo, S.; Sun, Y.; Vinel, A.; Shen, J. Distributed energy management for vehicle-to-grid networks. IEEE Netw. 2017, 31, 22–28.

- Patil, V.; Sindhu, M.R. An intelligent control strategy for vehicle-to-grid and grid-to-vehicle energy transfer. IOP Conf. Ser. Mater. Sci. Eng. 2019, 561, 012123.

- Wang, X.; Wang, J.; Liu, J. Vehicle to grid frequency regulation capacity optimal scheduling for battery swapping station using deep Q-network. IEEE Trans. Ind. Inform. 2021, 17, 1342–1351.

- Lu, Y.; Li, Y.; Xie, D.; Wei, E.; Bao, X.; Chen, H.; Zhong, X. The application of improved random forest algorithm on the prediction of electric vehicle charging load. Energies 2018, 11, 3207.

- Boulakhbar, M.; Farag, M.; Benabdelaziz, K.; Kousksou, T.; Zazi, M. A deep learning approach for prediction of electrical vehicle charging stations power demand in regulated electricity markets: The case of Morocco. Clean. Energy Syst. 2022, 3, 100039.

- Lee, E.H.P.; Lukszo, Z.; Herder, P. Conceptualization of vehicle-to-grid contract types and their formalization in agent-based models. Complexity 2018, 2018, 3569129.

- Gao, Y.; Chen, Y.; Wang, C.Y.; Liu, K.J.R. Optimal contract design for ancillary services in vehicle-to-grid networks. In Proceedings of the 2012 IEEE Third International Conference on Smart Grid Communications (SmartGridComm), Tainan, Taiwan, 5–8 November 2012.

- Wahyuda, S.B. Dynamic pricing in electricity: Research potential in Indonesia. Procedia Manuf. 2015, 4, 300–306.

- Dutschke, E.; Paetz, A.G. Dynamic electricity pricing—Which programs do consumers prefer? Energy Policy 2013, 59, 226–234.

- Yoshida, Y.; Tanaka, K.; Managi, S. Which dynamic pricing rule is most preferred by consumers?—Application of choice experiment. Econ. Struct. 2017, 6, 4.

- Latinopoulos, C.; Sivakumar, A.; Polak, J.W. Response of electric vehicle drivers to dynamic pricing of parking and charging services: Risky choice in early reservations. Transp. Res. Part C 2017, 80, 175–189.

- Apostolaki-Iosifidou, E.; Codani, P.; Kempton, W. Measurement of power loss during electric vehicle charging and discharging. Energy 2017, 127, 730–742.

- Myers, E.H.; Surampudy, M.; Saxena, A. Utilities and Electric Vehicles: Evolving to Unlock Grid Value; Smart Electric Power Alliance: Washington, DC, USA, 2018.

- Väre, V. The Vehicle-to-Grid Boom Is around the Corner. Virta Global. 7 December 2020. Available online: https://www.virta.global/blog/vehicle-to-grid-boom-is-around-the-corner (accessed on 19 October 2022).

- Barre, A.; Deguilhem, B.; Grolleau, S.; Gerard, M.; Suard, F.; Riu, D. A review on lithium-ion battery ageing mechanisms and estimations for automotive applications. J. Power Sources 2013, 241, 680–689.

- Guo, J.; Yang, J.; Lin, Z.; Serrano, C.; Cortes, A.M. Impact analysis of V2G services on EV battery degradation—A review. In Proceedings of the 2019 IEEE Milan PowerTech, Milan, Italy, 23–27 June 2019.

- Meng, J.; Boukhnifer, M.; Diallo, D. Lithium-Ion battery monitoring and observability analysis with extended equivalent circuit model. In Proceedings of the 28th Mediterranean Conference on Control and Automation (MED), Saint-Raphaël, France, 15–18 September 2020.

- Meng, J.; Yue, M.; Diallo, D. A degradation empirical-model-free battery end-of-life prediction framework based on Gaussian process regression and Kalman filter. IEEE Trans. Transp. Electrif. 2022, 1–11.

- Wang, J.; Deng, Z.; Yu, T.; Yoshida, A.; Xu, L.; Guan, G.; Abudula, A. State of health estimation based on modified Gaussian process regression for lithium-ion batteries. J. Energy Storage 2022, 51, 104512.

- Chaoui, H.; Ibe-Ekeocha, C.C. State of charge and state of health estimation for Lithium batteries using recurrent neural networks. IEEE Trans. Veh. Technol. 2017, 66, 8773–8783.

- Petit, M.; Prada, E.; Sauvant-Moynot, V. Development of an empirical aging model for Li-ion batteries and application to assess the impact of Vehicle-to-Grid strategies on battery lifetime. Appl. Energy 2016, 172, 398–407.

- Pelletier, S.; Jabali, O.; Laporte, G.; Veneroni, M. Battery degradation and behaviour for electric vehicles: Review and numerical analyses of several models. Transp. Res. Part B Methodol. 2017, 103, 158–187.

- Prochazka, P.; Cervinka, D.; Martis, J.; Cipin, R.; Vorel, P. Li-Ion battery deep discharge degradation. ECS Trans. 2016, 74, 31–36.

- Guo, R.; Lu, L.; Ouyang, M.; Feng, X. Mechanism of the entire overdischarge process and overdischarge-induced internal short circuit in lithium-ion batteries. Sci. Rep. 2016, 6, 30248.

- Krieger, E.M.; Arnold, C.B. Effects of undercharge and internal loss on the rate dependence of battery charge storage efficiency. J. Power Sources 2012, 210, 286–291.

- Quinlan, J.R. Induction of decision trees. Mach. Learn. 1986, 1, 81–106.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Cover, T.; Hart, P.E. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

- Jain, A.K.; Mao, J.; Mohiuddin, K.M. Artificial neural networks: A tutorial. Computer 1996, 29, 31–44.

- O’Shea, K.; Nash, R. An introduction to convolutional neural networks. arXiv 2015, arXiv:1511.08458.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Cho, K.; Merrienboer, B.v.; Bahdanau, D.; Bengio, Y. On the properties of neural machine translation: Encoder-decoder approaches. arXiv 2014, arXiv:1409.1259, 1–9.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Xydas, E.S.; Marmaras, C.E.; Cipcigan, L.M.; Hassan, A.S.; Jenkins, N. Forecasting electric vehicle charging demand using Support Vector Machines. In Proceedings of the 48th International Universities’ Power Engineering Conference (UPEC), Dublin, Ireland, 2–5 September 2013.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modelling. arXiv 2014, arXiv:1412.3555.

- Gruber, N.; Jockisch, A. Are GRU cells more specific and LSTM cells more sensitive in motive classification of text? Front. Artif. Intell. 2020, 3, 40.

- Mateus, B.C.; Mendes, M.; Farinha, J.T.; Assis, R.; Cardoso, A.M. Comparing LSTM and GRU models to predict the condition of a pulp paper press. Energies 2021, 14, 6958.

- Jin, H.; Lee, S.; Nengroo, S.H.; Har, D. Development of charging/discharging scheduling algorithm for economical and energy-efficient operation of multi-EV charging station. Appl. Sci. 2022, 12, 4786.

- Cahuantzi, R.; Chen, X.; Güttel, S. A comparison of LSTM and GRU networks for learning symbolic sequences. arXiv 2021, arXiv:2107.02248.

- Shipman, R.; Roberts, R.; Waldron, J.; Rimmer, C.; Rodrigues, L.; Gillotta, M. Online machine learning of available capacity for vehicle-to-grid services during the coronavirus pandemic. Energies 2021, 14, 7176.

- Raju, M.P.; Laxmi, A.J. IOT based online load forecasting using machine learning algorithms. Procedia Comput. Sci. 2020, 171, 551–560.

- Laouafi, A.; Mordjaoui, M.; Haddad, S.; Boukelia, T.E.; Ganouche, A. Online electricity demand forecasting based on an effective forecast combination methodology. Electr. Power Syst. Res. 2017, 148, 35–47.

- Krannichfeldt, L.V.; Wang, Y.; Hug, G. Online ensemble learning for load forecasting. IEEE Trans. Power Syst. 2021, 36, 545–548.

- Fekri, M.N.; Patel, H.; Grolinger, K.; Sharma, V. Deep learning for load forecasting with smart meter data: Online adaptive recurrent neural network. Appl. Energy 2021, 282, 116177.

- Zhang, Y.; Li, M.; Dong, Z.Y.; Meng, K. Probabilistic anomaly detection approach for data-driven wind turbine condition monitoring. CSEE J. Power Energy Syst. 2019, 5, 149–158.

- Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73.

- Kennedy, J.; Eberhart, R.C. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995.

- Storn, R.; Price, K. Differential evolution—A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- Karaboga, D.; Basturk, B. Artificial bee colony (ABC) optimization algorithm for solving constrained optimization problems. In Proceedings of the Foundations of Fuzzy Logic and Soft Computing, Cancun, Mexico, 18–21 June 2007.

- Ke, B.R.; Lin, Y.H.; Chen, H.Z.; Fang, S.C. Battery charging and discharging scheduling with demand response for an electric bus public transportation system. Sustain. Energy Technol. Assess. 2020, 40, 100741.

- Farahani, H.F. Improving voltage unbalance of low-voltage distribution networks using plug-in electric vehicles. J. Clean. Prod. 2017, 148, 336–346.

- Gong, L.; Cao, W.; Liu, K.; Zhao, J.; Li, X. Spatial and temporal optimization strategy for plug-in electric vehicle charging to mitigate impacts on distribution network. Energies 2018, 11, 1373.

- Dogan, A.; Bahceci, S.; Daldaban, F.; Alci, M. Optimization of charge/discharge coordination to satisfy network requirements using heuristic algorithms in vehicle-to-grid concept. Adv. Electr. Comput. Eng. 2018, 18, 121–130.

- Qiu, H.; Liu, Y. Novel heuristic algorithm for large-scale complex optimization. Procedia Comput. Sci. 2016, 80, 744–751.

- Zadeh, L. Fuzzy sets. Inf. Control 1965, 8, 338–353.

- Yan, L.; Chen, X.; Zhou, J.; Chen, Y.; Wen, J. Deep reinforcement learning for continuous electric vehicles charging control with dynamic user behaviors. IEEE Trans. Smart Grid 2021, 12, 5124–5134.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; The MIT Press: London, UK, 2018.

- Watkins, C.J.C.H.; Dayan, P. Q-learning. Mach. Learn. 1992, 8, 279–292.

- Mhaisen, N.; Fetais, N.; Massoud, A. Real-time scheduling for electric vehicles charging/discharging using reinforcement learning. In Proceedings of the 2020 IEEE International Conference on Informatics, IoT, and Enabling Technologies (ICIoT), Doha, Qatar, 2–5 February 2020.

- Sun, X.; Qiu, J. A customized voltage control strategy for electric vehicles in distribution networks with reinforcement learning method. IEEE Trans. Ind. Inform. 2021, 17, 6852–6863.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing Atari with deep reinforcement learning. arXiv 2013, arXiv:1312.5602.

- Hasselt, H.v.; Guez, A.; Silver, D. Deep reinforcement learning with double Q-learning. arXiv 2015, arXiv:1509.06461.

- Zeng, P.; Liu, A.; Zhu, C.; Wang, T.; Zhang, S. Trust-based multi-agent imitation learning for green edge computing in smart cities. IEEE Trans. Green Commun. Netw. 2022, 6, 1635–1648.

- Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Harley, T.; Lillicrap, T.P.; Silver, D.; Kavukcuoglu, K. Asynchronous methods for deep reinforcement learning. arXiv 2016, arXiv:1602.01783.

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. In Proceedings of the International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016.

- Haarnoja, T.; Zhou, A.; Abbeel, P.; Levine, S. Soft Actor-Critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018.

- Meeus, L.; Purchala, K.; Belmans, R. Development of the internal electricity market in Europe. Electr. J. 2005, 18, 25–35.

- Liu, H.; Zhang, Y.; Zheng, S.; Li, Y. Electric vehicle power trading mechanism based on blockchain and smart contract in V2G network. IEEE Access 2019, 7, 160546–160558.

- Nhede, N. Smart Energy International, Synergy BV. 13 April 2019. Available online: https://www.smart-energy.com/industry-sectors/electric-vehicles/as-energy-gets-smarter-time-of-use-tariffs-spread-globally/ (accessed on 6 October 2022).

- Guo, Y.; Liu, X.; Yan, Y.; Zhang, N.; Su, W. Economic analysis of plug-in electric vehicle parking deck with dynamic pricing. In Proceedings of the 2014 IEEE PES General Meeting | Conference & Exposition, National Harbor, MD, USA, 27–31 July 2014.

- Wolbertus, R.; Gerzon, B. Improving electric vehicle charging station efficiency through pricing. J. Adv. Transp. 2018, 2018, 4831951.

- Geng, B.; Mills, J.K.; Sun, D. Two-stage charging strategy for plug-in electric vehicles at the residential transformer level. IEEE Trans. Smart Grid 2013, 4, 1442–1452.

- Zethmayr, J.; Kolata, D. Charge for less: An analysis of hourly electricity pricing for electric vehicles. World Electr. Veh. J. 2019, 10, 6.

- Sather, G.; Granado, P.C.D.; Zaferanlouei, S. Peer-to-peer electricity trading in an industrial site: Value of buildings flexibility on peak load reduction. Energy Build. 2021, 236, 110737.

- Liu, N.; Yu, X.; Wang, C.; Li, C.; Ma, L.; Lei, J. Energy-sharing model with price-based demand response for microgrids of peer-to-peer prosumers. IEEE Trans. Power Syst. 2017, 32, 3569–3583.

- Marwala, T.; Hurwitz, E. Artificial Intelligence and Economic Theory: Skynet in the Market; Springer: Cham, Switzerland, 2017.

- Shi, L.; Lv, T.; Wang, Y. Vehicle-to-grid service development logic and management formulation. J. Mod. Power Syst. Clean Energy 2019, 7, 935–947.

- Hazra, A. Using the confidence interval confidently. J. Thorac. Dis. 2017, 9, 4125–4130.

- Gershman, S.J.; Daw, N.D. Reinforcement learning and episodic memory in humans and animals: An integrative framework. Annu. Rev. Psychol. 2017, 68, 101–128.

- Botvinick, M.; Ritter, S.; Wang, J.X.; Kurth-Nelson, Z.; Blundell, C.; Hassabis, D. Reinforcement learning, fast and slow. Trends Cogn. Sci. 2019, 23, 408–422.

- Hu, Z.; Wan, K.; Gao, X.; Zhai, Y. A dynamic adjusting reward function method for deep reinforcement learning with adjustable parameters. Math. Probl. Eng. 2019, 2019, 7619483.

- Wu, J.; Chen, X.Y.; Zhang, H.; Xiong, L.D.; Lei, H.; Deng, S.H. Hyperparameter optimization for machine learning models based on Bayesian optimization. J. Electron. Sci. Technol. 2019, 17, 26–40.

- Feurer, M.; Hutter, F. Hyperparameter Optimization. In Automated Machine Learning; Springer: Cham, Switzerland, 2019; pp. 3–33.

- Zhang, N.; Sun, Q.; Yang, L.; Li, Y. Event-triggered distributed hybrid control scheme for the integrated energy system. IEEE Trans. Ind. Inform. 2022, 18, 835–846.

| Project Name | Country | No. of Chargers | Timespan | Service |
|---|---|---|---|---|
| Realising Electric Vehicle to Grid Services | Australia | 51 | 2020–2022 | Frequency response, reserve |
| Parker | Denmark | 50 | 2016–2018 | Arbitrage, distribution services, frequency response |
| Bidirektionales Lademanagement—BDL | Germany | 50 | 2021–2022 | Arbitrage, frequency response, time shifting |
| Fiat-Chrysler V2G | Italy | 600 | 2019–2021 | Load balancing |
| Leaf to home | Japan | 4000 | 2012–ongoing | Emergency backup, time shifting |
| Utrecht V2G charge hubs | Netherlands | 80 | 2018–ongoing | Arbitrage |
| Share the Sun/Deeldezon Project | Netherlands | 80 | 2019–2021 | Distribution services, frequency response, time shifting |
| VGI core comp. dev. and V2G demo. using CC1 | South Korea | 100 | 2018–2022 | Arbitrage, frequency response, reserve, time shifting |
| SunnYparc | Switzerland | 250 | 2022–2025 | Time shifting, pricing scheme testing, reserve |
| Electric Nation Vehicle to Grid | UK | 100 | 2020–2022 | Distribution services, reserve, time shifting |
| OVO Energy V2G | UK | 320 | 2018–2021 | Arbitrage |
| Powerloop: Domestic V2G Demonstrator Project | UK | 135 | 2018–ongoing | Arbitrage, distribution services, emergency backup, time shifting |
| UK Vehicle-2-Grid (V2G) | UK | 100 | 2016–ongoing | Support power grid |
| INVENT—UCSD/Nissan/Nuvve | US | 50 | 2017–2020 | Distribution services, frequency response, time shifting |
| SmartMAUI, Hawaii | US | 80 | 2012–2015 | Distribution services, frequency response, time shifting |

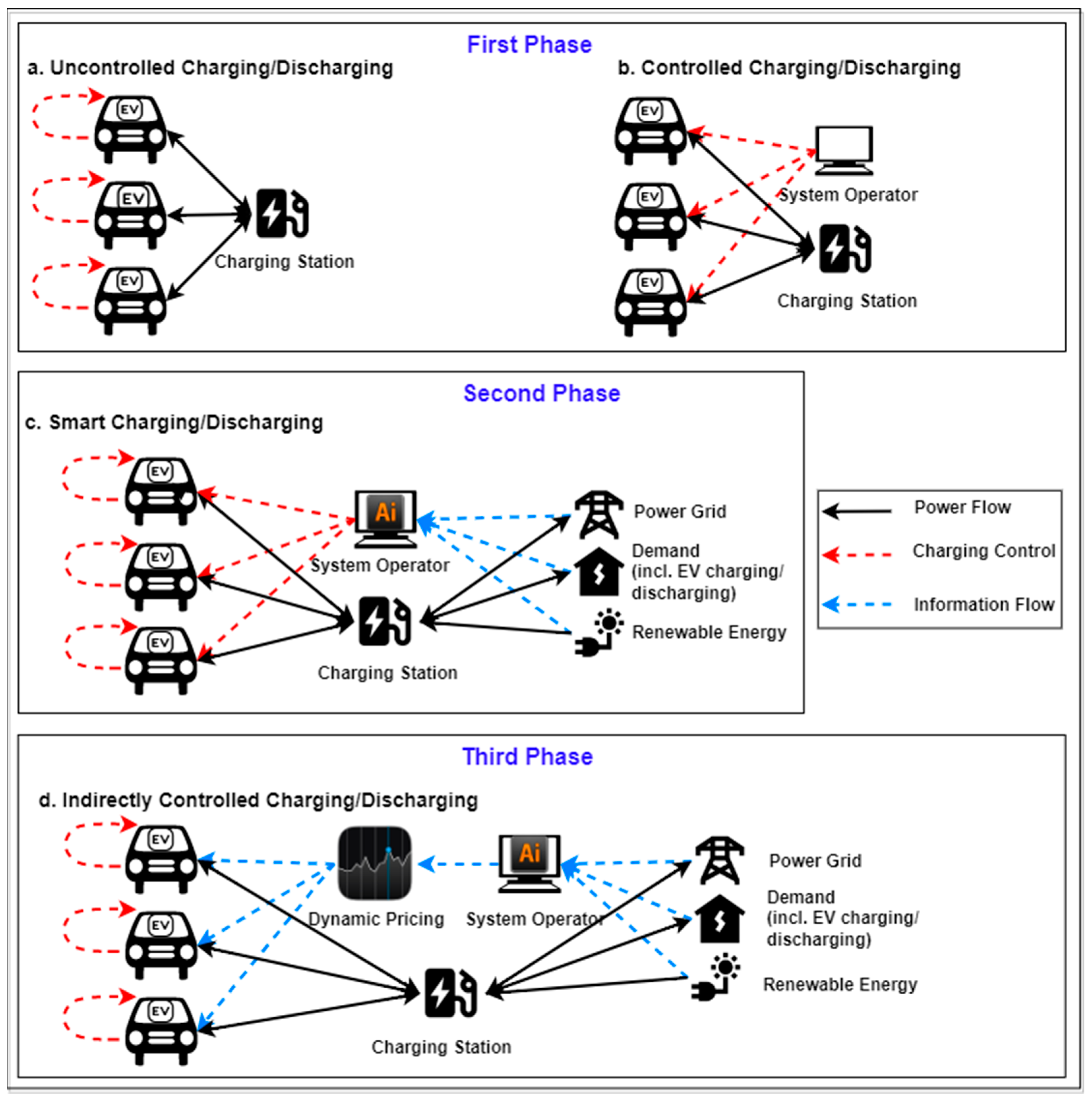

| Techniques | Benefits | Challenges |
|---|---|---|
| Uncontrolled | | |
| Controlled | | |
| Smart | | |
| Indirectly controlled | | |

| Advantages | Disadvantages |
|---|---|
| | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, Q.; Folly, K.A. Application of Artificial Intelligence for EV Charging and Discharging Scheduling and Dynamic Pricing: A Review. Energies 2023, 16, 146. https://doi.org/10.3390/en16010146
