Gaze Point in the Evacuation Drills: Analysis of Eye Movement at the Indoor Wayfinding

Abstract

1. Introduction

2. Experimental Method

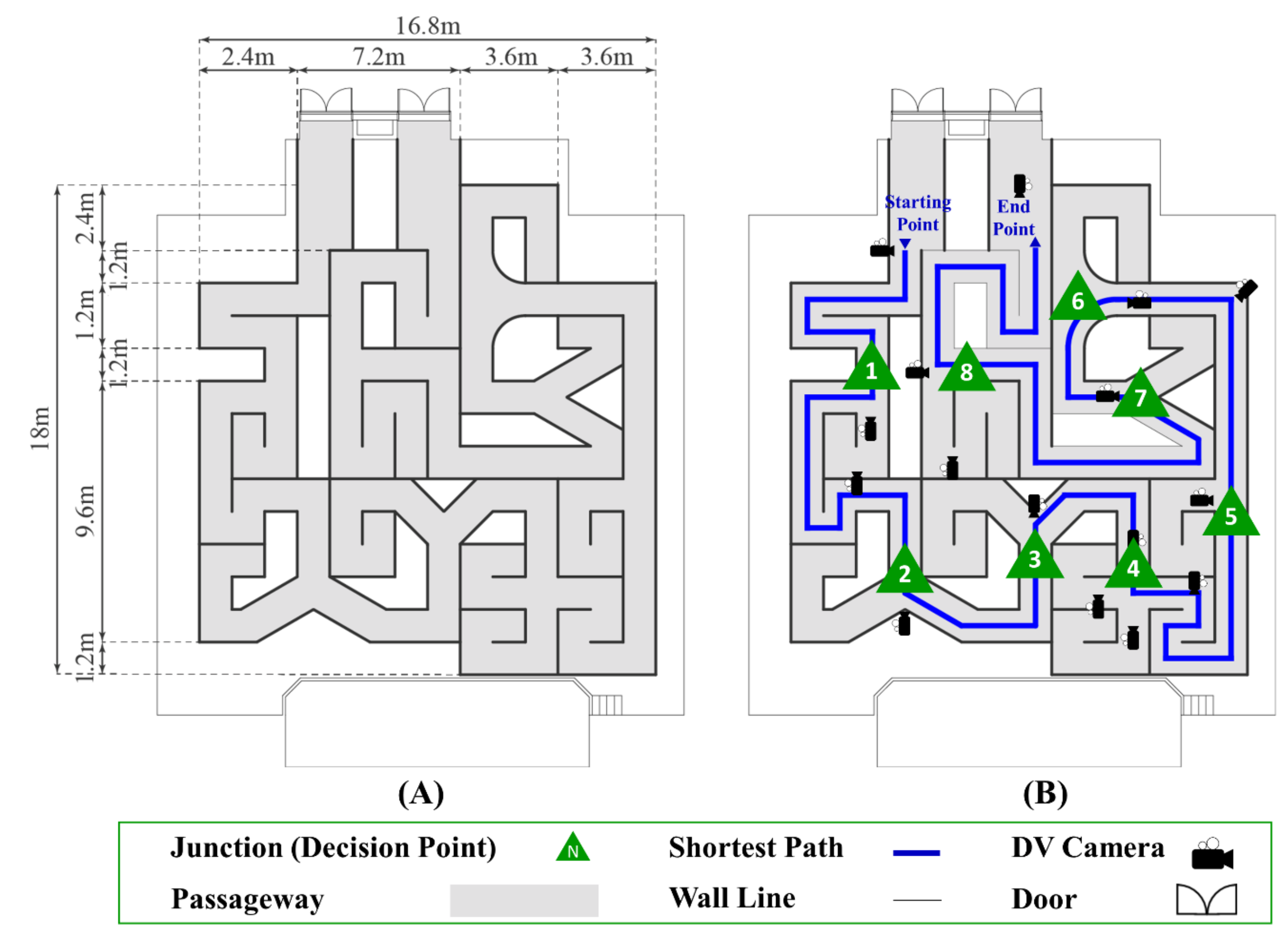

2.1. Maze Set Production Process and Layout

2.2. Participants and Experimental Procedures

- Participants should have no prior knowledge of the maze set (its structure and shape, the locations of the entrance and exits, or its routes), because the academic literature emphasizes that evacuating occupants are strongly influenced by previous experience (e.g., familiarity with the space [14]).

- Participants should not need glasses, since they have to wear eye trackers. They should also not feel any discomfort (e.g., dizziness) while wearing the eye trackers.

- The supervisor asked the subjects to find the end point as quickly as possible, as if they were evacuating in an emergency situation.

2.3. Data Gathering and Analysis Method

3. Experimental Results

3.1. Participants Gaze Point in Maze Set

3.2. Gaze Point of the Corridor and Junction

3.3. Gaze Point with Respect to the Type of Junction

4. Conclusions

- The results showed that participants looked most often at heights between 100 cm and 150 cm, both in the corridors and at junctions.

- The gaze points of the evacuees were also quantified in the horizontal and vertical directions for each type of junction at which wayfinding decisions occur. The distributions differed markedly by junction type; in particular, participants tended to look in one particular direction when only one alternative route was available.

- As the angle of a Y junction increases, so does the share of gaze duration in area C of the horizontal AOIs. Likewise, where the walls meet at a right angle, as at a T junction, the share of gaze duration in area C is higher than at a junction with curved walls.

- The results show that current signage heights do not align with most people’s gaze points, which suggests that signage should be positioned according to the type of junction.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Veeraswamy, A.; Galea, E.; Lawrence, P. Wayfinding Behavior within Buildings: An International Survey. Fire Saf. Sci. 2011, 10, 735–748.

- Shields, T.J.; Boyce, K.E. Study of evacuation from large retail stores. Fire Saf. J. 2000, 35, 25–49.

- Sime, J.D. Movement toward the familiar: Person and Place Affiliation in a Fire Entrapment Setting. Environ. Behav. 1985, 17, 697–724.

- Nilsson, D.; Frantzich, H.; Saunders, W.L. Influencing exit choice in the event of a fire evacuation. Fire Saf. Sci. 2008, 9, 341–352.

- Kobes, M.; Helsloot, I.; de Vries, B.; Post, J.G.; Oberijé, N.; Groenewegen, K. Way finding during fire evacuation; an analysis of unannounced fire drills in a hotel at night. Build. Environ. 2010, 45, 537–548.

- Gwynne, S.M.V.; Kuligowski, E.D.; Kinsey, M.J.; Hulse, L.M. Modelling and influencing human behaviour in fire. Fire Mater. 2017, 41, 412–430.

- Vilar, E.; Rebelo, F.; Noriega, P.; Duarte, E.; Mayhorn, C.B. Effects of competing environmental variables and signage on route-choices in simulated everyday and emergency wayfinding situations. Ergonomics 2014, 57, 511–524.

- Vilar, E.; Rebelo, F.; Noriega, P. Smart Systems in Emergency Wayfinding: A Literature Review. In Lecture Notes in Computer Science (including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2018; Volume 10919, pp. 379–388. ISBN 9783319918020.

- Vilar, E.; Rebelo, F.; Noriega, P. Indoor human wayfinding performance using vertical and horizontal signage in virtual reality. Hum. Factors Ergon. Manuf. Serv. Ind. 2014, 24, 601–615.

- Mantovani, G.; Gamberini, L.; Martinelli, M.; Varotto, D. Exploring the Suitability of Virtual Environments for Safety Training: Signals, Norms and Ambiguity in a Simulated Emergency Escape. Cogn. Technol. Work 2001, 3, 33–41.

- National Fire Protection Association. NFPA 101: Life Safety Code® Handbook, 2018 Edition; National Fire Protection Association: Quincy, MA, USA, 2018.

- International Organization for Standardization. ISO 16069:2017 Graphical Symbols-Safety Signs-Safety Way Guidance Systems (SWGS); International Organization for Standardization: Geneva, Switzerland, 2017.

- Ministry of Public Administration and Security. NFSC (National Fire Safety Code) 303: Fire Safety Regulation of Exit Light and Exit Sign; Ministry of Public Administration and Security: Seoul, Korea, 2016.

- Xie, H.; Filippidis, L.; Galea, E.R.; Blackshields, D.; Lawrence, P.J. Experimental analysis of the effectiveness of emergency signage and its implementation in evacuation simulation. Fire Mater. 2012, 36, 367–382.

- Jeon, G.Y. An Analysis of Evacuation System Development for Underground Space with Consideration to Behaviors and Cognitive Characteristics of Humans under Emergency Situations. Ph.D. Thesis, Kyungpook University, Daegu, Korea, 2005.

- Xie, H.; Galea, E.R.; Lawrence, P.J. Experimental Study of the Effectiveness of Dynamic Signage System. In Proceedings of the 13th International Fire Science and Engineering Conference, London, UK, 24–26 June 2013; pp. 967–978.

- Galea, E.; Xie, H.; Lawrence, P. Experimental and Survey Studies on the Effectiveness of Dynamic Signage Systems. Fire Saf. Sci. 2014, 11, 1129–1143.

- O’Neill, M.J. Effects of signage and floor plan configuration on wayfinding accuracy. Environ. Behav. 1991, 23, 553–574.

- Frantzich, H.; Nilsson, D. Evacuation Experiments in a Smoke Filled Tunnel. Third Int. Symp. Hum. Behav. Fire 2004, 229–238.

- Jin, T.; Yamada, T. Experimental study on effect of escape guidance in fire smoke by travelling flashing of light sources. Fire Saf. Sci. 1994, 4, 705–714.

- Galea, E.R.; Xie, H.; Deere, S.; Cooney, D.; Filippidis, L. Evaluating the effectiveness of an improved active dynamic signage system using full scale evacuation trials. Fire Saf. J. 2017, 91, 908–917.

- Galea, E.R.; Xie, H.; Deere, S.; Cooney, D.; Filippidis, L. An international survey and full-scale evacuation trial demonstrating the effectiveness of the active dynamic signage system concept. In Proceedings of the Fire and Materials, San Francisco, CA, USA, 6–8 February 2017; Volume 41, pp. 493–513.

- Kwee-Meier, S.T.; Mertens, A.; Schlick, C.M. Age-related differences in decision-making for digital escape route signage under strenuous emergency conditions of tilted passenger ships. Appl. Ergon. 2017, 59, 264–273.

- Nilsson, D.; Frantzich, H.; Saunders, W. Coloured flashing lights to mark emergency exits: Experiences from evacuation experiments. Fire Saf. Sci. 2005, 8, 569–579.

- Kinateder, M.; Warren, W.H.; Schloss, K.B. What color are emergency exit signs? Egress behavior differs from verbal report. Appl. Ergon. 2019, 75, 155–160.

- Zhang, Z.; Jia, L.; Qin, Y. Optimal number and location planning of evacuation signage in public space. Saf. Sci. 2017, 91, 132–147.

- Church, R.; ReVelle, C. The maximal covering location problem. Pap. Reg. Sci. 1974, 32, 101–118.

- Chen, C.; Li, Q.; Kaneko, S.; Chen, J.; Cui, X. Location optimization algorithm for emergency signs in public facilities and its application to a single-floor supermarket. Fire Saf. J. 2009, 44, 113–120.

- Vilar, E.; Rebelo, F.; Noriega, P.; Teles, J.; Mayhorn, C. The influence of environmental features on route selection in an emergency situation. Appl. Ergon. 2013, 44, 618–627.

- Jeon, Y.T. A Study on Route Choice Patterns According to Agent Characteristics for Algorithms Complement of Evacuation Simulation Tools. Master’s Thesis, Pukyong National University, Busan, Korea, 2017.

- Scharine, A.A.; McBeath, M.K. Right-Handers and Americans Favor Turning to the Right. Hum. Factors J. Hum. Factors Ergon. Soc. 2005, 44, 248–256.

- Bianconi, F.; Filippucci, M.; Felicini, N. Immersive Wayfinding: Virtual Reconstruction and Eye-Tracking for Orientation Studies Inside Complex Architecture. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 143–150.

- Fu, L.; Cao, S.; Song, W.; Fang, J. The influence of emergency signage on building evacuation behavior: An experimental study. Fire Mater. 2019, 43, 22–33.

- Filippidis, L.; Galea, E.R.; Gwynne, S.; Lawrence, P.J. Representing the influence of signage on evacuation behavior within an evacuation model. J. Fire Prot. Eng. 2006, 16, 37–73.

- Jeon, E.-M.; Choi, J.-H.; Hong, W.-H. Analysis on Characteristics of Human Behavior and Cognitive Effects according to Types of Egress Route Instruction in a Large-scale Mazy Facility. J. Archit. Inst. Korea Plan. Des. 2011, 27, 51–58.

- Zhang, J.; Klingsch, W.; Schadschneider, A.; Seyfried, A. Transitions in pedestrian fundamental diagrams of straight corridors and T-junctions. J. Stat. Mech. Theory Exp. 2011, 2011, P06004.

- Korea Ministry of Government Legislation. Enforcement Decree of the Building Act; Korea Ministry of Government Legislation: Sejong City, Korea, 2017.

- Bae, Y.H.; Kim, Y.C.; Oh, R.S.; Son, J.Y.; Hong, W.H.; Choi, J.H. Gaze point in the maze set evacuation drills: analysis of eye movement at the indoor wayfinding. In Proceedings of the 15th International Fire Science and Engineering Conference–Interflam, London, UK, 1–3 July 2019; pp. 739–750.

| Area | Average Duration Time (ms) | Proportion (%) | Kruskal-Wallis Mean Rank | df | sig |
|---|---|---|---|---|---|
| A1 | 6,603.72 | 8.85 | 18.17 | 4 | 0.000*** |
| A2 | 21,984.92 | 29.46 | 39.33 | | |
| A3 | 27,145.64 | 36.37 | 45.25 | | |
| A4 | 11,783.41 | 15.79 | 29.50 | | |
| O | 7,114.10 | 9.53 | 20.25 | | |
| Total | 74,631.79 | 100.00 | | | |
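The significance column can be cross-checked from the mean ranks alone. The sketch below is an illustration, not the authors' analysis: it assumes every vertical AOI contributes the same number of observations, ignores the tie correction (which can only raise H), and uses the usual chi-square approximation with df = k − 1 = 4. With equal group sizes the average of the group mean ranks equals (N + 1)/2, which for these values implies N = 60, i.e. 12 observations per AOI; that count is inferred from the table, not stated in the text. The function names are hypothetical.

```python
import math


def infer_group_size(mean_ranks):
    """Infer n per group, assuming every group has the same size.

    With equal sizes the average of the group mean ranks equals (N + 1) / 2,
    where N is the total number of ranked observations.
    """
    k = len(mean_ranks)
    n_total = round(2 * sum(mean_ranks) / k - 1)
    return n_total // k


def kruskal_h_from_mean_ranks(mean_ranks, n_per_group):
    """Kruskal-Wallis H from group mean ranks (equal group sizes, no tie correction)."""
    n_total = len(mean_ranks) * n_per_group
    grand_mean = (n_total + 1) / 2  # mean of the ranks 1..N
    ss = sum(n_per_group * (r - grand_mean) ** 2 for r in mean_ranks)
    return 12.0 / (n_total * (n_total + 1)) * ss


def chi2_sf_even_df(x, df):
    """Upper-tail chi-square probability; this closed form is valid for even df only."""
    if df <= 0 or df % 2:
        raise ValueError("this closed form needs a positive, even df")
    half = x / 2.0
    term = total = 1.0
    for j in range(1, df // 2):
        term *= half / j  # builds (x/2)^j / j!
        total += term
    return math.exp(-half) * total


if __name__ == "__main__":
    # Mean ranks for A1, A2, A3, A4 and O in the maze-wide table
    maze = [18.17, 39.33, 45.25, 29.50, 20.25]
    n = infer_group_size(maze)
    h = kruskal_h_from_mean_ranks(maze, n)
    p = chi2_sf_even_df(h, df=len(maze) - 1)
    print(f"n per group = {n}, H = {h:.2f}, p = {p:.4f}")
```

For these mean ranks the sketch gives H of about 21.8 and p well below 0.001. Applied to the junction mean ranks (21.71, 39.42, 40.50, 30.79, 20.08), it gives H of about 14.4 and p of about 0.006, in line with the reported significance. Because the reported mean ranks are rounded and ties are ignored, these values are approximate.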

| Type | Area | Average Duration Time (ms) | Proportion (%) | Kruskal-Wallis Mean Rank | df | sig |
|---|---|---|---|---|---|---|
| Corridor | A1 | 5,048.18 | 8.08 | 17.83 | 4 | 0.000*** |
| | A2 | 18,430.03 | 29.50 | 38.42 | | |
| | A3 | 23,324.49 | 37.34 | 45.33 | | |
| | A4 | 9,563.66 | 15.31 | 29.83 | | |
| | O | 6,106.12 | 9.77 | 21.08 | | |
| | Total | 62,472.48 | 100.00 | | | |
| Junction | A1 | 1,555.54 | 12.79 | 21.71 | 4 | 0.006*** |
| | A2 | 3,554.89 | 29.24 | 39.42 | | |
| | A3 | 3,821.15 | 31.43 | 40.50 | | |
| | A4 | 2,219.75 | 18.26 | 30.79 | | |
| | O | 1,007.98 | 8.28 | 20.08 | | |
| | Total | 12,159.31 | 100.00 | | | |
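The corridor and junction rows decompose the maze-wide figures: for every AOI, the corridor and junction averages add up to the maze-wide average, so both tables use the same unit (milliseconds), and each proportion is an AOI's duration divided by the column total. The sketch below recomputes both relationships from the tabulated values; the constant and helper names are hypothetical. Recomputed proportions agree with the reported ones to within about 0.01 percentage points, the small gaps presumably coming from rounding.

```python
# Average gaze durations (ms) per vertical AOI, copied from the tables above.
AREAS = ["A1", "A2", "A3", "A4", "O"]
CORRIDOR_MS = [5048.18, 18430.03, 23324.49, 9563.66, 6106.12]
JUNCTION_MS = [1555.54, 3554.89, 3821.15, 2219.75, 1007.98]
MAZE_MS = [6603.72, 21984.92, 27145.64, 11783.41, 7114.10]


def proportions(durations):
    """Share of the total gaze duration per AOI, in percent."""
    total = sum(durations)
    return [100.0 * d / total for d in durations]


def combine(*parts):
    """Element-wise sum of per-AOI durations from several location types."""
    return [sum(values) for values in zip(*parts)]


if __name__ == "__main__":
    combined = combine(CORRIDOR_MS, JUNCTION_MS)
    for area, c, m in zip(AREAS, combined, MAZE_MS):
        print(f"{area}: corridor + junction = {c:,.2f} ms, maze-wide = {m:,.2f} ms")
    print("junction shares (%):", [round(p, 2) for p in proportions(JUNCTION_MS)])
```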

| AOI group | AOI | ALL (%) | Junction 1 (%) | Junction 2 (%) | Junction 3 (%) | Junction 4 (%) | Junction 5 (%) | Junction 6 (%) | Junction 7 (%) | Junction 8 (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Horizontal AOIs | L | 25.36 | 2.27 | 23.52 | 46.99 | 40.16 | 30.26 | 4.16 | 39.00 | 8.24 |
| | C | 37.96 | 45.41 | 38.62 | 35.05 | 34.46 | 41.65 | 34.96 | 17.85 | 66.11 |
| | R | 28.40 | 52.32 | 37.86 | 17.96 | 22.37 | 2.69 | 35.52 | 41.25 | 25.65 |
| | O | 8.28 | 0.00 | 0.00 | 0.00 | 3.00 | 25.40 | 25.36 | 1.91 | 0.00 |
| | Total | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| Vertical AOIs | A1 | 12.79 | 3.93 | 9.42 | 23.44 | 19.13 | 8.27 | 4.08 | 27.55 | 4.51 |
| | A2 | 29.24 | 43.86 | 43.64 | 25.05 | 27.83 | 11.32 | 5.92 | 51.13 | 35.70 |
| | A3 | 31.43 | 35.16 | 36.76 | 33.87 | 39.21 | 23.01 | 35.76 | 12.48 | 39.82 |
| | A4 | 18.26 | 17.04 | 10.19 | 17.63 | 10.83 | 32.00 | 28.88 | 6.93 | 19.98 |
| | O | 8.28 | 0.00 | 0.00 | 0.00 | 3.00 | 25.40 | 25.36 | 1.91 | 0.00 |
| | Total | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| All AOIs | A1L | 4.02 | 0.00 | 2.55 | 7.53 | 7.35 | 1.38 | 0.00 | 11.09 | 1.67 |
| | A1C | 5.10 | 3.93 | 2.80 | 11.83 | 8.38 | 6.89 | 2.72 | 1.73 | 2.06 |
| | A1R | 3.67 | 0.00 | 4.07 | 4.09 | 3.40 | 0.00 | 1.36 | 14.73 | 0.77 |
| | A2L | 10.07 | 1.55 | 13.84 | 16.56 | 11.38 | 6.39 | 0.00 | 24.96 | 4.25 |
| | A2C | 10.97 | 24.43 | 17.83 | 8.06 | 6.09 | 2.25 | 3.60 | 11.87 | 23.33 |
| | A2R | 8.20 | 17.87 | 11.97 | 0.43 | 10.36 | 2.69 | 2.32 | 14.30 | 8.12 |
| | A3L | 7.12 | 0.00 | 6.88 | 11.93 | 13.99 | 12.85 | 2.64 | 2.34 | 2.32 |
| | A3C | 13.27 | 6.32 | 17.06 | 12.04 | 19.29 | 10.16 | 13.44 | 3.03 | 27.19 |
| | A3R | 11.04 | 28.85 | 12.82 | 9.89 | 5.93 | 0.00 | 19.68 | 7.11 | 10.31 |
| | A4L | 4.15 | 0.72 | 0.25 | 10.97 | 7.43 | 9.65 | 1.52 | 0.61 | 0.00 |
| | A4C | 8.62 | 10.73 | 0.93 | 3.12 | 0.71 | 22.35 | 15.20 | 1.21 | 13.53 |
| | A4R | 5.49 | 5.60 | 9.00 | 3.55 | 2.69 | 0.00 | 12.16 | 5.11 | 6.44 |
| | O | 8.28 | 0.00 | 0.00 | 0.00 | 3.00 | 25.40 | 25.36 | 1.91 | 0.00 |
| | Total | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
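The "All AOIs" rows split each vertical band (A1 to A4) into left, centre and right sectors, so summing that grid along one axis should recover the horizontal and vertical rows of the same column. The sketch below checks this for the ALL column; the dictionary layout and function names are illustrative, and reading O as gaze that falls outside every AOI is an assumption.

```python
# Combined AOI grid (vertical band x horizontal sector), probabilities in percent,
# copied from the "All AOIs" rows of the table above (ALL column).
VERTICAL = ["A1", "A2", "A3", "A4"]
HORIZONTAL = ["L", "C", "R"]

ALL_JUNCTIONS = {
    "A1": {"L": 4.02, "C": 5.10, "R": 3.67},
    "A2": {"L": 10.07, "C": 10.97, "R": 8.20},
    "A3": {"L": 7.12, "C": 13.27, "R": 11.04},
    "A4": {"L": 4.15, "C": 8.62, "R": 5.49},
}
OUTSIDE = 8.28  # "O" row; assumed here to mean gaze outside every AOI


def vertical_marginals(grid):
    """Sum each vertical band across the L, C and R sectors."""
    return {v: sum(grid[v][h] for h in HORIZONTAL) for v in VERTICAL}


def horizontal_marginals(grid):
    """Sum each horizontal sector across the bands A1 to A4."""
    return {h: sum(grid[v][h] for v in VERTICAL) for h in HORIZONTAL}


if __name__ == "__main__":
    v = vertical_marginals(ALL_JUNCTIONS)
    h = horizontal_marginals(ALL_JUNCTIONS)
    print({k: round(x, 2) for k, x in v.items()})
    print({k: round(x, 2) for k, x in h.items()})
    print(f"grid + O = {sum(v.values()) + OUTSIDE:.2f} %")
```

The same check can be run on any single junction column; small mismatches of about 0.01 are expected from rounding in the published percentages.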

| AOI group | AOI | All Junctions (%) | Y Junction (2, 3, 7) (%) | T Junction (6, 8) (%) |
|---|---|---|---|---|
| Horizontal AOIs | L | 25.36 | 35.68 | 28.02 |
| | C | 37.96 | 30.27 | 46.50 |
| | R | 28.40 | 33.38 | 23.62 |
| | O | 8.28 | 0.67 | 1.86 |
| | Total | 100.00 | 100.00 | 100.00 |
| Vertical AOIs | A1 | 12.79 | 19.84 | 13.57 |
| | A2 | 29.24 | 40.99 | 30.82 |
| | A3 | 31.43 | 27.34 | 39.43 |
| | A4 | 18.26 | 11.16 | 14.32 |
| | O | 8.28 | 0.67 | 1.86 |
| | Total | 100.00 | 100.00 | 100.00 |
| All AOIs | A1L | 4.02 | 6.99 | 5.19 |
| | A1C | 5.10 | 5.00 | 5.98 |
| | A1R | 3.67 | 7.85 | 2.40 |
| | A2L | 10.07 | 18.55 | 8.67 |
| | A2C | 10.97 | 12.94 | 12.64 |
| | A2R | 8.20 | 9.50 | 9.51 |
| | A3L | 7.12 | 6.71 | 9.55 |
| | A3C | 13.27 | 10.67 | 22.29 |
| | A3R | 11.04 | 9.96 | 7.59 |
| | A4L | 4.15 | 3.43 | 4.61 |
| | A4C | 8.62 | 1.66 | 5.59 |
| | A4R | 5.49 | 6.07 | 4.12 |
| | O | 8.28 | 0.67 | 1.86 |
| | Total | 100.00 | 100.00 | 100.00 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Bae, Y.-H.; Kim, Y.-C.; Oh, R.-S.; Son, J.-Y.; Hong, W.-H.; Choi, J.-H. Gaze Point in the Evacuation Drills: Analysis of Eye Movement at the Indoor Wayfinding. Sustainability 2020, 12, 2902. https://doi.org/10.3390/su12072902
