Application of Event Detection to Improve Waste Management Services in Developing Countries

Abstract

1. Introduction

1.1. Lack of Awareness

1.2. Lack of Utilization of Existing Infrastructure

- We built an experimental setup and created a data set of 3200 video files. The videos have durations of 150–1200 s and were captured at various city locations over 15 days. The captured data were then preprocessed and labeled to generate the ground truth.

- The main contribution of the study is the use of a 3D CNN to identify events related to waste being thrown into the waste bin or in its proximity. These events indicate where the waste ends up, i.e., whether it is successfully placed inside the bin, thrown outside the bin due to improper handling, or dropped outside because the bin is overflowing.

- The identified events were analyzed in a case study to inform the development of effective waste management infrastructure, policies, and awareness measures that improve solid waste management (SWM) services.

2. Literature Survey

- The bin is neither full nor overflowing, and the waste is dropped directly into the bin.

- The bin is neither full nor overflowing, but the waste is thrown, intentionally or unintentionally, in the proximity of the bin.

- The bin is full or overflowing, and the waste is therefore dropped outside the bin.

3. Proposed Model

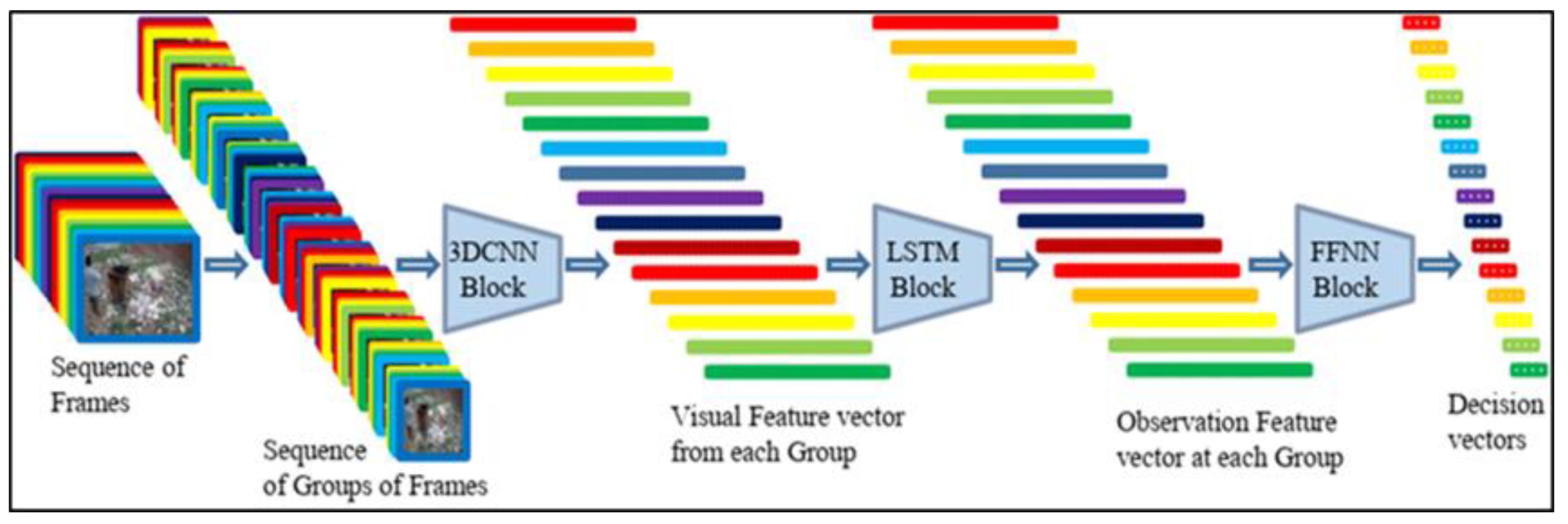

3.1. Overview

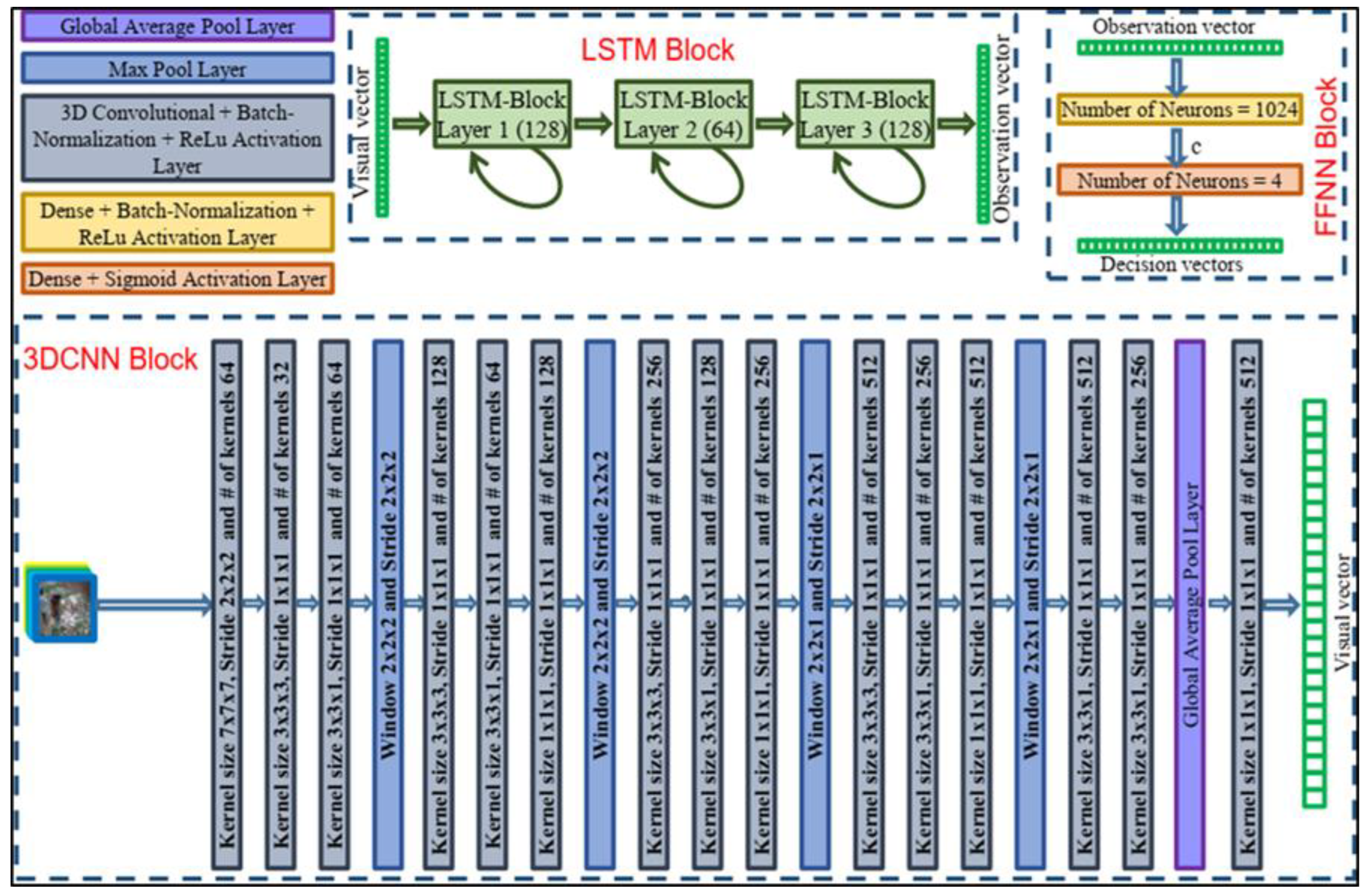

3.2. 3D Convolutional Neural Network Block

3.3. Long Short-Term Memory Block

3.4. Fully Connected Feed-Forward Neural Network Block

3.5. Training Loss Function

3.6. Interpretation of Output Decision Vector

4. Experimental Setup

4.1. Data Generation

4.2. Label Generation

4.3. Training Procedure

4.4. Inference Phase

4.4.1. Offline Mode

4.4.2. Online Mode

5. Results and Analysis

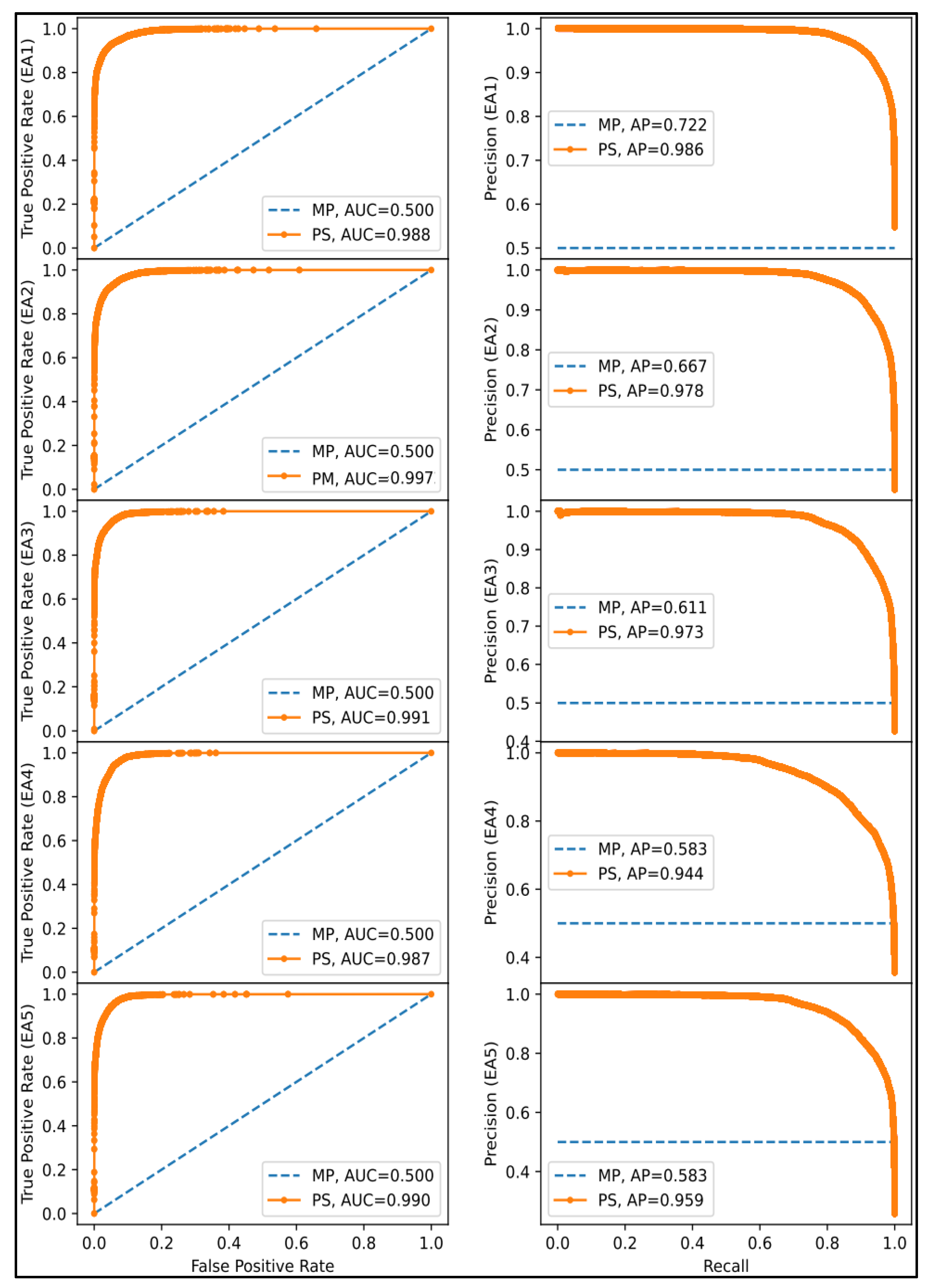

5.1. Evaluation Criteria

5.1.1. Area under the Receiver Operating Characteristic Curve

5.1.2. Average Precision

5.2. Performance Analysis of the Model

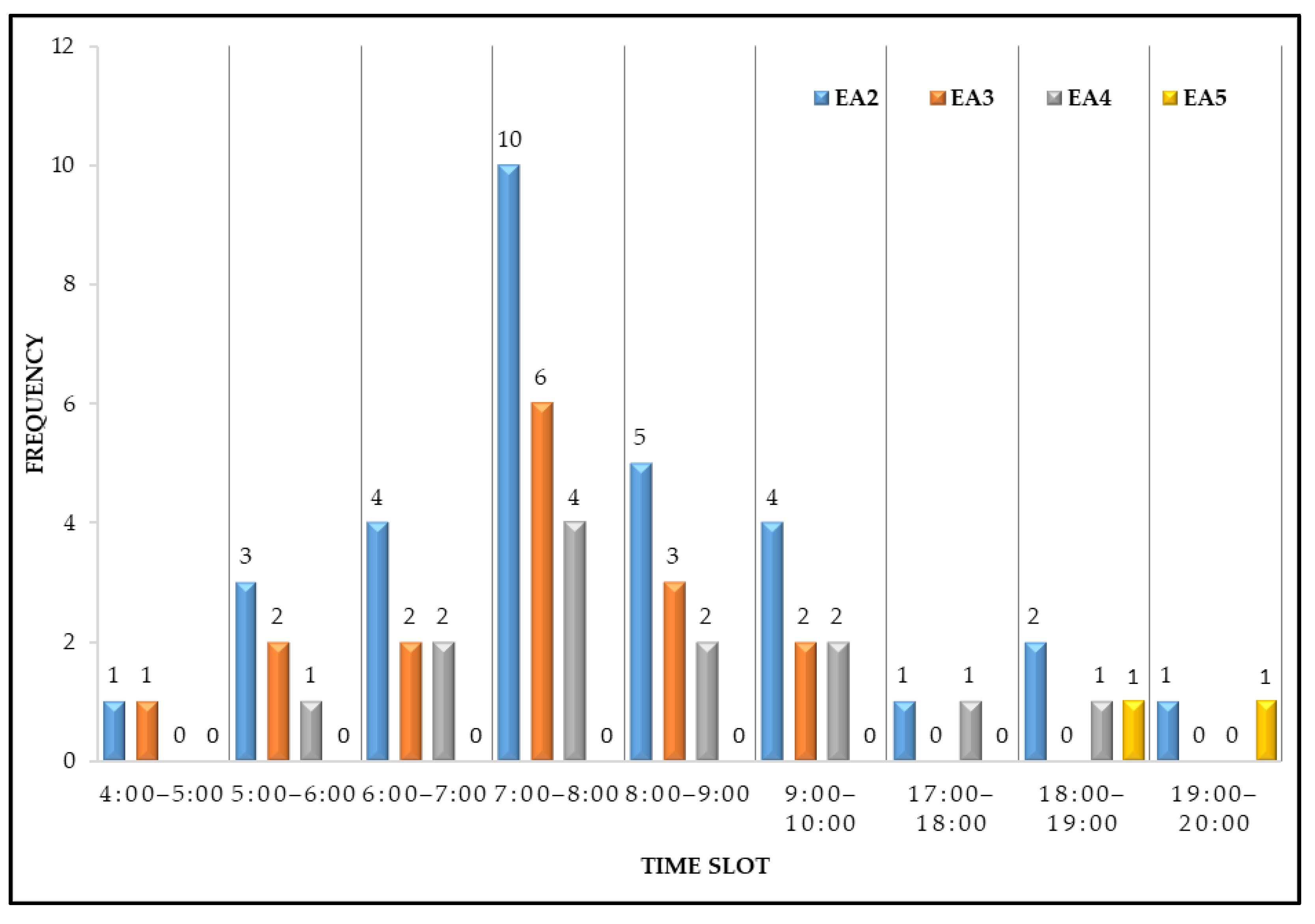

5.3. Case Study

- People need to be educated about the consequences of waste scattering.

- The bin capacity or the waste collection schedule needs to change.

- Both of the above actions are required simultaneously.

- Neither action is needed.
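The four outcomes listed above can be read as a simple decision rule over the detected events. The following is a minimal sketch, not the authors' procedure: it assumes that a high share of EA4 events (improper handling) points to an awareness problem and that a high share of EA5 events (overflow) points to a capacity or scheduling problem. The function name `recommend_action` and the 10% threshold `max_share` are hypothetical.

```python
def recommend_action(n_ea3, n_ea4, n_ea5, max_share=0.10):
    """Map per-bin event counts to one of the four case-study outcomes.

    n_ea3: waste successfully placed in the bin
    n_ea4: waste outside the bin due to inappropriate handling
    n_ea5: waste outside the bin due to overflow
    max_share: hypothetical tolerated share of EA4 or EA5 events
    """
    total = n_ea3 + n_ea4 + n_ea5
    if total == 0:
        return "no action needed"

    # Assumption: each failure type is judged by its share of all drops.
    needs_awareness = n_ea4 / total > max_share
    needs_capacity = n_ea5 / total > max_share

    if needs_awareness and needs_capacity:
        return "educate people and change bin capacity or collection schedule"
    if needs_awareness:
        return "educate people"
    if needs_capacity:
        return "change bin capacity or collection schedule"
    return "no action needed"
```

In practice the threshold would need to be chosen per location, since the tolerated share of scattered waste is a policy decision rather than a property of the model.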

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Decision Vector Element 1 | Element 2 | Element 3 | Element 4 | Description of the Event/Activity | Abbreviation for Event/Activity |
|---|---|---|---|---|---|
| H | X | X | X | Any event occurs in the proximity of the bin. | EA1 |
| H | H | X | X | The event is related to the bin. | EA2 |
| H | H | H | X | The waste is successfully placed in the bin. | EA3 |
| H | H | L | L | Unsuccessful placement of waste in the bin due to inappropriate handling. | EA4 |
| H | H | L | H | Unsuccessful placement of waste in the bin due to overflow. | EA5 |
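The decision-vector table can be applied mechanically, as the sketch below illustrates. It rests on assumptions that the table itself does not state: H is read as a high output, L as a low output, and X as "don't care", and the 0.5 threshold used to binarize network scores is hypothetical. The names `PATTERNS`, `binarize`, `matching_events`, and `most_specific_event` are illustrative only.

```python
# Patterns copied from the decision-vector table.
# Assumed reading: H = high, L = low, X = don't care.
PATTERNS = {
    "EA1": "HXXX",
    "EA2": "HHXX",
    "EA3": "HHHX",
    "EA4": "HHLL",
    "EA5": "HHLH",
}


def binarize(scores, threshold=0.5):
    """Turn four scores into an H/L string (threshold is an assumption)."""
    return "".join("H" if s >= threshold else "L" for s in scores)


def matching_events(levels):
    """Return every event whose pattern is satisfied by the H/L string."""
    if len(levels) != 4 or set(levels) - {"H", "L"}:
        raise ValueError("expected a string of four 'H'/'L' characters")
    return [
        name
        for name, pattern in PATTERNS.items()
        if all(p == "X" or p == lvl for p, lvl in zip(pattern, levels))
    ]


def most_specific_event(levels):
    """Return the last matching event in table order, or None if none match."""
    matches = matching_events(levels)
    return matches[-1] if matches else None
```

Because EA1 and EA2 use don't-care entries, a vector that matches EA3, EA4, or EA5 also matches EA1 and EA2; `most_specific_event` therefore returns the last match in table order.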

| Event/Activity | Recall | Precision | F-Measure | AUROC | AP |
|---|---|---|---|---|---|
| EA1 | 0.936 | 0.924 | 0.930 | 0.988 | 0.986 |
| EA2 | 0.920 | 0.906 | 0.913 | 0.988 | 0.978 |
| EA3 | 0.916 | 0.885 | 0.900 | 0.991 | 0.973 |
| EA4 | 0.872 | 0.837 | 0.854 | 0.987 | 0.944 |
| EA5 | 0.891 | 0.859 | 0.8765 | 0.990 | 0.959 |
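The F-measure column can be cross-checked against the recall and precision columns, assuming it is the usual harmonic mean of the two (the F1 score). The sketch below recomputes it from the tabulated values; the names `REPORTED` and `f_measure` are illustrative. Under this assumption the recomputed values agree with the table to three decimals for EA1 to EA4, while EA5 comes out at about 0.875, slightly below the reported 0.8765. The source of that difference is not clear from the table alone.

```python
# (recall, precision, reported F-measure) copied from the performance table.
REPORTED = {
    "EA1": (0.936, 0.924, 0.930),
    "EA2": (0.920, 0.906, 0.913),
    "EA3": (0.916, 0.885, 0.900),
    "EA4": (0.872, 0.837, 0.854),
    "EA5": (0.891, 0.859, 0.8765),
}


def f_measure(precision, recall):
    """Harmonic mean of precision and recall (assumed definition, F1)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def recompute_all(reported=REPORTED):
    """Return {event: (recomputed F, reported F)} for comparison."""
    return {
        event: (f_measure(precision, recall), reported_f)
        for event, (recall, precision, reported_f) in reported.items()
    }


if __name__ == "__main__":
    for event, (recomputed, reported_f) in recompute_all().items():
        print(f"{event}: recomputed={recomputed:.4f} reported={reported_f}")
```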

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Anjum, M.; Shahab, S.; Umar, M.S. Application of Event Detection to Improve Waste Management Services in Developing Countries. Sustainability 2022, 14, 13189. https://doi.org/10.3390/su142013189