Abstract

Web-based educational systems collect tremendous amounts of electronic data, ranging from simple histories of students’ interactions with the system to detailed traces of their reasoning. However, less attention has been given to pedagogical interaction data from customised learning in gamified environments. This study aims to investigate user experience, communication methods, and feedback and survey functionalities in existing learning management systems; to understand the data flow of the online study feedback loop; to propose the design of a personalised feedback system; and to conclude with a discussion of the findings from the collected experimental data. We will test the importance of gamified learning and learning analytics, and conduct a literature review and a laboratory experiment to examine how they can influence the effectiveness of monetary incentive schemes. This research will explain the significance of gamified learning and personalised feedback for motivation and performance during the learning journey.

1. Introduction

Amid the relentless advancement of information technology and the ever-growing prominence of digitalisation, e-learning systems with personalised feedback have become increasingly relevant to students’ educational journeys. Personalised learning “refers to instruction in which the pace of learning and the instructional approach are optimised for the needs of each learner. Learning objectives, instructional approaches, and instructional content may all vary based on learner needs” [1]. Data analysis and statistics, combined with powerful computing resources, enable real-time multimodal data processing, which benefits various fields, including education. Within academia, feedback, which plays a pivotal role in the learning process, is the subject of heated debate. Student dissatisfaction with the feedback they receive on their work is prevalent, with timeliness and relevance being key issues [2]. In this regard, a well-designed system grounded in the principles of human–computer interaction (HCI) is better suited to the task than conventional methods. Such a system can identify user behaviours effectively and respond immediately and accurately, supporting learners from diverse backgrounds to reach incremental milestones based on their strengths and motivation. It also gives learners greater control over, and adaptability in, the learning process, enabling them to construct personalised learning paths and attain their individual educational objectives.

This paper introduces a personalised feedback strategy aimed at bolstering student learning within a Learning Management System (LMS). The strategy incorporates automated feedback and surveys to give learners insight into their weaknesses and their expected learning experience in a MOOC. The primary emphasis is on delivering adaptive learning resources to learners in manageable phases, while modelling the learners and the learning context within a well-structured environment.

- Aim 1: To comprehensively understand the generic feedback process through extensive research, including the design, pipeline, structure, and content of feedback messages, among other aspects.

- Aim 2: To extract pertinent information from website resources based on learners’ demands and individual backgrounds, facilitating the design of modules and a planner system to optimise the learning process accordingly.

- Aim 3: To design a system capable of recording platform communications, including interaction logs and quiz attempts, thereby enhancing the system’s capacity for automated feedback in future iterations.

2. Background

The backdrop against which these objectives are pursued is the ever-evolving landscape of information technology, coupled with the pervasive influence of digitalisation.

Personalised feedback is powered by data analysis and statistical techniques, harnessing real-time multimodal data processing to yield valuable insights for stakeholders, including students, lecturers, and moderators. Better synchronisation between stakeholders allows more frequent interactions, which can have a profound impact on students’ learning progress, given their unique backgrounds and the feedback they receive.

Within the domain of e-learning, the process of attaining personalisation in feedback describes how learners assimilate performance-relevant information to optimise their educational progress. The significance of feedback has been duly acknowledged by academic institutions, wherein, for instance, the students’ satisfaction rate functions as a real-time measure of their learning experience, exhibiting considerable potential in identifying patterns and challenges inherent in the educational process.

To foster an enhanced educational journey, a well-structured system aligned with HCI principles is proposed to identify user behaviours effectively. This approach enables prompt and precise responses, caters to students from diverse backgrounds, and enriches their learning experience by giving them more control over the learning process. The system lets users construct personalised study plans and thereby attain their individual learning objectives through the e-learning platform.

2.1. Preliminary Knowledge

2.1.1. Learning Analytics

This paper draws on a study of students’ affective responses to personalised feedback [3]. Its findings indicate that learning analytics can be applied successfully to scale up personalised feedback. The study also considers how student perceptions and emotions relate to their engagement with feedback.

2.1.2. Effect of Gamification

During their first foray into university studies, freshmen go through a period of adjustment to meet professional demands. Research shows that gamified approaches, combined with practical tips and tricks, considerably ease this academic transition and foster a smooth start to their studies [4]. Nevertheless, the literature highlights a prevalent problem of low retention rates and limited learner engagement, especially in Massive Open Online Courses (MOOCs) [5]. To address this, gamification has been advocated as an effective strategy to increase user engagement, particularly in online education [6]. Gaming activities on interactive displays foster cognitive stimulation and imaginative exploration among students, enhancing engagement through rewards and collaboration [7]. However, recent studies argue that completion rates alone may not be a definitive measure of a MOOC’s success [8], given participants’ varied goals and motivations [9]. Integrating gamification into MOOCs can therefore help users develop personalised plans and accomplish their individual objectives throughout the course [10], which further intensifies engagement within the gamified environment. Customised learner modes, rooted in learners’ motivations and engagement profiles, are proposed for incorporation into the system [11]. Notably, a systematic literature review by Alessandra, Roland, and Marcus highlights that gamification in online learning, and in MOOCs in particular, is still at a nascent stage: empirical experiments and evidence are scarce, and gamification is largely employed as a mechanism for external rewards [10]. Anna Faust from Technische Universität Berlin explores the impact of gamification as a non-monetary incentive on motivation and performance [12]. Three studies, including a literature review and a laboratory experiment, evaluate the influence of gamification on motivation and performance in the context of task complexity and monetary incentive schemes.

2.2. Categorisation and Frameworks

2.2.1. Learning Process

First, the system must identify the learner’s status; if learners are using the system for the first time, the original course material list (non-personalisation list) is provided to them based on their query terms. After learners visit some course materials and respond to the assigned questionnaires, the proposed system re-evaluates their abilities, adjusts the difficulty parameters of the selected course material, and recommends appropriate course materials.
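The loop above (non-personalised list for newcomers, then re-estimated ability and difficulty-matched recommendations) can be sketched as follows. This is a minimal illustration, not the system's actual method: it assumes a one-parameter (Rasch-style) item response model to estimate ability from questionnaire responses, and the names `Material`, `estimate_ability`, and `recommend` are hypothetical. Recalibrating the materials' difficulty parameters is omitted.

```python
from __future__ import annotations
import math
from dataclasses import dataclass

@dataclass
class Material:
    title: str
    keywords: set[str]
    difficulty: float  # assumed to share the logit scale of learner ability

def rasch_p(ability: float, difficulty: float) -> float:
    """Probability of a correct response under a 1PL (Rasch) model."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))

def estimate_ability(responses, theta: float = 0.0, steps: int = 20) -> float:
    """Maximum-likelihood ability via Newton-Raphson.
    responses: list of (item_difficulty, answered_correctly)."""
    for _ in range(steps):
        grad = sum((1.0 if ok else 0.0) - rasch_p(theta, b) for b, ok in responses)
        info = sum(rasch_p(theta, b) * (1 - rasch_p(theta, b)) for b, _ in responses)
        if info == 0:
            break
        # Clamp: the MLE diverges when every answer is correct (or every answer wrong).
        theta = max(-4.0, min(4.0, theta + grad / info))
    return theta

def recommend(materials, query: str, responses=None, k: int = 3):
    """Query-term match; first-time users get the original (non-personalised) order."""
    terms = {t.lower() for t in query.split()}
    matches = [m for m in materials if m.keywords & terms]
    if not responses:
        return matches[:k]
    theta = estimate_ability(responses)
    # Personalised: prefer materials whose difficulty is closest to estimated ability.
    return sorted(matches, key=lambda m: abs(m.difficulty - theta))[:k]

materials = [
    Material("Intro to loops", {"loops", "python"}, -1.0),
    Material("Nested loops", {"loops"}, 0.5),
    Material("Loop invariants", {"loops", "proofs"}, 2.0),
]
print([m.title for m in recommend(materials, "loops")])
answers = [(0.0, True), (0.5, True), (1.0, False)]
print([m.title for m in recommend(materials, "loops", answers)])
```

Matching difficulty to ability is only one plausible recommendation rule; a deployed system might also weigh coverage of knowledge gaps.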

2.2.2. Learning Analytics Visualisation

Dispositional learning analytics is used to evaluate learner characteristics and provide constructive suggestions to students and teachers. The data are drawn from student self-reports, instructor perceptions, and behavioural traces.

Riiid Labs, an AI solutions provider bringing innovative disruption to the education market, enables global education players to rethink conventional methods of learning using AI [13]. In 2017, with a strong belief in equal opportunities in education, Riiid launched an AI tutor based on deep learning analytics for over 100 million students in South Korea [13]. The innovative algorithm is intended to help address global challenges in education; if it succeeds, any student with an Internet connection could benefit from a personalised learning experience.

Drawing on Clark and Brennan’s influential Common Ground theory, researchers designed a Debate Dashboard augmented with a CCSAV tool and a set of widgets that deliver meta-information about participants and the interaction process [14]. An empirical study simulating a moderately sized collective deliberation scenario showed that this experimental version outperformed the control version on a range of indicators, including usability, mutual understanding, quality of perceived collaboration, and accuracy of individual decisions [14]. Representations that highlight conceptual relationships between contributions are expected to promote more critical thinking and evidence-based reasoning.

“The goal of KnowledgeTree is to bridge the gap between the currently popular approach to Web-based education, which is centred on learning management systems vs. the powerful but underused technologies in intelligent tutoring and adaptive hypermedia” [15]. Knowledge is acquired from literature sources, such as web pages and ontology libraries, through a middleware layer of web crawlers (literature information) and a bidirectional LSTM-CRF training model (meteorological simulation); the final knowledge graph is then constructed through knowledge fusion.

Meanwhile, Tailored Recruitment Analytics and Curriculum Knowledge (TRACK) is another important data- and analytics-driven project that is building student- and staff-facing tools to help answer these and many other questions [16]. It brings together people from the Connected Intelligence Centre (CIC), Student Services Unit (SSU), Information Technology Division (ITD), and the Institute for Interactive Media and Learning (IML), and its tools draw on live curriculum data. Its Application Programming Interface (API) service will also make it possible for people to extract curriculum data for use in other tools.

2.2.3. Learning Feedback

One of the challenges in creating a personalised e-learning system is achieving viable personalisation functionalities, such as customised content management, a student model, a student plan, and adaptive real-time interaction. Dongming Xu from City University of Hong Kong proposed an architecture for a Personalised Virtual Learning Environment [17] that uses intelligent decision-making agents with autonomous, reactive, and proactive behaviours to enhance e-learning effectiveness. Additionally, in the study “Stimulated Planning Game Element Embedded (SPGEE) in a MOOC Platform” [18], Alessandra et al. explored the usability of Stimulated Planning through usability tests, eye tracking, and retrospective think-aloud methods. The user feedback was then used to assess how well the design choices aligned with the users’ conceptual planning process. The progress tracking step, which links learning activities and progress to the plan, gives students immediate feedback on their intentions and plan-related progress, enabling them to re-plan at the earliest opportunity.

The OnTask project, on the other hand, developed a software tool that collects and assesses data on students’ activities, allowing instructors to design personalised feedback. With frequent suggestions on specific course tasks, students can quickly adapt and progressively improve their learning [19]. At the University of South Australia, using the UniSA LMS and course dashboard analytics, the OnTask platform increased student engagement with the course and their studies. Similarly, at UNSW, with Moodle LMS, TMGrouper, MHCampus, and custom learning analytics reporting/dashboards, OnTask enhanced communication through personalised feedback and fostered students’ sense of connectedness to the course. At the University of Adelaide, the course coordinator used OnTask to encourage early student engagement with the course [20]. OnTask has also been applied to deliver personalised, learning analytics-based feedback. A plot of estimated marginal means for completion rate shows that, for the benchmark group, Connect activity declined during the semester and then increased towards the end of the semester. The pattern for the treatment group was reversed, with lower completion rates at the beginning and then a sharp increase during the semester (corresponding to Feedback Point 1), which was sustained over the remainder of the semester (Table 1).

Table 1.

Alignment of course features with feedback message details [21].

Linear regression analysis showed that both the program entry score and OnTask group membership were significant predictors of final course performance. The findings highlight the cyclical nature of self-regulated learning (SRL): guided by the external standards provided by learning analytics (LA)-based feedback, students assess and adjust their actions to improve their performance, which sets new targets for evaluation in subsequent learning iterations. Students responded well to personalised, learning analytics-based feedback. Using a combination of deductive coding and epistemic network analysis (ENA), the authors found that students’ perceptions of their feedback were generally positive in terms of quality and that, although emotional responses varied, most were associated with improved motivation for learning. Table 2 shows the different messages students receive based on their quiz scores. The messages are written in the academics’ own voice but are compiled and sent automatically to the relevant students.

Table 2.

Messages students receive, contingent on their quiz scores [22].
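The score-contingent messaging that Table 2 describes can be sketched as a score-band lookup. This is an illustrative assumption only: the band thresholds, message texts, and the `feedback_for` function are hypothetical and do not reproduce OnTask's rule editor or the actual messages in [22].

```python
from bisect import bisect_right

# Hypothetical score bands: lower bounds in percent, each paired with an instructor-written template.
BAND_LOWER_BOUNDS = [0, 50, 75]
MESSAGES = [
    "Hi {name}, your quiz score was {score}%. Please revisit this week's readings and retry the practice quiz.",
    "Hi {name}, you scored {score}%. You are on track; review the questions you missed before the next lecture.",
    "Great work, {name}! You scored {score}%. Try the extension problems to deepen your understanding.",
]

def feedback_for(name: str, score: float) -> str:
    """Return the message for the band containing the score (lower bounds are inclusive)."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    band = bisect_right(BAND_LOWER_BOUNDS, score) - 1
    return MESSAGES[band].format(name=name, score=score)

students = {"Tony": 42, "Mia": 68, "Sam": 91}
for name, score in students.items():
    print(feedback_for(name, score))
```

Keeping the thresholds in a sorted list makes it easy for an instructor to add or move bands without touching the lookup logic.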

2.2.4. Learning Result Analysis

Student learning results can be derived from summative and formative assessments. A summative assessment includes the evaluation of a midterm examination, academic paper, and final project. A formative assessment entails the analysis of gamification elements, social interaction, and attitudinal surveys. The research will primarily focus on assessing each student’s actual learning level based on evidence of their knowledge and abilities. Hence, ongoing feedback will be collected through a five-point Likert scale questionnaire and social network interaction.
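As an illustration of how five-point Likert questionnaire responses might be summarised, the sketch below computes per-item means, reverse-coding negatively worded items so that higher always means more favourable. The item names and which items are reverse-coded are assumptions made for the example.

```python
from __future__ import annotations
from statistics import mean

LIKERT_MAX = 5  # 1 = strongly disagree ... 5 = strongly agree

# Hypothetical items; True marks a negatively worded item that must be reverse-coded.
ITEMS = {"feedback_useful": False, "feedback_timely": False, "feedback_confusing": True}

def item_score(item: str, raw: int) -> int:
    """Validate a raw response and reverse-code it if the item is negatively worded."""
    if not 1 <= raw <= LIKERT_MAX:
        raise ValueError(f"{item}: response {raw} is outside 1..{LIKERT_MAX}")
    return LIKERT_MAX + 1 - raw if ITEMS[item] else raw

def summarise(responses: list[dict]) -> dict[str, float | None]:
    """Mean score per item across respondents, skipping unanswered items."""
    summary = {}
    for item in ITEMS:
        scores = [item_score(item, r[item]) for r in responses if item in r]
        summary[item] = mean(scores) if scores else None
    return summary

responses = [
    {"feedback_useful": 4, "feedback_timely": 5, "feedback_confusing": 2},
    {"feedback_useful": 5, "feedback_timely": 3, "feedback_confusing": 1},
]
print(summarise(responses))
```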

To investigate the impact of personalised gamification, a study at the University of Tehran categorised gamification elements according to related motivation types and found that the personalised approach increased average participation [23]. Another study compared the effects of gamification and social networking on e-learning: learning modules were designed with corresponding social network components, and participation on the social networking site was based on interactions and contributions to the system [24]. The results showed that participants in the social networking condition achieved higher academic success in terms of knowledge acquisition. However, further study is required to examine thoroughly how social network feedback is synthesised and what impact it has. Furthermore, a personalised system reduced the number of errors made by learners: those in the adaptive condition showed a greater reduction in errors during the second session than learners in the non-adaptive condition [25].

2.3. Gaps of Existing Research

The literature leaves an array of untracked, offline “embedded tasks” [20] unaccounted for. Previous studies have not thoroughly examined the detailed information available in LMS logs to measure whether observed changes endure across multiple weeks or longer. A companion investigation, rather than a randomised controlled trial, would be advantageous in examining the impact of the intervention.

Moreover, the communication methods [19] currently in use are text-based, such as email and LMS messages. Given the trend towards remote learning, they could be expanded to include video call data, voice call data, and other multimedia information. This would enrich learning analytics, help measure student engagement, and support accurate personalised feedback.

OnTask [22] can play a crucial role in delivering personalised feedback on a student’s work quality and quantity, along with recommending specific curriculum content. However, integration sources, such as video watching, quiz completion, practical attendance, and assignment viewing, remain limited in individual university online learning portals, thereby potentially constraining the comprehensive evaluation of individual student progress. Considering other Massive Open Online Courses (MOOCs) and activities outside of the LMS could provide diverse options for action items.

Furthermore, because there is no student-facing portal that organises the various online channels and gives students ownership of their learning data, an ordinary student struggles to manage the required resources efficiently and to share personal learning data with the relevant university or employer only under authorised and specific circumstances.

2.4. Motivation

This research seeks to develop a new-generation system capable of recording multi-source communications within a platform that maintains students’ records and enables the generation of statistics and reports. During the transition from the current system to personalised feedback-enabled e-learning, the goal is also to devise an approach that fills the gap in the information required to evaluate each student’s portfolio matrix and learning progress. Moreover, the system is intended to improve communication between instructors and feedback design, enabling the retrieval and storage of desired information while eliminating data redundancy. Additionally, through surveys, we seek to enhance the user experience by improving system interactions and providing diverse support based on students’ actual needs.

2.5. Main Research Topics

2.5.1. Impact of Learning Analytics-Based (LAB) Process Feedback in a Large Course

Despite the growing number of LA-based systems designed to give feedback to students, Lisa Lim’s investigation [3] examined how process feedback based on students’ learning data affects their self-regulated learning (SRL) and academic performance in the context of a large biological science course. The study used multimodal data, including log data generated by the Learning Management System (LMS) and the Connect digital book, as well as students’ performance in the assigned course assessments.

Two conditional probabilities were computed: the probability that Sharable Content Object Reference Model (SCORM) content was accessed with feedback (p = 0.63) and the probability that it was accessed without feedback (p = 0.59). These findings indicate that students engaged with Connect differently across the platforms and that the pattern of engagement varied between groups. The treatment group achieved higher final course grades despite recording significantly lower Connect activity.
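Conditional probabilities of this kind can be estimated directly from event logs as proportions within each condition. The sketch below assumes a hypothetical record layout (`feedback`, `accessed_scorm`), and the toy data are invented for illustration; they do not reproduce the values reported in [3].

```python
from __future__ import annotations

def p_access_given(records: list[dict], feedback: bool) -> float:
    """Estimate P(SCORM accessed | feedback condition) as a proportion of records."""
    group = [r for r in records if r["feedback"] == feedback]
    if not group:
        raise ValueError("no records for this feedback condition")
    return sum(r["accessed_scorm"] for r in group) / len(group)

# Invented toy log, one record per student (illustrative only).
records = [
    {"student": "s1", "feedback": True,  "accessed_scorm": True},
    {"student": "s2", "feedback": True,  "accessed_scorm": True},
    {"student": "s3", "feedback": True,  "accessed_scorm": False},
    {"student": "s4", "feedback": True,  "accessed_scorm": True},
    {"student": "s5", "feedback": False, "accessed_scorm": True},
    {"student": "s6", "feedback": False, "accessed_scorm": False},
    {"student": "s7", "feedback": False, "accessed_scorm": True},
    {"student": "s8", "feedback": False, "accessed_scorm": False},
]
print(p_access_given(records, True), p_access_given(records, False))  # 0.75 0.5
```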

A plot of estimated marginal means for completion rate shows that, for the benchmark group, Connect activity declined during the semester and then increased towards the end of the semester. Conversely, the treatment group showed the reverse pattern, with lower completion rates at the beginning followed by a sharp increase during the semester, which was sustained for the remainder of the semester.

Meanwhile, a linear regression was conducted to predict the final course mark from OnTask grouping (1 = with OnTask feedback; 0 = no OnTask feedback), prior academic achievement as measured by the program entry score, and the interaction between program entry score and OnTask group. The program entry score (b = 0.26, p < 0.001) and OnTask group (b = 0.47, p < 0.001) were significant predictors, whereas the program entry score by OnTask group interaction was not (b = 0.10, p = 0.31). The overall regression model was significant, F(3, 353) = 24.05, p < 0.001, with R² = 0.16.
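To make the model specification concrete, the sketch below fits final_mark = b0 + b1·entry + b2·ontask + b3·entry·ontask by ordinary least squares with NumPy and computes R² and the overall F statistic. The data are simulated with arbitrary coefficients purely to show the mechanics; they are not the study's data, and the fitted values will not match the reported estimates. The sample size of 357 is an assumption implied by the reported degrees of freedom (353 residual df plus 4 parameters).

```python
import numpy as np

rng = np.random.default_rng(seed=0)
n = 357  # assumed from F(3, 353): residual df = n - 4 parameters

entry = rng.normal(0.0, 1.0, n)           # simulated, standardised program entry score
ontask = rng.integers(0, 2, n)            # 1 = received OnTask feedback, 0 = did not
true_b = np.array([0.0, 0.3, 0.5, 0.1])   # arbitrary coefficients for the simulation only

# Design matrix: intercept, main effects, and the entry x OnTask interaction term.
X = np.column_stack([np.ones(n), entry, ontask, entry * ontask])
y = X @ true_b + rng.normal(0.0, 1.0, n)

b, *_ = np.linalg.lstsq(X, y, rcond=None)
residuals = y - X @ b
ss_res = residuals @ residuals
ss_tot = ((y - y.mean()) ** 2).sum()
r2 = 1 - ss_res / ss_tot

k, df_resid = X.shape[1] - 1, n - X.shape[1]   # numerator and denominator df
f_stat = (r2 / k) / ((1 - r2) / df_resid)

print("coefficients:", np.round(b, 2))
print(f"R^2 = {r2:.2f}, F({k}, {df_resid}) = {f_stat:.2f}")
```

Per-coefficient p-values require standard errors; a statistics package such as statsmodels reports them directly, but the plain NumPy version keeps the arithmetic visible.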

2.5.2. Metacognitive Control

Metacognitive insight is valuable only to the extent that it can be used to guide and regulate behaviour. Therefore, extensive research has explored the mechanisms by which individuals adjust their responses based on the self-understanding acquired through metacognitive monitoring [26].

Metacognition models posit that when individuals assess tasks and recognise shortcomings in their current psychological or social processes for achieving their goals, they engage in control activities to ensure more effective or efficient goal attainment.

Although little research has directly explored the link between monitoring and control in children, it has been hypothesised that one reason children exhibit poor metacognitive control is that they struggle to translate monitoring judgements into appropriate changes in behaviour.

Ineffective metacognitive monitoring restricts the goal-progress information available for processing and, at the same time, limits the ability to identify cues that could guide action. In certain contexts, such as alcohol consumption, metacognitive monitoring is disrupted. For instance, individuals may hold positive metacognitive beliefs that alcohol helps them manage worrisome thoughts, yet alcohol impairs their ability to introspect and monitor progress towards their goal of reducing those thoughts.

2.5.3. Multimodal Learning Analytics

Multimodal Learning Analytics (MLA) includes traditional log-file data captured by online systems, along with learning artefacts and more natural human signals, such as gestures, gaze, speech, and writing [27].

MLA constitutes a sub-field that aims to integrate diverse sources of learning traces into Learning Analytics research and practice, focusing on understanding and enhancing learning in both digital and real-world environments, where interactions may not necessarily be mediated through a computer or digital device. In MLA, traces are derived not only from log records or digital documents but also from recorded video and audio, pen strokes, positional GPS trackers, biosensors, and any other techniques that facilitate understanding or measurement of the learning process. Moreover, in MLA, traces extracted from multiple modalities are combined to provide a more comprehensive view of students’ actions and internal states.
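Combining traces from several modalities typically starts with aligning them on a shared timeline. The sketch below assumes each modality emits timestamped events and groups them into fixed-length windows so that, for example, an LMS click and a gaze fixation in the same window can be analysed together. The modality names, window length, and `Trace` fields are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Trace:
    t: float        # seconds since session start
    modality: str   # e.g. "lms_log", "gaze", "speech" (illustrative)
    value: str

def fuse(traces, window: float = 10.0):
    """Group traces into time windows: {window_start: {modality: [values in time order]}}."""
    fused = defaultdict(lambda: defaultdict(list))
    for tr in sorted(traces, key=lambda tr: tr.t):
        start = int(tr.t // window) * window
        fused[start][tr.modality].append(tr.value)
    return {w: dict(m) for w, m in sorted(fused.items())}

traces = [
    Trace(12.5, "speech", "I'm not sure about this one"),
    Trace(3.2, "lms_log", "open_quiz"),
    Trace(4.0, "gaze", "question_2"),
    Trace(14.1, "lms_log", "submit_quiz"),
]
for start, by_modality in fuse(traces).items():
    print(start, by_modality)
```

Fixed windows are the simplest alignment choice; event-anchored windows (for example, the seconds before each quiz submission) may suit feedback triggers better.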

Using diverse modalities to study learning is customary in conventional experimental educational research but relatively new in LA. Placing a human observer, inherently a multimodal sensor, in an authentic learning environment is the established approach to studying learning in natural settings. Technological advances, such as video and audio recording and annotation tools, have made this process less intrusive and more measurable. However, the traditional approach to educational research is hampered by the manual nature of data collection and analysis, which is costly and does not scale. The limited scale and time span of data collection, along with the delay before analysis results become available, reduce their usefulness for the learners being studied. Nevertheless, if multiple modalities could be recorded and learning traces extracted from them automatically, LA tools could offer a seamless real-time feedback loop to enhance the learning process [26].

In communication theory, multimodality refers to the use of multiple modes of communication to exchange information and meaning among individuals. Media (films, books, web pages) serve as the physical or digital substrate through which a mode of communication can be encoded. Each mode can be expressed through one or several media. For instance, speech can be encoded as variations in air pressure (in a face-to-face conversation), as variations in magnetic orientation on a tape (in a tape recording), or as variations in digital numbers (in an MP3 file). Conversely, the same medium can convey multiple modes. For instance, a video recording can contain information about body language, emotions (e.g., facial expressions), and the tools or artefacts used.

2.6. Hypothesis

The proposed new system aims to capture multi-source communications within a platform that maintains records for all students and allows the generation of statistics and reports. While transitioning from the current system to Personalised Feedback-Enabled (PFE) e-learning, the design will also supply the missing information needed to assess each student’s portfolio matrix and learning progress. Moreover, it will improve communication between instructors and feedback design, enabling selective retrieval and storage of desired information while resolving data redundancy issues. Additionally, the system will enhance the user experience by improving system interactions and providing diverse support tailored to students’ specific needs.

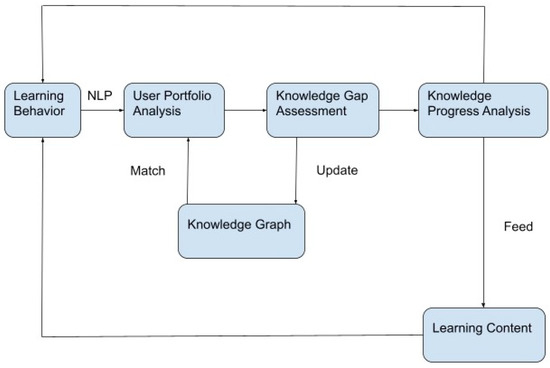

The learner model will contain learner-related information to adapt to individual demands, comprising three components: personal information, prior knowledge, and learning style. Once the learner’s profile and chosen area are identified, the associated information will be saved and processed by the knowledge extraction model. This model is divided into two phases: the relevance phase and the ranking phase.
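One possible reading of the learner model and the two-phase knowledge extraction is sketched below. The relevance phase keeps resources in the learner's chosen area that prior knowledge does not already cover, and the ranking phase orders the rest by fit with the learner's learning style, then by difficulty. The text does not specify how either phase is computed, so the field names, the style-to-format mapping, and the scoring rule are all assumptions.

```python
from __future__ import annotations
from dataclasses import dataclass, field

@dataclass
class LearnerModel:
    personal_info: dict                                       # e.g. name, programme (illustrative)
    prior_knowledge: set[str] = field(default_factory=set)   # topics already mastered
    learning_style: str = "visual"                            # hypothetical single-label style

@dataclass
class Resource:
    title: str
    area: str
    topic: str
    formats: set[str]   # e.g. {"video", "text"}
    difficulty: int     # 1 (easy) .. 5 (hard)

# Assumed mapping from learning style to the resource format it favours.
STYLE_FORMATS = {"visual": "video", "verbal": "text", "active": "exercise"}

def relevance_phase(learner: LearnerModel, area: str, resources: list[Resource]) -> list[Resource]:
    """Keep resources in the chosen area whose topic the learner has not yet mastered."""
    return [r for r in resources if r.area == area and r.topic not in learner.prior_knowledge]

def ranking_phase(learner: LearnerModel, candidates: list[Resource]) -> list[Resource]:
    """Style-matching formats first (False sorts before True), then easier material first."""
    preferred = STYLE_FORMATS.get(learner.learning_style)
    return sorted(candidates, key=lambda r: (preferred not in r.formats, r.difficulty))

learner = LearnerModel({"name": "Tony"}, {"variables"}, "visual")
catalogue = [
    Resource("Variables 101", "programming", "variables", {"video"}, 1),
    Resource("Loops explained", "programming", "loops", {"text"}, 2),
    Resource("Loops in video", "programming", "loops", {"video"}, 3),
    Resource("Statistics basics", "maths", "statistics", {"video"}, 1),
]
ranked = ranking_phase(learner, relevance_phase(learner, "programming", catalogue))
print([r.title for r in ranked])
```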

2.7. Research Objectives

2.7.1. Personalised Feedback

The research aims to explore the perception, interpretation, and actions associated with personalised feedback in the context of an experiment’s design. The effective implementation of personalised e-learning depends on well-designed task-focused and improvement-oriented feedback, structured content, and timely feedback loops. Without these elements, Massive Open Online Courses (MOOCs) may struggle to guide students adequately according to their individual learning intentions. In the proposed learning process, the learner’s status is identified through pre-course exams and profile analysis. For first-time users, the system presents an original course material list (non-personalisation list) based on the learner’s query terms. After learners interact with course materials and complete assignments and quizzes, the system re-evaluates their abilities and progress, adjusts difficulty parameters, and recommends appropriate materials accordingly.

To achieve this, the system should align human–computer interaction (HCI) with suitable methodologies, perceiving user behaviours to provide immediate and accurate responses. This approach enables learners with varying backgrounds to achieve their objectives based on their individual strengths and motivations. The framework or tool should use data collected from students’ interactions with web-based educational systems to derive pedagogically meaningful personalised feedback. Defining the types of information useful to stakeholders is crucial, and the framework’s objectives are as follows:

- Instructors:

- Track students’ course completion patterns to evaluate students’ engagement with the online course.

- Discern the concepts/topics that are most confusing for students by analysing forums.

- Evaluate the quality and difficulty of quizzes/assignments by examining results.

- Evaluate the study patterns by checking clicks per student for pre-reading materials.

- Analyse surveys for queries regarding course instructions.

- Learning researchers:

- Observe the relation between students’ course completion patterns and their results.

- Apply keystroke rhythm recognition to each student’s work to maintain the integrity of online submissions.
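Two of the instructor-facing indicators listed above, quiz item difficulty and clicks per student on pre-reading materials, can be sketched as simple aggregations. The record layouts are assumptions. Item difficulty here is the classical proportion-correct index, so higher values mean an easier item.

```python
from collections import Counter

def item_difficulty(attempts):
    """Classical difficulty index per quiz item: proportion of correct answers.
    attempts: iterable of (student, item, correct)."""
    totals, correct = Counter(), Counter()
    for _student, item, is_correct in attempts:
        totals[item] += 1
        correct[item] += int(bool(is_correct))
    return {item: correct[item] / totals[item] for item in totals}

def clicks_per_student(click_log, material_type="pre-reading"):
    """Number of clicks each student made on materials of the given type.
    click_log: iterable of (student, material_type)."""
    return Counter(student for student, kind in click_log if kind == material_type)

attempts = [("a", "q1", True), ("b", "q1", False), ("c", "q1", True),
            ("a", "q2", False), ("b", "q2", False)]
clicks = [("a", "pre-reading"), ("a", "pre-reading"), ("b", "video"), ("b", "pre-reading")]
print(item_difficulty(attempts))
print(clicks_per_student(clicks))
```

An item that nearly everyone answers correctly, or nearly no one does, contributes little information about differences between students, which is one reason instructors might review such items.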

2.7.2. Design of Feedback

Teachers can improve the instructional quality of their courses by making a number of small changes, such as providing detailed task-focused and improvement-oriented feedback. As stated in “Implementing summative assessment with a formative flavour: a case study in a large class,” several considerations should inform the design of feedback. These include making a set of exemplars available online, explaining rubric/marking criteria in detail through video, breaking down larger assessments into linked summative assessments, using audio feedback in place of written feedback, and giving formative audio feedback to markers to improve consistency in marking and feedback [28].

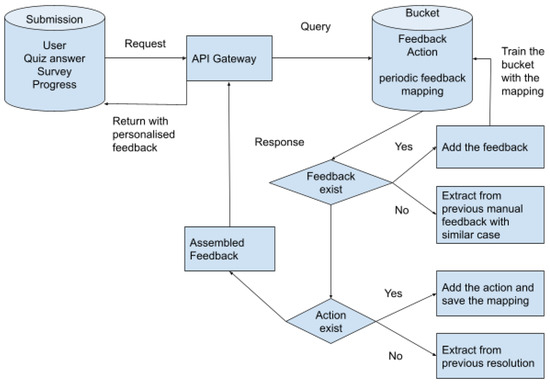

2.7.3. The Pipeline of Feedback Data

Multiple innovative data-processing methods may be applied throughout each platform user’s engagement with the e-learning system. Data captured from video calls or Learning Management System (LMS) communication can be retrieved from diverse platforms with proper authorisation and then classified according to our proposed data model structure. Within this data flow, events from a streamlined event input can undergo Natural Language Processing (NLP) to recognise metacognitive expressions. Additionally, deep learning methods combined with Bayesian Active Learning (BAL) can support sequence labelling with uncertainty estimation, with promising performance. Based on the output stream design, the feedback data can then be delivered to end users through appropriate channels.
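The pipeline described above (authorised ingestion, tagging, delivery through an appropriate channel) can be sketched as a chain of small functions. This is a hypothetical skeleton: the source names, the keyword-based `tag_metacognition` step standing in for a real NLP model, and the channel routing policy are all assumptions, and Bayesian active learning is not shown.

```python
from __future__ import annotations
from dataclasses import dataclass, field

@dataclass
class Event:
    source: str        # e.g. "lms_message", "video_call_transcript" (illustrative)
    student_id: str
    text: str
    authorised: bool   # the student has authorised use of this source
    tags: list[str] = field(default_factory=list)

# Placeholder for an NLP model: cue phrases that may signal metacognitive statements.
METACOGNITIVE_CUES = {
    "i don't understand": "confusion",
    "i think i get": "self-assessment",
    "i should review": "planning",
}

def ingest(events: list[Event]) -> list[Event]:
    """Drop events from sources the student has not authorised."""
    return [e for e in events if e.authorised]

def tag_metacognition(event: Event) -> Event:
    lowered = event.text.lower()
    event.tags = [label for cue, label in METACOGNITIVE_CUES.items() if cue in lowered]
    return event

def route(event: Event) -> str:
    """Choose a delivery channel from the tags (assumed policy)."""
    if "confusion" in event.tags:
        return "instructor_dashboard"
    if event.tags:
        return "student_weekly_digest"
    return "archive"

def run_pipeline(events: list[Event]) -> list[tuple[str, str]]:
    return [(e.student_id, route(tag_metacognition(e))) for e in ingest(events)]

events = [
    Event("lms_message", "s1", "I don't understand recursion", True),
    Event("video_call_transcript", "s2", "I should review chapter 3", True),
    Event("lms_message", "s3", "See you tomorrow", True),
    Event("lms_message", "s4", "I don't understand", False),
]
print(run_pipeline(events))
```

Replacing the cue dictionary with a trained sequence labeller would change only `tag_metacognition`; the ingestion and routing stages stay the same.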

2.7.4. Structure and Content of Feedback Message

To present feedback information effectively, it is necessary to connect data with actions that affect the learning experience and to recognise the potential effect of the message. For instance, feedback can be structured into criteria scope, achieved items, insufficient items, and actions. By adhering to a defined feedback structure, all necessary datasets can be linked and retrieved from the specified sources. At the same time, in-depth research should be conducted to determine best practices for delivering the feedback message, including the syntax, wording, length, and emotional expression of the content.
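The four-part structure above (criteria scope, achieved items, insufficient items, actions) maps naturally onto a small record type that can be rendered into a message. The class name, fields, and wording template below are illustrative assumptions; the best-practice questions of syntax, length, and tone remain open.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class FeedbackMessage:
    criteria_scope: str       # which assessment criteria this message covers
    achieved: list[str]       # criteria the student met
    insufficient: list[str]   # criteria not yet met
    actions: list[str]        # concrete next steps linked to the gaps

    def render(self, name: str) -> str:
        lines = [f"Hi {name}, here is your feedback on {self.criteria_scope}."]
        if self.achieved:
            lines.append("You did well on: " + ", ".join(self.achieved) + ".")
        if self.insufficient:
            lines.append("Still to work on: " + ", ".join(self.insufficient) + ".")
        # Numbered actions make each next step easy to refer back to.
        for i, action in enumerate(self.actions, start=1):
            lines.append(f"{i}. {action}")
        return "\n".join(lines)

msg = FeedbackMessage("Quiz 2 (loops)", ["for-loop syntax"], ["loop invariants"],
                      ["Rewatch lecture 4, part 2", "Attempt practice set B"])
print(msg.render("Tony"))
```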

2.8. Research Methods

2.8.1. Learning Data

Information Finder [29] uses heuristics to extract significant phrases from documents for learning, and its agent learns standard decision trees for each user category. The system's objective is to monitor and log selected learner actions during the educational process. All recording is conducted with the user's knowledge and consent, runs unobtrusively at the user level, and does not intervene in the educational process. The data collected can be correlated with the learner's prior knowledge (theoretical background), skills in using new technologies, attitudes, and behaviour patterns in the learning environment, as well as the occasions and points at which they sought assistance. The subsystem aims to log sufficient data, in both quantity and quality, to build a personalised learner profile and provide personalised materials and assistance (Figure 1).

Figure 1.

Overall framework of personalised feedback methodologies.

Data Format Example

Initiation stage: initial knowledge and learning_behaviour.

Profile stage: analysis of student capability, knowledge gap, and degree of difficulty.

<Analyzing_Result> <Sender="Activity_Agent"/> <Destination="Modeling_Agent"/> <TimeStamp="15/9/25/08/2021"/> <LearnerName="Tony"/> <LearnerID="990013"/> <CourseID="FB2500"/> <Stage="Afterpretest"/> <Individual_Summary Subject="Pretest"/> <Range="Course"/> <TimeSpent="20m"/> <HitCounts="13"/> <Percentage_of_cor />
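The example above is not well-formed XML: attributes are written as stand-alone tags, and the final element is truncated. A well-formed variant could carry the same fields as attributes. The sketch below builds such a message with Python's standard `xml.etree.ElementTree`; the attribute-based layout is our assumption rather than the authors' schema, and only a subset of the fields is shown.

```python
import xml.etree.ElementTree as ET

def build_analyzing_result(fields: dict, summary: dict) -> str:
    """Build a hypothetical well-formed Analyzing_Result message.

    `fields` become attributes of the root element and `summary`
    becomes the attributes of a nested Individual_Summary element.
    """
    root = ET.Element("Analyzing_Result", fields)
    ET.SubElement(root, "Individual_Summary", summary)
    return ET.tostring(root, encoding="unicode")

xml_text = build_analyzing_result(
    {"Sender": "Activity_Agent", "Destination": "Modeling_Agent",
     "LearnerID": "990013", "CourseID": "FB2500", "Stage": "Afterpretest"},
    {"Subject": "Pretest", "Range": "Course", "TimeSpent": "20m", "HitCounts": "13"},
)
print(xml_text)

# Round trip: the receiving agent can parse the message back.
parsed = ET.fromstring(xml_text)
print(parsed.get("LearnerID"), parsed.find("Individual_Summary").get("TimeSpent"))
```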

In this study, learning data are divided into summative assessments, such as quizzes, assignments, papers, and online exams, and human-factor feedback. Among the human factors, self-efficacy, enjoyment, and usefulness showed strong correlations and are therefore expected to have a significant positive impact on satisfaction, which in turn positively influences effectiveness; control, attitude, teaching style, learning style, promptness, feedback, availability, and interaction exhibited moderate correlations of varying degree [30]. Human-factor data can be collected through questionnaires, interviews, and student surveys; accordingly, survey questions will be attached to each of our summative assessments to capture emotional feedback. Even if formative evaluation protocols are provided and the workload is manageable, the question remains of how to motivate students to participate in the assignments. Self-regulated learners learn to balance all types of feedback rather than relying solely on the strongest or cheapest option [31].

In our experimental design, users will take the course while their learning progress is monitored through the following data:

- Slides completion analysis;

- Slides time taken analysis;

- Quiz time taken analysis;

- Quiz answer analysis;

- Learning interval;

- Visited page tracking;

- Submitted quiz answers;

- Questionnaires.
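Several of the monitored measures above can be derived from a timestamped event log. The following pandas sketch assumes a hypothetical log layout (`learner_id`, `event`, `item`, `timestamp`) and an assumed course size. It computes slide completion, the number of visited pages, and the mean learning interval for each learner.

```python
import pandas as pd

# Hypothetical event log: one row per learner interaction.
events = pd.DataFrame({
    "learner_id": ["s1", "s1", "s1", "s2", "s2"],
    "event": ["slide_view", "slide_view", "quiz_submit", "slide_view", "quiz_submit"],
    "item": ["slide1", "slide2", "quiz1", "slide1", "quiz1"],
    "timestamp": pd.to_datetime([
        "2023-06-01 10:00", "2023-06-01 10:05", "2023-06-01 10:20",
        "2023-06-02 09:00", "2023-06-03 09:00",
    ]),
})
TOTAL_SLIDES = 2  # assumed number of slides in the course

events = events.sort_values(["learner_id", "timestamp"])
# Learning interval: minutes between consecutive events of the same learner.
events["gap_min"] = events.groupby("learner_id")["timestamp"].diff().dt.total_seconds() / 60

slides = events[events["event"] == "slide_view"]
by_learner = events.groupby("learner_id")
summary = pd.DataFrame({
    "slide_completion": slides.groupby("learner_id")["item"].nunique() / TOTAL_SLIDES,
    "pages_visited": by_learner["item"].nunique(),
    "mean_interval_min": by_learner["gap_min"].mean(),  # NaN first gaps are skipped
})
print(summary)
```

The same log could also yield slide and quiz time taken by differencing timestamps within each item. Quiz answers and questionnaire responses would come from the LMS's own records.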

2.8.2. Classification, Regression, and Clustering

The authors of “Deep Bayesian Active Learning for Natural Language Processing: Results of a Large-Scale Empirical Study” [32] found that Bayesian active learning by disagreement, using uncertainty estimates from either Dropout or Bayes-by-Backprop, significantly improves performance over i.i.d. baselines and often outperforms classic uncertainty sampling. In this context, prediction can help identify “at-risk” students. This research treats the problem as a regression task and compares two supervised learning models, Linear Regression and Support Vector Regression (SVR), by their F1 scores. SVR introduces slack variables to handle errors, similar to the soft-margin loss function in SVMs for classification. Framed as an optimisation problem, the objective is to fit as many points as possible within the decision boundary lines. For the hyperplane $Y = wx + b$, the boundary lines lie at $wx + b \pm n$, so each point fitted within them satisfies $-n < Y - (wx + b) < +n$.
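The comparison above can be sketched with scikit-learn on synthetic data. This illustrates the technique under stated assumptions and is not the study's pipeline: the data, the pass mark that defines “at-risk”, and the tube half-width (the $n$ above, passed to SVR as `epsilon`) are all hypothetical. Because F1 is a classification metric, the sketch thresholds each model's predicted score into at-risk flags before computing it.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import f1_score, mean_absolute_error
from sklearn.svm import SVR

rng = np.random.default_rng(0)
# Synthetic data: hours of engagement -> final score out of 100.
X = rng.uniform(0, 10, size=(200, 1))
y = 40 + 5 * X[:, 0] + rng.normal(0, 5, size=200)

AT_RISK_CUTOFF = 50.0  # assumed pass mark that defines "at-risk"
EPSILON = 5.0          # assumed half-width n of the tube around wx + b

models = {
    "linear": LinearRegression(),
    "svr": SVR(kernel="linear", C=1.0, epsilon=EPSILON),
}
true_at_risk = (y < AT_RISK_CUTOFF).astype(int)
results = {}
for name, model in models.items():
    pred = model.fit(X, y).predict(X)
    results[name] = {
        "mae": mean_absolute_error(y, pred),
        # Threshold the regression output to flag at-risk students, then score with F1.
        "f1": f1_score(true_at_risk, (pred < AT_RISK_CUTOFF).astype(int)),
        # Share of points inside the tube -n < y - (wx + b) < +n.
        "inside_tube": float(np.mean(np.abs(y - pred) < EPSILON)),
    }
print(results)
```

Points inside the tube contribute no loss to SVR, and slack variables penalise only points that fall outside it. Linear regression, by contrast, penalises every residual.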

2.8.3. Natural Language Processing

Natural Language Processing (NLP) is a prominent component of the ongoing AI revolution, spanning tasks from text tagging to semantic understanding. In online learning analysis, NLP techniques can be used to validate content against learning outcomes: linguistic knowledge and word features are used to extract the key phrases and keywords that represent each piece of content, which makes it possible to assess whether a website fulfils the intended learning outcomes.
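As a rough stand-in for the linguistic feature extraction described above, the sketch below uses TF-IDF vectors and cosine similarity to check which learning outcomes a resource appears to cover. The outcomes, resource texts, and similarity threshold are hypothetical. TF-IDF is a simplification swapped in for illustration and is not the authors' method.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

# Hypothetical learning outcomes and candidate web resources.
outcomes = [
    "explain how word embeddings represent the meaning of words",
    "train a linear regression model and evaluate its error",
]
resources = {
    "embeddings_page": "Word embeddings map words to vectors so that similar meaning gives similar vectors.",
    "regression_page": "We train a linear regression model and measure the mean squared error.",
}

# Fit one vocabulary over outcomes and resources so the vectors are comparable.
vectorizer = TfidfVectorizer(stop_words="english")
matrix = vectorizer.fit_transform(outcomes + list(resources.values()))
outcome_vecs, resource_vecs = matrix[: len(outcomes)], matrix[len(outcomes):]
similarity = cosine_similarity(resource_vecs, outcome_vecs)  # rows: resources, cols: outcomes

THRESHOLD = 0.1  # assumed minimum similarity for an outcome to count as covered
for name, row in zip(resources, similarity):
    covered = [outcomes[j] for j, s in enumerate(row) if s >= THRESHOLD]
    print(name, "->", covered)
```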

Evidence suggests that formative assessment positively affects student outcomes, including academic performance, self-regulated learning, and self-efficacy. Research tools such as MetaTutor can measure how students deploy self-regulatory processes by collecting rich, multi-stream data [33].

Our system aims to use NLP to let learners express their metacognitive monitoring and control processes. For instance, learners can interact with the SRL palette and rate their level of understanding on a six-point Likert scale before taking a quiz, and the interface allows students to summarise a static illustration related to the circulatory system. The difficulty of quizzes or exams can be adjusted dynamically based on students' historical interaction data. Additionally, our research can draw on small feed-forward networks, which achieve near state-of-the-art results on a variety of unstructured and structured language-processing tasks while being more memory- and computationally efficient than deep recurrent models [34]. In NLP, active learning (AL) is a straightforward technique for data labelling: instances are selected from an unlabelled dataset and annotated by a human oracle, and this process is repeated until a termination criterion is met. Meanwhile, stream-based learning can handle unlabelled data arriving in real-time streams to support agent decision-making and state classification [35].
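The active learning loop described above can be sketched as pool-based least-confident sampling. In this hypothetical setup the pool is synthetic, the known labels `y_oracle` simulate the human oracle, and a fixed query budget serves as the termination criterion. A logistic regression stands in for the NLP model.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic pool standing in for unlabelled learner data; y_oracle plays the human oracle.
X, y_oracle = make_classification(n_samples=300, n_features=5, random_state=0)
rng = np.random.default_rng(0)

# Seed set with five labelled examples from each class.
labelled = [int(i) for c in (0, 1)
            for i in rng.choice(np.flatnonzero(y_oracle == c), size=5, replace=False)]
BUDGET = 30  # termination criterion: stop after 30 oracle queries

model = LogisticRegression()
for _ in range(BUDGET):
    model.fit(X[labelled], y_oracle[labelled])
    pool = np.setdiff1d(np.arange(len(X)), labelled)
    probs = model.predict_proba(X[pool])
    # Least-confident sampling: query the instance whose top-class probability is lowest.
    query = int(pool[np.argmin(probs.max(axis=1))])
    labelled.append(query)  # the oracle's label is read from y_oracle

model.fit(X[labelled], y_oracle[labelled])
accuracy = model.score(X, y_oracle)
print(f"{len(labelled)} labelled examples, accuracy on the full pool: {accuracy:.2f}")
```

A stream-based variant would instead inspect each instance once as it arrives and query the oracle only when the model's confidence falls below a threshold.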

3. Research Design

3.1. Experiment Hypothesis

Hypothesis 1.

The same student cohort will exhibit similar levels of responsiveness to feedback, even when presented with different questions.

This hypothesis rests on the premise that, irrespective of question type, students who excel in one AI/NLP quiz item will perform similarly on other AI/NLP quiz items. The personalised feedback (PF) group will receive customised feedback tailored to their quiz responses and level of understanding, whereas the natural feedback (NF) group will receive standardised, non-personalised feedback.

Should this hypothesis hold true, it is anticipated that students within each group will exhibit similar levels of satisfaction and engagement, despite encountering different quiz questions.

Hypothesis 2.

Survey results are expected to differ between the PF and NF groups because the PF group receives a better user experience and more detailed feedback.

The underlying assumption is that personalised feedback (PF) will result in an enhanced user experience and more detailed feedback compared to natural feedback (NF). The survey questions will assess aspects such as feedback clarity, usefulness, and fairness, enabling the evaluation of satisfaction levels within the PF and NF groups. Should this hypothesis prove valid, it is expected that students in the PF group will express higher levels of satisfaction than those in the NF group, as the former will have received more custom-tailored and informative feedback.

3.2. Experiment Setup

Normally, a curriculum is followed sequentially. Sometimes, however, the knowledge points covered in two consecutive chapters have no direct link (i.e., there is no prerequisite of learning “a” before “b”). An automated approach, known as learning path planning, can therefore be adopted to devise the most appropriate learning sequence for students at a particular level (Figure 2).
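One simple way to plan such a path, assuming the prerequisite relations between topics are known, is a topological sort that skips topics the student has already mastered. The topic names and graph below are hypothetical. The sketch uses Python's standard `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter

# Hypothetical prerequisite graph: topic -> set of topics that must come first.
prerequisites = {
    "python_basics": set(),
    "statistics": set(),
    "linear_regression": {"python_basics", "statistics"},
    "classification": {"linear_regression"},
    "nlp_intro": {"python_basics"},
    "word_embeddings": {"nlp_intro"},
}

def plan_path(prereqs: dict, mastered: set) -> list:
    """Return a prerequisite-respecting order of the topics not yet mastered."""
    order = TopologicalSorter(prereqs).static_order()
    return [topic for topic in order if topic not in mastered]

path = plan_path(prerequisites, mastered={"python_basics"})
print(path)
```

Topics with no dependency between them, such as `statistics` and `nlp_intro`, may appear in either order. This is where a planner could apply further criteria such as the student's level.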

Figure 2.

Student learning path.

The system aims to extract information from publicly available educational resources on the Internet. It takes learners' backgrounds and requirements into account to develop modules and a planner system that facilitates the learning process, supported by an ontology that optimises the information extraction procedure. The course module aims to introduce students to machine-learning concepts and improve their problem-solving abilities. Throughout the course, students' learning progress will be monitored, and the system will collect their participation data into a matrix. After completing quizzes, participants will receive personalised feedback. The experiment incorporates two levels of personalised feedback and two task-related features (Table 3).

Table 3.

Two levels of feedback detail.

3.2.1. Experiment Conditions

RCT Group

Randomisation is crucial to ensure that differences in outcomes between the two groups can be attributed to the intervention rather than to other factors, such as demographic differences or pre-existing conditions.

Participants will be enrolled in the study and randomly assigned to either the RCT group or the control group. Both groups will have access to identical online learning activities, including quizzes and interactive exercises, within the Learning Management System (LMS). These activities are designed to teach the same concepts and skills to all participants. However, the RCT group will also have access to additional adaptive learning features within the LMS, such as personalised feedback, targeted practice exercises, and customised review materials. For instance, different blurbs can be written for learners who either passed or failed a set of questions in the same quiz, or separate suggestions can be provided for those who are minimally, partially, or completely engaged with the course activities. These rules are applied to each individual learner to generate personalised text (or resources). The features are designed to offer enhanced support and reinforcement to participants in the RCT group. To compare the outcomes between the RCT group and the control group, the data collected from assessments and LMS usage will be analysed.
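The rule-based personalisation described above can be sketched as a function that composes text from a quiz outcome and an engagement level. The thresholds for minimal, partial, and complete engagement and the message wording are hypothetical:

```python
def personalised_text(quiz_passed: bool, engagement: float) -> str:
    """Compose feedback from two hypothetical rules.

    quiz_passed: whether the learner passed the set of questions.
    engagement: share of course activities completed, from 0.0 to 1.0.
    """
    parts = []
    # Rule 1: different blurbs for learners who passed or failed the questions.
    if quiz_passed:
        parts.append("Well done on this quiz; try the extension exercise next.")
    else:
        parts.append("Some answers need another look; revisit the targeted practice set.")
    # Rule 2: suggestions by engagement level (minimal, partial, complete).
    if engagement < 0.3:
        parts.append("You have opened only a few activities; start with this week's core slides.")
    elif engagement < 1.0:
        parts.append("You are partway through; finish the remaining activities before the review.")
    else:
        parts.append("You have completed every activity; the review materials are ready for you.")
    return " ".join(parts)

print(personalised_text(quiz_passed=False, engagement=0.5))
```

Because each rule is evaluated for every learner, the same two rules produce six distinct texts without any message being written per student.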

In our Moodle LMS, two courses will be set up with the same tutor slides and quizzes. The RCT course will additionally be configured with personalised feedback and targeted exercises, whereas the control course will provide only low-level feedback.

Gamified Elements A/B Testing

In addition, two student cohorts will be set up to observe the efficacy of gamified learning. One group will be exposed to badges and leaderboards, including points, meters, and resource-allocation dashboards, together with instant event feedback loops and real-time responses. Pertinent feedback will be provided, such as: “Remember that decision you made or did not make in the previous level? Well, you need that tool or information now, so go back and earn it”. The other group will only have access to a plain dashboard offering delayed, simple instructional feedback such as “Go back and learn it”.
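A minimal sketch of the instant event feedback loop for the gamified cohort follows. It assumes hypothetical point values and badge thresholds. Each event updates the learner's points, awards any newly earned badge, and returns an immediate message, and the leaderboard is derived from the running totals.

```python
from collections import defaultdict

POINTS = {"slide_completed": 5, "quiz_correct": 10}  # assumed point values
BADGES = [(50, "Explorer"), (100, "Achiever")]       # assumed (threshold, badge) pairs

scores = defaultdict(int)
badges = defaultdict(list)

def on_event(learner: str, event: str) -> str:
    """Instant feedback loop: update points, award badges, return a message."""
    gained = POINTS.get(event, 0)
    scores[learner] += gained
    new = [name for threshold, name in BADGES
           if scores[learner] >= threshold and name not in badges[learner]]
    badges[learner].extend(new)
    msg = f"{learner}: +{gained} points (total {scores[learner]})"
    if new:
        msg += f", new badge: {', '.join(new)}"
    return msg

def leaderboard(top: int = 3) -> list:
    """Return the top learners as (name, points), highest first."""
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:top]
```

For the comparison cohort, the same events would simply be logged, and a delayed, fixed message would be shown instead of the returned string.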

3.2.2. Evaluation

Subsequently, students’ Learning Performance (LP) will be calculated by analysing their submitted answers, completion rates, time taken, and satisfaction surveys. In this calculation, $k$ denotes the number of items, and the correctness of a student’s response to each item $i$ is recorded as $z_i \in \{0, 1\}$, combined with the item’s difficulty level. Each item is weighted in advance by difficulty and contributes differently to the overall self-assessment score: 0.5 points (easy), 1 point (medium), or 1.5 points (hard). The final score is presented on a [0, 10] scale.
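The LP formula itself does not appear in this section. A minimal sketch, assuming LP is the difficulty-weighted share of correct items rescaled to the [0, 10] range, that is $LP = 10 \sum_{i=1}^{k} w_i z_i / \sum_{i=1}^{k} w_i$ with $w_i \in \{0.5, 1, 1.5\}$:

```python
# Assumed difficulty weights, taken from the values stated in the text.
WEIGHTS = {"easy": 0.5, "medium": 1.0, "hard": 1.5}

def learning_performance(correct: list, difficulty: list) -> float:
    """Difficulty-weighted score on [0, 10] (assumed normalisation).

    correct[i] is z_i in {0, 1}; difficulty[i] is "easy", "medium", or "hard".
    """
    if len(correct) != len(difficulty) or not correct:
        raise ValueError("need one difficulty label per item")
    weights = [WEIGHTS[d] for d in difficulty]
    earned = sum(w * z for w, z in zip(weights, correct))
    return 10 * earned / sum(weights)

print(learning_performance([1, 0, 1], ["easy", "medium", "hard"]))
```

For example, answering an easy and a hard item correctly but missing a medium one gives 10 × 2.0 / 3.0 ≈ 6.67.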

During the evaluation phase, participants will be shown feedback at the Details Relevant level, and the results from the learning stage will be highlighted. Any subsequent improvement by participants will be recorded through an additional round of course slides and quizzes from the system in the Learning Stage (Table 4).

Table 4.

Feedback samples with different levels of detail.

4. Results

4.1. Data Collection Process

Step 1: Participant Selection and Random Assignment

For the experimental phase, eight participants will be selected and randomly allocated to either the personalised feedback (PF) group or the natural feedback (NF) group. Participants fall into two profiles: four have a background in information technology and software engineering, and the other four in marketing and company strategy. All participants share an interest in learning artificial intelligence or machine learning and have some prior exposure to the domain.

In line with the research settings, two participants with an IT background and two with a marketing background will be placed in the PF group, and the remaining four (in the same configuration) will be assigned to the NF group. The NF group will receive limited feedback after quiz submission.
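The stratified allocation described above (two IT and two marketing participants per group) can be sketched as a seeded shuffle within each background. The participant IDs and seed are hypothetical:

```python
import random

# Hypothetical participant IDs grouped by background (the strata).
participants = {
    "IT": ["p1", "p2", "p3", "p4"],
    "marketing": ["p5", "p6", "p7", "p8"],
}

def assign_groups(strata: dict, seed: int = 2023) -> dict:
    """Shuffle each stratum and split it evenly between PF and NF."""
    rng = random.Random(seed)  # fixed seed makes the allocation reproducible
    groups = {"PF": [], "NF": []}
    for members in strata.values():
        shuffled = members[:]
        rng.shuffle(shuffled)
        half = len(shuffled) // 2
        groups["PF"].extend(shuffled[:half])
        groups["NF"].extend(shuffled[half:])
    return groups

groups = assign_groups(participants)
print(groups)
```

Randomising within each background keeps the two groups balanced on that factor, which a single shuffle of all eight participants would not guarantee.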

As the experiment described above is a pilot test, we intend to carry out further experiments, refine the questions and feedback mechanisms, and collect more data to validate our hypotheses.

Step 2: AI/NLP Quiz Questions

Both groups will undertake five AI/NLP quiz questions, which will be designed to test their knowledge and understanding of the subject matter.

Step 3: Feedback and Scoring

Upon submitting their answers, participants will receive varying degrees of feedback and scoring in accordance with their respective group assignments. The PF group will receive personalised feedback tailored to their quiz responses and level of understanding, whereas the NF group will receive standard, non-personalised feedback. Participants will receive their scores immediately after submitting their answers.

Step 4: Survey Questions

The researchers will administer three survey questions to both groups, aiming to assess their satisfaction with the feedback and scoring process.

Survey 1: Rate your confidence level for completing the knowledge or skill presented.

Survey 2: Was the quiz feedback timely and relevant?

Survey 3: Did the answer feedback help you gain a clearer understanding of the subject?

Step 5: Data Collection and Analysis

The data collected will include participants’ quiz scores, feedback, and survey responses. Statistical methods will be employed to analyse this data and determine if there is a significant difference in quiz performance, feedback satisfaction, and overall engagement between the PF and NF groups.

For quantitative data, descriptive statistics will be used to summarise the data associated with quiz questions and feedback scoring. Correlation analysis will then appraise the relationships between these variables, including quiz scores and feedback ratings. Finally, regression analysis will model a dependent variable (e.g., feedback ratings) as a function of independent variables (e.g., quiz scores and time spent on the quiz).
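A minimal sketch of the planned descriptive, correlation, and regression analyses using pandas and numpy. The records are hypothetical placeholders, not collected data. For coefficient standard errors and p-values, a dedicated package such as statsmodels would normally be used.

```python
import numpy as np
import pandas as pd

# Hypothetical records; replace with the collected quiz and survey data.
df = pd.DataFrame({
    "quiz_score":      [6.0, 8.5, 7.0, 9.0, 5.5, 7.5, 8.0, 6.5],
    "time_spent_min":  [22, 15, 18, 12, 25, 17, 14, 20],
    "feedback_rating": [3, 5, 4, 5, 3, 4, 5, 4],
})

print(df.describe())                # descriptive statistics
corr = df.corr(method="pearson")    # pairwise Pearson correlations
print(corr)

# Ordinary least squares: feedback_rating ~ quiz_score + time_spent_min.
X = np.column_stack([np.ones(len(df)), df["quiz_score"], df["time_spent_min"]])
y = df["feedback_rating"].to_numpy()
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
intercept, b_score, b_time = coef
r_squared = 1 - ((y - X @ coef) ** 2).sum() / ((y - y.mean()) ** 2).sum()
print(f"intercept={intercept:.3f}, quiz_score={b_score:.3f}, "
      f"time_spent={b_time:.3f}, R^2={r_squared:.3f}")
```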

Regarding qualitative survey questions, the researchers will adopt theoretical sampling, selectively choosing new participants or data sources to gather additional information that can further refine the emerging theory. Additionally, data that challenges or expands the existing concepts and relationships will be sought. This iterative process of data collection, coding, and theory development will continue until theoretical saturation is achieved.

Overall, the experiment is designed to test whether personalised feedback significantly impacts quiz performance and feedback satisfaction compared to standard feedback. By randomly assigning participants to different groups and carefully collecting and analysing data, the effectiveness of personalised feedback can be evaluated, thus providing insights for future educational practices and technology development.

4.2. Data Import

The data are imported as follows.

import pandas as pd

import seaborn as sns

import numpy as np

data = pd.read_csv('~/Downloads/PersonalisedLearning.csv')

data.head()

4.3. Data Preprocessing

The collected data are preprocessed for analysis: categorical columns are one-hot encoded, and any missing values are filled with zeros.

data = pd.get_dummies(data)

data = data.fillna(0)

The data are then scaled to zero mean and unit variance.

from sklearn.preprocessing import StandardScaler

data = StandardScaler().fit_transform(data)

4.4. Data Clustering and Shaping

Unsupervised learning techniques are used to identify interesting patterns in the data, starting with k-means clustering.

from sklearn.cluster import KMeans

# Fixed n_init and random_state make the cluster labels reproducible
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)

clusters = kmeans.fit_predict(data)

4.5. Provide Personalised Feedback to Each Student Based on Cluster

An interactive feedback system is then designed that gives each student personalised feedback based on the identified patterns, that is, on their cluster membership.

Feedback messages are defined for each cluster. Their effect will be analysed in the next step, once students' responses to the feedback have been collected.

feedback = {

0: 'You may benefit from setting specific goals for studying and breaking tasks into manageable parts.',

1: 'Keep up the great work!',

2: 'You may benefit from establishing specific study schedules and creating a distraction-free study environment.'

}

Provide personalised feedback to each student based on their cluster.

for i in range(len(data)):
    cluster_num = clusters[i]
    print('Student {}: {}'.format(i, feedback[cluster_num]))
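Because the CSV file above is not available here, the following sketch runs the same steps end to end on synthetic data so that the pipeline can be executed. The column names and values are hypothetical. The feedback messages are the ones defined above.

```python
import numpy as np
import pandas as pd
from sklearn.cluster import KMeans
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for PersonalisedLearning.csv (column names are hypothetical).
rng = np.random.default_rng(42)
raw = pd.DataFrame({
    "quiz_score": rng.uniform(0, 10, 30),
    "time_on_slides_min": rng.uniform(5, 60, 30),
    "background": rng.choice(["IT", "marketing"], 30),
})
raw.loc[3, "quiz_score"] = np.nan  # simulate a missing value

data = pd.get_dummies(raw, dtype=float).fillna(0)  # one-hot encode, then fill gaps
scaled = StandardScaler().fit_transform(data)      # zero mean, unit variance
clusters = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(scaled)

feedback = {
    0: 'You may benefit from setting specific goals for studying and breaking tasks into manageable parts.',
    1: 'Keep up the great work!',
    2: 'You may benefit from establishing specific study schedules and creating a distraction-free study environment.',
}
messages = [feedback[c] for c in clusters]
for i, message in enumerate(messages[:5]):
    print('Student {}: {}'.format(i, message))
```

Note that k-means cluster numbers are arbitrary labels. Which message suits which cluster has to be decided by inspecting each cluster's centroid, not assumed from the index.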

4.6. Data Analysis

4.6.1. t-Test for Hypothesis 1

Hypothesis 1: The same student should receive similar levels of feedback, even for different subject questions (Q1: NLP related and Q3: GPT model related). To test this hypothesis, a t-test was performed using the feedback ratings for Q1 [3, 5, 5, 4, 4, 3, 5, 5] and Q3 [4, 5, 5, 4, 4, 4, 5, 5].

import scipy.stats as stats

q1_feedback_scores = [3, 5, 5, 4, 4, 3, 5, 5]

q3_feedback_scores = [4, 5, 5, 4, 4, 4, 5, 5]

# Independent-samples t-test on the two sets of ratings
t_statistic, p_value = stats.ttest_ind(q1_feedback_scores, q3_feedback_scores)

print('T-Statistic:', t_statistic)

print('P-Value:', p_value)

T-Statistic: -0.6831300510639732

P-Value: 0.5056732339622882
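Because the same eight students rated both Q1 and Q3, the two lists are paired rather than independent. A paired t-test (`scipy.stats.ttest_rel`) is the conventional choice for such data. A sketch using the same ratings follows. By our hand calculation, the paired statistic is about -1.53, and the p-value remains above 0.05.

```python
import scipy.stats as stats

q1_feedback_scores = [3, 5, 5, 4, 4, 3, 5, 5]
q3_feedback_scores = [4, 5, 5, 4, 4, 4, 5, 5]

# Paired (related-samples) t-test: position i is the same student on Q1 and Q3,
# so the test works on the per-student differences.
paired = stats.ttest_rel(q1_feedback_scores, q3_feedback_scores)
print('T-Statistic:', paired.statistic)
print('P-Value:', paired.pvalue)
```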

4.6.2. ANOVA for Hypothesis 2

Hypothesis 2: ANOVA is used to test whether survey results differ significantly between groups of students: Students A and B of the PF group and Students A and B of the natural feedback (NF) group. The PF group, which received detailed feedback, was expected to report a better user experience in the collected survey ratings. Tukey’s Honestly Significant Difference (HSD) post hoc test was planned for further examination of the treatment levels.

The survey ratings of the first two students of the natural feedback group (n1, n2) and of the PF group (pf1, pf2) are:

n1_scores = [2, 3, 3]

n2_scores = [4, 4, 5]

pf1_scores = [4, 5, 4]

pf2_scores = [4, 4, 5]

Perform one-way ANOVA test and print results.

f_value, p_value = stats.f_oneway(pf1_scores, pf2_scores, n1_scores, n2_scores)

print('F-value:', f_value)

print('p-value:', p_value)

F-value: 6.249999999999997

p-value: 0.01716202358048234

Interpret results

if p_value < 0.05:
    print('There is a significant difference in survey ratings among the groups.')
else:
    print('There is not a significant difference in survey ratings among the groups.')
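The planned Tukey’s HSD post hoc test can be run on the same four groups with `scipy.stats.tukey_hsd` (available in SciPy 1.8 and later). This is a sketch rather than a reported result. Since n2, pf1, and pf2 share the same mean, any significant pairs are expected to involve n1.

```python
import scipy.stats as stats

n1_scores = [2, 3, 3]
n2_scores = [4, 4, 5]
pf1_scores = [4, 5, 4]
pf2_scores = [4, 4, 5]

# Tukey's HSD compares every pair of groups while controlling the
# family-wise error rate; group order here is n1, n2, pf1, pf2.
result = stats.tukey_hsd(n1_scores, n2_scores, pf1_scores, pf2_scores)
print(result)
```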

5. Conclusions and Future Work

5.1. Conclusions

The t-test is a well-established statistical method with extensive applications in scientific research. By comparing the means of two groups, it determines whether an observed difference is statistically significant, that is, unlikely to have arisen by chance alone. Whether a significant difference can be attributed to the intervention or treatment, rather than to other influencing factors, depends on the study design, such as randomisation and the control of confounding variables.

The t-test indicated no significant difference between the feedback ratings for the two questions. The p-value of 0.506 exceeds the conventional threshold of 0.05, so the null hypothesis of equal means cannot be rejected. This is consistent with Hypothesis 1, although a non-significant result does not by itself demonstrate that the ratings are equivalent.

The experimental results indicate that the type of question did not significantly affect how students rated the feedback they received. Within this particular context, therefore, variations in feedback across question types may not produce noticeable differences. Nevertheless, different types of feedback could still help enhance student understanding, motivation, or metacognitive abilities, even if they do not directly influence these ratings. Future research could explore the potential benefits of various feedback strategies beyond ratings and test performance, and replicating this study or conducting similar experiments with larger samples or more diverse populations would help corroborate or challenge these findings.

Analysis of variance (ANOVA) is a statistical method for determining whether a significant difference exists among the means of three or more groups. It is well suited to datasets with multiple groups and is commonly used in experiments that control variables which could otherwise influence the results.

ANOVA testing is a well-established statistical analysis approach with a considerable history of application in scientific research. Therefore, there exists an extensive body of knowledge and research guiding the effective use of ANOVA testing, which researchers can draw upon to design and analyse their experiments.

In the ANOVA comparing the natural and personalised feedback groups, using data from two students per group, the F-value of 6.25 and the p-value of 0.017, which falls below the conventional threshold of 0.05, provide evidence against the null hypothesis that all four group means are equal. ANOVA alone does not identify which groups differ; that requires a post hoc test such as Tukey’s HSD.

This finding suggests that personalised feedback may have a significant impact on individual students compared with natural feedback, and that its tailored nature likely contributed to differences in student experiences, perceptions, or outcomes. Providing personalised feedback may therefore be more effective than generic or natural feedback in engaging students, enhancing their understanding, or addressing their specific learning needs. However, with only three ratings from each of two students per group, and with one NF student (n1) rating markedly lower while the other (n2) matched the PF students’ mean, the result should be treated as preliminary.

Educators and instructional designers should consider integrating personalised feedback strategies into their teaching practices. These preliminary findings suggest that dedicating time and effort to personalised, targeted feedback that considers each student’s strengths, weaknesses, and learning styles can yield more positive outcomes. At the same time, educators and researchers may need to explore other variables or instructional strategies that could exert a more substantial influence on student learning and test outcomes.

5.2. Future Work

5.2.1. Feedback of Feedback

Future work will focus on understanding how students respond to feedback on their performance within an educational setting. Specifically, the impact of personalised feedback on student satisfaction, engagement, and learning outcomes will be examined. Surveys will be conducted, and qualitative data will be collected from students who have received feedback on their work as well as from those who have not. Analysing this feedback of feedback will give insight into the effectiveness of different types of feedback and identify areas for improvement in the feedback process. The extent of engagement and learning in offline settings, such as peer-to-peer study and mentoring, will also be considered.

5.2.2. Tukey’s HSD Post-Hoc Test

Tukey’s HSD post hoc test is a statistical analysis method employed to compare the means of multiple groups to identify differences between them. Within the scope of this study, Tukey’s HSD test shall be utilised to analyse the results of the survey data and determine potential significant distinctions in feedback satisfaction and engagement between the groups receiving personalised feedback (PF) and natural feedback (NF). This test will enable the identification of specific groups exhibiting significant differences and offer insights into the potential drivers of those differences.

5.2.3. Bridge the Required but Missing Information to Evaluate Each Individual’s Portfolio Matrix and Learning Progress

To appraise each student’s portfolio matrix and learning progress, access to comprehensive and accurate information about their performance, strengths, and areas for improvement is vital. In practice, however, parts of this information are often missing or incomplete, which impedes a full understanding of each student’s educational journey. To overcome this challenge, we will devise an approach that integrates data from various sources, including assessment results, learning logs, peer feedback, and teacher observations. Using machine-learning algorithms, this approach will uncover patterns and trends in the data and then make personalised recommendations that help each student attain their learning objectives. By bridging this information gap, we aim to provide a more precise and comprehensive assessment of each student’s portfolio matrix and learning progress.

5.2.4. Investigate the Various Forms of Participant Support Offered in These Digital Learning Environments

The debate over the most suitable pedagogical approach in MOOCs has profound implications for the future of online education; although the pedagogy of abundance promises scalability and accessibility, concerns arise regarding the quality of learning experiences and the potential detachment of learners from human interactions. On the other hand, the human-centric pedagogy champions the creation of meaningful learning environments but might struggle to accommodate a vast number of participants.

5.2.5. The Design and Development of an Innovative Online Virtual Simulation Course Platform

Creative thinking has become a highly sought-after skill in the contemporary job market, with employers valuing individuals who can think critically, generate novel ideas, and solve complex problems. The CreativeThinker platform was conceived to address this demand and equip college students with these skills. By leveraging virtual simulations, we hope this add-on will inspire and guide learners in cultivating their creative thinking capabilities.

Author Contributions

Conceptualization, X.Q. and G.S.; methodology, X.Q.; software, X.Q.; validation, X.Q., G.S. and L.Y.; formal analysis, X.Q.; investigation, X.Q.; resources, X.Q.; data curation, X.Q.; writing—original draft preparation, X.Q.; writing—review and editing, G.S. and L.Y.; visualization, X.Q. and L.Y.; supervision, G.S. and L.Y.; project administration, X.Q.; funding acquisition, G.S. All authors have read and agreed to the published version of the manuscript.

Funding

This project is supported by the Science and Technology Research Program of Chongqing Municipal Education Commission (Grant Nos. KJZD-M202201801 and KJZD-K202001801).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Pandey, A. What is Personalized Learning? 2017. Available online: https://medium.com/%20personalizing-the-learning-experience-insights/what-is-personalized-learning-bc874799b6f (accessed on 1 June 2023).

- Henderson, M.; Boud, D.; Molloy, E.; Ajjawi, R. The Impact of Feedback in Higher Education; Springer: Cham, Switzerland, 2019. [Google Scholar]

- Lim, L.A.; Dawson, S.; Gašević, D.; Joksimović, S.; Pardo, A.; Fudge, A.; Gentili, S. Students’ perceptions of, and emotional responses to, personalised learning analytics-based feedback: An exploratory study of four courses. Assess. Eval. High. Educ. 2021, 46, 339–359. [Google Scholar] [CrossRef]

- Fischer, H.; Heinz, M. Onboarding by Gamification. Design and Evaluation of an Online Service to Support First Year Students. In EdMedia+ Innovate Learning; Association for the Advancement of Computing in Education: Chesapeake, VA, USA, 2020. [Google Scholar]

- Reich, J.; Ruipérez-Valiente, J.A. The MOOC Pivot. Science 2019, 363, 130–131. [Google Scholar] [CrossRef] [PubMed]

- Han-Huei Tsay, C.; Kofinas, A.; Luo, J. Enhancing student learning experience with technology-mediated gamification: An empirical study. Comput. Educ. 2018, 121, 1–17. [Google Scholar] [CrossRef]

- Leftheriotis, I.; Giannakos, M.N.; Jaccher, L. Gamifying informal learning activities using interactive displays: An empirical investigation of students’ learning and engagement. Smart Learn. Environ. 2017, 4, 2. [Google Scholar] [CrossRef]

- Antonaci, A.; Klemke, R.; Stracke, C.M.; Specht, M. Gamification in MOOCs to enhance users’ goal achievement. In Proceedings of the 2017 IEEE Global Engineering Education Conference (EDUCON), Athens, Greece, 25–28 April 2017. [Google Scholar]

- Henderikx, M.A.; Kreijns, K.; Kalz, M. Refining success and dropout in massive open online courses based on the intention–behavior gap. Distance Educ. 2017, 38, 353–368. [Google Scholar] [CrossRef]

- Antonaci, A.; Klemke, R.; Specht, M. The Effects of Gamification in Online Learning Environments: A Systematic Literature Review. Informatics 2019, 6, 32. [Google Scholar] [CrossRef]

- Halli, S.; Lavoué, E.; Serna, A. To Tailor or Not to Tailor Gamification? An Analysis of the Impact of Tailored Game Elements on Learners’ Behaviours and Motivation. In International Conference on Artificial Intelligence in Education; Springer International Publishing: Cham, Switzerland, 2020. [Google Scholar]

- Faust, A. The Effects of Gamification on Motivation and Performance; Springer Gabler: Berlin, Germany, 2021. [Google Scholar]

- Riiid Answer Correctness Prediction. 2020. Available online: https://www.kaggle.com/c/riiid-test-answer-prediction/overview/description (accessed on 1 June 2023).

- Iandoli, L.; Quinto, I.; De Liddo, A.; Buckingham Shum, S. Socially-augmented argumentation tools: Rationale, design and evaluation of a debate dashboard. Int. J.-Hum.-Comput. Stud. 2014, 72, 298–319. [Google Scholar] [CrossRef]

- Brusilovsky, P. KnowledgeTree: A distributed architecture for adaptive e-learning. In Proceedings of the 13th International World Wide Web Conference on Alternate Track Papers & Posters, New York, NY, USA, 19–24 May 2004.

- Kitto, K. Using Data to Help Students Get on TRACK to Success. 2020. Available online: https://lx.uts.edu.au/blog/2020/02/24/using-data-to-help-students-get-on-track-to-success/ (accessed on 1 June 2023).

- Xu, D.; Wang, H. Intelligent agent supported personalization for virtual learning environments. Decis. Support Syst. 2005, 42, 825–843.

- Antonaci, A.; Klemke, R.; Dirk, K.; Specht, M. May the Plan be with you! A Usability Study of the Stimulated Planning Game Element Embedded in a MOOC Platform. Int. J. Serious Games 2019, 6, 49–70.

- Pardo, A. OnTask has piloted courses at USYD, UTS, UniSA, UNSW and UTA. 2020. Available online: https://www.ontasklearning.org/ (accessed on 1 June 2023).

- Pardo, A.; Liu, D.; Vigentini, L.; Blumenstein, M. Scaling Personalised Student Communication: Current Initiatives and Future Directions. In Australian Learning Analytics Summer Institute “Promoting Cross-Disciplinary Collaborations, Linking Data, and Building Scale”; 2019; Available online: https://www.ontasklearning.org/wp-content/uploads/ALASI_Scale_personalised_communication_2019.pdf (accessed on 1 June 2023).

- Lim, L.A.; Gentili, S.; Pardo, A.; Kovanović, V.; Whitelock-Wainwright, A.; Gašević, D.; Dawson, S. What changes, and for whom? A study of the impact of learning analytics-based process feedback in a large course. Learn. Instr. 2019, 72, 101202.

- Piloting Personalised Feedback at Scale with OnTask. 2019. Available online: https://cic.uts.edu.au/piloting-personalised-feedback-at-scale-with-ontask/ (accessed on 1 June 2023).

- Roosta, F.; Taghiyareh, F.; Mosharraf, M. Personalization of gamification-elements in an e-learning environment based on learners’ motivation. In Proceedings of the 2016 8th International Symposium on Telecommunications (IST), Tehran, Iran, 27–28 September 2016.

- de Marcos, L.; Domínguez, A.; Saenz-de-Navarrete, J.; Pagés, C. An empirical study comparing gamification and social networking on e-learning. Comput. Educ. 2014, 75, 82–91.

- Hallifax, S.; Serna, A. Adaptive Gamification in Education: A Literature Review of Current Trends and Developments. In Transforming Learning with Meaningful Technologies: Proceedings of the 14th European Conference on Technology Enhanced Learning, EC-TEL 2019, Delft, The Netherlands, 16–19 September 2019; Springer International Publishing: Cham, Switzerland, 2019.

- Lyons, K.E.; Zelazo, P.D. Monitoring, metacognition, and executive function: Elucidating the role of self-reflection in the development of self-regulation. Adv. Child Dev. Behav. 2011, 40, 379–412.

- Lang, C.; Siemens, G.; Wise, A.; Gašević, D. (Eds.) Handbook of Learning Analytics; Society for Learning Analytics and Research: New York, NY, USA, 2019.

- Broadbent, J.; Panadero, E.; Boud, D. Implementing summative assessment with a formative flavour: A case study in a large class. Assess. Eval. High. Educ. 2018, 43, 307–322.

- Krulwich, B.; Burkey, C. Learning user information interests through extraction of semantically significant phrases. In Proceedings of the AAAI Spring Symposium on Machine Learning in Information Access, Palo Alto, CA, USA, 25–27 March 1996.

- Alomari, M.M.; El-Kanj, H.; Alshdaifat, N.I.; Topal, A. A Framework for the Impact of Human Factors on the Effectiveness of Learning Management Systems. IEEE Access 2020, 8, 23542–23558.

- Kreutzer, J.; Riezler, S. Self-Regulated Interactive Sequence-to-Sequence Learning. arXiv 2019, arXiv:1907.05190.

- Siddhant, A.; Lipton, Z.C. Deep Bayesian Active Learning for Natural Language Processing: Results of a Large-Scale Empirical Study. arXiv 2018, arXiv:1808.05697.

- Azevedo, R. MetaTutor: An Intelligent Multi-Agent Tutoring System Designed to Detect, Track, Model, and Foster Self-Regulated Learning. In Proceedings of the Fourth Workshop on Self-Regulated Learning in Educational Technologies; 2012. Available online: https://www.researchgate.net/profile/Francois_Bouchet2/publication/234163751_MetaTutor_An_Intelligent_MultiAgent_Tutoring_System_Designed_to_Detect_Track_Model_and_Foster_SelfRegulated_Learning/links/09e41512f0b6e88370000000/MetaTutor-An-Intelligent-Multi-Agent-Tutoring-System-Designed-to-Detect-Track-Model-and-Foster-Self-Regulated-Learning.pdf (accessed on 1 June 2023).

- Palmer, M. (Ed.) Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017. Available online: https://www.aclweb.org/anthology/D17-1.pdf (accessed on 1 June 2023).

- Fang, M.; Li, Y.; Cohn, T. Learning how to Active Learn: A Deep Reinforcement Learning Approach. arXiv 2017, arXiv:1708.02383.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).