Two-Dimensional-Simultaneous Localisation and Mapping Study Based on Factor Graph Elimination Optimisation

Abstract

1. Introduction

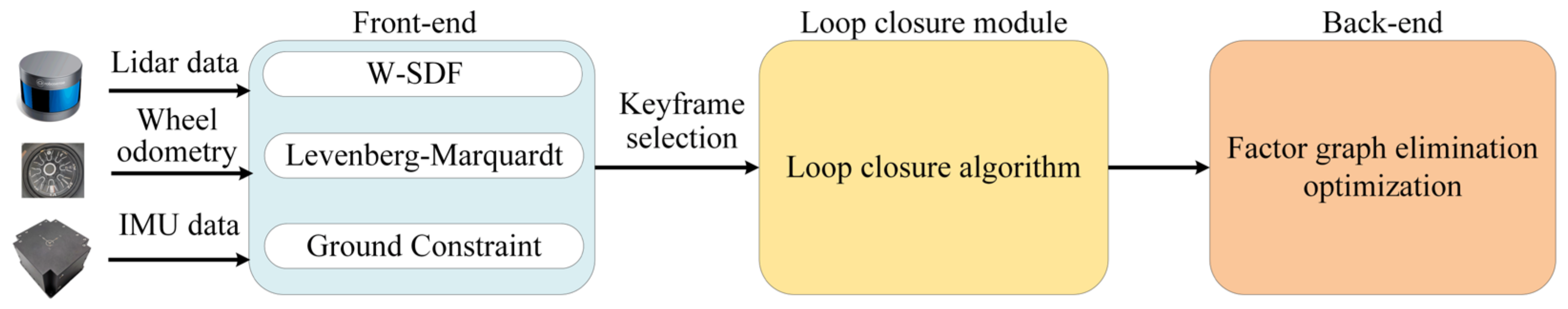

2. System Framework

3. Front-End Scan-Matching

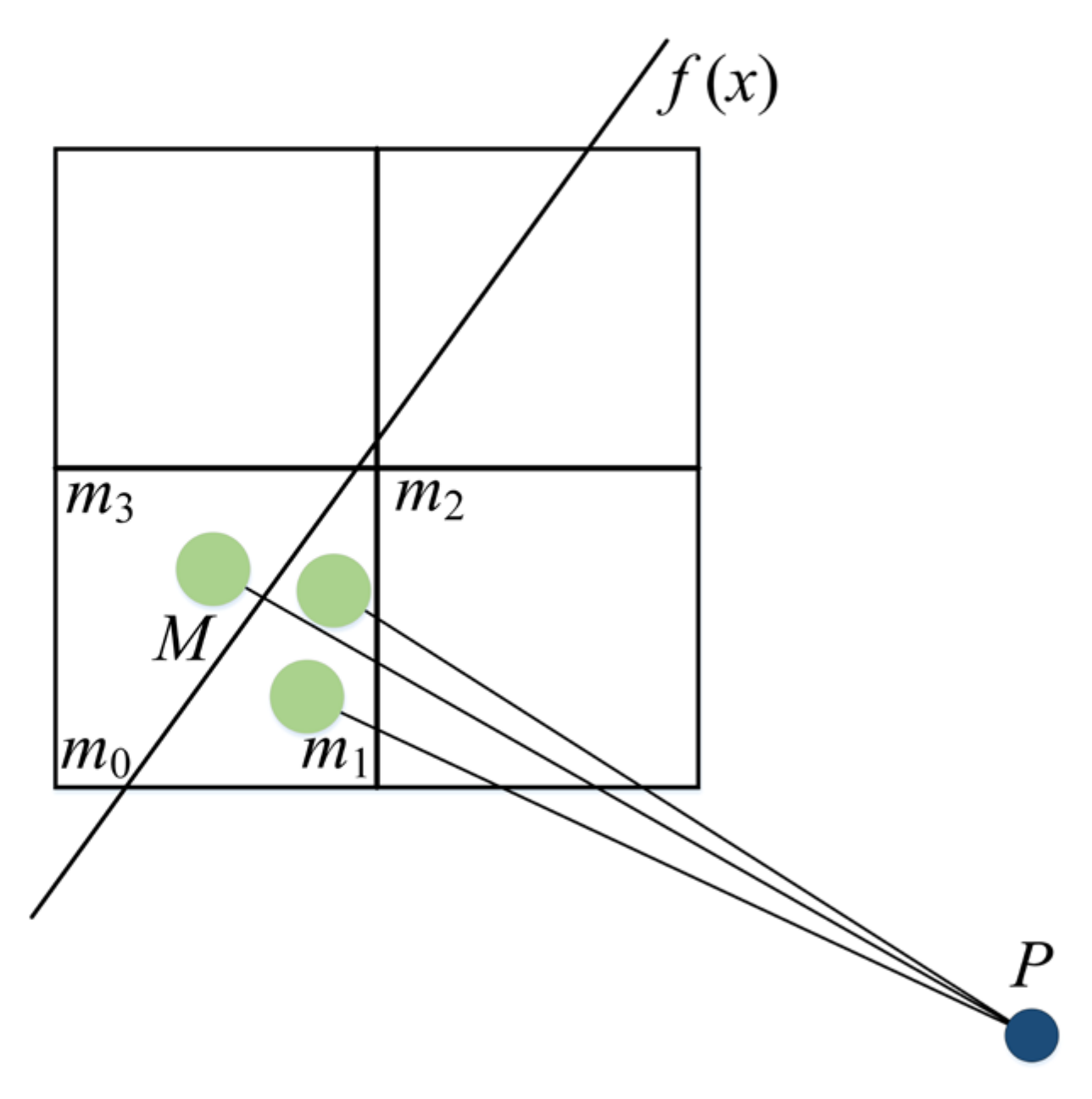

3.1. Scan-Matching

3.2. Ground Constraint Factor

3.3. Key Frame Selection

4. Closure Detection

| Algorithm 1. Loop closure algorithm. | |
|---|---|
| Input: | and from the current point cloud frame |
| Output: | |
| 1: | if .isKeyframe() then |
| 2: | if or then |
| 3: | KDtree.RadiusSearch |
| 4: | registerPointCloud |
| 5: | if then |
| 6: | ← ComputeICP |
| 7: | end if |
| 8: | end if |
| 9: | 0 |
| 10: | |
| 11: | |
| 12: | end if |
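The visible steps of Algorithm 1 (a key-frame check, a KD-tree radius search, point cloud registration and an ICP-based check) can be sketched as follows. This is a minimal Python sketch under stated assumptions, not the authors' implementation: the function names, the search radius, the `min_gap` rule that skips the most recent key frames and the mean-residual acceptance threshold are all hypothetical. A brute-force radius search stands in for the KD-tree, and the ICP is a basic point-to-point variant with a closed-form 2D rigid alignment.

```python
import math

def transform(points, theta, tx, ty):
    """Apply a 2D rigid transform (rotation theta, then translation tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def best_rigid_2d(src, dst):
    """Closed-form least-squares rotation and translation mapping src[k] onto dst[k]."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    # Cross and dot sums of the centred pairs give the optimal rotation angle.
    num = sum((p[0] - sx) * (q[1] - dy) - (p[1] - sy) * (q[0] - dx) for p, q in zip(src, dst))
    den = sum((p[0] - sx) * (q[0] - dx) + (p[1] - sy) * (q[1] - dy) for p, q in zip(src, dst))
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)

def nearest(p, cloud):
    """Brute-force nearest neighbour of p in cloud."""
    return min(cloud, key=lambda q: math.dist(p, q))

def icp_2d(src, dst, iters=20):
    """Point-to-point ICP; returns (theta, tx, ty) mapping src onto dst and the mean residual."""
    theta = tx = ty = 0.0
    for _ in range(iters):
        moved = transform(src, theta, tx, ty)
        matches = [nearest(p, dst) for p in moved]   # data association
        theta, tx, ty = best_rigid_2d(src, matches)  # re-estimate the full transform
    moved = transform(src, theta, tx, ty)
    residual = sum(math.dist(p, nearest(p, dst)) for p in moved) / len(moved)
    return (theta, tx, ty), residual

def detect_loop(is_keyframe, pose, scan, keyframes, radius=2.0, min_gap=10, max_residual=0.05):
    """Return (key frame index, relative pose) of a verified loop closure, or None.

    keyframes: list of (pose (x, y, theta), scan) pairs, oldest first.
    """
    if not is_keyframe:  # only key frames are checked for loop closure
        return None
    # Radius search over older key frame positions; a linear scan stands in for a KD-tree,
    # and the newest min_gap key frames are skipped (hypothetical rule).
    older = keyframes[: max(0, len(keyframes) - min_gap)]
    candidates = [i for i, (kp, _) in enumerate(older) if math.dist(kp[:2], pose[:2]) <= radius]
    best = None
    for i in candidates:
        rel, residual = icp_2d(scan, keyframes[i][1])  # register current scan to candidate
        if residual <= max_residual and (best is None or residual < best[2]):
            best = (i, rel, residual)
    return None if best is None else best[:2]
```

Once the number of key frames grows, a KD-tree (for example `scipy.spatial.cKDTree.query_ball_point`) would replace the linear radius scan; the ICP acceptance test is what rejects geometrically close but mismatched candidates.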

5. Factor Graph Optimisation

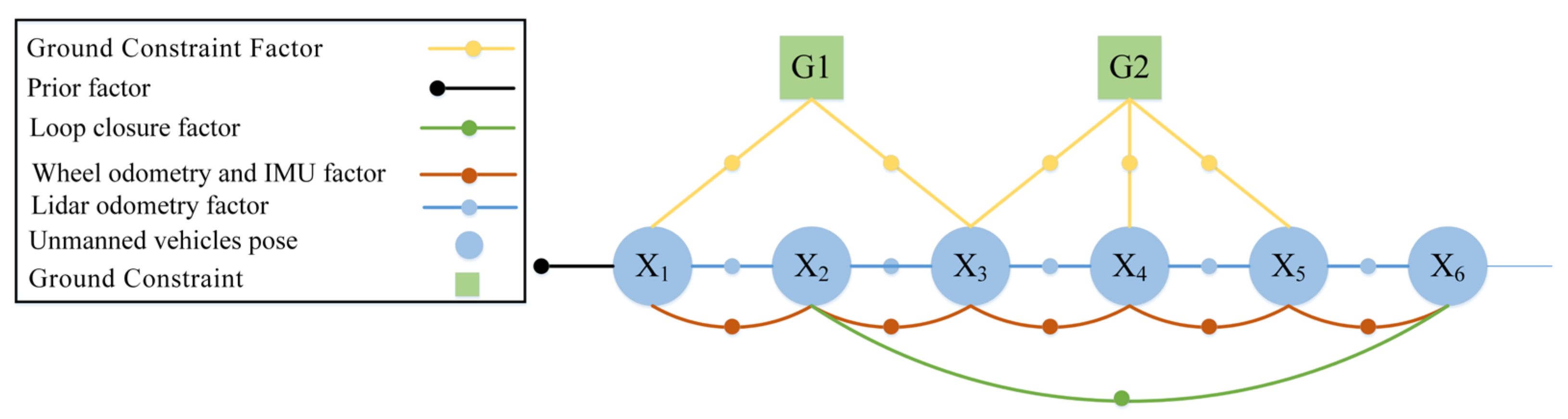

5.1. Factor Graphs

- (1)

- The a priori (prior) factor is attached to the first node of the pose graph and fixes it; this node is taken as the origin of the world coordinate system.

- (2)

- The wheel odometer and IMU factor is obtained from two sensors, the wheel odometer and the IMU. Their measurements are used to calculate the relative pose between two key frames, and this relative pose provides a spatial constraint between the two key frames.

- (3)

- The LiDAR odometry factor is derived from the front-end scan-matching module, which calculates the relative pose between two key frames by weighting the SDF and Levenberg–Marquardt (L–M) results.

- (4)

- The closed-loop factor originates from the closed-loop detection module; validated closed-loop constraints are added to the factor graph.

- (5)

- The ground constraint factor is derived from the ground constraint extracted in the front-end scan-matching module, and it connects multiple key frames.
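The factor types listed above all contribute residuals to a single objective that the back end minimises. The sketch below evaluates that objective for a small square trajectory with a prior factor, wheel odometer/IMU factors, LiDAR odometry factors and a closed-loop factor. It assumes SE(2) poses (x, y, θ) and diagonal Gaussian noise; the noise values, the factor tuple layout and the helper names are hypothetical. The ground constraint factor is omitted because its exact form is not given here, and the optimiser itself (for example Gauss–Newton or L–M) is not shown.

```python
import math

def wrap(a):
    """Wrap an angle to (-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))

def between(xi, xj):
    """Pose of xj expressed in the frame of xi (SE(2) relative pose)."""
    dx, dy = xj[0] - xi[0], xj[1] - xi[1]
    c, s = math.cos(xi[2]), math.sin(xi[2])
    return (c * dx + s * dy, -s * dx + c * dy, wrap(xj[2] - xi[2]))

def factor_error(factor, poses):
    """Squared, noise-weighted residual of one factor (diagonal sigmas assumed)."""
    kind, keys, z, sigmas = factor
    if kind == "prior":
        pred = poses[keys[0]]
    else:  # odometry, LiDAR odometry and loop closure factors are all 'between' factors
        pred = between(poses[keys[0]], poses[keys[1]])
    r = (pred[0] - z[0], pred[1] - z[1], wrap(pred[2] - z[2]))
    return sum((ri / si) ** 2 for ri, si in zip(r, sigmas))

def total_error(factors, poses):
    """Objective that a back-end optimiser would minimise over the poses."""
    return sum(factor_error(f, poses) for f in factors)

# Hypothetical example: a unit square driven counter-clockwise, closing back on x0.
truth = [(0.0, 0.0, 0.0), (1.0, 0.0, math.pi / 2), (1.0, 1.0, math.pi), (0.0, 1.0, -math.pi / 2)]
step = (1.0, 0.0, math.pi / 2)  # each edge, expressed in the previous pose's frame
ODOM, LIDAR, LOOP = (0.05, 0.05, 0.02), (0.02, 0.02, 0.01), (0.05, 0.05, 0.02)
factors = [("prior", (0,), truth[0], (1e-3, 1e-3, 1e-3))]  # fixes x0 as the world origin
for i in range(3):
    factors.append(("between", (i, i + 1), step, ODOM))   # wheel odometer + IMU factor
    factors.append(("between", (i, i + 1), step, LIDAR))  # LiDAR odometry factor
factors.append(("between", (3, 0), step, LOOP))            # closed-loop factor

perturbed = list(truth)
perturbed[2] = (1.1, 1.0, math.pi)
print(total_error(factors, truth), total_error(factors, perturbed))
```

Tighter sigmas give a factor more weight, which is how a more accurate LiDAR odometry constraint can dominate a noisier wheel odometer/IMU constraint between the same two key frames.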

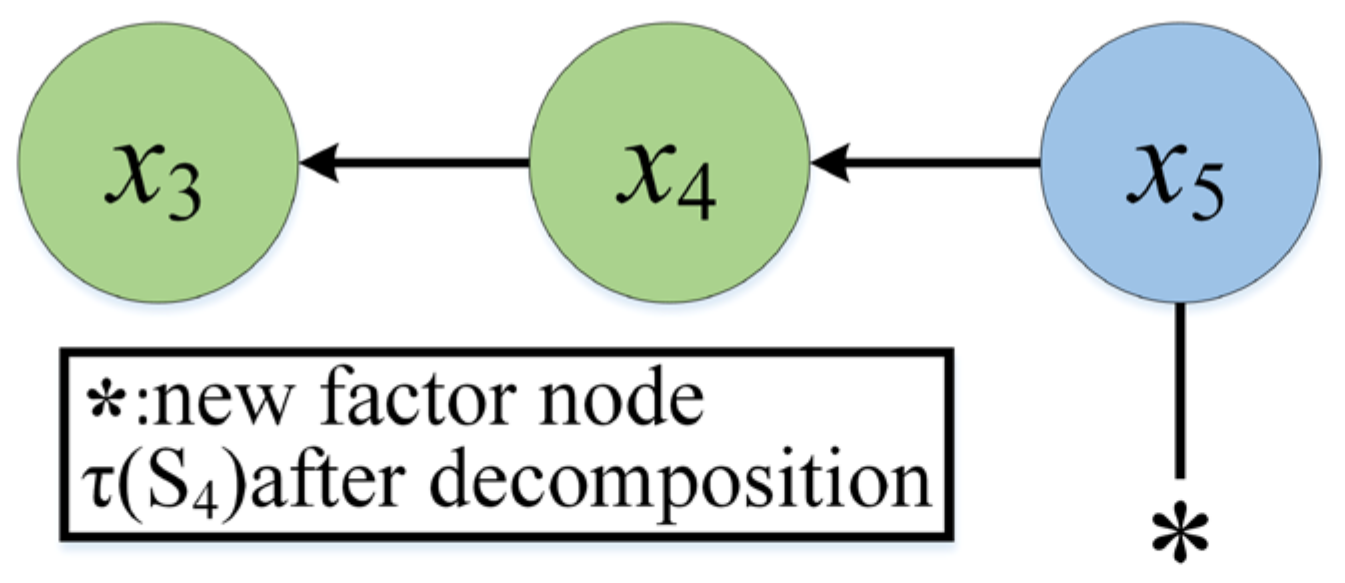

5.2. Factor Graph Elimination Optimisation

- (1)

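- Remove all factors $\phi_i$ adjacent to the variable $x_j$ being eliminated.

- (2)

- Generate the separator $S_j$ (all variables involved in those factors except $x_j$).

- (3)

- Generate the product factor $\psi(x_j, S_j) = \prod_i \phi_i$.

- (4)

- Decompose the product into a conditional and a new factor, $\psi(x_j, S_j) = p(x_j \mid S_j)\,\tau(S_j)$.

- (5)

- Add the new factor $\tau(S_j)$ at the end of the factor graph.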
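Steps (1) to (5) of Section 5.2 can be illustrated on a linear Gaussian factor graph with scalar variables. This minimal sketch assumes that simplification and stores each factor in information form (Λ, η), so the decomposition in step (4) is a Schur complement rather than the QR factorisation used by some solvers; the class and function names are hypothetical. Eliminating every variable in turn yields a Bayesian network of conditionals, which back-substitution then solves.

```python
from dataclasses import dataclass

@dataclass
class Factor:
    keys: tuple  # variables the factor touches
    lam: dict    # information matrix entries {(i, j): value}
    eta: dict    # information vector entries {i: value}

def linear_factor(coeffs, z, sigma=1.0):
    """Factor for the scalar measurement sum_k coeffs[k] * x_k = z with noise sigma."""
    w = 1.0 / sigma ** 2
    keys = tuple(sorted(coeffs))
    lam = {(i, j): w * coeffs[i] * coeffs[j] for i in keys for j in keys}
    eta = {i: w * coeffs[i] * z for i in keys}
    return Factor(keys, lam, eta)

def eliminate(factors, j):
    """Eliminate variable j; return the reduced factor list and the conditional on x_j."""
    # (1) Remove all factors adjacent to x_j.
    adjacent = [f for f in factors if j in f.keys]
    remaining = [f for f in factors if j not in f.keys]
    # (2) Separator: every other variable touched by the removed factors.
    sep = sorted({k for f in adjacent for k in f.keys} - {j})
    # (3) Product factor: in information form, multiplying Gaussians adds (lam, eta).
    lam, eta = {}, {}
    for f in adjacent:
        for key, v in f.lam.items():
            lam[key] = lam.get(key, 0.0) + v
        for key, v in f.eta.items():
            eta[key] = eta.get(key, 0.0) + v
    # (4) Decompose: conditional mean x_j = d - sum_s r[s] * x_s, plus a new factor on
    #     the separator given by the Schur complement of lam[j, j].
    ljj = lam[(j, j)]
    r = {s: lam.get((j, s), 0.0) / ljj for s in sep}
    d = eta.get(j, 0.0) / ljj
    new_lam = {(a, b): lam.get((a, b), 0.0) - lam.get((a, j), 0.0) * lam.get((j, b), 0.0) / ljj
               for a in sep for b in sep}
    new_eta = {a: eta.get(a, 0.0) - lam.get((a, j), 0.0) * eta.get(j, 0.0) / ljj for a in sep}
    # (5) Add the new factor at the end of the factor graph.
    if sep:
        remaining.append(Factor(tuple(sep), new_lam, new_eta))
    return remaining, (j, r, d)

def solve(factors, order):
    """Eliminate variables in order (building a Bayesian network), then back-substitute."""
    bayes_net = []
    for j in order:
        factors, conditional = eliminate(factors, j)
        bayes_net.append(conditional)
    x = {}
    for j, r, d in reversed(bayes_net):
        x[j] = d - sum(rs * x[s] for s, rs in r.items())
    return x

# 1D chain: prior x0 = 0, odometry x1 - x0 = 1 and x2 - x1 = 1, loop closure x2 - x0 = 1.8.
graph = [
    linear_factor({0: 1.0}, 0.0),
    linear_factor({0: -1.0, 1: 1.0}, 1.0),
    linear_factor({1: -1.0, 2: 1.0}, 1.0),
    linear_factor({0: -1.0, 2: 1.0}, 1.8),
]
print(solve(graph, [0, 1, 2]))
```

Eliminating in a different order gives the same solution but can change how many new entries (fill-in) the intermediate factors carry, which is why the elimination ordering matters for memory.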

6. Experiments

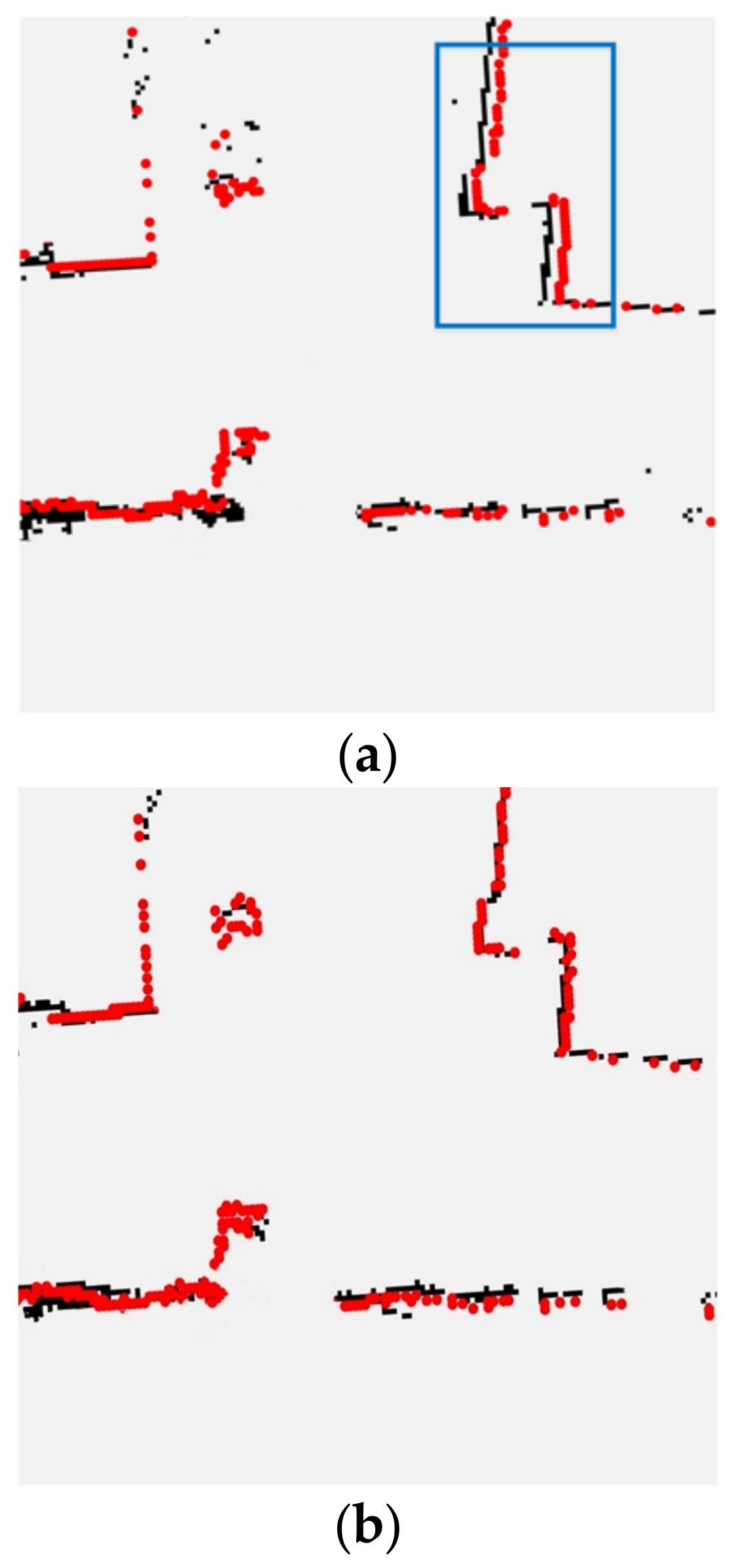

6.1. Front-End Experiments

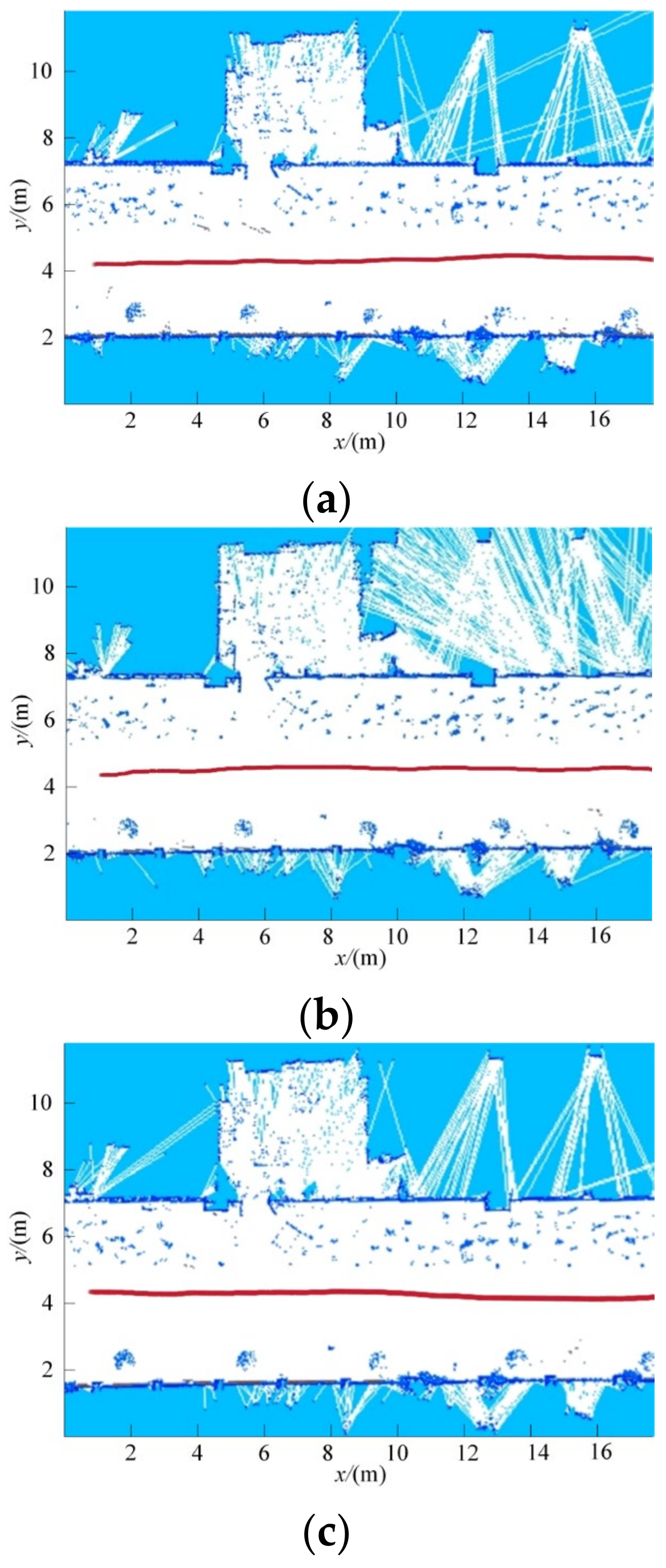

6.2. Closed-Loop Detection and Back-End Experiments

6.3. Data Analysis and Comparison

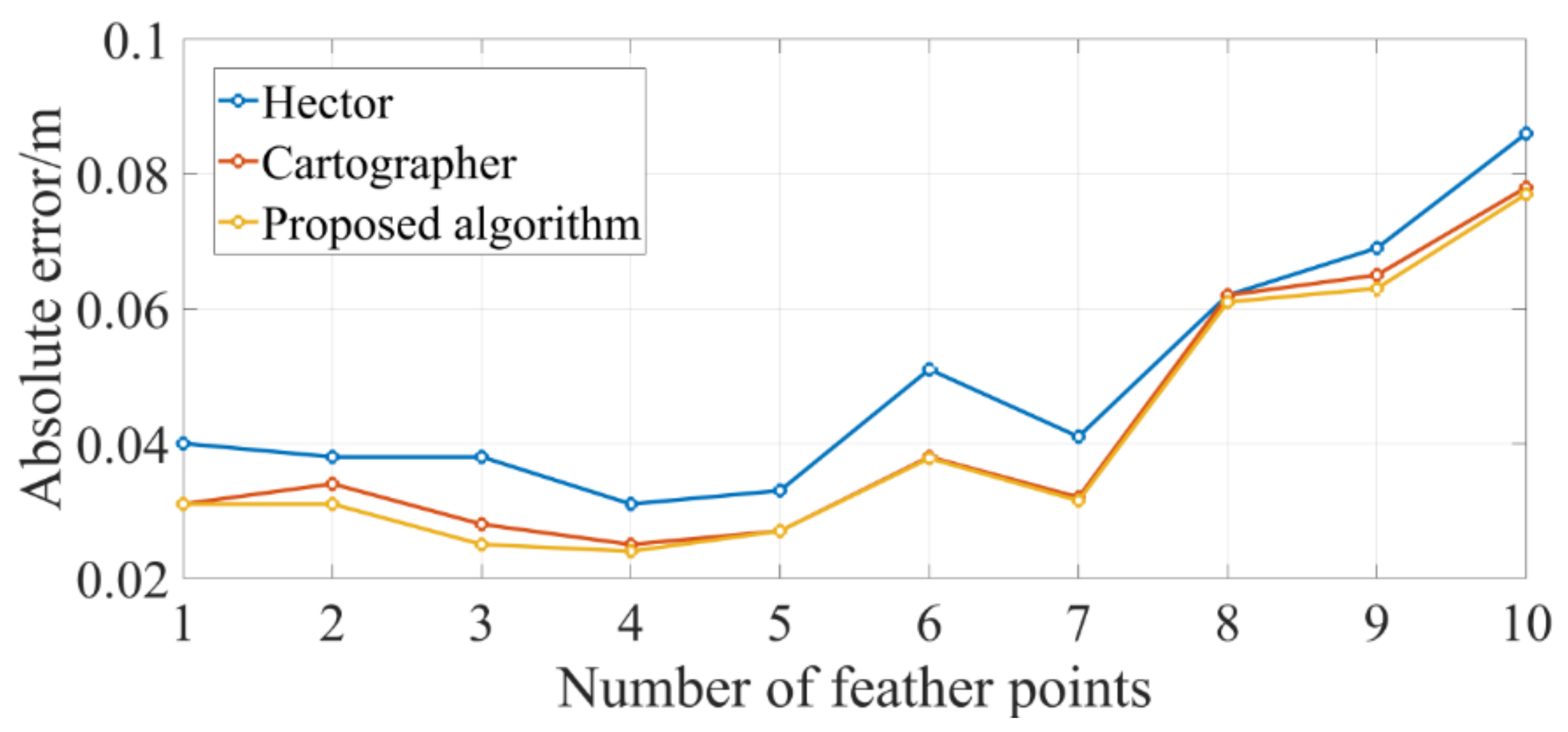

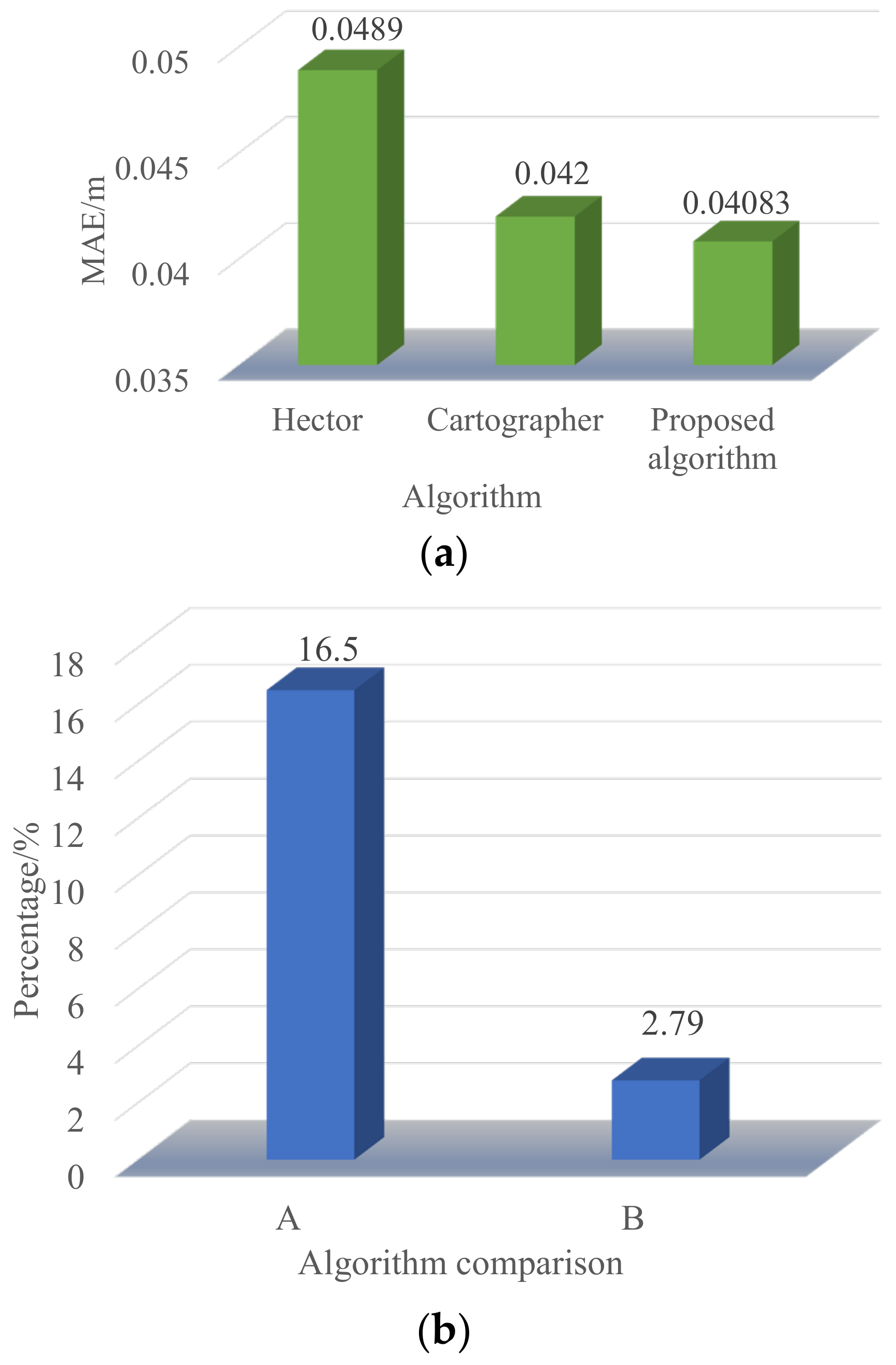

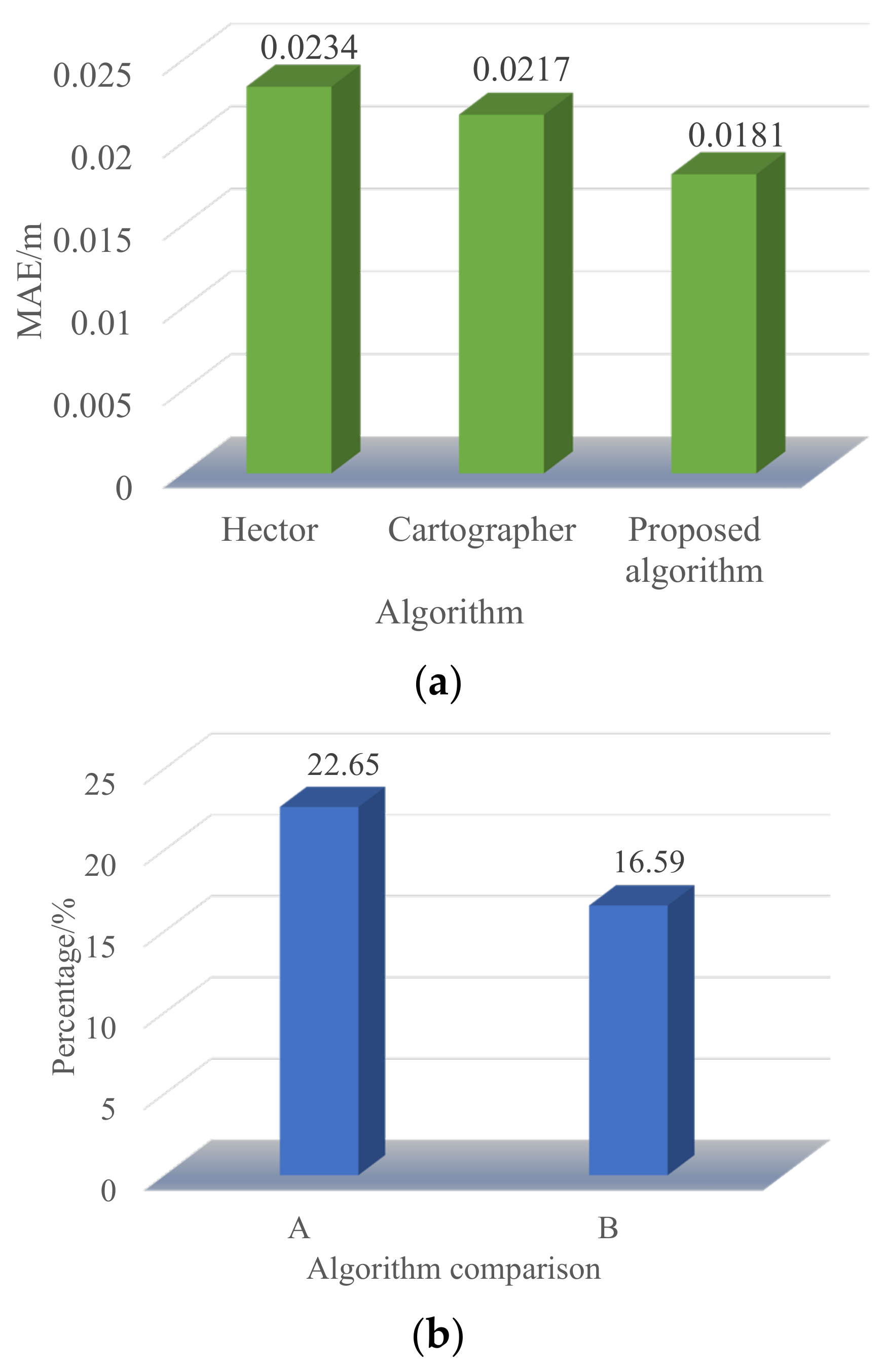

6.3.1. Trajectory Error Analysis

- (1)

- Promenade test scenario

- (2)

- Office building test scenario

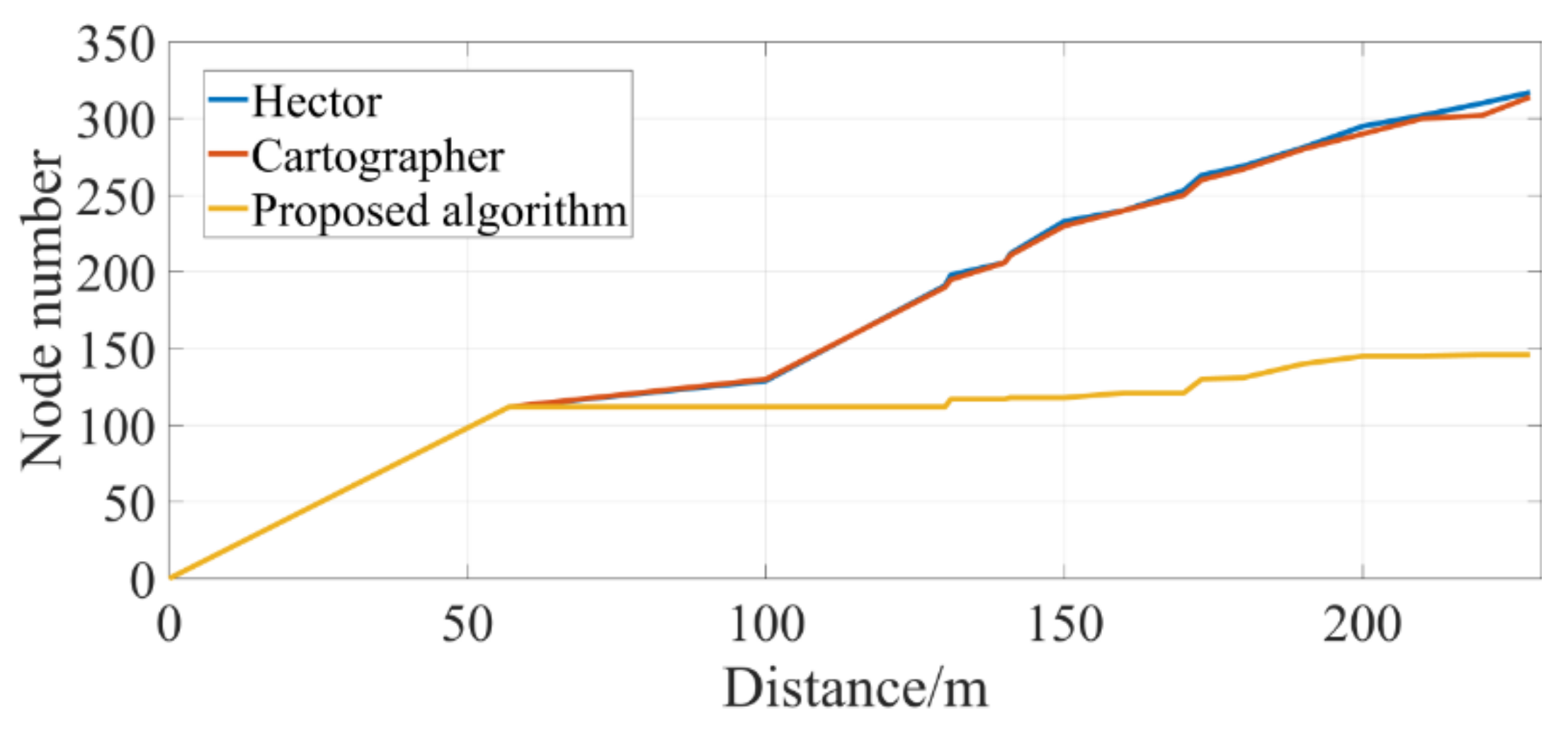

6.3.2. Memory Occupancy Analysis

7. Conclusions

- (1)

- Introducing a weighted SDF map and ground constraints, and solving with the L–M method, reduces localisation errors, improves the matching accuracy of front-end scan matching and increases robustness on uneven road surfaces.

- (2)

- Combining closed-loop detection with key frame selection, so that loop closures are searched for only at key frames, shortens the time needed for closed-loop detection.

- (3)

- The proposed factor graph elimination algorithm adds a sliding window to the chain factor graph model, which retains the historical state information within the window and improves fault tolerance. To avoid high-dimensional matrix operations, the elimination algorithm transforms the factor graph into a Bayesian network and sequentially marginalises the historical states, reducing the matrix dimension and the memory usage.
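For a linear(ised) Gaussian system, marginalising the oldest state when the sliding window advances can be written in information form as a Schur complement. The notation below (information matrix $\Lambda$, information vector $\eta$, subscript $m$ for the state being marginalised and $r$ for the states kept in the window) is introduced here for illustration and is not taken from the paper.

```latex
\Lambda =
\begin{bmatrix} \Lambda_{mm} & \Lambda_{mr} \\ \Lambda_{rm} & \Lambda_{rr} \end{bmatrix},
\qquad
\eta = \begin{bmatrix} \eta_m \\ \eta_r \end{bmatrix}

\Lambda_r^{\ast} = \Lambda_{rr} - \Lambda_{rm}\,\Lambda_{mm}^{-1}\,\Lambda_{mr},
\qquad
\eta_r^{\ast} = \eta_r - \Lambda_{rm}\,\Lambda_{mm}^{-1}\,\eta_m
```

The pair $(\Lambda_r^{\ast}, \eta_r^{\ast})$ acts as a prior on the remaining states, so the information discarded with $x_m$ is summarised rather than lost, and the matrix that must be stored scales with the window length instead of with the full trajectory.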

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Hector | Cartographer | Proposed Algorithm |
|---|---|---|---|
| Max error/m | 0.0860 | 0.0780 | 0.0770 |
| Min error/m | 0.0310 | 0.0250 | 0.0240 |
| Mean absolute error (MAE)/m | 0.0489 | 0.0420 | 0.0408 |

| Parameter | Hector | Cartographer | Proposed Algorithm |
|---|---|---|---|
| Max error/m | 0.0270 | 0.0250 | 0.0245 |
| Min error/m | 0.0200 | 0.0170 | 0.0165 |
| Mean absolute error (MAE)/m | 0.0234 | 0.0217 | 0.0181 |
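The relative improvement in MAE implied by the two trajectory-error tables above can be computed directly from the reported values. The dictionary keys `first` and `second` are placeholders, since the tables carry no captions identifying their test scenes.

```python
# MAE values (m) copied from the two trajectory-error tables above.
tables = {
    "first": {"Hector": 0.0489, "Cartographer": 0.0420, "Proposed": 0.0408},
    "second": {"Hector": 0.0234, "Cartographer": 0.0217, "Proposed": 0.0181},
}

def relative_reduction(baseline, proposed):
    """Percentage reduction of the proposed MAE relative to a baseline MAE."""
    return 100.0 * (baseline - proposed) / baseline

reductions = {
    name: {b: round(relative_reduction(t[b], t["Proposed"]), 1) for b in ("Hector", "Cartographer")}
    for name, t in tables.items()
}
for name, r in reductions.items():
    print(name, r)
```

On these numbers the proposed algorithm's MAE is about 16.6% lower than Hector and 2.9% lower than Cartographer in the first table, and about 22.6% and 16.6% lower in the second.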

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, X.; Li, P.; Li, Q.; Li, Z. Two-Dimensional-Simultaneous Localisation and Mapping Study Based on Factor Graph Elimination Optimisation. Sustainability 2023, 15, 1172. https://doi.org/10.3390/su15021172