A Review of Deep Learning-Based Vehicle Motion Prediction for Autonomous Driving

Abstract

1. Introduction

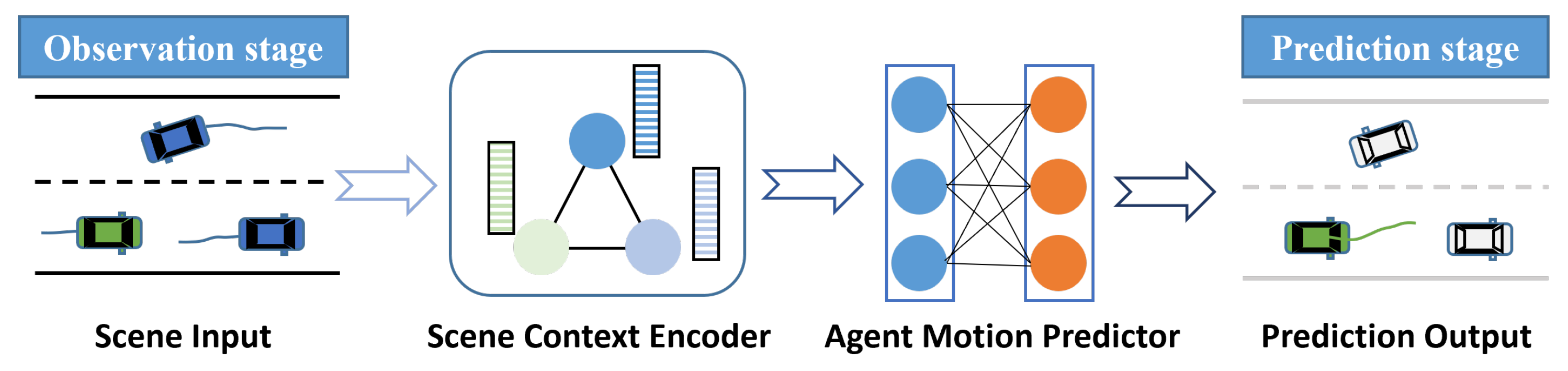

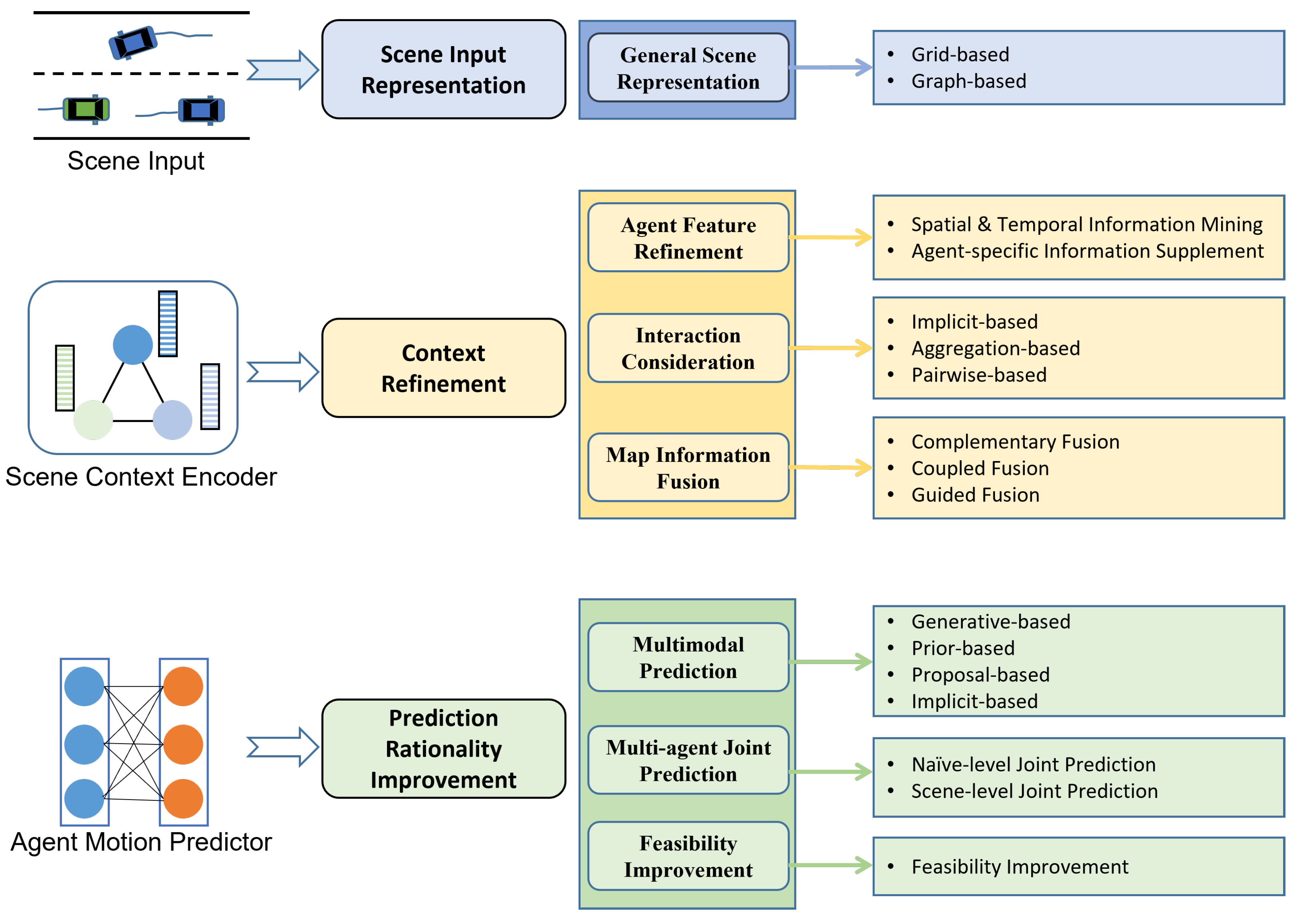

2. Basics, Challenges, and Classification

2.1. Basic Concepts and Terminology

2.2. Implementation Paradigm and Mathematical Expression

2.3. Current Open Challenges

- There is inter-dependency [28,30] or complex interactions [25,31,32,33] within the scene. For example, when a target vehicle wants to change lanes, it needs to consider the driving states of surrounding vehicles. Meanwhile, the lane-change actions taken by the target vehicle will also affect surrounding vehicles. Therefore, the prediction model should consider the state of TVs and the interactions in the scene.

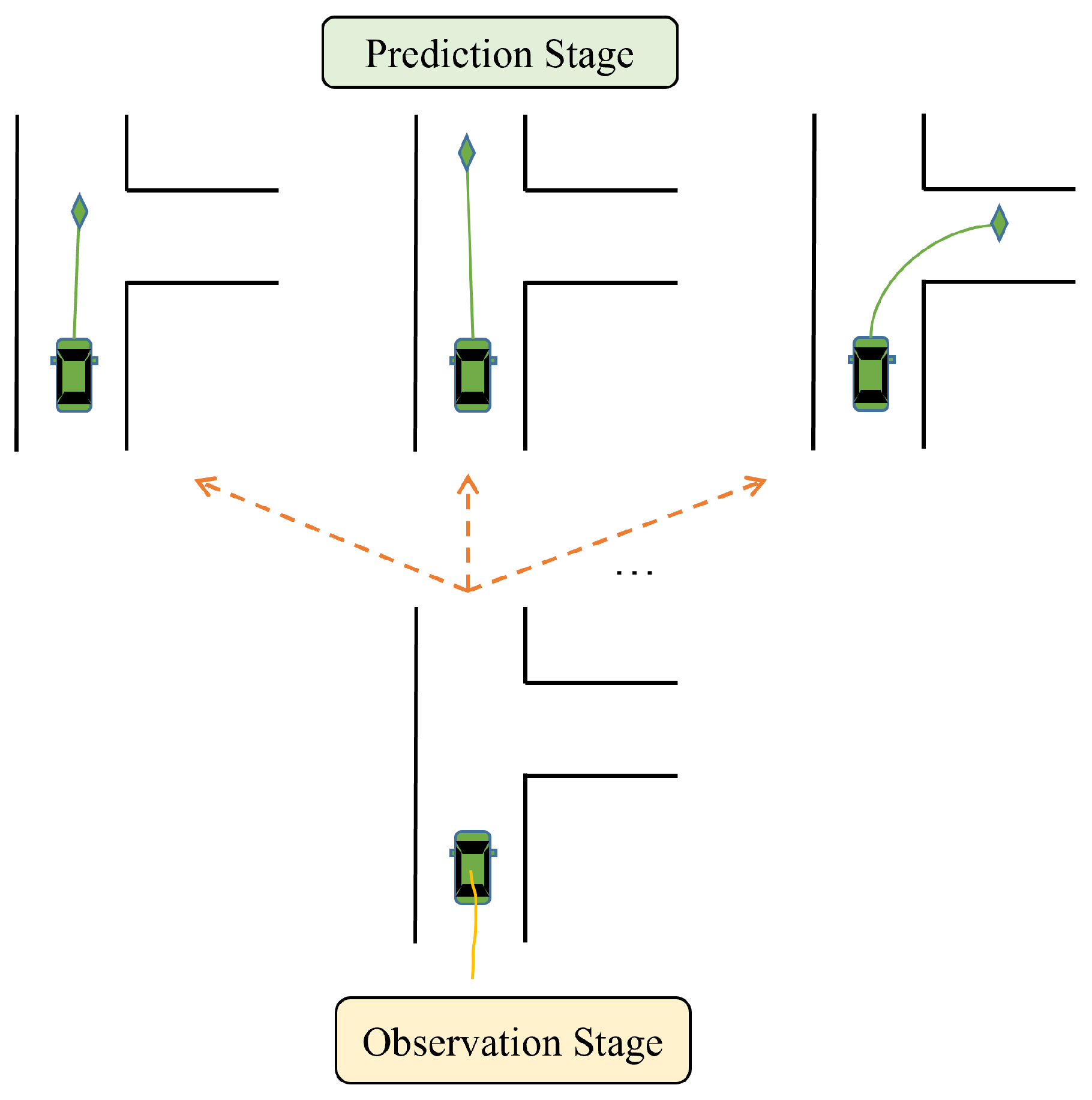

- Another difficulty is that vehicle motion is multimodal [25,28,30,34,35,36]. Drivers’ intentions can hardly be observed directly, and drivers have different driving styles, so the future motion of TVs is highly uncertain. For example, at the junction in Figure 2, the target vehicle may go straight or turn right despite identical historical input information, and, depending on the driving style, it may travel at different speeds when going straight. Many current motion prediction methods represent this multimodality with multiple predicted trajectories. Multimodal trajectory prediction requires the model to explore modal diversity effectively, and the set of predicted trajectories should include trajectories close to the ground truth.

- Future motion states of TVs are often constrained by static map elements such as lane structure and traffic rules, e.g., vehicles in right-turn lanes need to perform right turns. Thus, models should effectively integrate the map information to extract full context features related to the future motion of TVs.

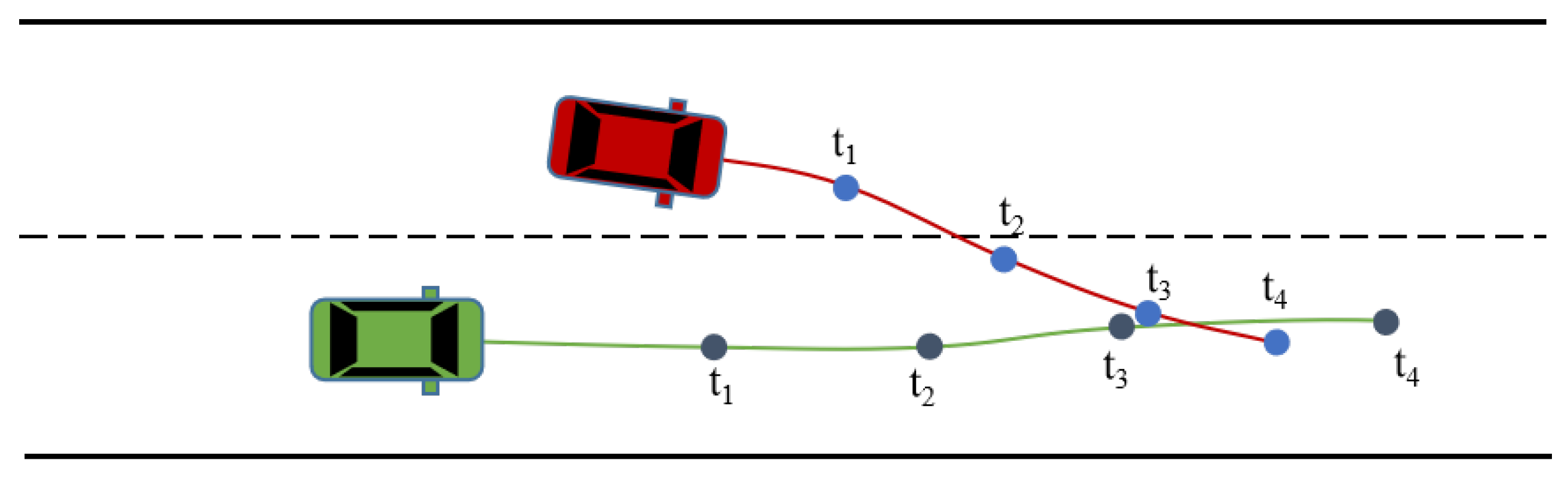

- Many methods only make predictions for a single target vehicle, i.e., the number of TVs always equals 1. But in dense scenarios, it may be necessary to predict the motion states of several or all vehicles around the EV. More generally, the number of TVs varies over time. Therefore, models are required to predict multiple target agents jointly, with a number of TVs that can change flexibly with the current traffic conditions. Moreover, the joint prediction of multiple agents needs to consider the mutual coordination of the future motion states of each vehicle, e.g., there should be no overlap of future trajectories between vehicles at any moment.

- DL-based methods can easily consider a variety of input information. However, as the type and number of inputs increase, the complexity of encoding input increases and may cause confusion in learning different types of information. So, the prediction model needs to efficiently and adequately represent the input scene information to better encode and extract features. Furthermore, DL-based prediction models need to extract the scene context related to the motion prediction from the pre-processed input information, and how to extract the full context feature is still an open challenge in this field.

- The practical deployment of prediction models is also a challenge. Firstly, many works assume that the model has access to the complete observation of RVs. However, tracks may be lost during actual driving due to occlusion, and achieving accurate target vehicle prediction from incomplete input remains a problem. Secondly, many prediction methods treat the prediction function as an independent module, lacking links with other modules of autonomous driving systems. In addition, there is an issue of timeliness in practical deployment, especially for models using complex deep neural networks, which consume a large amount of computational resources when running.

2.4. Classification

3. Scene Input Representation

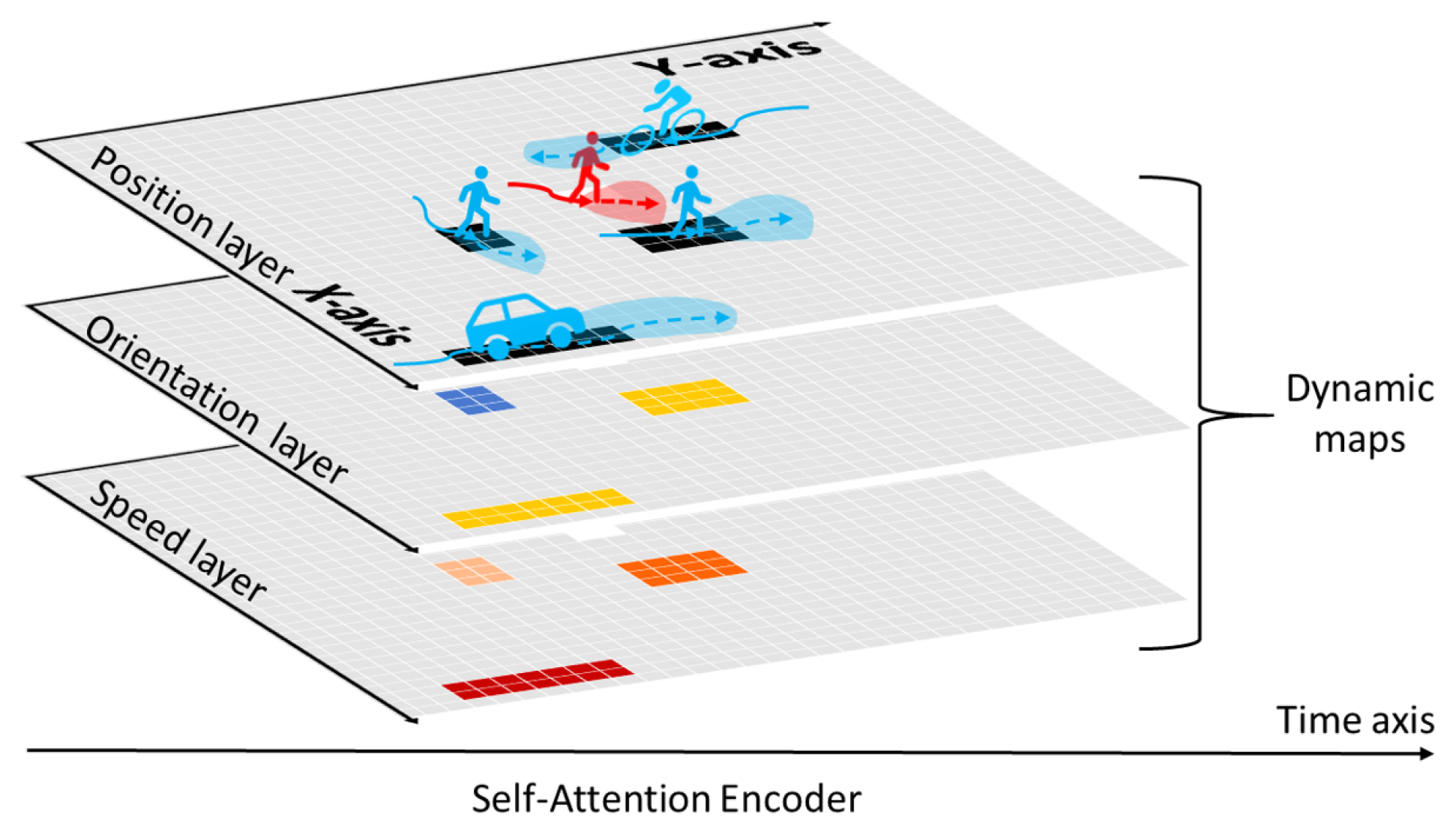

3.1. Grid-Based

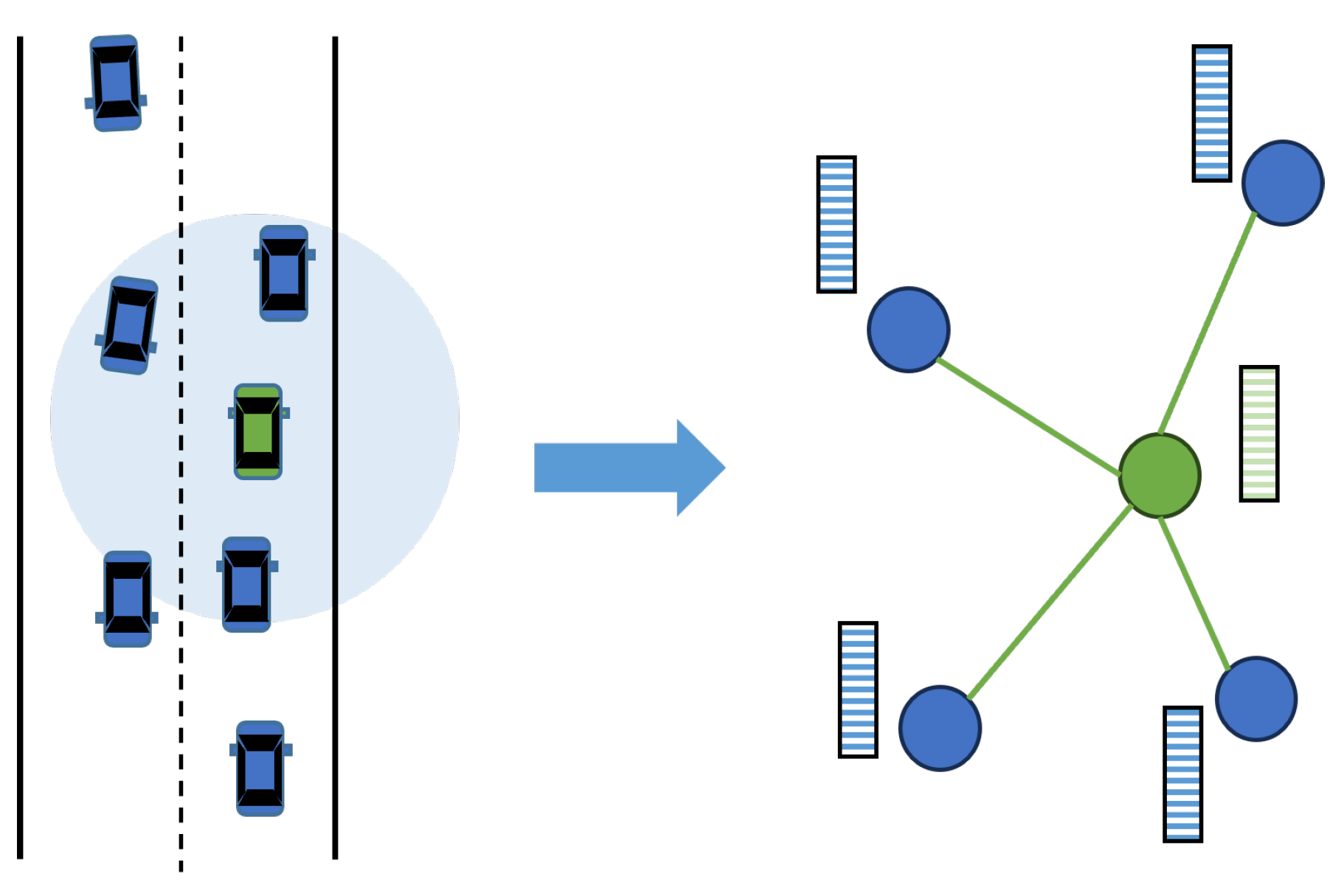

3.2. Graph-Based

4. Context Refinement

4.1. Agent Feature Refinement

4.1.1. Spatial and Temporal Information Mining

4.1.2. Agent-Specific Information Supplement

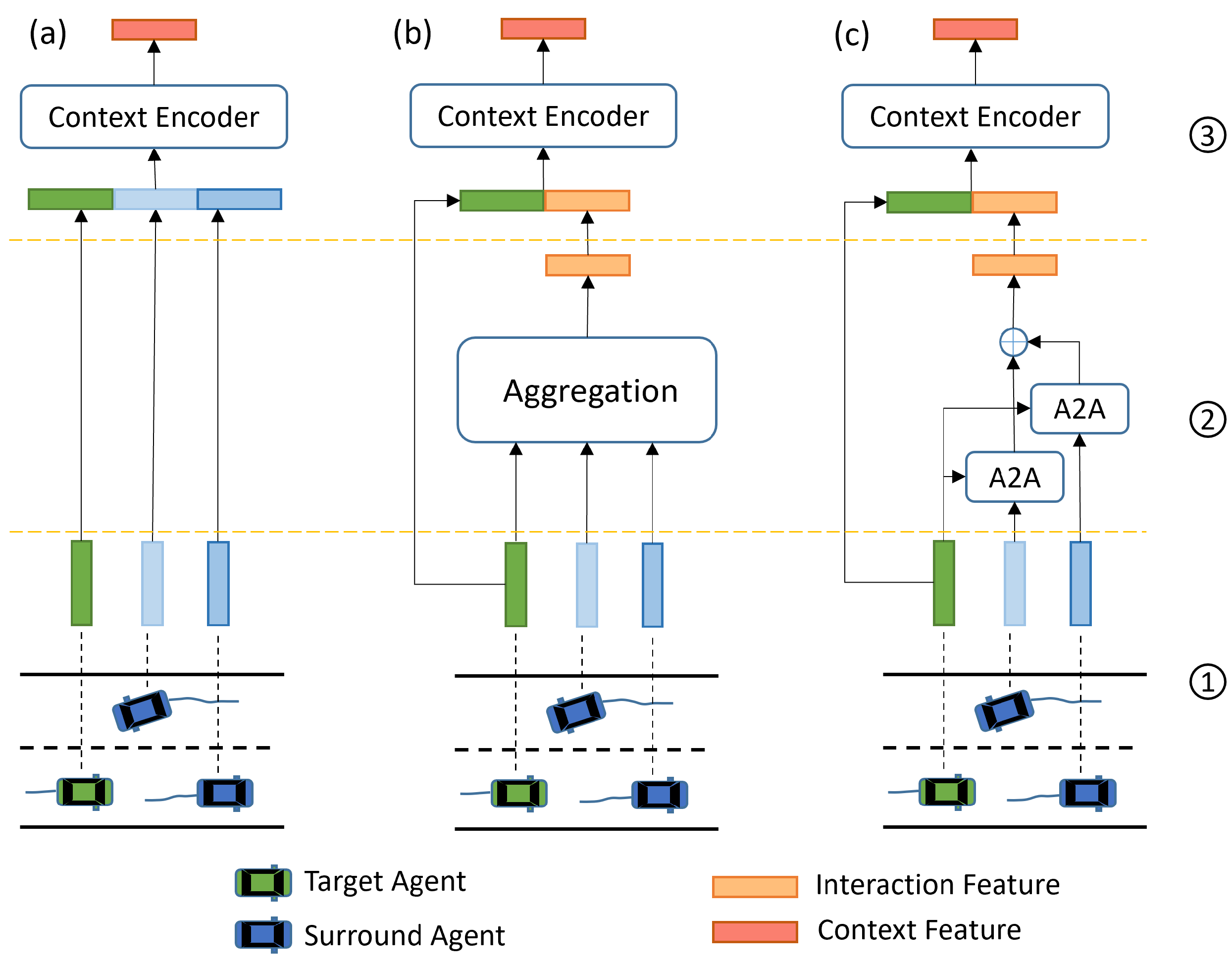

4.2. Interaction Consideration

4.2.1. Implicit-Based

4.2.2. Aggregation-Based

4.2.3. Pairwise-Based

4.3. Map Information Fusion

4.3.1. Complementary Fusion

4.3.2. Coupled Fusion

4.3.3. Guided Fusion

5. Prediction Rationality Improvement

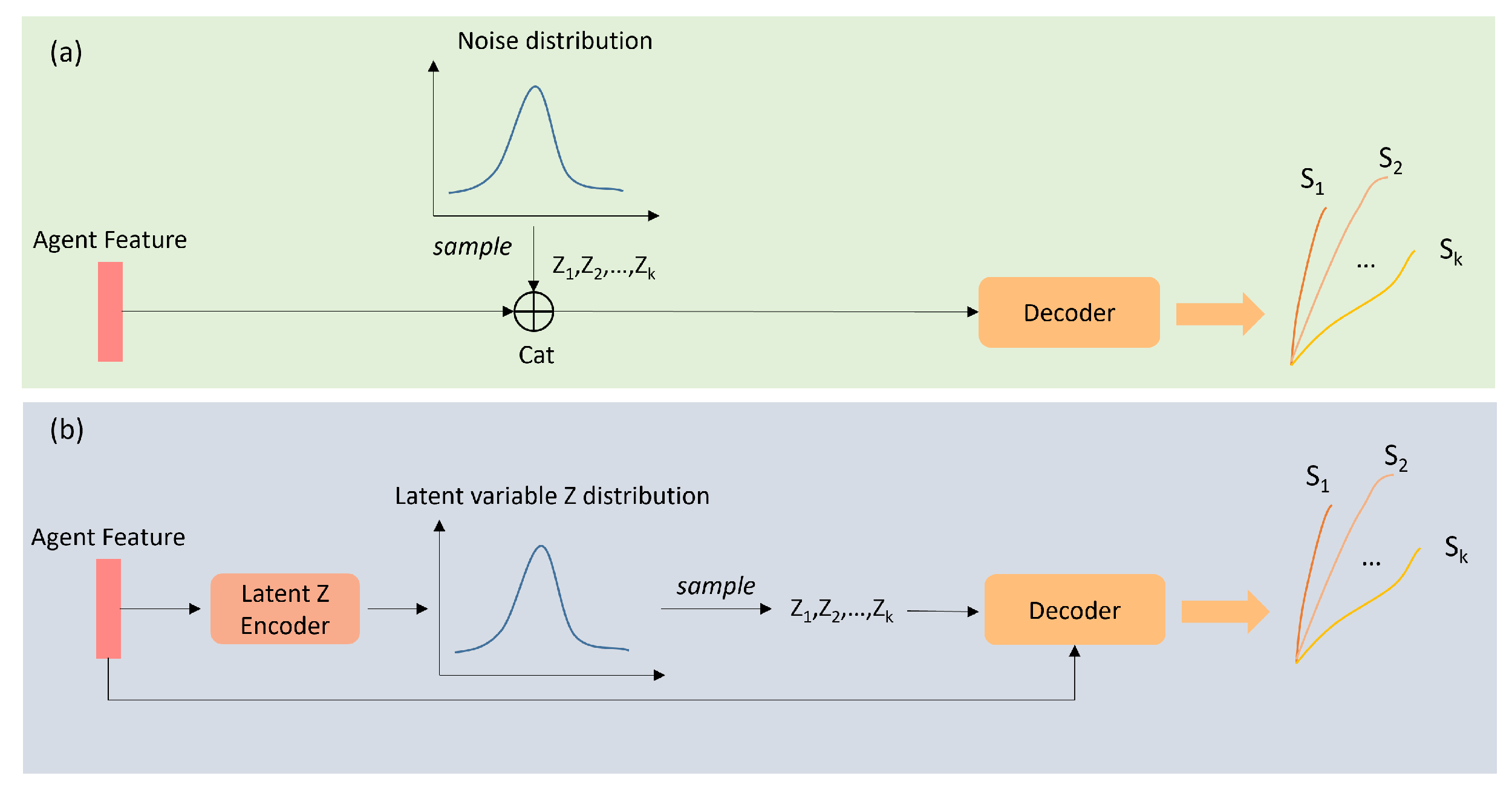

5.1. Multimodal Prediction

5.1.1. Generative-Based

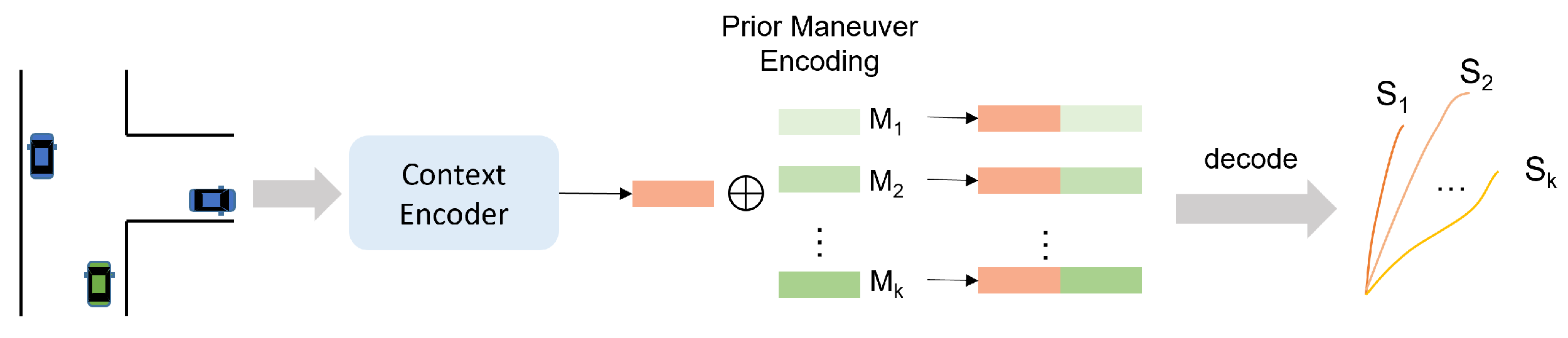

5.1.2. Prior-Based

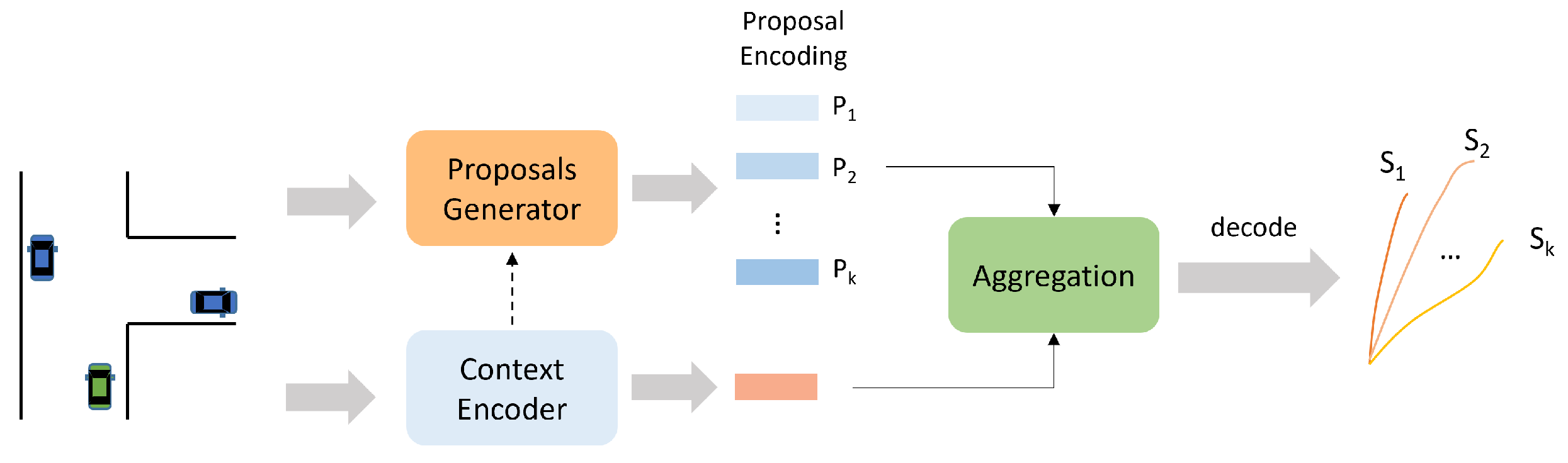

5.1.3. Proposal-Based

5.1.4. Implicit-Based

5.2. Multi-Agent Joint Prediction

5.2.1. Naïve-Level Joint Prediction

5.2.2. Scene-Level Joint Prediction

5.3. Feasibility Improvement

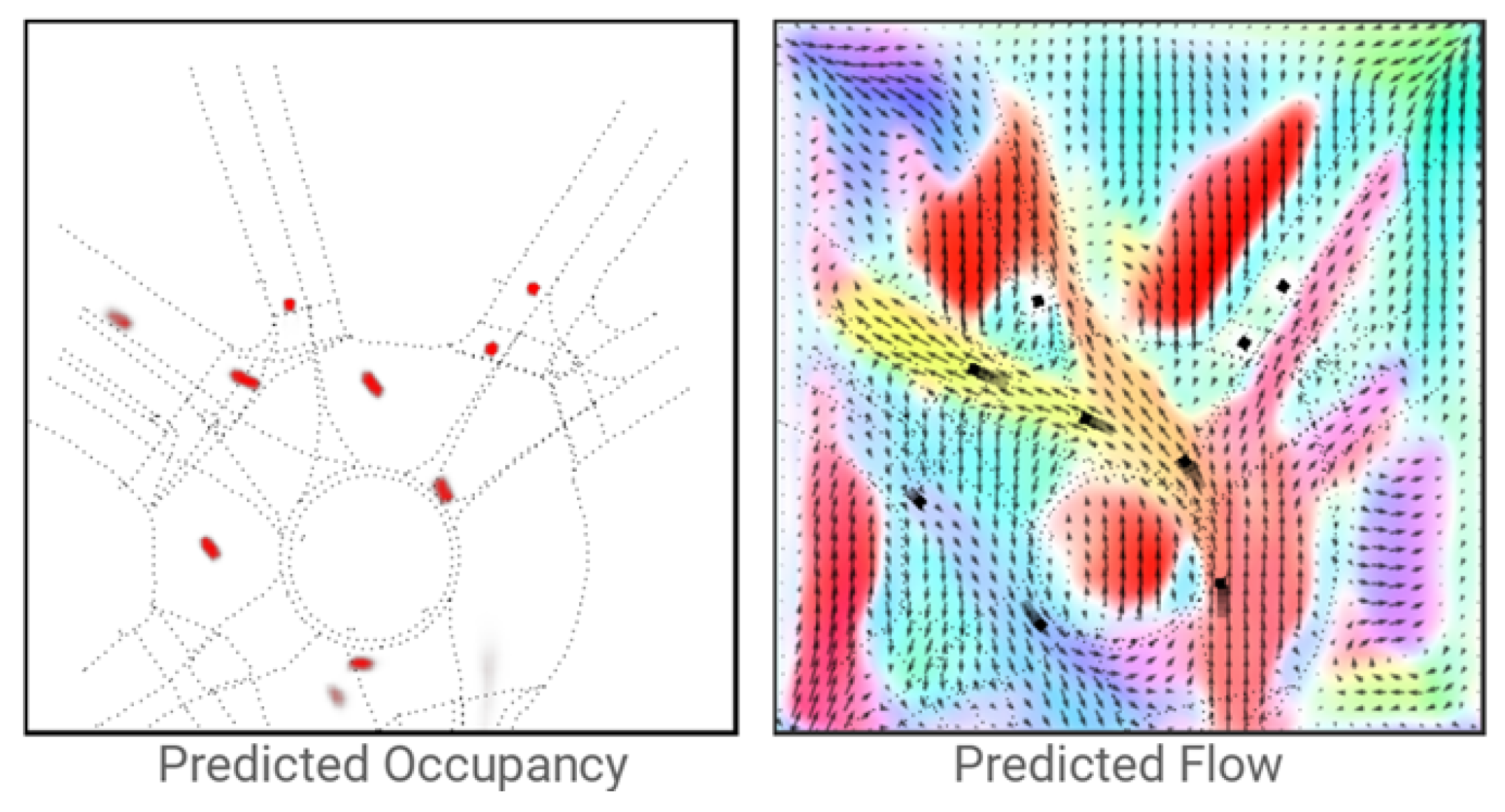

6. Occupancy Flow Prediction

7. Public Motion Prediction Datasets

7.1. Aerial Perspective Dataset

7.2. Vehicle Perspective Dataset

8. Evaluation Metrics and Future Potential Directions

8.1. Trajectory-Based Metrics

8.1.1. Displacement Metrics for Single-Modal Prediction

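- Final Displacement Error (FDE): This metric calculates the L2 distance between the predicted trajectory endpoint and the ground truth endpoint:

  $$\mathrm{FDE} = \sqrt{\left(x_{T} - x_{T}^{gt}\right)^{2} + \left(y_{T} - y_{T}^{gt}\right)^{2}}$$

  where $(x_{T}, y_{T})$ represents the predicted 2D endpoint of a TV, the subscript $T$ is the total number of timesteps in the prediction horizon, and the superscript $gt$ represents the ground truth value. The trajectory endpoint is related to the TV’s driving intention, so it is important to evaluate the accuracy of the endpoint prediction.

- Average Displacement Error (ADE): This metric measures the overall distance deviation of the predicted trajectory from the ground truth trajectory:

  $$\mathrm{ADE} = \frac{1}{T} \sum_{t=1}^{T} \sqrt{\left(x_{t} - x_{t}^{gt}\right)^{2} + \left(y_{t} - y_{t}^{gt}\right)^{2}}$$

  where $(x_{t}, y_{t})$ represents the predicted point at timestep $t$. The same variables are used in later formulas.

- Root Mean Square Error (RMSE): This metric is the square root of the mean, over all timesteps, of the squared distances between a single predicted trajectory and the ground truth trajectory:

  $$\mathrm{RMSE} = \sqrt{\frac{1}{T} \sum_{t=1}^{T} \left[\left(x_{t} - x_{t}^{gt}\right)^{2} + \left(y_{t} - y_{t}^{gt}\right)^{2}\right]}$$

- Mean Absolute Error (MAE): This metric is the average absolute error between the predicted trajectory and the ground truth trajectory, and is generally computed separately in the horizontal and vertical directions:

  $$\mathrm{MAE}_{x} = \frac{1}{T} \sum_{t=1}^{T} \left|x_{t} - x_{t}^{gt}\right|, \qquad \mathrm{MAE}_{y} = \frac{1}{T} \sum_{t=1}^{T} \left|y_{t} - y_{t}^{gt}\right|$$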
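The four single-modal metrics above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the function name `displacement_metrics`, the `(T, 2)` array layout, and the reading of RMSE as the square root of the mean squared point distance are assumptions made here.

```python
import numpy as np

def displacement_metrics(pred, gt):
    """Single-modal displacement metrics for one trajectory.

    pred, gt: arrays of shape (T, 2) holding (x, y) per timestep
    (layout assumed for this sketch).
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    diff = pred - gt                      # per-timestep (dx, dy)
    dist = np.linalg.norm(diff, axis=1)   # per-timestep L2 distance
    return {
        "FDE": float(dist[-1]),                        # endpoint distance
        "ADE": float(dist.mean()),                     # mean distance
        "RMSE": float(np.sqrt((dist ** 2).mean())),    # root mean squared distance
        "MAE_x": float(np.abs(diff[:, 0]).mean()),     # horizontal error
        "MAE_y": float(np.abs(diff[:, 1]).mean()),     # vertical error
    }

# Toy example: the prediction drifts 0, 1, and 2 units in y.
pred = np.array([[1.0, 0.0], [2.0, 0.0], [3.0, 0.0]])
gt = np.array([[1.0, 0.0], [2.0, 1.0], [3.0, 2.0]])
metrics = displacement_metrics(pred, gt)
```

For this toy input the per-timestep distances are 0, 1, and 2, so FDE is 2 and ADE is 1, while RMSE is larger than ADE because squaring weights the late, larger errors more heavily.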

8.1.2. Displacement Metrics for Multimodal Prediction

8.1.3. Displacement Metrics for Multi-Agent Joint Prediction

8.1.4. Other Metrics for Trajectory Prediction

- Miss Rate (MR): If the deviation of the predicted trajectory’s endpoint from the true endpoint exceeds a threshold d for a sample, that sample is called a miss. The ratio of the total number of misses m to the number of all samples M is called the miss rate. MR reflects how close the model’s predicted trajectory endpoints are to the true endpoints overall. There are different ways of defining the distance threshold d, e.g., refs. [110,112] use a predefined fixed distance value. However, the endpoint deviation tends to increase with the prediction horizon because the longer the time, the higher the motion uncertainty. In addition, the initial velocity also influences the endpoint deviation. Therefore, ref. [124] defines the distance threshold based on the prediction horizon and the initial velocity of the target agent: the longer the prediction horizon and the larger the initial velocity, the larger the deviation threshold.

- Overlap Rate (OR): OR places higher demands on the physical feasibility of the prediction model. If the predicted trajectory of a specific agent overlaps geometrically with another agent at any timestep in the prediction stage, this predicted trajectory is counted as an overlap [124], and the OR is the ratio of the number of overlapping trajectories to the number of trajectories of all predicted agents. The dataset has to provide the size and orientation of the detected objects to determine whether an overlap occurs.

- Mean Average Precision (mAP): WOMD [124] introduces this metric, which is often used in object detection, to help evaluate multimodal trajectory prediction. Ref. [124] first groups the target agents according to the shape of their future trajectories (e.g., straight trajectories, left-turn trajectories, etc.), and then calculates mAP within each group. Each agent has only one True Positive (TP) predicted trajectory, which is determined based on MR; if more than one trajectory meets the MR threshold, the one with the highest confidence is taken as the TP. The multimodal predicted trajectories of the target agents are sorted by probability value, and the precision and recall at each predicted trajectory can be obtained from the highest to the lowest confidence level. The mAP can then be calculated in the same way as in the object detection task. The mAP value for each group is obtained and then averaged over all groups as the final result.
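As a rough illustration of the first two metrics in this list, the sketch below computes MR with a fixed distance threshold and a simplified OR. Several things here are assumptions and not taken from the cited works: the function names, the 2.0 threshold, and, most importantly, the approximation of each agent's footprint by a circle. A faithful OR would test oriented bounding boxes built from the size and heading provided by the dataset.

```python
import numpy as np

def miss_rate(pred_endpoints, gt_endpoints, threshold=2.0):
    """Fraction of samples whose endpoint error exceeds a fixed threshold.

    pred_endpoints, gt_endpoints: arrays of shape (M, 2).
    threshold: fixed distance threshold d (value chosen for illustration).
    """
    pred = np.asarray(pred_endpoints, dtype=float)
    gt = np.asarray(gt_endpoints, dtype=float)
    err = np.linalg.norm(pred - gt, axis=1)
    return float((err > threshold).mean())

def overlap_rate(trajs, radii):
    """Simplified overlap rate using circular footprints (an assumption).

    trajs: (N, T, 2) predicted positions of N agents over T timesteps.
    radii: (N,) radius of the circle approximating each agent.
    """
    trajs = np.asarray(trajs, dtype=float)
    radii = np.asarray(radii, dtype=float)
    n = trajs.shape[0]
    # Pairwise centre distances at every timestep, shape (N, N, T).
    dist = np.linalg.norm(trajs[:, None] - trajs[None, :], axis=-1)
    limit = (radii[:, None] + radii[None, :])[:, :, None]
    hit = dist < limit
    hit[np.arange(n), np.arange(n), :] = False   # an agent cannot hit itself
    overlapped = hit.any(axis=(1, 2))            # any partner, any timestep
    return float(overlapped.mean())

# Endpoint errors are 1.0, 4.0, and 0.5, so one of three samples is a miss.
mr = miss_rate([[0, 0], [5, 5], [10, 0]], [[0, 1], [5, 9], [10, 0.5]])

# Agents 0 and 1 come within 0.5 of each other at the second timestep.
trajs = np.array([
    [[0.0, 0.0], [1.0, 0.0]],
    [[5.0, 0.0], [1.5, 0.0]],
    [[0.0, 10.0], [0.0, 20.0]],
])
orate = overlap_rate(trajs, radii=[1.0, 1.0, 1.0])
```

A horizon- and velocity-dependent threshold, as described for ref. [124], could be substituted by passing a per-sample array as `threshold`, since the comparison broadcasts elementwise.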

8.2. Occupancy-Based Metrics

- Area Under the Curve (AUC): In [29,42,106], AUC is used to evaluate the predicted occupancy grid map by comparing the predicted occupancy grid map with the ground truth occupancy grid map at keyframe t. Each cell in the predicted map holds the probability of being occupied, while each cell in the ground truth map is 0 or 1, indicating whether the cell is occupied, even partially, by an object. A set of linearly spaced thresholds in [0,1] is selected, the PR curve is plotted based on these thresholds, and the AUC is then obtained by calculating the area under the curve.

- Soft-Intersection-over-Union (Soft-IoU): This metric calculates the intersection-over-union ratio of the predicted occupancy grid map and the ground truth occupancy grid map at keyframe t.

- End Point Error (EPE): This metric evaluates the predicted occupancy flow field, whose cells store the 2D motion vectors of the corresponding grid cells at keyframe t. The EPE calculates the mean square error of the motion vectors between the predicted flow field and the ground truth flow field at keyframe t, restricted to the cells with non-zero ground truth occupancy, as shown in Equation (9), where M denotes the total number of cells with non-zero ground truth occupancy.

- In addition, the occupancy flow depicts the change in occupancy between two keyframes, so the occupancy flow can be evaluated in combination with the occupancy grid. Ref. [29] uses the flow field together with the occupancy grid of the previous frame to recover the occupancy grid map of the current frame. Then, the AUC and Soft-IoU metrics are calculated against the true occupancy grid at the current keyframe t.
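The occupancy metrics above can be sketched as follows. This is an illustrative NumPy version under stated assumptions, not the evaluation code of the cited works: the function names, the array layouts, the number of thresholds, and the extra PR anchor point (recall 0, precision 1) are choices made here. The EPE below uses the mean L2 distance between flow vectors, which is the usual end-point-error convention; a squared-error variant would square the norm before averaging.

```python
import numpy as np

def soft_iou(pred_occ, gt_occ):
    """Soft IoU between predicted probabilities and a 0/1 ground truth grid."""
    pred_occ = np.asarray(pred_occ, dtype=float)
    gt_occ = np.asarray(gt_occ, dtype=float)
    inter = (pred_occ * gt_occ).sum()
    union = pred_occ.sum() + gt_occ.sum() - inter
    return float(inter / union) if union > 0 else 0.0

def end_point_error(pred_flow, gt_flow, gt_occ):
    """Mean L2 flow error over cells with non-zero ground truth occupancy.

    pred_flow, gt_flow: (H, W, 2) motion vectors; gt_occ: (H, W).
    """
    mask = np.asarray(gt_occ) > 0
    if not mask.any():
        return 0.0
    diff = np.asarray(pred_flow, dtype=float) - np.asarray(gt_flow, dtype=float)
    return float(np.linalg.norm(diff, axis=-1)[mask].mean())

def pr_auc(pred_occ, gt_occ, num_thresholds=101):
    """Area under the precision-recall curve from linearly spaced thresholds."""
    p = np.asarray(pred_occ, dtype=float).ravel()
    g = np.asarray(gt_occ).ravel() > 0
    recalls, precisions = [0.0], [1.0]   # anchor point (convention assumed here)
    # Descending thresholds make recall non-decreasing along the curve.
    for th in np.linspace(1.0, 0.0, num_thresholds):
        pos = p >= th
        tp = np.count_nonzero(pos & g)
        fp = np.count_nonzero(pos & ~g)
        fn = np.count_nonzero(~pos & g)
        if tp + fp == 0:
            continue                     # precision undefined, skip this point
        precisions.append(tp / (tp + fp))
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)
    r = np.array(recalls)
    q = np.array(precisions)
    return float(np.sum(np.diff(r) * (q[1:] + q[:-1]) / 2.0))  # trapezoid rule

gt_occ = np.array([[1, 0], [0, 1]])
pred_occ = np.array([[0.5, 0.0], [0.0, 1.0]])
iou = soft_iou(pred_occ, gt_occ)

gt_flow = np.zeros((2, 2, 2))
pred_flow = np.zeros((2, 2, 2))
pred_flow[0, 0] = [3.0, 4.0]     # error of length 5 on an occupied cell
pred_flow[0, 1] = [10.0, 10.0]   # unoccupied cell, ignored by the mask
epe = end_point_error(pred_flow, gt_flow, gt_occ)

auc_perfect = pr_auc(gt_occ, gt_occ)
```

Restricting EPE to occupied cells matters because flow in empty cells is undefined in the ground truth, so errors there would otherwise dominate the average.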

8.3. Comparison of State-of-the-Art Methods

| Models | Classification of Improvement Design | | | Metrics (K = 6) | | | |
|---|---|---|---|---|---|---|---|
| | Scene Input Representation | Context Refinement | Prediction Rationality Improvement | mAP | minADE | minFDE | MR |
| StopNet [42] | General Scene Representation | Interaction Consideration, Map Information Fusion | Multimodal Prediction | - | 0.51 | 1.49 | 0.38 |
| SceneTransformer [58] | - | Interaction Consideration | Multimodal Prediction, Multi-agent Joint Prediction | 0.28 | 0.61 | 1.21 | 0.16 |
| DenseTNT [48] | General Scene Representation | Interaction Consideration, Map Information Fusion | Multimodal Prediction | 0.33 | 1.04 | 1.55 | 0.18 |
| MPA [97] | - | Interaction Consideration, Map Information Fusion | Multimodal Prediction | 0.39 | 0.59 | 1.25 | 0.16 |
| Multipath [94] | General Scene Representation | Interaction Consideration | Multimodal Prediction | 0.41 | 0.88 | 2.04 | 0.34 |
| Golfer [80] | - | Interaction Consideration, Map Information Fusion | Multimodal Prediction | 0.41 | 0.55 | 1.16 | 0.14 |
| Multipath++ [60] | - | Interaction Consideration, Map Information Fusion, Agent Feature Refinement | Multimodal Prediction | 0.41 | 0.56 | 1.16 | 0.13 |
| MTRA [51] | - | Interaction Consideration, Map Information Fusion | Multimodal Prediction | 0.45 | 0.56 | 1.13 | 0.12 |

| Models | Classification of Improvement Design | | | Metrics (K = 6) | | |
|---|---|---|---|---|---|---|
| | Scene Input Representation | Context Refinement | Prediction Rationality Improvement | minADE | minFDE | MR |
| DenseTNT [48] | General Scene Representation | Interaction Consideration, Map Information Fusion | Multimodal Prediction | 0.43 | 0.80 | 0.06 |
| Multipath [94] | General Scene Representation | Interaction Consideration | Multimodal Prediction | 0.32 | 0.88 | - |
| TNT [47] | General Scene Representation | Interaction Consideration, Map Information Fusion | Multimodal Prediction | 0.21 | 0.67 | - |
| GOHOME [79] | - | Interaction Consideration | Multimodal Prediction | 0.20 | 0.60 | 0.05 |
| StopNet [42] | General Scene Representation | Interaction Consideration, Map Information Fusion | Multimodal Prediction | 0.20 | 0.58 | 0.02 |

| Model | Works | Year | DL-Approaches | Observation Horizon | Prediction Horizon | Training Device |
|---|---|---|---|---|---|---|
| MTP | [35] | 2019 | CNN, FC | 2 s | 3 s | Nvidia Titan X GPU × 16 |
| Multipath | [94] | 2019 | CNN | 1.1 s | 8 s | - |
| TPNet | [22] | 2020 | CNN, MLP | 2–3.2 s | 3–4.8 s | - |
| DiversityGAN | [83] | 2020 | LSTM, GAN | 2 s | 3 s | Nvidia Tesla V100 GPU × 1 |
| chenxu | [84] | 2020 | LSTM, 1D-CNN, MLP | 2 s | 3 s | - |
| SAMMP | [73] | 2020 | LSTM, AM | 2–3 s | 3–5 s | - |
| TNT | [47] | 2020 | GNN, AM, MLP | 2 s | 3 s | - |
| WIMP | [74] | 2020 | LSTM, GNN, AM | 2 s | 3 s | - |
| LaneGCN | [55] | 2020 | GCN, 1D-CNN, FPN | 2 s | 3 s | Nvidia Titan X GPU × 4 |
| PRIME | [31] | 2021 | LSTM, AM | 2 s | 3 s | - |
| HOME | [40] | 2021 | CNN, GRU, AM | 2 s | 3 s | - |
| THOMAS | [76] | 2021 | 1D-CNN, GRU, AM | 1 s | 3 s | - |
| LaneRCNN | [49] | 2021 | 1D-CNN, GNN, MLP | 2 s | 3 s | - |
| mmTransformer | [95] | 2021 | Transformer | 2 s | 3 s | - |
| DenseTNT | [48] | 2021 | GNN, AM, MLP | 1.1–2 s | 3–8 s | - |
| TPCN | [56] | 2021 | CNN, MLP | 2 s | 3 s | - |
| SceneTransformer | [58] | 2021 | Transformer | 2 s | 3 s | Nvidia Tesla V100 GPU × 1 |
| GOHOME | [79] | 2022 | 1D-CNN, GRU, GCN, AM | 2 s | 3–6 s | - |
| StopNet | [42] | 2022 | CNN | 1.1–2 s | 3–8 s | - |
| Multipath++ | [60] | 2022 | LSTM, MLP | 1.1–2 s | 3–8 s | - |
| DCMS | [96] | 2022 | CNN, MLP | 2 s | 3 s | - |
| MPA | [97] | 2022 | LSTM, MLP | 1.1 s | 8 s | - |
| Golfer | [80] | 2022 | Transformer | 1.1 s | 8 s | - |
| MTRA | [51] | 2022 | Transformer | 1.1 s | 8 s | - |
| xiaoxu | [53] | 2023 | GAT, GRU | 2 s | 3 s | Nvidia RTX 2080 Ti GPU × 1 |
| ME-GAN | [81] | 2023 | GAN, FPN | 2 s | 3 s | Nvidia RTX 3090 GPU × 1 |
| MTR++ | [127] | 2023 | Transformer | 1.1 s | 8 s | GPU × 8 (No specific type) |

8.4. Discussion of Future Directions

- Many methods only consider single-target agent prediction, while multi-agent joint prediction has more significance in practice. Though there are some multi-agent joint prediction works [58,72,78], few of them consider the coordination of the agents’ motion in the prediction phase well, which may cause invalid outputs, e.g., trajectories of multiple target vehicles overlap in the same future timestep. Therefore, the prediction model should realize multi-agent joint prediction with full consideration of the interaction in both historical and future stages and should ensure the coordination between the predicted motion states in the future.

- Most prediction methods assume that the target vehicles have complete tracking histories. In practice, however, the sensors will inevitably suffer from occlusion, so the motion inputs may be incomplete. Therefore, future prediction works should address the problem of incomplete input caused by perception failures.

- Many deep learning-based prediction models are trained and validated on a single specific dataset and may become less effective when validated on other datasets. Autonomous vehicles may often meet unfamiliar scenarios. Thus, the generalization capability of the models also needs to be improved in the future.

- Most current approaches treat the motion prediction function as a stand-alone module, ignoring the link between prediction and other functions of autonomous driving. In the future, the close coupling between the prediction module and other modules should be further considered, such as coupling the motion prediction of surrounding vehicles with the EV’s decision-making and planning. Moreover, a joint evaluation platform for prediction and decision-making could also be built.

- Occupancy flow prediction is a new form of motion prediction that aims to reason about the spatial occupancy around the EV. Occupancy flow prediction has the ability to estimate traffic dynamics, and it can even predict occluded vehicles or suddenly appearing vehicles, which is hardly possible in the form of trajectory prediction. Such models currently rely on rich inputs and complex neural networks and thus may fall short in real-time performance. Therefore, future occupancy flow prediction models should consider how to efficiently extract contextual features that can adequately characterize changes in the surrounding traffic dynamics while ensuring real-time model operation.

- Existing deep learning-based prediction methods focus on improving model prediction accuracy, but few works have adequately evaluated the timeliness of inference of prediction models. Future benchmark datasets related to vehicle motion prediction should contain quantitative metrics that could adequately evaluate the computational efficiency of models.

- Current deep learning-based prediction models characterize prediction uncertainty in two main ways: one is to output the Gaussian distribution parameters of the target vehicle states at each time step, i.e., the position mean and its covariance, such as MHA-LSTM [32]. The other is to consider multimodal outputs to simultaneously predict multiple trajectories and their probabilities, such as DenseTNT [48]. Current work based on deep learning mainly measures model uncertainty from the perspective of resultant outputs. It is often based on a priori knowledge, such as predefining a finite number of modalities. In the future, prediction uncertainty should be better considered from a modeling perspective, e.g., deep learning methods can be combined with probabilistic modeling methods.
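To make the first form of uncertainty output in the last item concrete, the sketch below scores a per-timestep Gaussian prediction (position mean and covariance) against a ground truth trajectory using the negative log-likelihood. The choice of NLL as the score, the function name, and the array layouts are assumptions made for illustration; the source does not state how the cited models are trained or evaluated.

```python
import numpy as np

def bivariate_gaussian_nll(mean, cov, gt):
    """Average negative log-likelihood of gt under per-timestep 2D Gaussians.

    mean: (T, 2) predicted position means.
    cov:  (T, 2, 2) predicted position covariances (positive definite).
    gt:   (T, 2) ground truth positions.
    """
    mean = np.asarray(mean, dtype=float)
    cov = np.asarray(cov, dtype=float)
    gt = np.asarray(gt, dtype=float)
    diff = gt - mean                                   # (T, 2)
    inv = np.linalg.inv(cov)                           # batched inverse, (T, 2, 2)
    maha = np.einsum("ti,tij,tj->t", diff, inv, diff)  # squared Mahalanobis distance
    logdet = np.log(np.linalg.det(cov))                # (T,)
    # For a 2D Gaussian: NLL = 0.5 * (maha + log|cov|) + log(2 * pi)
    nll = 0.5 * (maha + logdet) + np.log(2.0 * np.pi)
    return float(nll.mean())

T = 2
mean = np.zeros((T, 2))
cov = np.tile(np.eye(2), (T, 1, 1))            # unit covariance at every timestep
nll_exact = bivariate_gaussian_nll(mean, cov, np.zeros((T, 2)))
nll_offset = bivariate_gaussian_nll(mean, cov, np.array([[1.0, 0.0], [0.0, 0.0]]))
```

A score of this kind rewards a model both for placing the mean near the ground truth and for reporting a covariance that matches its actual error, which a displacement metric alone cannot capture.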

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Hussain, R.; Zeadally, S. Autonomous cars: Research results, issues, and future challenges. IEEE Commun. Surv. Tutor. 2018, 21, 1275–1313.

- Alahi, A.; Goel, K.; Ramanathan, V.; Robicquet, A.; Fei-Fei, L.; Savarese, S. Social lstm: Human trajectory prediction in crowded spaces. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 961–971.

- Gupta, A.; Johnson, J.; Fei-Fei, L.; Savarese, S.; Alahi, A. Social gan: Socially acceptable trajectories with generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2255–2264.

- Xue, H.; Huynh, D.Q.; Reynolds, M. Bi-prediction: Pedestrian trajectory prediction based on bidirectional LSTM classification. In Proceedings of the 2017 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Sydney, Australia, 29 November–1 December 2017; pp. 1–8.

- Zhang, P.; Ouyang, W.; Zhang, P.; Xue, J.; Zheng, N. Sr-lstm: State refinement for lstm towards pedestrian trajectory prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 12085–12094.

- Zhou, Y.; Wu, H.; Cheng, H.; Qi, K.; Hu, K.; Kang, C.; Zheng, J. Social graph convolutional LSTM for pedestrian trajectory prediction. IET Intell. Transp. Syst. 2021, 15, 396–405.

- Saleh, K.; Hossny, M.; Nahavandi, S. Cyclist trajectory prediction using bidirectional recurrent neural networks. In Proceedings of the AI 2018: Advances in Artificial Intelligence: 31st Australasian Joint Conference, Wellington, New Zealand, 11–14 December 2018; pp. 284–295.

- Gao, H.; Su, H.; Cai, Y.; Wu, R.; Hao, Z.; Xu, Y.; Wu, W.; Wang, J.; Li, Z.; Kan, Z. Trajectory prediction of cyclist based on dynamic Bayesian network and long short-term memory model at unsignalized intersections. Sci. China Inf. Sci. 2021, 64, 172207.

- Rudenko, A.; Palmieri, L.; Herman, M.; Kitani, K.M.; Gavrila, D.M.; Arras, K.O. Human motion trajectory prediction: A survey. Int. J. Robot. Res. 2020, 39, 895–935.

- Sighencea, B.I.; Stanciu, R.I.; Căleanu, C.D. A review of deep learning-based methods for pedestrian trajectory prediction. Sensors 2021, 21, 7543.

- Ridel, D.; Rehder, E.; Lauer, M.; Stiller, C.; Wolf, D. A literature review on the prediction of pedestrian behavior in urban scenarios. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 3105–3112.

- Ammoun, S.; Nashashibi, F. Real time trajectory prediction for collision risk estimation between vehicles. In Proceedings of the 2009 IEEE 5th International Conference on Intelligent Computer Communication and Processing, Cluj-Napoca, Romania, 27–29 August 2009; pp. 417–422.

- Kaempchen, N.; Weiss, K.; Schaefer, M.; Dietmayer, K.C. IMM object tracking for high dynamic driving maneuvers. In Proceedings of the IEEE Intelligent Vehicles Symposium, Parma, Italy, 14–17 June 2004; pp. 825–830.

- Jin, B.; Jiu, B.; Su, T.; Liu, H.; Liu, G. Switched Kalman filter-interacting multiple model algorithm based on optimal autoregressive model for manoeuvring target tracking. IET Radar Sonar Navig. 2015, 9, 199–209.

- Dyckmanns, H.; Matthaei, R.; Maurer, M.; Lichte, B.; Effertz, J.; Stüker, D. Object tracking in urban intersections based on active use of a priori knowledge: Active interacting multi model filter. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011; pp. 625–630.

- Laugier, C.; Paromtchik, I.E.; Perrollaz, M.; Yong, M.; Yoder, J.D.; Tay, C.; Mekhnacha, K.; Nègre, A. Probabilistic analysis of dynamic scenes and collision risks assessment to improve driving safety. IEEE Intell. Transp. Syst. Mag. 2011, 3, 4–19.

- Qiao, S.; Shen, D.; Wang, X.; Han, N.; Zhu, W. A self-adaptive parameter selection trajectory prediction approach via hidden Markov models. IEEE Trans. Intell. Transp. Syst. 2014, 16, 284–296.

- Kumar, P.; Perrollaz, M.; Lefevre, S.; Laugier, C. Learning-based approach for online lane change intention prediction. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium (IV), Gold Coast, Australia, 23–26 June 2013; pp. 797–802.

- Aoude, G.S.; Luders, B.D.; Lee, K.K.; Levine, D.S.; How, J.P. Threat assessment design for driver assistance system at intersections. In Proceedings of the 13th International IEEE Conference on Intelligent Transportation Systems, Funchal, Portugal, 19–22 September 2010; pp. 1855–1862.

- Schreier, M.; Willert, V.; Adamy, J. Bayesian, maneuver-based, long-term trajectory prediction and criticality assessment for driver assistance systems. In Proceedings of the 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014; pp. 334–341.

- Schreier, M.; Willert, V.; Adamy, J. An integrated approach to maneuver-based trajectory prediction and criticality assessment in arbitrary road environments. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2751–2766.

- Fang, L.; Jiang, Q.; Shi, J.; Zhou, B. Tpnet: Trajectory proposal network for motion prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 6797–6806.

- Lefèvre, S.; Vasquez, D.; Laugier, C. A survey on motion prediction and risk assessment for intelligent vehicles. ROBOMECH J. 2014, 1, 1–14.

- Gomes, I.; Wolf, D. A review on intention-aware and interaction-aware trajectory prediction for autonomous vehicles. TechRxiv 2022.

- Leon, F.; Gavrilescu, M. A review of tracking and trajectory prediction methods for autonomous driving. Mathematics 2021, 9, 660.

- Karle, P.; Geisslinger, M.; Betz, J.; Lienkamp, M. Scenario understanding and motion prediction for autonomous vehicles-review and comparison. IEEE Trans. Intell. Transp. Syst. 2022, 23, 16962–16982.

- Huang, Y.; Du, J.; Yang, Z.; Zhou, Z.; Zhang, L.; Chen, H. A Survey on Trajectory-Prediction Methods for Autonomous Driving. IEEE Trans. Intell. Veh. 2022, 7, 652–674.

- Mozaffari, S.; Al-Jarrah, O.Y.; Dianati, M.; Jennings, P.; Mouzakitis, A. Deep learning-based vehicle behavior prediction for autonomous driving applications: A review. IEEE Trans. Intell. Transp. Syst. 2020, 23, 33–47.

- Mahjourian, R.; Kim, J.; Chai, Y.; Tan, M.; Sapp, B.; Anguelov, D. Occupancy flow fields for motion forecasting in autonomous driving. IEEE Robot. Autom. Lett. 2022, 7, 5639–5646.

- Kolekar, S.; Gite, S.; Pradhan, B.; Kotecha, K. Behavior prediction of traffic actors for intelligent vehicle using artificial intelligence techniques: A review. IEEE Access 2021, 9, 135034–135058.

- Song, H.; Luan, D.; Ding, W.; Wang, M.Y.; Chen, Q. Learning to predict vehicle trajectories with model-based planning. In Proceedings of the Conference on Robot Learning, London, UK, 8–11 November 2021; pp. 1035–1045.

- Messaoud, K.; Yahiaoui, I.; Verroust-Blondet, A.; Nashashibi, F. Attention based vehicle trajectory prediction. IEEE Trans. Intell. Veh. 2020, 6, 175–185.

- Casas, S.; Gulino, C.; Liao, R.; Urtasun, R. Spagnn: Spatially-aware graph neural networks for relational behavior forecasting from sensor data. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 9491–9497.

- Tang, C.; Salakhutdinov, R.R. Multiple futures prediction. In Advances in Neural Information Processing Systems; Springer: Berlin/Heidelberg, Germany, 2019; Volume 32.

- Cui, H.; Radosavljevic, V.; Chou, F.C.; Lin, T.H.; Nguyen, T.; Huang, T.K.; Schneider, J.; Djuric, N. Multimodal trajectory predictions for autonomous driving using deep convolutional networks. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 2090–2096.

- Phan-Minh, T.; Grigore, E.C.; Boulton, F.A.; Beijbom, O.; Wolff, E.M. Covernet: Multimodal behavior prediction using trajectory sets. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 14074–14083.

- Djuric, N.; Radosavljevic, V.; Cui, H.; Nguyen, T.; Chou, F.C.; Lin, T.H.; Schneider, J. Short-Term Motion Prediction of Traffic Actors for Autonomous Driving Using Deep Convolutional Networks; Uber Advanced Technologies Group: Pittsburgh, PA, USA, 2018; Volume 1, p. 6.

- Hong, J.; Sapp, B.; Philbin, J. Rules of the road: Predicting driving behavior with a convolutional model of semantic interactions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 8454–8462.

- Cheng, H.; Liao, W.; Tang, X.; Yang, M.Y.; Sester, M.; Rosenhahn, B. Exploring dynamic context for multi-path trajectory prediction. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 12795–12801.

- Gilles, T.; Sabatini, S.; Tsishkou, D.; Stanciulescu, B.; Moutarde, F. Home: Heatmap output for future motion estimation. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 500–507.

- Cheng, H.; Liao, W.; Yang, M.Y.; Rosenhahn, B.; Sester, M. Amenet: Attentive maps encoder network for trajectory prediction. ISPRS J. Photogramm. Remote Sens. 2021, 172, 253–266.

- Kim, J.; Mahjourian, R.; Ettinger, S.; Bansal, M.; White, B.; Sapp, B.; Anguelov, D. Stopnet: Scalable trajectory and occupancy prediction for urban autonomous driving. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 8957–8963.

- Hou, L.; Li, S.E.; Yang, B.; Wang, Z.; Nakano, K. Integrated Graphical Representation of Highway Scenarios to Improve Trajectory Prediction of Surrounding Vehicles. IEEE Trans. Intell. Veh. 2023, 8, 1638–1651.

- Ma, Y.; Zhu, X.; Zhang, S.; Yang, R.; Wang, W.; Manocha, D. Trafficpredict: Trajectory prediction for heterogeneous traffic-agents. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 6120–6127.

- Pan, J.; Sun, H.; Xu, K.; Jiang, Y.; Xiao, X.; Hu, J.; Miao, J. Lane-Attention: Predicting Vehicles’ Moving Trajectories by Learning Their Attention Over Lanes. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2019–24 January 2020; pp. 7949–7956.

- Gao, J.; Sun, C.; Zhao, H.; Shen, Y.; Anguelov, D.; Li, C.; Schmid, C. Vectornet: Encoding hd maps and agent dynamics from vectorized representation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 11525–11533.

- Zhao, H.; Gao, J.; Lan, T.; Sun, C.; Sapp, B.; Varadarajan, B.; Shen, Y.; Shen, Y.; Chai, Y.; Schmid, C.; et al. Tnt: Target-driven trajectory prediction. In Proceedings of the Conference on Robot Learning, Virtual, 16–18 November 2020; pp. 895–904.

- Gu, J.; Sun, C.; Zhao, H. Densetnt: End-to-end trajectory prediction from dense goal sets. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 15303–15312.

- Zeng, W.; Liang, M.; Liao, R.; Urtasun, R. Lanercnn: Distributed representations for graph-centric motion forecasting. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 532–539. [Google Scholar]

- Li, Z.; Lu, C.; Yi, Y.; Gong, J. A hierarchical framework for interactive behaviour prediction of heterogeneous traffic participants based on graph neural network. IEEE Trans. Intell. Transp. Syst. 2021, 23, 9102–9114. [Google Scholar] [CrossRef]

- Shi, S.; Jiang, L.; Dai, D.; Schiele, B. MTR-A: 1st Place Solution for 2022 Waymo Open Dataset Challenge—Motion Prediction. arXiv 2022, arXiv:cs.CV/2209.10033. [Google Scholar]

- Zhang, K.; Zhao, L.; Dong, C.; Wu, L.; Zheng, L. AI-TP: Attention-Based Interaction-Aware Trajectory Prediction for Autonomous Driving. IEEE Trans. Intell. Veh. 2023, 8, 73–83. [Google Scholar] [CrossRef]

- Mo, X.; Xing, Y.; Liu, H.; Lv, C. Map-Adaptive Multimodal Trajectory Prediction Using Hierarchical Graph Neural Networks. IEEE Robot. Autom. Lett. 2023, 8, 3685–3692. [Google Scholar] [CrossRef]

- Xu, D.; Shang, X.; Liu, Y.; Peng, H.; Li, H. Group Vehicle Trajectory Prediction With Global Spatio-Temporal Graph. IEEE Trans. Intell. Veh. 2023, 8, 1219–1229. [Google Scholar] [CrossRef]

- Liang, M.; Yang, B.; Hu, R.; Chen, Y.; Liao, R.; Feng, S.; Urtasun, R. Learning lane graph representations for motion forecasting. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 541–556.

- Ye, M.; Cao, T.; Chen, Q. TPCN: Temporal point cloud networks for motion forecasting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 11318–11327.

- Chen, W.; Wang, F.; Sun, H. S2TNet: Spatio-temporal transformer networks for trajectory prediction in autonomous driving. In Proceedings of the Asian Conference on Machine Learning, Virtual, 17–19 November 2021; pp. 454–469.

- Ngiam, J.; Caine, B.; Vasudevan, V.; Zhang, Z.; Chiang, H.T.L.; Ling, J.; Roelofs, R.; Bewley, A.; Liu, C.; Venugopal, A.; et al. Scene Transformer: A unified multi-task model for behavior prediction and planning. arXiv 2021, arXiv:2106.08417.

- Hasan, F.; Huang, H. MALS-Net: A Multi-Head Attention-Based LSTM Sequence-to-Sequence Network for Socio-Temporal Interaction Modelling and Trajectory Prediction. Sensors 2023, 23, 530.

- Varadarajan, B.; Hefny, A.; Srivastava, A.; Refaat, K.S.; Nayakanti, N.; Cornman, A.; Chen, K.; Douillard, B.; Lam, C.P.; Anguelov, D.; et al. MultiPath++: Efficient information fusion and trajectory aggregation for behavior prediction. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 7814–7821.

- Li, J.; Shi, H.; Guo, Y.; Han, G.; Yu, R.; Wang, X. TraGCAN: Trajectory Prediction of Heterogeneous Traffic Agents in IoV Systems. IEEE Internet Things J. 2023, 10, 7100–7113.

- Feng, X.; Cen, Z.; Hu, J.; Zhang, Y. Vehicle trajectory prediction using intention-based conditional variational autoencoder. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 3514–3519.

- Zou, Q.; Hou, Y.; Wang, Z. Predicting vehicle lane-changing behavior with awareness of surrounding vehicles using LSTM network. In Proceedings of the 2019 IEEE 6th International Conference on Cloud Computing and Intelligence Systems (CCIS), Singapore, 19–21 December 2019; pp. 79–83.

- Deo, N.; Trivedi, M.M. Trajectory forecasts in unknown environments conditioned on grid-based plans. arXiv 2020, arXiv:2001.00735.

- Zhang, T.; Song, W.; Fu, M.; Yang, Y.; Wang, M. Vehicle motion prediction at intersections based on the turning intention and prior trajectories model. IEEE/CAA J. Autom. Sin. 2021, 8, 1657–1666.

- Liu, X.; Wang, Y.; Jiang, K.; Zhou, Z.; Nam, K.; Yin, C. Interactive trajectory prediction using a driving risk map-integrated deep learning method for surrounding vehicles on highways. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19076–19087.

- Zhou, D.; Wang, H.; Li, W.; Zhou, Y.; Cheng, N.; Lu, N. SA-SGAN: A Vehicle Trajectory Prediction Model Based on Generative Adversarial Networks. In Proceedings of the 2021 IEEE 94th Vehicular Technology Conference (VTC2021-Fall), Norman, OK, USA, 27–30 September 2021; pp. 1–5.

- Zhi, Y.; Bao, Z.; Zhang, S.; He, R. BiGRU based online multi-modal driving maneuvers and trajectory prediction. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2021, 235, 3431–3441.

- Chen, L.; Zhou, Q.; Cai, Y.; Wang, H.; Li, Y. CAE-GAN: A hybrid model for vehicle trajectory prediction. IET Intell. Transp. Syst. 2022, 16, 1682–1696.

- Gao, K.; Li, X.; Chen, B.; Hu, L.; Liu, J.; Du, R.; Li, Y. Dual Transformer Based Prediction for Lane Change Intentions and Trajectories in Mixed Traffic Environment. IEEE Trans. Intell. Transp. Syst. 2023, 24, 6203–6216.

- Li, X.; Ying, X.; Chuah, M.C. GRIP++: Enhanced graph-based interaction-aware trajectory prediction for autonomous driving. arXiv 2019, arXiv:1907.07792.

- Carrasco, S.; Llorca, D.F.; Sotelo, M. SCOUT: Socially-consistent and understandable graph attention network for trajectory prediction of vehicles and VRUs. In Proceedings of the 2021 IEEE Intelligent Vehicles Symposium (IV), Nagoya, Japan, 11–17 July 2021; pp. 1501–1508.

- Mercat, J.; Gilles, T.; El Zoghby, N.; Sandou, G.; Beauvois, D.; Gil, G.P. Multi-head attention for multi-modal joint vehicle motion forecasting. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 9638–9644.

- Khandelwal, S.; Qi, W.; Singh, J.; Hartnett, A.; Ramanan, D. What-if motion prediction for autonomous driving. arXiv 2020, arXiv:2008.10587.

- Casas, S.; Gulino, C.; Suo, S.; Luo, K.; Liao, R.; Urtasun, R. Implicit latent variable model for scene-consistent motion forecasting. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 624–641.

- Gilles, T.; Sabatini, S.; Tsishkou, D.; Stanciulescu, B.; Moutarde, F. THOMAS: Trajectory heatmap output with learned multi-agent sampling. arXiv 2021, arXiv:2110.06607.

- Yu, J.; Zhou, M.; Wang, X.; Pu, G.; Cheng, C.; Chen, B. A dynamic and static context-aware attention network for trajectory prediction. ISPRS Int. J. Geo-Inf. 2021, 10, 336.

- Li, X.; Ying, X.; Chuah, M.C. GRIP: Graph-based interaction-aware trajectory prediction. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 3960–3966.

- Gilles, T.; Sabatini, S.; Tsishkou, D.; Stanciulescu, B.; Moutarde, F. GOHOME: Graph-oriented heatmap output for future motion estimation. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 9107–9114.

- Tang, X.; Eshkevari, S.S.; Chen, H.; Wu, W.; Qian, W.; Wang, X. Golfer: Trajectory Prediction with Masked Goal Conditioning MnM Network. arXiv 2022, arXiv:2207.00738.

- Guo, H.; Meng, Q.; Zhao, X.; Liu, J.; Cao, D.; Chen, H. Map-enhanced generative adversarial trajectory prediction method for automated vehicles. Inf. Sci. 2023, 622, 1033–1049.

- Li, R.; Qin, Y.; Wang, J.; Wang, H. AMGB: Trajectory prediction using attention-based mechanism GCN-BiLSTM in IOV. Pattern Recognit. Lett. 2023, 169, 17–27.

- Huang, X.; McGill, S.G.; DeCastro, J.A.; Fletcher, L.; Leonard, J.J.; Williams, B.C.; Rosman, G. DiversityGAN: Diversity-aware vehicle motion prediction via latent semantic sampling. IEEE Robot. Autom. Lett. 2020, 5, 5089–5096.

- Luo, C.; Sun, L.; Dabiri, D.; Yuille, A. Probabilistic multi-modal trajectory prediction with lane attention for autonomous vehicles. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 2370–2376.

- Messaoud, K.; Deo, N.; Trivedi, M.M.; Nashashibi, F. Trajectory prediction for autonomous driving based on multi-head attention with joint agent-map representation. In Proceedings of the 2021 IEEE Intelligent Vehicles Symposium (IV), Nagoya, Japan, 11–17 July 2021; pp. 165–170.

- Kim, B.; Park, S.H.; Lee, S.; Khoshimjonov, E.; Kum, D.; Kim, J.; Kim, J.S.; Choi, J.W. LaPred: Lane-aware prediction of multi-modal future trajectories of dynamic agents. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 14636–14645.

- Zhong, Z.; Luo, Y.; Liang, W. STGM: Vehicle Trajectory Prediction Based on Generative Model for Spatial-Temporal Features. IEEE Trans. Intell. Transp. Syst. 2022, 23, 18785–18793.

- Zhang, L.; Su, P.H.; Hoang, J.; Haynes, G.C.; Marchetti-Bowick, M. Map-adaptive goal-based trajectory prediction. In Proceedings of the Conference on Robot Learning, Virtual, 16–18 November 2020; pp. 1371–1383.

- Narayanan, S.; Moslemi, R.; Pittaluga, F.; Liu, B.; Chandraker, M. Divide-and-conquer for lane-aware diverse trajectory prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 15799–15808.

- Tian, W.; Wang, S.; Wang, Z.; Wu, M.; Zhou, S.; Bi, X. Multi-modal vehicle trajectory prediction by collaborative learning of lane orientation, vehicle interaction, and intention. Sensors 2022, 22, 4295.

- Ding, W.; Shen, S. Online vehicle trajectory prediction using policy anticipation network and optimization-based context reasoning. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 9610–9616.

- Deo, N.; Trivedi, M.M. Multi-modal trajectory prediction of surrounding vehicles with maneuver based LSTMs. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 1179–1184.

- Guo, H.; Meng, Q.; Cao, D.; Chen, H.; Liu, J.; Shang, B. Vehicle trajectory prediction method coupled with ego vehicle motion trend under dual attention mechanism. IEEE Trans. Instrum. Meas. 2022, 71, 1–16.

- Chai, Y.; Sapp, B.; Bansal, M.; Anguelov, D. MultiPath: Multiple probabilistic anchor trajectory hypotheses for behavior prediction. arXiv 2019, arXiv:1910.05449.

- Liu, Y.; Zhang, J.; Fang, L.; Jiang, Q.; Zhou, B. Multimodal motion prediction with stacked transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 7577–7586.

- Ye, M.; Xu, J.; Xu, X.; Cao, T.; Chen, Q. DCMS: Motion forecasting with dual consistency and multi-pseudo-target supervision. arXiv 2022, arXiv:2204.05859.

- Konev, S. MPA: MultiPath++ Based Architecture for Motion Prediction. arXiv 2022, arXiv:2206.10041.

- Wang, Y.; Zhou, H.; Zhang, Z.; Feng, C.; Lin, H.; Gao, C.; Tang, Y.; Zhao, Z.; Zhang, S.; Guo, J.; et al. TENET: Transformer Encoding Network for Effective Temporal Flow on Motion Prediction. arXiv 2022, arXiv:2207.00170.

- Yao, H.; Li, X.; Yang, X. Physics-Aware Learning-Based Vehicle Trajectory Prediction of Congested Traffic in a Connected Vehicle Environment. IEEE Trans. Veh. Technol. 2023, 72, 102–112.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Lee, S.; Purushwalkam Shiva Prakash, S.; Cogswell, M.; Ranjan, V.; Crandall, D.; Batra, D. Stochastic multiple choice learning for training diverse deep ensembles. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2016; Volume 29.

- Gonzalez, T.F. Clustering to minimize the maximum intercluster distance. Theor. Comput. Sci. 1985, 38, 293–306.

- Hochbaum, D.S.; Shmoys, D.B. A best possible heuristic for the k-center problem. Math. Oper. Res. 1985, 10, 180–184.

- Park, S.H.; Kim, B.; Kang, C.M.; Chung, C.C.; Choi, J.W. Sequence-to-sequence prediction of vehicle trajectory via LSTM encoder-decoder architecture. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 1672–1678.

- Choi, D.; Yim, J.; Baek, M.; Lee, S. Machine learning-based vehicle trajectory prediction using V2V communications and on-board sensors. Electronics 2021, 10, 420.

- Liu, H.; Huang, Z.; Lv, C. STrajNet: Occupancy Flow Prediction via Multi-modal Swin Transformer. arXiv 2022, arXiv:2208.00394.

- Hu, Y.; Shao, W.; Jiang, B.; Chen, J.; Chai, S.; Yang, Z.; Qian, J.; Zhou, H.; Liu, Q. HOPE: Hierarchical Spatial-temporal Network for Occupancy Flow Prediction. arXiv 2022, arXiv:2206.10118.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 10012–10022.

- Waymo Open Challenges. Available online: https://waymo.com/open/challenges/ (accessed on 1 September 2023).

- Argoverse 2: Motion Forecasting Competition. Available online: https://eval.ai/web/challenges/challenge-page/1719/overview (accessed on 1 September 2023).

- INTERPRET: INTERACTION-Dataset-Based PREdicTion Challenge. Available online: http://challenge.interaction-dataset.com/prediction-challenge/intro (accessed on 1 September 2023).

- NuScenes Prediction Task. Available online: https://www.nuscenes.org/prediction?externalData=all&mapData=all&modalities=Any (accessed on 1 September 2023).

- APOLLOSCAPE Trajectory. Available online: http://apolloscape.auto/trajectory.html (accessed on 1 September 2023).

- Krajewski, R.; Bock, J.; Kloeker, L.; Eckstein, L. The highD dataset: A drone dataset of naturalistic vehicle trajectories on German highways for validation of highly automated driving systems. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 2118–2125.

- NGSIM—US Highway 101 Dataset. Available online: https://www.fhwa.dot.gov/publications/research/operations/07030/index.cfm (accessed on 1 September 2023).

- NGSIM—Interstate 80 Freeway Dataset. Available online: https://www.fhwa.dot.gov/publications/research/operations/06137/index.cfm (accessed on 1 September 2023).

- Zhan, W.; Sun, L.; Wang, D.; Shi, H.; Clausse, A.; Naumann, M.; Kummerle, J.; Konigshof, H.; Stiller, C.; de La Fortelle, A.; et al. INTERACTION dataset: An international, adversarial and cooperative motion dataset in interactive driving scenarios with semantic maps. arXiv 2019, arXiv:1910.03088.

- Bock, J.; Krajewski, R.; Moers, T.; Runde, S.; Vater, L.; Eckstein, L. The inD dataset: A drone dataset of naturalistic road user trajectories at German intersections. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 1929–1934.

- Krajewski, R.; Moers, T.; Bock, J.; Vater, L.; Eckstein, L. The rounD dataset: A drone dataset of road user trajectories at roundabouts in Germany. In Proceedings of the 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–6.

- Moers, T.; Vater, L.; Krajewski, R.; Bock, J.; Zlocki, A.; Eckstein, L. The exiD dataset: A real-world trajectory dataset of highly interactive highway scenarios in Germany. In Proceedings of the 2022 IEEE Intelligent Vehicles Symposium (IV), Aachen, Germany, 4–9 June 2022; pp. 958–964.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 11621–11631.

- Chang, M.F.; Lambert, J.; Sangkloy, P.; Singh, J.; Bak, S.; Hartnett, A.; Wang, D.; Carr, P.; Lucey, S.; Ramanan, D.; et al. Argoverse: 3D tracking and forecasting with rich maps. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 8748–8757.

- Wilson, B.; Qi, W.; Agarwal, T.; Lambert, J.; Singh, J.; Khandelwal, S.; Pan, B.; Kumar, R.; Hartnett, A.; Pontes, J.K.; et al. Argoverse 2: Next generation datasets for self-driving perception and forecasting. arXiv 2023, arXiv:2301.00493.

- Ettinger, S.; Cheng, S.; Caine, B.; Liu, C.; Zhao, H.; Pradhan, S.; Chai, Y.; Sapp, B.; Qi, C.R.; Zhou, Y.; et al. Large scale interactive motion forecasting for autonomous driving: The Waymo Open Motion Dataset. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 9710–9719.

- Houston, J.; Zuidhof, G.; Bergamini, L.; Ye, Y.; Chen, L.; Jain, A.; Omari, S.; Iglovikov, V.; Ondruska, P. One thousand and one hours: Self-driving motion prediction dataset. In Proceedings of the Conference on Robot Learning, Virtual, 16–18 November 2020; pp. 409–418.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 12697–12705.

- Shi, S.; Jiang, L.; Dai, D.; Schiele, B. MTR++: Multi-Agent Motion Prediction with Symmetric Scene Modeling and Guided Intention Querying. arXiv 2023, arXiv:2306.17770.

| Criteria | Specific Classification | | References |
|---|---|---|---|
| Scene Input Representation | General Scene Representation | Grid-based | [35,36,37,38,39,40,41,42,43] |
| | | Graph-based | [44,45,46,47,48,49,50,51,52,53,54] |
| Context Refinement | Agent Feature Refinement | Spatial and Temporal Information Mining | [40,44,45,49,55,56,57,58,59] |
| | | Agent-specific Information Supplement | [32,44,50,57,60,61] |
| | Interaction Consideration | Implicit-based | [35,36,37,38,62,63,64,65,66] |
| | | Aggregation-based | [2,3,56,67,68,69,70] |
| | | Pairwise-based | [31,38,40,44,46,47,48,52,53,54,59,60,61,71,72,73,74,75,76,77,78,79,80,81,82] |
| | Map Information Fusion | Complementary Fusion | [77,83] |
| | | Coupled Fusion | [35,36,37,38,45,46,47,48,53,55,58,64,76,81,84,85,86,87] |
| | | Guided Fusion | [88,89,90] |
| Prediction Rationality Improvement | Multimodal Prediction | Generative-based | [3,34,39,62,66,67,83,91] |
| | | Prior-based | [36,68,92,93] |
| | | Proposal-based | [40,47,48,49,53,60,76,79,89,90,94,95,96,97,98] |
| | | Implicit-based | [32,35,55,56,58,74,80,85,86] |
| | Multi-agent Joint Prediction | Naïve-level Joint Prediction | [58,71,73,75,78] |
| | | Scene-level Joint Prediction | [76] |
| | Feasibility Improvement | | [22,31,66,81,96,99] |

| Class | Characteristics | Works | Year | DL Approaches | Summary |
|---|---|---|---|---|---|
| Grid-based | Emphasize the spatial proximity of scene elements. Usually oriented to a single target agent. Easy to supplement with different inputs. Prone to ignoring long-range dependencies. | [37] | 2018 | CNN | Use a 3-channel RGB raster map to represent scene inputs. |
| | | [35] | 2019 | CNN | |
| | | [36] | 2020 | CNN | |
| | | [43] | 2023 | CNN, LSTM | Construct three single-channel grid maps with different coverage ranges and resolutions. |
| | | [38] | 2019 | CNN | Construct a 20-channel semantic grid map of observation timesteps. |
| | | [40] | 2021 | CNN, GRU, AM | Construct a 45-channel semantic grid map. |
| | | [39] | 2020 | CNN, LSTM, CVAE | Construct a 3-channel semantic grid map, with three channels representing orientation, velocity, and position. |
| | | [41] | 2021 | CNN, LSTM, CVAE | |
| | | [42] | 2022 | CNN | Represent each scene element with a sparse set of points and represent them in a grid map. |
| Graph-based | Non-Euclidean representation. Able to learn complex interactions with the scene. Efficient at learning long-range dependencies. | [52] | 2023 | GAT, CNN, GRU | Construct a scene graph based on the spatial relationship between vehicles. |
| | | [46] | 2020 | GNN, AM, MLP | Construct a fully connected undirected graph to represent scene information. |
| | | [47] | 2020 | GNN, AM, MLP | |
| | | [48] | 2021 | GNN, AM, MLP | |
| | | [51] | 2022 | GNN, Transformer | |
| | | [49] | 2021 | GCN, 1D-CNN | Construct an actor-centric directed graph, defining four connection edges between nodes. |
| | | [53] | 2023 | GAT, GRU, AM | Construct a heterogeneous hierarchical graph to represent dynamic objects and candidate centerlines. |
| | | [44] | 2019 | GNN, AM | Construct a spatial–temporal graph with temporal edges. |
| | | [50] | 2021 | GNN, AM | |
| | | [45] | 2020 | GNN, AM, LSTM | |
| | | [54] | 2023 | GCN, CNN, GRU | |

| Class | Characteristics | Works | Year | DL Approaches | Summary |
|---|---|---|---|---|---|
| Spatial–Temporal Information Mining | Emphasize the spatial–temporal carrier characteristics of the vehicle. Explore full spatial–temporal features. | [55] | 2020 | GCN, 1D-CNN, FPN | Use 1D-CNN to encode historical motion information and obtain multi-scale features, and then fuse the multi-scale features based on FPN to fully consider temporal information. |
| | | [56] | 2021 | CNN, MLP | Extract and fuse features at multiple time intervals for the input trajectory sequences, and use a dual spatial representation method to fully extract spatial information. |
| | | [40] | 2021 | CNN, GRU, AM | Apply transposed CNNs to consider a larger prediction region. |
| | | [49] | 2021 | 1D-CNN, GCN, MLP | Use multi-order GCN for the actor-specific scene graph to consider long-range dependency. |
| | | [57] | 2021 | Transformer | Define a spatial–temporal Transformer to explore spatial–temporal agent features. |
| | | [58] | 2021 | Transformer | |
| | | [44] | 2019 | GNN, AM | Construct a spatial–temporal graph. |
| | | [45] | 2020 | GNN, AM, LSTM | |
| | | [59] | 2023 | Transformer, LSTM | Perform attention operations in the time dimension when encoding each vehicle’s motion feature. |
| Agent-Specific Information Supplement | Optimize the extraction of individual agent features to account for agent-specific information. | [57] | 2021 | Transformer | Embed the numerical category value into the agent input state vector. |
| | | [32] | 2021 | LSTM, AM | |
| | | [61] | 2023 | GCN, LSTM | |
| | | [44] | 2019 | GNN, AM | Define “Super node” for different agent categories. |
| | | [50] | 2021 | LSTM, GNN, AM | |
| | | [60] | 2022 | LSTM, MLP | Additionally encode the features of the EV as a contextual information supplement. |

| Class | Characteristics | Works | Year | DL Approaches | Summary |
|---|---|---|---|---|---|
| Implicit-based | Consider nearby vehicle states but have no explicit process to extract the interaction feature. The implementation is relatively simple, but they learn insufficient interaction information. | [37] | 2018 | CNN | Define a raster map to represent the historical states of all vehicles, which implicitly contains the interaction. |
| | | [35] | 2019 | CNN | |
| | | [36] | 2020 | CNN | |
| | | [38] | 2019 | CNN | |
| | | [64] | 2020 | CNN, GRU, AM | |
| | | [63] | 2019 | LSTM | Represent the target vehicle and nearby vehicles in the same tensor to form the context feature. |
| | | [65] | 2021 | LSTM | |
| | | [66] | 2022 | GRU, CVAE | |
| | | [62] | 2019 | GRU, CVAE | |
| Aggregation-based | Aggregate nearby vehicle encodings to generate the interaction feature. Aggregation is often performed based on spatial relative positions. Interaction information is limited and actor-specific interaction is lost. | [2] | 2016 | LSTM | Pool LSTM-encoded motion features of surrounding agents as the interaction feature. |
| | | [3] | 2018 | LSTM, GAN | Use maximum pooling to aggregate embeddings of relative distance between target and nearby agents. |
| | | [67] | 2021 | LSTM, GAN, AM | |
| | | [56] | 2021 | CNN, MLP | Use CNNs to aggregate grids which contain different vehicle features. |
| | | [68] | 2021 | GRU, CNN | |
| | | [69] | 2022 | LSTM, CNN, GAN | |
| | | [70] | 2023 | Transformer, LSTM | A fully connected layer is applied to aggregate the longitudinal distance and velocity of surrounding vehicles. |
| Pairwise-based | Focus on the interrelationship between two agents, and consider different agent interactions by weighting. | [40] | 2021 | CNN, GRU, AM | Consider the different influence of nearby vehicles on the target vehicle using the attention mechanism. |
| | | [76] | 2021 | 1D-CNN, GRU, AM | |
| | | [79] | 2022 | 1D-CNN, GRU, GCN, AM | |
| | | [31] | 2021 | LSTM, AM | |
| | | [32] | 2021 | LSTM, AM | |
| | | [73] | 2020 | LSTM, AM | |
| | | [77] | 2021 | LSTM, CNN, AM | |
| | | [60] | 2022 | LSTM, MLP | |
| | | [80] | 2022 | Transformer, MLP | |
| | | [81] | 2023 | GAN, GCN, FPN, AM | Encode the correlation features between each surrounding vehicle and the target vehicle based on a fully connected layer. |
| | | [46] | 2020 | GNN, AM, MLP | Construct a fully connected undirected graph, and then extract the interaction feature based on a graph attention network. |
| | | [47] | 2020 | GNN, AM, MLP | |
| | | [48] | 2021 | GNN, AM, MLP | |
| | | [74] | 2020 | LSTM, GNN, AM | |
| | | [59] | 2023 | Transformer, LSTM | |
| | | [71] | 2019 | GRU, CNN, GCN | Use GCN to transfer surrounding vehicle information to the target vehicle. |
| | | [61] | 2023 | GCN, LSTM | Construct a graph of traffic participants and apply GCN to extract inter-agent features. |
| | | [82] | 2023 | GCN, LSTM, AM | |
| | | [75] | 2020 | GRU, GNN, CVAE | Construct a graph of traffic participants around the target vehicle. |
| | | [44] | 2019 | GNN, AM | Construct a spatial–temporal graph of traffic participants and learn the interaction information in both spatial and temporal dimensions. |
| | | [72] | 2021 | GNN, AM | |
| | | [78] | 2019 | CNN, GCN, LSTM | |
| | | [54] | 2023 | GCN, CNN, GRU | |
| | | [52] | 2023 | GAT, CNN, GRU | Use GAT to efficiently extract interaction features between vehicles. |
| | | [53] | 2023 | GAT, GRU, AM | |

| Class | Characteristics | Works | Year | DL Approaches | Summary |
|---|---|---|---|---|---|
| Complementary Fusion | Add map information as additional input. Ignore the connection between the map and vehicles. | [77] | 2021 | LSTM, CNN, AM | Encode road, weather, and other environment information as constraint features. |
| | | [83] | 2020 | LSTM, GAN | Encode quadratic fitting polynomial coefficients of lane centerlines near target vehicles. |
| Coupled Fusion | Consider a fuller integration of map elements and vehicle motion information. Highlight the specific correlations between map elements and vehicles. Hard to add various types of map elements. | [38] | 2019 | CNN | Uniformly represent map information and vehicle motion information using a grid map, and then use CNNs to extract the fusion features. |
| | | [37] | 2018 | CNN | |
| | | [36] | 2020 | CNN | |
| | | [35] | 2019 | CNN | |
| | | [85] | 2021 | CNN, LSTM, AM | |
| | | [87] | 2022 | CNN, LSTM, CVAE | |
| | | [64] | 2020 | CNN, GRU, AM | |
| | | [84] | 2020 | LSTM, 1D-CNN, MLP | Treat vehicle encoding features as Queries and map encoding features as Keys and Values, and fuse map information using the cross-attention mechanism. |
| | | [58] | 2021 | Transformer | |
| | | [76] | 2021 | 1D-CNN, U-GRU, AM | |
| | | [45] | 2020 | GNN, AM, LSTM | |
| | | [86] | 2021 | AM, LSTM | |
| | | [53] | 2023 | GAT, GRU, AM | |
| | | [46] | 2020 | GNN, AM, MLP | Construct an undirected fully connected graph with vehicles and lanes as nodes, and then extract fusion features using a graph attention network. |
| | | [47] | 2020 | GNN, AM, MLP | |
| | | [48] | 2021 | GNN, AM, MLP | |
| | | [55] | 2020 | GCN, 1D-CNN, FPN | Construct a graph of lanes, and fuse lane information based on GCN. |
| | | [81] | 2023 | GAN, GCN, AM, FPN | Construct a lane graph and fuse vehicle motion information to lane segment nodes within the graph to obtain traffic flow information. |
| Guided Fusion | Highlight the role of lanes in guiding vehicle motion and can constrain the region of prediction. Heavily depends on the generated guided paths. | [88] | 2020 | GNN, MLP | Define reference paths based on the future lanes in which the target vehicle may travel. |
| | | [89] | 2021 | CNN | |
| | | [90] | 2022 | LSTM, AM | Project vehicle motion states onto the corresponding reference path coordinates for encoding, to account for the guiding role of the road. |

| Class | Characteristics | Works | Year | DL Approaches | Summary |

|---|---|---|---|---|---|

| Generative-based | —Generate different modes based on the idea of random sampling. —The sampling number is uncertain, and easily fall into the “mode collapse” problem. —Poor modal interpretability. —Predicted results often have no probabilities. | [3] | 2018 | LSTM, GAN | Sample multiple random noise variables that obey the standard normal distribution to represent different modes. |

| [67] | 2021 | LSTM, GAN, AM | |||

| [91] | 2019 | LSTM, MLP, GAN | |||

| [83] | 2020 | LSTM, GAN | Based on the FPS algorithm to generate modalities that are as different as possible. | ||

| [62] | 2019 | GRU, CVAE | Use a neural network to learn the distribution of the random latent variable associated with scene information and future motion of the target vehicle, and sample several latent variables to represent different modalities. | ||

| [39] | 2020 | LSTM, CNN, CVAE | |||

| [66] | 2022 | GRU, CVAE | |||

| [34] | 2019 | GRU, CNN, CVAE | |||

| Prior-based | —The modal number is predetermined based on priori knowledge. —Hard to predefine a complete number of modalities. | [92] | 2018 | LSTM | Predefine 6 maneuvers for highway lane change prediction task. |

| [68] | 2021 | GRU, CNN | |||

| [93] | 2022 | LSTM, CNN, AM | Predefine 9 maneuvers for highway lane change prediction task. | ||

| [36] | 2020 | CNN | Regard the multimodal trajectory prediction task as a classification problem in a predefined finite set of trajectories. | ||

| Proposal-based | —Multiple proposals are generated based on scene information, and then multimodal trajectories are predicted by decoding proposal-based features. —Modal generation with scene adaptivity. | [47] | 2020 | GNN, AM, MLP | First predict end points of the target agent and use them as proposals for multimodal trajectories generation. |

| [48] | 2021 | GNN, AM, MLP | |||

| [96] | 2022 | CNN, MLP | |||

| [49] | 2021 | 1D-CNN, GNN, MLP | |||

| [89] | 2021 | CNN, LSTM | Extract the possible future lanes of the target vehicle as proposals. | ||

| [90] | 2022 | LSTM, AM | Decouple multimodality into intent and motion modes. | ||

| [53] | 2023 | GAT, GRU, AM | Dynamically and adaptively generate multiple prediction trajectories. | ||

| [40] | 2021 | CNN, GRU, AM | A probabilistic heat map representing the distribution of trajectory endpoints is obtained. | ||

| [79] | 2022 | 1D-CNN, GRU, GCN, AM | |||

| [76] | 2021 | 1D-CNN, GRU, AM | |||

| [94] | 2019 | CNN | Use K-means to obtain reference trajectories based on the dataset. | ||

| [95] | 2021 | Transformer | The queries in the transformer decoder are used as proposals, and each proposal feature is encoded independently in parallel. | ||

| [60] | 2021 | LSTM, MLP | Use input-independent learnable anchor encodings to represent different modes. | ||

| [97] | 2022 | LSTM, MLP | |||

| [98] | 2022 | Transformer, MLP, LSTM | Introduce learnable tokens to represent different modalities. | ||

| Implicit-based | —Have no explicit process for feature extraction of each modal. —Lack of interpretability and insufficient modal diversity. | [35] | 2019 | CNN | Based on the context features obtained in the encoding stage, multiple trajectories and corresponding scores are directly regressed in the decoder. |

| [55] | 2020 | GCN, 1D-CNN, FPN | |||

| [74] | 2020 | LSTM, GNN, AM | |||

| [58] | 2021 | Transformer | |||

| [80] | 2022 | Transformer | |||

| [56] | 2021 | CNN, MLP | The decoding stage directly regresses multiple trajectories and the deviation of each trajectory endpoint from the true trajectory endpoint. | ||

| [86] | 2021 | 1D-CNN, LSTM | Use multiple independent decoders to obtain multimodal trajectories. | ||

| [32] | 2021 | LSTM, AM | Decode multimodal trajectories from different attention heads. | ||

| [85] | 2021 | CNN, LSTM, AM |
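The classification-style formulation listed in the table above (for example [36]) can be illustrated with a small sketch. It assumes a predefined set of candidate trajectories, here called `anchors`, and a vector of per-anchor scores from some network; the function names and the mean point-wise distance used to pick the training label are illustrative assumptions, not the formulation of any cited work.

```python
import math

def mean_distance(a, b):
    """Mean point-wise Euclidean distance between two equal-length 2D trajectories."""
    return sum(math.hypot(ax - bx, ay - by) for (ax, ay), (bx, by) in zip(a, b)) / len(a)

def closest_anchor_index(anchors, truth):
    """Index of the anchor nearest to the ground truth (assumed classification label)."""
    return min(range(len(anchors)), key=lambda i: mean_distance(anchors[i], truth))

def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_modes(anchors, logits, k):
    """Return the k most probable anchors with their probabilities, best first."""
    probs = softmax(logits)
    ranked = sorted(range(len(anchors)), key=lambda i: probs[i], reverse=True)[:k]
    return [(anchors[i], probs[i]) for i in ranked]
```

At training time the label from `closest_anchor_index` would typically feed a cross-entropy loss, while `top_k_modes` gives the multimodal output at inference time.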

| Class | Characteristics | Works | Year | DL Approaches | Summary |

|---|---|---|---|---|---|

| Naïve-level Joint Prediction | Assumes that the context feature already includes the information needed to decode the future motion of all target agents. Defines the loss function for multi-agent joint prediction. Does not consider coordination between the predicted future trajectories. | [78] | 2019 | CNN, GCN, LSTM | Predict the future trajectories of all target agents in parallel based on weight-sharing LSTMs. |

| [71] | 2019 | GRU, CNN, GCN | |||

| [58] | 2021 | Transformer | Use weight-sharing MLPs to simultaneously predict future trajectories of all target agents. | ||

| [73] | 2020 | LSTM, AM | Extract the features of each agent with an attention mechanism, then output the Gaussian Mixture Model (GMM) parameters of each agent's future trajectory at each time step using an FC layer. | ||

| [75] | 2020 | GRU, GNN, CVAE | Generate latent variables for each agent in the scene simultaneously, and then decode the future trajectories of all agents in parallel. | ||

| Scene-level Joint Prediction | Emphasizes coordination between agents in the prediction stage. | [76] | 2021 | 1D-CNN, GRU, AM | First predict the multimodal trajectories of each agent individually, then model the interaction between vehicles in the prediction stage with an attention mechanism, and output the predicted future trajectories of multiple agents from a scene-coordination perspective. |
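The coordination gap attributed to naïve-level joint prediction can be made concrete with a simple consistency check: given one predicted trajectory per agent, flag every time step at which two agents come closer than a safety gap. This is only a sketch; the function name `find_conflicts`, the disc approximation of vehicle shape, and the 2 m default gap are assumptions for illustration.

```python
import math
from itertools import combinations

def find_conflicts(trajectories, min_gap=2.0):
    """Return (agent_i, agent_j, step) triples where two agents are closer than min_gap.

    trajectories: one list of (x, y) points per agent, all sampled at the same time steps.
    min_gap: hypothetical safety distance in metres (vehicles approximated as discs).
    """
    conflicts = []
    for (i, a), (j, b) in combinations(enumerate(trajectories), 2):
        for t, ((ax, ay), (bx, by)) in enumerate(zip(a, b)):
            if math.hypot(ax - bx, ay - by) < min_gap:
                conflicts.append((i, j, t))
    return conflicts
```

A scene-level predictor aims to make this list empty by construction, whereas a naïve-level predictor offers no such guarantee because each agent's trajectory is decoded independently.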

| Class | Characteristics | Works | Year | DL Approaches | Summary |

|---|---|---|---|---|---|

| Feasibility Improvement | Aims to improve the feasibility and robustness of the model's predictions. | [96] | 2022 | CNN, MLP | Define a spatial–temporal coherence loss to improve the coherence of the predicted output trajectories. |

| [22] | 2020 | CNN, MLP | Use successive cubic polynomial curves to generate reference trajectories. | ||

| [31] | 2021 | LSTM, AM | The prediction task is divided into a trajectory set generation stage with a model-based motion planner and a deep learning-based trajectory classification stage. The trajectories generated in the first stage are consistent with the map structure as well as the current vehicle motion states. | ||

| [99] | 2023 | CNN, LSTM, AM | Combine physics of traffic flow with the learning-based prediction models to improve prediction interpretability. | ||

| [81] | 2023 | GAN, GCN, AM, FPN | Design a new discriminator for GAN-based prediction models that trains the generator to produce predicted trajectories matching the target vehicle's historical motion states, scene context, and map constraints. | ||

| [66] | 2022 | GRU, CVAE | An off-road loss function is defined to train the model to generate trajectories that match the structure of the drivable area. |
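The idea of penalizing trajectories that leave the drivable area can be sketched as follows. The example assumes, purely for illustration, that the drivable area is an axis-aligned rectangle and that the penalty is the mean distance by which points fall outside it; the loss in [66] is not specified here and may differ, and a real training loss would be written in a differentiable framework.

```python
import math

def off_road_penalty(trajectory, x_min, x_max, y_min, y_max):
    """Mean distance by which trajectory points lie outside a rectangular drivable area.

    The rectangle bounds are hypothetical stand-ins for a real drivable-area map.
    Points inside the rectangle contribute zero.
    """
    total = 0.0
    for x, y in trajectory:
        dx = max(x_min - x, 0.0, x - x_max)  # horizontal overshoot, 0 if inside
        dy = max(y_min - y, 0.0, y - y_max)  # vertical overshoot, 0 if inside
        total += math.hypot(dx, dy)
    return total / len(trajectory)
```

Adding such a term to the regression loss pushes predictions back toward the road without changing the model architecture.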

| Datasets | Refs. | Sensors | Traffic Scenarios | Motion Information | Map Information | Traffic Participants | Dataset Size |

|---|---|---|---|---|---|---|---|

| NGSIM | [115,116] | roadside cameras | highway | 2D coordinate | lane ID | car, van, truck | Total 1.5 h |

| HighD | [114] | drone camera | highway | 2D coordinate, velocity, acceleration, heading angle | RGB aerial map image | car, van, truck | Total over 16.5 h |

| INTERACTION | [117] | drone camera | interactive scenarios | 2D coordinate, velocity | lanelet2 HD map | vehicle, pedestrian, cyclist | Total 16.5 h |

| InD | [118] | drone camera | unsignalized intersections | 2D coordinate, velocity, acceleration, heading angle | RGB aerial map image | car, truck, bus, pedestrian, cyclist | Total 11,500 trajectories |

| RounD | [119] | drone camera | roundabouts | 2D coordinate, velocity, acceleration, heading angle | lanelet2 HD map | car, van, bus, truck, pedestrian, cyclist, motorcycle | Total over 3.6 h; Total 13,746 recorded vehicles |

| ExiD | [120] | drone camera | highway ramps | 2D coordinate, velocity, acceleration, heading angle | OpenDRIVE HD map | car, van, truck | Total over 16 h; Total 69,712 vehicles |

| Datasets | Refs. | Sensors | Traffic Scenarios | Motion Information | Map Information | Traffic Participants | Dataset Size |

|---|---|---|---|---|---|---|---|

| NuScenes | [121] | camera, radar, lidar | dense urban | 3D coordinate, heading angle | HD map | vehicle, pedestrian, cyclist | Total over 333 h |

| Argoverse 1 Motion Forecast dataset | [122] | camera, lidar | dense urban | 2D coordinate | HD map | vehicle | Total over 320 h; Total 10,572 tracked objects |

| ApolloScape Trajectory | [44] | camera, radar, lidar | dense urban | 3D coordinate, heading angle | - | vehicle, pedestrian, cyclist | Total over 155 min |

| Lyft | [125] | camera, radar, lidar | suburban | 2D coordinate, velocity, acceleration, heading angle | HD map, BEV image | vehicle, pedestrian, cyclist | Total over 1118 h |

| Waymo Open Motion Dataset | [124] | camera, lidar | dense urban | 3D coordinate, velocity, heading angle | 3D HD map | vehicle, pedestrian, cyclist | Total over 570 h |

| Argoverse 2 Motion Forecast dataset | [123] | camera, lidar | dense urban | 2D coordinate, velocity, heading angle | 3D HD map | vehicle, pedestrian, cyclist, bus, motorcycle | Total 763 h |

| Models | Scene Input Representation | Context Refinement | Prediction Rationality Improvement | minADE (K = 6) | minFDE (K = 6) | MR (K = 6) |

|---|---|---|---|---|---|---|

| TPNet [22] | - | Map Information Fusion | Feasibility Improvement, Multimodal Prediction | 1.61 | 3.28 | - |

| DiversityGAN [83] | - | Map Information Fusion | Multimodal Prediction | 1.38 | 2.66 | 0.42 |

| PRIME [31] | - | Interaction Consideration | Feasibility Improvement, Multimodal Prediction | 1.22 | 1.56 | 0.12 |

| Chenxu [84] | - | Map Information Fusion | Multimodal Prediction | 0.99 | 1.71 | 0.19 |

| SAMMP [73] | - | Interaction Consideration | Multimodal Prediction, Multi-agent Joint Prediction | 0.97 | 1.42 | 0.13 |

| MTP [35] | General Scene Representation | Interaction Consideration, Map Information Fusion | Multimodal Prediction | 0.94 | 1.55 | 0.22 |

| TNT [47] | General Scene Representation | Interaction Consideration, Map Information Fusion | Multimodal Prediction | 0.94 | 1.54 | 0.13 |

| HOME [40] | General Scene Representation | Interaction Consideration, Agent Feature Refinement | Multimodal Prediction | 0.94 | 1.45 | 0.10 |

| GOHOME [79] | - | Interaction Consideration | Multimodal Prediction | 0.94 | 1.45 | 0.10 |

| THOMAS [76] | - | Interaction Consideration, Map Information Fusion | Multimodal Prediction, Multi-agent Joint Prediction | 0.94 | 1.44 | 0.10 |

| WIMP [74] | - | Interaction Consideration | Multimodal Prediction | 0.90 | 1.42 | 0.17 |

| LaneRCNN [49] | General Scene Representation | Interaction Consideration, Map Information Fusion, Agent Feature Refinement | Multimodal Prediction | 0.90 | 1.45 | 0.12 |

| Xiaoyu [53] | General Scene Representation | Interaction Consideration, Map Information Fusion | Multimodal Prediction | 0.89 | 1.40 | 0.17 |

| ME-GAN [81] | - | Interaction Consideration, Map Information Fusion | Multimodal Prediction | 0.88 | 1.39 | 0.12 |

| DenseTNT [48] | General Scene Representation | Interaction Consideration, Map Information Fusion | Multimodal Prediction | 0.88 | 1.28 | 0.10 |

| TPCN [56] | - | Interaction Consideration, Agent Feature Refinement | Multimodal Prediction | 0.87 | 1.38 | 0.16 |

| LaneGCN [55] | - | Interaction Consideration, Map Information Fusion, Agent Feature Refinement | Multimodal Prediction | 0.87 | 1.36 | 0.16 |

| mmTransformer [95] | - | Interaction Consideration, Agent Feature Refinement | Multimodal Prediction | 0.87 | 1.34 | 0.15 |

| StopNet [42] | General Scene Representation | Interaction Consideration, Map Information Fusion | Multimodal Prediction | 0.83 | 1.54 | 0.19 |

| SceneTransformer [58] | - | Interaction Consideration | Multimodal Prediction, Multi-agent Joint Prediction | 0.80 | 1.23 | 0.13 |

| Multipath++ [60] | - | Interaction Consideration, Map Information Fusion, Agent Feature Refinement | Multimodal Prediction | 0.79 | 1.21 | 0.13 |

| DCMS [96] | - | Interaction Consideration, Agent Feature Refinement | Feasibility Improvement, Multimodal Prediction | 0.77 | 1.14 | 0.11 |
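The three metric columns can be computed as in the sketch below, where K is the number of predicted trajectories per sample (K = 6 in the table). The definitions follow common usage: minADE and minFDE take the best average and final displacement error over the K candidates, and MR is the fraction of samples whose best final error exceeds a threshold. The 2 m default threshold and the independent minimum for ADE and FDE are assumptions; individual benchmarks may define them slightly differently.

```python
import math

def displacement_errors(pred, truth):
    """Per-time-step Euclidean distance between one predicted and the true trajectory."""
    return [math.hypot(px - tx, py - ty) for (px, py), (tx, ty) in zip(pred, truth)]

def min_ade_fde(preds, truth):
    """Return (minADE, minFDE) over the K candidate trajectories in preds."""
    ades, fdes = [], []
    for pred in preds:
        errs = displacement_errors(pred, truth)
        ades.append(sum(errs) / len(errs))  # average displacement error
        fdes.append(errs[-1])               # final displacement error
    return min(ades), min(fdes)

def miss_rate(samples, threshold=2.0):
    """Fraction of (preds, truth) samples whose minFDE exceeds the threshold in metres."""
    misses = sum(1 for preds, truth in samples if min_ade_fde(preds, truth)[1] > threshold)
    return misses / len(samples)
```

Lower values are better for all three metrics, which is why the rows of the table improve from top to bottom in the minADE column.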

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Huang, R.; Zhuo, G.; Xiong, L.; Lu, S.; Tian, W. A Review of Deep Learning-Based Vehicle Motion Prediction for Autonomous Driving. Sustainability 2023, 15, 14716. https://doi.org/10.3390/su152014716
