Abstract

As Chinese cities transition into a stage of stock development, the revitalization of industrial areas becomes increasingly crucial, serving as a pivotal factor in urban renewal. The renovation of old factory buildings is in full swing, and architects often rely on mature experience to produce several profile renovation schemes for selection during the renovation process. However, when a large number of factories must be handled, this task consumes a significant amount of manpower. As machine learning matures, this study, set against the backdrop of the renovation of old factory buildings in an industrial district, explores the potential of deep learning technology to improve the efficiency of factory renovation. We establish a factory renovation profile generation model based on generative adversarial networks (GANs) that learns and generates design features for the renovation of factory building profiles. To balance feasibility and creativity in the generated designs, this study applies various transformation techniques to each original profile image during dataset construction, creating mappings between the original profile images and various potential renovation schemes. Additionally, data augmentation techniques are applied to expand the dataset, and the trained models are validated and analyzed on the test set. This study demonstrates the significant potential of GANs in factory renovation profile design, providing designers with richer reference solutions.

1. Introduction

As China’s economy rapidly develops, urbanization continues to advance, leading to a growing demand for resources. Consequently, sustainable resource utilization has garnered significant attention, with a particular emphasis on the field of architectural design. New constructions often entail substantial energy consumption and significant environmental impacts. Therefore, there is a shift towards urban renewal strategies that prioritize optimizing existing structures over focusing solely on new developments [1,2]. Additionally, traditional industries within urban centers are progressively being replaced by high-tech industries as urban spaces continuously expand. Consequently, industrial heritage sites that were once situated on the periphery of cities now find themselves within central urban areas [3]. This shift leads to the abandonment of original factories and residential areas in these central zones, wasting valuable central urban space [4]. Against the backdrop of global sustainability, demolishing these abandoned industrial sites is not a preferred option. Instead, functional repurposing should be pursued to revitalize these sites, enhance urban vitality, and preserve city history, while also reducing extensive construction activities [5,6]. This approach can improve the livability of urban environments, which is crucial for both the physical and mental health of city residents [7].

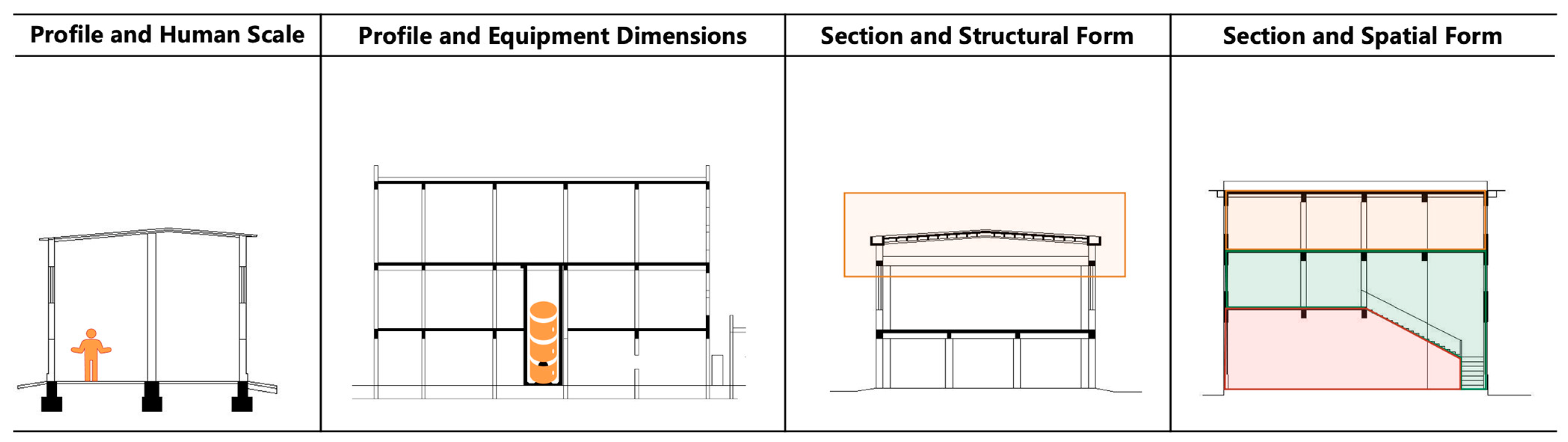

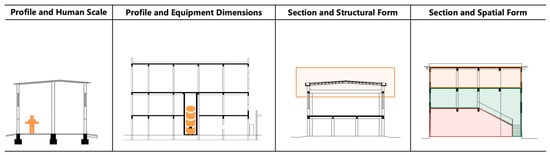

Architectural drawings are essential mediums for exchanging information about architectural spatial forms and form the foundation of both design and construction. These drawings include architectural plans, elevations, sections, and site plans, among others [8]. Factory renovations differ significantly from other types of architectural design: beyond layout considerations, sectional design plays a crucial role. In architecture, a section refers to a cut through a part of a building, depicted in drawings that reveal its internal structure. This is one of the three primary views in architectural design. Sections vertically display the structural and spatial relationships between different elements of a building. They often detail the construction methods and materials used for the main components of a building. Sections are indispensable for understanding and designing the three-dimensional aspects of architectural spaces. These drawings help designers and stakeholders understand the internal spatial layout and relationships, and demonstrate how spaces can fulfill the functional needs of a building. Particularly in transforming large spaces like factories, starting with sectional views facilitates a clear perception of the relationships between human scale, equipment size, and spatial dimensions, as shown in Figure 1. Moreover, sections are vital for architects to comprehend factory structural systems, including the forms of large-span roof structures and beam positions. When renovating, this understanding enables designers to adhere more closely to the original structural principles and reconstruct the internal space without compromising the existing framework.

Figure 1.

Illustration of sectional view in factory renovation.

Designs initiated from sectional drawings are often inclined towards exploring spatial possibilities, aligning seamlessly with the primary focus of factory renovations on internal spatial reorganization. The renovation of the Lens Beijing headquarters, situated within a 1958 vintage factory, saw the subdivision of its original vast space. From the architect’s hand-drawn sectional sketches, one can distinctly discern the architect’s comprehension of the architectural structure, the reassignment of spatial functionalities, and the cultivation of diverse spatial atmospheres. These elements accurately convey the architect’s contemplations on spatial transformation and conceptual expression. The Jiangsu Wuxi Canal Hub 1958 project, situated on the site of an old steel factory, features a notably large third factory building. In its renovation, architects similarly utilized sectional drawings to organize and arrange the internal spatial forms and relationships. During the presentation of the outcomes, they visually articulated the concept of a “miniature city” through sectional perspectives. Conversely, the inaugural renovation project of the Great Wall Collider at the Beijing Huairou Science City meticulously employed sectional drawings to refine structural modifications. Integrating the requirements of lower-level spaces, while preserving the existing roof’s core structure, architects strategically inserted skylights at appropriate positions to optimize indoor lighting. The harmonious integration of new and old structures underscores the project’s overarching goal of maximizing the utilization of the original architectural features. Many other outstanding cases exist but are not introduced individually in this paper. In the context of sustainable urban development, the previously mentioned traditional sectional renovation design of old factories presents unique challenges. These challenges necessitate a comprehensive understanding of their historical and functional aspects. Architects must integrate historical, functional, aesthetic, and environmental values into their sectional renovation plans. This process often demands extensive knowledge and experience, as dealing with buildings of different constructions is time-consuming and involves collecting and integrating multifaceted information [9]. Traditional architectural design processes such as sketching, refining, evaluating, and redesigning are particularly labor-intensive and costly when it comes to the mass renovation of factory sections.

Artificial intelligence (AI) is rapidly developing and being applied in many areas [10,11,12,13,14,15,16,17]. Given the high frequency of similar types in factory sections, there is a significant amount of repetitive work, making it a prime candidate for the application of emerging AI technologies [18,19]. With the continuous development of computer science technology and the inevitable trend of future smart city construction, the technical intelligence domain of urban design and architectural design is being increasingly explored by designers. For instance, many architects are attempting to use AI to assist in the design of architectural drawings, enabling the rapid and intelligent generation of layout plans, which significantly enhances design efficiency [20,21,22]. Generative Adversarial Networks (GANs), introduced by Goodfellow et al. [23] in 2014, are a type of generative model in deep learning that is widely used to produce new data with the same statistical distribution as the given dataset. In the field of computer vision, GANs learn image features and are employed to generate new images. They have been successfully applied in image restoration, style transfer, and enhancement, demonstrating impressive results [24,25,26]. Moreover, GANs have shown versatile potential in various areas, including game design [27], analysis of healthcare data [28], understanding the structure and features of images [29], and other computer vision applications. GANs have also been adapted for architectural design assistance. Chan and Spaeth [30] have used GANs to transform architectural conceptual sketches into realistic renderings. Ali and Lee [31] propose an integrated bottom-up digital design approach using deep convolutional GANs to explore early-stage architectural design and generate intricate facade designs for urban interiors. Huang et al. [32] have developed an automated design process using GANs to accelerate performance-driven urban design, optimizing urban morphology for better environmental quality in real-time. Fei et al. [33] introduced a knowledge-enhanced GAN approach for the schematic design of framed tube structures, integrating domain knowledge for compliance checking and significantly boosting design efficiency. GANs can also be employed for automatic floor layout generation, even competing with floor plans designed by professional architects [34,35]. Furthermore, Du et al. [36] successfully applied GANs to the generation of 3D architectural design schemes, providing a valuable approach for realistic architecture fabrication with coordinated geometry and texture.

One GAN-based algorithm named pix2pix, proposed by Isola et al. [37], can be used for image category transformation and is commonly utilized in the field of architectural design for generating architectural drawings. Wan et al. [38] explore using pix2pix to generate building plans, aiming to reduce energy consumption. Trained on a characterized sample set, the neural network successfully optimizes space allocation, leading to a notable decrease in energy usage. Additionally, pix2pix has been used to generate layouts for various architectural types, including residential buildings, emergency departments in hospitals, outdoor green spaces, campuses, and classroom layouts [39,40,41,42,43,44]. Building on this, Kim et al. [45] use pix2pix to enhance door placement in building plans, significantly boosting door generation accuracy and room interconnectivity, thus aiding architects in creating more coherent spatial layouts. The capabilities of pix2pix in architectural drawing design have been established, suggesting its potential for the automated generation of sectional drawings in factory renovations. However, current research has not yet thoroughly investigated the ability of pix2pix to generate sectional drawings to aid in design, which is the focus of this paper.

To this end, this study selects sectional drawings from a dilapidated industrial park in Changsha, China, that is in need of renovation, as the data source. Training samples are constructed through manually designed sectional renovation plans, enabling the training of the pix2pix model to learn factory sectional design techniques. Tests are conducted on actual factory sectional drawings slated for renovation to validate the effectiveness of GANs in factory sectional design. We outline our contributions as follows:

- To overcome the extensive time and labor costs associated with traditional manual design of factory renovation plans, we have developed a sectional drawing generation method based on the pix2pix model, facilitating architectural design with artificial intelligence support.

- We constructed a dataset of sectional renovation plans that are applicable to dilapidated factories, developed by architects through manual design interventions tailored for the factories in need of renovation.

- By introducing flexibility and a diversity of architectural design schemes during model training, we demonstrated that the model can learn various architectural drawing renovation techniques and possess a certain degree of creative autonomy.

The rest of this paper is organized as follows: Section 2 introduces the proposed pix2pix-based algorithm for generating factory sectional drawings. Section 3 presents the dataset used, the experimental setup, the results obtained, and an analysis and evaluation of these results. Section 4 discusses the practical applications and limitations of using GANs in architectural design. Lastly, we summarize the findings of this study in Section 5.

2. Methodology

2.1. Pix2pix Background

Pix2pix is an image-to-image translation algorithm based on GANs [23,37]. A GAN consists of two networks: a generator and a discriminator. The generator is responsible for producing realistic fake images, while the discriminator attempts to distinguish between real images and the fake images generated by the generator. In pix2pix, both the generator and the discriminator receive conditional inputs, that is, paired input and target output images. The introduction of conditional inputs allows the generator to produce corresponding outputs based on the given input, thus realizing supervised learning for image-to-image translation tasks. The objective function of the pix2pix algorithm consists of two parts: adversarial loss and L1 loss. The adversarial loss utilizes the structure of conditional GANs for training both the discriminator and the generator networks. This process can be described as a binary classification problem in which the generator aims to minimize the probability that generated images are classified as fake. The adversarial loss is defined in Equation (1):

$$\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y}\left[\log D(x, y)\right] + \mathbb{E}_{x,z}\left[\log\left(1 - D(x, G(x, z))\right)\right] \tag{1}$$

where $D$ is the discriminator network and $G$ is the generator network. The objective function aims to maximize the log probability of the discriminator for real images $y$ and minimize the log probability of the discriminator for generated images $G(x, z)$. Here, $x$ is the input image and $z$ is the noise.

L1 loss is used to constrain pixel-level matching between the generated image and the target output image to promote similarity between the generated and target output images. L1 loss is defined as:

$$\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y,z}\left[\lVert y - G(x, z) \rVert_1\right] \tag{2}$$

where $y$ is the target output image, $G(x, z)$ is the generated image, and $\lVert \cdot \rVert_1$ represents the L1 norm, i.e., the sum of absolute values. The choice of L1 norm over other norms, such as L2, is based on its ability to preserve sharpness and fine details in the generated images. Unlike the L2 norm, which can lead to overly smooth results by averaging pixel values, the L1 norm minimizes differences at the pixel level without introducing excessive smoothing, thereby enhancing the realism and structural integrity of the generated images.

Our decision to use L1 loss aligns with the original pix2pix implementation [37], which found that L1 encourages less blurring and promotes pixel-level fidelity. This combined approach, using both L1 loss and cGAN loss, allows the generator to produce high-quality image translations by leveraging the strengths of both methods.

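The final objective function is defined as in Equation (3):

$$G^{*} = \arg\min_{G}\max_{D}\; \mathcal{L}_{cGAN}(G, D) + \lambda\,\mathcal{L}_{L1}(G) \tag{3}$$

where $\mathcal{L}_{cGAN}$ is the adversarial loss, $\mathcal{L}_{L1}$ is the L1 loss, and $\lambda$ is a hyperparameter used to balance the two. By minimizing the objective function, a generator network can be trained to transform input images into target output images.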
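As a rough illustration of how the adversarial term and the weighted L1 term combine, the following NumPy sketch evaluates both on toy arrays. It is not the training code used in this study: the function names are hypothetical, the discriminator outputs are stand-in numbers rather than network predictions, and the weight of 100 is an assumption taken from the original pix2pix paper [37]. The L1 term is averaged over pixels rather than summed, which only rescales the weight.

```python
import numpy as np

LAMBDA_L1 = 100.0  # assumed weight, as reported in the original pix2pix paper


def adversarial_loss(d_real, d_fake, eps=1e-12):
    """E[log D(x, y)] + E[log(1 - D(x, G(x, z)))] for discriminator outputs in (0, 1)."""
    d_real = np.clip(np.asarray(d_real, dtype=np.float64), eps, 1.0 - eps)
    d_fake = np.clip(np.asarray(d_fake, dtype=np.float64), eps, 1.0 - eps)
    return float(np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake)))


def l1_loss(target, generated):
    """Mean absolute pixel difference between target and generated images."""
    target = np.asarray(target, dtype=np.float64)
    generated = np.asarray(generated, dtype=np.float64)
    return float(np.mean(np.abs(target - generated)))


def generator_objective(d_fake, target, generated, lam=LAMBDA_L1, eps=1e-12):
    """Generator-dependent part of the combined objective: adversarial term + lam * L1."""
    d_fake = np.clip(np.asarray(d_fake, dtype=np.float64), eps, 1.0 - eps)
    return float(np.mean(np.log(1.0 - d_fake))) + lam * l1_loss(target, generated)


# Toy values standing in for real images and discriminator predictions.
target = np.zeros((2, 4, 4))
generated = np.full((2, 4, 4), 0.1)
d_real = np.array([0.5, 0.5])
d_fake = np.array([0.5, 0.5])

adv = adversarial_loss(d_real, d_fake)
gen = generator_objective(d_fake, target, generated)
print(f"adversarial term: {adv:.4f}, generator objective: {gen:.4f}")
```

With a large weight, the L1 term dominates early in training, which pulls the generated section towards the architect's scheme before the adversarial term sharpens the details.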

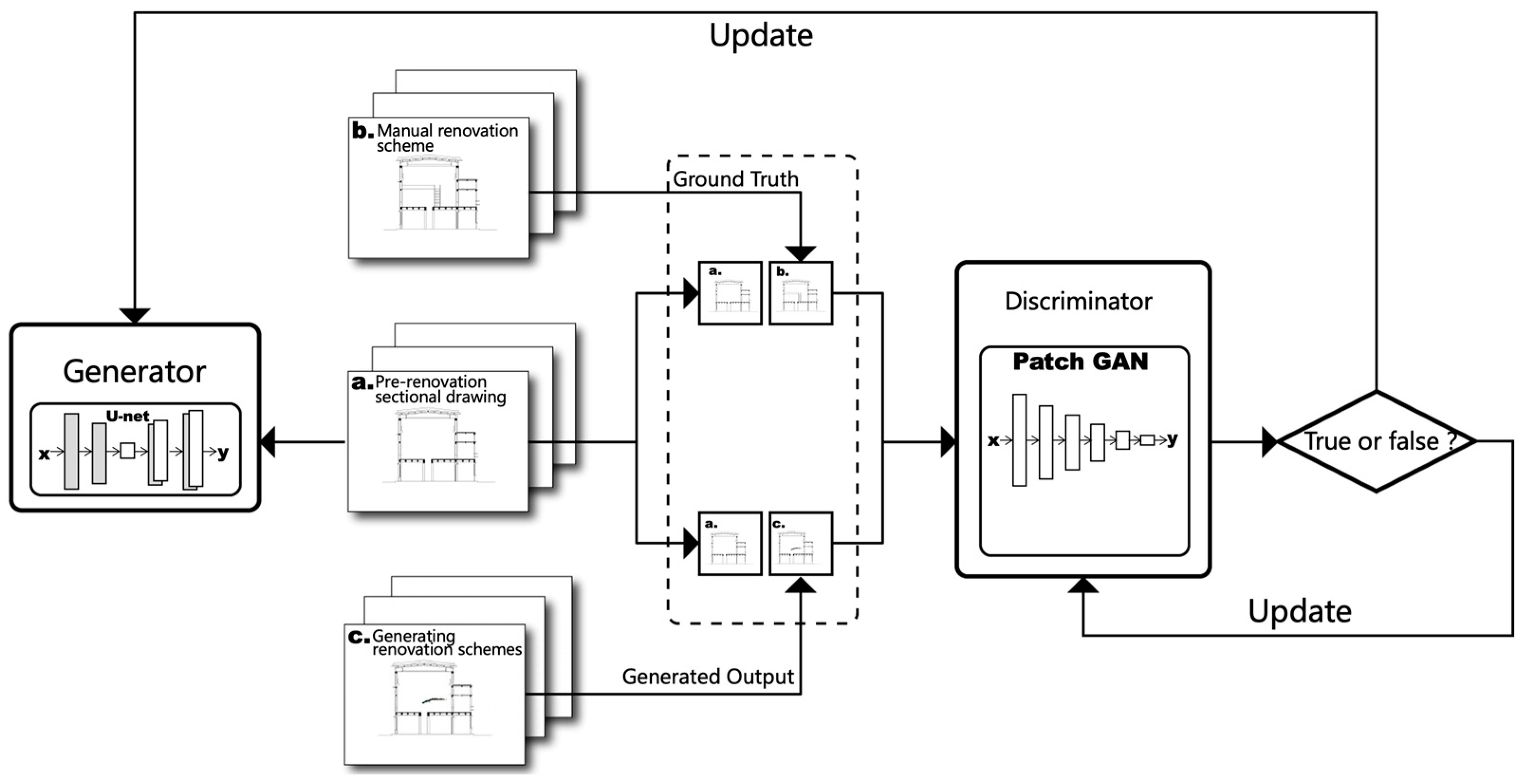

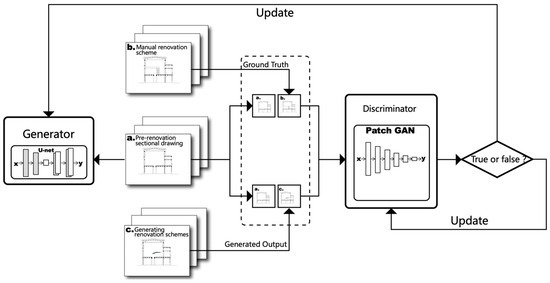

2.2. Pix2pix-Based Sectional Renovation Schemes Generation

In the process of training the pix2pix model to generate sectional schemes, each iteration requires pairs of images to train the pix2pix algorithm. These include the original sectional drawing a and its corresponding sectional scheme b modified using single or combined techniques, as shown in Figure 2. When drawing a is input into the generator and processed by the neural network, the machine-generated sectional renovation scheme c is obtained, which can be understood as a false value predicted by the neural network. Finally, a and c can form a pair of false values used to train the discriminator’s ability to discern the quality of sectional renovation schemes.

Figure 2.

Illustration of the pix2pix model.

Simultaneously, a and b are also combined into a pair of true values, which, along with the pair of false values, are input into the discriminator. The discriminator then learns to classify the combination of a and c (false values) against the combination of a and b (true values), and the parameters of the discriminator network are updated accordingly. The updated parameters include the weights and biases of the neural layers within the discriminator. These updates are performed through backpropagation, using the gradients derived from the discriminator’s classification errors between true and false pairs. By adjusting these parameters, the discriminator improves its ability to accurately classify real versus generated images, thereby enhancing the overall adversarial training process. The feedback from these updates is then used to refine the generator, enabling it to produce increasingly realistic images, i.e., more plausible renovation schemes, to deceive the discriminator. Through this adversarial optimization, a generator is ultimately obtained that can master the techniques of sectional drawing renovation. When faced with a new sectional drawing requiring renovation, it only needs the original sectional drawing as input to generate a new sectional renovation scheme.
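The pairing of true and false values described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in this study: the helper name is hypothetical, the images are random placeholders with three channels, and conditioning the discriminator by concatenating the two images along the channel axis is one common way of feeding pairs to a conditional discriminator.

```python
import numpy as np


def make_discriminator_batch(a, b, c):
    """Build the true pair (a, b) and the false pair (a, c) with their labels.

    a: original sectional drawing, b: architect-designed scheme,
    c: generator output; each is an array of shape (H, W, C).
    """
    real_pair = np.concatenate([a, b], axis=-1)  # (a, b): label 1
    fake_pair = np.concatenate([a, c], axis=-1)  # (a, c): label 0
    pairs = np.stack([real_pair, fake_pair], axis=0)
    labels = np.array([1.0, 0.0])
    return pairs, labels


# Random placeholders standing in for 256 x 256 drawings.
rng = np.random.default_rng(0)
a = rng.random((256, 256, 3))
b = rng.random((256, 256, 3))
c = rng.random((256, 256, 3))

pairs, labels = make_discriminator_batch(a, b, c)
print(pairs.shape)  # (2, 256, 256, 6)
```

Both pairs share the same original drawing a, so the discriminator is judging whether a scheme is a plausible renovation of that particular section rather than whether it looks like a section in general.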

3. Results

3.1. Constructing Training Dataset

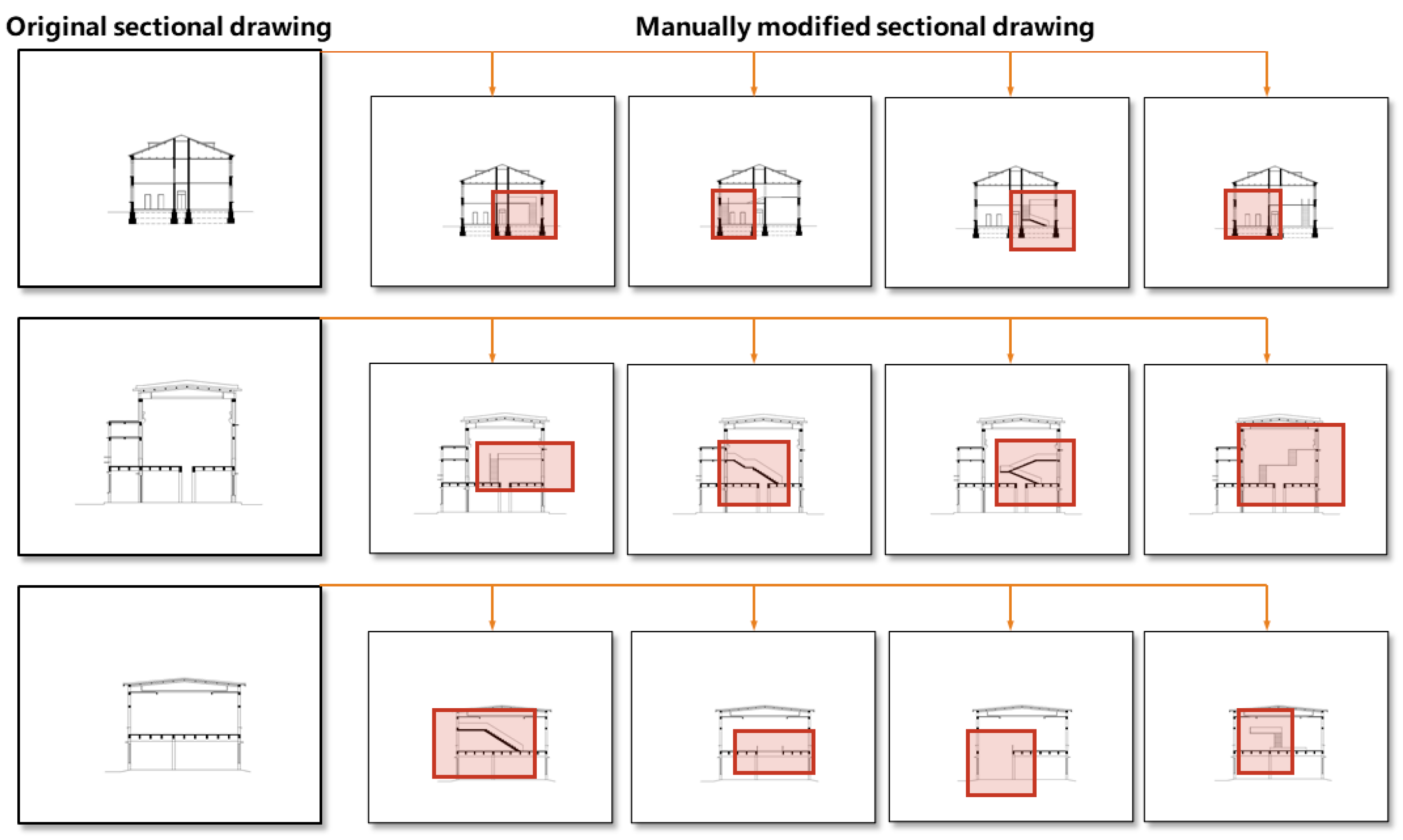

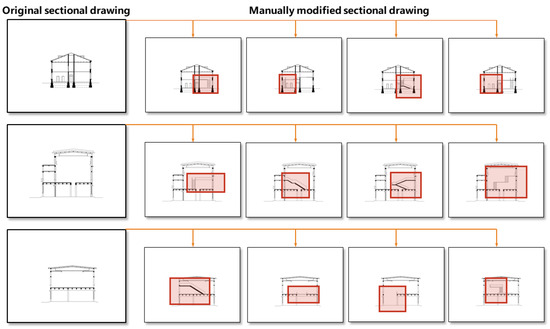

The training data were collected from sectional drawings of a dilapidated industrial park in Changsha, China, that was in need of renovation. These drawings were resized to a consistent dimension of 256 × 256 pixels for uniformity. Training samples were constructed from manually designed sectional renovation plans. To obtain better experimental results, we excluded redundant and low-value sectional drawings from the original data and ultimately selected 34 original sectional drawings. If the pix2pix model were trained with only one renovation scheme image per original sectional drawing, the generator would learn to fit only one image distribution, as in a traditional supervised learning task. It would then be difficult to imbue the generator with the creative thinking necessary for architectural design, and the model would lack practical application value. Therefore, in this study, each original sectional drawing underwent architectural transformations by professional architects, including the removal of floor slabs, addition of stairs, and installation of partitions [46,47]. These transformations were aimed at generating diverse renovation schemes from each original drawing, ensuring varied samples for training the model. These modifications resulted in multiple renovation schemes, which were then peer-reviewed to ensure alignment with architectural principles. Only those transformations that were validated through this peer review process, confirming their practicality and adherence to realistic architectural scenarios, were included in the dataset. This process ensured the relevance and realism of the transformations, simulating actual renovation challenges and solutions.

Each of these renovation scheme images was combined with its corresponding original sectional drawing to form a group of training samples in the training set. Examples of training samples derived from manually designed renovation schemes are shown in Figure 3:

Figure 3.

Examples of sectional renovation training samples.

This study aims to enable the generator network to fit multiple different distributions under the same input during training, i.e., to learn multiple transformation methods for one original sectional drawing through the aforementioned data processing methods. It should be noted that while maintaining basic usability, this study aims to achieve breakthroughs in creativity. Therefore, in subsequent discussions of usability, we prioritize effective transformation methods when evaluating image quality, which may differ from other experiments. This represents a compromise on usability while pursuing creativity in this study. The hypothesis regarding achieving breakthroughs in creativity will be validated through experiments. Through this approach, a total of 119 sets of renovation schemes were obtained as training samples in this study.

Due to the small size of the training dataset, we employed data augmentation techniques to expand the dataset. This technique includes geometric transformations (such as flipping, rotation, scaling, cropping, and shifting), color transformations, and perturbations. However, because the sectional drawings in this experiment have specific graphical features, methods such as rotation, cropping, or shifting would alter these features and affect the results. Therefore, we only adopted the image flipping technique from the data augmentation methods. Eventually, a training set consisting of 238 renovation schemes was formed, with each renovation scheme containing one pre-renovation sectional drawing and one post-renovation sectional drawing.
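The flipping step can be sketched as below. The text specifies only that image flipping was used; the sketch assumes a horizontal (left-right) flip, since a vertical flip would turn the section upside down. The function name and the blank placeholder arrays are hypothetical. The same flip is applied to both images of a pair so that the pre-renovation and post-renovation drawings stay aligned.

```python
import numpy as np


def augment_with_horizontal_flip(pairs):
    """Return the original (before, after) pairs plus a mirrored copy of each.

    Each image is an array of shape (H, W, C); axis 1 is the horizontal axis.
    """
    augmented = list(pairs)
    for before, after in pairs:
        # Mirror both drawings identically so the pair remains consistent.
        augmented.append((before[:, ::-1].copy(), after[:, ::-1].copy()))
    return augmented


# Blank placeholders standing in for the 119 manually designed pairs.
pairs = [(np.zeros((8, 8, 3)), np.zeros((8, 8, 3))) for _ in range(119)]
augmented = augment_with_horizontal_flip(pairs)
print(len(augmented))  # 238
```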

To evaluate the model’s performance, we selected 24 sets of sectional drawings from the original dataset as the test set. Importantly, these test set drawings were not used during the model’s training or validation phases, thereby preventing data leakage and ensuring an unbiased assessment of the model’s capabilities. The test set consisted of the same type of sectional drawings used in the training process, but comprised distinct samples not previously encountered by the model, ensuring the reliability and integrity of the evaluation.

As a result, we obtained a collection of 214 pre-renovation sectional drawings, denoted as Dataset A, and a corresponding set of 214 manually crafted renovation scheme drawings, denoted as Dataset B, for our training set. Additionally, the 24 held-out sectional drawings form the test set, denoted as Dataset C.

3.2. Model Parameter Setting

3.2.1. Optimizer Setting

During the training process of the pix2pix model, the optimizer settings directly influence the convergence speed and quality of the results. Therefore, choosing an appropriate optimizer is crucial. In this study, we adopted the standard approach outlined in reference [23], which involves alternating training of the discriminator and the generator. Furthermore, we referenced the literature [37] and utilized mini-batch stochastic gradient descent along with the Adam optimizer [48]. The hyperparameters are configured as shown in Table 1.

Table 1.

Hyperparameters involved in the model.
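For readers unfamiliar with the Adam optimizer [48], a single parameter update can be sketched in NumPy as follows. The default values below (learning rate 0.0002, β1 = 0.5, β2 = 0.999) are those reported in the original pix2pix paper [37] and are an assumption here; the values in Table 1 take precedence. The function name and the toy gradient are hypothetical.

```python
import numpy as np


def adam_step(param, grad, m, v, t, lr=2e-4, beta1=0.5, beta2=0.999, eps=1e-8):
    """Apply one Adam update and return the new parameter and moment estimates.

    t is the 1-based step counter used for bias correction.
    """
    m = beta1 * m + (1.0 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad ** 2     # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)                # bias-corrected first moment
    v_hat = v / (1.0 - beta2 ** t)                # bias-corrected second moment
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v


# Toy weights and gradient; in training these come from backpropagation.
w = np.array([1.0, -2.0])
m = np.zeros_like(w)
v = np.zeros_like(w)
grad = np.array([0.5, -0.5])

w, m, v = adam_step(w, grad, m, v, t=1)
print(w)
```

In alternating training, one such update is applied to the discriminator parameters and then one to the generator parameters, each with its own moment estimates.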

3.2.2. Iteration Count Setting

During the training process, the iteration count is a critical hyperparameter that directly influences the convergence speed and quality of the model. The iteration count can be understood as the number of times the model is trained using the entire training dataset. For instance, in this experiment, there are a total of 238 sets of training data. In one iteration, the algorithm traverses through all 238 sets of data for computation and then updates the parameters of the discriminator and generator once before proceeding to the next iteration.

To compare the impact of different iteration counts on the training results, the model training was conducted with iteration counts of 100, 200, 300, and 400, respectively, based on parameter settings from similar experiments [49]. In our experiments, we observed that convergence was generally achieved by 400 iterations, indicated by stable validation loss and satisfactory image quality. If convergence is not achieved within 400 iterations, the training can be extended with continued monitoring of the validation loss and image quality to ensure effective learning.

3.3. Results Evaluation

3.3.1. Iteration Count Setting

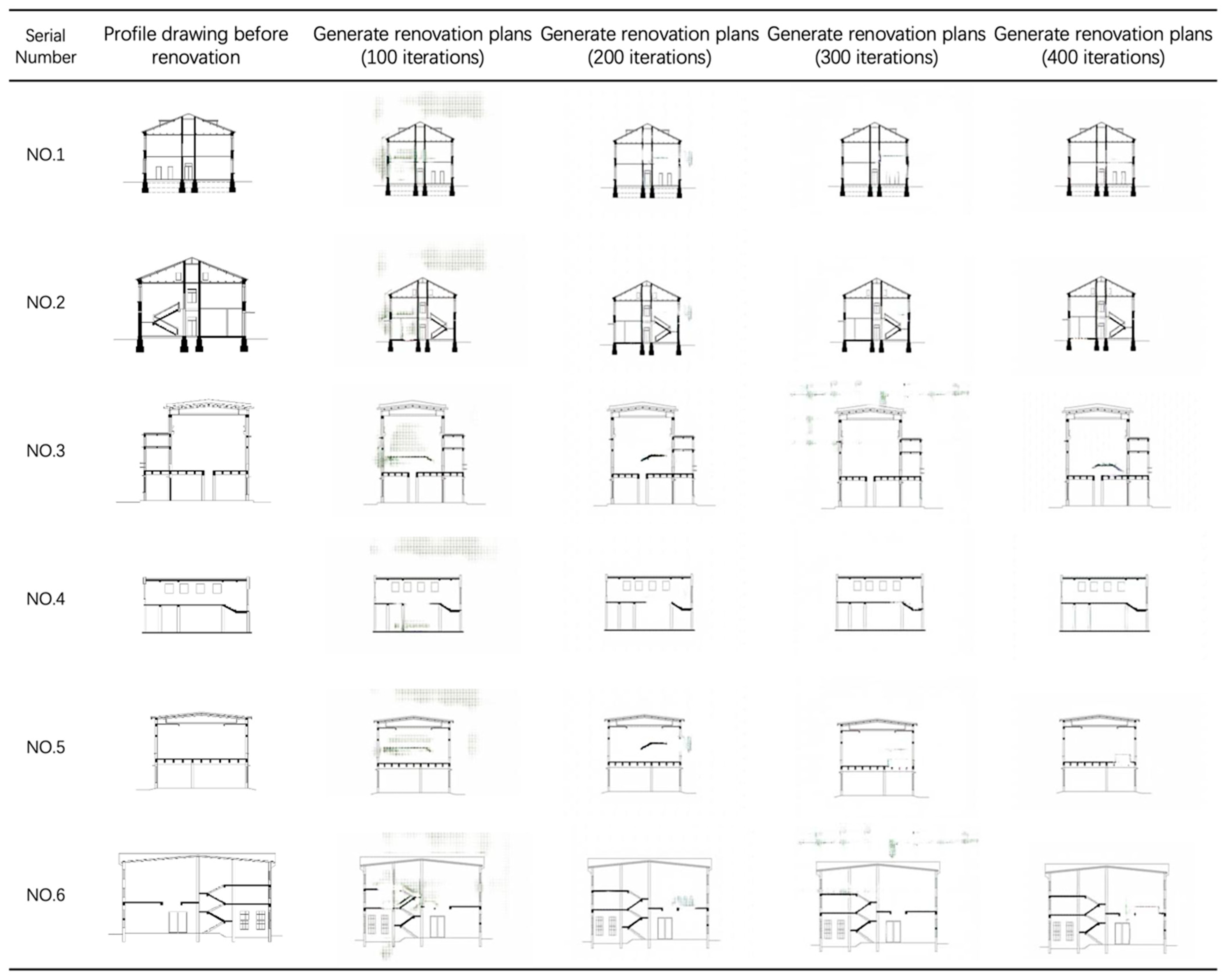

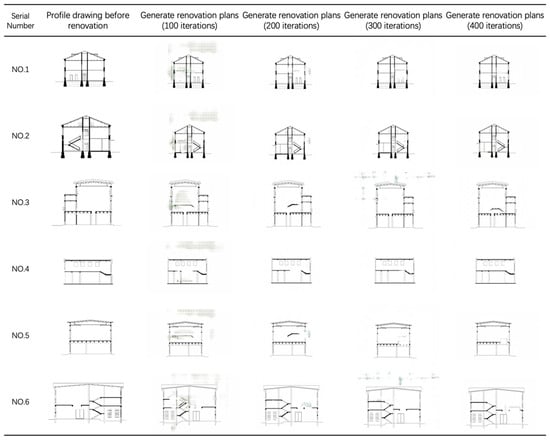

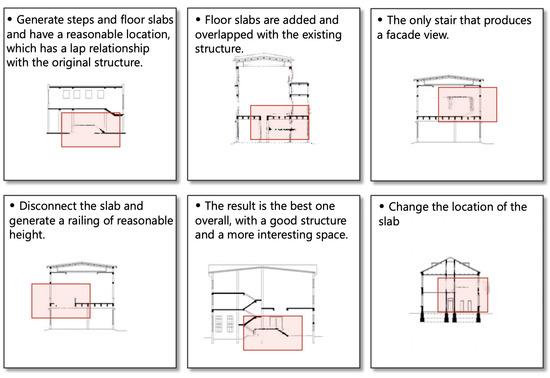

Upon completion of the training, a subset of output results is illustrated in Figure 4, with the input image and generated image presented from left to right. A comparative analysis between the output image and target image reveals that pix2pix adeptly transforms profiles, adhering closely to conventional design principles. Hence, it is evident that when presented with well-defined structural profiles, pix2pix demonstrates the capacity to generate plausible alterations.

Figure 4.

Comparison of input image and generated image.

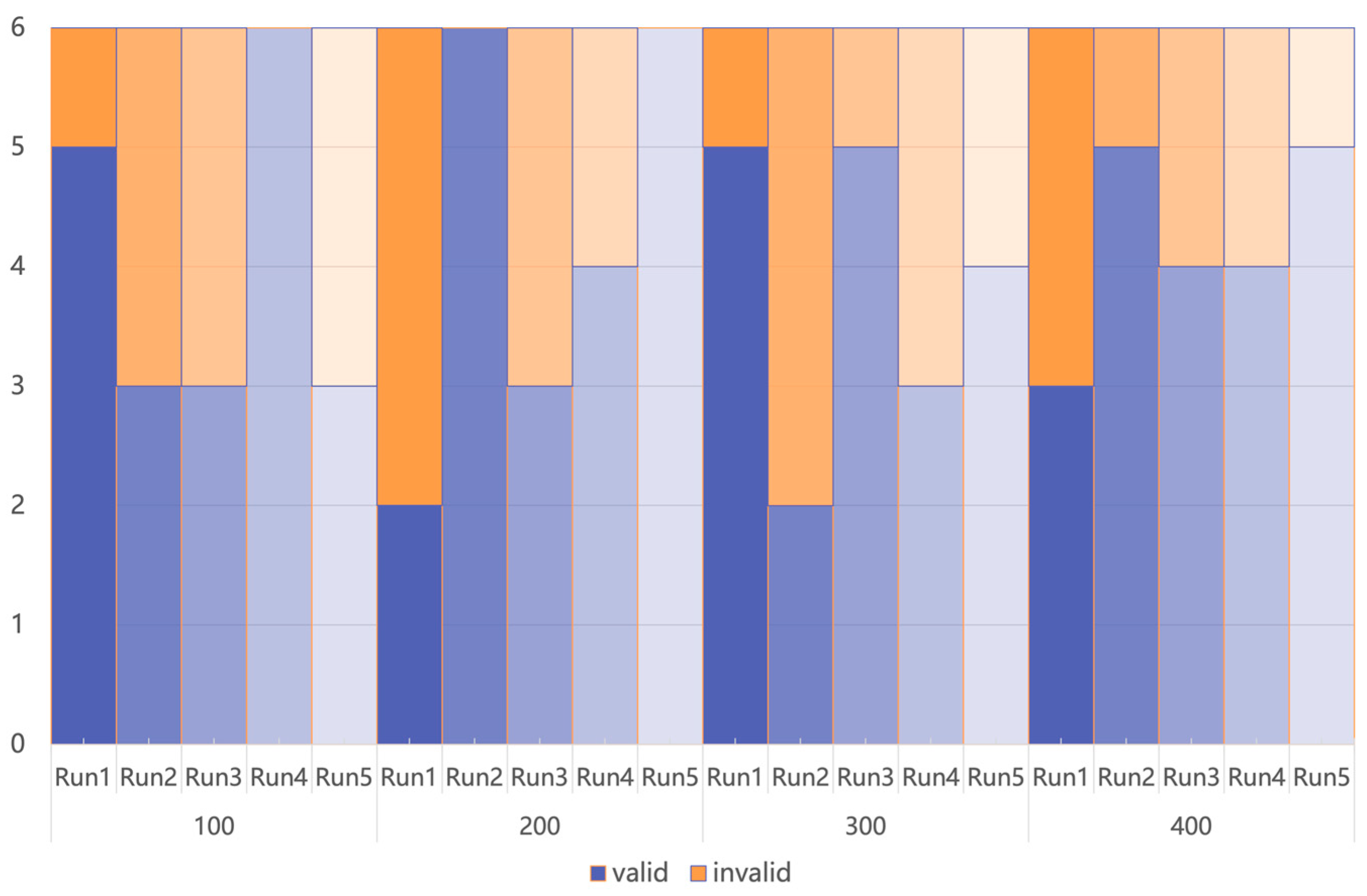

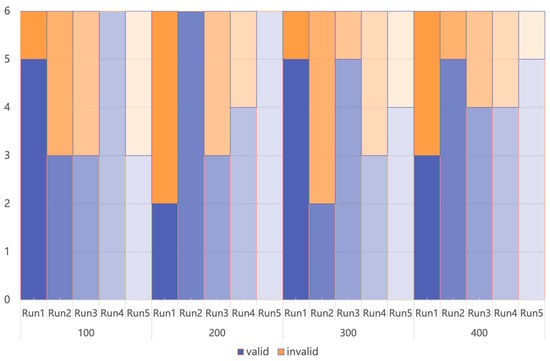

To provide clearer and more convincing results, we present statistical charts of all generated outcomes in Figure 5. The x-axis represents iteration counts of 100, 200, 300, and 400, each run five times. The y-axis shows the number of images generated in each run, with blue blocks indicating usable images and orange blocks indicating unusable ones. Transparency is used to distinguish differences between runs at the same iteration count. Overall, a significant proportion of blue blocks can be observed, indicating that the model successfully learned techniques for image transformation.

Figure 5.

Count of valid and invalid images for different iteration counts.

While each output image may not precisely match the target image, from the standpoint of architectural design, the transformation of profiles embodies a high degree of flexibility and subjectivity. Consequently, deviations from the target image in the output cannot be dismissed outright as erroneous outcomes. When faced with the same original profile, distinct designers would inevitably propose diverse alternatives, reflecting the inherent uncertainty characteristic of human cognition and machine learning outcomes. Indeed, upon scrutinizing the output results, it becomes apparent that pix2pix has preliminarily assimilated certain “rules” pertinent to profile transformation design.

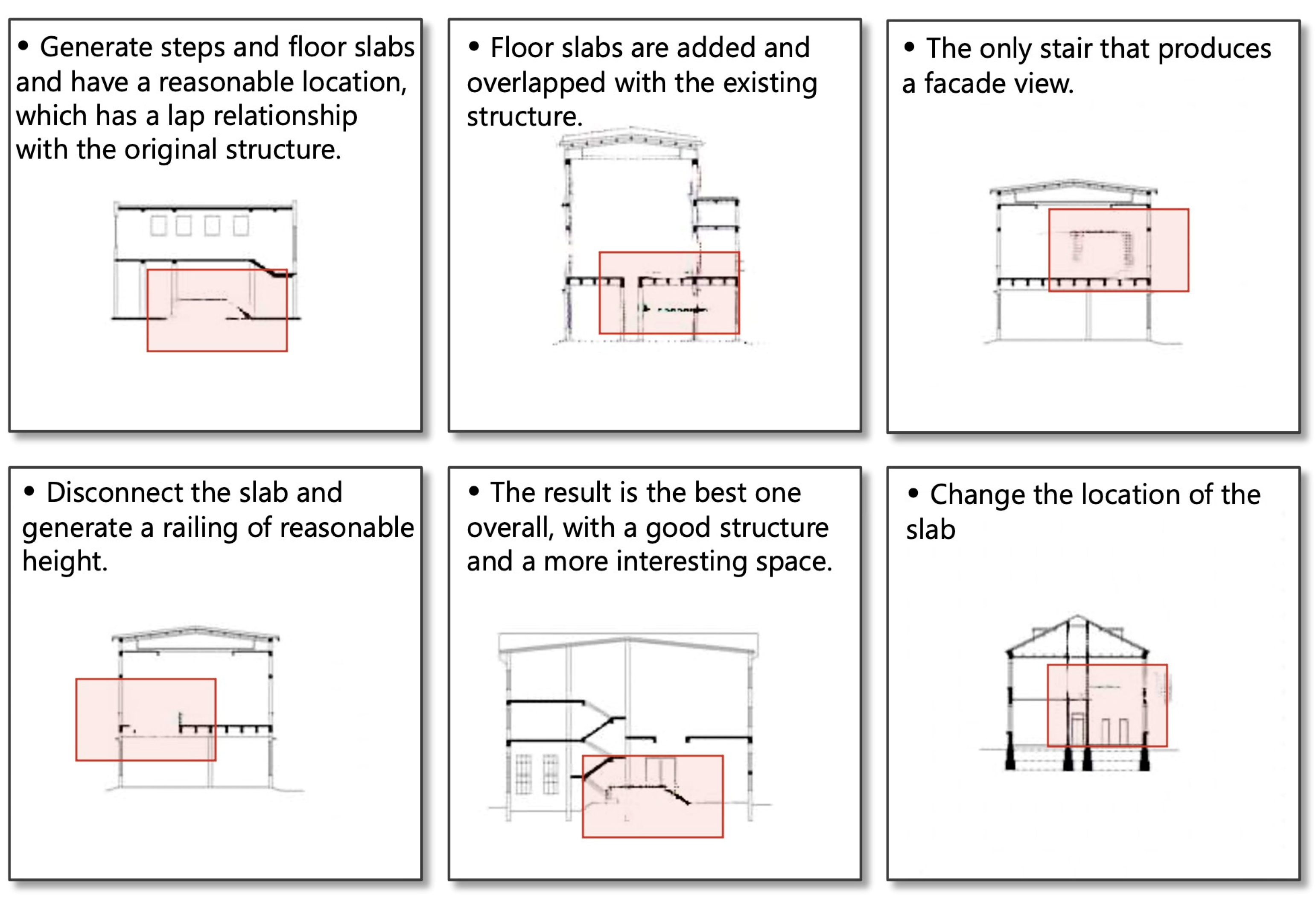

First, we delve into the structural perspective. The repertoire of structural modifications within the input dataset B encompasses fundamental techniques such as floor variations and staircase installations, all of which are evidently assimilated by pix2pix in its training outcomes. Upon closer scrutiny of the generated imagery, take the example depicted below. In contrast to the original sectional profile, this generated output delineates a distinctly defined morphology of the staircase, seamlessly integrated with adjoining floor structures that interface with the building’s existing load-bearing framework. From a structural integration standpoint, the generated output appears rational, as manual calibration yields a sectional image indistinguishable from one subjected to human intervention.

Secondly, considering the scale aspect, the pix2pix algorithm learns solely from pixel distributions within images, which refer to the spatial arrangement and patterns of architectural features and design elements. Given that the scale of transformation techniques in the input dataset is meticulously controlled, the majority of generated images exhibit scales that are perceptually congruent with conventional norms. For precise scrutiny, we can analyze one particular result: assuming the original sectional height in the diagram below is 8400 mm, post-floor installation, this height is partitioned into 2800 mm and 5600 mm sections, conforming to ergonomic proportions and representing a comfortable and logical height division consistent with human scale.

Lastly, from a spatial perspective, owing to the rudimentary nature of transformation techniques within the input dataset and the relatively constrained size of the training set, the generated outcomes are comparably straightforward. Nevertheless, discernible alterations in internal spatial configurations between the generated results and the original sectional profile are evident. Basic transformation techniques employed by the generated results exert notable changes on spatial configurations, such as elevating or interrupting floor structures to disrupt homogeneous spatial morphologies, differentiating between larger and smaller spaces, introducing staircases to alter spatial boundaries, thereby facilitating vertical circulation, and so forth.

3.3.2. Evaluation of Results Generated with Different Iteration Counts

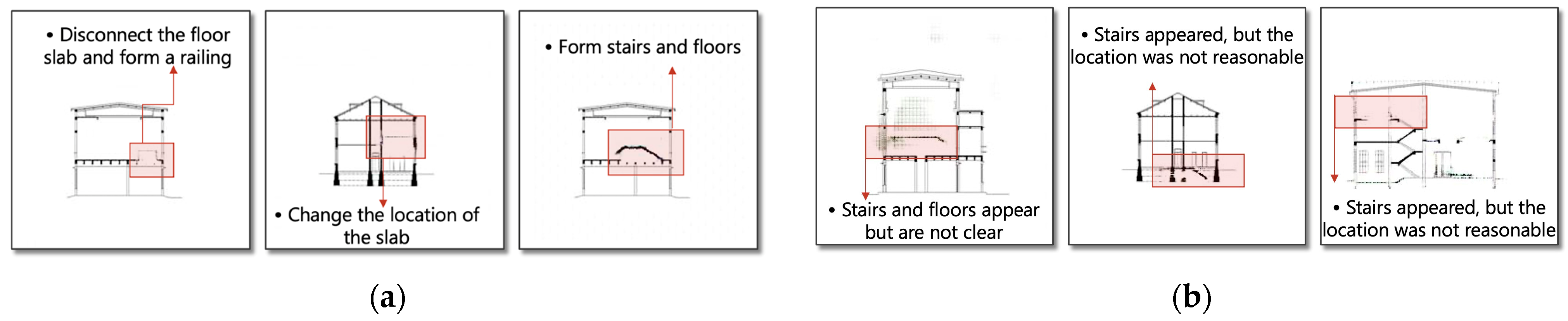

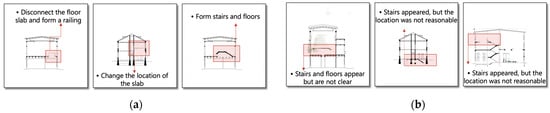

After comprehensive evaluation of all results, we analyzed the impact of iteration numbers on the results. As shown in Figure 6, we divided the iterations into low iteration categories for 100 and 200 iterations, and high iteration categories for 300 and 400 iterations, conducting a horizontal comparison. It is evident from the final training outcomes that the images generated with 300 and 400 iterations exhibit clearer visual quality, with significantly fewer instances of implausible alterations in the profile. This observation underscores the critical role of iteration count in refining the reconstruction process and achieving more coherent results. Furthermore, higher iteration counts contribute to enhanced convergence towards desired transformations, thereby reducing the occurrence of aberrations or inconsistencies in the reconstructed profiles. This underscores the importance of meticulous iteration control in optimizing the quality and realism of the generated outputs in architectural transformation tasks.

Figure 6.

Comparative analysis of generated results across different iterations: (a) results generated with 300 and 400 iterations (partial); (b) results generated with 100 and 200 iterations (partial).

To further quantify the performance, we calculated the Pearson correlation coefficients between the generated images and the target images. The Pearson correlation coefficient measures the linear relationship between the pixel values of the generated and target images and is defined as:

$$r = \frac{\sum_{i=1}^{n}\left(g_i - \bar{g}\right)\left(t_i - \bar{t}\right)}{\sqrt{\sum_{i=1}^{n}\left(g_i - \bar{g}\right)^{2}}\,\sqrt{\sum_{i=1}^{n}\left(t_i - \bar{t}\right)^{2}}}$$

where $g_i$ and $t_i$ are the pixel values of the generated and target images, $\bar{g}$ and $\bar{t}$ are their respective means, and $n$ is the number of pixels. Our results show a mean correlation coefficient of 0.717, indicating a moderate to strong positive correlation. This demonstrates that the pix2pix algorithm captures a substantial number of structural and visual patterns from the target images.
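The Pearson correlation between a generated image and its target can be computed directly from the definition. The sketch below is illustrative only: the function name is hypothetical and the images are random placeholders, so the printed value has no relation to the 0.717 reported above.

```python
import numpy as np


def pearson_correlation(generated, target):
    """Pearson correlation coefficient between the pixel values of two images."""
    g = np.asarray(generated, dtype=np.float64).ravel()
    t = np.asarray(target, dtype=np.float64).ravel()
    g_centered = g - g.mean()
    t_centered = t - t.mean()
    denom = np.sqrt(np.sum(g_centered ** 2) * np.sum(t_centered ** 2))
    if denom == 0.0:
        raise ValueError("Pearson correlation is undefined for a constant image.")
    return float(np.sum(g_centered * t_centered) / denom)


# Random placeholders: a target and a noisy copy standing in for a generated image.
rng = np.random.default_rng(0)
target_img = rng.random((256, 256))
generated_img = target_img + 0.5 * rng.random((256, 256))

r = pearson_correlation(generated_img, target_img)
print(f"r = {r:.3f}")
```

Because the coefficient is invariant to brightness offsets and contrast scaling, it rewards agreement in where lines and filled regions fall rather than exact pixel values.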

It is noteworthy that, as shown in Figure 7, even in the low iteration categories, pix2pix exhibits the capacity to generate meaningful images, indicating its ability to learn essential features from input data. This resilience to iteration count challenges conventional assumptions, suggesting a potential efficiency in feature extraction during early training stages. This finding prompts a reevaluation of training strategies, emphasizing the importance of efficient feature learning over sheer iteration numbers. Such insights not only enhance our understanding of pix2pix’s learning dynamics but also offer valuable implications for optimizing deep learning models’ training processes, potentially leading to more resource-efficient and effective training methods in the future.

Figure 7.

Results generated with 100 and 200 iterations (partial).

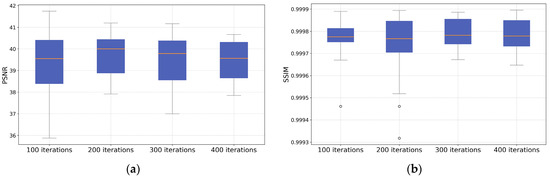

3.3.3. Evaluation of PSNR and SSIM Metrics

From the generated results listed above, it is evident that pix2pix possesses the capability to learn profile designs. However, the aforementioned evaluations lean towards subjective judgment. To provide a more objective assessment of the generated results, this paper introduces two metrics: Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM) to evaluate the similarity between the output and target images.

PSNR is a widely used image quality assessment metric in image processing and computer vision, typically employed to evaluate the quality difference between compressed or reconstructed images and their original high-quality versions. A PSNR value exceeding 30 dB indicates relatively good image quality. SSIM measures the structural similarity between two images based on luminance, contrast, and structural information, taking into account the human visual system’s ability to perceive changes in brightness and contrast. SSIM values range from −1 to 1, with higher values indicating greater similarity between the two images.
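As a rough illustration of the two metrics described above, the following sketch computes PSNR and a simplified, single-window SSIM for two flattened 8-bit grayscale images. It is not the evaluation code used in this study: the function names, the peak value of 255, and the sample pixels are assumptions, and SSIM is normally computed over local windows and averaged rather than once over the whole image. The stabilizing constants use the commonly adopted values K1 = 0.01 and K2 = 0.03.

```python
import math

MAX_PIXEL = 255.0  # assumption: 8-bit images

def psnr(output, target):
    """Peak signal-to-noise ratio in dB between two flat pixel sequences."""
    mse = sum((o - t) ** 2 for o, t in zip(output, target)) / len(target)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(MAX_PIXEL ** 2 / mse)

def global_ssim(output, target):
    """Simplified SSIM using one window that covers the whole image."""
    n = len(target)
    mu_o = sum(output) / n
    mu_t = sum(target) / n
    # Unbiased variance and covariance estimates
    var_o = sum((o - mu_o) ** 2 for o in output) / (n - 1)
    var_t = sum((t - mu_t) ** 2 for t in target) / (n - 1)
    cov = sum((o - mu_o) * (t - mu_t) for o, t in zip(output, target)) / (n - 1)
    c1 = (0.01 * MAX_PIXEL) ** 2  # stabilizes the luminance term
    c2 = (0.03 * MAX_PIXEL) ** 2  # stabilizes the contrast/structure term
    numerator = (2 * mu_o * mu_t + c1) * (2 * cov + c2)
    denominator = (mu_o ** 2 + mu_t ** 2 + c1) * (var_o + var_t + c2)
    return numerator / denominator

# Hypothetical flattened 2x2 images (illustrative values only)
target = [50, 100, 150, 200]
output = [52, 98, 151, 199]
psnr_value = psnr(output, target)
ssim_value = global_ssim(output, target)
```

For identical inputs, `psnr` returns infinity and `global_ssim` returns 1, which matches the interpretation that higher values mean greater similarity.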

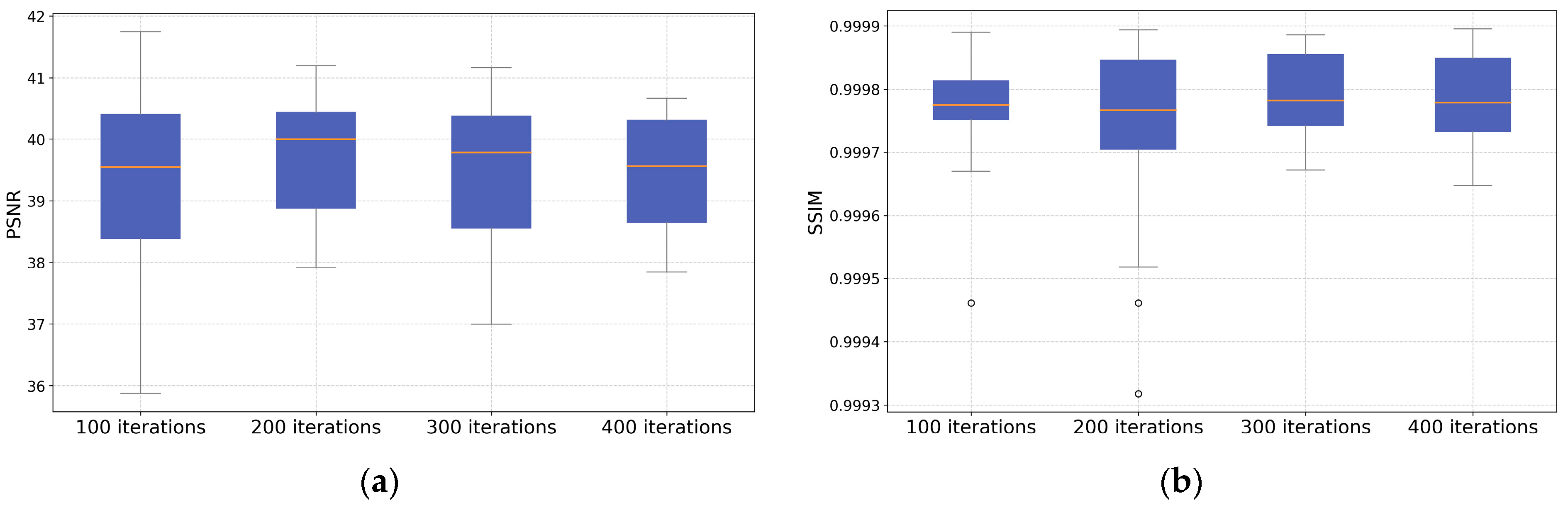

The generated results are divided into four groups according to iteration count (100, 200, 300, and 400). For each group, dataset B and the corresponding generated results are input in sequence for metric computation. The evaluation metrics for the four groups are illustrated in Figure 8, with specific numerical values detailed in Table 2. As noted above, a PSNR value greater than 30 dB is generally considered indicative of good quality, while an SSIM value closer to 1 indicates higher similarity to the target image.

Table 2.

The average PSNR and SSIM values for the generated results.

As shown in Figure 8, a cross-group comparison of the four data groups reveals a significant improvement in stability with increasing iteration counts. At 100 iterations, the SSIM index performs well, yet some anomalous values are observed, and the PSNR values are more scattered than in the other groups, indicating instability. The group with 200 iterations exhibits unstable SSIM values. Conversely, the groups with 300 and 400 iterations show narrow interquartile ranges (IQRs) for both PSNR and SSIM, indicating minimal fluctuation and better stability.
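The stability comparison above rests on the interquartile range of each group's metric values. A minimal sketch of that calculation follows, using Python's standard `statistics.quantiles`. The PSNR lists are made-up illustrative numbers, not the study's measurements, and the names `iqr` and `psnr_by_iterations` are hypothetical.

```python
import statistics

def iqr(values):
    """Interquartile range (Q3 - Q1) of a list of metric values."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    return q3 - q1

# Hypothetical per-image PSNR values in dB (illustrative only, not the paper's data)
psnr_by_iterations = {
    100: [31.0, 35.5, 38.0, 42.5, 47.0],
    300: [38.5, 39.0, 39.2, 39.5, 40.0],
}

# A smaller IQR means the metric fluctuates less across the test images
spread = {iters: iqr(values) for iters, values in psnr_by_iterations.items()}
most_stable = min(spread, key=spread.get)
```

With these sample values the 300-iteration group has the smaller spread, which is the kind of pattern a box plot makes visible.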

Figure 8.

Quantitative evaluation results of the profiles generated by the model with different training iterations: (a) PSNR statistics; (b) SSIM statistics.

Calculating the average PSNR and SSIM values for the four data groups, we observe that each group’s metrics fall within a favorable range, indicating that pix2pix can generate high-quality images even with relatively few iterations. However, the cross-group comparison shows that the results from 300 and 400 iterations are notably more stable than those from 100 and 200 iterations, which is consistent with the earlier subjective evaluation of the generated results. The difference between 300 and 400 iterations is minor, and given the longer computation time required for 400 iterations, 300 iterations emerges as the preferred iteration count.

In addition to using PSNR and SSIM for evaluating the quality of generated images, we also considered the average loss during the pix2pix training process to provide a more comprehensive assessment of model performance. During training, the average L1 loss and cGAN loss were monitored to track the stability and convergence of the model. The average L1 loss, which measures the pixel-level accuracy between the generated and target images, stabilized around 0.5234 after 400 iterations. Similarly, the average cGAN loss, which reflects the adversarial component of the training, stabilized around 0.9438.
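To make the two monitored quantities concrete, the sketch below computes an L1 loss and a generator-side adversarial loss in plain Python. This section does not spell out the exact loss formulation used in the experiments, so the code follows the formulation commonly associated with pix2pix, in which the adversarial term is a binary cross-entropy on the discriminator's outputs. All function names and sample values are hypothetical.

```python
import math

def l1_loss(generated, target):
    """Mean absolute pixel difference between generated and target images."""
    return sum(abs(g - t) for g, t in zip(generated, target)) / len(target)

def generator_adversarial_loss(disc_probs, eps=1e-12):
    """Binary cross-entropy of discriminator outputs against the 'real' label.

    disc_probs holds the discriminator's probabilities that the generated
    image (or its patches) is real; eps guards against log(0).
    """
    return -sum(math.log(max(p, eps)) for p in disc_probs) / len(disc_probs)

def average(values):
    """Average of the per-iteration losses recorded during training."""
    return sum(values) / len(values)

# Hypothetical losses logged over three training steps (illustrative only)
l1_history = [
    l1_loss([0.2, 0.8], [0.0, 1.0]),
    l1_loss([0.1, 0.9], [0.0, 1.0]),
    l1_loss([0.05, 0.95], [0.0, 1.0]),
]
gan_history = [generator_adversarial_loss([p]) for p in (0.3, 0.4, 0.5)]
avg_l1 = average(l1_history)
avg_gan = average(gan_history)
```

Plotting or averaging such histories over training is one way to judge whether the losses have stabilized.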

Furthermore, from an overall image metric perspective, manually transformed results align closely with those generated by pix2pix, demonstrating excellent image quality. This suggests the effectiveness of pix2pix in generating high-quality images comparable to those manually produced, further highlighting its potential in various applications.

4. Discussion

This study aims to use GANs to assist profile-based generative design for factory renovation. Our method can propose relatively reasonable renovation schemes from given profile images, with rich detail and realistic effects in the generated images, thereby providing feasible design directions for factory renovation. The evaluation results are strong, with PSNR exceeding 39.100 dB and SSIM surpassing 0.99974, indicating high quality and close similarity between the generated and target images. While machine learning may not exhibit significant advantages over human architects in terms of image quality and accuracy, it substantially improves the efficiency of producing schemes. Provided that the generated images remain basically reasonable, this efficiency gain can alleviate the workload of architects. In large-scale projects especially, architects face a dual challenge: they must undertake extensive preliminary work to arrive at a few viable design options, and they must also handle a large number of sectional drawings. In such cases, the proposed method can serve as an effective tool to assist with design tasks. Furthermore, we discuss the usability of the experimental results and the potential for further optimization of this method from the perspective of architectural expertise. In the subsequent practical application and testing phase, we will use professional drawing software such as AutoCAD 2018 and Photoshop 2020 to further refine the drawings and explore how the images can be further optimized, thereby enhancing the credibility and practicality of our research findings [50,51].

However, we must acknowledge several limitations in our experiments. Firstly, due to the relatively small dataset size, the experimental results may exhibit a somewhat homogeneous trend and require validation with larger datasets. Secondly, compared to other architectural drawings, profile diagrams contain less information. From an aesthetic perspective, the generated profiles are less attractive and less detailed than those created by professional architects. Therefore, further manual optimization is currently required to provide a comprehensive solution. Nevertheless, from the perspective of architectural design expertise, this also preserves designers’ creative freedom and offers more possibilities for customized solutions.

Looking ahead, we anticipate further optimization and development of GANs to provide architects with more sophisticated and professional graphical solutions. By overcoming the limitations present in the current experiments, we believe that research in this field will make greater strides, leading to more innovation and breakthroughs in the domain of factory transformation.

5. Conclusions

This paper addresses efficiency concerns in urban factory renovation and proposes the use of GANs to assist architects in profile design. In the experiment, the pix2pix model is trained on original profile images paired with versions transformed through architectural techniques. Groups with different numbers of iterations are established for cross-group comparison. Through architectural analysis and quantitative evaluation of image quality, the results demonstrate that GANs can learn profile design patterns and generate fundamentally usable renovation profiles, indicating the feasibility of this approach. This study provides new insights into existing research on GAN-assisted architectural design and offers potential avenues for further exploration in the field. Future research could proceed in several directions: extending architectural applications, such as facade generation and interior layout optimization; conducting multi-modal learning, such as incorporating 3D models or textual descriptions into training to enhance the diversity and realism of generated outputs; fostering AI–human collaboration to ensure that AI-generated designs align with human aesthetic preferences and functional requirements; and using field studies or pilot projects to validate practical application, examining the real-world applicability and impact of GAN-assisted architectural design in urban renewal projects and beyond. These directions will help refine GAN-based architectural design methods and promote the sustainable development of urban factory transformations.

Author Contributions

Conceptualization, Y.L.; methodology and validation, Y.L.; writing—original draft preparation, Y.L.; writing—review and editing, M.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Hunan Province [grant number 2023JJ30148].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to involvement in other unpublished work.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Mo, C.; Wang, L.; Rao, F. Typology, preservation, and regeneration of the post-1949 industrial heritage in China: A case study of Shanghai. Land 2022, 11, 1527.

- Wang, Z.; Fu, H.; Liu, H.; Liao, C. Urban development sustainability, industrial structure adjustment, and land use efficiency in China. Sustain. Cities Soc. 2023, 89, 104338.

- Son, T.H.; Weedon, Z.; Yigitcanlar, T.; Sanchez, T.; Corchado, J.M.; Mehmood, R. Algorithmic urban planning for smart and sustainable development: Systematic review of the literature. Sustain. Cities Soc. 2023, 94, 104562.

- Ma, Y.; Zheng, M.; Zheng, X.; Huang, Y.; Xu, F.; Wang, X.; Liu, J.; Lv, Y.; Liu, W. Land Use Efficiency Assessment under Sustainable Development Goals: A Systematic Review. Land 2023, 12, 894.

- Claver, J.; García-Domínguez, A.; Sebastián, M.A. Multicriteria decision tool for sustainable reuse of industrial heritage into its urban and social environment. Case studies. Sustainability 2020, 12, 7430.

- Sauer, L. Joining Old and New: Neighbourhood Planning and Architecture for City Revitalization. Archit. Behav. 1989, 5, 357–372.

- Chi, Y.L.; Mak, H.W.L. From comparative and statistical assessments of liveability and health conditions of districts in Hong Kong towards future city development. Sustainability 2021, 13, 8781.

- Ching, F.D. Architectural Graphics; John Wiley & Sons: Hoboken, NJ, USA, 2023.

- Wu, Y.; Zhou, Q. Research on Adaptive Facade Renovation Design of Industrial Architectural Heritage: A Case Study of Nanjing Hutchison Factory; IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2020; p. 012005.

- Liu, Y.; Jia, R.; Ye, J.; Qu, X. How machine learning informs ride-hailing services: A survey. Commun. Trans. Res. 2022, 2, 100075.

- Han, Y.; Wang, M.; Leclercq, L. Leveraging reinforcement learning for dynamic traffic control: A survey and challenges for field implementation. Commun. Trans. Res. 2023, 3, 100104.

- Li, F.; Cheng, J.; Mao, Z.; Wang, Y.; Feng, P. Enhancing safety and efficiency in automated container terminals: Route planning for hazardous material AGV using LSTM neural network and Deep Q-Network. J. Intell. Connect. Veh. 2024, 7, 64–77.

- Yang, L.; Yuan, J.; Zhao, X.; Fang, S.; He, Z.; Zhan, J.; Hu, Z.; Li, X. SceGAN: A method for generating autonomous vehicle cut-in scenarios on highways based on deep learning. J. Intell. Connect. Veh. 2023, 6, 264–274.

- Wang, K.; Xiao, Y.; He, Y. Charting the future: Intelligent and connected vehicles reshaping the bus system. J. Intell. Connect. Veh. 2023, 6, 113–115.

- Yang, L.; He, Z.; Zhao, X.; Fang, S.; Yuan, J.; He, Y.; Li, S.; Liu, S. A deep learning method for traffic light status recognition. J. Intell. Connect. Veh. 2023, 6, 173–182.

- Liu, Y.; Wu, F.; Liu, Z.; Wang, K.; Wang, F.; Qu, X. Can language models be used for real-world urban-delivery route optimization? Innovation 2023, 4, 100520.

- Liao, H.; Shen, H.; Li, Z.; Wang, C.; Li, G.; Bie, Y.; Xu, C. GPT-4 enhanced multimodal grounding for autonomous driving: Leveraging cross-modal attention with large language models. Commun. Trans. Res. 2024, 4, 100116.

- Qu, X.; Lin, H.; Liu, Y. Envisioning the future of transportation: Inspiration of ChatGPT and large models. Commun. Trans. Res. 2023, 3, 100103.

- He, Y.; Liu, Y.; Yang, L.; Qu, X. Deep adaptive control: Deep reinforcement learning-based adaptive vehicle trajectory control algorithms for different risk levels. IEEE Trans. Intell. Veh. 2024, 9, 1654–1666.

- Pena, M.L.C.; Carballal, A.; Rodríguez-Fernández, N.; Santos, I.; Romero, J. Artificial intelligence applied to conceptual design. A review of its use in architecture. Autom. Constr. 2021, 124, 103550.

- Sun, C.; Zhou, Y.; Han, Y. Automatic generation of architecture facade for historical urban renovation using generative adversarial network. Build. Environ. 2022, 212, 108781.

- Jiang, H. Smart urban governance in the ‘smart’ era: Why is it urgently needed? Cities 2021, 111, 103004.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 2672–2680. Available online: https://proceedings.neurips.cc/paper/2014/file/5ca3e9b122f61f8f06494c97b1afccf3-Paper.pdf (accessed on 25 May 2024).

- Lin, H.; Liu, Y.; Li, S.; Qu, X. How generative adversarial networks promote the development of intelligent transportation systems: A survey. IEEE/CAA J. Autom. Sin. 2023, 10, 1781–1796.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

- Yuan, Y.; Liu, S.; Zhang, J.; Zhang, Y.; Dong, C.; Lin, L. Unsupervised image super-resolution using cycle-in-cycle generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 701–710.

- Mak, H.W.L.; Han, R.; Yin, H.H. Application of variational autoEncoder (VAE) model and image processing approaches in game design. Sensors 2023, 23, 3457.

- Purandhar, N.; Ayyasamy, S.; Siva Kumar, P. Classification of clustered health care data analysis using generative adversarial networks (GAN). Soft Comput. 2022, 26, 5511–5521.

- Feigin, Y.; Spitzer, H.; Giryes, R. Cluster with GANs. Comput. Vis. Image Underst. 2022, 225, 103571.

- Chan, Y.H.E.; Spaeth, A.B. Architectural visualisation with conditional generative adversarial networks (cGAN). In Proceedings of the 38th eCAADe Conference, Berlin, Germany, 14–18 September 2020; pp. 299–308.

- Ali, A.K.; Lee, O.J. Facade style mixing using artificial intelligence for urban infill. Architecture 2023, 3, 258–269.

- Huang, C.; Zhang, G.; Yao, J.; Wang, X.; Calautit, J.K.; Zhao, C.; An, N.; Peng, X. Accelerated environmental performance-driven urban design with generative adversarial network. Build. Environ. 2022, 224, 109575.

- Fei, Y.; Liao, W.; Huang, Y.; Lu, X. Knowledge-enhanced generative adversarial networks for schematic design of framed tube structures. Autom. Constr. 2022, 144, 104619.

- Nauata, N.; Chang, K.-H.; Cheng, C.-Y.; Mori, G.; Furukawa, Y. House-GAN: Relational generative adversarial networks for graph-constrained house layout generation. In Computer Vision–ECCV 2020, Proceedings of the 16th European Conference, Glasgow, UK, 23–28 August 2020; Part I 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 162–177.

- Nauata, N.; Hosseini, S.; Chang, K.-H.; Chu, H.; Cheng, C.-Y.; Furukawa, Y. House-GAN++: Generative adversarial layout refinement network towards intelligent computational agent for professional architects. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 13632–13641.

- Du, Z.; Shen, H.; Li, X.; Wang, M. 3D building fabrication with geometry and texture coordination via hybrid GAN. J. Ambient. Intell. Humaniz. Comput. 2022, 13, 5177–5188.

- Isola, P.; Zhu, J.-Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Wan, D.; Zhao, X.; Lu, W.; Li, P.; Shi, X.; Fukuda, H. A deep learning approach toward energy-effective residential building floor plan generation. Sustainability 2022, 14, 8074.

- Zhao, C.-W.; Yang, J.; Li, J. Generation of hospital emergency department layouts based on generative adversarial networks. J. Build. Eng. 2021, 43, 102539.

- Mostafavi, F.; Tahsildoost, M.; Zomorodian, Z.S.; Shahrestani, S.S. An interactive assessment framework for residential space layouts using pix2pix predictive model at the early-stage building design. Smart Sustain. Built Environ. 2022, 13, 809–827.

- Rahbar, M.; Mahdavinejad, M.; Markazi, A.H.; Bemanian, M. Architectural layout design through deep learning and agent-based modeling: A hybrid approach. J. Build. Eng. 2022, 47, 103822.

- Karadag, I.; Güzelci, O.Z.; Alaçam, S. EDU-AI: A twofold machine learning model to support classroom layout generation. Constr. Innov. 2023, 23, 898–914.

- Liu, Y.; Zhang, Z.; Deng, Q. Exploration on diversity generation of campus layout based on GAN. In Proceedings of the International Conference on Computational Design and Robotic Fabrication, Shanghai, China, 24 July 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 233–243.

- Chen, R.; Zhao, J.; Yao, X.; Jiang, S.; He, Y.; Bao, B.; Luo, X.; Xu, S.; Wang, C. Generative design of outdoor green spaces based on generative adversarial networks. Buildings 2023, 13, 1083.

- Kim, S.; Lee, J.; Jeong, K.; Lee, J.; Hong, T.; An, J. Automated door placement in architectural plans through combined deep-learning networks of ResNet-50 and Pix2Pix-GAN. Expert Syst. Appl. 2024, 244, 122932.

- Ching, F.D. Architecture: Form, Space, and Order; John Wiley & Sons: Hoboken, NJ, USA, 2023.

- Corbusier, L. Towards a New Architecture; Courier Corporation: North Chelmsford, MA, USA, 2013.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Karras, T.; Aittala, M.; Hellsten, J.; Laine, S.; Lehtinen, J.; Aila, T. Training generative adversarial networks with limited data. NeurIPS 2020, 33, 12104–12114.

- Pressman, A. Architectural Graphic Standards; John Wiley & Sons: Hoboken, NJ, USA, 2007.

- Schreyer, A.C. Architectural Design with SketchUp: Component-Based Modeling, Plugins, Rendering, and Scripting; John Wiley & Sons: Hoboken, NJ, USA, 2012.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).