Abstract

This study concentrates on the effects of teacher feedback (FB) on students’ learning performance when students are tackling guiding questions (GQ) during the online session in a flipped classroom environment. Next to students’ performance, this research evaluates the sustainability of students’ self-efficacy beliefs and their appreciation of the feedback. Participants were second-year college students (n = 90) taking the “Environmental Technology” course at Can Tho College (Vietnam). They were assigned randomly to one of two research conditions: (1) with extra feedback (WEF, n = 45) and (2) no extra feedback (NEF, n = 45) during the online phase of the flipped classroom design. In both conditions, students spent the same amount of time in the online environment as well as in the face-to-face environment. The findings indicate that students studying in the WEF condition achieved higher learning outcomes than students in the NEF condition. With respect to student variables, we observed no significant differences between the two research conditions in terms of self-efficacy beliefs at any measurement occasion. However, we observed significant differences between the two research conditions in terms of feedback appreciation during the posttest assessment.

1. Introduction

Flipped classroom instructional designs have attracted the increasing attention of educators and researchers in higher education [1,2,3]. This new instructional approach is considered a promising way for organizing instructional activities, by providing information and preparatory tasks in an online environment prior to face-to-face classroom sessions. Though challenges and negative side-effects have been reported, many recent flipped classroom reviews keep stressing its potential [4,5]. However, the reviews also suggest setting up more fine-grained research to define the mechanisms explaining its impact more accurately. In the present study we therefore focus on specific design guidelines to identify explanatory mechanisms that can be related to the impact on learning performance.

We claim that feedback and guiding questions are key design elements in both the face-to-face and the online learning phases of a flipped classroom instructional design [6]. Guiding questions, on the one hand, are considered as queries leading to a higher level of understanding since they stimulate students’ critical thinking and encourage creativity and discussion [7]. Some authors stress that guiding questions have a positive effect on students’ performance [8]. They attribute this positive effect to the fact that students are activated to process and apply newly acquired information. Feedback, on the other hand, is considered one of the most powerful influences on learning achievement with reported effect sizes d > 0.70 [9]. Authors stress that students who receive feedback after their responses to questions achieve better [10]. In addition, students develop a positive attitude and autonomous motivation due to receiving elaborated and immediate feedback [11].

In flipped classroom research, feedback is mostly provided in relation to activities during the face-to-face phase. Authors suggest that feedback should also be provided during the online part of the learning experience [12,13]. In an earlier study, we demonstrated the positive impact of adding feedback during the online part of a flipped classroom setting. However, one critical factor was not controlled for in this previous study, namely the flexibility in time spent in the online environment [6]. This brings us to the key focus of the present article in which we research the impact of studying in a flipped classroom setting on learning performance (LP), in two conditions which differ in the extent to which extra feedback has been added after solving guiding questions during the online phase of a flipped classroom design, while time usage is being controlled for. In addition, we explore the impact of this additional feedback on two key student cognitions, namely self-efficacy beliefs (SE) and appreciation of feedback (FB).

2. Conceptual and Theoretical Framework

In this study, we concentrate on the following flipped classroom design elements: web-based lectures, guiding questions, feedback, group discussion, and the impact of these design elements on learning performance and student cognition.

2.1. Flipped Classroom Design Elements

The first design element in our flipped classroom set-up is built on web-based lectures. Web-based lectures can be defined as lectures that are digitally recorded for delivery during the online phase of a flipped classroom set-up [14]. Most web-based lectures use specific materials such as text, images, or videos [15]. These lectures are often created with the specific aim of being distributed as a video, so they are not recorded versions of live regular classroom lectures, but rather shorter and more focused versions, often taking only half the time of live lectures [16]. In this respect, Gorissen, Bruggen, and Jochems argue that, in view of students’ concentration, web-based lectures should not exceed 20 min [17]. Web-based lectures offer students flexibility in time and place to access the lectures [18]. Gorissen and his colleagues confirm this and report that students like to use recorded lectures to compensate for missed lectures, prepare for exams, improve retention of content, replace live attendance, etc. This was already observed in earlier attempts to deliver lectures online. Some researchers have stressed how video recorded lectures helped students prepare for examinations and helped meet their demand for flexibility in accessing lectures anywhere and anytime [17]. An interesting finding in this early study about online lectures is the fact that students “would prefer to have a combination of face-to-face lectures, video recorded lectures, and uploaded course documents” (ibid, p. 792).

Researchers stress the positive effects of web-based lectures on learning outcomes [19,20]. To explain this positive influence, authors refer to the possibility to review, rewind, check difficult concepts, revise for exams, etc. [14,18]. A recent review by O’Callaghan, Neumann, Jones, and Creed balances both negative and positive outcomes related to web-based lectures [21]. The authors refer to risks related to student attendance and engagement and restricting the style and structure of lectures. Nevertheless, the literature review concludes that the positives outweigh the negatives, and its use is to be recommended. In the present study, all students were presented with web-based lectures in a controlled environment.

A second element in our flipped classroom design builds on the use of guiding questions. A guiding question is considered a query that pushes students’ comprehension. Blosser argues that guiding questions help students to review complex learning content, help check their comprehension, stimulate critical thinking, and emphasize critical points to tackle during problem solving [7]. They also help the teacher, because such questions help control classroom activities, cut down on disruptive behavior, encourage discussion, and discourage inattentiveness. Research has repeatedly demonstrated that guiding questions have a positive effect on learning performance [8]. Budé and his colleagues furthermore indicate that providing guiding questions stimulates correct self-explanation and enhances learning performance without causing cognitive overload. They suggest that guiding questions should be used in e-learning environments to support better understanding in learners [8]. For designing guiding questions, one study puts forward specific guidelines: they should be open-ended, non-judgmental, intrinsically interesting, and succinct to boost student thinking [22]. In the setting of the present study, all students in both research conditions receive guiding questions to boost the processing of the new learning content.

The third element in our flipped classroom design builds, as suggested above, on the potential of feedback. Feedback can be defined as information provided by teachers related to some aspects of a student’s performance or understanding [9]. The mechanisms explaining its impact are related to the fact that students receive information telling them where they are (feedback about goal attainment), whether they evolve in the right direction (feed-up as to where they are in the process of pursuing the goal) and what they could do next (feed-forward to start developing new knowledge and knowledge applications). Many researchers confirm the positive impact of feedback on learning outcomes and explain this by pointing to how feedback helps correct mistakes or activates students’ prior knowledge [23,24,25]. Other authors state that feedback boosts deep-level learning by giving students follow-up questions and helps them locate correct information in a task environment [26]. A key discussion is related to the timing of feedback. One group of researchers claims that feedback should be continuous and provide support during each step and phase in a learning process [27]. Another group of authors stresses that feedback should be offered to learners as soon as possible after they carry out tasks [28]. In a flipped classroom setting, this raises the question of whether feedback should be provided on activities in both the online and the face-to-face phases of the instructional set-up. We expect that providing students with immediate feedback in both phases will help students attain higher learning performance as compared to those who only receive feedback in the face-to-face setting.

The fourth element in our flipped classroom design is the incorporation of group discussions. Group discussions are used as an instructional strategy to stimulate collaborative learning. This is considered effective in both face-to-face and online environments [29]. Authors present evidence about the positive impact on deep-level processing, metacognitive processing, and the resulting learning performance [30,31,32]. When set up in an online environment, group discussions offer greater flexibility while remaining effective in terms of learning performance and the regulation of learning [14]. Whether face-to-face or online, research stresses the need to structure group discussions carefully. Some authors suggest adding structure by presenting scripts, e.g., by assigning roles to students (e.g., monitor, timekeeper, secretary) or by sequencing specific tasks or activities [30,33,34]. Research has found that scripting fosters higher levels of cognitive processing, metacognitive regulation in relation to planning, achieving clarity, and monitoring related learning performance [35,36,37]. Some authors suggest that groups should be small enough to allow for a sufficient degree of interactivity during discussions [36,38]. Building on the above, in the present flipped classroom design, we incorporate scripted group discussions for all students in each research condition, organized in small groups.

2.2. The Influence of Feedback on Self-Efficacy Beliefs and Appreciation of Feedback

We expect that adding (extra) feedback to the flipped classroom design affects self-efficacy beliefs (SE). Self-efficacy can be defined as an individual’s judgments on their capacity to perform a task [39]. Self-efficacy reflects confidence in the ability to control one’s cognition and behavior. A study by Bandura and his colleagues confirms that feedback invokes motivation and student aspiration [40]. Together with other authors, he stresses the relationship between self-efficacy and learning performance [41,42]. This connection is based on the impact of feedback on students’ expectations as to their future performance, meaning that when students receive feedback on their performance, their levels of self-efficacy increase [43,44]. Building on the above assumptions about adding feedback to both phases in a flipped classroom setting, we expect that self-efficacy will be boosted in research conditions where this extra feedback has been provided.

Next, we focus on appreciation of feedback. A study by Zinman and his colleagues stresses that self-confidence (one’s conception of self-efficacy) and previous experiences with feedback influence how students value and use feedback [45]. Students with a high level of self-efficacy stress the positive effect of feedback on their learning and use feedback constructively to improve their learning outcomes, while students with low self-efficacy are negatively affected and benefit less from feedback. Boud and Molloy indicate that feedback is an instructional feature with two faces: (1) it positions the teacher as a driver of feedback, and (2) it positions students as drivers of their own learning by constructing and soliciting feedback [29]. This can be linked to the findings of some researchers [40,46], who confirm the positive impact of feedback on students’ self-regulation: students become motivated to direct their own learning. This brings us to the instructional design of the flipped classroom conditions in the present study. We expect that students receiving feedback in both phases of the flipped classroom setting (with extra feedback, WEF) will be pushed to improve their learning outcomes and will therefore also express a stronger appreciation of feedback than students receiving feedback only in the face-to-face phase of the flipped classroom setting (no extra feedback, NEF).

3. Materials and Methods

3.1. Participants

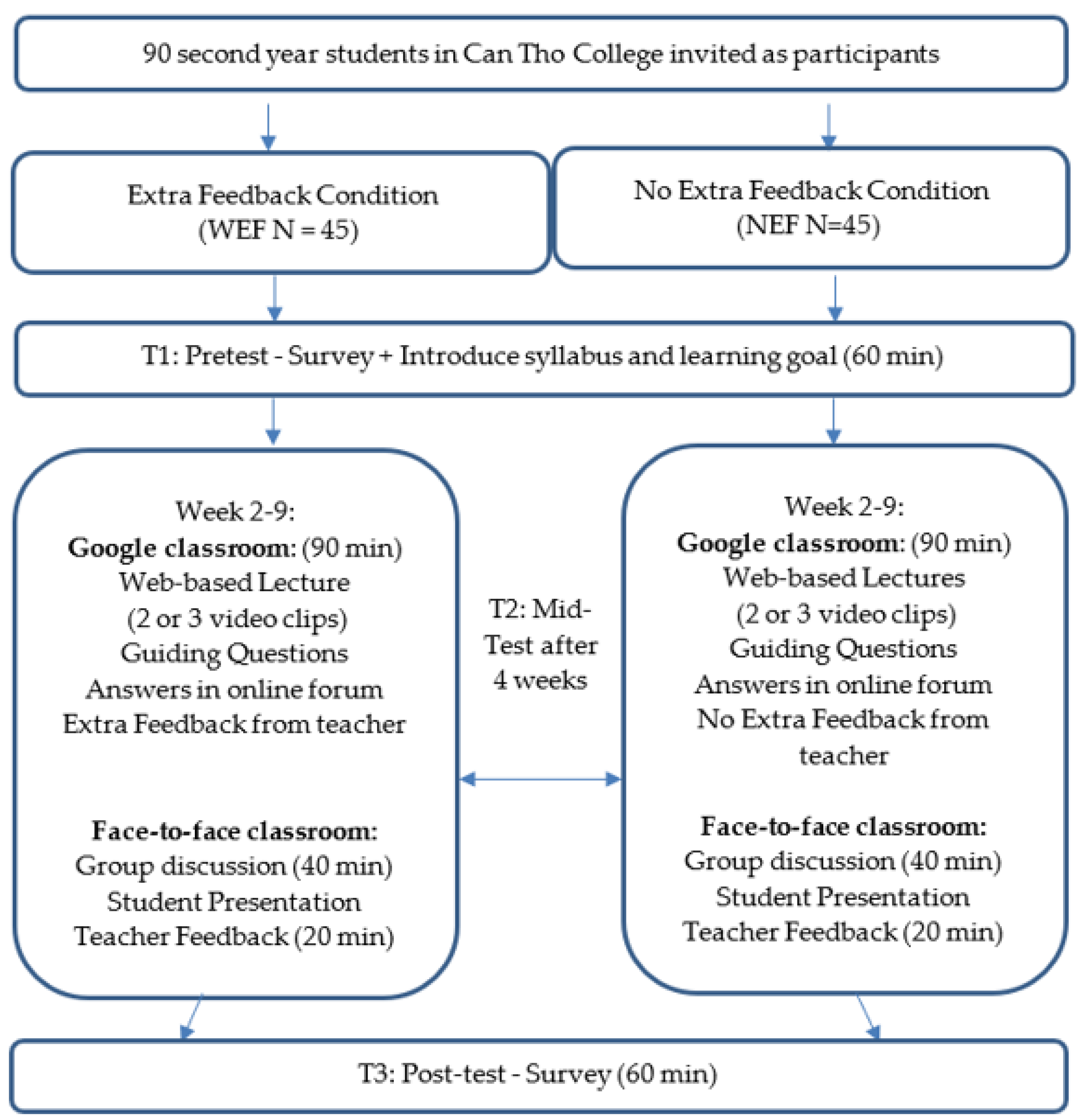

Participants were second-year college students (n = 90) enrolled in the “Environmental Technology” course in the Faculty of Engineering and Technology at Can Tho College, Vietnam. The student age ranged from 20 to 22 years and 63.3% of the students were female. Informed consent was obtained from every student; none of the students requested their data to be excluded from the analysis. Two classes were involved in this study and were randomly assigned to one of the two experimental conditions: (1) with extra feedback (WEF, n = 45) and (2) no extra feedback (NEF, n = 45). This study was implemented during the first 10 weeks of the second semester in 2015–2016.

3.2. Data Collection

All research variables were assessed three times through the administration of a pretest, a midtest, and a posttest.

3.2.1. Learning Performance (LP)

Knowledge tests were designed to assess prior knowledge and the level of knowledge acquisition. Bloom’s taxonomy of learning objectives was used to develop test items at the three basic behavioral levels: knowledge, comprehension, and application [47]. Each parallel test consisted of 22 multiple-choice questions and three essay questions. The parallel test versions consistently reflected the same behavioral levels but focused on different content. Two teachers, both holding a master’s degree in environmental technology and with many years of experience teaching in the environmental science area at Can Tho College, independently checked the curriculum fit and adequacy of the test items.

3.2.2. Self-Efficacy Beliefs (SE) and Appreciation of Feedback (FB)

Self-efficacy (SE) was measured with the 27-item scale from Zajacova, Lynch, and Espenshade [48]. Students indicated on a scale, ranging from 0 to 100, to what extent they felt able to carry out specific tasks. A high reliability of the self-efficacy scale was observed in the context of this present study (Cronbach’s alpha SE_Pre = 0.94, SE_Mid = 0.96, SE_Post = 0.97). In addition, appreciation of feedback was measured with the instrument from Ghilay and Ghilay [49]. Scale items were rated by students via a 5-point Likert scale. In this study, the reliability of appreciation of feedback scales was acceptable to high (Cronbach’s alpha FB_Pre = 0.71, FB_Mid = 0.82, FB_Post = 0.86).
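As a reminder of what the reliability coefficients above express, Cronbach’s alpha for a scale of k items equals k/(k − 1) multiplied by (1 − sum of the item variances / variance of the total scores). The following is a minimal Python sketch of that formula. The function name `cronbach_alpha` and the toy responses are hypothetical illustrations; they are not the study’s data, and the analyses in this study were run in SPSS.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents-by-items table.

    scores: list of rows, one row per respondent, one value per item.
    Uses sample variances (n - 1 in the denominator).
    """
    k = len(scores[0])  # number of items in the scale
    # Variance of each item across respondents
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    # Variance of each respondent's total score
    total_variance = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical toy data: 4 respondents answering 3 Likert items
toy_responses = [
    [2, 3, 3],
    [4, 4, 5],
    [1, 2, 2],
    [5, 5, 4],
]
alpha = cronbach_alpha(toy_responses)
print(round(alpha, 2))
```

Values closer to 1 indicate that the items vary together, which is the sense in which the scales above are described as having acceptable to high reliability.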

3.3. Design of the Research Conditions

An exploratory design was adopted to investigate potential changes in learning outcomes, self-efficacy, and appreciation of feedback across the pre-, mid-, and posttest stages, building on two research conditions that differed in the extent to which extra feedback was added to the online phase of the flipped classroom setting.

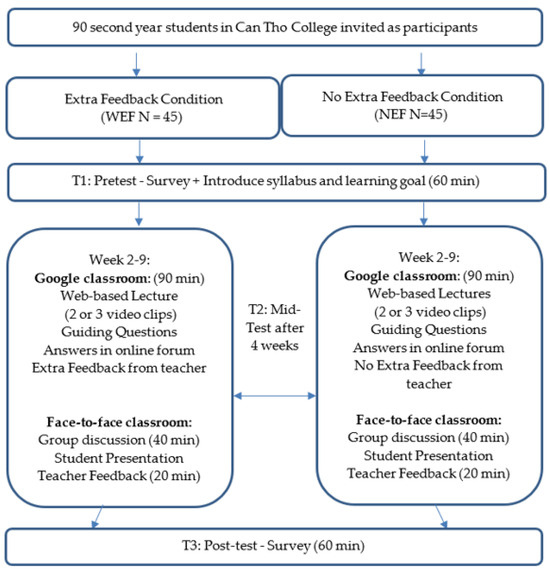

Table 1 shows that in the with extra feedback (WEF) condition, extra feedback was added in the online context after students had studied the web-based lecture and solved the guiding questions. This feedback was not provided in the online environment in the no extra feedback (NEF) condition. In both conditions, students received feedback in relation to the guiding questions presented in the face-to-face phase of the flipped classroom setting.

Table 1.

Specification of instructional design elements in the two flipped classroom learning conditions.

3.4. Procedure

In this study, a pretest was administered during the first week of the 10-week research period. In this first week, students were also introduced to the online learning environment, implemented in Google Classroom. This was followed by an 8-week instructional period, with the midtest administered in the fifth week, and by a final week in which the posttest was administered.

Each weekly session consisted of two parts. The first part consisted of individual study of web-based lectures (WBL), linked to a chapter in the printed textbook and enriched with guiding questions, again to be solved individually. The web-based lectures were between 10 and 17 min each, and depending on the content and duration, one to three WBL were presented to the students. All WBL consisted of a video of the lecturer, combined with visual material (text, images, videos). Students were instructed by one teacher with experience in teaching in the environmental science area. Students studied in a computer room at the college so that the actual setting could be controlled. Students posted their individual answers in the online forum after they watched the web-based lectures. Students were required to finish their tasks in the e-learning environment, i.e., solving the guiding questions after watching the web-based lectures, within 90 min. For research purposes, no flexibility in time and place was allowed in this flipped classroom setting.

The second part of each weekly session consisted of a face-to-face meeting (1 h) built around a group discussion in which students tackled new guiding questions, reflecting a focus on higher levels in Bloom’s taxonomy to push students’ critical thinking and expand their knowledge through group discussion. Students worked in groups of five and were invited to adopt roles such as monitor, timekeeper, and secretary, by agreement of the members in the group. As stated in the theoretical section, this scripting approach was adopted to guarantee the sufficient involvement of every individual student in the collaborative work. Group discussions lasted for 40 min. After the group discussion, a member of a group was randomly selected to present the group’s results in front of the class; groups received feedback from the teacher and other students based on this presentation.

In this study, students in both the NEF and the WEF conditions developed their answers to the guiding questions in the online forum. Only in the WEF condition did the teacher provide feedback; this feedback was provided immediately. All students in the WEF condition received general feedback on the way the students tackled the guiding questions. Individual feedback was given each week to 10–15 randomly chosen students, guaranteeing that by the end of the intervention all students had received individual feedback in addition to general feedback: in total, each WEF student received individual feedback twice and general feedback eight times from the teacher. Building on the model of Hattie and Timperley, feedback consisted of feedback (hints as to the extent to which the goal in question had been attained) and feed-up (hints to pursue further elaboration of responses) [9]. In the NEF condition, students put their answers in the forum but did not receive the related extra teacher feedback (see Figure 1).
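To make the individual feedback rotation concrete, the sketch below shows one way such a schedule could be drawn up so that 45 students each receive individual feedback twice over the 8 instructional weeks, with 10–15 students selected per week. This is an illustrative assumption only: the text states that students were randomly chosen each week but does not describe the selection mechanism, and the function name `individual_feedback_schedule` and the numeric student IDs are hypothetical.

```python
import random


def individual_feedback_schedule(student_ids, weeks=8, rounds=2, seed=None):
    """Return one list of student IDs per week.

    Assumption (not from the study): the weeks are split into `rounds`
    equal blocks; within each block every student is drawn exactly once,
    in a freshly shuffled order. `weeks` must be divisible by `rounds`.
    """
    rng = random.Random(seed)
    weeks_per_round = weeks // rounds
    schedule = []
    for _ in range(rounds):
        order = list(student_ids)
        rng.shuffle(order)
        # Deal the shuffled students into nearly equal weekly groups
        for week in range(weeks_per_round):
            schedule.append(order[week::weeks_per_round])
    return schedule


# 45 WEF students, labelled 1..45 for illustration
schedule = individual_feedback_schedule(range(1, 46), seed=1)
for week_number, group in enumerate(schedule, start=1):
    print(week_number, len(group))
```

With 45 students and two rounds of four weeks, each weekly group holds 11 or 12 students, which falls inside the 10–15 range reported above.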

Figure 1.

Graphical representation of the procedure, involving different components in the two research conditions.

3.5. Analysis Approach

In the present study, the Statistical Package for the Social Sciences (SPSS, Version 29) was used for statistical analysis of the quantitative data. First, descriptives were summarized and presented. Second, we checked for differences between and within the two research conditions at the pretest, midtest, and posttest stages by applying a two-way repeated measures ANOVA, with time as the within-subjects factor and research condition as the between-subjects factor. In addition, we used the Bonferroni correction for multiple comparisons. Since we dealt with small sample sizes in the present study, we report not only the p-values but also the effect sizes (Cohen’s d) along with partial eta squared (η2p) to interpret the significant results. For the interpretation of the effect sizes, we build on a study by Baguley [50], who puts forward the following values: small effect size (from d = 0.2), medium effect size (from d = 0.5), large effect size (from d = 0.8). A significance level of p < 0.05 was adopted.
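The effect size measures named above can be recovered from a reported F statistic and its degrees of freedom. The following minimal Python sketch shows the standard identity for partial eta squared, a commonly used approximation of Cohen’s d for a two-group effect with one numerator degree of freedom, the thresholds cited from Baguley, and the usual Bonferroni adjustment. The function names are hypothetical, the "negligible" label below d = 0.2 is our own addition, and the d conversion is an assumption on our part: the study does not state how d was derived in SPSS.

```python
import math


def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from F: (F * df1) / (F * df1 + df2)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def cohens_d_from_f(f_value, df_error):
    """Approximate Cohen's d for a two-group effect (df_effect = 1).

    Assumption: uses the common conversion d = 2 * sqrt(F / df_error).
    """
    return 2.0 * math.sqrt(f_value / df_error)


def effect_size_label(d):
    """Label |d| with the thresholds cited in the text (0.2, 0.5, 0.8)."""
    magnitude = abs(d)
    if magnitude >= 0.8:
        return "large"
    if magnitude >= 0.5:
        return "medium"
    if magnitude >= 0.2:
        return "small"
    return "negligible"  # label added here for values below 0.2


def bonferroni(p_values):
    """Bonferroni-adjusted p-values: multiply by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Example with the between-group test on learning performance, F(1, 88) = 4.44
print(round(partial_eta_squared(4.44, 1, 88), 3))
print(round(cohens_d_from_f(4.44, 88), 2))
```

Applied to F(1, 88) = 4.44, these formulas give values that round to the η2p and d reported for learning performance in Section 4.2.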

4. Results

4.1. Descriptives

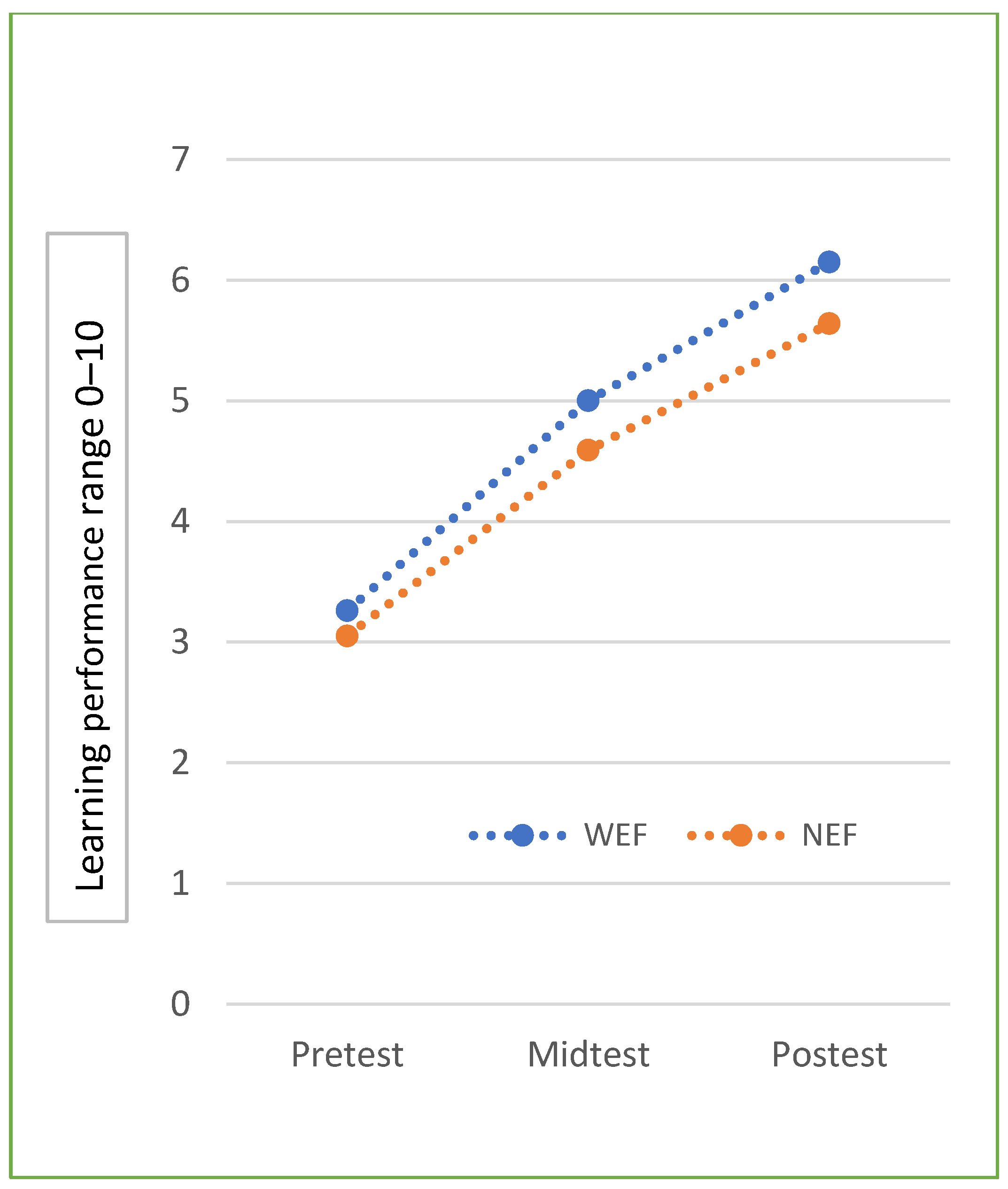

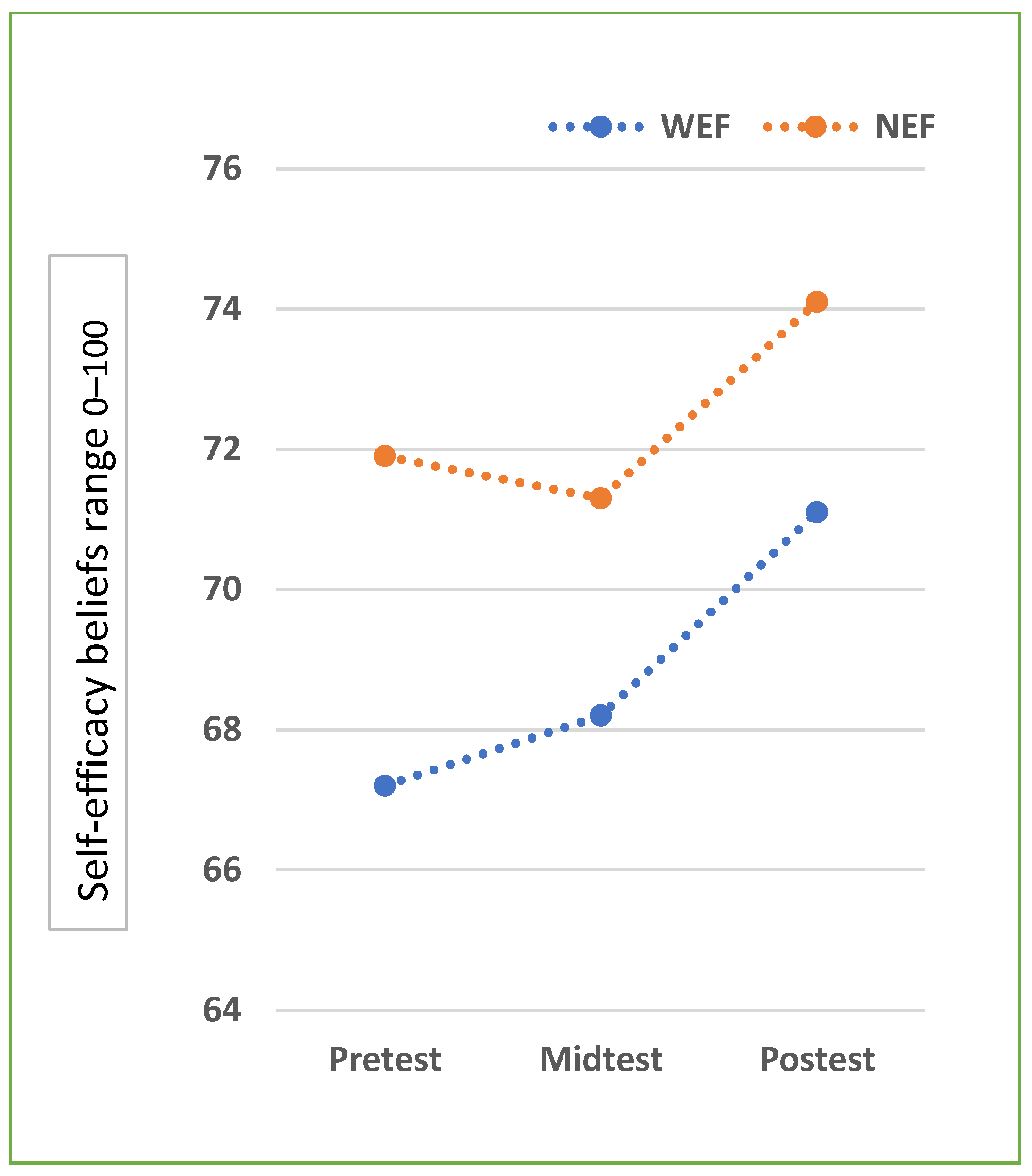

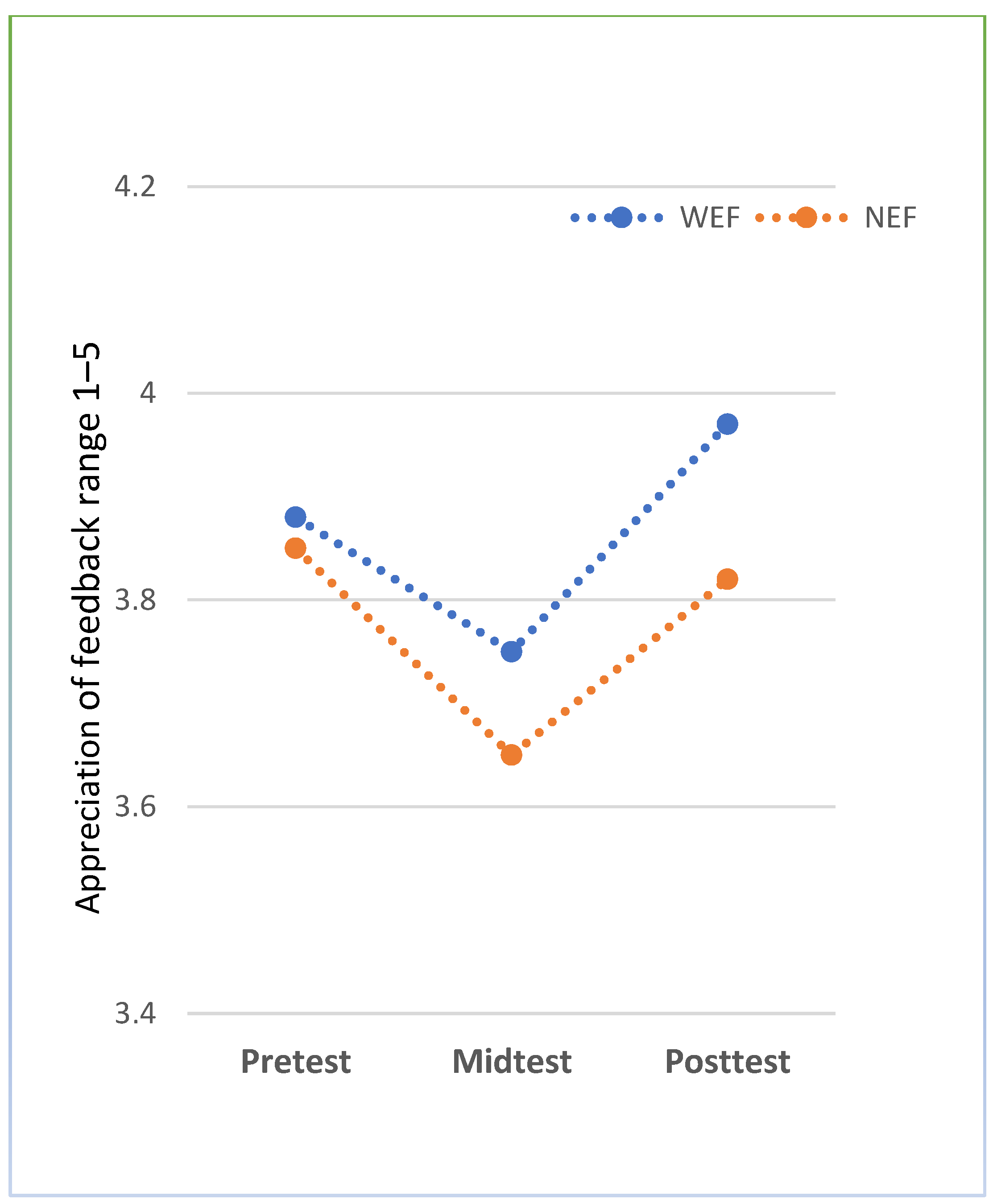

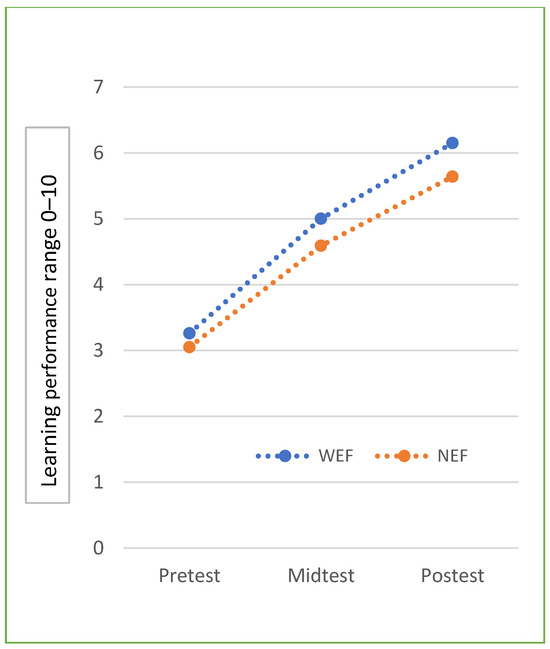

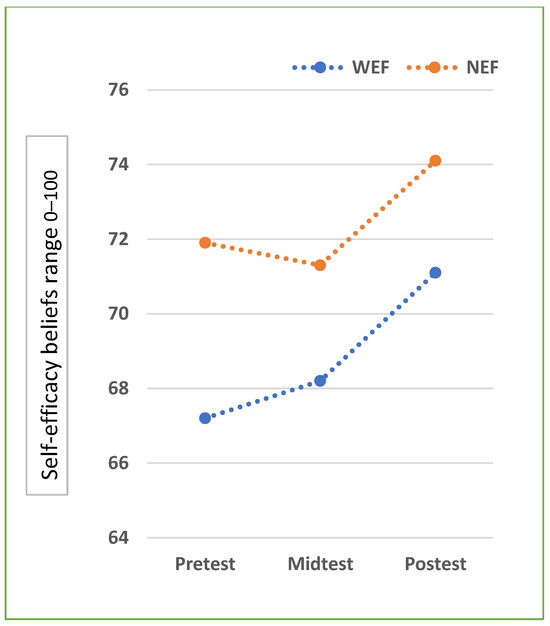

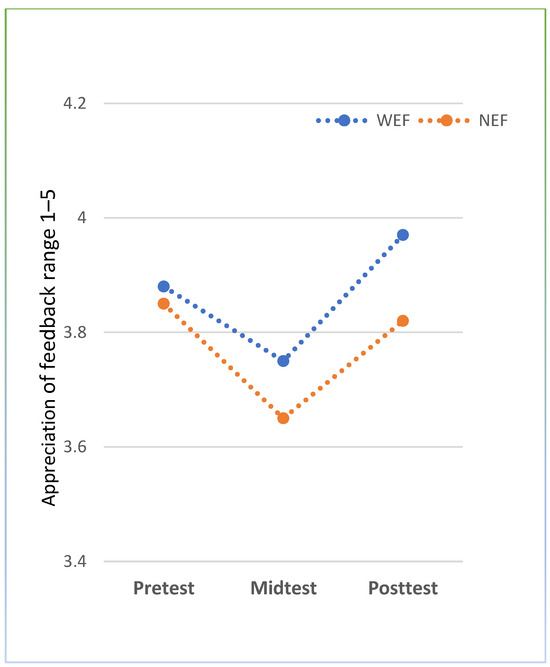

Table 2 depicts how the mean learning performance scores of students steadily increased from pretest to posttest in both research conditions. At the time of the posttest, we observed that the mean scores in the WEF condition are higher than in the NEF condition. Looking at self-efficacy beliefs, we hardly observe differences between WEF and NEF students at pretest, midtest, or posttest stages. As to students’ appreciation of feedback, mean scores of students hardly fluctuate over time.

Table 2.

Mean and standard deviation of learning performance, self-efficacy, and appreciation of feedback (n = 90). Note: a Maximum LP (learning performance) score = 10; b maximum SE (self-efficacy) score = 100; c maximum FB (appreciation of feedback) score = 5.

4.2. Testing: Learning Performance

To test the differential influence of giving extra feedback on learning performance, a two-way repeated measures analysis was applied to measure the differences within and between the two group conditions during all time points (pretest, midtest and posttest).

Firstly, we observe significant differences over time within both research conditions: (F (2,176) = 223.3, p < 0.001, η2p = 0.72).

Secondly, we observe no significant differences over time within subject effects by the interaction effect of the research condition (F (2,176) = 0.70, p = 0.50, η2p = 0.01).

Finally, when it comes to between-group differences, we observe overall significant differences between the WEF and NEF conditions (F (1,88) = 4.44, p = 0.038, η2p = 0.048, d = 0.45). Given the thresholds adopted in Section 3.5, this reflects a small effect size that approaches the medium range (d = 0.5).

The increase in learning performance over time in both research conditions can be seen in Figure 2. Pairwise comparisons using the Bonferroni correction point to significant differences from pretest to midtest (Diff_Mean = −1.64, p < 0.001), from midtest to posttest (Diff_Mean = −1.10, p < 0.001), and from pretest to posttest (Diff_Mean = −2.74, p < 0.001).

Figure 2.

The differences in learning performance within and between the two research conditions.

4.3. Testing: Self-Efficacy Beliefs and Appreciation of Feedback

Regarding self-efficacy beliefs, a two-way repeated measures analysis was applied to measure the differences within and between the two research conditions WEF and NEF during all time points (pretest, midtest and posttest).

Firstly, we observe significant differences over time within subject effects (F (2,176) = 3.55, p = 0.031, η2p = 0.04).

Secondly, we observe no significant differences over time within subject effects by the interaction effect of the research condition (F (2,176) = 0.27, p = 0.76, η2p = 0.003).

Next, when we look at the overall differences between the research conditions, the analysis results show no significant differences between the WEF and NEF conditions (F (1,88) = 2.27, p = 0.14, η2p = 0.025).

Figure 3 shows a slight increase in the WEF condition and a slight decrease in the NEF condition from pretest to midtest; the change between these two time points is not significant (Diff_Mean = −2.19, p = 1). From midtest to posttest, the line increases in both the WEF and NEF conditions, though not significantly (Diff_Mean = −2.83, p = 0.097). The increase from pretest to posttest in both conditions, however, is significant in the mean score comparison (Diff_Mean = −3.05, p < 0.001).

Figure 3.

The differences in self-efficacy between and within the two learning conditions.

With respect to appreciation of feedback, a two-way repeated measures analysis was applied to measure the differences within and between the two research conditions WEF and NEF during all time points (pretest, midtest and posttest).

Firstly, we observe significant differences over all time within subject effects: (F (2,176) = 11.5, p < 0.001, η2p = 0.12).

Secondly, we observe no significant differences over time within subject effects by the interaction effect of the research condition (F (2,176) = 0.96, p = 0.38, η2p = 0.01).

Finally, when it comes to between-group differences, we observe overall significant differences between the WEF and NEF conditions (F (1,88) = 4.23, p = 0.043, η2p = 0.046).

As presented in Figure 4, the mean score comparisons across both research conditions show significant differences from pretest to midtest (Diff_Mean = 0.167, p = 0.002) and from midtest to posttest (Diff_Mean = −0.198, p < 0.001). However, these two changes largely cancel each other out (0.167 − 0.198 = −0.031), so there is no significant difference from pretest to posttest.

Figure 4.

The differences in appreciation of feedback within and between the two learning conditions over time.

5. Discussion

The present study investigates the effect of providing additional feedback in the online session of a flipped classroom approach on learning performance and considers the effect of this extra feedback on the student variables of self-efficacy and appreciation of feedback. The extra feedback was built into the online session (in addition to the already existing feedback provided in the face-to-face session), as this was often neglected in earlier research. Based on the theoretical framework, we generated assumptions related to feedback in the online environment in the flipped classroom design. In this study, the time was constrained for both the online session (90′) and the face-to-face activities (60′) in the two research conditions. Controlling time allowed us to specifically investigate the effect of the extra feedback. Students received general feedback after the group presentation in the classroom setting, but only students in the WEF condition received extra feedback in the online setting, which comprised both individual and general teacher feedback after they solved the guiding questions.

Based on the results, we first discuss the influence of extra feedback on learning outcomes. We observe that students receiving extra feedback attain higher learning outcomes as compared to students studying without extra feedback in an online environment. This finding is consistent with earlier studies that confirmed that feedback has a positive effect on learning outcomes [23,24,25]. However, other researchers reported no contribution of feedback to learning performance [11]. Our results support our expectation regarding extra feedback. In this respect, our study shows that feedback is important not only during the face-to-face sessions of a flipped classroom approach, but that it should also be introduced already in the online sessions.

Related to self-efficacy beliefs, the results show that students studying in the condition with extra feedback did not report significantly higher SE levels. This finding is not consistent with the findings of some authors, who confirm that feedback supports higher self-efficacy beliefs [43,44]. An explanation could be related to the short duration of the study. Experiencing the feedback loop could require more time than the 8 weeks in the present study. Experiencing better learning outcomes after receiving more feedback and, as a result, again receiving more (positive) feedback, might require a longer time window. In addition, the time constraints, set in the present study to control the time students spend in the online phase of the flipped classroom setting, could have negatively affected students. The 90 min period could have been too short to benefit sufficiently from the additional feedback received. We return to this in the discussion of the limitations below.

Given that the feedback in the face-to-face session was equal in both conditions, we can assume that the difference in feedback appreciation in the end is due to the extra feedback received in the online session. The analysis results reflect differences between the research conditions at the 5% significance level, although only marginally so (p = 0.043). The results look promising and seem to confirm what has been stated by Boom, Bandura, and their colleagues [40,46], who link feedback to higher levels of self-regulation and self-learning, thus boosting the resulting performance.

In the present study, some limitations can be observed. First, although this study involved all students enrolled in one course, the number of participants was small. This might have affected the power of the analyses. It must also be acknowledged that, for both students and teachers, the experience of teaching and studying in a flipped classroom environment and in an online environment was still very new. We tried to counter this by providing introductory training. As stated earlier, the research conditions were designed such that, compared to earlier studies, we could control the study time students spent in the online phase of the flipped classroom setting. This is an advantage in view of comparing the two conditions, but it reduced students’ flexibility in time and place for attending the online sessions of the flipped classroom. This resulted in a flipped classroom design that is less authentic, and this could have affected specific student variables. Next to varying the time window in the online phase, future research can also zoom in on students’ appreciation of these constrained settings.

Next, appreciation of feedback and self-efficacy were measured through self-report measures. We can question whether the instruments were sufficiently sensitive to capture actual changes in these variables. A mixed methods approach that adds a qualitative component to the study could have helped to triangulate the present results.

Of course, the actual design of our flipped classroom only manipulated a small set of instructional design elements. In terms of educational sustainability, exploring alternative feedback mechanisms such as peer feedback could further enhance student engagement and reduce the workload on educators. Moreover, investigating other instructional design elements like mind maps, or self-assessment activities not only enriches pedagogical practices but also promotes sustainability by fostering diverse learning modalities. Future studies should consider these factors to advance both educational effectiveness and sustainability within the flipped classroom framework.

Based on the findings of the present study, we can put forward some implications for future flipped classroom designs. Feedback remains a key design element in boosting learning. In addition, guiding questions clearly encourage students’ thinking and foster discussions. Therefore, guiding questions and feedback should be implemented in flipped classrooms. What we especially learn is that providing feedback during every phase in the flipped classroom design is valuable to enhance students’ learning outcomes.

6. Conclusions

In conclusion, this study highlights the positive effect on students’ learning performance of providing extra feedback after students solve guiding questions in an online environment. In particular, given that students achieve higher learning outcomes when they receive additional feedback from the teacher after finishing their tasks in the online phase of a flipped classroom design, we can conclude that feedback supports students in every phase of their learning process. Moreover, integrating feedback into online education not only enhances learning outcomes but also aligns with principles of sustainable education. It fosters interactive learning experiences and provides guidance and support to students.

In terms of student variables, although we could not find a positive impact of extra feedback in the online phase of a flipped classroom on self-efficacy beliefs, we did find that students’ appreciation of feedback was higher when they received additional feedback. This emphasizes the importance of providing feedback to motivate students’ engagement in their learning journey. Based on these findings, feedback should be provided to support students in solving guiding questions related to web-based lectures in the flipped classroom environment, in order to improve students’ learning outcomes while also advancing sustainable educational practices.

Author Contributions

All authors contributed to this study. Conceptualization, N.T.T.T., B.D.W. and M.V.; methodology, N.T.T.T., B.D.W. and M.V.; formal analysis, N.T.T.T.; data curation, N.T.T.T.; writing—original draft preparation, N.T.T.T.; writing—review and editing, N.T.T.T., B.D.W. and M.V.; supervision, B.D.W. and M.V.; project administration, M.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the MOET (Ministry of Education and Training) Project 911 from Vietnam with grant No. 911/QD-TTg.

Institutional Review Board Statement

Ethical review and approval were waived for this study, given the purpose of the research and its aim of helping students approach the new methodology.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

We appreciate the participation of lecturer Duong Ngoc Tran (Faculty of Engineering and Technology) and the students enrolled in the “Environmental Technology” course at Can Tho College, Vietnam.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Bristol, T. Flipping the Classroom. Teach. Learn. Nurs. 2014, 9, 43–46.

- He, W.; Holton, A.; Farkas, G.; Warschauer, M. The effects of flipped instruction on out-of-class study time, exam performance, and student perceptions. Learn. Instr. 2016, 45, 61–71.

- McLaughlin, J.E.; Roth, M.T.P.; Glatt, D.M.; Gharkholonarehe, N.; Davidson, C.A.M.; Griffin, L.M.; Esserman, D.A.; Mumper, R.J. The Flipped Classroom. Acad. Med. 2014, 89, 236–243.

- Akçayır, G.; Akçayır, M. The flipped classroom: A review of its advantages and challenges. Comput. Educ. 2018, 126, 334–345.

- Lo, C.K.; Hew, K.F. A critical review of flipped classroom challenges in K-12 education: Possible solutions and recommendations for future research. Res. Pract. Technol. Enhanc. Learn. 2017, 12, 4.

- Thai, N.T.T.; De Wever, B.; Valcke, M. Feedback: An important key in the online environment of a flipped classroom setting. Interact. Learn. Environ. 2020, 31, 924–937.

- Blosser, P.E. How to Ask the Right Questions; National Science Teachers Association: Arlington, VA, USA, 2000.

- Budé, L.; van de Wiel, M.W.J.; Imbos, T.; Berger, M.P.F. The effect of guiding questions on students’ performance and attitude towards statistics. Br. J. Educ. Psychol. 2012, 82, 340–359.

- Hattie, J.; Timperley, H. The Power of Feedback. Rev. Educ. Res. 2007, 77, 81–112.

- Goldhacker, M.; Rosengarth, K.; Plank, T.; Greenlee, M.W. The effect of feedback on performance and brain activation during perceptual learning. Vis. Res. 2014, 99, 99–110.

- Van der Kleij, F.M.; Eggen, T.J.H.M.; Timmers, C.F.; Veldkamp, B.P. Effects of feedback in a computer-based assessment for learning. Comput. Educ. 2012, 58, 263–272.

- Gikandi, J.W.; Morrow, D.; Davis, N.E. Online formative assessment in higher education: A review of the literature. Comput. Educ. 2011, 57, 2333–2351.

- Laiken, M.E.; Milland, R.; Wagner, J. Capturing the Magic of Classroom Training in Blended Learning. Open Prax. 2014, 6, 295–304.

- Gosper, M.; McNeill, M.; Phillips, R.; Preston, G.; Woo, K.; Green, D. Web-based lecture technologies and learning and teaching: A study of change in four Australian universities. Res. Learn. Technol. 2011, 18, 251–263.

- Ridgway, P.F.; Sheikh, A.; Sweeney, K.J.; Evoy, D.; McDermott, E.; Felle, P.; Hill, A.D.; O’Higgins, N.J. Simulation and E-Learning Surgical e-learning: Validation of multimedia web-based lectures. Med. Educ. 2007, 41, 168–172.

- Day, J. Investigating Learning with Web Lectures; Georgia Institute of Technology: Atlanta, GA, USA, 2008.

- Gorissen, P.; Van Bruggen, J.; Jochems, W. Students and recorded lectures: Survey on current use and demands for higher education. Res. Learn. Technol. 2012, 20, 297–311.

- Williams, J.; Fardon, M. “Perpetual Connectivity”: Lecture Recordings and Portable Media Players. In Proceedings of the World Conference on Educational Multimedia, Hypermedia and Telecommunications, Vancouver, BC, Canada, 26 June 2007; pp. 3083–3091.

- Stanojevic, L.; Randjelovic, M.; Papic, M. The Effect of Web-based Classroom Response System on Students Learning Outcomes: Results from Programming Course. In Proceedings of the Conference of Information Technology and Development of Education—ITRO, Zrenjanin, Serbia, 22 June 2017.

- Wieling, M.B.; Hofman, W.H.A. The impact of online video lecture recordings and automated feedback on student performance. Comput. Educ. 2010, 54, 992–998.

- O’Callaghan, F.V.; Neumann, D.L.; Jones, L.; Creed, P.A. The use of lecture recordings in higher education: A review of institutional, student, and lecturer issues. Educ. Inf. Technol. 2017, 22, 399–415.

- Traver, R. What is a Good Guiding Question? Educ. Leadersh. 1998, 55, 70–73.

- Anseel, F.; Lievens, F.; Schollaert, E. Reflection as a strategy to enhance task performance after feedback. Organ. Behav. Hum. Decis. Process. 2009, 110, 23–35.

- Marden, N.Y.; Ulman, L.G.; Wilson, F.S.; Velan, G.M. Online feedback assessments in physiology: Effects on students’ learning experiences and outcomes. Adv. Physiol. Educ. 2013, 37, 192–200.

- Wu, P.H.; Hwang, G.J.; Milrad, M.; Ke, H.R.; Huang, Y.M. An innovative concept map approach for improving students’ learning performance with an instant feedback mechanism. Br. J. Educ. Technol. 2012, 43, 217–232.

- Finn, B.; Thomas, R.; Rawson, K.A. Learning more from feedback: Elaborating feedback with examples enhances concept learning. Learn. Instr. 2018, 54, 104–113.

- Corbalan, G.; Paas, F.; Cuypers, H. Computer-based feedback in linear algebra: Effects on transfer performance and motivation. Comput. Educ. 2010, 55, 692–703.

- Auld, R.G.; Belfiore, P.J.; Scheeler, M.C. Increasing Pre-service Teachers’ Use of Differential Reinforcement: Effects of Performance Feedback on Consequences for Student Behavior. J. Behav. Educ. 2010, 19, 169–183.

- Boud, D.; Molloy, E. Rethinking models of feedback for learning: The challenge of design. Assess. Eval. High. Educ. 2013, 38, 698–712.

- De Wever, B.; Van Keer, H.; Schellens, T.; Valcke, M. Roles as a structuring tool in online discussion groups: The differential impact of different roles on social knowledge construction. Comput. Hum. Behav. 2010, 26, 516–523.

- Ellis, R.A.; Goodyear, P.; O’Hara, A.; Prosser, M. The university student experience of face-to-face and online discussions: Coherence, reflection and meaning. Res. Learn. Technol. 2007, 15, 83–97.

- Slavin, R.E. When does cooperative learning increase student achievement? Psychol. Bull. 2003, 94, 429–445.

- King, A. Scripting Computer-Supported Collaborative Learning. In Computer-Supported Collaborative Learning, Cognitive, Computational and Educational Perspectives; Springer: New York, NY, USA, 2007; pp. 13–37.

- Noroozi, O.; Busstra, M.C.; Mulder, M.; Biemans, H.J.A. Online discussion compensates for suboptimal timing of supportive information presentation in a digitally supported learning environment. Educ. Technol. Res. Dev. 2011, 60, 193–221.

- Valcke, M.; De Wever, B.; Zhu, C.; Deed, C. Supporting active cognitive processing in collaborative groups: The potential of Bloom’s taxonomy as a labeling tool. Internet High. Educ. 2009, 12, 165–172.

- Vogel, F.; Wecker, C.; Kollar, I.; Fischer, F. Socio-Cognitive Scaffolding with Computer-Supported Collaboration Scripts: A Meta-Analysis. Educ. Psychol. Rev. 2016, 29, 477–511.

- Wang, X.; Kollar, I.; Stegmann, K.; Fischer, F. Adaptable Scripting in Computer-Supported Collaborative Learning to Foster Knowledge and Skill Acquisition. In Proceedings of the International Conference on Computer Supported Collaborative, Hong Kong, China, 4–8 July 2011; pp. 382–389.

- Kim, J. Influence of group size on students’ participation in online discussion forums. Comput. Educ. 2013, 62, 123–129.

- Bandura, A. Self-efficacy mechanism in human agency. Am. Psychol. 1982, 37, 122–147.

- Bandura, A.; Barbaranelli, C.; Caprara, G.V.; Pastorelli, C. Multifaceted Impact of Self-Efficacy Beliefs on Academic Functioning. Child Dev. 1996, 67, 1206–1222.

- Frank, P. Self-Efficacy in Academic Settings; ERIC: Washington, DC, USA, 1995.

- Wang, A.Y.; Newlin, M.H. Predictors of web-student performance: The role of self-efficacy and reasons for taking an on-line class. Comput. Hum. Behav. 2002, 18, 151–163.

- Karl, K.A.; O’Leary-Kelly, A.M.; Martocchio, J.J. The impact of feedback and self-efficacy on performance in training. J. Organ. Behav. 1993, 14, 379–394.

- Wang, S.-L.; Wu, P.-Y. The role of feedback and self-efficacy on web-based learning: The social cognitive perspective. Comput. Educ. 2008, 51, 1589–1598.

- Zinman, M.; Meyer, J.; Plastow, K.; Fyfe, G.; Fyfe, S.; Saunders, K.; Hill, J.; Brightwell, R. Student optimism and appreciation of feedback. In Proceedings of the 16th Annual Teaching and Learning Forum, Perth, Australia, 30–31 January 2007.

- Van den Boom, G.; Paas, F.; Van Merrienboer, J.J. Effects of Elicited Reflections Combined with Tutor or Peer Feedback on Self-Regulated Learning and Learning Outcomes. Learn. Instr. 2007, 17, 532–548.

- Krathwohl, D.R. A revision of Bloom’s taxonomy: An overview. Theory Pract. 2002, 41, 212–218.

- Zajacova, A.; Lynch, S.M.; Espenshade, T.J. Self-Efficacy, Stress, and Academic Success in College. Res. High. Educ. 2005, 46, 677–706.

- Ghilay, Y.; Ghilay, R. FBL: Feedback Based Learning in Higher Education. High. Educ. Stud. 2015, 5, 1–10.

- Baguley, T. Standardized or simple effect size: What should be reported? Br. J. Psychol. 2009, 100, 603–617.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).