Artificial Intelligence Applications in Primary Education: A Quantitatively Complemented Mixed-Meta-Method Study

Abstract

1. Introduction

1.1. Artificial Intelligence in Education

1.2. Artificial Intelligence in Elementary Schools

1.3. Purpose and Significance of This Study

- What are teachers’ perspectives within the scope of this meta-analysis (quantitative dimension)?

- What are teachers’ perspectives within the scope of this meta-thematic analysis (qualitative dimension)?

- What are teachers’ perspectives within the scope of the Rasch measurement model (quantitative dimension)?

- Determining the overall effect size of different variables on AI applications;

- Assessing the effect size of different variables on AI use based on subject area, the duration of implementation, and sample size.

Within the scope of the meta-thematic analysis, the following was performed:

- Identifying the impact of AI applications on learning environments and determining potential challenges and solutions in AI implementation;

- Conducting a general analysis of teachers’ opinions on AI applications;

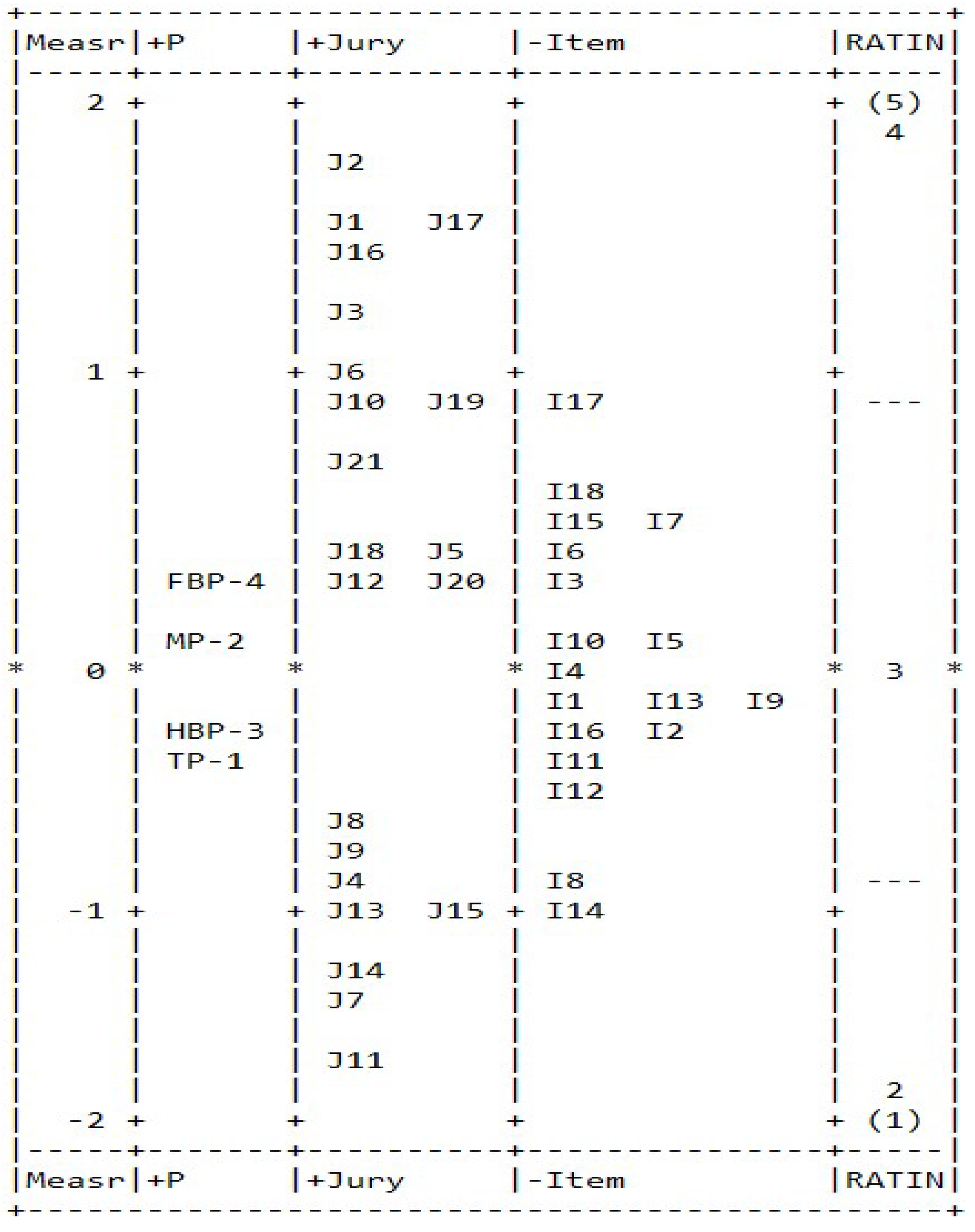

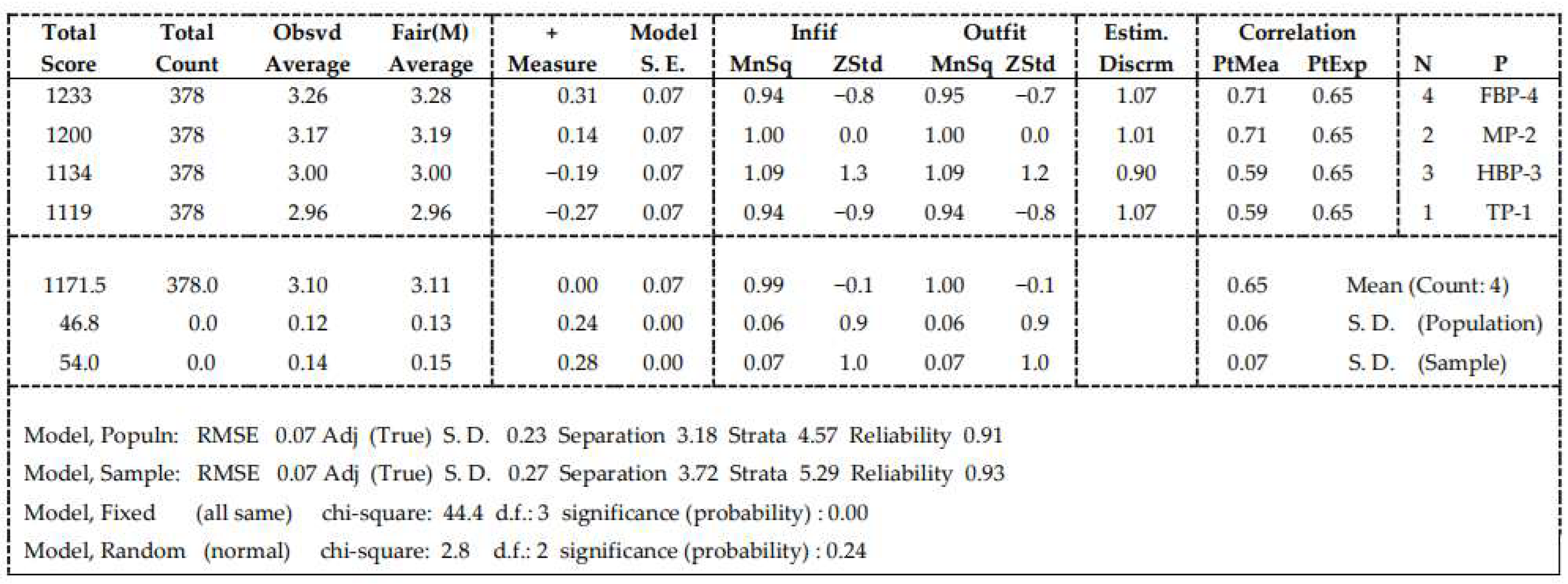

- Analyzing the leniency or strictness of evaluators (jury members);

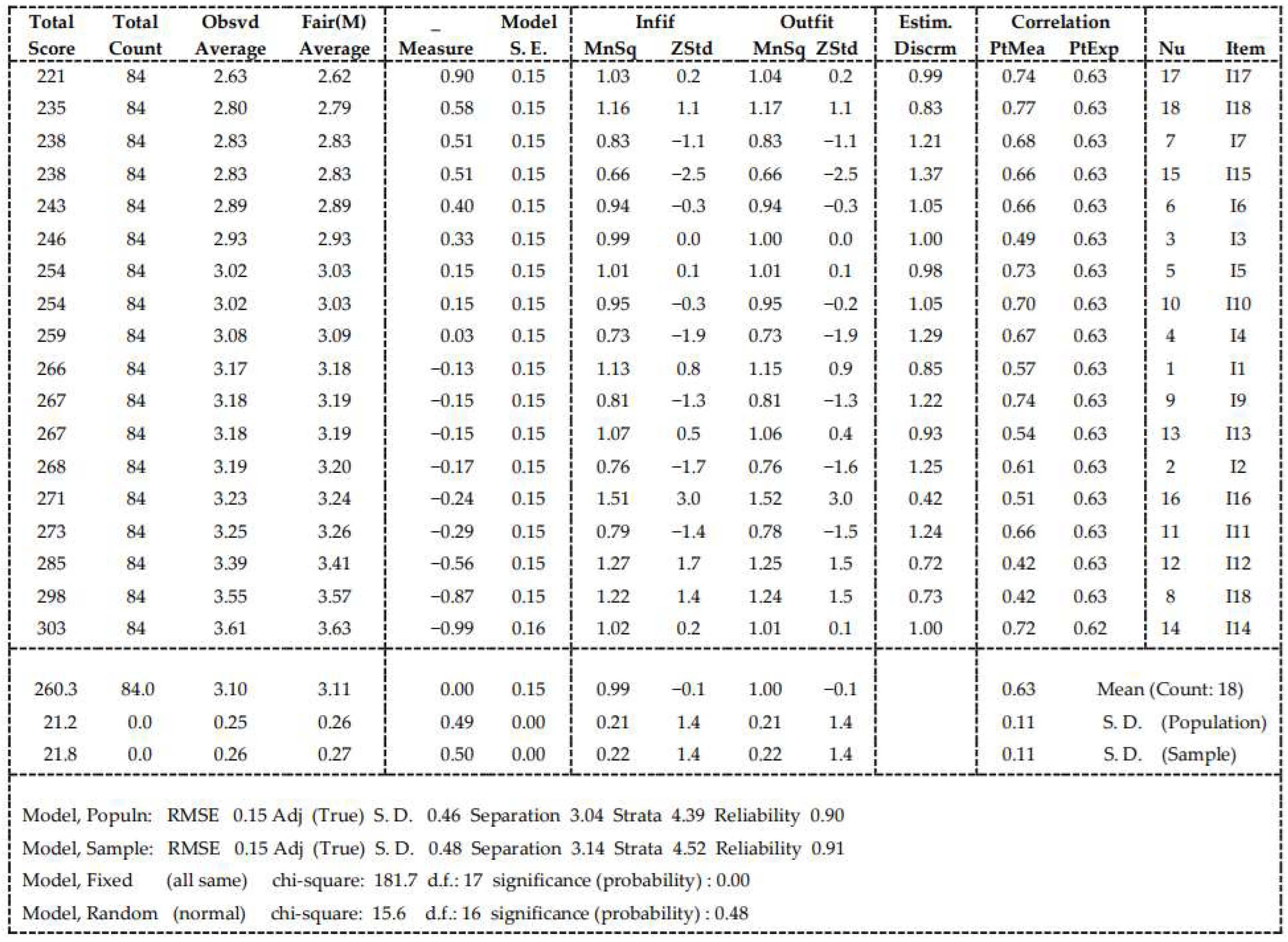

- Performing a difficulty analysis of AI-related assessment items (criteria).

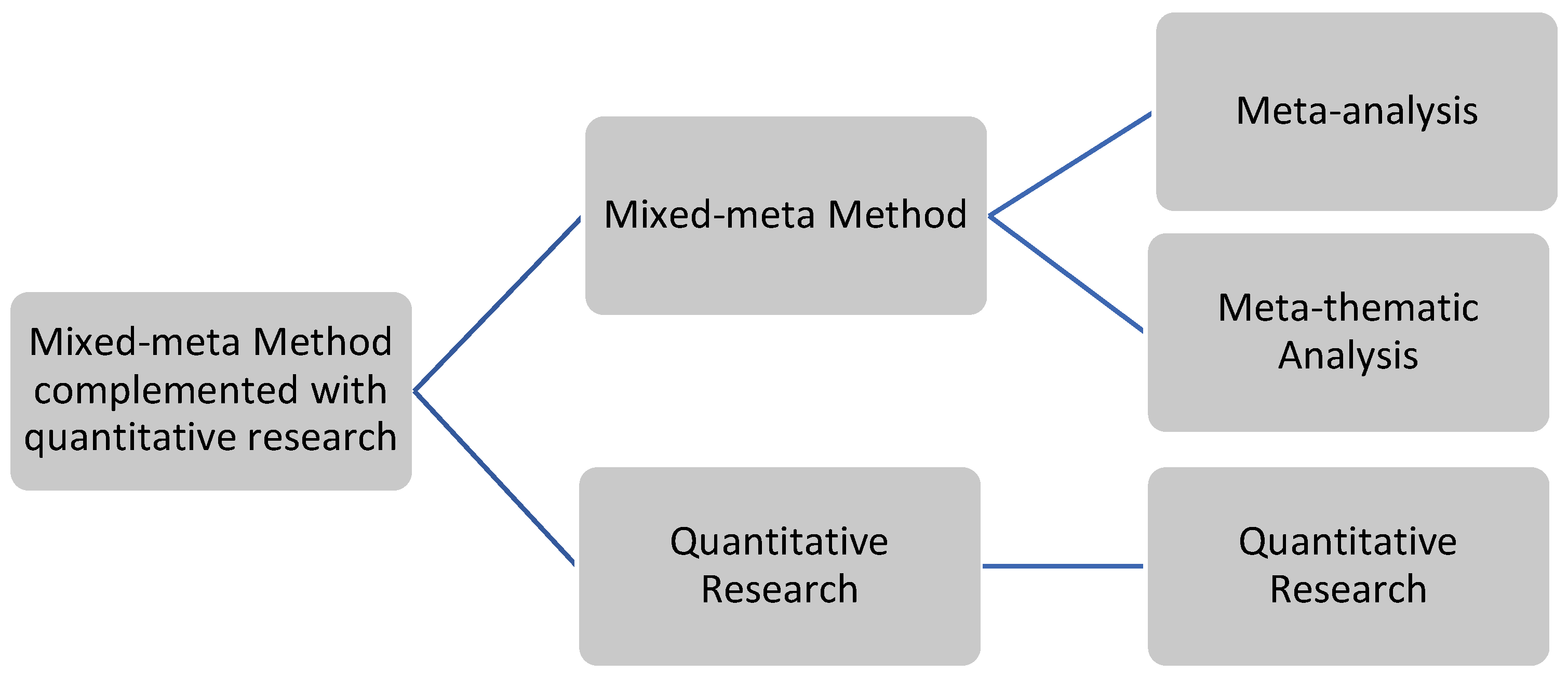

2. Methods

- Meta-analysis: a quantitative synthesis of data to determine the effect size of AI applications;

- Meta-thematic analysis: a qualitative examination of recurring themes in the literature, focusing on the effects of AI applications in educational contexts;

- The Rasch measurement model: a quantitative analysis of participant opinions, providing insights into teacher perspectives and evaluating response consistency.
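For readers less familiar with the third component, the many-facet form of the Rasch model, which is commonly used when rater severity and item difficulty are estimated together, can be written as below. This is the general textbook statement rather than a formula quoted from this study, and the symbols $B_n$, $D_i$, $C_j$, and $F_k$ are the conventional ones, assumed here for illustration.

```latex
\ln\!\left(\frac{P_{nijk}}{P_{nij(k-1)}}\right) = B_n - D_i - C_j - F_k
```

Here $P_{nijk}$ is the probability that rater $j$ assigns category $k$ to object $n$ on item $i$, $B_n$ is the measure of the rated object, $D_i$ is the difficulty of item $i$, $C_j$ is the severity of rater $j$, and $F_k$ is the difficulty of the step from category $k-1$ to category $k$.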

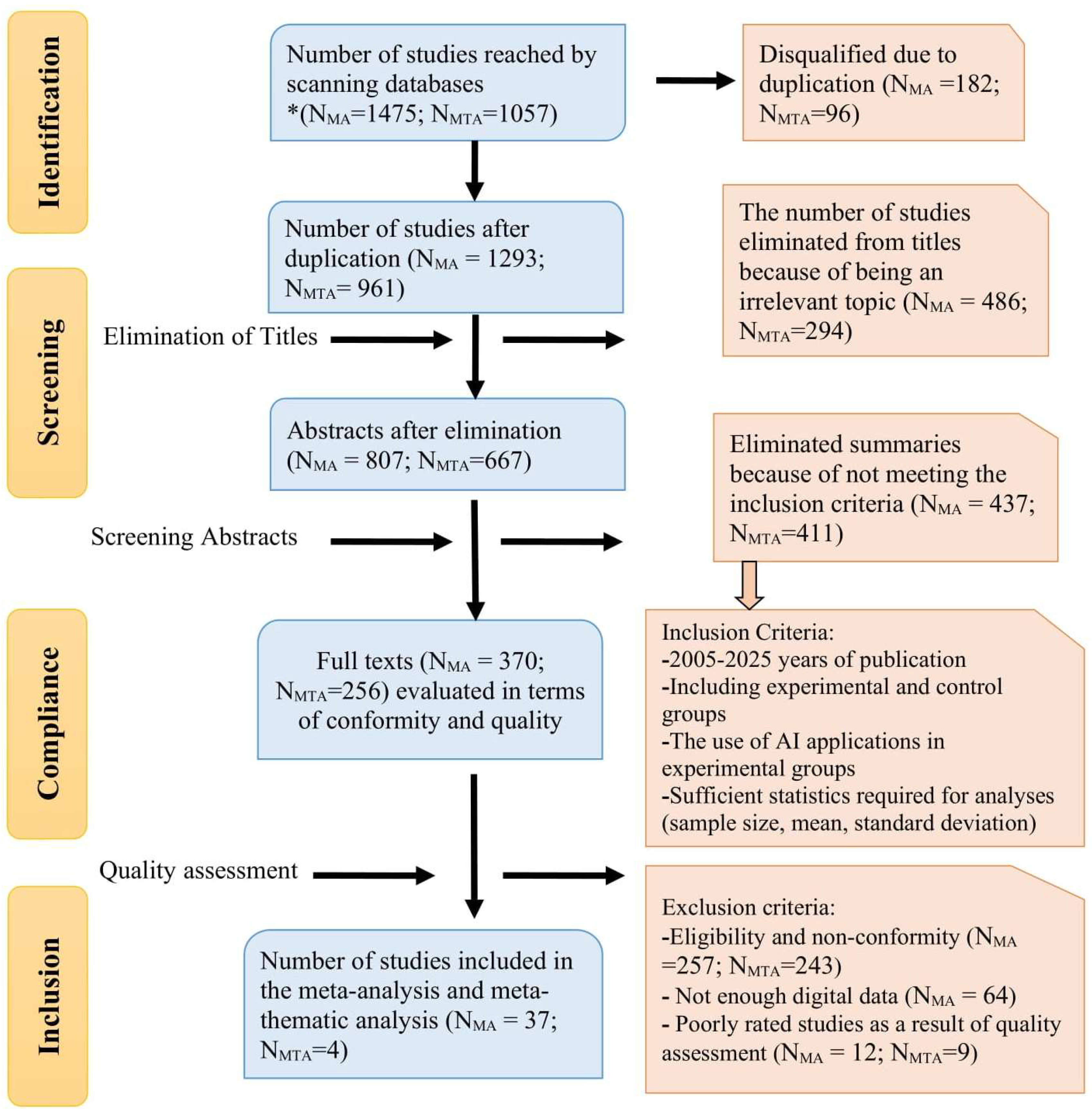

2.1. Meta-Analysis Process

2.1.1. Data Collection and Analysis

2.1.2. Effect Size and Model Selection

2.1.3. Moderator Analysis

2.1.4. Publication Bias

2.2. Meta-Thematic Analysis Process

2.2.1. Data Collection and Review

2.2.2. Coding Process

2.2.3. Reliability in the Meta-Thematic Analysis Process

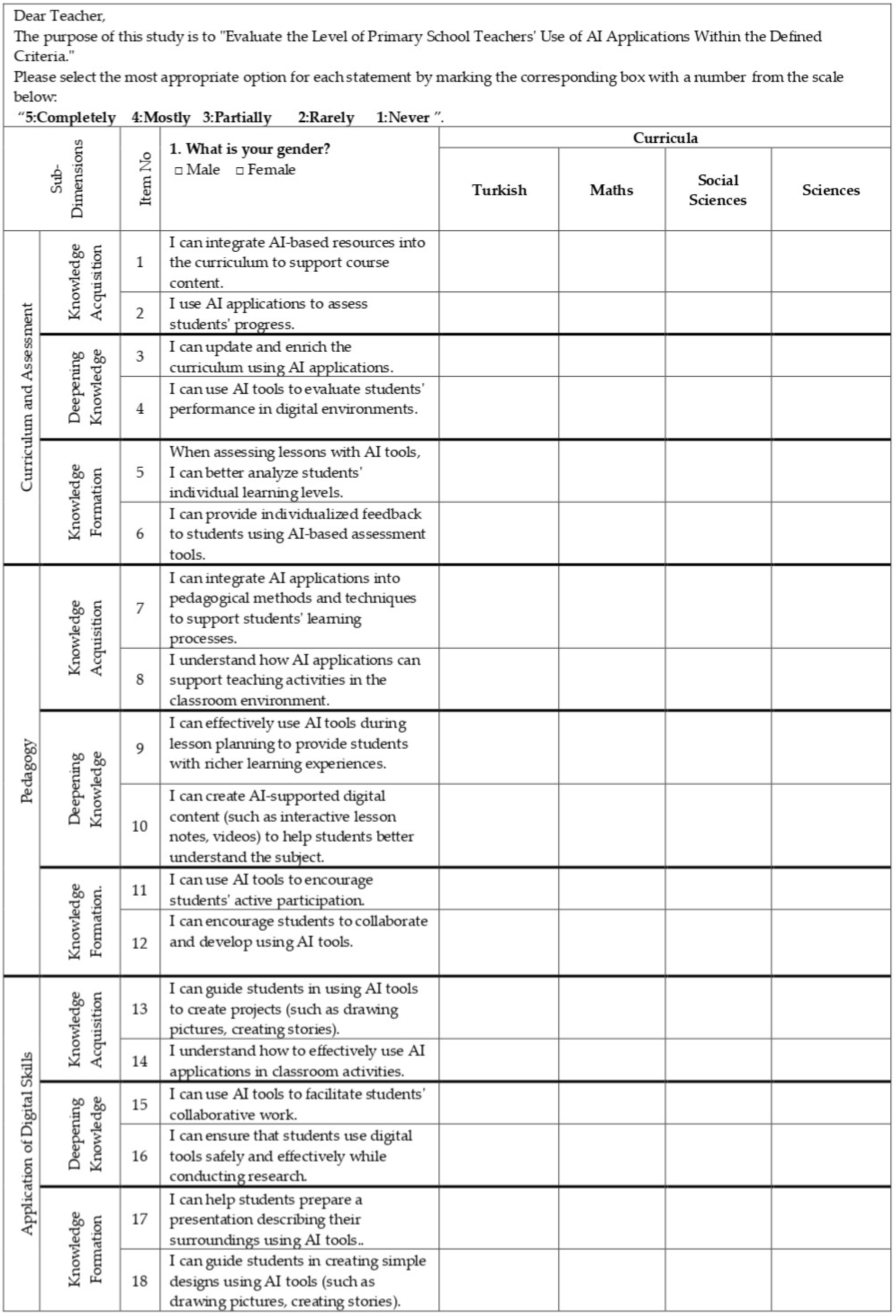

2.3. Rasch Measurement Model Analysis Process

2.3.1. Study Group

2.3.2. Research Data and Analysis

3. Findings

3.1. Meta-Analysis Findings on AI Applications

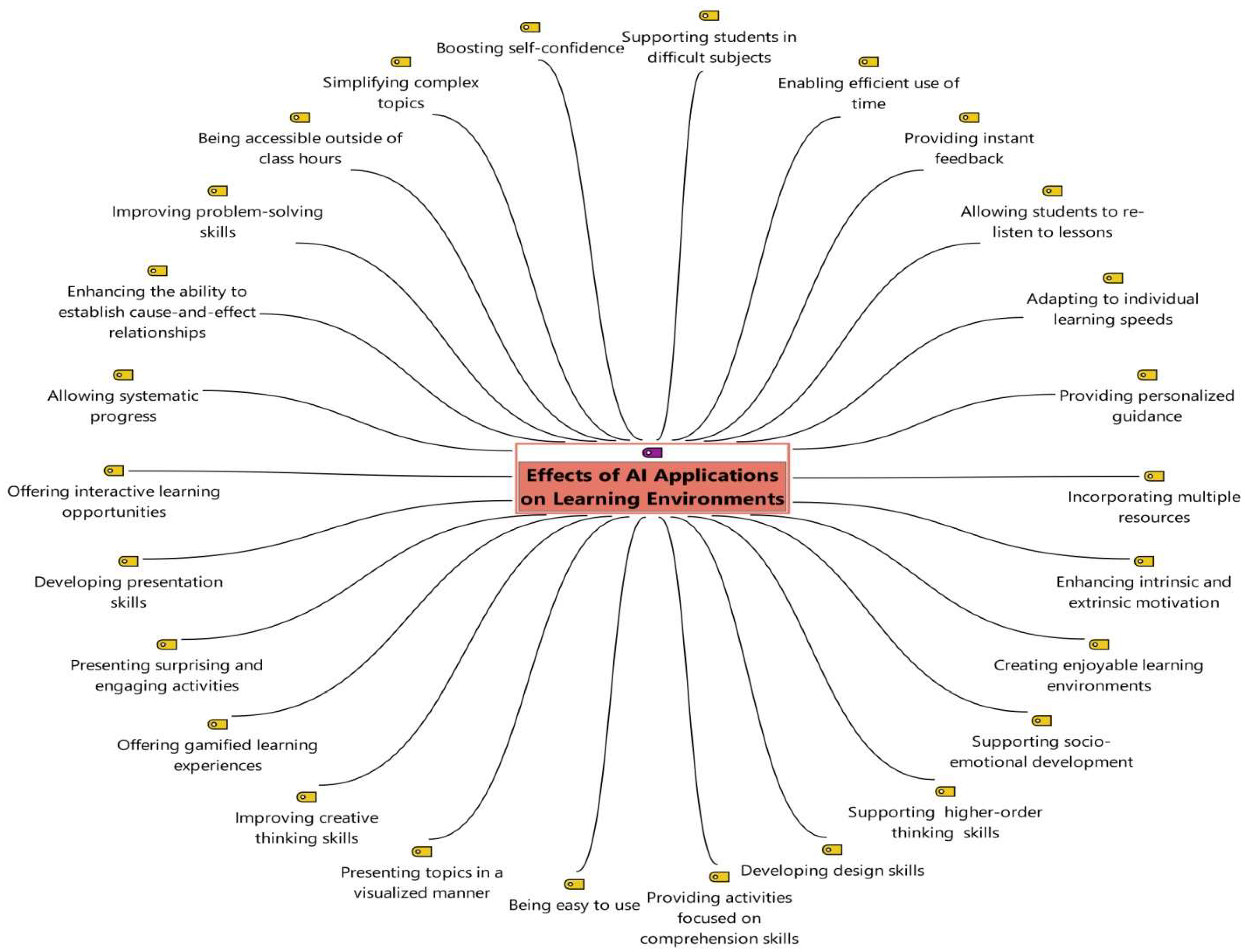

3.2. Meta-Thematic Findings Regarding Artificial Intelligence Applications

3.3. Findings Related to the Rasch Measurement Model for Artificial Intelligence Applications

4. Discussion and Conclusions

4.1. Results of the Meta-Analysis Process

4.2. Results of the Meta-Thematic Analysis Process

4.3. Results Related to the Rasch Measurement Model Process

4.4. Integrative Results of This Study

4.5. Limitations and Future Research

4.6. Recommendations

The application duration, subject areas, and sample sizes in AI-related research have significant effects on academic success and the impact of AI on educational environments. The use of the mixed-meta method, supported by the Rasch measurement model, has provided a more holistic perspective, allowing for a deeper exploration of the topic. Based on the limitations and findings of this study, the following points are recommended:

- Research on AI applications in primary school subject areas such as art, music, and physical education can be conducted. In addition to quantitative methods, qualitative methods could be employed to explore the effectiveness and applicability of survey questions;

- The meta-analysis phase of this study could include investigations into the impact of AI applications on attitudes and long-term retention;

- Studies could explore teachers’ information and technology competencies [86] within other professional practice areas;

- This study focused on perspectives from classroom teachers. Including evaluators from different expertise levels could broaden the scope of the study;

- Despite teachers’ positive expectations regarding AI, it is essential that they first familiarize themselves with the technology and learn how to integrate it into their classrooms. Many teachers may regard AI as an advanced technological product without prior experience. In this regard, in-service training could increase teachers’ knowledge about AI and improve their integration of this technology, significantly enhancing student success and the learning experience [86];

- Given the methodological diversity, the use of a mixed-meta method combined with quantitative analyses has allowed for a comprehensive examination of the findings, with detailed insights into how various variables affect the use of AI applications. Therefore, it is recommended to apply the mixed-meta method integrated with either qualitative or quantitative analyses in other areas to achieve comprehensive research findings;

- Policymakers should take necessary measures to address concerns related to ethics, data security, and human rights as AI becomes more integrated into education;

- Artificial-intelligence-supported assessment tools are highly effective in monitoring student performance and providing immediate feedback. Educational institutions can make these systems more widespread to reduce teachers’ workload and track students’ progress in more detail;

- For students to succeed in AI-supported learning environments, they need to possess critical thinking, problem-solving, and digital literacy skills. Curriculum adjustments should be made to equip students with these skills.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Agreement Value Ranges of Themes Related to Artificial Intelligence Applications

| Theme | K1 | K2 + | K2 - | Σ |
|---|---|---|---|---|
| Effect on Learning Environments | + | 26 | 2 | 28 |
| | - | 3 | 18 | 21 |
| | Σ | 29 | 20 | 49 |
| Problems Encountered | + | 14 | 1 | 15 |
| | - | 0 | 9 | 9 |
| | Σ | 14 | 10 | 24 |
| Related Solution Suggestions | + | 12 | 1 | 13 |
| | - | 1 | 7 | 8 |
| | Σ | 13 | 8 | 21 |
| Problems Encountered and Solution Suggestions | + | 26 | 2 | 28 |
| | - | 1 | 16 | 17 |
| | Σ | 27 | 18 | 45 |
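The agreement counts above can be turned into a chance-corrected agreement coefficient. The following is a minimal sketch, assuming Cohen's kappa is the statistic behind the agreement values (the reference list cites Cohen, 1960); the function name `cohens_kappa` and its argument names are illustrative and do not come from the study.

```python
def cohens_kappa(both_pos, k1_pos_k2_neg, k1_neg_k2_pos, both_neg):
    """Cohen's kappa for a 2x2 agreement table between coders K1 and K2."""
    total = both_pos + k1_pos_k2_neg + k1_neg_k2_pos + both_neg
    # Observed agreement: proportion of codes on which both coders agree.
    observed = (both_pos + both_neg) / total
    # Marginal totals of "+" decisions for each coder.
    k1_pos = both_pos + k1_pos_k2_neg
    k2_pos = both_pos + k1_neg_k2_pos
    # Agreement expected by chance from the marginals.
    expected = (k1_pos * k2_pos + (total - k1_pos) * (total - k2_pos)) / total**2
    return (observed - expected) / (1 - expected)


# Cell counts copied from the Appendix A table (K1 in rows, K2 in columns).
themes = {
    "Effect on Learning Environments": (26, 2, 3, 18),
    "Problems Encountered": (14, 1, 0, 9),
    "Related Solution Suggestions": (12, 1, 1, 7),
    "Problems Encountered and Solution Suggestions": (26, 2, 1, 16),
}

kappas = {name: cohens_kappa(*cells) for name, cells in themes.items()}
for name, kappa in kappas.items():
    print(f"{name}: kappa = {kappa:.2f}")
```

Under this assumption, the four themes give kappa values of roughly 0.79, 0.91, 0.80, and 0.86.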

Appendix B. Primary School Teachers’ Artificial Intelligence Applications Evaluation Form

Appendix C. The Content Validity Ratios of the Artificial Intelligence Application Evaluation Items

| Item No | Items | Necessary | Useful/Insufficient | Unnecessary | CVC * |
|---|---|---|---|---|---|
| 1 | I can integrate AI-based resources into the curriculum to support course content. | 12 | - | - | 100% |
| 2 | I use AI applications to assess students’ progress. | 12 | - | - | 100% |
| 3 | I can update and enrich the curriculum using AI applications. | 11 | 1 | - | 83% |
| 4 | I can use AI tools to evaluate students’ performance in digital environments. | 12 | - | - | 100% |
| 5 | When assessing lessons with AI tools, I can better analyze students’ individual learning levels. | 10 | 1 | 1 | 67% |
| 6 | I can provide individualized feedback to students using AI-based assessment tools. | 11 | 1 | - | 83% |
| 7 | I can integrate AI applications into pedagogical methods and techniques to support students’ learning processes. | 10 | 1 | 1 | 67% |
| 8 | I understand how AI applications can support teaching activities in the classroom environment. | 12 | - | - | 100% |
| 9 | I can effectively use AI tools during lesson planning to provide students with richer learning experiences. | 11 | - | 1 | 83% |
| 10 | I can create AI-supported digital content (such as interactive lesson notes, videos) to help students better understand the subject. | 10 | - | 2 | 67% |
| 11 | I can use AI tools to encourage students’ active participation. | 12 | - | - | 100% |
| 12 | I can encourage students to collaborate and develop using AI tools. | 10 | 2 | - | 67% |
| 13 | I can guide students in using AI tools to create projects (such as drawing pictures, creating stories). | 10 | 1 | 1 | 67% |
| 14 | I understand how to effectively use AI applications in classroom activities. | 10 | 1 | 1 | 67% |
| 15 | I can use AI tools to facilitate students’ collaborative work. | 10 | - | 2 | 67% |
| 16 | I can ensure that students use digital tools safely and effectively while conducting research. | 12 | - | - | 100% |
| 17 | I can help students prepare a presentation describing their surroundings using AI tools. | 10 | - | 2 | 67% |
| 18 | I can guide students in creating simple designs using AI tools (such as drawing pictures, creating stories). | 10 | 1 | 1 | 67% |
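The percentages in the last column appear consistent with Lawshe's content validity ratio, which the reference list cites. The sketch below assumes a panel of 12 experts (inferred from the row totals, not stated in the table) and treats the "Necessary" count as the number of experts rating an item essential; the function name is illustrative.

```python
def content_validity_ratio(n_essential, n_experts):
    """Lawshe's CVR: (n_e - N/2) / (N/2), ranging from -1 to 1."""
    half_panel = n_experts / 2
    return (n_essential - half_panel) / half_panel


N_EXPERTS = 12  # assumption: every row of the table sums to 12 ratings

# "Necessary" counts that occur in the table.
for n_essential in (12, 11, 10):
    cvr = content_validity_ratio(n_essential, N_EXPERTS)
    print(f"{n_essential} of {N_EXPERTS} experts: CVR = {cvr:.2f} ({cvr:.0%})")
```

With these inputs the ratio comes out at 1.00, 0.83, and 0.67, matching the 100%, 83%, and 67% entries in the table.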

Appendix D. Demographic Information of the Participants

| Participant Number | Participant Gender | Professional Seniority | Educational Status |
|---|---|---|---|
| 1 | Female | 19 years | Major |
| 2 | Male | 18 years | Major |
| 3 | Female | 24 years | Major |
| 4 | Male | 23 years | Major |
| 5 | Female | 19 years | Major |
| 6 | Female | 22 years | Major |
| 7 | Male | 36 years | Minor |
| 8 | Male | 28 years | Major |
| 9 | Female | 25 years | Major |
| 10 | Female | 22 years | Major |
| 11 | Female | 35 years | Minor |
| 12 | Male | 23 years | Master’s |
| 13 | Female | 25 years | Major |
| 14 | Male | 19 years | Major |
| 15 | Female | 14 years | Major |
| 16 | Male | 26 years | Master’s |
| 17 | Male | 34 years | Minor |
| 18 | Male | 28 years | Major |
| 19 | Female | 22 years | Major |
| 20 | Female | 32 years | Major |
| 21 | Male | 24 years | Major |

References

- Candan, F.; Başaran, M. A meta-thematic analysis of using technology-mediated gamification tools in the learning process. Interact. Learn. Environ. 2023, 1–17. [Google Scholar] [CrossRef]

- Russell, S.J.; Norvig, P. Artificial Intelligence: A Modern Approach, 3rd ed.; Pearson Education: Hoboken, NJ, USA, 2009. [Google Scholar]

- Akerkar, R. Introduction to Artificial Intelligence; Prentice-Hall of India Private Limited Learning Press: New Delhi, India, 2014. [Google Scholar]

- Ginsberg, M. Essentials of Artificial Intelligence; Morgan Kaufmann Publishers: Burlington, MA, USA, 2012. [Google Scholar]

- Komalavalli, K.; Hemalatha, R.; Dhanalakshmi, S. A survey of artificial intelligence in smart phones and its applications among the students of higher education in and around Chennai City. Shanlax Int. J. Educ. 2020, 8, 89–95. [Google Scholar] [CrossRef]

- Topol, E. Deep Medicine: How Artificial Intelligence Can Make Healthcare Human Again; Basic Books: Hachette, UK, 2019. [Google Scholar]

- Litan, A. Hype cycle for blockchain 2021; more action than hype. Gartner 2021, 21, 7–14. Available online: https://stephenlibby.wordpress.com/2021/07/14/hype-cycle-for-blockchain-2021-more-action-than-hype/ (accessed on 18 March 2025).

- Hamet, P.; Tremblay, J. Artificial intelligence in medicine. Metab. Clin. Exp. 2017, 69, 36–40. [Google Scholar] [CrossRef] [PubMed]

- Li, B.; Hou, B.; Yu, W.; Lu, X.; Yang, C. Applications of artificial intelligence in intelligent manufacturing: A review. Front. Inf. Technol. Electron. Eng. 2017, 18, 86–96. [Google Scholar] [CrossRef]

- Polat, M.; Aralan, T. Yapay zekâ tabanlı teknolojilerin BM Sürdürülebilir Kalkınma Hedeflerine katkıları. J. Orig. Stud. 2024, 5, 85–101. [Google Scholar] [CrossRef]

- Jing, X.; Zhu, R.; Lin, J.; Yu, B.; Lu, M. Education sustainability for intelligent manufacturing in the context of the new generation of artificial intelligence. Sustainability 2022, 14, 14148. [Google Scholar] [CrossRef]

- Chiu, T.K.F.; Chai, C.-s. Sustainable curriculum planning for artificial intelligence education: A self-determination theory perspective. Sustainability 2020, 12, 5568. [Google Scholar] [CrossRef]

- Pedró, F.; Subosa, M.; Rivas, A.; Valverde, P. Artificial Intelligence in Education: Challenges and Opportunities for Sustainable Development; UNESCO: Paris, France, 2019. [Google Scholar]

- Lee, K. A systematic review on social sustainability of artificial intelligence in product design. Sustainability 2021, 13, 2668. [Google Scholar] [CrossRef]

- Ta, M.D.P.; Wendt, S.; Sigurjonsson, T.O. Applying artificial intelligence to promote sustainability. Sustainability 2024, 16, 4879. [Google Scholar] [CrossRef]

- Maqbool, F.; Ansari, S.; Otero, P. The role of artificial intelligence and smart classrooms during COVID-19 pandemic and its impact on education. J. Indep. Stud. Res. Comput. 2021, 19, 7–14. [Google Scholar] [CrossRef]

- Mijwil, M.M.; Aggarwal, K.; Mutar, D.S.; Mansour, N.; Singh, R.S.S. The position of artificial intelligence in the future of education: An overview. Asian J. Appl. Sci. 2022, 10, 102–108. [Google Scholar] [CrossRef]

- Pantelimon, F.V.; Bologa, R.; Toma, A.; Posedaru, B.S. The evolution of AI-driven educational systems during the COVID-19 pandemic. Sustainability 2021, 13, 13501. [Google Scholar] [CrossRef]

- Self, J. The birth of IJAIED. Int. J. Artif. Intell. Educ. 2016, 26, 4–12. [Google Scholar] [CrossRef]

- Bracaccio, R.; Hojaij, F.; Notargiacomo, P. Gamification in the study of anatomy: The use of artificial intelligence to improve learning. FASEB J. 2019, 33, 444.28. [Google Scholar] [CrossRef]

- Santos, O.C. Training the body: The potential of AIED to support personalized motor skills learning. Int. J. Artif. Intell. Educ. 2016, 26, 730–755. [Google Scholar] [CrossRef]

- Roscoe, R.D.; Walker, E.A.; Patchan, M.M. Facilitating peer tutoring and assessment in intelligent learning systems. In Tutoring and Intelligent Tutoring Systems; Craig, S.D., Ed.; Nova Science Publishers: Hauppauge, NY, USA, 2018; pp. 41–68. [Google Scholar]

- Liang, Y.; Chen, L. Analysis of current situation, typical characteristics and development trend of artificial intelligence education application. China Electrofication Educ. 2018, 3, 24–30. [Google Scholar]

- Xue, Q.; Li, F. Security risks and countermeasures in artificial intelligence education applications. J. Distance Educ. 2018, 36, 88–94. [Google Scholar]

- Mu, P. Research on artificial intelligence education and its value orientation. In Proceedings of the 1st International Education Technology and Research Conference (IETRC), Tianjin, China, 14–15 September 2019. [Google Scholar]

- Ouyang, F.; Jiao, P. Artificial intelligence in education: The three paradigms. Comput. Educ. Artif. Intell. 2021, 2, 100020. [Google Scholar] [CrossRef]

- Luckin, R.; Holmes, W.; Griffiths, M.; Forcier, L.B. Intelligence Unleashed—An Argument for AI in Education; Pearson: London, UK, 2016. [Google Scholar]

- Panigrahi, C.M.A. Use of artificial intelligence in education. Manag. Account. 2020, 55, 64–67. Available online: https://ssrn.com/abstract=3606936 (accessed on 20 October 2024).

- Ryu, M.; Han, S. The educational perception on artificial intelligence by elementary school teachers. J. Korean Assoc. Inform. Educ. 2018, 22, 317–324. [Google Scholar] [CrossRef]

- Jia, J.; He, Y.; Le, H. A Multimodal Human-Computer Interaction System and Its Application in Smart Learning Environments. In Proceedings of the Blended Learning. Education in a Smart Learning Environment: 13th International Conference ICBL 2020, Bangkok, Thailand, 24–27 August 2020. [Google Scholar]

- Holmes, W.; Bialik, M.; Fadel, C. Artificial Intelligence in Education; Center for Curriculum Redesign: Boston, MA, USA, 2019. [Google Scholar] [CrossRef]

- Qin, F.; Li, K.; Yan, J. Understanding user trust in artificial intelligence-based educational systems: Evidence from China. Br. J. Educ. Technol. 2020, 51, 1693–1710. [Google Scholar] [CrossRef]

- Avci, O.; Abdeljaber, O.; Kiranyaz, S.; Hussein, M.; Gabbouj, M.; Inman, D.J. A review of vibration-based damage detection in civil structures: From traditional methods to machine learning and deep learning applications. Mech. Syst. Signal Process. 2021, 147, 107077. [Google Scholar] [CrossRef]

- Jain, P.; Coogan, S.C.; Subramanian, S.G.; Crowley, M.; Taylor, S.; Flannigan, M.D. A review of machine learning applications in wildfire science and management. Environ. Rev. 2020, 28, 478–505. [Google Scholar] [CrossRef]

- Nichols, J.A.; Herbert Chan, H.W.; Baker, M.A. Machine learning: Applications of artificial intelligence to imaging and diagnosis. Biophys. Rev. 2019, 11, 111–118. [Google Scholar] [CrossRef] [PubMed]

- Sharma, R.; Kamble, S.S.; Gunasekaran, A.; Kumar, V.; Kumar, A. A systematic literature review on machine learning applications for sustainable agriculture supply chain performance. Comput. Oper. Res. 2020, 119, 104926. [Google Scholar] [CrossRef]

- Su, J.; Yang, W. Artificial intelligence in early childhood education: A scoping review. Comput. Educ. Artif. Intell. 2022, 3, 100049. [Google Scholar] [CrossRef]

- Yue, M.; Jong, M.S.Y.; Dai, Y. Pedagogical design of K-12 artificial intelligence education: A systematic review. Sustainability 2022, 14, 15620. [Google Scholar] [CrossRef]

- Francis, K.; Rothschuh, S.; Poscente, D.; Davis, B. Malleability of spatial reasoning with short-term and long-term robotics interventions. Technol. Knowl. Learn. 2022, 27, 927–956. [Google Scholar] [CrossRef]

- Chu, Y.-S.; Yang, H.-C.; Tseng, S.-S.; Yang, C.-C. Implementation of a model-tracing-based learning diagnosis system to promote elementary students’ learning in mathematics. Educ. Technol. Soc. 2014, 17, 347–357. Available online: https://www.jstor.org/stable/jeductechsoci.17.2.347 (accessed on 24 November 2024).

- González-Calero, J.A.; Cózar, R.; Villena, R.; Merino, J.M. The development of mental rotation abilities through robotics-based instruction: An experience mediated by gender. Br. J. Educ. Technol. 2019, 50, 3198–3213. [Google Scholar] [CrossRef]

- Yang, W. Artificial Intelligence education for young children: Why, what, and how in curriculum design and implementation. Comput. Educ. Artif. Intell. 2022, 3, 100061. [Google Scholar] [CrossRef]

- Siemens, G. Connectivism: A learning theory for the digital age. Int. J. Instr. Technol. Distance Learn. 2005, 2, 14–16. Available online: http://www.itdl.org/Journal/Jan_05/article01.htm (accessed on 10 January 2025).

- Goldie, J.G.S. Connectivism: A knowledge learning theory for the digital age? Med. Teach. 2016, 38, 1064–1069. [Google Scholar] [CrossRef] [PubMed]

- Guerra, F.C.H. A Model for Putting Connectivism Into Practice in a Classroom Environment. Master’s Thesis, Universidade Nova, Lisboa, Portugal, 2022. [Google Scholar]

- Chiu, T.K. A holistic approach to the design of artificial intelligence (AI) education for K-12 schools. TechTrends 2021, 65, 796–807. [Google Scholar] [CrossRef]

- Humble, N.; Mozelius, P. Artificial Intelligence in Education-a Promise, a Threat or a Hype? In Proceedings of the European Conference on the Impact of Artificial Intelligence and Robotics 2019 (ECIAIR 2019), Oxford, UK, 31 October–1 November 2019; pp. 149–156. [Google Scholar]

- Kaplan-Rakowski, R.; Grotewold, K.; Hartwick, P.; Papin, K. Generative AI and teachers’ perspectives on its implementation in education. J. Interact. Learn. Res. 2023, 34, 313–338. [Google Scholar]

- Chiu, T.K.; Xia, Q.; Zhou, X.; Chai, C.S.; Cheng, M. Systematic literature review on opportunities, challenges, and future research recommendations of artificial intelligence in education. Comput. Educ. Artif. Intell. 2023, 4, 100118. [Google Scholar] [CrossRef]

- Fu, S.; Gu, H.; Yang, B. The affordances of AI-enabled automatic scoring applications on learners’ continuous learning intention: An empirical study in China. Br. J. Educ. Technol. 2020, 51, 1674–1692. [Google Scholar] [CrossRef]

- Li, M.; Su, Y. Evaluation of online teaching quality of basic education based on artificial intelligence. Int. J. Emerg. Technol. Learn. (iJET) 2020, 15, 147–161. [Google Scholar] [CrossRef]

- Luo, D. Guide teaching system based on artificial intelligence. Int. J. Emerg. Technol. Learn. (iJET) 2018, 13, 90–102. [Google Scholar] [CrossRef]

- Koçel, T. Yönetim ve organizasyonda metodoloji ve güncel kavramlar. İstanbul Üniversitesi İşletme Fakültesi Derg. 2017, 46, 3–8. [Google Scholar]

- Toraman, S. Karma yöntemler araştırması: Kısa tarihi, tanımı, bakış açıları ve temel kavramlar. Nitel Sos. Bilim. 2021, 3, 1–29. [Google Scholar] [CrossRef]

- Molina-Azorín, J.F.; López-Gamero, M.D.; Pereira-Moliner, J.; Pertusa-Ortega, E.M. Mixed methods studies in entrepreneurship research: Applications and contributions. Entrep. Reg. Dev. 2012, 24, 425–456. [Google Scholar] [CrossRef]

- Creswell, J.W.; Sözbilir, M. Karma Yöntem Araştırmalarına Giriş; Pegem Akademi Yayıncılık: Ankara, Türkiye, 2017. [Google Scholar]

- Creswell, J.W.; Plano Clark, V.L.; Gutmann, M.; Hanson, W. Advanced mixed methods research designs. In Handbook of Mixed Methods in Social and Behavioral Research; Tashakkori, A., Teddlie, C., Eds.; Sage: New York, NY, USA, 2003; pp. 209–240. [Google Scholar]

- Batdı, V. Farklı değişkenlerin öznel iyi oluş düzeyine etkisi: Nitel analizle bütünleştirilmiş karma-meta yöntemi. Gaziantep Üniversitesi Eğitim Bilim. Derg. 2024, 8, 53–83. [Google Scholar]

- Batdı, V. Yabancılara dil öğretiminde teknolojinin kullanımı: Bir karma-meta yöntemi. Milli Eğitim Derg. 2021, 50, 1213–1244. [Google Scholar] [CrossRef]

- Batdı, V. Meta-thematic analysis. In Meta-Thematic Analysis: Sample Applications; Batdı, V., Ed.; Anı Publication: Ankara, Türkiye, 2019; pp. 10–76. [Google Scholar]

- Glass, G.V. Primary, secondary, and meta-analysis of research. Educ. Res. 1976, 5, 3–8. [Google Scholar]

- Tsagris, M.; Fragkos, K.C. Meta-analyses of clinical trials versus diagnostic test accuracy studies. Diagn. Meta-Anal. A Useful Tool Clin. Decis.-Mak. 2018, 31–40. [Google Scholar] [CrossRef]

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. BMJ 2009, 339, b2535. [Google Scholar] [PubMed]

- Miles, M.B.; Huberman, A.M. Qualitative Data Analysis: An Expanded Sourcebook, 2nd ed.; Sage Publication: London, UK, 1994. [Google Scholar]

- Thalheimer, W.; Cook, S. How to calculate effect sizes from published research: A simplified methodology. Work.-Learn. Res. 2002, 1, 1–9. [Google Scholar]

- Schmidt, F.L.; Oh, I.S.; Hayes, T.L. Fixed-versus random-effects models in meta-analysis: Model properties and an empirical comparison of differences in results. Br. J. Math. Stat. Psychol. 2009, 62, 97–128. [Google Scholar] [CrossRef]

- Cooper, H.; Hedges, L.V.; Valentine, J.C. The Handbook of Research Synthesis and Meta-Analysis, 2nd ed.; Russell Sage Publication: New York, NY, USA, 2009. [Google Scholar]

- Duval, S.; Tweedie, R. A nonparametric “trim and fill” method of accounting for publication bias in meta-analysis. J. Am. Stat. Assoc. 2000, 95, 89–98. [Google Scholar]

- Sterne, J.A.; Harbord, R.M. Funnel plots in meta-analysis. Stata J. Promot. Commun. Stat. Stata 2004, 4, 127–141. [Google Scholar] [CrossRef]

- Rodríguez, M.D. Glossary on meta-analysis. J. Epidemiol. Community Health 2001, 55, 534–536. [Google Scholar] [CrossRef]

- Rosenthal, R. The file drawer problem and tolerance for null results. Psychol. Bull. 1979, 86, 638–641. [Google Scholar] [CrossRef]

- Borenstein, M.; Hedges, L.V.; Higgins, J.P.T.; Rothstein, H.R. Introduction to Meta-Analysis; John Wiley & Sons Ltd. Press: Hoboken, NJ, USA, 2009. [Google Scholar]

- Sak, R.; Sak, İ.T.Ş.; Şendil, Ç.Ö.; Nas, E. Bir araştırma yöntemi olarak doküman analizi. Kocaeli Üniversitesi Eğitim Derg. 2021, 4, 227–256. [Google Scholar] [CrossRef]

- Bowen, G.A. Naturalistic inquiry and the saturation concept: A research note. Qual. Res. 2008, 8, 137–152. [Google Scholar] [CrossRef]

- Bryman, A. Social Research Methods; Oxford University Press: Oxford, UK, 2016. [Google Scholar]

- Mayring, P. Qualitative content analysis. A Companion Qual. Res. 2000, 1, 159–176. [Google Scholar]

- Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 1960, 20, 37–46. [Google Scholar]

- Streubert, H.J.; Carpenter, D.R. Qualitative Research in Nursing: Advancing the Humanistic Imperative, 5th ed.; Wolters Kluwer: Alphen aan den Rijn, The Netherlands, 2011. [Google Scholar]

- Linacre, J.M. Generalizability Theory and Many Facet Rasch Measurement; Annual Meeting of the American Educational Research Association: Denver, CO, USA, 1993. [Google Scholar]

- Linacre, J.M. A User’s Guide to Winsteps, Ministep Rasch-Model Computer Programs. 2008. Available online: https://www.researchgate.net/publication/238169941_A_User’’s_Guide_to_Winsteps_Rasch-Model_Computer_Program (accessed on 18 March 2025).

- Semerci, Ç. Mikro öğretim uygulamalarının çok-yüzeyli Rasch ölçme modeli ile analizi. Eğitim Ve Bilim 2011, 36, 161. [Google Scholar]

- İlhan, M. Açık uçlu sorularla yapılan ölçmelerde klasik test kuramı ve çok yüzeyli Rasch modeline göre hesaplanan yetenek kestirimlerinin karşılaştırılması. Hacet. Üniversitesi Eğitim Fakültesi Derg. 2016, 31, 346–368. [Google Scholar] [CrossRef]

- Lynch, B.K.; McNamara, T.F. Using G-theory and many-facet Rasch measurement in the development of performance assessments of the ESL speaking skills of immigrants. Lang. Test. 1998, 15, 158–180. [Google Scholar] [CrossRef]

- Hambleton, R.K.; Swaminathan, H. Estimation of item and ability parameters. In Item Response Theory: Principles and Applications; Springer Science+Business Media: New York, NY, USA, 1985; pp. 125–150. [Google Scholar]

- Sevil, Ş.; Aras, İ.S. Eğitimde Kullanılan Yapay Zekâ Araçları: Öğretmen El Kitabı; Milli Eğitim Bakanlığı Yenilik ve Eğitim Teknolojileri Genel Müdürlüğü: Ankara, Türkiye, 2024. [Google Scholar]

- UNESCO. Öğretmenlere Yönelik Bilgi ve İletişim Teknolojileri Yetkinlik Çerçevesi; UNESCO: Paris, France, 2018. Available online: https://yegitek.meb.gov.tr/www/unesco-ogretmenlere-yonelik-bilgi-ve-iletisim-teknolojileri-yetkinlik-cercevesi/icerik/3146 (accessed on 22 October 2024).

- Lawshe, C.H. A quantitative approach to content validity. Pers. Psychol. 1975, 28, 563–575. [Google Scholar]

- Veneziano, L.; Hooper, J. A method for quantifying content validity of health-related questionnaires. Am. J. Health Behav. 1997, 21, 67–70. [Google Scholar]

- Güler, N.; Gelbal, S. A study based on classic test theory and many facet Rasch model. Eurasian J. Educ. Res. 2010, 38, 108–125. [Google Scholar]

- Higgins, J.P.; Thompson, S.G.; Deeks, J.J.; Altman, D.G. Measuring inconsistency in meta-analyses. BMJ 2003, 327, 557–560. [Google Scholar]

- Linacre, J.M.; Wright, B. Rasch Measurement Transactions; MESA Press: Chicago, IL, USA, 1995. [Google Scholar]

- Baştürk, R.; Işıkoğlu, N. Okul öncesi eğitim kurumlarının işlevsel kalitelerinin çok yüzeyli Rasch modeli ile analizi. Kuram Ve Uygulamada Eğitim Bilim. 2008, 8, 7–32. [Google Scholar]

- Batdı, V. Ortaöğretim matematik öğretim programı içeriğinin Rasch ölçme modeli ve NVivo ile analizi. Turk. Stud. 2014, 9, 93–109. [Google Scholar] [CrossRef]

- Shamir, G.; Levin, I. Teaching machine learning in elementary school. Int. J. Child-Comput. Interact. 2021, 31, 100415. [Google Scholar] [CrossRef]

- Kajiwara, Y.; Matsuoka, A.; Shinbo, F. Machine learning role playing game: Instructional design of AI education for age-appropriate in K-12 and beyond. Comput. Educ. Artif. Intell. 2023, 5, 100162. [Google Scholar] [CrossRef]

- Kablan, Z.; Topan, B.; Erkan, B. Sınıf içi öğretimde materyal kullanımının etkililik düzeyi: Bir meta-analiz çalışması. Kuram Ve Uygulamada Eğitim Bilim. 2013, 13, 1629–1644. [Google Scholar]

- Camnalbur, M. Bilgisayar Destekli Öğretimin Etkililiği Üzerine Bir Meta Analiz Çalışması. Master’s Thesis, Marmara Üniversitesi, İstanbul, Türkiye, 2008. [Google Scholar]

- Salas-Pilco, S.Z. The impact of AI and robotics on physical, social-emotional and intellectual learning outcomes: An integrated analytical framework. Br. J. Educ. Technol. 2020, 51, 1808–1825. [Google Scholar] [CrossRef]

- Pillai, R.; Sivathanu, B.; Metri, B.; Kaushik, N. Students’ adoption of AI-based teacher-bots (T-bots) for learning in higher education. Inf. Technol. People 2024, 37, 328–355. [Google Scholar] [CrossRef]

- Bers, M.U.; Flannery, L.; Kazakoff, E.R.; Sullivan, A. Computational thinking and tinkering: Exploration of an early childhood robotics curriculum. Comput. Educ. 2014, 72, 145–157. [Google Scholar] [CrossRef]

- Fitton, V.A.; Ahmedani, B.K.; Harold, R.D.; Shifflet, E.D. The role of technology on young adolescent development: Implications for policy, research and practice. Child Adolesc. Soc. Work. J. 2013, 30, 399–413. [Google Scholar] [CrossRef]

- Garrison, D.R. E-Learning in The 21st Century: A Community of Inquiry Framework for Research and Practice; Routledge: New York, NY, USA, 2017. [Google Scholar]

- Ke, F. Examining online teaching, cognitive, and social presence for adult students. Comput. Educ. 2010, 55, 808–820. [Google Scholar] [CrossRef]

- Ellis, R.D.; Allaire, J.C. Modeling computer interest in older adults: The role of age, education, computer knowledge, and computer anxiety. Hum. Factors 1999, 41, 345–355. [Google Scholar] [CrossRef] [PubMed]

- Toivonen, T.; Jormanainen, I.; Kahila, J.; Tedre, M.; Valtonen, T.; Vartiainen, H. Co-Designing Machine Learning Apps In K–12 With Primary School Children. In Proceedings of the 2020 IEEE 20th International Conference on Advanced Learning Technologies (ICALT), Tartu, Estonia, 6–9 July 2020; pp. 308–310. [Google Scholar]

- Randall, N. A survey of robot-assisted language learning (RALL). ACM Trans. Hum.-Robot Interact. 2019, 9, 1–36. [Google Scholar] [CrossRef]

- Wang, Y.-C. Using wikis to facilitate interaction and collaboration among EFL learners: A social constructivist approach to language. System 2014, 42, 383–390. [Google Scholar] [CrossRef]

- Al-kfairy, M. Factors impacting the adoption and acceptance of ChatGPT in educational settings: A narrative review of empirical studies. Appl. Syst. Innov. 2024, 7, 110. [Google Scholar] [CrossRef]

- Chen, L.; Chen, P.; Lin, Z. Artificial intelligence in education: A review. IEEE Access 2020, 8, 75264–75278. [Google Scholar] [CrossRef]

- Wang, S.P.; Chen, Y.L. Effects of multimodal learning analytics with concept maps on college students’ vocabulary and reading performance. J. Educ. Technol. Soc. 2018, 21, 12–25.

- Reeves, B.; Hancock, J.; Liu, X. Social Robots Are Like Real People: First Impressions, Attributes, and Stereotyping of Social Robots. Technol. Mind Behav. 2020, 1.

- Huang, L. Ethics of artificial intelligence in education: Student privacy and data protection. Sci. Insights Educ. Front. 2023, 16, 2577–2587.

- Baihakki, M.A.; Mohamed Saleh Ba Qutayan, S. Ethical issues of artificial intelligence (AI) in the healthcare. J. Sci. Technol. Innov. Policy 2023, 9, 32–38.

- Baştürk, R. Bilimsel araştırma ödevlerinin çok yüzeyli Rasch ölçme modeli ile değerlendirilmesi. J. Meas. Eval. Educ. Psychol. 2010, 1, 51–57.

- Doğan, O.; Baloğlu, N. Üniversite öğrencilerinin endüstri 4.0 kavramsal farkındalık düzeyleri. TÜBAV Bilim Derg. 2020, 13, 126–142.

- Talan, T. Artificial intelligence in education: A bibliometric study. Int. J. Res. Educ. Sci. (IJRES) 2021, 7, 822–837.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

- Köse, İ.A.; Usta, H.G.; Yandı, A. Sunum yapma becerilerinin çok yüzeyli rasch analizi ile değerlendirilmesi. Abant İzzet Baysal Üniversitesi Eğitim Fakültesi Derg. 2016, 16, 1853–1864.

- Semerci, Ç. Öğrencilerin BÖTE bölümüne ilişkin görüşlerinin rasch ölçme modeline göre değerlendirilmesi (Fırat Üniversitesi örneği). Educ. Sci. 2012, 7, 777–784.

- Uluman, M.; Tavşancıl, E. Çok değişkenlik kaynaklı rasch ölçme modeli ve hiyerarşik puanlayıcı modeli ile kestirilen puanlayıcı parametrelerinin karşılaştırılması. İnsan Ve Toplum Bilim. Araştırmaları Derg. 2017, 6, 777–798.

- Benvenuti, M.; Cangelosi, A.; Weinberger, A.; Mazzoni, E.; Benassi, M.; Barbaresi, M.; Orsoni, M. Artificial intelligence and human behavioral development: A perspective on new skills and competences acquisition for the educational context. Comput. Hum. Behav. 2023, 148, 107903.

- Zhang, K.; Aslan, A.B. AI technologies for education: Recent research & future directions. Comput. Educ. Artif. Intell. 2021, 2, 100025.

- Lin, X.F.; Wang, Z.; Zhou, W.; Luo, G.; Hwang, G.J.; Zhou, Y.; Liang, Z.M. Technological support to foster students’ artificial intelligence ethics: An augmented reality-based contextualized dilemma discussion approach. Comput. Educ. 2023, 201, 104813.

- Kaledio, P.; Robert, A.; Frank, L. The impact of artificial intelligence on students’ learning experience. Available SSRN 2024.

| Criteria | Description |
|---|---|
| Time Period | 2005–2025 |
| Publication Language | English and Turkish |
| Appropriateness of Teaching Method | Experimental and/or quasi-experimental studies with pre-test–post-test control-group designs that use artificial intelligence applications |
| Statistical Data | Sample size (n), arithmetic mean (X̄), and standard deviation (SD) for effect size calculation |
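
The statistical data listed in the criteria above (sample size, mean, and standard deviation per group) are the usual inputs for a standardized mean difference. The following is a minimal sketch of Hedges' g under the standard pooled-SD formula with the small-sample correction; it is an illustration, not the authors' actual computation. The function name `hedges_g` and the input numbers are hypothetical.

```python
import math


def hedges_g(n_exp, mean_exp, sd_exp, n_ctrl, mean_ctrl, sd_ctrl):
    """Standardized mean difference with Hedges' small-sample correction."""
    df = n_exp + n_ctrl - 2
    # Pooled standard deviation of the two groups
    pooled_sd = math.sqrt(
        ((n_exp - 1) * sd_exp ** 2 + (n_ctrl - 1) * sd_ctrl ** 2) / df
    )
    d = (mean_exp - mean_ctrl) / pooled_sd  # Cohen's d
    correction = 1 - 3 / (4 * df - 1)       # approximate correction factor J
    return correction * d


# Hypothetical post-test scores (not taken from any included study)
g = hedges_g(n_exp=30, mean_exp=75.0, sd_exp=10.0,
             n_ctrl=30, mean_ctrl=70.0, sd_ctrl=10.0)
print(round(g, 3))
```

With equal SDs of 10 and a 5-point mean difference, Cohen's d is 0.5, and the correction shrinks it slightly for the combined sample of 60.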

| Test Type | Model | n | g | 95% CI Lower | 95% CI Upper | Q | p | I² |
|---|---|---|---|---|---|---|---|---|
| AA | FEM | 24 | 0.59 | 0.47 | 0.64 | 163.11 | 0.00 | 85.90 |
| | REM | 24 | 0.51 | 0.28 | 0.74 | | | |
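
The heterogeneity columns in the table above are linked by the standard definition I² = (Q − df) / Q × 100. The sketch below reproduces the reported I² from the reported Q, assuming that n = 24 is the number of effect sizes, so that df = 23. The function name `i_squared` is hypothetical.

```python
def i_squared(q, k):
    """I-squared (%) from Cochran's Q and the number of effect sizes k."""
    df = k - 1
    if q <= 0:
        return 0.0
    # Negative values are truncated to zero by convention
    return max(0.0, (q - df) / q) * 100


# Q = 163.11 with n = 24 effect sizes, as reported in the table
i2 = i_squared(163.11, 24)
print(round(i2, 2))
```

Under this assumption the result agrees with the tabulated 85.90 to two decimals.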

| Items | Groups | n | g | Lower Limit (95% CI) | Upper Limit (95% CI) | Z-Value | p-Value | Q-Value | df | p-Value (Q) |
|---|---|---|---|---|---|---|---|---|---|---|
| Application duration | 1–4 | 0.59 | 0.59 | 0.30 | 0.88 | 4.01 | 0.00 | | | |
| | 5+ | 0.09 | 0.09 | −0.15 | 0.33 | 0.75 | 0.45 | | | |
| | Unspecified | 0.58 | 0.58 | −0.02 | 1.19 | 1.89 | 0.06 | | | |
| | Total | 0.32 | 0.32 | 0.14 | 0.50 | 3.54 | 0.00 | 7.69 | 2 | 0.02 |
| Subjects | Maths | 19 | 0.44 | 0.18 | 0.71 | 3.25 | 0.01 | | | |
| | AI | 3 | 0.80 | 0.07 | 1.53 | 2.15 | 0.03 | | | |
| | Others | 2 | 0.81 | 0.19 | 1.44 | 2.54 | 0.01 | | | |
| | Total | 24 | 0.53 | 0.30 | 0.76 | 4.47 | 0.00 | 1.7 | 2 | 0.43 |
| Sample size | Small | 6 | 0.50 | 0.09 | 0.90 | 2.40 | 0.02 | | | |
| | Medium | 9 | 0.37 | 0.18 | 0.55 | 3.93 | 0.00 | | | |
| | Large | 6 | 0.63 | 0.18 | 1.08 | 2.75 | 0.01 | | | |
| | Total | 24 | 0.42 | 0.26 | 0.57 | 5.24 | 0.00 | 1.29 | 2 | 0.52 |
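
As a rough consistency check on the moderator table, the Z-values can be approximated from each effect size and its 95% confidence interval, and the between-group p-values follow from the Q-values with df = 2. The sketch below assumes symmetric normal-theory intervals (critical value 1.96) and uses the closed form of the chi-square survival function for 2 degrees of freedom, exp(−Q/2). The helper names are hypothetical, and small differences from the table are expected because the inputs are rounded.

```python
import math


def z_from_ci(g, lower, upper, z_crit=1.96):
    """Approximate Z-value from an effect size and its 95% CI."""
    se = (upper - lower) / (2 * z_crit)  # standard error implied by CI width
    return g / se


def chi2_p_df2(q):
    """Upper-tail p-value of a chi-square statistic with exactly 2 df."""
    return math.exp(-q / 2)


# Maths subgroup: g = 0.44, CI [0.18, 0.71]; table reports Z = 3.25
z_maths = z_from_ci(0.44, 0.18, 0.71)

# Application duration: Q = 7.69, df = 2; table reports p = 0.02
p_duration = chi2_p_df2(7.69)

print(round(z_maths, 2), round(p_duration, 2))
```

The same p-value helper gives roughly 0.43 for Q = 1.7 and 0.52 for Q = 1.29, in line with the subject and sample-size rows.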

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Topkaya, Y.; Doğan, Y.; Batdı, V.; Aydın, S. Artificial Intelligence Applications in Primary Education: A Quantitatively Complemented Mixed-Meta-Method Study. Sustainability 2025, 17, 3015. https://doi.org/10.3390/su17073015
