Assessing the Repeatability of Automated Seafloor Classification Algorithms, with Application in Marine Protected Area Monitoring

Abstract

1. Introduction

2. Material and Methods

2.1. Study Site

2.2. Geophysical Data

2.3. Groundtruth Data

2.4. Data Preparation

2.5. Feature Extraction

2.5.1. Bathymetry Derivatives: Multiscale Terrain Analysis

2.5.2. Backscatter Derivatives: Multiscale Textural Analysis

2.6. Feature Reduction

2.7. Classification

2.8. Model Assessment

2.9. Accuracy Assessment

2.10. Repeatability Assessment

2.11. Habitat Change Assessment

2.12. Sensitivity Analysis

3. Results

3.1. Feature Selection: PCA

3.2. Model Comparison

3.2.1. Accuracy of Individual Models

3.2.2. Per Class Accuracy

3.2.3. Model Repeatability

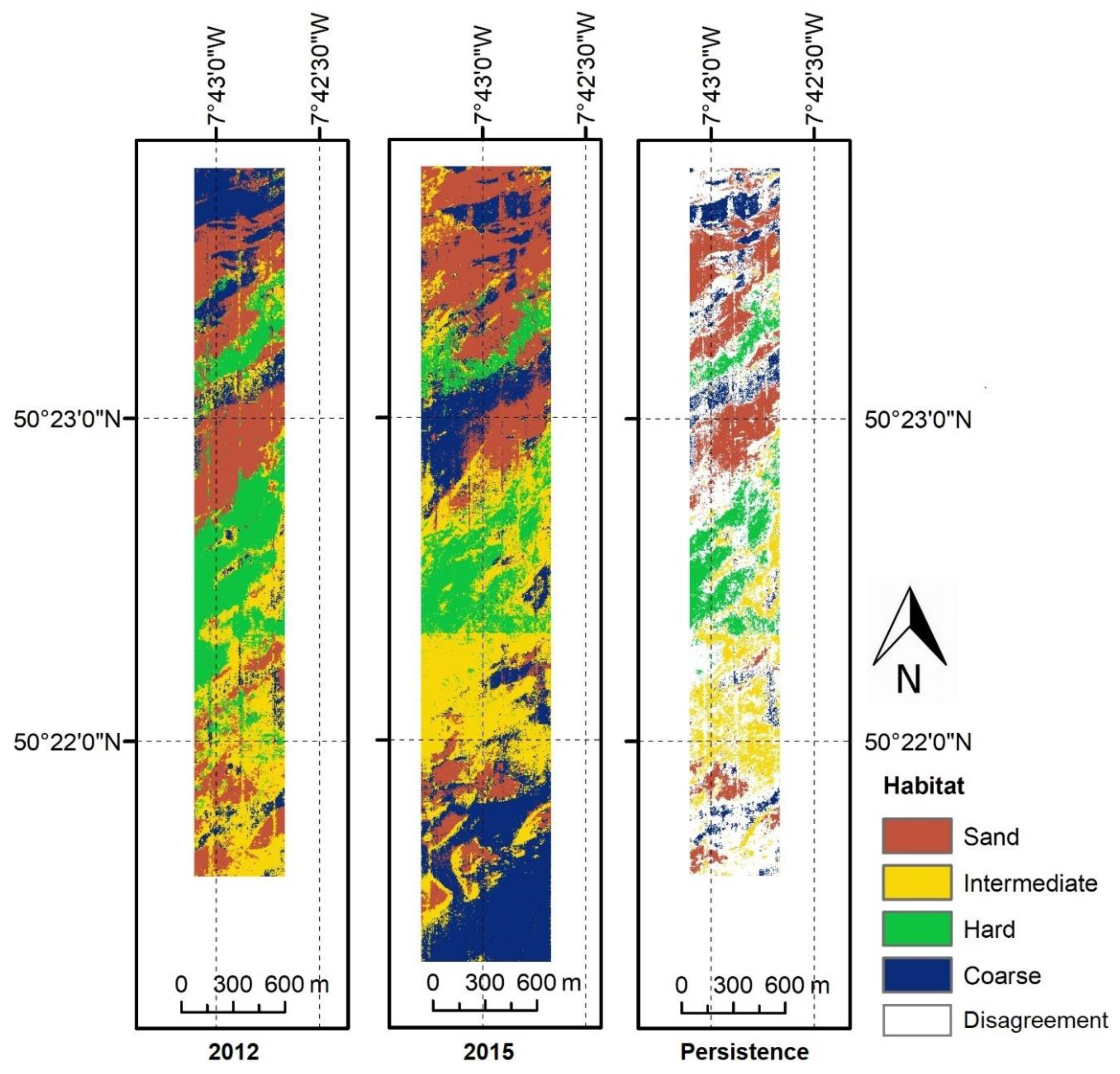

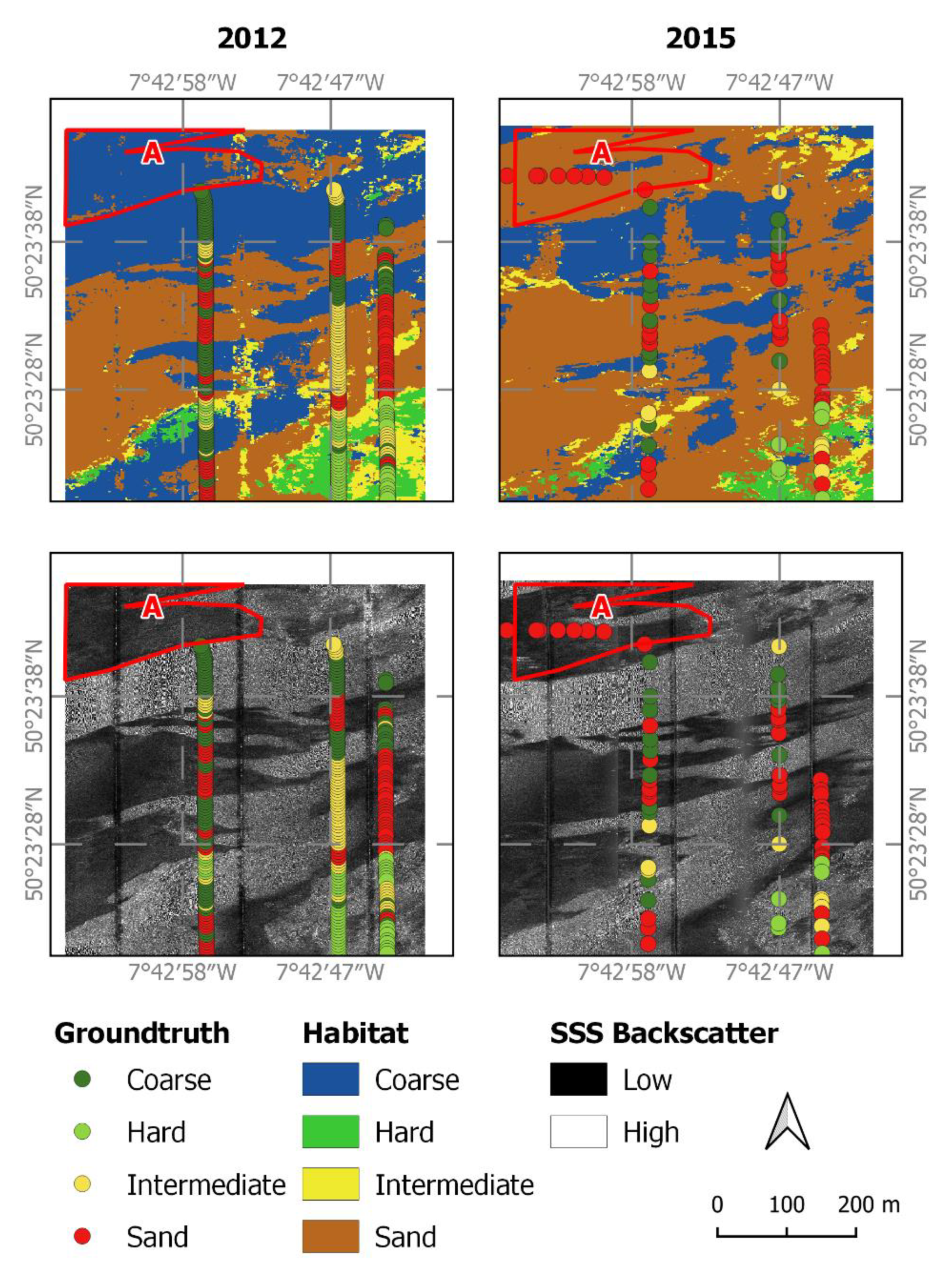

3.3. Change Assessment

3.3.1. 2012 Habitat Map

3.3.2. Habitat Transitions

3.4. Model Exploration

3.4.1. Feature Importance

3.4.2. Sensitivity Analysis

4. Discussion

4.1. Comparability of the Results

4.2. Inter-Class Confusion

4.3. Habitat Transitions

4.4. Dataset Advantages and Limitations

4.5. On the Method: Multiscale Terrain and Textural Analysis

4.6. Relative Importance of Texture and Terrain Features

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References


| Acquisition Dates | Platform | System | Operating Frequency (kHz) | Survey Altitude (m) | Processing Software | Output |
|---|---|---|---|---|---|---|
| 25–26 July 2012 | Autosub6000 | EdgeTech 2200-FS | 410 | 15 | Chesapeake SonarWiz 6 | 8-bit greyscale GeoTIFF, 0.15 m resolution |
| 10–12 August 2015 | Autosub6000 | EdgeTech 2200-M | 410 | 15 | Chesapeake SonarWiz 6 | 8-bit greyscale GeoTIFF, 0.15 m resolution |

| Acquisition Dates | Platform | System | Operating Frequency (kHz) | Survey Altitude (m) | Processing Software | Output |
|---|---|---|---|---|---|---|
| 25–26 July 2012 | Autosub6000 | Kongsberg EM2000 | 200 | 50 | CARIS HIPS and SIPS | 2 m grid |
| 10–12 August 2015 | Autosub6000 | Kongsberg EM2040 | 200 | 50 | IFREMER CARAIBES | 3 m grid |
| 10–12 August 2015 | RRS James Cook | Kongsberg EM710 | 70–100 | n/a | CARIS HIPS and SIPS | 2 m grid |

| Habitat Class | 2012 T | 2012 V | 2015 Day 1 T | 2015 Day 1 V | 2015 Day 2 T | 2015 Day 2 V |
|---|---|---|---|---|---|---|
| Sand | 216 | 215 | 80 | 82 | 87 | 87 |
| Coarse | 146 | 145 | 90 | 89 | 95 | 95 |
| Hard | 216 | 216 | 86 | 86 | 102 | 102 |
| Intermediate | 199 | 199 | 56 | 56 | 57 | 58 |
| Total | 777 | 775 | 312 | 313 | 341 | 342 |

T = training samples; V = validation samples.

| Texture Statistic | Description | Calculation Tool |
|---|---|---|
| Contrast | Contrast measures the magnitude of grey-level differences between neighbouring pixels within the window. | MATLAB functions graycomatrix() and graycoprops() |
| Correlation | Correlation measures the linear dependency of grey levels of neighbouring pixels. | MATLAB functions graycomatrix() and graycoprops() |
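The two statistics were computed with MATLAB's `graycomatrix()` and `graycoprops()`. For readers working outside MATLAB, here is a minimal NumPy sketch of the same quantities for a single moving-window position. It assumes a non-symmetric co-occurrence matrix (MATLAB's default), MATLAB's row/column offset convention for 0°, 45°, 90° and 135°, and a simple linear quantisation of 8-bit backscatter into 32 grey levels. The function names are hypothetical, and this is an illustration rather than the authors' processing code.

```python
import numpy as np

# Unit (row, col) offsets per orientation, following MATLAB's graycomatrix convention:
# 0 deg -> [0 D], 45 deg -> [-D D], 90 deg -> [-D 0], 135 deg -> [-D -D].
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}


def glcm(window, levels=32, distance=5, angle=0):
    """Normalised, non-symmetric grey-level co-occurrence matrix of an 8-bit window."""
    q = (np.asarray(window).astype(np.int64) * levels) // 256  # 0..255 -> 0..levels-1
    dr, dc = OFFSETS[angle]
    dr, dc = dr * distance, dc * distance
    rows, cols = q.shape
    # Keep only reference pixels whose offset neighbour also lies inside the window.
    r0, r1 = max(0, -dr), rows - max(0, dr)
    c0, c1 = max(0, -dc), cols - max(0, dc)
    ref = q[r0:r1, c0:c1]
    nbr = q[r0 + dr:r1 + dr, c0 + dc:c1 + dc]
    counts = np.zeros((levels, levels), dtype=float)
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1.0)  # tally (reference, neighbour) pairs
    return counts / counts.sum()


def contrast(p):
    """Sum of squared grey-level differences weighted by co-occurrence probability."""
    i, j = np.indices(p.shape)
    return float(np.sum((i - j) ** 2 * p))


def correlation(p):
    """Linear dependency between reference and neighbour grey levels (NaN for flat windows)."""
    i, j = np.indices(p.shape)
    mu_i, mu_j = np.sum(i * p), np.sum(j * p)
    sd_i = np.sqrt(np.sum((i - mu_i) ** 2 * p))
    sd_j = np.sqrt(np.sum((j - mu_j) ** 2 * p))
    return float(np.sum((i - mu_i) * (j - mu_j) * p) / (sd_i * sd_j))
```

In a full workflow these functions would be evaluated at every pixel for each window size, distance and orientation, producing one raster layer per combination.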

| Grey Levels | Window Size (pixels) | Inter-pixel Distances (pixels) | Orientations | Statistical Descriptors | Total Features |
|---|---|---|---|---|---|
| 32 | 11 | 5 | 0°, 45°, 90°, 135° | Contrast, Correlation | 8 |
| 32 | 21 | 5, 10 | 0°, 45°, 90°, 135° | Contrast, Correlation | 16 |
| 32 | 51 | 5, 10, 15, 20, 25 | 0°, 45°, 90°, 135° | Contrast, Correlation | 40 |
| Total | | | | | 64 |
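Each feature count is distances × orientations × statistics at that window size: 1 × 4 × 2 = 8, 2 × 4 × 2 = 16 and 5 × 4 × 2 = 40. The sketch below enumerates the resulting 64 GLCM layers. It assumes that the distances are multiples of 5 pixels up to half the window width. This is an observation from the table, not a rule stated in the source. The layer-naming scheme is hypothetical.

```python
from itertools import product

WINDOWS = [11, 21, 51]                   # moving-window sizes (pixels)
ORIENTATIONS = [0, 45, 90, 135]          # degrees
STATISTICS = ["Contrast", "Correlation"]


def distances(window, step=5):
    """Inter-pixel distances for a window: multiples of `step` up to half its width.

    This rule is inferred from the table, not stated in the source."""
    return list(range(step, (window - 1) // 2 + 1, step))


def glcm_feature_names():
    """One hypothetical layer name per GLCM feature, e.g. 'Contrast_w11_d5_a0'."""
    names = []
    for window in WINDOWS:
        for d, a, s in product(distances(window), ORIENTATIONS, STATISTICS):
            names.append(f"{s}_w{window}_d{d}_a{a}")
    return names


print(len(glcm_feature_names()))  # 64
```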

| 2012 Component | 2015 Component | Interpretation |
|---|---|---|
| Terrain_PC1 | Terrain_PC1 | Eastness |
| Terrain_PC2 | Terrain_PC2 | Slope |
| Terrain_PC3 | Terrain_PC3 | Northness |
| Texture_PC1 | Texture_PC1 | GLCM Contrast at 51 × 51 pixel windows |
| Texture_PC2 | Texture_PC2 | GLCM Correlation at 51 × 51 pixel windows |
| Texture_PC3 | Texture_PC3 | Gabor and GLCM features calculated at 0° orientation |
| Texture_PC5 | Texture_PC4 | Gabor features of short wavelengths (up to 91 pixels) |
| Texture_PC4 | - | Gabor features of long wavelengths (362, 724 and 1448 pixels) |
| - | Texture_PC5 | GLCM Correlation at 135° orientation |
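The component names suggest that PCA was run separately on the terrain and texture feature stacks for each survey, and that each component was interpreted from its loadings. The sketch below is a minimal SVD-based version of that step. It assumes each feature raster is flattened into one column and z-scored beforehand; the standardisation is an assumption, not something the source states. Because PCA is fitted independently to each dataset, component order can differ between years (as with Texture_PC4 and Texture_PC5 here), so the loadings need to be inspected per survey.

```python
import numpy as np


def pca(features, n_components):
    """PCA of an (n_pixels, n_features) array via SVD of the standardised data.

    Returns (scores, loadings, explained_variance_ratio)."""
    x = np.asarray(features, dtype=float)
    x = (x - x.mean(axis=0)) / x.std(axis=0)            # z-score each feature layer
    u, s, vt = np.linalg.svd(x, full_matrices=False)
    scores = u[:, :n_components] * s[:n_components]      # component value for each pixel
    loadings = vt[:n_components].T                        # weight of each feature per component
    ratio = s ** 2 / np.sum(s ** 2)                       # share of total variance per component
    return scores, loadings, ratio[:n_components]
```

Inspecting the largest absolute values in each column of `loadings` is what allows labels such as "Eastness" or "GLCM Contrast at 51 × 51 pixel windows" to be attached to a component.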

| Model | 2015 Day 1 Overall Accuracy | 2015 Day 1 Kappa Coeff. | 2015 Day 1 BER | 2015 Day 2 Overall Accuracy | 2015 Day 2 Kappa Coeff. | 2015 Day 2 BER | Day 1 vs. Day 2 Agreement | Day 1 vs. Day 2 Kappa Coeff. |
|---|---|---|---|---|---|---|---|---|
| RF | 59.40% | 0.46 | 0.40 | 63.70% | 0.51 | 0.36 | 60.3% | 0.46 |
| KNN | 53.70% | 0.38 | 0.47 | 52.30% | 0.35 | 0.48 | 47.2% | 0.27 |
| k-means | 45.50% | 0.27 * | 0.54 | 31.70% | 0.11 * | 0.64 | 53.4% | 0.39 |
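The last two columns compare the Day 1 and Day 2 maps with each other rather than with groundtruth, which is what makes them a measure of repeatability. The sketch below computes that kind of pixel-by-pixel comparison. It assumes both maps sit on a common grid, with classes coded 0 to k−1 and −1 as a hypothetical nodata value. Agreement is returned as a fraction, so 0.603 would correspond to 60.3%.

```python
import numpy as np


def map_agreement(map_a, map_b, n_classes, nodata=-1):
    """Percent agreement (as a fraction) and Cohen's kappa between two co-registered class maps."""
    a = np.asarray(map_a).ravel()
    b = np.asarray(map_b).ravel()
    valid = (a != nodata) & (b != nodata)          # compare only pixels classified in both maps
    a, b = a[valid], b[valid]
    # Cross-tabulate: cell (i, j) counts pixels labelled i in map A and j in map B.
    counts = np.bincount(a * n_classes + b, minlength=n_classes ** 2)
    p = counts.reshape(n_classes, n_classes) / counts.sum()
    observed = float(np.trace(p))                                   # proportion of matching pixels
    expected = float(np.sum(p.sum(axis=1) * p.sum(axis=0)))         # chance agreement
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa
```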

| Predicted Class (rows) vs. Groundtruth Class (columns) | Coarse | Hard | Intermediate | Sand | Total | User’s Accuracy |
|---|---|---|---|---|---|---|
| Coarse | 64 | 0 | 27 | 11 | 102 | 62.7% |
| Hard | 3 | 36 | 9 | 5 | 53 | 67.9% |
| Intermediate | 14 | 18 | 56 | 9 | 97 | 57.7% |
| Sand | 14 | 4 | 10 | 62 | 90 | 68.9% |
| Total | 95 | 58 | 102 | 87 | 342 | |
| Producer’s Accuracy | 67.4% | 62.1% | 54.9% | 71.3% | | |

Overall Accuracy = 63.7%; NIR (no-information rate) = 29.8%; Kappa Coeff. = 0.51; BER (balanced error rate) = 0.36.
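The summary statistics can be recomputed directly from the confusion matrix, as in the sketch below. It assumes predicted classes in rows and groundtruth in columns, as laid out in the table. BER is taken as one minus the mean producer's accuracy, and NIR as the proportion of the largest groundtruth class. These two definitions are inferred because they reproduce the reported values (BER = 0.36, NIR = 29.8%); the source does not state them.

```python
import numpy as np


def accuracy_metrics(cm):
    """Accuracy statistics for a confusion matrix with predicted classes in rows
    and groundtruth (reference) classes in columns."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    diag = np.diag(cm)
    row, col = cm.sum(axis=1), cm.sum(axis=0)
    oa = diag.sum() / n                  # overall accuracy
    users = diag / row                   # user's accuracy, per predicted class
    producers = diag / col               # producer's accuracy, per groundtruth class
    pe = np.sum(row * col) / n ** 2      # agreement expected by chance
    kappa = (oa - pe) / (1 - pe)         # Cohen's kappa
    ber = 1 - producers.mean()           # balanced error rate
    nir = col.max() / n                  # no-information rate: share of the largest class
    return {"OA": oa, "UA": users, "PA": producers, "kappa": kappa, "BER": ber, "NIR": nir}


m = accuracy_metrics([[64, 0, 27, 11], [3, 36, 9, 5], [14, 18, 56, 9], [14, 4, 10, 62]])
print(round(m["OA"], 3), round(m["kappa"], 2), round(m["BER"], 2))  # 0.637 0.51 0.36
```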

| Predicted Class (rows) vs. Groundtruth Class (columns) | Coarse | Hard | Intermediate | Sand | Total | User’s Accuracy |
|---|---|---|---|---|---|---|
| Coarse | 76 | 4 | 35 | 7 | 122 | 62.3% |
| Hard | 7 | 154 | 15 | 3 | 179 | 86.0% |
| Intermediate | 38 | 34 | 140 | 23 | 235 | 59.6% |
| Sand | 24 | 7 | 25 | 182 | 238 | 76.5% |
| Total | 145 | 199 | 215 | 215 | 774 | |
| Producer’s Accuracy | 52.4% | 77.4% | 65.1% | 84.7% | | |

Overall Accuracy = 71.3%; NIR (no-information rate) = 27.8%; Kappa Coeff. = 0.61; BER (balanced error rate) = 0.30.

| 2015 Class (rows) vs. 2012 Class (columns), % | Coarse | Hard | Intermediate | Sand | Total | User’s Accuracy |
|---|---|---|---|---|---|---|
| Coarse | 7.6 | 1.0 | 11.4 | 5.3 | 25.3 | 30.0% |
| Hard | 0.3 | 10.8 | 1.9 | 0.7 | 13.7 | 78.8% |
| Intermediate | 1.4 | 10.9 | 12.4 | 7.3 | 32.0 | 38.8% |
| Sand | 6.4 | 1.7 | 2.9 | 18.0 | 29.0 | 62.1% |
| Total | 15.7 | 24.4 | 28.6 | 31.3 | 100.0 | |
| Producer’s Accuracy | 48.4% | 44.3% | 43.4% | 57.5% | | |

Persistence = 48.8%; BER = 0.52; Kappa Coeff. = 0.31.

| Habitat Class | Gain (%) | Loss (%) | Total Change (%) | Swap (%) | Net Change (%) |
|---|---|---|---|---|---|
| Coarse | 17.7 | 8.1 | 25.8 | 16.2 | 9.6 |
| Hard | 2.9 | 13.6 | 16.5 | 5.8 | −10.7 |
| Intermediate | 19.6 | 16.2 | 35.8 | 32.4 | 3.4 |
| Sand | 11.0 | 13.3 | 24.3 | 22.0 | −2.3 |
| Total | | | 51.2 | 38.2 | 13.0 |
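Every value in the gain/loss table can be derived from the 2012–2015 change matrix. The sketch below uses a gain/loss/swap/net decomposition in the style of Pontius and co-workers. It assumes rows hold the 2015 classes, columns hold the 2012 classes, and entries are percentages of mapped area that sum to 100. The per-class formulas and the halving of the landscape swap and net totals are inferred because they reproduce the tabulated values; the source does not state them.

```python
import numpy as np


def change_components(change):
    """Gain, loss, swap and net change per class from a square change matrix.

    Rows = later map (2015), columns = earlier map (2012); entries in % of area."""
    m = np.asarray(change, dtype=float)
    stable = np.diag(m)                      # area keeping the same class in both years
    gain = m.sum(axis=1) - stable            # area a class occupies in 2015 but did not in 2012
    loss = m.sum(axis=0) - stable            # area a class occupied in 2012 but lost by 2015
    swap = 2 * np.minimum(gain, loss)        # change that relocates a class without altering its extent
    net = gain - loss                        # signed change in class extent
    return {
        "gain": gain, "loss": loss, "total": gain + loss, "swap": swap, "net": net,
        "persistence": stable.sum(),
        "total_change": gain.sum(),          # equals 100 - persistence when the matrix sums to 100
        "total_swap": swap.sum() / 2,        # each swapped area is counted for two classes
        "total_net": np.abs(net).sum() / 2,  # each net shift is one class's gain and another's loss
    }
```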

| Feature Set | 2012 Overall Accuracy | 2012 Kappa | 2015 Overall Accuracy | 2015 Kappa |
|---|---|---|---|---|
| All | 72.0% | 0.62 | 67.8% | 0.56 |
| No Terrain PCs | 70.8% | 0.61 | 66.1% | 0.54 |
| No Gabor PCs | 72.7% | 0.63 | 65.8% | 0.54 |
| No GLCM PCs | 65.9% | 0.54 * | 60.5% | 0.46 * |
| No spatial context | 68.7% | 0.58 * | 64.6% | 0.52 * |
| No Textural PCs | 69.4% | 0.59 | 58.8% | 0.44 * |
| Only Bathymetry and Backscatter | 46.3% | 0.28 * | 41.8% | 0.21 * |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zelada Leon, A.; Huvenne, V.A.I.; Benoist, N.M.A.; Ferguson, M.; Bett, B.J.; Wynn, R.B. Assessing the Repeatability of Automated Seafloor Classification Algorithms, with Application in Marine Protected Area Monitoring. Remote Sens. 2020, 12, 1572. https://doi.org/10.3390/rs12101572
