UAV-Based Multispectral Phenotyping for Disease Resistance to Accelerate Crop Improvement under Changing Climate Conditions

Abstract

1. Introduction

2. Materials and Methods

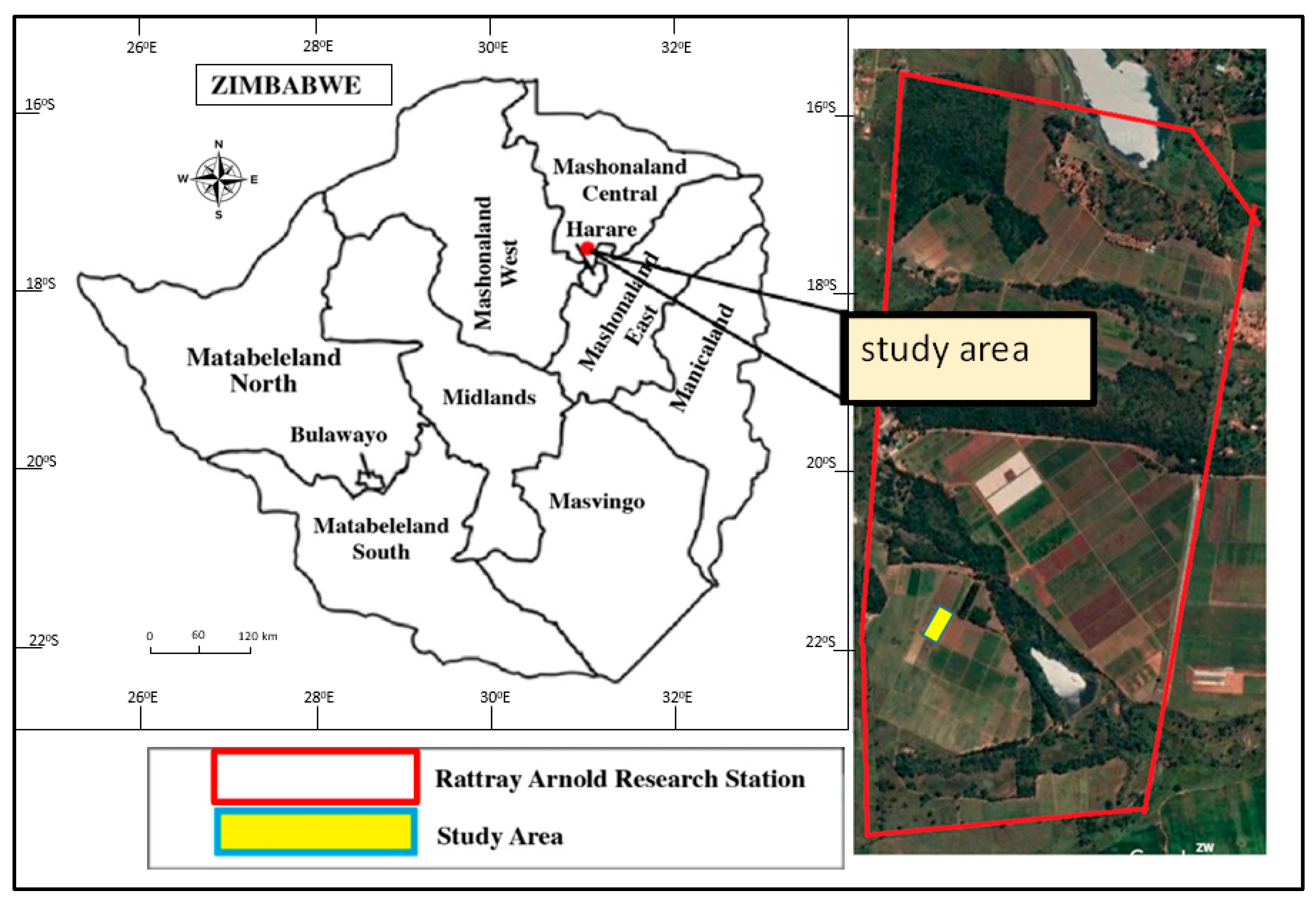

2.1. Study Area

2.2. Maize Varieties, Experimental Design, and Ground Truth Data

2.3. UAV Platform, Imagery Acquisition, and Processing

2.3.1. The UAV Platform

2.3.2. Image Acquisition and Processing

- (a) Initial processing: identification, extraction, and matching of keypoints; camera model optimization, i.e., calibration of the camera's internal parameters (focal length) and external parameters (orientation); and geolocation using GPS and Ground Control Points (GCPs).

- (b) Point cloud and mesh: building on the automatically generated tie points, this step densified the point cloud and created a 3D textured mesh.

- (c) Digital Surface Model (DSM) creation, used to generate orthomosaics and vegetation index maps. Orthomosaics were produced by orthorectification, which removes perspective distortions from the images, so that each pixel of the resulting vegetation index maps carries the true reflectance-based value for the corresponding location in the area of interest.
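Step (c) ends with per-pixel vegetation index maps computed from band reflectance. The Python sketch below illustrates that per-pixel arithmetic using only the standard library. It is an illustration under stated assumptions, not the processing software's implementation. It assumes co-registered NIR, red, and green reflectance grids (values 0-1) stored as nested lists, and the function names are hypothetical. The formulas are the standard published definitions of NDVI (Rouse et al., 1974), GNDVI (Gitelson et al., 1996), OSAVI (Rondeaux et al., 1996), and EVI2 (Jiang et al., 2008). This section does not state which indices the study actually computed.

```python
import math
from typing import Callable, List

Grid = List[List[float]]  # rows x columns of surface reflectance (0-1), assumed co-registered


def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index (Rouse et al., 1974)."""
    return (nir - red) / (nir + red)


def gndvi(nir: float, green: float) -> float:
    """Green NDVI (Gitelson et al., 1996): the green band replaces red."""
    return (nir - green) / (nir + green)


def osavi(nir: float, red: float) -> float:
    """Optimized Soil-Adjusted Vegetation Index (Rondeaux et al., 1996), soil term 0.16."""
    return (nir - red) / (nir + red + 0.16)


def evi2(nir: float, red: float) -> float:
    """Two-band Enhanced Vegetation Index without a blue band (Jiang et al., 2008)."""
    return 2.5 * (nir - red) / (nir + 2.4 * red + 1.0)


def index_map(index: Callable[[float, float], float], band_a: Grid, band_b: Grid) -> Grid:
    """Apply a two-band index pixel by pixel (hypothetical helper).

    Pixels where the index is undefined (zero denominator) are set to NaN.
    """
    if len(band_a) != len(band_b) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(band_a, band_b)
    ):
        raise ValueError("bands must be co-registered grids of equal shape")
    result: Grid = []
    for row_a, row_b in zip(band_a, band_b):
        row: List[float] = []
        for a, b in zip(row_a, row_b):
            try:
                row.append(index(a, b))
            except ZeroDivisionError:
                row.append(math.nan)  # e.g. NDVI where NIR + red == 0
        result.append(row)
    return result
```

For example, `index_map(ndvi, nir, red)` returns an NDVI grid with the same shape as the inputs, and `index_map(gndvi, nir, green)` does the same for GNDVI. On full orthomosaics, an array or raster library would normally perform this step in vectorized form. The loop here only makes the per-pixel logic explicit.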

2.3.3. Reflectance Data Extraction

2.3.4. Vegetation Indices

2.4. Varietal Classification

2.4.1. Variable Optimization

2.4.2. Accuracy Assessment

3. Results

3.1. Varietal Response to MSV

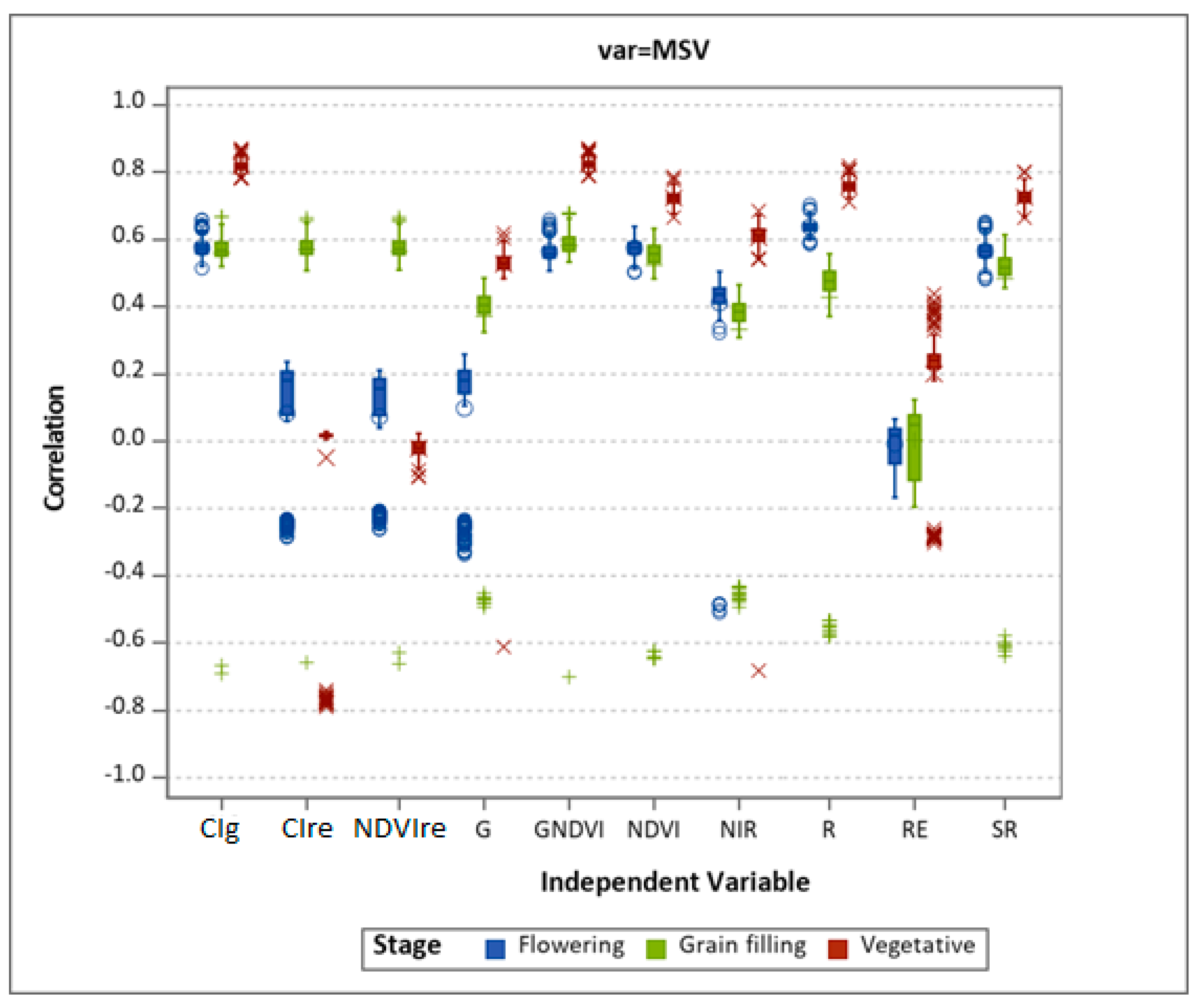

3.2. Comparison of UAV-Derived and Ground Truth Data

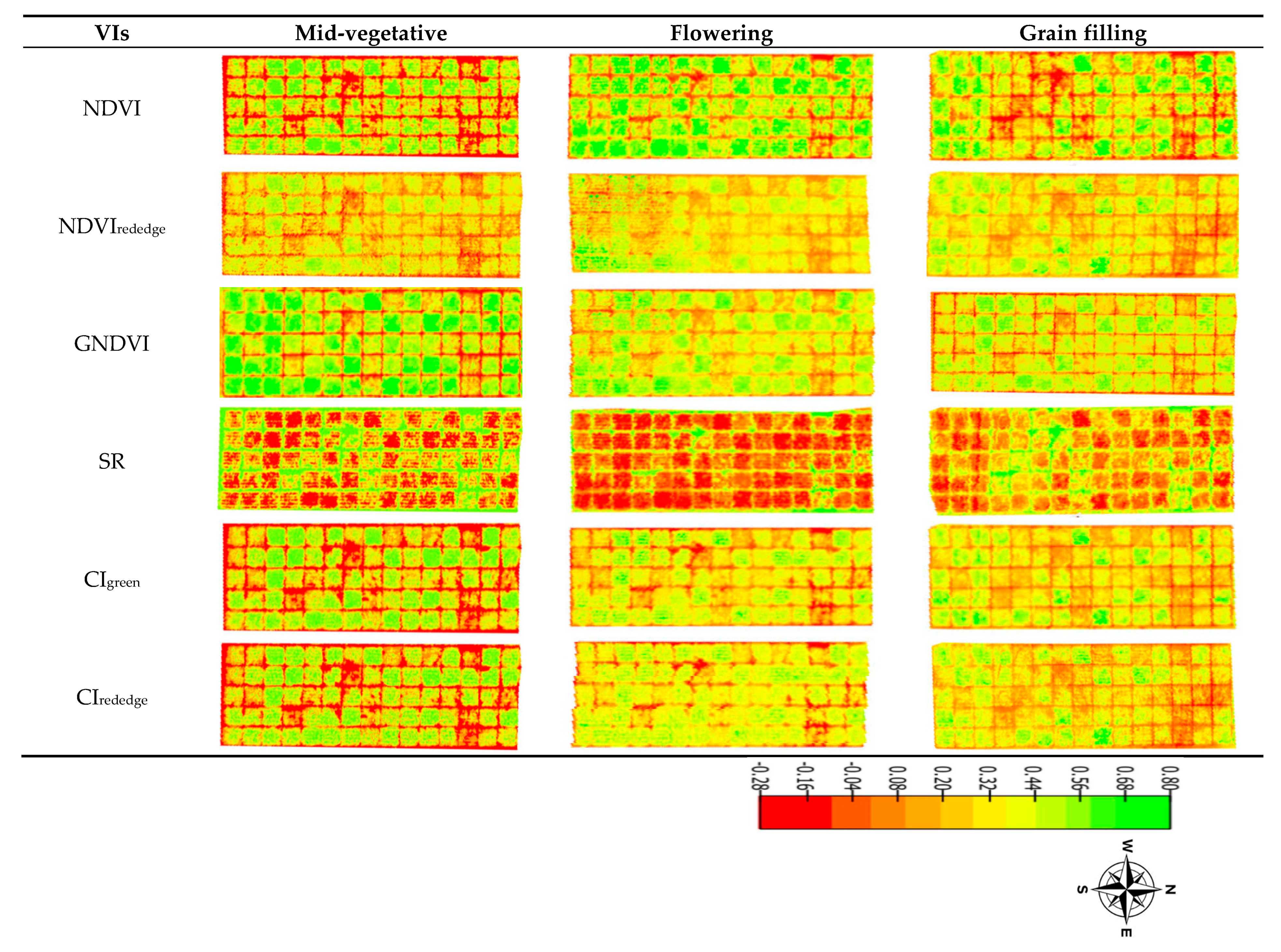

3.3. Phenology-Based Classification Using UAV-Derived Data

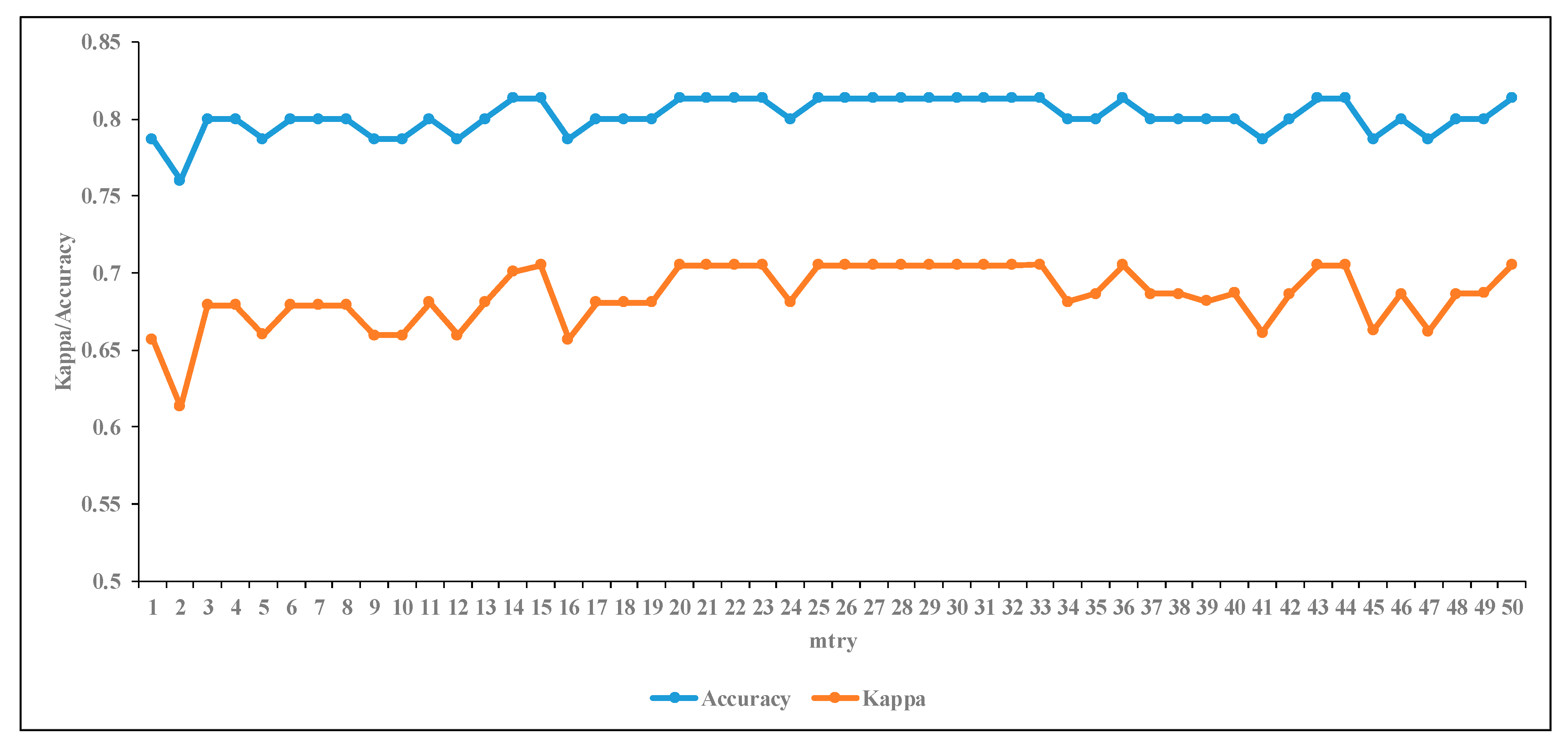

3.3.1. The Effect of RF Input Parameter on Classification

3.3.2. Classification with All Variables

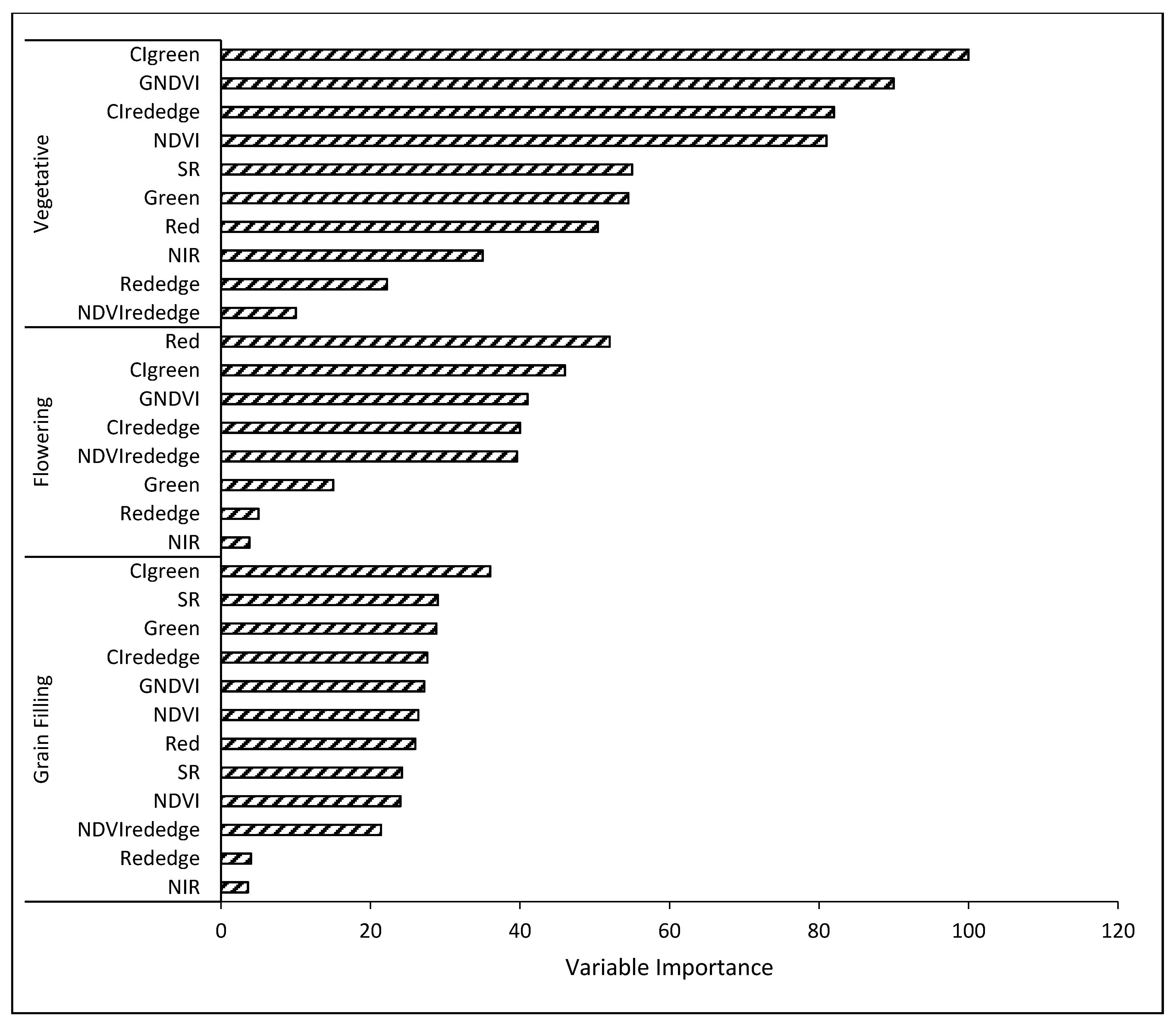

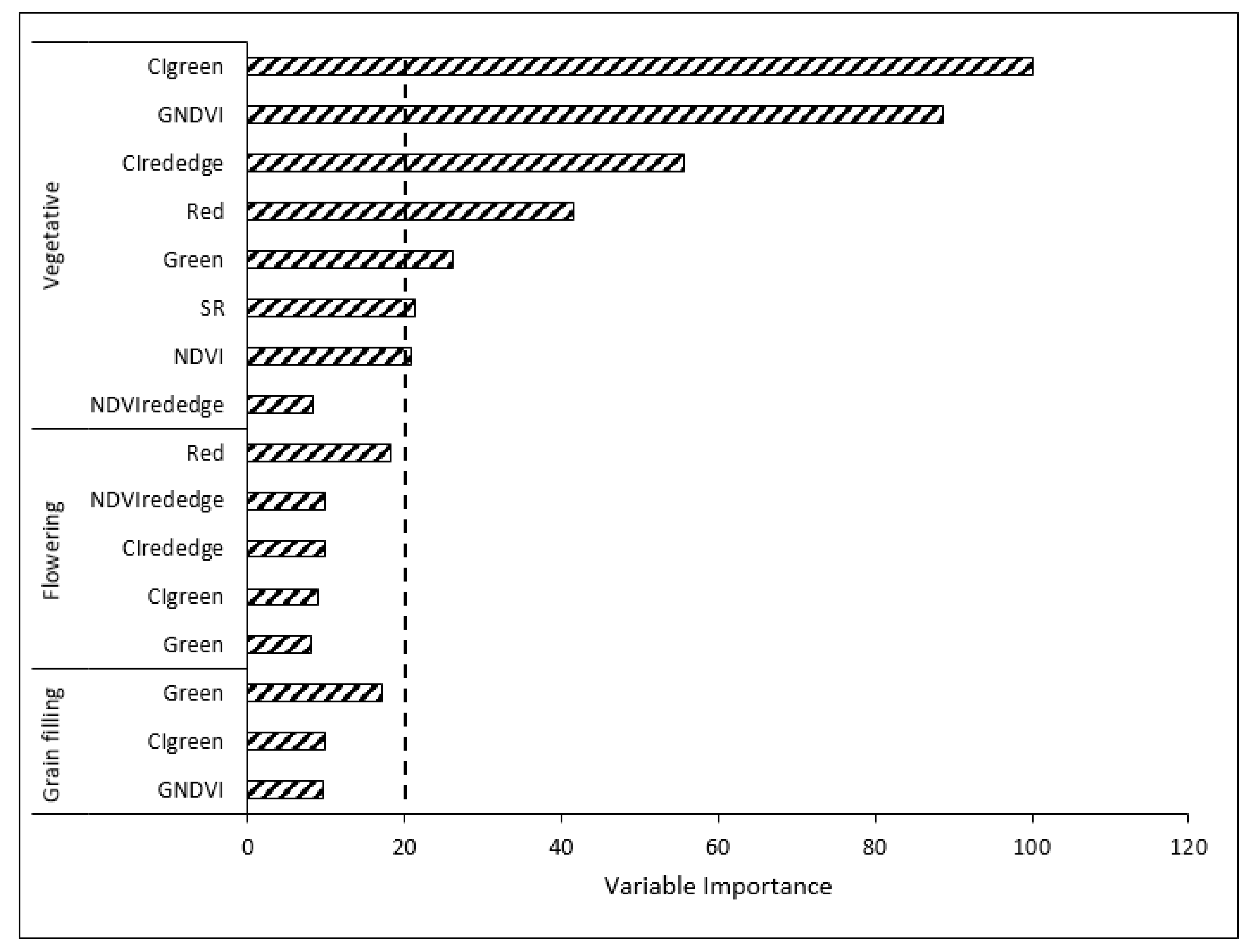

3.3.3. Variable Optimization

3.3.4. Classification Using Optimized Variables

4. Discussion

4.1. Comparison of UAV-Derived Data and Ground Truth Measurements

4.2. RF Classification Performance Using Spectral Bands and VIs

4.3. Variable Optimization Effect on RF Algorithm Classification

4.4. The Utility of UAV-Based Multispectral Data in High-Throughput Phenotyping

4.5. Leveraging High-Throughput Image-Based Phenotyping Technology to Fast-Track Crop Improvement under Changing Climate Conditions

5. Conclusions

- UAV-based remotely sensed data classified maize varieties with acceptable accuracy, offering a step change in data availability and turnaround time for varietal analysis. This enables rapid and robust high-throughput plant phenotyping in maize breeding and variety evaluation programs, helping them address the vagaries of climate change and contribute to global food security. Specifically, the study demonstrated that VIs measured from UAV imagery at the vegetative stage were the most important variables for classifying maize varieties under artificial MSV inoculation.

- UAV-derived remotely sensed data correlated well with ground truth measurements, confirming the utility of a UAV approach for field-based high-throughput phenotyping in breeding programs, where final varietal selection must rest on extensive screening of many genotypes. This can reduce the selection bottlenecks caused by manual phenotyping and provides decision-support tools for large-scale varietal screening.

- Variable optimization improved classification accuracy compared with using all variables without optimization. On our data, the random forest (RF) classifier proved robust and was able to quantify the importance of each variable and rank the variables accordingly.

- Image-based high-throughput phenotyping can relieve the phenotyping bottlenecks that breeders commonly face when evaluating large populations of genotypes, thereby accelerating crop breeding and selection for the multiple stresses associated with climate change.
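The third conclusion refers to optimizing the RF input variables using their importance rankings. One common way to do this is importance-ranked backward elimination in the spirit of Díaz-Uriarte and Alvarez (2006). The procedure fits the model, drops the least important fraction of variables, refits, and finally keeps the smallest subset whose error stays close to the best observed. The Python sketch below illustrates this procedure under stated assumptions. It is not the study's documented method. The `evaluate` callback is hypothetical: in practice it would fit a random forest (for example with the R randomForest package of Liaw and Wiener) on the given variables and return its out-of-bag error together with a per-variable importance score. The drop fraction of 0.2 and the tolerance rule are illustrative defaults, not values taken from the study.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical callback (not a real library API): fit a classifier such as a
# random forest on `variables` and return (out-of-bag error, {name: importance}).
Evaluate = Callable[[Sequence[str]], Tuple[float, Dict[str, float]]]
Path = List[Tuple[List[str], float]]


def backward_eliminate(
    variables: Sequence[str],
    evaluate: Evaluate,
    drop_fraction: float = 0.2,  # share of variables removed per round (assumed)
    min_vars: int = 2,
    tolerance: float = 0.0,  # accept subsets whose error is within this of the best
) -> Tuple[List[str], Path]:
    """Importance-ranked backward elimination.

    Each round refits on the current variables, records the error, and drops
    the least important `drop_fraction` of them (at least one). Returns the
    smallest recorded subset whose error is <= best error + tolerance, plus
    the full elimination path as (subset, error) pairs.
    """
    if not 0.0 < drop_fraction < 1.0:
        raise ValueError("drop_fraction must lie strictly between 0 and 1")
    current = list(variables)
    path: Path = []
    while True:
        error, importance = evaluate(current)
        path.append((list(current), error))
        if len(current) <= min_vars:
            break
        # Remove at least one variable, but never go below min_vars.
        n_drop = max(1, int(len(current) * drop_fraction))
        n_drop = min(n_drop, len(current) - min_vars)
        ranked = sorted(current, key=lambda v: importance[v], reverse=True)
        current = ranked[: len(current) - n_drop]
    best = min(err for _, err in path)
    acceptable = [(subset, err) for subset, err in path if err <= best + tolerance]
    chosen = min(acceptable, key=lambda item: len(item[0]))[0]
    return chosen, path
```

With `tolerance=0.0`, the function returns the subset with the lowest recorded error. Raising `tolerance` (for example, to one standard error of the out-of-bag estimate) favors smaller variable sets at a small cost in error. Returning the full path makes it possible to plot error against the number of retained variables.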

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Alston, J.M.; Beddow, J.M.; Pardey, P.G. Agricultural Research, Productivity, and Food Prices in the Long Run. Science 2009, 325, 1209–1210.

- Godfray, H.C.J.; Beddington, J.R.; Crute, I.R.; Haddad, L.; Lawrence, D.; Muir, J.F.; Pretty, J.; Robinson, S.; Thomas, S.M.; Toulmin, C. Food Security: The Challenge of Feeding 9 Billion People. Science 2010, 327, 812–818.

- Intergovernmental Panel on Climate Change. Climate change: Synthesis report. In Contribution of Working Groups I, II and III to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change; Pachauri, R.K., Meyer, L.A., Eds.; IPCC: Geneva, Switzerland, 2014.

- Velásquez, A.C.; Castroverde, C.D.M.; He, S.Y. Plant-Pathogen Warfare under Changing Climate Conditions. Curr. Biol. 2018, 28, R619–R634.

- Coakley, S.M.; Scherm, H.; Chakraborty, S. Climate Change and Plant Disease Management. Annu. Rev. Phytopathol. 1999, 37, 399–426.

- Garrett, K.A.; Dendy, S.P.; Frank, E.E.; Rouse, M.N.; Travers, S.E. Climate Change Effects on Plant Disease: Genomes to Ecosystems. Annu. Rev. Phytopathol. 2006, 44, 489–509.

- Luo, Y. The Effects of Global Temperature Change on Rice Leaf Blast Epidemics: A Simulation Study in Three Agroecological Zones. Agric. Ecosyst. Environ. 1998, 68, 187–196.

- Garrett, K.A.; Forbes, G.; Savary, S.; Skelsey, P.; Sparks, A.H.; Valdivia, C.; Van Bruggen, A.H.C.; Willocquet, L.; Djurle, A.; Duveiller, E.; et al. Complexity in climate-change impacts: An analytical framework for effects mediated by plant disease. Plant Pathol. 2011, 60, 15–30.

- Rose, D.J.W. Epidemiology of Maize Streak Disease. Annu. Rev. Entomol. 1978, 23, 259–282.

- IPCC. Fourth Assessment Report: Synthesis. 2007. Available online: http://www.ipcc.ch/pdf/assessment-report/ar4/syr/ar4_syr.pdf (accessed on 28 July 2020).

- Kloppers, F. Maize Diseases: Reflection on the 2004/2005 Season. 2005. Available online: http://saspp.org/index2.php?option=com_content&do_pdf=1&id=2 (accessed on 28 July 2020).

- Stanley, J.; Boulton, M.I.; Davies, J.W. Geminiviridae. In Embryonic Encyclopedia of Life Sciences; Nature Publishing Group: London, UK, 1999.

- Efron, Y.; Kim, S.K.; Fajemisin, J.M.; Mareck, J.H.; Tang, C.Y.; Dabrowski, Z.T.; Rossel, H.W.; Thottappilly, G.; Buddenhagen, I.W. Breeding for Resistance to Maize Streak Virus: A Multidisciplinary Team Approach. Plant Breed. 1989, 103, 1–36.

- Rossel, H.W.; Thottappilly, G. Virus Diseases of Important Food Crops in Tropical Africa; International Institute of Tropical Agriculture (IITA): Ibadan, Nigeria, 1985; p. 61.

- Njuguna, J.A.M.; Kendera, J.G.; Muriithi, L.M.M.; Songa, S.; Othiambo, R.B. Overview of maize diseases in Kenya. In Maize Review Workshop in Kenya; Kenya Agricultural Research Institute: Nairobi, Kenya, 1990; pp. 52–62.

- Dabrowski, Z.T. Cicadulina Ghaurii (Hem., Euscelidae): Distribution, Biology and Maize Streak Virus (MSV) Transmission. J. Appl. Entomol. 1987, 103, 489–496.

- Phillips, R.L. Mobilizing Science to Break Yield Barriers. Crop Sci. 2010, 50, S99–S108.

- Poland, J. Breeding-Assisted Genomics. Curr. Opin. Plant Biol. 2015, 24, 119–124.

- Bilder, R.M.; Sabb, F.W.; Cannon, T.D.; London, E.D.; Jentsch, J.D.; Parker, D.S.; Poldrack, R.A.; Evans, C.; Freimer, N.B. Phenomics: The Systematic Study of Phenotypes on a Genome-Wide Scale. Neuroscience 2009, 164, 30–42.

- Araus, J.L.; Cairns, J.E. Field High-Throughput Phenotyping: The New Crop Breeding Frontier. Trends Plant Sci. 2014, 19, 52–61.

- Ghanem, M.E.; Marrou, H.; Sinclair, T.R. Physiological Phenotyping of Plants for Crop Improvement. Trends Plant Sci. 2015, 20, 139–144.

- Sankaran, S.; Khot, L.R.; Espinoza, C.Z.; Jarolmasjed, S.; Sathuvalli, V.; VanDeMark, G.J.; Miklas, P.N.; Carter, A.H.; Pumphrey, M.; Knowles, N.R.; et al. Low-Altitude, High-Resolution Aerial Imaging Systems for Row and Field Crop Phenotyping: A Review. Eur. J. Agron. 2015, 70, 112–123.

- Tardieu, F.; Cabrera-Bosquet, L.; Pridmore, T.; Bennett, M.J. Plant Phenomics, from Sensors to Knowledge. Curr. Biol. 2017, 27, R770–R783.

- Hickey, L.; Hafeez, A.N.; Robinson, H.M.; Jackson, S.A.; Leal-Bertioli, S.C.M.; Tester, M.; Gao, C.; Godwin, I.D.; Hayes, B.J.; Wulff, B.B.H. Breeding Crops to Feed 10 Billion. Nat. Biotechnol. 2019, 37, 744–754.

- Fahlgren, N.; Gehan, M.A.; Baxter, I. Lights, Camera, Action: High-Throughput Plant Phenotyping Is Ready for a Close-Up. Curr. Opin. Plant Biol. 2015, 24, 93–99.

- White, J.; Andrade-Sanchez, P.; Gore, M.A.; Bronson, K.F.; Coffelt, T.A.; Conley, M.M.; Feldmann, K.A.; French, A.; Heun, J.T.; Hunsaker, D.; et al. Field-Based Phenomics for Plant Genetics Research. Field Crops Res. 2012, 133, 101–112.

- Zaman-Allah, M.; Vergara, O.; Araus, J.L.; Tarekegne, A.; Magorokosho, C.; Zarco-Tejada, P.J.; Hornero, A.; Albà, A.H.; Das, B.; Craufurd, P.Q.; et al. Unmanned Aerial Platform-Based Multi-Spectral Imaging for Field Phenotyping of Maize. Plant Methods 2015, 11, 1–10.

- Furbank, R.T.; Tester, M. Phenomics - Technologies to Relieve the Phenotyping Bottleneck. Trends Plant Sci. 2011, 16, 635–644.

- Cobb, J.N.; Declerck, G.; Greenberg, A.J.; Clark, R.; McCouch, S.R.M. Next-Generation Phenotyping: Requirements and Strategies for Enhancing Our Understanding of Genotype-Phenotype Relationships and Its Relevance to Crop Improvement. Theor. Appl. Genet. 2013, 126, 867–887.

- Dhondt, S.; Wuyts, N.; Inzé, D. Cell to Whole-Plant Phenotyping: The Best Is Yet to Come. Trends Plant Sci. 2013, 18, 428–439.

- Fiorani, F.; Schurr, U. Future Scenarios for Plant Phenotyping. Annu. Rev. Plant Biol. 2013, 64, 267–291.

- Anthony, D.; Detweiler, C. UAV Localization in Row Crops. J. Field Robot. 2017, 34, 1275–1296.

- Deery, D.M.; Jimenez-Berni, J.A.; Jones, H.G.; Sirault, X.R.R.; Furbank, R.T. Proximal Remote Sensing Buggies and Potential Applications for Field-Based Phenotyping. Agronomy 2014, 4, 349–379.

- Prashar, A.; Jones, H.G. Infra-Red Thermography as a High-Throughput Tool for Field Phenotyping. Agronomy 2014, 4, 397–417.

- Mahlein, A.-K. Plant Disease Detection by Imaging Sensors - Parallels and Specific Demands for Precision Agriculture and Plant Phenotyping. Plant Dis. 2016, 100, 241–251.

- Báez-González, A.D.; Chen, P.-Y.; Tiscareño-López, M.; Srinivasan, R. Using Satellite and Field Data with Crop Growth Modeling to Monitor and Estimate Corn Yield in Mexico. Crop Sci. 2002, 42, 1943–1949.

- Battude, M.; Al Bitar, A.; Morin, D.; Cros, J.; Huc, M.; Sicre, C.M.; Le Dantec, V.; Demarez, V. Estimating Maize Biomass and Yield Over Large Areas Using High Spatial and Temporal Resolution Sentinel-2 Like Remote Sensing Data. Remote Sens. Environ. 2016, 184, 668–681.

- Hoffer, N.V.; Coopmans, C.; Jensen, A.M.; Chen, Y.Q. A Survey and Categorization of Small Low-Cost Unmanned Aerial Vehicle System Identification. J. Intell. Robot. Syst. 2013, 74, 129–145.

- Jimenez-Berni, J.A.; Zarco-Tejada, P.J.; Suarez, L.; Fereres, E. Thermal and Narrowband Multispectral Remote Sensing for Vegetation Monitoring From an Unmanned Aerial Vehicle. IEEE Trans. Geosci. Remote Sens. 2009, 47, 722–738.

- Lelong, C.; Burger, P.; Jubelin, G.; Roux, B.; Labbé, S.; Baret, F. Assessment of Unmanned Aerial Vehicles Imagery for Quantitative Monitoring of Wheat Crop in Small Plots. Sensors 2008, 8, 3557–3585.

- Jin, X.; Liu, S.; Baret, F.; Hemerlé, M.; Comar, A. Estimates of plant density of wheat crops at emergence from very low altitude UAV imagery. Remote Sens. Environ. 2017, 198, 105–114.

- Maimaitijiang, M.; Ghulam, A.; Sagan, V.; Hartling, S.; Maimaitiyiming, M.; Peterson, K.; Shavers, E.; Fishman, J.; Peterson, J.; Kadam, S.; et al. Unmanned Aerial System (UAS)-based phenotyping of soybean using multi-sensor data fusion and extreme learning machine. ISPRS J. Photogramm. Remote Sens. 2017, 134, 43–58.

- Zhou, X.; Zheng, H.; Xu, X.; He, J.; Ge, X.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.; Tian, Y. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 246–255.

- Yue, J.; Feng, H.; Jin, X.; Yuan, H.; Li, Z.; Zhou, C.; Yang, G.; Tian, Q. A Comparison of Crop Parameters Estimation Using Images from UAV-Mounted Snapshot Hyperspectral Sensor and High-Definition Digital Camera. Remote Sens. 2018, 10, 1138.

- Chivasa, W.; Mutanga, O.; Biradar, C. Phenology-based discrimination of maize (Zea mays L.) varieties using multitemporal hyperspectral data. J. Appl. Remote Sens. 2019, 13, 017504.

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS. In The Third Earth Resources Technology Satellite-1 Symposium; Goddard Space Flight Center: Washington, DC, USA, 1974; pp. 309–317.

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of Soil-Adjusted Vegetation Indices. Remote Sens. Environ. 1996, 55, 95–107.

- Jiang, Z.; Huete, A.; Didan, K.; Miura, T. Development of a Two-Band Enhanced Vegetation Index Without a Blue Band. Remote Sens. Environ. 2008, 112, 3833–3845.

- Tattaris, M.; Reynolds, M.P.; Chapman, S.C. A Direct Comparison of Remote Sensing Approaches for High-Throughput Phenotyping in Plant Breeding. Front. Plant Sci. 2016, 7, 1131.

- Chapman, S.C.; Merz, T.; Chan, A.; Jackway, P.; Hrabar, S.; Dreccer, M.F.; Holland, E.; Zheng, B.; Ling, T.J.; Jimenez-Berni, J.A. Pheno-Copter: A Low-Altitude, Autonomous Remote-Sensing Robotic Helicopter for High-Throughput Field-Based Phenotyping. Agronomy 2014, 4, 279–301.

- Liebisch, F.; Kirchgessner, N.; Schneider, D.; Walter, A.; Hund, A. Remote, Aerial Phenotyping of Maize Traits with a Mobile Multi-Sensor Approach. Plant Methods 2015, 11, 9.

- Grenzdörffer, G.J.; Engel, A.; Teichert, B. The Photogrammetric Potential of Low-Cost UAVs in Forestry and Agriculture. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 31 Pt B3, 1207–1214.

- Hunt, E.; Hively, W.D.; Daughtry, C.S.; McCarty, G.W.; Fujikawa, S.J.; Ng, T.; Tranchitella, M.; Linden, D.S.; Yoel, D.W. Remote sensing of crop leaf area index using unmanned airborne vehicles. In Proceedings of the Pecora 17 Symposium, Denver, CO, USA, 18–20 November 2008.

- Nebiker, S.; Annen, A.; Scherrer, M.; Oesch, D. A Light-Weight Multispectral Sensor for Micro UAV - Opportunities for Very High Resolution Airborne Remote Sensing. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 1193–1200.

- Perry, E.M.; Brand, J.; Kant, S.; Fitzgerald, G.J. Field-based rapid phenotyping with unmanned aerial vehicles (UAV). In Capturing Opportunities and Overcoming Obstacles in Australian Agronomy. In Proceedings of the 16th ASA Conference, Armidale, Australia, 14–18 October 2012; Available online: http://www.regional.org.au/au/asa/2012/precision-a (accessed on 28 July 2020).

- Zhang, C.; Kovacs, J. The Application of Small Unmanned Aerial Systems for Precision Agriculture: A Review. Precis. Agric. 2012, 13, 693–712.

- Wei, X.; Xu, J.; Guo, H.; Jiang, L.; Chen, S.; Yu, C.; Zhou, Z.; Hu, P.; Zhai, H.; Wan, J. DTH8 Suppresses Flowering in Rice, Influencing Plant Height and Yield Potential Simultaneously. Plant Physiol. 2010, 153, 1747–1758.

- Ilker, E.; Tonk, F.A.; Tosun, M.; Tatar, O. Effects of Direct Selection Process for Plant Height on Some Yield Components in Common Wheat (Triticum Aestivum) Genotypes. Int. J. Agric. Biol. 2013, 15, 795–797.

- Swain, K.C.; Zaman, Q.U. Rice crop monitoring with unmanned helicopter remote sensing images. In Remote Sensing of Biomass-Principles and Applications; Fatoyinbo, T., Ed.; InTech: Rijeka, Croatia, 2012; pp. 252–273.

- Zarco-Tejada, P.J.; Gonzalez-Dugo, V.; Berni, J.; Jimenez-Berni, J.A. Fluorescence, Temperature and Narrow-Band Indices Acquired from a UAV Platform for Water Stress Detection Using a Micro-Hyperspectral Imager and a Thermal Camera. Remote Sens. Environ. 2012, 117, 322–337.

- Zarco-Tejada, P.J.; Catalina, A.; Gonzalez, M.; Martín, P. Relationships between Net Photosynthesis and Steady-State Chlorophyll Fluorescence Retrieved from Airborne Hyperspectral Imagery. Remote Sens. Environ. 2013, 136, 247–258.

- Hairmansis, A.; Berger, B.; Tester, M.; Roy, S.J. Image-Based Phenotyping for Non-Destructive Screening of Different Salinity Tolerance Traits in Rice. Rice 2014, 7, 16.

- Calderón, R.; Navas-Cortés, J.A.; Lucena, C.; Zarco-Tejada, P.J. High resolution airborne hyperspectral and thermal imagery for early detection of Verticillium wilt using fluorescence, temperature and narrowband spectral indices. Remote Sens. Environ. 2013, 139, 231–245.

- Garcia-Ruiz, F.; Sankaran, S.; Maja, J.M.; Lee, W.S.; Rasmussen, J.; Ehsani, R. Comparison of Two Aerial Imaging Platforms for Identification of Huanglongbing-Infected Citrus Trees. Comput. Electron. Agric. 2013, 91, 106–115.

- Sankaran, S.; Khot, L.R.; Carter, A.H.; Garland-Campbell, K. Unmanned aerial systems based imaging for field-based crop phenotyping: Winter wheat emergence evaluation, Paper No. 1914284. In Proceedings of the 2014 ASABE Annual International Meeting, Montreal, QC, Canada, 13–14 July 2014.

- Sugiura, R.; Noguchi, N.; Ishii, K. Remote-sensing Technology for Vegetation Monitoring Using an Unmanned Helicopter. Biosyst. Eng. 2005, 90, 369–379.

- Khot, L.R.; Sankaran, S.; Cummings, T.; Johnson, D.; Carter, A.H.; Serra, S.; Musacchi, S. Applications of unmanned aerial system in Washington state agriculture, Paper No. 1637. In Proceedings of the 12th International Conference on Precision Agriculture, Sacramento, CA, USA, 20–23 July 2014.

- Dhau, I.; Adam, E.; Mutanga, O.; Ayisi, K.K. Detecting the Severity of Maize Streak Virus Infestations in Maize Crop Using in Situ Hyperspectral Data. Trans. R. Soc. S. Afr. 2017, 73, 8–15.

- Shepherd, D.N.; Martin, D.P.; Van Der Walt, E.; Dent, K.; Varsani, A.; Rybicki, E.P. Maize Streak Virus: An Old and Complex ‘Emerging’ Pathogen. Mol. Plant Pathol. 2009, 11, 1–12.

- Jourdan-Ruf, C.; Marchand, J.L.; Peterschmitt, M.; Reynaud, B.; Dintinger, J. Maize Streak, Maize Stripe and Maize Mosaic Virus Diseases in the Tropics (Africa and Islands in the Indian Ocean). Agric. Et Dév. Special Issue (December 1995) 1995, 55–69.

- Barton, C.V.M. Advances in Remote Sensing of Plant Stress. Plant Soil 2011, 354, 41–44.

- Engelbrecht, A.H.P. Chloroplast Development in Streak Infected Zea Mays. S. Afr. J. Bot. 1982, 1, 80.

- Sankaran, S.; Mishra, A.; Ehsani, R.; Davis, C. A Review of Advanced Techniques for Detecting Plant Diseases. Comput. Electron. Agric. 2010, 72, 1–13.

- Bauriegel, E.; Giebel, A.; Geyer, M.; Schmidt, U.; Herppich, W. Early Detection of Fusarium Infection in Wheat Using Hyper-Spectral Imaging. Comput. Electron. Agric. 2011, 75, 304–312.

- Dammer, K.-H.; Möller, B.; Rodemann, B.; Heppner, D. Detection of Head Blight (Fusarium ssp.) in Winter Wheat by Color and Multispectral Image Analyses. Crop Prot. 2011, 30, 420–428.

- Wang, D.; Kurle, J.; De Jensen, C.E.; Percich, J. Radiometric Assessment of Tillage and Seed Treatment Effect on Soybean Root Rot Caused by Fusarium spp. in Central Minnesota. Plant Soil 2004, 258, 319–331.

- Rodier, A.; Marchand, J.-L. Breeding Maize Lines for Complete and Partial Resistance to Maize Streak Virus (MSV). Euphytica 1995, 81, 57–70.

- Eyal, Z.; Scharen, A.L.; Prescott, J.M.; van Ginkel, M. The Septoria Diseases of Wheat: Concepts and Methods of Disease Management; CIMMYT: El Batan, Mexico, 1987; p. 52.

- Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a Green Channel in Remote Sensing of Global Vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

- Gitelson, A.; Merzlyak, M.N. Quantitative Estimation of Chlorophyll-a Using Reflectance Spectra: Experiments with Autumn Chestnut and Maple Leaves. J. Photochem. Photobiol. B Biol. 1994, 22, 247–252.

- Baret, F.; Guyot, G. Potentials and Limits of Vegetation Indices for LAI and APAR Assessment. Remote Sens. Environ. 1991, 35, 161–173.

- Gitelson, A.; Ciganda, V.S.; Rundquist, D.C.; Viña, A.; Arkebauer, T.J. Remote Estimation of Canopy Chlorophyll Content in Crops. Geophys. Res. Lett. 2005, 32, L08403.

- Jenks, G.F. Optimal Data Classification for Choropleth Maps; Occasional Paper No. 2; University of Kansas, Department of Geography: Lawrence, KS, USA, 1977.

- Rogan, J.; Franklin, J.; Stow, D.; Miller, J.; Woodcock, C.; Roberts, D. Mapping Land-Cover Modifications Over Large Areas: A Comparison of Machine Learning Algorithms. Remote Sens. Environ. 2008, 112, 2272–2283.

- Nitze, I.; Schulthess, U.; Asche, H. Comparison of machine learning algorithms random forest, artificial neural network and support vector machine to maximum likelihood for supervised crop type classification. In 4th GEOBIA; Feitosa, R.Q., Costa, G.A.O.P., Almeida, C.M., Fonseca, L.M.G., Kux, H.J.H., Eds.; Brazilian National Institute for Space Research: Rio de Janeiro, Brazil, 2012; pp. 35–40.

- Lebedev, A.V.; Westman, E.; Van Westen, G.J.P.; Kramberger, M.; Lundervold, A.; Aarsland, D.; Soininen, H.; Kłoszewska, I.; Mecocci, P.; Tsolaki, M.; et al. Random Forest Ensembles for Detection and Prediction of Alzheimer’s Disease with a Good between Cohort Robustness. NeuroImage Clin. 2014, 6, 115–125.

- Chemura, A.; Mutanga, O.; Dube, T. Separability of Coffee Leaf Rust Infection Levels with Machine Learning Methods at Sentinel-2 MSI Spectral Resolutions. Precis. Agric. 2016, 18, 859–881.

- Pal, M. Random Forest Classifier for Remote Sensing Classification. Int. J. Remote Sens. 2005, 26, 217–222.

- Duro, D.; Franklin, S.E.; Dubé, M.G. A Comparison of Pixel-Based and Object-Based Image Analysis with Selected Machine Learning Algorithms for the Classification of Agricultural Landscapes Using SPOT-5 HRG imagery. Remote Sens. Environ. 2012, 118, 259–272.

- Maxwell, A.E.; Warner, T.A.; Fang, F. Implementation of Machine-Learning Classification in Remote Sensing: An Applied Review. Int. J. Remote Sens. 2018, 39, 2784–2817.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Lin, X.; Sun, L.; Li, Y.; Guo, Z.; Li, Y.; Zhong, K.; Wang, Q.; Lü, X.; Yang, Y.; Xua, G. A Random Forest of Combined Features in the Classification of Cut Tobacco Based on Gas Chromatography Fingerprinting. Talanta 2010, 82, 1571–1575.

- Genuer, R.; Poggi, J.-M.; Malot, C. Variable Selection Using Random Forests. Pattern Recognit. Lett. 2010, 31, 2225–2236.

- Mutanga, O.; Adam, E.; Cho, M.A. High Density Biomass Estimation for Wetland Vegetation Using WorldView-2 Imagery and Random Forest Regression Algorithm. Int. J. Appl. Earth Obs. Geoinf. 2012, 18, 399–406.

- R Development Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing; R Development Core Team: Vienna, Austria, 2019; Available online: http://www.r-project.org/index.html (accessed on 28 July 2020).

- Breiman, L.; Cutler, A. Random Forests-classification Description [Online]. 2007. Available online: http://www.stat.berkeley.edu/~breiman/RandomForests/cc_home.htm (accessed on 1 May 2020).

- Gislason, P.O.; Benediktsson, J.A.; Sveinsson, J.R. Random forest classification of multisource remote sensing and geographic data. In Geoscience and Remote Sensing Symposium. IGARSS’04 2004, 2, 1049–1052.

- Story, M.; Congalton, R. Accuracy Assessment: A User’s Perspective. Photogramm. Eng. Remote Sens. 1986, 52, 397–399.

- Congalton, R.G.; Green, K. Assessing the Accuracy of Remotely Sensed Data: Principles and Practices; CRC Press: Boca Raton, FL, USA, 2009; p. 183.

- Congalton, R.G. A Review of Assessing the Accuracy of Classifications of Remotely Sensed Data. Remote Sens. Environ. 1991, 37, 35–46.

- Jensen, J.R.; Lulla, K. Introductory Digital Image Processing: A Remote Sensing Perspective. Geocarto Int. 1987, 2, 65.

- Liaw, A.; Wiener, M. Classification and Regression by randomForest. R News 2002, 2, 18–22.

- Díaz-Uriarte, R.; Alvarez, S. Gene Selection and Classification of Microarray Data Using Random Forest. BMC Bioinform. 2006, 7, 3.

- Adam, E.; Mutanga, O.; Odindi, J.; Abdel-Rahman, E.M. Land-Use/Cover Classification in a Heterogeneous Coastal Landscape Using RapidEye Imagery: Evaluating the Performance of Random Forest and Support Vector Machines Classifiers. Int. J. Remote Sens. 2014, 35, 3440–3458.

- Sankaran, S.; Maja, J.M.; Buchanon, S.; Ehsani, R. Huanglongbing (Citrus Greening) Detection Using Visible, Near Infrared and Thermal Imaging Techniques. Sensors 2013, 13, 2117–2130.

- Jarolmasjed, S.; Sankaran, S.; Marzougui, A.; Kostick, S.; Si, Y.; Vargas, J.J.Q.; Evans, K. High-Throughput Phenotyping of Fire Blight Disease Symptoms Using Sensing Techniques in Apple. Front. Plant Sci. 2019, 10, 576.

- Mahlein, A.-K.; Rumpf, T.; Welke, P.; Dehne, H.-W.; Plümer, L.; Steiner, U.; Oerke, E.-C. Development of Spectral Indices for Detecting and Identifying Plant Diseases. Remote Sens. Environ. 2013, 128, 21–30.

- Jansen, M.; Bergsträsser, S.; Schmittgen, S.; Müller-Linow, M.; Rascher, U. Non-Invasive Spectral Phenotyping Methods can Improve and Accelerate Cercospora Disease Scoring in Sugar Beet Breeding. Agriculture 2014, 4, 147–158.

- Andrade-Sanchez, P.; Gore, M.A.; Heun, J.T.; Thorp, K.R.; Silva, A.B.; French, A.; Salvucci, M.E.; White, J.W. Development and Evaluation of a Field-Based High-Throughput Phenotyping Platform. Funct. Plant Biol. 2014, 41, 68–79.

- Crain, J.L.; Wei, Y.; Barker, J.; Thompson, S.M.; Alderman, P.D.; Reynolds, M.P.; Zhang, N.; Poland, J. Development and Deployment of a Portable Field Phenotyping Platform. Crop Sci. 2016, 56, 965–975.

- Condorelli, G.E.; Maccaferri, M.; Newcomb, M.; Andrade-Sanchez, P.; White, J.W.; French, A.N.; Sciara, G.; Ward, R.W.; Tuberosa, R. Comparative Aerial and Ground Based High Throughput Phenotyping for the Genetic Dissection of NDVI as a Proxy for Drought Adaptive Traits in Durum Wheat. Front. Plant Sci. 2018, 9, 893.

- Bock, C.H.; Poole, G.H.; Parker, P.E.; Gottwald, T.R. Plant Disease Severity Estimated Visually, by Digital Photography and Image Analysis, and by Hyperspectral Imaging. Crit. Rev. Plant Sci. 2010, 29, 59–107.

- Masuka, B.; Magorokosho, C.; Olsen, M.; Atlin, G.; Bänziger, M.; Pixley, K.; Vivek, B.S.; Labuschagne, M.; Matemba-Mutasa, R.; Burgueño, J.; et al. Gains in Maize Genetic Improvement in Eastern and Southern Africa: II. CIMMYT Open-Pollinated Variety Breeding Pipeline. Crop Sci. 2016, 57, 180–191.

- Barbagallo, R.P.; Oxborough, K.; Pallett, K.E.; Baker, N.R. Rapid, Noninvasive Screening for Perturbations of Metabolism and Plant Growth Using Chlorophyll Fluorescence Imaging. Plant Physiol. 2003, 132, 485–493.

- Nutter, J.F.W. Assessing the Accuracy, Intra-rater Repeatability, and Inter-Rater Reliability of Disease Assessment Systems. Phytopathology 1993, 83, 806. [Google Scholar] [CrossRef]

- Pal, M.; Foody, G.M. Feature Selection for Classification of Hyperspectral Data by SVM. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2297–2307. [Google Scholar] [CrossRef] [Green Version]

- Huang, C.; Davis, L.S.; Townshend, J.R.G. An Assessment of Support Vector Machines for Land Cover Classification. Int. J. Remote Sens. 2002, 23, 725–749. [Google Scholar] [CrossRef]

- Swain, P.H. Fundamentals of pattern recognition in remote sensing. In Remote Sensing: The Quantitative Approach; Swain, P.H., Davis, S.M., Eds.; McGraw Hill: New York, NY, USA, 1978; pp. 136–187. [Google Scholar]

- Lu, D.; Weng, Q. A Survey of Image Classification Methods and Techniques for Improving Classification Performance. Int. J. Remote Sens. 2007, 28, 823–870. [Google Scholar] [CrossRef]

- Li, C.; Wang, J.; Wang, L.; Hu, L.; Gong, P. Comparison of Classification Algorithms and Training Sample Sizes in Urban Land Classification with Landsat Thematic Mapper Imagery. Remote Sens. 2014, 6, 964–983. [Google Scholar] [CrossRef] [Green Version]

- Ghimire, B.; Rogan, J.; Rodriguez-Galiano, V.F.; Panday, P.; Neeti, N. An Evaluation of Bagging, Boosting, and Random Forests for Land-Cover Classification in Cape Cod, Massachusetts, USA. GIScience Remote Sens. 2012, 49, 623–643. [Google Scholar] [CrossRef]

- Rodriguez-Galiano, V.F.; Ghimire, B.; Rogan, J.; Olmo, M.C.; Rigol-Sanchez, J.P. An Assessment of the Effectiveness of a Random Forest Classifier for Land-Cover Classification. ISPRS J. Photogramm. Remote Sens. 2012, 67, 93–104. [Google Scholar] [CrossRef]

- Ismail, R.; Mutanga, O. Discriminating the Early Stages Ofsirex Noctilioinfestation Using Classification Tree Ensembles and Shortwave Infrared Bands. Int. J. Remote Sens. 2011, 32, 4249–4266. [Google Scholar] [CrossRef]

- Naik, H.S.; Zhang, J.; Lofquist, A.; Assefa, T.; Sarkar, S.; Ackerman, D.; Singh, A.K.; Singh, A.K.; Ganapathysubramanian, B. A Real-Time Phenotyping Framework Using Machine Learning for Plant Stress Severity Rating in Soybean. Plant Methods 2017, 13, 23.

- Duddu, H.S.N.; Johnson, E.; Willenborg, C.J.; Shirtliffe, S.J. High-Throughput UAV Image-Based Method Is More Precise Than Manual Rating of Herbicide Tolerance. Plant Phenomics 2019, 2019, 1–9.

- Guan, J.; Nutter, F.W. Quantifying the Intrarater Repeatability and Interrater Reliability of Visual and Remote-Sensing Disease-Assessment Methods in the Alfalfa Foliar Pathosystem. Can. J. Plant Pathol. 2003, 25, 143–149.

- Rumpf, T.; Mahlein, A.-K.; Steiner, U.; Oerke, E.-C.; Dehne, H.-W.; Plümer, L. Early Detection and Classification of Plant Diseases with Support Vector Machines Based on Hyperspectral Reflectance. Comput. Electron. Agric. 2010, 74, 91–99.

- Mahlein, A.-K.; Steiner, U.; Dehne, H.-W.; Oerke, E.-C. Spectral Signatures of Sugar Beet Leaves for the Detection and Differentiation of Diseases. Precis. Agric. 2010, 11, 413–431.

- Pauli, D.; Chapman, S.C.; Bart, R.S.; Topp, C.N.; Lawrence-Dill, C.J.; Poland, J.A.; Gore, M.A. The Quest for Understanding Phenotypic Variation via Integrated Approaches in the Field Environment. Plant Physiol. 2016, 172, 622–634.

- Rutkoski, J.E.; Poland, J.; Mondal, S.; Autrique, E.; González-Pérez, L.; Crossa, J.; Reynolds, M.P.; Singh, R. Canopy Temperature and Vegetation Indices from High-Throughput Phenotyping Improve Accuracy of Pedigree and Genomic Selection for Grain Yield in Wheat. G3 Genes|Genomes|Genetics 2016, 6, 2799–2808.

- Crain, J.; Mondal, S.; Rutkoski, J.E.; Singh, R.P.; Poland, J. Combining High-Throughput Phenotyping and Genomic Information to Increase Prediction and Selection Accuracy in Wheat Breeding. Plant Genome 2018, 11, 1–14.

- Juliana, P.; Montesinos-López, O.A.; Crossa, J.; Mondal, S.; Pérez, L.G.; Poland, J.; Huerta-Espino, J.; Crespo-Herrera, L.A.; Govindan, V.; Dreisigacker, S.; et al. Integrating Genomic-Enabled Prediction and High-Throughput Phenotyping in Breeding for Climate-Resilient Bread Wheat. Theor. Appl. Genet. 2018, 132, 177–194.

| Specification | Value |
|---|---|
| Sensor type | Multispectral sensor + RGB camera |
| Multispectral sensor | Four bands |
| RGB resolution | 16 MP (4608 × 3456 px) |
| Single-band resolution | 1.2 MP (1280 × 960 px) |
| Multispectral bands | Green (0.55 ± 0.04 μm); Red (0.66 ± 0.04 μm); Red edge (0.735 ± 0.01 μm); Near infrared (0.79 ± 0.04 μm) |
| Field of view | 64° |
| Data spectral resolution | Green, Red, Red edge, NIR |
| Image spatial resolution | 11.5 cm at 42.5 m altitude |
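The four bands listed above are the inputs from which per-pixel vegetation indices are derived (Section 2.3.4). As an illustration only, the sketch below computes three widely used normalized-difference indices (NDVI, NDRE and GNDVI) from green, red, red-edge and NIR reflectance arrays. It assumes reflectance has already been extracted as in Section 2.3.3; the choice of these three indices and the reflectance values in the usage lines are assumptions for demonstration, not the study's data or its full index set.

```python
import numpy as np


def normalized_difference(a, b):
    """Return (a - b) / (a + b) element-wise, with NaN where a + b == 0."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    total = a + b
    out = np.full_like(total, np.nan)
    # Only divide where the denominator is non-zero; other pixels stay NaN.
    np.divide(a - b, total, out=out, where=total != 0)
    return out


def vegetation_indices(green, red, red_edge, nir):
    """Compute three standard normalized-difference indices from four bands."""
    return {
        "NDVI": normalized_difference(nir, red),        # NIR vs. red
        "NDRE": normalized_difference(nir, red_edge),   # NIR vs. red edge
        "GNDVI": normalized_difference(nir, green),     # NIR vs. green
    }


# Hypothetical reflectance for two pixels: a green canopy and an empty (zero) pixel.
green = np.array([0.08, 0.0])
red = np.array([0.05, 0.0])
red_edge = np.array([0.20, 0.0])
nir = np.array([0.45, 0.0])

indices = vegetation_indices(green, red, red_edge, nir)
for name, values in indices.items():
    print(name, np.round(values, 3))
```

Returning NaN for zero-sum pixels, rather than raising or returning 0, keeps masked or no-data pixels distinguishable when index maps are later averaged per plot.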

| Classified \ Reference | Resistant | Moderately resistant | Susceptible | Total | UA (%) |
|---|---|---|---|---|---|
| Resistant | 4 | 1 | 0 | 5 | 80 |
| Moderately resistant | 1 | 7 | 1 | 9 | 77.8 |
| Susceptible | 0 | 2 | 6 | 8 | 75 |
| Total | 5 | 10 | 7 | 22 | - |
| PA (%) | 80 | 70 | 85.7 | - | - |
| Overall accuracy (%) | 77.3 | - | - | - | - |
| Kappa coefficient | 0.64 | - | - | - | - |
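The summary statistics in the confusion matrix above follow from the cell counts. A minimal sketch of that arithmetic is given below, assuming rows are classified classes and columns are reference (ground truth) classes, which is the orientation implied by the UA and PA values. User's accuracy is the diagonal count over the row total, producer's accuracy is the diagonal count over the column total, and Cohen's kappa is computed with the standard formula κ = (p_o − p_e) / (1 − p_e). The function name `accuracy_report` is hypothetical, and small differences from the tabulated values (for example in the second decimal of kappa) can arise from rounding conventions.

```python
def accuracy_report(matrix, labels):
    """Accuracy measures for a square confusion matrix.

    Rows are classified classes, columns are reference classes.
    Returns (user's accuracy %, producer's accuracy %, overall accuracy %, kappa).
    """
    k = len(labels)
    n = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    diagonal = [matrix[i][i] for i in range(k)]

    users = {labels[i]: 100 * diagonal[i] / row_totals[i] for i in range(k)}
    producers = {labels[j]: 100 * diagonal[j] / col_totals[j] for j in range(k)}

    p_observed = sum(diagonal) / n
    # Chance agreement: sum over classes of (row total * column total) / n^2.
    p_expected = sum(row_totals[i] * col_totals[i] for i in range(k)) / n**2
    kappa = (p_observed - p_expected) / (1 - p_expected)
    return users, producers, 100 * p_observed, kappa


LABELS = ["Resistant", "Moderately resistant", "Susceptible"]
MATRIX = [
    [4, 1, 0],
    [1, 7, 1],
    [0, 2, 6],
]

ua, pa, oa, kappa = accuracy_report(MATRIX, LABELS)
print({c: round(v, 1) for c, v in ua.items()})
print({c: round(v, 1) for c, v in pa.items()})
print(round(oa, 1), round(kappa, 3))
```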

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chivasa, W.; Mutanga, O.; Biradar, C. UAV-Based Multispectral Phenotyping for Disease Resistance to Accelerate Crop Improvement under Changing Climate Conditions. Remote Sens. 2020, 12, 2445. https://doi.org/10.3390/rs12152445

Chivasa W, Mutanga O, Biradar C. UAV-Based Multispectral Phenotyping for Disease Resistance to Accelerate Crop Improvement under Changing Climate Conditions. Remote Sensing. 2020; 12(15):2445. https://doi.org/10.3390/rs12152445

Chicago/Turabian Style: Chivasa, Walter, Onisimo Mutanga, and Chandrashekhar Biradar. 2020. "UAV-Based Multispectral Phenotyping for Disease Resistance to Accelerate Crop Improvement under Changing Climate Conditions" Remote Sensing 12, no. 15: 2445. https://doi.org/10.3390/rs12152445

APA Style: Chivasa, W., Mutanga, O., & Biradar, C. (2020). UAV-Based Multispectral Phenotyping for Disease Resistance to Accelerate Crop Improvement under Changing Climate Conditions. Remote Sensing, 12(15), 2445. https://doi.org/10.3390/rs12152445