Wheat Yellow Rust Detection Using UAV-Based Hyperspectral Technology

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Site

2.2. Data Acquisition and Processing

2.2.1. Field Data Acquisition

2.2.2. Unmanned Aerial Vehicle (UAV) Hyperspectral Image Acquisition

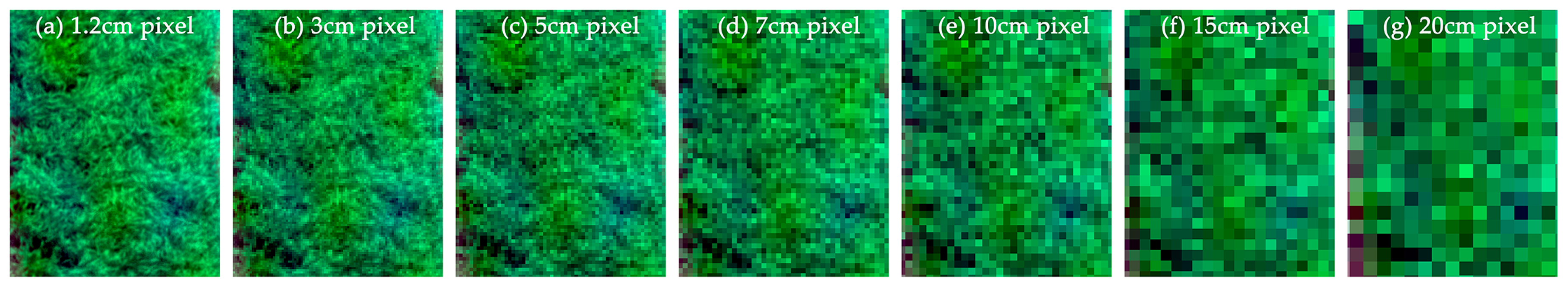

2.2.3. UAV Hyperspectral Image Processing

2.3. Methods

2.3.1. Vegetation Index Extraction

2.3.2. Texture Feature Extraction

2.3.3. Feature Selection

2.3.4. Severity Estimation Model Based on Partial Least Squares Regression

2.3.5. Accuracy Assessment

3. Results

3.1. Spectral Response of Wheat Yellow Rust at Different Inoculation Stages

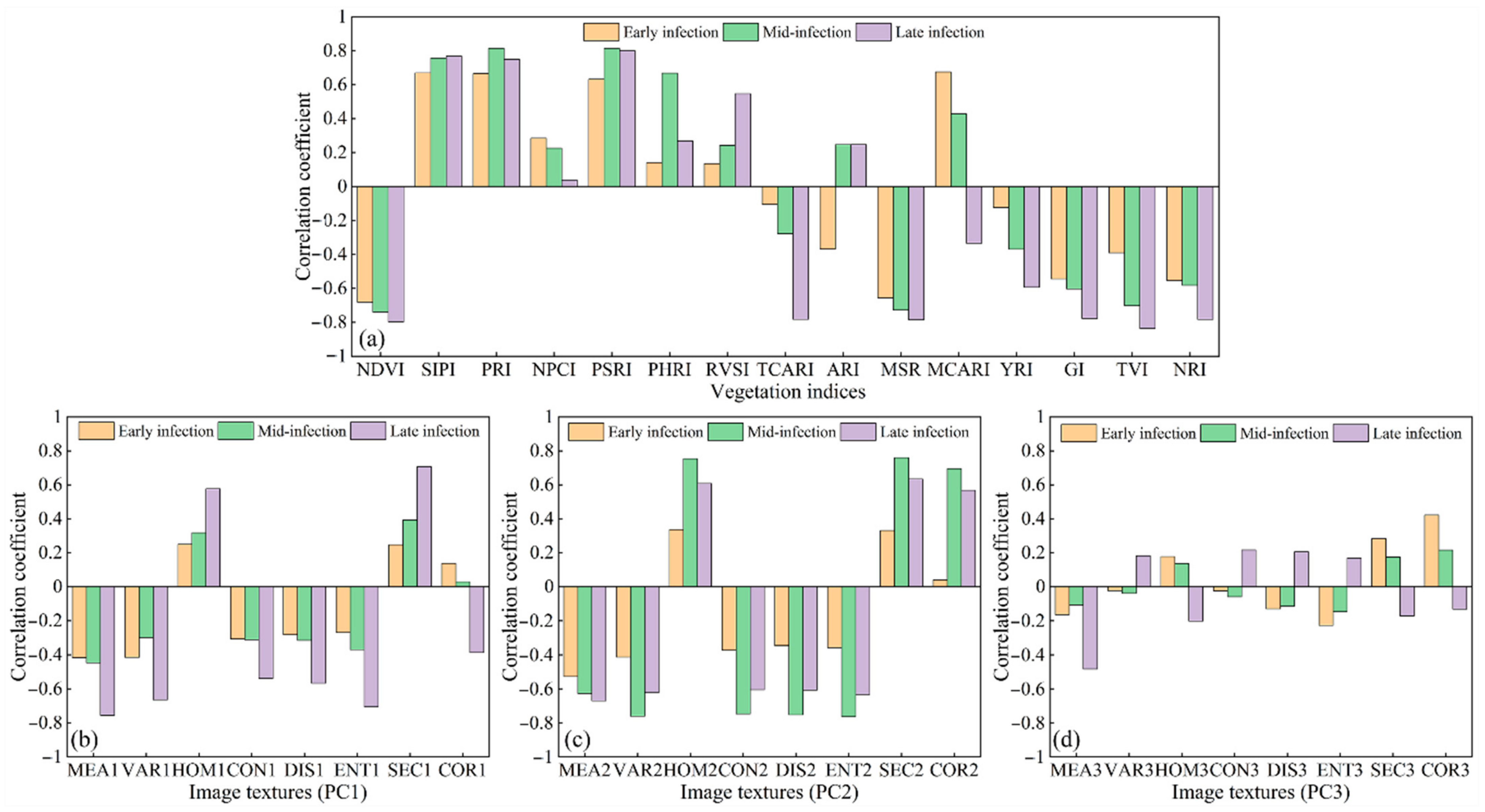

3.2. Features Sensitive to Yellow Rust

3.3. Establishment and Evaluation of the Wheat Yellow Rust Monitoring Models

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| VIs | Early Infection | Mid-Infection | Late Infection |
|---|---|---|---|
| NDVI | −0.681 ** | −0.739 ** | −0.797 ** |
| SIPI | 0.669 ** | 0.757 ** | 0.768 ** |
| PRI | 0.665 ** | 0.814 ** | 0.750 ** |
| NPCI | 0.284 | 0.226 | 0.038 |
| PSRI | 0.632 ** | 0.814 ** | 0.800 ** |
| PhRI | 0.140 | 0.668 ** | 0.268 |
| RVSI | 0.135 | 0.243 | 0.547 ** |
| TCARI | −0.104 | −0.277 | −0.782 ** |
| ARI | −0.368 * | 0.247 | 0.247 |
| MSR | −0.656 ** | −0.728 ** | −0.785 ** |
| MCARI | 0.675 ** | 0.428 ** | −0.334 * |
| YRI | −0.123 | −0.370 ** | −0.594 ** |
| GI | −0.544 ** | −0.603 ** | −0.777 ** |
| TVI | −0.390 ** | −0.702 ** | −0.836 ** |
| NRI | −0.554 ** | −0.582 ** | −0.783 ** |

| TFs | Early Infection | Mid-Infection | Late Infection |
|---|---|---|---|
| MEA1 | −0.417 ** | −0.446 ** | −0.755 ** |
| VAR1 | −0.414 ** | −0.299 * | −0.664 ** |
| HOM1 | 0.249 | 0.318 * | 0.577 ** |
| CON1 | −0.308 * | −0.312 * | −0.538 ** |
| DIS1 | −0.283 | −0.316 * | −0.567 ** |
| ENT1 | −0.270 | −0.369 ** | −0.706 ** |
| SEC1 | 0.247 | 0.393 ** | 0.707 ** |
| COR1 | 0.137 | 0.029 | −0.386 ** |
| MEA2 | −0.527 ** | −0.627 ** | −0.670 ** |
| VAR2 | −0.413 ** | −0.761 ** | −0.623 ** |
| HOM2 | 0.336 * | 0.754 ** | 0.608 ** |
| CON2 | −0.372 ** | −0.747 ** | −0.605 ** |
| DIS2 | −0.346 * | −0.753 ** | −0.607 ** |
| ENT2 | −0.357 * | −0.764 ** | −0.633 ** |
| SEC2 | 0.331 * | 0.760 ** | 0.634 ** |
| COR2 | 0.039 | 0.695 ** | 0.568 ** |
| MEA3 | −0.164 | −0.107 | −0.483 ** |
| VAR3 | −0.023 | −0.040 | 0.182 |
| HOM3 | 0.177 | 0.137 | −0.202 |
| CON3 | −0.024 | −0.060 | 0.216 |
| DIS3 | −0.131 | −0.115 | 0.205 |
| ENT3 | −0.228 | −0.147 | 0.169 |
| SEC3 | 0.283 | 0.175 | −0.172 |
| COR3 | 0.422 ** | 0.215 | −0.132 |
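The two correlation tables give, for each vegetation index and texture feature, a coefficient against disease severity at each infection stage. Assuming these are Pearson coefficients computed against the disease index (DI), the screening step can be sketched in plain Python as follows. The function names and the |r| ≥ 0.5 cut-off are illustrative assumptions, not the authors' selection rule, and the significance tests behind the asterisks are not reproduced.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a sequence has zero variance")
    return sxy / math.sqrt(sxx * syy)


def screen_features(features, di, threshold=0.5):
    """Keep features whose |r| with DI reaches the (assumed) threshold.

    features: dict {feature_name: per-plot values}; di: per-plot disease index.
    Returns {name: r} ordered by decreasing |r|.
    """
    scores = {name: pearson_r(values, di) for name, values in features.items()}
    kept = [(name, r) for name, r in scores.items() if abs(r) >= threshold]
    kept.sort(key=lambda item: abs(item[1]), reverse=True)
    return dict(kept)
```

Sorting by |r| keeps strongly negative features, such as NDVI in the tables, alongside strongly positive ones like PSRI.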

Values are R²/RRMSE at each spatial resolution.

| Feature | Infection Stage | 1.2 cm | 3 cm | 5 cm | 7 cm | 10 cm | 15 cm | 20 cm |
|---|---|---|---|---|---|---|---|---|
| VIs | Early infection | 0.47/0.755 | 0.48/0.757 | 0.43/0.758 | 0.49/0.756 | 0.46/0.765 | 0.52/0.716 | 0.41/0.793 |
| | Mid-infection | 0.70/0.472 | 0.69/0.470 | 0.72/0.469 | 0.53/0.605 | 0.71/0.469 | 0.75/0.425 | 0.72/0.457 |
| | Late infection | 0.64/0.469 | 0.65/0.468 | 0.67/0.467 | 0.68/0.465 | 0.61/0.467 | 0.65/0.467 | 0.70/0.428 |
| TFs | Early infection | 0.18/0.942 | 0.28/0.880 | 0.10/0.984 | 0.26/0.893 | 0.25/0.896 | 0.25/0.896 | 0.20/0.926 |
| | Mid-infection | 0.58/0.566 | 0.56/0.566 | 0.50/0.563 | 0.44/0.585 | 0.65/0.513 | 0.51/0.608 | 0.60/0.574 |
| | Late infection | 0.70/0.388 | 0.77/0.380 | 0.73/0.365 | 0.68/0.367 | 0.79/0.359 | 0.82/0.332 | 0.80/0.352 |
| VIs + TFs | Early infection | 0.50/0.694 | 0.53/0.748 | 0.48/0.776 | 0.50/0.784 | 0.55/0.694 | 0.53/0.768 | 0.44/0.812 |
| | Mid-infection | 0.71/0.464 | 0.72/0.460 | 0.74/0.471 | 0.64/0.518 | 0.76/0.415 | 0.79/0.406 | 0.75/0.456 |
| | Late infection | 0.80/0.332 | 0.83/0.320 | 0.85/0.301 | 0.81/0.285 | 0.88/0.266 | 0.86/0.319 | 0.82/0.311 |
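Model accuracy is reported as R²/RRMSE pairs. Here is a minimal sketch of both metrics. It assumes R² is the coefficient of determination (1 − SS_res/SS_tot) and RRMSE is the RMSE divided by the mean observed value. The paper's exact definitions may differ: R² could, for example, be a squared correlation, and RRMSE could be expressed in percent. The toy numbers are invented, not study data.

```python
import math


def _check(observed, predicted):
    if len(observed) != len(predicted) or not observed:
        raise ValueError("observed and predicted must be non-empty and equal length")


def r2_score(observed, predicted):
    """Coefficient of determination, 1 - SS_res / SS_tot (assumed definition)."""
    _check(observed, predicted)
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


def rrmse(observed, predicted):
    """Relative RMSE: RMSE divided by the mean observed value (assumed definition)."""
    _check(observed, predicted)
    n = len(observed)
    rmse = math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n)
    return rmse / (sum(observed) / n)


# Toy check with made-up severities, not data from the study.
obs = [10, 20, 30, 40]
pred = [12, 18, 33, 37]
print(f"R2 = {r2_score(obs, pred):.3f}, RRMSE = {rrmse(obs, pred):.3f}")  # prints: R2 = 0.948, RRMSE = 0.102
```

Dividing RMSE by the observed mean makes errors comparable across infection stages, where mean severity differs.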

References

- Han, J.; Zhang, Z.; Cao, J.; Luo, Y.; Zhang, L.; Li, Z.; Zhang, J. Prediction of Winter Wheat Yield Based on Multi-Source Data and Machine Learning in China. Remote Sens. 2020, 12, 236.

- Zheng, Q.; Huang, W.; Cui, X.; Dong, Y.; Shi, Y.; Ma, H.; Liu, L. Identification of Wheat Yellow Rust Using Optimal Three-Band Spectral Indices in Different Growth Stages. Sensors 2018, 19, 35.

- Ma, H.; Huang, W.; Jing, Y.; Pignatti, S.; Laneve, G.; Dong, Y.; Ye, H.; Liu, L.; Guo, A.; Jiang, J. Identification of Fusarium Head Blight in Winter Wheat Ears Using Continuous Wavelet Analysis. Sensors 2020, 20, 20.

- Moshou, D.; Bravo, C.; West, J.; Wahlen, S.; McCartney, A.; Ramon, H. Automatic detection of ‘yellow rust’ in wheat using reflectance measurements and neural networks. Comput. Electron. Agric. 2004, 44, 173–188.

- Chen, X. Epidemiology and control of stripe rust [Puccinia striiformis f. sp. tritici] on wheat. Can. J. Plant Pathol. 2005, 27, 314–337.

- Zheng, Q.; Huang, W.; Cui, X.; Shi, Y.; Liu, L. New spectral index for detecting wheat yellow rust using Sentinel-2 multispectral imagery. Sensors 2018, 18, 868.

- Yu, K.; Anderegg, J.; Mikaberidze, A.; Karisto, P.; Mascher, F.; McDonald, B.A.; Walter, A.; Hund, A. Hyperspectral Canopy Sensing of Wheat Septoria Tritici Blotch Disease. Front. Plant Sci. 2018, 9, 1195.

- He, L.; Qi, S.-L.; Duan, J.-Z.; Guo, T.-C.; Feng, W.; He, D.-X. Monitoring of Wheat Powdery Mildew Disease Severity Using Multiangle Hyperspectral Remote Sensing. IEEE Trans. Geosci. Remote Sens. 2020.

- Hatton, N.M.; Menke, E.; Sharda, A.; van der Merwe, D.; Schapaugh, W., Jr. Assessment of sudden death syndrome in soybean through multispectral broadband remote sensing aboard small unmanned aerial systems. Comput. Electron. Agric. 2019, 167, 105094.

- Chivasa, W.; Onisimo, M.; Biradar, C.M. UAV-Based Multispectral Phenotyping for Disease Resistance to Accelerate Crop Improvement under Changing Climate Conditions. Remote Sens. 2020, 12, 2445.

- Abdulridha, J.; Ehsani, R.; Abd-Elrahman, A.; Ampatzidis, Y. A remote sensing technique for detecting laurel wilt disease in avocado in presence of other biotic and abiotic stresses. Comput. Electron. Agric. 2019, 156, 549–557.

- Abdulridha, J.; Ampatzidis, Y.; Roberts, P.D.; Kakarla, S.C. Detecting powdery mildew disease in squash at different stages using UAV-based hyperspectral imaging and artificial intelligence. Biosyst. Eng. 2020, 197, 135–148.

- Awad, M.M. Forest mapping: A comparison between hyperspectral and multispectral images and technologies. J. For. Res. 2017, 29, 1395–1405.

- Yang, C.; Fernandez, C.J.; Everitt, J.H. Comparison of airborne multispectral and hyperspectral imagery for mapping cotton root rot. Biosyst. Eng. 2010, 107, 131–139.

- Mariotto, I.; Thenkabail, P.S.; Huete, A.R.; Slonecker, E.T.; Platonov, A. Hyperspectral versus multispectral crop-productivity modeling and type discrimination for the HyspIRI mission. Remote Sens. Environ. 2013, 139, 291–305.

- Yao, Z.; Lei, Y.; He, D. Early Visual Detection of Wheat Stripe Rust Using Visible/Near-Infrared Hyperspectral Imaging. Sensors 2019, 19, 952.

- Shi, Y.; Huang, W.; González-Moreno, P.; Luke, B.; Dong, Y.; Zheng, Q.; Ma, H.; Liu, L. Wavelet-Based Rust Spectral Feature Set (WRSFs): A Novel Spectral Feature Set Based on Continuous Wavelet Transformation for Tracking Progressive Host–Pathogen Interaction of Yellow Rust on Wheat. Remote Sens. 2018, 10, 525.

- Zhang, J.-C.; Pu, R.-L.; Wang, J.-H.; Huang, W.-J.; Yuan, L.; Luo, J.-H. Detecting powdery mildew of winter wheat using leaf level hyperspectral measurements. Comput. Electron. Agric. 2012, 85, 13–23.

- Chen, T.; Zhang, J.; Chen, Y.; Wan, S.; Zhang, L. Detection of peanut leaf spots disease using canopy hyperspectral reflectance. Comput. Electron. Agric. 2019, 156, 677–683.

- Gu, Q.; Sheng, L.; Zhang, T.; Lu, Y.; Zhang, Z.; Zheng, K.; Hu, H.; Zhou, H. Early detection of tomato spotted wilt virus infection in tobacco using the hyperspectral imaging technique and machine learning algorithms. Comput. Electron. Agric. 2019, 167, 105066.

- Yang, C.-M. Assessment of the severity of bacterial leaf blight in rice using canopy hyperspectral reflectance. Precis. Agric. 2010, 11, 61–81.

- Mahlein, A.; Rumpf, T.; Welke, P.; Dehne, H.-W.; Plumer, L.; Steiner, U.; Oerke, E.-C. Development of spectral indices for detecting and identifying plant diseases. Remote Sens. Environ. 2013, 128, 21–30.

- Liu, L.; Dong, Y.; Huang, W.; Du, X.; Ren, B.; Huang, L.; Zheng, Q.; Ma, H. A Disease Index for Efficiently Detecting Wheat Fusarium Head Blight Using Sentinel-2 Multispectral Imagery. IEEE Access 2020, 8, 52181–52191.

- Pydipati, R.; Burks, T.; Lee, W.S. Identification of citrus disease using color texture features and discriminant analysis. Comput. Electron. Agric. 2006, 52, 49–59.

- Guo, A.; Huang, W.; Ye, H.; Dong, Y.; Ma, H.; Ren, Y.; Ruan, C. Identification of Wheat Yellow Rust Using Spectral and Texture Features of Hyperspectral Images. Remote Sens. 2020, 12, 1419.

- Al-Saddik, H.; Laybros, A.; Billiot, B.; Cointault, F. Using image texture and spectral reflectance analysis to detect Yellowness and Esca in grapevines at leaf-level. Remote Sens. 2018, 10, 618.

- Su, J.; Liu, C.; Hu, X.; Xu, X.; Guo, L.; Chen, W.-H. Spatio-temporal monitoring of wheat yellow rust using UAV multispectral imagery. Comput. Electron. Agric. 2019, 167, 105035.

- Su, J.; Liu, C.; Coombes, M.; Hu, X.; Wang, C.; Xu, X.; Li, Q.; Guo, L.; Chen, W.-H. Wheat yellow rust monitoring by learning from multispectral UAV aerial imagery. Comput. Electron. Agric. 2018, 155, 157–166.

- Calou, V.B.C.; Teixeira, A.D.S.; Moreira, L.C.J.; Lima, C.S.; De Oliveira, J.B.; De Oliveira, M.R.R. The use of UAVs in monitoring yellow sigatoka in banana. Biosyst. Eng. 2020, 193, 115–125.

- Ye, H.; Huang, W.; Huang, S.; Cui, B.; Dong, Y.; Guo, A.; Ren, Y.; Jin, Y. Recognition of Banana Fusarium Wilt Based on UAV Remote Sensing. Remote Sens. 2020, 12, 938.

- Franceschini, M.H.D.; Bartholomeus, H.M.; Van Apeldoorn, D.F.; Suomalainen, J.; Kooistra, L. Feasibility of Unmanned Aerial Vehicle Optical Imagery for Early Detection and Severity Assessment of Late Blight in Potato. Remote Sens. 2019, 11, 224.

- Mahlein, A.-K. Plant Disease Detection by Imaging Sensors—Parallels and Specific Demands for Precision Agriculture and Plant Phenotyping. Plant Dis. 2016, 100, 241–251.

- Abdulridha, J.; Ampatzidis, Y.; Kakarla, S.C.; Roberts, P.D. Detection of target spot and bacterial spot diseases in tomato using UAV-based and benchtop-based hyperspectral imaging techniques. Precis. Agric. 2019, 21, 955–978.

- Abdulridha, J.; Batuman, O.; Ampatzidis, Y. UAV-based remote sensing technique to detect citrus canker disease utilizing hyperspectral imaging and machine learning. Remote Sens. 2019, 11, 1373.

- Deng, X.; Zhu, Z.; Yang, J.; Zheng, Z.; Huang, Z.; Yin, X.; Wei, S.; Lan, Y. Detection of Citrus Huanglongbing Based on Multi-Input Neural Network Model of UAV Hyperspectral Remote Sensing. Remote Sens. 2020, 12, 2678.

- De Castro, A.; Ehsani, R.; Ploetz, R.; Crane, J.; Abdulridha, J. Optimum spectral and geometric parameters for early detection of laurel wilt disease in avocado. Remote Sens. Environ. 2015, 171, 33–44.

- Ramírez-Cuesta, J.; Allen, R.; Zarco-Tejada, P.J.; Kilic, A.; Santos, C.; Lorite, I. Impact of the spatial resolution on the energy balance components on an open-canopy olive orchard. Int. J. Appl. Earth Obs. Geoinf. 2019, 74, 88–102.

- Roth, K.L.; Roberts, D.A.; Dennison, P.E.; Peterson, S.H.; Alonzo, M. The impact of spatial resolution on the classification of plant species and functional types within imaging spectrometer data. Remote Sens. Environ. 2015, 171, 45–57.

- Breunig, F.M.; Galvão, L.S.; Dalagnol, R.; Santi, A.L.; Della Flora, D.P.; Chen, S. Assessing the effect of spatial resolution on the delineation of management zones for smallholder farming in southern Brazil. Remote Sens. Appl. Soc. Environ. 2020, 19, 100325.

- Zhou, K.; Cheng, T.; Zhu, Y.; Cao, W.; Ustin, S.L.; Zheng, H.; Yao, X.; Tian, Y. Assessing the impact of spatial resolution on the estimation of leaf nitrogen concentration over the full season of paddy rice using near-surface imaging spectroscopy data. Front. Plant Sci. 2018, 9, 964.

- Dash, J.P.; Watt, M.S.; Pearse, G.D.; Heaphy, M.; Dungey, H.S. Assessing very high resolution UAV imagery for monitoring forest health during a simulated disease outbreak. ISPRS J. Photogramm. Remote Sens. 2017, 131, 1–14.

- Zhang, J.; Wang, C.; Yang, C.; Xie, T.; Jiang, Z.; Hu, T.; Luo, Z.; Zhou, G.; Xie, J. Assessing the Effect of Real Spatial Resolution of In Situ UAV Multispectral Images on Seedling Rapeseed Growth Monitoring. Remote Sens. 2020, 12, 1207.

- Liu, M.; Yu, T.; Gu, X.; Sun, Z.; Yang, J.; Zhang, Z.; Mi, X.; Cao, W.; Li, J. The Impact of Spatial Resolution on the Classification of Vegetation Types in Highly Fragmented Planting Areas Based on Unmanned Aerial Vehicle Hyperspectral Images. Remote Sens. 2020, 12, 146.

- Huang, W.; Lamb, D.W.; Niu, Z.; Zhang, Y.; Liu, L.; Wang, J. Identification of yellow rust in wheat using in-situ spectral reflectance measurements and airborne hyperspectral imaging. Precis. Agric. 2007, 8, 187–197.

- Cubert-GmbH Hyperspectral Firefleye S185 SE. Available online: http://cubert-gmbh.de/ (accessed on 15 October 2020).

- Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244.

- Rouse, J.W., Jr.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the great plains with ERTS. In Proceedings of the Third Earth Resources Technology Satellite-1 Symposium, Washington, DC, USA, 10–14 December 1973; pp. 309–317.

- Zhang, X.; Han, L.; Dong, Y.; Shi, Y.; Huang, W.; Han, L.; González-Moreno, P.; Ma, H.; Ye, H.; Sobeih, T. A Deep Learning-Based Approach for Automated Yellow Rust Disease Detection from High-Resolution Hyperspectral UAV Images. Remote Sens. 2019, 11, 1554.

- Ashourloo, D.; Mobasheri, M.R.; Huete, A. Developing two spectral disease indices for detection of wheat leaf rust (Puccinia triticina). Remote Sens. 2014, 6, 4723–4740.

- Devadas, R.; Lamb, D.W.; Simpfendorfer, S.; Backhouse, D. Evaluating ten spectral vegetation indices for identifying rust infection in individual wheat leaves. Precis. Agric. 2008, 10, 459–470.

- Li, B.; Li, B.; Zhang, L.; Han, J.; Bian, C.; Li, G.; Xu, X.; Li-Ping, J. Above-ground biomass estimation and yield prediction in potato by using UAV-based RGB and hyperspectral imaging. ISPRS J. Photogramm. Remote Sens. 2020, 162, 161–172.

- Gamon, J.; Penuelas, J.; Field, C. A narrow-waveband spectral index that tracks diurnal changes in photosynthetic efficiency. Remote Sens. Environ. 1992, 41, 35–44.

- Zarco-Tejada, P.J.; Berjón, A.; López-Lozano, R.; Miller, J.R.; Martín, P.; Cachorro, V.; González, M.; De Frutos, A. Assessing vineyard condition with hyperspectral indices: Leaf and canopy reflectance simulation in a row-structured discontinuous canopy. Remote Sens. Environ. 2005, 99, 271–287.

- Huang, W.; Guan, Q.; Luo, J.; Zhang, J.; Zhao, J.; Liang, D.; Huang, L.; Zhang, D. New Optimized Spectral Indices for Identifying and Monitoring Winter Wheat Diseases. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2516–2524.

- Daughtry, C.; Walthall, C.; Kim, M.; De Colstoun, E.B.; McMurtrey III, J. Estimating corn leaf chlorophyll concentration from leaf and canopy reflectance. Remote Sens. Environ. 2000, 74, 229–239.

- Gitelson, A.A.; Merzlyak, M.N.; Chivkunova, O.B. Optical properties and nondestructive estimation of anthocyanin content in plant leaves. Photochem. Photobiol. 2001, 74, 38–45.

- Merzlyak, M.N.; Gitelson, A.; Chivkunova, O.B.; Rakitin, V.Y. Non-destructive optical detection of pigment changes during leaf senescence and fruit ripening. Physiol. Plant. 1999, 106, 135–141.

- Filella, I.; Serrano, L.; Serra, J.; Penuelas, J. Evaluating wheat nitrogen status with canopy reflectance indices and discriminant analysis. Crop Sci. 1995, 35, 1400–1405.

- Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 2002, 81, 416–426.

- Zhang, M.; Su, W.; Fu, Y.; Zhu, D.; Xue, J.-H.; Huang, J.; Wang, W.; Wu, J.; Yao, C. Super-resolution enhancement of Sentinel-2 image for retrieving LAI and chlorophyll content of summer corn. Eur. J. Agron. 2019, 111, 125938.

- Zhang, C.; Xie, Z. Combining object-based texture measures with a neural network for vegetation mapping in the Everglades from hyperspectral imagery. Remote Sens. Environ. 2012, 124, 310–320.

- Fu, Y.; Zhao, C.; Wang, J.; Jia, X.; Yang, G.; Song, X.; Feng, H. An Improved Combination of Spectral and Spatial Features for Vegetation Classification in Hyperspectral Images. Remote Sens. 2017, 9, 261.

- Ferreira, M.P.; Wagner, F.H.; Aragão, L.E.; Shimabukuro, Y.E.; de Souza Filho, C.R. Tree species classification in tropical forests using visible to shortwave infrared WorldView-3 images and texture analysis. ISPRS J. Photogramm. Remote Sens. 2019, 149, 119–131.

- Li, S.; Yuan, F.; Ata-Ul-Karim, S.T.; Zheng, H.; Cheng, T.; Liu, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cao, Q. Combining Color Indices and Textures of UAV-Based Digital Imagery for Rice LAI Estimation. Remote Sens. 2019, 11, 1763.

- Szantoi, Z.; Escobedo, F.; Abd-Elrahman, A.; Smith, S.; Pearlstine, L. Analyzing fine-scale wetland composition using high resolution imagery and texture features. Int. J. Appl. Earth Obs. Geoinf. 2013, 23, 204–212.

- Farwell, L.S.; Gudex-Cross, D.; Anise, I.E.; Bosch, M.J.; Olah, A.M.; Radeloff, V.C.; Razenkova, E.; Rogova, N.; Silveira, E.M.; Smith, M.M.; et al. Satellite image texture captures vegetation heterogeneity and explains patterns of bird richness. Remote Sens. Environ. 2021, 253, 112175.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, 6, 610–621.

- Wang, C.; Wang, S.; He, X.; Wu, L.; Li, Y.; Guo, J. Combination of spectra and texture data of hyperspectral imaging for prediction and visualization of palmitic acid and oleic acid contents in lamb meat. Meat Sci. 2020, 169, 108194.

- Xiong, Z.; Sun, D.-W.; Pu, H.; Zhu, Z.; Luo, M. Combination of spectra and texture data of hyperspectral imaging for differentiating between free-range and broiler chicken meats. LWT 2015, 60, 649–655.

- Liu, D.; Pu, H.; Sun, D.-W.; Wang, L.; Zeng, X.-A. Combination of spectra and texture data of hyperspectral imaging for prediction of pH in salted meat. Food Chem. 2014, 160, 330–337.

- Cen, H.; Lu, R.; Zhu, Q.; Mendoza, F. Nondestructive detection of chilling injury in cucumber fruit using hyperspectral imaging with feature selection and supervised classification. Postharvest Biol. Technol. 2016, 111, 352–361.

- Lu, J.; Zhou, M.; Gao, Y.; Jiang, H. Using hyperspectral imaging to discriminate yellow leaf curl disease in tomato leaves. Precis. Agric. 2018, 19, 379–394.

- Astor, T.; Dayananda, S.; Nidamanuri, R.R.; Nautiyal, S.; Hanumaiah, N.; Gebauer, J.; Wachendorf, M. Estimation of Vegetable Crop Parameter by Multi-temporal UAV-Borne Images. Remote Sens. 2018, 10, 805.

- Li, X.; Zhang, Y.; Luo, J.; Jin, X.; Xu, Y.; Yang, W. Quantification winter wheat LAI with HJ-1CCD image features over multiple growing seasons. Int. J. Appl. Earth Obs. Geoinf. 2016, 44, 104–112.

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Maimaitiyiming, M.; Hartling, S.; Peterson, K.T.; Maw, M.J.; Shakoor, N.; Mockler, T.; Fritschi, F.B. Vegetation Index Weighted Canopy Volume Model (CVMVI) for soybean biomass estimation from Unmanned Aerial System-based RGB imagery. ISPRS J. Photogramm. Remote Sens. 2019, 151, 27–41.

- Jay, S.; Gorretta, N.; Morel, J.; Maupas, F.; Bendoula, R.; Rabatel, G.; Dutartre, D.; Comar, A.; Baret, F. Estimating leaf chlorophyll content in sugar beet canopies using millimeter- to centimeter-scale reflectance imagery. Remote Sens. Environ. 2017, 198, 173–186.

- Navrozidis, I.; Alexandridis, T.; Dimitrakos, A.; Lagopodi, A.L.; Moshou, D.; Zalidis, G. Identification of purple spot disease on asparagus crops across spatial and spectral scales. Comput. Electron. Agric. 2018, 148, 322–329.

- Mahlein, A.-K.; Kuska, M.T.; Thomas, S.; Wahabzada, M.; Behmann, J.; Rascher, U.; Kersting, K. Quantitative and qualitative phenotyping of disease resistance of crops by hyperspectral sensors: Seamless interlocking of phytopathology, sensors, and machine learning is needed! Curr. Opin. Plant Biol. 2019, 50, 156–162.

- Penuelas, J.; Filella, I.; Gamon, J.A. Assessment of photosynthetic radiation-use efficiency with spectral reflectance. New Phytol. 1995, 131, 291–296.

- Rodriguez-Galiano, V.; Chica-Olmo, M.; Abarca-Hernandez, F.; Atkinson, P.M.; Jeganathan, C. Random Forest classification of Mediterranean land cover using multi-seasonal imagery and multi-seasonal texture. Remote Sens. Environ. 2012, 121, 93–107.

| VIs | Equations | Application | Crop | Reference | Publication |
|---|---|---|---|---|---|
| SIPI | | Biomass estimation and yield prediction | Potato | [51] | ISPRS Journal of Photogrammetry and Remote Sensing |
| PRI | | Photosynthetic efficiency | Sunflower | [52] | Remote Sensing of Environment |
| NPCI | | Chlorophyll estimation | Vine | [53] | Remote Sensing of Environment |
| MSR | | Powdery mildew detection | Wheat | [18] | Computers and Electronics in Agriculture |
| RVSI | | Target spot detection | Tomato | [33] | Precision Agriculture |
| YRI | | Yellow rust detection | Wheat | [54] | IEEE J-STARS |
| GI | | Leaf rust detection | Wheat | [49] | Remote Sensing |
| PhRI | | Chlorophyll estimation | Corn | [55] | Remote Sensing of Environment |
| ARI | | Anthocyanin estimation | Norway maple | [56] | Photochemistry and Photobiology |
| PSRI | | Pigment estimation | Potato | [57] | Physiologia Plantarum |
| NRI | | Nitrogen status evaluation | Wheat | [58] | Crop Science |
| TCARI | | Chlorophyll estimation | Corn | [59] | Remote Sensing of Environment |
| TVI | | Laurel wilt detection | Avocado | [36] | Remote Sensing of Environment |
| NDVI | | Disease detection | Sugar beet | [22] | Remote Sensing of Environment |
| MCARI | | LAI and chlorophyll estimation | Corn | [60] | European Journal of Agronomy |
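The Equations column of the VI table is empty in this copy, so the band positions below are assumptions. They are commonly cited narrow-band definitions for the five indices that appear in the PLSR models (NDVI, SIPI, PRI, PSRI, MSR), not necessarily the bands the study used. Sensor band centres rarely fall exactly on these wavelengths, so the sketch takes the nearest available band. The helper names are hypothetical.

```python
import math


def nearest(spectrum, wavelength_nm):
    """Reflectance of the band whose centre (nm) is closest to wavelength_nm."""
    closest = min(spectrum, key=lambda wl: abs(wl - wavelength_nm))
    return spectrum[closest]


def vegetation_indices(spectrum):
    """Five VIs from a {wavelength_nm: reflectance} dict.

    The band positions are commonly cited narrow-band choices and are
    assumptions here, not necessarily the bands used in the study.
    """
    def r(wl):
        return nearest(spectrum, wl)

    r800, r670 = r(800), r(670)
    sr = r800 / r670  # simple ratio, reused inside MSR
    return {
        "NDVI": (r800 - r670) / (r800 + r670),
        "SIPI": (r800 - r(445)) / (r800 - r(680)),
        "PRI": (r(531) - r(570)) / (r(531) + r(570)),
        "PSRI": (r(680) - r(500)) / r(750),
        "MSR": (sr - 1) / math.sqrt(sr + 1),
    }
```

If a sensor's range does not reach a wavelength (445 nm, for example), the nearest-band rule silently substitutes the closest edge band. Check that behaviour against the instrument's actual band list.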

| Texture | Equation |
|---|---|
| Mean, MEA | |
| Variance, VAR | |
| Homogeneity, HOM | |
| Contrast, CON | |
| Dissimilarity, DIS | |
| Entropy, ENT | |
| Second moment, SEC | |
| Correlation, COR | |
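The texture table names eight grey-level co-occurrence matrix (GLCM) statistics, but their equations are missing in this copy. The sketch below uses the standard Haralick-style definitions on a symmetric, normalised GLCM for a single pixel offset, with p(i, j) as the matrix entries. Several details of the study are not given in this extract and are not reproduced here: the quantisation levels, moving-window size and offsets, and the meaning of the numeric suffixes in the feature names (MEA1, MEA2, ...).

```python
import math


def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalised GLCM of a quantised image for one (dx, dy) offset."""
    p = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for y in range(rows):
        for x in range(cols):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < rows and 0 <= x2 < cols:
                i, j = image[y][x], image[y2][x2]
                p[i][j] += 1
                p[j][i] += 1  # count both directions, so the matrix is symmetric
    total = sum(map(sum, p))
    return [[v / total for v in row] for row in p]


def glcm_features(p):
    """Eight Haralick-style statistics of a symmetric, normalised GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mean = sum(i * v for i, _, v in cells)
    var = sum(v * (i - mean) ** 2 for i, _, v in cells)
    feats = {
        "MEA": mean,
        "VAR": var,
        "HOM": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "CON": sum(v * (i - j) ** 2 for i, j, v in cells),
        "DIS": sum(v * abs(i - j) for i, j, v in cells),
        "ENT": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        "SEC": sum(v * v for _, _, v in cells),
    }
    # For a symmetric GLCM the row and column marginals share mean and variance.
    cov = sum(v * (i - mean) * (j - mean) for i, j, v in cells)
    feats["COR"] = cov / var if var > 0 else 0.0
    return feats
```

In practice the statistics are computed in a sliding window over each image band, which yields a texture image per statistic.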

| Feature | Infection Stage | Spatial Resolution | PLSR-Based Model Equation |
|---|---|---|---|
| VIs | Early infection | 15 cm | DI = −1.6143 − 3.9349NDVI + 6.0053SIPI + 7.173PRI + 10.3403PSRI − 0.263MSR |
| | Mid-infection | 15 cm | DI = −391.994 + 374.044NDVI + 59.786SIPI + 338.364PRI + 759.008PSRI − 3.844MSR |
| | Late infection | 20 cm | DI = 26.762 − 51.012NDVI + 39.463SIPI + 178.313PRI + 91.32PSRI − 8.395MSR |
| TFs | Early infection | 3 cm | DI = 16.9718 − 0.1463MEA1 − 0.2437MEA2 − 5.8203VAR2 + 3.1486CON2 |
| | Mid-infection | 10 cm | DI = 316.811 + 0.588MEA1 − 5.671MEA2 − 32.4VAR2 + 11.707CON2 |
| | Late infection | 15 cm | DI = 479.509 + 0.54MEA1 − 8.504MEA2 − 22.926VAR2 + 1.054CON2 |
| VIs + TFs | Early infection | 10 cm | DI = −4.355 − 0.008MEA1 − 0.011MEA2 + 0.094VAR2 + 0.175CON2 − 4.272NDVI + 8.729SIPI + 14.065PRI + 7.918PSRI − 0.189MSR |
| | Mid-infection | 15 cm | DI = 209.235 + 1.339MEA1 − 1.75MEA2 − 109.907VAR2 + 21.766CON2 − 41.262NDVI − 18.082SIPI + 311.905PRI − 10.207PSRI − 13.047MSR |
| | Late infection | 10 cm | DI = −248.697 + 2.211MEA1 − 4.391MEA2 − 14.686VAR2 + 4.341CON2 + 421.597NDVI + 69SIPI + 288.678PRI + 680.157PSRI − 5.277MSR |
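Each row of the equation table is a linear model of the form DI = b0 + Σ bk·xk, so applying a fitted model to new feature values reduces to a weighted sum. The sketch below hard-codes the coefficients of the VIs + TFs late-infection model at 10 cm from that table. The dictionary and function names are hypothetical. The sketch also assumes the inputs are computed on the same scale and by the same procedure the authors used, which this extract does not state.

```python
# Coefficients copied from the "VIs + TFs, Late infection, 10 cm" row of the
# PLSR equation table; the dictionary layout itself is hypothetical.
LATE_VIS_TFS_10CM = {
    "intercept": -248.697,
    "MEA1": 2.211, "MEA2": -4.391, "VAR2": -14.686, "CON2": 4.341,
    "NDVI": 421.597, "SIPI": 69.0, "PRI": 288.678, "PSRI": 680.157, "MSR": -5.277,
}


def predict_di(model, features):
    """Evaluate DI = b0 + sum(b_k * x_k) for one plot or pixel."""
    names = [k for k in model if k != "intercept"]
    missing = [k for k in names if k not in features]
    if missing:
        raise KeyError(f"missing features: {missing}")
    return model["intercept"] + sum(model[k] * features[k] for k in names)
```

Other rows can be entered the same way. A feature missing from the input raises `KeyError` rather than silently defaulting to zero, which matters here because single coefficients such as 680.157 for PSRI dominate the prediction.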

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Guo, A.; Huang, W.; Dong, Y.; Ye, H.; Ma, H.; Liu, B.; Wu, W.; Ren, Y.; Ruan, C.; Geng, Y. Wheat Yellow Rust Detection Using UAV-Based Hyperspectral Technology. Remote Sens. 2021, 13, 123. https://doi.org/10.3390/rs13010123
