Airborne LiDAR Intensity Correction Based on a New Method for Incidence Angle Correction for Improving Land-Cover Classification

Abstract

1. Introduction

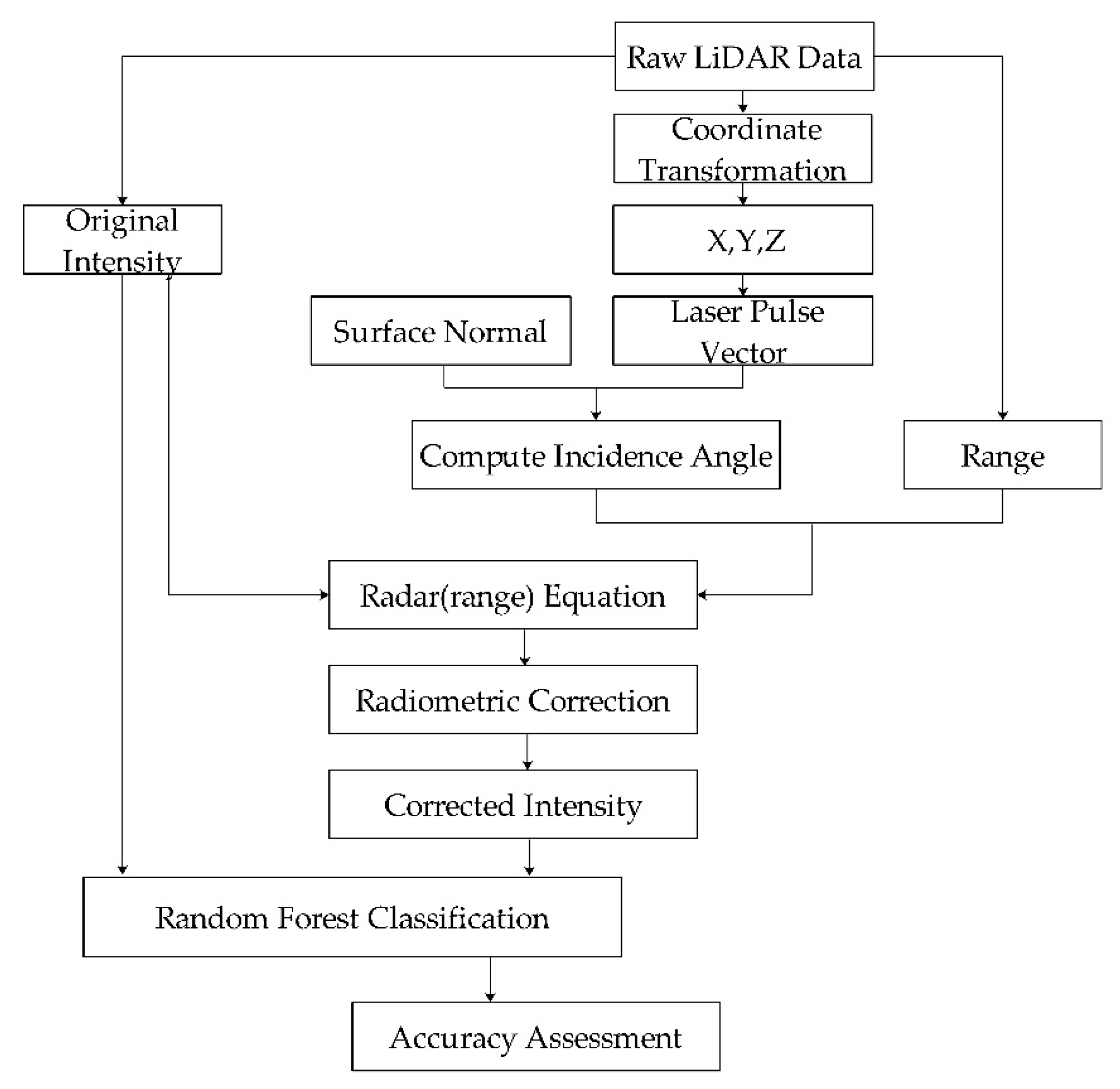

2. Materials and Methods

2.1. Radar (Range) Equation

2.2. A New Calculation Method for Incident Angle

2.3. The Surface Normal

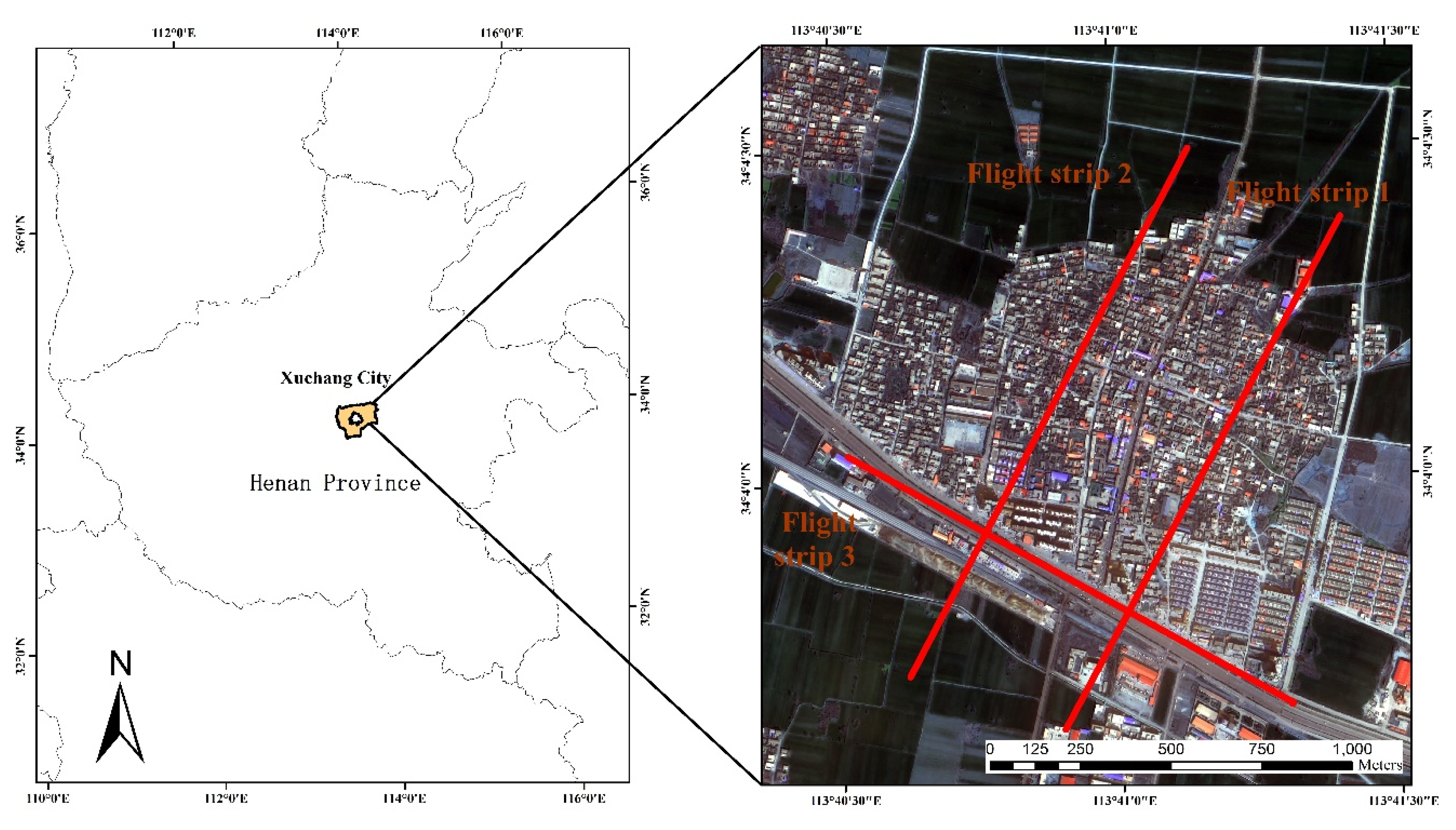

2.4. Experiment

2.4.1. RIEGL Laser Scanning Equipment

2.4.2. Study Area and Dataset

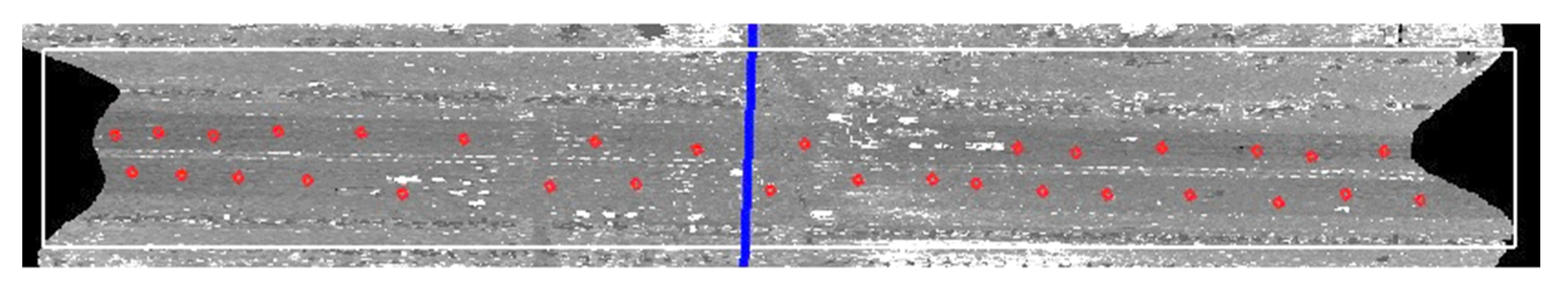

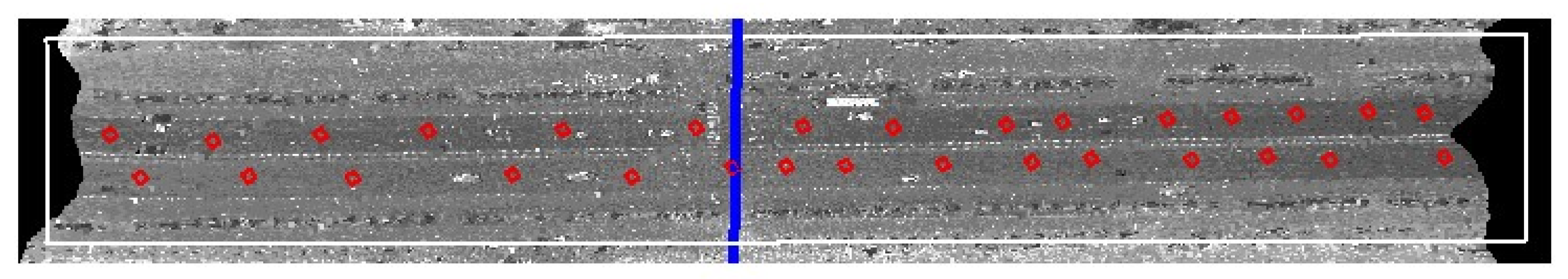

2.4.3. Evaluation Method of Intensity Correction

3. Results

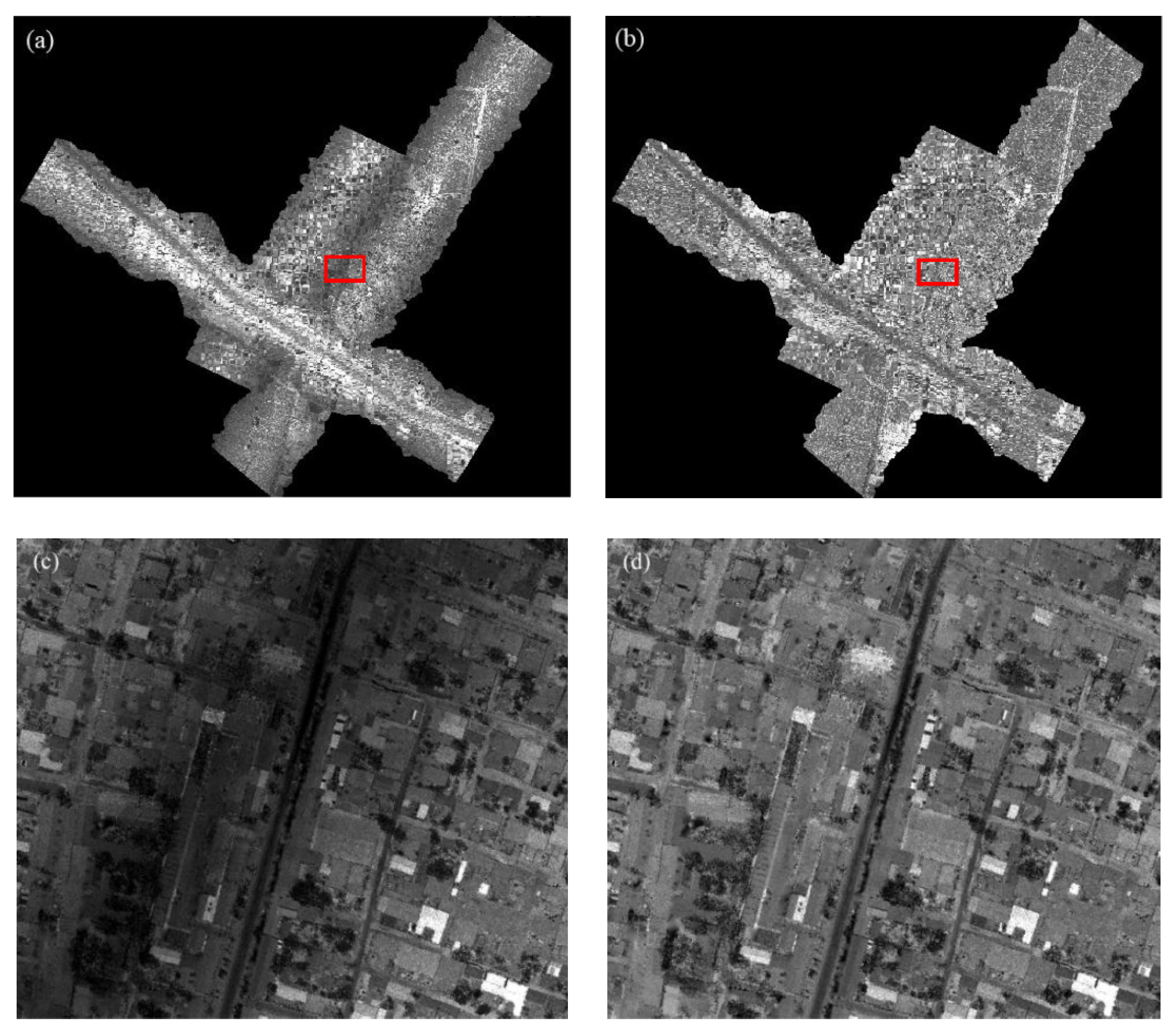

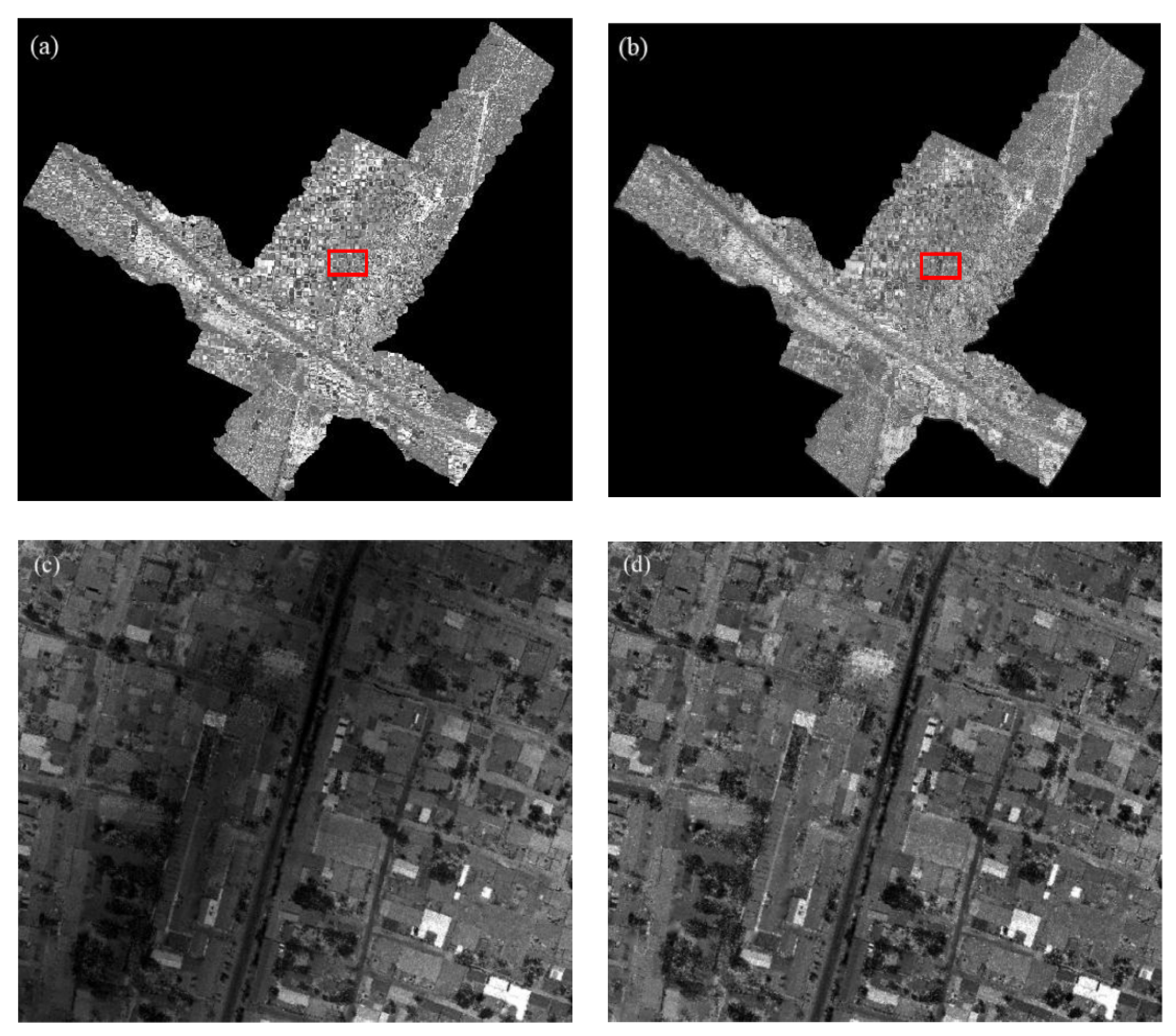

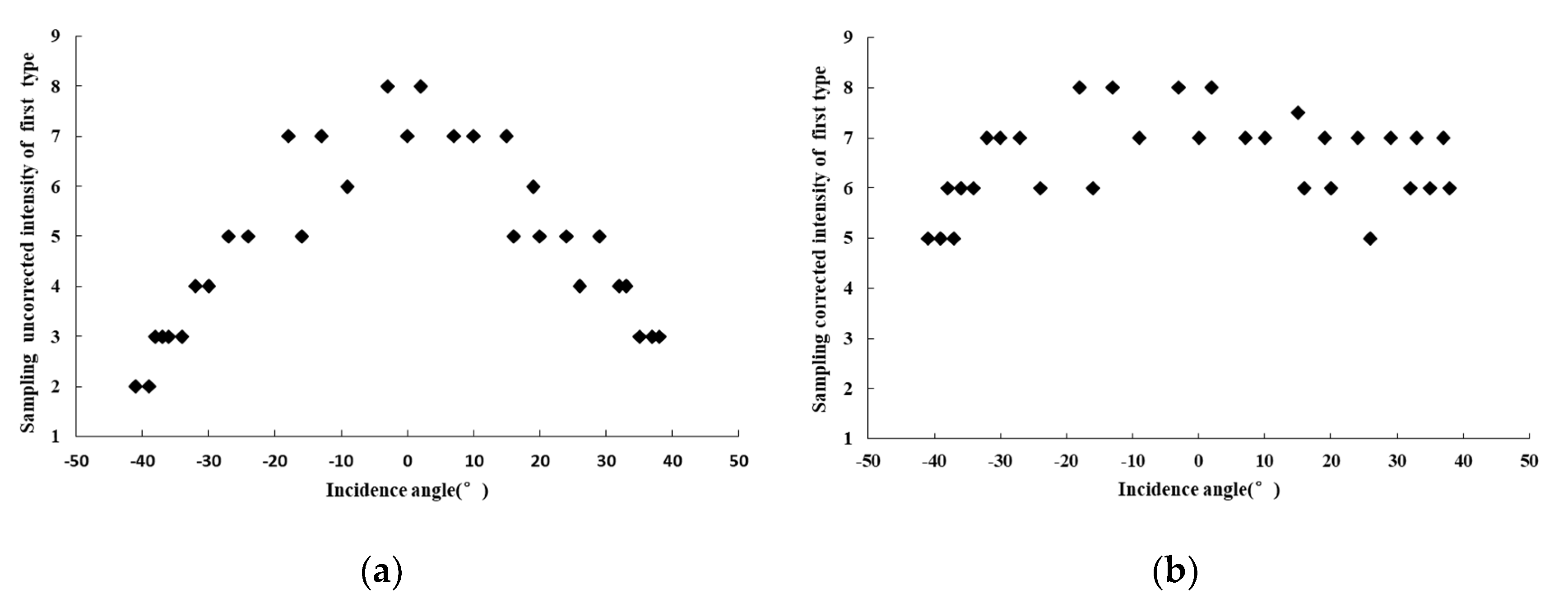

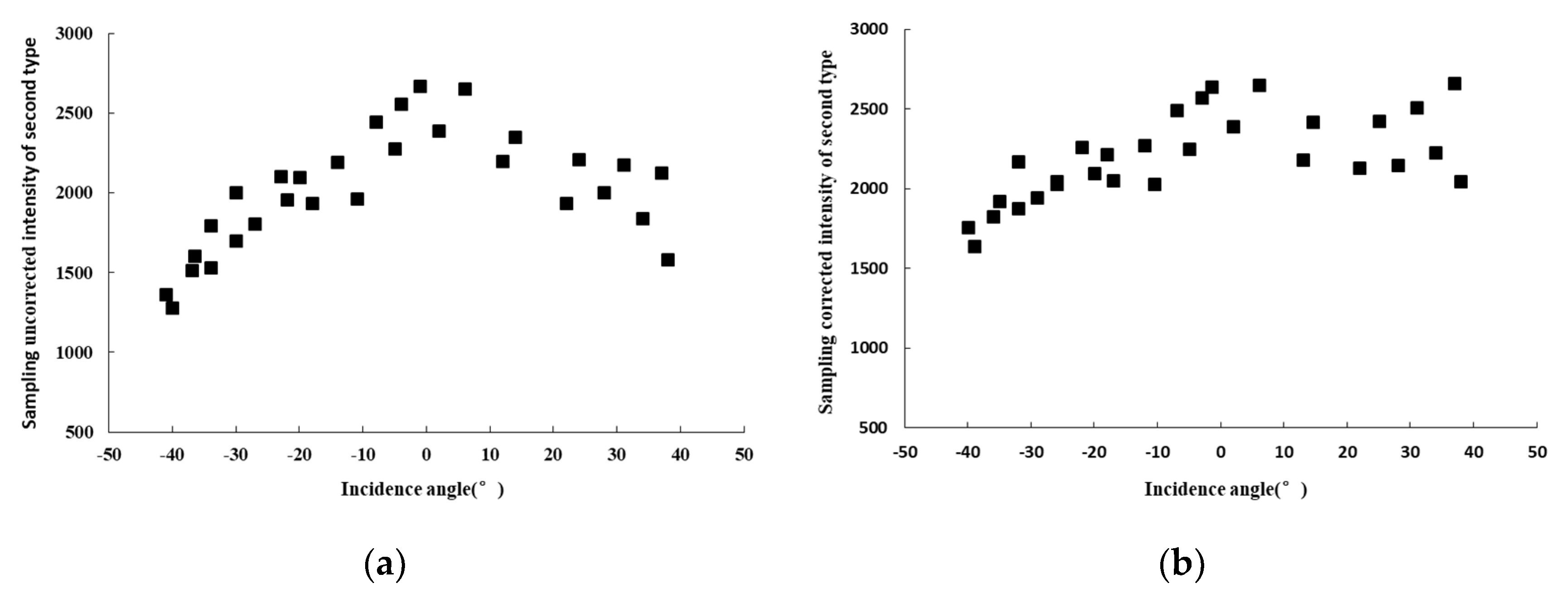

3.1. Assessment of Homogeneous Areas

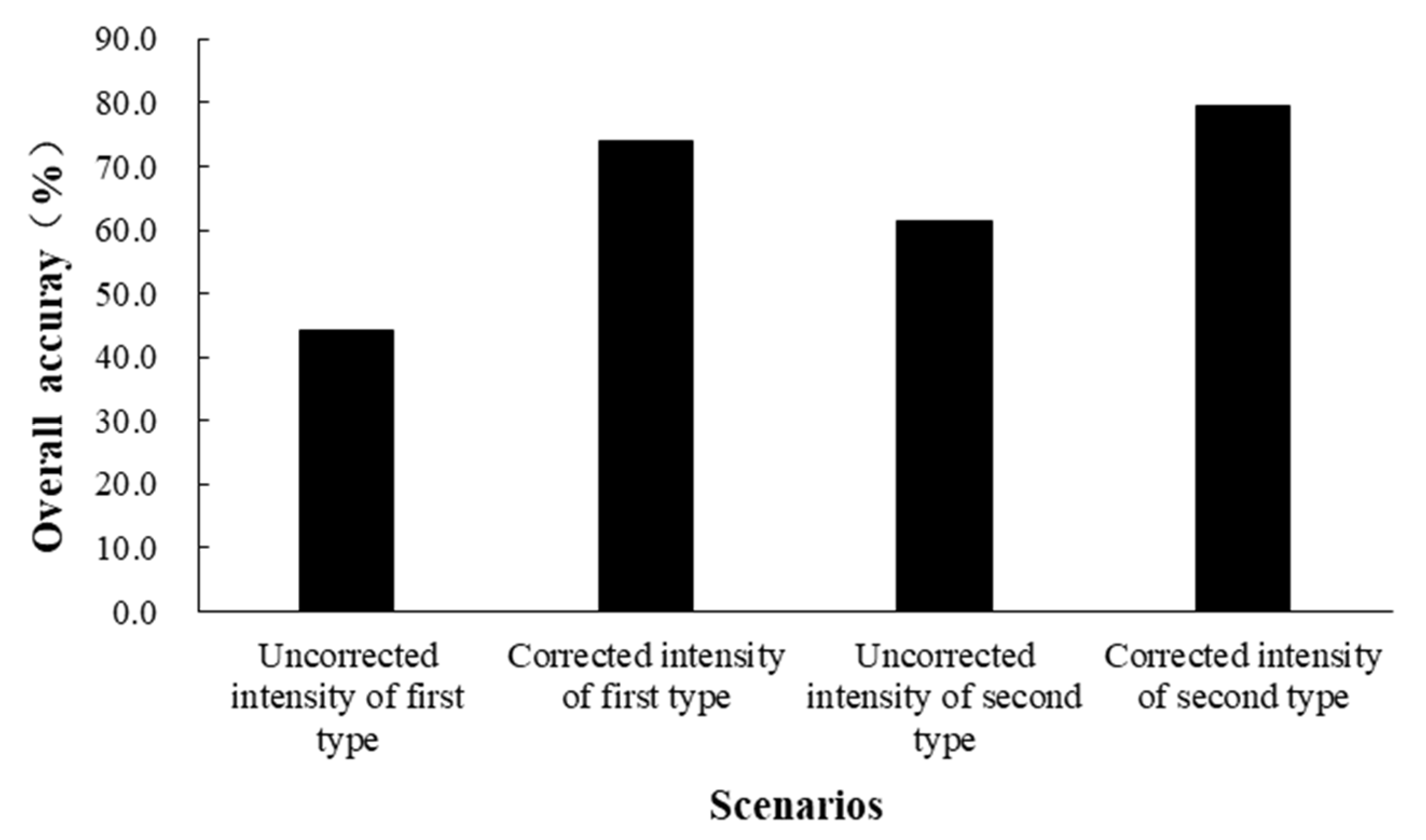

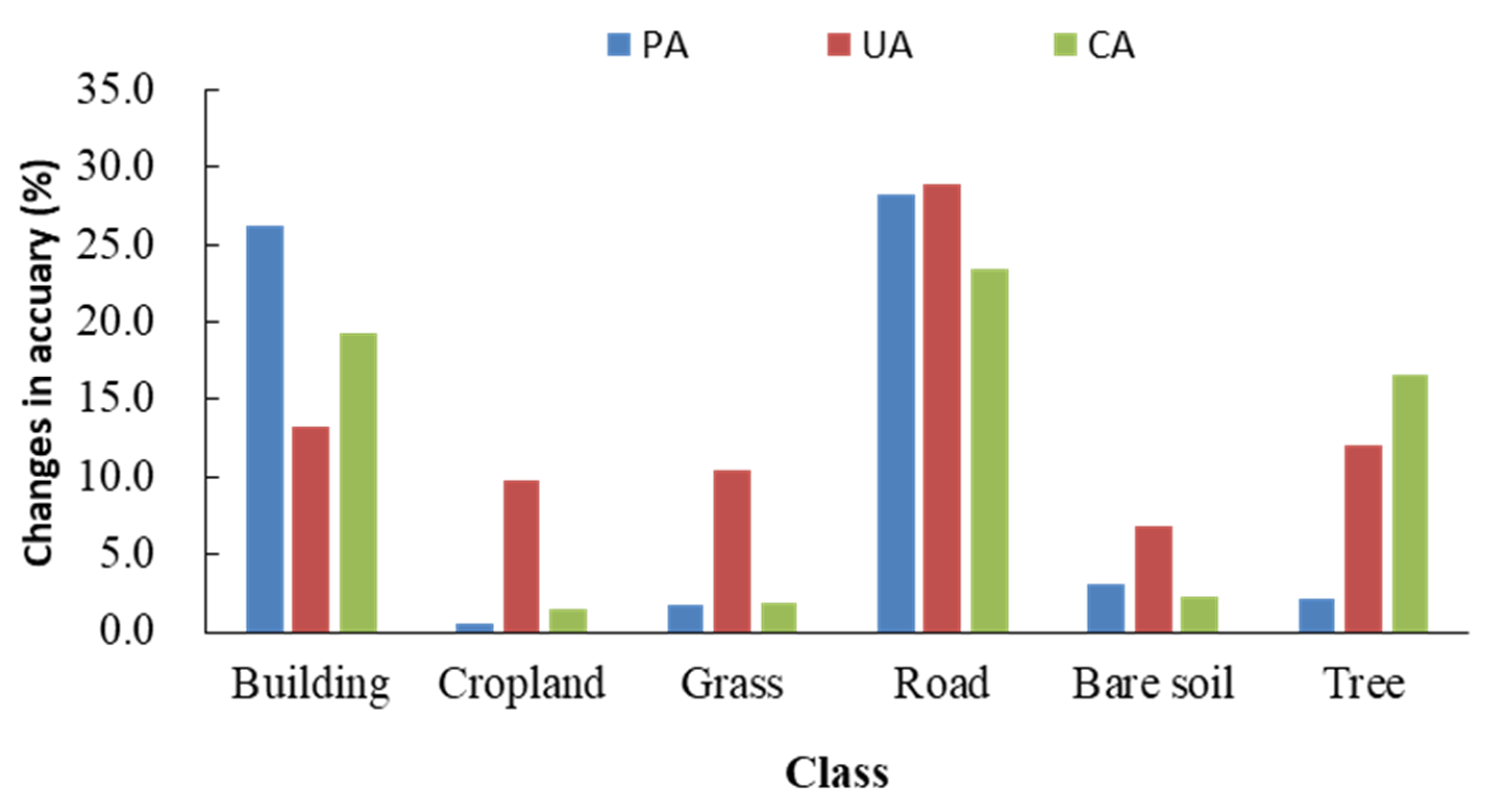

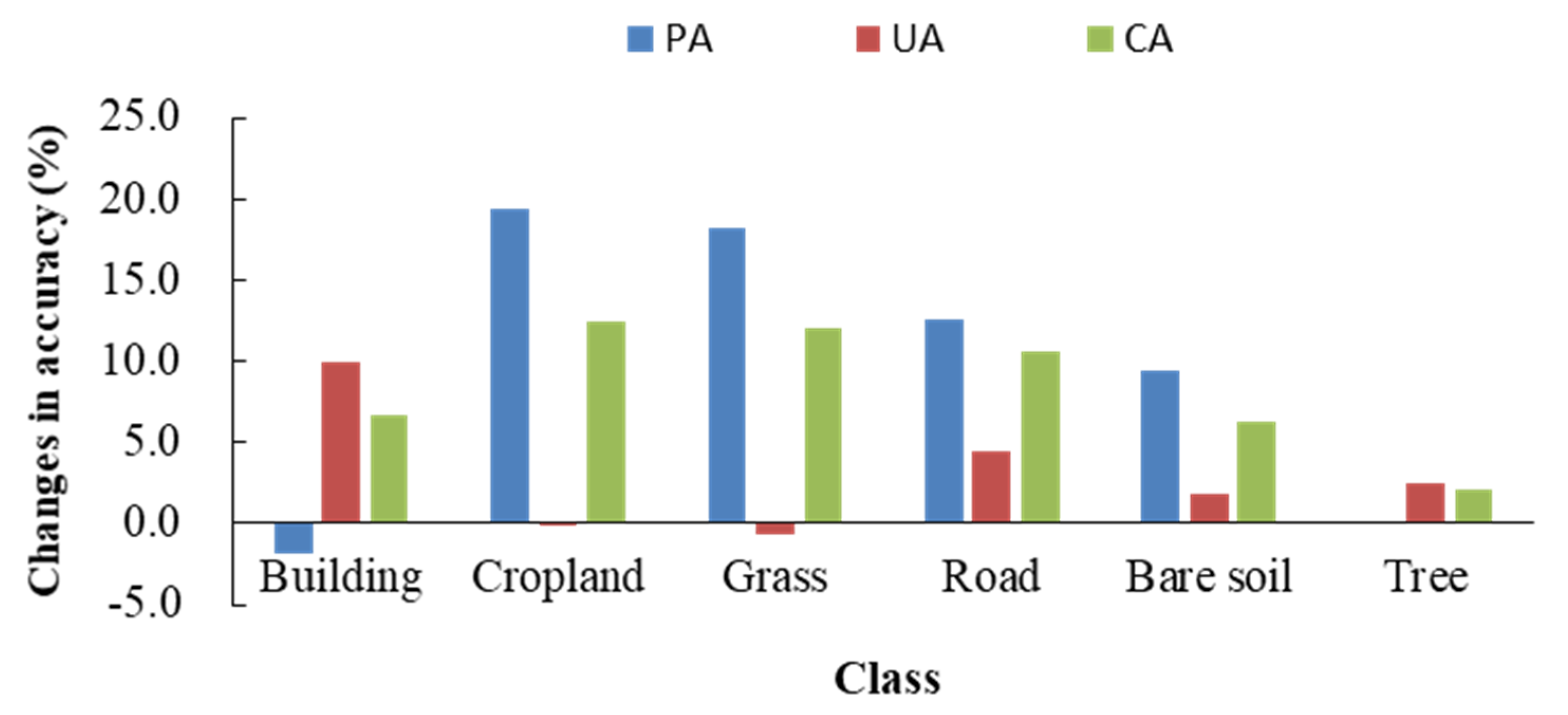

3.2. Comparison of Land Cover Classification Performance Based on Airborne LiDAR Intensity before and after Correction

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Kashani, A.G.; Olsen, M.J.; Parrish, C.E. A Review of LIDAR Radiometric Processing: From Ad Hoc Intensity Correction to Rigorous Radiometric Calibration. Sensors 2015, 15, 28099–28128.

- Eitel, J.U.H.; Höfle, B.; Vierling, L.A.; Abellán, A.; Asner, G.P.; Deems, J.S.; Glennie, C.L.; Joerg, P.C.; LeWinter, A.L.; Magney, T.S.; et al. Beyond 3-D: The new spectrum of lidar applications for earth and ecological sciences. Remote Sens. Environ. 2016, 186, 372–392.

- Huang, J.X.; Sedano, F.; Huang, Y.B.; Ma, H.Y.; Li, X.L.; Liang, S.L.; Tian, L.Y.; Zhang, X.D.; Fan, J.L.; Wu, W.B. Assimilating a synthetic Kalman filter leaf area index series into the WOFOST model to estimate regional winter wheat yield. Agric. For. Meteorol. 2016, 216, 188–202.

- Ramezan, C.A.; Warner, T.A.; Maxwell, A.E. Evaluation of sampling and cross-validation tuning strategies for regional-scale machine learning classification. Remote Sens. 2019, 11, 185.

- Marchi, N.; Pirotti, F.; Lingua, E. Airborne and terrestrial laser scanning data for the assessment of standing and lying deadwood: Current situation and new perspectives. Remote Sens. 2018, 10, 1356.

- Baltsavias, E.P. A comparison between photogrammetry and laser scanning. ISPRS J. Photogramm. Remote Sens. 1999, 54, 83–94.

- Song, J.H.; Han, S.H.; Yu, K.Y.; Kim, Y.I. Assessing the possibility of land-cover classification using LIDAR intensity data. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2002, 34, 259–262.

- Coren, F.; Sterzai, P. Radiometric correction in laser scanning. Int. J. Remote Sens. 2006, 27, 3097–3104.

- Donoghue, D.N.; Watt, P.J.; Cox, N.J.; Wilson, J. Remote sensing of species mixtures in conifer plantations using LIDAR height and intensity data. Remote Sens. Environ. 2007, 110, 509–522.

- Chust, G.; Galparsoro, I.; Borja, A. Coastal and estuarine habitat mapping, using LIDAR height and intensity and multi-spectral imagery. Estuar. Coast. Shelf Sci. 2008, 78, 633–643.

- Gatziolis, D. Dynamic range-based intensity normalization for airborne, discrete return LiDAR data of forest canopies. Photogramm. Eng. Remote Sens. 2011, 77, 251–259.

- Singh, K.K.; Vogler, J.B.; Shoemaker, D.A.; Meentemeyer, R.K. LiDAR-Landsat data fusion for large-area assessment of urban land cover: Balancing spatial resolution, data volume and mapping accuracy. ISPRS J. Photogramm. Remote Sens. 2012, 74, 110–121.

- Yan, W.Y.; Shaker, A. Radiometric Correction and Normalization of Airborne LIDAR Intensity Data for Improving Land-Cover Classification. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7658–7673.

- Zhu, X.; Wang, T.; Darvishzadeh, R.; Skidmore, A.K.; Niemann, K.O. 3D leaf water content mapping using terrestrial laser scanner backscatter intensity with radiometric correction. ISPRS J. Photogramm. Remote Sens. 2015, 110, 14–23.

- You, H.; Wang, T.; Skidmore, A.; Xing, Y. Quantifying the Effects of Normalisation of Airborne LiDAR Intensity on Coniferous Forest Leaf Area Index Estimations. Remote Sens. 2017, 9, 163.

- Goodale, R.; Hopkinson, C.; Colville, D.; Amirault-Langlais, D. Mapping piping plover (Charadrius melodus melodus) habitat in coastal areas using airborne lidar data. Can. J. Remote Sens. 2007, 33, 519–533.

- Im, J.; Jensen, J.R.; Hodgson, M.E. Object-based land cover classification using high-posting-density LIDAR data. GISci. Remote Sens. 2008, 45, 209–228.

- Antonarakis, A.; Richards, K.; Brasington, J. Object-based land cover classification using airborne LiDAR. Remote Sens. Environ. 2008, 112, 2988–2998.

- Buján, S.; González-Ferreiro, E.; Reyes-Bueno, F.; Barreiro-Fernández, L.; Crecente, R.; Miranda, D. Land use classification from LiDAR data and ortho-images in a rural area. Photogramm. Rec. 2012, 27, 401–422.

- Chen, Z.; Gao, B. An Object-Based Method for Urban Land Cover Classification Using Airborne Lidar Data. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2014, 7, 4243–4254.

- Yan, W.Y.; Shaker, A.; El-Ashmawy, N. Urban Land Cover Classification Using Airborne Lidar Data: A Review. Remote Sens. Environ. 2015, 158, 295–310.

- Yan, W.Y.; Shaker, A.; Habib, A.; Kersting, A.P. Improving classification accuracy of airborne LIDAR intensity data by geometric calibration and radiometric correction. ISPRS J. Photogramm. Remote Sens. 2012, 67, 35–44.

- Yan, W.Y.; Shaker, A. Radiometric normalization of overlapping LiDAR intensity data for reduction of striping noise. Int. J. Digit. Earth 2016, 9, 13.

- Xia, S.B.; Chen, D.; Wang, R.S.; Li, J.; Zhang, X.C. Geometric Primitives in LiDAR Point Clouds: A Review. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2020, 13, 685–707.

- Höfle, B.; Pfeifer, N. Correction of laser scanning intensity data: Data and model-driven approaches. ISPRS J. Photogramm. Remote Sens. 2007, 62, 415–433.

- Kaasalainen, S.; Ahokas, E.; Hyyppä, J.; Suomalainen, J. Study of Surface Brightness from Backscattered Laser Intensity: Calibration of Laser Data. IEEE Geosci. Remote Sens. Lett. 2005, 2, 255–259.

- Kaasalainen, S.; Hyyppä, J.; Litkey, P.; Hyyppä, H.; Ahokas, E.; Kukko, A.; Kaartinen, H. Radiometric calibration of ALS intensity. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2007, 36, 201–205.

- Kaasalainen, S.; Kukko, A.; Lindroos, T.; Litkey, P.; Kaartinen, H.; Hyyppä, J.; Ahokas, E. Brightness Measurements and Calibration With Airborne and Terrestrial Laser Scanners. IEEE Trans. Geosci. Remote Sens. 2008, 46, 528–534.

- Kaasalainen, S.; Hyyppä, H.; Kukko, A.; Litkey, P.; Ahokas, E.; Hyyppä, J.; Lehner, H.; Jaakkola, A.; Suomalainen, J.; Akujärvi, A.; et al. Radiometric calibration of LIDAR intensity with commercially available reference targets. IEEE Trans. Geosci. Remote Sens. 2009, 47, 588–598.

- Kaasalainen, S.; Pyysalo, U.; Krooks, A.; Vain, A.; Kukko, A.; Hyyppä, J.; Kaasalainen, M. Absolute Radiometric Calibration of ALS Intensity Data: Effects on Accuracy and Target Classification. Sensors 2011, 11, 10586–10602.

- Tan, K.; Cheng, X.J. Correction of Incidence Angle and Distance Effects on TLS Intensity Data Based on Reference Targets. Remote Sens. 2016, 8, 251.

- Scaioni, M.; Höfle, B.; Baungarten, K.A.P.; Barazzetti, L.; Previtali, M.; Wujanz, D. Methods From Information Extraction From LiDAR Intensity Data and Multispectral LiDAR Technology. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2018, 42, 1503–1510.

- Ding, Q.; Chen, W.; King, B.; Liu, Y.X.; Liu, G.X. Combination of overlap-driven adjustment and Phong model for LIDAR intensity correction. ISPRS J. Photogramm. Remote Sens. 2013, 75, 40–47.

- Korpela, I.S. Mapping of understory lichens with airborne discrete-return LiDAR data. Remote Sens. Environ. 2008, 112, 3891–3897.

- Vain, A.; Yu, X.; Kaasalainen, S.; Hyyppä, J. Correcting airborne laser scanning intensity data for automatic gain control effect. IEEE Geosci. Remote Sens. Lett. 2010, 7, 511–514.

- Korpela, I.; Ørka, H.O.; Hyyppä, J.; Heikkinen, V.; Tokola, T. Range and AGC normalization in airborne discrete-return LIDAR intensity data for forest canopies. ISPRS J. Photogramm. Remote Sens. 2010, 65, 369–379.

- Fang, W.; Huang, X.; Zhang, F.; Li, D. Intensity Correction of Terrestrial Laser Scanning Data by Estimating Laser Transmission Function. IEEE Trans. Geosci. Remote Sens. 2015, 53, 942–951.

- Tan, K.; Cheng, X.J. Intensity data correction based on incidence angle and distance for terrestrial laser scanner. J. Appl. Remote Sens. 2015, 9, 094.

- Calders, K.; Disney, M.I.; Armston, J.; Burt, A.; Brede, B.; Origo, N.; Muir, J.; Nightingale, J. Evaluation of the Range Accuracy and the Radiometric Calibration of Multiple Terrestrial Laser Scanning Instruments for Data Interoperability. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2716–2724.

- Ewijk, K.V.; Treitz, P.; Woods, M.; Jones, T.; Caspersen, J.P. Forest site and type variability in ALS-based forest resource inventory attribute predictions over three Ontario forest sites. Forests 2019, 10, 226.

- Shaker, A.; Yan, W.Y.; El-Ashmawy, N. The effects of laser reflection angle on radiometric correction of the airborne lidar intensity data. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2011, 3812, 213–217.

- Yi, P.Y.; Man, W.; Peng, T.; Qiu, J.T.; Zhao, Y.J.; Zhao, J.F. Calibration algorithm and object tilt angle analysis and calculation for LiDAR intensity data. J. Remote Sens. 2016, 20.

- Baltsavias, E.P. Airborne laser scanning: Basic relations and formulas. ISPRS J. Photogramm. Remote Sens. 1999, 54, 199–214.

- Yuan, X.X.; Zhang, X.P.; Fu, J.H. Transformation of angular elements obtained via a position and orientation system in Gauss-Kruger projection coordinate system. Acta Geod. Cartogr. Sin. 2011, 40, 338–344.

- LAS Extrabytes Implementation in RIEGL Software Whitepaper. Available online: http://www.riegl.com/uploads/tx_pxpriegldownloads/Whitepaper_-_LAS_extrabytes_implementation_in_Riegl_software_01.pdf (accessed on 5 November 2015).

- Oh, D. Radiometric Correction of Mobile Laser Scanning Intensity Data. Master’s Thesis, International Institute for Geoinformation Science and Earth Observation, Enschede, The Netherlands, 2010.

- Fernandez-Delgado, M.; Cernadas, E.; Barro, S. Do we Need Hundreds of Classifiers to Solve Real World Classification Problems? J. Mach. Learn. Res. 2014, 15, 3133–3181.

- Kukko, A.; Kaasalainen, S.; Litkey, P. Effect of incidence angle on laser scanner intensity and surface data. Appl. Opt. 2008, 47, 986–992.

| Types | Total Samples (Pixels) | p Value (Uncorrected Intensity Values of First Type) | p Value (Corrected Intensity Values of First Type) | p Value (Uncorrected Intensity Values of Second Type) | p Value (Corrected Intensity Values of Second Type) |
|---|---|---|---|---|---|
| building | 11,932 | | | | |
| pavement/road | 9003 | 0.000 | 0.000 | 0.000 | 0.000 |
| tree | 7685 | | | | |
| cropland | 3266 | | | | |
| grass | 1730 | | | | |
| bare soil | 211 | | | | |

| Intensity Value | Mean | Standard Deviation | Variation Coefficient |
|---|---|---|---|
| Uncorrected intensity values of first type | 4.84 | 1.74 | 0.36 |
| Corrected intensity values of first type | 6.56 | 0.89 | 0.14 |
| Uncorrected intensity values of second type | 1992.37 | 402.14 | 0.20 |
| Corrected intensity values of second type | 2209.05 | 309.41 | 0.14 |

| Intensity Value | Mean | Standard Deviation | Variation Coefficient |
|---|---|---|---|
| Uncorrected intensity values of first type | 4.80 | 1.45 | 0.30 |
| Corrected intensity values of first type | 6.63 | 0.87 | 0.13 |
| Uncorrected intensity values of second type | 2009.22 | 357.41 | 0.18 |
| Corrected intensity values of second type | 2196.27 | 266.00 | 0.12 |
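The variation coefficient column in the table directly above can be reproduced as the standard deviation divided by the mean. The following is a minimal sketch of that arithmetic; the function name `variation_coefficient` and the dictionary layout are illustrative assumptions, and the input numbers are copied from the table rather than recomputed from the point cloud.

```python
def variation_coefficient(mean: float, std: float) -> float:
    """Coefficient of variation: standard deviation divided by the mean."""
    if mean == 0:
        raise ValueError("mean must be non-zero")
    return std / mean


# (mean, standard deviation) pairs copied from the table directly above.
rows = {
    "uncorrected, first type": (4.80, 1.45),
    "corrected, first type": (6.63, 0.87),
    "uncorrected, second type": (2009.22, 357.41),
    "corrected, second type": (2196.27, 266.00),
}

# Round to two decimals, the precision used in the table.
cvs = {name: round(variation_coefficient(m, s), 2) for name, (m, s) in rows.items()}

for name, cv in cvs.items():
    print(f"{name}: {cv:.2f}")
```

A lower value after correction indicates that intensities within the homogeneous area are more tightly clustered relative to their mean, which is the effect the correction is evaluated on.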

| Dataset | Types | Producer’s Accuracy (%) | User’s Accuracy (%) | Overall Class Accuracy for a Single Class (%) | The Average Rank | p Value |
|---|---|---|---|---|---|---|
| LiDAR | building | 60.68 | 47.43 | 36.28 | 155.10 | 0.000 |
| | cropland | 8.17 | 12.00 | 5.11 | | |
| | grass | 3.19 | 4.74 | 1.94 | 2.45 | |
| | pavement/road | 24.93 | 32.61 | 16.46 | | |
| | bare soil | 1.56 | 1.49 | 0.77 | | |
| | tree | 65.52 | 63.14 | 38.70 | | |
| LiDAR | building | 86.10 | 68.86 | 61.97 | | |
| | cropland | 49.75 | 70.94 | 41.32 | | |
| | grass | 48.59 | 73.37 | 41.31 | 2.48 | |
| | pavement/road | 59.26 | 72.39 | 48.33 | | |
| | bare soil | 68.75 | 66.67 | 51.16 | | |
| | tree | 88.44 | 87.04 | 78.14 | | |
| LiDAR | building | 86.89 | 60.63 | 55.55 | | |
| | cropland | 8.68 | 21.77 | 6.62 | | |
| | grass | 4.88 | 15.20 | 3.83 | 2.47 | |
| | pavement/road | 53.14 | 61.55 | 39.89 | | |
| | bare soil | 4.69 | 8.33 | 3.09 | | |
| | tree | 67.60 | 75.14 | 55.24 | | |
| LiDAR | building | 84.30 | 78.78 | 68.70 | | |
| | cropland | 69.12 | 70.76 | 53.77 | | |
| | grass | 66.79 | 72.65 | 53.37 | | |
| | pavement/road | 71.76 | 76.81 | 58.98 | 2.60 | |
| | bare soil | 78.13 | 68.49 | 57.47 | | |
| | tree | 88.48 | 89.54 | 80.19 | | |
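The single-class accuracy column above appears to follow from the producer's accuracy (PA) and user's accuracy (UA) of the same row. If PA = TP/(TP+FN) and UA = TP/(TP+FP), then a class accuracy defined as TP/(TP+FP+FN) equals PA·UA/(PA+UA−PA·UA). This relation is an assumption inferred from the tabulated numbers, not a definition stated in this text, and the function name below is hypothetical. A minimal sketch:

```python
def single_class_accuracy(pa_percent: float, ua_percent: float) -> float:
    """Class accuracy (%) from producer's and user's accuracy (%).

    Assumes CA = TP / (TP + FP + FN), which in terms of PA and UA is
    PA * UA / (PA + UA - PA * UA). This definition is an assumption
    inferred from the table, not taken from the text.
    """
    pa = pa_percent / 100.0
    ua = ua_percent / 100.0
    if pa == 0.0 and ua == 0.0:
        # No correctly classified samples for this class.
        return 0.0
    return 100.0 * pa * ua / (pa + ua - pa * ua)


# PA/UA pairs copied from the building rows of the table above.
for pa, ua in [(60.68, 47.43), (86.10, 68.86), (84.30, 78.78)]:
    print(f"PA={pa:.2f}  UA={ua:.2f}  CA={single_class_accuracy(pa, ua):.2f}")
```

Because this measure penalizes both omission and commission errors, it is never larger than the smaller of PA and UA, which matches the pattern in the table.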

| Types | Proposed Correction I [1] | | | Proposed Correction II [2] | | | Method I [3] | | | Method II [4] | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | PA (%) | UA (%) | CA (%) | PA (%) | UA (%) | CA (%) | PA (%) | UA (%) | CA (%) | PA (%) | UA (%) | CA (%) |
| building | 86.10 | 68.86 | 61.97 | 84.30 | 78.78 | 68.70 | 84.60 | 46.55 | 42.91 | 84.90 | 48.49 | 44.64 |
| cropland | 49.75 | 70.94 | 41.32 | 69.12 | 70.76 | 53.77 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| grass | 48.59 | 73.37 | 41.31 | 66.79 | 72.65 | 53.37 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| pavement/road | 59.26 | 72.39 | 48.33 | 71.76 | 76.81 | 58.98 | 31.51 | 38.52 | 20.96 | 31.73 | 38.41 | 21.03 |
| bare soil | 68.75 | 66.67 | 51.16 | 78.13 | 68.49 | 57.47 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| tree | 88.44 | 87.04 | 78.14 | 88.48 | 89.54 | 80.19 | 51.04 | 83.33 | 46.31 | 61.29 | 86.75 | 56.04 |
| Overall Accuracy (%) | 74.11 | | | 79.59 | | | 50.02 | | | 52.55 | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wu, Q.; Zhong, R.; Dong, P.; Mo, Y.; Jin, Y. Airborne LiDAR Intensity Correction Based on a New Method for Incidence Angle Correction for Improving Land-Cover Classification. Remote Sens. 2021, 13, 511. https://doi.org/10.3390/rs13030511