Structure Tensor-Based Infrared Small Target Detection Method for a Double Linear Array Detector

Abstract

1. Introduction

1.1. Related Works

1.2. Contributions

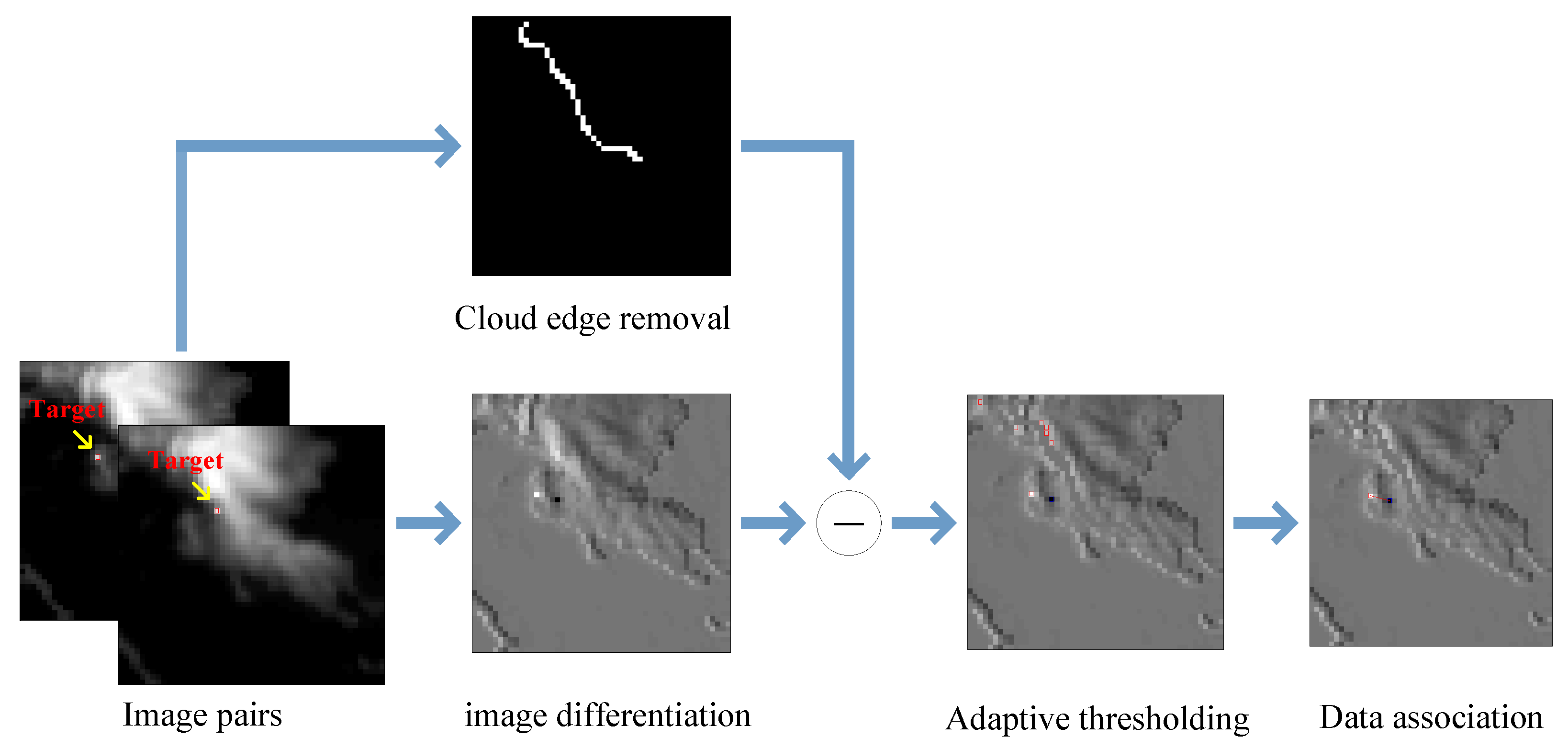

- The structure tensor is introduced for infrared small target detection, where it serves as an adaptive weighting parameter that suppresses strong cloud edges.

- Because exploiting image sequences requires more prior information and heavy data processing, a temporal image differentiation filter is applied instead, using the target's movement to extract target pairs.

- Adaptive-threshold constant false alarm rate (H-CFAR) detection is performed to obtain candidate targets, and data association is then performed to extract positive and negative target pairs.
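The first contribution uses the structure tensor as an adaptive weight that suppresses strong cloud edges. The exact weighting function is not reproduced in this section, so the minimal sketch below assumes a generic isotropy weight w = λ_min / λ_max of the Gaussian-smoothed 2×2 structure tensor: it is close to 1 for blob-like small targets and close to 0 along straight edges, where all gradients share one orientation. The weight multiplies a simple local-contrast map (pixel minus a blurred background estimate). The function names, both σ values, and the contrast measure are hypothetical choices for illustration, not the paper's model.

```python
import numpy as np


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur with reflective borders (NumPy only)."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    k /= k.sum()
    p = np.pad(img, radius, mode="reflect")
    # "valid" convolution along each axis trims the padding back off
    rows = np.apply_along_axis(np.convolve, 1, p, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")


def structure_tensor_eigs(img: np.ndarray, sigma: float):
    """Eigenvalues (largest, smallest) of the smoothed structure tensor."""
    gy, gx = np.gradient(img.astype(float))  # derivatives along axis 0, axis 1
    j11 = gaussian_blur(gx * gx, sigma)
    j12 = gaussian_blur(gx * gy, sigma)
    j22 = gaussian_blur(gy * gy, sigma)
    half_trace = 0.5 * (j11 + j22)
    disc = np.sqrt((0.5 * (j11 - j22)) ** 2 + j12 ** 2)
    return half_trace + disc, np.clip(half_trace - disc, 0.0, None)


def weighted_target_map(img, sigma_tensor=1.5, sigma_bg=4.0, eps=1e-12):
    """Hypothetical edge-suppressed saliency map (not the paper's formula)."""
    img = img.astype(float)
    # Local contrast: pixel minus a smooth background estimate, negatives dropped
    contrast = np.clip(img - gaussian_blur(img, sigma_bg), 0.0, None)
    lam_max, lam_min = structure_tensor_eigs(img, sigma_tensor)
    # Isotropy weight: about 1 for blob-like targets, about 0 along straight edges
    weight = lam_min / (lam_max + eps)
    return contrast * weight


# Synthetic scene: a strong straight "cloud edge" plus one small target
yy, xx = np.mgrid[0:64, 0:64]
scene = np.zeros((64, 64))
scene[:, 20:] = 50.0
scene += 10.0 * np.exp(-((yy - 40) ** 2 + (xx - 45) ** 2) / 2.0)
m = weighted_target_map(scene)
print(np.unravel_index(np.argmax(m), m.shape))
```

Without the weight, the bright side of the step edge would give a contrast of roughly half the step height, well above the target's; with the isotropy weight the edge response collapses toward zero while the symmetric target keeps a weight near 1.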

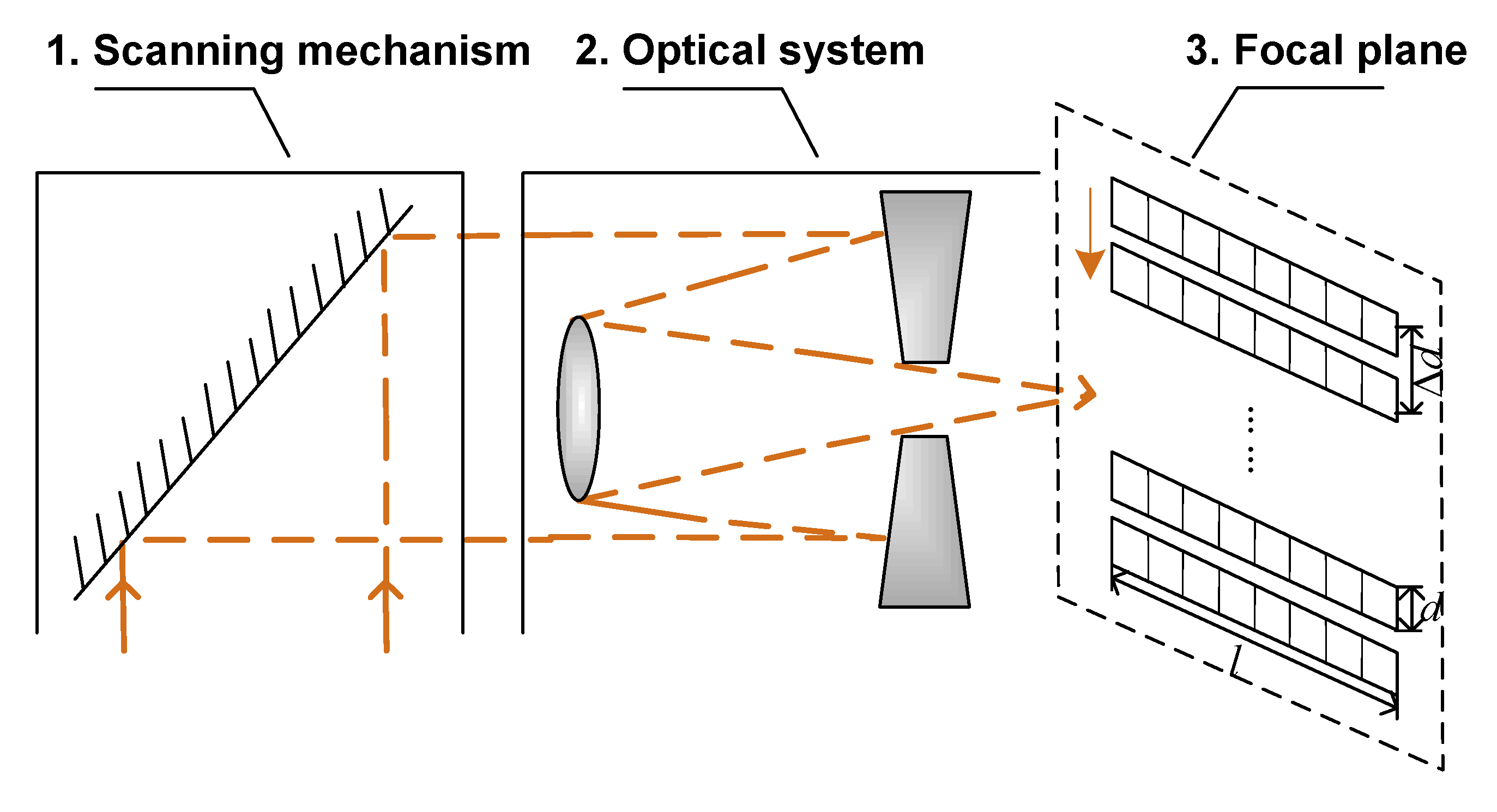

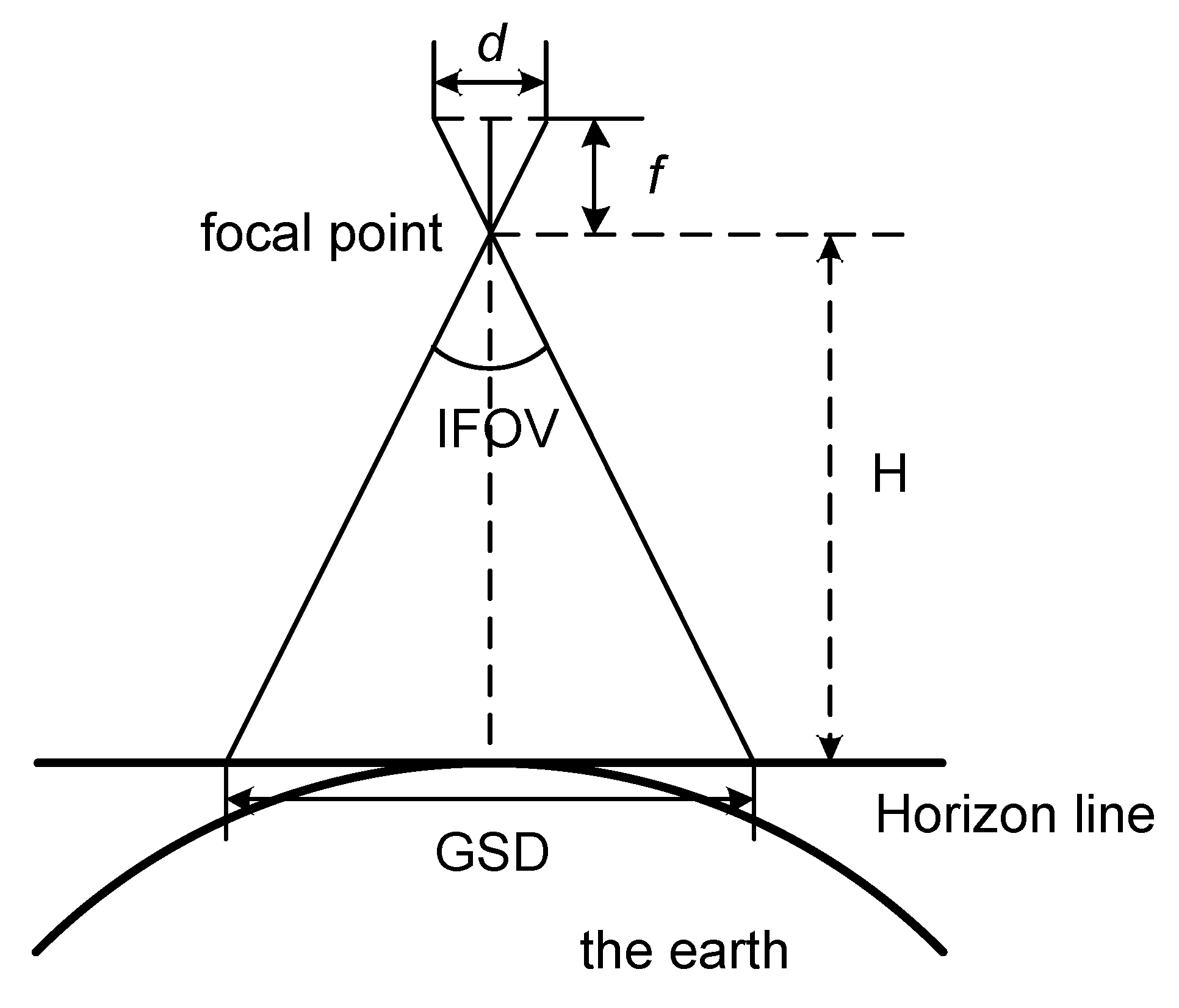

2. The Double Linear Array Detector

3. Target Detection Model

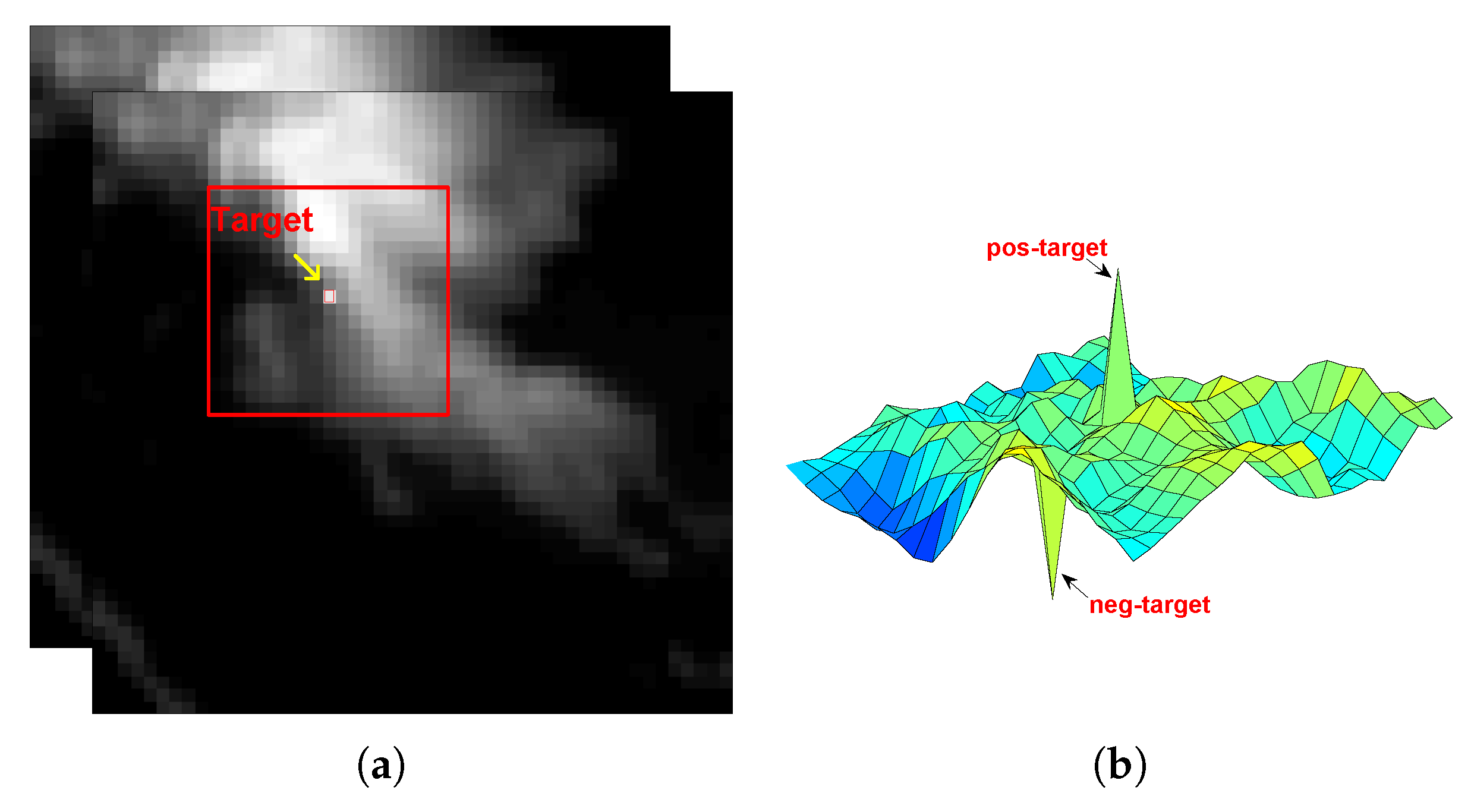

Temporal Differentiation of Image Pairs
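The temporal differentiation filter, threshold-based candidate extraction, and positive/negative pair association named among the contributions can be illustrated with a minimal sketch. It assumes two perfectly co-registered frames `img_a` and `img_b` from the two linear arrays: the static background cancels in `img_a - img_b`, while a moving target leaves a positive peak at its position in `img_a` and a negative peak at its position in `img_b`. The global mean + k·σ threshold is a simple stand-in, not the paper's H-CFAR, and the names `detect_target_pairs`, `local_peaks`, `associate` and the parameters `k` and `max_shift` are hypothetical.

```python
import numpy as np


def local_peaks(resp: np.ndarray, thr: float) -> list[tuple[int, int]]:
    """Pixels above `thr` that are maxima of their 3x3 neighbourhood."""
    h, w = resp.shape
    p = np.pad(resp, 1, mode="constant", constant_values=-np.inf)
    neigh = np.stack([p[dy:dy + h, dx:dx + w]
                      for dy in range(3) for dx in range(3) if (dy, dx) != (1, 1)])
    mask = (resp > thr) & (resp >= neigh.max(axis=0))
    return [(int(r), int(c)) for r, c in np.argwhere(mask)]


def associate(pos, neg, max_shift):
    """Greedy nearest-neighbour pairing of positive and negative candidates."""
    pairs, used = [], set()
    for p in pos:
        best, best_d = None, max_shift
        for j, n in enumerate(neg):
            d = float(np.hypot(p[0] - n[0], p[1] - n[1]))
            if j not in used and d <= best_d:
                best, best_d = j, d
        if best is not None:
            used.add(best)
            pairs.append((p, neg[best]))
    return pairs


def detect_target_pairs(img_a, img_b, k=4.0, max_shift=6.0):
    # Temporal difference: stationary clutter cancels, a mover gives a +/- pair
    diff = img_a.astype(float) - img_b.astype(float)
    mu, sd = diff.mean(), diff.std()
    # Global mean + k*sigma threshold (stand-in for the paper's H-CFAR)
    pos = local_peaks(diff - mu, k * sd)
    neg = local_peaks(mu - diff, k * sd)
    return associate(pos, neg, max_shift)


def blob(r, c, yy, xx, amp=20.0):
    """Small Gaussian target of unit spread centred at (r, c)."""
    return amp * np.exp(-((yy - r) ** 2 + (xx - c) ** 2) / 2.0)


rng = np.random.default_rng(0)
yy, xx = np.mgrid[0:64, 0:64]
background = 100 + 30 * np.sin(xx / 7.0) * np.cos(yy / 9.0)  # static clutter
img_a = background + blob(30, 30, yy, xx) + rng.normal(0, 0.5, (64, 64))
img_b = background + blob(30, 34, yy, xx) + rng.normal(0, 0.5, (64, 64))
pairs = detect_target_pairs(img_a, img_b)
print(pairs)
```

Here the target moves four pixels between the two frames, so a single positive/negative pair is expected while the sinusoidal clutter cancels. Real double linear array data would first need the two arrays registered to each other, which this sketch does not attempt.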

4. Experimental Results and Discussions

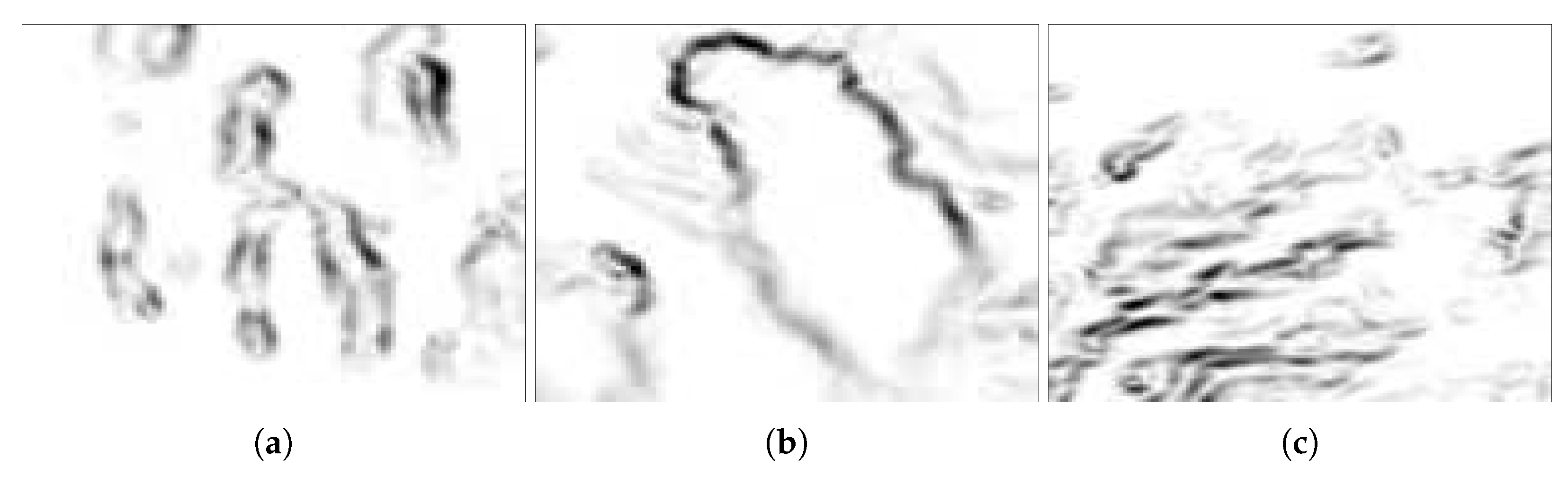

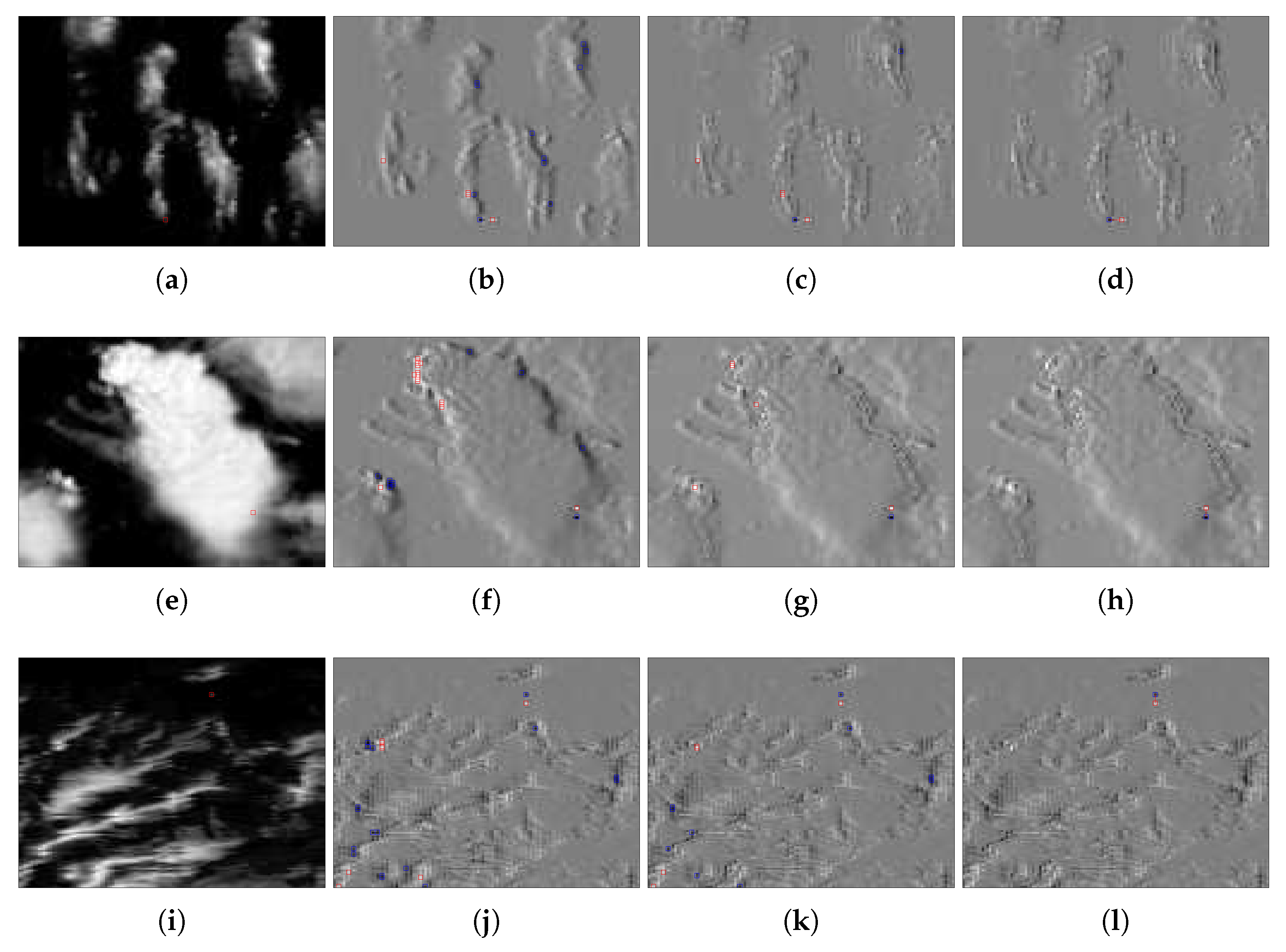

4.1. Simulation Scenes

4.2. Experiments on Simulation Scenes

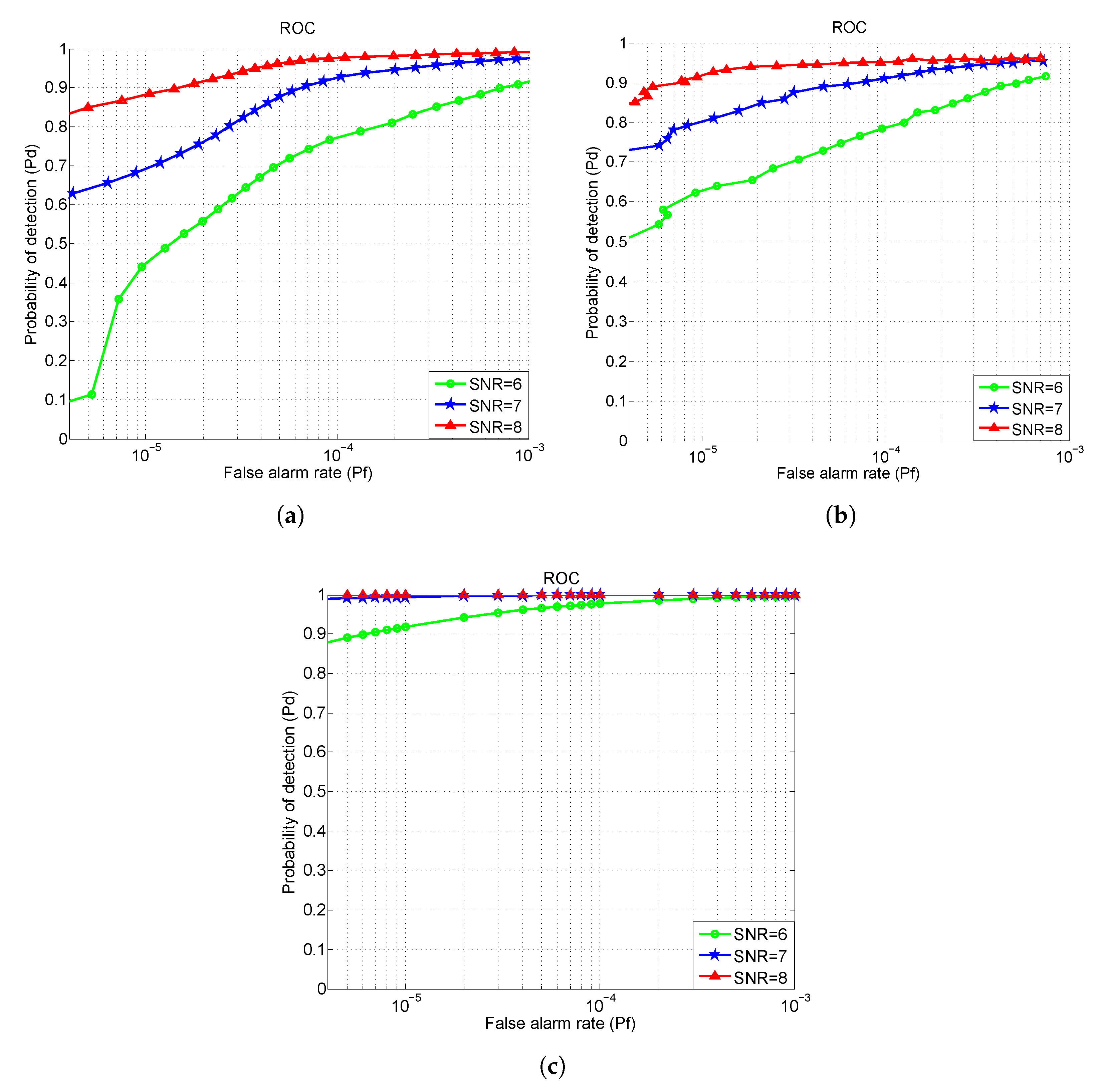

4.3. ROC Curves Evaluation

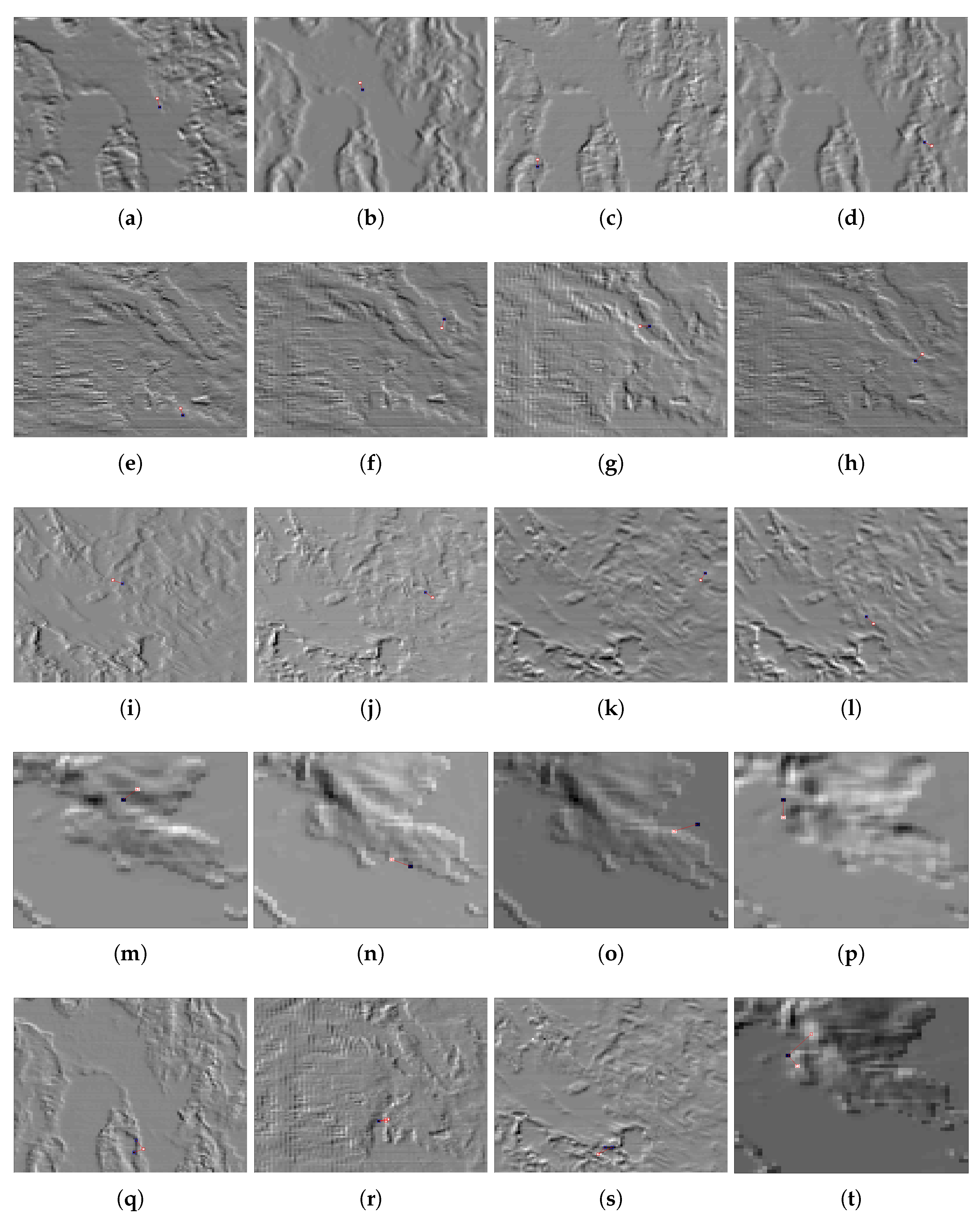

4.4. Evaluation of the Proposed Detection Framework

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References


| SCR | Temporal | Spatial-Temporal | Theory | Temporal | Spatial-Temporal | Theory |
|---|---|---|---|---|---|---|
| 6 | 11.39% | 54.40% | 88.98% | 44.03% | 63.93% | 91.90% |
| 7 | 65.53% | 74.16% | 99.02% | 70.67% | 81.06% | 99.38% |
| 8 | 84.93% | 86.53% | 99.97% | 88.39% | 92.74% | 99.98% |


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, J.; Wang, L.; Yu, J.; Pan, Z. Structure Tensor-Based Infrared Small Target Detection Method for a Double Linear Array Detector. Remote Sens. 2022, 14, 4785. https://doi.org/10.3390/rs14194785
