1. Introduction

Wheat lodging is one of the main causes of wheat yield reduction [1]; it usually appears as obliquely or horizontally distributed crop stalks [2]. Lodging in wheat often results from the inadequate standing power of the crop, mainly caused by excess nitrogen [3,4]. In addition to excess nitrogen, factors such as rain, wind, topography, and soil [5,6,7] also contribute to wheat lodging. Easson et al. [8] showed that as little as 4 mm of rainfall could decrease soil strength and increase the risk of lodging. Strong winds and rainstorms are major causes of large-area wheat lodging [9]. Lodging makes mechanical harvesting difficult, leaves crops vulnerable to disease [10], and hinders photosynthesis [11], thereby reducing grain quality and yield [12]. Timely and accurate extraction of wheat lodging information benefits yield prediction and is essential for agricultural insurance claims. Lodging areas must therefore be detected and extracted accurately and quickly to support decision-making in insurance loss assessment and post-disaster agricultural risk management.

Traditional wheat lodging detection relies on field measurements to mark and identify lodging areas, which is time-consuming and laborious and can even damage crops during measurement [13,14,15]. With the development of remote sensing, extensive studies have detected and extracted crop lodging information using various sensors, such as visible light sensors [16], infrared sensors [17], and microwave sensors [18]. Many useful features from different remote sensing techniques, including spectral characteristics [19,20], object height [21,22], thermal infrared brightness temperature [23], and texture information [24,25,26], have been used to identify crop lodging areas. Zhou et al. [21] used a canopy height model to retrieve maize canopy height from light detection and ranging (LiDAR) data acquired by an unmanned aerial vehicle (UAV), showing that plant height can be used to assess the lodging degree of maize (R² = 0.964). Wang et al. [20] combined synthetic aperture radar (SAR) features from Sentinel-1 with spectral indices (SIs) from Sentinel-2 to identify lodged rice and constructed an optimal sensitive parameter selection (OSPL) model for lodged rice detection. OSPL distinguishes lodged rice from healthy rice by selecting the best SI and SAR features for classification, with an accuracy of 91.29%. Texture information extracted from optical UAV data with the gray-level co-occurrence matrix (GLCM) is also beneficial for crop lodging extraction. Rajapaksa et al. [24] used GLCM, local binary patterns (LBP), and Gabor filters to extract texture features from UAV images and trained a support vector machine (SVM), reaching prediction accuracies of 97.6% and 96.4% for lodged rapeseed and wheat, respectively. Liu et al. [25] used a principal component analysis (PCA) transform, GLCM, and an object-oriented method to extract image features, achieving a user’s accuracy of 81.86% for wheat lodging area detection. Exploiting the temperature difference before and after a lodging event, Liu et al. [17] constructed a rice lodging recognition model using particle swarm optimization and an SVM with thermal-infrared features; combining visible and thermal-infrared image features significantly improved the recognition accuracy of rice lodging. Cao et al. [23] proposed a wheat lodging extraction method based on a watershed algorithm (WA) and an adaptive threshold segmentation algorithm (ATS) and showed that lodged wheat has higher spectral reflectance and temperature than non-lodged wheat; the hybrid WA and ATS algorithm achieved a classification accuracy of 93.58%. These traditional feature extraction methods can effectively extract crop lodging information from remote sensing images. However, we have found no studies that identify crop lodging using edge features generated by edge operators. Because lodged and non-lodged crops are separated by clear boundaries, edge feature extraction is necessary for this research.
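To make the GLCM texture features mentioned above concrete, the following is a minimal NumPy sketch of a normalized, symmetric co-occurrence matrix for a single pixel offset, with three common Haralick-style statistics. It is an illustration under stated assumptions, not the procedure of any cited study: the quantization level count, the default offset (distance 1, horizontal), and the function names `glcm` and `texture_stats` are hypothetical choices.

```python
import numpy as np

def glcm(image, levels, dx=1, dy=0):
    """Normalized symmetric GLCM for one non-negative offset (dx, dy).

    `image` must already be quantized to integers in [0, levels).
    """
    image = np.asarray(image, dtype=np.intp)
    h, w = image.shape
    ref = image[: h - dy, : w - dx]   # reference pixels
    nbr = image[dy:, dx:]             # neighbors at offset (dy, dx)
    counts = np.zeros((levels, levels), dtype=np.float64)
    # Count each (reference level, neighbor level) pair
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1)
    counts = counts + counts.T        # count both directions (symmetric GLCM)
    return counts / counts.sum()      # normalize to joint probabilities

def texture_stats(p):
    """Contrast, homogeneity, and energy of a normalized GLCM."""
    i, j = np.indices(p.shape)
    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "homogeneity": float(np.sum(p / (1.0 + (i - j) ** 2))),
        "energy": float(np.sqrt(np.sum(p ** 2))),
    }

# Classic 4-level example image
img = np.array([[0, 0, 1, 1],
                [0, 0, 1, 1],
                [0, 2, 2, 2],
                [2, 2, 3, 3]])
p = glcm(img, levels=4)
stats = texture_stats(p)
```

In practice such statistics are usually computed in sliding windows and stacked as per-pixel features for a classifier such as an SVM; a uniform window yields zero contrast and unit homogeneity, while window-scale disorder like lodged stalks raises contrast.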

Most current remote sensing studies on crop lodging detection and extraction use UAV data. Tian et al. [27] constructed a rice lodging detection model from the spectral reflectance, vegetation indices (VIs), texture, and color features of UAV images; the spectral reflectance of the green- and red-edge bands and the green normalized difference vegetation index (GNDVI) were sensitive to rice lodging, and the model detected the lodged class with an accuracy of 98.74%. Wang et al. [28] used a UAV carrying an RGB camera to compare various color features and found that the mean G/B texture feature was the optimal indicator. An unsupervised classification based on this indicator distinguished lodged from non-lodged wheat with an accuracy of 86.15% for the lodged wheat class. Chauhan et al. [29] used nine-band multispectral UAV data to compare different grades of lodging severity and examined the variation in spectral reflectance in each band. The more severe the lodging, the greater the spectral reflectance, particularly in the green-edge, red-edge, and near-infrared (NIR) bands; nearest-neighbor classification reached an overall accuracy of 90%. Because various features derived from UAV data can serve as inputs to machine learning algorithms, Zhang et al. [30] fed five features (GLCM, local binary pattern, Gabor, intensity, and Hu moments) into three machine learning classifiers and compared the three algorithms. They found that combining UAV RGB images with a deep learning algorithm (GoogLeNet) achieved a wheat lodging detection accuracy of up to 93%, making it a simple and reliable tool for wheat lodging detection. Because the spatial resolution of UAV data is much higher than that of satellite data, more detailed information can be extracted, and crop lodging detection based on UAV data is therefore highly accurate. However, limited flight altitude and endurance prevent UAVs from being used for large-scale lodging detection. In addition, UAV data preprocessing (especially radiometric calibration) differs among UAV platforms because of their different sensors and calibration panels. Satellite images cover much wider swaths than UAV images, up to tens of kilometers, and low-orbiting satellites can achieve sub-meter resolution and short revisit periods. Satellites can thus detect crop lodging over large areas and serve as tools for large-scale lodging area mapping. However, studies on crop lodging detection using optical satellite imagery are limited. A maize lodging monitoring model based on random forest (RF) and partial least squares (PLS) [31] enabled large-scale monitoring of maize lodging levels with GaoFen-1 satellite images; RF reached an accuracy of 79% on lodging samples, outperforming PLS. Other researchers have combined SAR images from Sentinel-1 with multispectral images from Sentinel-2 to assess losses caused by crop lodging. For example, Abhishek et al. [32] used Sentinel-2 data to map jute distribution and Sentinel-1 data to help distinguish jute vitality categories, finding that 12.46% of the total jute area in the study region was affected by a super cyclonic storm. Chauhan et al. [33] applied time-series analysis to Sentinel-1 and Sentinel-2 data to detect lodging incidence in wheat, demonstrating that dense time series of SAR and optical data can detect wheat lodging and recognize its severity. Nevertheless, the crop lodging monitoring results in these studies are relatively coarse. One reason is that the satellite data used have lower spatial resolution than sub-meter satellite imagery; another is that these traditional methods depend on the number and selection of samples.
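Vegetation indices such as the GNDVI noted above are simple band ratios. As a minimal sketch, assuming co-registered reflectance arrays for the NIR, green, and red bands, GNDVI = (NIR − Green)/(NIR + Green) and NDVI = (NIR − Red)/(NIR + Red) can be computed and stacked as extra input channels. The reflectance values below are hypothetical, and which VIs the proposed network actually uses is not stated in this section.

```python
import numpy as np

def normalized_difference(a, b, eps=1e-12):
    """(a - b) / (a + b), returning 0 where a + b is (near) zero."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    denom = a + b
    valid = np.abs(denom) > eps
    safe = np.where(valid, denom, 1.0)  # avoid division by zero
    return np.where(valid, (a - b) / safe, 0.0)

def gndvi(nir, green):
    """Green normalized difference vegetation index."""
    return normalized_difference(nir, green)

def ndvi(nir, red):
    """Normalized difference vegetation index."""
    return normalized_difference(nir, red)

# Hypothetical 2 x 2 reflectance patches for three bands
green = np.array([[0.08, 0.10], [0.12, 0.09]])
red = np.array([[0.05, 0.06], [0.09, 0.04]])
nir = np.array([[0.45, 0.40], [0.30, 0.50]])

# Stack the indices as channels with shape (2, H, W)
vi_stack = np.stack([gndvi(nir, green), ndvi(nir, red)])
```

Guarding the denominator keeps no-data pixels (all bands zero) from producing NaN values that would otherwise propagate through a network.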

With the rapid development of deep learning, semantic segmentation neural networks have shown powerful feature extraction abilities in recent years, which can be used to build effective crop lodging detection schemes. Yang et al. [34] established semantic segmentation network models to identify rice lodging using VIs, achieving an accuracy of 94.43% with FCN-AlexNet. Zhang et al. [35] proposed a method combining transfer learning with the DeepLabv3+ network to extract wheat lodging areas at multiple growth stages; it predicted wheat lodging better than U-Net. Song et al. [36] proposed an image fusion-based method for sunflower lodging recognition, in which low-resolution multispectral images were fused with high-resolution visible images to obtain high-resolution multispectral images. Skip connections, depthwise separable convolution, and a conditional random field were used to improve SegNet, and the segmentation accuracy reached 89.8%. Su et al. [37] improved U-Net by combining DenseNet dense blocks with skip connections, achieving an accuracy of 97.30% on rice lodging images. Unfortunately, these improved neural networks are all based on UAV data; compared with UAV data, satellite data exhibit high interclass homogeneity and intraclass heterogeneity owing to their relatively low spatial resolution. To the best of our knowledge, no semantic segmentation neural network has been proposed for crop lodging detection from satellite images.
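The studies above report different accuracy measures (overall accuracy, user’s accuracy, per-class accuracy), which are not directly comparable. As a minimal sketch, assuming binary masks in which 1 marks lodged and 0 marks non-lodged pixels, the common measures can be derived from the same confusion-matrix counts; the function name `lodging_metrics` and the inclusion of intersection over union (IoU) are illustrative assumptions rather than the metrics of any specific cited work.

```python
import numpy as np

def lodging_metrics(pred, truth):
    """Binary accuracy measures; 1 = lodged, 0 = non-lodged."""
    pred = np.asarray(pred).ravel().astype(bool)
    truth = np.asarray(truth).ravel().astype(bool)
    tp = int(np.sum(pred & truth))    # lodged pixels labeled lodged
    fp = int(np.sum(pred & ~truth))   # non-lodged pixels labeled lodged
    fn = int(np.sum(~pred & truth))   # lodged pixels that were missed
    tn = int(np.sum(~pred & ~truth))  # non-lodged pixels labeled non-lodged

    def ratio(num, den):
        return num / den if den else float("nan")

    return {
        "overall_accuracy": ratio(tp + tn, tp + fp + fn + tn),
        "users_accuracy": ratio(tp, tp + fp),      # precision of the lodged class
        "producers_accuracy": ratio(tp, tp + fn),  # recall of the lodged class
        "iou_lodged": ratio(tp, tp + fp + fn),
    }

truth = np.array([[1, 1, 0, 0],
                  [1, 1, 0, 0]])
pred = np.array([[1, 0, 0, 0],
                 [1, 1, 1, 1]])
m = lodging_metrics(pred, truth)
```

Here overall accuracy is 0.625 while the lodged-class IoU is only 0.5, which illustrates why a single "accuracy" figure can overstate boundary quality when non-lodged pixels dominate a scene.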

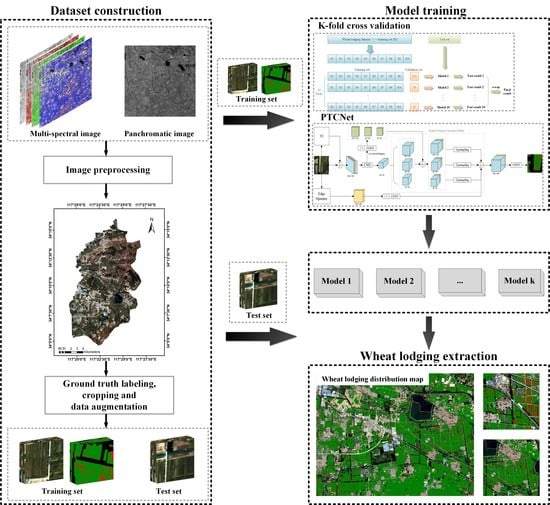

Large-scale detection of wheat lodging is challenging because the UAV images used in previous studies cover small areas. Classical semantic segmentation networks do not account for the characteristics of wheat lodging, so they cannot clearly extract the boundaries of wheat lodging areas. The main objective of this study is to propose a semantic segmentation neural network that extracts wheat lodging areas from satellite images with sub-meter spatial resolution. First, wheat lodging datasets were constructed from satellite and UAV imagery. Next, the proposed network incorporated VIs and combined low-level features with high-level features at multiple scales. Edge features generated by three edge operators were then concatenated with upsampled feature maps to enhance boundary extraction for lodged wheat. Finally, the proposed network achieved accurate, large-scale detection of wheat lodging, which is significant for loss assessment and agricultural insurance claims.
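The edge-feature step described above (edge maps from three operators concatenated with upsampled feature maps) can be illustrated at the array level. This section does not name the three operators, so Sobel, Prewitt, and Laplacian are assumed here purely for illustration, as is nearest-neighbor upsampling (networks commonly use bilinear interpolation or transposed convolution instead). The function names are hypothetical, and this is a sketch of channel-wise concatenation, not the authors’ network.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float64)
PREWITT_X = np.array([[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]], dtype=np.float64)
LAPLACIAN = np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=np.float64)

def filter3x3(img, kernel):
    """3x3 cross-correlation with edge-replicated padding (same output size)."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def gradient_magnitude(img, kx):
    """Combine horizontal (kx) and vertical (kx.T) gradient responses."""
    return np.hypot(filter3x3(img, kx), filter3x3(img, kx.T))

def edge_features(img):
    """Three edge maps stacked as channels with shape (3, H, W)."""
    img = np.asarray(img, dtype=np.float64)
    return np.stack([
        gradient_magnitude(img, SOBEL_X),
        gradient_magnitude(img, PREWITT_X),
        np.abs(filter3x3(img, LAPLACIAN)),
    ])

def upsample_nearest(features, factor):
    """Nearest-neighbor upsampling of a (C, h, w) feature map."""
    return features.repeat(factor, axis=1).repeat(factor, axis=2)

def fuse(features, img, factor):
    """Concatenate upsampled features with edge maps along the channel axis."""
    up = upsample_nearest(features, factor)
    edges = edge_features(img)
    if up.shape[1:] != edges.shape[1:]:
        raise ValueError("upsampled features and edge maps must share H and W")
    return np.concatenate([up, edges], axis=0)

# Toy band: a vertical boundary between columns 3 and 4
img = np.zeros((8, 8))
img[:, 4:] = 1.0
feats = np.ones((16, 4, 4))       # hypothetical 16-channel decoder output
fused = fuse(feats, img, factor=2)
```

The edge channels are zero inside homogeneous regions and peak along the boundary, so a subsequent convolution can use them as an explicit cue for where lodged and non-lodged areas meet.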