Integrating a UAV-Derived DEM in Object-Based Image Analysis Increases Habitat Classification Accuracy on Coral Reefs

Abstract

1. Introduction

2. Materials and Methods

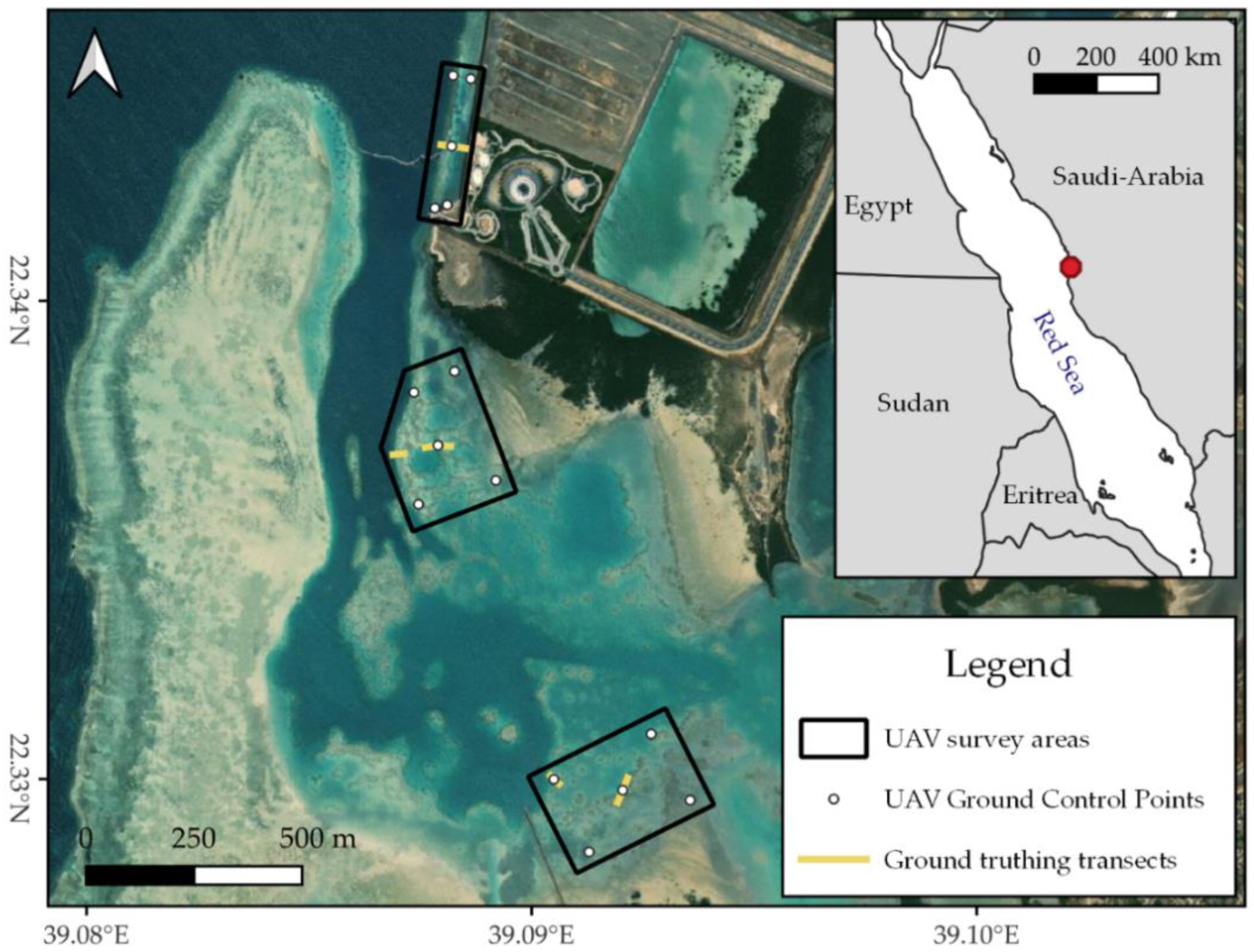

2.1. Study Area

2.2. Aerial Data Collection

2.3. PPK Geolocation of UAV Images and GCPs

2.4. Ground Truth Data Collection

2.5. Structure-from-Motion Processing

2.6. Geomorphometric Variables

2.7. Habitat Classification through Object-Based Image Analysis

2.8. Accuracy Assessment of the Habitat Classification
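This excerpt does not describe how the habitat maps were assessed. A common way to summarise such an assessment is a confusion matrix of map labels against ground-truth labels. Overall accuracy, producer's and user's accuracy, and Cohen's kappa all follow from that matrix, and the Landis and Koch (1977) bands are one conventional way to describe kappa in words. The sketch below treats all of this as an assumption, not the authors' procedure. The function names and the class counts are hypothetical.

```python
from typing import Sequence


def accuracy_report(matrix: Sequence[Sequence[int]]) -> dict:
    """Overall accuracy, per-class producer's/user's accuracy and Cohen's kappa.

    matrix[i][j] is the number of validation samples of reference class j
    that the map assigns to class i (rows = map, columns = reference).
    """
    k = len(matrix)
    n = sum(sum(row) for row in matrix)
    diag = sum(matrix[i][i] for i in range(k))
    row_tot = [sum(matrix[i]) for i in range(k)]
    col_tot = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    p_o = diag / n  # observed agreement (overall accuracy)
    p_e = sum(row_tot[i] * col_tot[i] for i in range(k)) / n ** 2  # chance agreement
    kappa = (p_o - p_e) / (1 - p_e)
    # Producer's accuracy: correct / reference total (omission side).
    producers = [matrix[i][i] / col_tot[i] if col_tot[i] else float("nan") for i in range(k)]
    # User's accuracy: correct / mapped total (commission side).
    users = [matrix[i][i] / row_tot[i] if row_tot[i] else float("nan") for i in range(k)]
    return {"overall": p_o, "kappa": kappa, "producers": producers, "users": users}


def landis_koch(kappa: float) -> str:
    """Verbal strength-of-agreement band from Landis and Koch (1977)."""
    if kappa < 0:
        return "poor"
    for upper, label in ((0.20, "slight"), (0.40, "fair"), (0.60, "moderate"), (0.80, "substantial")):
        if kappa <= upper:
            return label
    return "almost perfect"


# Hypothetical 3-class validation counts (rows: map, columns: reference).
example = [[50, 5, 0], [4, 40, 6], [1, 5, 39]]
report = accuracy_report(example)
print(f"OA = {report['overall']:.2f}, kappa = {report['kappa']:.2f} ({landis_koch(report['kappa'])})")
```

With these made-up counts the script prints `OA = 0.86, kappa = 0.79 (substantial)`. Kappa is lower than overall accuracy because part of the raw agreement would be expected by chance, given the class proportions.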

3. Results

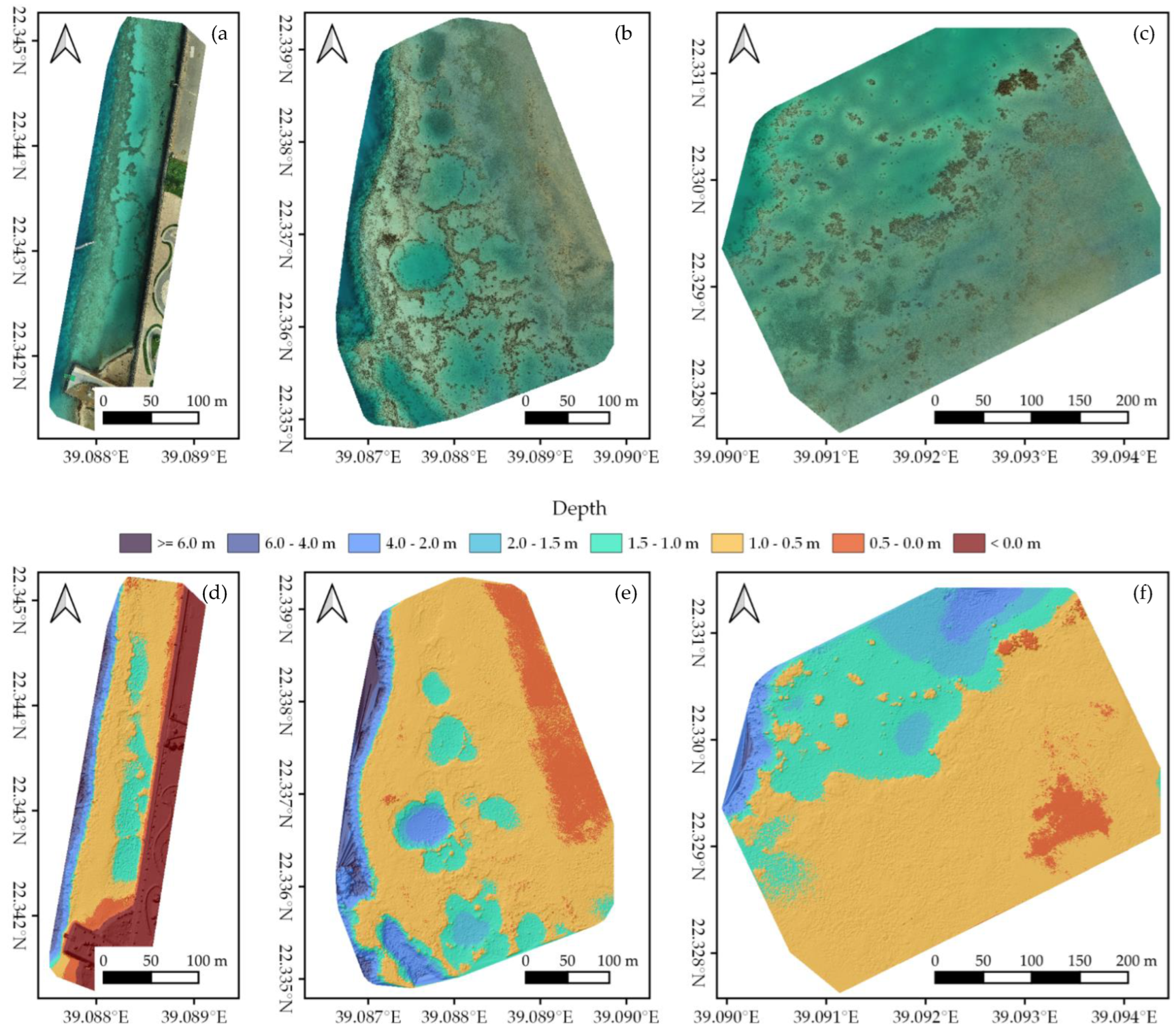

3.1. Structure-from-Motion Output

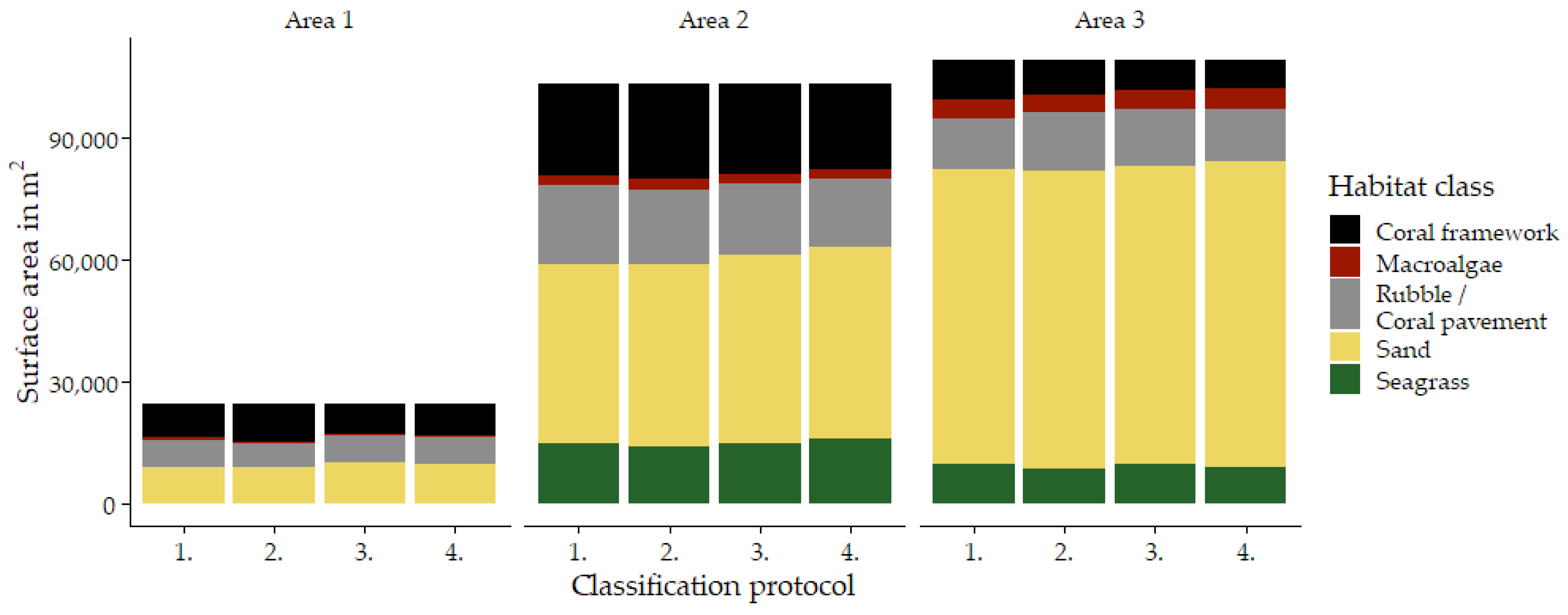

3.2. Habitat Maps

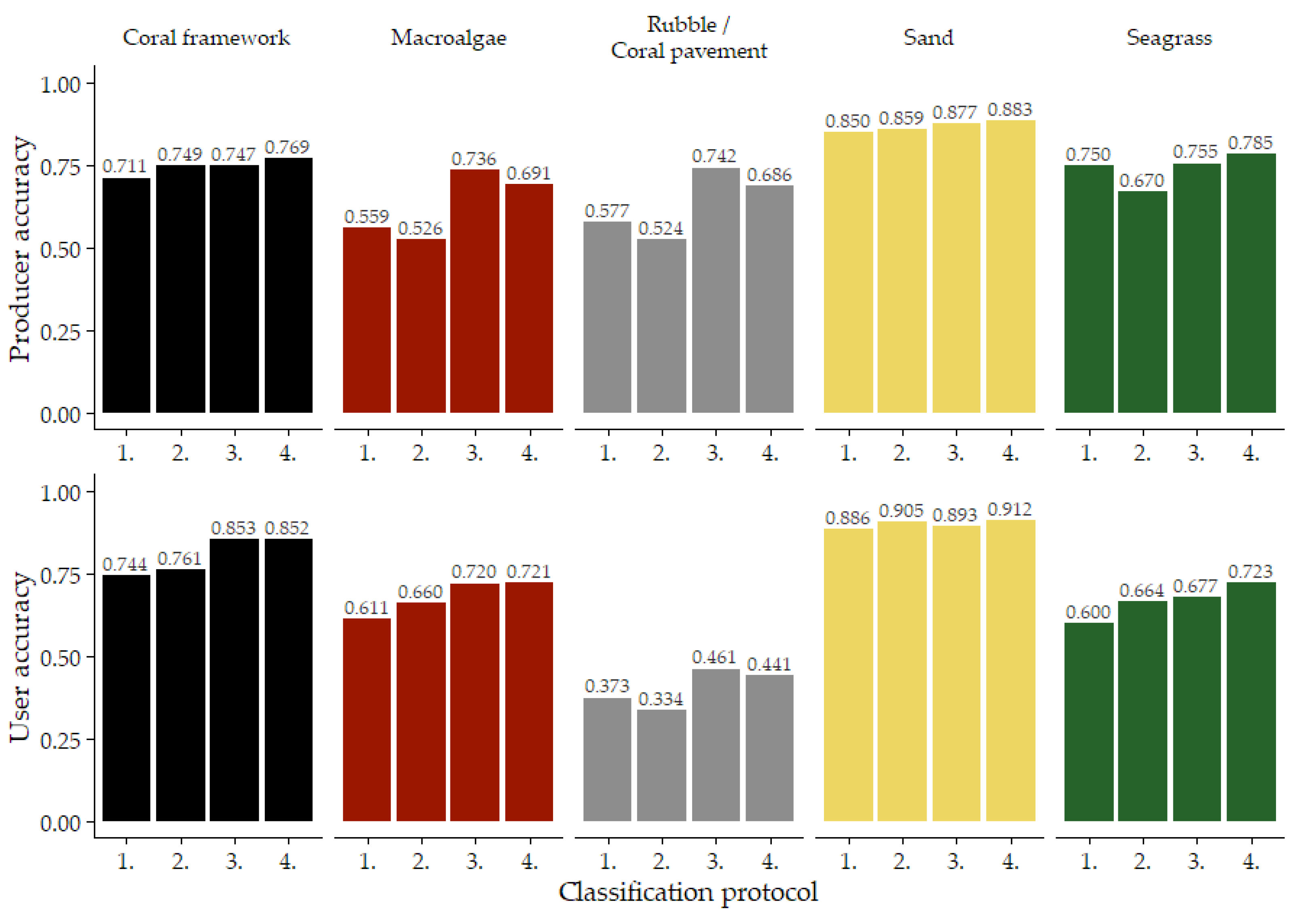

3.3. Habitat Classification Accuracy

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References


| Setting | Value |
|---|---|
| Mode | 2D Photogrammetry |
| Height | 40 m |
| Speed | 2 m/s |
| Horizontal Overlapping Rate | 75% |
| Vertical Overlapping Rate | 90% |
| Shooting Mode | Timed Shooting |
| Altitude Optimisation | On |
| White Balance | Sunny |
| Metering Mode | Average |
| Shutter Priority | On (1/640 s) |
| Distortion Correction | Off |
| Margin | Manual (10 m) |
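The flight settings above fix the survey geometry, and a few lines of arithmetic show what they imply. The following is a minimal sketch, not the authors' flight plan. Several inputs are assumptions that the table does not state. The camera constants assume a 1-inch, 20 MP sensor of 13.2 x 8.8 mm behind an 8.8 mm lens, 5472 px across track. The 90% "vertical" rate is read as frontal (along-track) overlap and the 75% "horizontal" rate as side overlap. Images are taken as nadir over flat ground.

```python
# Hypothetical camera constants (assumed, not from the table): a 1-inch,
# 20 MP sensor, 13.2 x 8.8 mm, 8.8 mm focal length, 5472 px across track.
SENSOR_W_MM = 13.2   # across-track sensor side
SENSOR_H_MM = 8.8    # along-track sensor side (assumed orientation)
FOCAL_MM = 8.8
IMAGE_W_PX = 5472


def flight_plan(height_m, speed_m_s, frontal_overlap, side_overlap, shutter_s):
    """Derive image spacing and motion blur from altitude, speed, overlaps and shutter."""
    # Ground footprint of one nadir image over flat ground (similar triangles).
    footprint_w = height_m * SENSOR_W_MM / FOCAL_MM
    footprint_h = height_m * SENSOR_H_MM / FOCAL_MM
    gsd_m = footprint_w / IMAGE_W_PX                       # ground sampling distance
    photo_spacing_m = footprint_h * (1 - frontal_overlap)  # distance between shots
    line_spacing_m = footprint_w * (1 - side_overlap)      # distance between flight lines
    interval_s = photo_spacing_m / speed_m_s               # timed-shooting interval
    blur_px = speed_m_s * shutter_s / gsd_m                # forward motion during exposure
    return {
        "gsd_cm": gsd_m * 100,
        "photo_spacing_m": photo_spacing_m,
        "line_spacing_m": line_spacing_m,
        "interval_s": interval_s,
        "blur_px": blur_px,
    }


# Values from the table: 40 m height, 2 m/s, 90% / 75% overlap, 1/640 s shutter.
plan = flight_plan(40, 2, 0.90, 0.75, 1 / 640)
for name, value in plan.items():
    print(f"{name}: {value:.2f}")
```

Under these assumptions, 40 m and 2 m/s give a ground sampling distance of roughly 1.1 cm and one photo every 2 s. Flight lines are about 15 m apart. Forward motion during a 1/640 s exposure is about 0.3 px, so the fixed shutter speed keeps motion blur well below one pixel at this flight speed.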

| Classification protocol | 1. Traditional Classification | 2. Using Reef Zones | 3. Using Geomorphometric Features | 4. Reef Zones and Geomorphometric Features |
|---|---|---|---|---|
| Divided by geomorphological zone | NO | YES | NO | YES |
| Image object features used | | | | |
| Mean Brightness (RGB) | x | x | x | x |
| HSI Transformation Hue (RGB) | x | x | x | x |
| HSI Transformation Saturation (RGB) | x | x | x | x |
| Mean + Standard deviation: Red | x | x | x | x |
| Mean + Standard deviation: Green | x | x | x | x |
| Mean + Standard deviation: Blue | x | x | x | x |
| Digital Elevation Model | | | x | x |
| Slope | | | x | x |
| Aspect | | | x | x |
| Profile Curvature | | | x | x |
| Vector Ruggedness (r = 5.25 cm) | | | x | x |
| Topographic Position Index (r = 45–150 cm) | | | x | x |
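The geomorphometric features in the table are computed cell by cell from the DEM. The pure-Python sketch below shows one way to derive three of them, offered as an illustration and not the software the authors used:

- **Slope and aspect**: central differences of the four rook neighbours, the same first derivatives used by Zevenbergen and Thorne.
- **Vector ruggedness measure (VRM)**: in the style of Sappington et al., with unit surface normals summed over a square window.
- **Topographic position index (TPI)**: cell elevation minus the mean of a circular neighbourhood.

Profile curvature is left out because its sign convention differs between packages. The table gives the radii in centimetres, so converting them to window sizes in cells requires the DEM resolution, which this excerpt does not state. The 1 cm cell size and the synthetic DEM in the demo are hypothetical.

```python
import math
from typing import List

Grid = List[List[float]]  # row 0 is the northern edge; columns increase eastward


def slope_aspect(dem: Grid, cell: float, r: int, c: int):
    """Slope and aspect (radians) at an interior cell via central differences."""
    dz_dx = (dem[r][c + 1] - dem[r][c - 1]) / (2 * cell)  # east minus west
    dz_dy = (dem[r - 1][c] - dem[r + 1][c]) / (2 * cell)  # north minus south
    slope = math.atan(math.hypot(dz_dx, dz_dy))
    # Aspect as compass bearing of steepest descent, clockwise from north
    # (arbitrary on perfectly flat cells).
    aspect = math.atan2(-dz_dx, -dz_dy) % (2 * math.pi)
    return slope, aspect


def vrm(dem: Grid, cell: float, r: int, c: int, k: int) -> float:
    """Vector ruggedness: 1 - |sum of unit normals| / n over a (2k+1)^2 window.

    r and c must lie at least k + 1 cells from the grid edge.
    """
    sx = sy = sz = 0.0
    n = 0
    for i in range(r - k, r + k + 1):
        for j in range(c - k, c + k + 1):
            s, a = slope_aspect(dem, cell, i, j)
            sx += math.sin(s) * math.sin(a)  # east component of the normal
            sy += math.sin(s) * math.cos(a)  # north component
            sz += math.cos(s)                # vertical component
            n += 1
    return 1 - math.sqrt(sx * sx + sy * sy + sz * sz) / n


def tpi(dem: Grid, r: int, c: int, k: int) -> float:
    """Topographic position index: elevation minus mean of a circular neighbourhood."""
    neighbours = [
        dem[i][j]
        for i in range(r - k, r + k + 1)
        for j in range(c - k, c + k + 1)
        if (i, j) != (r, c) and (i - r) ** 2 + (j - c) ** 2 <= k * k
    ]
    return dem[r][c] - sum(neighbours) / len(neighbours)


# Hypothetical demo: 1 cm cells, a plane rising 1 cm per cell eastward,
# with a 5 cm bump added at the centre cell.
CELL_M = 0.01
dem = [[0.01 * j for j in range(9)] for _ in range(9)]
dem[4][4] += 0.05
s, a = slope_aspect(dem, CELL_M, 2, 2)
print(f"slope {math.degrees(s):.1f} deg, aspect {math.degrees(a):.0f} deg")
print(f"TPI at bump {tpi(dem, 4, 4, 2):.3f} m, VRM at bump {vrm(dem, CELL_M, 4, 4, 1):.4f}")
```

On the plain tilted plane, VRM and TPI are both zero. Every normal points the same way, and the neighbourhood mean equals the centre elevation. The bump makes TPI positive and VRM greater than zero. VRM responds to local variation in surface orientation independently of overall slope, and TPI captures position relative to the surroundings. This separation is why the two features complement slope and aspect.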

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nieuwenhuis, B.O.; Marchese, F.; Casartelli, M.; Sabino, A.; van der Meij, S.E.T.; Benzoni, F. Integrating a UAV-Derived DEM in Object-Based Image Analysis Increases Habitat Classification Accuracy on Coral Reefs. Remote Sens. 2022, 14, 5017. https://doi.org/10.3390/rs14195017


Chicago/Turabian Style: Nieuwenhuis, Brian O., Fabio Marchese, Marco Casartelli, Andrea Sabino, Sancia E. T. van der Meij, and Francesca Benzoni. 2022. "Integrating a UAV-Derived DEM in Object-Based Image Analysis Increases Habitat Classification Accuracy on Coral Reefs." Remote Sensing 14, no. 19: 5017. https://doi.org/10.3390/rs14195017
