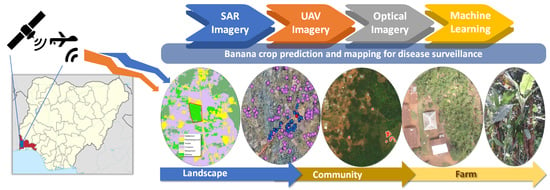

Banana Mapping in Heterogenous Smallholder Farming Systems Using High-Resolution Remote Sensing Imagery and Machine Learning Models with Implications for Banana Bunchy Top Disease Surveillance

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. UAV Data Acquisition

2.3. UAV Image and Ancillary Data Processing

2.4. Sentinel 1 Image and Preprocessing

2.5. Sentinel 2 Image and Preprocessing

2.6. Processing of Vegetation Indices

2.7. Image Classification

2.8. Machine Learning Algorithms

2.8.1. Random Forest Classifier

2.8.2. Support Vector Machine Classifier

2.9. Accuracy Assessment

2.10. Evaluation of the Banana Land Cover Maps for BBTV Surveillance

3. Results

3.1. UAV Classification Performance across Locations and Datasets with the Two ML Models

3.2. Class-Specific Classification Performance for the UAV Datasets Using the Two ML Models

3.3. UAV RF and SVM Confusion Matrices by Crop Type and Other Land Use Types

3.4. Random Forest and Support Vector Machine Classification Performance for Different Sentinel 2A and SAR Datasets

3.5. Use of a Banana Predictor Map for BBTV Surveys

3.6. Feature Importance of the Predictor Variables

4. Discussion

4.1. Banana Detection with UAV Data Using RF and SVM Models

4.2. Crop Type and Landcover Classification with Sentinel 2A and SAR Data

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| S. No. | Flight Site | Flight Area Coverage (ha) | Number of Multispectral (MS) and RGB Images | Pixels of the Mosaicked MS Images (12 cm/Pixel) |
|---|---|---|---|---|
| 1 | Okoeye | 140.7 | 5095 | 100,271,680 |
| 2 | Olujere | 234.3 | 6620 | 178,918,529 |
| 3 | Erimi | 132.5 | 5225 | 99,285,096 |
| 4 | Olokuta | 390 | 11,160 | 298,709,636 |
| 5 | Ipaja Road | 117.3 | 4125 | 75,646,024 |
| 6 | Ipaja Town | 243.9 | 6540 | 187,536,630 |
| 7 | Igbeji | 192.9 | 5265 | 142,513,653 |
| Total | | 1451.50 | 44,030 | 1,082,881,248 |

| Sentinel 1 (SAR) Parameter | Sentinel 1 (SAR) Value | Dates of Acquisitions | Sentinel 2A Band ID | Sentinel 2A Band (Central Wavelength) | Sentinel 2A Spatial Resolution (m) |
|---|---|---|---|---|---|
| Azimuth resolution | 10 | 11 June 2020 | 1 | Coastal (0.443 µm) | 60 |
| Polarization | Dual (VV-VH) | 17 July 2020 | 2 | Blue (0.490 µm) | 10 |
| Mode | IW | 22 August 2020 | 3 | Green (0.560 µm) | 10 |
| Incidence angle | ascending 30.9–46 | 15 September 2020 | 4 | Red (0.665 µm) | 10 |
| | | 27 September 2020 | 5 | Red Edge (0.705 µm) | 20 |
| | | 21 October 2020 | 6 | Red Edge (0.740 µm) | 20 |
| | | 8 December 2020 | 7 | Red Edge (0.783 µm) | 20 |
| | | | 8 | NIR (0.842 µm) | 10 |
| | | | 8A | NIR (0.865 µm) | 20 |
| | | | 9 | Water (0.940 µm) | 60 |
| | | | 10 | SWIR (1.357 µm) | 60 |
| | | | 11 | SWIR (1.610 µm) | 20 |
| | | | 12 | SWIR (2.190 µm) | 20 |

| Data Series | Data Combination | Abbreviation | Number of Predictor Variables Used |
|---|---|---|---|
| 1. | UAV spectral bands and height | UAV-B | 5 |
| 2. | UAV spectral indices and height | UAV-VI | 12 |
| 3. | UAV spectral bands, indices, and height | UAV-BVI | 16 |
| 4. | UAV spectral bands and indices, excluding height | BVI-H | 15 |
| 5. | S2A spectral bands | S2B | 10 |
| 6. | S2A spectral indices | S2VI | 27 |
| 7. | S2A spectral bands and indices | S2BVI | 37 |
| 8. | SAR data | SAR | 16 |
| 9. | S2A spectral bands, indices, and SAR data | S2BVI-SAR | 53 |

| Site | Metric | RF: UAV-B | RF: UAV-VI | RF: UAV-BVI | RF: BVI-H | SVM: UAV-B | SVM: UAV-VI | SVM: UAV-BVI | SVM: BVI-H |
|---|---|---|---|---|---|---|---|---|---|
| Olokuta | OA | 89.9 | 88.9 | 90.0 | 77.8 | 89.4 | 89.3 | 89.4 | 77.0 |
| Olokuta | KC | 0.87 | 0.85 | 0.87 | 0.69 | 0.86 | 0.86 | 0.86 | 0.68 |
| Igbeji | OA | 95.0 | 95.1 | 95.3 | 87.2 | 95.3 | 95.4 | 95.3 | 88.0 |
| Igbeji | KC | 0.91 | 0.91 | 0.91 | 0.75 | 0.91 | 0.91 | 0.91 | 0.76 |
| Ipaja Road | OA | 91.9 | 92.4 | 91.9 | 70.7 | 92.4 | 92.4 | 92.4 | 73.9 |
| Ipaja Road | KC | 0.87 | 0.88 | 0.87 | 0.50 | 0.88 | 0.88 | 0.88 | 0.54 |
| Ipaja Town | OA | 95.1 | 94.4 | 95.1 | 81.1 | 95.0 | 94.2 | 94.7 | 72.6 |
| Ipaja Town | KC | 0.93 | 0.91 | 0.92 | 0.71 | 0.92 | 0.91 | 0.92 | 0.59 |
| Mean | OA | 93.0 | 92.7 | 93.1 | 79.2 | 93.0 | 92.8 | 93.0 | 77.9 |
| Mean | KC | 0.89 | 0.89 | 0.89 | 0.64 | 0.89 | 0.89 | 0.89 | 0.65 |

| Site | Model | Metric | Dataset | Banana | Building | Cassava | Forest | Grassland | Maize | Bare Ground/Road |
|---|---|---|---|---|---|---|---|---|---|---|
| Olokuta | RF | UA | BVI | 77.4 | 98.3 | 78.0 | 91.5 | 53.5 | 83.3 | 96.2 |
| Olokuta | RF | UA | BVI-H | 49.1 | 95.6 | 36.3 | 89.2 | 35.1 | 52.9 | 60.7 |
| Olokuta | RF | PA | BVI | 70.9 | 99.3 | 72.0 | 88.8 | 71.7 | 83.1 | 96.5 |
| Olokuta | RF | PA | BVI-H | 61.8 | 88.2 | 62.6 | 72.4 | 55.6 | 61.1 | 82.2 |
| Olokuta | SVM | UA | BVI | 74.7 | 97.6 | 71.5 | 91.0 | 61.4 | 54.1 | 96.5 |
| Olokuta | SVM | UA | BVI-H | 35.4 | 90.1 | 34.9 | 94.8 | 27.6 | 44.5 | 75.2 |
| Olokuta | SVM | PA | BVI | 74.3 | 99.2 | 75.2 | 88.4 | 63.0 | 81.1 | 94.0 |
| Olokuta | SVM | PA | BVI-H | 68.1 | 91.9 | 68.6 | 68.0 | 60.5 | 56.5 | 72.4 |
| Ipaja Town | RF | UA | BVI | 69.3 | 99.9 | 80.4 | 97.2 | 95.5 | 91.8 | 99.6 |
| Ipaja Town | RF | UA | BVI-H | 14.1 | 96.5 | 32.6 | 89.9 | 75.6 | 93.4 | 98.4 |
| Ipaja Town | RF | PA | BVI | 77.5 | 100.0 | 81.9 | 97.3 | 94.6 | 91.9 | 99.8 |
| Ipaja Town | RF | PA | BVI-H | 36.6 | 96.3 | 61.2 | 81.2 | 80.8 | 80.7 | 98.6 |
| Ipaja Town | SVM | UA | BVI | 52.8 | 99.7 | 79.6 | 97.2 | 95.7 | 91.4 | 99.7 |
| Ipaja Town | SVM | UA | BVI-H | 6.6 | 97.8 | 31.3 | 86.6 | 55.2 | 97.1 | 70.7 |
| Ipaja Town | SVM | PA | BVI | 80.6 | 99.9 | 80.2 | 97.1 | 94.2 | 90.3 | 99.8 |
| Ipaja Town | SVM | PA | BVI-H | 21.2 | 57.6 | 45.9 | 77.5 | 73.1 | 60.1 | 98.8 |

| Class | Banana | Building | Cassava | Forest | Grassland | Maize | Bare Ground/Road | PA |
|---|---|---|---|---|---|---|---|---|
| Random Forest (RF) | | | | | | | | |
| Banana | 18,832 | 598 | 203 | 3134 | 3532 | 8 | 241 | 0.71 |
| Building | 27 | 81,934 | 0 | 23 | 28 | 0 | 464 | 0.99 |
| Cassava | 62 | 0 | 6186 | 1060 | 1270 | 11 | 0 | 0.72 |
| Forest | 4703 | 35 | 555 | 57,573 | 1737 | 0 | 249 | 0.89 |
| Grassland | 622 | 136 | 990 | 1097 | 8076 | 253 | 96 | 0.72 |
| Maize | 26 | 3 | 1 | 0 | 256 | 1551 | 29 | 0.83 |
| Bare ground/road | 45 | 680 | 0 | 43 | 184 | 38 | 26,987 | 0.96 |
| UA | 0.77 | 0.98 | 0.78 | 0.91 | 0.54 | 0.83 | 0.96 | |
| OA: 90.0 and KC: 87.0 | | | | | | | | |
| Support Vector Machine (SVM) | | | | | | | | |
| Banana | 18,173 | 206 | 87 | 2527 | 3300 | 0 | 168 | 0.74 |
| Building | 16 | 81,418 | 0 | 6 | 56 | 0 | 570 | 0.99 |
| Cassava | 36 | 5 | 5677 | 984 | 845 | 1 | 0 | 0.75 |
| Forest | 4822 | 133 | 1075 | 57,256 | 1364 | 0 | 93 | 0.88 |
| Grassland | 1150 | 331 | 1086 | 1959 | 9267 | 796 | 131 | 0.63 |
| Maize | 40 | 86 | 10 | 3 | 61 | 1007 | 35 | 0.81 |
| Bare ground/road | 80 | 1207 | 0 | 195 | 190 | 57 | 27,069 | 0.94 |
| UA | 0.75 | 0.98 | 0.72 | 0.91 | 0.61 | 0.54 | 0.96 | |
| OA: 89.4 and KC: 86.0 | | | | | | | | |
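
The producer's accuracy (PA), user's accuracy (UA), overall accuracy (OA), and kappa coefficient (KC) reported with the confusion matrix can be recomputed from the cell counts. The following is a minimal Python sketch using the Random Forest counts from the table above. It assumes that the rows are the classes against which PA is computed and the columns are the classes against which UA is computed. That assumption is inferred from the arithmetic rather than stated in the table, and the function and variable names are illustrative only.

```python
# Random Forest confusion-matrix counts copied from the table above.
# Assumption: PA is computed along rows and UA along columns.
classes = ["Banana", "Building", "Cassava", "Forest",
           "Grassland", "Maize", "Bare ground/road"]
rf_matrix = [
    [18832,   598,  203,  3134, 3532,    8,   241],
    [   27, 81934,    0,    23,   28,    0,   464],
    [   62,     0, 6186,  1060, 1270,   11,     0],
    [ 4703,    35,  555, 57573, 1737,    0,   249],
    [  622,   136,  990,  1097, 8076,  253,    96],
    [   26,     3,    1,     0,  256, 1551,    29],
    [   45,   680,    0,    43,  184,   38, 26987],
]


def accuracy_metrics(matrix):
    """Return (PA list, UA list, OA, kappa) for a square confusion matrix."""
    n = sum(sum(row) for row in matrix)
    diagonal = [matrix[i][i] for i in range(len(matrix))]
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]

    pa = [d / r for d, r in zip(diagonal, row_totals)]   # correct / row total
    ua = [d / c for d, c in zip(diagonal, col_totals)]   # correct / column total
    oa = sum(diagonal) / n                               # observed agreement
    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / n ** 2
    kappa = (oa - expected) / (1 - expected)
    return pa, ua, oa, kappa


pa, ua, oa, kappa = accuracy_metrics(rf_matrix)
for name, p, u in zip(classes, pa, ua):
    print(f"{name:18s} PA={p:.2f} UA={u:.2f}")
print(f"OA={oa * 100:.1f}%  kappa={kappa:.2f}")
```

Run on these counts, the sketch should reproduce the tabulated Random Forest values, for example PA of 0.71 and UA of 0.77 for banana and an OA of 90.0%. The kappa comes out on a 0 to 1 scale (about 0.87), which corresponds to the 87.0 shown in the table when expressed as a percentage.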

| Site | UAV RF | UAV SVM | S2SAR RF | S2SAR SVM |
|---|---|---|---|---|
| Igbeji | 44.7 | 43.2 | 14.7 | 13.0 |
| Olokuta | 55.3 | 63.3 | 59.8 | 66.3 |
| Ipaja Road | 10.7 | 7.7 | 7.4 | 7.8 |
| Ipaja Town | 11.5 | 11.1 | 22.7 | 24.8 |

| Dataset | Model | Metric | Banana | Building | Cassava | Forest | Grassland | Maize | Bare Ground/Road | Water | OA | KC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| S2B | RF | UA | 72.8 | 67.2 | 75.5 | 92.9 | 81.4 | 67.0 | 62.6 | 100 | 88.0 | 0.85 |
| S2B | RF | PA | 76.0 | 85.1 | 75.5 | 91.9 | 80.3 | 62.4 | 60.4 | 100 | | |
| S2B | SVM | UA | 51.5 | 64.1 | 66.0 | 93.2 | 79.2 | 70.2 | 60.4 | 100 | 85.9 | 0.82 |
| S2B | SVM | PA | 59.0 | 79.6 | 71.7 | 91.5 | 78.3 | 59.8 | 54.9 | 100 | | |
| S2VI | RF | UA | 71.1 | 68.0 | 70.5 | 93.9 | 79.4 | 60.7 | 61.9 | 100 | 87.3 | 0.84 |
| S2VI | RF | PA | 67.6 | 87.0 | 69.5 | 92.4 | 82.2 | 58.9 | 58.1 | 100 | | |
| S2VI | SVM | UA | 57.0 | 64.8 | 61.5 | 92.7 | 77.7 | 72.8 | 62.6 | 100 | 85.9 | 0.82 |
| S2VI | SVM | PA | 60.4 | 83.0 | 66.5 | 90.9 | 80.2 | 58.2 | 60.4 | 100 | | |
| S2BVI | RF | UA | 74.5 | 64.1 | 75.0 | 93.6 | 79.0 | 61.8 | 63.3 | 100 | 87.6 | 0.84 |
| S2BVI | RF | PA | 68.4 | 84.5 | 71.1 | 92.6 | 82.8 | 63.8 | 56.1 | 100 | | |
| S2BVI | SVM | UA | 55.7 | 64.1 | 63.5 | 93.1 | 77.3 | 70.7 | 61.2 | 100 | 85.8 | 0.82 |
| S2BVI | SVM | PA | 60.6 | 80.4 | 69.8 | 90.7 | 79.4 | 58.2 | 57.0 | 100 | | |
| SAR | RF | UA | 64.3 | 46.9 | 65.5 | 84.6 | 48.8 | 54.5 | 24.5 | 100 | 77.3 | 0.70 |
| SAR | RF | PA | 76.3 | 83.3 | 63.9 | 69.5 | 51.0 | 77.6 | 35.1 | 100 | | |
| SAR | SVM | UA | 28.1 | 49.2 | 55.5 | 84.4 | 48.6 | 50.3 | 27.3 | 99.5 | 74.1 | 0.66 |
| SAR | SVM | PA | 49.3 | 62.4 | 53.4 | 67.4 | 51.7 | 70.6 | 35.5 | 99.9 | | |
| S2BVI-SAR | RF | UA | 83.0 | 68.0 | 78.5 | 93.7 | 81.8 | 76.4 | 65.5 | 100 | 89.8 | 0.87 |
| S2BVI-SAR | RF | PA | 77.7 | 84.5 | 78.1 | 93.0 | 84.8 | 72.3 | 61.9 | 100 | | |
| S2BVI-SAR | SVM | UA | 74.0 | 64.8 | 76.5 | 94.1 | 82.4 | 75.4 | 64.0 | 100 | 89.0 | 0.86 |
| S2BVI-SAR | SVM | PA | 72.8 | 80.6 | 73.2 | 92.9 | 86.2 | 67.6 | 63.6 | 100 | | |

| Year | Plantations with BBTV | Plantations without BBTV | Total Plantations |
|---|---|---|---|
| 2021 * | 17 | 23 | 40 |
| 2020 | 117 | 93 | 210 |
| 2019 | 37 | 13 | 50 |
| Total | 171 | 129 | 300 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alabi, T.R.; Adewopo, J.; Duke, O.P.; Kumar, P.L. Banana Mapping in Heterogenous Smallholder Farming Systems Using High-Resolution Remote Sensing Imagery and Machine Learning Models with Implications for Banana Bunchy Top Disease Surveillance. Remote Sens. 2022, 14, 5206. https://doi.org/10.3390/rs14205206
