Estimation of Above-Ground Biomass of Winter Wheat Based on Consumer-Grade Multi-Spectral UAV

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area and Experimental Design

2.2. Data Acquisition and Processing

2.2.1. Field Data Acquisition

2.2.2. Acquisition and Pre-Processing of UAV Remote Sensing Data

2.3. Methods

2.3.1. Selection of VIs

2.3.2. Modeling Methods

2.3.3. Evaluation of Model Accuracy

3. Results

3.1. Variations of Winter Wheat Above-Ground Biomass

3.2. AGB Model Based on LR

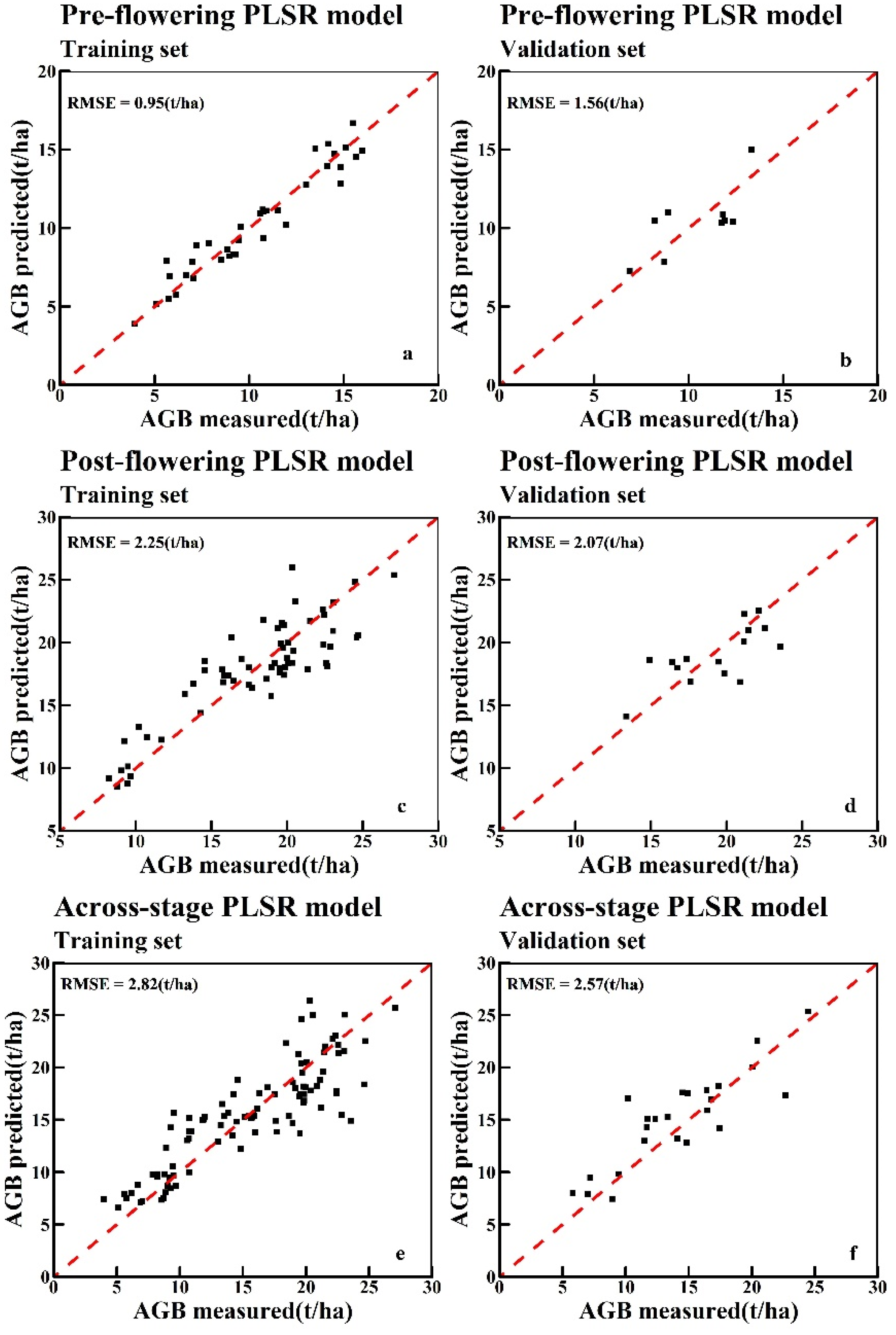

3.3. AGB Model Based on PLSR

3.4. AGB Model Based on RF

4. Discussion

4.1. The Optimal Time Window for the AGB Monitoring

4.2. The Comparison of Sensitive Bands

4.3. The Performances of PLSR and RF Models for AGB Estimation

4.4. The Limitations of the Study and Suggestions for Future AGB Estimation

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Li, J.; Zhang, Z.; Liu, Y.; Yao, C.; Song, W.; Xu, X.; Zhang, M.; Zhou, X.; Gao, Y.; Wang, Z.; et al. Effects of micro-sprinkling with different irrigation amount on grain yield and water use efficiency of winter wheat in the North China Plain. Agric. Water Manag. 2019, 224, 105736. [Google Scholar] [CrossRef]

- Huang, J.; Sedano, F.; Huang, Y.; Ma, H.; Li, X.; Liang, S.; Tian, L.; Zhang, X.; Fan, J.; Wu, W. Assimilating a synthetic Kalman filter leaf area index series into the WOFOST model to improve regional winter wheat yield estimation. Agric. For. Meteorol. 2016, 216, 188–202. [Google Scholar] [CrossRef]

- Zhu, W.; Sun, Z.; Peng, J.; Huang, Y.; Li, J.; Zhang, J.; Yang, B.; Liao, X. Estimating Maize Above-Ground Biomass Using 3D Point Clouds of Multi-Source Unmanned Aerial Vehicle Data at Multi-Spatial Scales. Remote Sens. 2019, 11, 2678. [Google Scholar] [CrossRef] [Green Version]

- Han, L.; Yang, G.; Dai, H.; Xu, B.; Yang, H.; Feng, H.; Li, Z.; Yang, X. Modeling maize above-ground biomass based on machine learning approaches using UAV remote-sensing data. Plant Methods 2019, 15, 1–19. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Gil-Docampo, M.L.; Arza-García, M.; Ortiz-Sanz, J.; Martínez-Rodríguez, S.; Marcos-Robles, J.L.; Sánchez-Sastre, L.F. Above-ground biomass estimation of arable crops using UAV-based SfM photogrammetry. Geocarto Int. 2019, 35, 687–699. [Google Scholar] [CrossRef]

- He, L.; Wang, R.; Mostovoy, G.; Liu, J.; Chen, J.; Shang, J.; Liu, J.; McNairn, H.; Powers, J. Crop Biomass Mapping Based on Ecosystem Modeling at Regional Scale Using High Resolution Sentinel-2 Data. Remote Sens. 2021, 13, 806. [Google Scholar] [CrossRef]

- Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244. [Google Scholar] [CrossRef]

- Meng, S.; Pang, Y.; Zhang, Z.; Jia, W.; Li, Z. Mapping Aboveground Biomass using Texture Indices from Aerial Photos in a Temperate Forest of Northeastern China. Remote Sens. 2016, 8, 230. [Google Scholar] [CrossRef] [Green Version]

- Feng, D.; Xu, W.; He, Z.; Zhao, W.; Yang, M. Advances in plant nutrition diagnosis based on remote sensing and computer application. Neural Comput. Appl. 2020, 32, 16833–16842. [Google Scholar] [CrossRef]

- Curran, P.J.; Dungan, J.L.; Peterson, D.L. Estimating the foliar biochemical concentration of leaves with reflectance spectrometry: Testing the Kokaly and Clark methodologies. Remote Sens. Environ. 2001, 76, 349–359. [Google Scholar] [CrossRef]

- Mauser, W.; Bach, H.; Hank, T.; Zabel, F.; Putzenlechner, B. How spectroscopy from space will support world agriculture. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 12 November 2012; pp. 7321–7324. [Google Scholar] [CrossRef]

- Wang, L.J.; Zhang, G.M.; Wang, Z.Y.; Liu, J.G.; Shang, J.L.; Liang, L. Bibliometric Analysis of Remote Sensing Research Trend in Crop Growth Monitoring: A Case Study in China. Remote Sens. 2019, 11, 809. [Google Scholar] [CrossRef] [Green Version]

- Zha, H.; Miao, Y.; Wang, T.; Li, Y.; Zhang, J.; Sun, W.; Feng, Z.; Kusnierek, K. Improving Unmanned Aerial Vehicle Remote Sensing-Based Rice Nitrogen Nutrition Index Prediction with Machine Learning. Remote Sens. 2020, 12, 215. [Google Scholar] [CrossRef] [Green Version]

- Pinto, J.; Powell, S.; Peterson, R.; Rosalen, D.; Fernandes, O. Detection of Defoliation Injury in Peanut with Hyperspectral Proximal Remote Sensing. Remote Sens. 2020, 12, 3828. [Google Scholar] [CrossRef]

- Maimaitiyiming, M.; Sagan, V.; Sidike, P.; Kwasniewski, M.T. Dual Activation Function-Based Extreme Learning Machine (ELM) for Estimating Grapevine Berry Yield and Quality. Remote Sens. 2019, 11, 740. [Google Scholar] [CrossRef] [Green Version]

- Chen, P. Estimation of Winter Wheat Grain Protein Content Based on Multisource Data Assimilation. Remote Sens. 2020, 12, 3201. [Google Scholar] [CrossRef]

- Bispo, P.C.; Rodríguez-Veiga, P.; Zimbres, B.; De Miranda, S.D.C.; Cezare, C.H.G.; Fleming, S.; Baldacchino, F.; Louis, V.; Rains, D.; Garcia, M.; et al. Woody Aboveground Biomass Mapping of the Brazilian Savanna with a Multi-Sensor and Machine Learning Approach. Remote Sens. 2020, 12, 2685. [Google Scholar] [CrossRef]

- Hu, T.; Zhang, Y.; Su, Y.; Zheng, Y.; Lin, G.; Guo, Q. Mapping the Global Mangrove Forest Aboveground Biomass Using Multisource Remote Sensing Data. Remote Sens. 2020, 12, 1690. [Google Scholar] [CrossRef]

- Naik, P.; Dalponte, M.; Bruzzone, L. Prediction of Forest Aboveground Biomass Using Multitemporal Multispectral Remote Sensing Data. Remote Sens. 2021, 13, 1282. [Google Scholar] [CrossRef]

- Atzberger, C.; Darvishzadeh, R.; Immitzer, M.; Schlerf, M.; Skidmore, A.; le Maire, G. Comparative analysis of different retrieval methods for mapping grassland leaf area index using airborne imaging spectroscopy. Int. J. Appl. Earth Obs. Geoinf. 2015, 43, 19–31. [Google Scholar] [CrossRef] [Green Version]

- Yang, H.; Li, F.; Wang, W.; Yu, K. Estimating Above-Ground Biomass of Potato Using Random Forest and Optimized Hyperspectral Indices. Remote Sens. 2021, 13, 2339. [Google Scholar] [CrossRef]

- Féret, J.-B.; le Maire, G.; Jay, S.; Berveiller, D.; Bendoula, R.; Hmimina, G.; Cheraiet, A.; Oliveira, J.; Ponzoni, F.; Solanki, T.; et al. Estimating leaf mass per area and equivalent water thickness based on leaf optical properties: Potential and limitations of physical modeling and machine learning. Remote Sens. Environ. 2019, 231, 110959. [Google Scholar] [CrossRef]

- Feret, J.-B.; François, C.; Asner, G.P.; Gitelson, A.A.; Martin, R.E.; Bidel, L.P.; Ustin, S.L.; Le Maire, G.; Jacquemoud, S. PROSPECT-4 and 5: Advances in the leaf optical properties model separating photosynthetic pigments. Remote Sens. Environ. 2008, 112, 3030–3043. [Google Scholar] [CrossRef]

- Wang, Z.; Skidmore, A.K.; Darvishzadeh, R.; Heiden, U.; Heurich, M.; Wang, T. Leaf Nitrogen Content Indirectly Estimated by Leaf Traits Derived From the PROSPECT Model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3172–3182. [Google Scholar] [CrossRef]

- Soenen, S.A.; Peddle, D.R.; Hall, R.J.; Coburn, C.A.; Hall, F.G. Estimating aboveground forest biomass from canopy reflectance model inversion in mountainous terrain. Remote Sens. Environ. 2010, 114, 1325–1337. [Google Scholar] [CrossRef]

- Wang, X.Y.; Guo, Y.G.; He, J. Estimation of forest biomass by integrating ALOS PALSAR and HJ1B data. Land Surf. Remote Sens. II 2014, 9260, 92603I. [Google Scholar] [CrossRef]

- Fu, Y.; Yang, G.; Wang, J.; Song, X.; Feng, H. Winter wheat biomass estimation based on spectral indices, band depth analysis and partial least squares regression using hyperspectral measurements. Comput. Electron. Agric. 2014, 100, 51–59. [Google Scholar] [CrossRef]

- Ferwerda, J.G.; Skidmore, A. Can nutrient status of four woody plant species be predicted using field spectrometry? ISPRS J. Photogramm. Remote Sens. 2007, 62, 406–414. [Google Scholar] [CrossRef]

- Zheng, H.; Cheng, T.; Zhou, M.; Li, D.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Improved estimation of rice aboveground biomass combining textural and spectral analysis of UAV imagery. Precis. Agric. 2019, 20, 611–629. [Google Scholar] [CrossRef]

- Zhang, L.; Verma, B.; Stockwell, D.; Chowdhury, S. Density Weighted Connectivity of Grass Pixels in image frames for biomass estimation. Expert Syst. Appl. 2018, 101, 213–227. [Google Scholar] [CrossRef] [Green Version]

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150. [Google Scholar] [CrossRef] [Green Version]

- Gnyp, M.L.; Miao, Y.; Yuan, F.; Ustin, S.L.; Yu, K.; Yao, Y.; Huang, S.; Bareth, G. Hyperspectral canopy sensing of paddy rice aboveground biomass at different growth stages. Field Crops Res. 2014, 155, 42–55. [Google Scholar] [CrossRef]

- Breunig, F.M.; Galvão, L.S.; Dalagnol, R.; Dauve, C.E.; Parraga, A.; Santi, A.L.; Della Flora, D.P.; Chen, S. Delineation of management zones in agricultural fields using cover–crop biomass estimates from PlanetScope data. Int. J. Appl. Earth Obs. Geoinf. 2020, 85, 102004. [Google Scholar] [CrossRef]

- Venancio, L.P.; Mantovani, E.C.; Amaral, C.H.D.; Neale, C.M.U.; Gonçalves, I.Z.; Filgueiras, R.; Eugenio, F.C. Potential of using spectral vegetation indices for corn green biomass estimation based on their relationship with the photosynthetic vegetation sub-pixel fraction. Agric. Water Manag. 2020, 236, 106155. [Google Scholar] [CrossRef]

- Pölönen, I.; Saari, H.; Kaivosoja, J.; Honkavaara, E.; Pesonen, L. Hyperspectral imaging based biomass and nitrogen content estimations from light-weight UAV. In Remote Sensing for Agriculture, Ecosystems, and Hydrology XV; SPIE: Bellingham, WA, USA, 2013; Volume 8887, pp. 141–149. [Google Scholar] [CrossRef]

- Kross, A.; McNairn, H.; Lapen, D.; Sunohara, M.; Champagne, C. Assessment of RapidEye vegetation indices for estimation of leaf area index and biomass in corn and soybean crops. Int. J. Appl. Earth Obs. Geoinf. 2015, 34, 235–248. [Google Scholar] [CrossRef] [Green Version]

- Liu, Y.; Liu, S.; Li, J.; Guo, X.; Wang, S.; Lu, J. Estimating biomass of winter oilseed rape using vegetation indices and texture metrics derived from UAV multispectral images. Comput. Electron. Agric. 2019, 166, 105026. [Google Scholar] [CrossRef]

- Li, S.; Potter, C. Patterns of Aboveground Biomass Regeneration in Post-Fire Coastal Scrub Communities. GIScience Remote Sens. 2012, 49, 182–201. [Google Scholar] [CrossRef]

- Deery, D.M.; Rebetzke, G.J.; Jimenez-Berni, J.A.; Condon, A.G.; Smith, D.J.; Bechaz, K.M.; Bovill, W.D. Ground-Based LiDAR Improves Phenotypic Repeatability of Above-Ground Biomass and Crop Growth Rate in Wheat. Plant Phenomics 2020, 2020, 1–11. [Google Scholar] [CrossRef]

- Varela, S.; Pederson, T.; Bernacchi, C.J.; Leakey, A.D.B. Understanding Growth Dynamics and Yield Prediction of Sorghum Using High Temporal Resolution UAV Imagery Time Series and Machine Learning. Remote Sens. 2021, 13, 1763. [Google Scholar] [CrossRef]

- Zhang, Y.; Xia, C.; Zhang, X.; Cheng, X.; Feng, G.; Wang, Y.; Gao, Q. Estimating the maize biomass by crop height and narrowband vegetation indices derived from UAV-based hyperspectral images. Ecol. Indic. 2021, 129, 107985. [Google Scholar] [CrossRef]

- Yue, J.; Feng, H.; Jin, X.; Yuan, H.; Li, Z.; Zhou, C.; Yang, G.; Tian, Q. A Comparison of Crop Parameters Estimation Using Images from UAV-Mounted Snapshot Hyperspectral Sensor and High-Definition Digital Camera. Remote Sens. 2018, 10, 1138. [Google Scholar] [CrossRef] [Green Version]

- Wang, Y.; Zhang, K.; Tang, C.; Cao, Q.; Tian, Y.; Zhu, Y.; Cao, W.; Liu, X. Estimation of Rice Growth Parameters Based on Linear Mixed-Effect Model Using Multispectral Images from Fixed-Wing Unmanned Aerial Vehicles. Remote Sens. 2019, 11, 1371. [Google Scholar] [CrossRef] [Green Version]

- Yue, J.; Feng, H.; Yang, G.; Li, Z. A Comparison of Regression Techniques for Estimation of Above-Ground Winter Wheat Biomass Using Near-Surface Spectroscopy. Remote Sens. 2018, 10, 66. [Google Scholar] [CrossRef] [Green Version]

- Zhang, J.; Tian, H.; Wang, D.; Li, H.; Mouazen, A.M. A Novel Approach for Estimation of Above-Ground Biomass of Sugar Beet Based on Wavelength Selection and Optimized Support Vector Machine. Remote Sens. 2020, 12, 620. [Google Scholar] [CrossRef] [Green Version]

- Dong, L.; Du, H.; Han, N.; Li, X.; Zhu, D.; Mao, F.; Zhang, M.; Zheng, J.; Liu, H.; Huang, Z.; et al. Application of Convolutional Neural Network on Lei Bamboo Above-Ground-Biomass (AGB) Estimation Using Worldview-2. Remote Sens. 2020, 12, 958. [Google Scholar] [CrossRef] [Green Version]

- Bhardwaj, A.; Sam, L.; Akanksha; Martín-Torres, F.J.; Kumar, R. UAVs as remote sensing platform in glaciology: Present applications and future prospects. Remote Sens. Environ. 2016, 175, 196–204. [Google Scholar] [CrossRef]

- Qiu, Z.; Ma, F.; Li, Z.; Xu, X.; Ge, H.; Du, C. Estimation of nitrogen nutrition index in rice from UAV RGB images coupled with machine learning algorithms. Comput. Electron. Agric. 2021, 189, 106421. [Google Scholar] [CrossRef]

- Zhang, X.; Han, L.; Dong, Y.; Shi, Y.; Huang, W.; Han, L.; González-Moreno, P.; Ma, H.; Ye, H.; Sobeih, T. A Deep Learning-Based Approach for Automated Yellow Rust Disease Detection from High-Resolution Hyperspectral UAV Images. Remote Sens. 2019, 11, 1554. [Google Scholar] [CrossRef] [Green Version]

- Han, X.; Wei, Z.; Chen, H.; Zhang, B.; Li, Y.; Du, T. Inversion of Winter Wheat Growth Parameters and Yield Under Different Water Treatments Based on UAV Multispectral Remote Sensing. Front. Plant Sci. 2021, 12, 1–13. [Google Scholar] [CrossRef]

- Roth, L.; Aasen, H.; Walter, A.; Liebisch, F. Extracting leaf area index using viewing geometry effects—A new perspective on high-resolution unmanned aerial system photography. ISPRS J. Photogramm. Remote Sens. 2018, 141, 161–175. [Google Scholar] [CrossRef]

- Jiang, Q.; Fang, S.; Peng, Y.; Gong, Y.; Zhu, R.; Wu, X.; Ma, Y.; Duan, B.; Liu, J. UAV-Based Biomass Estimation for Rice-Combining Spectral, TIN-Based Structural and Meteorological Features. Remote Sens. 2019, 11, 890. [Google Scholar] [CrossRef] [Green Version]

- Wang, F.-M.; Huang, J.-F.; Tang, Y.-L.; Wang, X.-Z. New Vegetation Index and Its Application in Estimating Leaf Area Index of Rice. Rice Sci. 2007, 14, 195–203. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282. [Google Scholar] [CrossRef]

- Clevers, J.G.P.W.; Kooistra, L.; Brande, M.M.M.V.D. Using Sentinel-2 Data for Retrieving LAI and Leaf and Canopy Chlorophyll Content of a Potato Crop. Remote Sens. 2017, 9, 405. [Google Scholar] [CrossRef] [Green Version]

- Vincini, M.; Frazzi, E.; D’Alessio, P. A broad-band leaf chlorophyll vegetation index at the canopy scale. Precis. Agric. 2008, 9, 303–319. [Google Scholar] [CrossRef]

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D. Monitoring Vegetation Systems in the Great Plains with ERTS (Earth Resources Technology Satellite). In Proceedings of the Third Earth Resources Technology Satellite-1 Symposium, Washington, DC, USA, 10–14 December 1973; Volume 1, pp. 309–317. Available online: https://ntrs.nasa.gov/citations/19740022614 (accessed on 26 January 2022).

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213. [Google Scholar] [CrossRef]

- Jiang, Z.; Huete, A.R.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Merzlyak, M.N.; Lichtenthaler, H.K. Detection of Red Edge Position and Chlorophyll Content by Reflectance Measurements Near 700 nm. J. Plant Physiol. 1996, 148, 501–508. [Google Scholar] [CrossRef]

- Siegmann, B.; Jarmer, T.; Lilienthal, H.; Richter, N.; Selige, T.; Höfle, B. Comparison of narrow band vegetation indices and empirical models from hyperspectral remote sensing data for the assessment of wheat nitrogen concentration. In Proceedings of the 8th EARSeL Workshop on Imaging Spectroscopy, Nantes, France, 1 January 2013; pp. 1–2. [Google Scholar]

- Xiao, Y.; Zhao, W.; Zhou, D.; Gong, H. Sensitivity Analysis of Vegetation Reflectance to Biochemical and Biophysical Variables at Leaf, Canopy, and Regional Scales. IEEE Trans. Geosci. Remote Sens. 2014, 52, 4014–4024. [Google Scholar] [CrossRef]

- Daughtry, C.S.T.; Walthall, C.L.; Kim, M.S.; De Colstoun, E.B.; McMurtrey, J.E. Estimating Corn Leaf Chlorophyll Concentration from Leaf and Canopy Reflectance. Remote Sens. Environ. 2000, 74, 229–239. [Google Scholar] [CrossRef]

- Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352. [Google Scholar] [CrossRef]

- Gong, P.; Pu, R.; Biging, G.; Larrieu, M. Estimation of forest leaf area index using vegetation indices derived from hyperion hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1355–1362. [Google Scholar] [CrossRef] [Green Version]

- Chen, J.M. Evaluation of Vegetation Indices and a Modified Simple Ratio for Boreal Applications. Can. J. Remote Sens. 1996, 22, 229–242. [Google Scholar] [CrossRef]

- Dash, J.; Curran, P.J. The MERIS terrestrial chlorophyll index. Int. J. Remote Sens. 2004, 25, 5403–5413. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Merzlyak, M.N. Remote estimation of chlorophyll content in higher plant leaves. Int. J. Remote Sens. 1997, 18, 2691–2697. [Google Scholar] [CrossRef]

- Hassan, M.A.; Yang, M.; Rasheed, A.; Jin, X.; Xia, X.; Xiao, Y.; He, Z. Time-Series Multispectral Indices from Unmanned Aerial Vehicle Imagery Reveal Senescence Rate in Bread Wheat. Remote Sens. 2018, 10, 809. [Google Scholar] [CrossRef] [Green Version]

- Agapiou, A.; Alexakis, D.D.; Stavrou, M.; Sarris, A.; Themistocleous, K.; Hadjimitsis, D.G. Prospects and limitations of vegetation indices in archeological research: The Neolithic Thessaly case study. SPIE Remote Sens. 2013, IV, 88930D. [Google Scholar] [CrossRef]

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107. [Google Scholar] [CrossRef]

- Roujean, J.-L.; Breon, F.-M. Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote Sens. Environ. 1995, 51, 375–384. [Google Scholar] [CrossRef]

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87. [Google Scholar] [CrossRef]

- Walsh, O.S.; Shafian, S.; Marshall, J.M.; Jackson, C.; McClintick-Chess, J.R.; Blanscet, S.M.; Swoboda, K.; Thompson, C.; Belmont, K.M.; Walsh, W.L. Assessment of UAV Based Vegetation Indices for Nitrogen Concentration Estimation in Spring Wheat. Adv. Remote Sens. 2018, 7, 71–90. [Google Scholar] [CrossRef] [Green Version]

- Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309. [Google Scholar] [CrossRef]

- Verrelst, J.; Schaepman, M.; Koetz, B.; Kneubühler, M. Angular sensitivity analysis of vegetation indices derived from CHRIS/PROBA data. Remote Sens. Environ. 2008, 112, 2341–2353. [Google Scholar] [CrossRef]

- Raper, T.B.; Varco, J.J. Canopy-scale wavelength and vegetative index sensitivities to cotton growth parameters and nitrogen status. Precis. Agric. 2014, 16, 62–76. [Google Scholar] [CrossRef] [Green Version]

- Penuelas, J.; Gamon, J.; Fredeen, A.; Merino, J.; Field, C. Reflectance indices associated with physiological changes in nitrogen- and water-limited sunflower leaves. Remote Sens. Environ. 1994, 48, 135–146. [Google Scholar] [CrossRef]

- Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 2002, 81, 416–426. [Google Scholar] [CrossRef]

- Broge, N.H.; Leblanc, E. Comparing prediction power and stability of broadband and hyperspectral vegetation indices for estimation of green leaf area index and canopy chlorophyll density. Remote Sens. Environ. 2001, 76, 156–172. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Kaufman, Y.J.; Robert, S.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87. [Google Scholar] [CrossRef] [Green Version]

- Gitelson, A.A. Remote estimation of crop fractional vegetation cover: The use of noise equivalent as an indicator of performance of vegetation indices. Int. J. Remote Sens. 2013, 34, 6054–6066. [Google Scholar] [CrossRef]

- Pilson, D.; Decker, K.L. Compensation for herbivory in wild sunflower: Response to simulated damage by the head-clipping weevil. Ecology 2002, 83, 3097–3107. [Google Scholar] [CrossRef]

- Mevik, B.-H.; Wehrens, R. The pls Package: Principal Component and Partial Least Squares Regression in R. J. Stat. Softw. 2007, 18, 1–23. [Google Scholar] [CrossRef] [Green Version]

- Liaw, A.; Wiener, M. Package ‘randomForest’. Breiman and Cutler’s Random Forests for Classification and Regression. Tutorial 2015, 29. Available online: https://cran.r-project.org/web/packages/randomForest/index.html (accessed on 26 January 2022).

- Pak, S.I.; Oh, T.H. Correlation and simple linear regression. J. Vet. Clin. 2010, 27, 427–434. [Google Scholar]

- Wold, S.; Sjostrom, M.; Eriksson, L. PLS-regression: A basic tool of chemometrics. Chemom. Intell. Lab. Syst. 2001, 58, 109–130. [Google Scholar] [CrossRef]

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference and Prediction. Math. Intell. 2005, 27, 83–85. [Google Scholar]

- Farrés, M.; Platikanov, S.; Tsakovski, S.; Tauler, R. Comparison of the variable importance in projection (VIP) and of the selectivity ratio (SR) methods for variable selection and interpretation. J. Chemom. 2015, 29, 528–536. [Google Scholar] [CrossRef]

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef] [Green Version]

- Aiyelokun, O.O.; Agbede, O.A. Development of random forest model as decision support tool in water resources management of Ogun headwater catchments. Appl. Water Sci. 2021, 11, 1–9. [Google Scholar] [CrossRef]

- Huang, X.; Zhu, W.; Wang, X.; Zhan, P.; Liu, Q.; Li, X.; Sun, L. A Method for Monitoring and Forecasting the Heading and Flowering Dates of Winter Wheat Combining Satellite-Derived Green-Up Dates and Accumulated Temperature. Remote Sens. 2020, 12, 3536. [Google Scholar] [CrossRef]

- Pavuluri, K.; Chim, B.K.; Griffey, C.A.; Reiter, M.S.; Balota, M.; Thomason, W.E. Canopy spectral reflectance can predict grain nitrogen use efficiency in soft red winter wheat. Precis. Agric. 2014, 16, 405–424. [Google Scholar] [CrossRef]

- Ouaadi, N.; Jarlan, L.; Ezzahar, J.; Zribi, M.; Khabba, S.; Bouras, E.; Bousbih, S.; Frison, P.-L. Monitoring of wheat crops using the backscattering coefficient and the interferometric coherence derived from Sentinel-1 in semi-arid areas. Remote Sens. Environ. 2020, 251, 112050. [Google Scholar] [CrossRef]

- Sun, G.; Jiao, Z.; Zhang, A.; Li, F.; Fu, H.; Li, Z. Hyperspectral image-based vegetation index (HSVI): A new vegetation index for urban ecological research. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102529. [Google Scholar] [CrossRef]

- Song, C. Optical remote sensing of forest leaf area index and biomass. Prog. Phys. Geogr. Earth Environ. 2013, 37, 98–113. [Google Scholar] [CrossRef]

- Guo, J.; Pradhan, S.; Shahi, D.; Khan, J.; McBreen, J.; Bai, G.; Murphy, J.P.; Babar, A. Increased Prediction Accuracy Using Combined Genomic Information and Physiological Traits in A Soft Wheat Panel Evaluated in Multi-Environments. Sci. Rep. 2020, 10, 1–12. [Google Scholar] [CrossRef]

- Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of Winter Wheat Above-Ground Biomass Using Unmanned Aerial Vehicle-Based Snapshot Hyperspectral Sensor and Crop Height Improved Models. Remote Sens. 2017, 9, 708. [Google Scholar] [CrossRef] [Green Version]

- Thenkabail, P.S.; Smith, R.B.; De Pauw, E. Hyperspectral Vegetation Indices and Their Relationships with Agricultural Crop Characteristics. Remote Sens. Environ. 2000, 71, 158–182. [Google Scholar] [CrossRef]

- Hatfield, J.L.; Gitelson, A.A.; Schepers, J.S.; Walthall, C.L. Application of Spectral Remote Sensing for Agronomic Decisions. Agron. J. 2008, 100, S-117–S-131. [Google Scholar] [CrossRef] [Green Version]

- Nguy-Robertson, A.; Gitelson, A.; Peng, Y.; Viña, A.; Arkebauer, T.; Rundquist, D. Green Leaf Area Index Estimation in Maize and Soybean: Combining Vegetation Indices to Achieve Maximal Sensitivity. Agron. J. 2012, 104, 1336–1347. [Google Scholar] [CrossRef] [Green Version]

- Luo, S.; He, Y.; Li, Q.; Jiao, W.; Zhu, Y.; Zhao, X. Nondestructive estimation of potato yield using relative variables derived from multi-period LAI and hyperspectral data based on weighted growth stage. Plant Methods 2020, 16, 1–14. [Google Scholar] [CrossRef]

- Martínez-Muñoz, G.; Suárez, A. Out-of-bag estimation of the optimal sample size in bagging. Pattern Recognit. 2010, 43, 143–152. [Google Scholar] [CrossRef] [Green Version]

- Garg, A.; Tai, K. Comparison of statistical and machine learning methods in modelling of data with multicollinearity. Int. J. Model. Identif. Control 2013, 18, 295. [Google Scholar] [CrossRef]

- Yue, J.; Yang, G.; Feng, H. Comparative of remote sensing estimation models of winter wheat biomass based on random forest algorithm. Nongye Gongcheng Xuebao/Trans. Chin. Soc. Agric. Eng. 2016, 32, 175–182. [Google Scholar] [CrossRef]

- Lu, N.; Zhou, J.; Han, Z.; Li, D.; Cao, Q.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cheng, T. Improved estimation of aboveground biomass in wheat from RGB imagery and point cloud data acquired with a low-cost unmanned aerial vehicle system. Plant Methods 2019, 15, 17. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Cen, H.; Wan, L.; Zhu, J.; Li, Y.; Li, X.; Zhu, Y.; Weng, H.; Wu, W.; Yin, W.; Xu, C.; et al. Dynamic monitoring of biomass of rice under different nitrogen treatments using a lightweight UAV with dual image-frame snapshot cameras. Plant Methods 2019, 15, 32. [Google Scholar] [CrossRef]

- Sims, D.A.; Gamon, J.A. Relationships between leaf pigment content and spectral reflectance across a wide range of species, leaf structures and developmental stages. Remote Sens. Environ. 2002, 81, 337–354. [Google Scholar] [CrossRef]

- Li, F.; Mistele, B.; Hu, Y.; Chen, X.; Schmidhalter, U. Optimising three-band spectral indices to assess aerial N concentration, N uptake and aboveground biomass of winter wheat remotely in China and Germany. ISPRS J. Photogramm. Remote Sens. 2014, 92, 112–123. [Google Scholar] [CrossRef]

- Wang, W.; Yao, X.; Yao, X.; Tian, Y.; Liu, X.; Ni, J.; Cao, W.; Zhu, Y. Estimating leaf nitrogen concentration with three-band vegetation indices in rice and wheat. Field Crop. Res. 2012, 129, 90–98. [Google Scholar] [CrossRef]

- Badreldin, N.; Sanchez-Azofeifa, A. Estimating Forest Biomass Dynamics by Integrating Multi-Temporal Landsat Satellite Images with Ground and Airborne LiDAR Data in the Coal Valley Mine, Alberta, Canada. Remote Sens. 2015, 7, 2832–2849. [Google Scholar] [CrossRef] [Green Version]

- Foster, A.J.; Kakani, V.G.; Ge, J.; Mosali, J. Discrimination of Switchgrass Cultivars and Nitrogen Treatments Using Pigment Profiles and Hyperspectral Leaf Reflectance Data. Remote Sens. 2012, 4, 2576–2594. [Google Scholar] [CrossRef] [Green Version]

- Barillé, L.; Mouget, J.-L.; Méléder, V.; Rosa, P.; Jesus, B. Spectral response of benthic diatoms with different sediment backgrounds. Remote Sens. Environ. 2011, 115, 1034–1042. [Google Scholar] [CrossRef]

- Masjedi, A.; Zhao, J.; Thompson, A.M.; Yang, K.-W.; Flatt, J.E.; Crawford, M.M.; Ebert, D.S.; Tuinstra, M.R.; Hammer, G.; Chapman, S. Sorghum Biomass Prediction Using UAV-Based Remote Sensing Data and Crop Model Simulation. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 7719–7722. [Google Scholar]

- Yu, K.; Li, F.; Gnyp, M.L.; Miao, Y.; Bareth, G.; Chen, X. Remotely detecting canopy nitrogen concentration and uptake of paddy rice in the Northeast China Plain. ISPRS J. Photogramm. Remote Sens. 2013, 78, 102–115. [Google Scholar] [CrossRef]

- Hatfield, J.L.; Prueger, J.H. Value of Using Different Vegetative Indices to Quantify Agricultural Crop Characteristics at Different Growth Stages under Varying Management Practices. Remote Sens. 2010, 2, 562–578. [Google Scholar] [CrossRef] [Green Version]

- Li, F.; Miao, Y.; Hennig, S.D.; Gnyp, M.L.; Chen, X.; Jia, L.; Bareth, G. Evaluating hyperspectral vegetation indices for estimating nitrogen concentration of winter wheat at different growth stages. Precis. Agric. 2010, 11, 335–357. [Google Scholar] [CrossRef]

- Yu, K.; Lenz-Wiedemann, V.; Chen, X.; Bareth, G. Estimating leaf chlorophyll of barley at different growth stages using spectral indices to reduce soil background and canopy structure effects. ISPRS J. Photogramm. Remote Sens. 2014, 97, 58–77. [Google Scholar] [CrossRef]

| Sampling Date | Growth Stage | Zadoks Codes |
|---|---|---|
| 18 April 2021 | Jointing stage (JS) | GS31 |
| 27 April 2021 | Booting stage (BS) | GS40 |
| 5 May 2021 | Heading stage (HS) | GS50 |
| 12 May 2021 | 5 Days after Flowering (AF5) | GS70 |
| 17 May 2021 | 10 Days after Flowering (AF10) | GS75 |
| 22 May 2021 | 15 Days after Flowering (AF15) | GS80 |
| 27 May 2021 | 20 Days after Flowering (AF20) | GS85 |
| 1 June 2021 | 25 Days after Flowering (AF25) | GS90 |

| Aircraft Parameters | Value | Camera Parameters | Value |
|---|---|---|---|
| Takeoff Weight | 1487 g | FOV | 62.7° |
| Diagonal Distance | 350 mm | Focal Length | 5.74 mm |
| Maximum Flying Altitude | 6000 m | Aperture | f/2.2 |
| Max Ascent Speed | 6 m/s | RGB Sensor ISO | 200–800 |
| Max Descent Speed | 3 m/s | Monochrome Sensor Gain | 1–8× |
| Max Speed | 50 km/h | Max Image Size | 1600 × 1300 |
| Max Flight Time | 27 min | Photo Format | JPEG/TIFF |
| Operating Temperature | 0 to 40 °C | Supported File Systems | ≥32 GB |
| Operating Frequency | 5.72 to 5.85 GHz | Operating Temperature | 0 to 40 °C |

| Index | Formula | Authors |
|---|---|---|
| BNDVI | | [53] |
| CI-GREEN | | [54] |
| CI-RED | | [55] |
| CI-REG | | [54] |
| CVI | | [56] |
| DVI | | [57] |
| DVI-GREEN | | [57] |
| DVI-REG | | [57] |
| EVI | | [58] |
| EVI2 | | [59] |
| GARI | | [60] |
| GNDVI | | [54] |
| GOSAVI | | [61] |
| GRVI | | [31] |
| LCI | | [62] |
| MCARI | | [63] |
| MCARI1 | | [64] |
| MCARI2 | | [64] |
| MNLI | | [65] |
| MSR | | [66] |
| MSR-REG | | [66] |
| MTCI | | [67] |
| NDRE | | [68] |
| NDREI | | [69] |
| NAVI | | [70] |
| NDVI | | [57] |
| OSAVI | | [71] |
| OSAVI-GREEN | | [71] |
| OSAVI-REG | | [71] |
| RDVI | | [72] |
| RDVI-REG | | [72] |
| RGBVI | | [73] |
| RTVI-CORE | | [74] |
| RVI | | [57] |
| SAVI | | [75] |
| SAVI-GREEN | | [76] |
| S-CCCI | | [77] |
| SIPI | | [78] |
| SR-REG | | [74] |
| TCARI | | [79] |
| TCARI/OSAVI | | [79] |
| TVI | | [80] |
| VARI | | [81] |
| WDRVI | | [82] |
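
A few of the indices listed in this table have widely used textbook definitions, and a minimal sketch of how they might be computed from band reflectances is given here. The formulas are the common literature forms (for example, NDVI = (NIR − Red)/(NIR + Red) and SAVI with a soil factor of 0.5); they are an assumption and may differ in detail from the exact expressions used in the study. The function names, the `soil_factor` parameter, and the reflectance values are hypothetical and chosen only for illustration.

```python
from typing import Dict


def normalized_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b), the form shared by NDVI, GNDVI, and NDRE."""
    return (a - b) / (a + b)


def compute_indices(
    green: float,
    red: float,
    red_edge: float,
    nir: float,
    soil_factor: float = 0.5,  # assumed default L for SAVI; not taken from the paper
) -> Dict[str, float]:
    """Compute a handful of standard vegetation indices from band reflectances.

    Inputs are surface reflectances in the range 0-1. The definitions below
    are the common literature forms, not necessarily those of the study.
    """
    return {
        "NDVI": normalized_difference(nir, red),
        "GNDVI": normalized_difference(nir, green),
        "NDRE": normalized_difference(nir, red_edge),
        "RVI": nir / red,   # simple ratio of NIR to red
        "DVI": nir - red,   # simple difference of NIR and red
        "SAVI": (1 + soil_factor) * (nir - red) / (nir + red + soil_factor),
    }


if __name__ == "__main__":
    # Hypothetical reflectances for a dense green canopy.
    example = compute_indices(green=0.08, red=0.05, red_edge=0.20, nir=0.45)
    for name, value in example.items():
        print(f"{name}: {value:.3f}")
```

The same functions could be applied per pixel or per plot mean; which of the two the study used is not stated in this section.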

| Stage | Min | Max | Mean | Median | SD | Var | CV |
|---|---|---|---|---|---|---|---|
| Jointing | 3.96 | 10.73 | 7.82 | 8.51 | 1.90 | 3.60 | 0.24 |
| Booting | 5.81 | 12.35 | 10.09 | 10.76 | 2.21 | 4.91 | 0.22 |
| Heading | 6.87 | 15.96 | 13.02 | 14.18 | 3.24 | 10.54 | 0.25 |
| AF5 | 8.26 | 19.99 | 15.41 | 16.76 | 3.89 | 15.11 | 0.25 |
| AF10 | 9.04 | 22.67 | 16.50 | 17.46 | 4.13 | 17.15 | 0.25 |
| AF15 | 9.27 | 23.03 | 18.49 | 19.70 | 4.31 | 18.59 | 0.23 |
| AF20 | 10.18 | 27.08 | 20.44 | 21.43 | 4.56 | 20.77 | 0.22 |
| AF25 | 14.53 | 24.59 | 19.79 | 19.86 | 3.33 | 11.08 | 0.17 |
| BF | 3.96 | 15.96 | 10.31 | 10.56 | 3.27 | 10.69 | 0.31 |
| AF | 8.26 | 27.08 | 18.13 | 19.46 | 4.40 | 19.35 | 0.24 |
| Full dataset | 3.96 | 27.08 | 15.30 | 15.56 | 5.51 | 30.42 | 0.36 |
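
The columns of this table are related by Var = SD² and CV = SD / Mean; for the jointing row, 1.90 / 7.82 ≈ 0.24, which matches the reported CV. The sketch below shows one way such a summary could be produced from raw biomass samples using only the Python standard library. It assumes the sample (n − 1) standard deviation, which this section does not confirm, and the `describe` helper and the input values are hypothetical.

```python
import statistics
from typing import Dict, Sequence


def describe(values: Sequence[float]) -> Dict[str, float]:
    """Summarize biomass samples with the statistics used in the table.

    Uses the sample standard deviation and variance (n - 1 denominator);
    this is an assumption, since the source does not say which form was used.
    """
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return {
        "min": min(values),
        "max": max(values),
        "mean": mean,
        "median": statistics.median(values),
        "sd": sd,
        "var": statistics.variance(values),
        "cv": sd / mean,  # coefficient of variation, unitless
    }


if __name__ == "__main__":
    # Hypothetical plot-level biomass values, for illustration only.
    summary = describe([4.0, 8.0, 9.0, 11.0])
    for key, value in summary.items():
        print(f"{key}: {value:.2f}")

    # Consistency check against the jointing row of the table: CV = SD / Mean.
    print(f"jointing CV: {1.90 / 7.82:.2f}")
```

Small differences between SD² and the tabulated Var (for example, 1.90² = 3.61 against 3.60) are consistent with rounding of the reported SD.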

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, F.; Yang, M.; Ma, L.; Zhang, T.; Qin, W.; Li, W.; Zhang, Y.; Sun, Z.; Wang, Z.; Li, F.; Yu, K. Estimation of Above-Ground Biomass of Winter Wheat Based on Consumer-Grade Multi-Spectral UAV. Remote Sens. 2022, 14(5), 1251. https://doi.org/10.3390/rs14051251