Reinforcement Learning and Genetic Algorithm-Based Network Module for Camera-LiDAR Detection

Abstract

1. Introduction

- The model training of the reinforcement learning network was optimized by dividing it into two stages.

- The search time needed to create a practical network module was shortened by using reinforcement learning in the GA’s population process to reduce the search space.

- Performance and network convergence speed are improved by a GA assisted by reinforcement learning.

2. Related Works

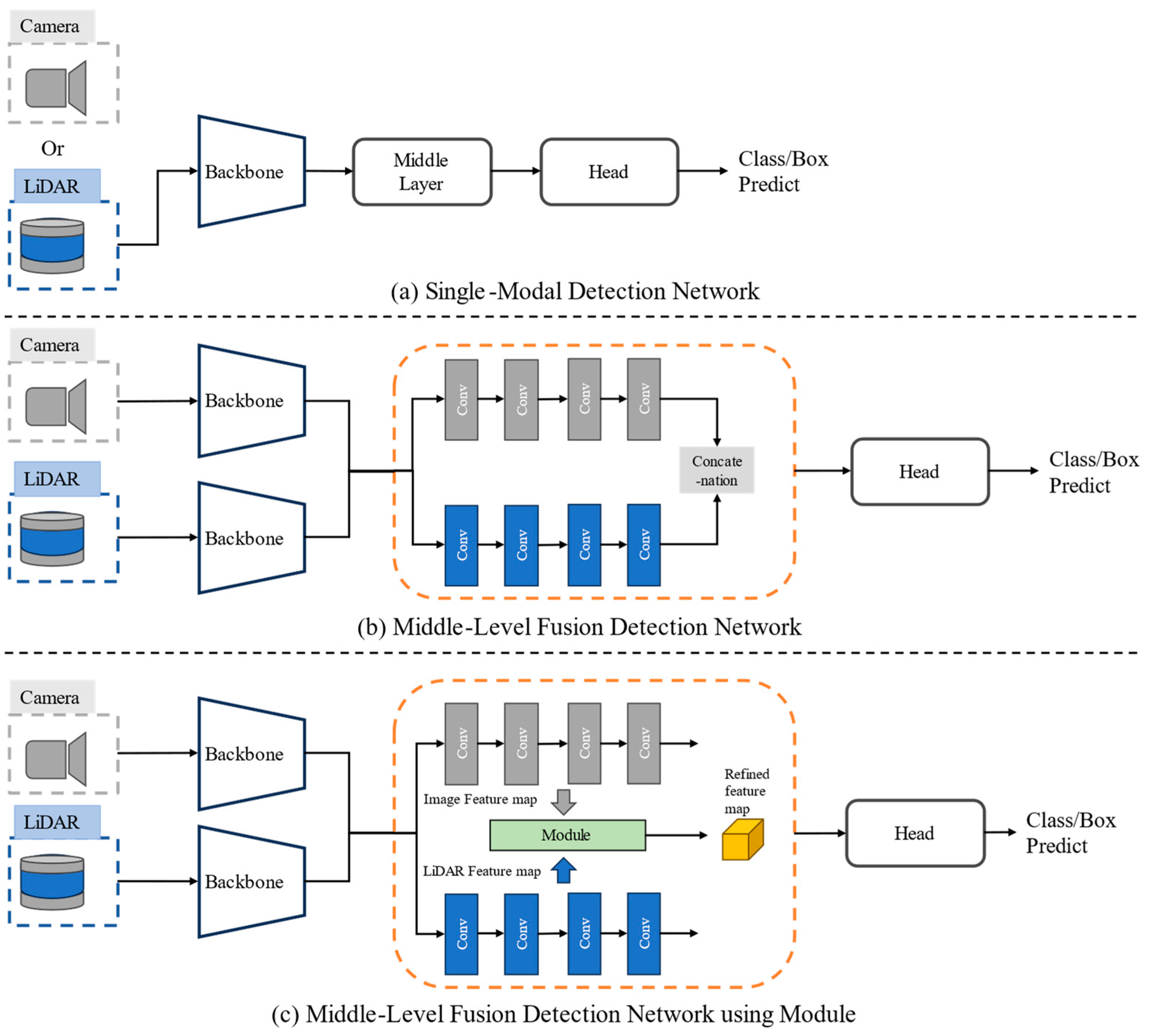

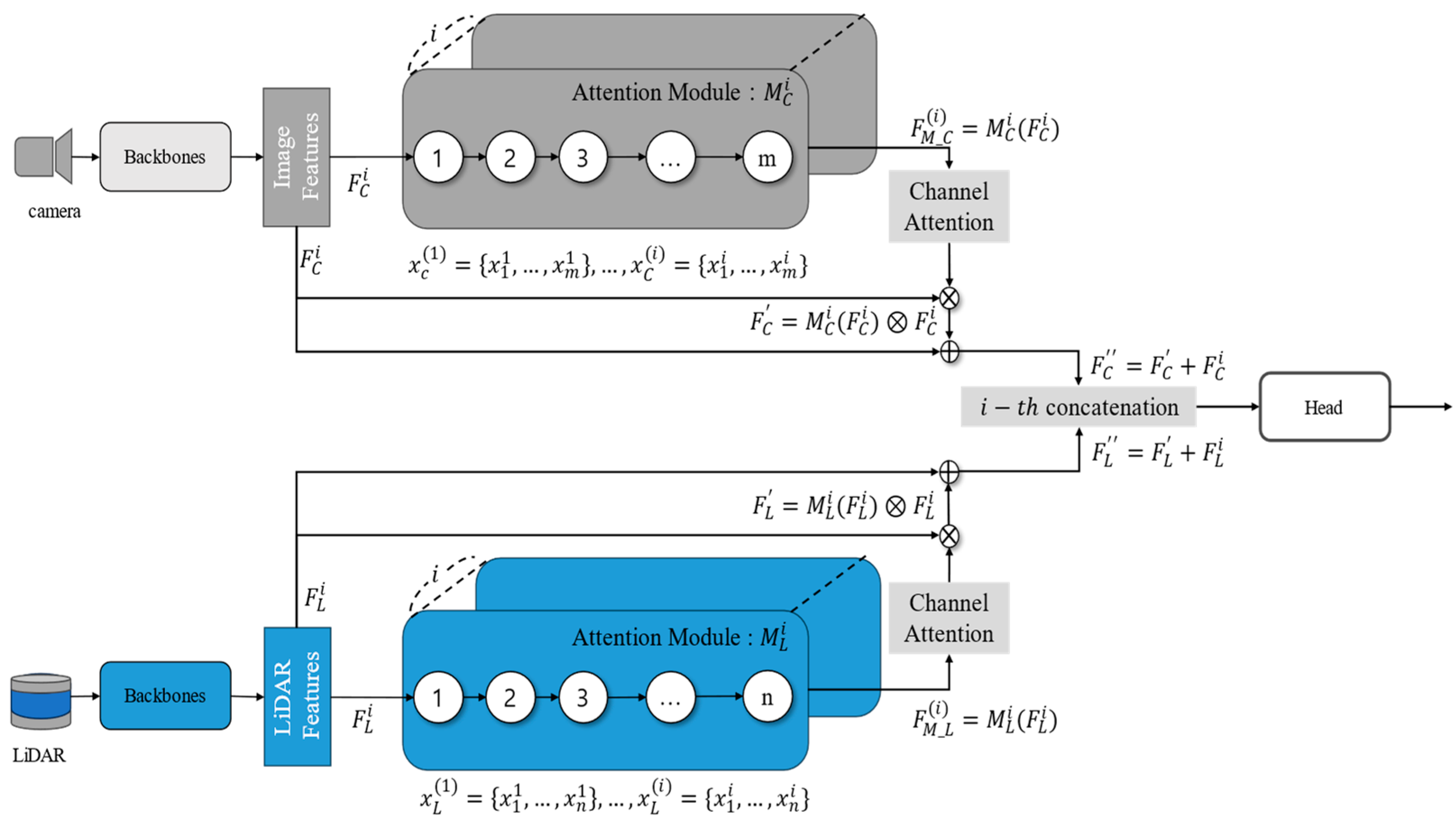

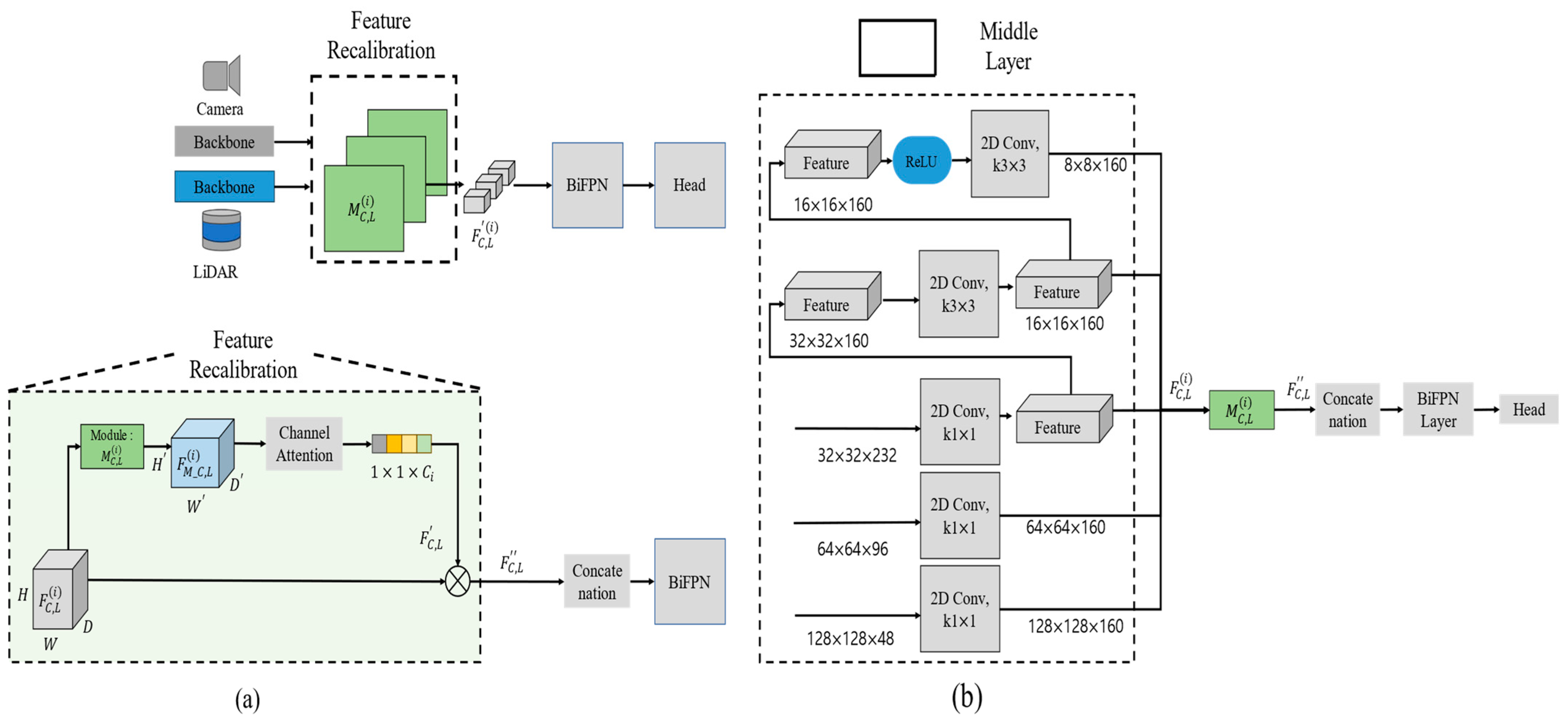

3. Method Overview

4. Efficient Module Design Method

4.1. Genetic Algorithm for Neural Architecture Search

- Encoding: In the proposed method, each iteration gradually builds up a good population. The middle layer consists of blocks and nodes, and Figure 4 shows the encoding process for each block. In this study, each node of a block is written with a subscript that indicates the sensor and a superscript that indicates the location of the block. Each block can consist of up to m or n nodes. Each node is a basic computation drawn from VGG [54], ResNet [1], and DenseNet [55]. We chose the computation operation from among the following options (1–8), collected based on their prevalence in the CNN literature.

- 1 × 1 max pooling;

- 3 × 3 max pooling;

- 1 × 1 avg pooling;

- 3 × 3 avg pooling;

- 3 × 3 local binary conv;

- 5 × 5 local binary conv;

- 3 × 3 dilated convolution;

- 5 × 5 dilated convolution.

The subscript refers to the camera or LiDAR feature map, and a superscript of 1 denotes the 1st conv block. A node consists of 6 bits in total: 3 bits select one of the operations listed above, and the remaining 3 bits select the output channel size. Because the GA determines only the channel and the operation, the kernel size may be larger than the input; in that case, the node is skipped without performing the operation.
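The 6-bit node layout described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the operation order follows the list above, while the channel table `CHANNEL_CHOICES`, the helper names, and the decision to compare only the nominal kernel size against the input (ignoring dilation) are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List

NODE_BITS = 6  # 3 bits for the operation + 3 bits for the output channel size

# Operations in the order listed in the text (indices 0-7 fit in 3 bits).
OPERATIONS = [
    "1x1 max pooling",
    "3x3 max pooling",
    "1x1 avg pooling",
    "3x3 avg pooling",
    "3x3 local binary conv",
    "5x5 local binary conv",
    "3x3 dilated convolution",
    "5x5 dilated convolution",
]

# ASSUMPTION: the eight channel sizes are not given in the text;
# these values are placeholders for illustration only.
CHANNEL_CHOICES = [16, 32, 48, 64, 96, 128, 160, 192]


@dataclass(frozen=True)
class Node:
    operation: str
    out_channels: int


def encode_node(op_index: int, channel_index: int) -> str:
    """Pack an operation index and a channel index into a 6-bit string."""
    if not (0 <= op_index < 8 and 0 <= channel_index < 8):
        raise ValueError("indices must fit in 3 bits each")
    return format(op_index, "03b") + format(channel_index, "03b")


def decode_node(bits: str) -> Node:
    """Unpack a 6-bit string into its operation and output channel size."""
    if len(bits) != NODE_BITS or set(bits) - {"0", "1"}:
        raise ValueError("a node must be exactly 6 binary digits")
    return Node(OPERATIONS[int(bits[:3], 2)], CHANNEL_CHOICES[int(bits[3:], 2)])


def decode_block(genome: str) -> List[Node]:
    """Split a block genome into consecutive 6-bit nodes."""
    if len(genome) % NODE_BITS:
        raise ValueError("genome length must be a multiple of 6")
    return [decode_node(genome[i:i + NODE_BITS])
            for i in range(0, len(genome), NODE_BITS)]


def is_skipped(node: Node, input_height: int, input_width: int) -> bool:
    """A node is skipped when its nominal kernel is larger than the input."""
    kernel = int(node.operation.split("x")[0])
    return kernel > input_height or kernel > input_width
```

For instance, `encode_node(6, 3)` gives `"110011"`, which decodes back to a 3 × 3 dilated convolution with the fourth entry of the (hypothetical) channel table.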

- Selection: Selection plays an important role in how the GA finds the global optimal solution, and the tournament method was mainly used. Selecting purely on a fitness bias does not always yield the global optimal solution and can also widen the search space. In the proposed method, the best candidates were derived separately in terms of performance and calculation time, so that non-dominated solutions could be passed on to the next generation. Note that a certain probability is also assigned to the outcomes proposed later by the reinforcement learning network.
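One way to picture tournament selection that favors non-dominated candidates is sketched below. It is an illustration under stated assumptions, not the authors' procedure: the `Candidate` fields, the use of validation loss and latency as the two objectives (both minimized), and the rule of picking uniformly from the non-dominated contestants are all hypothetical.

```python
import random
from typing import List, NamedTuple


class Candidate(NamedTuple):
    genome: str
    loss: float     # performance objective, lower is better (assumed)
    latency: float  # calculation time objective, lower is better (assumed)


def dominates(a: Candidate, b: Candidate) -> bool:
    """a dominates b if it is no worse on both objectives and better on one."""
    no_worse = a.loss <= b.loss and a.latency <= b.latency
    better = a.loss < b.loss or a.latency < b.latency
    return no_worse and better


def nondominated(population: List[Candidate]) -> List[Candidate]:
    """Return the candidates that no other candidate dominates."""
    return [c for c in population
            if not any(dominates(other, c) for other in population)]


def tournament(population: List[Candidate], k: int,
               rng: random.Random) -> Candidate:
    """Draw k contestants and return one of their non-dominated members."""
    contestants = rng.sample(population, min(k, len(population)))
    return rng.choice(nondominated(contestants))
```

With four candidates where one is worse than another on both loss and latency, `nondominated` drops only that candidate, so solutions that are best in either objective survive to the next generation.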

- Crossover: Fitness is improved by stacking blocks through fitness-biased selection and crossover, the gene combination process. The building blocks represent various node connection combinations, and these connections optimize the conv block. The proposed method uses one-point crossover, randomly selecting a node in the encoding vector and exchanging the genes at the designated point. A more diverse crossover expands the search space and can find a near-global optimum. However, to reduce the search time, a simple method is used and supplemented with reinforcement learning.
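One-point crossover on the bit-string encoding can be sketched as below. This is a minimal example, assuming that both parents have the same length and that the cut point is aligned to a 6-bit node boundary; the function name and these constraints are illustrative choices, not details confirmed by the text.

```python
import random
from typing import Tuple

NODE_BITS = 6  # bits per node in the encoding vector


def one_point_crossover(parent_a: str, parent_b: str,
                        rng: random.Random) -> Tuple[str, str]:
    """Swap the tails of two genomes at a randomly chosen node boundary."""
    if len(parent_a) != len(parent_b) or len(parent_a) % NODE_BITS:
        raise ValueError("parents must share a length that is a multiple of 6")
    n_nodes = len(parent_a) // NODE_BITS
    if n_nodes < 2:
        # A single node has no interior boundary to cut at.
        return parent_a, parent_b
    cut = rng.randrange(1, n_nodes) * NODE_BITS
    child_a = parent_a[:cut] + parent_b[cut:]
    child_b = parent_b[:cut] + parent_a[cut:]
    return child_a, child_b
```

Cutting at node boundaries keeps each node's operation and channel bits together, so a child always inherits whole nodes from one parent or the other.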

- Mutation: Mutation is a gene generation process that improves diversity and helps the population escape local optima. We change the connection node by flipping bits of the encoding vector, which changes the operation or output channel at that node. Because this only changes the form of the operation or channel, it is difficult to find a new structure through mutation alone. In this study, the reinforcement learning network addresses this diversity problem by proposing new structures.
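Bit-flip mutation on the encoding vector can be sketched in a few lines. The example assumes that the mutation probability is applied independently to every bit; the text does not say whether the probability is per bit or per individual, so this is only one plausible reading.

```python
import random


def bit_flip_mutation(genome: str, rate: float, rng: random.Random) -> str:
    """Flip each bit independently with probability `rate` (assumed per bit)."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be in [0, 1]")
    flipped = []
    for bit in genome:
        if rng.random() < rate:
            flipped.append("1" if bit == "0" else "0")
        else:
            flipped.append(bit)
    return "".join(flipped)
```

Flipping a bit in the first half of a node changes its operation, and flipping a bit in the second half changes its output channel size, which matches the limited kind of change described above.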

- Reproduction: Selecting individuals for reproduction with a softmax, as in DARTS [33], is suitable when using only a genetic algorithm. To form an optimal gene population, genes generated through reinforcement learning must be appropriately combined with previous generations during reproduction. A reasonable moment to sample reinforcement learning genes is when the update size of the loss is small.
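The reproduction step can be pictured as softmax sampling over fitness, with genes proposed by the reinforcement learning network mixed in once the loss stops changing much. The sketch below is hypothetical in its details: the plateau threshold, the cap of half the population for RL genes, and the function names are assumptions, and fitness is taken to be higher-is-better.

```python
import math
import random
from typing import List, Sequence


def softmax(scores: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax over fitness scores."""
    top = max(scores)
    exps = [math.exp((s - top) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def loss_plateaued(loss_history: Sequence[float], threshold: float) -> bool:
    """True when the most recent loss update is smaller than the threshold."""
    if len(loss_history) < 2:
        return False
    return abs(loss_history[-1] - loss_history[-2]) < threshold


def reproduce(population: List[str], fitness: Sequence[float],
              rl_genomes: List[str], loss_history: Sequence[float],
              size: int, rng: random.Random,
              threshold: float = 1e-3) -> List[str]:
    """Sample the next generation; inject RL genes when the loss has plateaued."""
    probabilities = softmax(fitness)
    next_gen = rng.choices(population, weights=probabilities, k=size)
    if rl_genomes and loss_plateaued(loss_history, threshold):
        # ASSUMPTION: RL proposals replace at most half of the new generation.
        n_rl = min(len(rl_genomes), size // 2)
        next_gen[size - n_rl:] = rl_genomes[:n_rl]
    return next_gen
```

Gating the injection on a small loss update follows the timing suggested in the text; how many RL genes to inject is left open there and is fixed here only for the sake of the example.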

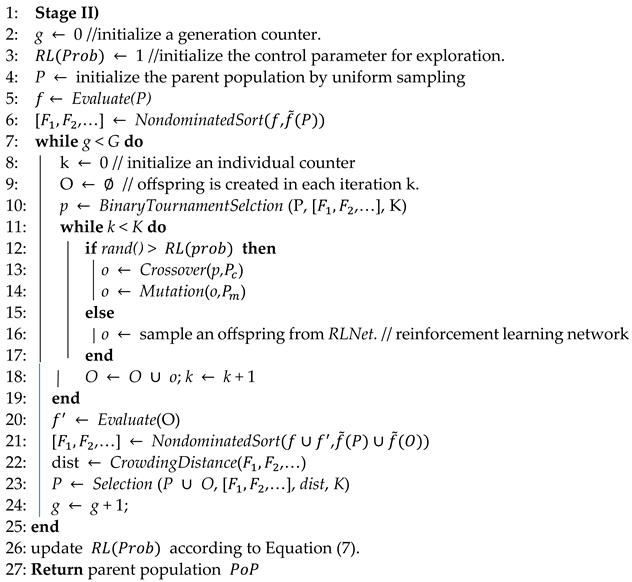

| Algorithm 1: Population Strategy of Genetic Algorithm using Reinforcement Learning Network |
|---|
| Input: maximum number of generations, size of crossover selection, crossover probability, mutation probability, Reinforcement Learning Network (RLNet) |
| Output: parent population |

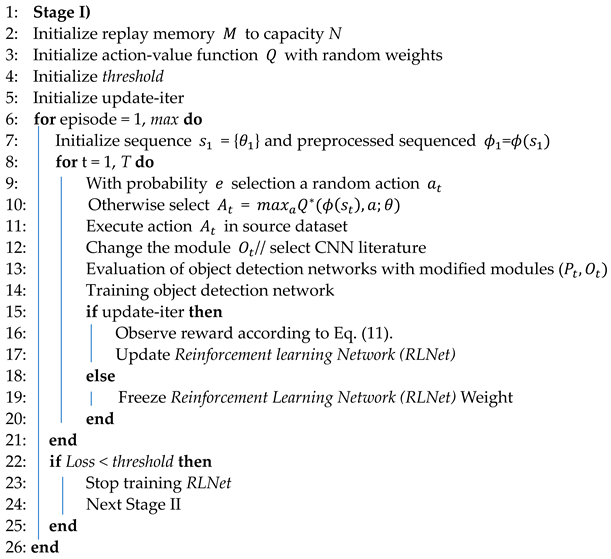

4.2. Reinforcement Learning Network for Supported Genetic Algorithm

| Algorithm 2: Reinforcement Learning Network Training Method |
|---|
| Input: embedding feature |
| Output: action |

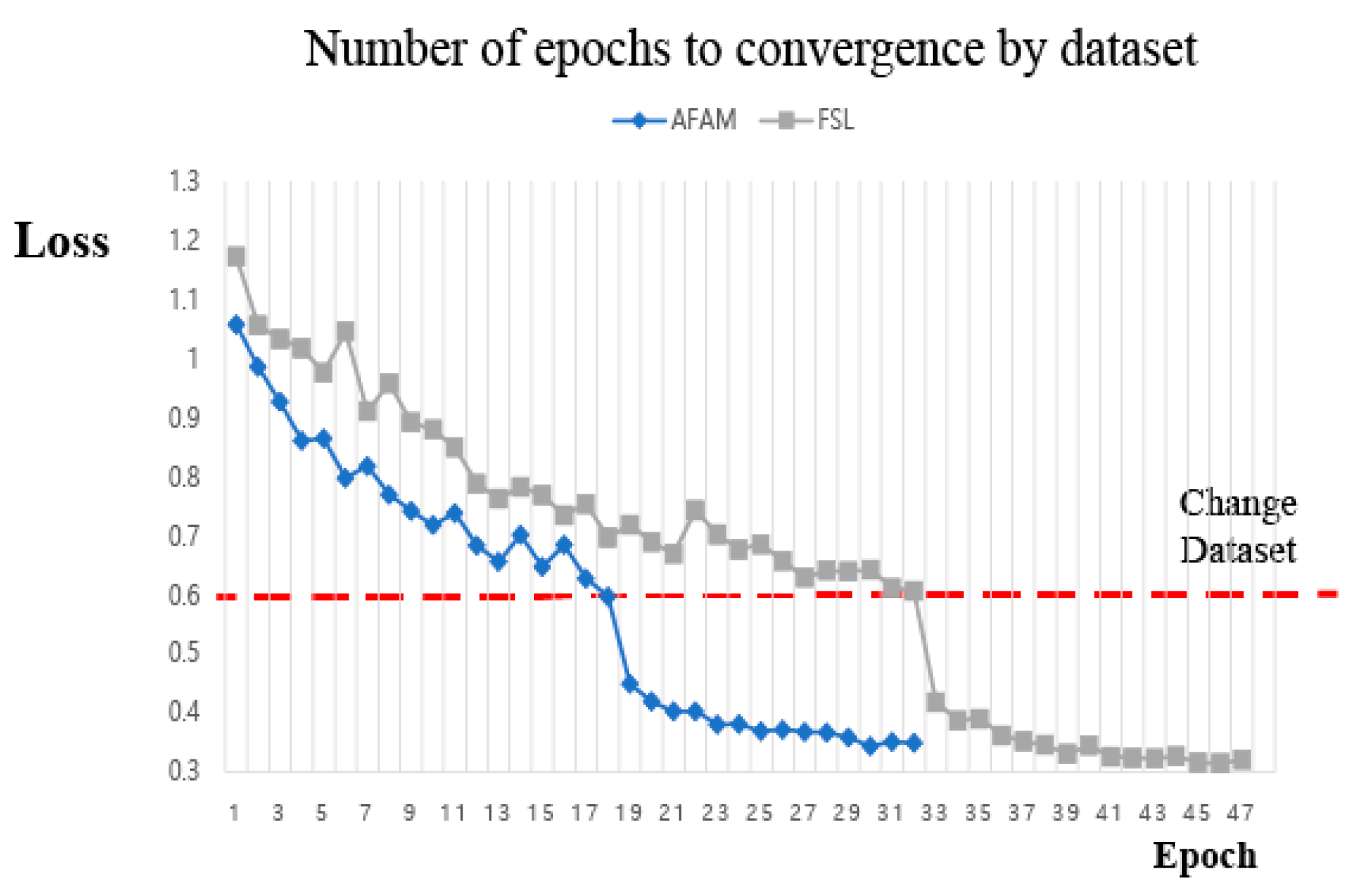

4.3. Search Strategy for Fast Convergence Module

5. Experiment Setup and Evaluation of the Proposed Method

5.1. Implementation Details

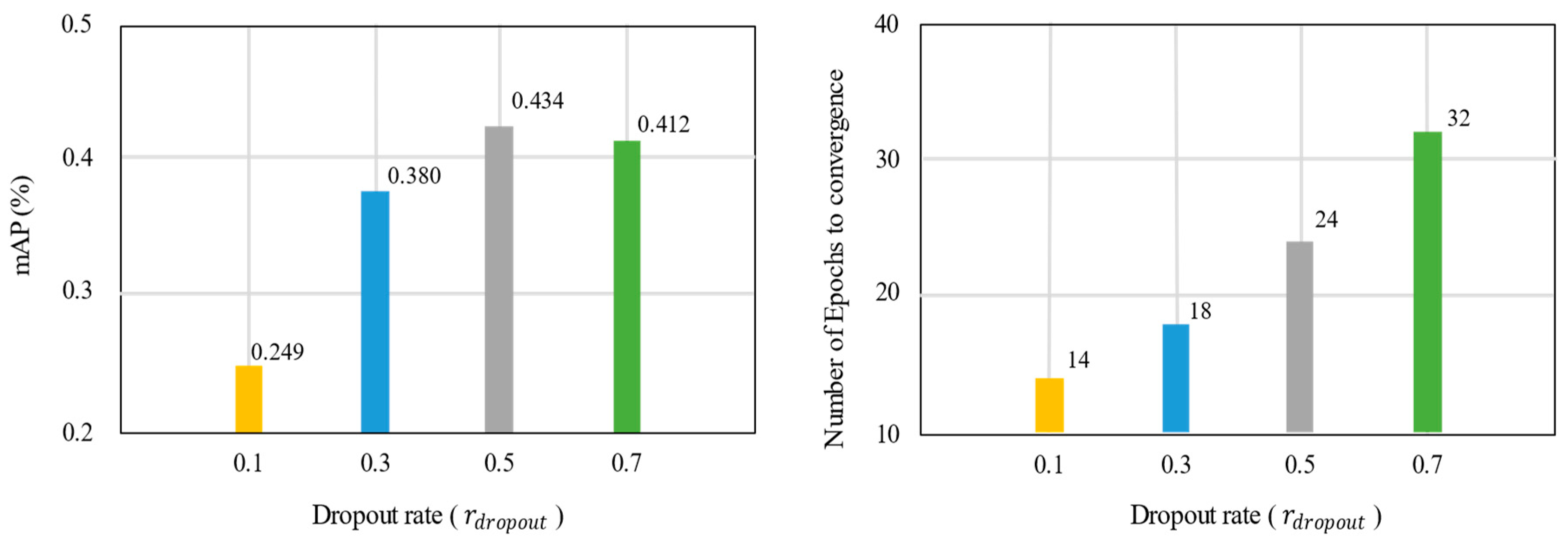

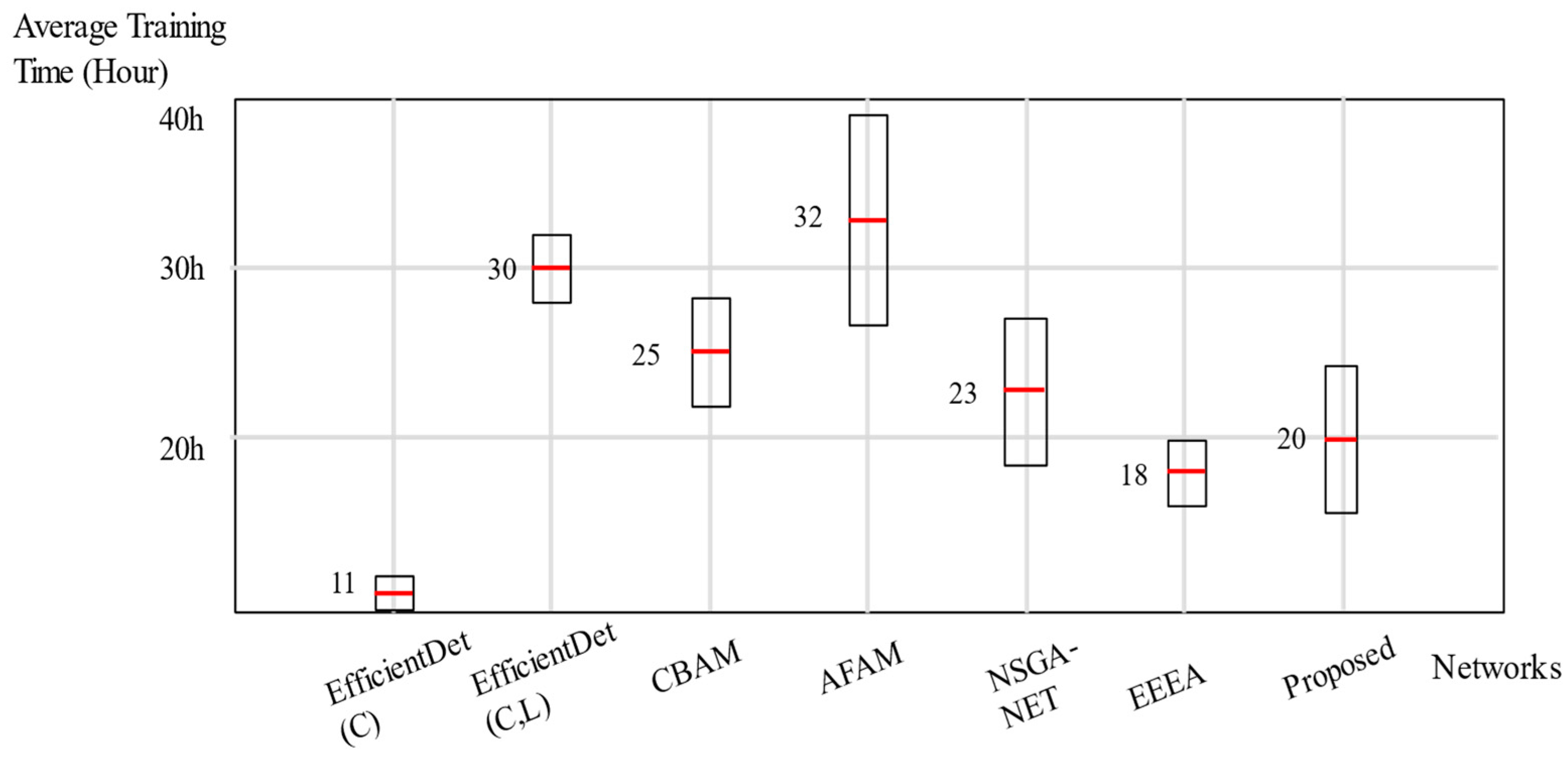

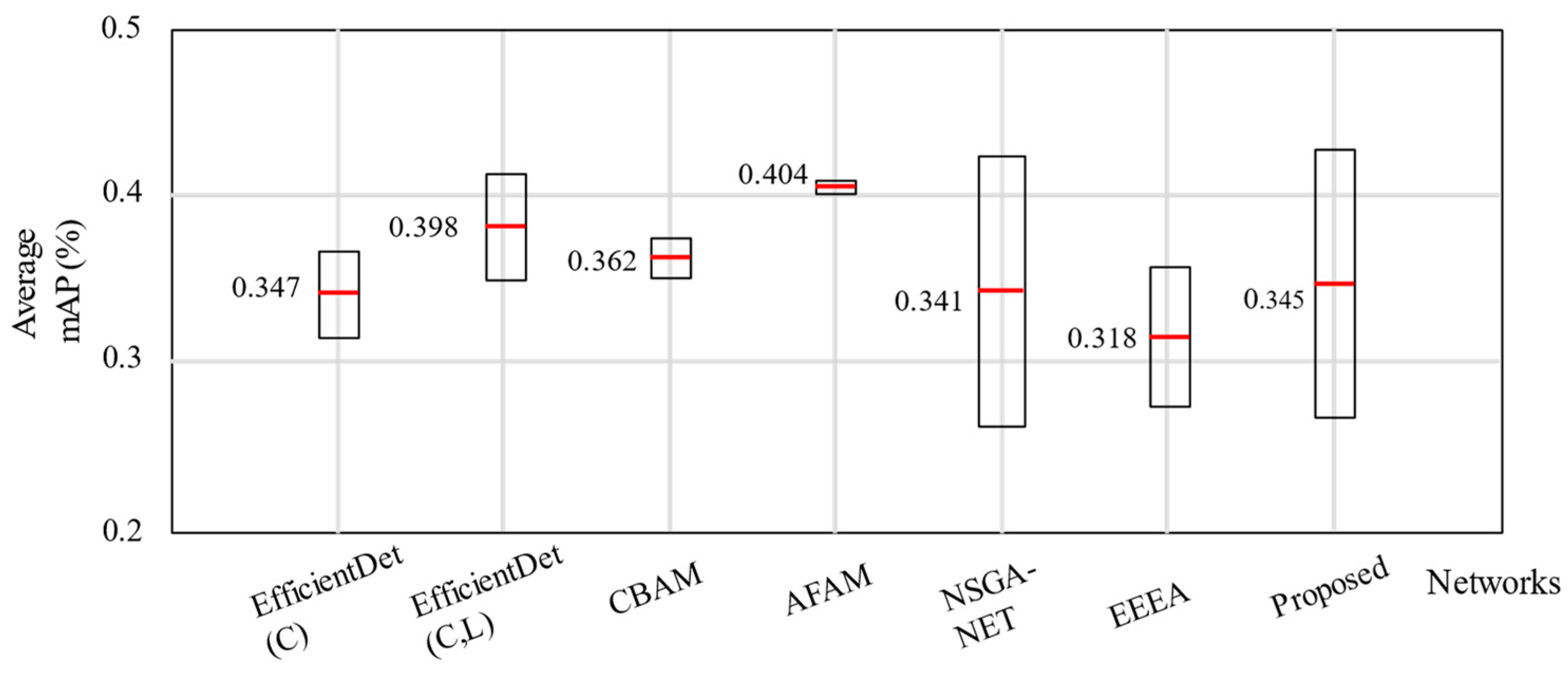

5.2. Effectiveness and Efficiency of Proposed Method

5.3. Ablation Study

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.
2. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.
3. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.
4. Bijelic, M.; Gruber, T.; Mannan, F.; Kraus, F.; Ritter, W.; Dietmayer, K.; Heide, F. Seeing through fog without seeing fog: Deep multimodal sensor fusion in unseen adverse weather. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020; pp. 11682–11692.
5. Gu, R.; Zhang, S.-X.; Xu, Y.; Chen, L.; Zou, Y.; Yu, D. Multi-modal multi-channel target speech separation. IEEE J. Sel. Top. Signal Process. 2020, 14, 530–541.
6. Li, Y.; Yu, A.W.; Meng, T.; Caine, B.; Ngiam, J.; Peng, D.; Shen, J.; Lu, Y.; Zhou, D.; Le, Q.V.; et al. DeepFusion: Lidar-Camera Deep Fusion for Multi-Modal 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 17182–17191.
7. Joze, H.R.V.; Shaban, A.; Iuzzolino, M.L.; Koishida, K. MMTM: Multimodal transfer module for CNN fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020; pp. 13289–13299.
8. Menghani, G. Efficient deep learning: A survey on making deep learning models smaller, faster, and better. ACM Comput. Surv. 2023, 55, 1–37.
9. Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision–ECCV (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 740–755.
10. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the Computer Vision–ECCV (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.
11. Kim, T.-L.; Arshad, S.; Park, T.-H. Adaptive Feature Attention Module for Robust Visual–LiDAR Fusion-Based Object Detection in Adverse Weather Conditions. Remote Sens. 2023, 15, 3992.
12. Kim, T.-L.; Park, T.-H. Camera-lidar fusion method with feature switch layer for object detection networks. Sensors 2022, 22, 7163.
13. Gao, S.; Li, Z.-Y.; Han, Q.; Cheng, M.-M.; Wang, L. RF-Next: Efficient receptive field search for convolutional neural networks. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 2984–3002.
14. Lu, Z.; Whalen, I.; Dhebar, Y.; Deb, K.; Goodman, E.D.; Banzhaf, W.; Boddeti, V.N. Multiobjective evolutionary design of deep convolutional neural networks for image classification. IEEE Trans. Evol. Comput. 2020, 25, 277–291.
15. Angeline, P.J.; Saunders, G.M.; Pollack, J.B. An evolutionary algorithm that constructs recurrent neural networks. IEEE Trans. Neural Netw. 1994, 5, 54–65.
16. Termritthikun, C.; Pholpramuan, J.; Arpapunya, A.; Nee, T.P. EEEA-Net: An Early Exit Evolutionary Neural Architecture Search. Eng. Appl. Artif. Intell. 2021, 104, 104397.
17. Tenorio, M.; Lee, W.-T. Self organizing neural networks for the identification problem. Adv. Neural Inf. Process. Syst. 1988, 1. Available online: https://proceedings.neurips.cc/paper/1988/hash/f2217062e9a397a1dca429e7d70bc6ca-Abstract.html (accessed on 18 June 2024).
18. Deb, K.; Pratap, A.; Agarwal, S.; Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 2002, 6, 182–197.
19. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009.
20. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.
21. Cui, T.; Zheng, F.; Zhang, X.; Tang, Y.; Tang, C.; Liao, X. Fast One-Stage Unsupervised Domain Adaptive Person Search. arXiv 2024, arXiv:2405.02832.
22. Peng, J.; Zhang, X.; Wang, Y.; Liu, H.; Wang, J.; Huang, Z. ReFID: Reciprocal Frequency-aware Generalizable Person Re-identification via Decomposition and Filtering. ACM Trans. Multimed. Comput. Commun. Appl. 2024, 20, 1–20.
23. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.
24. Rekesh, D.; Koluguri, N.R.; Kriman, S.; Majumdar, S.; Noroozi, V.; Huang, H.; Hrinchuk, O.; Puvvada, K.; Kumar, A.; Balam, J.; et al. Fast conformer with linearly scalable attention for efficient speech recognition. In 2023 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU); IEEE: New York, NY, USA, 2023; pp. 1–8.
25. Zong, Z.; Song, G.; Liu, Y. DETRs with collaborative hybrid assignments training. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 6748–6758.
26. Hu, J.C.; Cavicchioli, R.; Capotondi, A. Exploiting Multiple Sequence Lengths in Fast End to End Training for Image Captioning. In 2023 IEEE International Conference on Big Data (BigData); IEEE: Sorrento, Italy, 2023; pp. 2173–2182.
27. Li, C.; Qian, Y. Deep audio-visual speech separation with attention mechanism. In Proceedings of the ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; IEEE: New York, NY, USA, 2020; pp. 7314–7318.
28. Iuzzolino, M.L.; Koishida, K. AV(se)2: Audio-visual squeeze-excite speech enhancement. In Proceedings of the ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; IEEE: New York, NY, USA, 2020; pp. 7539–7543.
29. Yang, X.; Zhang, H.; Cai, J. Learning to collocate neural modules for image captioning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4250–4260.
30. Li, J.; Wen, Y.; He, L. SCConv: Spatial and channel reconstruction convolution for feature redundancy. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 6153–6162.
31. Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 8697–8710.
32. Bender, G.; Kindermans, P.J.; Zoph, B.; Vasudevan, V.; Le, Q. Understanding and simplifying one-shot architecture search. In Proceedings of the International Conference on Machine Learning (PMLR), Stockholm, Sweden, 10–15 July 2018; pp. 550–559.
33. Liu, H.; Simonyan, K.; Yang, Y. DARTS: Differentiable architecture search. In Proceedings of the International Conference on Learning Representations (ICLR), New Orleans, LA, USA, 6–9 May 2019.
34. Loni, M.; Sinaei, S.; Zoljodi, A.; Daneshtalab, M.; Sjödin, M. DeepMaker: A multi-objective optimization framework for deep neural networks in embedded systems. Microprocess. Microsyst. 2020, 73, 102989.
35. Sun, Y.; Xue, B.; Zhang, M.; Yen, G.G. Automatically Designing CNN Architectures Using the Genetic Algorithm for Image Classification. IEEE Trans. Cybern. 2020, 50, 3840–3854.
36. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.
37. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.
38. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 1097–1105. Available online: https://dblp.org/rec/conf/nips/KrizhevskySH12.html (accessed on 18 June 2024).
39. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.
40. Wang, H.; Wang, C.; Li, Y.; Chen, H.; Zhou, J.; Zhang, J. Towards Adaptive Consensus Graph: Multi-View Clustering via Graph Collaboration. In IEEE Transactions on Multimedia; IEEE: New York, NY, USA, 2022.
41. Liang, M.; Yang, B.; Chen, Y.; Hu, R.; Urtasun, R. Multi-task multi-sensor fusion for 3D object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 7345–7353.
42. Ku, J.; Mozifian, M.; Lee, J.; Harakeh, A.; Waslander, S.L. Joint 3D proposal generation and object detection from view aggregation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1–8.
43. Vora, S.; Lang, A.H.; Helou, B.; Beijbom, O. PointPainting: Sequential fusion for 3D object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020; pp. 4606–4612.
44. Heinzler, R.; Schindler, P.; Seekircher, J.; Ritter, W.; Stork, W. Weather influence and classification with automotive lidar sensors. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 1527–1534.
45. Sebastian, G.; Vattem, T.; Lukic, L.; Burgy, C.; Schumann, T. RangeWeatherNet for LiDAR-only weather and road condition classification. In Proceedings of the 2021 IEEE Intelligent Vehicles Symposium (IV), Nagoya, Japan, 11–17 July 2021; pp. 1527–1534.
46. Heinzler, R.; Piewak, F.; Schindler, P.; Stork, W. CNN-Based Lidar Point Cloud De-Noising in Adverse Weather. IEEE Robot. Autom. Lett. 2020, 5, 2514–2521.
47. Seppänen, A.; Ojala, R.; Tammi, K. 4DenoiseNet: Adverse Weather Denoising from Adjacent Point Clouds. IEEE Robot. Autom. Lett. 2022, 8, 456–463.
48. Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. DehazeNet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.
49. Hasirlioglu, S.; Riener, A. A general approach for simulating rain effects on sensor data in real and virtual environments. IEEE Trans. Intell. Veh. 2019, 5, 426–438.
50. Shao, Y.; Liu, Y.; Zhu, C.; Chen, H.; Li, Z.; Zhang, J. Domain Adaptation for Image Dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2808–2817.
51. Wu, A.; Li, W.; Zhou, W.; Cao, X. Instance-Invariant Domain Adaptive Object Detection via Progressive Disentanglement. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 4178–4193.
52. Wu, A.; Li, W.; Zhou, W.; Cao, X. Vector-Decomposed Disentanglement for Domain-Invariant Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 9342–9351.
53. Wu, A.; Deng, C. Single-Domain Generalized Object Detection in Urban Scene via Cyclic-Disentangled Self-Distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 847–856.
54. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.
55. Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.
56. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M.A. Playing Atari with Deep Reinforcement Learning. arXiv 2013, arXiv:1312.5602.
57. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.
58. Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.
59. Zhang, Q.-L.; Yang, Y.-B. ResT: An Efficient Transformer for Visual Recognition. Adv. Neural Inf. Process. Syst. 2021, 34, 15475–15485.
60. Meyer, G.P.; Laddha, A.; Kee, E.; Vallespi-Gonzalez, C.; Wellington, C.K. LaserNet: An Efficient Probabilistic 3D Object Detector for Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 12677–12686.
61. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.
62. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

| Dataset | Environmental Condition (Light, Weather) | Training | Validation | Testing |
|---|---|---|---|---|
| T1 | Daytime, Clear | 2183 | 399 | 1005 |
| T2 | Daytime, Snow | 1615 | 226 | 452 |
| T3 | Daytime, Fog | 525 | 69 | 140 |
| T4 | Nighttime, Clear | 1343 | 409 | 877 |
| T5 | Nighttime, Snow | 1720 | 240 | 480 |
| T6 | Nighttime, Fog | 525 | 69 | 140 |
| Total | | 8238 | 1531 | 3189 |

| Categories | Parameters | Settings |
|---|---|---|
| Search space | Initial channel | 160 |
| | Maximum nodes | 6 |
| Learning optimizer | Maximum epochs Learning rate schedule | 0.5 500 ADAM [61] |
| Search strategy | Population size | 10 |
| | Crossover probability | 0.3 |
| | Mutation probability | 0.1 |
| | (Loss) | 0.8 |
| | (FPS) | 0.1 |
| | (Convergence) | 0.1 |
| Reinforcement Learning | Batch size Gamma Learning rate | 128 0.99 0.9 0.05 10,000 1 × 10⁻⁴ |

| Backbone | Fusion Module (C, L) | FPS (Frame/s) | Top1-mAP (%) | Number of Epochs to Convergence |
|---|---|---|---|---|
| ResNet | None | 15 | 0.275 | 16 |
| | CBAM | 12 | 0.278 | 28(+12) |
| | AFAM (CBAM + MMTM) | 10 | 0.293 | 29(+13) |
| | Proposed | 11 | 0.292 | 20(+4) |
| EfficientNet-b3 | None | 15 | 0.414 | 28 |
| | CBAM | 12 | 0.388 | 18(−10) |
| | AFAM (CBAM + MMTM) | 10 | 0.419 | 27(−1) |
| | Proposed | 11 | 0.434 | 24(−4) |
| ResT | None | 5 | 0.247 | 35 |
| | CBAM | 4 | 0.299 | 40(+5) |
| | AFAM (CBAM + MMTM) | 4 | 0.319 | 45(+10) |
| | Proposed | 3 | 0.334 | 31(−4) |

| Backbone | Module Search Method | Components | GPU-Days | FPS (Frame/s) | Top1-mAP (%) | Number of Epochs to Convergence |
|---|---|---|---|---|---|---|
| ResNet | None | - | - | 15 | 0.275 | 16 |
| | NSGA-NET | GA | 4 | 11 | 0.288 | 22(+6) |
| | EEEA | GA + Rule | 0.2 | 13 | 0.280 | 17(+1) |
| | Proposed | GA + RL | 3 | 11 | 0.292 | 20(+4) |
| EfficientNet-b3 | None | - | - | 15 | 0.414 | 28 |
| | NSGA-NET | GA | 4 | 11 | 0.428 | 25(−3) |
| | EEEA | GA + Rule | 0.2 | 12 | 0.381 | 22(−6) |
| | Proposed | GA + RL | 3 | 11 | 0.434 | 24(−4) |
| ResT | None | - | - | 5 | 0.247 | 35 |
| | NSGA-NET | GA | 8 | 3 | 0.322 | 33(−2) |
| | EEEA | GA + Rule | 0.5 | 4 | 0.283 | 30(−5) |
| | Proposed | GA + RL | 7 | 3 | 0.334 | 31(−4) |

| Backbone | Fitness | Top1-Acc | Number of Epochs to Convergence |
|---|---|---|---|
| EfficientDet-b3 | Loss, FPS | 0.406 | 25 |
| | w/Convergence (Loss, Q) | 0.424 | 24 |
| ResNet-EfficientDet | Loss, FPS | 0.290 | 21 |
| | w/Convergence (Loss, Q) | 0.292 | 20 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, T.-L.; Park, T.-H. Reinforcement Learning and Genetic Algorithm-Based Network Module for Camera-LiDAR Detection. Remote Sens. 2024, 16, 2287. https://doi.org/10.3390/rs16132287
