Generation of High-Resolution Blending Data Using Gridded Visibility Data and GK2A Fog Product

Abstract

1. Introduction

2. Materials and Methods

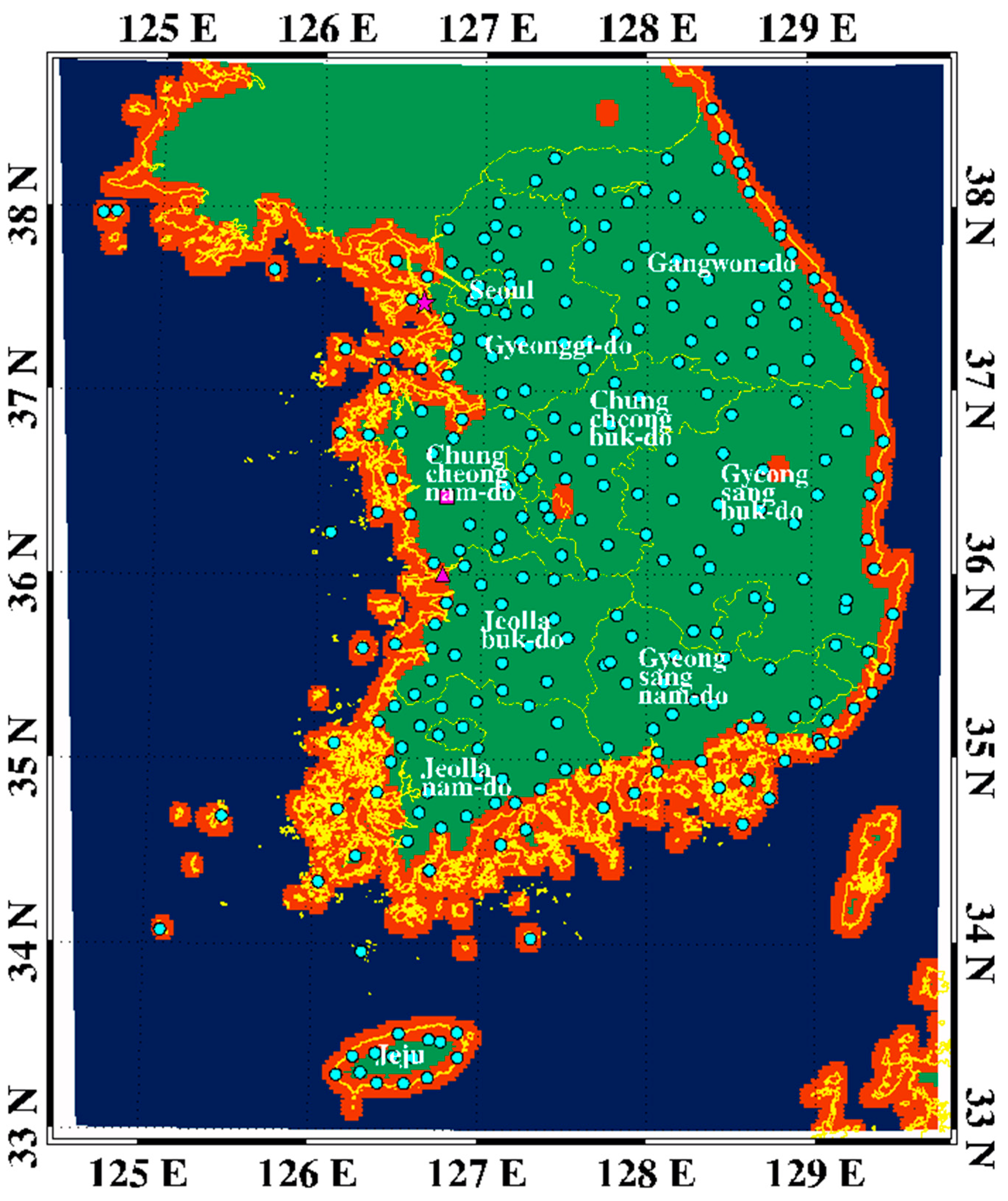

2.1. Materials

2.2. Methods

3. Results

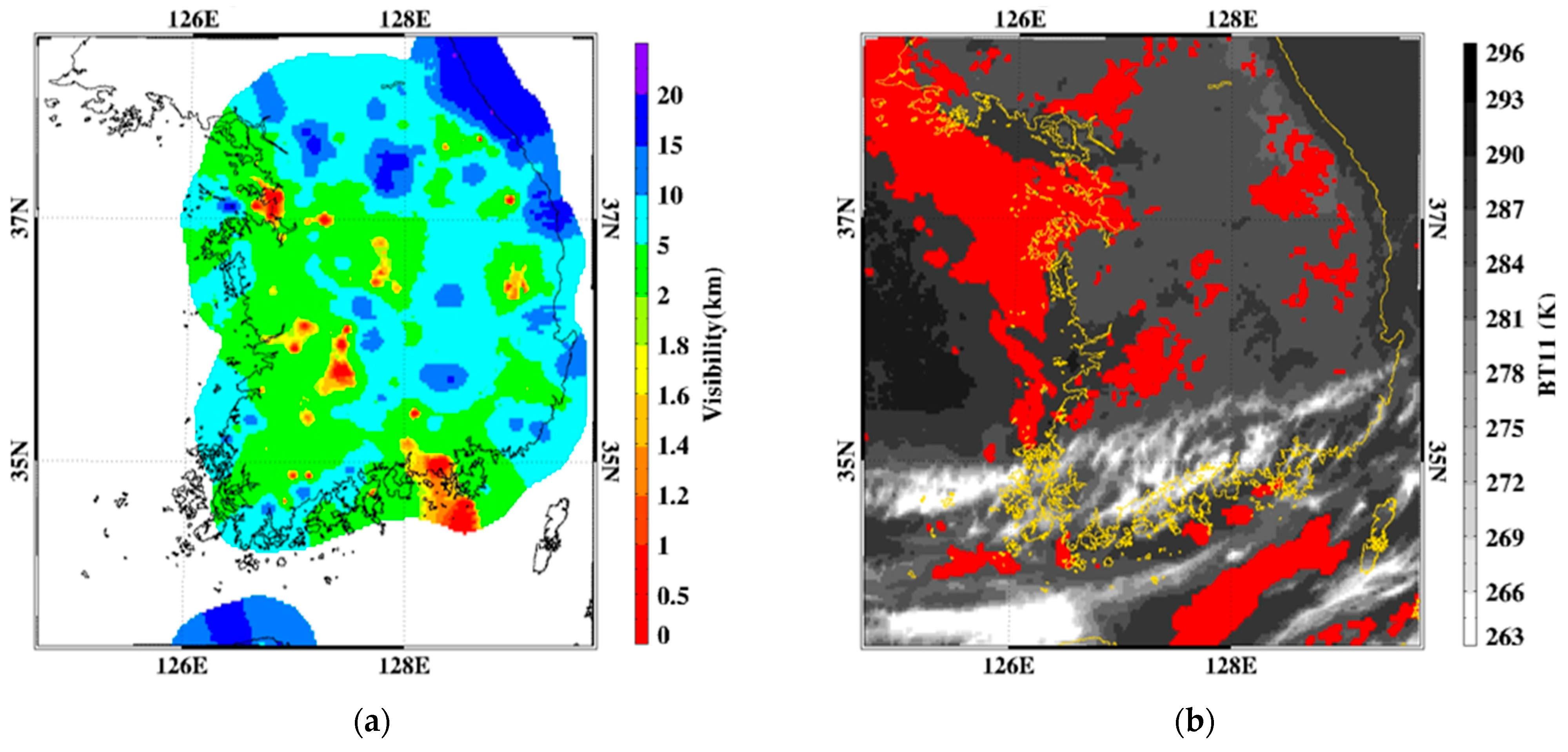

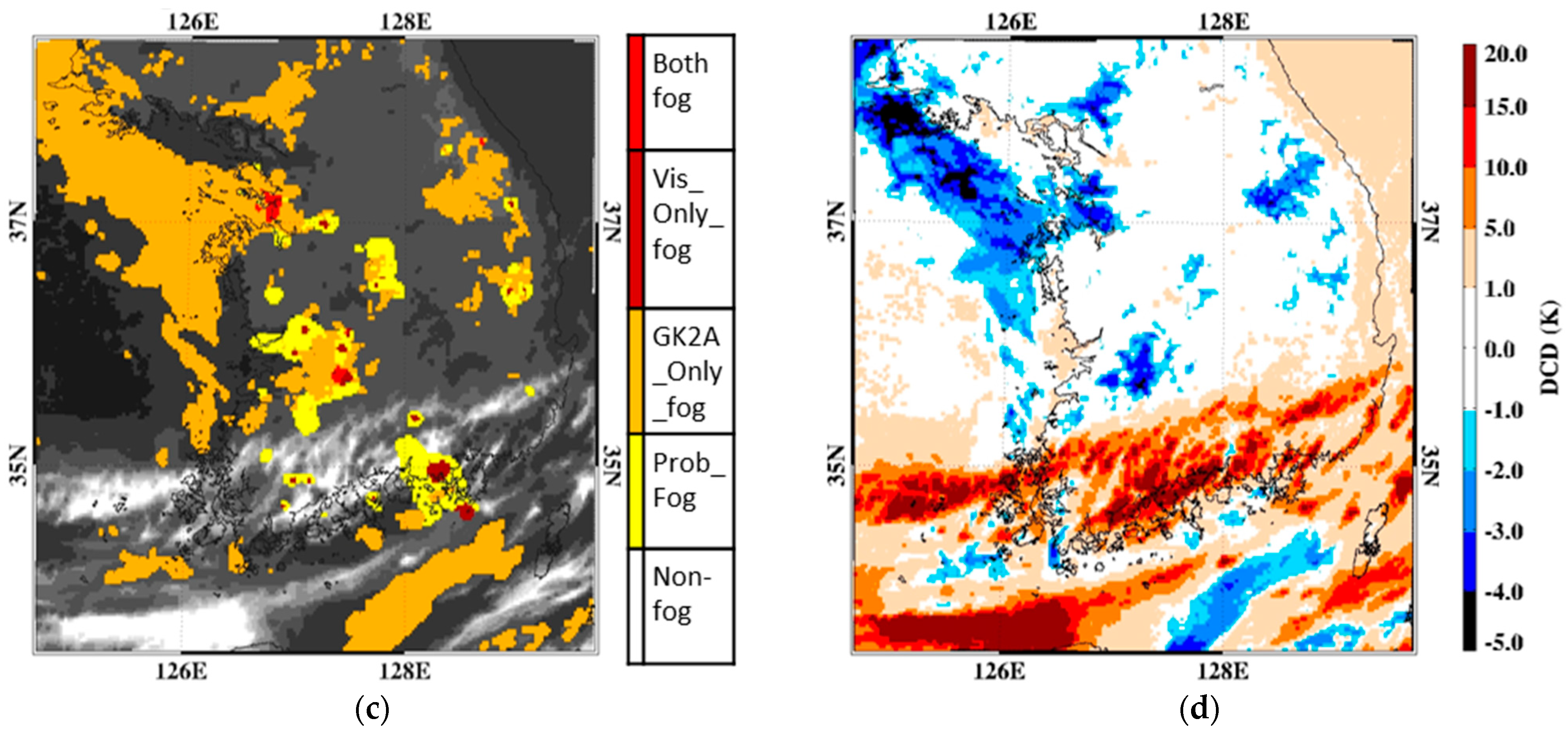

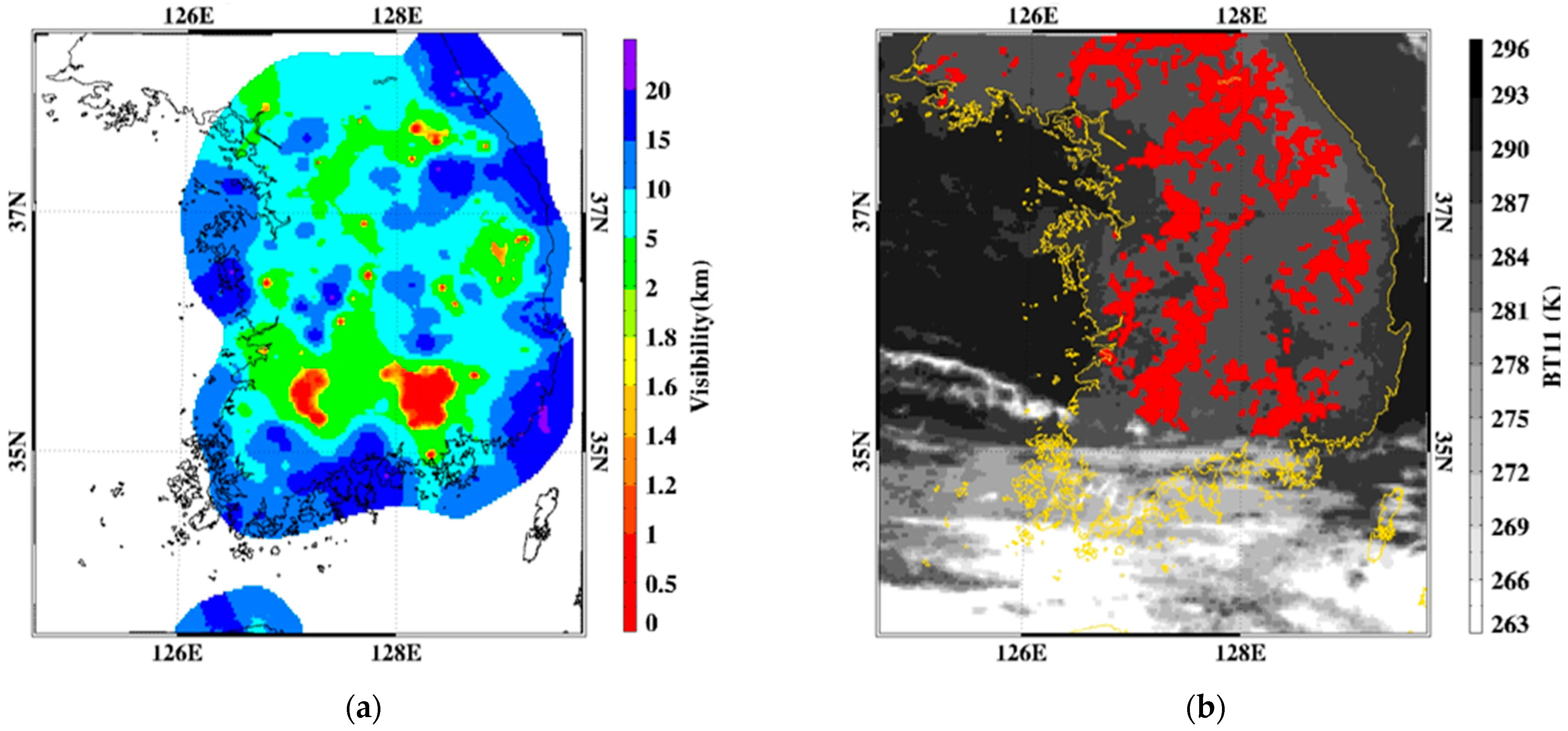

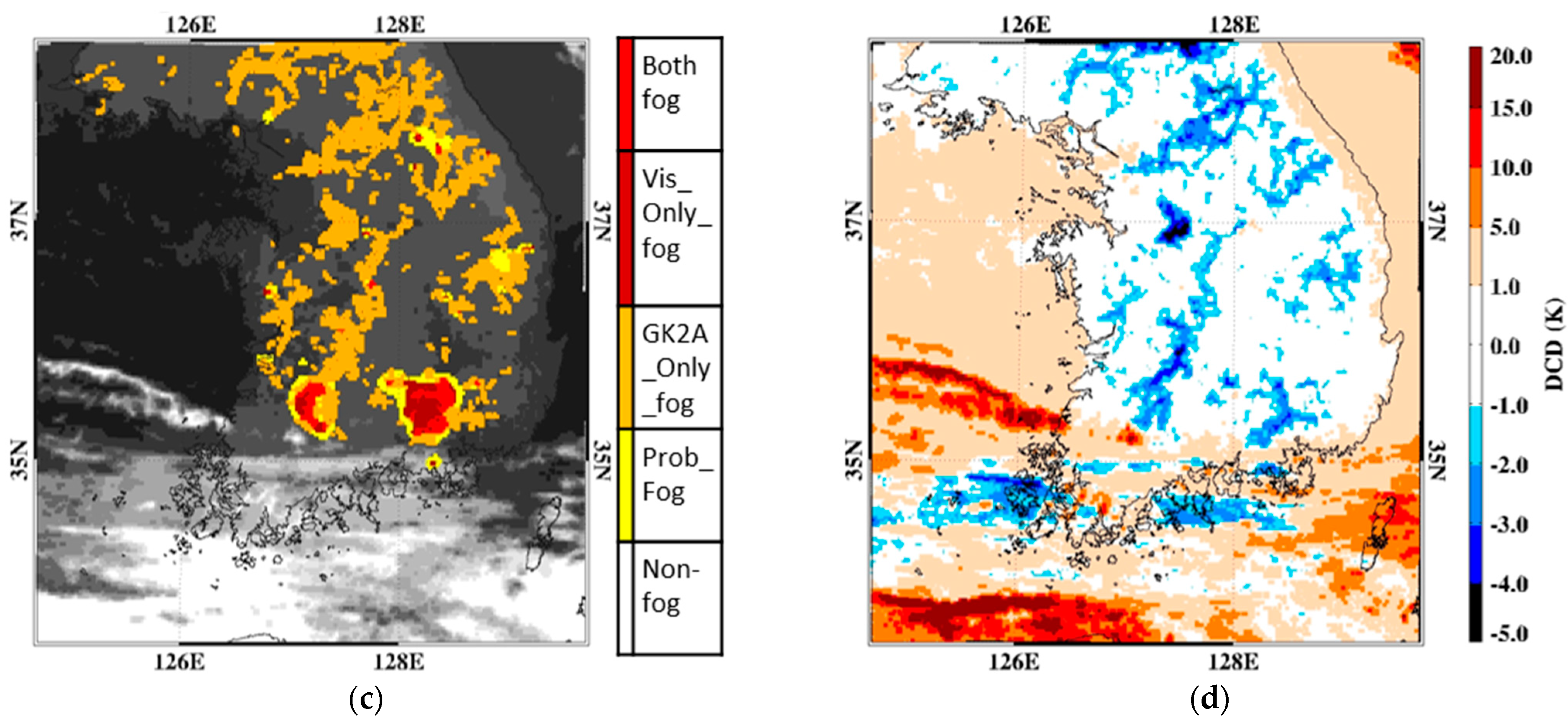

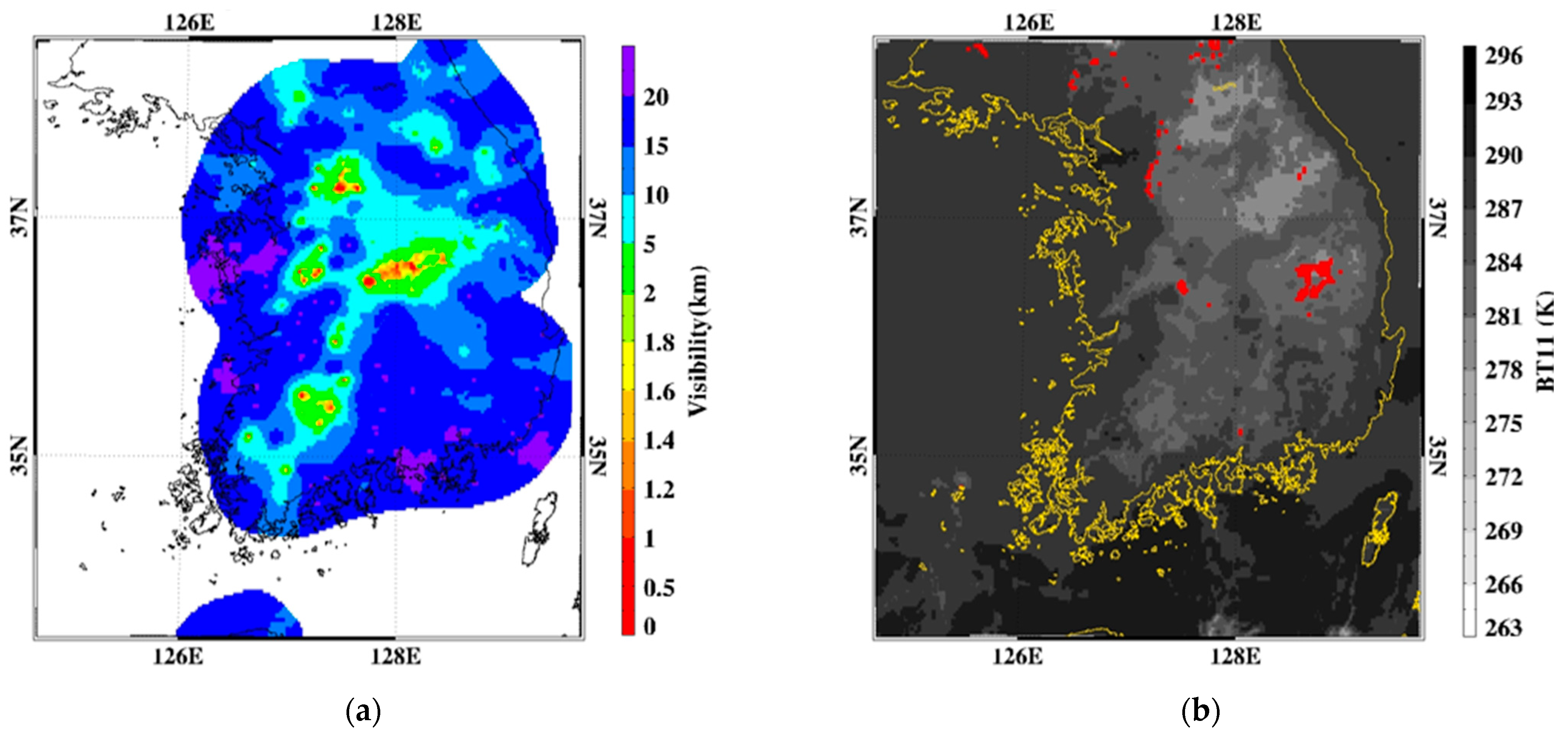

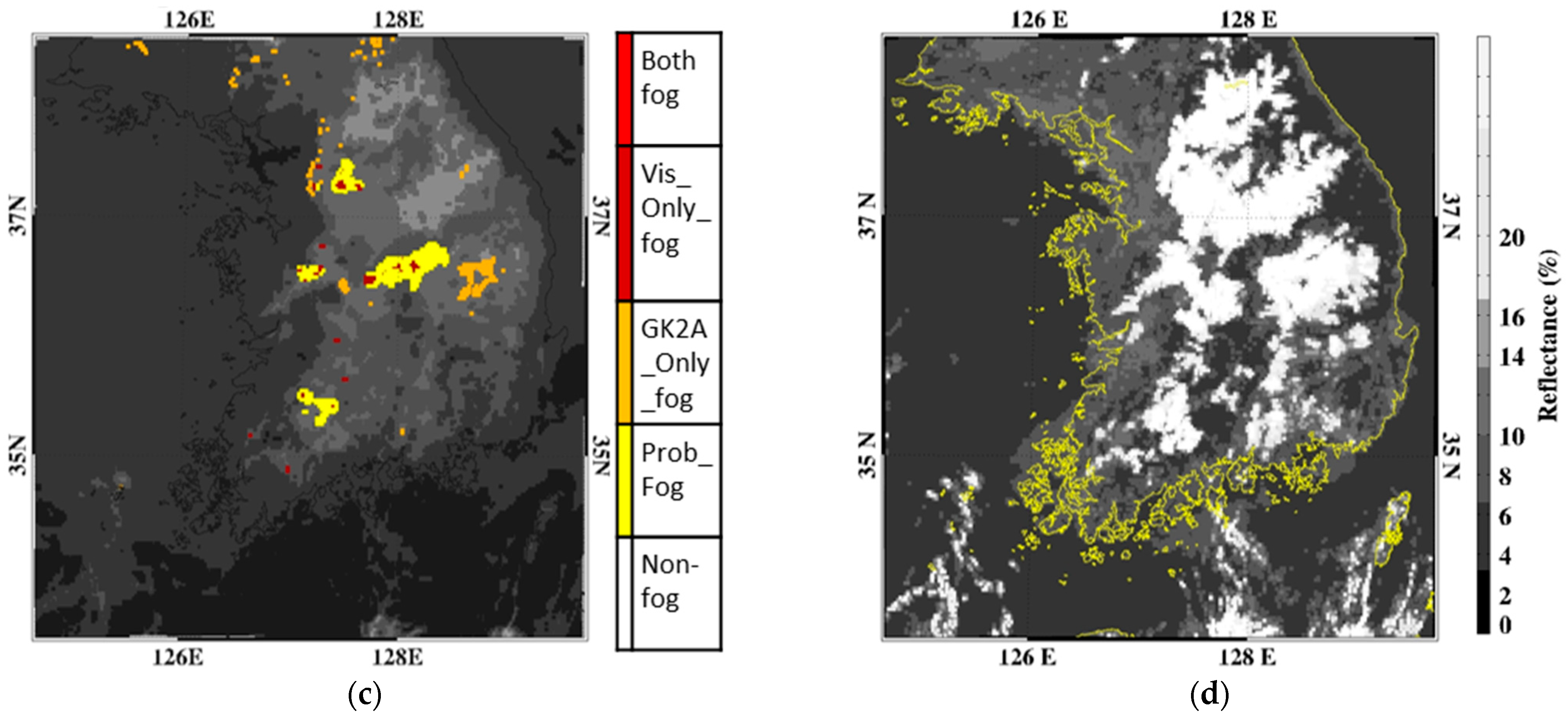

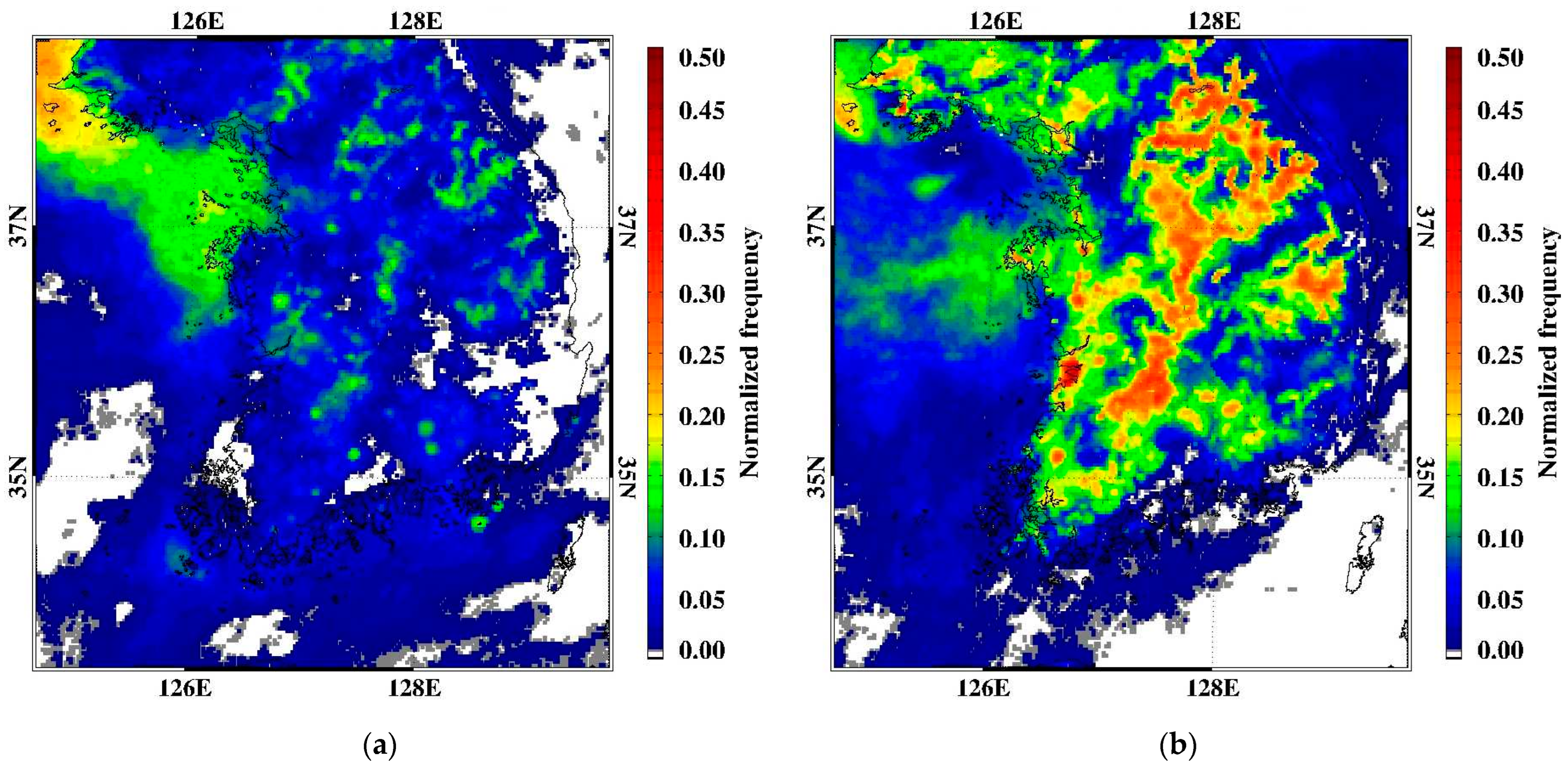

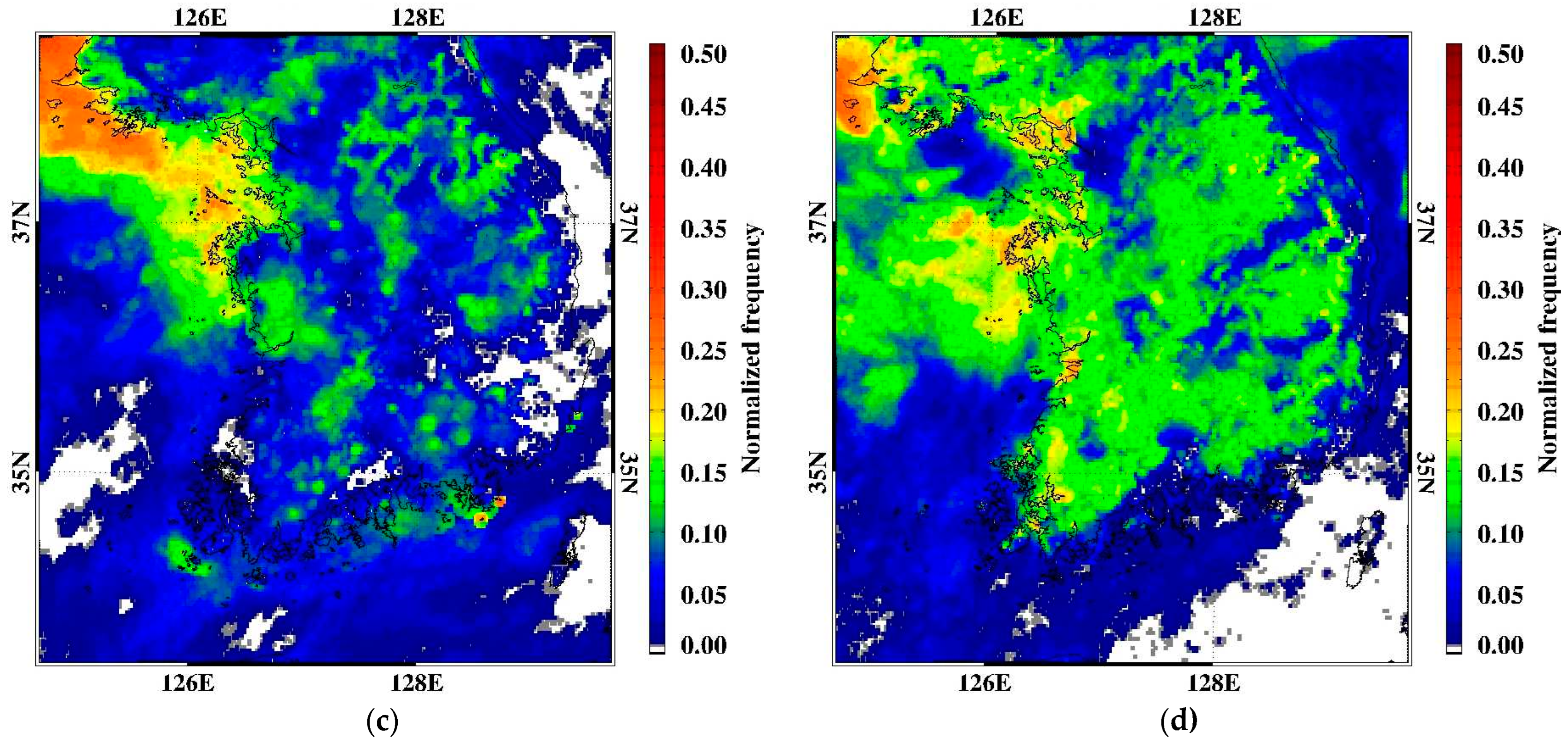

3.1. Qualitative Analysis

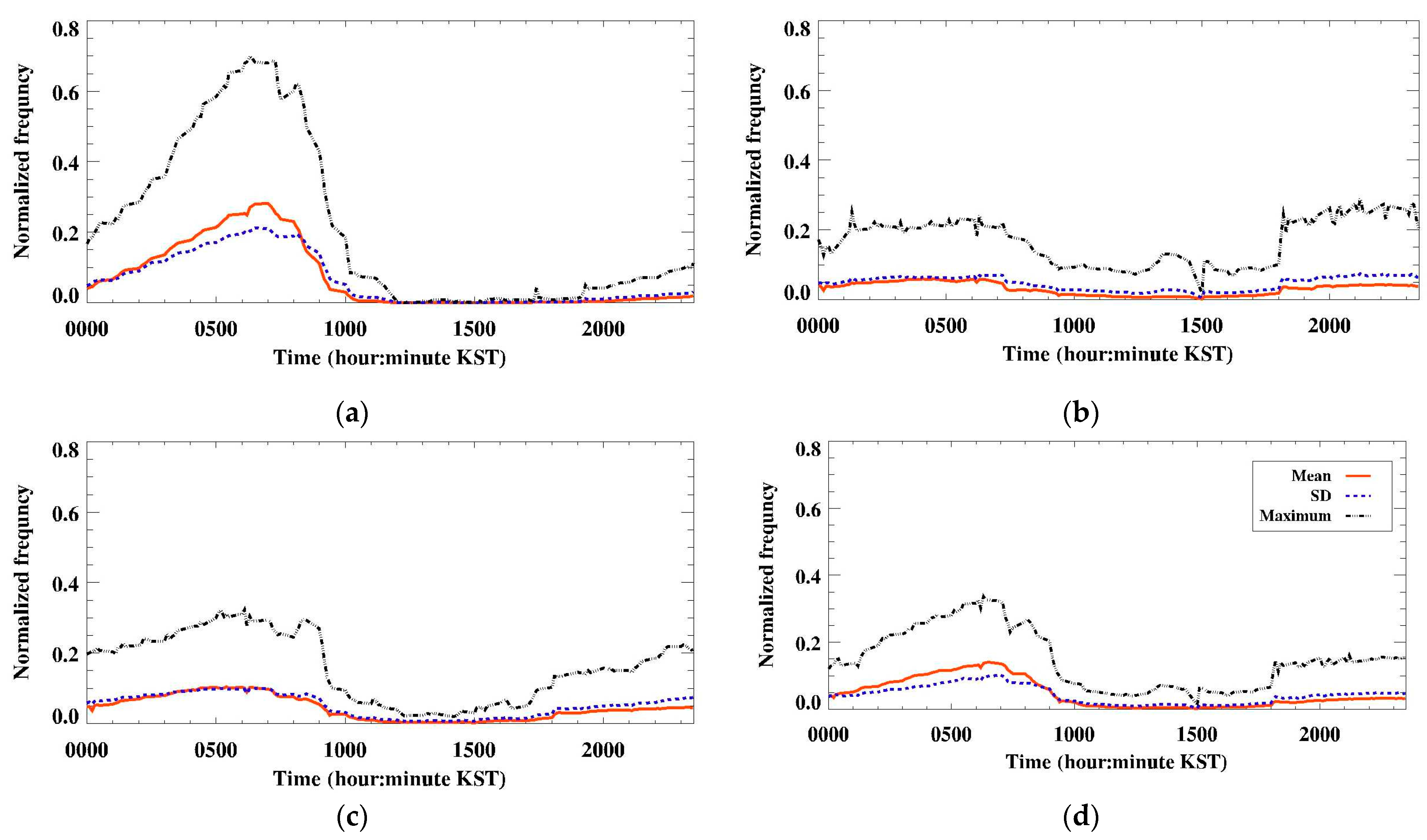

3.2. Analysis of Fog Occurrence Characteristics

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Characteristics | Gridded Vis. | Sat. Fog Product |
|---|---|---|
| Frequency | 10 min | 10 min |
| Spatial resolution | 2 km | 2 km |
| Domain | South Korea (only land) | East Asia |
| Retrieval method | IDW method | Decision tree method |
| Initial data | Visibility meter | GK2A/AMI |
| Units | km (fog: <1 km) | Category (1–7) |
| Range of valid values | 0–20 km | 1: Clear; 2: Middle or High Cloud; 3: Unknown; 4: Probably Fog; 5: Fog; 6: Snow; 7: Desert or Semi-desert |
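
The table above lists inverse distance weighting (IDW) as the retrieval method for the gridded visibility data. The following is a minimal sketch of plain IDW at a single grid point, not the authors' implementation: the function name `idw_visibility`, the planar km coordinates, and the power of 2 are assumptions made for illustration, and no search radius or quality control is applied.

```python
import math
from typing import List, Optional, Tuple

# Each station is (x_km, y_km, visibility_km); planar coordinates are an assumption.
Station = Tuple[float, float, float]


def idw_visibility(stations: List[Station], x: float, y: float,
                   power: float = 2.0) -> Optional[float]:
    """Estimate visibility (km) at (x, y) by inverse distance weighting.

    `power` = 2 is a common default and is assumed here, not taken from the source.
    Returns None when no station is available.
    """
    if not stations:
        return None
    weighted_sum = 0.0
    weight_total = 0.0
    for sx, sy, vis in stations:
        dist = math.hypot(x - sx, y - sy)
        if dist == 0.0:
            # The grid point coincides with a station: use its value directly.
            return vis
        weight = 1.0 / dist ** power
        weighted_sum += weight * vis
        weight_total += weight
    return weighted_sum / weight_total


# Two hypothetical stations 2 km apart reporting 1 km and 3 km visibility.
demo_stations = [(0.0, 0.0, 1.0), (2.0, 0.0, 3.0)]
midpoint_vis = idw_visibility(demo_stations, 1.0, 0.0)
```

At the midpoint both stations get equal weight, so the estimate is the simple average of the two readings; closer to one station, that station's reading dominates.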

| Case # | Date | # of Fog |
|---|---|---|
| 1 | 4 July 2019 | 1244 |
| 2 | 14 July 2019 | 774 |
| 3 | 24 July 2019 | 320 |
| 4 | 26 July 2019 | 676 |
| 5 | 25 August 2019 | 570 |
| 6 | 26 August 2019 | 815 |
| 7 | 30 August 2019 | 1464 |
| 8 | 31 August 2019 | 227 |
| 9 | 17 September 2019 | 525 |
| 10 | 24 September 2019 | 2483 |
| 11 | 29 September 2019 | 2286 |
| 12 | 30 September 2019 | 2011 |
| 13 | 1 October 2019 | 719 |
| 14 | 4 October 2019 | 2385 |
| 15 | 20 October 2019 | 3823 |
| 16 | 5 November 2019 | 2995 |
| 17 | 6 November 2019 | 3360 |
| 18 | 12 November 2019 | 1893 |
| 19 | 8 December 2019 | 696 |
| 20 | 19 December 2019 | 76 |
| 21 | 10 February 2020 | 72 |
| 22 | 11 February 2020 | 151 |
| 23 | 1 March 2020 | 1277 |
| Total | | 28,570 |

| GVD | GKFP | Product Name | Code | Color | Comments |
|---|---|---|---|---|---|
| Fog | Fog | Both fog | 1 | Red | Both data are fog |
| Fog | Non-fog | Vis_Only_fog | 2 | Deep red | Visibility < 1 km |
| Fog | No data | Vis_Only_fog | 6 | Deep red | Only gridded visibility data |
| Non-fog | Fog | GK2A_Only_fog | 3 | Orange | GK2A fog value is fog |
| Non-fog | Non-fog | Prob_fog | 4 | Yellow | 1 km ≤ ave. 3 × 3 < 2 km |
| Non-fog | Non-fog | Non-fog | 5 | - | Both data are non-fog |
| Non-fog | No data | Prob_fog | 7 | Yellow | 1 km ≤ ave. 3 × 3 < 2 km |
| Non-fog | No data | Non-fog | 8 | - | Only gridded visibility data |
| No data | Fog | GK2A_only_fog | 9 | Orange | Only satellite fog data |
| No data | Non-fog | Non-fog | 10 | - | Only satellite fog data |
| No data | No data | Missing | −999 | - | Both data are missing |
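
The blending rules in the table above can be read as a small decision function. The sketch below is one possible reading, not the authors' code: the function name `blend_pixel` and its arguments are hypothetical, the satellite input is assumed to be already reduced to fog / non-fog / no data (the mapping from the seven GK2A categories is not given here), and "ave. 3 × 3" is assumed to mean the mean gridded visibility over a 3 × 3 pixel window.

```python
from typing import Optional, Tuple

FOG_KM = 1.0        # gridded visibility below 1 km counts as fog
PROB_MAX_KM = 2.0   # upper bound of the 3 x 3 mean for Prob_fog


def blend_pixel(gvd_km: Optional[float],
                gkfp_is_fog: Optional[bool],
                mean3x3_km: Optional[float] = None) -> Tuple[str, int]:
    """Return (product name, code) for one pixel.

    gvd_km:      gridded visibility in km, or None when there is no data.
    gkfp_is_fog: True/False for satellite fog/non-fog, or None when no data
                 (assumed pre-classified; hypothetical input format).
    mean3x3_km:  assumed mean visibility of the surrounding 3 x 3 window.
    """
    if gvd_km is None:
        # Only the satellite product can contribute.
        if gkfp_is_fog is None:
            return ("Missing", -999)
        return ("GK2A_only_fog", 9) if gkfp_is_fog else ("Non-fog", 10)

    if gvd_km < FOG_KM:
        # Gridded visibility reports fog.
        if gkfp_is_fog is None:
            return ("Vis_Only_fog", 6)
        return ("Both fog", 1) if gkfp_is_fog else ("Vis_Only_fog", 2)

    # Gridded visibility reports non-fog.
    if gkfp_is_fog:
        return ("GK2A_Only_fog", 3)
    probable = mean3x3_km is not None and FOG_KM <= mean3x3_km < PROB_MAX_KM
    if gkfp_is_fog is None:
        return ("Prob_fog", 7) if probable else ("Non-fog", 8)
    return ("Prob_fog", 4) if probable else ("Non-fog", 5)


# Example: visibility 5 km, satellite says non-fog, 3 x 3 mean of 1.5 km.
example = blend_pixel(5.0, False, 1.5)
```

Keeping separate codes for the "no data" branches (6 to 10) preserves which source each pixel came from, so a user can later filter by data provenance as well as by fog class.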


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Suh, M.-S.; Han, J.-H.; Yu, H.-Y.; Kang, T.-H. Generation of High-Resolution Blending Data Using Gridded Visibility Data and GK2A Fog Product. Remote Sens. 2024, 16, 2350. https://doi.org/10.3390/rs16132350