Integrating Sentinel 2 Imagery with High-Resolution Elevation Data for Automated Inundation Monitoring in Vegetated Floodplain Wetlands

Abstract

1. Introduction

2. Materials and Methods

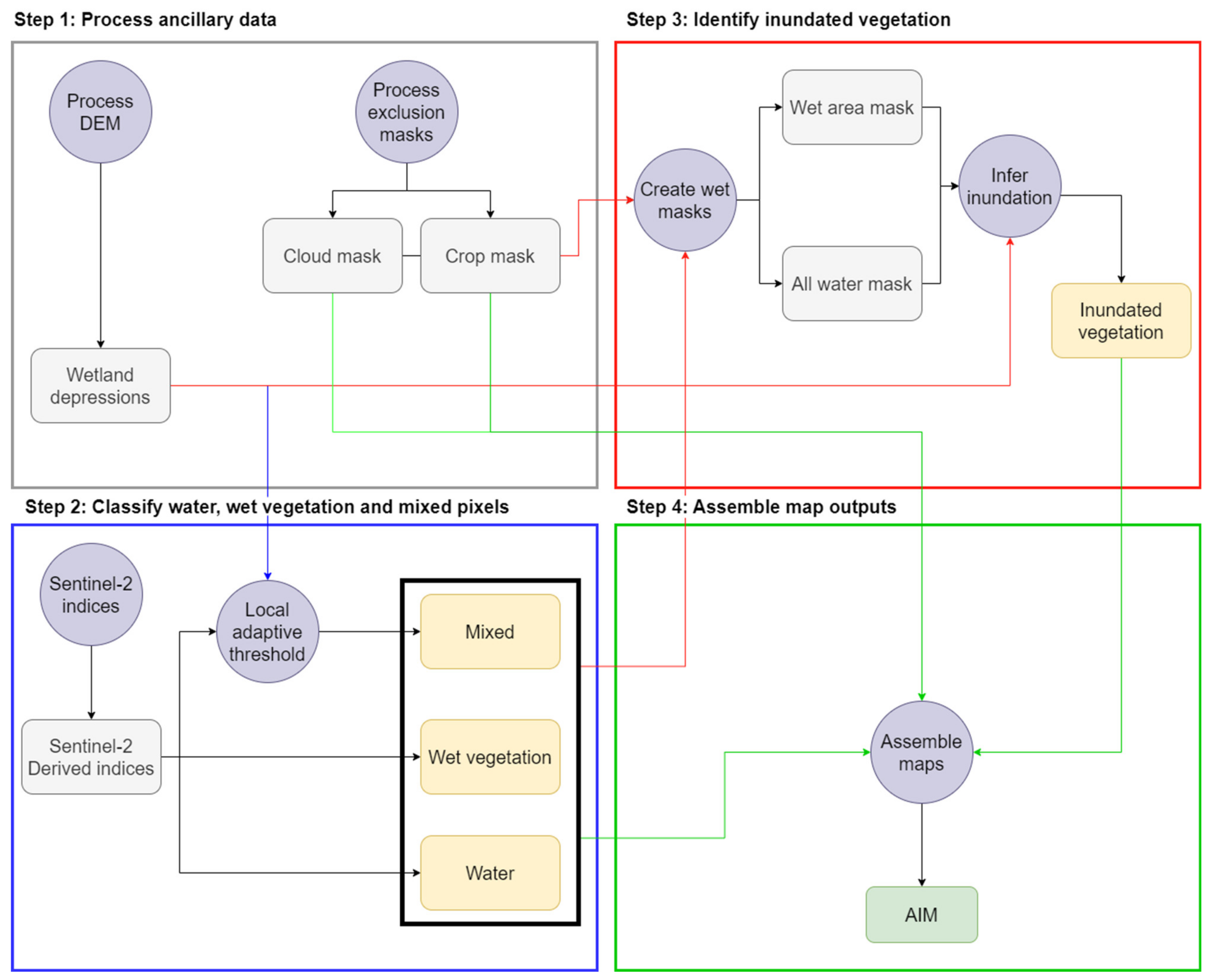

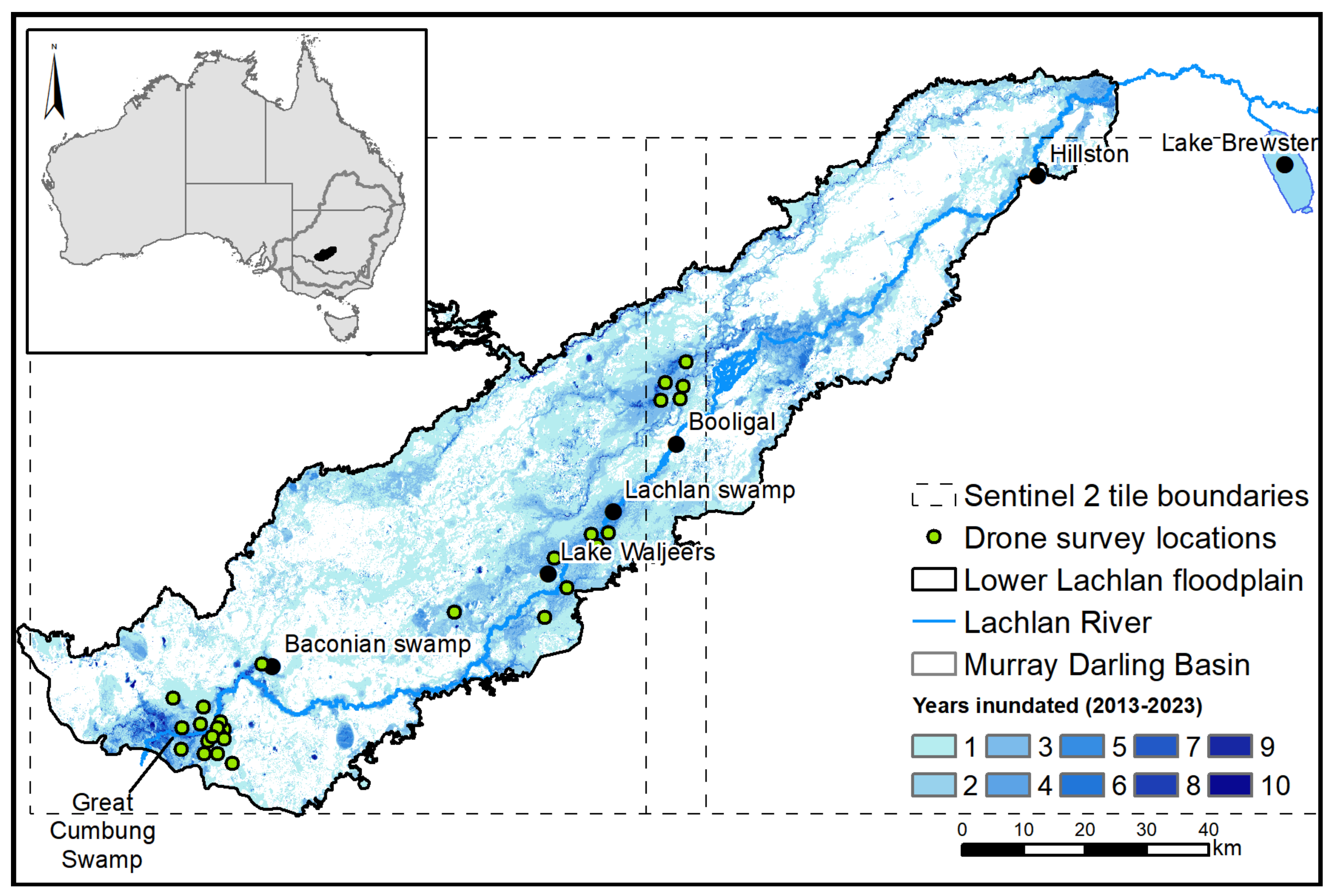

2.1. Automated Inundation Monitoring

2.2. Image Preprocessing and Calculating Indices

2.3. Ancillary Data Processing

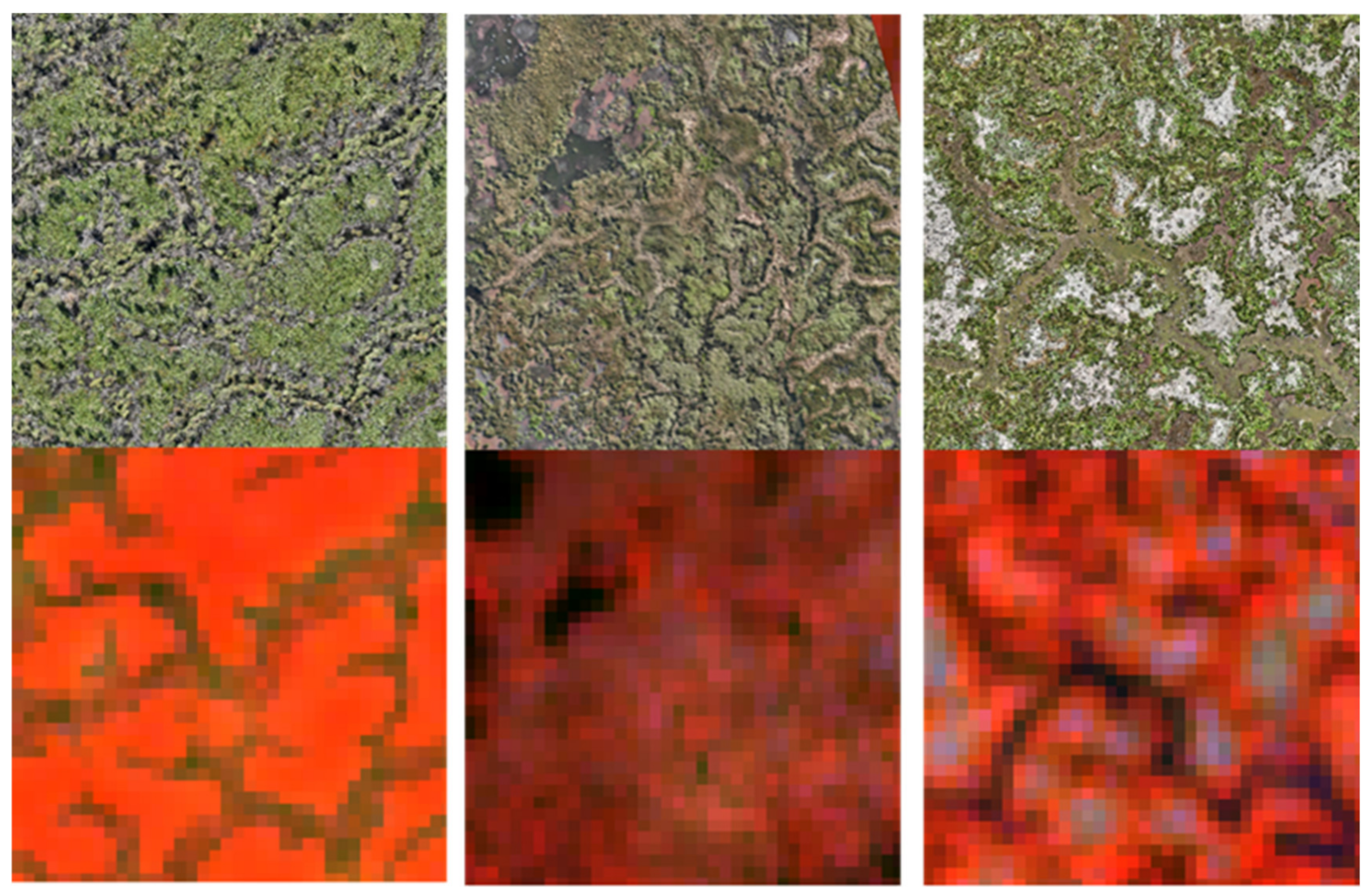

2.4. Aerial Image Data Collection

2.5. Detecting Inundation Classes

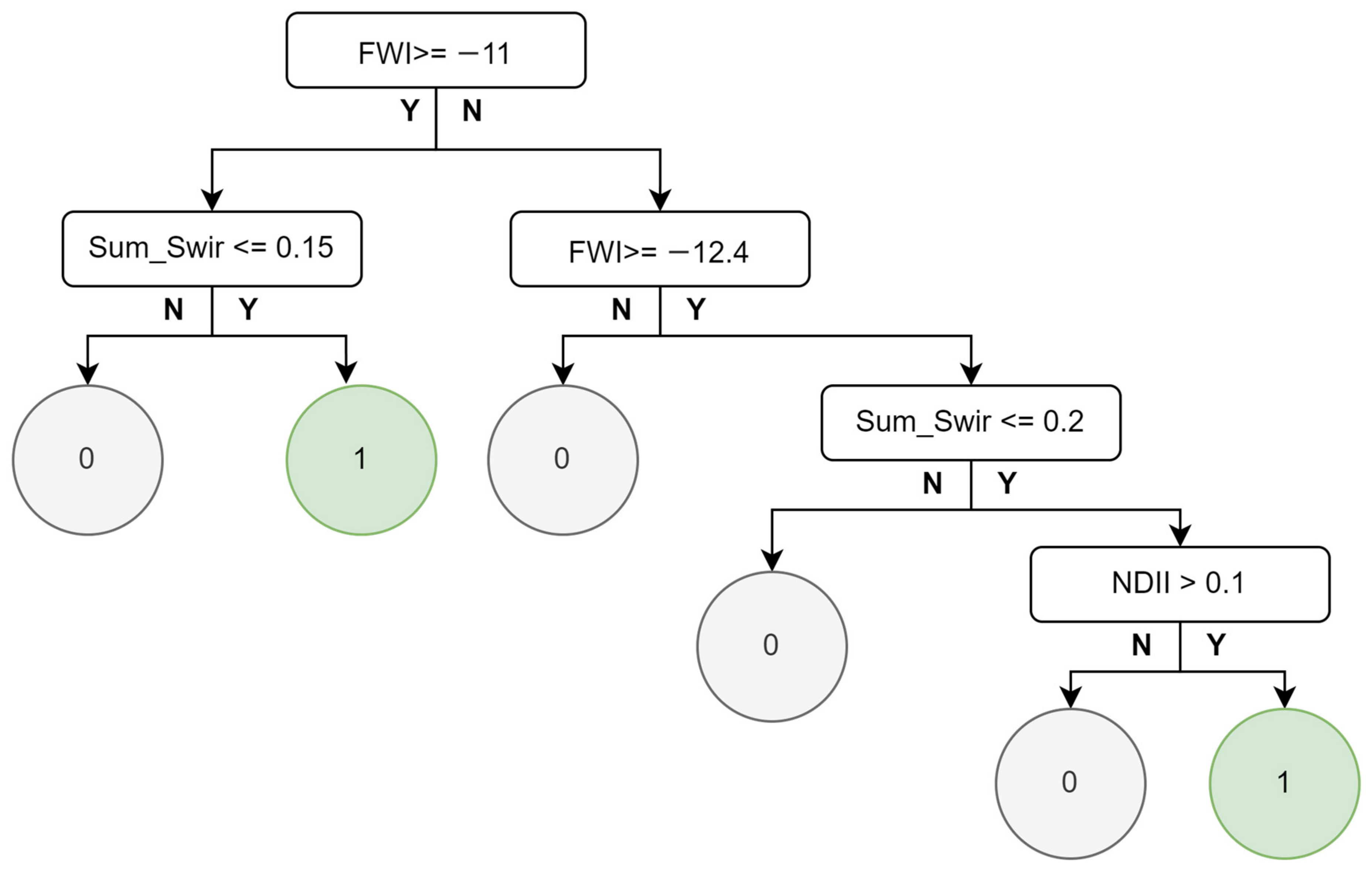

2.5.1. Identifying Water

2.5.2. Identifying Mixed Pixels

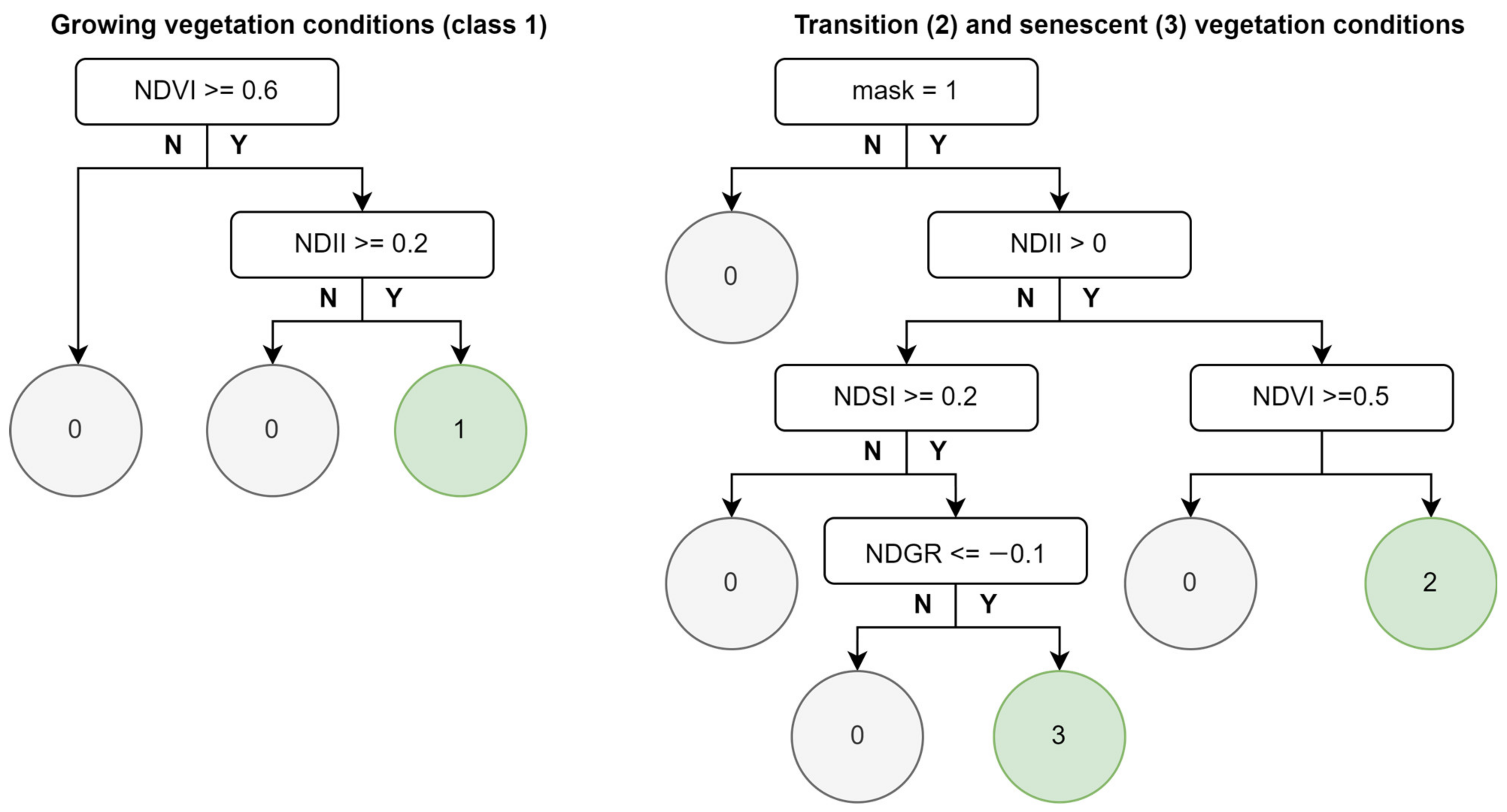

2.5.3. Identifying Wetland Vegetation

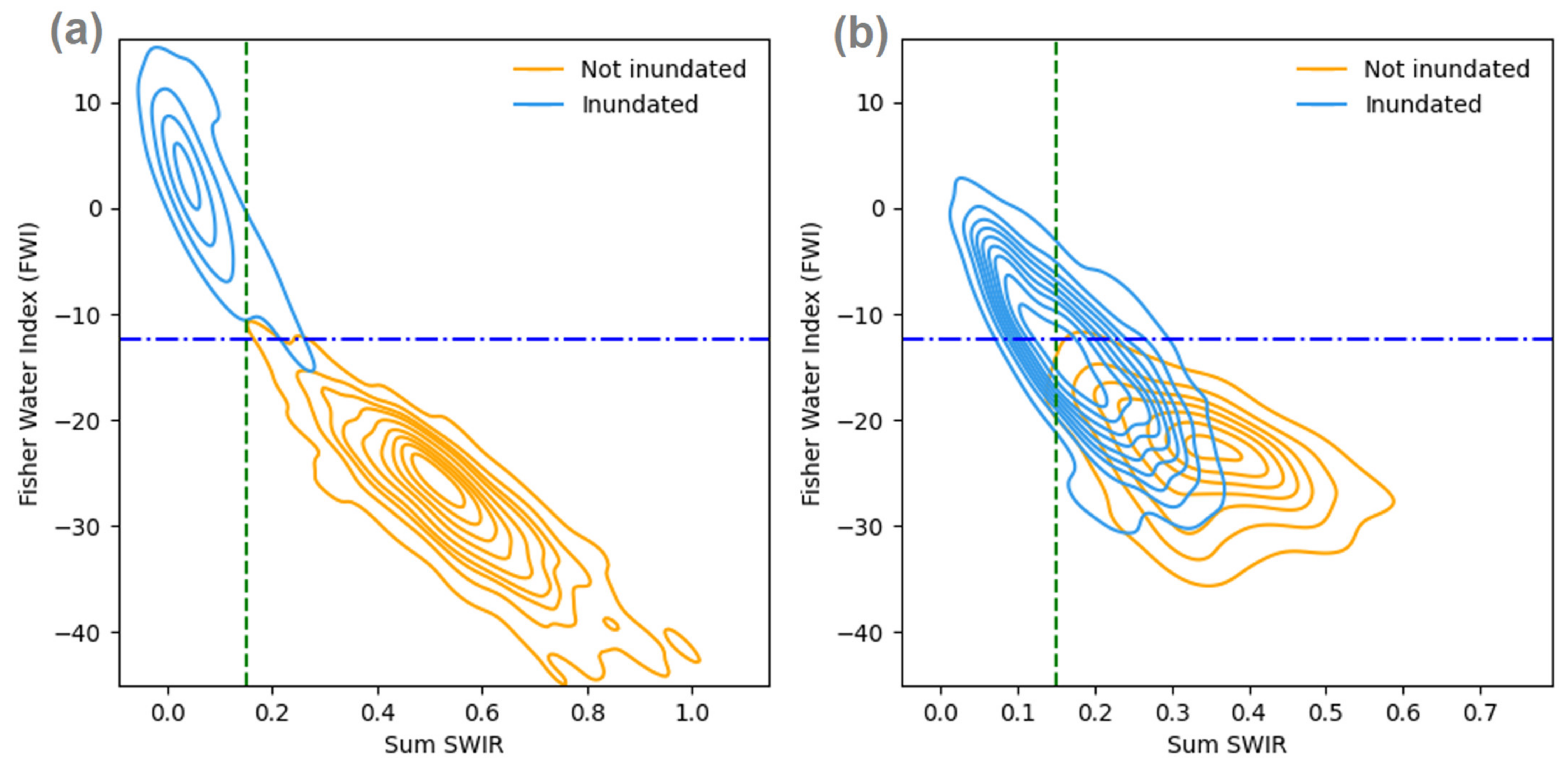

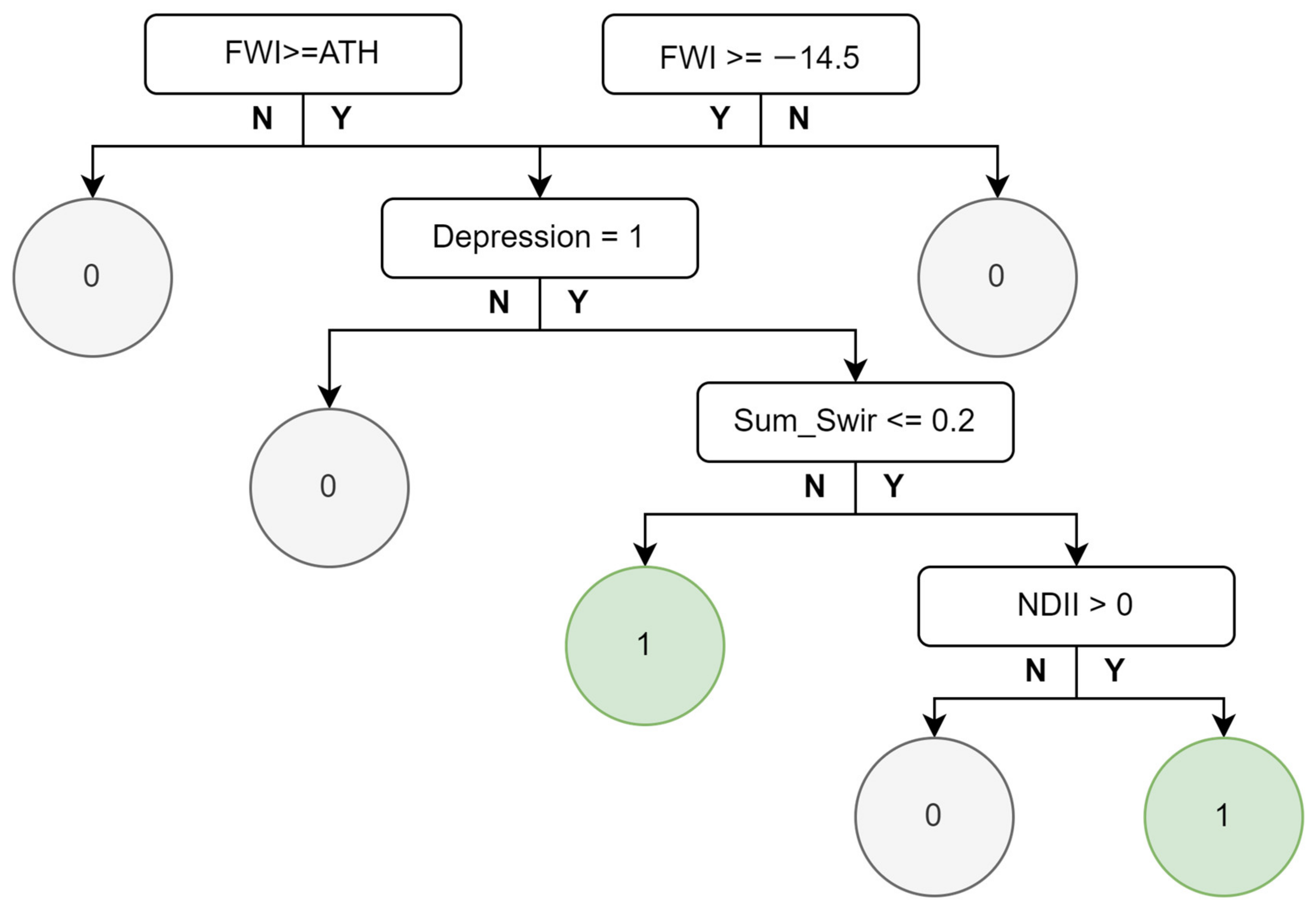

2.5.4. Classifying Inundation Status of Wetland Vegetation

2.6. Final Map Assembly

2.7. Evaluation

3. Results

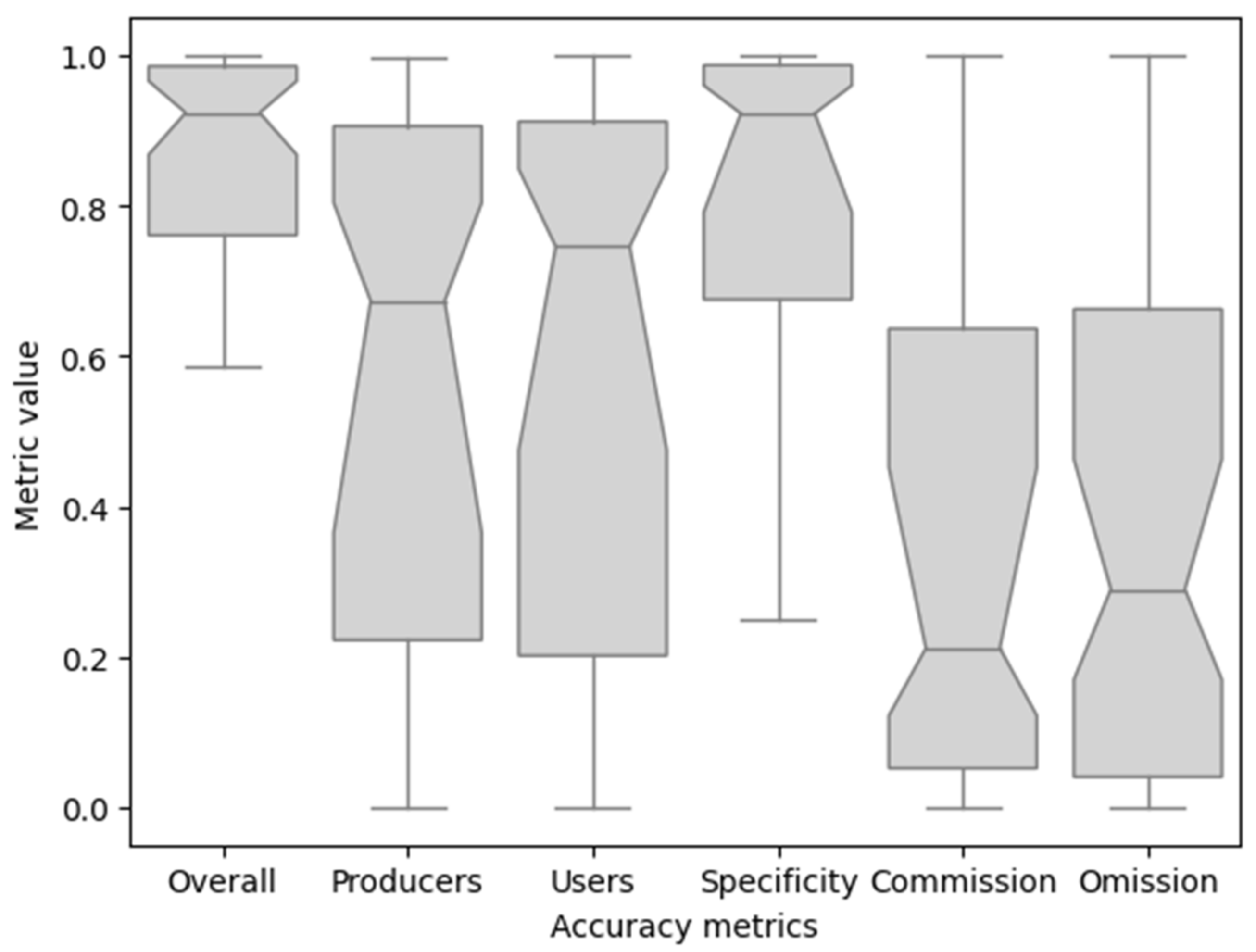

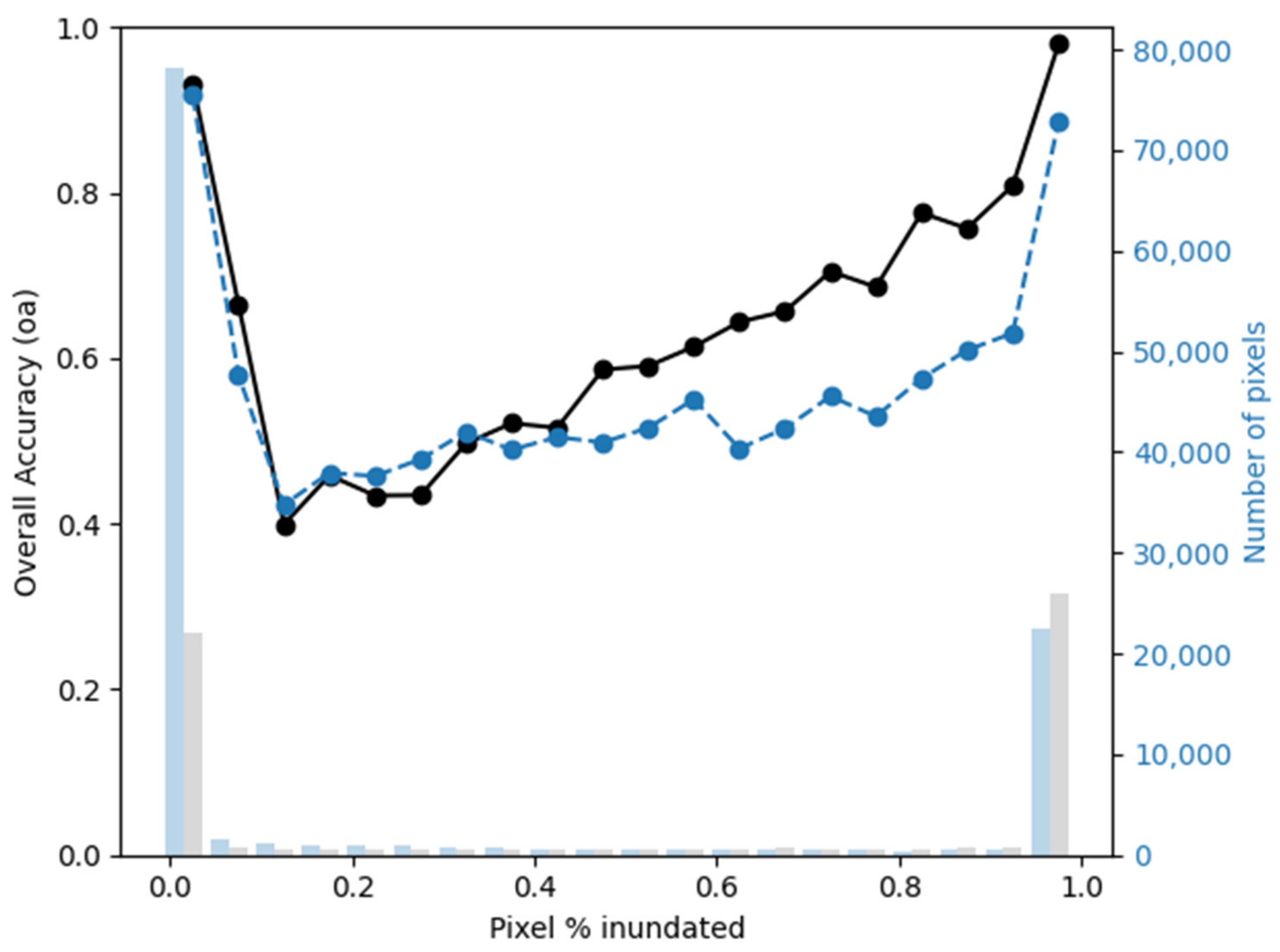

3.1. Accuracy Assessment

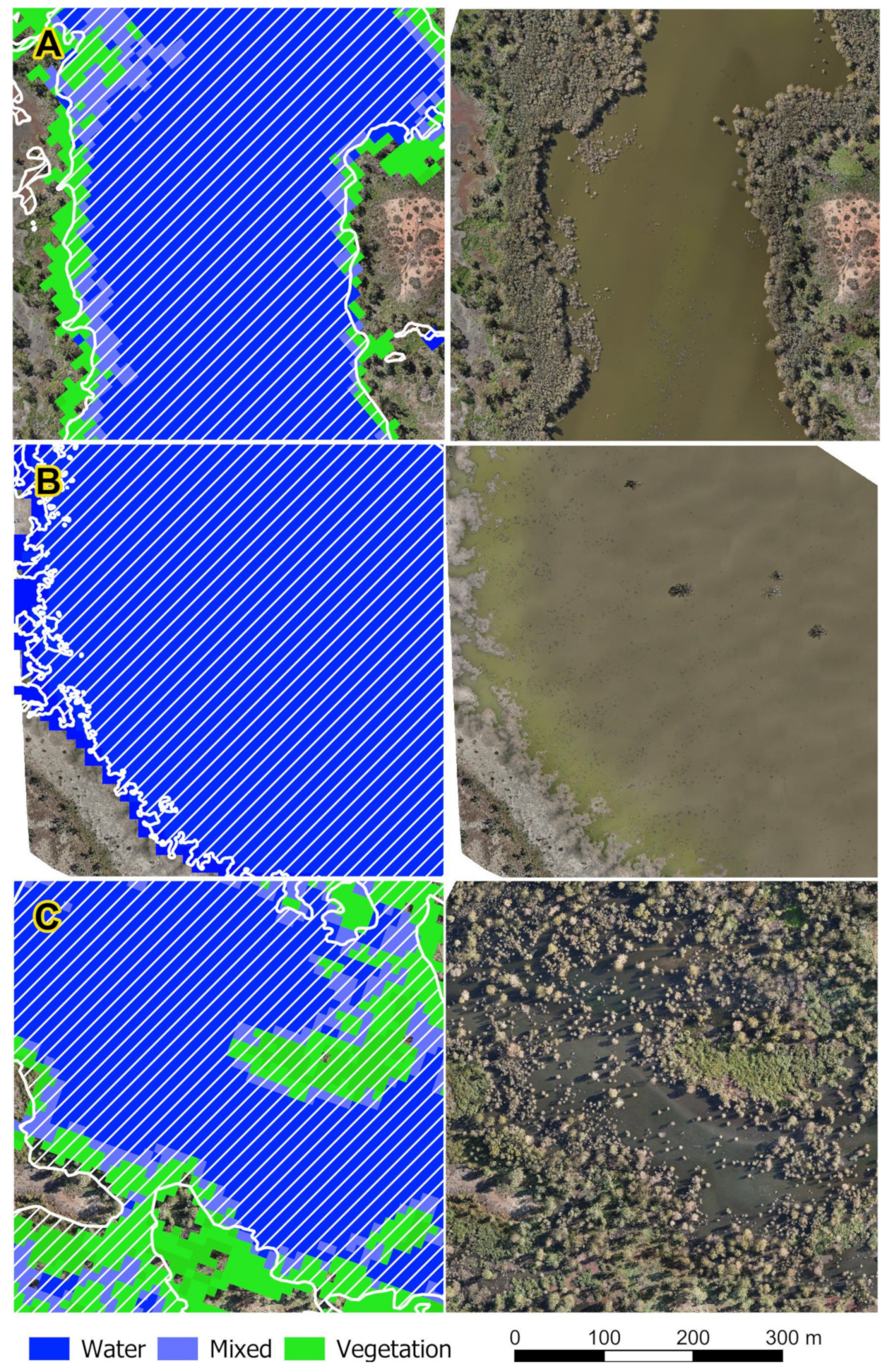

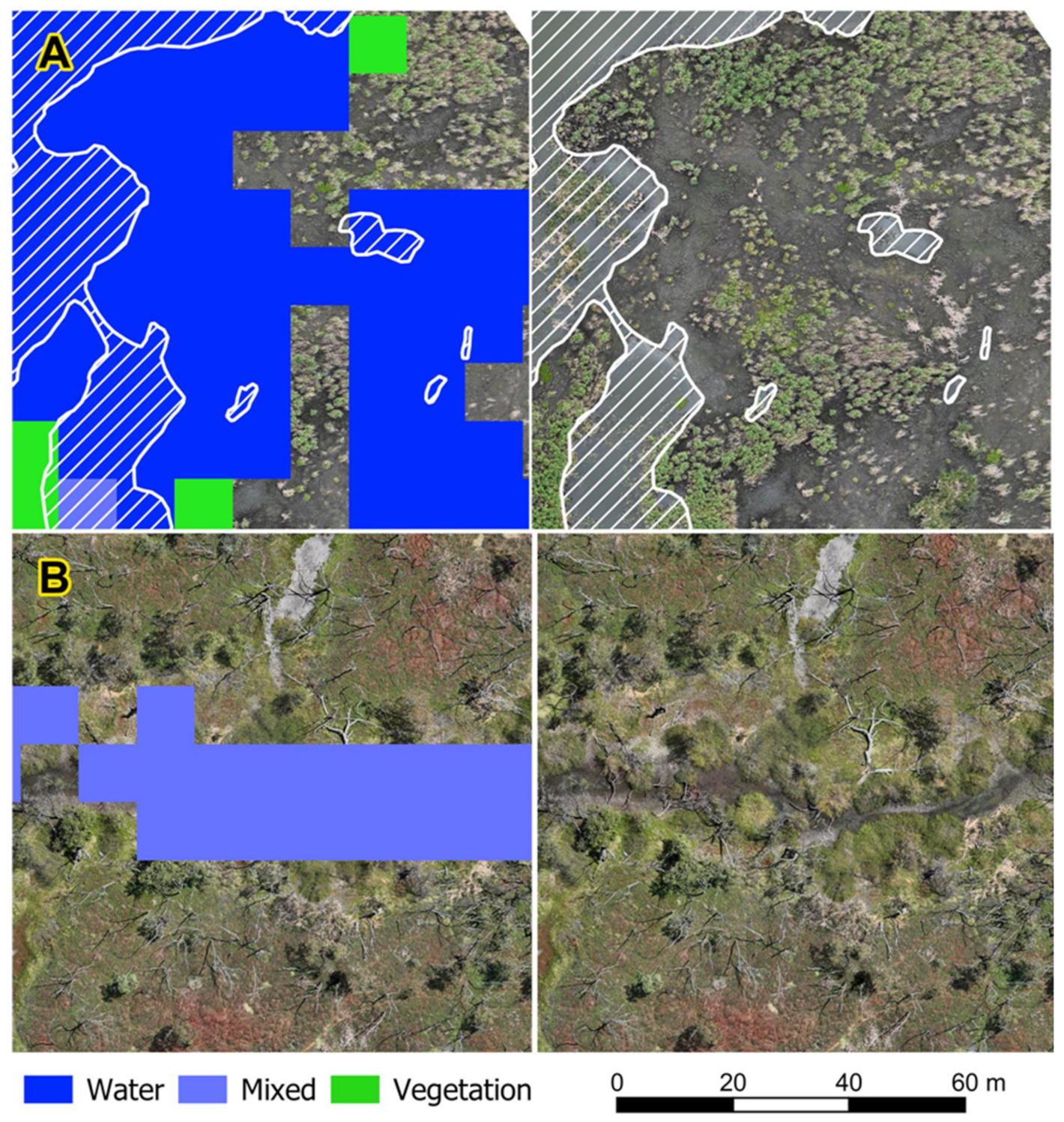

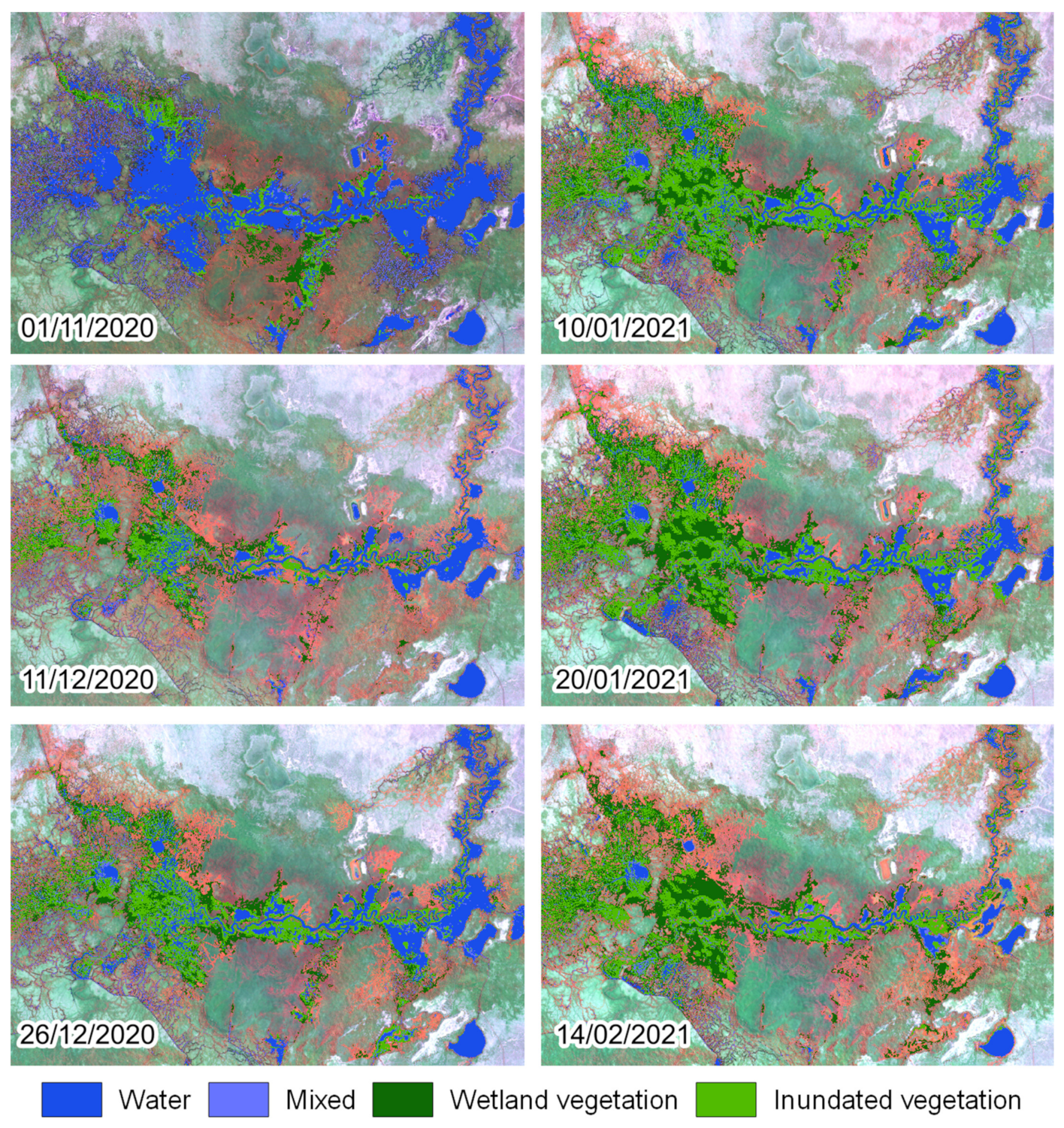

3.2. Visual Assessment

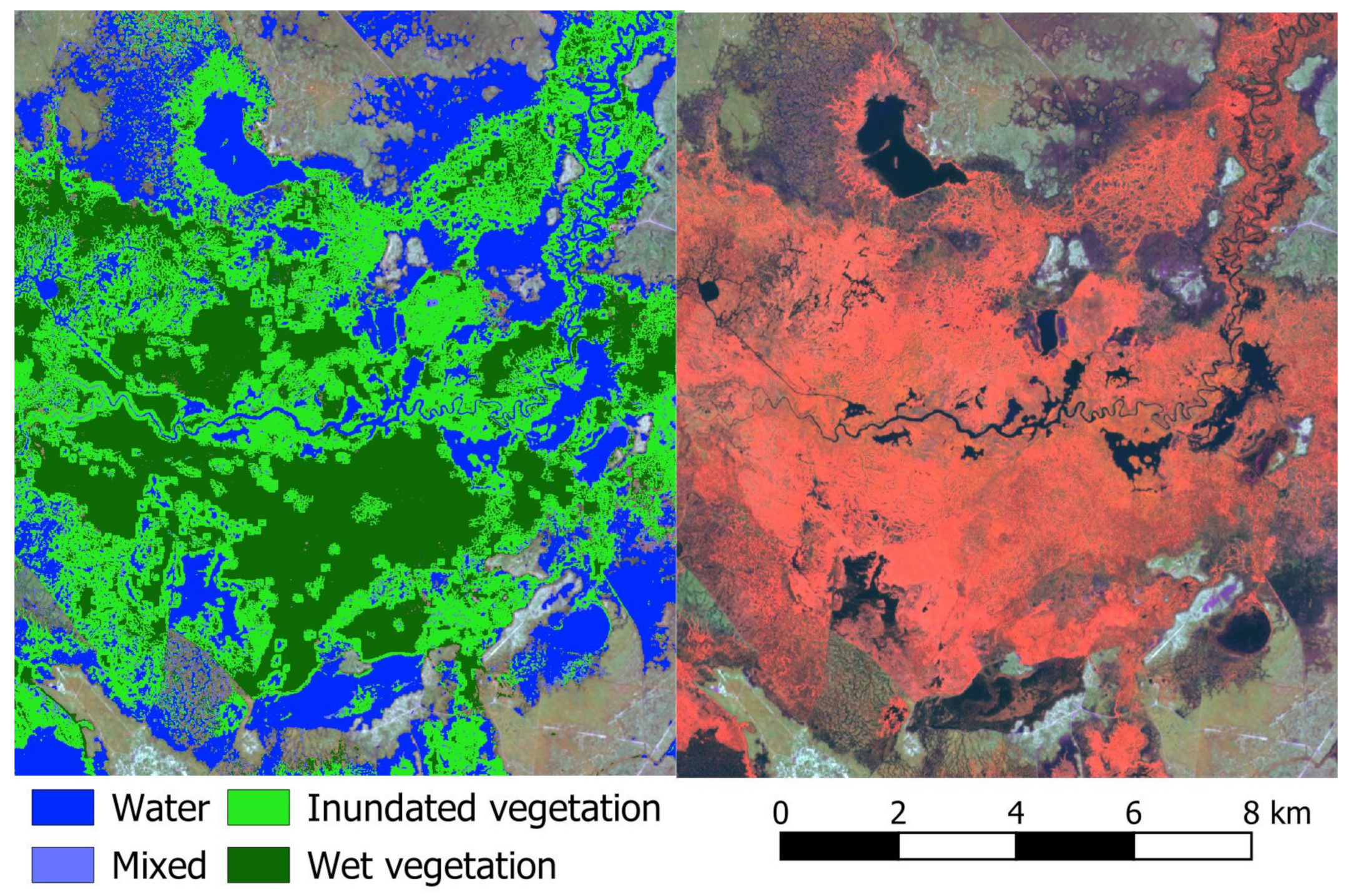

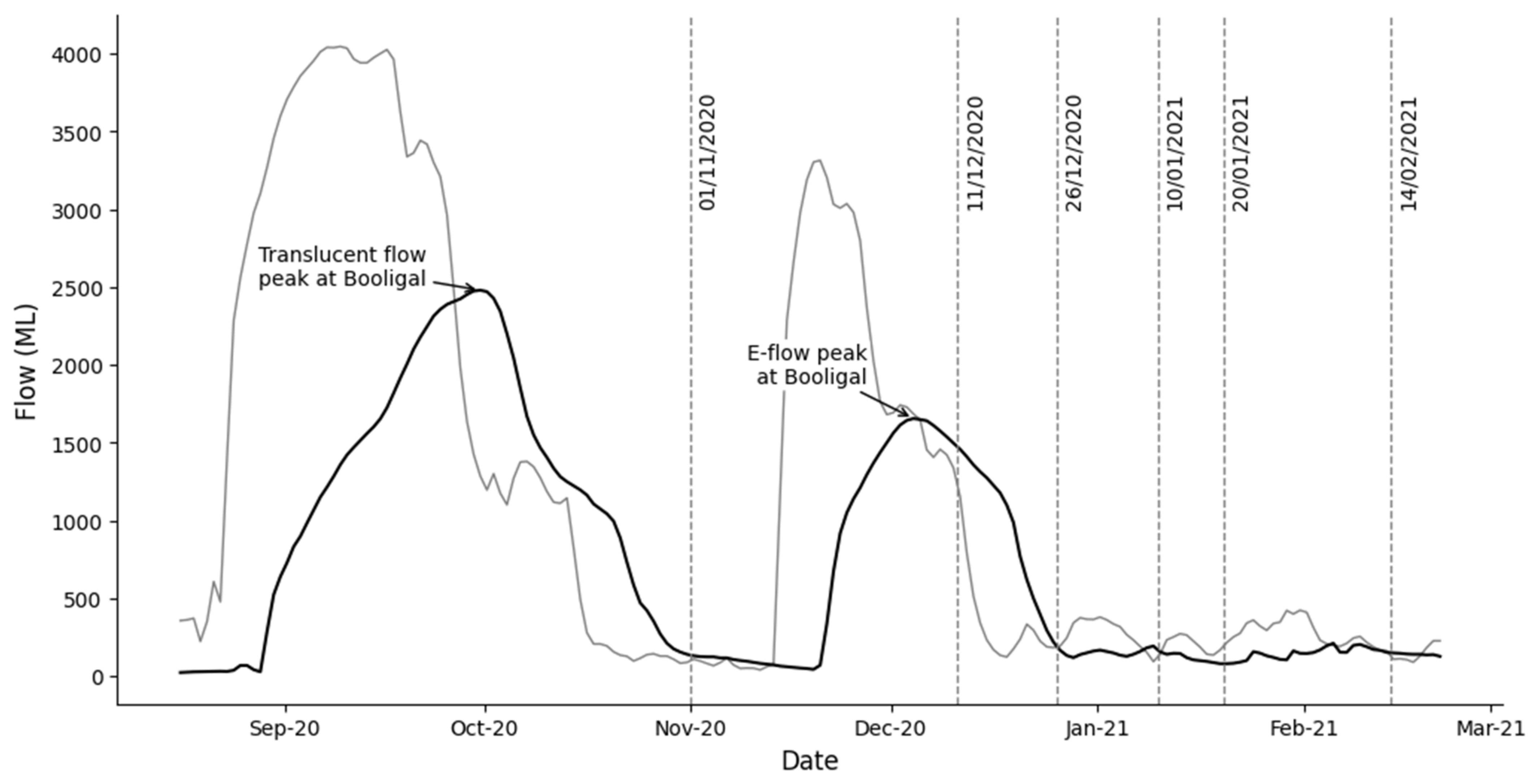

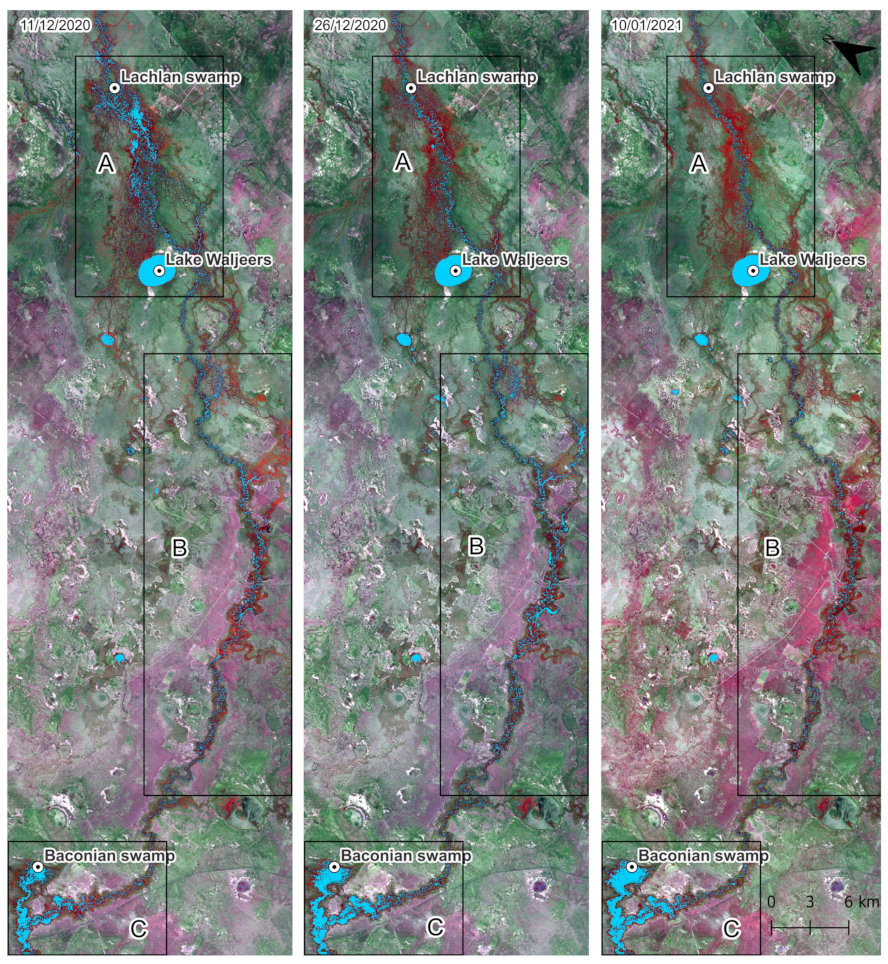

3.3. Case Study of Environmental Flow Event

3.3.1. Overview of the Flow Event

3.3.2. Evaluation of Map Performance

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Ling, J.E.; Casanova, M.T.; Shannon, I.; Powell, M. Development of a wetland plant indicator list to inform the delineation of wetlands in New South Wales. Mar. Freshw. Res. 2019, 70, 322–344.

2. New South Wales, Department of Climate Change, Energy, the Environment and Water. NSW Wetlands Policy. DECCW; 2010. Available online: https://www.environment.nsw.gov.au/topics/water/wetlands/protecting-wetlands/nsw-wetlands-policy (accessed on 29 April 2024).

3. Casanova, M.T.; Brock, M.A. How do depth, duration and frequency of flooding influence the establishment of wetland plant communities? Plant Ecol. 2000, 147, 237–250.

4. Ocock, J.F.; Walcott, A.; Spencer, J.; Karunaratne, S.; Thomas, R.F.; Heath, J.T.; Preston, D. Managing flows for frogs: Wetland inundation extent and duration promote wetland-dependent amphibian breeding success. Mar. Freshw. Res. 2024, 75, MF23181.

5. Brandis, K.; Bino, G.; Spencer, J.; Ramp, D.; Kingsford, R. Decline in colonial waterbird breeding highlights loss of Ramsar wetland function. Biol. Conserv. 2018, 225, 22–30.

6. Pander, J.; Mueller, M.; Geist, J. Habitat diversity and connectivity govern the conservation value of restored aquatic floodplain habitats. Biol. Conserv. 2018, 217, 1–10.

7. Frazier, P.; Page, K. The effect of river regulation on floodplain wetland inundation, Murrumbidgee River, Australia. Mar. Freshw. Res. 2006, 57, 133–141.

8. Virkki, V.; Alanärä, E.; Porkka, M.; Ahopelto, L.; Gleeson, T.; Mohan, C.; Wang-Erlandsson, L.; Flörke, M.; Gerten, D.; Gosling, S.N.; et al. Globally widespread and increasing violations of environmental flow envelopes. Hydrol. Earth Syst. Sci. 2022, 26, 3315–3336.

9. Kingsford, R.T.; Basset, A.; Jackson, L. Wetlands: Conservation’s poor cousins. Aquat. Conserv. Mar. Freshw. Ecosyst. 2016, 26, 892–916.

10. Tickner, D.; Opperman, J.J.; Abell, R.; Acreman, M.; Arthington, A.H.; Bunn, S.E.; Cooke, S.J.; Dalton, J.; Darwall, W.; Edwards, G.; et al. Bending the Curve of Global Freshwater Biodiversity Loss: An Emergency Recovery Plan. Bioscience 2020, 70, 330–342.

11. Chen, Y.; Colloff, M.J.; Lukasiewicz, A.; Pittock, J. A trickle, not a flood: Environmental watering in the Murray–Darling Basin, Australia. Mar. Freshw. Res. 2021, 72, 601–619.

12. Department of Planning and Environment. NSW Long Term Water Plans: Background Information; Department of Planning and Environment: Parramatta, Australia, 2023.

13. Sheldon, F.; Rocheta, E.; Steinfeld, C.; Colloff, M.; Moggridge, B.J.; Carmody, E.; Hillman, T.; Kingsford, R.T.; Pittock, J. Testing the achievement of environmental water requirements in the Murray-Darling Basin, Australia. Preprints 2023.

14. Higgisson, W.; Cobb, A.; Tschierschke, A.; Dyer, F. The Role of Environmental Water and Reedbed Condition on the Response of Phragmites australis Reedbeds to Flooding. Remote Sens. 2022, 14, 1868.

15. Higgisson, W.; Higgisson, B.; Powell, M.; Driver, P.; Dyer, F. Impacts of water resource development on hydrological connectivity of different floodplain habitats in a highly variable system. River Res. Appl. 2020, 36, 542–552.

16. Thomas, R.F.; Ocock, J.F. Macquarie Marshes: Murray-Darling River Basin (Australia). In The Wetland Book; Finlayson, C., Milton, G., Prentice, R., Davidson, N., Eds.; Springer: Dordrecht, The Netherlands, 2016.

17. Thomas, R.F.; Kingsford, R.T.; Lu, Y.; Hunter, S.J. Landsat mapping of annual inundation (1979–2006) of the Macquarie Marshes in semi-arid Australia. Int. J. Remote Sens. 2011, 32, 4545–4569.

18. Burke, J.J.; Pricope, N.G.; Blum, J. Thermal imagery-derived surface inundation modeling to assess flood risk in a flood-pulsed savannah watershed in Botswana and Namibia. Remote Sens. 2016, 8, 676.

19. Tiwari, V.; Kumar, V.; Matin, M.A.; Thapa, A.; Ellenburg, W.L.; Gupta, N.; Thapa, S. Flood inundation mapping-Kerala 2018; Harnessing the power of SAR, automatic threshold detection method and Google Earth Engine. PLoS ONE 2020, 15, e0237324.

20. Kordelas, G.A.; Manakos, I.; Aragonés, D.; Díaz-Delgado, R.; Bustamante, J. Fast and automatic data-driven thresholding for inundation mapping with Sentinel-2 data. Remote Sens. 2018, 10, 910.

21. Shen, X.; Wang, D.; Mao, K.; Anagnostou, E.; Hong, Y. Inundation extent mapping by synthetic aperture radar: A review. Remote Sens. 2019, 11, 879.

22. Drusch, M.; Del Bello, U.; Carlier, S.; Colin, O.; Fernandez, V.; Gascon, F.; Hoersch, B.; Isola, C.; Laberinti, P.; Bargellini, P.; et al. Sentinel-2: ESA’s optical high-resolution mission for GMES operational services. Remote Sens. Environ. 2012, 120, 25–36.

23. Fisher, A.; Flood, N.; Danaher, T. Comparing Landsat water index methods for automated water classification in eastern Australia. Remote Sens. Environ. 2016, 175, 167–182.

24. Xu, H. Modification of normalised difference water index (NDWI) to enhance open water features in remotely sensed imagery. Int. J. Remote Sens. 2006, 27, 3025–3033.

25. McFeeters, S.K. The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432.

26. Lefebvre, G.; Davranche, A.; Willm, L.; Campagna, J.; Redmond, L.; Merle, C.; Guelmami, A.; Poulin, B. Introducing WIW for Detecting the Presence of Water in Wetlands with Landsat and Sentinel Satellites. Remote Sens. 2019, 11, 2210.

27. Ticehurst, C.; Teng, J.; Sengupta, A. Development of a Multi-Index Method Based on Landsat Reflectance Data to Map Open Water in a Complex Environment. Remote Sens. 2022, 14, 1158.

28. Kordelas, G.A.; Manakos, I.; Lefebvre, G.; Poulin, B. Automatic inundation mapping using Sentinel-2 data applicable to both Camargue and Doñana biosphere reserves. Remote Sens. 2019, 11, 2251.

29. Senanayake, I.P.; Yeo, I.-Y.; Kuczera, G.A. A Random Forest-Based Multi-Index Classification (RaFMIC) Approach to Mapping Three-Decadal Inundation Dynamics in Dryland Wetlands Using Google Earth Engine. Remote Sens. 2023, 15, 1263.

30. Tulbure, M.G.; Broich, M.; Stehman, S.V.; Kommareddy, A. Surface water extent dynamics from three decades of seasonally continuous Landsat time series at subcontinental scale in a semi-arid region. Remote Sens. Environ. 2016, 178, 142–157.

31. Mueller, N.; Lewis, A.; Roberts, D.; Ring, S.; Melrose, R.; Sixsmith, J.; Lymburner, L.; McIntyre, A.; Tan, P.; Curnow, S.; et al. Water observations from space: Mapping surface water from 25 years of Landsat imagery across Australia. Remote Sens. Environ. 2016, 174, 341–352.

32. Dunn, B.; Lymburner, L.; Newey, V.; Hicks, A.; Carey, H. Developing a Tool for Wetland Characterization Using Fractional Cover, Tasseled Cap Wetness and Water Observations from Space. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 6095–6097.

33. Lymburner, L.; Sixsmith, J.; Lewis, A.; Purss, M. Fractional Cover (FC25). Geoscience Australia Dataset 2014. Available online: https://pid.geoscience.gov.au/dataset/ga/79676 (accessed on 29 April 2024).

34. Ticehurst, C.J.; Teng, J.; Sengupta, A. Generating two-monthly surface water images for the Murray-Darling Basin. In Proceedings of the MODSIM2021, 24th International Congress on Modelling and Simulation, Sydney, Australia, 5–9 December 2021.

35. Ma, S.; Zhou, Y.; Gowda, P.H.; Dong, J.; Zhang, G.; Kakani, V.G.; Wagle, P.; Chen, L.; Flynn, K.C.; Jiang, W. Application of the water-related spectral reflectance indices: A review. Ecol. Indic. 2019, 98, 68–79.

36. Thomas, R.F.; Kingsford, R.T.; Lu, Y.; Cox, S.J.; Sims, N.C.; Hunter, S.J. Mapping inundation in the heterogeneous floodplain wetlands of the Macquarie Marshes, using Landsat Thematic Mapper. J. Hydrol. 2015, 524, 194–213.

37. Flood, N.; Danaher, T.; Gill, T.; Gillingham, S. An Operational Scheme for Deriving Standardised Surface Reflectance from Landsat TM/ETM+ and SPOT HRG Imagery for Eastern Australia. Remote Sens. 2013, 5, 83–109.

38. Farr, T.G.; Rosen, P.A.; Caro, E.; Crippen, R.; Duren, R.; Hensley, S.; Kobrick, M.; Paller, M.; Rodriguez, E.; Roth, L.; et al. The Shuttle Radar Topography Mission. Rev. Geophys. 2007, 45.

39. Gallant, J.; Read, A. Enhancing the SRTM data for Australia. Proc. Geomorphometry 2009, 31, 149–154.

40. Green, D.; Petrovic, J.; Burrell, M.; Moss, P. Water Resources and Management Overview: Lachlan Catchment; NSW Office of Water: Sydney, Australia, 2011.

41. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

42. Klemas, V.; Smart, R. The influence of soil salinity, growth form, and leaf moisture on the spectral radiance of Spartina alterniflora canopies. Photogramm. Eng. Remote Sens. 1983, 49, 77–83.

43. Van Deventer, A.; Ward, A.D.; Gowda, P.H.; Lyon, J.G. Using thematic mapper data to identify contrasting soil plains and tillage practices. Photogramm. Eng. Remote Sens. 1997, 63, 87–93.

44. Motohka, T.; Nasahara, K.N.; Oguma, H.; Tsuchida, S. Applicability of Green-Red Vegetation Index for Remote Sensing of Vegetation Phenology. Remote Sens. 2010, 2, 2369–2387.

45. Geoscience Australia. Digital Elevation Model (DEM) of Australia Derived from LiDAR 5 Metre Grid. Canberra, 2015. Available online: https://ecat.ga.gov.au/geonetwork/srv/eng/catalog.search#/metadata/89644 (accessed on 29 April 2024).

46. Lindsay, J. The whitebox geospatial analysis tools project and open-access GIS. In Proceedings of the GIS Research UK 22nd Annual Conference, Glasgow, UK, 16–18 April 2014.

47. Lindsay, J.B.; Creed, I.F. Distinguishing actual and artefact depressions in digital elevation data. Comput. Geosci. 2006, 32, 1192–1204.

48. Felzenszwalb, P.F.; Huttenlocher, D.P. Efficient Graph-Based Image Segmentation. Int. J. Comput. Vis. 2004, 59, 167–181.

49. OpenDroneMap. OpenDroneMap Documentation. 2020. Available online: https://docs.opendronemap.org/ (accessed on 29 April 2024).

50. Mahdavi, S.; Salehi, B.; Granger, J.; Amani, M.; Brisco, B.; Huang, W. Remote sensing for wetland classification: A comprehensive review. GIScience Remote Sens. 2018, 55, 623–658.

51. Chen, D.; Huang, J.; Jackson, T.J. Vegetation water content estimation for corn and soybeans using spectral indices derived from MODIS near-and short-wave infrared bands. Remote Sens. Environ. 2005, 98, 225–236.

52. Joiner, J.; Yoshida, Y.; Anderson, M.; Holmes, T.; Hain, C.; Reichle, R.; Koster, R.; Middleton, E.; Zeng, F.-W. Global relationships among traditional reflectance vegetation indices (NDVI and NDII), evapotranspiration (ET), and soil moisture variability on weekly timescales. Remote Sens. Environ. 2018, 219, 339–352.

53. Bradski, G. The OpenCV library. Dr. Dobb’s J. Softw. Tools Prof. Program. 2000, 25, 120–123.

54. Colloff, M.J.; Baldwin, D.S. Resilience of floodplain ecosystems in a semi-arid environment. Rangel. J. 2010, 32, 305–314.

55. Powell, S.J.; Jakeman, A.; Croke, B. Can NDVI response indicate the effective flood extent in macrophyte dominated floodplain wetlands? Ecol. Indic. 2014, 45, 486–493.

56. Wilson, N.R.; Norman, L.M. Analysis of vegetation recovery surrounding a restored wetland using the normalized difference infrared index (NDII) and normalized difference vegetation index (NDVI). Int. J. Remote Sens. 2018, 39, 3243–3274.

57. Lisenby, P.E.; Tooth, S.; Ralph, T.J. Product vs. process? The role of geomorphology in wetland characterization. Sci. Total Environ. 2019, 663, 980–991.

58. Semeniuk, C.; Semeniuk, V. A Comprehensive Classification of Inland Wetlands of Western Australia Using the Geomorphic-Hydrologic Approach; Royal Society of Western Australia: Karawara, Australia, 2016; Volume 94, pp. 449–464.

59. Thapa, R.; Thoms, M.; Parsons, M. An adaptive cycle hypothesis of semi-arid floodplain vegetation productivity in dry and wet resource states. Ecohydrology 2016, 9, 39–51.

60. Psomiadis, E.; Soulis, K.X.; Zoka, M.; Dercas, N. Synergistic Approach of Remote Sensing and GIS Techniques for Flash-Flood Monitoring and Damage Assessment in Thessaly Plain Area, Greece. Water 2019, 11, 448.

| Index Name | Equation | Reference |
|---|---|---|
| Fisher water index (FWI) | | [23] |
| Sum of shortwave infrared bands (SUMswir) | | This study |
| Normalised difference vegetation index (NDVI) | | [41] |
| Normalised difference infrared index (NDII) | | [42] |
| Normalised difference shortwave-infrared index (NDSI) | | [43] |
| Green-red vegetation index (GRVI) | | [44] |
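The equations for these indices are not reproduced in the table above. As a rough illustration only, the sketch below computes the normalised-difference indices in their commonly published forms from per-pixel surface reflectance. The band pairings for NDSI (SWIR1 against SWIR2) and the definition of SUMswir as SWIR1 + SWIR2 are assumptions inferred from the index names, not confirmed by this study. FWI is left out because its coefficients are not given here. The function names and the sample reflectance values are hypothetical.

```python
import math


def normalised_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b), or NaN when the denominator is zero."""
    denom = a + b
    return math.nan if denom == 0 else (a - b) / denom


def spectral_indices(green, red, nir, swir1, swir2):
    """Compute per-pixel indices from surface reflectance values (0 to 1).

    NDVI, NDII and GRVI use their commonly published band pairings.
    NDSI and SUMswir are assumptions inferred from the index names.
    """
    return {
        "NDVI": normalised_difference(nir, red),
        "NDII": normalised_difference(nir, swir1),
        "NDSI": normalised_difference(swir1, swir2),  # assumed band pairing
        "GRVI": normalised_difference(green, red),
        "SUMswir": swir1 + swir2,                     # assumed definition
    }


# Hypothetical reflectances for a single vegetated pixel.
pixel = spectral_indices(green=0.08, red=0.06, nir=0.30, swir1=0.20, swir2=0.10)
```

The same arithmetic applies element-wise to whole image bands; the scalar form is used here only to keep the sketch free of external dependencies.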

| Accuracy Metric | Formula |
|---|---|
| User’s accuracy | TP/(TP + FP) |
| Producer’s accuracy | TP/(TP + FN) |
| Overall accuracy | (TP + TN)/(TP + FP + TN + FN) |
| Specificity | TN/(TN + FP) |
| Omission error | FN/(FN + TP) |
| Commission error | FP/(FP + TP) |
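The formulas in this table translate directly into code. The following is a minimal sketch, assuming a binary inundated / not-inundated comparison summarised as four counts; the function name and the example counts are hypothetical and are not taken from the study's validation data.

```python
def accuracy_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Compute the accuracy metrics listed in the table from confusion-matrix counts."""
    return {
        "users": tp / (tp + fp),                    # User's accuracy
        "producers": tp / (tp + fn),                # Producer's accuracy
        "overall": (tp + tn) / (tp + fp + tn + fn), # Overall accuracy
        "specificity": tn / (tn + fp),              # Specificity
        "omission": fn / (fn + tp),                 # Omission error
        "commission": fp / (fp + tp),               # Commission error
    }


# Hypothetical counts for illustration only.
metrics = accuracy_metrics(tp=40, fp=10, tn=45, fn=5)
```

By construction, omission error is the complement of producer's accuracy and commission error is the complement of user's accuracy, so each pair sums to 1.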

| Cover | Overall | Producer’s (TPR) | User’s | Specificity (TNR) | Omission | Commission | Inundated % |
|---|---|---|---|---|---|---|---|
| Sparse | 0.89 | 0.87 | 0.95 | 0.92 | 0.13 | 0.05 | 61.3% |
| Vegetated | 0.87 | 0.76 | 0.78 | 0.91 | 0.24 | 0.22 | 29.7% |
| All types | 0.88 | 0.82 | 0.87 | 0.92 | 0.18 | 0.13 | 40.5% |
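As a quick sanity check on the reported values, the sketch below confirms that in every row the omission error is the complement of the producer's accuracy (third column) and the commission error is the complement of the user's accuracy (fourth column), as the metric definitions require. The dictionary layout, function name and tolerance are illustrative choices; the numbers are copied from the table.

```python
# Values copied from the accuracy table (third, fourth, sixth and seventh columns).
reported = {
    "Sparse":    {"producers": 0.87, "users": 0.95, "omission": 0.13, "commission": 0.05},
    "Vegetated": {"producers": 0.76, "users": 0.78, "omission": 0.24, "commission": 0.22},
    "All types": {"producers": 0.82, "users": 0.87, "omission": 0.18, "commission": 0.13},
}


def complements_hold(row: dict, tol: float = 0.005) -> bool:
    """Check producers + omission == 1 and users + commission == 1 within rounding."""
    return (
        abs(row["producers"] + row["omission"] - 1.0) <= tol
        and abs(row["users"] + row["commission"] - 1.0) <= tol
    )


consistent = {cover: complements_hold(row) for cover, row in reported.items()}
```

The tolerance of 0.005 allows for the two-decimal rounding used in the table.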


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Heath, J.T.; Grimmett, L.; Gopalakrishnan, T.; Thomas, R.F.; Lenehan, J. Integrating Sentinel 2 Imagery with High-Resolution Elevation Data for Automated Inundation Monitoring in Vegetated Floodplain Wetlands. Remote Sens. 2024, 16, 2434. https://doi.org/10.3390/rs16132434
