Abstract

In recent years, research on adversarial attack techniques for remote sensing object detection (RSOD) has made great progress. However, most current research focuses on end-to-end attacks, which design adversarial perturbations mainly from the prediction information of the object detectors (ODs). These methods do not exploit the common vulnerabilities of ODs and therefore transfer poorly. To address this, this paper proposes a foreground feature approximation (FFA) method that generates adversarial examples (AEs) by exploiting the common vulnerabilities of ODs, attacking them through changes to the feature information carried by the image itself. Specifically, the detector is first used to select high-quality predictions as the objects to attack; a hybrid image containing no objects is then constructed, and a hybrid foreground is created from it according to the attacked objects. The backbone network extracts the shallow features of the images, and the features of the input foreground are pushed toward those of the hybrid foreground to carry out the attack, while the model predictions are used to assist the attack. In addition, we found that FFA is also effective for targeted attacks: replacing the hybrid foreground with a targeted foreground yields a targeted attack. Extensive experiments are conducted on the remote sensing object detection datasets DOTA and UCAS-AOD with seven rotated object detectors. The results show that, at an IoU threshold of 0.5, the mAP under the FFA untargeted attack is 3.4% lower than that of the advanced comparison method, and the mAP under the FFA targeted attack is 1.9% lower than that of the advanced comparison method.

1. Introduction

The development of remote sensing (RS) imaging techniques has advanced many important RS applications, including image classification [1,2,3], image segmentation [4,5], object detection [6,7,8], and object tracking [9,10]. Recent studies have found that deep-learning-based image-processing techniques show greater potential than traditional ones, which has made them a popular research direction; these tasks are now commonly implemented with deep neural networks (DNNs). Xie et al. [11] introduced a landslide extraction framework based on context correlation features, which makes effective use of contextual information and improves feature extraction. They also designed a deeply supervised classifier to strengthen the network's recognition ability at the prediction stage. Zhu et al. [12] proposed a cross-view intelligent person search method based on multi-feature constraints, which uses several feature modules to fully extract context, feature, and confidence information and improves person search in complex scenes. Xu et al. [13] proposed a method to extract building height from high-resolution, single-view RS images using shadow and side information. Multi-scale features are first used to extract the shadow and side information; the geometric relationships between buildings are then analyzed to measure building side and shadow lengths more accurately.

Although deep learning has achieved increasing success, recent research has shown that DNNs are susceptible to certain perturbations: adding a perturbation that is imperceptible to the human eye can change a detector's results [14,15]. This phenomenon reveals serious security risks in DNNs and has motivated research on their security. Adversarial examples (AEs), which are created by subtly altering a source image so that a model produces an incorrect output, were first identified by Szegedy et al. [16]. Since then, a growing body of work has studied the vulnerabilities of DNNs and how to improve their security and robustness.

Object detection [17], an important research area in image processing, underpins applications such as autonomous driving [18,19] and intelligent recognition systems [20,21], and it is likewise vulnerable to AEs. Attacking object detection is more difficult than attacking image classification [22]. In image classification, each image is assigned a single category, so an attack only has to change that one decision. In object detection, each image contains multiple objects, and the detector must find each object, locate it, and estimate its size; this makes object detection more practical but also harder to attack. Studying attacks on object detection systems and their vulnerabilities is therefore all the more meaningful.

Depending on the domain in which they are applied, adversarial attacks can be classified into digital and physical attacks [22]. Digital attacks cause detection errors by changing image pixels and are applicable to any image; examples include the DFool attack proposed by Lu et al. [23] and the DAG attack proposed by Xie et al. [24]. Physical attacks cause misclassification by adding patches to the objects and mainly target images in which the objects occupy a large proportion of the image. Kurakin et al. [25] first verified experimentally that AEs also exist in the physical world, and Thys et al. [26] successfully fooled a pedestrian detector with generated adversarial patches.

Remote sensing images (RSIs) have high resolution and wide spatial coverage, which makes object detection harder and also makes physical attacks difficult to realize [27,28]. Consequently, most current attacks on RSIs are digital, and the few physical attacks are limited to RSIs in which the targets occupy a large proportion of the image. Czaja et al. [27] first investigated AEs in RSIs and successfully attacked image classification models. Lu et al. [28] designed an adaptive patch that successfully attacks object detectors (ODs) for RSIs. Du et al. [29] and Lian et al. [30] successfully applied adversarial patches for RSIs in the physical world. Research on RS adversarial attacks continues to grow.

Our work is inspired by Xu et al. [3] and Li et al. [22]. Xu et al. [3] investigated generic AEs for RSI classification models, and Li et al. [22] devised a method that adaptively controls the perturbation size and location based on the behavior of the ODs. Object detection has three main tasks: determining the object location, the object size, and the object category [17]. All three are indispensable, so successfully attacking any one of them realizes the attack. Determining the object category is an image classification task; we therefore choose to attack the classifier in the ODs. Moreover, because the objects in RSIs occupy only a small fraction of the image and the background is much larger than the foreground, a global adversarial perturbation wastes significant resources in object detection. We therefore use a local adversarial perturbation that changes only the pixels in the foreground.

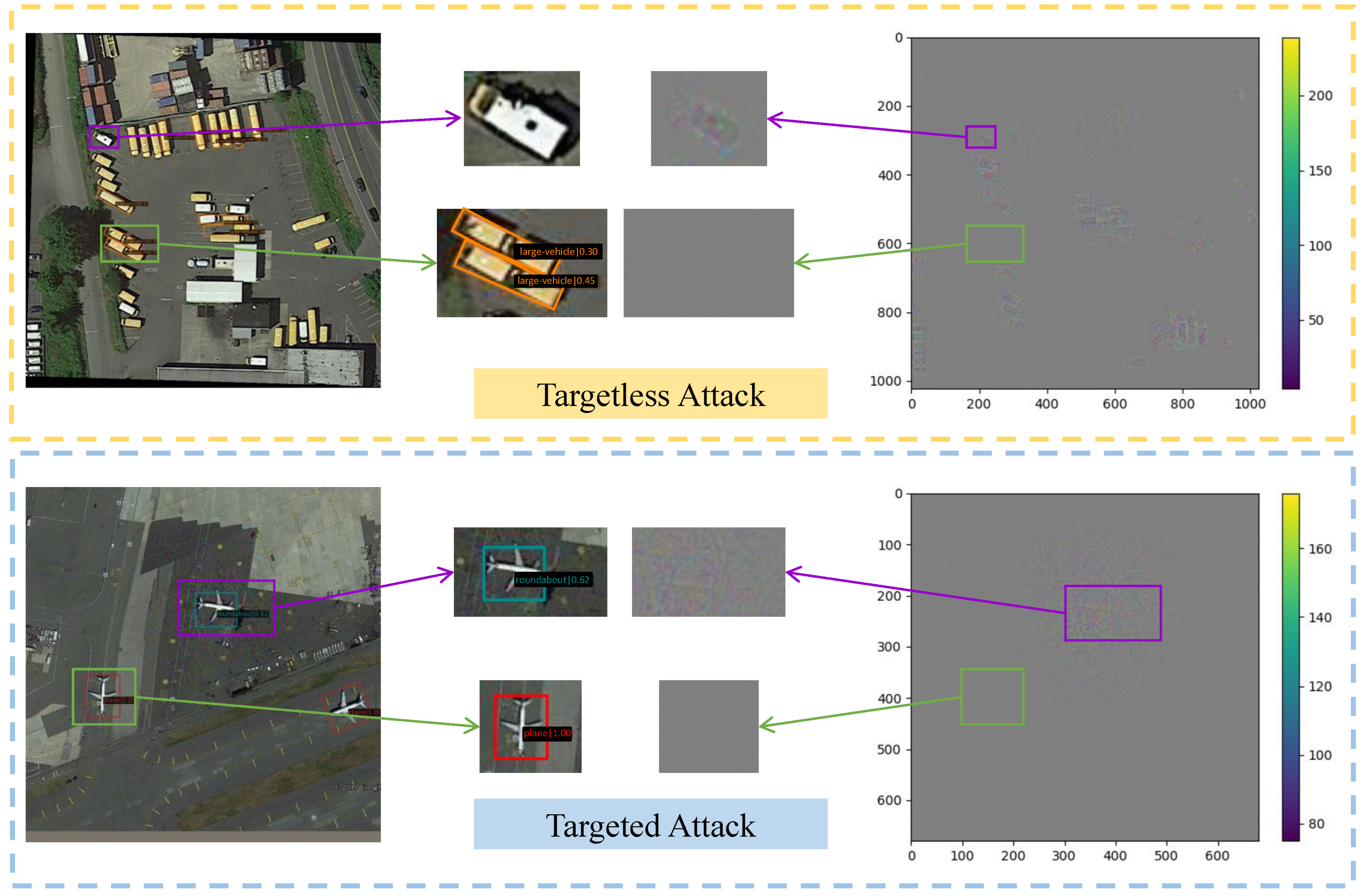

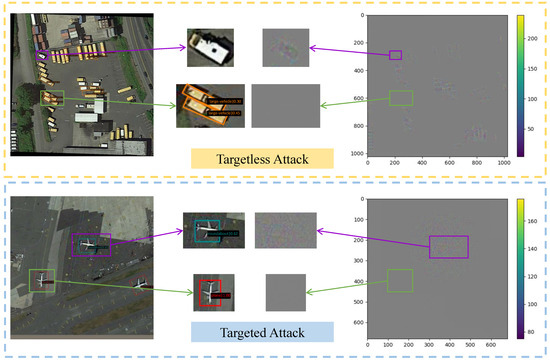

In this paper, we propose a digital attack method called foreground feature approximation (FFA). FFA mainly uses the backbone (feature extraction) network of the ODs to attack the classifier, which is an important component of the detection task and the most effective one to exploit for the attack. The attack effect of FFA is shown in Figure 1.

Figure 1.

Illustration of FFA performing the targetless attack and the targeted attack. Purple boxes mark regions to which the perturbation was added and where the attack succeeded; green boxes mark regions without perturbation that are recognized correctly. The orange dashed box shows the targetless attack, and the blue dashed box shows the targeted attack.

The contributions of this paper are as follows:

- We propose a universal digital attack framework, foreground feature approximation (FFA), that uses the output information of multiple modules of object detectors, aiming to find common vulnerabilities across detectors and to improve the attack strength and transferability of adversarial examples;

- We use a relatively complete evaluation system, covering attack effectiveness, attack speed, the quality of the adversarial examples, and other relevant metrics, to evaluate the proposed algorithm quantitatively and in detail, which makes the results more convincing;

- The results of attacking seven rotated object detectors on the remote sensing object detection datasets DOTA and UCAS-AOD show that our method quickly produces adversarial examples with strong attack ability, high transferability, and low perceptibility.

2. Related Work

This section reviews adversarial attacks in image classification, object detection, and RS.

2.1. Adversarial Attacks in Image Classification

Image classification is a critical task in machine learning whose goal is to assign each input image to its true category [31]. Given an input image $x$ with true label $y$, let $f(\cdot)$ be the model's prediction function. The image is correctly classified if the prediction equals the true label, that is, $f(x) = y$. Generating an AE for an image classification model requires an adversarial perturbation $\delta$, so that the AE is $x' = x + \delta$. By limiting the size of the perturbation (e.g., $\lVert \delta \rVert_p \le \epsilon$), it is possible to generate AEs that look unchanged to the human eye but that the model cannot classify correctly, that is, $f(x') \neq y$. This is a targetless attack, which only requires that the model fail to classify correctly. AEs also enable targeted attacks, also known as directed attacks, whose goal is to make the model assign the carefully designed AE to a particular class: if that class has label $p$, the attack requires $f(x') = p$.
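The two success criteria can be checked mechanically. The sketch below is illustrative only: it assumes a toy linear classifier standing in for $f(\cdot)$, an $L_\infty$ bound `eps` as the size limit on $\delta$, and flattened images stored as Python lists. The names `linear_classifier` and `attack_succeeds` are hypothetical helpers, not part of any cited method.

```python
from typing import Callable, List, Optional

Vector = List[float]


def linear_classifier(weights: List[Vector]) -> Callable[[Vector], int]:
    """Toy stand-in for f(.): returns the index of the highest linear score."""
    def f(x: Vector) -> int:
        scores = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
        return max(range(len(scores)), key=scores.__getitem__)
    return f


def attack_succeeds(f: Callable[[Vector], int], x: Vector, delta: Vector,
                    y: int, eps: float, target: Optional[int] = None) -> bool:
    """Targetless: f(x + delta) != y. Targeted: f(x + delta) == target.

    Either way the perturbation must respect the size limit ||delta||_inf <= eps.
    """
    if max(abs(d) for d in delta) > eps:
        return False
    x_adv = [xi + di for xi, di in zip(x, delta)]
    pred = f(x_adv)
    return pred != y if target is None else pred == target


f = linear_classifier([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]])
x, delta = [0.75, 0.5], [-0.25, 0.125]
print(f(x))                                         # clean prediction: 0
print(attack_succeeds(f, x, delta, y=0, eps=0.25))  # targetless attack: True
```

With the same `delta`, the targeted check succeeds only for `target=1`, the class the perturbed input actually lands in.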

Early researchers studied white-box attacks [32,33,34], which assume that the structure and parameters of the target model are known; because the attack exploits this information, these methods achieve high success rates. For example, the optimization-based method L-BFGS [16] and the gradient-based method FGSM [35] are white-box attacks. In practice, however, the architecture and settings of the target model are difficult to obtain, which gave rise to black-box attacks [36]. A black-box attack assumes that the architecture and settings of the target model are unknown and must discover its vulnerabilities through known models and other available information. Such attacks generally have lower success rates but greater practical value. Examples include transfer-based attacks (Curls&Whey [36], FA [2], etc.), query-based attacks (ZOO [37], MCG [38], etc.), and decision-boundary-based attacks (BoundaryAttack [39], CGBA [40], etc.).
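FGSM [35] shows concretely why white-box access matters: the step $x' = x + \epsilon \cdot \mathrm{sign}(\nabla_x L)$ needs the gradient of the model's loss with respect to the input. The sketch below assumes a binary logistic-regression model, chosen only because its input gradient, $(p - y)\,w$, has a closed form; `fgsm_logistic` is a hypothetical helper name.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fgsm_logistic(x: List[float], y: int, w: List[float], b: float,
                  eps: float) -> List[float]:
    """One FGSM step x' = x + eps * sign(grad_x L) against binary logistic regression.

    For the binary cross-entropy L, grad_x L = (p - y) * w, so computing it
    needs the model parameters w and b: this is a white-box attack.
    """
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    grad = [(p - y) * wi for wi in w]
    # sign(g) is in {-1, 0, 1}, so every feature moves by at most eps.
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


w, b = [2.0, -1.0], 0.0
x_adv = fgsm_logistic([0.5, 0.5], y=1, w=w, b=b, eps=0.25)
print(x_adv)  # [0.25, 0.75]
```

The clean input scores $\sigma(0.5) > 0.5$ (class 1), while the adversarial input scores $\sigma(-0.25) < 0.5$ (class 0). A black-box attacker without `w` and `b` would have to estimate this gradient through queries or a surrogate model.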

2.2. Adversarial Attacks in Object Detection

Object detection, a more advanced task than image classification and segmentation, occupies an increasing share of computer vision and is applied in scenarios such as face recognition [41], unmanned vehicles [42], aircraft recognition [43], and terrain detection [44]. Its goal is for the computer to find the regions of interest in an image and give each the correct category, location, and size. The emergence of AEs places higher demands on the security of object detection.

Xie et al. [24] first discovered the existence of AEs in object detection and semantic segmentation. They proposed the DAG attack, which transfers well across datasets and network structures. The attack targets are placed in the region of interest (RoI), and the attack is expressed as

$$r_m = \sum_{t_n \in T_m} \left[ \nabla_{X_m} f_{l'_n}(X_m, t_n) - \nabla_{X_m} f_{l_n}(X_m, t_n) \right], \qquad r'_m = \frac{\gamma}{\lVert r_m \rVert_\infty}\, r_m, \qquad X_{m+1} = X_m + r'_m,$$

where $r_m$ denotes the perturbation generated in the $m$th iteration and $X_m$ denotes the AE generated in the $m$th iteration. $t_n$ denotes one of the objects in the image, and $T_m$ is the set of objects that are still correctly classified at iteration $m$; $f$ denotes the result (score) function of object detection, $l_n$ is the true category label of the attacked object, and $l'_n$ is the category label specified by the attack. The perturbation is normalized to limit its range, and $\gamma$ denotes the iteration learning rate, a fixed hyperparameter that controls the magnitude of the perturbation.
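A single DAG iteration can be sketched as below, assuming the per-target gradients have already been computed and are passed in as plain lists (in a real implementation they would come from automatic differentiation through the detector). `dag_step` is a hypothetical helper; it accumulates $r_m$, rescales it by $\gamma / \lVert r_m \rVert_\infty$, and adds the result to the image.

```python
from typing import List, Sequence, Tuple

Grad = List[float]


def dag_step(x: List[float], grad_pairs: Sequence[Tuple[Grad, Grad]],
             gamma: float) -> Tuple[List[float], List[float]]:
    """One DAG iteration on a flattened image x.

    grad_pairs holds, for every target t_n still classified correctly, the pair
    (grad of f_{l'_n}, grad of f_{l_n}) with respect to x. Returns (X_{m+1}, r'_m).
    """
    r = [0.0] * len(x)
    for g_adv, g_true in grad_pairs:  # r_m = sum_n (grad f_{l'_n} - grad f_{l_n})
        for k in range(len(x)):
            r[k] += g_adv[k] - g_true[k]
    norm = max((abs(v) for v in r), default=0.0)
    if norm == 0.0:  # no active targets: leave the image unchanged
        return list(x), r
    r_scaled = [gamma / norm * v for v in r]  # r'_m = gamma / ||r_m||_inf * r_m
    return [xk + rk for xk, rk in zip(x, r_scaled)], r_scaled
```

Because of the $L_\infty$ normalization, the largest per-pixel change in each iteration is exactly $\gamma$, regardless of how many targets contribute to $r_m$.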

Lu et al. [23] proposed the DFool attack, which constructs AEs of stop signs at intersections in videos. It successfully attacked Faster RCNN [45], then among the most advanced models, and was also realized in the physical world. It generates AEs by minimizing the average score that Faster RCNN assigns to all stop signs in the training set. For a single image, the average stop-sign score is

$$S(T) = \frac{1}{\lvert B \rvert} \sum_{b \in B} \phi(b), \qquad I = M(T),$$

where $T$ denotes the texture of the stop sign in its root coordinate system and $I$ denotes the image formed by superimposing $T$ through the mapping $M$. $M$ is given by the correspondence between the eight vertices of the stop sign and the vertices of $T$. $B$ is the set of stop signs detected in $I$ by Faster RCNN, $b$ is one detection box, and $\phi(b)$ denotes the score that Faster RCNN predicts for $b$.

Afterwards, small steps along the gradient descent direction are used for optimization:

$$X_{n+1} = X_n + \alpha \cdot \mathrm{sign}(r_n),$$

where $\mathrm{sign}(\cdot)$ denotes the sign function, which is used to control the size of the perturbation, and $X_{n+1}$ denotes the AE generated in the $(n+1)$th iteration. $\alpha$ denotes the iteration step size, and $r_n$ denotes the adversarial perturbation generated in the $n$th iteration, i.e., the descent direction of the average score.
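The signed update above can be sketched generically. Since reproducing the Faster RCNN stop-sign score would require a real detector, the example below assumes a toy score $S(x) = \sum_i x_i^2$ with gradient $2x$; `sign_descent` is a hypothetical helper that applies $X_{n+1} = X_n + \alpha\,\mathrm{sign}(r_n)$ with $r_n = -\nabla S$.

```python
from typing import Callable, List


def sign_descent(x: List[float], grad_fn: Callable[[List[float]], List[float]],
                 alpha: float, steps: int) -> List[float]:
    """Iterate X_{n+1} = X_n + alpha * sign(r_n) with r_n = -grad of the score.

    Each step changes every element by at most alpha, which is how the sign
    function bounds the per-iteration perturbation.
    """
    for _ in range(steps):
        g = grad_fn(x)
        x = [xi - alpha * ((gi > 0) - (gi < 0)) for xi, gi in zip(x, g)]
    return x


# Toy score S(x) = sum(x_i^2), gradient 2x, standing in for the detector score.
print(sign_descent([1.0, -0.5], lambda x: [2.0 * xi for xi in x], alpha=0.25, steps=2))
# [0.5, 0.0]
```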

Both of the above methods are global perturbation attacks [3,46], i.e., they change every pixel in the image to generate the AE. Their drawbacks are obvious: they change the foreground and background simultaneously, which wastes attack resources and prolongs the attack. Researchers have therefore developed local perturbation attacks [22,47], which were later extended to patch attacks [26,30] in the physical world.

Liu et al. [48] proposed the DPatch attack, which generates a patch carrying the properties of a ground-truth detection box and optimizes it through backpropagation until the detector treats it as a real object, while making the OD ignore other possible objects. For two-stage ODs such as Faster RCNN [45], the attack prevents the region proposal network (RPN) from generating correct candidate regions, so that the generated patch becomes the only object. For single-stage ODs such as YOLO [49], whose core is bounding box prediction with confidence scores, the attack makes the grid cell containing the patch be regarded as an object and the other grid cells be ignored. The patch is obtained by training it to optimize the objective

$$\hat{P} = \arg\max_{P} \; \mathbb{E}_{x, t, l} \left[ -\log \Pr\big(\hat{y} \mid A(x, l, t, P)\big) \right],$$

where $A(x, l, t, P)$ denotes the input image $x$ with the patch $P$ placed at location $l$ through a transformation $t$, which includes basic transformations such as rotation and scaling, and $\Pr(\hat{y} \mid \cdot)$ is the likelihood that the input is associated with the label $\hat{y}$. In other words, DPatch maximizes the loss of the OD with respect to the true class label and the bounding box label.
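The operator $A(x, l, t, P)$ amounts to "transform the patch, then paste it at a location". The sketch below is a simplification under stated assumptions: single-channel images stored as nested lists, a transformation $t$ restricted to multiples of a 90° rotation (scaling is omitted), and a patch that fits inside the image. `rot90_cw` and `apply_patch` are hypothetical helpers.

```python
from typing import List

Image = List[List[float]]


def rot90_cw(patch: Image) -> Image:
    """Rotate a 2-D patch by 90 degrees clockwise."""
    n_rows = len(patch)
    return [[patch[n_rows - 1 - j][i] for j in range(n_rows)]
            for i in range(len(patch[0]))]


def apply_patch(x: Image, patch: Image, top: int, left: int,
                quarter_turns: int = 0) -> Image:
    """A(x, l, t, P): paste P at location l = (top, left) after transformation t.

    Here t is limited to quarter-turn rotations; the input image is not modified.
    """
    for _ in range(quarter_turns % 4):
        patch = rot90_cw(patch)
    out = [row[:] for row in x]
    for i, row in enumerate(patch):
        for j, v in enumerate(row):
            out[top + i][left + j] = v
    return out
```

During DPatch-style training, the expectation over $x$, $t$, and $l$ would be approximated by sampling random images, rotations, and locations at each step.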

Thys et al. [26] designed the first printable adversarial patch for pedestrians, which achieved good attack results against the YOLOv2 OD in the physical world. Their optimization objective consists of three parts.

The first is the non-printability score $L_{nps}$, which measures whether the patch can be reproduced by a printer:

$$L_{nps} = \sum_{p_{patch} \in P} \min_{c_{print} \in C} \lvert p_{patch} - c_{print} \rvert,$$

where $p_{patch}$ is a pixel of the patch $P$ and $c_{print}$ is a color in the set of printable colors $C$. This loss favors patch colors that closely resemble colors in the printable set.
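A direct transcription of $L_{nps}$, assuming RGB pixels as 3-tuples in $[0, 1]$ and the Euclidean distance for $\lvert \cdot \rvert$; `non_printability_score` is a hypothetical helper name, and the two-color palette is a toy stand-in for a measured set of printable colors.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def non_printability_score(patch_pixels: Sequence[RGB],
                           printable_colors: Sequence[RGB]) -> float:
    """L_nps: for each patch pixel, the distance to its closest printable color, summed."""
    return sum(min(math.dist(p, c) for c in printable_colors) for p in patch_pixels)


pixels = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
palette = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
print(non_printability_score(pixels, palette))  # 1.0
```

A pixel whose color is already in the palette contributes nothing, so minimizing this term pulls the patch toward reproducible colors.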

The second is the total variation of the patch, which ensures smooth transitions between neighboring patch pixels:

$$L_{tv} = \sum_{i,j} \sqrt{(p_{i,j} - p_{i+1,j})^2 + (p_{i,j} - p_{i,j+1})^2}.$$

The third, $L_{obj}$, is the maximum objectness score in the image, i.e., the score the detector assigns to the detected object. The three terms are combined with suitable weights, $L = \alpha L_{nps} + \beta L_{tv} + L_{obj}$, and the adversarial patch is generated iteratively.
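The total-variation term can be sketched as follows, assuming a single-channel patch stored as a nested list. The boundary handling (summing only over pixels that have both a lower and a right neighbor) is our assumption; `total_variation` is a hypothetical helper.

```python
import math
from typing import List


def total_variation(p: List[List[float]]) -> float:
    """L_tv = sum_{i,j} sqrt((p[i][j]-p[i+1][j])^2 + (p[i][j]-p[i][j+1])^2).

    The sum runs over pixels that have both a lower and a right neighbor.
    """
    total = 0.0
    for i in range(len(p) - 1):
        for j in range(len(p[0]) - 1):
            total += math.sqrt((p[i][j] - p[i + 1][j]) ** 2
                               + (p[i][j] - p[i][j + 1]) ** 2)
    return total
```

A constant patch has zero total variation, while sharp edges are penalized, which is why this term pushes the printed patch toward smooth color transitions.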

2.3. Adversarial Attacks in Remote Sensing

Adversarial attacks were first investigated on ground imagery, but they also have numerous uses in RS. Li et al. [22] designed an adaptive local perturbation attack intended to generalize to all ODs. It establishes an object constraint that adaptively uses foreground–background separation to control the magnitude and distribution of the perturbations, so that they are attached to the object regions of the image. It generates the AE with three loss functions, namely classification loss, position loss, and shape loss, which are combined by weighting:

$$L = \sum_{n=1}^{N} \left( \lambda_1 L_{shape}^{(n)} + \lambda_2 L_{loc}^{(n)} + \lambda_3 L_{cls}^{(n)} \right),$$

where $N$ denotes the number of objects in the image, $L_{shape}$ denotes the shape constraint, $L_{loc}$ the location constraint, $L_{cls}$ the classification constraint, and $\lambda_1$, $\lambda_2$, $\lambda_3$ the weights of the three constraints. Each object is assigned a random bounding box for the shape attack, a real border for the localization attack, and the background category for the classification attack. In addition, the foreground separation method adaptively controls the distribution of the perturbations so that they are attached to the foreground as much as possible.

Lian et al. [30] investigated physical attacks in RS. They proposed the patch-based Contextual Background Attack (CBA), which aims to hide objects from the detector without altering the objects in the image. It places the patch outside the object instead of on it, which makes physical attacks easier to realize. An AE with an adversarial patch can be described as

$$x_{adv} = (1 - M) \odot x + M \odot P,$$

where $P$ denotes the adversarial patch, $M$ denotes the mask of the adversarial patch, whose foreground pixels are 1 and background pixels are 0, and $\odot$ denotes the Hadamard product.
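The masked composition is a pair of element-wise (Hadamard) products. A minimal sketch, assuming single-channel images and masks stored as nested lists of equal shape; `compose_with_patch` is a hypothetical helper name.

```python
from typing import List

Image = List[List[float]]


def compose_with_patch(x: Image, patch: Image, mask: Image) -> Image:
    """x_adv = (1 - M) * x + M * P, element-wise.

    mask holds 1 where the adversarial patch is placed and 0 elsewhere, so
    pixels outside the mask (including the object itself) stay untouched.
    """
    return [[(1 - m) * xv + m * pv for xv, pv, m in zip(xr, pr, mr)]
            for xr, pr, mr in zip(x, patch, mask)]
```

For CBA, the mask would cover background context around the object rather than the object, which is what makes the attack printable without occluding the target.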

An objectness loss and a smoothness loss are also used to update the adversarial patches. The objectness loss updates the AEs according to the differences between objects detected inside and outside the patch, and the smoothness loss reduces the distance between the patch and the target to make the transition smoother.

3. Methodology

3.1. Problem Analysis

To design an effective AE, two aspects must be considered: (1) where to place the perturbation and (2) how to design the perturbation.

3.1.1. Location of the Perturbations

To make the perturbation effective, we need to choose a suitable location for it. Unlike the image classification task, the object detection task is not well suited to a global adversarial perturbation. This is especially true for RSI object detection, where objects are numerous and each occupies a small area. We therefore need a more targeted perturbation generation strategy that limits the perturbation to a suitable region.

3.1.2. Magnitude of the Perturbations

After the location of the perturbation is determined, the next step is to design it. The perturbation must deceive the ODs while remaining imperceptible to the human eye. Its design can therefore be viewed as a joint optimization problem that considers multiple factors and balances multiple losses, so that the final AEs are globally rather than locally optimal.

Let the input image be $X$, containing $n$ objects. The $i$th object is described by $o_i = (w_i, h_i, x_i, y_i, c_i, s_i)$, which are the width, height, centroid horizontal coordinate, centroid vertical coordinate, object label, and object score of the object box, respectively. When detecting rotated objects, the rotation angle $\theta_i$ of the object box must be added. The goal of AE design is to find a suitable perturbation $\delta$ that satisfies

$$P(X + \delta)_i \neq P(X)_i, \quad i = 1, \dots, n,$$

where $P$ denotes the detector, $P(X)_i$ is the $i$th detection result of the image, and the generated AE is $X + \delta$. There are thus three manifestations of a successful attack: first, the size of the detection box is incorrect; second, the location of the detection box is incorrect (including the case of no detection box, i.e., the object becomes "invisible" to the detector); third, the category of the detected object is incorrect. These three manifestations can occur simultaneously.
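The three manifestations can be checked per object by comparing a clean detection with its adversarial counterpart. In the sketch below, the `Detection` fields mirror the object parameters above, the adversarial detection is assumed to be already matched to the clean one, and the size and location tolerances (`size_tol`, `loc_tol`) are purely illustrative values; `manifestations` is a hypothetical helper.

```python
import math
from dataclasses import dataclass
from typing import Optional, Set


@dataclass
class Detection:
    w: float
    h: float
    cx: float  # centroid horizontal coordinate
    cy: float  # centroid vertical coordinate
    label: int
    score: float
    theta: float = 0.0  # rotation angle; not compared in this sketch


def manifestations(clean: Detection, adv: Optional[Detection],
                   size_tol: float = 0.1, loc_tol: float = 5.0) -> Set[str]:
    """Which of the three success manifestations hold for one object.

    size_tol is relative to the clean box size; loc_tol is in pixels.
    """
    if adv is None:  # no detection box: the object is "invisible"
        return {"location"}
    found = set()
    if (abs(adv.w - clean.w) > size_tol * clean.w
            or abs(adv.h - clean.h) > size_tol * clean.h):
        found.add("size")
    if math.hypot(adv.cx - clean.cx, adv.cy - clean.cy) > loc_tol:
        found.add("location")
    if adv.label != clean.label:
        found.add("category")
    return found
```

Because the checks are independent, a single adversarial detection can trigger all three at once, matching the statement that the manifestations can occur simultaneously.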

3.2. Overview of the Methodology

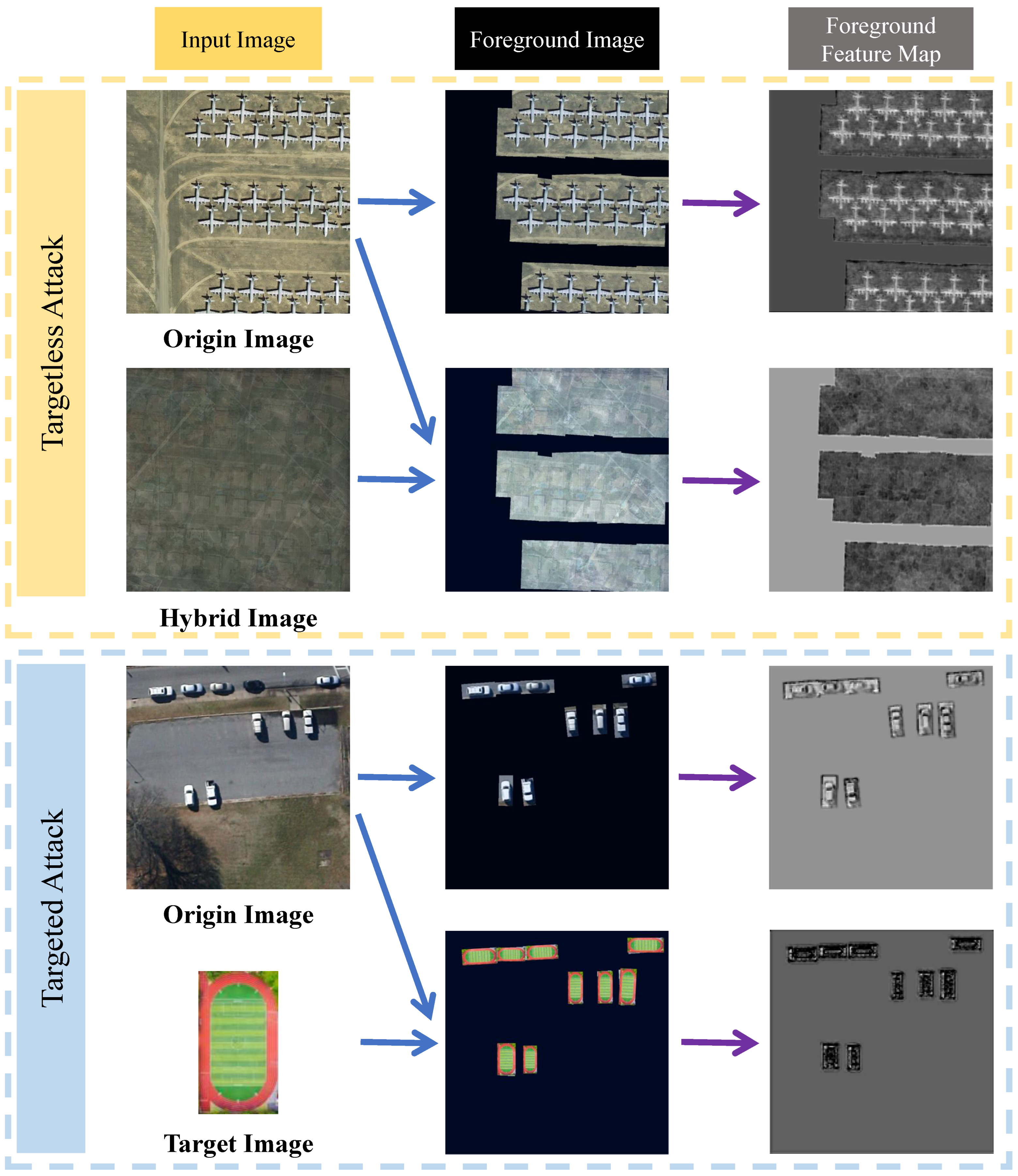

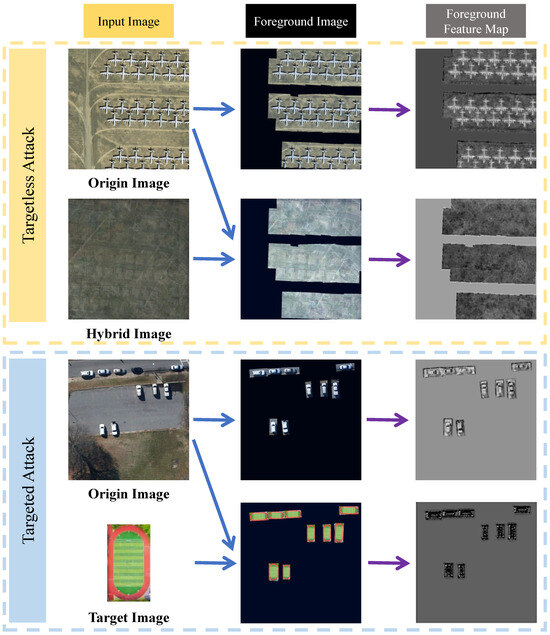

Attacks against ODs have been studied extensively. Because ODs contain several important components, attacks against these specific components are effective. At the same time, they pose a serious problem: each OD has its own framework and components, which limits how well such attacks generalize [22]. To improve the transferability of attacks, we need to find what different ODs have in common. All of these ODs rely on a backbone network, the most commonly used being ResNet [8]. We can therefore attack by changing the image features extracted by the backbone, which yields AEs with high transferability. The feature extraction process is visualized in Figure 2.

Figure 2.

Visualization of the feature extraction process. The left column shows the input image, the middle column shows the foreground portion of various images, and the right column shows the corresponding shallow features of the foreground image. Blue arrows indicate the foreground extraction process, and purple arrows indicate the feature extraction process. The orange dashed box indicates a targetless attack, and the blue dashed box indicates a targeted attack.

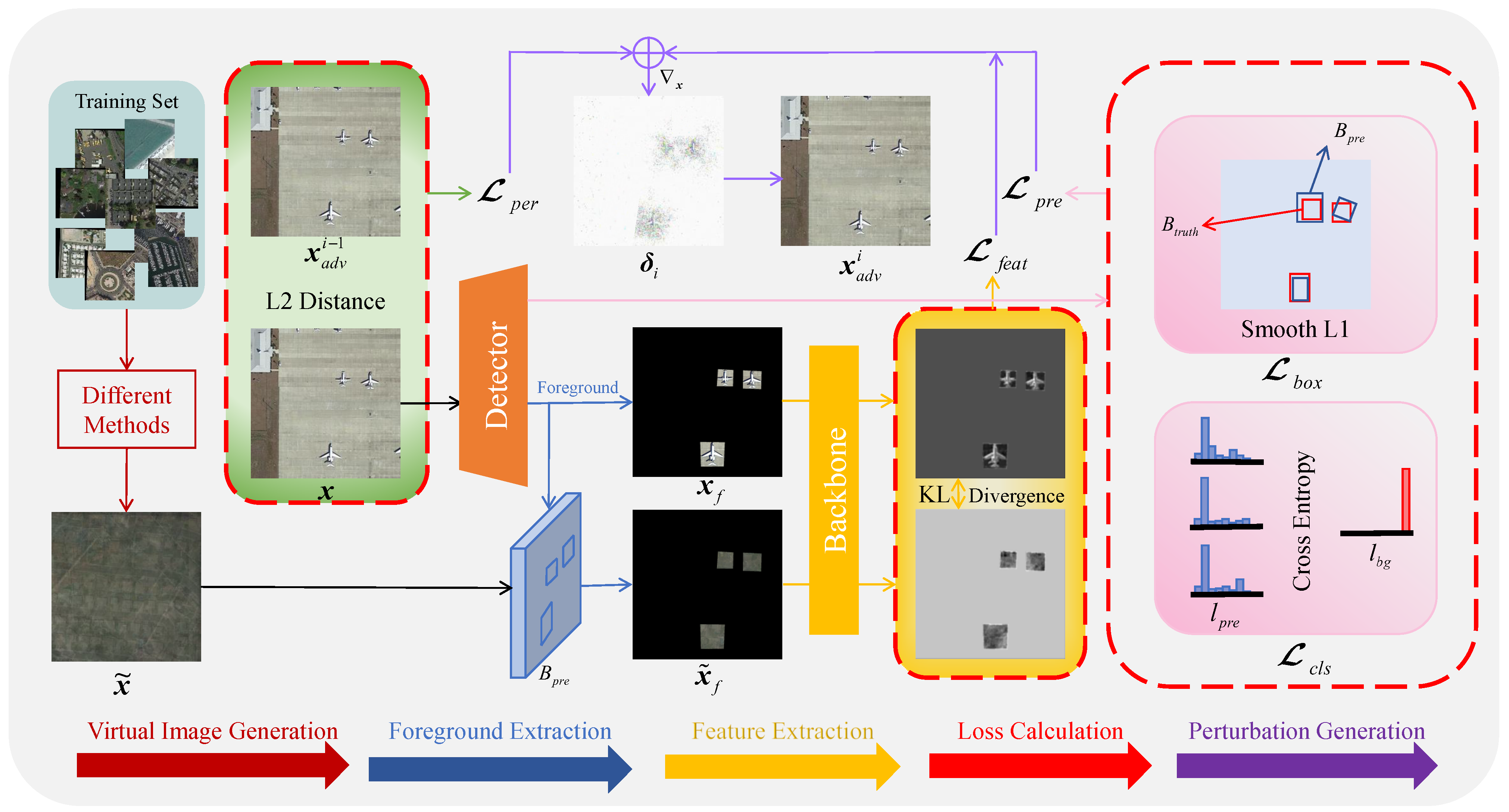

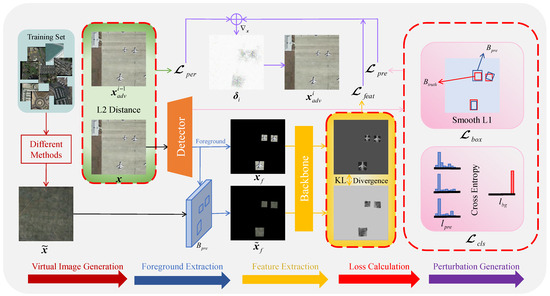

We first use an OD to detect the image, separate the high-quality foreground from the background, record the foreground information of the image (the object categories, positions, and box sizes), and crop the image inside all object boxes for later use. Next, we construct a hybrid image that has the same dimensions as the original image and contains no objects of any category. We use the backbone network to extract the features of the hybrid foreground and of the high-quality foreground, build the main body of the loss function by minimizing the KL divergence between the latter and the former, and use the prediction results of the OD to assist the attack. Finally, the pixels of the original image are altered so that its foreground features closely approximate those of the hybrid image, which accomplishes the attack. The flowchart of the FFA attack is shown in Figure 3.

Figure 3.

Flowchart of FFA for generating AEs. The bottommost arrow represents the adversarial example generation process. First, a hybrid image $\tilde{X}$ is generated. The input image $X$ is passed through the detector to obtain the predicted foreground boxes $B$ and predicted labels $C$. $B$ is used to crop the input image and the hybrid image, giving the input foreground $F$ and the hybrid foreground $\tilde{F}$. After features are extracted by the backbone network, the KL divergence between the two is computed as the feature loss. The Smooth L1 loss between $B$ and the real object boxes is computed as the object box loss, and the cross-entropy loss between $C$ and the background label is computed as the classification loss; together they form the detector prediction loss. The L2 distance between the AE generated in iteration $i-1$ and $X$ is computed as the perception loss. The weighted combination of the feature loss, prediction loss, and perception loss gives the total loss, which is iterated to generate the adversarial perturbation $\delta$; adding $\delta$ to $X$ yields the adversarial example after the iterations.

3.3. Foreground Feature Approximation (FFA) Attack

3.3.1. Targetless Attack

Location of the Perturbation

For the object detection task, only the foreground, which is a small part of the image, is relevant; the background is irrelevant. Therefore, to attack object detection, we only need to add perturbations to the regions the ODs attend to. We use the attacked OD to predict the image, obtain the prediction boxes, and filter them further by their scores:

$$O = \{\, b \in B \mid s_{\max}(b) > \tau \,\},$$

where $O$ is the set of objects to be attacked, $B$ is the set of prediction boxes of the OD, $s_{\max}(b)$ is the maximum prediction score of box $b$ over all categories, and $\tau$ is the score threshold. Prediction boxes with scores greater than $\tau$ are selected as objects to attack; boxes with scores below $\tau$ are considered not worth attacking and are left unperturbed.
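The score filter is a one-line selection. The sketch assumes each rotated box is a tuple `(cx, cy, w, h, theta)` and that the detector provides one score per category for every box; `select_attack_targets` is a hypothetical helper name.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float, float]  # (cx, cy, w, h, theta)


def select_attack_targets(boxes: Sequence[Box],
                          class_scores: Sequence[Sequence[float]],
                          tau: float) -> List[Box]:
    """O = {b in B : max_k score_k(b) > tau}; lower-scoring boxes are not attacked."""
    return [b for b, s in zip(boxes, class_scores) if max(s) > tau]


boxes = [(10.0, 10.0, 4.0, 4.0, 0.0), (30.0, 30.0, 6.0, 2.0, 0.5), (50.0, 50.0, 8.0, 8.0, 1.0)]
scores = [[0.9, 0.05], [0.3, 0.4], [0.2, 0.7]]
print(select_attack_targets(boxes, scores, tau=0.5))  # keeps the first and third boxes
```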

The Size of the Perturbation

Most previous methods design perturbations from the predictive information of ODs, constructing various loss functions, such as position loss, shape loss, and category loss, from the object boxes and categories detected by the ODs. These methods depend on the accuracy of the ODs, and the AEs they generate transfer poorly. We therefore propose a feature approximation strategy that changes the image features as seen by the backbone network, misleading the detectors and achieving the attack. The loss function is designed as follows.

Feature Loss: After selecting the foreground regions to attack, we need a suitable loss function to update the perturbation iteratively. First, we generate a hybrid image that determines the direction of each iterative update. We select ten images from the training set of the dataset and superimpose them; the resulting image, which does not contain any object, is the hybrid image, which we also call the full background image.
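The text does not specify how the ten images are superimposed. The sketch below assumes pixel-wise averaging of equally sized single-channel images, which is our interpretation rather than a confirmed detail; `hybrid_image` is a hypothetical helper and works for any number of images.

```python
from typing import List, Sequence

Image = List[List[float]]


def hybrid_image(images: Sequence[Image]) -> Image:
    """Pixel-wise mean of equally sized images (one reading of "superimposed")."""
    n = len(images)
    rows, cols = len(images[0]), len(images[0][0])
    return [[sum(img[i][j] for img in images) / n for j in range(cols)]
            for i in range(rows)]


print(hybrid_image([[[0.0, 4.0]], [[2.0, 0.0]]]))  # [[1.0, 2.0]]
```

Averaging several unrelated scenes blurs individual object structures, which is consistent with the goal of a background image containing no recognizable object.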

Using the prediction boxes obtained from the OD, the input image and the hybrid image at the $i$th iteration are cropped separately to obtain the input foreground $F^{i}$ and the corresponding hybrid foreground $\tilde{F}^{i}$. We define the feature loss as the KL divergence between the shallow-feature distributions of the two foregrounds:

$$L_{feat} = -\frac{1}{HWC} \sum_{h=1}^{H} \sum_{w=1}^{W} \sum_{c=1}^{C} \phi_\theta(\tilde{F}^{i})_{h,w,c} \log \frac{\phi_\theta(\tilde{F}^{i})_{h,w,c}}{\phi_\theta(F^{i})_{h,w,c}},$$

where $L_{feat}$ denotes the feature loss, $\theta$ denotes the backbone network parameters, $H$, $W$, and $C$ denote the number of rows, columns, and channels of the feature map, respectively, and $\phi_\theta(\cdot)$ denotes the mapping that extracts shallow features with the backbone network. Note that the formula carries a negative sign so that the KL divergence between the clean and hybrid foregrounds is minimized.
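A minimal sketch of the feature loss on flattened feature maps. Raw backbone features are not probability distributions, so this sketch assumes non-negative (post-ReLU) features that are shifted by a small `eps` and normalized to sum to 1 before the KL divergence is taken; this normalization and the helper name `feature_loss` are our assumptions. The returned value carries the negative sign of the formula above.

```python
import math
from typing import Sequence


def feature_loss(feat_hybrid: Sequence[float], feat_input: Sequence[float],
                 eps: float = 1e-12) -> float:
    """-1/(H*W*C) * sum p * log(p / q) over all H*W*C flattened feature elements.

    p (hybrid) and q (input) are non-negative features shifted by eps and
    normalized to sum to 1, so the KL divergence is well defined.
    """
    n = len(feat_hybrid)
    sp = sum(feat_hybrid) + eps * n
    sq = sum(feat_input) + eps * n
    p = [(v + eps) / sp for v in feat_hybrid]
    q = [(v + eps) / sq for v in feat_input]
    return -sum(pi * math.log(pi / qi) for pi, qi in zip(p, q)) / n
```

The loss is zero when the input-foreground features already match the hybrid-foreground features and negative otherwise.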

To make the attack more effective, we also design a prediction loss that assists the attack based on the ODs' prediction results. This loss has two components: the classification loss and the object box loss.

Classification Loss: This loss makes the AEs carry incorrect label information to mislead the classifier. Because the detector also computes the probability that a predicted object is background, a background label exists, which we denote $y_{bg}$. Suppose the detector predicts $m$ objects $\{o_1, \dots, o_m\}$ with labels $\{y_1, \dots, y_m\}$, where the true label of each prediction box is found by comparing it with the real boxes. The attack is accomplished by reducing the cross-entropy loss between the background category and the predicted category of each box. The classification loss for each object is

$$L_{cls}^{j} = -\sum_{k=1}^{K} \mathbb{1}[k = y_{bg}] \log D(o_j)_k = -\log D(o_j)_{y_{bg}},$$

where $K$ is the number of categories in all images, $D(\cdot)$ is the mapping function of the ODs' detection results, and $o_j$ is the $j$th object. The total classification loss for the image is

$$L_{cls} = \sum_{j=1}^{m} L_{cls}^{j}.$$
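The classification loss is a cross-entropy toward a fixed label. The sketch assumes that $D(o_j)$ is a softmax over $K$ class logits that include the background class; the helpers `log_softmax_at` and `background_cls_loss` are hypothetical names.

```python
import math
from typing import Sequence


def log_softmax_at(logits: Sequence[float], k: int) -> float:
    """log softmax(logits)[k], computed stably with the log-sum-exp trick."""
    m = max(logits)
    return logits[k] - m - math.log(sum(math.exp(z - m) for z in logits))


def background_cls_loss(logits_per_box: Sequence[Sequence[float]], y_bg: int) -> float:
    """L_cls = sum_j -log D(o_j)[y_bg]: cross-entropy of every box w.r.t. the background label."""
    return sum(-log_softmax_at(z, y_bg) for z in logits_per_box)
```

Passing the target label $y_t$ instead of $y_{bg}$ gives the targeted classification loss of Section 3.3.2 with the same code.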

Object box loss: This loss controls the offset between the real object box and the prediction box. Increasing this offset widens the gap between the detection result and the real result, which carries out the attack. Let the centroid of the $j$th prediction box be $(u_j, v_j)$ and the centroid of its corresponding real box be $(\hat{u}_j, \hat{v}_j)$. We compute the Euclidean distance between the two and define the object box loss as the Smooth L1 loss of this distance:

$$d_j = \sqrt{(u_j - \hat{u}_j)^2 + (v_j - \hat{v}_j)^2}, \qquad L_{box}^{j} = \mathrm{SmoothL1}(d_j) = \begin{cases} 0.5\, d_j^2, & d_j < 1, \\ d_j - 0.5, & \text{otherwise}. \end{cases}$$

The object box loss for the entire image is the sum of the object box losses of all prediction boxes:

$$L_{box} = \sum_{j=1}^{m} L_{box}^{j}.$$
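The object box loss in code, assuming prediction and real centroids are already matched pairwise and that the Smooth L1 threshold is 1 (the common default). `smooth_l1` and `box_loss` are hypothetical helper names.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def smooth_l1(d: float) -> float:
    """Smooth L1 with threshold 1: 0.5 * d^2 if |d| < 1, else |d| - 0.5."""
    d = abs(d)
    return 0.5 * d * d if d < 1.0 else d - 0.5


def box_loss(pred_centroids: Sequence[Point], real_centroids: Sequence[Point]) -> float:
    """L_box = sum_j SmoothL1(d_j), with d_j the distance between matched centroids."""
    return sum(smooth_l1(math.hypot(px - rx, py - ry))
               for (px, py), (rx, ry) in zip(pred_centroids, real_centroids))


print(box_loss([(0.0, 0.5), (3.0, 4.0)], [(0.0, 0.0), (0.0, 0.0)]))  # 4.625
```

The quadratic branch keeps gradients small for tiny offsets, while the linear branch stops large offsets from dominating the loss.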

The prediction loss is the sum of the classification loss and the object box loss, both weighted equally:

$$L_{pred} = L_{cls} + L_{box}.$$

Perceptual Loss: To make the perturbation look natural, we also need to control its range. The perceptibility of the AEs is reduced by minimizing the L2 distance between the AEs and the original images. If the $i$th iteration generates the adversarial example $X_{i}^{adv}$, the perceptual loss is

$$L_{per} = \lVert X_{i}^{adv} - X \rVert_2.$$

The final loss function is a weighted combination of the three losses:

$$L = \lambda_1 L_{feat} + \lambda_2 L_{pred} + \lambda_3 L_{per},$$

where $\lambda_1$, $\lambda_2$, and $\lambda_3$ are the weights of the three losses.
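The perceptual loss and the weighted total can be sketched as follows, assuming flattened images. The default weights of 1.0 are placeholders, not values from this paper; `perceptual_loss` and `total_loss` are hypothetical helpers.

```python
import math
from typing import Sequence, Tuple


def perceptual_loss(x_adv: Sequence[float], x: Sequence[float]) -> float:
    """L_per = ||X_adv - X||_2 over flattened pixels."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x_adv, x)))


def total_loss(l_feat: float, l_pred: float, l_per: float,
               weights: Tuple[float, float, float] = (1.0, 1.0, 1.0)) -> float:
    """L = lambda_1 * L_feat + lambda_2 * L_pred + lambda_3 * L_per (placeholder weights)."""
    w1, w2, w3 = weights
    return w1 * l_feat + w2 * l_pred + w3 * l_per


print(perceptual_loss([3.0, 4.0], [0.0, 0.0]))  # 5.0
```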

The AE generated in the ith iteration is

where $\nabla_X L$ denotes the gradient of the total loss with respect to the original image $X$.

Algorithm 1 gives the implementation details of the FFA to achieve the targetless attack.

Algorithm 1: Foreground feature approximation (FFA).
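The iteration that Algorithm 1 summarizes can be sketched as a loop of gradient steps restricted to the selected foreground. Several details here are assumptions rather than confirmed parts of FFA: the signed step, the $L_\infty$ budget `eps`, clipping to $[0, 1]$, and descending $L$ (rather than ascending it). `loss_and_grad` is a hypothetical callable that would return the total loss and its gradient with respect to the image, which in practice would come from automatic differentiation through the detector and backbone.

```python
from typing import Callable, List, Sequence, Tuple

LossGrad = Callable[[List[float]], Tuple[float, List[float]]]


def ffa_loop(x: Sequence[float], mask: Sequence[int], loss_and_grad: LossGrad,
             alpha: float, eps: float, steps: int) -> List[float]:
    """Skeleton of the iterative attack: signed gradient steps on the total loss.

    mask is 1 on foreground pixels (boxes kept by the score threshold) and 0
    elsewhere, so only the foreground is perturbed. The L_inf budget eps and
    the [0, 1] pixel range are assumptions of this sketch.
    """
    x0 = list(x)
    x_adv = list(x)
    for _ in range(steps):
        _, grad = loss_and_grad(x_adv)  # gradient of L w.r.t. the current image
        for k, g in enumerate(grad):
            if mask[k]:
                step = x_adv[k] - alpha * ((g > 0) - (g < 0))  # descend L
                lo, hi = max(0.0, x0[k] - eps), min(1.0, x0[k] + eps)
                x_adv[k] = min(max(step, lo), hi)
    return x_adv
```

With a toy quadratic loss whose minimum lies at 1.0, masked pixels move toward 1.0 until they hit the budget, while the unmasked background pixel stays fixed.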

3.3.2. Targeted Attack

Location of the Perturbation

As with the targetless attack, we use Equation (12) to obtain the objects of the attack.

The Size of the Perturbation

The targeted attack deceives the classifier in the detector so that it classifies all objects in the image as the specified target. The loss function is designed as follows.

Feature Loss: To realize the targeted attack, we replace the original hybrid image with an image of the target category and extract one target from it as a reference. Because there are many prediction boxes of different sizes, the target foreground must be redesigned before feature approximation. First, we create a blank image with the same shape as the input image and identify the location and size of each prediction box; the target image is then resized to the size of each box and transferred into the corresponding position of the blank image, creating a new target foreground $F_t$. The feature loss is then computed as

$$L_{feat}^{t} = -\frac{1}{HWC} \sum_{h=1}^{H} \sum_{w=1}^{W} \sum_{c=1}^{C} \phi_\theta(F_t)_{h,w,c} \log \frac{\phi_\theta(F_t)_{h,w,c}}{\phi_\theta(F^{i})_{h,w,c}}.$$
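Building the target foreground $F_t$ can be sketched as "blank canvas, then resize and paste the target into every box". The sketch assumes single-channel images, nearest-neighbor resizing, and axis-aligned boxes `(top, left, height, width)` that fit inside the image; rotated boxes would need their own warping. `resize_nearest` and `target_foreground` are hypothetical helpers.

```python
from typing import List, Sequence, Tuple

Image = List[List[float]]
AxisBox = Tuple[int, int, int, int]  # (top, left, height, width)


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbor resize of a 2-D image."""
    in_h, in_w = len(img), len(img[0])
    return [[img[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
            for i in range(out_h)]


def target_foreground(shape: Tuple[int, int], target: Image,
                      boxes: Sequence[AxisBox]) -> Image:
    """Blank canvas of the input's shape with the resized target pasted into every box."""
    canvas = [[0.0] * shape[1] for _ in range(shape[0])]
    for top, left, h, w in boxes:
        patch = resize_nearest(target, h, w)
        for i in range(h):
            for j in range(w):
                canvas[top + i][left + j] = patch[i][j]
    return canvas
```

Every prediction box thus receives a copy of the same target object at the box's own scale, giving the feature loss a per-box reference of matching size.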

Classification Loss: The targeted attack moves the classifier's output steadily closer to the target label, denoted $y_t$. The classification loss is calculated as

$$L_{cls}^{t} = -\sum_{j=1}^{m} \log D(o_j)_{y_t}.$$

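Object box loss: Unlike in the targetless attack, the object box loss in the targeted attack is designed to make the detection box gradually coincide with the real box; the loss is therefore

$$L_{box}^{t} = \sum_{j=1}^{m} \mathrm{SmoothL1}(d_j),$$

which is minimized so that the centroid distance $d_j$ shrinks toward zero.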

As with the targetless attack, the total of the object box loss and the classification loss is the prediction loss.

Perceptual Loss: As with the targetless attack, we construct the perceptual loss by minimizing the L2 distance between the clean image and the AE.

The final loss function is a weighted combination of the three losses. The adversarial perturbation is obtained from the gradient of the loss function with respect to the input image, and adding the perturbation to the input image creates the AE.

4. Experiments

4.1. Experimental Preparation

4.1.1. Datasets

We examine our method on two frequently used RSOD datasets, DOTA [50] and UCAS-AOD [51].

DOTA [50]: Because the original images of this dataset are very large, we cropped them for easier processing. After cropping, the dataset contains more than 5000 PNG images of 1024 × 1024 pixels. After filtering, we obtain 1500 images covering 15 categories. We use the DOTA dataset to validate the effectiveness of FFA in targetless attacks.

UCAS-AOD [51]: This dataset contains 1510 images with 14,596 object instances in only two categories, aircraft and vehicles. The negative sample images in the dataset are of little value for studying adversarial attacks, so we do not use them. As with DOTA, we cropped and processed the images, obtaining 3000 plane images and 1500 vehicle images of 680 × 680 pixels each. We then filter the dataset carefully and remove similar images to obtain 1000 plane images and 1000 vehicle images, which constitute our experimental dataset. This dataset is used to validate the performance of FFA in targeted attacks.

4.1.2. Detectors

In total, we selected seven rotated ODs as both trained and attacked detectors: four two-stage detectors, namely Oriented R-CNN (OR) [52], Gliding Vertex (GV) [53], RoI Transformer (RT) [54], and ReDet (RD) [55]; and three single-stage detectors, namely Rotated RetinaNet (RR) [56], Rotated FCOS (RF) [57], and S2A-Net (S2A) [58]. For the feature extraction network, we use ResNet50 [8], hereafter referred to as R50. All of the above models are implemented with the open-source MMRotate [59] toolbox.

4.1.3. Evaluation Metrics

For targetless attacks, we use the mean average precision (mAP) to evaluate the effect of the FFA attack. The attack on an object is judged successful as long as the detected class differs from its ground-truth class. The smaller the mAP, the better the attack.

The mAP is the average of the per-category AP values, where the AP of each category is the area under that category's precision–recall curve. AP values range from 0 to 1, and a larger value indicates better detection. AP is calculated as

$$AP = \sum_{n} \left(r_{n+1} - r_{n}\right) p\left(r_{n+1}\right)$$

where $r_n$ and $r_{n+1}$ are the values of two adjacent recall points and $p(r_{n+1})$ is the precision corresponding to $r_{n+1}$.
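As a minimal sketch, the AP summation over adjacent recall points, AP = Σ_n (r_{n+1} − r_n) p(r_{n+1}), and its average over categories can be written as below. It assumes the recall/precision points have already been computed from score-ranked detections. Detection toolkits differ in details such as precision interpolation, so this is not necessarily the exact evaluation code used in the experiments.

```python
def average_precision(recalls, precisions):
    """AP = sum over n of (r_{n+1} - r_n) * p(r_{n+1}), starting from r_0 = 0.

    recalls must be sorted in ascending order; precisions[k] is the precision
    measured at recalls[k].
    """
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class):
    """per_class maps a category name to its (recalls, precisions) lists."""
    aps = [average_precision(r, p) for r, p in per_class.values()]
    return sum(aps) / len(aps)
```

For example, with a "plane" curve of recalls `[0.5, 1.0]` and precisions `[1.0, 0.5]` (AP 0.75) and a "small-vehicle" curve of `[1.0]` and `[0.25]` (AP 0.25), the mAP is 0.5, which would be reported as 50.0 after multiplying by 100.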

For targeted attacks, we use mAP and the number of target-class detection boxes output by the detector to evaluate the attack; the smaller the mAP and the larger the number of target-class boxes, the better the attack. The IoU threshold is set to 0.5. To evaluate the perceptibility of the AEs, we use information content weighted structural similarity (IW-SSIM) [60]. The smaller the value, the lower the perceptibility of the perturbation and the better the result.
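The count of target-class detections used for targeted attacks can be sketched as follows. For brevity the sketch uses axis-aligned boxes `(x0, y0, x1, y1)`; the rotated detectors in this study would instead require a rotated-box (polygon) IoU. The function names and box format are hypothetical. A detection is counted when it carries the target label and overlaps some ground-truth object with IoU at or above `iou_thr`.

```python
def iou_xyxy(a, b):
    """IoU of two axis-aligned boxes given as (x0, y0, x1, y1)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_target_boxes(detections, gt_boxes, target_label, iou_thr=0.5):
    """Count detections labelled target_label that overlap a ground-truth box."""
    return sum(
        1
        for box, label in detections
        if label == target_label and any(iou_xyxy(box, g) >= iou_thr for g in gt_boxes)
    )
```

Raising `iou_thr` from 0.5 to 0.75 makes the count stricter, since loosely placed target-class boxes no longer qualify.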

The calculation formula for the IW-SSIM is

where $M$ is the number of scales and $N_j$ is the number of evaluation windows at scale $j$; $l$, $c$, and $s$ are the brightness, contrast, and structural similarity terms; $x_{j,i}$ and $y_{j,i}$ are the $i$-th windows of the two images at scale $j$; and $\beta_j$ is the weight of scale $j$.

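Brightness, contrast, and structural similarity are calculated as follows:

$$l(x,y)=\frac{2\mu_x\mu_y+C_1}{\mu_x^2+\mu_y^2+C_1},\qquad c(x,y)=\frac{2\sigma_x\sigma_y+C_2}{\sigma_x^2+\sigma_y^2+C_2},\qquad s(x,y)=\frac{\sigma_{xy}+C_3}{\sigma_x\sigma_y+C_3}$$

where $\mu$, $\sigma$, and $\sigma_{xy}$ denote the mean, standard deviation, and cross-correlation, respectively, and $C_1$, $C_2$, and $C_3$ are small constants.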
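The brightness (luminance), contrast, and structure terms for one pair of windows can be computed as in the sketch below, which follows the standard SSIM definitions. The paper does not state its constants; the sketch assumes pixel values in [0, 1] and the common SSIM choice C1 = 0.01², C2 = 0.03², C3 = C2/2. IW-SSIM then pools such window-level terms across windows and scales as defined in [60]; that pooling is not reproduced here.

```python
import math


def ssim_components(x, y, c1=1e-4, c2=9e-4, c3=None):
    """Luminance l, contrast c and structure s for two equal-length windows.

    Pixel values are assumed to lie in [0, 1]; the default constants are the
    common SSIM choice C1 = 0.01**2, C2 = 0.03**2 and C3 = C2 / 2.
    """
    if c3 is None:
        c3 = c2 / 2
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    sd_x, sd_y = math.sqrt(var_x), math.sqrt(var_y)
    l = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    c = (2 * sd_x * sd_y + c2) / (var_x + var_y + c2)
    s = (cov_xy + c3) / (sd_x * sd_y + c3)
    return l, c, s
```

Identical windows give l = c = s = 1, while an inverted window (each pixel replaced by 1 minus its value) gives a negative structure term.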

For ease of comparison, we multiply both the mAP50 and IW-SSIM values by 100. In addition, we assess the attack speed of the various algorithms by the average time needed to attack one image on an NVIDIA GeForce RTX 3060 (12 GB) graphics card.
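A minimal sketch of measuring the average attack time per image and of the ×100 scaling used in the tables is given below. The function names are hypothetical. Because CUDA kernels run asynchronously, a GPU measurement should synchronize before reading the clock; the optional `synchronize` argument is meant to receive a callable such as `torch.cuda.synchronize`.

```python
import time


def average_attack_time(attack_fn, images, synchronize=None):
    """Mean wall-clock seconds per image spent in attack_fn.

    synchronize: optional callable (e.g. torch.cuda.synchronize) invoked
    before each clock read, because GPU work is queued asynchronously.
    """
    total = 0.0
    for img in images:
        if synchronize is not None:
            synchronize()
        start = time.perf_counter()
        attack_fn(img)
        if synchronize is not None:
            synchronize()
        total += time.perf_counter() - start
    return total / len(images)


def to_table_units(value):
    """Scale an mAP50 or IW-SSIM value in [0, 1] by 100 for reporting."""
    return 100 * value
```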

4.1.4. Parameter Setting

The number of iterations I is set to 50, the learning rate to 0.1, and the score threshold for filtering high-quality prediction boxes to 0.75. For the targetless attack, the weight of the feature loss is set to 1, and the weights of the prediction loss and the perceptual loss are both set to 0.1. For the targeted attack, the weight of the prediction loss is set to 1 and that of the perceptual loss to 0.1.
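The settings above, together with the high-quality prediction filtering step, can be collected into a small sketch. The feature-loss weight for the targeted attack is not stated and is assumed to be 1. The filter keeps predictions whose score reaches the 0.75 threshold and that match a ground-truth object of the same class (the Conclusions mention screening by prediction score and IoU); the IoU threshold `iou_thr=0.5`, the dictionary layout, and the axis-aligned IoU are hypothetical simplifications.

```python
# Hyperparameters reported in Section 4.1.4. The targeted feature-loss weight
# is not stated in the text; 1.0 below is an assumption.
CONFIG = {
    "iterations": 50,
    "learning_rate": 0.1,
    "score_threshold": 0.75,
    "targetless": {"w_feat": 1.0, "w_pred": 0.1, "w_perc": 0.1},
    "targeted": {"w_feat": 1.0, "w_pred": 1.0, "w_perc": 0.1},
}


def iou_xyxy(a, b):
    """IoU of two axis-aligned boxes given as (x0, y0, x1, y1)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_high_quality(predictions, gt_objects, score_thr=0.75, iou_thr=0.5):
    """Keep predictions with score >= score_thr that match a ground-truth object
    of the same class with IoU >= iou_thr (iou_thr is a hypothetical value)."""
    kept = []
    for p in predictions:
        if p["score"] < score_thr:
            continue
        if any(p["label"] == label and iou_xyxy(p["box"], box) >= iou_thr
               for box, label in gt_objects):
            kept.append(p)
    return kept
```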

This study was implemented on the PyTorch platform (https://pytorch.org/).

4.2. Targetless Attack

The targetless attack is tested on the DOTA [50] dataset using seven rotated ODs, all with ResNet50 [8] backbones. We measure attack capability with the mAP at an IoU threshold of 0.5 (hereafter mAP50); smaller values indicate a stronger attack. We measure the imperceptibility of the perturbation with IW-SSIM [60]; smaller values indicate a less perceptible perturbation. We use the average attack time per image to measure attack speed; smaller values indicate a faster attack. Table 1 shows the experimental results. For ease of reading, the mAP50 and IW-SSIM values in the table are multiplied by 100.

Table 1.

Targetless attack effectiveness of different adversarial attack methods on DOTA dataset.

The data in the table show that the attack capability of FFA is significantly stronger than that of current state-of-the-art methods, and its attack transferability in particular improves substantially. We attribute this to the design of FFA: it is a generic attack on commonly used backbone networks that changes how the image's features appear to the backbone, thereby attacking the model's attention mechanism and producing a more powerful adversarial perturbation. The perturbation generated by FFA is slightly less imperceptible than that of the LGP attack, because LGP uses an adaptive perturbation-region updating strategy to select more cost-effective regions to attack. FFA, in contrast, selects the attacked regions based on the ODs' predictions and the ground-truth labels of the clean images. Its attack effect is therefore partly tied to the detection accuracy of the ODs, and it inevitably selects some cost-ineffective regions during the attack, which weakens the imperceptibility of the perturbation. In terms of attack speed, FFA is slightly slower than LGP because its feature approximation strategy requires computing feature distances during the attack, which adds computation. In short, FFA trades some imperceptibility and attack speed for stronger attack effectiveness.

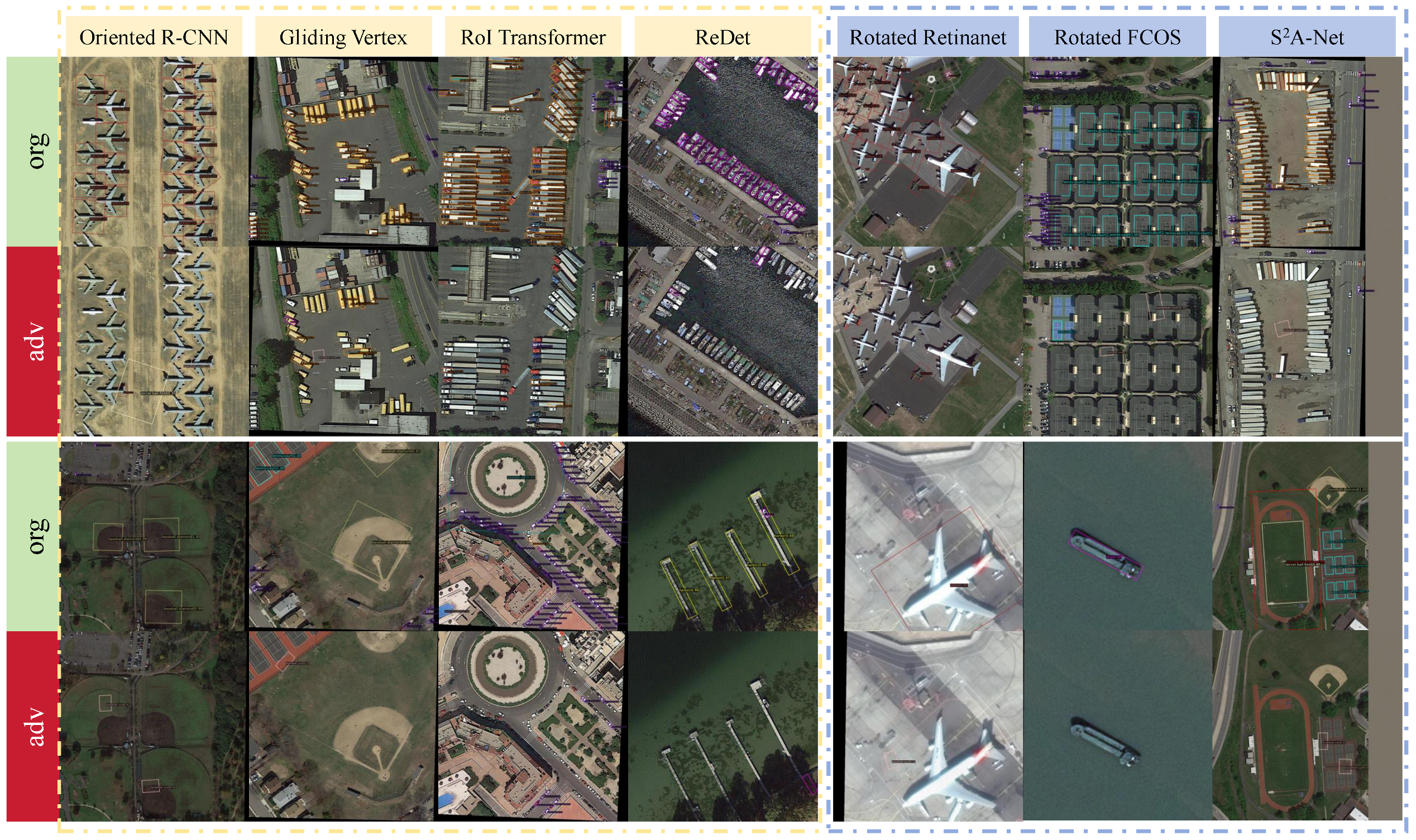

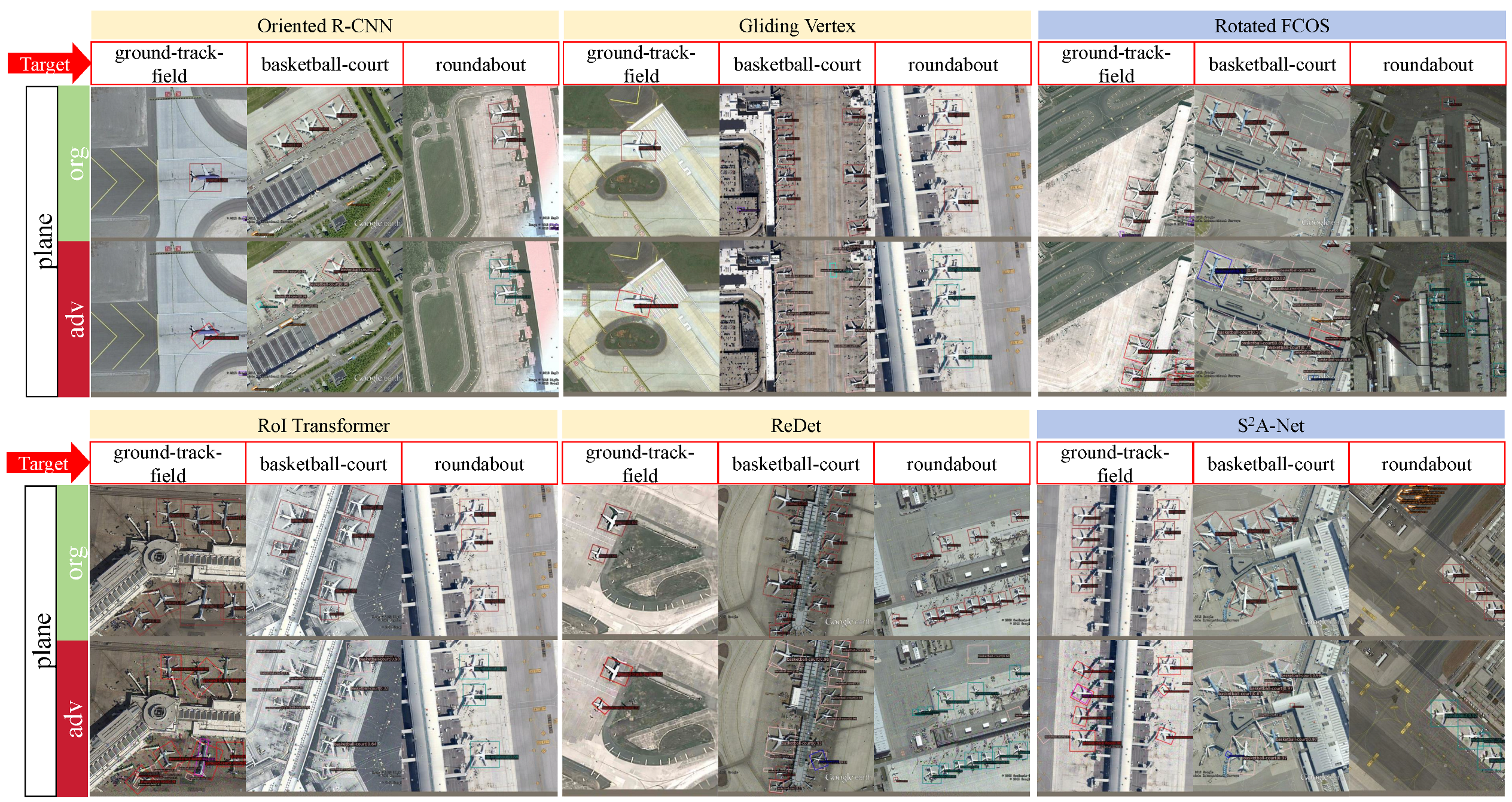

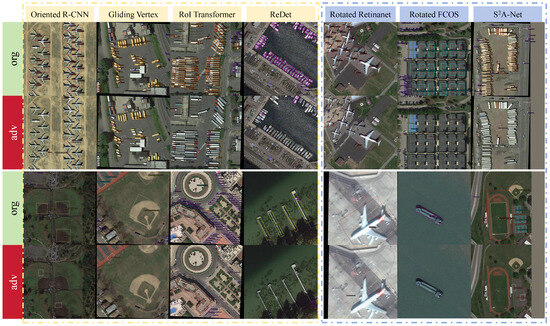

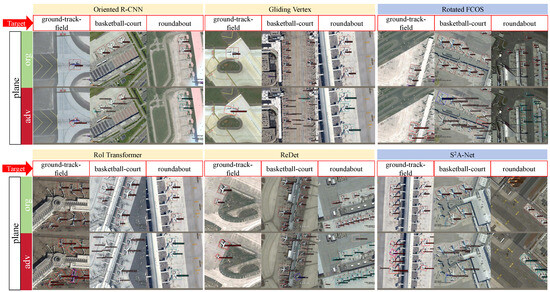

The visualization results of the targetless attack are shown in Figure 4. The figure shows that FFA attacks effectively not only large, sparse objects but also small, dense ones.

Figure 4.

Visualization of FFA targetless attack detection results. There are four rows in total. The top two rows show the detection results on the original images and on the AEs for small objects, and the bottom two rows show the same for large objects. The orange dashed section shows the detection results of the two-stage ODs, and the blue dashed section shows those of the single-stage ODs.

4.3. Targeted Attack

4.3.1. White Box Attack Performance

Targeted attacks were tested on the UCAS-AOD [51] dataset using seven rotated ODs, all with ResNet50 backbones. We measured attack capability using mAP50 and the number of target-class detections at an IoU threshold of 0.75; a smaller mAP50 and a larger number of target-class detections indicate a stronger attack. The results are shown in Table 2. The table shows that the attack capability of FFA is significantly stronger than that of existing advanced attack methods, indicating that FFA achieves a high success rate by changing the object features in the image.

Table 2.

White-box targeted attack effectiveness of different adversarial attack methods on UCAS-AOD dataset.

4.3.2. Transferability Experiments

To verify the transferability of FFA's targeted attack, we designed the following experiment. A two-stage OD, OR [52], and a single-stage OD, S2A [58], are each used as the trained detector to attack the remaining five detectors. The original categories are planes and vehicles, and the corresponding target categories are basketball courts and ground track fields, respectively. The evaluation metrics are mAP50 and the number of target-class detections. The results are shown in Table 3.

Table 3.

Transferability testing of targeted adversarial attack methods.

The data in the table show that FFA transfers better than the other methods. We also find that FFA attacks simple models more effectively than complex ones: the mAP50 of FFA on single-stage detectors is, on average, 2 percentage points lower than on two-stage detectors, while the number of target-class detections is, on average, more than 200. In addition, the accuracy of the trained OD affects the attack: because two-stage ODs are slightly more accurate than single-stage ODs, attacks crafted with a two-stage OD are stronger than those crafted with a single-stage OD. Likewise, targeted attacks on planes succeed significantly more often than those on vehicles, because the ODs detect planes more accurately than vehicles.

4.3.3. Imperceptibility and Attack Speed Test

As with the targetless attack, we also tested the imperceptibility of the perturbation and the attack speed of the algorithm. The results are shown in Table 4.

Table 4.

Imperceptibility and attack speed tests for targeted attacks.

The data in the table show that the perturbation of FFA is slightly less imperceptible than that of LGP and that FFA attacks more slowly. This is because FFA sacrifices some perturbation imperceptibility and attack speed to obtain a highly transferable attack. In addition, we find that attacks on single-stage ODs succeed more often than attacks on two-stage ODs, owing to the simpler model structure and longer attack time of single-stage ODs.

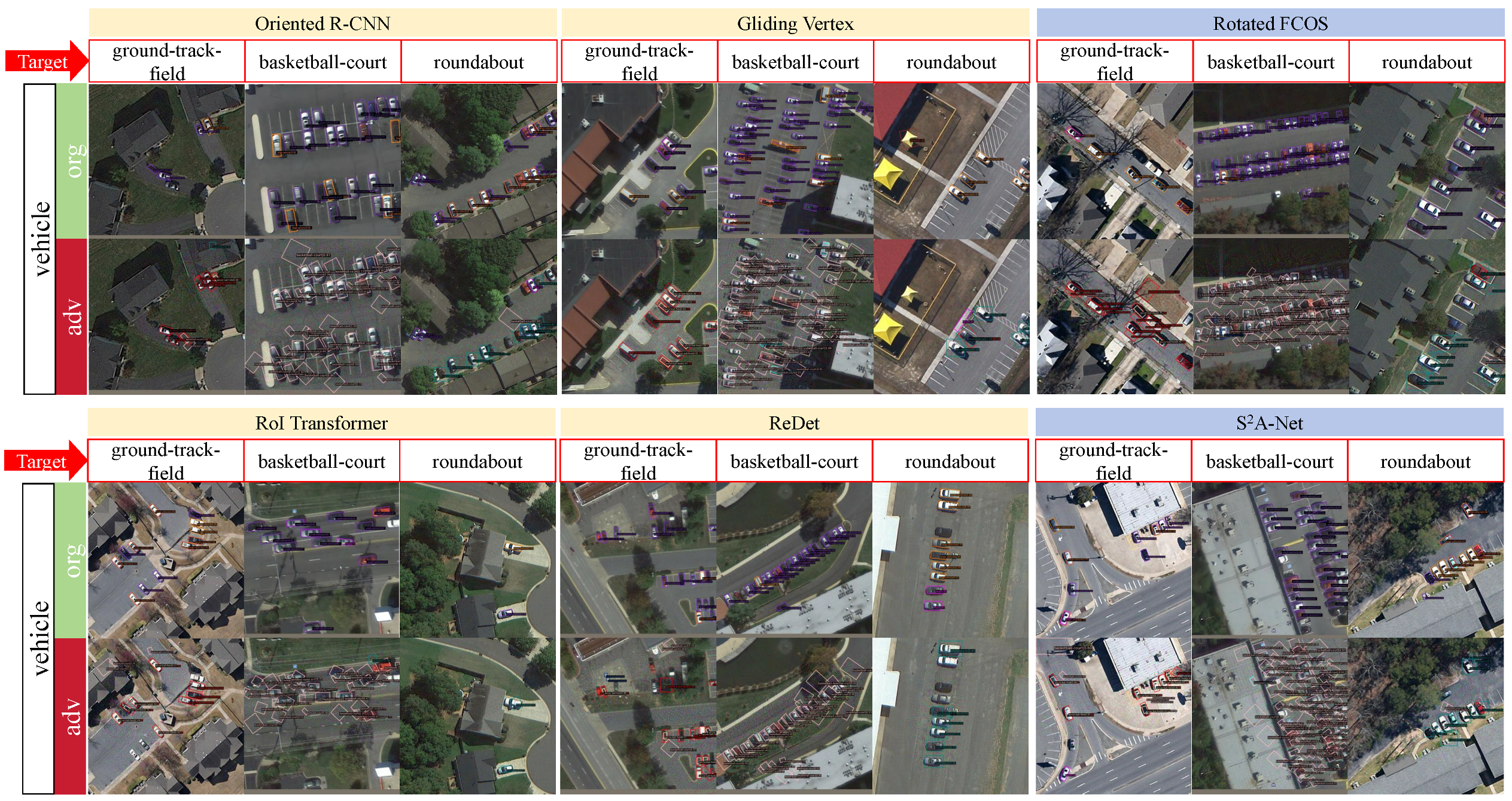

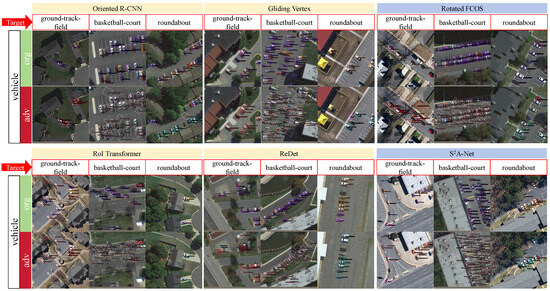

The visualization results of targeted attacks are shown in Figures 5 and 6. FFA achieves good attack results on different targets across different detectors.

Figure 5.

Visualization of object detection results for targeted attacks against planes. The red arrows indicate the target category; orange marks the detection results of the two-stage ODs and blue those of the single-stage ODs.

Figure 6.

Visualization of object detection results for targeted attacks against vehicles. The red arrows indicate the target category; orange marks the detection results of the two-stage ODs and blue those of the single-stage ODs.

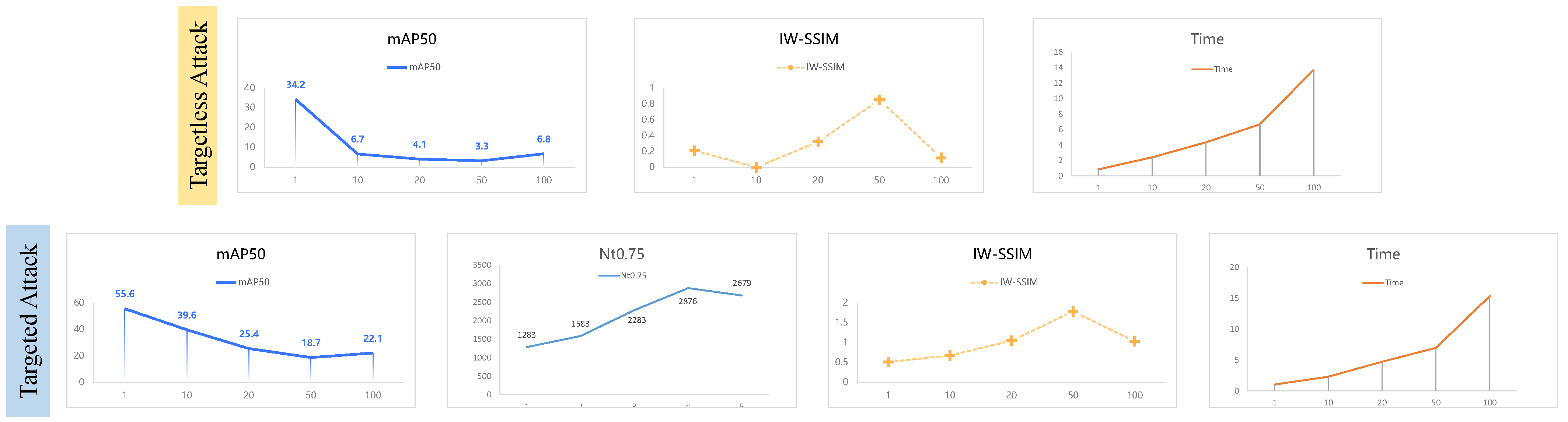

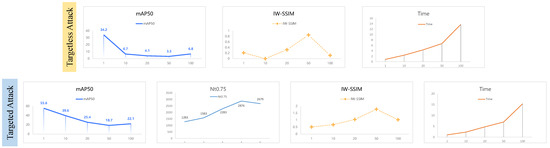

4.4. Effect of Iteration Number

The number of iterations directly affects the efficiency of the attack, so we assessed its influence using multiple evaluation metrics. The results are shown in Table 5 and Figure 7.

Table 5.

Effect of iteration number on attack results.

Figure 7.

The impact of the number of iterations on the attack. For both the targetless and targeted attacks, the trained and attacked OD is OR, with a ResNet50 backbone.

The results show that only the attack time is positively correlated with the number of iterations: the more iterations, the longer the attack time. The relationship between the number of iterations and both the attack effect and the imperceptibility is more complicated. As the number of iterations increases, the attack effect first strengthens and then weakens, while the imperceptibility of the perturbation does the opposite, first weakening and then strengthening. This reveals a trade-off between attack effect and imperceptibility: the stronger the attack, the weaker the imperceptibility of the perturbation. Since the results in the table show that the imperceptibility of the perturbation remains at a low level, and taking this into account, we set the number of iterations of FFA to 50.

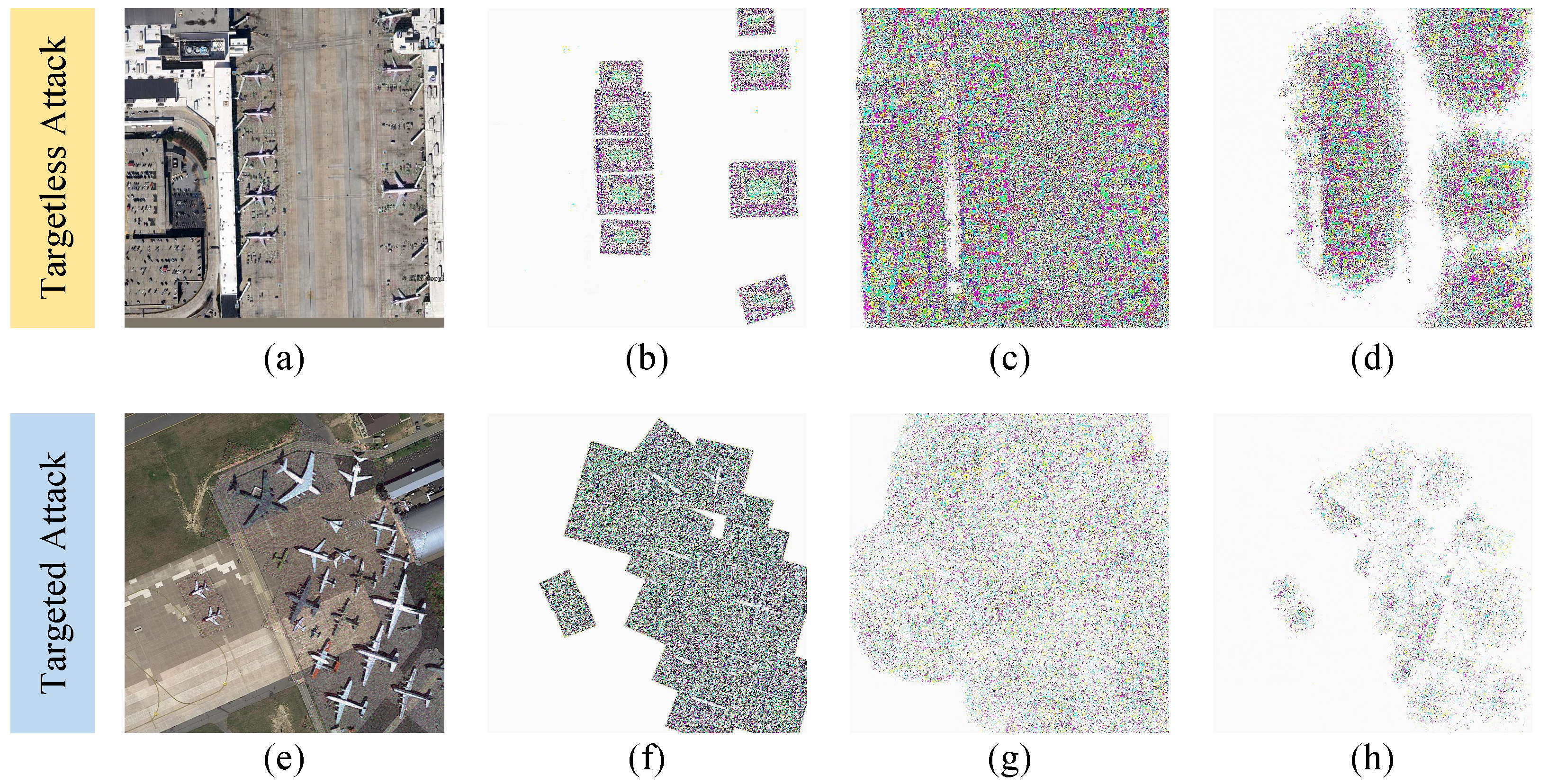

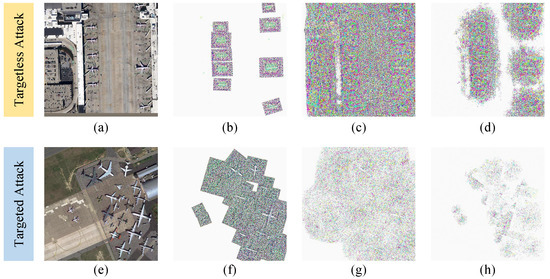

4.5. Ablation Experiments

In this section, we verify how each loss used in the method affects attack efficacy. The quantitative results are shown in Table 6, and the visualization results are shown in Figure 8.

Table 6.

The impact of different components of the loss function on the attack effectiveness.

Figure 8.

Visualization results of perturbations in ablation experiments. The upper row shows the targetless attack, and the lower row shows the targeted attack. (a,e) are the original images; (b,f) are the perturbations with feature loss only; (c,g) are the perturbations with prediction loss and feature loss; and (d,h) are the perturbations with feature loss, prediction loss, and perceptual loss.

5. Conclusions

This work explores a strategy for generating AEs for RSOD. Most existing methods directly attack the prediction information of the image to fool the ODs. Unlike these methods, we propose an attack that exploits the information carried by the image itself, changing the image's features as seen by the backbone. Specifically, we first use the OD to filter out high-quality predictions as the objects to attack, create a hybrid foreground without any target, and use the KL divergence to minimize the distance between the shallow features of the attacked objects and those of the hybrid foreground, which achieves a targetless attack. Replacing the hybrid foreground with the target foreground and repeating this process realizes the targeted attack. Although the method is relatively simple, the results of attacking seven rotated ODs on two datasets show that it can generate AEs with high transferability.
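The KL-divergence feature objective summarized above can be sketched on flattened feature vectors. The text does not specify how shallow features are turned into probability distributions, so this sketch assumes each flattened feature map is softmax-normalized and computes KL(reference ‖ attacked); both the normalization and the direction are illustrative assumptions, and the function names are hypothetical. In practice this would run on tensors inside an autodiff framework so that its gradient can drive the perturbation.

```python
import math


def softmax(values):
    m = max(values)  # shift for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)


def feature_kl_loss(feat_attacked, feat_reference):
    """KL between softmax-normalised, flattened shallow features.

    feat_reference stands for the hybrid (targetless) or target (targeted)
    foreground features; normalisation and KL direction are assumptions.
    """
    return kl_divergence(softmax(feat_reference), softmax(feat_attacked))
```

The loss is zero when the attacked features already match the reference and positive otherwise, so minimizing it pulls the attacked object's features toward the reference foreground.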

There are still several directions in which this study can be improved: (1) When selecting the objects to attack, we screen by prediction-score and IoU thresholds, which can admit poor-quality attack objects and weaken the attack; a better screening strategy could address this. (2) The imperceptibility of the perturbation is weak; more effective perturbation-control methods could improve it. (3) The attack is slow, and the algorithm still contains redundant calculations that increase its cost; a more efficient iteration scheme could reduce the computation.

Author Contributions

Conceptualization, S.M.; methodology, L.H.; software, R.Z.; validation, R.Z., S.M., and L.H.; formal analysis, W.G.; investigation, R.Z.; resources, W.G.; data curation, R.Z.; writing—original draft preparation, R.Z.; writing—review and editing, W.G.; visualization, R.Z.; supervision, S.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data associated with this research are available online. The DOTA dataset is available at https://captain-whu.github.io/DOTA/dataset.html (accessed on 16 May 2024). The UCAS-AOD dataset is available at https://hyper.ai/datasets/5419 (accessed on 16 May 2024). Both datasets are cited appropriately in this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Dou, P.; Huang, C.; Han, W.; Hou, J.; Zhang, Y.; Gu, J. Remote sensing image classification using an ensemble framework without multiple classifiers. ISPRS J. Photogramm. Remote Sens. 2024, 208, 190–209.

- Zhu, R.; Ma, S.; Lian, J.; He, L.; Mei, S. Generating Adversarial Examples Against Remote Sensing Scene Classification via Feature Approximation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 10174–10187.

- Xu, Y.; Ghamisi, P. Universal adversarial examples in remote sensing: Methodology and benchmark. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- Gu, H.; Gu, G.; Liu, Y.; Lin, H.; Xu, Y. Multi-Branch Attention Fusion Network for Cloud and Cloud Shadow Segmentation. Remote Sens. 2024, 16, 2308.

- Xiao, T.; Liu, Y.; Huang, Y.; Li, M.; Yang, G. Enhancing multiscale representations with transformer for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–16.

- Yi, H.; Liu, B.; Zhao, B.; Liu, E. Small Object Detection Algorithm Based on Improved YOLOv8 for Remote Sensing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 1734–1747.

- Wang, W.; Cai, Y.; Luo, Z.; Liu, W.; Wang, T.; Li, Z. SA3Det: Detecting Rotated Objects via Pixel-Level Attention and Adaptive Labels Assignment. Remote Sens. 2024, 16, 2496.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Chen, Y.; Tang, Y.; Xiao, Y.; Yuan, Q.; Zhang, Y.; Liu, F.; He, J.; Zhang, L. Satellite video single object tracking: A systematic review and an oriented object tracking benchmark. ISPRS J. Photogramm. Remote Sens. 2024, 210, 212–240.

- Zhang, Y.; Pu, C.; Qi, Y.; Yang, J.; Wu, X.; Niu, M.; Wei, M. CDTracker: Coarse-to-Fine Feature Matching and Point Densification for 3D Single-Object Tracking. Remote Sens. 2024, 16, 2322.

- Xie, Y.; Zhan, N.; Zhu, J.; Xu, B.; Chen, H.; Mao, W.; Luo, X.; Hu, Y. Landslide extraction from aerial imagery considering context association characteristics. Int. J. Appl. Earth Obs. Geoinf. 2024, 131, 103950.

- Zhu, J.; Zhang, J.; Chen, H.; Xie, Y.; Gu, H.; Lian, H. A cross-view intelligent person search method based on multi-feature constraints. Int. J. Digit. Earth 2024, 17, 2346259.

- Xu, W.; Feng, Z.; Wan, Q.; Xie, Y.; Feng, D.; Zhu, J.; Liu, Y. Building Height Extraction From High-Resolution Single-View Remote Sensing Images Using Shadow and Side Information. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024.

- Mei, S.; Lian, J.; Wang, X.; Su, Y.; Ma, M.; Chau, L.P. A comprehensive study on the robustness of image classification and object detection in remote sensing: Surveying and benchmarking. arXiv 2023, arXiv:2306.12111.

- Baniecki, H.; Biecek, P. Adversarial attacks and defenses in explainable artificial intelligence: A survey. Inf. Fusion 2024, 107, 102303.

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199.

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276.

- Cui, C.; Ma, Y.; Cao, X.; Ye, W.; Zhou, Y.; Liang, K.; Chen, J.; Lu, J.; Yang, Z.; Liao, K.D.; et al. A survey on multimodal large language models for autonomous driving. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 4–8 January 2024; pp. 958–979.

- Zhao, J.; Zhao, W.; Deng, B.; Wang, Z.; Zhang, F.; Zheng, W.; Cao, W.; Nan, J.; Lian, Y.; Burke, A.F. Autonomous driving system: A comprehensive survey. Expert Syst. Appl. 2023, 242, 122836.

- Cai, X.; Tang, X.; Pan, S.; Wang, Y.; Yan, H.; Ren, Y.; Chen, N.; Hou, Y. Intelligent recognition of defects in high-speed railway slab track with limited dataset. Comput.-Aided Civ. Infrastruct. Eng. 2024, 39, 911–928.

- Niu, H.; Yin, F.; Kim, E.S.; Wang, W.; Yoon, D.Y.; Wang, C.; Liang, J.; Li, Y.; Kim, N.Y. Advances in flexible sensors for intelligent perception system enhanced by artificial intelligence. InfoMat 2023, 5, e12412.

- Li, G.; Xu, Y.; Ding, J.; Xia, G.S. Towards generic and controllable attacks against object detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–12.

- Lu, J.; Sibai, H.; Fabry, E. Adversarial examples that fool detectors. arXiv 2017, arXiv:1712.02494.

- Xie, C.; Wang, J.; Zhang, Z.; Zhou, Y.; Xie, L.; Yuille, A. Adversarial examples for semantic segmentation and object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1369–1378.

- Kurakin, A.; Goodfellow, I.J.; Bengio, S. Adversarial examples in the physical world. In Artificial Intelligence Safety and Security; Chapman and Hall/CRC: Boca Raton, FL, USA, 2018; pp. 99–112.

- Thys, S.; Van Ranst, W.; Goedemé, T. Fooling automated surveillance cameras: Adversarial patches to attack person detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 15–20 June 2019; pp. 49–55.

- Czaja, W.; Fendley, N.; Pekala, M.; Ratto, C.; Wang, I.J. Adversarial examples in remote sensing. In Proceedings of the 26th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, Seattle, WA, USA, 6–9 November 2018; pp. 408–411.

- Lu, M.; Li, Q.; Chen, L.; Li, H. Scale-adaptive adversarial patch attack for remote sensing image aircraft detection. Remote Sens. 2021, 13, 4078.

- Du, A.; Chen, B.; Chin, T.J.; Law, Y.W.; Sasdelli, M.; Rajasegaran, R.; Campbell, D. Physical adversarial attacks on an aerial imagery object detector. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 1796–1806.

- Lian, J.; Wang, X.; Su, Y.; Ma, M.; Mei, S. CBA: Contextual background attack against optical aerial detection in the physical world. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–16.

- Rawat, W.; Wang, Z. Deep convolutional neural networks for image classification: A comprehensive review. Neural Comput. 2017, 29, 2352–2449.

- Agnihotri, S.; Jung, S.; Keuper, M. Cospgd: A unified white-box adversarial attack for pixel-wise prediction tasks. arXiv 2023, arXiv:2302.02213.

- Liu, H.; Ge, Z.; Zhou, Z.; Shang, F.; Liu, Y.; Jiao, L. Gradient correction for white-box adversarial attacks. IEEE Trans. Neural Netw. Learn. Syst. 2023, 1–12.

- Lin, G.; Pan, Z.; Zhou, X.; Duan, Y.; Bai, W.; Zhan, D.; Zhu, L.; Zhao, G.; Li, T. Boosting adversarial transferability with shallow-feature attack on SAR images. Remote Sens. 2023, 15, 2699.

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. In Proceedings of the International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014.

- Shi, Y.; Wang, S.; Han, Y. Curls & whey: Boosting black-box adversarial attacks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 6519–6527.

- Chen, P.Y.; Zhang, H.; Sharma, Y.; Yi, J.; Hsieh, C.J. Zoo: Zeroth order optimization based black-box attacks to deep neural networks without training substitute models. In Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security, Dallas, TX, USA, 3 November 2017; pp. 15–26.

- Yin, F.; Zhang, Y.; Wu, B.; Feng, Y.; Zhang, J.; Fan, Y.; Yang, Y. Generalizable black-box adversarial attack with meta learning. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 1804–1818.

- Brendel, W.; Rauber, J.; Bethge, M. Decision-based adversarial attacks: Reliable attacks against black-box machine learning models. arXiv 2017, arXiv:1712.04248.

- Reza, M.F.; Rahmati, A.; Wu, T.; Dai, H. Cgba: Curvature-aware geometric black-box attack. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 124–133.

- Boutros, F.; Struc, V.; Fierrez, J.; Damer, N. Synthetic data for face recognition: Current state and future prospects. Image Vis. Comput. 2023, 135, 104688.

- Sun, Y.; Zhang, Y.; Wang, H.; Guo, J.; Zheng, J.; Ning, H. SES-YOLOv8n: Automatic driving object detection algorithm based on improved YOLOv8. Signal Image Video Process. 2024, 18, 3983–3992.

- Wenqi, Y.; Gong, C.; Meijun, W.; Yanqing, Y.; Xingxing, X.; Xiwen, Y.; Junwei, H. MAR20: A benchmark for military aircraft recognition in remote sensing images. Natl. Remote Sens. Bull. 2024, 27, 2688–2696.

- Yang, J.; Xu, J.; Lv, Y.; Zhou, C.; Zhu, Y.; Cheng, W. Deep learning-based automated terrain classification using high-resolution DEM data. Int. J. Appl. Earth Obs. Geoinf. 2023, 118, 103249.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Wu, S.; Tan, Y.a.; Wang, Y.; Ma, R.; Ma, W.; Li, Y. Towards transferable adversarial attacks with centralized perturbation. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 6109–6116.

- Wang, Z.; Zhang, C. Attacking object detector by simultaneously learning perturbations and locations. Neural Process. Lett. 2023, 55, 2761–2776.

- Liu, X.; Yang, H.; Liu, Z.; Song, L.; Li, H.; Chen, Y. Dpatch: An adversarial patch attack on object detectors. arXiv 2018, arXiv:1806.02299.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3974–3983.

- Zhu, H.; Chen, X.; Dai, W.; Fu, K.; Ye, Q.; Jiao, J. Orientation robust object detection in aerial images using deep convolutional neural network. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 3735–3739.

- Xie, X.; Cheng, G.; Wang, J.; Yao, X.; Han, J. Oriented R-CNN for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3520–3529.

- Xu, Y.; Fu, M.; Wang, Q.; Wang, Y.; Chen, K.; Xia, G.S.; Bai, X. Gliding vertex on the horizontal bounding box for multi-oriented object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 1452–1459.

- Ding, J.; Xue, N.; Long, Y.; Xia, G.S.; Lu, Q. Learning RoI transformer for oriented object detection in aerial images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2849–2858.

- Han, J.; Ding, J.; Xue, N.; Xia, G.S. Redet: A rotation-equivariant detector for aerial object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 2786–2795.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully convolutional one-stage object detection. arXiv 2019, arXiv:1904.01355.

- Han, J.; Ding, J.; Li, J.; Xia, G.S. Align deep features for oriented object detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–11.

- Zhou, Y.; Yang, X.; Zhang, G.; Wang, J.; Liu, Y.; Hou, L.; Jiang, X.; Liu, X.; Yan, J.; Lyu, C.; et al. Mmrotate: A rotated object detection benchmark using pytorch. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 7331–7334.

- Wang, Z.; Li, Q. Information content weighting for perceptual image quality assessment. IEEE Trans. Image Process. 2010, 20, 1185–1198.

- Chow, K.H.; Liu, L.; Loper, M.; Bae, J.; Gursoy, M.E.; Truex, S.; Wei, W.; Wu, Y. Adversarial objectness gradient attacks in real-time object detection systems. In Proceedings of the 2020 Second IEEE International Conference on Trust, Privacy and Security in Intelligent Systems and Applications (TPS-ISA), Atlanta, GA, USA, 28–31 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 263–272.

- Chen, P.C.; Kung, B.H.; Chen, J.C. Class-aware robust adversarial training for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 10420–10429.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).