Abstract

There has been substantial research on multi-medium visual measurement in fields such as underwater three-dimensional reconstruction and underwater structure monitoring. Addressing the issue where traditional air-based visual-measurement models fail due to refraction when light passes through different media, numerous studies have established refraction-imaging models based on the actual geometry of light refraction to compensate for the effects of refraction on cross-media imaging. However, the calibration of refraction parameters inevitably contains errors, leading to deviations in these parameters. To analyze the impact of refraction-parameter deviations on measurements in underwater structure visual navigation, this paper develops a dual-media stereo-vision measurement simulation model and conducts comprehensive simulation experiments. The results indicate that to achieve high-precision underwater-measurement outcomes, the calibration method for refraction parameters, the distribution of the targets in the field of view, and the distance of the target from the camera must all be meticulously designed. These findings provide guidance for the construction of underwater stereo-vision measurement systems, the calibration of refraction parameters, underwater experiments, and practical applications.

1. Introduction

Multi-medium visual measurement is a crucial branch of the visual-measurement field. Common multi-media visual-measurement scenarios include situations where light traverses two media, such as air–water, or three media, such as air–glass–air and air–glass–water [1,2,3]. Examples include the visual measurement of the thermal deformation of alumina ceramic plates and stainless-steel plates under radiant heating [4] and the three-dimensional shape measurement of underwater bridge piers [5].

Multi-medium visual measurement has garnered extensive attention in areas such as underwater deformation monitoring and three-dimensional reconstruction [1,5,6,7,8]. In addition, it can also be applied to underwater navigation and positioning. In underwater engineering, such as immersed tunnels, the precise alignment of prefabricated elements requires high-precision underwater navigation and positioning [9]. Similarly, the autonomous recovery of autonomous underwater vehicles (AUVs) necessitates accurate navigation [10]. For navigation scenarios involving the docking of underwater structures at close range, visual measurement becomes a crucial method due to its rich information and high accuracy [11,12].

In underwater visual measurement, cameras are either placed in waterproof housings or positioned above the water surface. Consequently, a significant characteristic is that light propagation undergoes refraction at the interfaces of different media, causing the light rays to deviate from a straight-line path. Therefore, traditional air-based stereo-vision measurement models are no longer applicable under multi-media conditions [13]. When the camera images an object through a sealed housing’s transparent window, the interface typically comes in shapes such as planar, hemispherical, and cylindrical [14,15,16]. Hemispherical ports counteract the refraction effect through their specific shapes, do not reduce the field of view, and can withstand high pressure in deep water, but they may induce image blurring and have higher manufacturing requirements [17,18]. Cylindrical interfaces have the advantage of allowing a larger field of view (in one direction) and are relatively simple and cost-effective to manufacture [19]. Although flat interfaces can significantly reduce the field of view and may introduce chromatic aberration, they are well-studied and have lower manufacturing costs [17]. Therefore, the focus of this paper is on underwater visual measurement with a flat interface.

The basic problems of underwater photogrammetry with a flat interface, including the fundamental formulas and methods for automatic reduction to a single perspective, were explored decades ago [20,21,22]. Since at least the 1960s, researchers have proposed numerous methods for refraction compensation in underwater visual measurement through flat interfaces. Broadly, these methods fall into three categories. The first places two auxiliary calibration grids or a calibration frame in the object space and uses this structure to determine the direction of each light ray before it is refracted [23]. However, this method requires custom calibration grids or frames, which makes implementation relatively complex. The second is focal-length compensation or refraction absorption, in which the pixel offset induced by refraction is approximated as an error caused by a change in lens focal length or distortion; calibrating the camera's lens or distortion parameters then mitigates the influence of refraction [24,25,26]. Nevertheless, because the refractive effect is nonlinear and its magnitude depends on the object's position and the angle of incidence, these methods eliminate refraction effects only approximately [13,27]. The third is geometric refraction correction, which establishes an underwater refraction imaging model through geometric analysis to account explicitly for refraction, thereby ensuring, in theory, accurate measurement results [8,28,29,30,31,32,33,34,35,36,37].

Research on geometric refraction correction focuses primarily on calibration methods and measurement models. Two-stage underwater camera calibration represented a groundbreaking advance in the field [29]. Optimization methods can also be used to calibrate parameters such as the normal vector of the refraction interface and the distance from the interface to the camera, although appropriate initial values must be assigned in advance [30]. Exploiting the geometric property that the two incident rays from the same object point entering the stereo camera lie in the same plane, the calibration parameters can also be optimized through 3D point remapping [33]. Because the refractive index varies with the frequency of light, the parameters of an underwater camera can be calibrated by measuring the offset between the imaging positions of different light frequencies emitted at the same location by a specially designed calibration plate [31]. To eliminate measurement errors caused by a spherical refraction interface, an underwater calibration algorithm based on an advanced non-dominated sorting genetic algorithm has been proposed; using an integrated geometric refraction model, it significantly improves the performance of underwater visual measurement [35].

Regarding the influence of refraction on visual measurement, its effect on the accuracy of multi-view (more than two views) underwater 3D reconstruction has been evaluated quantitatively and systematically in [25]. Tong [38] used simulations and real experiments to analyze the influence of different refraction parameters and proposed measures to reduce it. However, because measurement errors are unavoidable, calibrated refraction parameters inevitably contain deviations. When the refraction-measurement model is used for refraction compensation, the impact of these parameter deviations on the measurement results therefore also merits study. In addition, underwater experiments are relatively difficult to conduct, so simulation experiments are a preferable means of performing such analyses. To the best of our knowledge, existing research does not provide specific algorithms for this kind of simulation analysis. To investigate the impact of refraction-parameter deviations on stereo-visual measurement under dual-media conditions, this paper first establishes a simulation model for stereo-visual measurement in the air–water scenario and then uses simulation experiments to analyze thoroughly the relationship between the stereo-visual measurement model and refraction-parameter deviations. The conclusions can provide guidance for the construction of underwater stereo-vision measurement systems, refraction-parameter calibration, underwater experiments, and practical applications.

The subsequent structure of the paper is arranged as follows. Section 2 introduces the light refraction geometry and measurement model for multi-media stereo vision, establishes a simulation algorithm for dual-media stereo-visual measurement and provides a simulation experimental design. The experimental results are presented and discussed in Section 3 and Section 4, respectively. Section 5 presents the conclusions.

2. Methods

2.1. Measurement Model

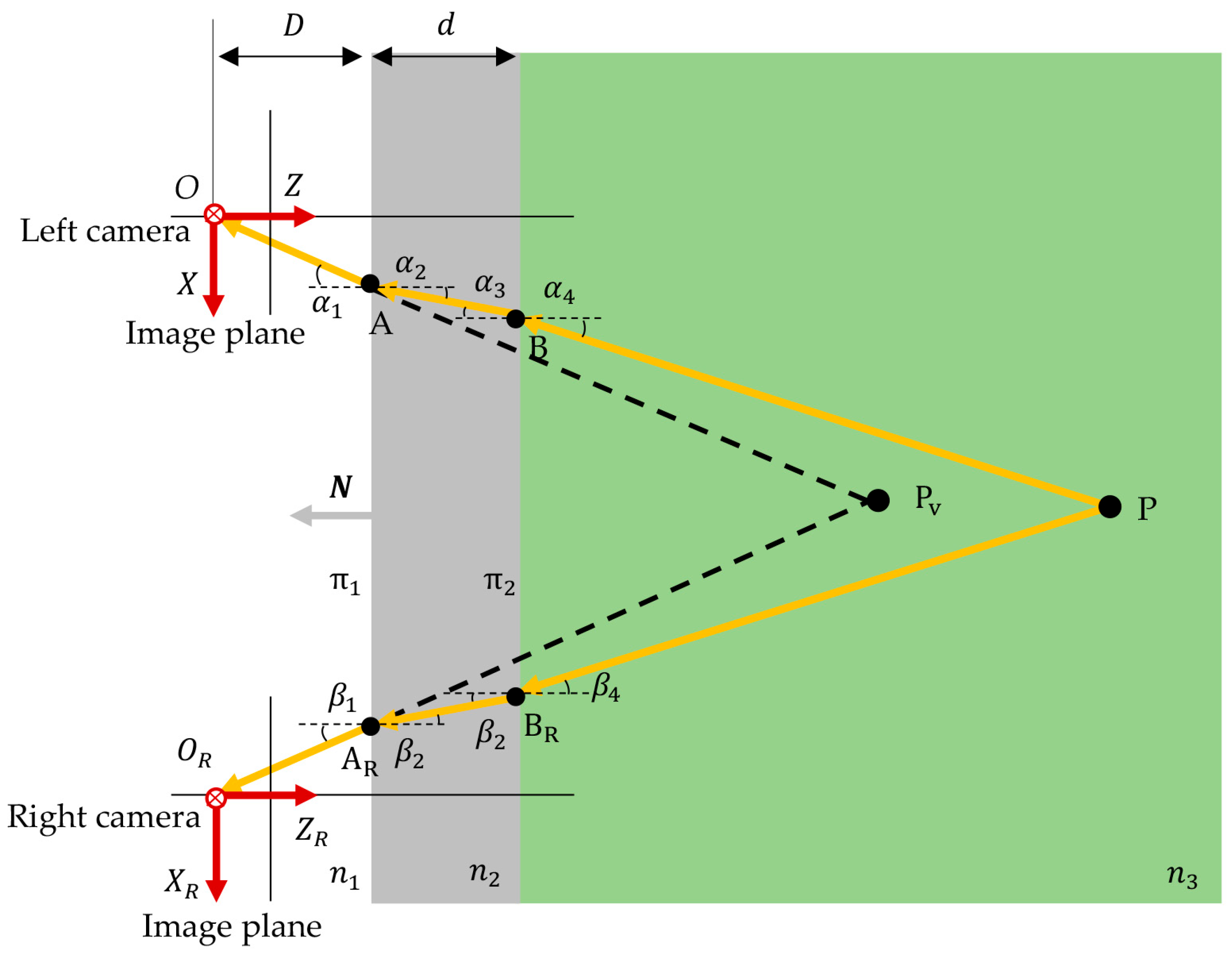

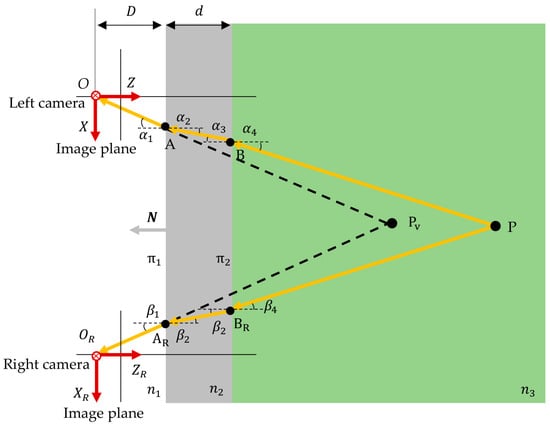

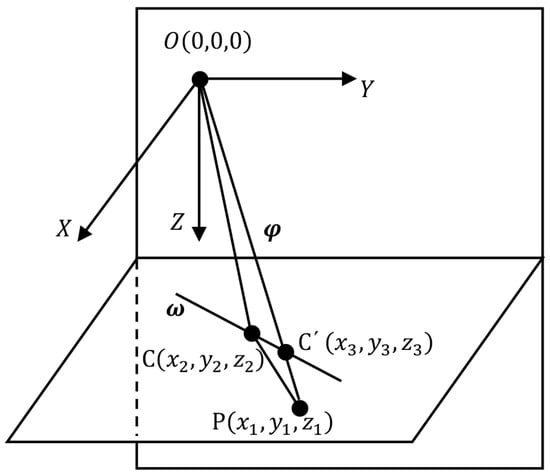

The stereo-vision measurement system includes left and right cameras. It is assumed that the cameras image the target through a transparent window in a watertight cabin. The transparent window is generally made of glass. The light reflected or emitted by the underwater target reaches the camera through three media and two refraction interfaces. Both interfaces are assumed to be planar and parallel to each other. The refraction geometry is shown in Figure 1.

Figure 1.

Refractive geometry for air–glass–water medium with two flat interfaces.

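The camera coordinate system of the left camera is used as the reference coordinate system, with the left optical center O at its origin. P is the underwater target point, with coordinates $P = (X_P, Y_P, Z_P)^T$. The two refraction interfaces are denoted $\pi_1$ (air–glass) and $\pi_2$ (glass–water). The distance from the left camera optical center to $\pi_1$ is D, and the thickness of the glass layer is d. Because of refraction, the optical paths between P and the cameras are not straight lines; according to Snell's law, they are the broken lines O–A–B–P and O′–A′–B′–P, where A and A′ lie on $\pi_1$ and B and B′ lie on $\pi_2$. The angles between the left ray and the interface normal in air, glass, and water are denoted $\theta_1$, $\theta_2$, and $\theta_3$, and the corresponding angles of the right ray are $\theta_1'$, $\theta_2'$, and $\theta_3'$. It is assumed that both cameras have been carefully calibrated. The camera coordinate system of the right camera has its origin at the optical center O′, whose coordinates in the reference coordinate system are $T = (t_x, t_y, t_z)^T$; T is the translation vector of the right camera with respect to the left camera.

The target point P is the intersection of lines BP and B′P, so once the equations of these two lines are known, P follows directly. The equations of BP and B′P are derived below [1].

After the two images are acquired and the target has been recognized and matched, a point $P_0$ is obtained by conventional (refraction-free) triangulation. The points O, A, and $P_0$ are collinear, as are O′, A′, and $P_0$. Because the coordinates of O, O′, and $P_0$ are known, the unit direction vectors of lines OA and O′A′ are

$$\mathbf{r}_{OA} = \frac{P_0}{\lVert P_0 \rVert}, \qquad \mathbf{r}_{O'A'} = \frac{P_0 - T}{\lVert P_0 - T \rVert} \tag{1}$$

Using the direction vector and a point on each line, the equations of lines OA and O′A′ are

$$X = s\,\mathbf{r}_{OA}, \qquad X = T + s'\,\mathbf{r}_{O'A'} \tag{2}$$

where s and s′ are scalar parameters. Points A and A′ are the intersections of lines OA and O′A′ with interface $\pi_1$. Assuming that the unit normal vector N of $\pi_1$ is known, the equation of $\pi_1$ can be written as

$$N^T X = D \tag{3}$$

Combining Equations (2) and (3), the coordinates of A and A′ are

$$A = \frac{D}{N^T \mathbf{r}_{OA}}\,\mathbf{r}_{OA}, \qquad A' = T + \frac{D - N^T T}{N^T \mathbf{r}_{O'A'}}\,\mathbf{r}_{O'A'} \tag{4}$$

From the vector inner product, the incidence angles $\theta_1$ and $\theta_1'$ are

$$\theta_1 = \arccos\left(N^T \mathbf{r}_{OA}\right), \qquad \theta_1' = \arccos\left(N^T \mathbf{r}_{O'A'}\right) \tag{5}$$

By Snell's law, with $n_a$ and $n_g$ the refractive indices of air and glass, the refraction angles $\theta_2$ and $\theta_2'$ are

$$\theta_2 = \arcsin\left(\frac{n_a}{n_g}\sin\theta_1\right), \qquad \theta_2' = \arcsin\left(\frac{n_a}{n_g}\sin\theta_1'\right) \tag{6}$$

For the left camera, lines OA and AB and the camera optical axis are coplanar (this holds when the interface normal N is parallel to the optical axis; in general, the plane of incidence is spanned by OA and N), so the unit normal vector of this plane is

$$\mathbf{n} = \frac{\mathbf{r}_{OA} \times \mathbf{z}}{\lVert \mathbf{r}_{OA} \times \mathbf{z} \rVert} \tag{7}$$

where $\mathbf{z}$ is the unit direction vector of the left camera optical axis. Using Rodrigues' rotation formula, the rotation matrix $R_1$ and the unit direction vector of line AB are

$$R_1 = \cos\varphi_1\,I + (1 - \cos\varphi_1)\,\mathbf{n}\mathbf{n}^T + \sin\varphi_1\,[\mathbf{n}]_\times, \quad \varphi_1 = \theta_1 - \theta_2, \qquad \mathbf{r}_{AB} = R_1\,\mathbf{r}_{OA} \tag{8}$$

where I is the 3 × 3 identity matrix and $[\mathbf{n}]_\times$ is the antisymmetric matrix generated by $\mathbf{n}$.

Taking the thickness of the glass layer into account, the coordinates of point B are

$$B = A + \frac{d}{\cos\theta_2}\,\mathbf{r}_{AB} \tag{9}$$

Because $\pi_1 \parallel \pi_2$, and analogously to Equations (6) and (8), the angle $\theta_3$ and the unit direction vector of line BP are

$$\theta_3 = \arcsin\left(\frac{n_g}{n_w}\sin\theta_2\right), \qquad \mathbf{r}_{BP} = R_2\,\mathbf{r}_{AB} \tag{10}$$

where $n_w$ is the refractive index of water and $R_2$ is given by Equation (8) with $\varphi_1$ replaced by $\varphi_2 = \theta_2 - \theta_3$. For line BP, the direction vector and a point on the line, B, are known; therefore, its equation is

$$X = B + t\,\mathbf{r}_{BP} \tag{11}$$

For the right camera, a similar derivation gives the equation of line B′P:

$$X = B' + t'\,\mathbf{r}_{B'P} \tag{12}$$

In theory, lines BP and B′P intersect at the point P to be determined. In practice, errors may cause the two lines not to intersect. Let H be a point on line BP and M a point on line B′P, and let Q be the vector from H to M:

$$Q = M - H = \left(B' + t'\,\mathbf{r}_{B'P}\right) - \left(B + t\,\mathbf{r}_{BP}\right) \tag{13}$$

where t and t′ are the line parameters. The aim is to minimize the length of Q. Of all segments joining two lines, the common perpendicular is the shortest, so Q must be perpendicular to both BP and B′P:

$$Q^T \mathbf{r}_{BP} = 0, \qquad Q^T \mathbf{r}_{B'P} = 0 \tag{14}$$

Solving Equation (14) gives t and t′, and hence H and M. The midpoint of H and M is taken as P:

$$P = \frac{H + M}{2} \tag{15}$$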
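The measurement model of Section 2.1 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses the vector form of Rodrigues' formula (equivalent to applying the rotation matrix of Equation (8)), it takes the plane of incidence from the interface normal N rather than from the optical axis (the two coincide when N is parallel to the optical axis), and the helper names `bend`, `water_line`, and `midpoint` are hypothetical.

```python
import math

# Minimal 3-vector helpers on tuples.
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))


def rotate(v, k, phi):
    """Vector form of Rodrigues' formula: rotate v about the unit axis k by phi."""
    c, s = math.cos(phi), math.sin(phi)
    return add(add(scale(v, c), scale(k, (1.0 - c) * dot(k, v))), scale(cross(k, v), s))


def bend(r, normal, n1, n2):
    """Bend unit ray r crossing a flat interface from index n1 into index n2.

    Returns the new unit direction and the refraction angle. r must not be
    parallel to the unit interface normal (the cross product would vanish)."""
    th1 = math.acos(max(-1.0, min(1.0, dot(r, normal))))   # incidence angle, cf. Eq. (5)
    th2 = math.asin(n1 * math.sin(th1) / n2)                # Snell's law, cf. Eq. (6)
    k = normalize(cross(r, normal))                         # plane-of-incidence normal
    return rotate(r, k, th1 - th2), th2                     # positive angle turns r toward normal


def water_line(O, P0, N, D, d, n_a, n_g, n_w):
    """Point B on pi_2 and unit direction of the water ray for a camera centred at O."""
    r_oa = normalize(sub(P0, O))                             # Eq. (1)
    A = add(O, scale(r_oa, (D - dot(N, O)) / dot(N, r_oa)))  # Eq. (4): ray meets N.X = D
    r_ab, th2 = bend(r_oa, N, n_a, n_g)                      # air -> glass
    B = add(A, scale(r_ab, d / math.cos(th2)))               # Eq. (9)
    r_bp, _ = bend(r_ab, N, n_g, n_w)                        # glass -> water, Eq. (10)
    return B, r_bp


def midpoint(B1, u, B2, v):
    """Midpoint of the common perpendicular of X = B1 + t*u and X = B2 + t'*v."""
    w = sub(B2, B1)
    a, b, c = dot(u, u), dot(u, v), dot(v, v)
    e1, e2 = dot(w, u), dot(w, v)
    den = b * b - a * c
    if abs(den) < 1e-15:
        raise ValueError("lines are (nearly) parallel")
    t = (b * e2 - c * e1) / den      # from Q.u = 0 and Q.v = 0, Eq. (14)
    tp = (a * e2 - b * e1) / den
    H, M = add(B1, scale(u, t)), add(B2, scale(v, tp))
    return scale(add(H, M), 0.5)     # Eq. (15)
```

Calling `water_line` once per camera (with O = (0, 0, 0) and with O = T) and passing both results to `midpoint` yields the estimate of P in Equation (15). Solving the 2 × 2 system of Equation (14) in closed form avoids any iterative search.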

The model is derived for a three-medium scenario, but with minor adjustments, it is equally applicable to a two-medium scenario.
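One such adjustment, assumed here for illustration, is to set the glass thickness d to zero. Writing $\theta_1$, $\theta_2$, $\theta_3$ for the angles between the ray and the interface normal in air, glass, and water, and $n_a$, $n_g$, $n_w$ for the three refractive indices, chaining Snell's law across both interfaces gives

$$d = 0 \;\Rightarrow\; B = A, \qquad n_w \sin\theta_3 = n_g \sin\theta_2 = n_a \sin\theta_1 \;\Rightarrow\; \theta_3 = \arcsin\left(\frac{n_a}{n_w}\sin\theta_1\right)$$

so the glass index drops out, and the two successive rotations by $\theta_1 - \theta_2$ and $\theta_2 - \theta_3$ about the same axis combine into a single rotation by $\theta_1 - \theta_3$, which is exactly one air–water refraction.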

2.2. Dual-Medium Simulation Model

The refraction parameters involved in the above measurement model include the distance from the camera to the refraction interface, the normal direction of the refraction interface, and the refractive indices of different mediums. Calibration errors of these parameters may impact the measurement results. Therefore, it is necessary to assess the impact of refractive parameter deviations on measurement results. However, conducting such evaluations through underwater experiments is relatively complex. In contrast, simulation experiments are more efficient. Consequently, this paper investigates a simulation model for underwater stereo-visual measurement. For simplicity, the camera is placed in the air and the target in the water, constituting an air–water scenario.

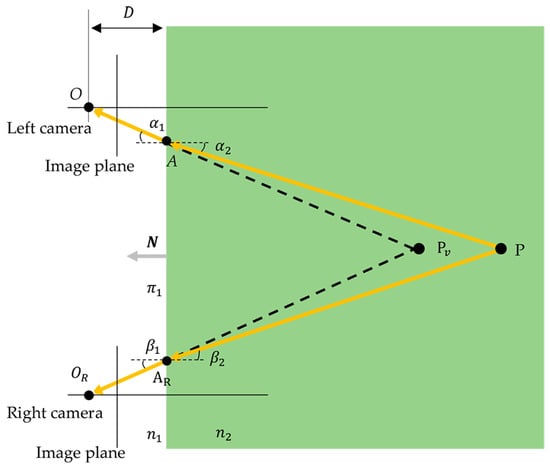

Taking the left camera coordinate system as the reference coordinate system. Given the coordinate of the target P is ; the distance from the left camera to the refractive interface is , and the normal vector of the refractive interface is N. The camera is in the air. The target is in the water. The refractive indices of air and water are and , respectively.

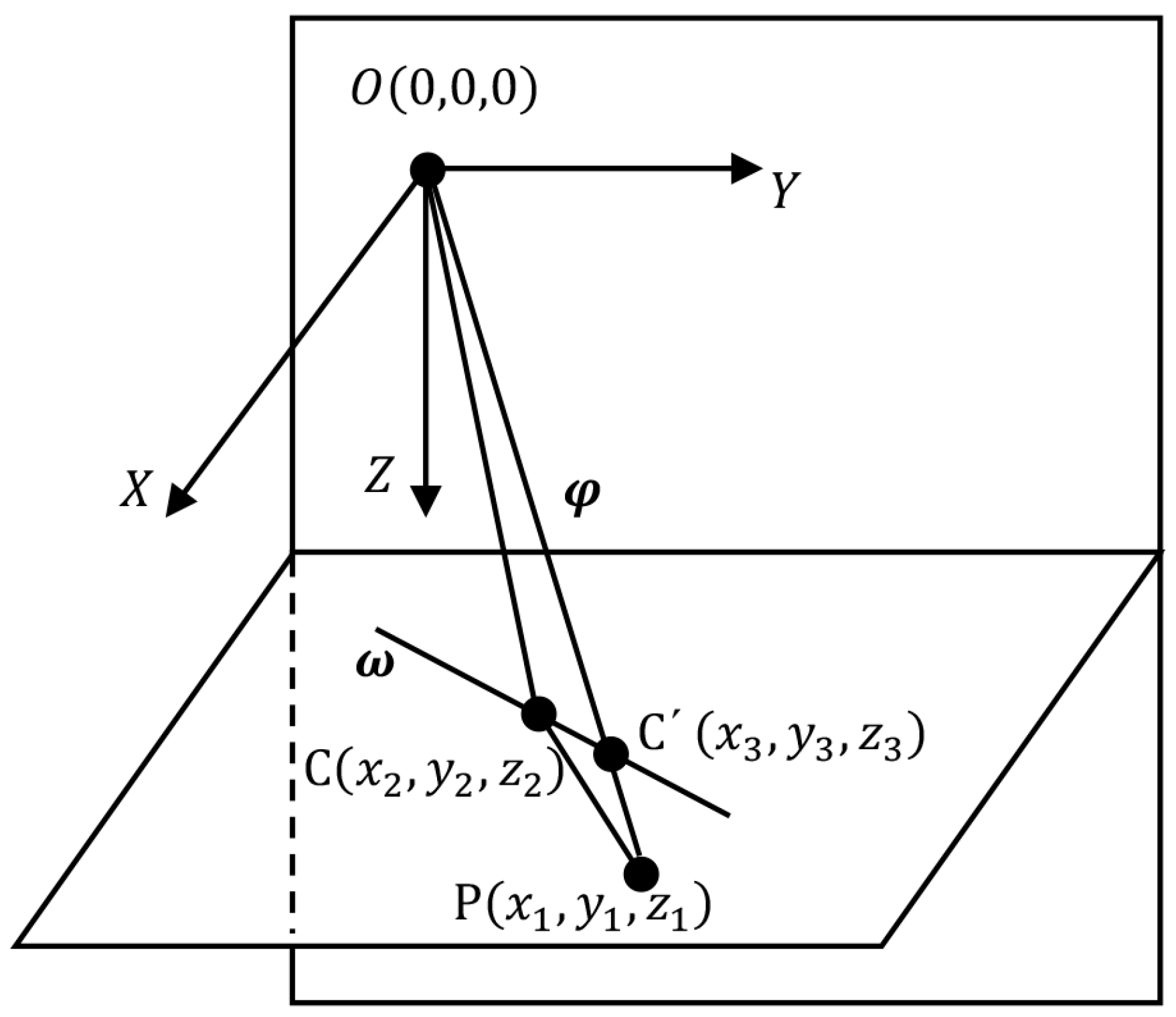

For the left camera, the refraction geometry is shown in Figure 2. represents the camera’s optical center. P is the target. C is the refraction point. C′ is the intersection of the line OP with the refractive interface. The objective is to calculate the coordinate of point C.

Figure 2.

Refracted light in the dual-medium scenario.

The direction vector of line OP is

The entire refracted light PC-CO is in a refraction plane. Since PC and CO are coplanar with the normal vector N of the refraction interface, the normal vector of the refraction plane is

where denotes the cross-product. The unit normal vector of the intersection CC′ of the refraction plane and the refractive interface is

Determine the coordinate of a point on line CC′, denoted as . Then, the line CC′ can be expressed as

where is the coefficient.

According to the relationship between refractive index and the speed of light, if the speed of light in vacuum is c, the speeds of light in the air and water are

Let the coordinate of point C be . The lengths of the two optical paths OC and CP are

According to the relationship between speed, time, and distance, the propagation time of the light between O and P is

The refraction point C is a point that satisfies Equation (19). When all refraction parameters are known, Equation (22) is a function of coefficient . According to Fermat’s principle, the actual path of light passing between two fixed points in space is always the shortest optical path (or propagation time). So, the derivative of is equal to 0, which is

Solving the above formula, we can get the value of . Combining it with Equation (19), the coordinate of point C can be obtained. So, the complete propagation path of the light is established. For the right camera, the simulation process is similar, the only difference is that in Equation (21) is transformed into the following form

where is the coordinate of the origin of the right camera coordinate system in the reference coordinate system.
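The refraction-point computation can be sketched as follows, assuming the interface is written as $N^T X = D$ with the unit normal N pointing from the air into the water. Because τ(λ) is a sum of distances to a point that moves linearly with λ, it is convex, so Equation (23) can be solved by bisection on dτ/dλ. This root-finding choice and the function name `refraction_point` are our own illustration, not taken from the source.

```python
import math

def dot(a, b): return sum(x * y for x, y in zip(a, b))
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])
def norm(a): return math.sqrt(dot(a, a))
def normalize(a): return scale(a, 1.0 / norm(a))


def refraction_point(O, P, N, D, n_a, n_w, iters=100):
    """Refraction point C on the plane N.X = D for light travelling O (air) -> C -> P (water).

    Assumes N is a unit normal pointing from the air side into the water,
    with O on the air side (N.O < D) and P on the water side (N.P > D)."""
    r_op = normalize(sub(P, O))                               # Eq. (16)
    c0 = add(O, scale(r_op, (D - dot(N, O)) / dot(N, r_op)))  # C', a known point on CC'
    m = cross(r_op, N)                                        # Eq. (17), unnormalized
    if norm(m) < 1e-12:            # OP is along the normal: the ray is not bent
        return c0
    u = normalize(cross(m, N))                                # Eq. (18)

    def dtau(lam):
        """c * d(tau)/d(lambda) for C = c0 + lam * u (Eqs. (19)-(22))."""
        C = add(c0, scale(u, lam))
        return (n_a * dot(u, sub(C, O)) / norm(sub(C, O))
                - n_w * dot(u, sub(P, C)) / norm(sub(P, C)))

    # C lies within |P - O| of C', and tau is convex, so dtau is non-decreasing
    # on this bracket: bisect for dtau = 0 (Eq. (23)).
    lo, hi = -norm(sub(P, O)), norm(sub(P, O))
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if dtau(mid) > 0:
            hi = mid
        else:
            lo = mid
    return add(c0, scale(u, 0.5 * (lo + hi)))
```

For the right camera, the same function is called with O = T, which corresponds to Equation (24). A quick sanity check is that the returned point satisfies $n_a \sin\theta_a = n_w \sin\theta_w$ for the angles of OC and CP with N.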

The pixel coordinates of the target are then obtained from the coordinates of C and the camera parameters using the perspective imaging model, which completes the simulation of the imaging process.
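The perspective imaging step can be illustrated with an ideal pinhole camera. The sketch below assumes no lens distortion or skew, intrinsics given as (fx, fy, cx, cy), and a rotation R that maps reference-frame vectors into the camera frame; `project` and `back_project` are hypothetical helpers, and the refraction point C (not P) is what gets projected, because the last straight segment of the ray runs from C to the optical center.

```python
import math


def project(K, R, T, X):
    """Pixel coordinates of reference-frame point X for an ideal pinhole camera.

    K = (fx, fy, cx, cy); T is the camera centre in the reference frame and
    R rotates reference-frame vectors into the camera frame (assumed convention)."""
    fx, fy, cx, cy = K
    xc = [sum(R[i][j] * (X[j] - T[j]) for j in range(3)) for i in range(3)]
    return (fx * xc[0] / xc[2] + cx, fy * xc[1] / xc[2] + cy)


def back_project(K, R, uv):
    """Unit ray direction (reference frame) from the camera centre through pixel uv."""
    fx, fy, cx, cy = K
    d_cam = ((uv[0] - cx) / fx, (uv[1] - cy) / fy, 1.0)
    d = [sum(R[j][i] * d_cam[j] for j in range(3)) for i in range(3)]  # R^T * d_cam
    n = math.sqrt(sum(x * x for x in d))
    return tuple(x / n for x in d)
```

For the left camera, R is the identity and T = (0, 0, 0); for the right camera, T is the translation vector of Section 2.1. Because the pixels are computed exactly, `back_project` recovers the direction from the optical center to C without error, which is why the simulated pixel coordinates contain no noise.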

2.3. Simulation Experimental Design

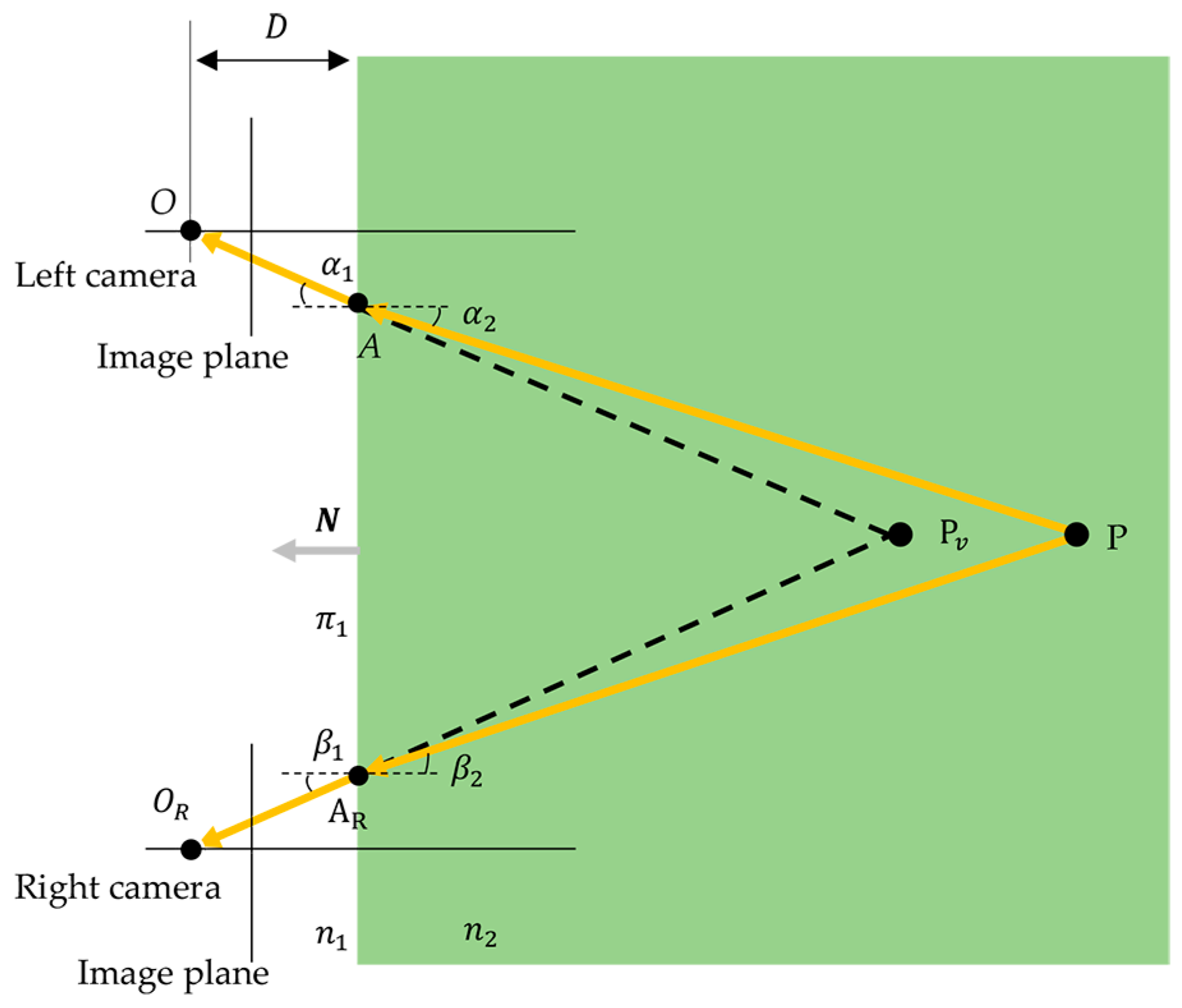

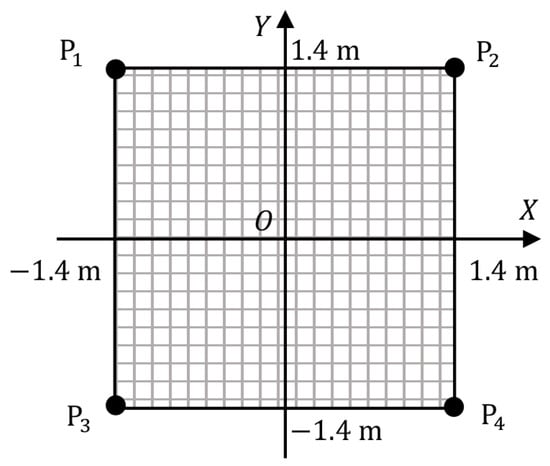

The purpose of the simulation experiments is to evaluate the impact of refraction-parameter deviations in the measurement model on the results and to provide guidance for calibrating the refraction parameters and constructing the measurement system. The cameras are placed in air and the target in water, as shown in Figure 3. The intrinsic and extrinsic parameters of the left and right cameras are known, and the two cameras share the same refraction interface. The refraction parameters that affect the measurement model are the distance D between the left camera and the refraction interface, the refractive indices $n_a$ and $n_w$ of the media, and the refraction interface normal N. Because, by Snell's law, the refraction angle depends only on the ratio of the two refractive indices, the simulation experiments consider this relative refractive index, denoted $n_r$, rather than the two indices separately.

Figure 3.

Air–water dual-medium visual-measurement scenario with one flat interface.

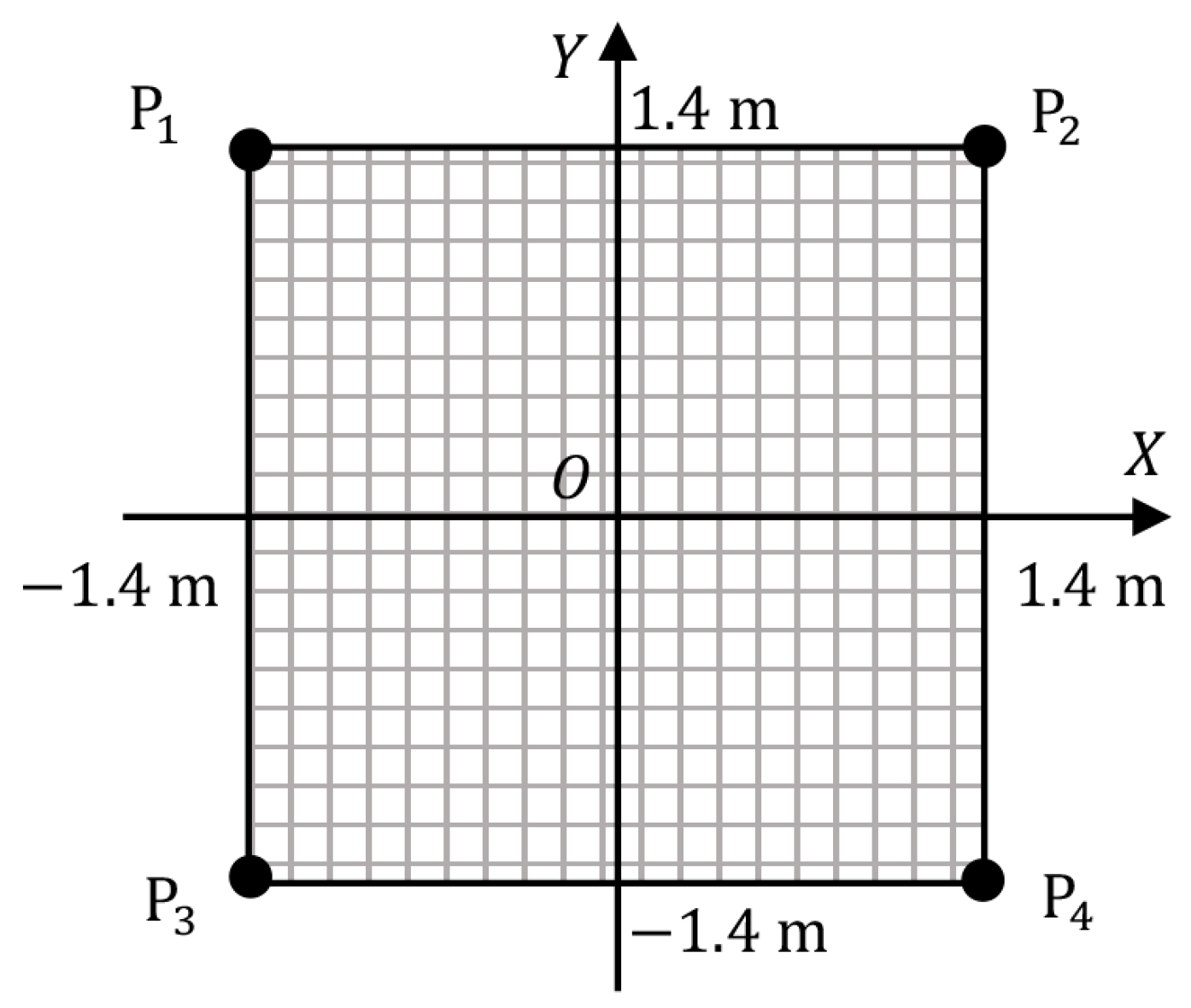

The left camera coordinate system is taken as the reference coordinate system. We simulated a square target area whose distribution in the XOY plane of the left camera coordinate system is shown in Figure 4. The Z coordinate of the square area can take different values.

Figure 4.

Target area and four feature points.

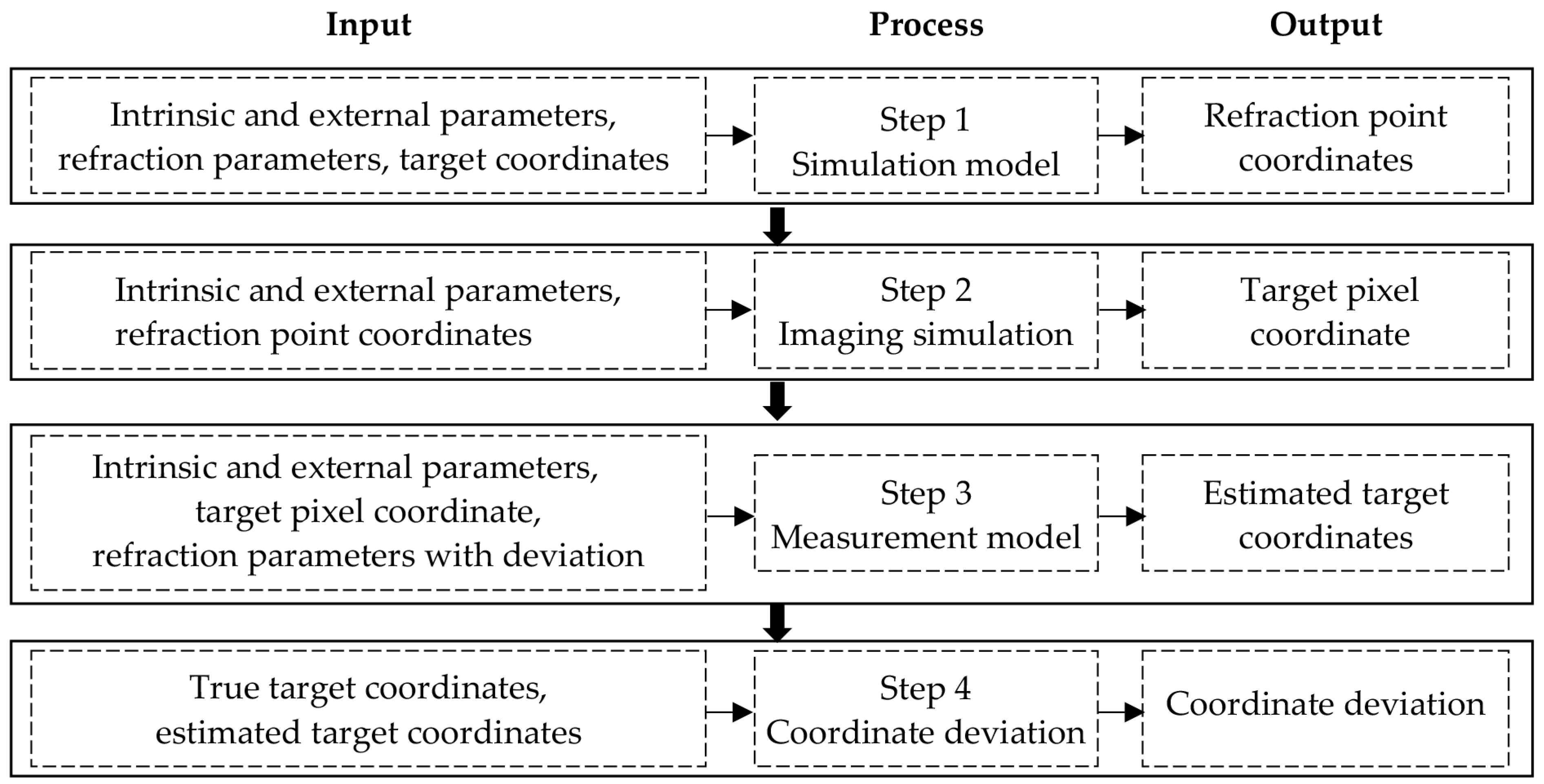

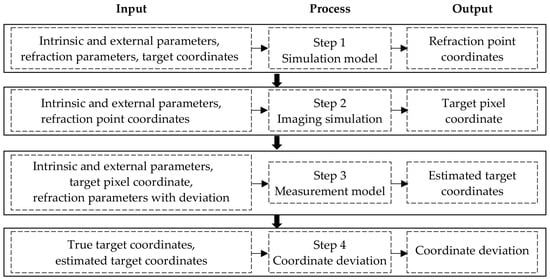

The intrinsic and extrinsic parameters of the left and right cameras and the target coordinates are known. The true values of the refraction parameters are set as shown in Table 1, and the simulation process is shown in Figure 5. The experiments consist of four steps. (1) Based on the camera parameters, the deviation-free refraction parameters, and the target coordinates, the simulation model of Section 2.2 is used to compute the coordinates of the refraction point. (2) Based on the camera parameters and the refraction point coordinates, the corresponding pixel coordinates are obtained using the perspective imaging model. (3) Based on the camera parameters, the target pixel coordinates, and refraction parameters containing a given deviation, the refraction-measurement model of Section 2.1 is used to estimate the target coordinates. (4) The deviation between the estimated and true target coordinates is calculated. The true coordinate of the target is $P = (X, Y, Z)^T$, the estimate from step 3 is $\hat{P} = (\hat{X}, \hat{Y}, \hat{Z})^T$, and the coordinate deviations between them are denoted ΔX, ΔY, and ΔZ, respectively.

Table 1.

True values of the refraction parameters.

Figure 5.

Simulation process.

The total coordinate deviation is $\Delta P = \sqrt{\Delta X^2 + \Delta Y^2 + \Delta Z^2}$. Because the pixel coordinates of the target are obtained through the imaging model in step 2, they contain no errors. In step 3, a deviation is added to the refraction parameters, one parameter at a time. Three experiments were conducted. Experiment 1 examined the sensitivity of the measurement model to the refraction parameters. Experiment 2 analyzed the influence of a fixed refraction-parameter deviation on targets at different distances. Experiment 3 studied how the measurement results for fixed targets change with the refraction-parameter deviation.
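The four steps can be condensed into a short end-to-end sketch for the air–water case. It is an illustration under several assumptions, not the authors' code: the cameras are ideal and noise-free, so steps 2 and 3 reduce to passing the ray direction O→C directly (the pixel round trip is exact in that case); rays are refracted with the vector form of Snell's law instead of the Rodrigues formulation of Section 2.1 (the two agree for a flat interface); the air index is taken as 1, so the hypothetical parameter `n_rel` equals $n_w/n_a$; and the normal is tilted about a single axis rather than rotating three attitude angles. All numeric values (D = 0.5 m, a 0.3 m baseline, the target position) are hypothetical and are not the values of Table 1, so the printed deviations will not reproduce Figures 6–8.

```python
import math

def dot(a, b): return sum(x * y for x, y in zip(a, b))
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])
def norm(a): return math.sqrt(dot(a, a))
def normalize(a): return scale(a, 1.0 / norm(a))


def refraction_point(O, P, N, D, n_rel):
    """Step 1 (forward model, Sec. 2.2): refraction point on N.X = D, air index taken as 1."""
    r = normalize(sub(P, O))
    c0 = add(O, scale(r, (D - dot(N, O)) / dot(N, r)))
    m = cross(r, N)
    if norm(m) < 1e-12:
        return c0
    u = normalize(cross(m, N))

    def dtau(lam):  # derivative of the optical path length along CC'
        C = add(c0, scale(u, lam))
        return dot(u, sub(C, O)) / norm(sub(C, O)) - n_rel * dot(u, sub(P, C)) / norm(sub(P, C))

    lo, hi = -norm(sub(P, O)), norm(sub(P, O))
    for _ in range(100):  # bisection on the non-decreasing derivative
        mid = 0.5 * (lo + hi)
        lo, hi = (lo, mid) if dtau(mid) > 0 else (mid, hi)
    return add(c0, scale(u, 0.5 * (lo + hi)))


def water_ray(O, r, N, D, n_rel):
    """Step 3 (measurement model): meet the interface, then refract with vector Snell's law."""
    A = add(O, scale(r, (D - dot(N, O)) / dot(N, r)))
    eta, cos_i = 1.0 / n_rel, dot(N, r)
    cos_t = math.sqrt(1.0 - eta * eta * (1.0 - cos_i * cos_i))
    return A, normalize(add(scale(r, eta), scale(N, cos_t - eta * cos_i)))


def midpoint(B1, u, B2, v):
    """Midpoint of the common perpendicular of two lines (Eqs. (13)-(15))."""
    w = sub(B2, B1)
    a, b, c, e1, e2 = dot(u, u), dot(u, v), dot(v, v), dot(w, u), dot(w, v)
    den = b * b - a * c
    H = add(B1, scale(u, (b * e2 - c * e1) / den))
    M = add(B2, scale(v, (a * e2 - b * e1) / den))
    return scale(add(H, M), 0.5)


def measure(P, T, truth, est):
    """Steps 1-4 with ideal cameras; returns the estimate of P and the total deviation."""
    lines = []
    for O in ((0.0, 0.0, 0.0), T):
        C = refraction_point(O, P, truth["N"], truth["D"], truth["n_rel"])  # step 1
        r = normalize(sub(C, O))  # steps 2-3: an ideal pixel encodes exactly this ray
        lines.append(water_ray(O, r, est["N"], est["D"], est["n_rel"]))    # step 3
    (B1, u), (B2, v) = lines
    P_hat = midpoint(B1, u, B2, v)
    return P_hat, norm(sub(P_hat, P))  # step 4


# Hypothetical set-up (not the values of Table 1).
truth = {"N": (0.0, 0.0, 1.0), "D": 0.5, "n_rel": 1.333}
T = (0.3, 0.0, 0.0)
P = (0.5, 0.5, 5.0)
tilt = math.radians(0.195)
cases = {
    "n_rel x 0.99843": dict(truth, n_rel=truth["n_rel"] * 0.99843),
    "D x 0.805": dict(truth, D=truth["D"] * 0.805),
    "N tilted 0.195 deg about y": dict(truth, N=(math.sin(tilt), 0.0, math.cos(tilt))),
}
for name, est in cases.items():
    _, dev = measure(P, T, truth, est)
    print(f"{name}: total deviation {dev * 1000:.2f} mm")
```

With deviation-free parameters the estimate coincides with P up to rounding, which is a useful check that the forward and inverse models are consistent before any deviation is introduced.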

3. Results

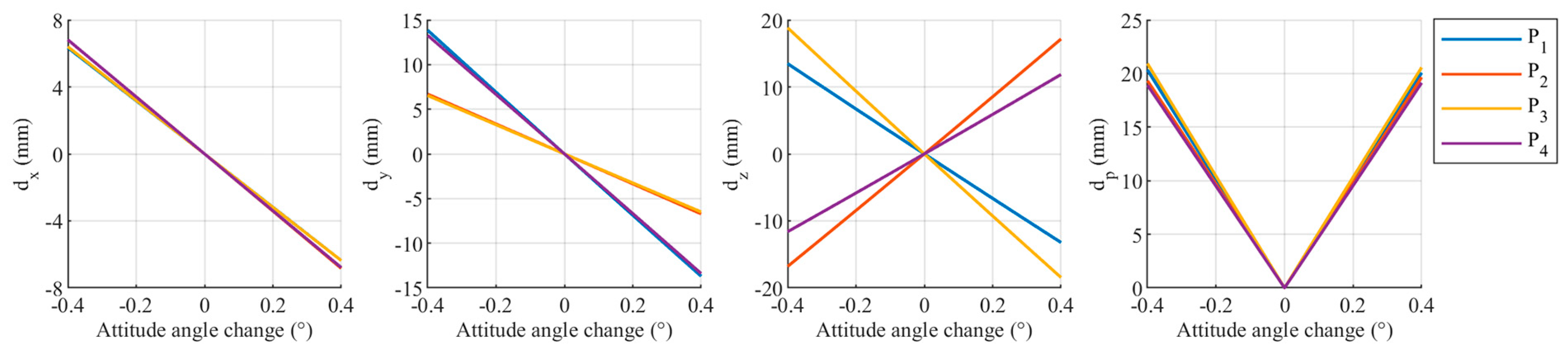

3.1. Experiment 1

The aim of this experiment is to analyze the sensitivity of the dual-medium visual-measurement model to deviations in the refraction parameters. All targets lie on the same plane at a Z distance of 5 m, as shown in Figure 4. When deviations are intentionally introduced into the refraction parameters, the measured target coordinates differ from their true values. Deviations were introduced into each refraction parameter in turn and increased until the maximum ΔP reached 1 cm.

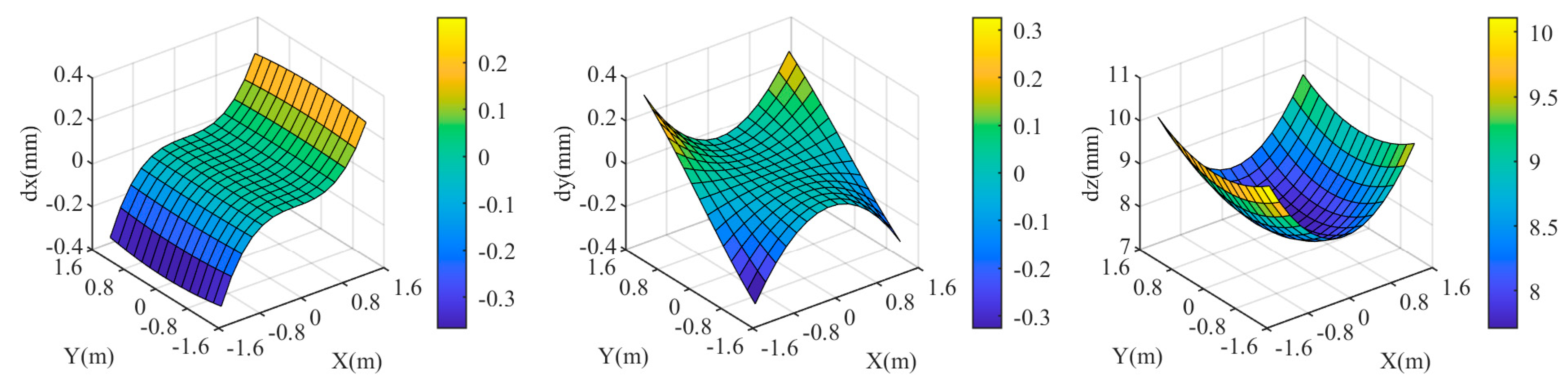

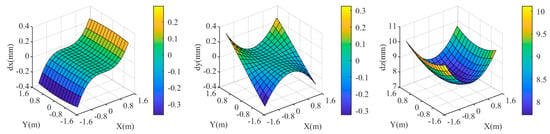

First, consider a deviation in the relative refractive index, whose true value is denoted $n_r$. When it changes to $0.99843\,n_r$, the maximum ΔP is 10 mm, as shown in Figure 6. In this case, the discrepancies in the X and Y components are minimal (less than 0.5 mm), and ΔP depends primarily on ΔZ. A relative refractive index deviation therefore mainly causes Z-coordinate deviation. The maximum coordinate discrepancy occurs at the four corners of the area in Figure 4.

Figure 6.

Coordinate deviation of the target area at Z = 5 m when the relative refractive index is $0.99843\,n_r$.

Next, consider a deviation in the distance from the camera to the refractive interface, whose true value is D. When the distance changes to 0.805D, the maximum ΔP is 10 mm, as shown in Figure 7. This deviation has little impact on the X and Y coordinate components of the target (less than 1 mm); it mainly causes discrepancies in the Z component, and ΔP depends primarily on ΔZ. The maximum coordinate discrepancy occurs at the four corners of the area in Figure 4.

Figure 7.

Coordinate deviation of the target area at Z = 5 m when the distance is 0.805D.

Finally, consider a deviation in the normal direction of the refractive surface. In theory, the refractive surface normal is parallel to the optical axis of the left camera. The normal direction deviation is represented by rotating all three attitude angles simultaneously by the same angle. When the rotation angle is 0.195°, the maximum ΔP is 10 mm, as shown in Figure 8. Deviations in the normal direction have a significant impact on all three coordinate components of the targets, especially X and Y. The extent of the impact varies across the target area: points closer to the center are less affected, and points closer to the four corners are more affected.

Figure 8.

Coordinate deviation of the target area at Z = 5 m when the normal direction of the refractive surface is rotated by 0.195°.

Figures 6–8 together show that the dual-medium stereo-vision measurement model is highly sensitive to deviations in the relative refractive index: a deviation of only 0.157% produces a maximum total coordinate deviation of 10 mm in the target area, whereas the same 10 mm requires a 19.5% deviation in the camera-to-interface distance or a 0.195° rotation of the interface normal. The model is therefore less sensitive to deviations in the distance from the camera to the refractive interface and in the normal direction of the interface. In underwater experiments and practical applications, special attention must therefore be paid to calibrating the refractive indices of the different media.
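The sensitivity comparison can be made concrete with simple arithmetic on the Experiment 1 values. Expressing each deviation as a percentage of its true value and dividing the 10 mm maximum ΔP by it is our own normalization for illustration, not a metric used in the source; the 0.195° normal rotation has no natural percentage and is left out.

```python
# Experiment 1: deviation that drives the maximum total deviation to 10 mm at Z = 5 m.
max_dev_mm = 10.0
n_rel_dev_pct = (1.0 - 0.99843) * 100.0   # relative refractive index: 0.99843 of its true value
D_dev_pct = (1.0 - 0.805) * 100.0         # camera-to-interface distance: 0.805 D

mm_per_pct_n = max_dev_mm / n_rel_dev_pct
mm_per_pct_D = max_dev_mm / D_dev_pct
ratio = mm_per_pct_n / mm_per_pct_D
print(f"{mm_per_pct_n:.1f} mm per % (n_r), {mm_per_pct_D:.2f} mm per % (D), ratio {ratio:.0f}")
# prints: 63.7 mm per % (n_r), 0.51 mm per % (D), ratio 124
```

Per percent of deviation, the relative refractive index is roughly two orders of magnitude more influential than the camera-to-interface distance under this normalization.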

3.2. Experiment 2

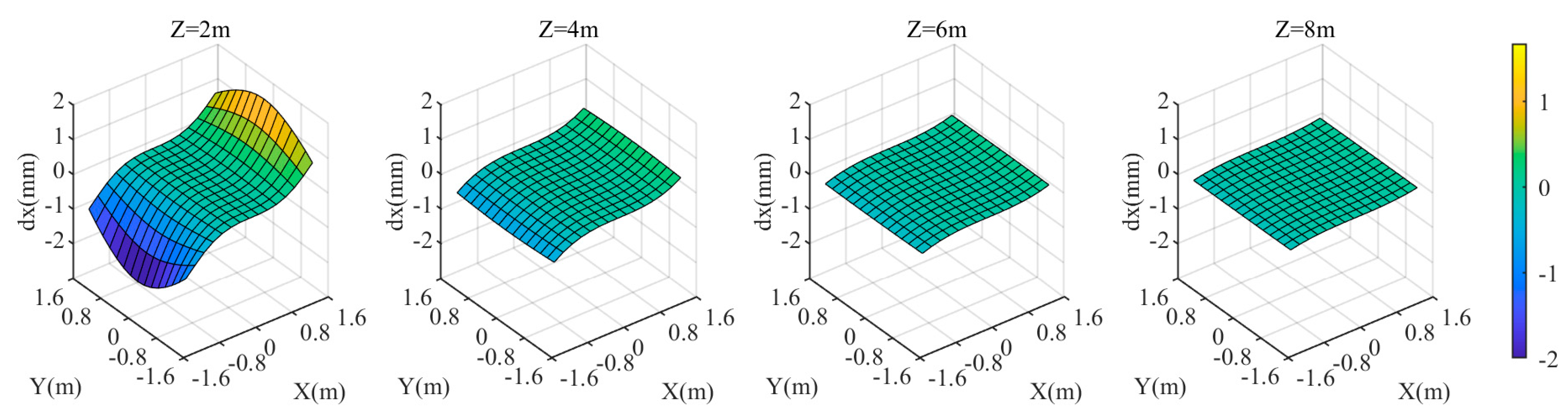

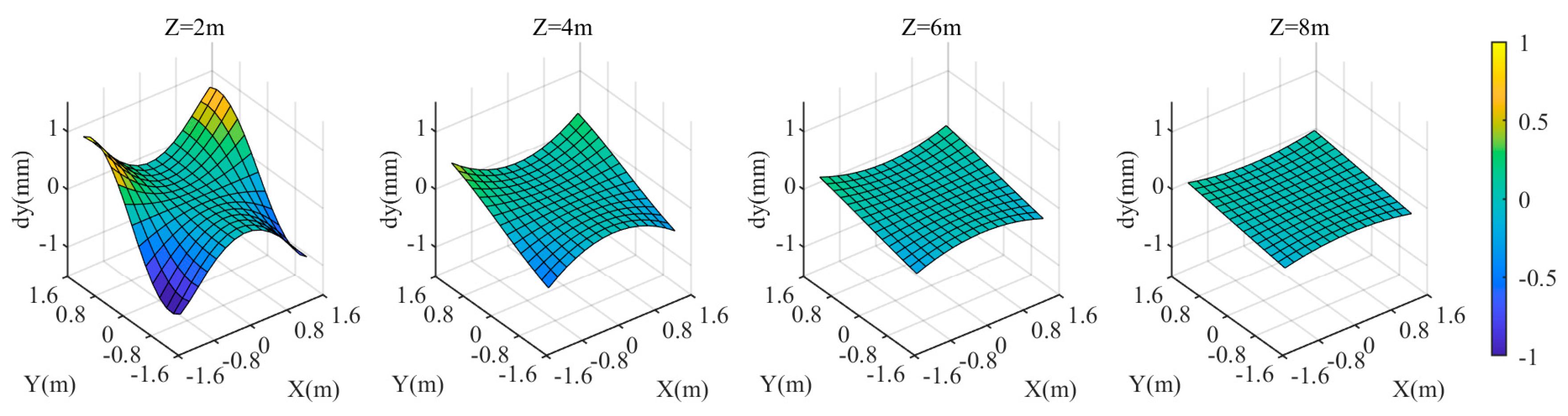

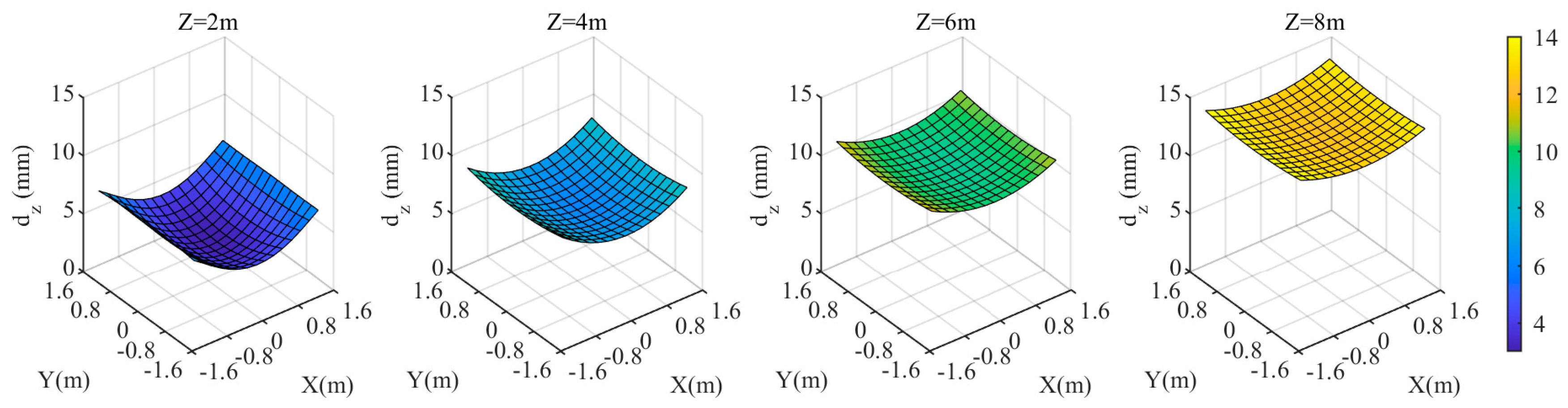

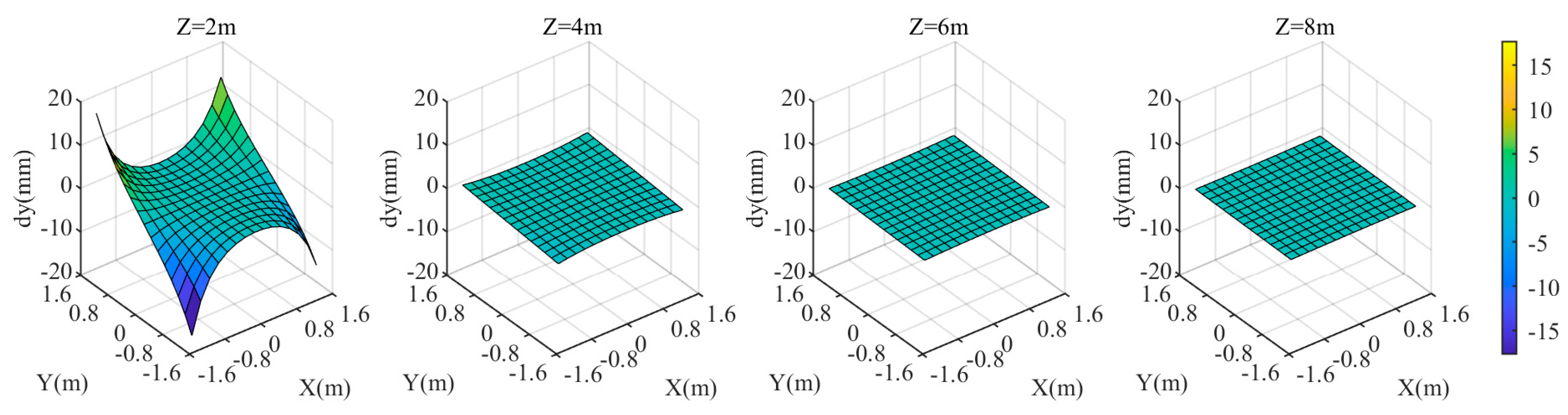

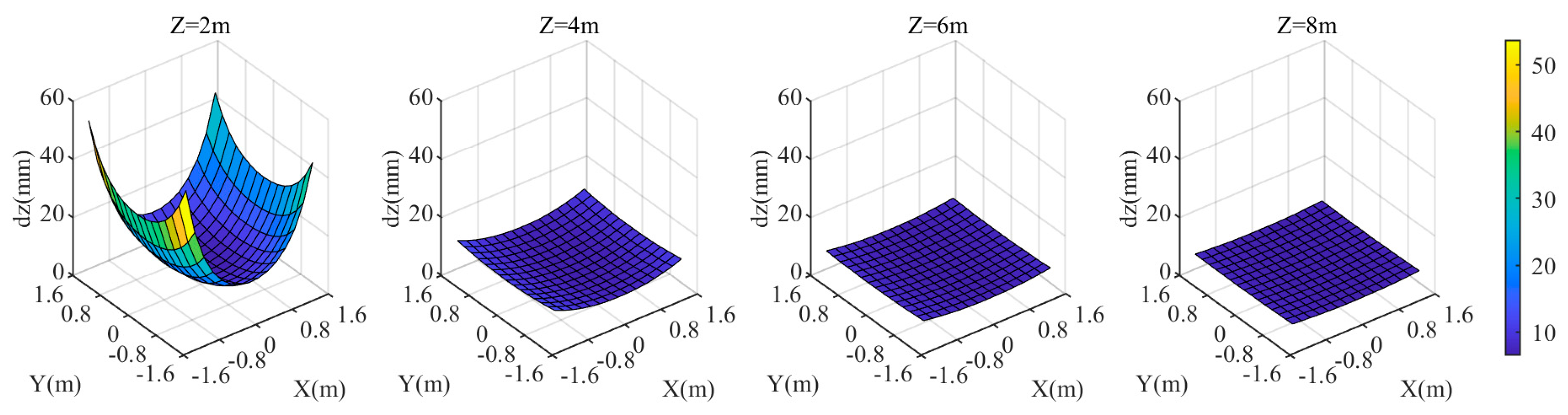

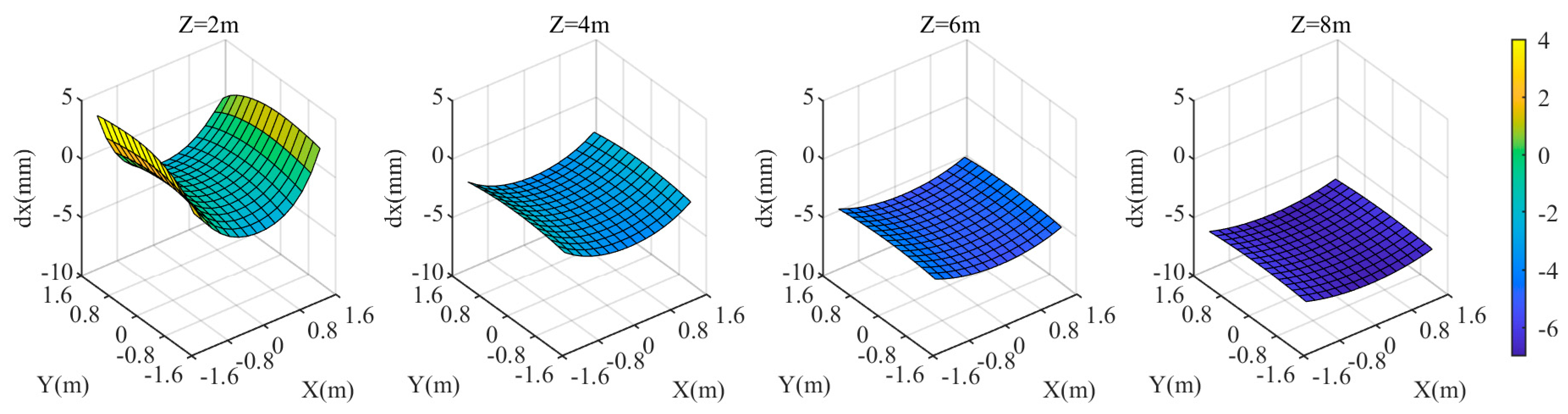

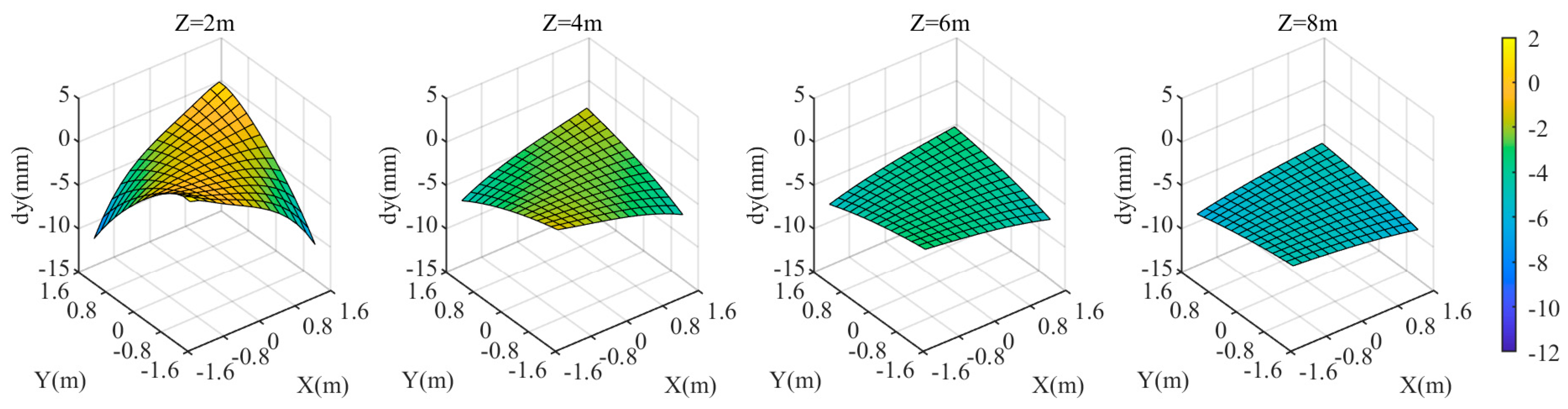

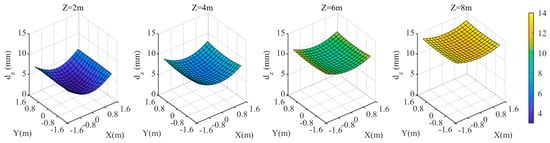

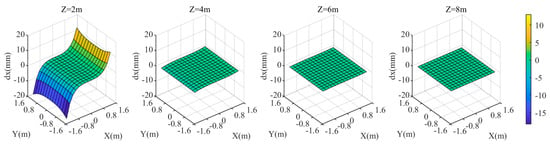

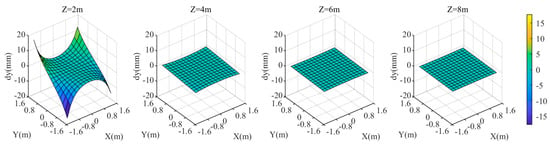

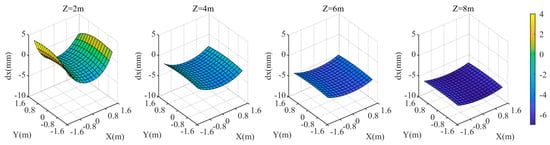

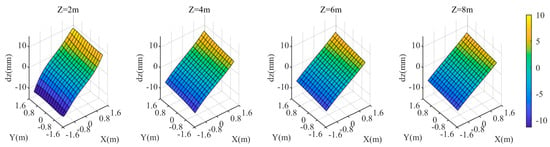

In Experiment 1, the target area was fixed at Z = 5 m. However, with the same refraction-parameter deviations, the target coordinate deviations may differ as the distance between the target and the camera varies. Four Z values were therefore set at 2 m intervals from 2 m to 8 m. Deviations were introduced into each refraction parameter in turn: the relative refractive index was changed to $0.99843\,n_r$, the distance from the camera to the refractive interface was changed to 0.805D, and the refractive surface normal was rotated by 0.195°.

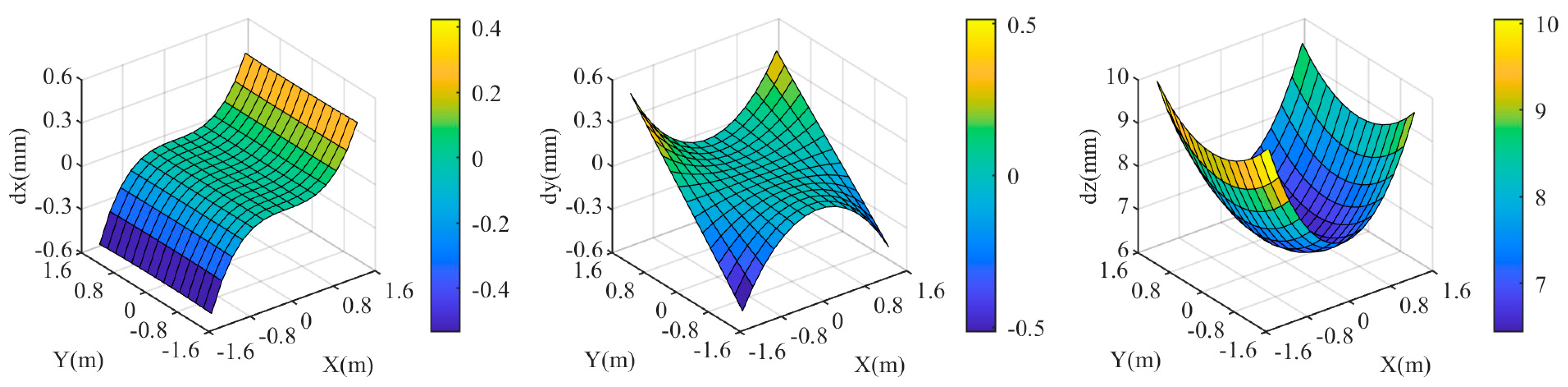

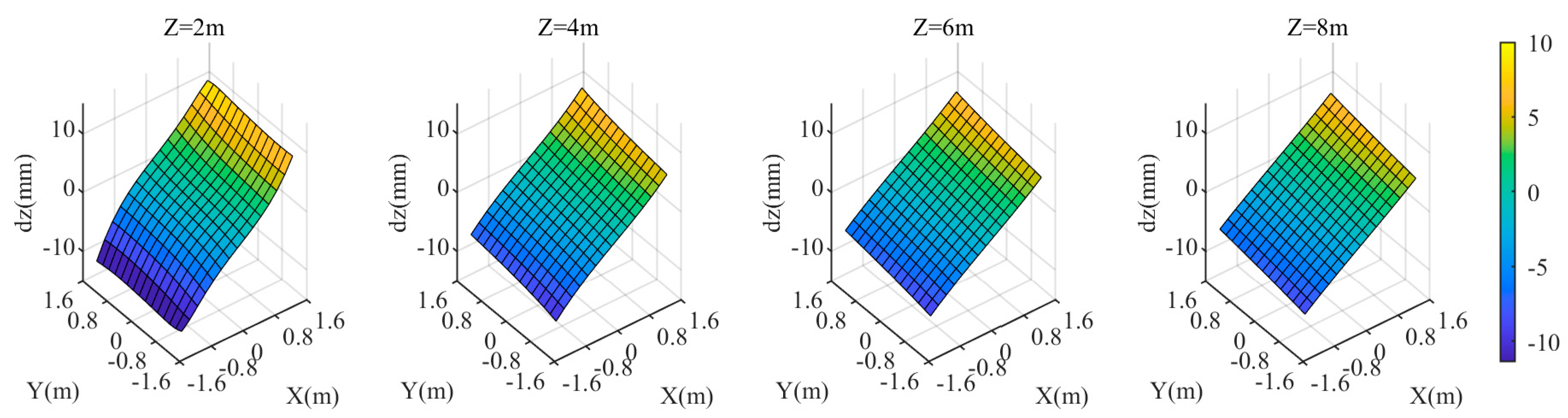

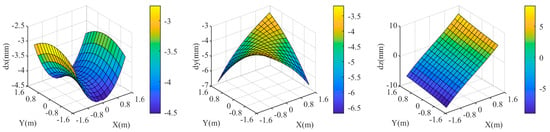

3.2.1. Target Plane

When the relative refractive index is $0.99843\,n_r$, the coordinate discrepancies of the three components in the target area are shown in Figure 9, Figure 10 and Figure 11. For all Z values, ΔX and ΔY remain relatively small, although they grow as Z decreases; at Z = 2 m, their maximum values are approximately ±2 mm and ±1 mm, respectively. The Z component, ΔZ, follows a markedly different pattern: it grows with Z. At Z = 2 m, ΔZ is smallest, with a maximum of approximately 7.4 mm at the edge of the target area; at Z = 8 m, it is largest, with both its maximum and minimum over the target area at approximately 14 mm. Since ΔZ is significantly larger than ΔX and ΔY, for a constant relative refractive index deviation, the closer the target is to the camera, the smaller the resulting ΔP, and the closer the target is to the center of the region, the smaller its ΔP.

Figure 9.

X-coordinate deviation of the target area at different distances from the camera when the relative refractive index is $0.99843\,n_r$.

Figure 10.

Y-coordinate deviation of the target area at different distances from the camera when the relative refractive index is $0.99843\,n_r$.

Figure 11.

Z-coordinate deviation of the target area at different distances from the camera when the relative refractive index is $0.99843\,n_r$.

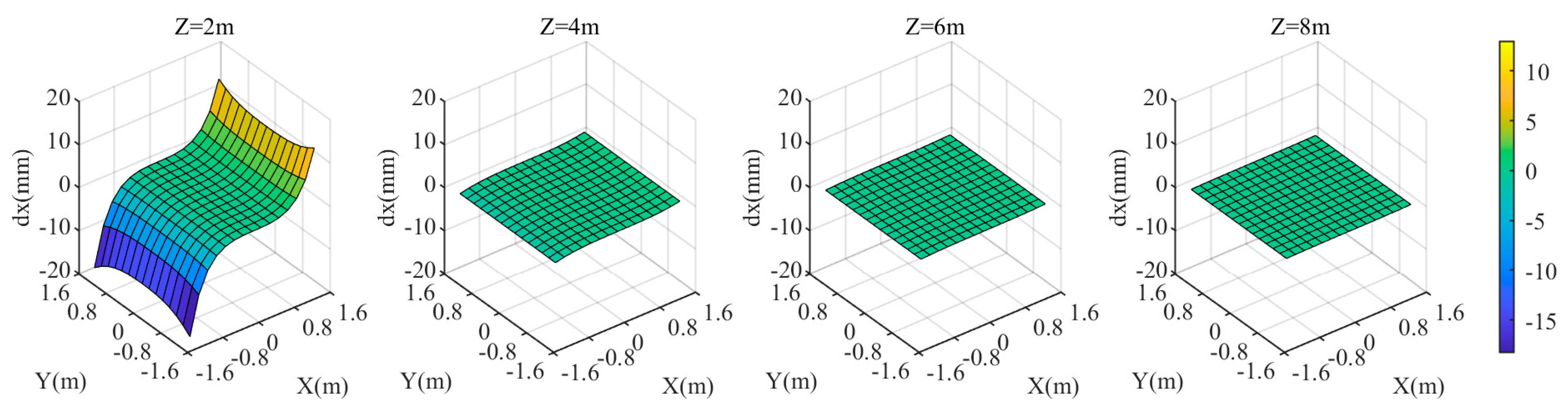

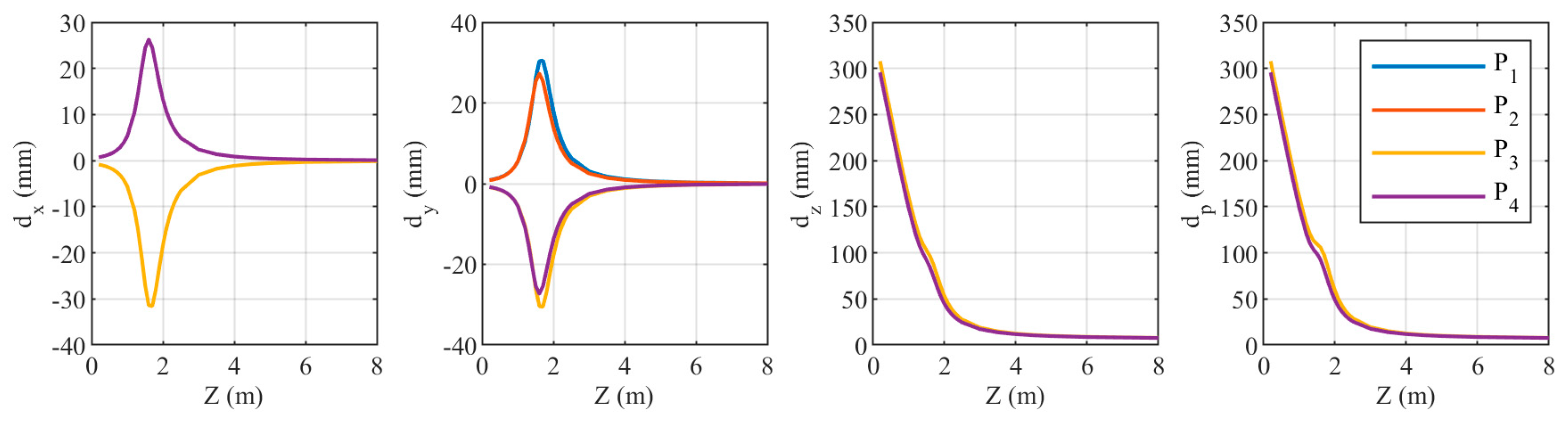

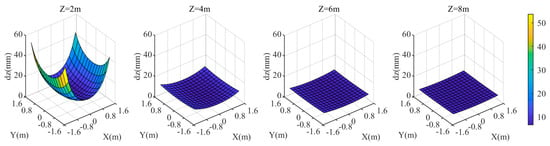

When the distance from the camera to the refractive interface is 0.805D, the coordinate discrepancies of the three components in the target area are shown in Figure 12, Figure 13 and Figure 14. For Z greater than 4 m, ΔX, ΔY, and ΔZ gradually decrease as Z increases. The maximum values of ΔX and ΔY reach approximately ±18 mm, and the maximum value of ΔZ reaches approximately 54 mm, at the four corners of the area. Overall, the farther the target plane is from the camera, the smaller ΔX, ΔY, and ΔZ, and hence the smaller ΔP. In addition, the closer the target is to the center of the area, the smaller its ΔP.

Figure 12.

X-coordinate deviation of the target area at different distances from the camera when the distance is 0.805D.

Figure 13.

Y-coordinate deviation of the target area at different distances from the camera when the distance is 0.805D.

Figure 14.

Z-coordinate deviation of the target area at different distances from the camera when the distance is 0.805D.

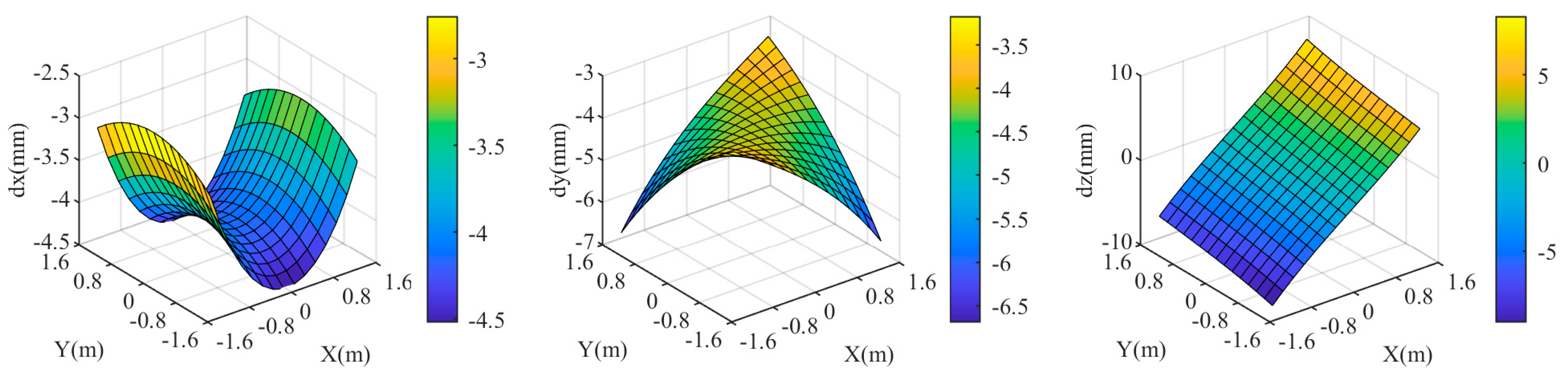

When all three attitude angles of the refractive interface normal change by 0.195°, the coordinate discrepancies of the three components in the target area are shown in Figure 15, Figure 16 and Figure 17. As Z increases from 2 m to 8 m, ΔX changes from positive to negative and its absolute value generally increases. At Z = 2 m, ΔX varies noticeably across the target area, decreasing toward the center; as Z increases, this variation gradually diminishes, and at Z = 8 m, ΔX reaches −7 mm. ΔY shows a similar trend: the larger the Z value, the greater its absolute value, and at Z = 2 m, it varies noticeably across the target area, decreasing toward the center. The dependence of ΔZ on Z is less pronounced: as Z increases from 2 m to 8 m, ΔZ decreases slightly, but it differs noticeably among points in the target area.

Figure 15.

X-coordinate deviation of the target area at different distances from the camera when the normal direction of the refractive surface is rotated by 0.195°.

Figure 16.

Y-coordinate deviation of the target area at different distances from the camera when the normal direction of the refractive surface is rotated by 0.195°.

Figure 17.

Z-coordinate deviation of the target area at different distances from the camera when the normal direction of the refractive surface is rotated by 0.195°.

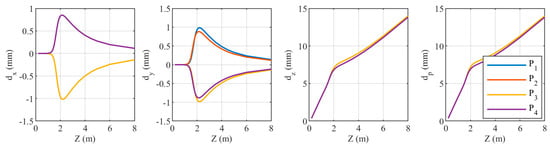

3.2.2. Fixed Points

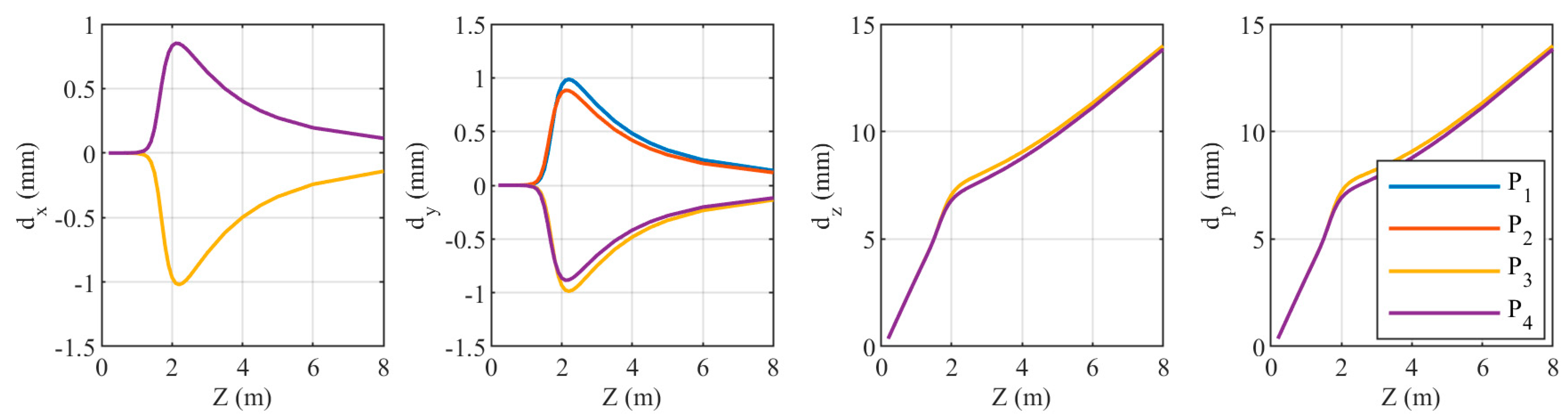

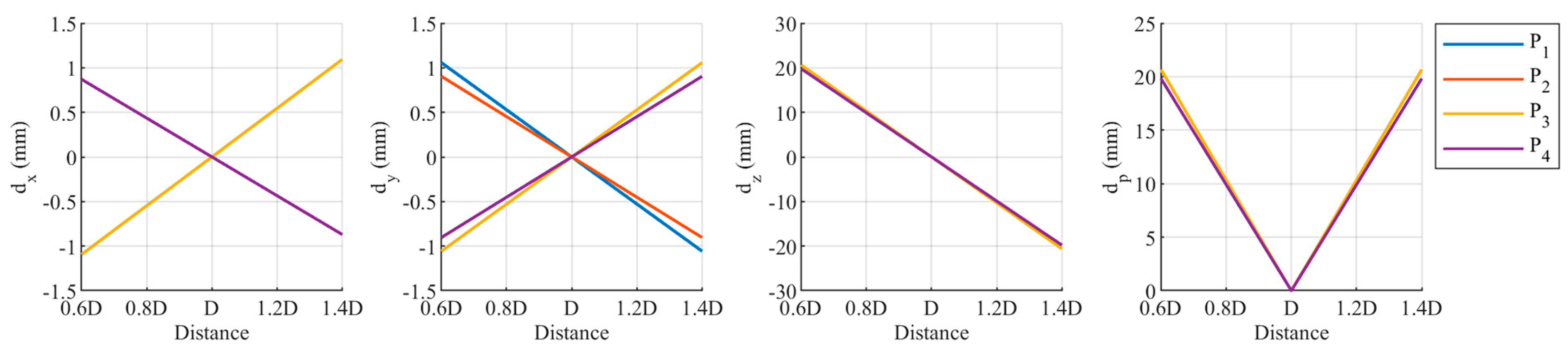

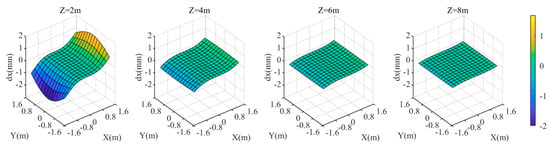

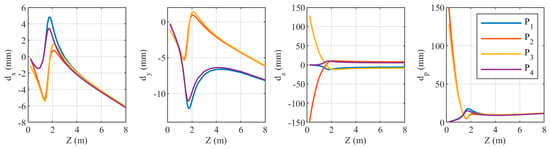

To further analyze how the target coordinate deviations depend on Z, the four corners of the target area in Figure 4 were selected as feature points, with Z ranging from 0.5 m to 8 m. The previous analysis showed that, for a fixed Z, these four points have the largest ΔP within the entire target area. The refraction parameters were analyzed in turn, with the same deviation magnitudes as before.

When the relative refractive index is $0.99843\,n_r$, the coordinate discrepancies of the four points are shown in Figure 18. As Z increases, ΔX and ΔY follow similar trends: both first increase rapidly and then decrease, with the decrease fast at first and then slowing. Their maximum values, about ±1 mm, occur around Z = 2.2 m. For ΔX, the four points form two pairs whose trends coincide exactly; for ΔY, they form two pairs whose trends are very similar. ΔZ increases with increasing Z. Since ΔZ is much larger than ΔX and ΔY, ΔP depends mainly on ΔZ, and the trends of ΔZ and ΔP are consistent for all four points.

Figure 18.

Relationship between the coordinate components of the four target points and Z when the relative refractive index is $0.99843\,n_r$.

When the distance from the camera to the refractive interface is 0.805D, the coordinate discrepancies of the four points are shown in Figure 19. As in Figure 18, ΔX and ΔY follow similar trends as Z increases: both first increase rapidly and then decrease, with the decrease fast at first and then slowing. However, their maximum values are much larger, reaching approximately ±30 mm. For ΔX, the four points again form two pairs with consistent trends, and for ΔY, two pairs with similar trends. ΔZ decreases with increasing Z, rapidly at first and then more slowly, with a visible inflection point. Although ΔX and ΔY exceed ±20 mm near their peak, they remain an order of magnitude smaller than ΔZ; therefore, ΔP depends mainly on ΔZ. The trends of ΔZ and ΔP are consistent for all four points: as Z increases, ΔP decreases.

Figure 19.

Relationship between the coordinate components of the four target points and Z when the distance is 0.805D.

When all three attitude angles of the refraction interface normal change by 0.195°, the coordinate component deviations of the four points are shown in Figure 20. For Z less than 3 m, the three components vary in a complex way, and the trends differ markedly among the points. For two of the points, ΔZ is significantly larger than ΔX and ΔY, whereas for the other two points, all three components are relatively small. For Z greater than 3 m, ΔX and ΔY generally increase with Z, while ΔZ shows no obvious variation; ΔX and ΔY are similar in magnitude and significantly larger than ΔZ. For the first pair of points, ΔP is determined mainly by ΔZ when Z is small and mainly by ΔX and ΔY when Z is large. For the second pair, ΔP is affected by all three components, and the influence of ΔX and ΔY grows as Z increases.

Figure 20.

Relationship between the coordinate components of the four target points and Z when the normal direction of the refractive surface is rotated by 0.195°.

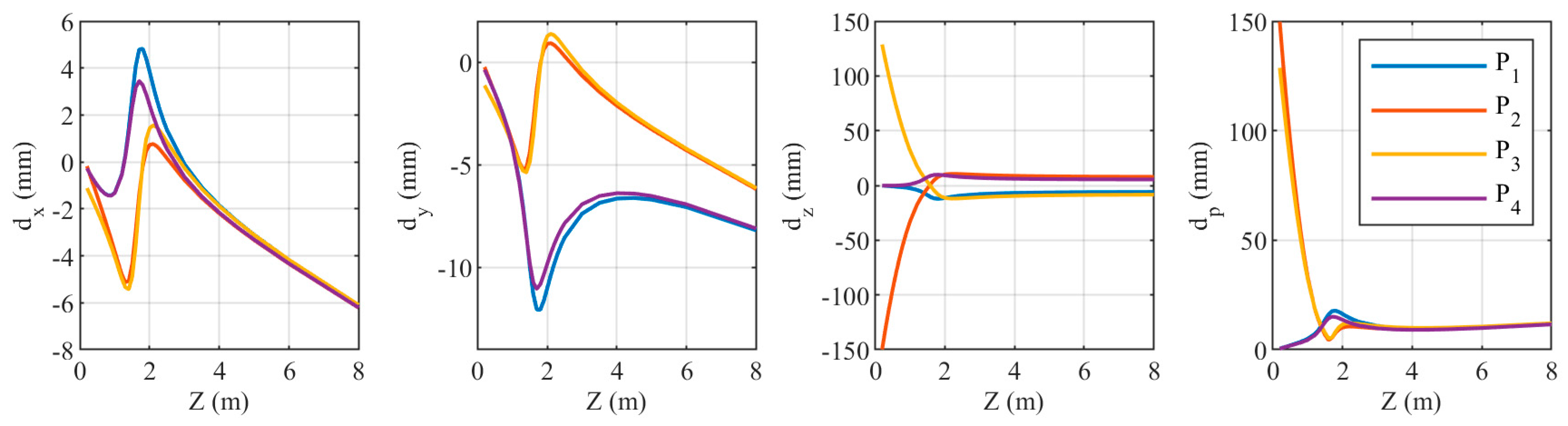

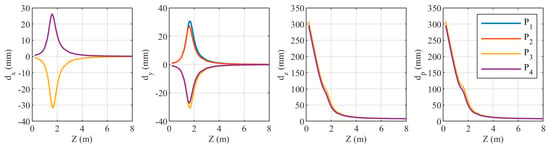

3.3. Experiment 3

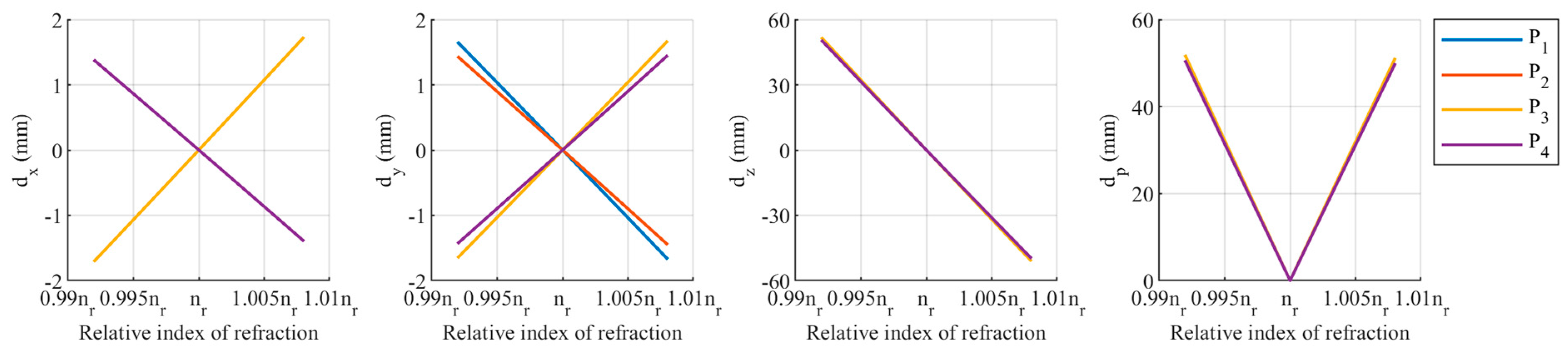

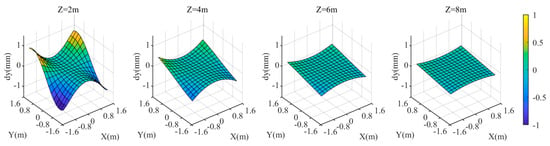

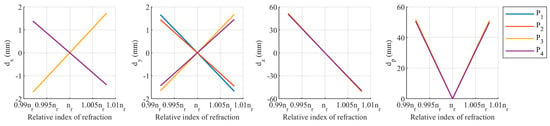

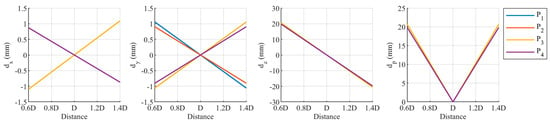

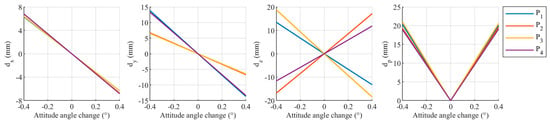

In the preceding experiments, the refraction-parameter deviations were fixed. However, as these deviations vary, the target coordinate deviations change accordingly. This experiment therefore investigates the relationship between target coordinate deviations and refraction-parameter deviations. The four feature points shown in Figure 4 were again selected as targets, with Z set to 5 m, and deviation sequences were introduced into each refraction parameter in turn.

Figure 21, Figure 22 and Figure 23 show how the target-coordinate deviations depend on the deviations in the three refraction parameters: the relative refractive index, the distance from the camera to the refraction interface, and the direction of the refraction-interface normal. , , and all exhibit a linear relationship with the refraction-parameter deviations. The plot displays a symmetric distribution with the true value of the refraction parameters as the axis of symmetry, indicating that both positive and negative deviations in the refraction parameters have the same effect on . The smaller the deviation in the refraction parameters, the closer , , , and are to zero.

Figure 21.

Relationship between target-coordinate deviation and relative refractive index deviation.

Figure 22.

Relationship between target-coordinate deviation and camera-to-refractive-interface distance deviation.

Figure 23.

Relationship between target-coordinate deviation and refraction-interface normal-direction deviation.

4. Discussion

When the cameras are in air and the target is in water, the light path from the target to the camera is refracted at the interface and therefore deviates from a straight line. A theoretically rigorous way to compensate for this refraction in stereo-vision measurement is to use a refraction-measurement model. Such a model, however, involves refraction parameters, namely the refractive indices of the media, the distance from the camera to the refractive interface, and the normal of the refractive interface, which generally must be calibrated. Because calibration is never error-free, the calibrated refraction parameters inevitably differ from their true values. The experimental results in Section 3 demonstrate how these deviations affect stereo-vision measurement.
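To make the role of these parameters concrete, the following minimal Python sketch back-projects a single camera ray through a flat air–water interface using the vector form of Snell's law. It assumes one planar interface described by a camera-to-interface distance `dist` and a normal `normal` pointing from the camera into the water, and a ray direction already computed from the pixel coordinates and intrinsics. The function names are hypothetical, and this is an illustration rather than the authors' implementation. A calibration error in `n_rel`, `dist`, or `normal` changes the returned refraction point or water-side direction, and therefore the triangulated target.

```python
import numpy as np


def refract(direction, normal, eta):
    """Vector form of Snell's law for a ray crossing a planar interface.

    direction: incident ray direction (any length; normalized here)
    normal:    interface normal (either orientation; flipped to face the ray)
    eta:       n_incident / n_transmitted, e.g. 1 / 1.333 for air to water
    Returns the unit transmitted direction, or None on total internal reflection.
    """
    d = direction / np.linalg.norm(direction)
    n = normal / np.linalg.norm(normal)
    cos_i = -np.dot(n, d)
    if cos_i < 0.0:  # make the normal point back toward the incident side
        n, cos_i = -n, -cos_i
    sin2_t = eta**2 * (1.0 - cos_i**2)
    if sin2_t > 1.0:
        return None
    cos_t = np.sqrt(1.0 - sin2_t)
    return eta * d + (eta * cos_i - cos_t) * n


def back_project(center, ray_air, n_rel, dist, normal):
    """Refraction point and water-side unit direction for one camera ray.

    Hypothetical helper: the interface is the plane {x : normal . (x - center) = dist},
    with `normal` pointing from the camera into the water and n_rel = n_water / n_air.
    """
    N = normal / np.linalg.norm(normal)
    r = ray_air / np.linalg.norm(ray_air)
    denom = np.dot(N, r)
    if denom <= 0.0:
        raise ValueError("ray does not reach the interface")
    point = center + (dist / denom) * r
    return point, refract(r, N, 1.0 / n_rel)


point, direction = back_project(
    np.zeros(3), np.array([0.3, 0.0, 1.0]), 1.333, 0.5, np.array([0.0, 0.0, 1.0])
)
print(point, direction)
```

With the assumed values (`n_rel = 1.333`, `dist = 0.5`, ray `(0.3, 0, 1)`), the refraction point lands at `(0.15, 0, 0.5)`. The water-side direction satisfies sin θ_air = 1.333 sin θ_water. Triangulating two such rays, one per camera, yields the target estimate.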

The impact on stereo-vision measurement differs among the refraction parameters, and its magnitude depends on the distribution of the target within the field of view. Deviations in the relative refractive index and in the distance between the camera and the refractive interface mainly cause discrepancies in the Z coordinate, while their effect on the X and Y coordinates is relatively minor. The total coordinate deviation of the target is therefore primarily determined by the Z-coordinate deviation. Deviations in the orientation of the refractive interface normal significantly affect all three coordinate components of the target. For every type of refraction-parameter deviation, the overall trend is that the closer the target is to the edge of the field of view, the greater its coordinate deviation. A deviation of only 0.157% in the relative refractive index can produce a maximum target-coordinate deviation of up to 1 cm. This indicates that the stereo-vision measurement model is highly sensitive to the relative refractive index under air–water conditions. In underwater experiments or practical applications, the refractive indices of the different media must therefore be calibrated with particular care.
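A deliberately simplified model can reproduce the center-versus-edge behavior for the index and interface-distance parameters qualitatively. The sketch below assumes a planar (X–Z) geometry with two cameras in air, a flat interface perpendicular to both optical axes, and the illustrative constants shown (n = 1.333, a 0.5 m interface distance, a 0.5 m baseline); none of these is the paper's configuration. It simulates each camera's measurement by solving Snell's law for the refraction point by bisection. It then triangulates with perturbed parameters. All function names are hypothetical.

```python
import math

# Assumed, illustrative geometry (not the paper's configuration):
N_TRUE = 1.333            # relative refractive index n_water / n_air
D_TRUE = 0.5              # camera-to-interface distance in metres
CAMERAS = (-0.25, 0.25)   # camera x-positions (0.5 m baseline), both at z = 0


def refraction_point(cx, target, n, d):
    """x-coordinate where the ray from camera cx to target crosses the interface z = d.

    Solves sin(theta_air) = n * sin(theta_water) by bisection; the residual increases
    monotonically with x, so the root is bracketed by the camera and target x-positions.
    """
    X, Z = target
    lo, hi = min(cx, X), max(cx, X)

    def residual(x):
        a, w = x - cx, X - x
        return a / math.hypot(a, d) - n * w / math.hypot(w, Z - d)

    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if residual(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def observe(target):
    """Simulated image measurement: tan of the air-side ray angle for each camera."""
    return [(refraction_point(cx, target, N_TRUE, D_TRUE) - cx) / D_TRUE for cx in CAMERAS]


def reconstruct(obs, n, d):
    """Intersect the two back-projected, refracted rays using assumed parameters (n, d)."""
    rays = []
    for cx, t in zip(CAMERAS, obs):
        s_water = (t / math.sqrt(1.0 + t * t)) / n  # Snell's law with the assumed n
        rays.append((cx + t * d, d, s_water, math.sqrt(1.0 - s_water**2)))
    (x1, z1, u1, v1), (x2, z2, u2, v2) = rays
    # Solve p1 + a * dir1 = p2 + b * dir2 for a with Cramer's rule.
    det = u2 * v1 - u1 * v2
    a = (u2 * (z2 - z1) - (x2 - x1) * v2) / det
    return x1 + a * u1, z1 + a * v1


def deviation(target, dn=0.0, dd=0.0):
    """(dX, dZ) in metres when reconstructing with n + dn and d + dd instead of the true values."""
    X, Z = target
    x_hat, z_hat = reconstruct(observe(target), N_TRUE + dn, D_TRUE + dd)
    return x_hat - X, z_hat - Z


for label, target in (("center", (0.0, 5.0)), ("edge", (2.0, 5.0))):
    dx, dz = deviation(target, dn=0.002)
    print(f"{label}: dX = {dx * 1e3:.2f} mm, dZ = {dz * 1e3:.2f} mm")
```

With `dn = 0.002`, the off-axis target at X = 2 m shows a larger total deviation than the on-axis target at the same depth. For the on-axis target, `deviation(..., dd=...)` places the interface-distance error entirely in Z. The magnitudes depend on the assumed geometry and are not meant to reproduce the figures in Section 3.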

The impact of refraction-parameter deviations on stereo-vision measurement also depends on the distance between the target and the camera, and this dependence differs among the refraction parameters. For a constant deviation in the relative refractive index, the closer the target is to the camera, the smaller its total coordinate deviation. For a constant deviation in the camera-to-interface distance, the deviations behave as follows as the target moves away from the camera. The X- and Y-coordinate deviations first increase rapidly, then decrease rapidly, and finally stabilize. The Z-coordinate deviation decreases rapidly and then gradually stabilizes. Because the Z-coordinate deviation is an order of magnitude larger than the X- and Y-coordinate deviations, it determines the overall coordinate deviation. For points at different positions within the field of view, the overall coordinate deviations caused by deviations in the relative refractive index and in the camera-to-interface distance vary with the Z coordinate in approximately the same way. By contrast, the impact of deviations in the orientation of the refractive interface normal varies with the Z coordinate in a more complex way that depends on the distribution of the target. To mitigate the impact of normal-orientation deviations in underwater experiments or practical applications, attention must therefore be paid to the distribution of the target within the field of view.
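A first-order (paraxial) sketch helps explain why the two parameters behave differently with range. It assumes, for illustration only, that the interface is perpendicular to both optical axes and that the rays are near-axial. It also introduces its own symbols, which need not match the notation of Section 2:

- $n$: the relative refractive index.
- $d$: the camera-to-interface distance.
- $t = \tan\theta_{\mathrm{air}}$: the normalized image coordinate of a ray from a camera at lateral position $c$.
- $\hat n = n + \delta n$ and $\hat d = d + \delta d$: the calibrated values.

With $\tan\theta_{\mathrm{water}} \approx t/n$, every ray reaching the target $(X, Z)$ behaves like a pinhole ray to an apparent depth $Z_a$. Inverting that relation with the calibrated parameters gives:

```latex
\begin{aligned}
X &\approx c + t\,d + \frac{t}{n}\,(Z - d) = c + t\,Z_a,
  \qquad Z_a = d + \frac{Z - d}{n},\\
\hat Z &= \hat d + \hat n\,(Z_a - \hat d),\\
\Delta Z = \hat Z - Z &= \frac{\delta n}{n}\,(Z - d) - (n - 1 + \delta n)\,\delta d
  \approx \frac{\delta n}{n}\,(Z - d) - (n - 1)\,\delta d .
\end{aligned}
```

Because both cameras satisfy $X = c + t\,Z_a$, the reconstructed $X$ is unchanged to this order and the error falls on $Z$. This agrees with the dominance of the Z deviation noted above.

- **Index term.** It grows with the water path $Z - d$, so nearer targets are affected less.
- **Distance term.** It tends to the range-independent constant $-(n-1)\,\delta d$, consistent with the Z deviation leveling off at longer range.

Each term is linear in its deviation and changes sign with it. As an illustrative number, $\delta n/n = 0.157\%$ over an assumed 4.5 m water path gives $|\Delta Z| \approx 7$ mm on axis. The approximation does not capture the near-range transient or the growth toward the edge of the field of view. These presumably arise from the higher-order angle terms that the full refraction model retains.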

For a fixed target, the X, Y, Z, and overall coordinate deviations vary linearly with the refraction-parameter deviations: the larger the deviation in a refraction parameter, the greater the coordinate deviations. Whether a refraction parameter is overestimated or underestimated by the same amount, the effect on the overall coordinate deviation is the same.

According to the experimental results, targets closer to the edge of the field of view are more susceptible to refraction-parameter deviations. This indicates that, for a given calibration error, the current refraction-measurement model performs better for targets near the center of the field of view, whereas it may not fully eliminate refraction errors for targets near the edges.
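One geometric factor consistent with this edge effect is that Snell's law itself becomes more sensitive to the refractive index at oblique incidence; this is an interpretation offered here, not an analysis from the paper. Writing $s = \sin\theta_{\mathrm{air}}$, the water-side slope is $\tan\theta_{\mathrm{w}} = s/\sqrt{n^2 - s^2}$. Hence $\partial \ln\tan\theta_{\mathrm{w}}/\partial n = -n/(n^2 - s^2)$, whose magnitude grows with the air-side angle. The Python sketch below tabulates this relative sensitivity; its helper names are hypothetical and it assumes $n = 1.333$. Rays that reach targets near the edge of the field of view have larger $s$, so a given index error bends them proportionally more.

```python
import math


def tan_water(sin_air, n):
    """tan of the water-side angle for a ray whose air-side angle has sine sin_air."""
    return sin_air / math.sqrt(n * n - sin_air * sin_air)


def log_sensitivity(sin_air, n):
    """Analytic d ln(tan theta_water) / dn = -n / (n^2 - sin_air^2)."""
    return -n / (n * n - sin_air * sin_air)


N_REL = 1.333  # assumed relative refractive index
for deg in (0, 10, 20, 30, 40):
    s = math.sin(math.radians(deg))
    print(f"{deg:2d} deg in air: d ln(tan theta_w)/dn = {log_sensitivity(s, N_REL):.4f}")
```

At normal incidence the sensitivity reduces to $-1/n$, and its magnitude increases steadily with angle. The full effect on a triangulated point also depends on baseline and range, which this single-ray view ignores.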

5. Conclusions

This paper introduces a refraction-based visual-measurement model, establishes a stereo-vision simulation model, and conducts simulation experiments to study in detail how deviations in the various refraction parameters affect stereo-vision measurement. Based on the positions of the cameras and the targets, the simulation accurately determines the coordinates of the refraction points. It thereby obtains the corresponding pixel coordinates, which are then used to estimate the target positions. The experimental results demonstrate that the influence of refraction-parameter deviations on stereo-vision measurement differs among the parameters. It also depends on both the distribution of the target within the field of view and the distance between the target and the camera. The overall coordinate deviation of the target is not necessarily minimized by simply increasing or decreasing the target-camera distance; the appropriate distance must be chosen according to the magnitude of the deviation in each refraction parameter. The stereo-vision measurement model is particularly sensitive to deviations in the relative refractive index under air–water conditions. Therefore, in underwater experiments or practical applications, careful calibration of the refractive indices of the different media and attention to the distribution of targets within the field of view are crucial. The work and conclusions presented in this paper provide guidance for the construction of underwater stereo-vision measurement systems, refraction-parameter calibration, underwater experiments, and practical applications. However, this study relied on a simulation model and simulated experiments; future work should include underwater experiments to validate its conclusions.
In addition, the current refraction-measurement model is better suited to targets near the center of the field of view, and its performance for targets near the edges is less than ideal. This indicates a need for further optimization of the refraction-measurement model in the future.

Author Contributions

Conceptualization, G.L. and S.H.; Formal analysis, G.L.; Funding acquisition, G.L.; Investigation, G.L.; Methodology, G.L.; Project administration, N.Z.; Resources, G.L., Z.Y., S.H., N.Z. and K.Z.; Software, G.L.; Supervision, K.Z.; Validation, Z.Y., N.Z. and K.Z.; Visualization, G.L.; Writing—original draft, G.L.; Writing—review and editing, S.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Fundamental Research Funds for the Central Universities, grant number 2022QN1081.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Su, Z.; Pan, J.; Lu, L.; Dai, M.; He, X.; Zhang, D. Refractive three-dimensional reconstruction for underwater stereo digital image correlation. Opt. Express 2021, 29, 12131–12144.

- Ding, T.; Sun, C.; Chen, J. Cross-medium imaging model and calibration method based on refractive optical path for underwater morphology measurement. Meas. Sci. Technol. 2024, 35, 15205.

- Łuczyński, T.; Pfingsthorn, M.; Birk, A. The Pinax-model for accurate and efficient refraction correction of underwater cameras in flat-pane housings. Ocean. Eng. 2017, 133, 9–22.

- Chi, Y.; Yu, L.; Pan, B. Low-cost, portable, robust and high-resolution single-camera stereo-DIC system and its application in high-temperature deformation measurements. Opt. Lasers Eng. 2018, 104, 141–148.

- Wu, T.; Hou, S.; Sun, W.; Shi, J.; Yang, F.; Zhang, J.; Wu, G.; He, X. Visual measurement method for three-dimensional shape of underwater bridge piers considering multirefraction correction. Autom. Constr. 2023, 146, 104706.

- Beall, C.; Lawrence, B.J.; Ila, V.; Dellaert, F. 3D reconstruction of underwater structures. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems IEEE, Taipei, Taiwan, 18–22 October 2010; pp. 4418–4423.

- Bruno, F.; Bianco, G.; Muzzupappa, M.; Barone, S.; Razionale, A.V. Experimentation of structured light and stereo vision for underwater 3D reconstruction. ISPRS J. Photogramm. Remote Sens. 2011, 66, 508–518.

- Chadebecq, F.; Vasconcelos, F.; Lacher, R.; Maneas, E.; Desjardins, A.; Ourselin, S.; Vercauteren, T.; Stoyanov, D. Refractive Two-View Reconstruction for Underwater 3D Vision. Int. J. Comput. Vis. 2020, 128, 1101–1117.

- Li, G.; Klingbeil, L.; Zimmermann, F.; Huang, S.; Kuhlmann, H. An Integrated Positioning and Attitude Determination System for Immersed Tunnel Elements: A Simulation Study. Sensors 2020, 20, 7296.

- Liu, S.; Xu, H.; Lin, Y.; Gao, L. Visual Navigation for Recovering an AUV by Another AUV in Shallow Water. Sensors 2019, 19, 1889.

- Cowen, S.; Briest, S.; Dombrowski, J. Underwater docking of autonomous undersea vehicles using optical terminal guidance. In Proceedings of the Oceans ’97. MTS/IEEE Conference, Halifax, NS, Canada, 6–9 October 1997; Volume 2, pp. 1143–1147.

- Liu, S.; Ozay, M.; Okatani, T.; Xu, H.; Sun, K.; Lin, Y. Detection and Pose Estimation for Short-Range Vision-Based Underwater Docking. IEEE Access 2019, 7, 2720–2749.

- Treibitz, T.; Schechner, Y.; Kunz, C.; Singh, H. Flat Refractive Geometry. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 51–65.

- Treibitz, T.; Schechner, Y.Y. Active Polarization Descattering. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 385–399.

- Schechner, Y.Y.; Karpel, N. Recovery of Underwater Visibility and Structure by Polarization Analysis. IEEE J. Ocean. Eng. 2005, 30, 570–587.

- Yamashita, A.; Kawanishi, R.; Koketsu, T.; Kaneko, T.; Asama, H. Underwater sensing with omni-directional stereo camera. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011; pp. 304–311.

- Menna, F.; Nocerino, E.; Fassi, F.; Remondino, F. Geometric and Optic Characterization of a Hemispherical Dome Port for Underwater Photogrammetry. Sensors 2016, 16, 48.

- She, M.; Nakath, D.; Song, Y.; Köser, K. Refractive geometry for underwater domes. ISPRS J. Photogramm. Remote Sens. 2022, 183, 525–540.

- Bosch, J.; Gracias, N.; Ridao, P.; Ribas, D. Omnidirectional Underwater Camera Design and Calibration. Sensors 2015, 15, 6033–6065.

- Shmutter, B. Orientation Problems in Two-Medium Photogrammetry. Photogramm. Eng. 1967, 33, 1421–1428.

- Rinner, K. Problems of Two-Medium Photogrammetry. Photogramm. Eng. 1969, 35, 275–282.

- Masry, S.E.; Konecny, G. New Programs for the Analytical Plotter. Photogramm. Eng. 1970, 36, 1269–1276.

- Kwon, Y.; Casebolt, J.B. Effects of light refraction on the accuracy of camera calibration and reconstruction in underwater motion analysis. Sports Biomech. 2006, 5, 95–120.

- Fabio, M.; Erica, N.; Salvatore, T.; Fabio, R. A photogrammetric approach to survey floating and semi-submerged objects. In Proceedings of the Videometrics, Range Imaging, and Applications XII, and Automated Visual Inspection, Munich, Germany, 13–16 May 2013.

- Kang, L.; Wu, L.; Yang, Y. Experimental study of the influence of refraction on underwater three-dimensional reconstruction using the SVP camera model. Appl. Opt. 2012, 51, 7591–7603.

- Lavest, J.M.; Rives, G.; Laprest, J.T. Dry camera calibration for underwater applications. Mach. Vision. Appl. 2003, 13, 245–253.

- Kang, L.; Wu, L.; Wei, Y.; Lao, S.; Yang, Y. Two-view underwater 3D reconstruction for cameras with unknown poses under flat refractive interfaces. Pattern Recognit. 2017, 69, 251–269.

- Agrawal, A.; Ramalingam, S.; Taguchi, Y.; Chari, V. A theory of multi-layer flat refractive geometry. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012.

- Li, R.; Li, H.; Zou, W.; Smith, R.G.; Curran, T.A. Quantitative photogrammetric analysis of digital underwater video imagery. IEEE J. Ocean. Eng. 1997, 22, 364–375.

- Jordt-Sedlazeck, A.; Koch, R. Refractive Structure-from-Motion on Underwater Images. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Sydney, NSW, Australia, 1–8 December 2013.

- Yau, T.; Gong, M.; Yang, Y. Underwater Camera Calibration Using Wavelength Triangulation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013.

- Telem, G.; Filin, S. Photogrammetric modeling of underwater environments. ISPRS J. Photogramm. Remote Sens. 2010, 65, 433–444.

- Chen, X.; Yang, Y.H. Two-View Camera Housing Parameters Calibration for Multi-layer Flat Refractive Interface. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

- Dolereit, T.; von Lukas, U.F.; Kuijper, A. Underwater stereo calibration utilizing virtual object points. In Proceedings of the Oceans 2015, Genova, Italy, 18–21 May 2015.

- Qiu, C.; Wu, Z.; Kong, S.; Yu, J. An Underwater Micro Cable-Driven Pan-Tilt Binocular Vision System with Spherical Refraction Calibration. IEEE Trans. Instrum. Meas. 2021, 70, 1–13.

- Qi, G.; Shi, Z.; Hu, Y.; Fan, H.; Dong, J. Refraction calibration of housing parameters for a flat-port underwater camera. Opt. Eng. 2022, 61, 104105.

- Ma, Y.; Zhou, Y.; Wang, C.; Wu, Y.; Zou, Y.; Zhang, S. Calibration of an underwater binocular vision system based on the refraction model. Appl. Opt. 2022, 61, 1675–1686.

- Tong, Z.; Gu, L.; Shao, X. Refraction error analysis in stereo vision for system parameters optimization. Measurement 2023, 222, 113650.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).