Abstract

To address the challenges of change detection tasks, including the scarcity and dispersion of labeled samples, the difficulty of efficiently extracting features from unstructured image objects, and the underutilization of high-order correlation information, we propose a novel architecture based on hypergraph convolutional neural networks. By characterizing superpixel vertices and their high-order correlations, the method implicitly expands the number of labels while assigning adaptive weight parameters to adjacent objects. It not only describes changes in vertex features but also uncovers local and consistent changes within hyperedges. Specifically, a vertex aggregation mechanism based on superpixel segmentation is established, which segments the difference map into superpixels of diverse shapes and boundaries and extracts their significant statistical features. Subsequently, a dynamic hypergraph structure is constructed, with each superpixel serving as a vertex. Based on the multi-head self-attention mechanism, the connection probability between vertices and hyperedges is calculated through learnable parameters, and the hyperedges are generated through threshold filtering. Moreover, a framework based on hypergraph convolutional neural networks is customized, which models the high-order correlations within the data by optimizing the hypergraph during learning, achieving change detection in remote sensing images. Experimental results on three remote sensing datasets show that the method performs well in both qualitative and quantitative evaluations, supporting its effectiveness in enhancing the robustness and accuracy of change detection.

1. Introduction

With the continuous advancement and application of remote sensing satellite and sensor technology, the remote sensing observation of the Earth has also shown characteristics of high spatial resolution, high spectral resolution, and high temporal resolution. By monitoring dynamic images of the Earth’s surface over vast geographical areas, we can better understand our planet. In recent years, various remote sensing image processing tasks such as object detection, semantic segmentation, and change detection have been widely applied in important fields that directly affect human livelihood, including natural disaster monitoring [1], agricultural monitoring and survey [2], land use [3], urban expansion and planning [4], and national security and defense needs.

Under the influence of natural processes and human activities, the Earth’s environment, as reflected in land cover, land use, and urban development, is constantly evolving. Grasping this information in a timely and accurate manner matters for people’s lives and for the sustainable development of human society, and it is closely watched by many countries. Therefore, remote sensing image change detection is in strong practical demand and has long been an active research field worldwide.

The purpose of change detection is to analyze changes on the Earth’s surface under the influence of natural transitions and human activities. It identifies differences in the state of surface entities or objects by comparing remote sensing images of the same location acquired in different periods, using image processing and mathematical models. A typical workflow comprises image preprocessing, feature extraction, dimensionality reduction, difference comparison, the application of decision rules, and postprocessing. Because images from at least two periods are needed, the process is more complex than other remote sensing image interpretation technologies (such as classification and recognition), and it includes high-precision geometric registration between images; relative radiometric, atmospheric, and phenological normalization; change feature extraction; the determination of change areas and types; and accuracy verification.

Since the 1980s, researchers worldwide have studied change detection and proposed a series of representative algorithms. In early applications, the spatial resolution of remote sensing images was low, and each pixel represented the state of objects within a certain range. Traditional methods relied on manually designed feature extraction to compare pixels across images from different time phases and thereby obtain the change detection results. Therefore, most methods at this stage were based on pixel analysis. However, for high-resolution images, registration errors, acquisition errors, and noise can greatly interfere with traditional pixel-level change detection methods.

Since the proposal of the fractal network evolution algorithm [5], object-based methods have gained widespread attention, with segmentation approaches such as fractal network evolution segmentation [6] and Simple Linear Iterative Clustering (SLIC) [7]. Some scholars hold that object-based methods are generally better than pixel-based methods even for medium- and low-resolution images, and a considerable body of research on object-based change detection now exists. These methods first segment the image to obtain objects of different scales and then use objects composed of homogeneous pixels as the basic unit for change detection, which generally achieves better detection accuracy [8,9,10]. According to the change detection strategy, object-level methods can be roughly divided into direct object change detection [11,12], synchronized segmentation and object change detection [13,14], and classification-based change detection [15,16,17]. However, most object-based methods consider only the features of the object itself and ignore the correlation between objects.

The emergence of deep learning has brought new models and paradigms to change detection, greatly improving its efficiency and accuracy. The release of many large-scale benchmark datasets has made it possible to design and introduce larger and deeper models. Since Daudt et al. [18] introduced Fully Convolutional Networks (FCNs) into the field of change detection and developed three basic FCN architectures, many representative network architectures have emerged. Compared with traditional change detection methods, deep learning-based methods do not require an explicit image difference map and can extract change information from different sources of remote sensing images, and they have become the leading approach in this field. Although these methods have achieved good results, CNN models learn representations only within local receptive fields, which limits their ability to identify large objects and to capture long-distance dependencies between objects across the entire image. As a result, they remain insufficient for complex and diverse scenes with different spatiotemporal resolutions.

Many scholars have introduced the global feature extraction modules of the transformer to overcome the limited representation learning caused by purely local feature extraction. For example, Chen et al. [19] proposed a transformer-based change detection model that enhances the extraction of spatiotemporal context information, helping the model to identify the change areas of interest and exclude irrelevant ones in complex scenes. Bandara et al. [20] proposed ChangeFormer, which combines a hierarchical transformer encoder with a multi-layer perceptron (MLP) decoder to effectively extract multi-scale long-range details for precise change detection.

In addition, more and more researchers are applying graph neural networks (GNNs) to image classification and change detection. GNNs can process non-Euclidean data and consider the spatial relationships between pixels [21]. For instance, Saha et al. [22] applied graph convolutional networks (GCNs) to semi-supervised high-resolution image change detection. Wu et al. [23] extended multi-scale graph convolutional networks to land cover change detection in homogeneous and heterogeneous remote sensing images. Tang et al. [24] employed multi-scale dynamic graph convolutional networks to mine both short-range and long-range contextual information. Zhao et al. [25] proposed a graph transformer-guided information fusion framework for hyperspectral image change detection, which combines a graph transformer module with a gated change information fusion unit and significantly enhances performance under low sample rates. Dong et al. [26] proposed a dual-branch local information-enhanced graph-transformer (D-LIEG) network that fully exploits the local–global spectral–spatial features of multi-temporal hyperspectral images (HSIs) for change recognition with limited training samples. These studies collectively demonstrate the potential of GNNs in remote sensing image change detection, both advancing the technology and supporting practical applications.

However, with the improvement of spatial resolution, the heterogeneity within the same ground feature increases. Due to the openness and complexity of the Earth’s environment, as well as the uncertainty in the acquisition and transmission process of remote sensing information, remote sensing image change detection also faces many challenges. Specifically, due to the resource-intensive nature of acquiring labeled samples, pixel-based change detection methods have consistently encountered the challenge of limited sample sizes. GNNs offer a mitigation strategy for the small sample problem, albeit at the expense of substantial computational overhead. Practical application scenarios are often constrained by limitations such as device storage capacity and computational power. Furthermore, image objects are inherently unstructured data with irregular boundaries, which poses challenges for object-based change detection methods in terms of effective feature extraction and information aggregation. Additionally, most pixel-based and object-based methods focus solely on the features of individual objects, neglecting the inter-object relationships. Remote sensing image change detection tasks frequently involve temporal data, where the states of objects evolve over different time points. The existing GNN-based models are predominantly designed for static image processing and lack structures sensitive to temporal changes. When influenced by data noise and missing data, the constructed graph structure often contains a significant number of redundant and erroneous connections, making it challenging to adaptively model complex data and downstream tasks, and failing to accurately represent data association relationships.

To address these challenges, we propose a novel hypergraph representation learning architecture for remote sensing image change detection (CD-DSHGCN) grounded in graph theory. We use hypergraph convolutional neural networks with dynamic structural learning to represent the superpixel vertices and their high-order correlations, transforming the traditional tasks of difference map generation and analysis into hypergraph learning and classification. The network can efficiently model unstructured object data, implicitly expand the number of labels, and assign adaptive weight parameters to different adjacent objects. It not only describes the level of vertex change but also explores changes in local grouping and consistency within hyperedges, achieving effective feature extraction.

The primary contributions can be summarized as follows:

- We propose vertex feature extraction based on superpixel segmentation for the characteristics of the change detection task, segmenting the difference map into superpixels with various shapes and boundaries, and extracting their significant statistical features to model unstructured image objects.

- We construct a dynamic hypergraph structure based on the multi-head self-attention mechanism, updating the hypergraph structure with learnable parameters to achieve feature aggregation between vertices and hyperedges, fully learning both low-order geometric and high-order semantic features.

- We explore the application of the hypergraph convolutional neural network framework in the field of remote sensing change detection, realizing high-precision, efficient, and robust change detection by optimizing the learning of the hypergraph and modeling high-order correlations within the data.

- The hypergraph framework demonstrates highly competitive performance on three remote sensing change detection datasets, confirming its effectiveness in enhancing the accuracy of change detection.

2. Related Work

Remote sensing change detection refers to the technique of using remote sensing images of the same location from different periods, based on image processing and mathematical models, to identify differences in the state of surface entities or phenomena over time. Compared to other remote sensing image interpretation techniques (such as classification and recognition), remote sensing change detection requires images from at least two periods, and its process is more complex, including high-precision geometric registration between images; radiometric, atmospheric, and phenological relative normalization processing; change feature extraction; and the determination of change areas and types. Optical high-resolution remote sensing images have become one of the most used data sources in the field of change detection due to their ability to provide detailed texture and geometric structure information.

Change detection algorithms can be categorized in different ways depending on the perspective. We review them from two angles: traditional methods and deep learning-based methods.

2.1. Traditional Change Detection Methods

Based on the granularity of the analysis unit, traditional methods can be divided into pixel-based methods, object-based methods, scene-based methods, and hybrid methods.

Extracting change information by analyzing individual pixels is the mainstream approach in traditional change detection research. Its prominent advantage is that simple algebraic operations can complete large-scale change detection tasks, with the difference method and the ratio method being the most commonly used. Its disadvantage is that it is difficult to apply to changes in small areas, such as in urban planning. Using pixels as the unit of discrimination can produce many false alarms and noisy pixels because of spectral variability and image registration errors, which makes pixel-based methods especially hard to apply to high-resolution remote sensing images.
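
As a minimal sketch of the two algebraic operations named above, the following NumPy snippet computes a per-pixel difference map and ratio map for two co-registered single-band images and thresholds the difference. The function names, the `eps` guard against division by zero, and the fixed threshold are illustrative assumptions, not part of any cited method.

```python
import numpy as np


def difference_and_ratio_maps(img_t1, img_t2, eps=1e-6):
    """Per-pixel absolute difference and ratio of two co-registered images."""
    a = np.asarray(img_t1, dtype=np.float64)
    b = np.asarray(img_t2, dtype=np.float64)
    if a.shape != b.shape:
        raise ValueError("images must be co-registered and share a shape")
    diff = np.abs(b - a)
    # Ratio values far from 1 suggest change; eps avoids division by zero.
    ratio = (b + eps) / (a + eps)
    return diff, ratio


def threshold_changes(change_map, threshold):
    """Mark pixels whose change magnitude exceeds a fixed threshold."""
    return change_map > threshold


# Toy example: only the top-right pixel changes strongly between the dates.
t1 = np.array([[10.0, 10.0], [50.0, 50.0]])
t2 = np.array([[10.0, 40.0], [50.0, 52.0]])
diff, ratio = difference_and_ratio_maps(t1, t2)
mask = threshold_changes(diff, threshold=10.0)
```

Because the decision is made independently for every pixel, a one-pixel misregistration or a noisy sensor reading changes the mask directly, which is the sensitivity described above.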

Change detection methods that discriminate based on image objects are more in line with human visual system observation behavior and have higher detection accuracy compared to pixel-based methods [27,28]. The core of direct object change detection methods is to segment and extract image objects from the imagery and then directly compare the image objects from different time phases by contrasting geometric features such as length, area, shape, or spectral texture features [29] to obtain results. The idea of synchronized segmentation and object change detection methods is to overlay multiple datasets and participate in the segmentation and extraction of image objects simultaneously, obtaining segmented objects that are consistent in shape, size, and position across multiple images. Desclée et al. [30] proposed a regional merging technique to segment remote sensing images, obtaining objects with prominent reflectivity, and finally identifying change objects corresponding to outliers. Post-classification change detection methods are a more classic approach, where images are independently classified with object-oriented image classification, followed by the comparative analysis of the category, geometric shape, and spatial context information of the objects [31], to obtain change areas and trajectories.

Scene-based change detection methods involve scene discrimination analysis. These methods learn the true change patterns in the imagery, and any inconsistencies with the true patterns are identified as suspected change scenes. Scene-based change detection is mainly used in semantic change tasks, such as the transformation of land cover types, which not only considers the issue of change but also serves fields such as urban planning. However, the complexity and variety of objects in remote sensing imagery, which is more pronounced in high-resolution remote sensing imagery, make it more difficult to achieve semantic-level change detection due to the pseudo-change interference caused by factors such as different brightness levels of the same object in the image.

A common hybrid method is to combine the pixel-based and object-based change detection methods, using pixels to detect small-range change areas in the image while using object analysis to extract global change areas. Combining the two methods mentioned above can obtain change image blocks of different sizes.

2.2. Deep Learning-Based Change Detection Methods

Traditional methods usually rely on manually designed feature extraction, which may fail to extract discriminative features, and they make it difficult to choose appropriate thresholds for accurately detecting image changes. In recent years, with the rapid development and widespread application of deep learning, deep learning-based change detection has attracted widespread attention and in-depth research. Deep learning is a family of machine learning methods based on artificial neural networks with strong representation learning ability. Deep learning-based change detection methods can automatically extract deep change features and segment remote sensing images, which greatly reduces manual feature engineering and makes large-scale change detection more robust [21]. In addition, compared with traditional methods, they do not require an explicit image difference map and can extract change information from different sources of remote sensing images, so they are currently the most frequently used methods in change detection tasks. Extensive experiments by researchers worldwide have shown that deep learning methods have practical value for remote sensing image change detection.

Daudt et al. [18] proposed FC-EF (Fully Convolutional Early Fusion) and the Siamese network FC-Siam-Conc, introducing skip connections similar to those in UNet to accurately detect change areas. Jiang et al. [32] used a Siamese network to extract image pyramid features guided by an attention mechanism, which models the long-distance relationships between different features using global attention. Peng et al. [33] proposed the U-Net++_MSOF change detection network, integrating deep supervision and dense connection mechanisms to optimize the edge details of change areas. Chen et al. [34] proposed STANet, a multi-scale spatiotemporal attention-based change detection model with a Siamese structure, which uses spatiotemporal attention to capture dependencies at different scales, generating better feature representations and effectively reducing false detections caused by registration errors.

The aforementioned models learn local receptive field representations solely through CNNs, which limits their ability to identify large objects and to capture relationships between objects across the entire image. Consequently, many scholars have introduced global feature extraction modules to overcome the limitations of purely local feature extraction. For instance, Chen et al. [19] proposed BIT_CD, a transformer-based change detection model that enhances the extraction of spatiotemporal contextual information, helping the model identify changes of interest and exclude irrelevant changes in complex scenarios. Jing et al. [35] proposed a change detection method combining a Tri-Siamese sub-network with a Long Short-Term Memory (LSTM) sub-network: the Tri-Siamese sub-network extracts rich spectral information in the spatial domain, which the LSTM sub-network then analyzes and discriminates, and experiments demonstrated the effectiveness of this method. Bandara et al. [20] proposed ChangeFormer, a transformer-based Siamese network that combines a hierarchical transformer encoder with a multi-layer perceptron (MLP) decoder to effectively extract multi-scale long-range details for precise change detection.

Although these methods have achieved good results, deep learning-based change detection methods still have shortcomings. Unlike semantic segmentation and image classification, change detection involves more than a single image. Therefore, issues that already arise in those tasks, such as noise and the extraction of deep-level features, are several times more challenging in change detection.

GNNs have also attracted attention in remote sensing image processing and have been successfully applied to tasks such as semantic segmentation [36], image retrieval [37], and image classification [38,39,40,41]. Recently, some methods have also been developed for change detection. For instance, Saha et al. [22] applied GCNs to semi-supervised high-resolution image change detection. Wu et al. [23] extended multi-scale graph convolutional networks to land cover change detection in homogeneous and heterogeneous remote sensing images. Tang et al. [24] employed multi-scale dynamic graph convolutional networks to mine both short-range and long-range contextual information. Zhao et al. [25] proposed a graph transformer-guided information fusion framework (GTransCD) for hyperspectral image change detection, which combines a graph transformer module with a gated change information fusion unit and significantly enhances performance under low sample rates. Wang et al. [42] proposed an end-to-end graph-level neural network (GLNN) architecture that uses the local structure of each pixel neighborhood block at the graph level to robustly capture local adjacency relationships, thereby learning more discriminative graphs for change detection. Wang et al. [43] developed a GLNN framework for change detection and reported satisfactory results compared with other methods on three SAR image datasets. To overcome biases and illumination changes that reduce detection accuracy, Yuan et al. [44] introduced a GNN-based framework that integrates 2D and 3D features for precise building change detection.

Dong et al. [26] proposed a dual-branch local information-enhanced graph-transformer (D-LIEG) detection network that fully exploits the local–global spectral–spatial features of multi-temporal HSIs for change recognition with limited training samples. The LIEG block enhances the information of the graph-transformer by learning local information representations and jointly extracting local–global features. A dual-branch structure composed of multi-layer LIEG blocks extracts the complementary local and global features of multi-temporal HSIs to discriminate changed from unchanged objects, and significant performance improvements were reported on four commonly used hyperspectral datasets. Zhang et al. [45] proposed a dual-branch feature extraction framework composed of multi-order graph convolutional networks and channel attention mechanisms, which can learn a wider feature aggregation and extract difference features between the two branches along the channel dimension. In the same work, a pixel-wise CD method based on feature fusion with an association matrix and convolutional layers was proposed, which can extract local change differences within superpixels and detect subtle changes in targets. Using superpixel sparse graph storage and a lightweight feature fusion module, an efficient hyperspectral change detection network, MGCN, was designed with low computational complexity and good performance.

Zhou et al. [46] proposed a graph-based method to capture the global structural relationships between images of different modalities, enhancing the accuracy and robustness of change detection. During spatial relationship inference, a cross-attention mechanism establishes semantic associations with the categorical information in the dataset, incorporating inter-category semantic correlation into the spatial context. Notably, a large-scale pixel-level multi-modal change detection (MCD) dataset (CNAM-CD) was also established, compiling images from twelve different urban scenarios spanning nearly a decade, with more refined labels and a more balanced class distribution. Han et al. [47] introduced the concept of multi-modal change detection and proposed an MCD method based on Global Structural Graph Mapping (GSGM). The method extracts “comparable” structural features between multi-modal datasets and constructs a Global Structural Graph (GSG) to express the structural information of each multi-temporal image, which is then cross-mapped to the other data domain. Change Intensity (CI) is determined by measuring the changes in the mapped GSGs and the differences between them. The forward and backward CI mappings (CIMs) are then fused with the Latent Low-Rank Representation (LLRR) method, and the change map (CM) is obtained through threshold segmentation. The effectiveness and robustness of the method were verified on five multi-modal datasets and four single-modal datasets.

3. Methodology

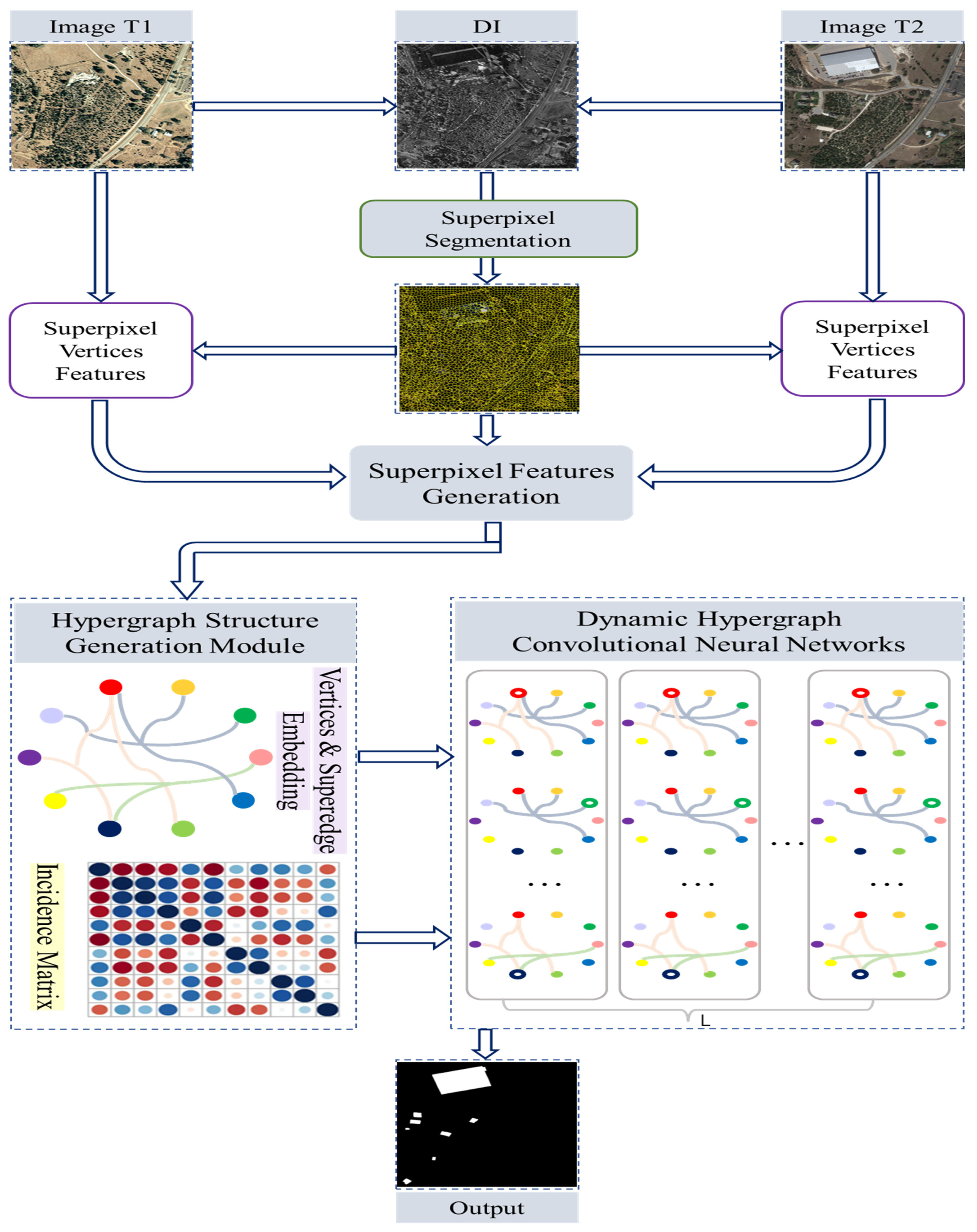

We propose a novel hypergraph representation learning architecture for remote sensing image change detection based on graph theory. The algorithm aims to utilize a hypergraph neural network to represent the superpixel vertices and their high-order correlations in images from two time points, transforming the traditional tasks of difference map generation and analysis into hypergraph learning and classification problems. Figure 1 shows the overview of the proposed CD-DSHGCN. In the hypergraph structure generation module, there are different vertices on a hypergraph, represented by different colors. Different hyperedges are represented by curves of different colors. In the dynamic hypergraph convolutional neural networks, manipulated vertices are indicated by hollow circles, and the curves of different colors represent the hyperedges corresponding to these vertices.

Figure 1. Framework overview of the proposed CD-DSHGCN.

The algorithm framework consists of the following parts:

- Vertex Aggregation based on Superpixel Segmentation: For the given remote sensing images, a difference map is constructed using Euclidean distance. Subsequently, the difference map is segmented into a multitude of unstructured multi-scale superpixels with diverse shapes and boundaries using the SLIC algorithm. The superpixel segmentation results are applied for region division, where each superpixel is considered a hypergraph vertex. Significant statistical features from each superpixel in the images are extracted to represent the vertex information of each superpixel.

- Hypergraph Structure Generation Module: With each superpixel node feature as a vertex, based on the multi-head self-attention mechanism, the connection probabilities between vertices and hyperedges are calculated using learnable parameters. Hyperedges are generated through threshold filtering, achieving the establishment of a dynamic hypergraph.

- Dynamic Hypergraph Convolutional Neural Networks: We construct a framework based on hypergraph convolutional neural networks, which includes multiple dynamic convolutional layers. It is trained based on the established hypergraph structure and superpixel vertex features. Through the learning of the hypergraph, high-order correlations in the data are optimized, thereby accomplishing the change detection task.
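
The last two modules can be illustrated with a small NumPy sketch: attention-style scores give vertex-to-hyperedge connection probabilities, a threshold turns them into an incidence matrix, and one hypergraph convolution propagates features through it. This is only a sketch under stated assumptions. The learnable arrays `w_query`, `edge_keys`, and `theta`, the sigmoid gating, the threshold `tau`, and the HGNN-style normalization with unit hyperedge weights are all hypothetical choices and are not taken from CD-DSHGCN itself.

```python
import numpy as np


def connection_probabilities(x, w_query, edge_keys):
    """Vertex-to-hyperedge connection probabilities averaged over heads.

    x:         (N, d) vertex features
    w_query:   (heads, d, d_k) vertex projections (hypothetical parameters)
    edge_keys: (heads, M, d_k) hyperedge keys (hypothetical parameters)
    """
    d_k = w_query.shape[-1]
    queries = np.einsum("nd,hdk->hnk", x, w_query)          # (heads, N, d_k)
    scores = np.einsum("hnk,hmk->hnm", queries, edge_keys)  # (heads, N, M)
    probs = 1.0 / (1.0 + np.exp(-scores / np.sqrt(d_k)))    # sigmoid per head
    return probs.mean(axis=0)                               # (N, M)


def threshold_incidence(probs, tau=0.5):
    """Keep a vertex in a hyperedge when its probability exceeds tau."""
    return (probs > tau).astype(np.float64)


def hypergraph_conv(x, incidence, theta):
    """One HGNN-style layer: ReLU(Dv^-1/2 H De^-1 H^T Dv^-1/2 X Theta)."""
    dv = incidence.sum(axis=1)   # vertex degrees, shape (N,)
    de = incidence.sum(axis=0)   # hyperedge degrees, shape (M,)
    # Isolated vertices and empty hyperedges get a zero scaling factor.
    dv_inv_sqrt = np.where(dv > 0, 1.0 / np.sqrt(np.maximum(dv, 1e-12)), 0.0)
    de_inv = np.where(de > 0, 1.0 / np.maximum(de, 1e-12), 0.0)
    h_norm = dv_inv_sqrt[:, None] * incidence               # Dv^-1/2 H
    edge_feats = de_inv[:, None] * (h_norm.T @ x)           # vertices -> hyperedges
    out = h_norm @ edge_feats @ theta                       # hyperedges -> vertices
    return np.maximum(out, 0.0)


# Toy run: 6 superpixel vertices, 4 features, 5 candidate hyperedges, 2 heads.
rng = np.random.default_rng(0)
x = rng.normal(size=(6, 4))
w_query = rng.normal(size=(2, 4, 3))
edge_keys = rng.normal(size=(2, 5, 3))
theta = rng.normal(size=(4, 2))

probs = connection_probabilities(x, w_query, edge_keys)
incidence = threshold_incidence(probs, tau=0.5)
out = hypergraph_conv(x, incidence, theta)
```

In a trainable version, the incidence matrix would be rebuilt from the current parameters at each layer or step, which is what makes the hypergraph structure dynamic rather than fixed in advance.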

3.1. Vertex Aggregation Based on Superpixel Segmentation

Using image pixels as hypergraph vertices is the most direct way to construct a graph, but it scales poorly. When remote sensing images contain many pixels, a graph with pixels as vertices becomes excessively large, increasing the time and space complexity of the subsequent hypergraph convolution operations. This severely limits the application of hypergraph neural network models to large-scale datasets. To address this issue, researchers have proposed constructing graph structures with superpixels as vertices.

Superpixels typically refer to irregular pixel blocks composed of adjacent pixels with similar features such as texture, color, and brightness. Superpixels in remote sensing images can often be obtained through image segmentation algorithms. In remote sensing images, the number of superpixels is usually much smaller than the number of pixels. Therefore, constructing a hypergraph with superpixels as vertices can reasonably limit the size of the hypergraph and effectively improve the efficiency of hypergraph neural networks. Additionally, another advantage of using superpixels to construct hypergraph structures is that the segmented superpixels can well preserve the local structural information of remote sensing images. For high-dimensional data such as hyperspectral imagery, which may have hundreds of bands and a large volume of data, it is necessary to improve efficiency by preprocessing, which includes steps such as histogram matching and data dimension reduction.

For the two given remote sensing images, a difference map is constructed by normalizing the per-pixel Euclidean distance between them.
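
One possible reading of this step is sketched below: the Euclidean distance is taken across spectral bands at each pixel and then rescaled to [0, 1]. The min-max rescaling and the function name `difference_map` are assumptions, since the text only states that the distance is normalized.

```python
import numpy as np


def difference_map(img_t1, img_t2):
    """Per-pixel Euclidean distance across bands, min-max scaled to [0, 1]."""
    a = np.asarray(img_t1, dtype=np.float64)
    b = np.asarray(img_t2, dtype=np.float64)
    if a.shape != b.shape:
        raise ValueError("images must be co-registered and share a shape")
    if a.ndim == 2:  # single-band image: add a band axis
        a, b = a[..., None], b[..., None]
    dist = np.sqrt(((a - b) ** 2).sum(axis=-1))
    span = dist.max() - dist.min()
    if span == 0:    # identical images: no change anywhere
        return np.zeros_like(dist)
    return (dist - dist.min()) / span


# Toy example: a 2 x 2 image with 3 bands where one pixel changes.
t1 = np.zeros((2, 2, 3))
t2 = np.zeros((2, 2, 3))
t2[0, 1] = [3.0, 4.0, 0.0]   # Euclidean distance 5 at this pixel
dmap = difference_map(t1, t2)
```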

Subsequently, the difference map is segmented using SLIC [7] into a set of unstructured multi-scale superpixels with diverse shapes and boundaries; the size of this set is the number of superpixels.

The original SLIC (Simple Linear Iterative Clustering) method is designed for grayscale images and three-channel RGB color images, and it requires converting RGB images into the CIELAB color space. For high-dimensional data such as hyperspectral imagery, the information carried by the spectral dimension differs from that of the CIELAB space, so the superpixel segmentation step can use the improved SLIC-H method instead of the SLIC used for ordinary images.

SLIC-H first determines the number of superpixels, which is set by the superpixel coefficient K, the only hyperparameter that needs to be chosen. It then computes the coordinates of the center point of each superpixel and initializes the spectral value of the center with that of the pixel closest to the center coordinate. These initial centers are distributed uniformly over the hyperspectral image according to K, but a uniform layout is not necessarily the best starting point for clustering. Therefore, a new cluster center is sought within the neighborhood of each initial center: the gradient of every pixel in the neighborhood is computed over all bands, and the pixel with the smallest gradient becomes the new cluster center. This secondary search keeps cluster centers away from boundaries with large gradients, which would otherwise make the sizes of the superpixels produced by the subsequent clustering differ too much.

Once the centers are determined, a search range is set around each superpixel center and labels are assigned to the pixels within it. Within this range, SLIC-H computes the spectral and spatial distances from each pixel to the center, and the distance measure is a weighted sum of the two. The cluster centers and pixel labels are updated over multiple iterations to obtain the final superpixel map.
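The two SLIC-H ingredients described above, the gradient-based relocation of a seed and the weighted spectral-spatial distance, can be sketched as follows. This is a minimal illustration rather than the reference SLIC-H implementation: the function names, the finite-difference gradient, the neighborhood `radius`, the `weight` parameter, and the normalization of the spatial distance by the grid step are all assumptions made for the example.

```python
import numpy as np

def relocate_center(cube, row, col, radius=1):
    """Move a seed to the lowest-gradient pixel in its neighbourhood.

    cube: (rows, cols, bands) image; the gradient is accumulated over all bands.
    """
    grad_r, grad_c = np.gradient(cube.astype(float), axis=(0, 1))
    grad = (grad_r ** 2 + grad_c ** 2).sum(axis=2)
    n_rows, n_cols = grad.shape
    r0, r1 = max(row - radius, 0), min(row + radius + 1, n_rows)
    c0, c1 = max(col - radius, 0), min(col + radius + 1, n_cols)
    window = grad[r0:r1, c0:c1]
    dr, dc = np.unravel_index(np.argmin(window), window.shape)
    return int(r0 + dr), int(c0 + dc)

def slic_h_distance(pixel_spec, pixel_rc, center_spec, center_rc, step, weight=0.5):
    """Weighted sum of spectral and spatial distance (weighting scheme assumed)."""
    d_spec = np.linalg.norm(np.asarray(pixel_spec, float) - np.asarray(center_spec, float))
    d_xy = np.linalg.norm(np.asarray(pixel_rc, float) - np.asarray(center_rc, float))
    # The spatial term is divided by the grid step so both terms have comparable scale.
    return (1.0 - weight) * d_spec + weight * d_xy / step

# Toy cube with a vertical edge between columns 2 and 3.
cube = np.zeros((5, 5, 3))
cube[:, 3:, :] = 10.0
new_center = relocate_center(cube, 2, 2)  # the seed moves off the edge
```

In a full pipeline, each pixel inside a center's search range would be assigned to the center with the smallest distance, and the centers would be recomputed after every pass.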

To make the most of the original information in the dual-temporal images and ensure the correspondence of the segmented areas, we first use the segmentation results of the difference map to simultaneously segment both images, obtaining and . Subsequently, we represent the superpixel information in each image by statistically describing some significant features of the areas covered by the superpixels.

where represents the concatenation and aggregation at the feature level, where , , , , , and represent the maximum value, minimum value, mean value, kurtosis, standard deviation, and skewness of the pixels within the superpixel coverage area, respectively. By concatenating the features of the superpixels at corresponding positions in the dual-temporal images, we obtain the vertex features in the superpixel hypergraph.
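As an illustration of the vertex feature construction, the sketch below computes the six statistics listed above for every superpixel and concatenates the results from the two dates. It assumes a shared label map for both images; the function names, the per-band layout, and the use of non-excess kurtosis are choices made for this example, not details confirmed by the text.

```python
import numpy as np

def superpixel_stats(image, labels):
    """Per-superpixel [max, min, mean, kurtosis, std, skewness] for every band."""
    img = image.reshape(image.shape[0], image.shape[1], -1).astype(float)
    feats = []
    for sp in np.unique(labels):
        px = img[labels == sp]                  # (n_pixels, n_bands)
        mean = px.mean(axis=0)
        std = px.std(axis=0)
        safe_std = np.where(std > 0, std, 1.0)  # constant regions give zero moments
        z = (px - mean) / safe_std
        skew = (z ** 3).mean(axis=0)
        kurt = (z ** 4).mean(axis=0)            # non-excess kurtosis (assumption)
        feats.append(np.concatenate(
            [px.max(axis=0), px.min(axis=0), mean, kurt, std, skew]))
    return np.stack(feats)

def vertex_features(img_t1, img_t2, labels):
    """Concatenate the statistics of corresponding superpixels in both images."""
    return np.concatenate(
        [superpixel_stats(img_t1, labels), superpixel_stats(img_t2, labels)], axis=1)

labels = np.array([[0, 0], [1, 1]])
img_t1 = np.array([[1.0, 3.0], [2.0, 2.0]])
img_t2 = np.array([[0.0, 0.0], [5.0, 7.0]])
X = vertex_features(img_t1, img_t2, labels)     # shape (2, 12)
```

Each row of `X` describes one superpixel vertex, with the first half of the columns taken from the first date and the second half from the second date.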

For high-dimensional data such as hyperspectral images, the average spectral features of all pixels within a superpixel are used as the features of that superpixel. Using superpixels as hypergraph nodes retains the local structural information of the hyperspectral image, which enhances the model’s representation ability. However, when the segmentation results contain many errors, a hypergraph built with superpixels as units may not accurately depict the associations between objects in the image. Such errors are most common near the boundaries between image categories, where the spectral features of pixels fluctuate strongly; they reduce the final accuracy to some extent and may require soft segmentation techniques for adjustment.

3.2. Dynamic Hypergraph Structure Generation Module

A hypergraph is a common tool for modeling correlations between data. Traditional hypergraph neural networks are built on static hypergraph structures. When the data itself has no explicit hypergraph structure, one must be constructed from vertex attributes, features, and other information, typically through the k-nearest neighbors (KNN) rule, and the resulting static structure is then introduced into the hypergraph neural network. Most current hypergraph algorithms use the KNN rule to establish hyperedges, forming a hyperedge from a central pixel and the K pixels closest to it in spatial distance [48]. Hyperedges express the high-order correlations between vertices, and their structure strongly affects both the description of these correlations and the performance of the hypergraph. However, the KNN rule has deficiencies when constructing hyperedges [49]. When K is small, the resulting hypergraph can only capture local high-order correlations. Conversely, when K is large, it is prone to data redundancy, which greatly increases the computational load of the algorithm. Because the construction of the hypergraph structure directly affects the performance of the hypergraph neural network, detecting changes between bi-temporal images requires a hypergraph structure that is constructed accurately and models high-order correlations well. We therefore propose a hypergraph structure generation module. This module constructs a dynamically updatable hypergraph structure based on the multi-head self-attention mechanism, adaptively optimizing the hypergraph structure.
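For reference, the static KNN construction discussed above can be sketched in a few lines. This is an illustrative baseline, not the proposed module: the function name `knn_incidence` is hypothetical, and Euclidean distance on whatever is passed as `points` (spatial coordinates or features) is an assumption.

```python
import numpy as np

def knn_incidence(points, k):
    """Static KNN hypergraph: hyperedge j joins vertex j and its k nearest neighbours.

    Returns an (n_vertices, n_vertices) incidence matrix with one hyperedge per vertex.
    """
    diff = points[:, None, :] - points[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))
    # Each vertex has distance 0 to itself, so it is included among the k + 1 nearest.
    nearest = np.argsort(dist, axis=1, kind="stable")[:, :k + 1]
    n = points.shape[0]
    H = np.zeros((n, n))
    for edge, members in enumerate(nearest):
        H[members, edge] = 1.0
    return H

points = np.array([[0.0], [1.0], [10.0], [11.0]])
H_knn = knn_incidence(points, k=1)
```

The trade-off described in the text is visible in the single parameter `k`: every hyperedge has exactly `k + 1` members regardless of the data, so a small `k` only links close neighbors while a large `k` adds many redundant memberships.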

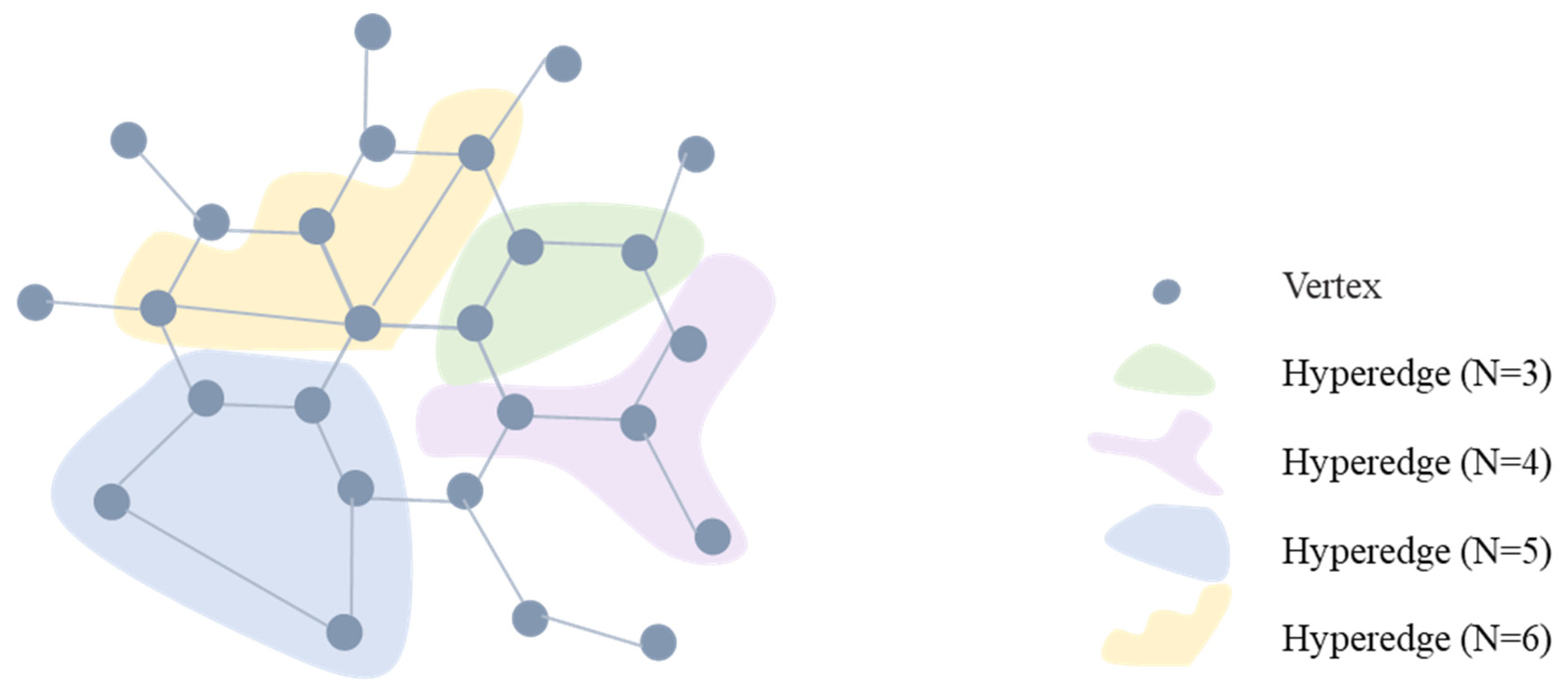

We first introduce the relevant concepts of hypergraphs, as illustrated in Figure 2.

Figure 2.

Hypergraph schematic diagram.

A hypergraph consists of a set of vertices and a set of hyperedges [50], and is conventionally defined as follows:

$$\mathcal{G} = (\mathcal{V}, \mathcal{E})$$

where $\mathcal{V}$ denotes the set of vertices, with the number of vertices in $\mathcal{V}$ represented as $N$. Each vertex possesses certain semantic information, and its initial feature matrix is the aforementioned superpixel feature, denoted as $\mathbf{X} = [x_1, \ldots, x_N]^{\top}$, where $x_i$ represents the feature of the $i$-th vertex.

$\mathcal{E}$ represents the set of hyperedges. Unlike the edges in a simple graph, which can only connect two vertices, each hyperedge can connect any number of vertices, and the number of hyperedges in $\mathcal{E}$ is denoted as $M$.

The hypergraph is represented by the incidence matrix $\mathbf{H}$ of size $N \times M$.

In the hypergraph, $h(v_i, e_j)$ denotes the association between the $i$-th vertex $v_i$ and the $j$-th hyperedge $e_j$. In the binary case, if $v_i$ is not a member of $e_j$, then $h(v_i, e_j)$ equals 0; otherwise, it equals 1. More generally, the values in the hypergraph incidence matrix are not limited to 0 or 1; they can be any real number within the interval [0, 1].
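A small worked example may help fix the definition. The sketch below builds a binary incidence matrix for a toy hypergraph with five vertices and three hyperedges and derives the vertex and hyperedge degrees from it. The variable names and the toy hyperedges are illustrative assumptions.

```python
import numpy as np

# Three hyperedges over five vertices; a hyperedge may join any number of vertices.
hyperedges = [{0, 1, 2}, {2, 3}, {1, 3, 4}]
n_vertices = 5

H = np.zeros((n_vertices, len(hyperedges)))
for j, edge in enumerate(hyperedges):
    H[sorted(edge), j] = 1.0          # entry (i, j) is 1 when vertex i belongs to hyperedge j

vertex_degree = H.sum(axis=1)         # number of hyperedges each vertex belongs to
edge_degree = H.sum(axis=0)           # number of vertices each hyperedge contains
```

Replacing the 0/1 entries with values in [0, 1] gives the soft incidence matrix mentioned above, in which each entry expresses how strongly a vertex is attached to a hyperedge.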

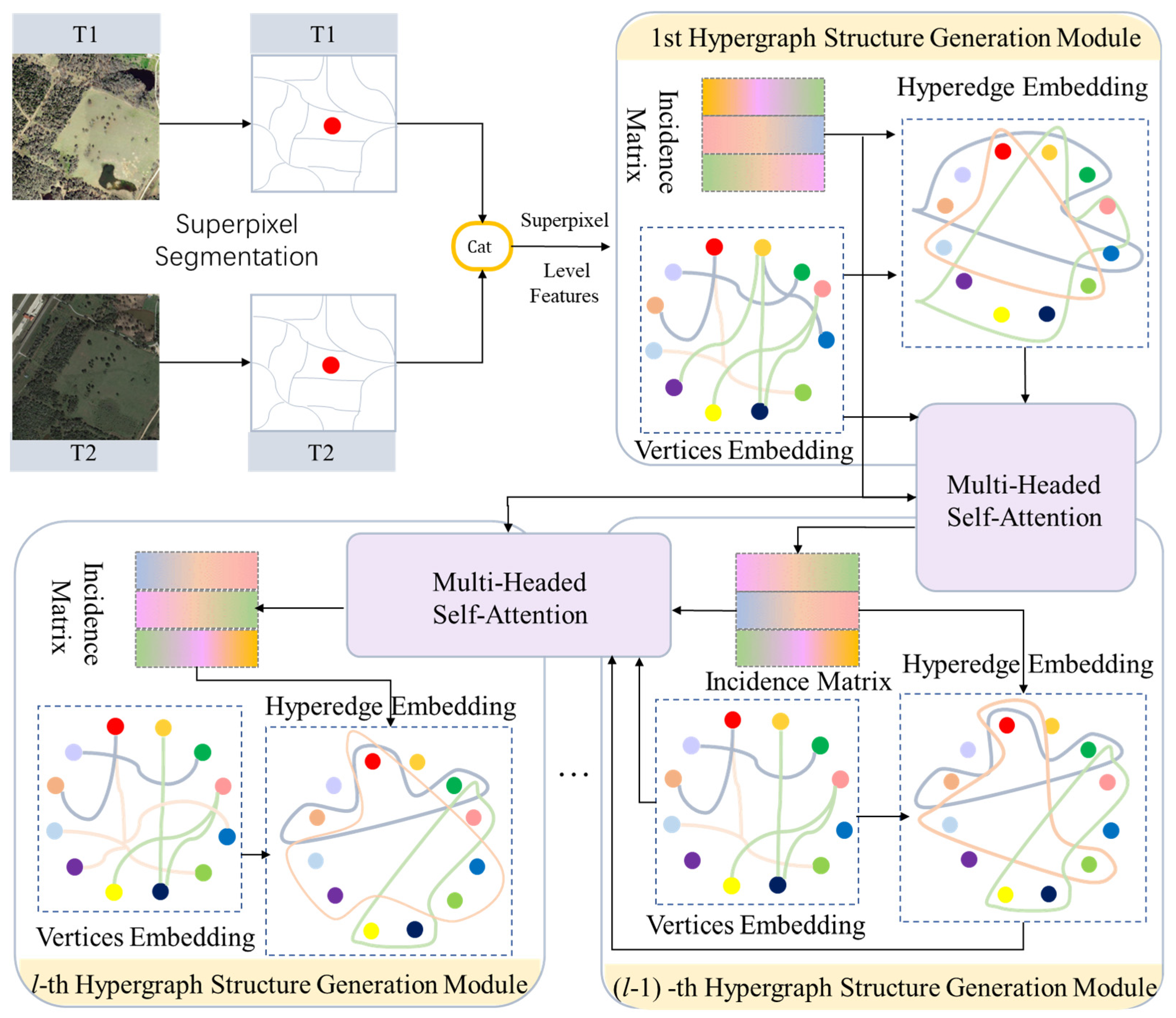

This module is implemented based on the multi-head self-attention mechanism, as shown in Figure 3.

Figure 3.

Schematic diagram of hypergraph structure generation module.

The introduction of the attention mechanism is due to the consideration that the information of a hyperedge includes the information of all the vertices it connects. Different vertices within a hyperedge have varying degrees of importance. Therefore, the representation of a hyperedge is set as the weighted aggregation of the representations of all the internal vertices, measuring the similarity of information between the vertices and hyperedges. This allows a hyperedge to focus on all the relevant vertices. Assuming the -th hyperedge contains vertices, where is the feature representation of the -th vertex in hyperedge , and is the degree of hyperedge , then the feature representation of hyperedge is denoted as

Then, the attention score between -th vertex and the -th hyperedge is denoted as

where denote the set of attention heads. Assuming the incidence matrix is sparse, set a threshold , and through operation , set all the elements less than to 0, thereby filtering out lower attention scores and preserving the connections between the vertices with high similarity and hyperedges. is utilized to adjust the proportion between the initial hypergraph structure and the newly generated one. Thereupon, based on the vertex features, hyperedge features, and the initial hypergraph structure, a new hypergraph structure is generated, achieving the dynamic updating of the hypergraph structure.
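The overall flow of the module (aggregate vertices into hyperedge features, score vertex-hyperedge pairs with several attention heads, threshold the scores, then blend with the initial structure) can be sketched as below. This is a simplified illustration under stated assumptions, not the authors' exact formulation: degree-normalized averaging for the hyperedge features, scaled dot-product attention with a softmax over hyperedges, averaging over heads, and the parameter names `threshold` and `mix` are all choices made for this example.

```python
import numpy as np

def generate_structure(X, H0, heads, threshold=0.2, mix=0.5):
    """Return an updated soft incidence matrix with values in [0, 1].

    X: (n_vertices, d) vertex features; H0: (n_vertices, n_edges) initial structure;
    heads: list of (Wq, Wk) projection pairs, one pair per attention head.
    """
    # Hyperedge features: degree-normalised aggregation of the member vertices.
    edge_degree = np.maximum(H0.sum(axis=0), 1e-12)
    E = (H0.T @ X) / edge_degree[:, None]
    scores = []
    for Wq, Wk in heads:
        q, k = X @ Wq, E @ Wk
        logits = q @ k.T / np.sqrt(q.shape[1])
        logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
        att = np.exp(logits)
        scores.append(att / att.sum(axis=1, keepdims=True))   # softmax over hyperedges
    A = np.mean(scores, axis=0)                # combine heads by averaging (assumption)
    A = np.where(A < threshold, 0.0, A)        # drop low attention scores
    return mix * H0 + (1.0 - mix) * A          # blend initial and generated structure

rng = np.random.default_rng(0)
X = rng.normal(size=(6, 4))
H0 = np.zeros((6, 3))
H0[[0, 1, 2], 0] = 1.0
H0[[2, 3], 1] = 1.0
H0[[3, 4, 5], 2] = 1.0
heads = [(rng.normal(size=(4, 2)), rng.normal(size=(4, 2))) for _ in range(2)]
H1 = generate_structure(X, H0, heads)
```

In a trainable model the projection matrices would be learnable parameters updated by backpropagation; here they are random so that the example runs on its own.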

3.3. Dynamic Hypergraph Convolutional Neural Networks

The algorithmic process of dynamic hypergraph neural networks is as follows:

Firstly, initialize the hypergraph structure generation module based on the multi-head self-attention mechanism and the learnable parameters within the hypergraph convolutional neural networks to generate the first layer of hypergraph structure and the dynamic hypergraph convolutional neural network model . The learnable parameters of the hypergraph structure generation module include the multi-head attention weights , and the learnable parameters of the dynamic hypergraph convolutional neural network model are denoted as and .

Then, after inputting the vertex feature representation into , the intermediate hidden layer vertex feature representation and the output prediction result are obtained. These are then fed back into the dynamic hypergraph structure generation module to dynamically update the hyperedges on the initial hypergraph, generating a new hypergraph . The dynamic hypergraph convolutional neural network model based on the new hypergraph is denoted as , and the features are re-entered to obtain new hidden layer representations and prediction results .

Subsequently, we continue to update the hypergraph through the aforementioned steps, iterating for steps.

The output of the node embedding representation at the -th layer is as follows:

where represents the feature of the -th layer, and is a trainable parameter of layer , which can be learned in the training phase. is an arbitrary non-linear activation function.
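A single layer of this kind can be sketched as follows, assuming the widely used normalized hypergraph convolution $\sigma(D_v^{-1/2} H W D_e^{-1} H^{\top} D_v^{-1/2} X \Theta)$ with ReLU as the activation. Whether the method uses exactly this normalization is an assumption; the function and argument names are illustrative.

```python
import numpy as np

def _inv_power(d, power):
    """Elementwise d ** power, leaving zero-degree entries at zero."""
    out = np.zeros_like(d, dtype=float)
    nonzero = d > 0
    out[nonzero] = d[nonzero] ** power
    return out

def hypergraph_conv(X, H, Theta, edge_weights=None):
    """One layer: ReLU(Dv^-1/2 H W De^-1 H^T Dv^-1/2 X Theta)."""
    w = np.ones(H.shape[1]) if edge_weights is None else np.asarray(edge_weights, float)
    dv_inv_sqrt = _inv_power(H @ w, -0.5)          # weighted vertex degrees
    de_inv = _inv_power(H.sum(axis=0), -1.0)       # hyperedge degrees
    left = dv_inv_sqrt[:, None] * H * (w * de_inv)[None, :]
    right = H.T * dv_inv_sqrt[None, :]
    return np.maximum(left @ right @ X @ Theta, 0.0)

# One hyperedge joining all three vertices: every vertex receives the edge average.
H = np.ones((3, 1))
X = np.array([[3.0], [6.0], [9.0]])
Theta = np.array([[1.0]])
out = hypergraph_conv(X, H, Theta)
```

Information flows from vertices to hyperedges through `H.T` and back to vertices through `H`, which is how vertices that share a hyperedge exchange features in a single layer.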

Subsequently, by connecting a fully connected layer for vertex classification, the predicted values are output, which allows us to determine the change status of each superpixel vertex.

Based on the superpixel vertex features, the generated hypergraph structure, and the predicted values, we calculate the smoothness constraint term and the prediction error term of the hypergraph structure. The sum of these two terms serves as the loss function . Specifically, the hypergraph structure smoothness constraint is used to regulate the generation of the hypergraph structure; reducing the value of this term can make the vertices with similar features closer in the hypergraph manifold space. The prediction error term, on the other hand, ensures that the predicted labels of the sample data are as close as possible to their initial values. Using the loss function value, the learnable parameters are computed and updated through backpropagation until the convergence criteria are met, resulting in a difference map classification model. This model more flexibly constructs high-order correlations between complex superpixels, effectively enhancing the accuracy of the difference map classification task.
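The two-term loss can be illustrated with the sketch below. It assumes that the smoothness constraint takes the common form $\mathrm{tr}(X^{\top} \Delta X)$ with the normalized hypergraph Laplacian $\Delta$, and that the prediction error is a cross-entropy over labeled vertices; the balancing factor `lam` and all function names are hypothetical additions for the example.

```python
import numpy as np

def hypergraph_laplacian(H):
    """Normalised Laplacian I - Dv^-1/2 H De^-1 H^T Dv^-1/2 (unit hyperedge weights)."""
    dv, de = H.sum(axis=1), H.sum(axis=0)
    dv_inv_sqrt = np.where(dv > 0, 1.0 / np.sqrt(np.maximum(dv, 1e-12)), 0.0)
    de_inv = np.where(de > 0, 1.0 / np.maximum(de, 1e-12), 0.0)
    theta = (dv_inv_sqrt[:, None] * H * de_inv[None, :]) @ (H.T * dv_inv_sqrt[None, :])
    return np.eye(H.shape[0]) - theta

def smoothness_term(X, H):
    """Small when vertices sharing hyperedges have similar features."""
    return float(np.trace(X.T @ hypergraph_laplacian(H) @ X))

def prediction_error(probs, labels, labeled_mask):
    """Mean cross-entropy over the labeled vertices only."""
    idx = np.flatnonzero(labeled_mask)
    p = np.clip(probs[idx, labels[idx]], 1e-12, 1.0)
    return float(-np.log(p).mean())

def total_loss(X, H, probs, labels, labeled_mask, lam=1.0):
    return lam * smoothness_term(X, H) + prediction_error(probs, labels, labeled_mask)

H = np.ones((3, 1))                                   # one hyperedge over three vertices
X_rough = np.array([[0.0], [0.0], [3.0]])
X_flat = np.array([[2.0], [2.0], [2.0]])
probs = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
labels = np.array([0, 0, 1])
mask = np.array([True, False, True])
loss_rough = total_loss(X_rough, H, probs, labels, mask)
loss_flat = total_loss(X_flat, H, probs, labels, mask)
```

With perfect predictions the cross-entropy vanishes, so the difference between the two losses comes entirely from the smoothness term, which penalizes feature variation inside the hyperedge.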

4. Experiments

4.1. Dataset

(1) LEVIR-CD+ [34]: The dataset is a large-scale remote sensing binary change detection dataset. It consists of 985 pairs of high-resolution images with a spatial resolution of 0.5 m/pixel, each with dimensions of 1024 × 1024 pixels. Spanning time intervals of 5 to 14 years, these bi-temporal images capture significant changes in building structures. The dataset covers various types of buildings, including villa residences, high-rise apartments, small garages, and large warehouses, with a focus on the emergence of new buildings and the decline of existing structures. It contains 31,333 instances of building changes, and remote sensing image interpretation experts have annotated the bi-temporal images with binary labels (1 for change, 0 for no change), making the dataset a valuable benchmark for evaluating change detection methods.

(2) SYSU-CD [51]: The dataset is an indeterminate category change detection dataset, consisting of 20,000 pairs of 256 × 256 0.5 m/pixel aerial images captured in Hong Kong from 2007 to 2014. It focuses on urban and coastal changes, including the development of high-rise buildings and infrastructure, such as urban construction, suburban expansion, groundwork for construction, vegetation changes, road expansion, and marine construction. For effective deep learning applications, the dataset is structured into training, validation, and testing sets in a 6:2:2 ratio.

(3) WHU-CD [52]: The dataset is a collection of large-scale aerial imagery with a spatial resolution of 0.3 m/pixel and dimensions of 32,508 × 15,354 pixels. It includes two sets of aerial images captured in Christchurch, New Zealand, in April 2012 and April 2016. The images taken in 2012 cover an area of 20.5 square kilometers with 12,796 buildings, while the images captured in 2016 show an increase to 16,077 buildings in the same area, reflecting significant urban development over the four years. This makes the dataset particularly relevant for detecting changes in large and sparse building structures. In addition to the change detection labels, the dataset also includes semantic segmentation labels corresponding to the two temporal images, which can be used for a variety of remote sensing image interpretation tasks.

The information on these three datasets is summarized in Table 1.

Table 1.

The three benchmark datasets used for experiments.

4.2. Experimental Setup

Implementation Details: The proposed architecture is implemented in PyTorch. Data augmentation is performed through random rotation, horizontal flipping, and vertical flipping. We train the network with the Adam optimizer, tuning the hyperparameters through multiple experiments guided by existing theory and empirical findings, with a learning rate of 1 × 10⁻⁴ and a batch size of 16. Training runs for at most 10,000 iterations with an early stopping strategy: if the validation accuracy does not improve for 500 consecutive iterations, training is terminated early. All the experiments are independently and randomly repeated 10 times, and the average performance is recorded.
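The early stopping rule described above can be expressed as a small helper. This is a generic sketch rather than the authors' training code: the class name, the strict-improvement criterion, and the toy accuracy sequence are assumptions, and a small patience is used only to keep the example short.

```python
class EarlyStopping:
    """Stop when validation accuracy has not improved for `patience` checks."""

    def __init__(self, patience=500):
        self.patience = patience
        self.best = float("-inf")
        self.stale = 0

    def step(self, val_accuracy):
        """Record one validation result; return True when training should stop."""
        if val_accuracy > self.best:
            self.best = val_accuracy
            self.stale = 0
        else:
            self.stale += 1
        return self.stale >= self.patience

# Toy run: the accuracy peaks at 0.6 and then stalls for three checks.
stopper = EarlyStopping(patience=3)
history = [0.5, 0.6, 0.6, 0.55, 0.58, 0.7]
stopped_at = None
for iteration, acc in enumerate(history):
    if stopper.step(acc):
        stopped_at = iteration
        break
```

With the settings reported in the text, the helper would be created with `patience=500` and queried once per iteration inside a loop of at most 10,000 iterations.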

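Evaluation Metrics: For a comprehensive and balanced assessment of the model’s performance, we employed the following metrics: Precision, Recall, Intersection over Union (IoU) for the category of change, overall accuracy (OA), and the F1-score. It is important to note that the performance of the algorithm is considered better the closer these metrics are to 1. The specific calculation methods for these metrics are detailed as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN}, \qquad \mathrm{OA} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TP, TN, FP, and FN represent the number of true positives, true negatives, false positives, and false negatives, respectively.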
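For concreteness, the five metrics can be computed from the confusion counts as in the sketch below, which uses the standard definitions for binary change detection with "change" as the positive class. The function name and the zero-division guards are additions for this example.

```python
def change_metrics(tp, tn, fp, fn):
    """Precision, Recall, F1, IoU (change class) and OA from confusion counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    oa = (tp + tn) / (tp + tn + fp + fn)
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou, "oa": oa}

# Toy counts: 8 changed units found, 2 false alarms, 10 missed, 80 correct negatives.
scores = change_metrics(tp=8, tn=80, fp=2, fn=10)
```

The toy counts show why OA alone can be misleading for change detection: unchanged units dominate, so OA stays high even when more than half of the changed units are missed, which is reflected in the much lower Recall and IoU.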

4.3. Comparison Methods

To demonstrate the advantages of our hypergraph-based architecture in the change detection (CD) task, we have selected a number of pioneering, influential, state-of-the-art methods for comparison, mainly including:

- CNN-based methods: FC-EF [18], FC-Siam-Diff [18], FC-Siam-Conc [18], DTCDSCN [53], IFNet [54], DMINet [55], SEIFNet [56], SNUNet [57], and HANet [58]. CNN-based change detection methods are effective in automatically extracting hierarchical features and have been widely applied in the field, but their limited receptive field may restrict their ability to capture broader spatial contexts.

- Transformer-based methods: BIT [19], ChangeFormer [20], STANet [34], SwinSUNet [59] and AERNet [60]. Transformer-based methods, with their stacked self-attention modules, excel at modeling long-range dependencies and global relationships within images, although they come with a high computational cost due to their quadratic complexity with respect to image size.

- GNN-based methods: CSAGC [61], CGMMA [62], and GMTS [63]. GNN-based change detection methods excel in capturing complex spatial relationships between pixels using graph neural networks, effectively handling multi-scale context information. These methods integrate graph convolutions and attention mechanisms to model long-range dependencies, enhancing feature extraction and improving the robustness and accuracy of change detection.

For the aforementioned methods, if the detection performance on the corresponding dataset has been reported in the original paper, we directly adopt the data from the original work. If not, we compare based on the most recent data provided in the selected papers.

4.4. Results Analysis and Benchmark Comparison

Table 2, Table 3 and Table 4 list the quantitative analysis results of the proposed hypergraph architecture and other change detection methods on three datasets. All the values are reported in percentage (%).

Table 2.

The average quantitative results of different CD methods on the LEVIR-CD+ dataset. For convenience: BEST, 2ND-BEST, and 3RD-BEST.

Table 3.

The average quantitative results of different CD methods on the SYSU-CD dataset. For convenience: BEST, 2ND-BEST, and 3RD-BEST.

Table 4.

The average quantitative results of different CD methods on the WHU-CD dataset. For convenience: BEST, 2ND-BEST, and 3RD-BEST.

The proposed method exhibits more balanced performance across these evaluation metrics. The results show that it outperforms both the CNN-based and the Transformer-based methods, demonstrating the potential of the hypergraph architecture in change detection tasks.

On the LEVIR-CD+ dataset, the proposed method achieves the highest Precision, Recall, F1 score, IoU, and OA, improving by 0.10%, 2.04%, 1.95%, 3.39%, and 0.19%, respectively, compared to the 2nd-best methods.

On the SYSU-CD dataset, the proposed method achieves the highest Precision, Recall, F1 score, IoU, and OA, improving by 1.33%, 0.76%, 1.03%, 1.5%, and 0.49%, respectively, compared to the 2nd-best methods.

On the WHU-CD dataset, the proposed method achieves the highest F1 score, IoU, and OA, improving by 0.16%, 0.27%, and 0.13%, respectively, compared to the 2nd-best methods.

In the quantitative comparison of the three datasets, our model shows impressive performance, with clear boundaries, high internal compactness, and fewer missed and false detections.

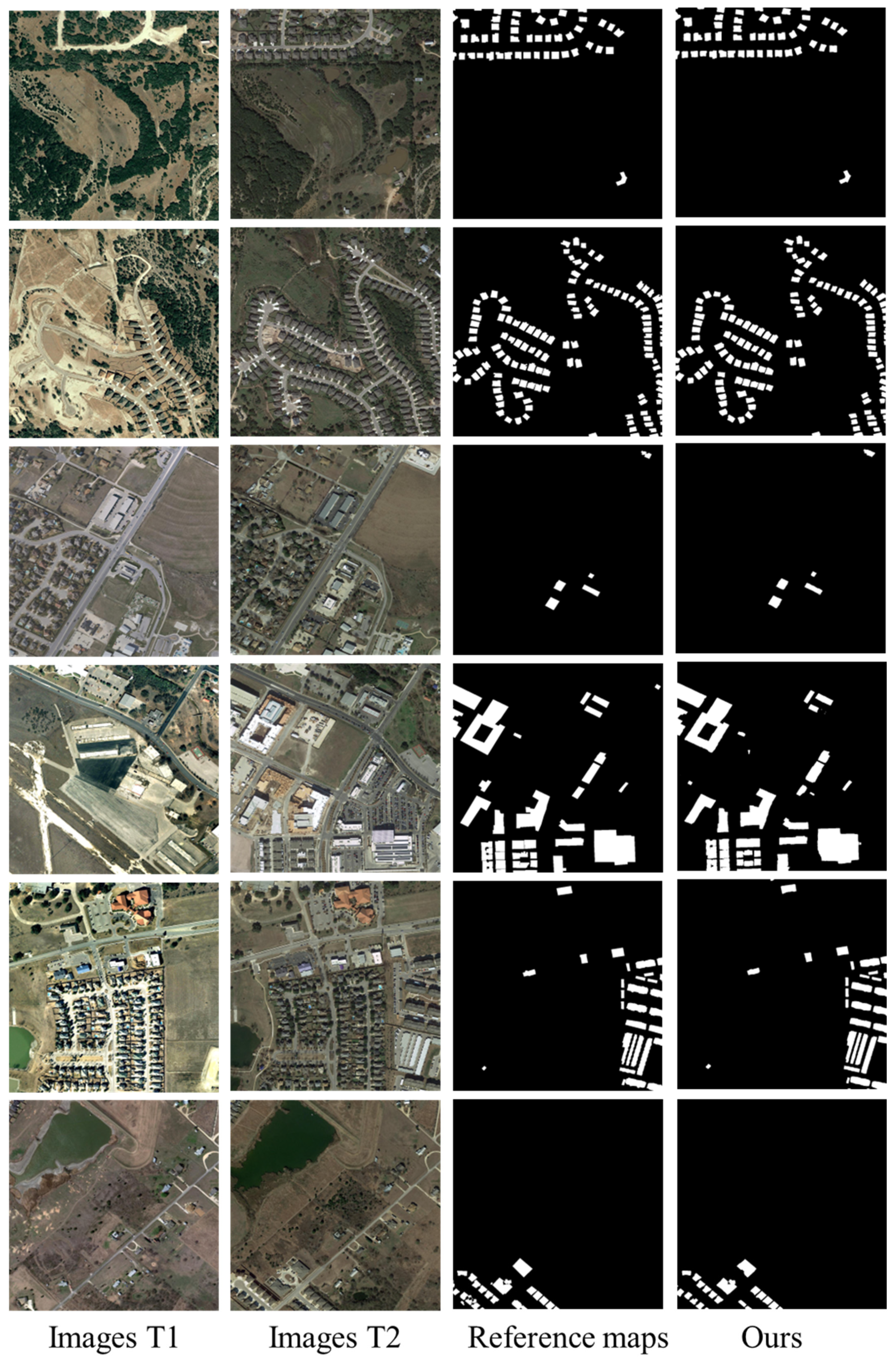

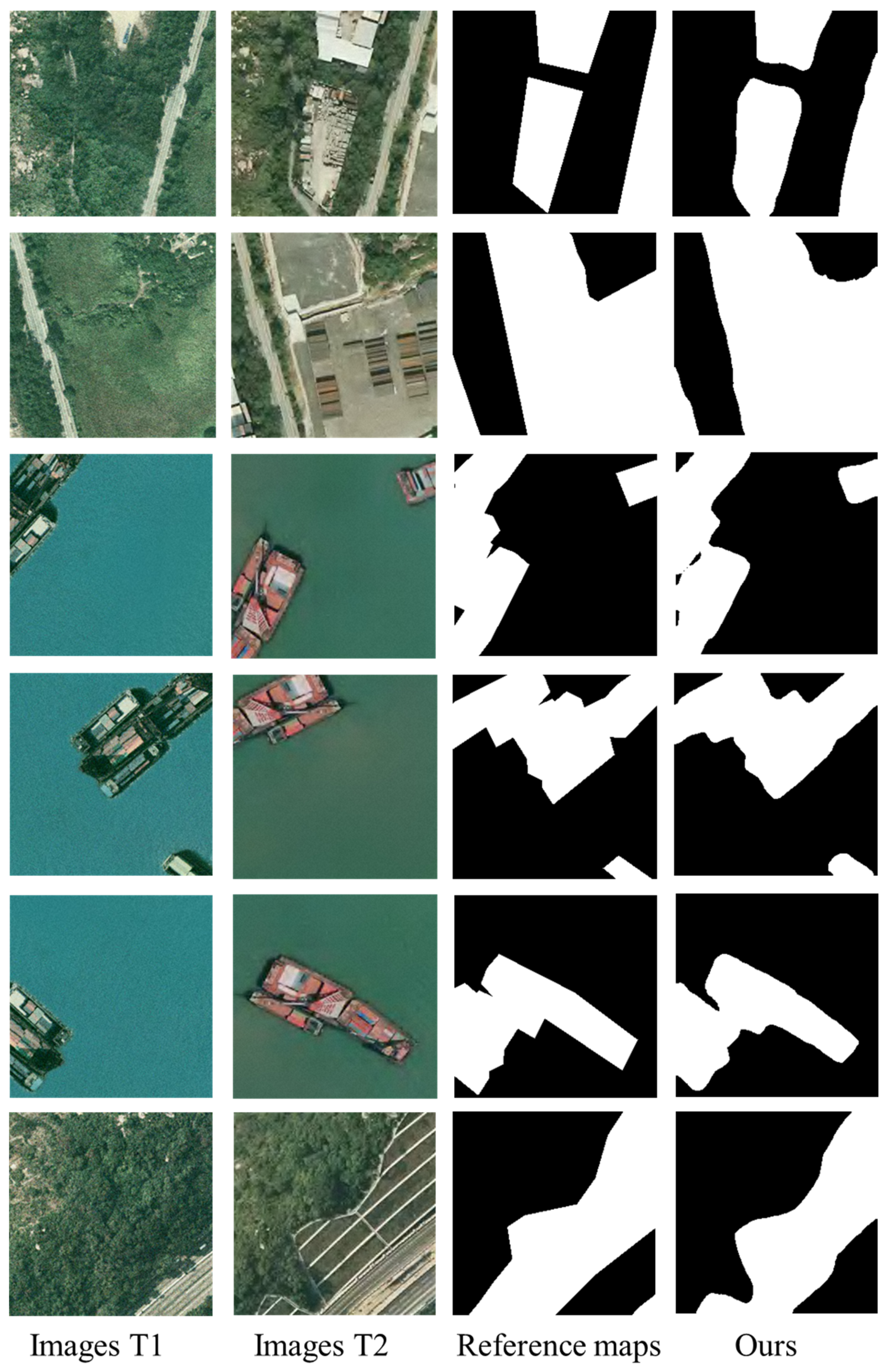

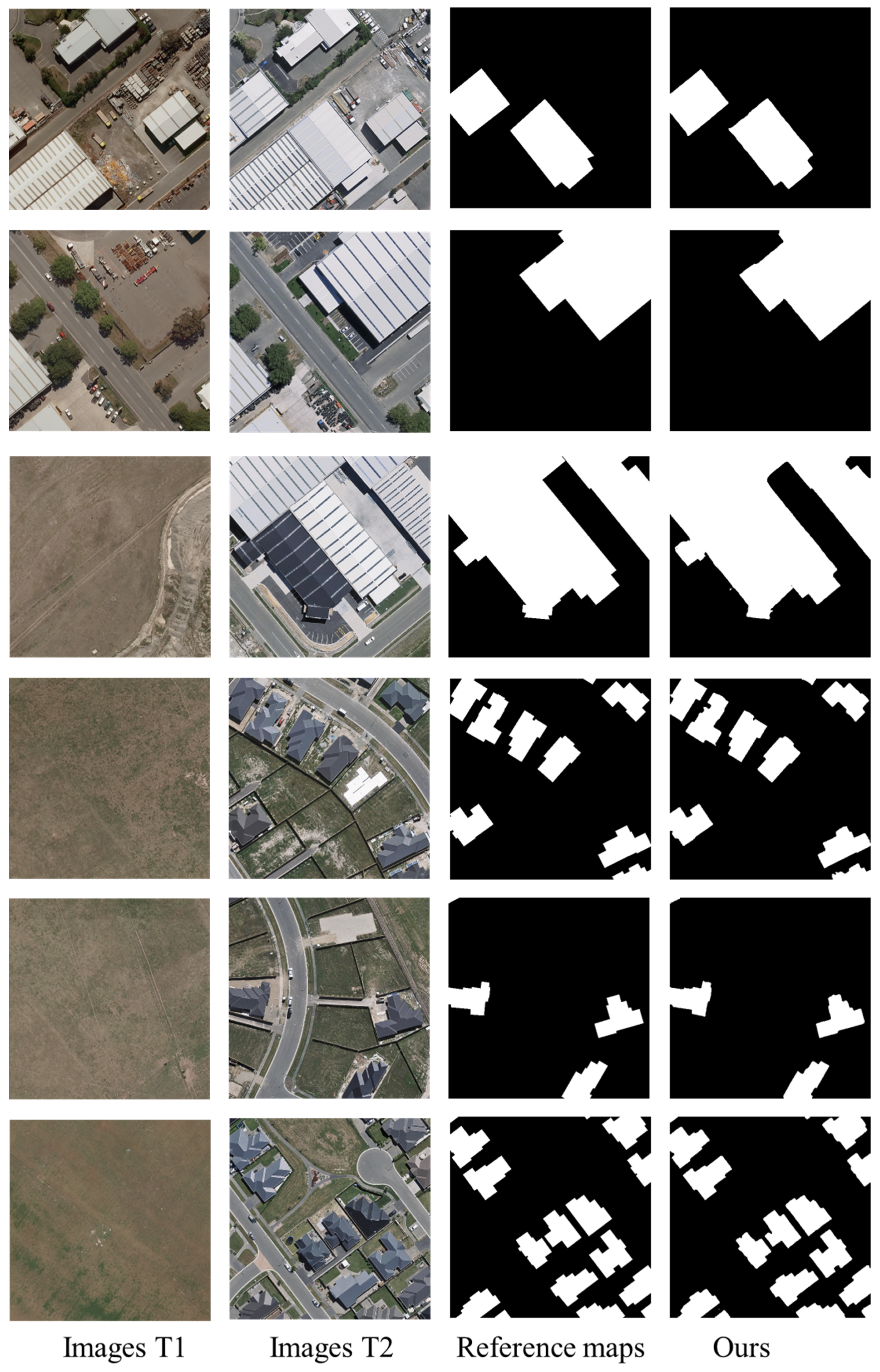

Then, Figure 4, Figure 5 and Figure 6 display the qualitative analysis results presented by our hypergraph architecture on the test sets of the three datasets.

Figure 4.

Change maps obtained by our method on the LEVIR-CD+ dataset.

Figure 5.

Change maps obtained by our method on the SYSU-CD dataset.

Figure 6.

Change maps obtained by our method on the WHU-CD dataset.

It can be seen that the proposed method accurately detects changes in these image pairs, which contain different types, proportions, and quantities of changes, and that the results are very close to the reference change maps. In remote sensing change detection tasks, the sizes of change objects can vary greatly because of the multi-scale nature of land cover features and the differing spatial resolutions of various sensors. The hypergraph structure, which learns global contextual information and models spatiotemporal relationships while extracting features, is therefore particularly important for detecting change objects of varying sizes. Compared with the existing representative methods, our proposed hypergraph-based modeling approach demonstrates superior performance in change detection tasks. It makes effective use of limited annotated data, thereby enhancing the algorithm’s robustness and generalization capability on complex data.

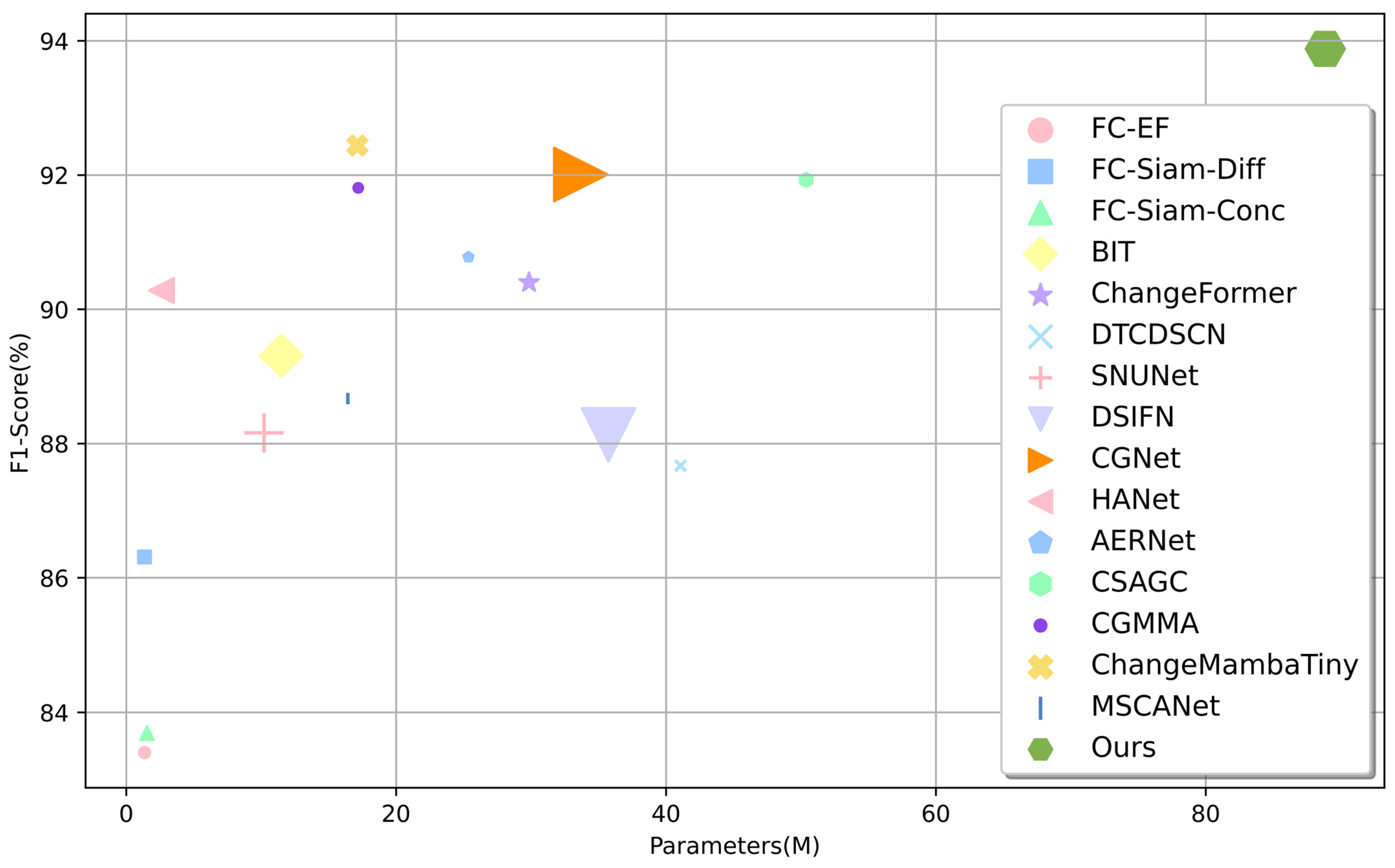

To evaluate the computational complexity of the proposed method, we compared its FLOPs (G) and parameters (M) with those of other methods such as ChangeMamba [64] and MSCANet [65], as shown in Figure 7. Different colored symbols represent different methods, with the size of the symbols indicating FLOPs. It can be observed that CNN-based methods have lower GFLOPs and fewer parameters, but a lower F1-score. Our method has the highest F1-score, but it requires more computational resources. Our future research will focus on designing a more efficient learning framework to achieve a balance between performance and computation.

Figure 7.

Comparison of different models in computational cost.

4.5. Parameter Analysis

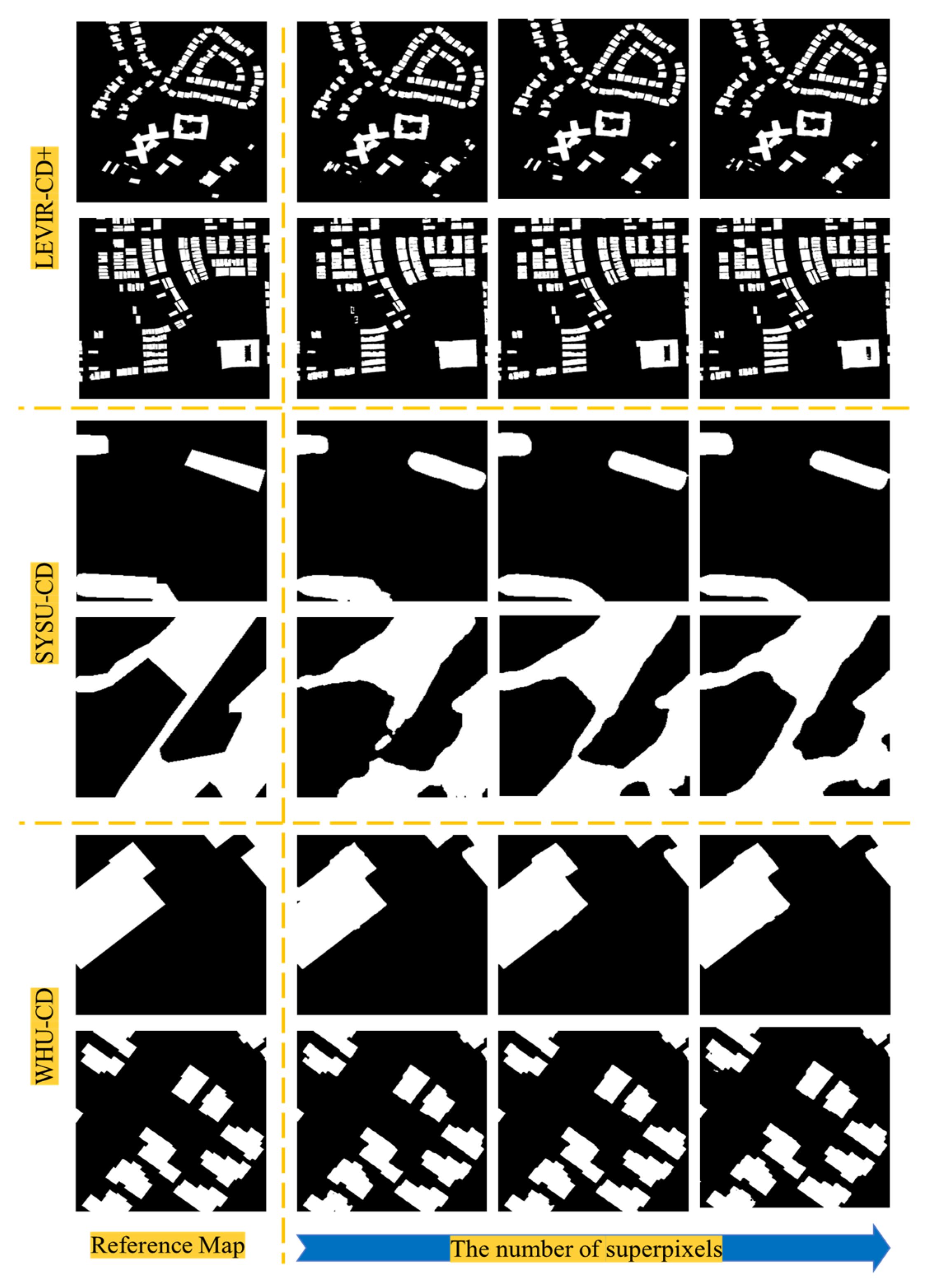

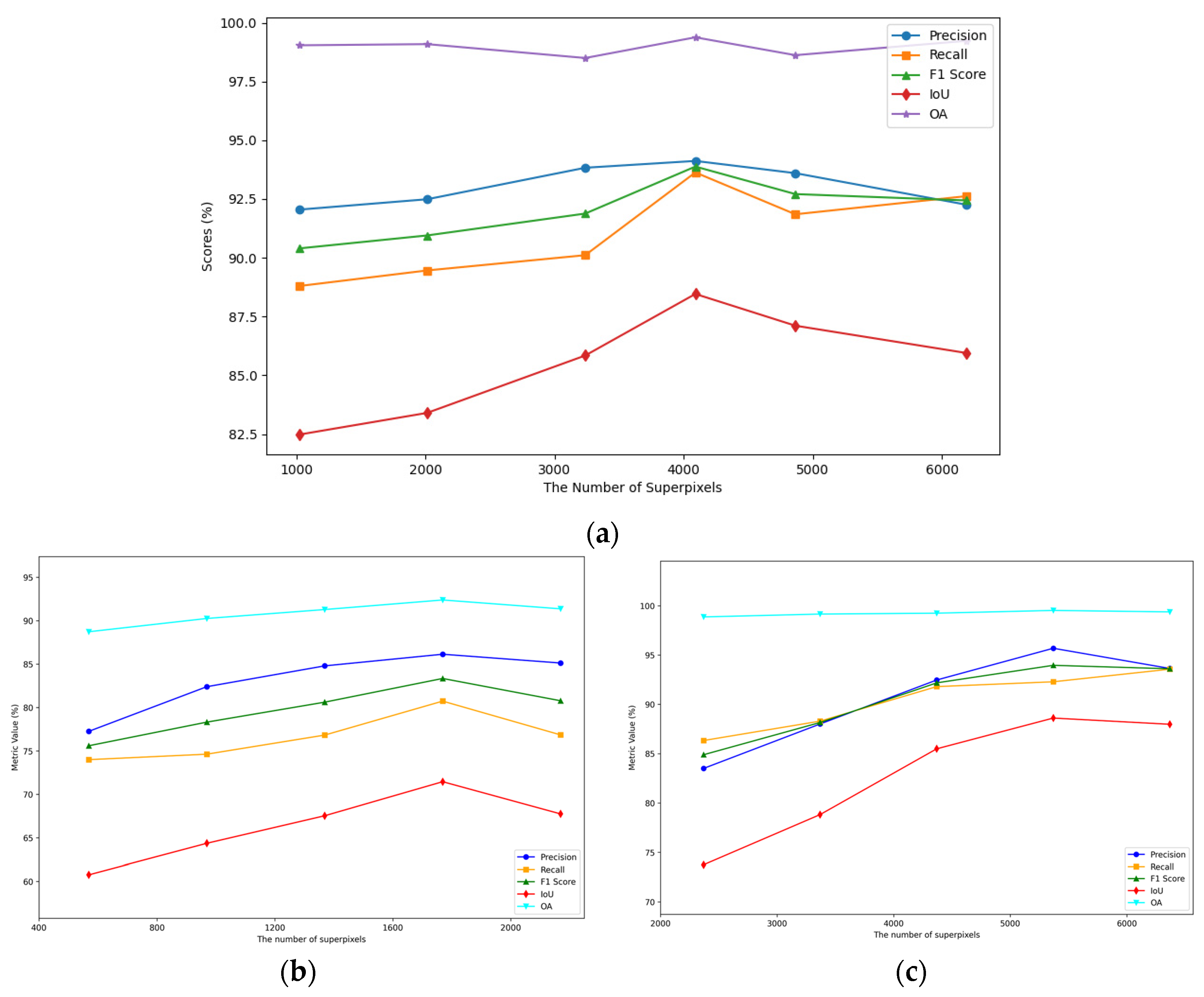

To analyze the impact of the number of superpixels on the results of the method, we first set different numbers of superpixels for the experimentation and selected results from the three datasets to be displayed in Figure 8.

Figure 8.

Qualitative comparison of our method with different superpixels on the three datasets.

It can be observed that the more superpixels there are, the smaller the segmentation scale, and the more refined the results become, but this can also easily introduce more isolated noise. Conversely, the fewer the number of superpixels, the larger the segmentation scale, and the coarser the results, but many subtle changes may be easily overlooked.

Figure 9 more intuitively demonstrates the relationship between the number of superpixels and detection accuracy. Specifically, at the beginning, all the metrics show an upward trend as the number of superpixels increases. However, if the number of superpixels exceeds a certain threshold, the accuracy will gradually decrease. Therefore, the performance of the proposed CD-DSHGCN will not continue to increase with the addition of more superpixels. Moreover, a larger number of superpixels leads to a larger hypergraph structure, which significantly increases the computational cost.

Figure 9.

Diagram of the relationship between the accuracy and the number of superpixels. (a) LEVIR-CD+. (b) SYSU-CD. (c) WHU-CD.

4.6. Ablation Study

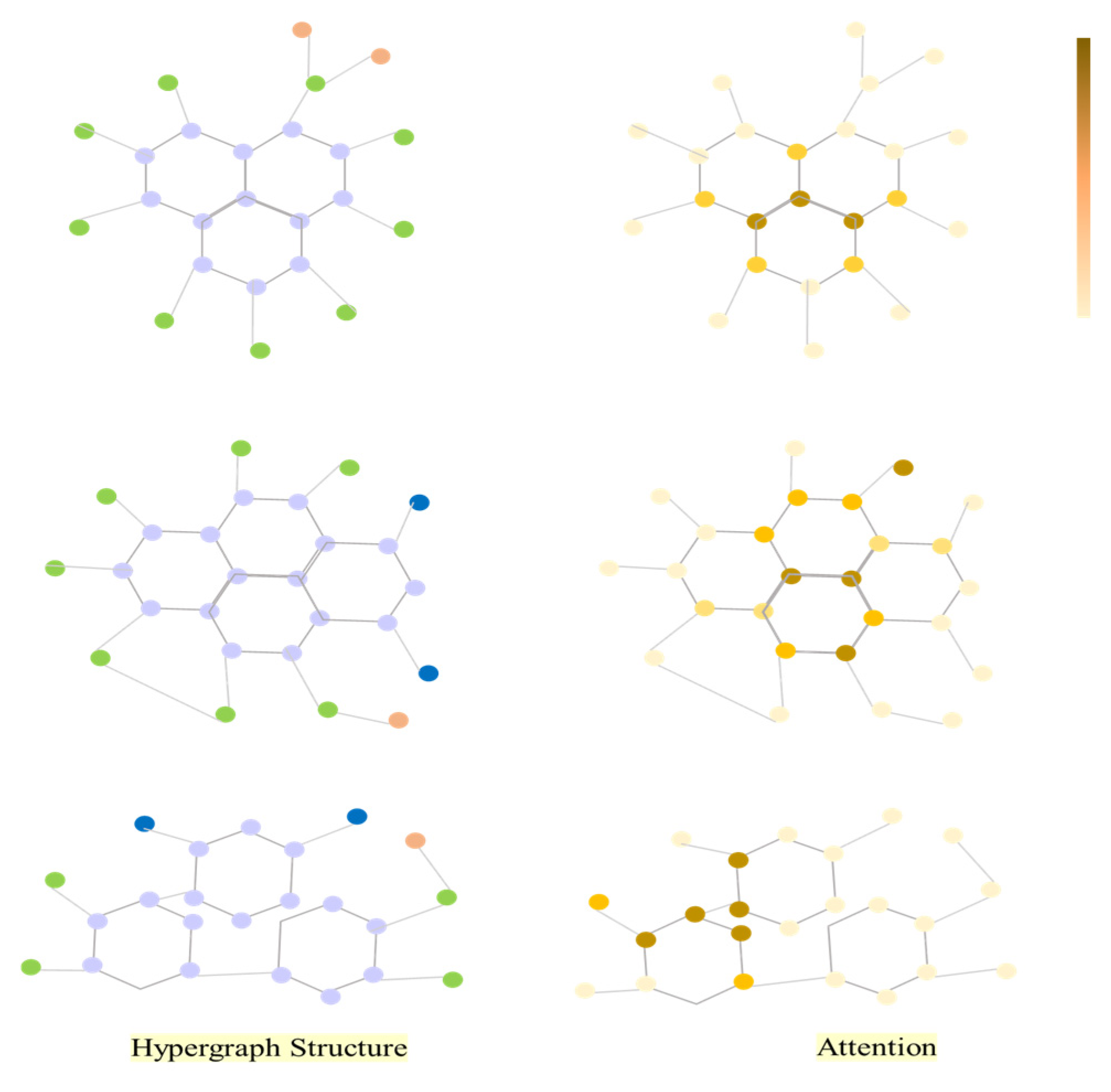

To better understand the impact of the hypergraph structure on the vertex features, we visualized some self-attention scores during the hypergraph structure evolution process, as shown in Figure 10.

Figure 10.

Attention visualization of the proposed hypergraph structure generation module.

The left side is the hypergraph structure, and the right side corresponds to the vertex weights. It can be observed that as the hypergraph structure changes, the importance of vertices also continuously changes. Such visualization indicates that our method can effectively integrate the structure and fully learn spatiotemporal features.

To explore the importance of each key component of the proposed model, ablation experiments are performed on the LEVIR-CD+ dataset and the WHU-CD dataset. The basic architecture, which contains none of the proposed modules, uses superpixels initialized on a regular grid together with graph convolutional networks (GCNs). Starting from this basic architecture, the proposed modules are introduced in different configurations and the resulting change detection accuracy is compared. The following four models are compared:

- Model1: The basic architecture without the three proposed modules;

- Model2: Replacing the regular grid in the basic architecture with vertex aggregation based on superpixel segmentation (VAS);

- Model3: Replacing the GCN with dynamic hypergraph convolutional neural networks (DHGCN);

- Model4: Based on Model3, embedding the hypergraph structure generation module (HSGM), which is the proposed method.

Table 5 presents the results of the ablation study. The VAS module can improve the accuracy of the basic architecture on two datasets. On the LEVIR-CD+ dataset, the F1-score, IoU, and OA increased by 3.66%, 5.91%, and 1.69%, respectively, while on the WHU-CD dataset, the F1-score, IoU, and OA increased by 2.07%, 3.3%, and 0.07%, respectively. It can be observed from the table that after replacing the GCNs with the dynamic hypergraph convolutional neural networks, the accuracy was improved to some extent. On the LEVIR-CD+ dataset, the F1-score, IoU, and OA increased by 0.54%, 0.15%, and 0.17%, respectively, while on the WHU-CD dataset, the F1-score, IoU, and OA increased by 1.09%, 1.85%, and 0.06%, respectively. However, there is still a certain gap compared to the final model that includes the HSGM. After incorporating the complete DHGCN and HSGM, the F1-score, IoU, and OA on the LEVIR-CD+ dataset increased by 3.06%, 5.27%, and 0.38%, respectively, and on the WHU-CD dataset, they increased by 3.26%, 5.63%, and 0.40%, respectively. This is attributed to the hypergraph structure, which, under the same number of vertices, has a larger search space, superior representation capability, and stronger ability to explore high-order correlations.

Table 5.

Ablation study of the model and a report of the F1/IoU/OA scores for each configuration on the LEVIR-CD+ and WHU-CD datasets.

5. Conclusions

In this paper, we explore the potential of the emerging hypergraph architecture for remote sensing image change detection tasks and propose a hypergraph-based model that achieves high-precision, efficient, and robust change detection. Combining the characteristics of the hypergraph architecture with those of change detection tasks, we propose a vertex aggregation mechanism based on superpixel segmentation to model unstructured image object data. Additionally, we construct a hypergraph structure based on the multi-head self-attention mechanism, which updates the structure through learnable parameters and fully learns spatiotemporal features. We also design a framework based on dynamic hypergraph convolutional neural networks that optimizes the learning of hypergraphs to model high-order correlations. The proposed hypergraph framework has shown very competitive, if not the best, performance on three remote sensing change detection datasets, verifying its effectiveness in improving the accuracy of change detection.

Author Contributions

Methodology, Z.C.; Formal analysis, Z.C.; Writing—original draft, Z.C.; Supervision, X.T.; Project administration, Y.Z.; Funding acquisition, Y.D. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Nos. NSFC 61925105, 62227801, 62322109, 62171257 and U22B2001), State Key Laboratory of Space Network and Communications, New Cornerstone Science Foundation through the XPLORER PRIZE, and the Tsinghua University (Department of Electronic Engineering)—Nantong Research Institute for Advanced Communication Technologies Joint Research Center for Space, Air, Ground and Sea Cooperative Communication Network Technology.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Lu, P.; Qin, Y.; Li, Z.; Mondini, A.C.; Casagli, N. Landslide mapping from multi-sensor data through improved change detection-based Markov random field. Remote Sens. Environ. 2019, 231, 111235. [Google Scholar] [CrossRef]

- Gärtner, P.; Förster, M.; Kurban, A.; Kleinschmit, B. Object based change detection of Central Asian Tugai vegetation with very high spatial resolution satellite imagery. Int. J. Appl. Earth Obs. Geoinf. 2014, 31, 110–121. [Google Scholar] [CrossRef]

- Lin, Y.; Zhang, L.; Wang, N. A New Time Series Change Detection Method for Landsat Land use and Land Cover Change. In Proceedings of the 2019 10th International Workshop on the Analysis of Multitemporal Remote Sensing Images (MultiTemp), Shanghai, China, 5–7 August 2019. [Google Scholar]

- Daudt, R.C.; Saux, B.L.; Boulch, A.; Gousseau, Y. Urban Change Detection for Multispectral Earth Observation Using Convolutional Neural Networks. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018. [Google Scholar]

- Baatz, M.; Schpe, A. An optimization approach for high quality multi-scale image segmentation. In Beiträge zum AGIT-Symposium; Available online: https://www.amazon.co.jp/-/en/Josef-Strobl/dp/387907349X (accessed on 3 September 2024).

- Chen, Q.; Li, L.; Xu, Q.; Yang, S.; Shi, X.; Liu, X. Multi-Feature Segmentation for High-Resolution Polarimetric SAR Data Based on Fractal Net Evolution Approach. Remote Sens. 2017, 9, 570. [Google Scholar] [CrossRef]

- Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC Superpixels Compared to State-of-the-Art Superpixel Methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282. [Google Scholar] [CrossRef]

- Tabib Mahmoudi, F.; Samadzadegan, F.; Reinartz, P. Context Aware Modification on the Object Based Image Analysis. J. Indian Soc. Remote Sens. 2015, 43, 709–717. [Google Scholar] [CrossRef][Green Version]

- Cai, S.; Liu, D. A Comparison of Object-Based and Contextual Pixel-Based Classifications Using High and Medium Spatial Resolution Images. Remote Sens. Lett. 2013, 4, 998–1007. [Google Scholar] [CrossRef]

- Zhang, P.; Lv, Z.; Shi, W. Object-Based Spatial Feature for Classification of very High Resolution Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2013, 10, 1572–1576. [Google Scholar] [CrossRef]

- Zhou, W.; Troy, A.; Grove, M. Object-based Land Cover Classification and Change Analysis in the Baltimore Metropolitan Area Using Multitemporal High Resolution Remote Sensing Data. Sensors 2008, 8, 1613–1636. [Google Scholar] [CrossRef]

- Lefebvre, A.; Corpetti, T.; Hubertmoy, L. Object-Oriented Approach and Texture Analysis for Change Detection in Very High Resolution Images. In Proceedings of the IEEE International Geoscience & Remote Sensing Symposium, Boston, MA, USA, 7–11 July 2008. [Google Scholar]

- Chant, T.D.; Kelly, M. Individual Object Change Detection for Monitoring the Impact of a Forest Pathogen on a Hardwood Forest. Photogramm. Eng. Remote Sens. 2009, 75, 1005–1013. [Google Scholar] [CrossRef]

- Stow, D. Geographic Object-based Image Change Analysis. In Handbook of Applied Spatial Analysis; Springer: Berlin/Heidelberg, Germany, 2010. [Google Scholar]

- Jun, H.; Shao-Hong, S. Land Use Change Detection Using High Spatial Resolution Remotely Sensed Image and GIS Data. J. Yangtze River Sci. Res. Inst. 2012, 29, 49–52. [Google Scholar]

- Zhang, P.L.; Ruan, B.L.; Chao, J. An Object-Based Basic Farmland Change Detection Using High Spatial Resolution Image and GIS Data of Land Use Planning. Key Eng. Mater. 2012, 500, 492–499. [Google Scholar] [CrossRef]

- Toure, S.; Stow, D.; Shih, H.C.; Coulter, L.; Weeks, J.; Engstrom, R.; Sandborn, A. An object-based temporal inversion approach to urban land use change analysis. Remote Sens. Lett. 2016, 7, 503–512. [Google Scholar] [CrossRef]

- Daudt, R.C.; Saux, B.L.; Boulch, A. Fully Convolutional Siamese Networks for Change Detection. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018. [Google Scholar]

- Chen, H.; Qi, Z.; Shi, Z. Remote Sensing Image Change Detection With Transformers. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5607514. [Google Scholar] [CrossRef]

- Bandara, W.G.C.; Patel, V.M. A Transformer-Based Siamese Network for Change Detection. In Proceedings of the IGARSS 2022-2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022. [Google Scholar]

- Shafique, A.; Cao, G.; Khan, Z.; Asad, M.; Aslam, M. Deep Learning-Based Change Detection in Remote Sensing Images: A Review. Remote Sens. 2022, 14, 871. [Google Scholar] [CrossRef]

- Saha, S.; Mou, L.; Zhu, X.X.; Bovolo, F.; Bruzzone, L. Semisupervised Change Detection Using Graph Convolutional Network. IEEE Geosci. Remote Sens. Lett. 2020, 18, 607–611. [Google Scholar] [CrossRef]

- Wu, J.; Li, B.; Qin, Y.; Ni, W.; Zhang, H.; Fu, R.; Sun, Y. A Multiscale Graph Convolutional Network for Change Detection in Homogeneous and Heterogeneous Remote Sensing Images. Int. J. Appl. Earth Obs. Geoinf. 2021, 105, 102615. [Google Scholar] [CrossRef]

- Tang, X.; Zhang, H.; Mou, L.; Liu, F.; Zhang, X.; Zhu, X.X.; Jiao, L. An unsupervised remote sensing change detection method based on multiscale graph convolutional network and metric learning. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5609715. [Google Scholar] [CrossRef]

- Zhao, X.; Li, S.; Geng, T.; Wang, X. GTransCD: Graph Transformer-Guided Multitemporal Information United Framework for Hyperspectral Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5500313. [Google Scholar] [CrossRef]

- Dong, W.; Yang, Y.; Qu, J.; Xiao, S.; Li, Y. Local Information-Enhanced Graph-Transformer for Hyperspectral Image Change Detection with Limited Training Samples. IEEE Trans. Geosci. Remote Sens. 2023, 61, 550814. [Google Scholar] [CrossRef]

- Zhou, Z.; Ma, L.; Fu, T.; Zhang, G.; Yao, M.; Li, M. Change Detection in Coral Reef Environment Using High-Resolution Images: Comparison of Object-Based and Pixel-Based Paradigms. ISPRS Int. J. Geo Inf. 2018, 7, 441. [Google Scholar] [CrossRef]

- Duro, D.C.; Franklin, S.E.; Dubé, M.G. A comparison of pixel-based and object-based image analysis with selected machine learning algorithms for the classification of agricultural landscapes using SPOT-5 HRG imagery. Remote Sens. Environ. 2012, 118, 259–272. [Google Scholar] [CrossRef]

- Chen, Q.; Chen, Y. Multi-Feature Object-Based Change Detection Using Self-Adaptive Weight Change Vector Analysis. Remote Sens. 2016, 8, 549.

- Desclée, B.; Bogaert, P.; Defourny, P. Forest change detection by statistical object-based method. Remote Sens. Environ. 2006, 102, 1–11.

- Wang, R.S.; Efford, N.D.; Roberts, S.A. An Object-based approach to integrate remotely sensed data within a GIS context for land use changes detection at urban-rural fringe areas. In Proceedings of the Aerospace Remote Sensing, London, UK, 22 December 1997.

- Jiang, H.; Hu, X.; Li, K.; Zhang, J.; Gong, J.; Zhang, M. PGA-SiamNet: Pyramid Feature-Based Attention-Guided Siamese Network for Remote Sensing Orthoimagery Building Change Detection. Remote Sens. 2020, 12, 484.

- Peng, D.; Zhang, Y.; Guan, H. End-to-End Change Detection for High Resolution Satellite Images Using Improved UNet++. Remote Sens. 2019, 11, 1382.

- Chen, H.; Shi, Z. A Spatial-Temporal Attention-Based Method and a New Dataset for Remote Sensing Image Change Detection. Remote Sens. 2020, 12, 1662.

- Jing, R.; Liu, S.; Gong, Z.; Wang, Z.; Guan, H.; Gautam, A.; Zhao, W. Object-based change detection for VHR remote sensing images based on a Trisiamese-LSTM. Int. J. Remote Sens. 2020, 41, 6209–6231.

- Ouyang, S.; Li, Y. Combining Deep Semantic Segmentation Network and Graph Convolutional Neural Network for Semantic Segmentation of Remote Sensing Imagery. Remote Sens. 2020, 13, 119.

- Chaudhuri, U.; Banerjee, B.; Bhattacharya, A. Siamese graph convolutional network for content based remote sensing image retrieval. Comput. Vis. Image Underst. 2019, 184, 22–30.

- Qin, A.; Shang, Z.; Tian, J.; Wang, Y.; Zhang, T.; Tang, Y.Y. Spectral–Spatial Graph Convolutional Networks for Semisupervised Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2018, 16, 241–245.

- Hong, D.; Gao, L.; Yao, J.; Zhang, B.; Plaza, A.; Chanussot, J. Graph Convolutional Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 5966–5978.

- Du, X.; Zheng, X.; Lu, X.; Doudkin, A.A. Multisource Remote Sensing Data Classification with Graph Fusion Network. IEEE Trans. Geosci. Remote Sens. 2021, 59, 10062–10072.

- Cai, W.; Wei, Z. Remote Sensing Image Classification Based on a Cross-Attention Mechanism and Graph Convolution. IEEE Geosci. Remote Sens. Lett. 2020, 19, 1–5.

- Wang, R.; Wang, L.; Wei, X.; Chen, J.W.; Jiao, L. Dynamic Graph-Level Neural Network for SAR Image Change Detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4501005.

- Wang, R.; Wang, L.; Dong, P.; Jiao, L.; Chen, J.W. Graph-level neural network for SAR image change detection. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 3785–3788.

- Yuan, W.; Yuan, X.; Fan, Z.; Guo, Z.; Shi, X.; Gong, J.; Shibasaki, R. Graph Neural Network Based Multi-Feature Fusion for Building Change Detection. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 43, 377–382.

- Zhang, Y.; Miao, R.; Dong, Y.; Du, B. Multiorder Graph Convolutional Network with Channel Attention for Hyperspectral Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 17, 1523–1534.

- Zhou, Y.; Wang, J.; Ding, J.; Liu, B.; Weng, N.; Xiao, H. SIGNet: A Siamese Graph Convolutional Network for Multi-Class Urban Change Detection. Remote Sens. 2023, 15, 2464.

- Han, T.; Tang, Y.; Chen, Y.; Zou, B.; Feng, H. Global structure graph mapping for multimodal change detection. Int. J. Digit. Earth 2024, 17, 2347457.

- Trifunović, A.; Knottenbelt, W.J. Parallel Multilevel Algorithms for Hypergraph Partitioning. J. Parallel Distrib. Comput. 2008, 68, 563–581.

- Huang, Y.; Liu, Q.; Lv, F.; Gong, Y.; Metaxas, D.N. Unsupervised Image Categorization by Hypergraph Partition. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 1266–1273.

- Gao, Y.; Feng, Y.; Ji, S.; Ji, R. HGNN+: General Hypergraph Neural Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 3181–3199.

- Shi, Q.; Liu, M.; Li, S.; Liu, X.; Wang, F.; Zhang, L. A Deeply Supervised Attention Metric-Based Network and an Open Aerial Image Dataset for Remote Sensing Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5604816.

- Ji, S.; Wei, S.; Lu, M. Fully Convolutional Networks for Multisource Building Extraction from an Open Aerial and Satellite Imagery Data Set. IEEE Trans. Geosci. Remote Sens. 2019, 57, 574–586.

- Liu, Y.; Pang, C.; Zhan, Z.; Zhang, X.; Yang, X. Building Change Detection for Remote Sensing Images Using a Dual-Task Constrained Deep Siamese Convolutional Network Model. IEEE Geosci. Remote Sens. Lett. 2021, 18, 811–815.

- Zhang, C.; Yue, P.; Tapete, D.; Jiang, L.; Shangguan, B.; Huang, L.; Liu, G. A deeply supervised image fusion network for change detection in high resolution bi-temporal remote sensing images. ISPRS J. Photogramm. Remote Sens. 2020, 166, 183–200.

- Feng, Y.; Jiang, J.; Xu, H.; Zheng, J. Change Detection on Remote Sensing Images Using Dual-Branch Multilevel Intertemporal Network. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4401015.

- Huang, Y.; Li, X.; Du, Z.; Shen, H. Spatiotemporal Enhancement and Interlevel Fusion Network for Remote Sensing Images Change Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5609414.

- Fang, S.; Li, K.; Shao, J.; Li, Z. SNUNet-CD: A Densely Connected Siamese Network for Change Detection of VHR Images. IEEE Geosci. Remote Sens. Lett. 2021, 19, 8007805.

- Han, C.; Wu, C.; Guo, H.; Hu, M.; Chen, H. HANet: A hierarchical attention network for change detection with bitemporal very-high-resolution remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 3867–3878.

- Zhang, C.; Wang, L.; Cheng, S.; Li, Y. SwinSUNet: Pure Transformer Network for Remote Sensing Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5224713.

- Zhang, J.; Shao, Z.; Ding, Q.; Huang, X.; Wang, Y.; Zhou, X.; Li, D. AERNet: An attention-guided edge refinement network and a dataset for remote sensing building change detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5617116.

- Song, X.; Hua, Z.; Li, J. Context Spatial Awareness Remote Sensing Image Change Detection Network Based on Graph and Convolution Interaction. IEEE Trans. Geosci. Remote Sens. 2024, 62, 3000316.

- Zhang, Y.; Song, X.; Hua, Z.; Li, J. CGMMA: CNN-GNN Multiscale Mixed Attention Network for Remote Sensing Image Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 7089–7103.

- Song, X.; Hua, Z.; Li, J. GMTS: GNN-based multi-scale transformer siamese network for remote sensing building change detection. Int. J. Digit. Earth 2023, 16, 1685–1706.

- Chen, H.; Song, J.; Han, C.; Xia, J.; Yokoya, N. ChangeMamba: Remote Sensing Change Detection with Spatiotemporal State Space Model. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4409720.

- Liu, M.; Chai, Z.; Deng, H.; Liu, R. A CNN-transformer network with multiscale context aggregation for fine-grained cropland change detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4297–4306.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).