Automatic Fine Co-Registration of Datasets from Extremely High Resolution Satellite Multispectral Scanners by Means of Injection of Residues of Multivariate Regression

Abstract

1. Introduction

2. Materials and Methods

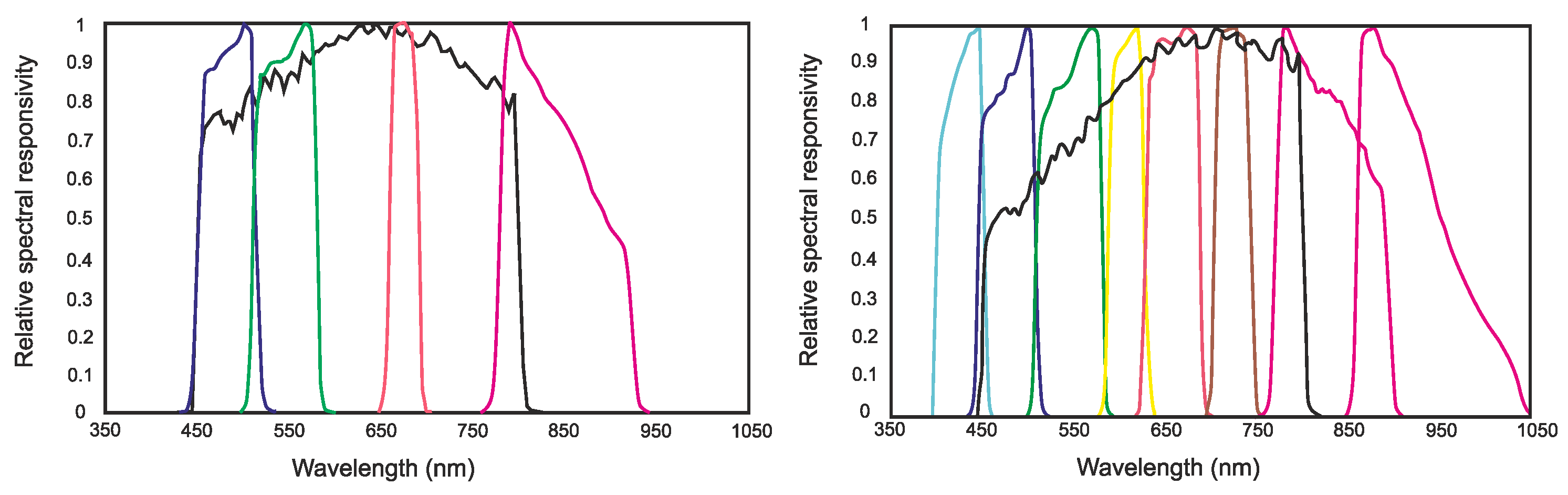

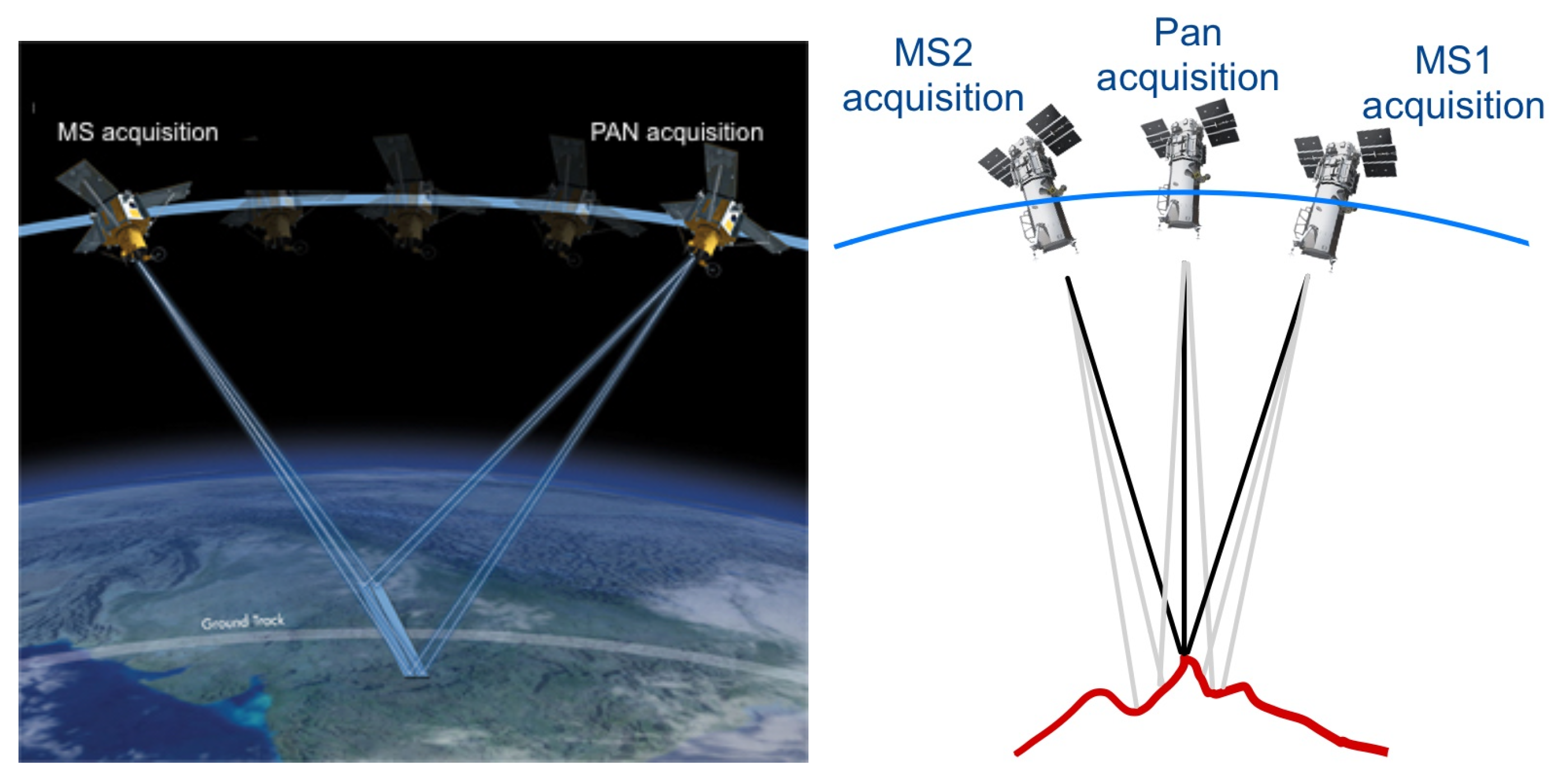

2.1. Materials

2.2. Methods

2.2.1. Alignment of GeoEye-1 Data (4-Bands + Pan)

2.2.2. Alignment of WorldView-2/3 Data (4 + 4 + Pan)

3. Experimental Results

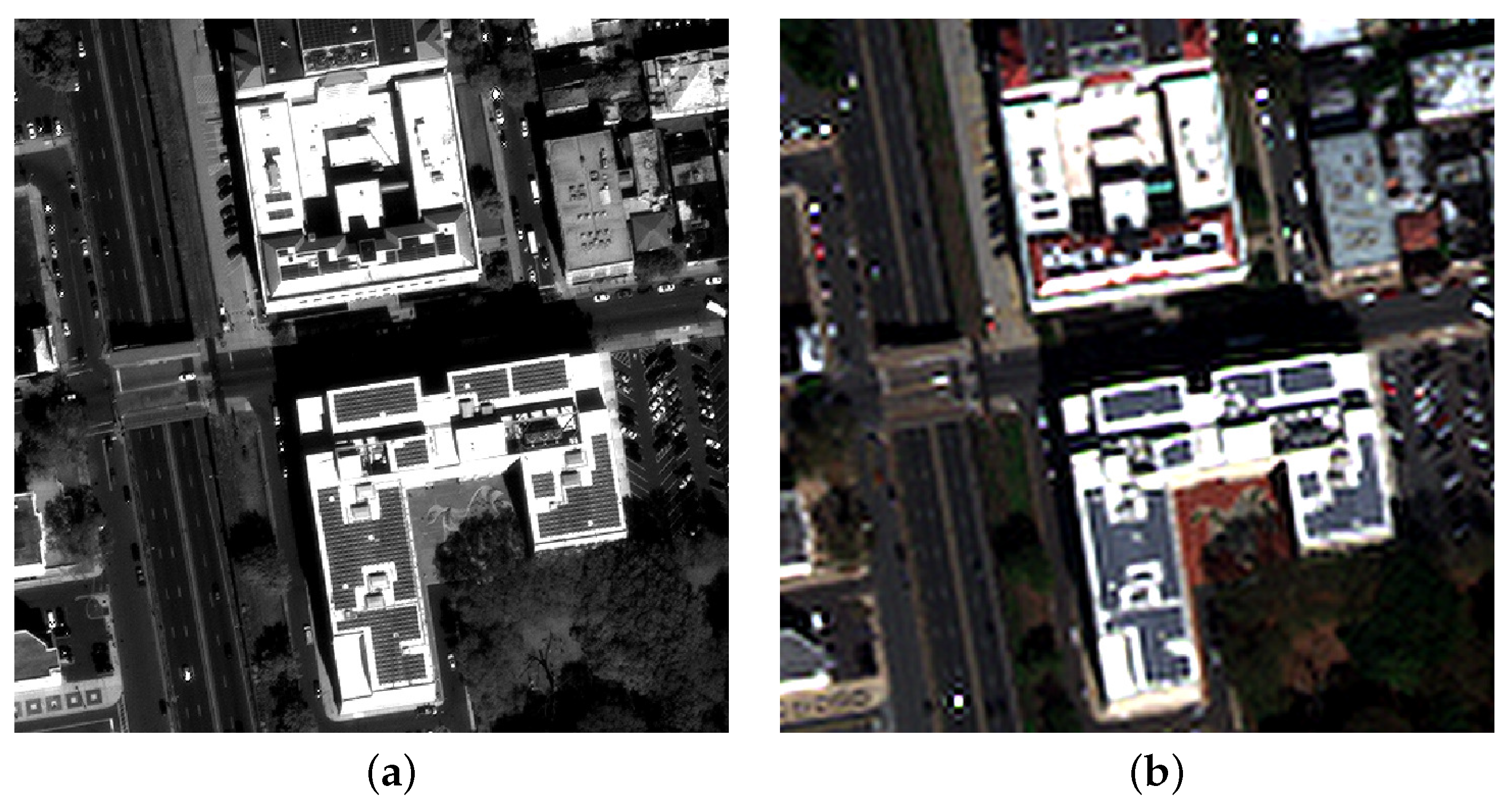

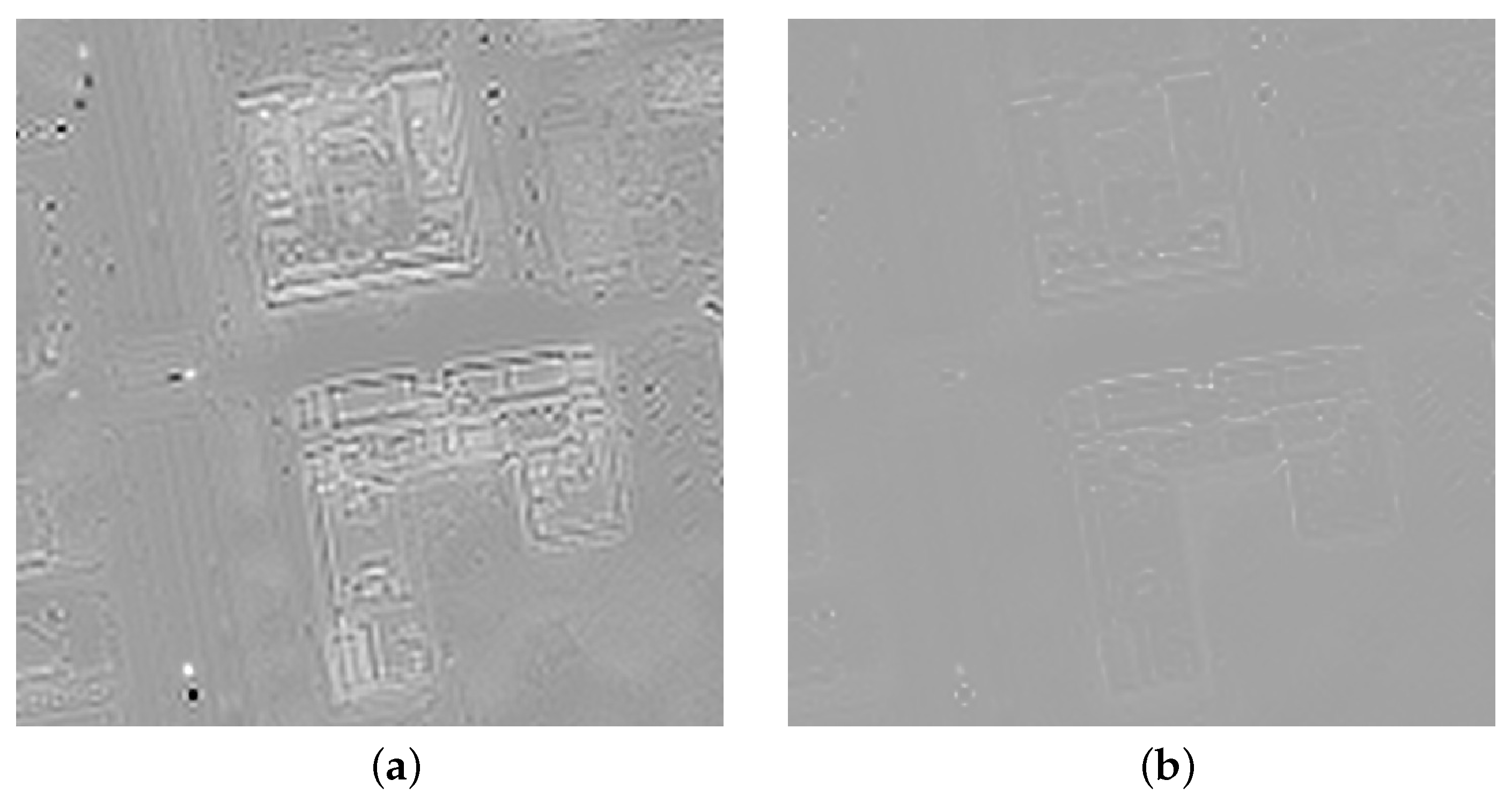

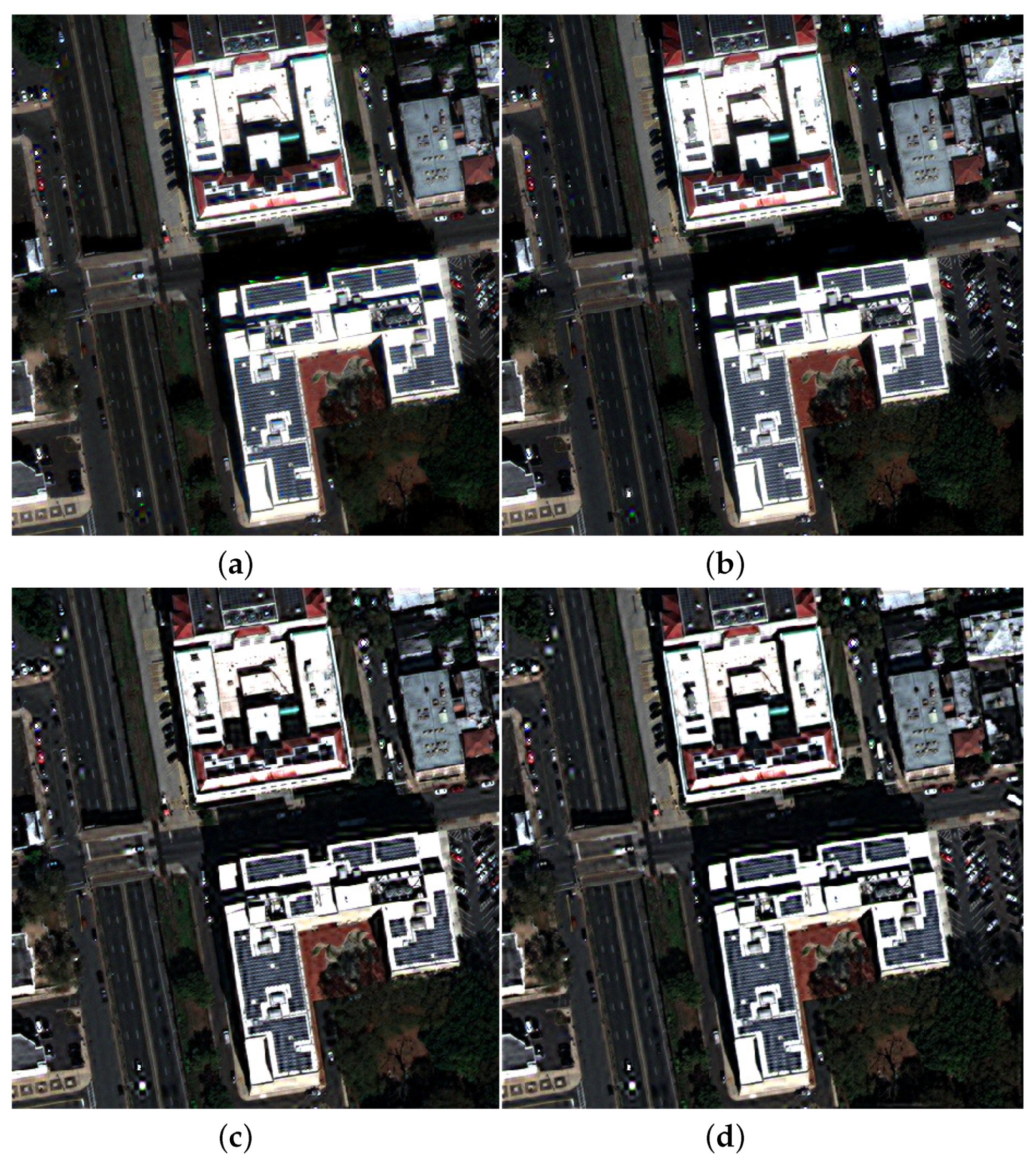

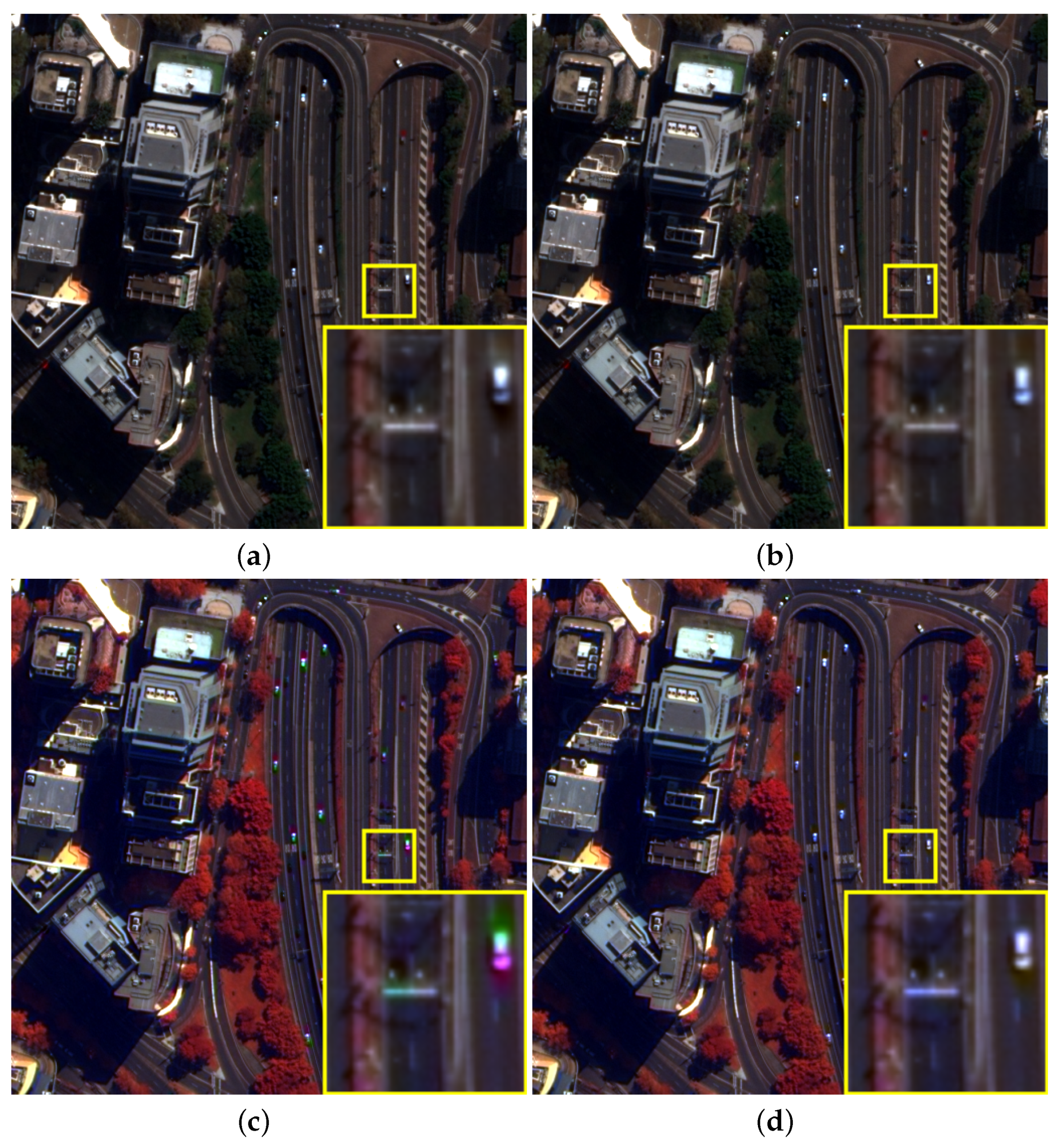

3.1. GeoEye-1 Dataset

- Gram–Schmidt with spectral adaptivity (GSA) [42], perhaps the most popular among component substitution (CS) methods;

- Brovey transform with haze correction (BT-H) [17], an optimized version of the popular Brovey transform CS method;

- Adaptive wavelet luminance proportional with haze correction (AWLP-H) [45], an optimized version of a popular multiresolution analysis (MRA) method;

- Modulation transfer function generalized Laplacian pyramid with full scale (MTF-GLP-FS) injection gains [46], an optimized version of an MRA method based on the generalized Laplacian pyramid (GLP).
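To make the first entry of the list concrete, the following is a minimal sketch of a GSA-style fusion step, assuming the commonly described formulation in which the intensity component is a multivariate regression of the lowpass Pan on the interpolated MS bands. It is an illustration under stated assumptions, not the implementation used in the experiments: the function name `gsa_fuse` and the arguments `ms_up`, `pan`, and `pan_low` are hypothetical, and the lowpass-filtered Pan is assumed to be supplied by the caller.

```python
import numpy as np

def gsa_fuse(ms_up, pan, pan_low):
    """GSA-style component-substitution fusion (illustrative sketch).

    ms_up   : (H, W, B) MS bands interpolated to the Pan grid
    pan     : (H, W) panchromatic image
    pan_low : (H, W) Pan lowpass-filtered to the MS resolution (assumed given)
    """
    h, w, b = ms_up.shape
    # Design matrix: one column per MS band plus a constant term.
    x = np.column_stack([ms_up.reshape(-1, b), np.ones(h * w)])
    # Least-squares weights of the regression of lowpass Pan on the MS bands.
    coef, *_ = np.linalg.lstsq(x, pan_low.ravel(), rcond=None)
    intensity = (x @ coef).reshape(h, w)
    # Detail image: what Pan contains beyond the regressed intensity.
    detail = pan - intensity
    # Per-band injection gains: cov(band, I) / var(I).
    i_c = intensity - intensity.mean()
    var_i = (i_c ** 2).mean()
    fused = np.empty_like(ms_up, dtype=float)
    for k in range(b):
        band = ms_up[..., k]
        gain = ((band - band.mean()) * i_c).mean() / var_i
        fused[..., k] = band + gain * detail
    return fused, coef
```

When Pan is exactly a linear combination of the MS bands, the regression reproduces it, the detail image vanishes, and the fused bands equal the interpolated MS bands. In real data the detail image carries the spatial information that Pan adds to the MS bands.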

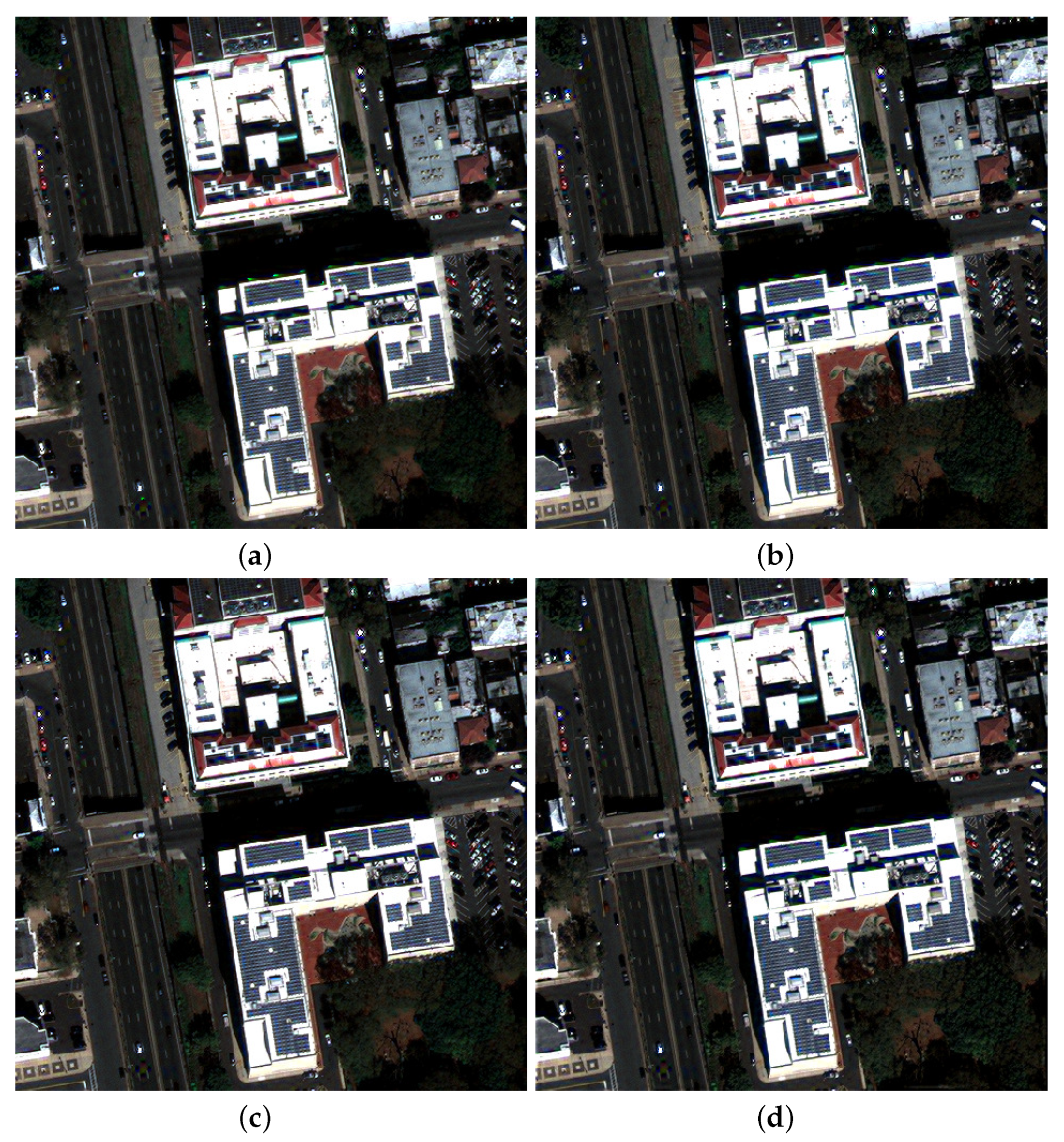

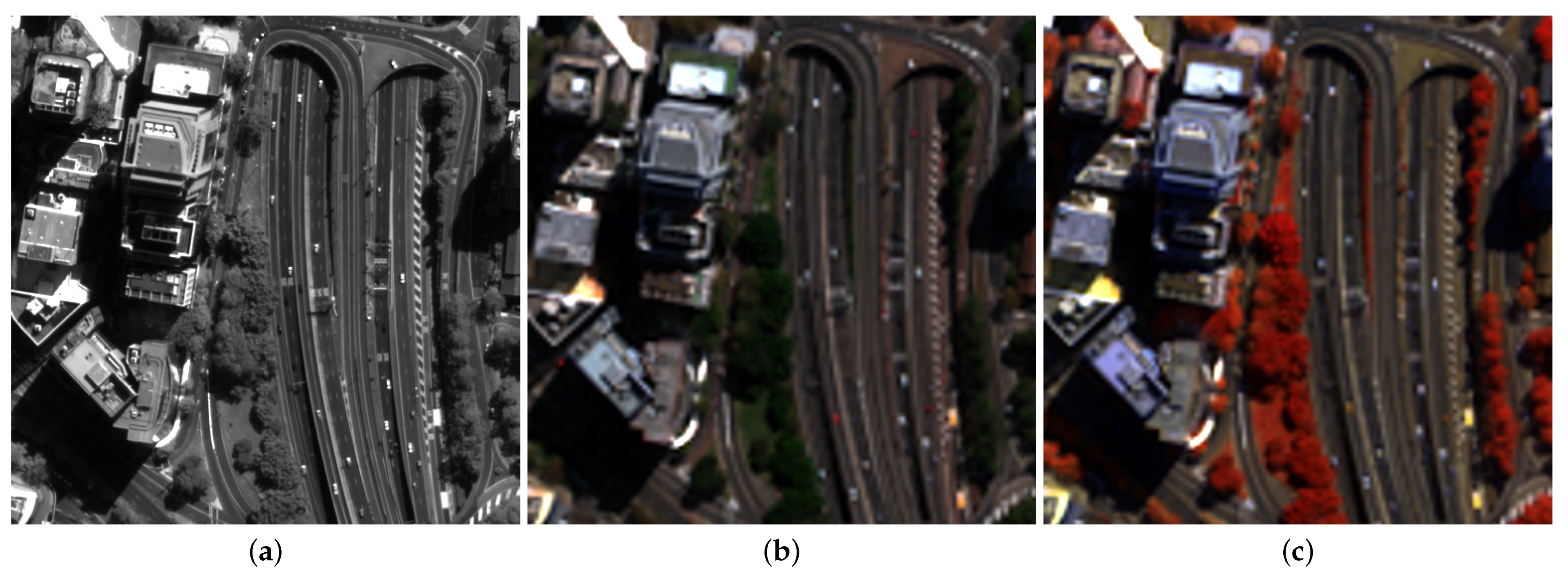

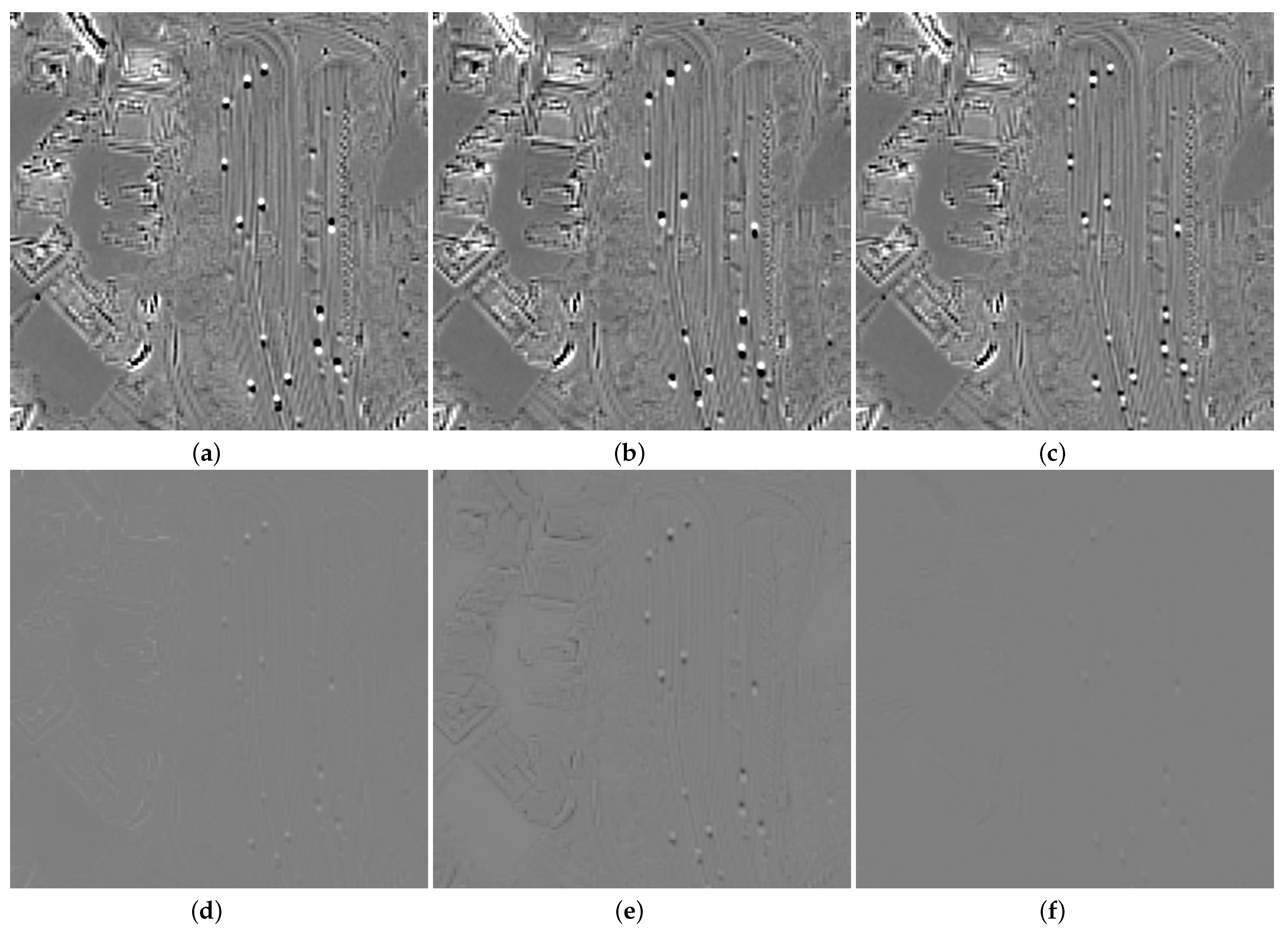

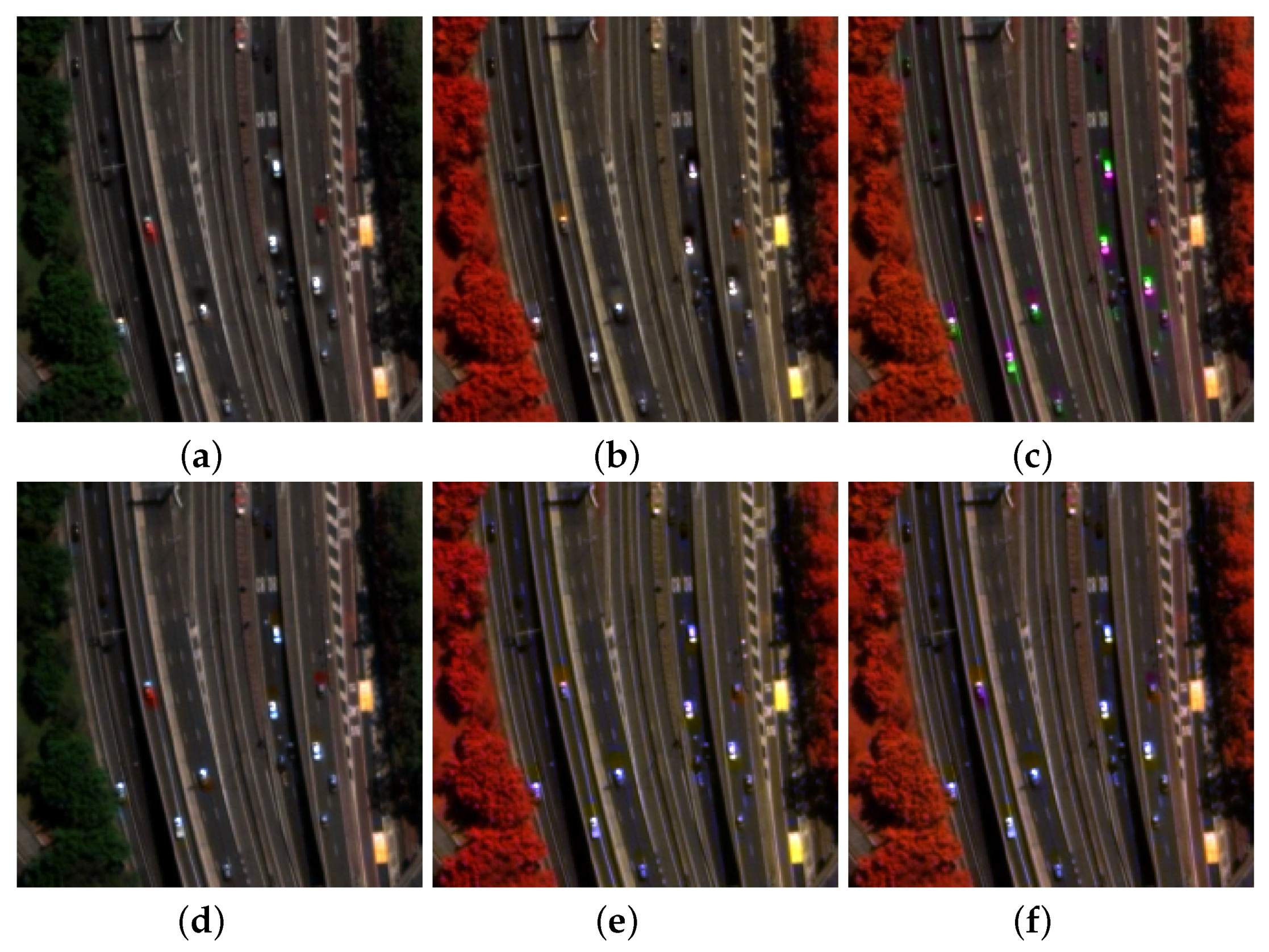

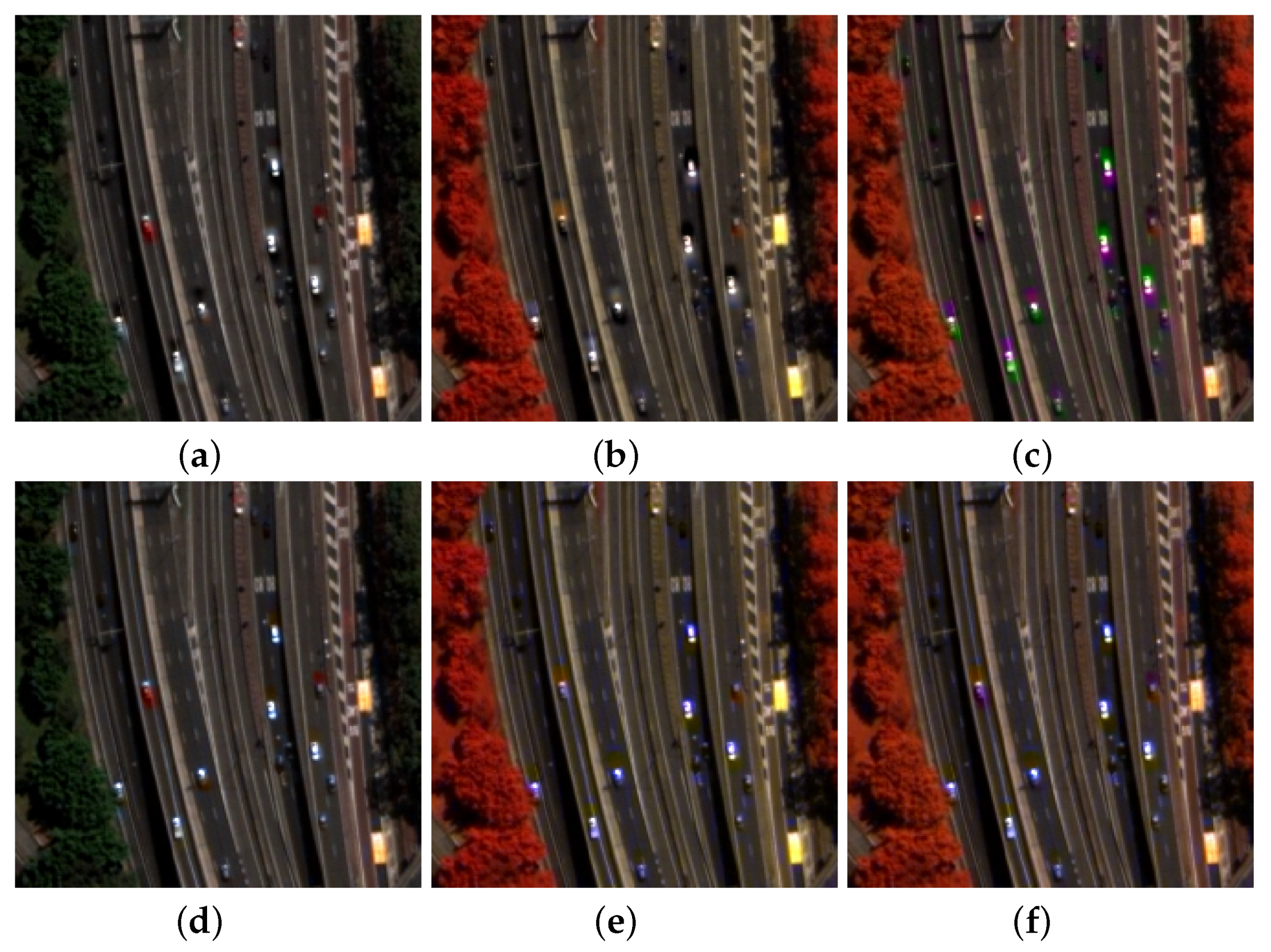

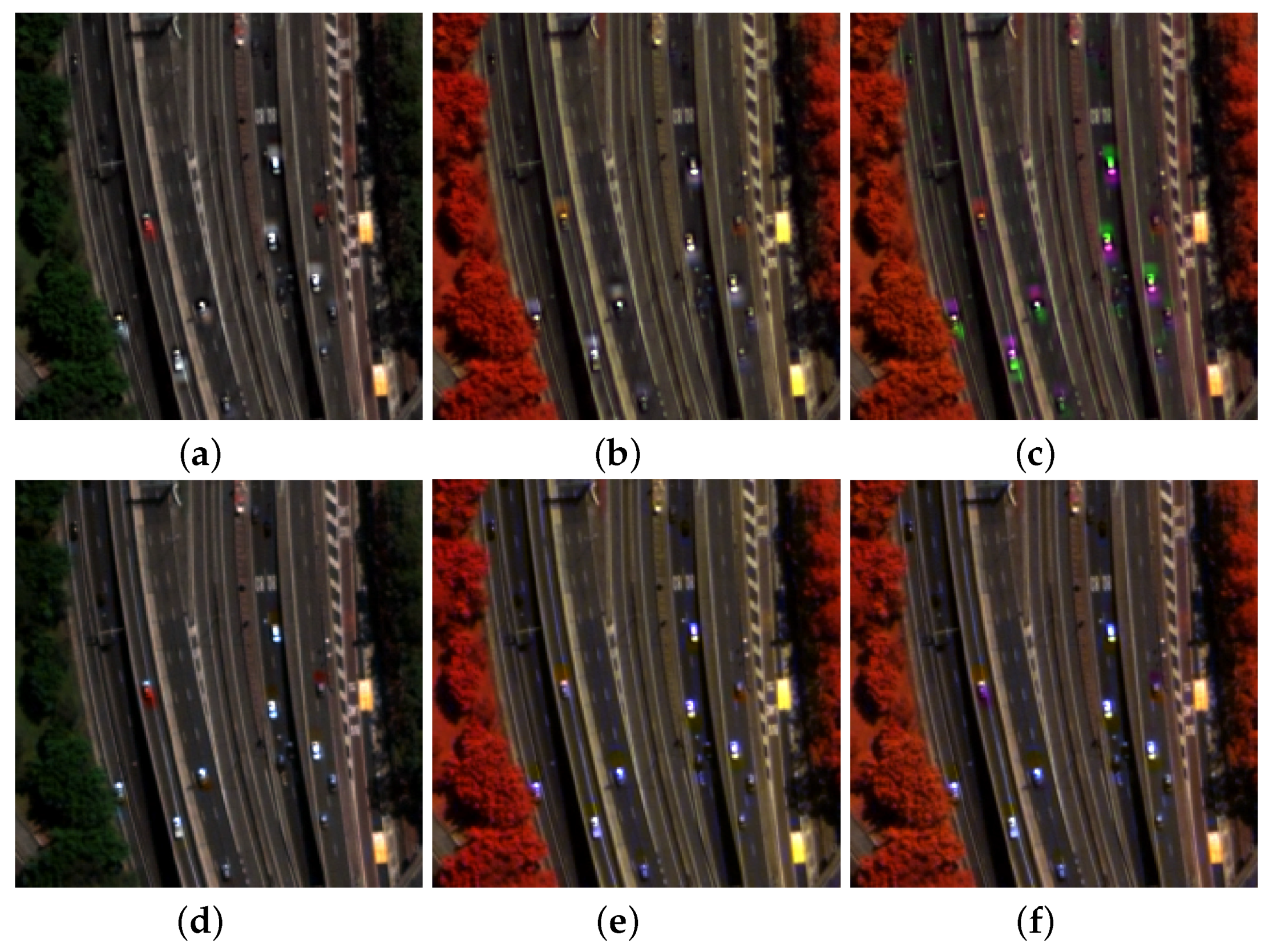

3.2. WorldView-2 Dataset

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Alparone, L.; Aiazzi, B.; Baronti, S.; Garzelli, A. Remote Sensing Image Fusion; CRC Press: Boca Raton, FL, USA, 2015.

- Iervolino, P.; Guida, R.; Riccio, D.; Rea, R. A novel multispectral, panchromatic and SAR data fusion for land classification. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 2019, 12, 3966–3979.

- Aiazzi, B.; Alparone, L.; Baronti, S. Information-theoretic heterogeneity measurement for SAR imagery. IEEE Trans. Geosci. Remote Sens. 2005, 43, 619–624.

- Vivone, G.; Dalla Mura, M.; Garzelli, A.; Restaino, R.; Scarpa, G.; Ulfarsson, M.O.; Alparone, L.; Chanussot, J. A new benchmark based on recent advances in multispectral pansharpening: Revisiting pansharpening with classical and emerging pansharpening methods. IEEE Geosci. Remote Sens. Mag. 2021, 9, 53–81.

- Thomas, C.; Ranchin, T.; Wald, L.; Chanussot, J. Synthesis of multispectral images to high spatial resolution: A critical review of fusion methods based on remote sensing physics. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1301–1312.

- Aiazzi, B.; Baronti, S.; Selva, M.; Alparone, L. Bi-cubic interpolation for shift-free pan-sharpening. ISPRS J. Photogramm. Remote Sens. 2013, 86, 65–76.

- Alparone, L.; Garzelli, A.; Zoppetti, C. Fusion of VNIR optical and C-band polarimetric SAR satellite data for accurate detection of temporal changes in vegetated areas. Remote Sens. 2023, 15, 638.

- D’Elia, C.; Ruscino, S.; Abbate, M.; Aiazzi, B.; Baronti, S.; Alparone, L. SAR image classification through information-theoretic textural features, MRF segmentation, and object-oriented learning vector quantization. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 2014, 7, 1116–1126.

- Aiazzi, B.; Alparone, L.; Argenti, F.; Baronti, S. Wavelet and pyramid techniques for multisensor data fusion: A performance comparison varying with scale ratios. In Image and Signal Processing for Remote Sensing V; Serpico, S.B., Ed.; SPIE: Bellingham, WA, USA, 1999; Volume 3871, pp. 251–262.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. Advantages of Laplacian pyramids over “à trous” wavelet transforms for pansharpening of multispectral images. In Image and Signal Processing for Remote Sensing XVIII; Bruzzone, L., Ed.; SPIE: Bellingham, WA, USA, 2012; Volume 8537, pp. 12–21.

- Garzelli, A.; Nencini, F.; Alparone, L.; Baronti, S. Multiresolution fusion of multispectral and panchromatic images through the curvelet transform. In Proceedings of the 2005 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Seoul, Republic of Korea, 25–29 July 2005; pp. 2838–2841.

- Baronti, S.; Aiazzi, B.; Selva, M.; Garzelli, A.; Alparone, L. A theoretical analysis of the effects of aliasing and misregistration on pansharpened imagery. IEEE J. Sel. Top. Signal Process. 2011, 5, 446–453.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Carlà, R.; Garzelli, A.; Santurri, L. Sensitivity of pansharpening methods to temporal and instrumental changes between multispectral and panchromatic data sets. IEEE Trans. Geosci. Remote Sens. 2017, 55, 308–319.

- Chen, C.; Li, Y.; Liu, W.; Huang, J. SIRF: Simultaneous satellite image registration and fusion in a unified framework. IEEE Trans. Image Process. 2015, 24, 4213–4224.

- Santarelli, C.; Carfagni, M.; Alparone, L.; Arienzo, A.; Argenti, F. Multimodal fusion of tomographic sequences of medical images: MRE spatially enhanced by MRI. Comput. Meth. Progr. Biomed. 2022, 223, 106964.

- Uss, M.L.; Vozel, B.; Lukin, V.V.; Chehdi, K. Multimodal remote sensing image registration with accuracy estimation at local and global scales. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6587–6605.

- Alparone, L.; Garzelli, A.; Vivone, G. Intersensor statistical matching for pansharpening: Theoretical issues and practical solutions. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4682–4695.

- Li, H.; Jing, L. Improvement of a pansharpening method taking into account haze. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 2017, 10, 5039–5055.

- Lolli, S.; Alparone, L.; Garzelli, A.; Vivone, G. Haze correction for contrast-based multispectral pansharpening. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2255–2259.

- Pacifici, F.; Longbotham, N.; Emery, W.J. The importance of physical quantities for the analysis of multitemporal and multiangular optical very high spatial resolution images. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6241–6256.

- Addesso, P.; Longo, M.; Restaino, R.; Vivone, G. Sequential Bayesian methods for resolution enhancement of TIR image sequences. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 2015, 8, 233–243.

- Fasbender, D.; Radoux, J.; Bogaert, P. Bayesian data fusion for adaptable image pansharpening. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1847–1857.

- Palsson, F.; Sveinsson, J.R.; Ulfarsson, M.O. A new pansharpening algorithm based on total variation. IEEE Geosci. Remote Sens. Lett. 2014, 11, 318–322.

- Vicinanza, M.R.; Restaino, R.; Vivone, G.; Dalla Mura, M.; Chanussot, J. A pansharpening method based on the sparse representation of injected details. IEEE Geosci. Remote Sens. Lett. 2015, 12, 180–184.

- Masi, G.; Cozzolino, D.; Verdoliva, L.; Scarpa, G. Pansharpening by convolutional neural networks. Remote Sens. 2016, 8, 594.

- Ma, J.; Yu, W.; Chen, C.; Liang, P.; Guo, X.; Jiang, J. Pan-GAN: An unsupervised pan-sharpening method for remote sensing image fusion. Inform. Fusion 2020, 62, 110–120.

- Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A generative adversarial network for infrared and visible image fusion. Inform. Fusion 2019, 48, 11–26.

- Aiazzi, B.; Alparone, L.; Arienzo, A.; Baronti, S.; Garzelli, A.; Santurri, L. Deployment of pansharpening for correction of local misalignments between MS and Pan. In Image and Signal Processing for Remote Sensing XXIV; Bruzzone, L., Bovolo, F., Eds.; SPIE: Bellingham, WA, USA, 2018; Volume 10789.

- Arienzo, A.; Alparone, L.; Aiazzi, B.; Garzelli, A. Automatic fine alignment of multispectral and panchromatic images. In Proceedings of the 2020 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Waikoloa, HI, USA, 26 September–2 October 2020; pp. 228–231.

- Lee, C.; Oh, J. Rigorous co-registration of KOMPSAT-3 multispectral and panchromatic images for pan-sharpening image fusion. Sensors 2020, 20, 2100.

- Xie, G.; Wang, M.; Zhang, Z.; Xiang, S.; He, L. Near real-time automatic sub-pixel registration of panchromatic and multispectral images for pan-sharpening. Remote Sens. 2021, 13, 3674.

- Aiazzi, B.; Selva, M.; Arienzo, A.; Baronti, S. Influence of the system MTF on the on-board lossless compression of hyperspectral raw data. Remote Sens. 2019, 11, 791.

- Coppo, P.; Chiarantini, L.; Alparone, L. End-to-end image simulator for optical imaging systems: Equations and simulation examples. Adv. Opt. Technol. 2013, 2013, 295950.

- Aguilar, M.A.; Aguera, F.; Aguilar, F.J.; Carvajal, F. Geometric accuracy assessment of the orthorectification process from very high resolution satellite imagery for Common Agricultural Policy purposes. Int. J. Remote Sens. 2008, 29, 7181–7197.

- Shepherd, J.D.; Dymond, J.R.; Gillingham, S.; Bunting, P. Accurate registration of optical satellite imagery with elevation models for topographic correction. Remote Sens. Lett. 2014, 5, 637–641.

- Xin, X.; Liu, B.; Di, K.; Jia, M.; Oberst, J. High-precision co-registration of orbiter imagery and digital elevation model constrained by both geometric and photometric information. ISPRS J. Photogramm. Remote Sens. 2018, 144, 28–37.

- Le Moigne, J.; Netanyahu, N.S.; Eastman, R.D. (Eds.) Image Registration for Remote Sensing; Cambridge University Press: Cambridge, UK, 2011.

- Kääb, A.; Léprince, S. Motion detection using near-simultaneous satellite acquisitions. Remote Sens. Environ. 2014, 154, 164–179.

- Jing, L.; Cheng, Q. An image fusion method for misaligned panchromatic and multispectral data. Int. J. Remote Sens. 2011, 32, 1125–1137.

- Aiazzi, B.; Alparone, L.; Garzelli, A.; Santurri, L. Blind correction of local misalignments between multispectral and panchromatic images. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1625–1629.

- Restaino, R.; Vivone, G.; Addesso, P.; Chanussot, J. Hyperspectral sharpening approaches using satellite multiplatform data. IEEE Trans. Geosci. Remote Sens. 2021, 59, 578–596.

- Aiazzi, B.; Baronti, S.; Selva, M. Improving component substitution pansharpening through multivariate regression of MS+Pan data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.

- Garzelli, A.; Aiazzi, B.; Alparone, L.; Lolli, S.; Vivone, G. Multispectral pansharpening with radiative transfer-based detail-injection modeling for preserving changes in vegetation cover. Remote Sens. 2018, 10, 1308.

- Updike, T.; Comp, C. Radiometric Use of WorldView-2 Imagery; Technical report; DigitalGlobe: Longmont, CO, USA, 2010.

- Vivone, G.; Alparone, L.; Garzelli, A.; Lolli, S. Fast reproducible pansharpening based on instrument and acquisition modeling: AWLP revisited. Remote Sens. 2019, 11, 2315.

- Vivone, G.; Restaino, R.; Chanussot, J. Full scale regression-based injection coefficients for panchromatic sharpening. IEEE Trans. Image Process. 2018, 27, 3418–3431.

- Arienzo, A.; Vivone, G.; Garzelli, A.; Alparone, L.; Chanussot, J. Full-resolution quality assessment of pansharpening: Theoretical and hands-on approaches. IEEE Geosci. Remote Sens. Mag. 2022, 10, 2–35.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Carlà, R. Assessment of pyramid-based multisensor image data fusion. In Image and Signal Processing for Remote Sensing IV; Serpico, S.B., Ed.; SPIE: Bellingham, WA, USA, 1998; Volume 3500, pp. 237–248.

- Palsson, F.; Sveinsson, J.R.; Ulfarsson, M.O.; Benediktsson, J.A. Quantitative quality evaluation of pansharpened imagery: Consistency versus synthesis. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1247–1259.

- Alparone, L.; Garzelli, A.; Lolli, S.; Zoppetti, C. Full-scale assessment of pansharpening: Why literature indexes may give contradictory results and how to avoid such an inconvenience. In Image and Signal Processing for Remote Sensing XXIX; Bruzzone, L., Bovolo, F., Eds.; SPIE: Bellingham, WA, USA, 2023; Volume 12733, p. 1273302.

- Alparone, L.; Garzelli, A.; Vivone, G. Spatial consistency for full-scale assessment of pansharpening. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Valencia, Spain, 22–27 July 2018; pp. 5132–5134.

- Arienzo, A.; Aiazzi, B.; Alparone, L.; Garzelli, A. Reproducibility of pansharpening methods and quality indexes versus data formats. Remote Sens. 2021, 13, 4399.

- Arienzo, A.; Alparone, L.; Garzelli, A.; Lolli, S. Advantages of nonlinear intensity components for contrast-based multispectral pansharpening. Remote Sens. 2022, 14, 3301.

- Cheng, X.; Wang, Y.; Jia, J.; Shu, M.W.R.; Wang, J. The effects of misregistration between hyperspectral and panchromatic images on linear spectral unmixing. Int. J. Remote Sens. 2020, 41, 8862–8889.

- Seo, S.; Choi, J.S.; Lee, J.; Kim, H.H.; Seo, D.; Jeong, J.; Kim, M. UPSNet: Unsupervised pan-sharpening network with registration learning between panchromatic and multi-spectral images. IEEE Access 2020, 8, 201199–201217.

- Kim, H.H.; Kim, M. Deep spectral blending network for color bleeding reduction in PAN-sharpening images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5403814.

| | QNR | HQNR | FQNR | RQNR |
|---|---|---|---|---|
| EXP | 0.9222 | 0.8478 | 0.7131 | 0.8068 |
| BT-H | 0.8792 | 0.7749 | 0.8467 | 0.8575 |
| GSA | 0.8634 | 0.7470 | 0.8203 | 0.8303 |
| AWLP-H | 0.9125 | 0.9037 | 0.9175 | 0.9057 |
| MTF-GLP-FS | 0.8894 | 0.9038 | 0.9159 | 0.8973 |

| | QNR | HQNR | FQNR | RQNR |
|---|---|---|---|---|
| EXP | 0.8974 | 0.8518 | 0.7393 | 0.8504 |
| BT-H | 0.8818 | 0.9085 | 0.9485 | 0.9648 |
| GSA | 0.8672 | 0.9050 | 0.9568 | 0.9668 |
| AWLP-H | 0.8912 | 0.9212 | 0.9571 | 0.9707 |
| MTF-GLP-FS | 0.8660 | 0.9193 | 0.9537 | 0.9653 |
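As background for the column headers of the two tables above, and stated as general knowledge rather than taken from this text: QNR is the quality with no reference index, and HQNR, FQNR, and RQNR are usually described as its hybrid, filter-based, and regression-based variants, which differ in how the spectral and spatial distortions are measured. A sketch of the product form they are commonly given, with the exponents as an assumption:

```latex
\mathrm{QNR} = \left(1 - D_{\lambda}\right)^{\alpha} \left(1 - D_{S}\right)^{\beta},
\qquad D_{\lambda},\, D_{S} \in [0, 1],
\qquad \alpha = \beta = 1 \ \text{(typical choice)}
```

Here $D_{\lambda}$ denotes the spectral distortion and $D_{S}$ the spatial distortion, so every index lies in $[0, 1]$ and values closer to 1 indicate better fusion quality.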


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alparone, L.; Arienzo, A.; Garzelli, A. Automatic Fine Co-Registration of Datasets from Extremely High Resolution Satellite Multispectral Scanners by Means of Injection of Residues of Multivariate Regression. Remote Sens. 2024, 16, 3576. https://doi.org/10.3390/rs16193576
