A Statistical Evaluation of the Connection between Underwater Optical and Acoustic Images

Abstract

1. Introduction

1.1. Overview

1.2. State of the Art

- A first demonstration of the statistical relationship between underwater optical and acoustic images.

- A statistics-based method for validating optical and SAS image pairs as matching or not.

- A shared database of manually reviewed and labeled underwater optical and SAS images in which objects have already been recognized and segmented.

2. System Model

2.1. Main Assumptions

2.2. Preliminaries

2.2.1. Feature Descriptors

2.2.2. Entropy Measures

Algorithm 1 Vector Entropy KL

Input: feature vectors from the SAS and optical images, X and Y
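Only the name and the input of Algorithm 1 are given here, so the following is a minimal sketch of one way a Kullback-Leibler comparison between two feature vectors X and Y could be set up, not the authors' procedure. The shared-range histogram, the bin count, the `eps` smoothing term, and the function names `histogram`, `kl_divergence`, and `feature_kl` are all assumptions made for illustration.

```python
import math


def histogram(values, bins, lo, hi):
    """Normalized histogram (probabilities) of values over the range [lo, hi]."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        # Clamp the top edge into the last bin.
        idx = min(int((v - lo) / width), bins - 1)
        counts[idx] += 1
    total = len(values)
    return [c / total for c in counts]


def kl_divergence(p, q, eps=1e-12):
    """Discrete KL divergence D(p || q) in nats; eps (assumed) avoids log(0)."""
    return sum(
        pi * math.log((pi + eps) / (qi + eps))
        for pi, qi in zip(p, q)
        if pi > 0
    )


def feature_kl(x, y, bins=8):
    """KL divergence between histograms of x and y built on a shared range."""
    lo = min(min(x), min(y))
    hi = max(max(x), max(y))
    if hi == lo:
        return 0.0  # all values identical, nothing to compare
    p = histogram(x, bins, lo, hi)
    q = histogram(y, bins, lo, hi)
    return kl_divergence(p, q)


# Hypothetical feature values, for illustration only.
sas_features = [0.1, 0.2, 0.3, 0.4]
optical_features = [0.6, 0.7, 0.8, 0.9]
print(feature_kl(sas_features, sas_features))
print(feature_kl(sas_features, optical_features))
```

Because the KL divergence is not symmetric, a comparison of this kind gives different values for the SAS-to-optical and optical-to-SAS directions in general.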

3. Methodology

3.1. Key Idea

3.2. Entropy Matching Decision

3.3. Feature Extraction

3.3.1. Rotation

3.3.2. Scaling

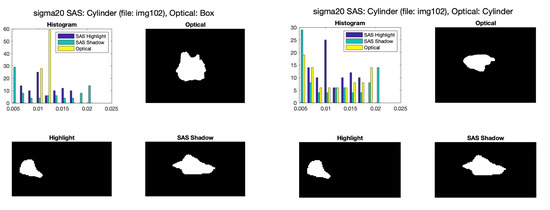

4. Exploration of Statistical Relations between SAS and Optical Images

5. Results

5.1. Dataset

5.2. Feature Analysis

5.3. Entropy Matching

5.4. Information Exchange

5.5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Chantler, M.; Lindsay, D.; Reid, C.; Wright, V. Optical and acoustic range sensing for underwater robotics. In Proceedings of the OCEANS’94, Brest, France, 13–16 September 1994; Volume 1, pp. 205–210.

- Abu, A.; Diamant, R. Feature set for classification of man-made underwater objects in optical and SAS data. IEEE Sensors J. 2022, 22, 6027–6041.

- Fumagalli, E.; Bibuli, M.; Caccia, M.; Zereik, E.; Del Bianco, F.; Gasperini, L.; Stanghellini, G.; Bruzzone, G. Combined acoustic and video characterization of coastal environment by means of unmanned surface vehicles. IFAC Proc. Vol. 2014, 47, 4240–4245.

- Abu, A.; Diamant, R. Enhanced fuzzy-based local information algorithm for sonar image segmentation. IEEE Trans. Image Process. 2019, 29, 445–460.

- Gubnitsky, G.; Diamant, R. A multispectral target detection in sonar imagery. In Proceedings of the OCEANS 2021: San Diego–Porto, San Diego, CA, USA, 20–23 September 2021; pp. 1–5.

- Schettini, R.; Corchs, S. Underwater image processing: State of the art of restoration and image enhancement methods. EURASIP J. Adv. Signal Process. 2010, 2010, 1–14.

- Ghani, A.S.A.; Isa, N.A.M. Underwater image quality enhancement through integrated color model with Rayleigh distribution. Appl. Soft Comput. 2015, 27, 219–230.

- Wang, F.; Vemuri, B.C. Non-rigid multi-modal image registration using cross-cumulative residual entropy. Int. J. Comput. Vis. 2007, 74, 201–215.

- Lowe, G. Sift-the scale invariant feature transform. Int. J. 2004, 2, 2.

- Ye, Y.; Shen, L.; Hao, M.; Wang, J.; Xu, Z. Robust optical-to-SAR image matching based on shape properties. IEEE Geosci. Remote Sens. Lett. 2017, 14, 564–568.

- Ye, Y.; Bruzzone, L.; Shan, J.; Bovolo, F.; Zhu, Q. Fast and robust matching for multimodal remote sensing image registration. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9059–9070.

- Jang, H.; Kim, G.; Lee, Y.; Kim, A. CNN-based approach for opti-acoustic reciprocal feature matching. In Proceedings of the ICRA Workshop on Underwater Robotics Perception, Montreal, QC, Canada, 24 May 2019; Volume 1.

- Fei, T. Advances in Detection and Classification of Underwater Targets Using Synthetic Aperture Sonar Imagery. Ph.D. Thesis, Technische Universität, Berlin, Germany, 2015.

- Xu, S.; Luczynski, T.; Willners, J.S.; Hong, Z.; Zhang, K.; Petillot, Y.R.; Wang, S. Underwater visual acoustic SLAM with extrinsic calibration. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 7647–7652.

- Xu, Z.; Haroutunian, M.; Murphy, A.J.; Neasham, J.; Norman, R. An Integrated Visual Odometry System With Stereo Camera for Unmanned Underwater Vehicles. IEEE Access 2022, 10, 71329–71343.

- Cardaillac, A.; Ludvigsen, M. Camera-Sonar Combination for Improved Underwater Localization and Mapping. IEEE Access 2023, 11, 123070–123079.

- Abu, A.; Diamant, R. A SLAM Approach to Combine Optical and Sonar Information from an AUV. IEEE Trans. Mob. Comput. 2023.

- Nguyen, T.M.; Wu, Q.J. Fast and robust spatially constrained Gaussian mixture model for image segmentation. IEEE Trans. Circuits Syst. Video Technol. 2012, 23, 621–635.

- Faes, L. cTE—Matlab Tool for Computing the Corrected Transfer Entropy. Available online: http://www.lucafaes.net/cTE.html (accessed on 28 July 2023).

- MacQueen, J. Some methods for classification and analysis of multivariate observations. In Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability; University of California Press: Oakland, CA, USA, 1967; Number 14; pp. 281–297.

- Wackerly, D.; Mendenhall, W.; Scheaffer, R.L. Mathematical Statistics with Applications; Cengage Learning: Singapore, 2014.

- Shang, R.; Tian, P.; Jiao, L.; Stolkin, R.; Feng, J.; Hou, B.; Zhang, X. A spatial fuzzy clustering algorithm with kernel metric based on immune clone for SAR image segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 1640–1652.

- Kullback, S.; Leibler, R.A. On information and sufficiency. Ann. Math. Stat. 1951, 22, 79–86.

- Zhang, X.; Wu, H.; Sun, H.; Ying, W. Multireceiver SAS imagery based on monostatic conversion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10835–10853.

- Yang, P. An imaging algorithm for high-resolution imaging sonar system. Multimed. Tools Appl. 2023, 1–17.

| Name | Definition | Observations |
|---|---|---|
| Ecc | Defined from the two eigenvalues of the covariance matrix [13] | |
| Perimeter | Perimeter of the object’s contour | |
| Comp | Defined from the area A of the contour and its perimeter | |
| cir | Defined from the area of the circle whose circumference equals the object’s perimeter | |
| Solidity | Defined from the area of the convex hull [13] | |
| roughness | Defined from the perimeter of the convex hull [13] | |
| sigma and l | | Central moments and normalized central moments, computed over every pixel within the object’s region relative to the center of mass; each sigma refers to a central moment and l to the normalized version of the lth calculated central moment [2] |
| Low Freq Den | Defined from the magnitudes of the Fourier coefficients of the centroid distance function, computed with a DFT | |
| DFT-skewness | | |
| Local curve, polynomial coefficient i [2] | | |
| Hist-BAS | Entropy-Angle Feature (EAF) [2] | |
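The feature table ties Ecc to the two eigenvalues of the covariance matrix and Comp to the contour's area and perimeter, but the exact formulas are not reproduced here. As a rough illustration only, the sketch below uses two common textbook definitions, which are assumptions and may differ from those in [2] and [13]: eccentricity as sqrt(1 - lambda_min / lambda_max), and compactness as 4*pi*A / P^2. The function names are hypothetical.

```python
import math


def covariance_eigenvalues(points):
    """Eigenvalues (largest first) of the 2x2 covariance matrix of (x, y) points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points) / n
    syy = sum((y - mean_y) ** 2 for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points) / n
    # Closed form for a symmetric 2x2 matrix [[sxx, sxy], [sxy, syy]].
    centre = (sxx + syy) / 2
    spread = math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    return centre + spread, centre - spread


def eccentricity(points):
    """Assumed definition: sqrt(1 - lambda_min / lambda_max), in [0, 1]."""
    lam_max, lam_min = covariance_eigenvalues(points)
    if lam_max <= 0:
        return 0.0  # degenerate object: all pixels coincide
    return math.sqrt(max(0.0, 1 - lam_min / lam_max))


def compactness(area, perimeter):
    """Assumed definition: 4*pi*A / P^2, which equals 1 for a circle."""
    return 4 * math.pi * area / perimeter ** 2


# Hypothetical pixel coordinates of two segmented objects.
line_like = [(0, 0), (1, 0), (2, 0), (3, 0)]
square_like = [(0, 0), (0, 1), (1, 0), (1, 1)]
print(eccentricity(line_like), eccentricity(square_like))
print(compactness(math.pi, 2 * math.pi))
```

Under these assumed definitions, an elongated object such as a cylinder seen side-on scores close to 1 in eccentricity, while a roughly isotropic object scores close to 0.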

| Object Type | SAS | Optical |
|---|---|---|
| Cylinder | 9 | 9 |
| Manta Mine | 8 | 13 |
| Box | 0 | 6 |
| Natural | 25 | 14 |

| Direction | Optical to SAS HL | SAS HL to Optical | Optical to SAS SH | SAS SH to Optical |
|---|---|---|---|---|
| Entropy | | | | |
| Differential Entropy | sigma20 | localCur6 | l2 | l4 |
| Mutual Information | l4 | l4 | sigma20 | sigma11 |
| Transfer Entropy | localCur1 | localCur4 | l2 | localCur4 |
| Normalized Transfer Entropy | sigma02 | Solidity | l2 | Solidity |
| Vector Entropy | DFT skewness | DFT skewness | roughness | roughness |

| Direction | Positive (HL) | Negative (HL) | Positive (SH) | Negative (SH) |
|---|---|---|---|---|
| Optical to SAS | 3.0744 | 5.1850 | 3.3481 | 5.2553 |
| SAS to Optical | 3.4682 | 4.4457 | 3.4682 | 4.4457 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chinicz, R.; Diamant, R. A Statistical Evaluation of the Connection between Underwater Optical and Acoustic Images. Remote Sens. 2024, 16, 689. https://doi.org/10.3390/rs16040689
