Comparison of Cloud-Mask Algorithms and Machine-Learning Methods Using Sentinel-2 Imagery for Mapping Paddy Rice in Jianghan Plain

Abstract

1. Introduction

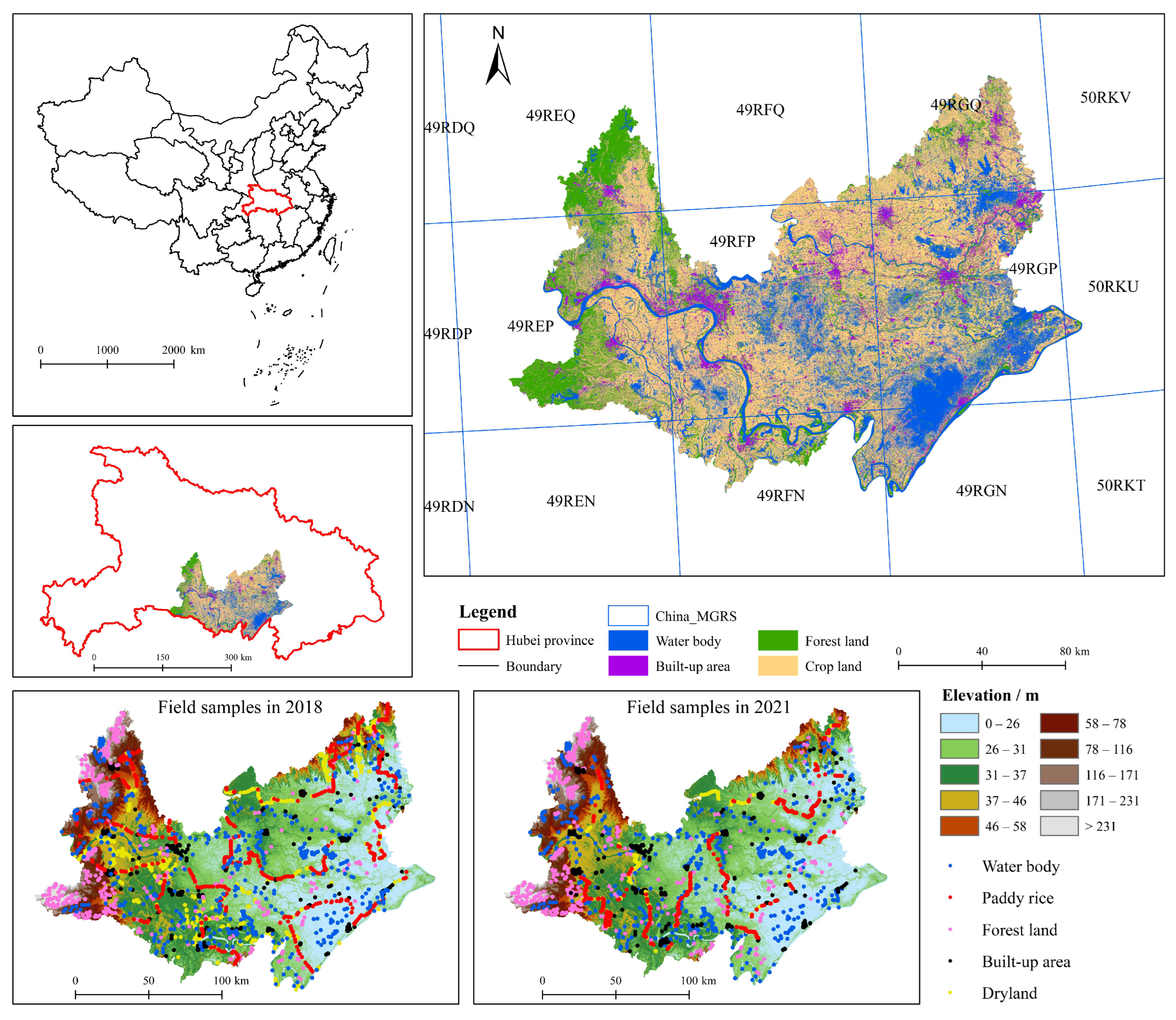

2. Study Area and Data

2.1. Study Area

2.2. Data

2.2.1. Remotely Sensed Data

2.2.2. Field Survey Data

2.2.3. Other Reference Data

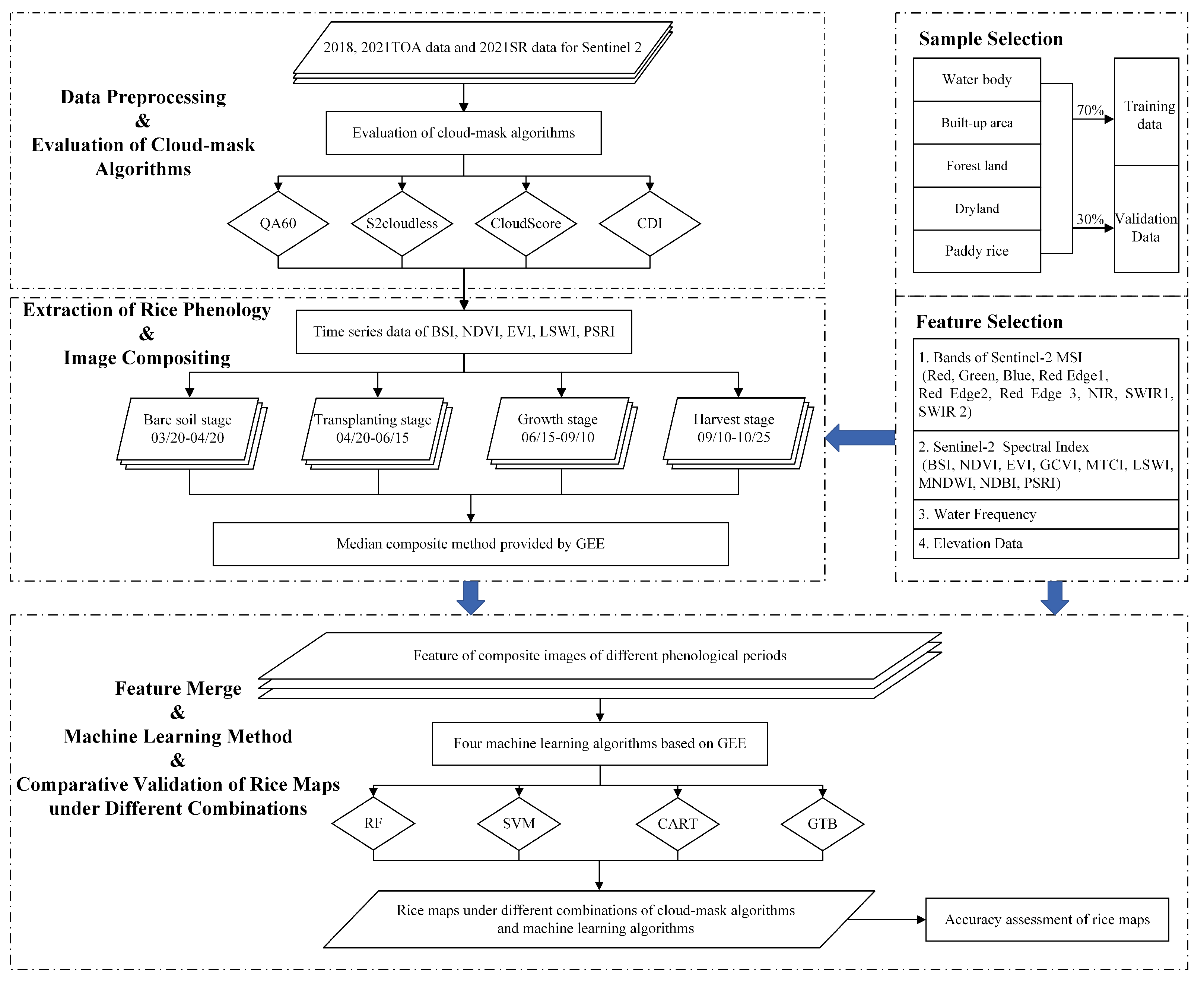

3. Methodology

3.1. Cloud-Mask Algorithms

- (1) QA60
- (2) Sentinel-2 cloud detector (S2cloudless)
- (3) CloudScore algorithm
- (4) Cloud Displacement Index (CDI)
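
For the QA60 option, the Sentinel-2 QA60 band is a bitmask in which bit 10 flags opaque clouds and bit 11 flags cirrus. The sketch below shows one way to turn that band into a clear-sky mask with plain NumPy. The function name `qa60_clear_mask` and the sample values are illustrative assumptions, and the exact masking rule applied in the study may differ.

```python
import numpy as np

OPAQUE_CLOUD_BIT = 10  # QA60 bit 10: opaque clouds
CIRRUS_BIT = 11        # QA60 bit 11: cirrus clouds


def qa60_clear_mask(qa60):
    """Return True where neither the opaque-cloud nor the cirrus bit is set."""
    qa60 = np.asarray(qa60, dtype=np.uint16)
    opaque = (qa60 >> OPAQUE_CLOUD_BIT) & 1
    cirrus = (qa60 >> CIRRUS_BIT) & 1
    return (opaque | cirrus) == 0


# Hypothetical QA60 values: clear, opaque cloud, cirrus, both flags set
qa = np.array([0, 1 << 10, 1 << 11, (1 << 10) | (1 << 11)])
clear = qa60_clear_mask(qa)
print(clear)
```

Pixels where the mask is False would be excluded before compositing. The other three algorithms produce a score or index per pixel and need a threshold instead of a bit test.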

3.2. Paddy Rice Mapping Algorithms

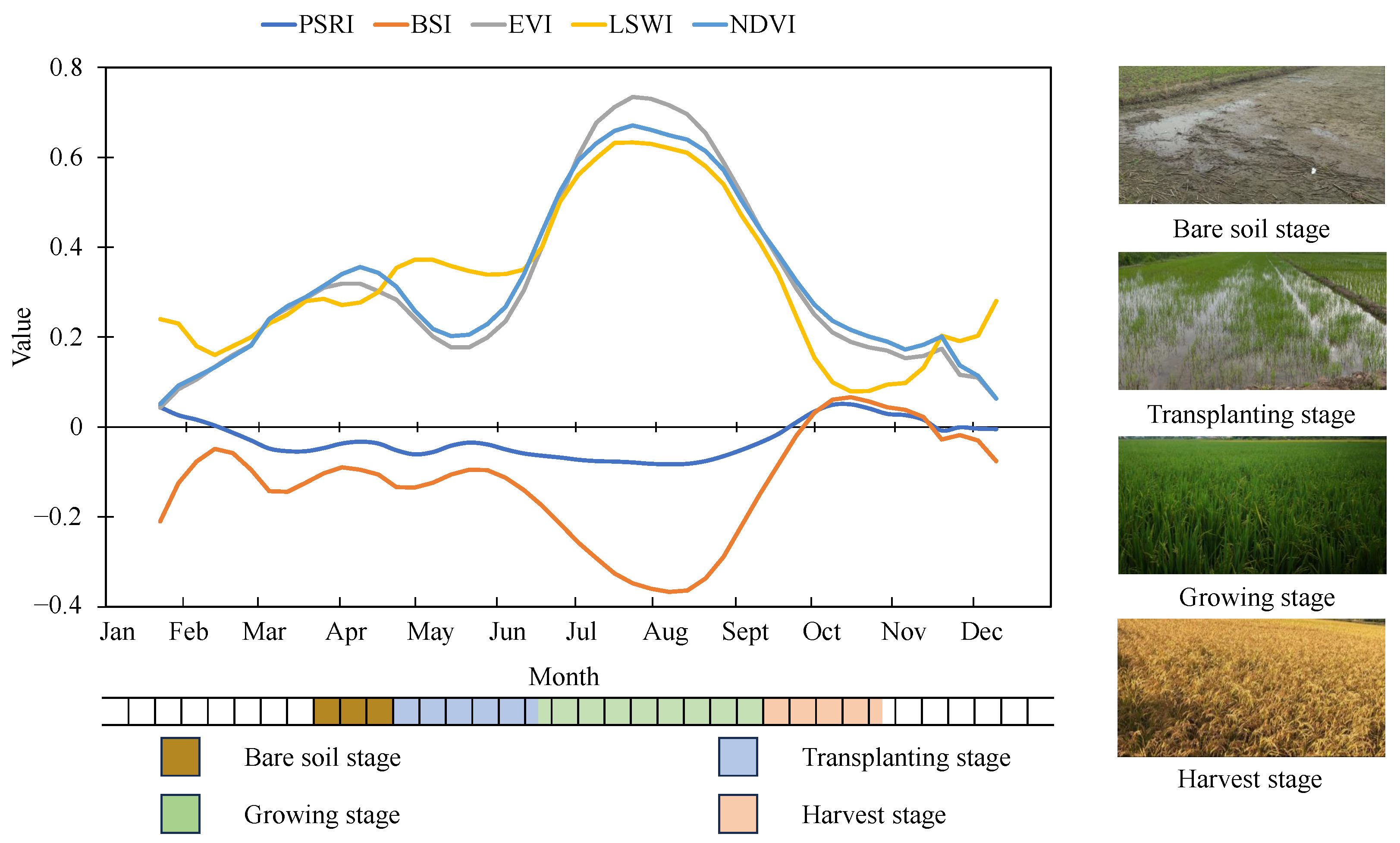

3.2.1. Extraction Phenology of Paddy Rice

3.2.2. Machine-Learning Algorithms

3.3. Assessment of Cloud-Mask Algorithms and Rice Maps

3.3.1. Assessment of Cloud-Mask Algorithms

3.3.2. Feature Evaluation of Different Cloud-Free Datasets

3.3.3. Validation of Rice Maps

4. Results

4.1. Evaluations of Cloud-Mask Algorithms

4.1.1. Accuracy Assessment of the Four Cloud-Mask Algorithms

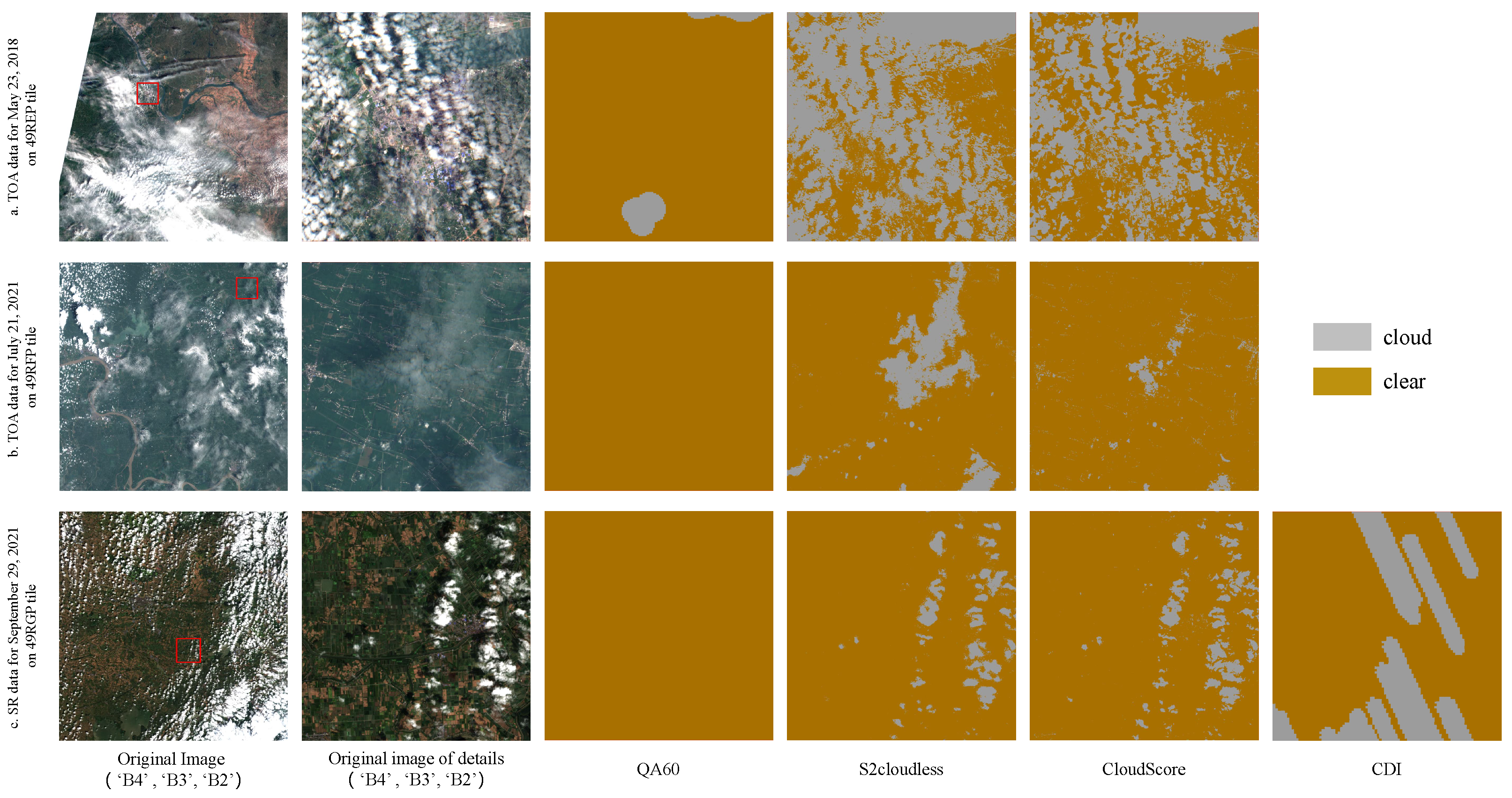

4.1.2. Visual Comparisons of the Four Cloud-Mask Algorithms

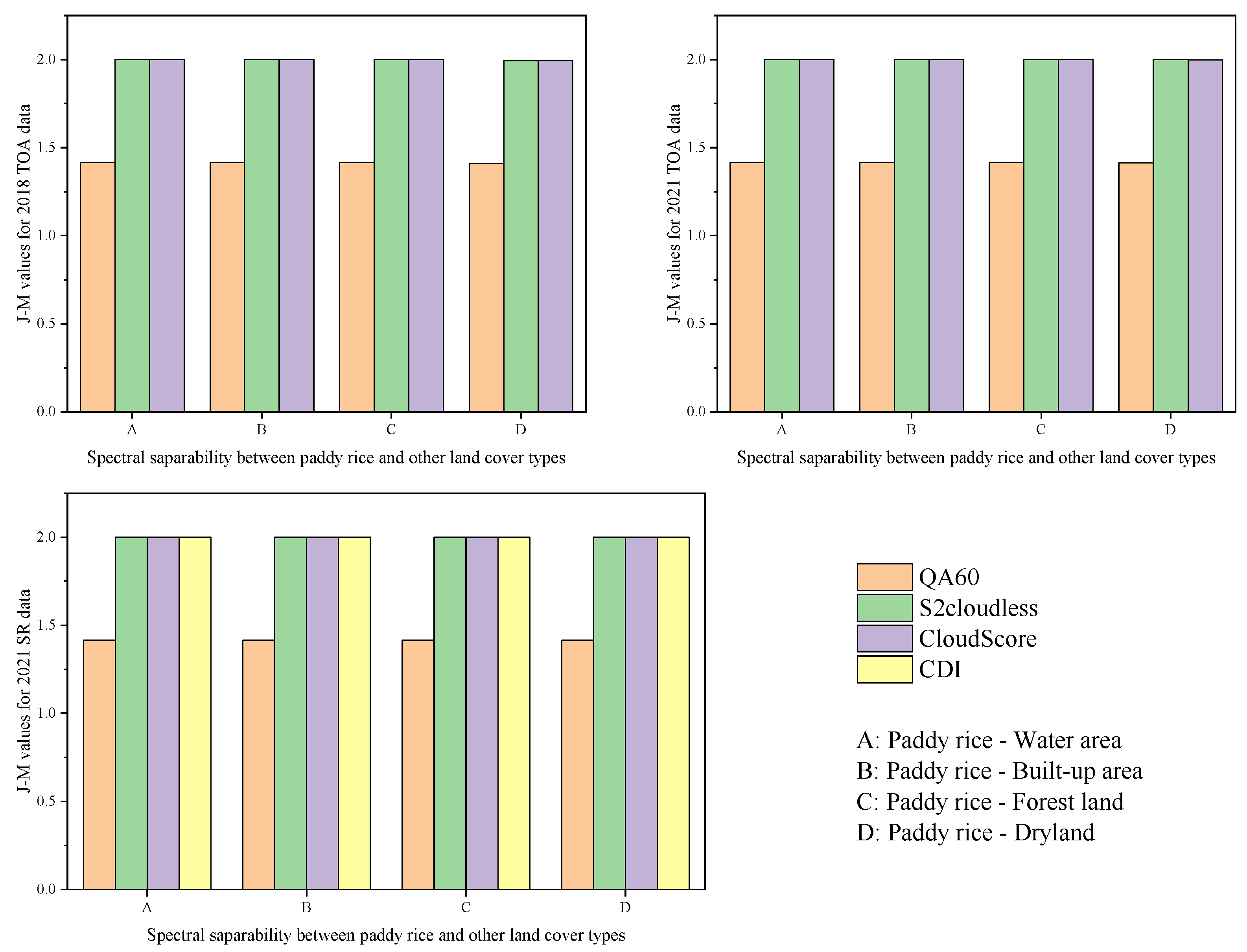

4.1.3. Spectral Separability between Paddy Rice and Other Land Cover Types in Cloud-Masked Imagery

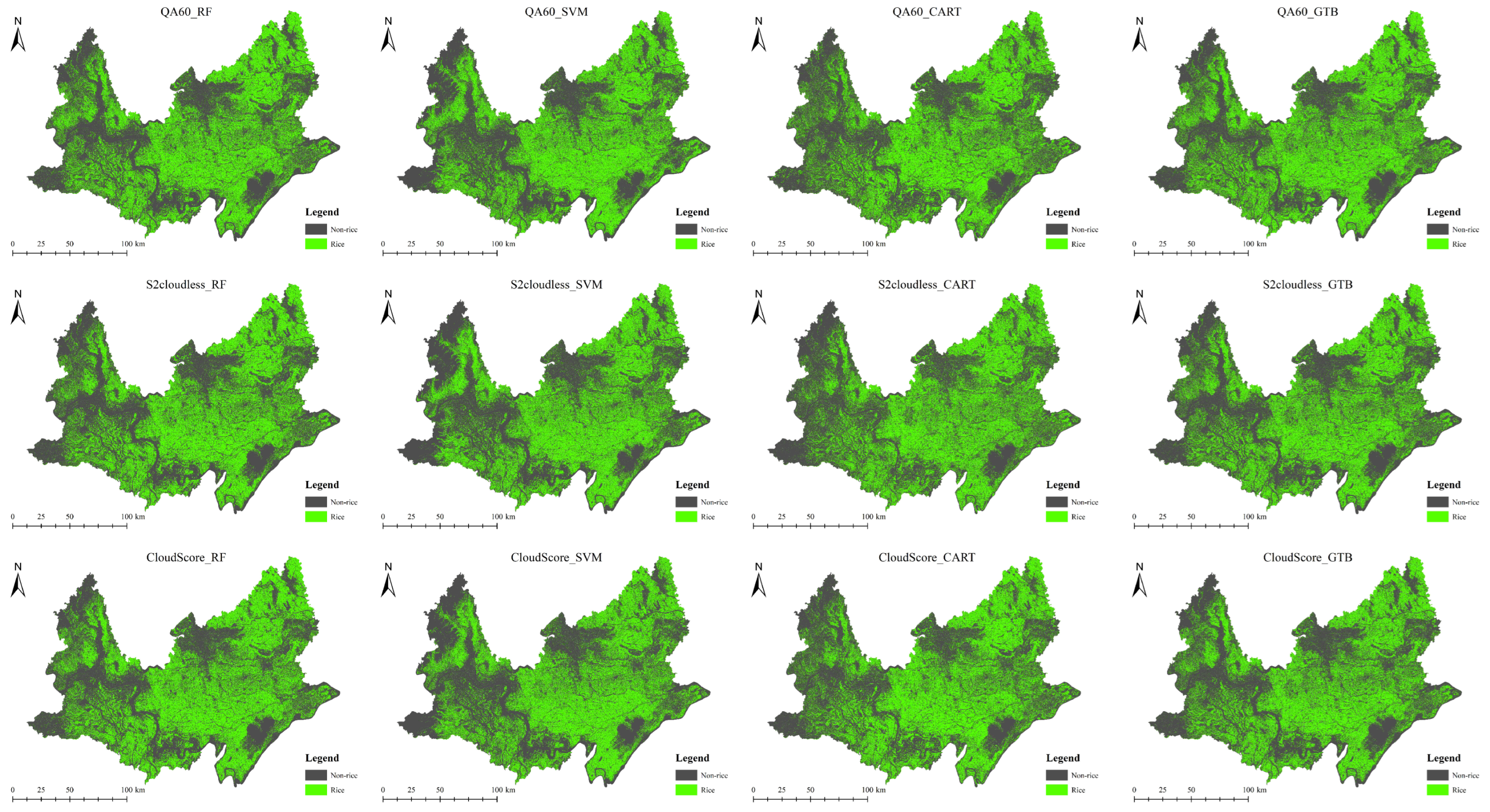

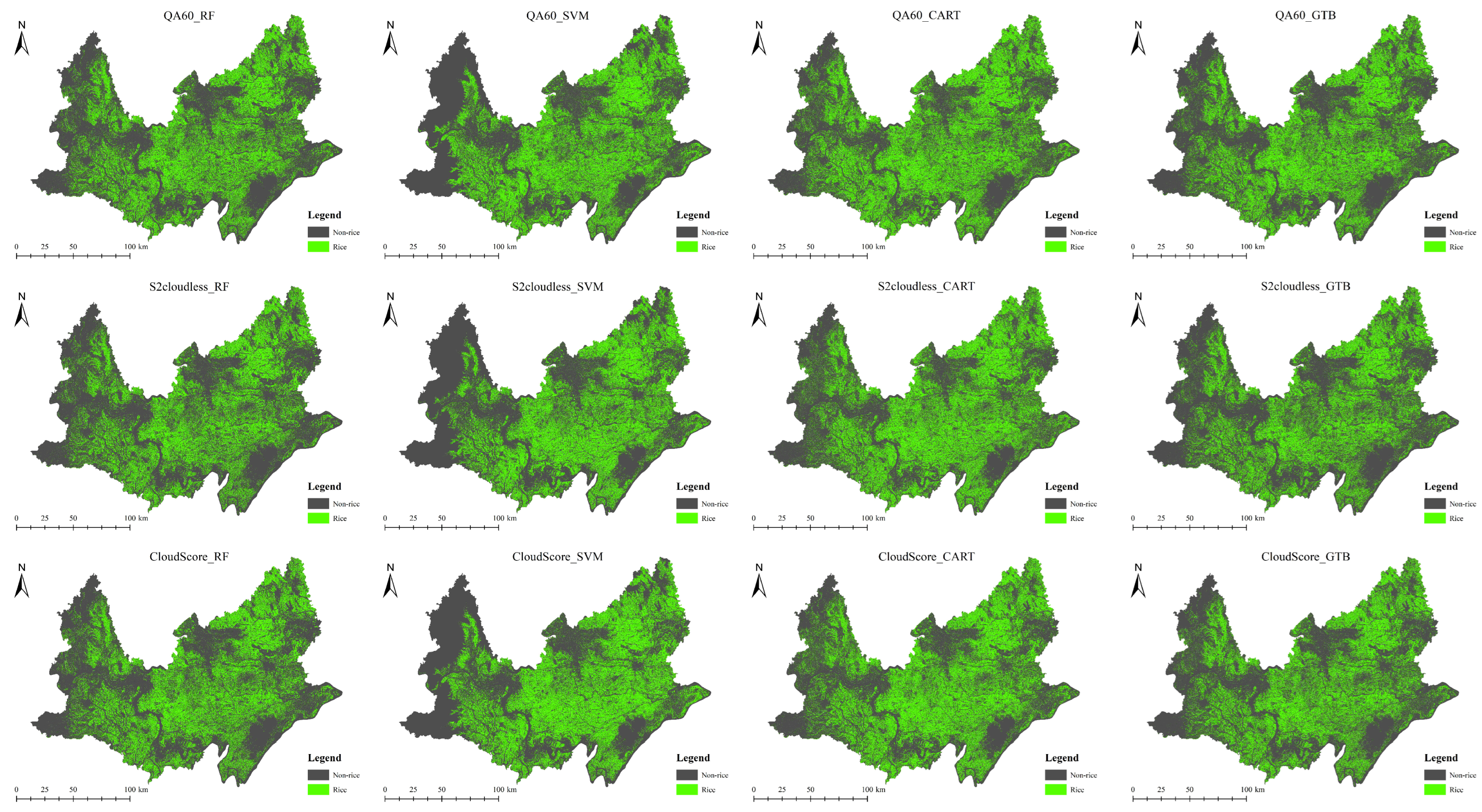

4.2. Rice Maps Extracted from the Algorithms of RF, SVM, CART and GTB

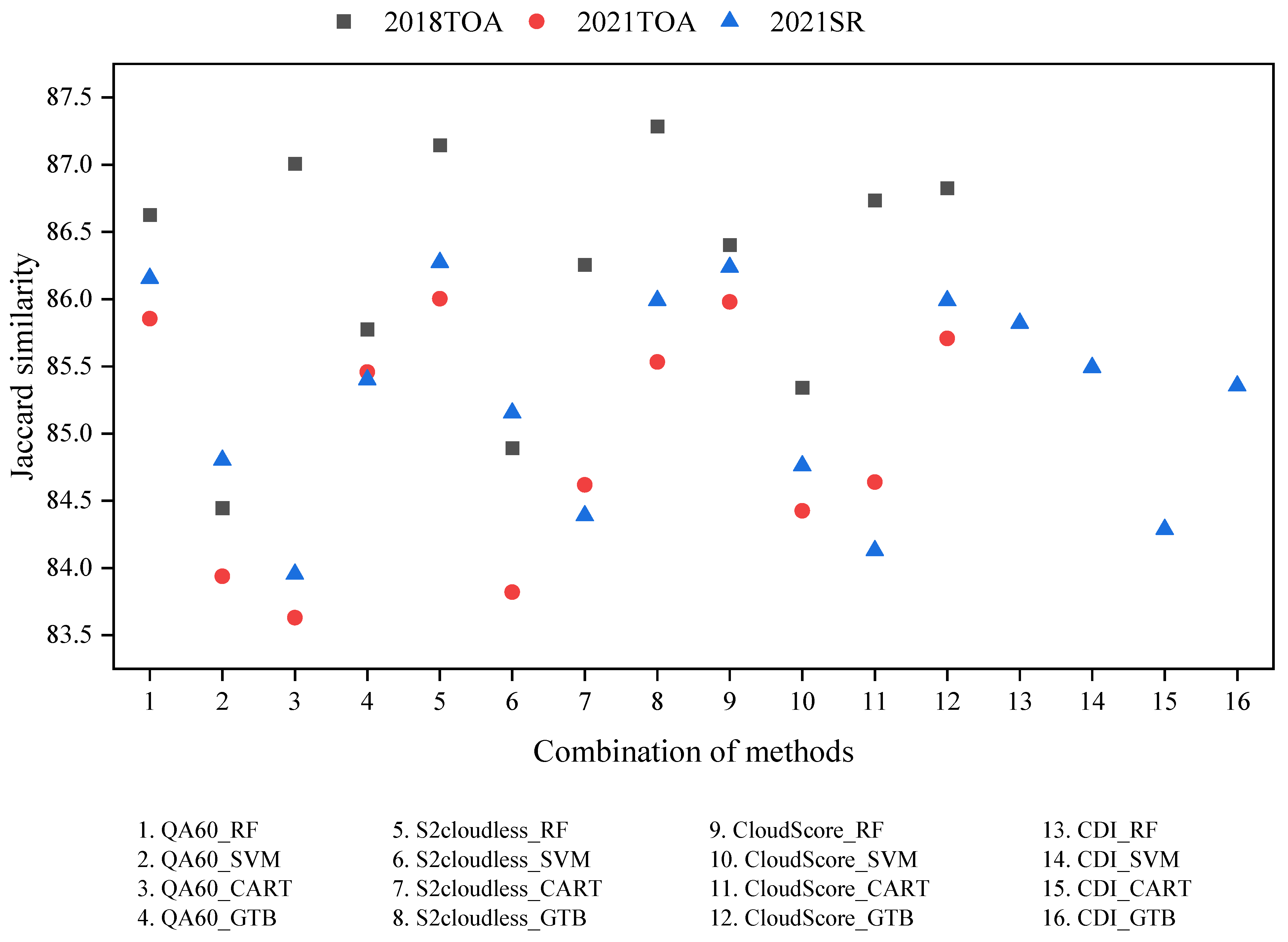

4.3. Accuracy Assessment of Rice Maps

4.3.1. Comparing with Field Survey Data

4.3.2. Comparing with the Latest 10 m Rice Mapping Product

4.3.3. Comparing with Statistical Data

5. Discussion

5.1. Clear Observations after Cloud-Mask Processing

5.2. Combination of Cloud-Mask Algorithm and Machine-Learning Algorithms Used for Rice Mapping

5.3. Limitations and Implications of the Study

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

- Wheeler, T.; Von Braun, J. Climate change impacts on global food security. Science 2013, 341, 508–513.

- Fuller, D.Q.; Qin, L.; Zheng, Y.; Zhao, Z.; Chen, X.; Hosoya, L.A.; Sun, G.P. The domestication process and domestication rate in rice: Spikelet bases from the Lower Yangtze. Science 2009, 323, 1607–1610.

- Dong, J.; Xiao, X.; Menarguez, M.A.; Zhang, G.; Qin, Y.; Thau, D.; Biradar, C.; Moore, B., III. Mapping paddy rice planting area in northeastern Asia with Landsat 8 images, phenology-based algorithm and Google Earth Engine. Remote Sens. Environ. 2016, 185, 142–154.

- Xin, F.; Xiao, X.; Dong, J.; Zhang, G.; Zhang, Y.; Wu, X.; Li, X.; Zou, Z.; Ma, J.; Du, G.; et al. Large increases of paddy rice area, gross primary production, and grain production in Northeast China during 2000–2017. Sci. Total Environ. 2020, 711, 135183.

- Zhang, G.; Xiao, X.; Biradar, C.M.; Dong, J.; Qin, Y.; Menarguez, M.A.; Zhou, Y.; Zhang, Y.; Jin, C.; Wang, J.; et al. Spatiotemporal patterns of paddy rice croplands in China and India from 2000 to 2015. Sci. Total Environ. 2017, 579, 82–92.

- Wang, H.; Shao, Q.; Li, R.; Song, M.; Zhou, Y. Governmental policies drive the LUCC trajectories in the Jianghan Plain. Environ. Monit. Assess. 2013, 185, 10521–10536.

- Xiao, X.; Boles, S.; Liu, J.; Zhuang, D.; Frolking, S.; Li, C.; Salas, W.; Moore, B., III. Mapping paddy rice agriculture in southern China using multi-temporal MODIS images. Remote Sens. Environ. 2005, 95, 480–492.

- Zhang, X.; Wu, B.; Ponce-Campos, G.E.; Zhang, M.; Chang, S.; Tian, F. Mapping up-to-date paddy rice extent at 10 m resolution in China through the integration of optical and synthetic aperture radar images. Remote Sens. 2018, 10, 1200.

- Liu, L.; Xiao, X.; Qin, Y.; Wang, J.; Xu, X.; Hu, Y.; Qiao, Z. Mapping cropping intensity in China using time series Landsat and Sentinel-2 images and Google Earth Engine. Remote Sens. Environ. 2020, 239, 111624.

- Cao, J.; Cai, X.; Tan, J.; Cui, Y.; Xie, H.; Liu, F.; Yang, L.; Luo, Y. Mapping paddy rice using Landsat time series data in the Ganfu Plain irrigation system, Southern China, from 1988–2017. Int. J. Remote Sens. 2021, 42, 1556–1576.

- Ni, R.; Tian, J.; Li, X.; Yin, D.; Li, J.; Gong, H.; Zhang, J.; Zhu, L.; Wu, D. An enhanced pixel-based phenological feature for accurate paddy rice mapping with Sentinel-2 imagery in Google Earth Engine. ISPRS J. Photogramm. Remote Sens. 2021, 178, 282–296.

- Dong, J.; Xiao, X.; Kou, W.; Qin, Y.; Zhang, G.; Li, L.; Jin, C.; Zhou, Y.; Wang, J.; Biradar, C.; et al. Tracking the dynamics of paddy rice planting area in 1986–2010 through time series Landsat images and phenology-based algorithms. Remote Sens. Environ. 2015, 160, 99–113.

- Boschetti, M.; Busetto, L.; Manfron, G.; Laborte, A.; Asilo, S.; Pazhanivelan, S.; Nelson, A. PhenoRice: A method for automatic extraction of spatio-temporal information on rice crops using satellite data time series. Remote Sens. Environ. 2017, 194, 347–365.

- Xu, S.; Zhu, X.; Chen, J.; Zhu, X.; Duan, M.; Qiu, B.; Wan, L.; Tan, X.; Xu, Y.N.; Cao, R. A robust index to extract paddy fields in cloudy regions from SAR time series. Remote Sens. Environ. 2023, 285, 113374.

- He, Y.; Dong, J.; Liao, X.; Sun, L.; Wang, Z.; You, N.; Li, Z.; Fu, P. Examining rice distribution and cropping intensity in a mixed single- and double-cropping region in South China using all available Sentinel 1/2 images. Int. J. Appl. Earth Obs. Geoinf. 2021, 101, 102351.

- Peng, D.; Huete, A.R.; Huang, J.; Wang, F.; Sun, H. Detection and estimation of mixed paddy rice cropping patterns with MODIS data. Int. J. Appl. Earth Obs. Geoinf. 2011, 13, 13–23.

- Fritz, S.; See, L.; McCallum, I.; You, L.; Bun, A.; Moltchanova, E.; Duerauer, M.; Albrecht, F.; Schill, C.; Perger, C.; et al. Mapping global cropland and field size. Glob. Chang. Biol. 2015, 21, 1980–1992.

- Phiri, D.; Simwanda, M.; Salekin, S.; Nyirenda, V.R.; Murayama, Y.; Ranagalage, M. Sentinel-2 data for land cover/use mapping: A review. Remote Sens. 2020, 12, 2291.

- Chen, N.; Yu, L.; Zhang, X.; Shen, Y.; Zeng, L.; Hu, Q.; Niyogi, D. Mapping paddy rice fields by combining multi-temporal vegetation index and synthetic aperture radar remote sensing data using Google Earth Engine machine learning platform. Remote Sens. 2020, 12, 2992.

- Inoue, S.; Ito, A.; Yonezawa, C. Mapping paddy fields in Japan by using a Sentinel-1 SAR time series supplemented by Sentinel-2 images on Google Earth Engine. Remote Sens. 2020, 12, 1622.

- Karimi, N.; Taban, M.R. A convex variational method for super resolution of SAR image with speckle noise. Signal Process. Image Commun. 2021, 90, 116061.

- Zhan, P.; Zhu, W.; Li, N. An automated rice mapping method based on flooding signals in synthetic aperture radar time series. Remote Sens. Environ. 2021, 252, 112112.

- Zhu, Z.; Wang, S.; Woodcock, C.E. Improvement and expansion of the Fmask algorithm: Cloud, cloud shadow, and snow detection for Landsats 4–7, 8, and Sentinel 2 images. Remote Sens. Environ. 2015, 159, 269–277.

- Foga, S.; Scaramuzza, P.L.; Guo, S.; Zhu, Z.; Dilley, R.D., Jr.; Beckmann, T.; Schmidt, G.L.; Dwyer, J.L.; Hughes, M.J.; Laue, B. Cloud detection algorithm comparison and validation for operational Landsat data products. Remote Sens. Environ. 2017, 194, 379–390.

- Mateo-García, G.; Gómez-Chova, L.; Amorós-López, J.; Muñoz-Marí, J.; Camps-Valls, G. Multitemporal cloud masking in the Google Earth Engine. Remote Sens. 2018, 10, 1079.

- Skakun, S.; Wevers, J.; Brockmann, C.; Doxani, G.; Aleksandrov, M.; Batič, M.; Frantz, D.; Gascon, F.; Gómez-Chova, L.; Hagolle, O.; et al. Cloud Mask Intercomparison eXercise (CMIX): An evaluation of cloud masking algorithms for Landsat 8 and Sentinel-2. Remote Sens. Environ. 2022, 274, 112990.

- Frantz, D.; Haß, E.; Uhl, A.; Stoffels, J.; Hill, J. Improvement of the Fmask algorithm for Sentinel-2 images: Separating clouds from bright surfaces based on parallax effects. Remote Sens. Environ. 2018, 215, 471–481.

- Zekoll, V.; Main-Knorn, M.; Alonso, K.; Louis, J.; Frantz, D.; Richter, R.; Pflug, B. Comparison of masking algorithms for Sentinel-2 imagery. Remote Sens. 2021, 13, 137.

- Mullissa, A.; Vollrath, A.; Odongo-Braun, C.; Slagter, B.; Balling, J.; Gou, Y.; Gorelick, N.; Reiche, J. Sentinel-1 SAR backscatter analysis ready data preparation in Google Earth Engine. Remote Sens. 2021, 13, 1954.

- Zupanc, A. Improving Cloud Detection with Machine Learning. Available online: https://medium.com/sentinel-hub/improving-cloud-detection-with-machine-learning-c09dc5d7cf13 (accessed on 24 March 2024).

- Housman, I.W.; Chastain, R.A.; Finco, M.V. An evaluation of forest health insect and disease survey data and satellite-based remote sensing forest change detection methods: Case studies in the United States. Remote Sens. 2018, 10, 1184.

- Chastain, R.; Housman, I.; Goldstein, J.; Finco, M.; Tenneson, K. Empirical cross sensor comparison of Sentinel-2A and 2B MSI, Landsat-8 OLI, and Landsat-7 ETM+ top of atmosphere spectral characteristics over the conterminous United States. Remote Sens. Environ. 2019, 221, 274–285.

- Wang, C.; Wang, G.; Zhang, G.; Cui, Y.; Zhang, X.; He, Y.; Zhou, Y. Freshwater Aquaculture Mapping in “Home of Chinese Crawfish” by Using a Hierarchical Classification Framework and Sentinel-1/2 Data. Remote Sens. 2024, 16, 893.

- Wu, B.; Li, Q. Crop planting and type proportion method for crop acreage estimation of complex agricultural landscapes. Int. J. Appl. Earth Obs. Geoinf. 2012, 16, 101–112.

- Shen, R.; Pan, B.; Peng, Q.; Dong, J.; Chen, X.; Zhang, X.; Ye, T.; Huang, J.; Yuan, W. High-resolution distribution maps of single-season rice in China from 2017 to 2022. Earth Syst. Sci. Data Discuss. 2023, 15, 3203–3222.

- Weigand, M.; Staab, J.; Wurm, M.; Taubenböck, H. Spatial and semantic effects of LUCAS samples on fully automated land use/land cover classification in high-resolution Sentinel-2 data. Int. J. Appl. Earth Obs. Geoinf. 2020, 88, 102065.

- Zhu, W.; Peng, X.; Ding, M.; Li, L.; Liu, Y.; Liu, W.; Yang, M.; Chen, X.; Cai, J.; Huang, H.; et al. Decline in Planting Areas of Double-Season Rice by Half in Southern China over the Last Two Decades. Remote Sens. 2024, 16, 440.

- Chu, L.; Jiang, C.; Wang, T.; Li, Z.; Cai, C. Mapping and forecasting of rice cropping systems in central China using multiple data sources and phenology-based time-series similarity measurement. Adv. Space Res. 2021, 68, 3594–3609.

- Thorp, K.; Drajat, D. Deep machine learning with Sentinel satellite data to map paddy rice production stages across West Java, Indonesia. Remote Sens. Environ. 2021, 265, 112679.

- Bera, B.; Saha, S.; Bhattacharjee, S. Estimation of forest canopy cover and forest fragmentation mapping using Landsat satellite data of Silabati River Basin (India). KN J. Cartogr. Geogr. Inf. 2020, 70, 181–197.

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Huete, A.; Liu, H.; Batchily, K.; Van Leeuwen, W. A comparison of vegetation indices over a global set of TM images for EOS-MODIS. Remote Sens. Environ. 1997, 59, 440–451.

- Merzlyak, M.N.; Gitelson, A.A.; Chivkunova, O.B.; Rakitin, V.Y. Non-destructive optical detection of pigment changes during leaf senescence and fruit ripening. Physiol. Plant. 1999, 106, 135–141.

- Dash, J.; Jeganathan, C.; Atkinson, P. The use of MERIS Terrestrial Chlorophyll Index to study spatio-temporal variation in vegetation phenology over India. Remote Sens. Environ. 2010, 114, 1388–1402.

- Wang, Y.; Gong, Z.; Zhou, H. Long-term monitoring and phenological analysis of submerged aquatic vegetation in a shallow lake using time-series imagery. Ecol. Indic. 2023, 154, 110646.

- Li, K.; Chen, Y. A Genetic Algorithm-based urban cluster automatic threshold method by combining VIIRS DNB, NDVI, and NDBI to monitor urbanization. Remote Sens. 2018, 10, 277.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Mansaray, L.R.; Kanu, A.S.; Yang, L.; Huang, J.; Wang, F. Evaluation of machine learning models for rice dry biomass estimation and mapping using quad-source optical imagery. GISci. Remote Sens. 2020, 57, 785–796.

- Aghighi, H.; Azadbakht, M.; Ashourloo, D.; Shahrabi, H.S.; Radiom, S. Machine learning regression techniques for the silage maize yield prediction using time-series images of Landsat 8 OLI. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4563–4577.

- Abdi, A.M. Land cover and land use classification performance of machine learning algorithms in a boreal landscape using Sentinel-2 data. GISci. Remote Sens. 2020, 57, 1–20.

- Tolpekin, V.A.; Stein, A. Quantification of the effects of land-cover-class spectral separability on the accuracy of Markov-random-field-based superresolution mapping. IEEE Trans. Geosci. Remote Sens. 2009, 47, 3283–3297.

- Wang, Y.; Qi, Q.; Liu, Y. Unsupervised segmentation evaluation using area-weighted variance and Jeffries-Matusita distance for remote sensing images. Remote Sens. 2018, 10, 1193.

- Schmidt, K.; Skidmore, A. Spectral discrimination of vegetation types in a coastal wetland. Remote Sens. Environ. 2003, 85, 92–108.

- Jaccard, P. The distribution of the flora in the alpine zone. 1. New Phytol. 1912, 11, 37–50.

- Qiu, S.; Zhu, Z.; He, B. Fmask 4.0: Improved cloud and cloud shadow detection in Landsats 4–8 and Sentinel-2 imagery. Remote Sens. Environ. 2019, 231, 111205.

- Sanchez, A.H.; Picoli, M.C.A.; Camara, G.; Andrade, P.R.; Chaves, M.E.D.; Lechler, S.; Soares, A.R.; Marujo, R.F.; Simões, R.E.O.; Ferreira, K.R.; et al. Comparison of cloud cover detection algorithms on Sentinel-2 images of the Amazon tropical forest. Remote Sens. 2020, 12, 1284.

- Baetens, L.; Desjardins, C.; Hagolle, O. Validation of Copernicus Sentinel-2 cloud masks obtained from MAJA, Sen2Cor, and FMask processors using reference cloud masks generated with a supervised active learning procedure. Remote Sens. 2019, 11, 433.

| Band | Central Wavelength | Spatial Resolution | Purpose (Cloud-Mask Algorithm/VI) |
|---|---|---|---|
| Blue (B2) | 492.4 nm (S2A)/492.1 nm (S2B) | 10 m | CloudScore/BSI, EVI, PSRI |
| Green (B3) | 559.8 nm (S2A)/559.0 nm (S2B) | 10 m | CloudScore/GCVI, MNDWI |
| Red (B4) | 664.6 nm (S2A)/664.9 nm (S2B) | 10 m | CloudScore/BSI, NDVI, EVI, PSRI, MTCI |
| RE 1 (B5) | 704.1 nm (S2A)/703.8 nm (S2B) | 20 m | -/MTCI |
| RE 2 (B6) | 740.5 nm (S2A)/739.1 nm (S2B) | 20 m | -/PSRI, MTCI |
| RE 3 (B7) | 782.8 nm (S2A)/779.7 nm (S2B) | 20 m | CDI/- |
| NIR (B8) | 832.8 nm (S2A)/833.0 nm (S2B) | 10 m | CloudScore, CDI/BSI, NDVI, EVI, GCVI, LSWI, NDBI |
| NIR (B8A) | 864.7 nm (S2A)/864.0 nm (S2B) | 20 m | CDI/- |
| Cirrus (B10) | 1373.5 nm (S2A)/1376.9 nm (S2B) | 60 m | QA60/- |
| SWIR1 (B11) | 1613.7 nm (S2A)/1610.4 nm (S2B) | 20 m | CloudScore/BSI, MNDWI, NDBI |
| SWIR2 (B12) | 2202.4 nm (S2A)/2185.7 nm (S2B) | 20 m | CloudScore/LSWI |

| Class | Training (2018) | Training (2021) | Validation (2018) | Validation (2021) | Total (2018) | Total (2021) |
|---|---|---|---|---|---|---|
| Water body | 329 | 330 | 141 | 142 | 470 | 472 |
| Built-up area | 255 | 252 | 109 | 108 | 364 | 360 |
| Forest land | 265 | 258 | 113 | 110 | 378 | 368 |
| Dryland | 328 | 232 | 140 | 100 | 468 | 332 |
| Paddy rice | 410 | 535 | 176 | 229 | 586 | 764 |
| Total | 1587 | 1607 | 680 | 689 | 2266 | 2296 |

| Phenology Stage | Time Window (DD/MM) |
|---|---|
| Bare soil stage | 20/03–20/04 |
| Transplanting stage | 20/04–15/06 |
| Growing stage | 15/06–10/09 |
| Harvest stage | 10/09–25/10 |
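
The time windows above can be applied to an acquisition date with a simple lookup, sketched below. The function name `phenology_stage` is hypothetical, and two details are assumptions rather than statements from the paper: both window ends are treated as inclusive, and a date on a shared boundary (for example 20 April) is assigned to the earlier stage.

```python
from datetime import date

# (stage, (month, day) start, (month, day) end), taken from the table above
WINDOWS = [
    ("Bare soil stage", (3, 20), (4, 20)),
    ("Transplanting stage", (4, 20), (6, 15)),
    ("Growing stage", (6, 15), (9, 10)),
    ("Harvest stage", (9, 10), (10, 25)),
]


def phenology_stage(d):
    """Return the first stage whose window contains d, or None if outside all."""
    month_day = (d.month, d.day)
    for name, start, end in WINDOWS:
        if start <= month_day <= end:
            return name
    return None


print(phenology_stage(date(2018, 5, 23)))
print(phenology_stage(date(2021, 9, 9)))
```

Grouping images by stage in this way is what allows one composite per stage to be built from the cloud-masked observations.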

| Spectral Index | Expression | Reference |
|---|---|---|
| Bare Soil Index (BSI) | | [40] |
| Normalized Difference Vegetation Index (NDVI) | | [41] |
| Enhanced Vegetation Index (EVI) | | [42] |
| Green Chlorophyll Vegetation Index (GCVI) | | [15] |
| Plant Senescence Reflectance Index (PSRI) | | [43] |
| MERIS Terrestrial Chlorophyll Index (MTCI) | | [44] |
| Modified Normalized Difference Water Index (MNDWI) | | [45] |
| Land Surface Water Index (LSWI) | | [12] |
| Normalized Difference Built-Up Index (NDBI) | | [46] |
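
The indices listed above have widely used definitions, and the sketch below writes them out as functions of band reflectance. The formulas are the commonly published forms, not expressions confirmed by this paper, and the function names are illustrative. Band pairings follow the band table (B8 for NIR, B5 and B6 for RE 1 and RE 2, B11 for SWIR1); the LSWI short-wave band is left as a generic `swir` argument because the band table pairs LSWI with SWIR2 (B12). Inputs are assumed to be surface or top-of-atmosphere reflectance scaled to 0–1.

```python
import numpy as np


def _norm_diff(a, b):
    """Normalized difference (a - b) / (a + b)."""
    return (a - b) / (a + b)


def ndvi(nir, red):
    return _norm_diff(nir, red)


def lswi(nir, swir):
    return _norm_diff(nir, swir)


def mndwi(green, swir1):
    return _norm_diff(green, swir1)


def ndbi(swir1, nir):
    return _norm_diff(swir1, nir)


def evi(nir, red, blue):
    return 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)


def gcvi(nir, green):
    return nir / green - 1.0


def bsi(swir1, red, nir, blue):
    return ((swir1 + red) - (nir + blue)) / ((swir1 + red) + (nir + blue))


def psri(red, blue, re2):
    return (red - blue) / re2


def mtci(re2, re1, red):
    return (re2 - re1) / (re1 - red)


# Hypothetical reflectances for two pixels; the functions work elementwise
nir = np.array([0.40, 0.30])
red = np.array([0.10, 0.20])
print(ndvi(nir, red))
```

Each function accepts scalars or NumPy arrays, so the same code can be applied to a single sample or to a whole composite.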

| Data Type | Tile | Phenology Stage | Date | Cloud Cover (%) | Cloud Samples | Clear Samples | Total Samples |
|---|---|---|---|---|---|---|---|
| 2018TOA | 49REP | Bare soil stage | 3/4/2018 | 7.4915 | 161 | 240 | 401 |
| 2018TOA | 49REP | Transplanting stage | 23/5/2018 | 33.6128 | 235 | 182 | 417 |
| 2018TOA | 49REP | Growing stage | 27/6/2018 | 15.294 | 187 | 261 | 448 |
| 2018TOA | 49REP | Growing stage | 6/8/2018 | 23.0023 | 304 | 120 | 424 |
| 2018TOA | 49REP | Harvest stage | 10/10/2018 | 5.3781 | 84 | 389 | 473 |
| 2018TOA | 49RFP | Bare soil stage | 8/4/2018 | 16.5152 | 217 | 252 | 469 |
| 2018TOA | 49RFP | Transplanting stage | 28/4/2018 | 15.0278 | 260 | 225 | 485 |
| 2018TOA | 49RFP | Growing stage | 17/7/2018 | 45.5232 | 212 | 201 | 413 |
| 2018TOA | 49RFP | Growing stage | 1/8/2018 | 14.4641 | 251 | 178 | 429 |
| 2018TOA | 49RFP | Harvest stage | 15/9/2018 | 8.6775 | 253 | 259 | 512 |
| 2018TOA | 49RGP | Bare soil stage | 8/4/2018 | 15.6042 | 114 | 253 | 367 |
| 2018TOA | 49RGP | Transplanting stage | 8/5/2018 | 41.6684 | 281 | 133 | 414 |
| 2018TOA | 49RGP | Growing stage | 17/6/2018 | 49.8558 | 259 | 146 | 405 |
| 2018TOA | 49RGP | Growing stage | 22/7/2018 | 1.9465 | 204 | 237 | 441 |
| 2018TOA | 49RGP | Harvest stage | 10/10/2018 | 0.898 | 77 | 328 | 405 |
| 2021TOA | 49REP | Bare soil stage | 12/4/2021 | 8.066 | 262 | 205 | 467 |
| 2021TOA | 49REP | Transplanting stage | 11/6/2021 | 63.7011 | 352 | 108 | 460 |
| 2021TOA | 49REP | Growing stage | 5/8/2021 | 33.6629 | 220 | 191 | 411 |
| 2021TOA | 49REP | Growing stage | 9/9/2021 | 55.6306 | 267 | 155 | 422 |
| 2021TOA | 49REP | Harvest stage | 24/10/2021 | 10.93 | 179 | 247 | 426 |
| 2021TOA | 49RFP | Bare soil stage | 28/3/2021 | 47.4834 | 279 | 129 | 408 |
| 2021TOA | 49RFP | Transplanting stage | 1/6/2021 | 34.2679 | 273 | 156 | 429 |
| 2021TOA | 49RFP | Growing stage | 21/7/2021 | 26.6127 | 242 | 129 | 371 |
| 2021TOA | 49RFP | Growing stage | 4/9/2021 | 34.5427 | 190 | 198 | 388 |
| 2021TOA | 49RFP | Harvest stage | 24/10/2021 | 8.3527 | 263 | 160 | 423 |
| 2021TOA | 49RGP | Bare soil stage | 12/4/2021 | 33.8914 | 265 | 150 | 415 |
| 2021TOA | 49RGP | Transplanting stage | 17/5/2021 | 17.7569 | 227 | 202 | 429 |
| 2021TOA | 49RGP | Growing stage | 5/8/2021 | 19.443 | 261 | 152 | 413 |
| 2021TOA | 49RGP | Growing stage | 20/8/2021 | 32.4015 | 254 | 161 | 415 |
| 2021TOA | 49RGP | Harvest stage | 29/9/2021 | 18.0409 | 198 | 212 | 410 |
| 2021SR | 49REP | Bare soil stage | 12/4/2021 | 12.200443 | 262 | 205 | 467 |
| 2021SR | 49REP | Transplanting stage | 11/6/2021 | 75.641401 | 352 | 108 | 460 |
| 2021SR | 49REP | Growing stage | 5/8/2021 | 35.574219 | 220 | 191 | 411 |
| 2021SR | 49REP | Growing stage | 9/9/2021 | 47.876539 | 267 | 155 | 422 |
| 2021SR | 49REP | Harvest stage | 24/10/2021 | 7.954566 | 179 | 247 | 426 |
| 2021SR | 49RFP | Bare soil stage | 28/3/2021 | 61.947279 | 279 | 129 | 408 |
| 2021SR | 49RFP | Transplanting stage | 1/6/2021 | 46.110165 | 273 | 156 | 429 |
| 2021SR | 49RFP | Growing stage | 21/7/2021 | 17.516239 | 242 | 129 | 371 |
| 2021SR | 49RFP | Growing stage | 4/9/2021 | 27.806534 | 190 | 198 | 388 |
| 2021SR | 49RFP | Harvest stage | 24/10/2021 | 6.579728 | 263 | 160 | 423 |
| 2021SR | 49RGP | Bare soil stage | 12/4/2021 | 61.947279 | 265 | 150 | 415 |
| 2021SR | 49RGP | Transplanting stage | 17/5/2021 | 42.097832 | 227 | 202 | 429 |
| 2021SR | 49RGP | Growing stage | 5/8/2021 | 22.158073 | 261 | 152 | 413 |
| 2021SR | 49RGP | Growing stage | 20/8/2021 | 23.202318 | 254 | 161 | 415 |
| 2021SR | 49RGP | Harvest stage | 29/9/2021 | 9.179795 | 198 | 212 | 410 |

| Data Type | Tile | Algorithm | Cloud PA (%) | Cloud UA (%) | Clear PA (%) | Clear UA (%) | OA (%) |
|---|---|---|---|---|---|---|---|
| 2018TOA | REP | QA60 | 66.68 | 95.16 | 98.19 | 75.90 | 83.18 |
| 2018TOA | REP | S2cloudless | 92.47 | 98.09 | 97.28 | 96.48 | 96.94 |
| 2018TOA | REP | CloudScore | 83.10 | 93.69 | 95.46 | 92.13 | 93.45 |
| 2018TOA | REP | CDI | - | - | - | - | - |
| 2018TOA | RFP | QA60 | 72.67 | 96.97 | 97.48 | 78.05 | 84.46 |
| 2018TOA | RFP | S2cloudless | 95.97 | 92.06 | 87.27 | 95.82 | 92.01 |
| 2018TOA | RFP | CloudScore | 82.51 | 92.07 | 90.39 | 85.82 | 86.85 |
| 2018TOA | RFP | CDI | - | - | - | - | - |
| 2018TOA | RGP | QA60 | 58.42 | 96.31 | 95.40 | 72.62 | 80.09 |
| 2018TOA | RGP | S2cloudless | 92.92 | 97.23 | 95.70 | 96.55 | 96.47 |
| 2018TOA | RGP | CloudScore | 77.56 | 87.22 | 89.84 | 89.48 | 88.88 |
| 2018TOA | RGP | CDI | - | - | - | - | - |
| 2021TOA | REP | QA60 | 69.65 | 99.39 | 99.26 | 68.96 | 81.72 |
| 2021TOA | REP | S2cloudless | 99.29 | 98.10 | 97.07 | 99.13 | 98.54 |
| 2021TOA | REP | CloudScore | 99.57 | 96.16 | 95.67 | 99.41 | 97.72 |
| 2021TOA | REP | CDI | - | - | - | - | - |
| 2021TOA | RFP | QA60 | 67.86 | 96.73 | 97.21 | 69.78 | 77.71 |
| 2021TOA | RFP | S2cloudless | 99.02 | 98.66 | 97.50 | 98.38 | 98.52 |
| 2021TOA | RFP | CloudScore | 97.36 | 96.50 | 93.76 | 96.36 | 96.09 |
| 2021TOA | RFP | CDI | - | - | - | - | - |
| 2021TOA | RGP | QA60 | 47.50 | 95.90 | 97.22 | 58.22 | 69.21 |
| 2021TOA | RGP | S2cloudless | 96.97 | 97.75 | 95.78 | 96.54 | 96.86 |
| 2021TOA | RGP | CloudScore | 99.36 | 97.58 | 96.54 | 99.04 | 98.19 |
| 2021TOA | RGP | CDI | - | - | - | - | - |
| 2021SR | REP | QA60 | 69.88 | 99.39 | 99.26 | 69.10 | 81.82 |
| 2021SR | REP | S2cloudless | 99.51 | 98.11 | 97.07 | 99.29 | 98.64 |
| 2021SR | REP | CloudScore | 98.26 | 98.93 | 98.63 | 97.86 | 98.49 |
| 2021SR | REP | CDI | 98.31 | 81.06 | 59.16 | 95.09 | 83.70 |
| 2021SR | RFP | QA60 | 67.95 | 96.73 | 97.21 | 69.89 | 77.85 |
| 2021SR | RFP | S2cloudless | 99.09 | 98.66 | 97.50 | 98.46 | 98.64 |
| 2021SR | RFP | CloudScore | 93.68 | 98.89 | 98.21 | 92.45 | 95.47 |
| 2021SR | RFP | CDI | 99.28 | 87.75 | 72.83 | 97.78 | 89.92 |
| 2021SR | RGP | QA60 | 47.54 | 95.92 | 97.26 | 58.27 | 69.36 |
| 2021SR | RGP | S2cloudless | 96.98 | 97.75 | 95.78 | 96.57 | 96.92 |
| 2021SR | RGP | CloudScore | 99.12 | 97.95 | 97.16 | 98.69 | 98.28 |
| 2021SR | RGP | CDI | 92.40 | 79.49 | 63.85 | 87.17 | 81.68 |
| Total | | QA60 | 63.13 | 96.94 | 97.61 | 68.98 | 78.38 |
| Total | | S2cloudless | 96.91 | 97.38 | 95.66 | 97.47 | 97.06 |
| Total | | CloudScore | 92.28 | 95.33 | 95.07 | 94.58 | 94.82 |
| Total | | CDI | 96.63 | 82.77 | 65.28 | 93.35 | 85.10 |

| Algorithm Combination | Data | Class | Rice | Non-Rice | PA (%) | UA (%) | OA (%) | Kappa |
|---|---|---|---|---|---|---|---|---|
| QA60-RF | 2018 TOA | Rice | 149 | 27 | 84.66 | 78.84 | 90.15 | 0.75 |
| QA60-RF | 2018 TOA | Non-rice | 40 | 464 | 92.06 | 94.50 | | |
| QA60-RF | 2021 TOA | Rice | 171 | 5 | 97.16 | 95.53 | 98.09 | 0.95 |
| QA60-RF | 2021 TOA | Non-rice | 8 | 496 | 98.41 | 99.00 | | |
| QA60-RF | 2021 SR | Rice | 173 | 3 | 98.30 | 94.02 | 97.94 | 0.95 |
| QA60-RF | 2021 SR | Non-rice | 11 | 493 | 97.82 | 99.40 | | |
| S2cloudless-RF | 2018 TOA | Rice | 152 | 24 | 86.36 | 83.52 | 92.06 | 0.80 |
| S2cloudless-RF | 2018 TOA | Non-rice | 30 | 474 | 94.05 | 95.18 | | |
| S2cloudless-RF | 2021 TOA | Rice | 173 | 3 | 98.30 | 95.58 | 98.38 | 0.96 |
| S2cloudless-RF | 2021 TOA | Non-rice | 8 | 496 | 98.41 | 99.40 | | |
| S2cloudless-RF | 2021 SR | Rice | 171 | 5 | 97.16 | 97.16 | 98.53 | 0.96 |
| S2cloudless-RF | 2021 SR | Non-rice | 5 | 499 | 99.01 | 99.01 | | |
| CloudScore-RF | 2018 TOA | Rice | 143 | 33 | 81.25 | 83.14 | 90.88 | 0.76 |
| CloudScore-RF | 2018 TOA | Non-rice | 29 | 475 | 94.25 | 93.50 | | |
| CloudScore-RF | 2021 TOA | Rice | 172 | 4 | 97.73 | 95.03 | 98.09 | 0.95 |
| CloudScore-RF | 2021 TOA | Non-rice | 9 | 495 | 98.21 | 99.20 | | |
| CloudScore-RF | 2021 SR | Rice | 173 | 3 | 98.30 | 98.30 | 99.12 | 0.98 |
| CloudScore-RF | 2021 SR | Non-rice | 3 | 501 | 99.40 | 99.40 | | |
| CDI-RF | 2018 TOA | - | - | - | - | - | - | - |
| CDI-RF | 2021 TOA | - | - | - | - | - | - | - |
| CDI-RF | 2021 SR | Rice | 172 | 4 | 97.73 | 94.51 | 97.94 | 0.95 |
| CDI-RF | 2021 SR | Non-rice | 10 | 494 | 98.02 | 99.20 | | |
| QA60-SVM | 2018 TOA | Rice | 146 | 30 | 82.95 | 75.26 | 88.53 | 0.71 |
| QA60-SVM | 2018 TOA | Non-rice | 48 | 456 | 90.48 | 93.83 | | |
| QA60-SVM | 2021 TOA | Rice | 164 | 12 | 93.18 | 92.13 | 96.18 | 0.90 |
| QA60-SVM | 2021 TOA | Non-rice | 14 | 490 | 97.22 | 97.61 | | |
| QA60-SVM | 2021 SR | Rice | 170 | 6 | 96.59 | 94.44 | 97.65 | 0.94 |
| QA60-SVM | 2021 SR | Non-rice | 10 | 494 | 98.02 | 98.80 | | |
| S2cloudless-SVM | 2018 TOA | Rice | 151 | 25 | 85.80 | 78.24 | 90.15 | 0.75 |
| S2cloudless-SVM | 2018 TOA | Non-rice | 42 | 462 | 91.67 | 94.87 | | |
| S2cloudless-SVM | 2021 TOA | Rice | 170 | 6 | 96.59 | 93.41 | 97.35 | 0.93 |
| S2cloudless-SVM | 2021 TOA | Non-rice | 12 | 492 | 97.62 | 98.80 | | |
| S2cloudless-SVM | 2021 SR | Rice | 168 | 8 | 95.45 | 94.38 | 97.35 | 0.93 |
| S2cloudless-SVM | 2021 SR | Non-rice | 10 | 494 | 98.02 | 98.41 | | |
| CloudScore-SVM | 2018 TOA | Rice | 141 | 35 | 80.11 | 71.57 | 86.62 | 0.66 |
| CloudScore-SVM | 2018 TOA | Non-rice | 56 | 448 | 88.89 | 92.75 | | |
| CloudScore-SVM | 2021 TOA | Rice | 168 | 8 | 95.45 | 91.30 | 96.47 | 0.91 |
| CloudScore-SVM | 2021 TOA | Non-rice | 16 | 488 | 96.83 | 98.39 | | |
| CloudScore-SVM | 2021 SR | Rice | 167 | 9 | 94.89 | 94.35 | 97.21 | 0.93 |
| CloudScore-SVM | 2021 SR | Non-rice | 10 | 494 | 98.02 | 98.21 | | |
| CDI-SVM | 2018 TOA | - | - | - | - | - | - | - |
| CDI-SVM | 2021 TOA | - | - | - | - | - | - | - |
| CDI-SVM | 2021 SR | Rice | 168 | 8 | 95.45 | 93.33 | 97.06 | 0.92 |
| CDI-SVM | 2021 SR | Non-rice | 12 | 492 | 97.62 | 98.40 | | |
| QA60-CART | 2018 TOA | Rice | 146 | 30 | 82.95 | 75.26 | 88.53 | 0.71 |
| QA60-CART | 2018 TOA | Non-rice | 48 | 456 | 90.48 | 93.83 | | |
| QA60-CART | 2021 TOA | Rice | 170 | 6 | 96.59 | 91.40 | 96.76 | 0.92 |
| QA60-CART | 2021 TOA | Non-rice | 16 | 488 | 96.83 | 98.79 | | |
| QA60-CART | 2021 SR | Rice | 166 | 10 | 94.32 | 88.30 | 95.29 | 0.88 |
| QA60-CART | 2021 SR | Non-rice | 22 | 482 | 95.63 | 97.97 | | |
| S2cloudless-CART | 2018 TOA | Rice | 147 | 29 | 83.52 | 79.46 | 90.15 | 0.75 |
| S2cloudless-CART | 2018 TOA | Non-rice | 38 | 466 | 92.46 | 94.14 | | |
| S2cloudless-CART | 2021 TOA | Rice | 170 | 6 | 96.59 | 91.89 | 96.91 | 0.92 |
| S2cloudless-CART | 2021 TOA | Non-rice | 15 | 489 | 97.02 | 98.79 | | |
| S2cloudless-CART | 2021 SR | Rice | 172 | 4 | 97.73 | 90.05 | 96.62 | 0.91 |
| S2cloudless-CART | 2021 SR | Non-rice | 19 | 485 | 96.23 | 99.18 | | |
| CloudScore-CART | 2018 TOA | Rice | 138 | 38 | 78.41 | 73.02 | 86.91 | 0.67 |
| CloudScore-CART | 2018 TOA | Non-rice | 51 | 453 | 89.88 | 92.26 | | |
| CloudScore-CART | 2021 TOA | Rice | 174 | 2 | 98.86 | 88.32 | 96.32 | 0.91 |
| CloudScore-CART | 2021 TOA | Non-rice | 23 | 481 | 95.44 | 99.59 | | |
| CloudScore-CART | 2021 SR | Rice | 171 | 5 | 97.16 | 95.00 | 97.94 | 0.95 |
| CloudScore-CART | 2021 SR | Non-rice | 9 | 495 | 98.21 | 99.00 | | |
| CDI-CART | 2018 TOA | - | - | - | - | - | - | - |
| CDI-CART | 2021 TOA | - | - | - | - | - | - | - |
| CDI-CART | 2021 SR | Rice | 171 | 5 | 97.16 | 84.65 | 94.71 | 0.87 |
| CDI-CART | 2021 SR | Non-rice | 31 | 473 | 93.85 | 98.95 | | |
| QA60-GTB | 2018 TOA | Rice | 142 | 34 | 80.68 | 80.23 | 89.85 | 0.74 |
| QA60-GTB | 2018 TOA | Non-rice | 35 | 469 | 93.06 | 93.24 | | |
| QA60-GTB | 2021 TOA | Rice | 169 | 7 | 96.02 | 96.57 | 98.09 | 0.95 |
| QA60-GTB | 2021 TOA | Non-rice | 6 | 498 | 98.81 | 98.61 | | |
| QA60-GTB | 2021 SR | Rice | 172 | 4 | 97.73 | 93.99 | 97.79 | 0.94 |
| QA60-GTB | 2021 SR | Non-rice | 11 | 493 | 97.82 | 99.20 | | |
| S2cloudless-GTB | 2018 TOA | Rice | 153 | 23 | 86.93 | 80.95 | 91.32 | 0.78 |
| S2cloudless-GTB | 2018 TOA | Non-rice | 36 | 468 | 92.86 | 95.32 | | |
| S2cloudless-GTB | 2021 TOA | Rice | 171 | 5 | 97.16 | 95.53 | 98.09 | 0.95 |
| S2cloudless-GTB | 2021 TOA | Non-rice | 8 | 496 | 98.41 | 99.00 | | |
| S2cloudless-GTB | 2021 SR | Rice | 171 | 5 | 97.16 | 97.16 | 98.53 | 0.96 |
| S2cloudless-GTB | 2021 SR | Non-rice | 5 | 499 | 99.01 | 99.01 | | |
| CloudScore-GTB | 2018 TOA | Rice | 143 | 33 | 81.25 | 80.79 | 90.15 | 0.74 |
| CloudScore-GTB | 2018 TOA | Non-rice | 34 | 470 | 93.25 | 93.44 | | |
| CloudScore-GTB | 2021 TOA | Rice | 173 | 3 | 98.30 | 94.02 | 97.94 | 0.95 |
| CloudScore-GTB | 2021 TOA | Non-rice | 11 | 493 | 97.82 | 99.40 | | |
| CloudScore-GTB | 2021 SR | Rice | 169 | 7 | 96.02 | 96.02 | 97.94 | 0.95 |
| CloudScore-GTB | 2021 SR | Non-rice | 7 | 497 | 98.61 | 98.61 | | |
| CDI-GTB | 2018 TOA | - | - | - | - | - | - | - |
| CDI-GTB | 2021 TOA | - | - | - | - | - | - | - |
| CDI-GTB | 2021 SR | Rice | 171 | 5 | 97.16 | 93.96 | 97.65 | 0.94 |
| CDI-GTB | 2021 SR | Non-rice | 11 | 493 | 97.82 | 99.00 | | |
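
The accuracy measures in the table above can be reproduced from each 2 × 2 confusion matrix. The sketch below does this for the QA60-RF, 2018 TOA entry (149, 27 / 40, 464). It assumes that rows are reference classes and columns are mapped classes, which is consistent with the reported producer's accuracy of 149/176. The function name `binary_accuracy` and the dictionary keys are illustrative.

```python
def binary_accuracy(matrix):
    """Accuracy measures for a 2x2 matrix with rows = reference, cols = mapped.

    matrix = [[rice->rice, rice->non-rice], [non-rice->rice, non-rice->non-rice]]
    Returns PA/UA/OA in percent and Cohen's kappa.
    """
    (tp, fn), (fp, tn) = matrix
    n = tp + fn + fp + tn
    oa = (tp + tn) / n
    # Chance agreement: products of matching row and column totals
    pe = ((tp + fn) * (tp + fp) + (fp + tn) * (fn + tn)) / n ** 2
    return {
        "pa_rice": 100.0 * tp / (tp + fn),
        "ua_rice": 100.0 * tp / (tp + fp),
        "pa_non_rice": 100.0 * tn / (fp + tn),
        "ua_non_rice": 100.0 * tn / (fn + tn),
        "oa": 100.0 * oa,
        "kappa": (oa - pe) / (1.0 - pe),
    }


# QA60-RF, 2018 TOA confusion matrix from the table above
metrics = binary_accuracy([[149, 27], [40, 464]])
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

Rounded to two decimals, this gives PA 84.66 and UA 78.84 for rice, OA 90.15, and kappa 0.75, matching the tabulated values.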

| Period of Time | Cloud-Mask Algorithms | 2018TOA | 2021TOA | 2021SR | |||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Effective Observation Element Frequency/% | Effective Observation Element Frequency/% | Effective Observation Element Frequency/% | |||||||||||||||||

| >0 | (0, 20] | (20, 40] | (40, 60] | (60, 80] | (80, 100] | >0 | (0, 20] | (20, 40] | (40, 60] | (60, 80] | (80, 100] | >0 | (0, 20] | (20, 40] | (40, 60] | (60, 80] | (80, 100] | ||

| March | QA60 | 95.38 | 19.56 | 9.83 | 65.99 | 0.00 | 0.00 | 79.50 | 57.11 | 20.35 | 2.01 | 0.03 | 0.00 | 79.34 | 57.29 | 20.04 | 1.98 | 0.03 | 0.00 |

| S2cloudless | 71.00 | 56.08 | 13.55 | 1.36 | 0.00 | 0.00 | 61.85 | 54.69 | 6.91 | 0.24 | 0.00 | 0.00 | 61.62 | 54.65 | 6.73 | 0.24 | 0.00 | 0.00 | |

| CloudScore | 86.44 | 61.06 | 22.78 | 2.59 | 0.00 | 0.00 | 66.71 | 55.28 | 10.65 | 0.76 | 0.01 | 0.00 | 70.21 | 53.79 | 15.00 | 1.39 | 0.02 | 0.00 | |

| CDI | - | - | - | - | - | - | - | - | - | - | - | - | 46.57 | 44.55 | 2.02 | 0.00 | 0.00 | 0.00 | |

| April | QA60 | 100.00 | 0.02 | 3.20 | 39.05 | 56.02 | 1.71 | 92.96 | 60.70 | 31.89 | 0.37 | 0.00 | 0.00 | 92.96 | 60.80 | 31.88 | 0.28 | 0.00 | 0.00 |

| S2cloudless | 99.98 | 0.29 | 14.68 | 64.53 | 20.13 | 0.35 | 77.47 | 42.85 | 32.08 | 2.51 | 0.03 | 0.00 | 77.45 | 42.92 | 32.11 | 2.38 | 0.03 | 0.00 | |

| CloudScore | 99.31 | 0.66 | 4.66 | 51.95 | 38.61 | 3.41 | 93.08 | 31.61 | 53.31 | 7.54 | 0.61 | 0.00 | 95.68 | 23.41 | 60.48 | 11.02 | 0.75 | 0.01 | |

| CDI | - | - | - | - | - | - | - | - | - | - | - | - | 50.01 | 44.31 | 5.55 | 0.15 | 0.00 | 0.00 | |

| May | QA60 | 97.44 | 15.12 | 36.12 | 35.59 | 10.61 | 0.00 | 79.13 | 33.79 | 36.02 | 9.07 | 0.25 | 0.00 | 78.99 | 33.68 | 36.14 | 8.93 | 0.24 | 0.00 |

| S2cloudless | 95.71 | 14.96 | 36.24 | 38.17 | 6.33 | 0.01 | 82.55 | 32.68 | 39.23 | 10.59 | 0.05 | 0.00 | 82.51 | 32.67 | 39.37 | 10.42 | 0.04 | 0.00 | |

| CloudScore | 95.70 | 10.47 | 32.22 | 44.15 | 8.77 | 0.07 | 88.45 | 21.97 | 31.81 | 30.95 | 3.71 | 0.01 | 95.89 | 17.81 | 33.61 | 40.02 | 4.44 | 0.02 | |

| CDI | - | - | - | - | - | - | - | - | - | - | - | - | 70.15 | 42.88 | 23.61 | 3.66 | 0.00 | 0.00 | |

| June | QA60 | 96.81 | 11.25 | 35.65 | 45.03 | 4.88 | 0.00 | 100.00 | 8.67 | 46.54 | 32.93 | 10.78 | 1.08 | 100.00 | 8.73 | 46.62 | 32.79 | 10.79 | 1.07 |

| S2cloudless | 96.04 | 16.02 | 43.34 | 35.87 | 0.81 | 0.00 | 99.94 | 23.41 | 44.92 | 26.63 | 4.88 | 0.10 | 99.94 | 23.53 | 44.95 | 26.49 | 4.87 | 0.10 | |

| CloudScore | 97.70 | 9.14 | 38.79 | 46.49 | 3.27 | 0.00 | 98.61 | 15.36 | 37.13 | 35.37 | 9.63 | 1.11 | 99.34 | 9.62 | 32.23 | 42.89 | 13.04 | 1.54 | |

| CDI | - | - | - | - | - | - | - | - | - | - | - | - | 99.98 | 72.58 | 24.53 | 2.80 | 0.07 | 0.00 | |

| July | QA60 | 99.92 | 27.35 | 46.92 | 20.78 | 4.87 | 0.00 | 99.99 | 3.43 | 49.77 | 46.77 | 0.02 | 0.00 | 99.99 | 3.53 | 49.77 | 46.68 | 0.01 | 0.00 |

| S2cloudless | 99.42 | 20.03 | 43.91 | 31.40 | 4.07 | 0.00 | 99.74 | 7.66 | 53.42 | 38.61 | 0.05 | 0.00 | 99.74 | 7.81 | 53.33 | 38.55 | 0.05 | 0.00 | |

| CloudScore | 99.01 | 7.31 | 29.37 | 55.67 | 6.65 | 0.00 | 99.13 | 5.34 | 44.93 | 48.32 | 0.52 | 0.01 | 99.46 | 3.83 | 38.45 | 56.01 | 1.15 | 0.02 | |

| CDI | - | - | - | - | - | - | - | - | - | - | - | - | 92.41 | 44.70 | 39.48 | 8.23 | 0.00 | 0.00 | |

| August | QA60 | 99.98 | 0.83 | 6.18 | 21.45 | 30.88 | 40.64 | 99.58 | 12.44 | 47.20 | 39.88 | 0.06 | 0.00 | 99.57 | 12.52 | 47.24 | 39.80 | 0.01 | 0.00 |

| S2cloudless | 98.75 | 11.13 | 18.63 | 29.67 | 22.50 | 16.82 | 96.67 | 14.91 | 44.73 | 36.73 | 0.29 | 0.00 | 96.64 | 14.94 | 44.81 | 36.66 | 0.23 | 0.00 | |

| CloudScore | 98.91 | 5.71 | 12.83 | 26.24 | 26.11 | 28.00 | 97.91 | 12.19 | 38.17 | 46.83 | 0.71 | 0.00 | 98.74 | 9.95 | 35.41 | 51.65 | 1.72 | 0.00 | |

| CDI | - | - | - | - | - | - | - | - | - | - | - | - | 85.76 | 41.17 | 41.00 | 3.58 | 0.01 | 0.00 | |

| September | QA60 | 90.94 | 58.74 | 28.87 | 3.32 | 0.01 | 0.00 | 100.00 | 0.03 | 1.62 | 23.97 | 32.95 | 41.43 | 100.00 | 0.03 | 1.65 | 24.07 | 33.08 | 41.17 |

| S2cloudless | 84.95 | 65.28 | 18.96 | 0.70 | 0.01 | 0.00 | 99.98 | 0.15 | 2.85 | 23.54 | 29.96 | 43.48 | 99.98 | 0.15 | 2.85 | 23.58 | 29.98 | 43.42 | |

| CloudScore | 85.36 | 62.00 | 22.06 | 1.28 | 0.01 | 0.00 | 99.47 | 0.64 | 2.72 | 20.95 | 28.03 | 47.13 | 99.69 | 0.35 | 1.62 | 18.50 | 28.96 | 50.25 | |

| CDI | - | - | - | - | - | - | - | - | - | - | - | - | 99.74 | 3.15 | 17.20 | 31.81 | 26.54 | 21.04 | |

| October | QA60 | 99.54 | 2.64 | 32.29 | 61.82 | 2.79 | 0.00 | 100.00 | 4.56 | 85.98 | 9.41 | 0.05 | 0.00 | 100.00 | 4.66 | 86.07 | 9.23 | 0.04 | 0.00 |

| | S2cloudless | 99.92 | 1.77 | 28.12 | 68.21 | 1.81 | 0.00 | 99.96 | 4.00 | 89.46 | 6.41 | 6.03 | 0.08 | 99.96 | 4.14 | 89.51 | 6.22 | 0.08 | 0.00 |

| | CloudScore | 99.45 | 1.97 | 23.21 | 70.77 | 3.48 | 0.01 | 99.02 | 3.72 | 89.94 | 5.35 | 0.00 | 0.00 | 99.61 | 1.46 | 89.38 | 8.75 | 0.01 | 0.00 |

| | CDI | - | - | - | - | - | - | - | - | - | - | - | - | 99.99 | 7.63 | 91.02 | 1.33 | 0.00 | 0.00 |

| Bare soil stage | QA60 | 100.00 | 0.82 | 19.54 | 73.81 | 5.83 | 0.00 | 97.96 | 36.11 | 57.52 | 4.32 | 0.01 | 0.00 | 97.75 | 35.97 | 57.54 | 4.23 | 0.00 | 0.00 |

| 03/20–04/20 | S2cloudless | 99.98 | 0.48 | 17.77 | 79.89 | 1.84 | 0.00 | 88.24 | 35.38 | 46.89 | 5.73 | 0.24 | 0.00 | 88.22 | 35.44 | 46.86 | 5.69 | 0.22 | 0.00 |

| | CloudScore | 99.25 | 0.70 | 6.04 | 84.53 | 7.98 | 0.00 | 96.87 | 21.53 | 59.69 | 14.66 | 0.98 | 0.01 | 99.08 | 14.77 | 63.79 | 18.49 | 1.99 | 0.04 |

| | CDI | - | - | - | - | - | - | - | - | - | - | - | - | 62.98 | 46.10 | 16.74 | 0.14 | 0.00 | 0.00 |

| Transplanting stage | QA60 | 99.98 | 4.23 | 38.74 | 51.77 | 5.24 | 0.00 | 100.00 | 23.64 | 61.28 | 14.65 | 0.43 | 0.00 | 100.00 | 23.70 | 61.43 | 14.45 | 0.42 | 0.00 |

| 04/20–06/15 | S2cloudless | 99.09 | 13.10 | 53.51 | 32.05 | 0.43 | 0.00 | 99.96 | 29.72 | 58.43 | 11.73 | 0.08 | 0.00 | 99.96 | 29.75 | 58.60 | 11.53 | 0.08 | 0.00 |

| | CloudScore | 95.56 | 3.23 | 46.53 | 44.37 | 1.43 | 0.00 | 96.79 | 16.03 | 48.42 | 31.50 | 0.83 | 0.00 | 99.59 | 8.00 | 48.31 | 41.96 | 1.31 | 0.00 |

| | CDI | - | - | - | - | - | - | - | - | - | - | - | - | 99.99 | 60.56 | 37.45 | 1.98 | 0.00 | 0.00 |

| Growth stage | QA60 | 100.00 | 0.17 | 25.55 | 68.10 | 6.18 | 0.00 | 100.00 | 1.85 | 70.21 | 27.66 | 0.28 | 0.00 | 100.00 | 1.91 | 70.26 | 27.56 | 0.27 | 0.00 |

| 06/15–09/10 | S2cloudless | 99.99 | 3.95 | 48.07 | 45.09 | 2.88 | 0.00 | 99.99 | 9.43 | 61.59 | 28.88 | 0.08 | 0.00 | 99.99 | 9.48 | 61.66 | 28.76 | 0.08 | 0.00 |

| | CloudScore | 99.59 | 1.66 | 23.45 | 65.42 | 9.06 | 0.00 | 99.67 | 5.72 | 49.71 | 42.83 | 1.41 | 0.00 | 99.81 | 2.78 | 42.92 | 52.12 | 1.99 | 0.00 |

| | CDI | - | - | - | - | - | - | - | - | - | - | - | - | 92.17 | 35.52 | 42.99 | 13.66 | 0.00 | 0.00 |

| Harvest stage | QA60 | 100.00 | 3.67 | 81.12 | 14.90 | 0.31 | 0.00 | 100.00 | 0.00 | 0.45 | 92.90 | 6.65 | 0.00 | 100.00 | 0.00 | 0.56 | 92.84 | 6.59 | 0.00 |

| 09/10–10/25 | S2cloudless | 99.97 | 6.48 | 84.45 | 8.95 | 0.09 | 0.00 | 99.98 | 0.02 | 2.15 | 96.04 | 1.76 | 0.00 | 99.98 | 0.02 | 2.30 | 95.89 | 1.76 | 0.00 |

| | CloudScore | 99.38 | 5.81 | 72.02 | 21.41 | 0.14 | 0.00 | 99.34 | 0.37 | 2.33 | 92.67 | 3.97 | 0.00 | 99.67 | 0.23 | 1.24 | 90.98 | 7.22 | 0.00 |

| | CDI | - | - | - | - | - | - | - | - | - | - | - | - | 99.99 | 0.03 | 16.09 | 83.65 | 0.22 | 0.00 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gao, X.; Chi, H.; Huang, J.; Han, Y.; Li, Y.; Ling, F. Comparison of Cloud-Mask Algorithms and Machine-Learning Methods Using Sentinel-2 Imagery for Mapping Paddy Rice in Jianghan Plain. Remote Sens. 2024, 16, 1305. https://doi.org/10.3390/rs16071305