A Deep-Learning-Based Guidewire Compliant Control Method for the Endovascular Surgery Robot

Abstract

1. Introduction

2. Experimental Platform

2.1. Slave End

2.2. Master End

2.3. Vascular Phantom

3. Hidden Collisions Detection

3.1. Datasets

3.2. GCCM-Net

| Models | Params | FLOPs | FPS | | | | |
|---|---|---|---|---|---|---|---|
| Mobile-net [29] | 3.29 M | 11.12 | 50.34 | 90.58 ± 0.48% | 88.12 ± 0.81% | 86.21 ± 1.82% | 92.94 ± 0.41% |
| Shuffle-net [30] | 0.95 M | 7.47 | 53.70 | 89.39 ± 0.47% | 87.55 ± 1.03% | 85.29 ± 1.30% | 92.26 ± 0.73% |
| Squeeze-net [31] | 1.83 M | 37.55 | 106.78 | 88.10 ± 0.98% | 85.65 ± 1.53% | 81.84 ± 3.03% | 90.82 ± 0.44% |
| CNN-net | 4.01 M | 181.07 | 112.82 | 89.55 ± 0.97% | 87.96 ± 0.44% | 85.59 ± 2.13% | 91.94 ± 0.90% |
| SAL-net | 4.25 M | 187.88 | 99.27 | 91.10 ± 0.61% | 88.90 ± 0.42% | 86.36 ± 1.95% | 93.79 ± 0.25% |
| CCL-net | 1.11 M | 30.73 | 61.19 | 91.50 ± 0.44% | 89.16 ± 1.07% | 88.53 ± 0.84% | 94.19 ± 0.91% |
| GCCM-net | 1.24 M | 45.13 | 89.72 | 92.41 ± 0.31% | 89.68 ± 0.40% | 87.55 ± 1.71% | 94.86 ± 0.31% |
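The four right-hand columns of the table above report each score as mean ± standard deviation. The following is a minimal sketch of how such an entry could be produced, assuming (the text above does not state this) that each model was evaluated over several repeated runs and that the sample standard deviation is used. The function name `summarize` and the per-run scores are hypothetical placeholders, not data from the paper.

```python
from statistics import mean, stdev


def summarize(scores_percent):
    """Format per-run scores (in percent) as 'mean ± std%'.

    Assumption: the spread is the sample standard deviation (n - 1);
    the source does not say which estimator was used.
    """
    m = mean(scores_percent)
    s = stdev(scores_percent)  # requires at least two runs
    return f"{m:.2f} ± {s:.2f}%"


# Hypothetical scores from five repeated runs (placeholders only).
runs = [92.0, 92.5, 92.3, 92.8, 92.4]
print(summarize(runs))
```

Reporting the spread alongside the mean makes it possible to judge whether a gap between two models, such as the gap between CCL-net and GCCM-net in a given column, is larger than the run-to-run variation.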

3.3. Ablation Studies

3.4. Class Activation Mapping

4. Guidewire Compliance Control Strategy

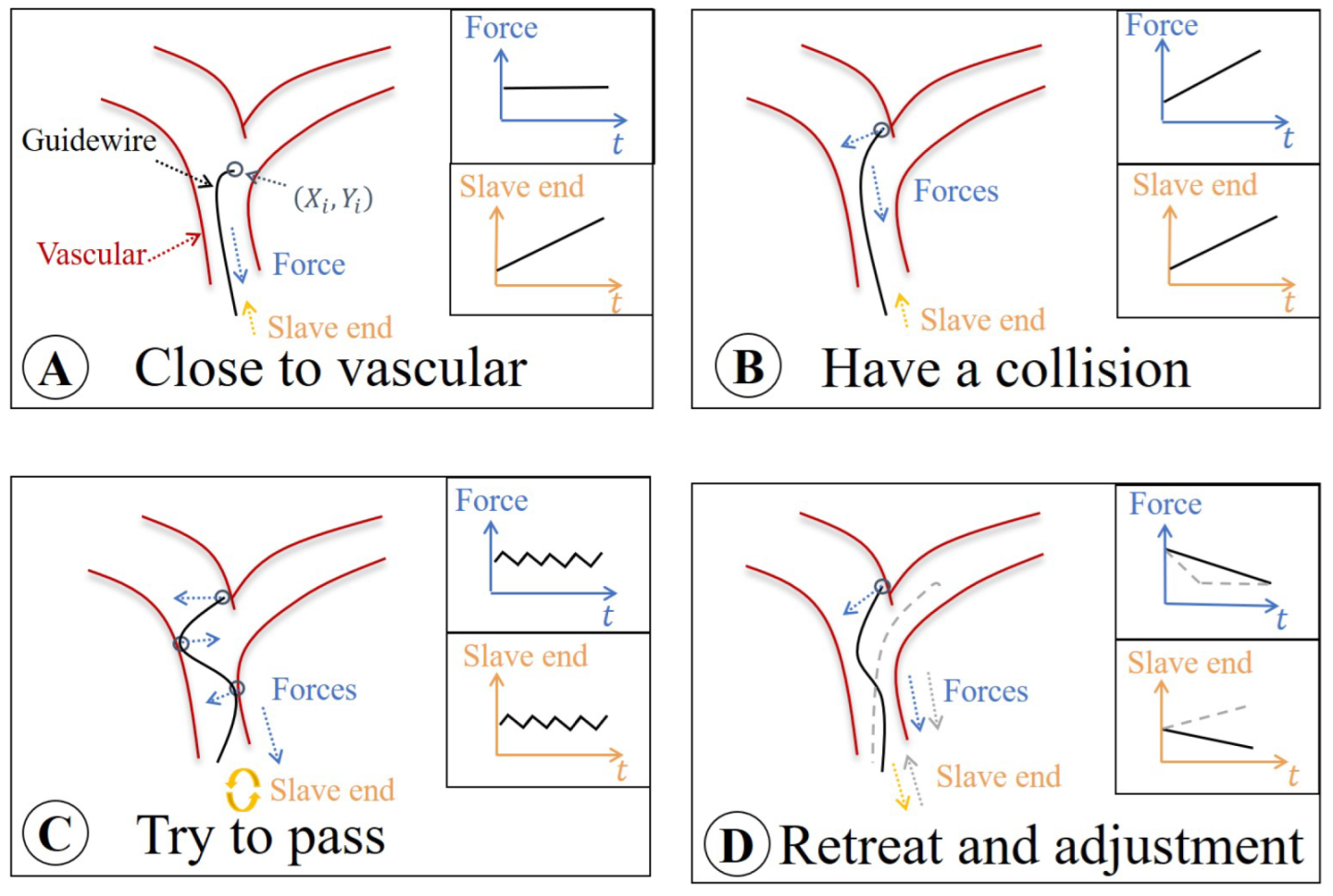

4.1. Surgical Risk Classification

- Safe area A: The slave end advances the guidewire through a collision-free region, so the load cell measures only the friction force.

- Risk area B: As the slave end continues to advance, the guidewire tip touches the vascular wall and a “hidden collision” occurs. The feedback force becomes the sum of the collision and friction forces.

- Dangerous area C: To push the guidewire onward through the vessel, the operator rotates the slave end. The appropriate maneuver depends on the vascular morphology, and the feedback force fluctuates. Improper operation at this stage is dangerous and may puncture the vascular wall.

- Safe area D: The operator recognizes the danger, retracts the guidewire, and adjusts the movement.
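The four areas above can be illustrated with a small rule-based sketch. This is not the GCCM-strategy, which relies on a learned detector. It only encodes the qualitative description in the list: friction only in A, an added collision force in B, a fluctuating force in C, and retreat in D. All names and threshold values (`friction_mN`, `margin_mN`, `fluct_mN`, the 10-sample window) are hypothetical and chosen purely for illustration.

```python
from enum import Enum
from statistics import pstdev


class Area(Enum):
    SAFE_A = "A"       # advancing, friction force only
    RISK_B = "B"       # hidden collision: collision + friction force
    DANGEROUS_C = "C"  # fluctuating feedback force
    SAFE_D = "D"       # operator retreats and adjusts


def classify(forces_mN, advancing,
             friction_mN=50.0, margin_mN=20.0, fluct_mN=15.0):
    """Assign recent load-cell readings to one of the four areas.

    forces_mN: non-empty list of recent force readings in mN.
    advancing: True while the guidewire is being pushed forward.
    The three thresholds are illustrative assumptions, not paper values.
    """
    if not advancing:
        return Area.SAFE_D
    window = forces_mN[-10:]          # most recent samples only
    if pstdev(window) > fluct_mN:     # strong fluctuation -> area C
        return Area.DANGEROUS_C
    if window[-1] > friction_mN + margin_mN:  # force exceeds friction -> area B
        return Area.RISK_B
    return Area.SAFE_A


print(classify([50, 51, 49, 50], advancing=True))       # steady friction
print(classify([60, 120, 40, 130, 50], advancing=True))  # fluctuating force
```

A fixed threshold of this kind is fragile, because friction varies with insertion depth and vessel shape.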

4.2. GCCM-Strategy

4.3. Evaluation Experiments

- Human hand (HH): The guidewire is manipulated directly by hand.

- Robot only (RO): The guidewire is controlled by the robot.

- Robot with force sensors (RF): The robot controls the guidewire and provides force feedback based on the force sensors.

- Robot with GCCM-strategy (RG): The robot controls the guidewire and provides force feedback based on the GCCM-strategy.
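For bookkeeping during analysis, the four conditions above could be encoded as a small lookup table. The sketch below is only one possible encoding: the class and field names are hypothetical, and reading RO as "no force feedback" is an interpretation of "robot only" rather than something the list states explicitly.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Condition:
    code: str
    robot_controlled: bool
    feedback_source: Optional[str]  # None = no robot-generated force feedback


# Encoding of the four experimental conditions listed above.
CONDITIONS = {
    "HH": Condition("HH", robot_controlled=False, feedback_source=None),
    "RO": Condition("RO", robot_controlled=True, feedback_source=None),  # assumed
    "RF": Condition("RF", robot_controlled=True, feedback_source="force sensors"),
    "RG": Condition("RG", robot_controlled=True, feedback_source="GCCM-strategy"),
}

# Conditions in which the robot supplies force feedback to the operator.
with_feedback = [c.code for c in CONDITIONS.values() if c.feedback_source]
print(with_feedback)
```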

5. Discussion on the Application of GCCM

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Biso, S.M.R.; Vidovich, M.I. Radiation Protection in the Cardiac Catheterization Laboratory. J. Thorac. Dis. 2020, 12, 1648–1655.

2. Peters, B.S.; Armijo, P.R.; Krause, C.; Choudhury, S.A.; Oleynikov, D. Review of Emerging Surgical Robotic Technology. Surg. Endosc. 2018, 32, 1636–1655.

3. Patel, T.M.; Shah, S.C.; Pancholy, S.B. Long Distance Tele-Robotic-Assisted Percutaneous Coronary Intervention: A Report of First-in-Human Experience. EClinicalMedicine 2019, 14, 53–58.

4. Jamshidi, A.M.; Spiotta, A.M.; Burks, J.D.; Starke, R.M. Robotics in Cerebrovascular and Endovascular Neurosurgery. In Introduction to Robotics in Minimally Invasive Neurosurgery; Al-Salihi, M.M., Tubbs, R.S., Ayyad, A., Goto, T., Maarouf, M., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 11–24.

5. Abdelaziz, M.E.M.K.; Tian, L.; Hamady, M.; Yang, G.Z.; Temelkuran, B. X-Ray to MR: The Progress of Flexible Instruments for Endovascular Navigation. Prog. Biomed. Eng. 2021, 3, 032004.

6. Shi, P.; Guo, S.; Zhang, L.; Jin, X.; Hirata, H.; Tamiya, T.; Kawanishi, M. Design and Evaluation of a Haptic Robot-Assisted Catheter Operating System With Collision Protection Function. IEEE Sens. J. 2021, 21, 20807–20816.

7. Bao, X.; Guo, S.; Guo, Y.; Yang, C.; Shi, L.; Li, Y.; Jiang, Y. Multilevel Operation Strategy of a Vascular Interventional Robot System for Surgical Safety in Teleoperation. IEEE Trans. Robot. 2022, 38, 2238–2250.

8. Jin, X.; Guo, S.; Guo, J.; Shi, P.; Tamiya, T.; Kawanishi, M.; Hirata, H. Total Force Analysis and Safety Enhancing for Operating Both Guidewire and Catheter in Endovascular Surgery. IEEE Sens. J. 2021, 21, 22499–22509.

9. Bao, X.; Guo, S.; Xiao, N.; Li, Y.; Yang, C.; Shen, R.; Cui, J.; Jiang, Y.; Liu, X.; Liu, K. Operation Evaluation In-Human of a Novel Remote-Controlled Vascular Interventional Robot. Biomed. Microdevices 2018, 20, 34.

10. Hooshiar, A.; Najarian, S.; Dargahi, J. Haptic Telerobotic Cardiovascular Intervention: A Review of Approaches, Methods, and Future Perspectives. IEEE Rev. Biomed. Eng. 2020, 13, 32–50.

11. Pandya, H.J.; Sheng, J.; Desai, J.P. MEMS-Based Flexible Force Sensor for Tri-Axial Catheter Contact Force Measurement. J. Microelectromechanical Syst. 2017, 26, 264–272.

12. Akinyemi, T.O.; Omisore, O.M.; Duan, W.; Lu, G.; Al-Handerish, Y.; Han, S.; Wang, L. Fiber Bragg Grating-Based Force Sensing in Robot-Assisted Cardiac Interventions: A Review. IEEE Sens. J. 2021, 21, 10317–10331.

13. Schneider, P.A. Endovascular Skills: Guidewire and Catheter Skills for Endovascular Surgery, 4th ed.; CRC Press: Boca Raton, FL, USA, 2019.

14. Guan, S.; Wang, T.; Sun, K.; Meng, C. Transfer Learning for Nonrigid 2D/3D Cardiovascular Images Registration. IEEE J. Biomed. Health Inform. 2021, 25, 3300–3309.

15. Dagnino, G.; Liu, J.; Abdelaziz, M.E.M.K.; Chi, W.; Riga, C.; Yang, G.Z. Haptic Feedback and Dynamic Active Constraints for Robot-Assisted Endovascular Catheterization. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1770–1775.

16. Razban, M.; Dargahi, J.; Boulet, B. A Sensor-less Catheter Contact Force Estimation Approach in Endovascular Intervention Procedures. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 2100–2106.

17. Li, R.Q.; Xie, X.L.; Zhou, X.H.; Liu, S.Q.; Ni, Z.L.; Zhou, Y.J.; Bian, G.B.; Hou, Z.G. Real-Time Multi-Guidewire Endpoint Localization in Fluoroscopy Images. IEEE Trans. Med. Imaging 2021, 40, 2002–2014.

18. Chi, W.; Dagnino, G.; Kwok, T.M.Y.; Nguyen, A.; Kundrat, D.; Abdelaziz, M.E.M.K.; Riga, C.; Bicknell, C.; Yang, G.Z. Collaborative Robot-Assisted Endovascular Catheterization with Generative Adversarial Imitation Learning. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Virtual, 31 May–31 August 2020; IEEE: Paris, France, 2020; pp. 2414–2420.

19. Chi, W.; Liu, J.; Abdelaziz, M.E.M.K.; Dagnino, G.; Riga, C.; Bicknell, C.; Yang, G.Z. Trajectory Optimization of Robot-Assisted Endovascular Catheterization with Reinforcement Learning. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: Madrid, Spain, 2018; pp. 3875–3881.

20. Yang, Z.; Yang, L.; Zhang, M.; Wang, Q.; Yu, S.C.H.; Zhang, L. Magnetic Control of a Steerable Guidewire Under Ultrasound Guidance Using Mobile Electromagnets. IEEE Robot. Autom. Lett. 2021, 6, 1280–1287.

21. Hayakawa, N.; Kodera, S.; Ohki, N.; Kanda, J. Efficacy and Safety of Endovascular Therapy by Diluted Contrast Digital Subtraction Angiography in Patients with Chronic Kidney Disease. Heart Vessel. 2019, 34, 1740–1747.

22. Zhao, Y.; Xing, H.; Guo, S.; Wang, Y.; Cui, J.; Ma, Y.; Liu, Y.; Liu, X.; Feng, J.; Li, Y. A Novel Noncontact Detection Method of Surgeon’s Operation for a Master-Slave Endovascular Surgery Robot. Med. Biol. Eng. Comput. 2020, 58, 871–885.

23. Bao, X.; Guo, S.; Shi, L.; Xiao, N. Design and Evaluation of Sensorized Robot for Minimally Vascular Interventional Surgery. Microsyst. Technol. 2019, 25, 2759–2766.

24. Cohen, G.; Afshar, S.; Tapson, J.; van Schaik, A. EMNIST: Extending MNIST to Handwritten Letters. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 2921–2926.

25. Feichtenhofer, C. X3D: Expanding Architectures for Efficient Video Recognition. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 200–210.

26. Carreira, J.; Zisserman, A. Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4724–4733.

27. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

28. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv 2018, arXiv:1807.06521.

29. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

30. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

31. Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level Accuracy with 50× Fewer Parameters and <0.5MB Model Size. arXiv 2016, arXiv:1602.07360v1.

32. Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning Deep Features for Discriminative Localization. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

33. Voelker, W.; Petri, N.; Tönissen, C.; Störk, S.; Birkemeyer, R.; Kaiser, E.; Oberhoff, M. Does Simulation-Based Training Improve Procedural Skills of Beginners in Interventional Cardiology?-A Stratified Randomized Study. J. Interv. Cardiol. 2016, 29, 75–82.

34. Welch, G.F. Kalman Filter. In Computer Vision: A Reference Guide; Ikeuchi, K., Ed.; Springer: Boston, MA, USA, 2014; pp. 435–437.

| Name of Datasets | Number of Samples | | | | |
|---|---|---|---|---|---|
| 8578 | 5664 | | | | |
| 4085 | 2736 | | | | |
| 1867 | 1230 | | | | |
| - | - | - | 14,530 | 9630 | |

| Environments | Conditions | t (s) | F (mN) | (mm/s) | (mN) | (%) |
|---|---|---|---|---|---|---|
| Visible | HH | - | - | - | | |
| | RO | | | | | |
| | RF | | | | | |
| | RG | | | | | |
| Invisible | HH | - | - | - | | |
| | RO | | | | | |
| | RF | | | | | |
| | RG | | | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lyu, C.; Guo, S.; Zhou, W.; Yan, Y.; Yang, C.; Wang, Y.; Meng, F. A Deep-Learning-Based Guidewire Compliant Control Method for the Endovascular Surgery Robot. Micromachines 2022, 13, 2237. https://doi.org/10.3390/mi13122237
