Characterizing Malignant Melanoma Clinically Resembling Seborrheic Keratosis Using Deep Knowledge Transfer

Abstract

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

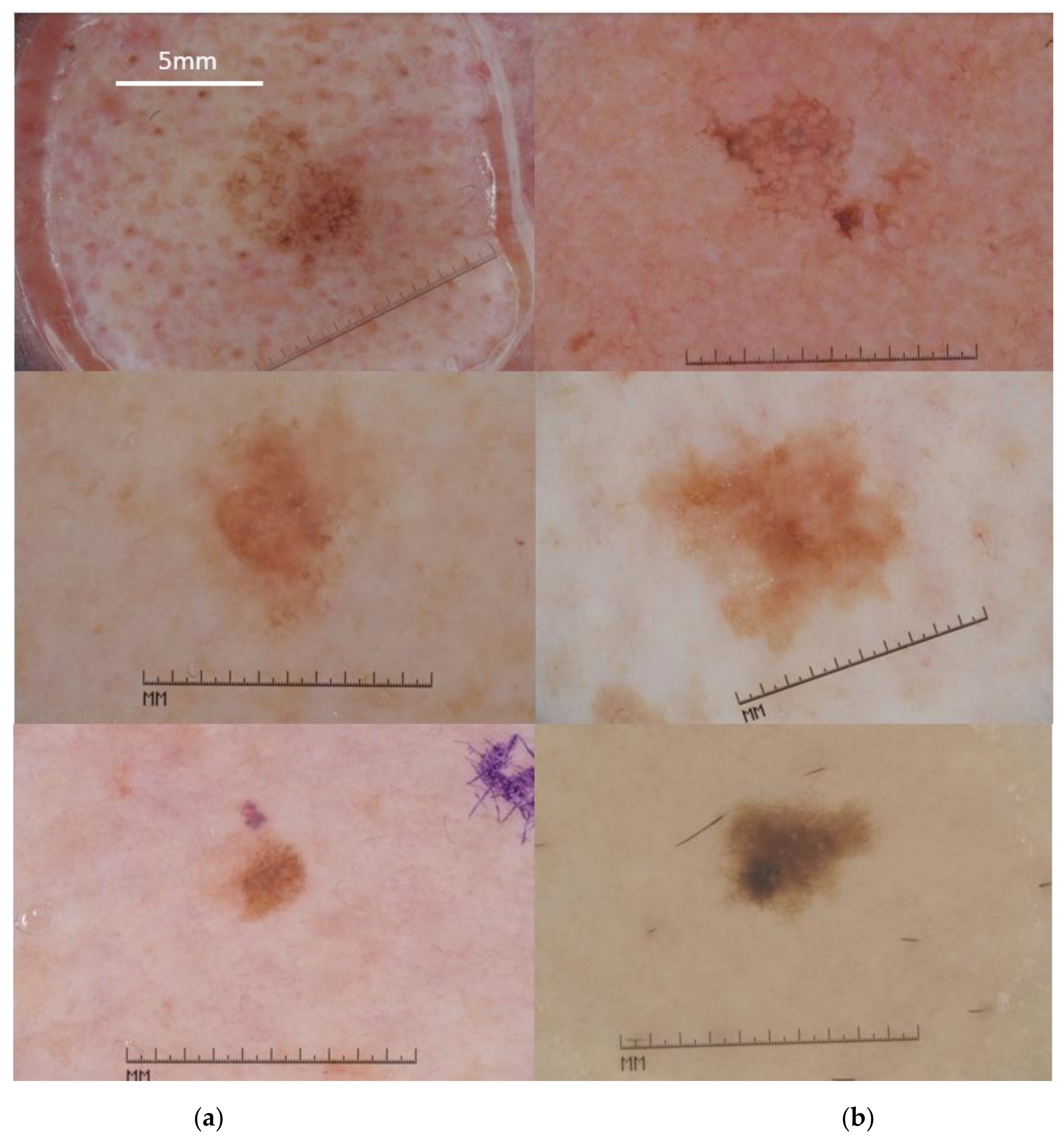

2.1. Data Set Description

2.2. Feature Extraction Using Deep Knowledge Transfer

2.3. Implementation and Evaluation of the Diagnostic Model

2.4. Image Preprocessing

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Patient Characteristics | MM | SK |
|---|---|---|
| Female | 240 | 195 |
| Male | 248 | 230 |
| Undefined | 62 | 3 |
| Mean Age (years) | 60.8 | 64 |
| Median Age (years) | 65 | 65 |
| Standard Deviation (SD) of Age (years) | 15.9 | 13.3 |
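As a quick check on class balance, the sex counts in the patient table can be summed per class. This is a minimal sketch, assuming the Female, Male and Undefined rows are mutually exclusive and together cover every case; `class_totals` and `class_fractions` are hypothetical helper names, not part of the study.

```python
from __future__ import annotations

# Counts copied from the patient-characteristics table
# (MM = malignant melanoma, SK = seborrheic keratosis).
# Assumption: the three sex categories are exhaustive and mutually exclusive.
sex_counts = {
    "MM": {"Female": 240, "Male": 248, "Undefined": 62},
    "SK": {"Female": 195, "Male": 230, "Undefined": 3},
}

def class_totals(counts: dict[str, dict[str, int]]) -> dict[str, int]:
    """Sum the sex categories to recover the number of cases per class."""
    return {label: sum(groups.values()) for label, groups in counts.items()}

def class_fractions(totals: dict[str, int]) -> dict[str, float]:
    """Share of each class in the pooled data set."""
    n = sum(totals.values())
    return {label: count / n for label, count in totals.items()}

totals = class_totals(sex_counts)
print(totals)  # {'MM': 550, 'SK': 428}
print(class_fractions(totals))
```

Under that assumption MM makes up a little over half of the pooled cases, so the two classes are roughly balanced.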

| CNN | Layer | Image Representation (Activation) | Feature Vector Dimension (d) |
|---|---|---|---|
| VGG16 | Pool2 | 56 × 56 × 128 | 128 |
| | Pool3 | 28 × 28 × 256 | 256 |
| | Pool4 | 14 × 14 × 512 | 512 |
| | Pool5 | 7 × 7 × 512 | 512 |
| | FC6 | 1 × 1 × 4096 | 4096 |
| | FC7 | 1 × 1 × 4096 | 4096 |
| ResNet50 | ReLU_10 | 56 × 56 × 256 | 256 |
| | ReLU_22 | 28 × 28 × 512 | 512 |
| | ReLU_40 | 14 × 14 × 1024 | 1024 |
| | ReLU_49 | 7 × 7 × 2048 | 2048 |
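In the layer table, the feature vector dimension d always equals the channel count C of the H × W × C activation, which is what pooling over the two spatial axes would produce. The pooling operator is not stated here, so the sketch below assumes global average pooling and feeds random arrays in place of real VGG16 or ResNet50 outputs; `pool_activation` is a hypothetical name.

```python
import numpy as np

def pool_activation(activation: np.ndarray) -> np.ndarray:
    """Collapse an H x W x C activation map into a C-dimensional vector.

    Assumption: global average pooling over the two spatial axes. The
    source lists only the resulting dimension d, not the operator.
    """
    if activation.ndim != 3:
        raise ValueError("expected an H x W x C array")
    return activation.mean(axis=(0, 1))

# Activation shapes (H, W, C) copied from the layer table.
vgg16_shapes = {
    "Pool2": (56, 56, 128),
    "Pool3": (28, 28, 256),
    "Pool4": (14, 14, 512),
    "Pool5": (7, 7, 512),
    "FC6": (1, 1, 4096),
    "FC7": (1, 1, 4096),
}
resnet50_shapes = {
    "ReLU_10": (56, 56, 256),
    "ReLU_22": (28, 28, 512),
    "ReLU_40": (14, 14, 1024),
    "ReLU_49": (7, 7, 2048),
}

rng = np.random.default_rng(0)
for name, shapes in (("VGG16", vgg16_shapes), ("ResNet50", resnet50_shapes)):
    for layer, shape in shapes.items():
        # Random stand-in for a real CNN activation of this shape.
        activation = rng.random(shape, dtype=np.float32)
        features = pool_activation(activation)
        print(f"{name} {layer}: {shape} -> d = {features.shape[0]}")
```

For the fully connected layers (1 × 1 × 4096) the averaging is just a reshape, so the same function covers every row of the table.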

| CNN | Layer | SVM Model | Sensitivity (%) | Specificity (%) | Accuracy (%) |
|---|---|---|---|---|---|
| VGG16 | Pool2 | Polynomial | 56.7 | 86.4 | 67.2 |
| | Pool3 | Gaussian | 78.6 | 84.5 | 80.7 |
| | Pool4 | Linear | 68.2 | 90.9 | 75.2 |
| | Pool5 | | 59.2 | 85.4 | 68.5 |
| | FC6 | | 57.2 | 86.4 | 67.5 |
| | FC7 | | 62.2 | 82.7 | 69.4 |
| ResNet50 | ReLU_10 | Polynomial | 68.1 | 86.4 | 74.6 |
| | ReLU_22 | Gaussian | 76.1 | 85.4 | 79.4 |
| | ReLU_40 | Linear | 70.6 | 89.1 | 77.2 |
| | ReLU_49 | | 62.7 | 86.4 | 71.1 |
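Sensitivity, specificity and accuracy in the SVM results table are standard confusion-matrix ratios. The sketch below shows how they relate, assuming MM is the positive class and using made-up counts; `binary_metrics` and `accuracy_from_rates` are hypothetical helpers, not the authors' code.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class BinaryMetrics:
    sensitivity: float  # % of MM cases classified as MM
    specificity: float  # % of SK cases classified as SK
    accuracy: float     # % of all cases classified correctly

def binary_metrics(tp: int, fn: int, tn: int, fp: int) -> BinaryMetrics:
    """Percent metrics from confusion counts, with MM as the positive class
    (an assumption of this sketch)."""
    sensitivity = 100.0 * tp / (tp + fn)
    specificity = 100.0 * tn / (tn + fp)
    accuracy = 100.0 * (tp + tn) / (tp + fn + tn + fp)
    return BinaryMetrics(sensitivity, specificity, accuracy)

def accuracy_from_rates(sensitivity: float, specificity: float, pos_fraction: float) -> float:
    """Accuracy as the prevalence-weighted mean of sensitivity and specificity."""
    return pos_fraction * sensitivity + (1.0 - pos_fraction) * specificity

# Hypothetical counts for illustration only; they are not study data.
m = binary_metrics(tp=80, fn=20, tn=45, fp=5)
print(m)  # sensitivity 80.0, specificity 90.0, accuracy about 83.3
print(accuracy_from_rates(m.sensitivity, m.specificity, pos_fraction=100 / 150))
```

Because accuracy is a weighted mean of the other two rates, it must lie between them for any row, and with a fixed test set the implied weight should be the same across rows.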

| | Pool2 | Pool4 | Pool5 | FC6 | FC7 | ReLU_22 |
|---|---|---|---|---|---|---|
| Pool3 | p < 0.001 | p < 0.001 | p < 0.001 | p < 0.001 | p < 0.001 | |
| Pool2 | - | p < 0.001 | | | | p < 0.001 |
| Pool4 | - | - | p < 0.05 | p < 0.001 | p < 0.05 | p < 0.001 |
| Pool5 | - | - | - | | | p < 0.001 |
| FC6 | - | - | - | - | p < 0.05 | p < 0.001 |
| FC7 | - | - | - | - | - | p < 0.001 |
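The pairwise table reports p-values for classifier comparisons without naming the test in this excerpt. McNemar's test is a common choice for comparing two classifiers scored on the same cases, so the sketch below assumes it; it uses the chi-square approximation with Edwards' continuity correction, and `mcnemar_p_value` and `discordant_counts` are hypothetical names.

```python
import math

def mcnemar_p_value(b: int, c: int, continuity: bool = True) -> float:
    """Two-sided McNemar test (assumed here; the source does not name its test).

    b: cases classifier A got right and classifier B got wrong
    c: cases classifier A got wrong and classifier B got right
    Uses the chi-square approximation with 1 degree of freedom.
    """
    n = b + c
    if n == 0:
        return 1.0  # the classifiers never disagree
    diff = abs(b - c)
    if continuity:
        diff = max(diff - 1, 0)  # Edwards' continuity correction
    chi2 = diff * diff / n
    # Survival function of chi-square with 1 dof: P(X > x) = erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(chi2 / 2.0))

def discordant_counts(y_true, pred_a, pred_b):
    """Count the off-diagonal cells of the paired 2 x 2 correctness table."""
    b = sum(1 for t, a, p in zip(y_true, pred_a, pred_b) if a == t and p != t)
    c = sum(1 for t, a, p in zip(y_true, pred_a, pred_b) if a != t and p == t)
    return b, c

# Hypothetical labels and predictions (1 = MM, 0 = SK), not study data.
y_true = [1, 0, 1, 0]
pred_a = [1, 0, 0, 0]
pred_b = [0, 0, 1, 1]
b, c = discordant_counts(y_true, pred_a, pred_b)
print(b, c, mcnemar_p_value(b, c))
```

Only the cases on which the two classifiers disagree enter the statistic, which is why two feature sets with similar accuracies can still differ significantly when their errors fall on different lesions.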

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Spyridonos, P.; Gaitanis, G.; Likas, A.; Bassukas, I. Characterizing Malignant Melanoma Clinically Resembling Seborrheic Keratosis Using Deep Knowledge Transfer. Cancers 2021, 13, 6300. https://doi.org/10.3390/cancers13246300

Spyridonos P, Gaitanis G, Likas A, Bassukas I. Characterizing Malignant Melanoma Clinically Resembling Seborrheic Keratosis Using Deep Knowledge Transfer. Cancers. 2021; 13(24):6300. https://doi.org/10.3390/cancers13246300

Chicago/Turabian Style: Spyridonos, Panagiota, George Gaitanis, Aristidis Likas, and Ioannis Bassukas. 2021. "Characterizing Malignant Melanoma Clinically Resembling Seborrheic Keratosis Using Deep Knowledge Transfer" Cancers 13, no. 24: 6300. https://doi.org/10.3390/cancers13246300