SBXception: A Shallower and Broader Xception Architecture for Efficient Classification of Skin Lesions

Simple Summary

Abstract

1. Introduction

- We analyze the characteristics of the adopted dataset (HAM10000) to show that network depth beyond an optimal level may not be suitable for classification tasks on this dataset.

- A new shallower and broader variant of the Xception model is proposed to classify various skin lesions efficiently.

- The proposed architecture achieves higher classification accuracy than the state-of-the-art methods it is compared with.

2. The Proposed Approach

2.1. Dataset and Input Images Preparation

2.2. Model Architecture

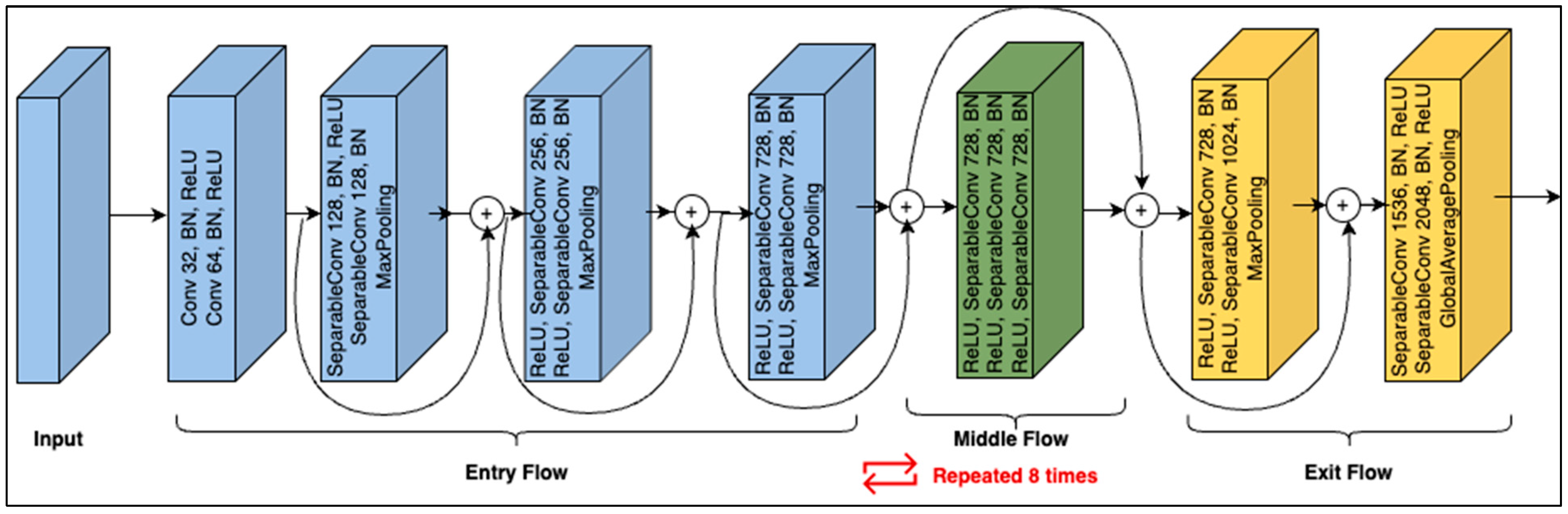

2.2.1. The Base Model

2.2.2. Shortening the Architecture

2.2.3. Broadening the Architecture

2.3. Fine-Tuning and Testing

3. Experiments

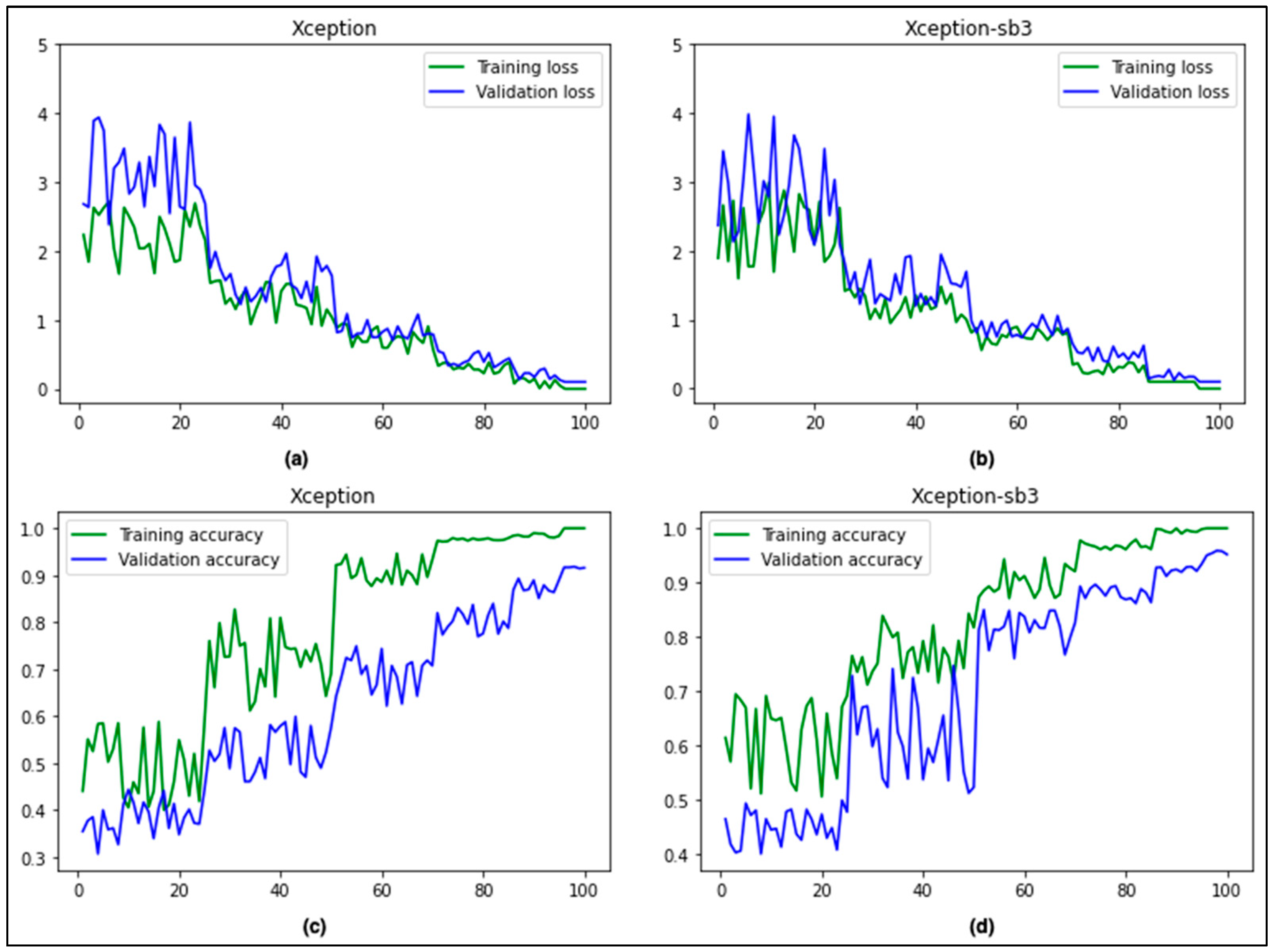

Performance Evaluation of the Proposed Approach

4. Conclusions and Future Work

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- WHO. Key Facts about Cancer. Available online: https://www.who.int/news-room/fact-sheets/detail/cancer (accessed on 20 May 2023).

- Arora, R.; Raman, B.; Nayyar, K.; Awasthi, R. Automated Skin Lesion Segmentation Using Attention-Based Deep Convolutional Neural Network. Biomed. Signal Process. Control 2021, 65, 102358.

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-Level Classification of Skin Cancer with Deep Neural Networks. Nature 2017, 542, 115–118.

- Kricker, A.; Armstrong, B.K.; English, D.R. Sun Exposure and Non-Melanocytic Skin Cancer. Cancer Causes Control 1994, 5, 367–392.

- Armstrong, B.K.; Kricker, A. The Epidemiology of UV Induced Skin Cancer. J. Photochem. Photobiol. B 2001, 63, 8–18.

- American Cancer Society. Available online: https://www.cancer.org/cancer/melanoma-skin-cancer/about/key-statistics.html (accessed on 20 May 2023).

- Larre Borges, A.; Nicoletti, S.; Dufrechou, L.; Nicola Centanni, A. Dermatoscopy in the Public Health Environment. Dermatol. Public. Health Environ. 2018, 1157–1188.

- Kasmi, R.; Mokrani, K. Classification of Malignant Melanoma and Benign Skin Lesions: Implementation of Automatic ABCD Rule. IET Image Process. 2016, 10, 448–455.

- Yu, Z.; Jiang, F.; Zhou, F.; He, X.; Ni, D.; Chen, S.; Wang, T.; Lei, B. Convolutional Descriptors Aggregation via Cross-Net for Skin Lesion Recognition. Appl. Soft Comput. 2020, 92, 106281.

- Celebi, M.E.; Kingravi, H.A.; Uddin, B.; Iyatomi, H.; Aslandogan, Y.A.; Stoecker, W.V.; Moss, R.H. A Methodological Approach to the Classification of Dermoscopy Images. Comput. Med. Imaging Graph. 2007, 31, 362–373.

- Goel, N.; Yadav, A.; Singh, B.M. Breast Cancer Segmentation Recognition Using Explored DCT-DWT Based Compression. Recent. Pat. Eng. 2020, 16, 55–64.

- Oliveira, R.B.; Pereira, A.S.; Tavares, J.M.R.S. Skin Lesion Computational Diagnosis of Dermoscopic Images: Ensemble Models Based on Input Feature Manipulation. Comput. Methods Programs Biomed. 2017, 149, 43–53.

- Hosny, K.M.; Kassem, M.A.; Foaud, M.M. Classification of Skin Lesions Using Transfer Learning and Augmentation with Alex-Net. PLoS ONE 2019, 14, e0217293.

- Gulzar, Y.; Khan, S.A. Skin Lesion Segmentation Based on Vision Transformers and Convolutional Neural Networks—A Comparative Study. Appl. Sci. 2022, 12, 5990.

- Yélamos, O.; Braun, R.P.; Liopyris, K.; Wolner, Z.J.; Kerl, K.; Gerami, P.; Marghoob, A.A. Usefulness of Dermoscopy to Improve the Clinical and Histopathologic Diagnosis of Skin Cancers. J. Am. Acad. Dermatol. 2019, 80, 365–377.

- Saba, T.; Khan, M.A.; Rehman, A.; Marie-Sainte, S.L. Region Extraction and Classification of Skin Cancer: A Heterogeneous Framework of Deep CNN Features Fusion and Reduction. J. Med. Syst. 2019, 43, 289.

- Emanuelli, M.; Sartini, D.; Molinelli, E.; Campagna, R.; Pozzi, V.; Salvolini, E.; Simonetti, O.; Campanati, A.; Offidani, A. The Double-Edged Sword of Oxidative Stress in Skin Damage and Melanoma: From Physiopathology to Therapeutical Approaches. Antioxidants 2022, 11, 612.

- Ferlay, J.; Colombet, M.; Soerjomataram, I.; Parkin, D.M.; Piñeros, M.; Znaor, A.; Bray, F. Cancer Statistics for the Year 2020: An Overview. Int. J. Cancer 2021, 149, 778–789.

- American Cancer Society. Cancer Facts and Figures. Available online: https://www.cancer.org/content/dam/cancer-org/research/cancer-facts-and-statistics/annual-cancer-facts-and-figures/2022/2022-cancer-facts-and-figures.pdf (accessed on 20 May 2023).

- Arbyn, M.; Weiderpass, E.; Bruni, L.; de Sanjosé, S.; Saraiya, M.; Ferlay, J.; Bray, F. Estimates of Incidence and Mortality of Cervical Cancer in 2018: A Worldwide Analysis. Lancet Glob. Health 2020, 8, e191–e203.

- Australian Government. Melanoma of the Skin Statistics. Available online: https://www.canceraustralia.gov.au/cancer-types/melanoma/statistics (accessed on 19 June 2022).

- Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA Cancer J. Clin. 2021, 71, 209–249.

- Silverberg, E.; Boring, C.C.; Squires, T.S. Cancer Statistics, 1990. CA Cancer J. Clin. 1990, 40, 9–26.

- Alam, S.; Raja, P.; Gulzar, Y. Investigation of Machine Learning Methods for Early Prediction of Neurodevelopmental Disorders in Children. Wirel. Commun. Mob. Comput. 2022, 2022, 5766386.

- Hamid, Y.; Elyassami, S.; Gulzar, Y.; Balasaraswathi, V.R.; Habuza, T.; Wani, S. An Improvised CNN Model for Fake Image Detection. Int. J. Inf. Technol. 2022, 15, 5–15.

- Anand, V.; Gupta, S.; Gupta, D.; Gulzar, Y.; Xin, Q.; Juneja, S.; Shah, A.; Shaikh, A. Weighted Average Ensemble Deep Learning Model for Stratification of Brain Tumor in MRI Images. Diagnostics 2023, 13, 1320.

- Haggenmüller, S.; Maron, R.C.; Hekler, A.; Utikal, J.S.; Barata, C.; Barnhill, R.L.; Beltraminelli, H.; Berking, C.; Betz-Stablein, B.; Blum, A.; et al. Skin Cancer Classification via Convolutional Neural Networks: Systematic Review of Studies Involving Human Experts. Eur. J. Cancer 2021, 156, 202–216.

- Khan, S.A.; Gulzar, Y.; Turaev, S.; Peng, Y.S. A Modified HSIFT Descriptor for Medical Image Classification of Anatomy Objects. Symmetry 2021, 13, 1987.

- Khan, M.A.; Akram, T.; Sharif, M.; Kadry, S.; Nam, Y. Computer Decision Support System for Skin Cancer Localization and Classification. Comput. Mater. Contin. 2021, 68, 1041–1064.

- Mijwil, M.M. Skin Cancer Disease Images Classification Using Deep Learning Solutions. Multimed. Tools Appl. 2021, 80, 26255–26271.

- Khamparia, A.; Singh, P.K.; Rani, P.; Samanta, D.; Khanna, A.; Bhushan, B. An Internet of Health Things-Driven Deep Learning Framework for Detection and Classification of Skin Cancer Using Transfer Learning. Trans. Emerg. Telecommun. Technol. 2021, 32, e3963.

- Ayoub, S.; Gulzar, Y.; Rustamov, J.; Jabbari, A.; Reegu, F.A.; Turaev, S. Adversarial Approaches to Tackle Imbalanced Data in Machine Learning. Sustainability 2023, 15, 7097.

- Ahmad, B.; Jun, S.; Palade, V.; You, Q.; Mao, L.; Zhongjie, M. Improving Skin Cancer Classification Using Heavy-Tailed Student t-Distribution in Generative Adversarial Networks (Ted-Gan). Diagnostics 2021, 11, 2147.

- Kausar, N.; Hameed, A.; Sattar, M.; Ashraf, R.; Imran, A.S.; Ul Abidin, M.Z.; Ali, A. Multiclass Skin Cancer Classification Using Ensemble of Fine-Tuned Deep Learning Models. Appl. Sci. 2021, 11, 10593.

- Attique Khan, M.; Sharif, M.; Akram, T.; Kadry, S.; Hsu, C.H. A Two-Stream Deep Neural Network-Based Intelligent System for Complex Skin Cancer Types Classification. Int. J. Intell. Syst. 2022, 37, 10621–10649.

- Deepa, D.; Muthukumaran, V.; Vinodhini, V.; Selvaraj, S.; Sandeep Kumar, M.; Prabhu, J. Uncertainty Quantification to Improve the Classification of Melanoma and Basal Skin Cancer Using ResNet Model. J. Uncertain. Syst. 2023, 16, 2242010.

- Tahir, M.; Naeem, A.; Malik, H.; Tanveer, J.; Naqvi, R.A.; Lee, S.W. DSCC_Net: Multi-Classification Deep Learning Models for Diagnosing of Skin Cancer Using Dermoscopic Images. Cancers 2023, 15, 2179.

- Shaheen, H.; Singh, M.P. Multiclass Skin Cancer Classification Using Particle Swarm Optimization and Convolutional Neural Network with Information Security. J. Electron. Imaging 2022, 32, 42102.

- Zhang, Z.; Liu, Q.; Wang, Y. Road Extraction by Deep Residual U-Net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

- Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 Dataset, a Large Collection of Multi-Source Dermatoscopic Images of Common Pigmented Skin Lesions. Sci. Data 2018, 5, 180161.

- Shi, J.; Li, Z.; Ying, S.; Wang, C.; Liu, Q.; Zhang, Q.; Yan, P. MR Image Super-Resolution via Wide Residual Networks with Fixed Skip Connection. IEEE J. Biomed. Health Inf. 2019, 23, 1129–1140.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

- Shi, C.; Xia, R.; Wang, L. A Novel Multi-Branch Channel Expansion Network for Garbage Image Classification. IEEE Access 2020, 8, 154436–154452.

- Hu, G.; Peng, X.; Yang, Y.; Hospedales, T.M.; Verbeek, J. Frankenstein: Learning Deep Face Representations Using Small Data. IEEE Trans. Image Process. 2018, 27, 293–303.

- Liu, Z.; Chen, Y.; Chen, B.; Zhu, L.; Wu, D.; Shen, G. Crowd Counting Method Based on Convolutional Neural Network with Global Density Feature. IEEE Access 2019, 7, 88789–88798.

- Ding, Y.; Chen, F.; Zhao, Y.; Wu, Z.; Zhang, C.; Wu, D. A Stacked Multi-Connection Simple Reducing Net for Brain Tumor Segmentation. IEEE Access 2019, 7, 104011–104024.

- Mehmood, A. Efficient Anomaly Detection in Crowd Videos Using Pre-Trained 2D Convolutional Neural Networks. IEEE Access 2021, 9, 138283–138295.

- Mehmood, A.; Doulamis, A. LightAnomalyNet: A Lightweight Framework for Efficient Abnormal Behavior Detection. Sensors 2021, 21, 8501.

- Montúfar, G.; Pascanu, R.; Cho, K.; Bengio, Y. On the Number of Linear Regions of Deep Neural Networks. Adv. Neural Inf. Process Syst. 2014, 4, 2924–2932.

- Bengio, Y.; LeCun, Y. Scaling Learning Algorithms towards AI. Large-Scale Kernel Mach. 2007, 34, 1–41.

- Chen, L.; Wang, H.; Zhao, J.; Koutris, P.; Papailiopoulos, D. The Effect of Network Width on the Performance of Large-Batch Training. Adv. Neural Inf. Process Syst. 2018, 31, 9302–9309.

- Wu, Z.; Shen, C.; van den Hengel, A. Wider or Deeper: Revisiting the ResNet Model for Visual Recognition. Pattern Recognit. 2019, 90, 119–133.

- Naeem, A.; Anees, T.; Fiza, M.; Naqvi, R.A.; Lee, S.W. SCDNet: A Deep Learning-Based Framework for the Multiclassification of Skin Cancer Using Dermoscopy Images. Sensors 2022, 22, 5652.

- Calderón, C.; Sanchez, K.; Castillo, S.; Arguello, H. BILSK: A Bilinear Convolutional Neural Network Approach for Skin Lesion Classification. Comput. Methods Programs Biomed. Update 2021, 1, 100036.

- Jain, S.; Singhania, U.; Tripathy, B.; Nasr, E.A.; Aboudaif, M.K.; Kamrani, A.K. Deep Learning-Based Transfer Learning for Classification of Skin Cancer. Sensors 2021, 21, 8142.

- Fraiwan, M.; Faouri, E. On the Automatic Detection and Classification of Skin Cancer Using Deep Transfer Learning. Sensors 2022, 22, 4963.

- Saarela, M.; Geogieva, L. Robustness, Stability, and Fidelity of Explanations for a Deep Skin Cancer Classification Model. Appl. Sci. 2022, 12, 9545.

- Alam, T.M.; Shaukat, K.; Khan, W.A.; Hameed, I.A.; Almuqren, L.A.; Raza, M.A.; Aslam, M.; Luo, S. An Efficient Deep Learning-Based Skin Cancer Classifier for an Imbalanced Dataset. Diagnostics 2022, 12, 2115.

| Class | Total Images | Training (Original) | Training (Augmented) | Testing |
|---|---|---|---|---|
| AKIEC | 327 | 262 | 5478 | 65 |
| BCC | 514 | 411 | 5168 | 103 |
| BKL | 1099 | 879 | 5515 | 220 |
| DF | 115 | 91 | 4324 | 24 |
| MEL | 1113 | 891 | 5088 | 222 |
| NV | 6705 | 5364 | 6306 | 1341 |
| VASC | 142 | 113 | 5317 | 29 |
| Total | 10015 | 8011 | 37197 | 2004 |
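
The dataset table implies a split of roughly 80/20 inside every class, and an augmentation factor that depends strongly on the class. A minimal Python sketch, assuming nothing beyond the counts transcribed from the table (it does not reproduce how the images were actually split or augmented), makes both quantities explicit and compares class imbalance before and after augmentation:

```python
# Per-class counts transcribed from the dataset table:
# class -> (total images, training original, training augmented, testing)
COUNTS = {
    "AKIEC": (327, 262, 5478, 65),
    "BCC": (514, 411, 5168, 103),
    "BKL": (1099, 879, 5515, 220),
    "DF": (115, 91, 4324, 24),
    "MEL": (1113, 891, 5088, 222),
    "NV": (6705, 5364, 6306, 1341),
    "VASC": (142, 113, 5317, 29),
}


def split_summary(counts):
    """Per-class test fraction and augmentation factor, plus class-imbalance ratios."""
    rows = {}
    for cls, (total, train, train_aug, test) in counts.items():
        # Each original image belongs to exactly one of the two splits.
        if train + test != total:
            raise ValueError(f"{cls}: training + testing != total")
        rows[cls] = {
            "test_fraction": test / total,
            "augmentation_factor": train_aug / train,
        }
    train_sizes = [c[1] for c in counts.values()]
    aug_sizes = [c[2] for c in counts.values()]
    # Ratio of the largest to the smallest class before and after augmentation.
    imbalance_before = max(train_sizes) / min(train_sizes)
    imbalance_after = max(aug_sizes) / min(aug_sizes)
    return rows, imbalance_before, imbalance_after


if __name__ == "__main__":
    rows, before, after = split_summary(COUNTS)
    for cls, r in rows.items():
        print(f"{cls:<6} test share {r['test_fraction']:.3f}, "
              f"augmented x{r['augmentation_factor']:.1f}")
    print(f"largest/smallest class (original training set):  {before:.1f}")
    print(f"largest/smallest class (augmented training set): {after:.2f}")
```

On these counts every class keeps about 20% of its images for testing, and augmentation shrinks the largest-to-smallest training-class ratio from roughly 59:1 (NV vs. DF) to under 1.5:1.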

| Modified Structure | Accuracy (AKIEC) | Accuracy (BCC) | Accuracy (BKL) | Accuracy (DF) | Accuracy (MEL) | Accuracy (NV) | Accuracy (VASC) | No. of Parameters |
|---|---|---|---|---|---|---|---|---|
| Xception | 0.9413 | 0.9412 | 0.9323 | 0.9195 | 0.9444 | 0.9455 | 0.9520 | 20,873,774 |
| Xception-m7 | 0.9413 | 0.9412 | 0.9323 | 0.9195 | 0.9444 | 0.9455 | 0.9520 | 19,255,430 |
| Xception-m6 | 0.9430 | 0.9429 | 0.9340 | 0.9212 | 0.9461 | 0.9472 | 0.9537 | 17,637,086 |
| Xception-m5 | 0.9449 | 0.9448 | 0.9359 | 0.9231 | 0.9480 | 0.9491 | 0.9556 | 16,018,742 |
| Xception-m4 | 0.9422 | 0.9405 | 0.9321 | 0.9213 | 0.9482 | 0.9503 | 0.9569 | 14,400,398 |
| Xception-m3 | 0.9470 | 0.9469 | 0.9380 | 0.9252 | 0.9501 | 0.9512 | 0.9577 | 12,782,054 |
| Xception-m2 | 0.9501 | 0.9491 | 0.9421 | 0.9273 | 0.9532 | 0.9523 | 0.9599 | 11,163,710 |
| Xception-m1 | 0.9512 | 0.9498 | 0.9434 | 0.9273 | 0.9538 | 0.9531 | 0.9599 | 9,545,366 |
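
The parameter column drops by exactly 1,618,344 at every step. That is consistent with each "-mk" variant keeping k of Xception's eight middle-flow blocks, where a block is three 3×3 separable convolutions with 728 filters, each followed by batch normalization (the interpretation of the suffix is inferred from the names and numbers, not stated in this section). The sketch below, assuming the Keras convention of bias-free separable convolutions and four counted parameters per batch-normalization channel, reproduces the column:

```python
# Assumption: "Xception-mk" keeps k of the 8 middle-flow blocks, and each block
# is three bias-free 3x3 separable convolutions (728 -> 728 channels), each
# followed by batch normalization, as in the standard Xception design.

def separable_conv_params(channels_in: int, channels_out: int, kernel: int = 3) -> int:
    """Depthwise (kernel*kernel per input channel) plus pointwise 1x1 weights, no bias."""
    depthwise = kernel * kernel * channels_in
    pointwise = channels_in * channels_out
    return depthwise + pointwise


def batch_norm_params(channels: int) -> int:
    """gamma, beta, moving mean and moving variance (Keras counts all four)."""
    return 4 * channels


def middle_block_params(channels: int = 728) -> int:
    """Parameters in one middle-flow block: three separable conv + BN pairs."""
    per_conv = separable_conv_params(channels, channels) + batch_norm_params(channels)
    return 3 * per_conv


BASE_PARAMS = 20_873_774  # full Xception (8 middle-flow blocks), from the table
BASE_MIDDLE_BLOCKS = 8


def params_with_middle_blocks(k: int) -> int:
    """Predicted parameter count when only k middle-flow blocks are kept."""
    return BASE_PARAMS - (BASE_MIDDLE_BLOCKS - k) * middle_block_params()


if __name__ == "__main__":
    print(f"one middle-flow block: {middle_block_params():,} parameters")
    for k in range(7, 0, -1):
        print(f"Xception-m{k}: {params_with_middle_blocks(k):,}")
```

Because the middle-flow blocks hold most of the network's weights, removing them is the main lever for the parameter savings reported for the shallower variants.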

| Modified Structure | Accuracy (AKIEC) | Accuracy (BCC) | Accuracy (BKL) | Accuracy (DF) | Accuracy (MEL) | Accuracy (NV) | Accuracy (VASC) | No. of Parameters |
|---|---|---|---|---|---|---|---|---|
| Xception-sb8 | 0.9470 | 0.9523 | 0.9449 | 0.9328 | 0.9579 | 0.9572 | 0.9742 | 20,873,774 |
| Xception-sb7 | 0.9558 | 0.9609 | 0.9547 | 0.9406 | 0.9669 | 0.9640 | 0.9821 | 19,255,430 |
| Xception-sb6 | 0.9629 | 0.9678 | 0.9628 | 0.9467 | 0.9742 | 0.9691 | 0.9883 | 17,637,086 |
| Xception-sb5 | 0.9630 | 0.9682 | 0.9633 | 0.9468 | 0.9744 | 0.9693 | 0.9887 | 16,018,742 |
| Xception-sb4 | 0.9635 | 0.9683 | 0.9634 | 0.9472 | 0.9751 | 0.9701 | 0.9889 | 14,400,398 |
| Xception-sb3 | 0.9633 | 0.9685 | 0.9634 | 0.9532 | 0.9747 | 0.9702 | 0.9893 | 12,782,054 |
| Xception-sb2 | 0.9564 | 0.9548 | 0.9496 | 0.9315 | 0.9592 | 0.9563 | 0.9642 | 11,163,710 |
| Xception-sb1 | 0.9512 | 0.9498 | 0.9434 | 0.9273 | 0.9538 | 0.9531 | 0.9599 | 9,545,366 |
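
The weighted-average accuracies in the model comparison table further down (0.9434 for Xception, 0.9517 for Xception-m1, 0.9697 for Xception-sb3) are reproduced, up to rounding, by weighting each per-class accuracy by that class's number of test images from the dataset table. A minimal sketch of that weighting, assuming it matches the authors' definition (the numbers agree, but the definition is not stated here), also shows why Xception-sb3 is the variant selected: it narrowly beats Xception-sb4.

```python
# Class order: AKIEC, BCC, BKL, DF, MEL, NV, VASC.
TEST_SUPPORT = [65, 103, 220, 24, 222, 1341, 29]  # test images per class

# Per-class accuracies of the shallower-and-broader variants (table above).
SB_ACCURACY = {
    "Xception-sb8": [0.9470, 0.9523, 0.9449, 0.9328, 0.9579, 0.9572, 0.9742],
    "Xception-sb7": [0.9558, 0.9609, 0.9547, 0.9406, 0.9669, 0.9640, 0.9821],
    "Xception-sb6": [0.9629, 0.9678, 0.9628, 0.9467, 0.9742, 0.9691, 0.9883],
    "Xception-sb5": [0.9630, 0.9682, 0.9633, 0.9468, 0.9744, 0.9693, 0.9887],
    "Xception-sb4": [0.9635, 0.9683, 0.9634, 0.9472, 0.9751, 0.9701, 0.9889],
    "Xception-sb3": [0.9633, 0.9685, 0.9634, 0.9532, 0.9747, 0.9702, 0.9893],
    "Xception-sb2": [0.9564, 0.9548, 0.9496, 0.9315, 0.9592, 0.9563, 0.9642],
    "Xception-sb1": [0.9512, 0.9498, 0.9434, 0.9273, 0.9538, 0.9531, 0.9599],
}


def weighted_accuracy(per_class, support):
    """Average per-class accuracy, weighted by each class's number of test images."""
    if len(per_class) != len(support):
        raise ValueError("per-class values and support must have the same length")
    return sum(a * n for a, n in zip(per_class, support)) / sum(support)


def best_variant(results, support):
    """Return the variant with the highest weighted accuracy and all scores."""
    scores = {name: weighted_accuracy(acc, support) for name, acc in results.items()}
    best = max(scores, key=scores.get)
    return best, scores


if __name__ == "__main__":
    best, scores = best_variant(SB_ACCURACY, TEST_SUPPORT)
    for name, score in scores.items():
        print(f"{name}: {score:.4f}")
    print("best:", best)
```

Support weighting lets the large NV class (1341 of 2004 test images) dominate the average, which is why the per-class table is worth reading alongside the single weighted figure.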

| Class | Recall | Precision | Accuracy | F1-Score | MCC-Score |
|---|---|---|---|---|---|
| AKIEC | 0.8737 | 0.8686 | 0.9633 | 0.8712 | 0.8498 |
| BCC | 0.8874 | 0.8923 | 0.9685 | 0.8898 | 0.8714 |
| BKL | 0.8998 | 0.8519 | 0.9634 | 0.8752 | 0.8542 |
| DF | 0.7175 | 0.9441 | 0.9532 | 0.8153 | 0.7989 |
| MEL | 0.9110 | 0.9114 | 0.9747 | 0.9112 | 0.8965 |
| NV | 0.9832 | 0.8365 | 0.9702 | 0.9039 | 0.8905 |
| VASC | 0.9680 | 0.9576 | 0.9893 | 0.9628 | 0.9565 |
| Macro Average | 0.8915 | 0.8946 | 0.9689 | 0.8899 | 0.8740 |
| Weighted Average | 0.9543 | 0.8534 | 0.9697 | 0.8996 | 0.8848 |
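
In the per-class table, each F1-score is the harmonic mean of that row's precision and recall, the macro average is the unweighted mean over the seven classes, and the weighted average matches a mean weighted by each class's number of test images from the dataset table. The sketch below recomputes these from the rounded table values; differences in the fourth decimal place come from that rounding. It is an illustrative check, not the authors' evaluation code.

```python
from statistics import mean

CLASSES = ["AKIEC", "BCC", "BKL", "DF", "MEL", "NV", "VASC"]
TEST_SUPPORT = [65, 103, 220, 24, 222, 1341, 29]  # test images per class
RECALL = [0.8737, 0.8874, 0.8998, 0.7175, 0.9110, 0.9832, 0.9680]
PRECISION = [0.8686, 0.8923, 0.8519, 0.9441, 0.9114, 0.8365, 0.9576]


def f1(precision, recall):
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def macro(values):
    """Unweighted mean over classes."""
    return mean(values)


def weighted(values, support):
    """Mean over classes, weighted by the number of test images per class."""
    return sum(v * n for v, n in zip(values, support)) / sum(support)


if __name__ == "__main__":
    f1_scores = [f1(p, r) for p, r in zip(PRECISION, RECALL)]
    for cls, score in zip(CLASSES, f1_scores):
        print(f"{cls}: F1 = {score:.4f}")
    print(f"macro recall    = {macro(RECALL):.4f}")
    print(f"weighted recall = {weighted(RECALL, TEST_SUPPORT):.4f}")
    print(f"macro F1        = {macro(f1_scores):.4f}")
    print(f"weighted F1     = {weighted(f1_scores, TEST_SUPPORT):.4f}")
```

The gap between weighted recall (0.9543) and weighted precision (0.8534) is driven largely by NV, which has high recall but lower precision and contributes two thirds of the test images.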

| Model | Accuracy (Weighted Average) | No. of Parameters | Training Time for Single Image (ms) |
|---|---|---|---|
| Xception | 0.9434 | 20,873,774 | 44.71 |
| Xception-m1 | 0.9517 | 9,545,366 | 31.09 |
| Xception-sb3 | 0.9697 | 12,782,054 | 34.82 |
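
The efficiency gains in this table can be expressed as relative reductions against the full Xception baseline. A short sketch, using only the three rows above (it covers parameters and per-image training time; inference time is not reported here), gives about 38.8% fewer parameters and 22.1% less training time for Xception-sb3, and about 54.3% fewer parameters and 30.5% less training time for the less accurate Xception-m1:

```python
# Values copied from the comparison table above:
# name -> (number of parameters, training time per image in ms)
MODELS = {
    "Xception": (20_873_774, 44.71),
    "Xception-m1": (9_545_366, 31.09),
    "Xception-sb3": (12_782_054, 34.82),
}


def relative_reduction(baseline: float, value: float) -> float:
    """Percentage by which `value` is smaller than `baseline`."""
    return 100.0 * (baseline - value) / baseline


if __name__ == "__main__":
    base_params, base_time = MODELS["Xception"]
    for name, (params, time_ms) in MODELS.items():
        if name == "Xception":
            continue  # skip the baseline itself
        print(
            f"{name}: {relative_reduction(base_params, params):.1f}% fewer parameters, "
            f"{relative_reduction(base_time, time_ms):.1f}% less training time per image"
        )
```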

| Reference | Pre-Training | Dataset | Recall | Precision | Accuracy |
|---|---|---|---|---|---|
| Calderon et al. [54] | ImageNet | HAM10000 | 0.9321 | 0.9292 | 0.9321 |
| Jain et al. [55] | ImageNet | HAM10000 | 0.8957 | 0.8876 | 0.9048 |
| Fraiwan and Faouri [56] | ImageNet | HAM10000 | 0.8250 | 0.9250 | 0.8290 |
| Saarela and Geogieva [57] | - | HAM10000 | - | - | 0.8000 |
| Naeem et al. [53] | ImageNet | ISIC 2019 | 0.9218 | 0.9219 | 0.9691 |
| Alam et al. [58] | ImageNet | HAM10000 | - | - | 0.9100 |
| Proposed method | ImageNet | HAM10000 | 0.9543 | 0.8534 | 0.9697 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mehmood, A.; Gulzar, Y.; Ilyas, Q.M.; Jabbari, A.; Ahmad, M.; Iqbal, S. SBXception: A Shallower and Broader Xception Architecture for Efficient Classification of Skin Lesions. Cancers 2023, 15, 3604. https://doi.org/10.3390/cancers15143604