Transfer Learning in Cancer Genetics, Mutation Detection, Gene Expression Analysis, and Syndrome Recognition

Simple Summary

Abstract

1. Introduction

2. Literature Search Strategy

3. Mutation Identification

3.1. Lung Cancer

3.2. Breast Cancer

3.3. Gastrointestinal Tract Cancer

3.4. Brain Cancers

3.5. Other Cancers

4. Gene Expression

4.1. DNA Sequences Related to Gene Expression

4.2. DNA Methylation

4.3. Other Elements Involved in Gene Expression

5. Genetic Syndromes

6. Genotype–Phenotype Association

7. Limitations and Challenges

8. Future Perspectives

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Choi, R.Y.; Coyner, A.S.; Kalpathy-Cramer, J.; Chiang, M.F.; Campbell, J.P. Introduction to Machine Learning, Neural Networks, and Deep Learning. Transl. Vis. Sci. Technol. 2020, 9, 14. [Google Scholar]

- Khayyam, H.; Madani, A.; Kafieh, R.; Hekmatnia, A. Artificial Intelligence in Cancer Diagnosis and Therapy; MDPI-Multidisciplinary Digital Publishing Institute: Basel, Switzerland, 2023. [Google Scholar] [CrossRef]

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53. [Google Scholar] [CrossRef] [PubMed]

- Farabi Maleki, S.; Yousefi, M.; Afshar, S.; Pedrammehr, S.; Lim, C.P.; Jafarizadeh, A.; Asadi, H. Artificial Intelligence for multiple sclerosis management using retinal images: Pearl, peaks, and pitfalls. Semin. Ophthalmol. 2024, 39, 271–288. [Google Scholar] [CrossRef] [PubMed]

- Sarker, I.H. Machine Learning: Algorithms, Real-World Applications and Research Directions. SN Comput. Sci. 2021, 2, 160. [Google Scholar] [CrossRef] [PubMed]

- Jafarizadeh, A.; Maleki, S.F.; Pouya, P.; Sobhi, N.; Abdollahi, M.; Pedrammehr, S.; Lim, C.P.; Asadi, H.; Alizadehsani, R.; Tan, R.S.; et al. Current and future roles of artificial intelligence in retinopathy of prematurity. arXiv 2024, arXiv:2402.09975. [Google Scholar] [CrossRef]

- Reddy, Y.C.; Viswanath, P.; Reddy, B.E. Semi-supervised learning: A brief review. Int. J. Eng. Technol. 2018, 7, 81. [Google Scholar] [CrossRef]

- Jiang, T.; Gradus, J.L.; Rosellini, A.J. Supervised Machine Learning: A Brief Primer. Behav. Ther. 2020, 51, 675–687. [Google Scholar] [CrossRef] [PubMed]

- Yousefi, M.; Maleki, S.F.; Jafarizadeh, A.; Youshanlui, M.A.; Jafari, A.; Pedrammehr, S.; Alizadehsani, R.; Tadeusiewicz, R.; Plawiak, P. Advancements in Radiomics and Artificial Intelligence for Thyroid Cancer Diagnosis. arXiv 2024, arXiv:2404.07239. [Google Scholar] [CrossRef]

- Khayyam, H.; Hekmatnia, A.; Kafieh, R. Artificial Intelligence in Cancer, Biology and Oncology; MDPI-Multidisciplinary Digital Publishing Institute: Basel, Switzerland, 2024. [Google Scholar] [CrossRef]

- Ashayeri, H.; Jafarizadeh, A.; Yousefi, M.; Farhadi, F.; Javadzadeh, A. Retinal imaging and Alzheimer’s disease: A future powered by Artificial Intelligence. Graefe’s Arch. Clin. Exp. Ophthalmol. 2024, 1–13. [Google Scholar] [CrossRef]

- Hosna, A.; Merry, E.; Gyalmo, J.; Alom, Z.; Aung, Z.; Azim, M.A. Transfer learning: A friendly introduction. J. Big Data 2022, 9, 102. [Google Scholar] [CrossRef]

- Lv, J.; Li, G.; Tong, X.; Chen, W.; Huang, J.; Wang, C.; Yang, G. Transfer learning enhanced generative adversarial networks for multi-channel MRI reconstruction. Comput. Biol. Med. 2021, 134, 104504. [Google Scholar] [CrossRef] [PubMed]

- Zhao, Z.; Alzubaidi, L.; Zhang, J.; Duan, Y.; Gu, Y. A comparison review of transfer learning and self-supervised learning: Definitions, applications, advantages and limitations. Expert Syst. Appl. 2024, 242, 122807. [Google Scholar] [CrossRef]

- Amaral, P.; Carbonell-Sala, S.; De La Vega, F.M.; Faial, T.; Frankish, A.; Gingeras, T.; Guigo, R.; Harrow, J.L.; Hatzigeorgiou, A.G.; Johnson, R.; et al. The status of the human gene catalogue. Nature 2023, 622, 41–47. [Google Scholar] [CrossRef] [PubMed]

- Comfort, N. Genetics: We are the 98%. Nature 2015, 520, 615–616. [Google Scholar] [CrossRef]

- Einarsson, H.; Salvatore, M.; Vaagensø, C.; Alcaraz, N.; Bornholdt, J.; Rennie, S.; Andersson, R. Promoter sequence and architecture determine expression variability and confer robustness to genetic variants. eLife 2022, 11, e80943. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Cai, M.; Jiang, X.; Lv, G.; Hu, D.; Zhang, G.; Liu, J.; Wei, W.; Xiao, J.; Shen, B.; et al. Exons 1-3 deletion in FLCN is associated with increased risk of pneumothorax in Chinese patients with Birt-Hogg-Dubé syndrome. Orphanet J. Rare Dis. 2023, 18, 115. [Google Scholar] [CrossRef] [PubMed]

- Shaul, O. How introns enhance gene expression. Int. J. Biochem. Cell Biol. 2017, 91, 145–155. [Google Scholar] [CrossRef] [PubMed]

- Lambert, S.A.; Jolma, A.; Campitelli, L.F.; Das, P.K.; Yin, Y.; Albu, M.; Chen, X.; Taipale, J.; Hughes, T.R.; Weirauch, M.T. The Human Transcription Factors. Cell 2018, 172, 650–665. [Google Scholar] [CrossRef] [PubMed]

- Thomas, H.F.; Buecker, C. What is an enhancer? BioEssays 2023, 45, e2300044. [Google Scholar] [CrossRef] [PubMed]

- Kciuk, M.; Marciniak, B.; Mojzych, M.; Kontek, R. Focus on UV-Induced DNA Damage and Repair-Disease Relevance and Protective Strategies. Int. J. Mol. Sci. 2020, 21, 7264. [Google Scholar] [CrossRef] [PubMed]

- Allen, M.J.; Sharma, S. Noonan Syndrome. In StatPearls; StatPearls Publishing LLC.: Treasure Island, FL, USA, 2024. [Google Scholar]

- Fuqua, S.A.; Gu, G.; Rechoum, Y. Estrogen receptor (ER) α mutations in breast cancer: Hidden in plain sight. Breast Cancer Res. Treat. 2014, 144, 11–19. [Google Scholar] [CrossRef] [PubMed]

- Álvarez-Machancoses, Ó.; DeAndrés Galiana, E.J.; Cernea, A.; Fernández de la Viña, J.; Fernández-Martínez, J.L. On the Role of Artificial Intelligence in Genomics to Enhance Precision Medicine. Pharmgenomics Pers. Med. 2020, 13, 105–119. [Google Scholar] [CrossRef] [PubMed]

- Sugaya, K. Chromosome instability caused by mutations in the genes involved in transcription and splicing. RNA Biol. 2019, 16, 1521–1525. [Google Scholar] [CrossRef] [PubMed]

- Ravindran, A.; He, R.; Ketterling, R.P.; Jawad, M.D.; Chen, D.; Oliveira, J.L.; Nguyen, P.L.; Viswanatha, D.S.; Reichard, K.K.; Hoyer, J.D.; et al. The significance of genetic mutations and their prognostic impact on patients with incidental finding of isolated del(20q) in bone marrow without morphologic evidence of a myeloid neoplasm. Blood Cancer J. 2020, 10, 7. [Google Scholar] [CrossRef] [PubMed]

- Fitzgerald, D.M.; Rosenberg, S.M. What is mutation? A chapter in the series: How microbes “jeopardize” the modern synthesis. PLoS Genet. 2019, 15, e1007995. [Google Scholar] [CrossRef] [PubMed]

- Waarts, M.R.; Stonestrom, A.J.; Park, Y.C.; Levine, R.L. Targeting mutations in cancer. J. Clin. Investig. 2022, 132, e154943. [Google Scholar] [CrossRef] [PubMed]

- Samir, S. Human DNA Mutations and their Impact on Genetic Disorders. Recent Pat. Biotechnol. 2024, 18, 288–315. [Google Scholar] [CrossRef] [PubMed]

- Huang, T.-L.; Zhang, T.-Y.; Song, C.-Y.; Lin, Y.-B.; Sang, B.-H.; Lei, Q.-L.; Lv, Y.; Yang, C.-H.; Li, N.; Tian, X.; et al. Gene Mutation Spectrum of Thalassemia Among Children in Yunnan Province. Front. Pediatr. 2020, 8, 159. [Google Scholar] [CrossRef] [PubMed]

- Smeazzetto, S.; Saponaro, A.; Young, H.S.; Moncelli, M.R.; Thiel, G. Structure-function relation of phospholamban: Modulation of channel activity as a potential regulator of SERCA activity. PLoS ONE 2013, 8, e52744. [Google Scholar] [CrossRef] [PubMed]

- van der Zwaag, P.A.; van Rijsingen, I.A.; Asimaki, A.; Jongbloed, J.D.; van Veldhuisen, D.J.; Wiesfeld, A.C.; Cox, M.G.; van Lochem, L.T.; de Boer, R.A.; Hofstra, R.M.; et al. Phospholamban R14del mutation in patients diagnosed with dilated cardiomyopathy or arrhythmogenic right ventricular cardiomyopathy: Evidence supporting the concept of arrhythmogenic cardiomyopathy. Eur. J. Heart Fail. 2012, 14, 1199–1207. [Google Scholar] [CrossRef] [PubMed]

- van der Heide, M.Y.C.; Verstraelen, T.E.; van Lint, F.H.M.; Bosman, L.P.; de Brouwer, R.; Proost, V.M.; van Drie, E.; Taha, K.; Zwinderman, A.H.; Dickhoff, C.; et al. Long-term reliability of the phospholamban (PLN) p.(Arg14del) risk model in predicting major ventricular arrhythmia: A landmark study. EP Eur. 2024, 26, euae069. [Google Scholar] [CrossRef] [PubMed]

- Lopes, R.R.; Bleijendaal, H.; Ramos, L.A.; Verstraelen, T.E.; Amin, A.S.; Wilde, A.A.M.; Pinto, Y.M.; de Mol, B.; Marquering, H.A. Improving electrocardiogram-based detection of rare genetic heart disease using transfer learning: An application to phospholamban p.Arg14del mutation carriers. Comput. Biol. Med. 2021, 131, 104262. [Google Scholar] [CrossRef] [PubMed]

- Mendiratta, G.; Ke, E.; Aziz, M.; Liarakos, D.; Tong, M.; Stites, E.C. Cancer gene mutation frequencies for the U.S. population. Nat. Commun. 2021, 12, 5961. [Google Scholar] [CrossRef] [PubMed]

- Xiong, J.; Li, X.; Lu, L.; Lawrence, S.H.; Fu, X.; Zhao, J.; Zhao, B. Implementation strategy of a CNN model affects the performance of CT assessment of EGFR mutation status in lung cancer patients. IEEE Access 2019, 7, 64583–64591. [Google Scholar] [CrossRef] [PubMed]

- Cao, R.; Yang, F.; Ma, S.C.; Liu, L.; Zhao, Y.; Li, Y.; Wu, D.H.; Wang, T.; Lu, W.J.; Cai, W.J.; et al. Development and interpretation of a pathomics-based model for the prediction of microsatellite instability in Colorectal Cancer. Theranostics 2020, 10, 11080–11091. [Google Scholar] [CrossRef] [PubMed]

- Fu, Y.; Jung, A.W.; Torne, R.V.; Gonzalez, S.; Vöhringer, H.; Shmatko, A.; Yates, L.R.; Jimenez-Linan, M.; Moore, L.; Gerstung, M. Pan-cancer computational histopathology reveals mutations, tumor composition and prognosis. Nat. Cancer 2020, 1, 800–810. [Google Scholar] [CrossRef] [PubMed]

- Wang, L.; Jiao, Y.; Qiao, Y.; Zeng, N.; Yu, R. A novel approach combined transfer learning and deep learning to predict TMB from histology image. Pattern Recognit. Lett. 2020, 135, 244–248. [Google Scholar] [CrossRef]

- Kather, J.N. Histological Images for MSI vs. MSS Classification in Gastrointestinal Cancer, FFPE Samples. 2019. Available online: https://zenodo.org/records/2530835 (accessed on 1 May 2024). [CrossRef]

- Liang, C.W.; Fang, P.W.; Huang, H.Y.; Lo, C.M. Deep Convolutional Neural Networks Detect Tumor Genotype from Pathological Tissue Images in Gastrointestinal Stromal Tumors. Cancers 2021, 13, 5787. [Google Scholar] [CrossRef] [PubMed]

- Silva, F.; Pereira, T.; Morgado, J.; Frade, J.; Mendes, J.; Freitas, C.; Negrao, E.; De Lima, B.F.; Silva, M.C.D.; Madureira, A.J.; et al. EGFR Assessment in Lung Cancer CT Images: Analysis of Local and Holistic Regions of Interest Using Deep Unsupervised Transfer Learning. IEEE Access 2021, 9, 58667–58676. [Google Scholar] [CrossRef]

- Haim, O.; Abramov, S.; Shofty, B.; Fanizzi, C.; DiMeco, F.; Avisdris, N.; Ram, Z.; Artzi, M.; Grossman, R. Predicting EGFR mutation status by a deep learning approach in patients with non-small cell lung cancer brain metastases. J. Neurooncol. 2022, 157, 63–69. [Google Scholar] [CrossRef] [PubMed]

- Li, X.; Cen, M.; Xu, J.; Zhang, H.; Xu, X.S. Improving feature extraction from histopathological images through a fine-tuning ImageNet model. J. Pathol. Inform. 2022, 13, 100115. [Google Scholar] [CrossRef] [PubMed]

- Zeng, H.; Xing, Z.; Gao, F.; Wu, Z.; Huang, W.; Su, Y.; Chen, Z.; Cai, S.; Cao, D.; Cai, C. A multimodal domain adaptive segmentation framework for IDH genotype prediction. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1923–1931. [Google Scholar] [CrossRef] [PubMed]

- Zheng, T. TLsub: A transfer learning based enhancement to accurately detect mutations with wide-spectrum sub-clonal proportion. Front. Genet. 2022, 13, 981269. [Google Scholar] [CrossRef] [PubMed]

- Ma, F.; Guan, Y.; Yi, Z.; Chang, L.; Li, Q.; Chen, S.; Zhu, W.; Guan, X.; Li, C.; Qian, H.; et al. Assessing tumor heterogeneity using ctDNA to predict and monitor therapeutic response in metastatic breast cancer. Int. J. Cancer 2020, 146, 1359–1368. [Google Scholar] [CrossRef] [PubMed]

- Dammak, S.; Cecchini, M.J.; Breadner, D.; Ward, A.D. Using deep learning to predict tumor mutational burden from scans of H&E-stained multicenter slides of lung squamous cell carcinoma. J. Med. Imaging 2023, 10, 017502. [Google Scholar] [CrossRef] [PubMed]

- Furtney, I.; Bradley, R.; Kabuka, M.R. Patient Graph Deep Learning to Predict Breast Cancer Molecular Subtype. IEEE/ACM Trans. Comput. Biol. Bioinform. 2023, 20, 3117–3127. [Google Scholar] [CrossRef] [PubMed]

- Shao, X.; Ge, X.; Gao, J.; Niu, R.; Shi, Y.; Shao, X.; Jiang, Z.; Li, R.; Wang, Y. Transfer learning-based PET/CT three-dimensional convolutional neural network fusion of image and clinical information for prediction of EGFR mutation in lung adenocarcinoma. BMC Med. Imaging 2024, 24, 54. [Google Scholar] [CrossRef] [PubMed]

- Rashid, T.A.; Majidpour, J.; Thinakaran, R.; Batumalay, M.; Dewi, D.A.; Hassan, B.A.; Dadgar, H.; Arabi, H. NSGA-II-DL: Metaheuristic Optimal Feature Selection With Deep Learning Framework for HER2 Classification in Breast Cancer. IEEE Access 2024, 12, 38885–38898. [Google Scholar] [CrossRef]

- Thandra, K.C.; Barsouk, A.; Saginala, K.; Aluru, J.S.; Barsouk, A. Epidemiology of lung cancer. Contemp. Oncol. 2021, 25, 45–52. [Google Scholar] [CrossRef] [PubMed]

- Lim, S.L.; Jia, Z.; Lu, Y.; Zhang, H.; Ng, C.T.; Bay, B.H.; Shen, H.M.; Ong, C.N. Metabolic signatures of four major histological types of lung cancer cells. Metabolomics 2018, 14, 118. [Google Scholar] [CrossRef] [PubMed]

- O’Leary, C.; Gasper, H.; Sahin, K.B.; Tang, M.; Kulasinghe, A.; Adams, M.N.; Richard, D.J.; O’Byrne, K.J. Epidermal Growth Factor Receptor (EGFR)-Mutated Non-Small-Cell Lung Cancer (NSCLC). Pharmaceuticals 2020, 13, 273. [Google Scholar] [CrossRef] [PubMed]

- Fu, K.; Xie, F.; Wang, F.; Fu, L. Therapeutic strategies for EGFR-mutated non-small cell lung cancer patients with osimertinib resistance. J. Hematol. Oncol. 2022, 15, 173. [Google Scholar] [CrossRef] [PubMed]

- Meng, G.; Liu, X.; Ma, T.; Lv, D.; Sun, G. Predictive value of tumor mutational burden for immunotherapy in non-small cell lung cancer: A systematic review and meta-analysis. PLoS ONE 2022, 17, e0263629. [Google Scholar] [CrossRef] [PubMed]

- Ma, L.; Hernandez, M.O.; Zhao, Y.; Mehta, M.; Tran, B.; Kelly, M.; Rae, Z.; Hernandez, J.M.; Davis, J.L.; Martin, S.P.; et al. Tumor Cell Biodiversity Drives Microenvironmental Reprogramming in Liver Cancer. Cancer Cell 2019, 36, 418–430. [Google Scholar] [CrossRef] [PubMed]

- Marusyk, A.; Polyak, K. Tumor heterogeneity: Causes and consequences. Biochim. Biophys. Acta 2010, 1805, 105–117. [Google Scholar] [CrossRef] [PubMed]

- Mulder, T.A.M.; de With, M.; del Re, M.; Danesi, R.; Mathijssen, R.H.J.; van Schaik, R.H.N. Clinical CYP2D6 Genotyping to Personalize Adjuvant Tamoxifen Treatment in ER-Positive Breast Cancer Patients: Current Status of a Controversy. Cancers 2021, 13, 771. [Google Scholar] [CrossRef] [PubMed]

- Chen, S.; Qiu, Y.; Guo, P.; Pu, T.; Feng, Y.; Bu, H. FGFR1 and HER1 or HER2 co-amplification in breast cancer indicate poor prognosis. Oncol. Lett. 2018, 15, 8206–8214. [Google Scholar] [CrossRef] [PubMed]

- Fowler, A.M.; Salem, K.; DeGrave, M.; Ong, I.M.; Rassman, S.; Powers, G.L.; Kumar, M.; Michel, C.J.; Mahajan, A.M. Progesterone Receptor Gene Variants in Metastatic Estrogen Receptor Positive Breast Cancer. Horm. Cancer 2020, 11, 63–75. [Google Scholar] [CrossRef] [PubMed]

- Morgan, E.; Arnold, M.; Gini, A.; Lorenzoni, V.; Cabasag, C.J.; Laversanne, M.; Vignat, J.; Ferlay, J.; Murphy, N.; Bray, F. Global burden of colorectal cancer in 2020 and 2040: Incidence and mortality estimates from GLOBOCAN. Gut 2023, 72, 338–344. [Google Scholar] [CrossRef] [PubMed]

- Nojadeh, J.N.; Behrouz Sharif, S.; Sakhinia, E. Microsatellite instability in colorectal cancer. EXCLI J. 2018, 17, 159–168. [Google Scholar] [CrossRef] [PubMed]

- Popat, S.; Hubner, R.; Houlston, R.S. Systematic Review of Microsatellite Instability and Colorectal Cancer Prognosis. J. Clin. Oncol. 2005, 23, 609–618. [Google Scholar] [CrossRef] [PubMed]

- Wang, Q.; Huang, Z.P.; Zhu, Y.; Fu, F.; Tian, L. Contribution of Interstitial Cells of Cajal to Gastrointestinal Stromal Tumor Risk. Med. Sci. Monit. 2021, 27, e929575. [Google Scholar] [CrossRef] [PubMed]

- Burch, J.; Ahmad, I. Gastrointestinal Stromal Cancer. In StatPearls; StatPearls Publishing LLC.: Treasure Island, FL, USA, 2024. [Google Scholar]

- Barcelos, D.; Neto, R.A.; Cardili, L.; Fernandes, M.; Carapeto, F.C.L.; Comodo, A.N.; Funabashi, K.; Iwamura, E.S.M. KIT exon 11 and PDGFRA exon 18 gene mutations in gastric GIST: Proposal of a short panel for predicting therapeutic response. Surg. Exp. Pathol. 2018, 1, 8. [Google Scholar] [CrossRef]

- Zhou, S.; Abdihamid, O.; Tan, F.; Zhou, H.; Liu, H.; Li, Z.; Xiao, S.; Li, B. KIT mutations and expression: Current knowledge and new insights for overcoming IM resistance in GIST. Cell Commun. Signal. 2024, 22, 153. [Google Scholar] [CrossRef] [PubMed]

- Sun, Y.; Yue, L.; Xu, P.; Hu, W. An overview of agents and treatments for PDGFRA-mutated gastrointestinal stromal tumors. Front. Oncol. 2022, 12, 927587. [Google Scholar] [CrossRef] [PubMed]

- Salari, N.; Ghasemi, H.; Fatahian, R.; Mansouri, K.; Dokaneheifard, S.; Shiri, M.H.; Hemmati, M.; Mohammadi, M. The global prevalence of primary central nervous system tumors: A systematic review and meta-analysis. Eur. J. Med. Res. 2023, 28, 39. [Google Scholar] [CrossRef] [PubMed]

- Alcantara Llaguno, S.R.; Parada, L.F. Cell of origin of glioma: Biological and clinical implications. Br. J. Cancer 2016, 115, 1445–1450. [Google Scholar] [CrossRef] [PubMed]

- Jiang, Y.; Uhrbom, L. On the origin of glioma. Ups. J. Med. Sci. 2012, 117, 113–121. [Google Scholar] [CrossRef] [PubMed]

- Han, S.; Liu, Y.; Cai, S.J.; Qian, M.; Ding, J.; Larion, M.; Gilbert, M.R.; Yang, C. IDH mutation in glioma: Molecular mechanisms and potential therapeutic targets. Br. J. Cancer 2020, 122, 1580–1589. [Google Scholar] [CrossRef] [PubMed]

- Zhuang, Z.; Shen, X.; Pan, W. A simple convolutional neural network for prediction of enhancer-promoter interactions with DNA sequence data. Bioinformatics 2019, 35, 2899–2906. [Google Scholar] [CrossRef] [PubMed]

- Singh, S.; Yang, Y.; Póczos, B.; Ma, J. Predicting enhancer-promoter interaction from genomic sequence with deep neural networks. Quant. Biol. 2019, 7, 122–137. [Google Scholar] [CrossRef] [PubMed]

- Jing, F.; Zhang, S.W.; Zhang, S. Prediction of enhancer–promoter interactions using the cross-cell type information and domain adversarial neural network. BMC Bioinform. 2020, 21, 507. [Google Scholar] [CrossRef] [PubMed]

- Zhang, M.; Hu, Y.; Zhu, M. EPIshilbert: Prediction of enhancer-promoter interactions via hilbert curve encoding and transfer learning. Genes 2021, 12, 1385. [Google Scholar] [CrossRef] [PubMed]

- Kalakoti, Y.; Peter, S.C.; Gawande, S.; Sundar, D. Modulation of DNA-protein Interactions by Proximal Genetic Elements as Uncovered by Interpretable Deep Learning. J. Mol. Biol. 2023, 435, 168121. [Google Scholar] [CrossRef] [PubMed]

- Sakly, H.; Said, M.; Seekins, J.; Guetari, R.; Kraiem, N.; Marzougui, M. Brain Tumor Radiogenomic Classification of O(6)-Methylguanine-DNA Methyltransferase Promoter Methylation in Malignant Gliomas-Based Transfer Learning. Cancer Control 2023, 30, 10732748231169149. [Google Scholar] [CrossRef] [PubMed]

- Li, F.; Liu, S.; Li, K.; Zhang, Y.; Duan, M.; Yao, Z.; Zhu, G.; Guo, Y.; Wang, Y.; Huang, L.; et al. EpiTEAmDNA: Sequence feature representation via transfer learning and ensemble learning for identifying multiple DNA epigenetic modification types across species. Comput. Biol. Med. 2023, 160, 107030. [Google Scholar] [CrossRef] [PubMed]

- Salvatore, M.; Horlacher, M.; Marsico, A.; Winther, O.; Andersson, R. Transfer learning identifies sequence determinants of cell-type specific regulatory element accessibility. NAR Genom. Bioinform. 2023, 5, lqad026. [Google Scholar] [CrossRef] [PubMed]

- Mehmood, F.; Arshad, S.; Shoaib, M. ADH-Enhancer: An attention-based deep hybrid framework for enhancer identification and strength prediction. Brief. Bioinform. 2024, 25, bbae030. [Google Scholar] [CrossRef] [PubMed]

- Liu, G.; Hu, Y.; Jin, S.; Zhang, F.; Jiang, Q.; Hao, J. Cis-eQTLs regulate reduced LST1 gene and NCR3 gene expression and contribute to increased autoimmune disease risk. Proc. Natl. Acad. Sci. USA 2016, 113, E6321–E6322. [Google Scholar] [CrossRef] [PubMed]

- Yin, C.; Wang, R.; Qiao, J.; Shi, H.; Duan, H.; Jiang, X.; Teng, S.; Wei, L. NanoCon: Contrastive learning-based deep hybrid network for nanopore methylation detection. Bioinformatics 2024, 40, btae046. [Google Scholar] [CrossRef] [PubMed]

- Wheeler, D.L.; Barrett, T.; Benson, D.A.; Bryant, S.H.; Canese, K.; Chetvernin, V.; Church, D.M.; DiCuccio, M.; Edgar, R.; Federhen, S.; et al. Database resources of the National Center for Biotechnology Information. Nucleic Acids Res. 2007, 35, D5–D12. [Google Scholar] [CrossRef] [PubMed]

- Howe, K.L.; Contreras-Moreira, B.; De Silva, N.; Maslen, G.; Akanni, W.; Allen, J.; Alvarez-Jarreta, J.; Barba, M.; Bolser, D.M.; Cambell, L.; et al. Ensembl Genomes 2020-enabling non-vertebrate genomic research. Nucleic Acids Res. 2020, 48, D689–D695. [Google Scholar] [CrossRef] [PubMed]

- Jain, M.; Koren, S.; Miga, K.H.; Quick, J.; Rand, A.C.; Sasani, T.A.; Tyson, J.R.; Beggs, A.D.; Dilthey, A.T.; Fiddes, I.T.; et al. Nanopore sequencing and assembly of a human genome with ultra-long reads. Nat. Biotechnol. 2018, 36, 338–345. [Google Scholar] [CrossRef] [PubMed]

- Yao, Z.; Li, F.; Xie, W.; Chen, J.; Wu, J.; Zhan, Y.; Wu, X.; Wang, Z.; Zhang, G. DeepSF-4mC: A deep learning model for predicting DNA cytosine 4mC methylation sites leveraging sequence features. Comput. Biol. Med. 2024, 171, 108166. [Google Scholar] [CrossRef] [PubMed]

- Zeng, R.; Liao, M. Developing a Multi-Layer Deep Learning Based Predictive Model to Identify DNA N4-Methylcytosine Modifications. Front. Bioeng. Biotechnol. 2020, 8, 274. [Google Scholar] [CrossRef] [PubMed]

- Zhang, S.; Jiang, Z.; Zeng, P. Incorporating genetic similarity of auxiliary samples into eGene identification under the transfer learning framework. J. Transl. Med. 2024, 22, 258. [Google Scholar] [CrossRef] [PubMed]

- Lanata, C.M.; Chung, S.A.; Criswell, L.A. DNA methylation 101: What is important to know about DNA methylation and its role in SLE risk and disease heterogeneity. Lupus Sci. Med. 2018, 5, e000285. [Google Scholar] [CrossRef] [PubMed]

- Moore, L.D.; Le, T.; Fan, G. DNA Methylation and Its Basic Function. Neuropsychopharmacology 2013, 38, 23–38. [Google Scholar] [CrossRef] [PubMed]

- Kumar, S.; Chinnusamy, V.; Mohapatra, T. Epigenetics of Modified DNA Bases: 5-Methylcytosine and Beyond. Front. Genet. 2018, 9, 640. [Google Scholar] [CrossRef] [PubMed]

- Feldheim, J.; Kessler, A.F.; Monoranu, C.M.; Ernestus, R.I.; Löhr, M.; Hagemann, C. Changes of O(6)-Methylguanine DNA Methyltransferase (MGMT) Promoter Methylation in Glioblastoma Relapse-A Meta-Analysis Type Literature Review. Cancers 2019, 11, 1837. [Google Scholar] [CrossRef] [PubMed]

- Szylberg, M.; Sokal, P.; Śledzińska, P.; Bebyn, M.; Krajewski, S.; Szylberg, Ł.; Szylberg, A.; Szylberg, T.; Krystkiewicz, K.; Birski, M.; et al. MGMT Promoter Methylation as a Prognostic Factor in Primary Glioblastoma: A Single-Institution Observational Study. Biomedicines 2022, 10, 2030. [Google Scholar] [CrossRef] [PubMed]

- Duong, D.; Gai, L.; Snir, S.; Kang, E.Y.; Han, B.; Sul, J.H.; Eskin, E. Applying meta-analysis to genotype-tissue expression data from multiple tissues to identify eQTLs and increase the number of eGenes. Bioinformatics 2017, 33, i67–i74. [Google Scholar] [CrossRef] [PubMed]

- Gorlov, I.; Xiao, X.; Mayes, M.; Gorlova, O.; Amos, C. SNP eQTL status and eQTL density in the adjacent region of the SNP are associated with its statistical significance in GWA studies. BMC Genet. 2019, 20, 85. [Google Scholar] [CrossRef] [PubMed]

- Shan, N.; Wang, Z.; Hou, L. Identification of trans-eQTLs using mediation analysis with multiple mediators. BMC Bioinform. 2019, 20, 126. [Google Scholar] [CrossRef] [PubMed]

- MacLennan, S. Down’s syndrome. InnovAiT 2020, 13, 47–52. [Google Scholar] [CrossRef]

- Plaiasu, V. Down Syndrome—Genetics and Cardiogenetics. Maedica 2017, 12, 208–213. [Google Scholar] [PubMed]

- Wang, C.; Yu, L.; Su, J.; Mahy, T.; Selis, V.; Yang, C.; Ma, F. Down Syndrome detection with Swin Transformer architecture. Biomed. Signal Process. Control 2023, 86, 105199. [Google Scholar] [CrossRef]

- Raza, A.; Munir, K.; Almutairi, M.S.; Sehar, R. Novel Transfer Learning Based Deep Features for Diagnosis of Down Syndrome in Children Using Facial Images. IEEE Access 2024, 12, 16386–16396. [Google Scholar] [CrossRef]

- Yang, H.; Hu, X.R.; Sun, L.; Hong, D.; Zheng, Y.Y.; Xin, Y.; Liu, H.; Lin, M.Y.; Wen, L.; Liang, D.P.; et al. Automated Facial Recognition for Noonan Syndrome Using Novel Deep Convolutional Neural Network With Additive Angular Margin Loss. Front. Genet. 2021, 12, 669841. [Google Scholar] [CrossRef] [PubMed]

- Kozel, B.A.; Barak, B.; Kim, C.A.; Mervis, C.B.; Osborne, L.R.; Porter, M.; Pober, B.R. Williams syndrome. Nat. Rev. Dis. Primers 2021, 7, 42. [Google Scholar] [CrossRef]

- van der Burgt, I. Noonan syndrome. Orphanet J. Rare Dis. 2007, 2, 4. [Google Scholar] [CrossRef] [PubMed]

- Liu, H.; Mo, Z.H.; Yang, H.; Zhang, Z.F.; Hong, D.; Wen, L.; Lin, M.Y.; Zheng, Y.Y.; Zhang, Z.W.; Xu, X.W.; et al. Automatic Facial Recognition of Williams-Beuren Syndrome Based on Deep Convolutional Neural Networks. Front. Pediatr. 2021, 9, 648255. [Google Scholar] [CrossRef] [PubMed]

- Hong, D.; Zheng, Y.Y.; Xin, Y.; Sun, L.; Yang, H.; Lin, M.Y.; Liu, C.; Li, B.N.; Zhang, Z.W.; Zhuang, J.; et al. Genetic syndromes screening by facial recognition technology: VGG-16 screening model construction and evaluation. Orphanet J. Rare Dis. 2021, 16, 344. [Google Scholar] [CrossRef] [PubMed]

- Artoni, P.; Piffer, A.; Vinci, V.; LeBlanc, J.; Nelson, C.A.; Hensch, T.K.; Fagiolini, M. Deep learning of spontaneous arousal fluctuations detects early cholinergic defects across neurodevelopmental mouse models and patients. Proc. Natl. Acad. Sci. USA 2020, 117, 23298–23303. [Google Scholar] [CrossRef] [PubMed]

- de Vries, L.; Fouquaet, I.; Boets, B.; Naulaers, G.; Steyaert, J. Autism spectrum disorder and pupillometry: A systematic review and meta-analysis. Neurosci. Biobehav. Rev. 2021, 120, 479–508. [Google Scholar] [CrossRef] [PubMed]

- Petegrosso, R.; Park, S.; Hwang, T.H.; Kuang, R. Transfer learning across ontologies for phenome-genome association prediction. Bioinformatics 2017, 33, 529–536. [Google Scholar] [CrossRef] [PubMed]

- Zhao, M.; Ma, J.; Li, M.; Zhang, Y.; Jiang, B.; Zhao, X.; Huai, C.; Shen, L.; Zhang, N.; He, L.; et al. Cytochrome P450 Enzymes and Drug Metabolism in Humans. Int. J. Mol. Sci. 2021, 22, 2808. [Google Scholar] [CrossRef] [PubMed]

- Guttman, Y.; Nudel, A.; Kerem, Z. Polymorphism in Cytochrome P450 3A4 Is Ethnicity Related. Front. Genet. 2019, 10, 224. [Google Scholar] [CrossRef] [PubMed]

- McInnes, G.; Dalton, R.; Sangkuhl, K.; Whirl-Carrillo, M.; Lee, S.B.; Tsao, P.S.; Gaedigk, A.; Altman, R.B.; Woodahl, E.L. Transfer learning enables prediction of CYP2D6 haplotype function. PLoS Comput. Biol. 2020, 16, e1008399. [Google Scholar] [CrossRef]

- Alderfer, S.; Sun, J.; Tahtamouni, L.; Prasad, A. Morphological signatures of actin organization in single cells accurately classify genetic perturbations using CNNs with transfer learning. Soft Matter 2022, 18, 8342–8354. [Google Scholar] [CrossRef]

- Kirchler, M.; Konigorski, S.; Norden, M.; Meltendorf, C.; Kloft, M.; Schurmann, C.; Lippert, C. transferGWAS: GWAS of images using deep transfer learning. Bioinformatics 2022, 38, 3621–3628. [Google Scholar] [CrossRef]

- Zhang, H.; Xu, M.S.; Fan, X.; Chung, W.K.; Shen, Y. Predicting functional effect of missense variants using graph attention neural networks. Nat. Mach. Intell. 2022, 4, 1017–1028. [Google Scholar] [CrossRef] [PubMed]

- Zheng, F.; Liu, Y.; Yang, Y.; Wen, Y.; Li, M. Assessing computational tools for predicting protein stability changes upon missense mutations using a new dataset. Protein Sci. 2024, 33, e4861. [Google Scholar] [CrossRef] [PubMed]

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 9. [Google Scholar] [CrossRef]

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar]

- Abdollahi, M.; Jafarizadeh, A.; Asbagh, A.G.; Sobhi, N.; Pourmoghtader, K.; Pedrammehr, S.; Asadi, H.; Alizadehsani, R.; Tan, R.S.; Acharya, U.R. Artificial Intelligence in Assessing Cardiovascular Diseases and Risk Factors via Retinal Fundus Images: A Review of the Last Decade. arXiv 2023, arXiv:2311.07609. [Google Scholar] [CrossRef]

- Bazargani, Y.S.; Mirzaei, M.; Sobhi, N.; Abdollahi, M.; Jafarizadeh, A.; Pedrammehr, S.; Alizadehsani, R.; Tan, R.S.; Islam, S.M.S.; Acharya, U.R. Artificial Intelligence and Diabetes Mellitus: An Inside Look Through the Retina. arXiv 2024, arXiv:2402.18600. [Google Scholar] [CrossRef]

- Cadrin-Chênevert, A. Moving from ImageNet to RadImageNet for Improved Transfer Learning and Generalizability. Radiol. Artif. Intell. 2022, 4, e220126. [Google Scholar] [CrossRef]

- Zhang, Y.; Li, H.; Shi, S.; Li, Y.; Zhang, J. Multi-source adversarial transfer learning based on similar source domains with local features. arXiv 2023, arXiv:2305.19067. [Google Scholar]

- Peng, M.; Li, Z.; Juan, X. Similarity-based domain adaptation network. Neurocomputing 2022, 493, 462–473. [Google Scholar] [CrossRef]

- Song, Q.; Li, M.; Li, Q.; Lu, X.; Song, K.; Zhang, Z.; Wei, J.; Zhang, L.; Wei, J.; Ye, Y.; et al. DeepAlloDriver: A deep learning-based strategy to predict cancer driver mutations. Nucleic Acids Res. 2023, 51, W129–W133. [Google Scholar] [CrossRef]

- Chen, J.; Wang, X.; Ma, A.; Wang, Q.-E.; Liu, B.; Li, L.; Xu, D.; Ma, Q. Deep transfer learning of cancer drug responses by integrating bulk and single-cell RNA-seq data. Nat. Commun. 2022, 13, 6494. [Google Scholar] [CrossRef] [PubMed]

- Kruszka, P.; Porras, A.R.; Addissie, Y.A.; Moresco, A.; Medrano, S.; Mok, G.T.K.; Leung, G.K.C.; Tekendo-Ngongang, C.; Uwineza, A.; Thong, M.K.; et al. Noonan syndrome in diverse populations. Am. J. Med. Genet. A 2017, 173, 2323–2334. [Google Scholar] [CrossRef] [PubMed]

- Azman, B.Z.; Ankathil, R.; Siti Mariam, I.; Suhaida, M.A.; Norhashimah, M.; Tarmizi, A.B.; Nor Atifah, M.A.; Kannan, T.P.; Zilfalil, B.A. Cytogenetic and clinical profile of Down syndrome in Northeast Malaysia. Singap. Med. J. 2007, 48, 550–554. [Google Scholar]

- Mallick, P.K.; Mohapatra, S.K.; Chae, G.-S.; Mohanty, M.N. Convergent learning–based model for leukemia classification from gene expression. Pers. Ubiquitous Comput. 2023, 27, 1103–1110. [Google Scholar] [CrossRef] [PubMed]

- Nazari, E.; Farzin, A.H.; Aghemiri, M.; Avan, A.; Tara, M.; Tabesh, H. Deep Learning for Acute Myeloid Leukemia Diagnosis. J. Med. Life 2020, 13, 382–387. [Google Scholar] [CrossRef]

| Author (Year) | Goal | Used AI * Models | Databases | Cancer/Pathologic Condition | Input Data | TL | AUROC | Sensitivity, Specificity | Accuracy/Precision |
|---|---|---|---|---|---|---|---|---|---|
| Xiong et al. (2019) [37] | EGFR mutation status (mutated vs. wild-type) | ResNet-101 | Patients from 2013–2017 | Lung adenocarcinoma (n = 1010) | Non-contrast-enhanced CT images | CNN model was pre-trained on ImageNet and fine-tuned on the CT images | Two-dimensional slice images in a transverse plane: 0.766 | - | - |
| | | | | | | | Two-dimensional slice images in multi-view plane: 0.838 | | |
| Cao et al. (2020) [38] | Microsatellite instability | EPLA | TCGA-COAD (n = 429), Asian-CRC (n = 785) | Colorectal cancer | Histopathology images | The model was trained on TCGA-COAD and then generalized to Asian-CRC | 0.9264 | - | - |
| Fu et al. (2020) [39] | Tumor mutations | PC-CHiP (based on Inception-V4) | TCGA | Twenty-eight cancers | Histopathology images of cancer and normal tissue (n = 17,396) | A pre-trained model on 1536 histopathologic features was used as a mutation predictor. | BRAF in thyroid tumors: 0.92 | - | - |
| | | | | | | | PTEN in uterine cancers: 0.82 | | |
| | | | | | | | TP53 in uterine cancer: 0.8 | | |
| | | | | | | | TP53 in low-grade glioma: 0.84 | | |
| | | | | | | | TP53 in breast invasive carcinoma: 0.82 | | |
| Wang et al. (2020) [40] | Tumor mutation burden | ResNet-18 | Data were downloaded from https://doi.org/10.5281/zenodo.2530835 [41] | Gastrointestinal cancer (n = 545), split into two cohorts named TMB-STAD (n = 280) and TMB-COAD-DX (n = 265) | Histopathology images | TL-based CNN models were used to classify the mutation burden | AUROC in TMB-STAD/TMB-COAD-DX, ResNet-18: 0.73/0.77 | - | Patch-level accuracy in TMB-STAD/TMB-COAD-DX: 0.52/0.57 |
| | | ResNet-50 | | | | | ResNet-50: 0.71/0.76 | - | Patch-level accuracy in TMB-STAD/TMB-COAD-DX: 0.53/0.6 |
| | | GoogleNet | | | | | GoogleNet: 0.75/0.78 | - | Patch-level accuracy in TMB-STAD/TMB-COAD-DX: 0.55/0.59 |
| | | InceptionV3 | | | | | InceptionV3: 0.74/0.73 | - | Patch-level accuracy in TMB-STAD/TMB-COAD-DX: 0.52/0.57 |
| | | AlexNet | | | | | AlexNet: 0.68/0.76 | - | Patch-level accuracy in TMB-STAD/TMB-COAD-DX: 0.53/0.58 |
| | | VGG-19 | | | | | VGG-19: 0.71/0.82 | - | Patch-level accuracy in TMB-STAD/TMB-COAD-DX: 0.53/0.58 |
| | | SqueezeNet | | | | | SqueezeNet: 0.7/0.75 | - | Patch-level accuracy in TMB-STAD/TMB-COAD-DX: 0.57/0.49 |
| | | DenseNet-201 | | | | | DenseNet-201: 0.73/0.79 | - | Patch-level accuracy in TMB-STAD/TMB-COAD-DX: 0.55/0.6 |
| Liang et al. (2021) [42] | KIT/PDGFRA gene mutation (KIT exon mutations, PDGFRA mutations, and KIT/PDGFRA wild-type) | AlexNet | Three laboratories | Gastrointestinal stromal tumors (n = 365) | Histopathology images (n = 5153) | All models were pre-trained on ImageNet | - | - | Accuracy: (1) AlexNet: 70% |
| | | Inception-V3 | | | | | | | (2) Inception-V3: 77% |
| | | ResNet-101 | | | | | | | (3) ResNet-101: 84% |
| | | DenseNet-201 | | | | | | | (4) DenseNet-201: 85% |
| Silva et al. (2021) [43] | EGFR mutation status | A conventional autoencoder | LIDC-IDRI (n = 875), NSCLC-Radiogenomics (n = 116) | Lung cancer | CT images | A conventional autoencoder was trained on LIDC-IDRI data to perform segmentation, and TL was then used to identify EGFR mutation status in the NSCLC-Radiogenomics dataset. | When the lung nodule was used as input: 0.51 | - | - |
| | | | | | | | When one lung was used as input: 0.68 | | |
| | | | | | | | When both lungs were used as input: 0.60 | | |
| Lopes et al. (2021) [35] | Identification of phospholamban p.Arg14del mutation carriers | CNN | Patients aged 18–60 years | Cardiomyopathy | Lead I, II, V1–V6 ECG | AI trained to identify sex from ECG (n = 256,278) was fine-tuned and used to identify mutations (n = 155). | 0.87 | 80%, 78% | - |
| Hiam et al. (2022) [44] | EGFR mutation status (positive vs. negative) | ResNet-50 | Patients from 2006–2019 | Non-small cell lung cancer with brain metastasis (n = 59) | T1C MRI | An AI model pre-trained on ImageNet data was used. | 0.91 | 68.7%, 97.7% | Accuracy of 89.8% |
| Li et al. (2022) [45] | STK11, TP53, LRP1B, NF1, FAT1, FAT4, KEAP1, EGFR, KRAS mutation status | Xception (CNN-based model) | NCT-CRC | Colorectal cancer | Histopathologic images (100,000 were used for fine-tuning and 7180 for testing) | An AI model pre-trained on ImageNet was used in this study for the FE task. | AUROC data were provided in a graph | - | - |
| Zeng et al. (2022) [46] | IDH genotyping (mutant vs. wild-type) | MDSA for tumor segmentation and VGG-19 for feature extraction | BraTS 2019, patients from the hospital (2012–2020) | Grade II–IV glioma | T1W, T2W, T1C, FLAIR MRI (335 cases from BraTS and 110 from hospital) | VGG-19 feature extractor was pre-trained on ImageNet. | 0.86 | 77.78%, 75% | Accuracy of 76.39% |
| Zheng et al. (2022) [47] | Detection of sub-clonal mutations | TLsub | Hg19 was used to create their own simulated data, data provided by Ma et al. [48], the WES dataset | DNA mutation, lung cancer, breast cancer | DNA sequence (these data were split into source and target domains) | TL was used to reduce the false positive rate by transferring the knowledge from the source domain to the target domain on simulated data | - | - | Accuracy of 81.18–92.1%, Precision of 86.92–91.8% |
| | | | | | | They also tested their model on real human data | - | - | Accuracy of 73–90%, Precision of 67.69–90% |
| | | | | | | They also tested their model on real human data from the WES dataset | - | - | Accuracy of 93.6–97.45%, Precision of 77.29–95.1% |
| Dammak et al. (2023) [49] | Predicting mutation burden of tumors | VGG-16 | TCGA-LUSC | Lung SCC (n = 50) | Histopathology images (n = 50) | An AI model pre-trained on ImageNet data was used. | VGG-16: 0.8 | - | - |
| | | Xception | | | | | Xception: 0.7 | | |
| | | NASNet-Large | | | | | NASNet-Large: 0.6 | | |
| Furtney et al. (2023) [50] | Molecular subtypes of breast cancer | RGCN (based on CNN) | TCGA-BRCA (n = 1040), I-SPY2 (n = 987), American Association for Cancer Research Project GENIE | Breast cancer | Dynamic contrast-enhanced MRI, pathologic testing results, radiologist reports, clinical attribution | EfficientNet-B0 CNN was pre-trained on the ImageNet dataset and used as a feature extractor. | On the TCGA dataset: 0.871; on I-SPY2: 0.895 | - | - |
| Shao et al. (2024) [51] | EGFR mutation status | 3D CNN | Patients from 2018–2022 | Lung adenocarcinoma (n = 516) | PET/CT images and pre-clinical data | A pre-trained AI model called Model Genesis was acquired from GitHub | CT images: 0.701 | 74.6%, 58.5% | Accuracy: 68.8% |
| | | | | | | | PET images: 0.645 | 54.9%, 65.9% | 58.9% |
| | | | | | | | PET/CT images: 0.722 | 67.6%, 63.4% | 66.1% |
| | | | | | | | PET/CT with clinical data: 0.73 | 67.6%, 65.9% | 67% |
| Rashid et al. (2024) [52] | HER-2 mutation | ResNet-50 as the feature extractor, NSGA-II as the feature selector, and SVM as a classifier. | HER2SC and HER2GAN datasets | Breast cancer | Histopathology images | They used a pre-trained ResNet-50 | - | On the HER2GAN dataset: 90.19%, 91.18% | 90.8%, 90.31% |
| | | | | | | | | On the HER2SC dataset with 633 features extracted: 93.73%, 98.07% | 94.4%, 93.81% |
| | | | | | | | | On the HER2SC dataset with 549 features extracted: 89.98%, 96.98% | 90.75%, 89.96% |

| Author (Year) | Goal | Used AI Models | Databases | TL | Input Data | AUROC * | AUPRC * | F1-Score/MCC * | Accuracy/Precision * |

|---|---|---|---|---|---|---|---|---|---|

| Zhuang et al. (2019) [75] | Prediction of enhancer–promotor interactions | CNN (for FE and prediction) | Enhancer and promotor data provided by Singh et al. [76] | (1) FE task was trained on the data of five cell lines and was then paired with a specific fully connected layer to predict promotor–enhancer interaction in a specific cell line. (2) FE task was trained on the data of all cell lines and was then paired with a specific fully connected layer to predict promotor–enhancer interaction in a specific cell line | Six cell lines GM12878 | (1) 0.96, (2) 0.98 | (1) 0.88, (2) 0.92 | - | - |

| HeLa-S3 | (1) 0.96, (2) 0.98 | (1) 0.9, (2) 0.95 | - | - | |||||

| HUVEC | (1) 0.97, (2) 0.99 | (1) 0.9, (2) 0.95 | - | - | |||||

| IMR90 | (1) 0.96, (2) 0.98 | (1) 0.9, (2) 0.92 | - | - | |||||

| K562 | (1) 0.96, (2) 0.99 | (1) 0.9, (2) 0.95 | - | - | |||||

| NHEK | (1) 0.97 (2) 0.99 | (1) 0.92, (2) 0.96 | - | - | |||||

| Jing et al. (2020) [77] | Prediction of enhancer–promotor interactions | SEPT | Hi-C data, hg19 | (1) AI was trained on one cell line and then tested on the second cell line (AI can be trained on each of six cell lines and then get tested on a particular cell line, and results are reported in a range). (2) AI was trained in half of the data from each cell line and then fine-tuned in a particular cell line. | DNA sequences of seven cell lines HeLa-S3 | (1) 0.59–0.67 (2) 0.77 | - | - | - |

| GM12878 | (1) 0.58–0.64 (2) 0.72 | - | - | - | |||||

| K562 | (1) 0.56–0.63 (2) 0.73 | - | - | - | |||||

| IMR90 | (1) 0.62–0.67 (2) 0.78 | - | - | - | |||||

| NHEK | (1) 0.59–0.66 (2) 0.76 | - | - | - | |||||

| HMEC | (1) 0.61–0.65 (2) 0.76 | - | - | - | |||||

| HUVEC | (1) 0.57–0.69 (2) 0.78 | - | - | - | |||||

| Zhang et al. (2021) [78] | Prediction of enhancer–promotor interactions | EPIsHilbert | TargetFinder, SPEID | (1) AI was trained in data from five cell lines and then trained and tested in a particular cell line. (2) AI was trained in data from all six cell lines and then fine-tuned in a particular cell line | DNA sequences of six cell lines GM12878 | (1) -, (2) 0.959, | (1) 0.946, (2) 0.97 | F1 score: (1) -, (2) 0.917 | - |

| HeLa-S3 | (1) -, (2) 0.95, | (1) 0.97, (2) 0.963 | (1) -, (2) 0.907 | - | |||||

| HUVEC | (1) -, (2) 0.938 | (1) 0.949, (2) 0.953 | (1) -, (2) 0.906, | - | |||||

| IMR90 | (1) -, (2) 0.941 | (1) 0.944, (2) 0.957 | (1) -, (2) 0.898, | - | |||||

| K562 | (1) -, (2) 0.954, | (1) 0.981, (2) 0.946 | (1) -, (2) 0.925 | - | |||||

| NHEK | (1) -, (2) 0.972 | (1) 0.982 (2) 0.966 | (1) -, (2) 0.949 | - | |||||

| Li et al. (2022) [45] | FE and its correlation with 907 immune-related gene expressions | Xception (CNN-based model) | NCT-CRC | A pre-trained AI model on ImageNet was used in this study for the FE task. (R correlation data was presented in the graph) | Histopathologic images (100,000 were used for fine-tuning and 7180 for testing) | - | - | - | - |

| Kalakoti et al. (2023) [79] | Prediction of TF-DNA interactions | TFactorNN (RNN-based model) | ENCODE project, hg19, JASPAR, HOCOMOCO | A pre-trained DNABERT model creates 380-dimensional DNA sequences, and an Att-biLSTM model is used to pre-train and learn long DNA sequences. | ChIP-seq data, ATAC-seq data, TF-binding motifs, input data of three cell lines (Hela-S3, k562, and GM12878) | - | - | 0.83/0.65 | Accuracy of 95.6% |

| Sakly et al. (2023) [80] | Prediction of O6-methylguanine-DNA methyltransferase promoter methylation | ResNet50, DenseNet201 | Glioma patients | A pre-trained AI model was used in this study for the FE task. | T1-precontract, T1-postcontrast, T2-weighted and FLAIR MRI images | - | - | - | Both models had an accuracy of 100% |

| Li et al. (2023) [81] | Identification of 6-methyladenosis, 4-methylcytosine, and 5-hydroxymethylcytosine. | EpiTEAmDNA (a CNN-based model) | iDNA-MS, Hyb4mC, and DeepTorrent | AI was trained on the data methyl nucleotide data from all 15 species and then tested on a particular species dataset. | DNA sequences (n = 1,582,262) from 15 different species, including Arabidopsis thaliana, Caenorhabditis elegans, Drosophila melanogaster, Escherichia coli, and Homo sapiens | - | - | - | Average accuracy in 29 datasets: 88.62% |

| Salvatore et al. (2023) [82] | Identification of DNA regulatory elements | ChromTransfer | ENCODE project, JASPAR 2022 motif database | A pre-trained model on ENCODE data to identify regulatory element activities of DNA sequences | DNA sequences of six cell lines A549 | 0.86 | 0.42 | F1-score for: A549: 0.86 | - |

| HCT116 | 0.79 | 0.4 | HCT116: 0.8 | - | |||||

| HepG2 | 0.89 | 0.74 | HepG2: 0.79 | - | |||||

| GM12878 | 0.85 | 0.49 | GM12878: 0.8 | - | |||||

| K562 | 0.87 | 0.45 | K562: 0.86 | - | |||||

| MCF7 | 0.85 | 0.64 | MCF7: 0.73 | - | |||||

| Mehmood et al. (2024) [83] | Enhancer identification and their strength | A hybrid of ULMFIT, CNN, and attention layers | Benchmark dataset provided by Liu et al. [84] and independent data set. | AI was used to train and predict nucleotide sequences based on the previous sequence, and then the data were used to (1) classify encoder from non-encoder sequences and | DNA sequences | 0.9097 | 0.9319 | MCC: 0.686 | Accuracy: 84.3% |

| (2) predict the strength of the encoder | 0.9902 | 0.988 | 0.774 | 87.5% | |||||

| Yin et al. (2024) [85] | 5-methylcytosine identification | NanoCon | Genome data of A. thaliana [86] Oryza sativa [87] NA12878 [88] | (1) A pre-trained model on A. thaliana was tested on O. sativa. | Nanopore sequencing data | 0.9–1 | approximately 0.9 | - | Precision: 90–100% |

| (2) A pre-trained model on O. sativa was tested on A. thaliana | 0.9–1 | 0.7–0.8 | - | 40–50% | |||||

| (3) Training on CHG motifs and predicting 5-methylcytosine in CpG | 0.5105 | 0.9344 | F1 score: 0.8393 | - | |||||

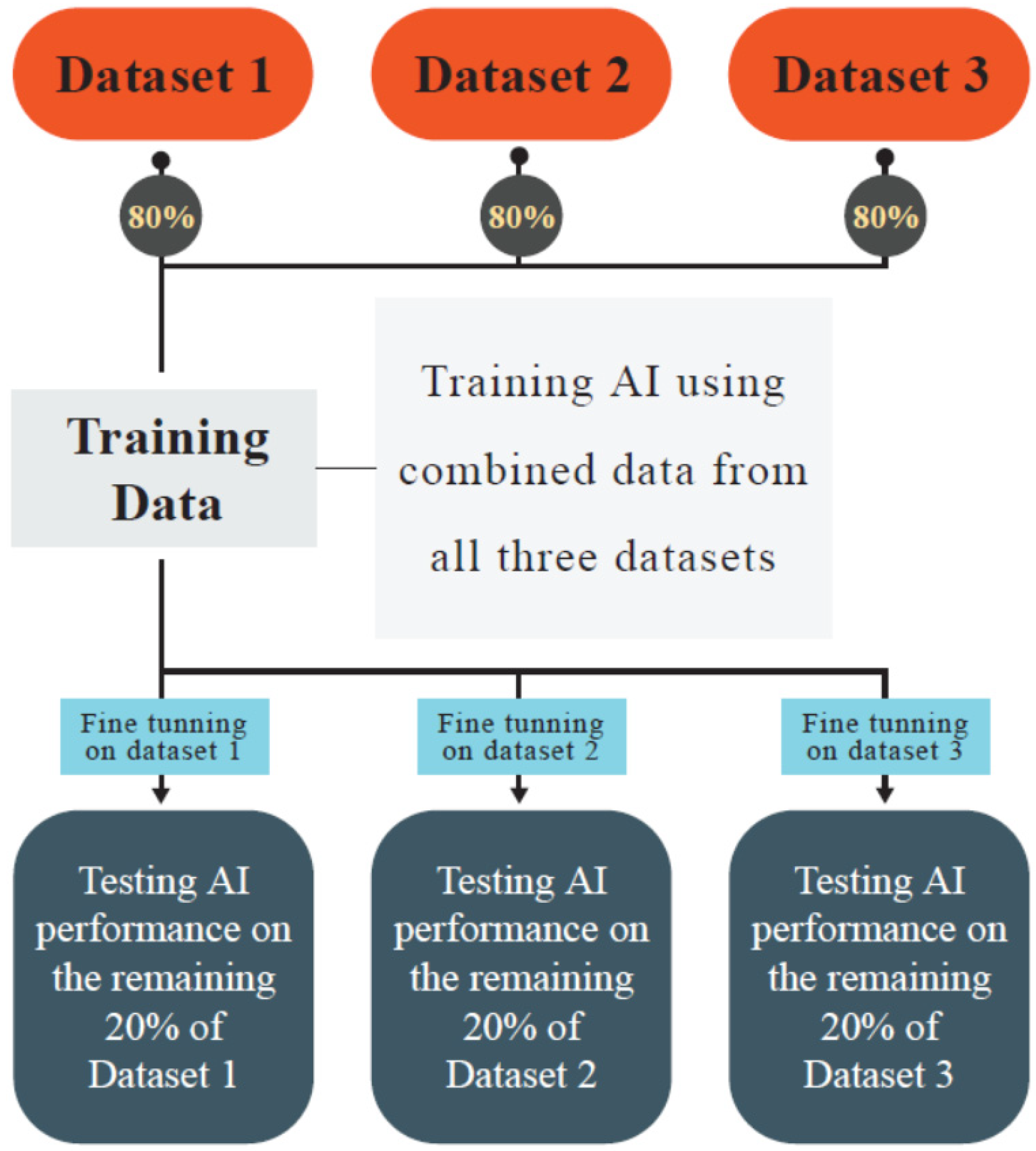

| Yao et al. (2024) [89] | 4-methylcytosine identification | DeepSF-4mC | The dataset provided by Zeng et al. (2020) [90] | They trained a CNN model on the DNA sequence of all three species and tested it on each species. | DNA sequences from A. thaliana | - | - | 0.863/0.722 | Accuracy: 86.1% |

| C. elegans | - | - | 0.855/0.814 | 90.7% | |||||

| D. melanogaster | - | - | 0.888/0.772 | 88.5% | |||||

| Zhang et al. (2024) [91] | eGene identification | TLegene | TCGA, GTEx project, Geuvadis projects | The model trained on the GTEx projects was tested on TCGA (cancers) and Geuvadis projects (non-cancer). Their four models identified between 310–325 significant genes. | Cis-single nucleotide polymorphism data | - | - | - | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ashayeri, H.; Sobhi, N.; Pławiak, P.; Pedrammehr, S.; Alizadehsani, R.; Jafarizadeh, A. Transfer Learning in Cancer Genetics, Mutation Detection, Gene Expression Analysis, and Syndrome Recognition. Cancers 2024, 16, 2138. https://doi.org/10.3390/cancers16112138
