Mixed Reality as a Digital Visualisation Solution for the Head and Neck Tumour Board: Application Creation and Implementation Study

Abstract

Simple Summary

1. Introduction

2. Materials and Methods

2.1. Analysis of the Organisation of a Conventional HNTB

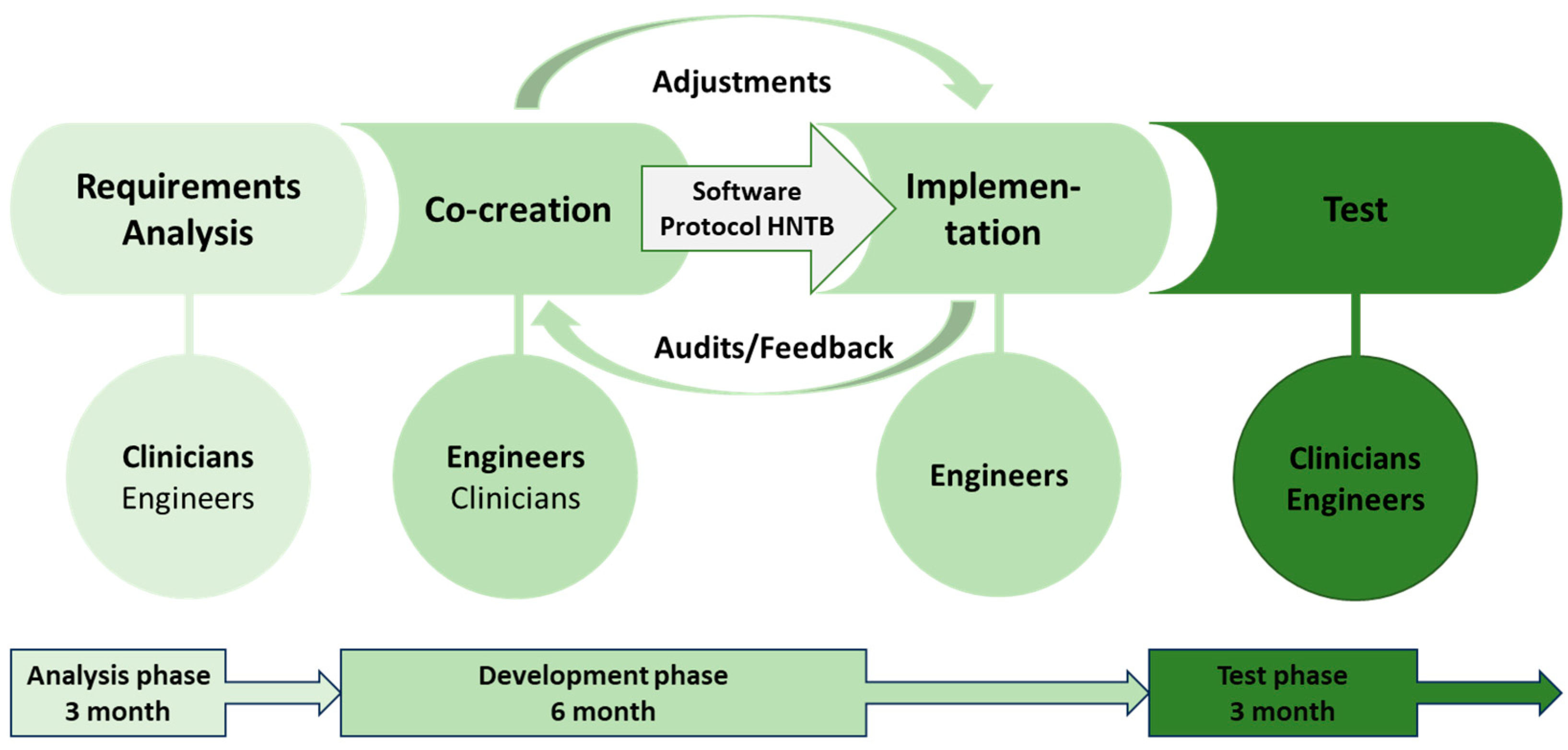

2.2. Definition of the Phases and Analysis of the Requirements for the Realisation of an MR-HNTB

- (1) Analysis phase

- (2) Development phase

  - Formation of a multidisciplinary working group (3 clinicians, 2 engineers) for the development of a user interface according to the subject-specific requirements

  - Regular assessments of the technical implementation

  - Definition of the data that will be collected and visualised in the platform regarding the clinical case

  - Regular adaptation of the platform to the requirements of the HNTB

  - Definition of the workflow for the integration of the software into the HNTB

- (3) Test phase

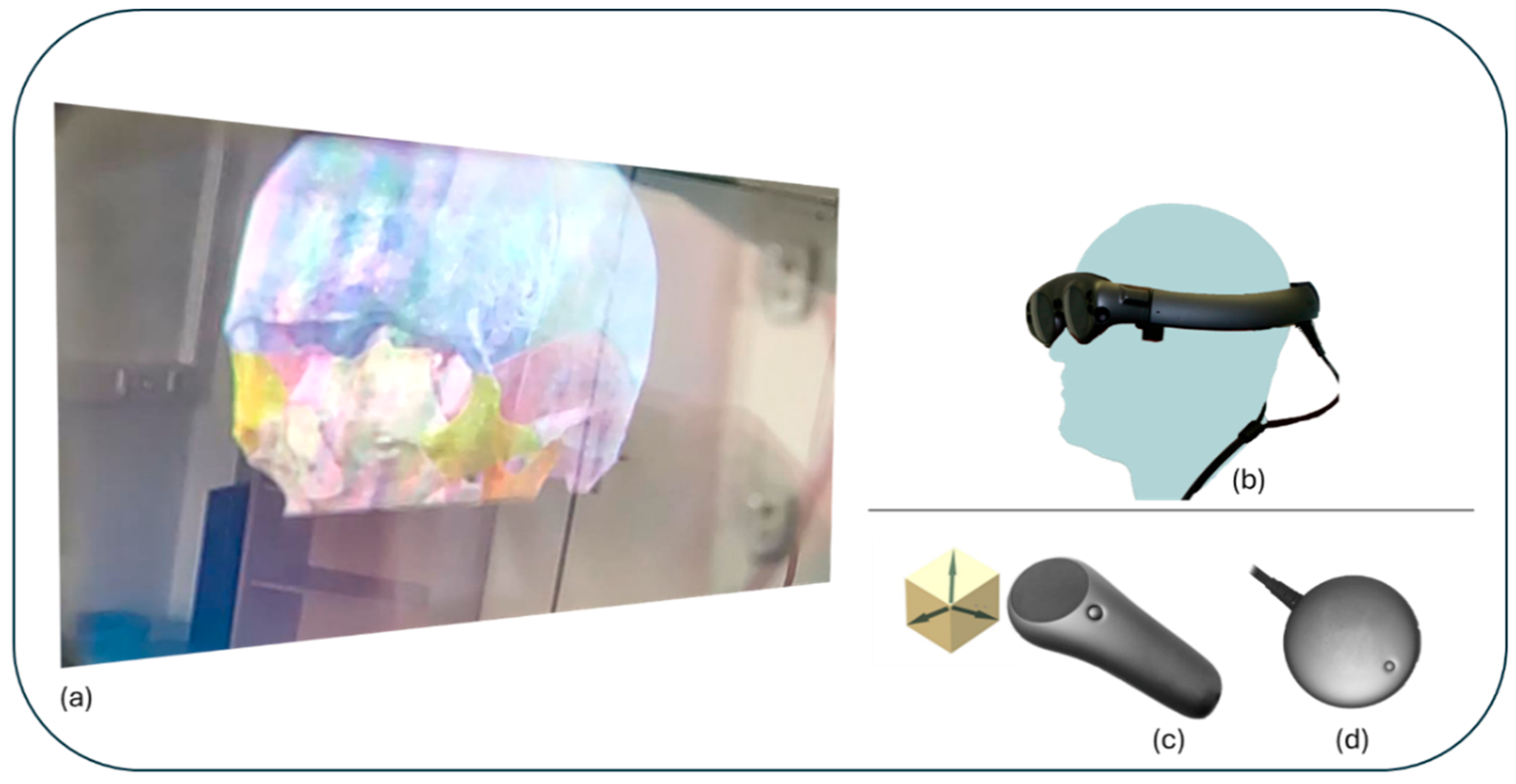

2.3. Hardware and Software

2.3.1. Hardware

2.3.2. Software

2.4. Ethics

3. Results

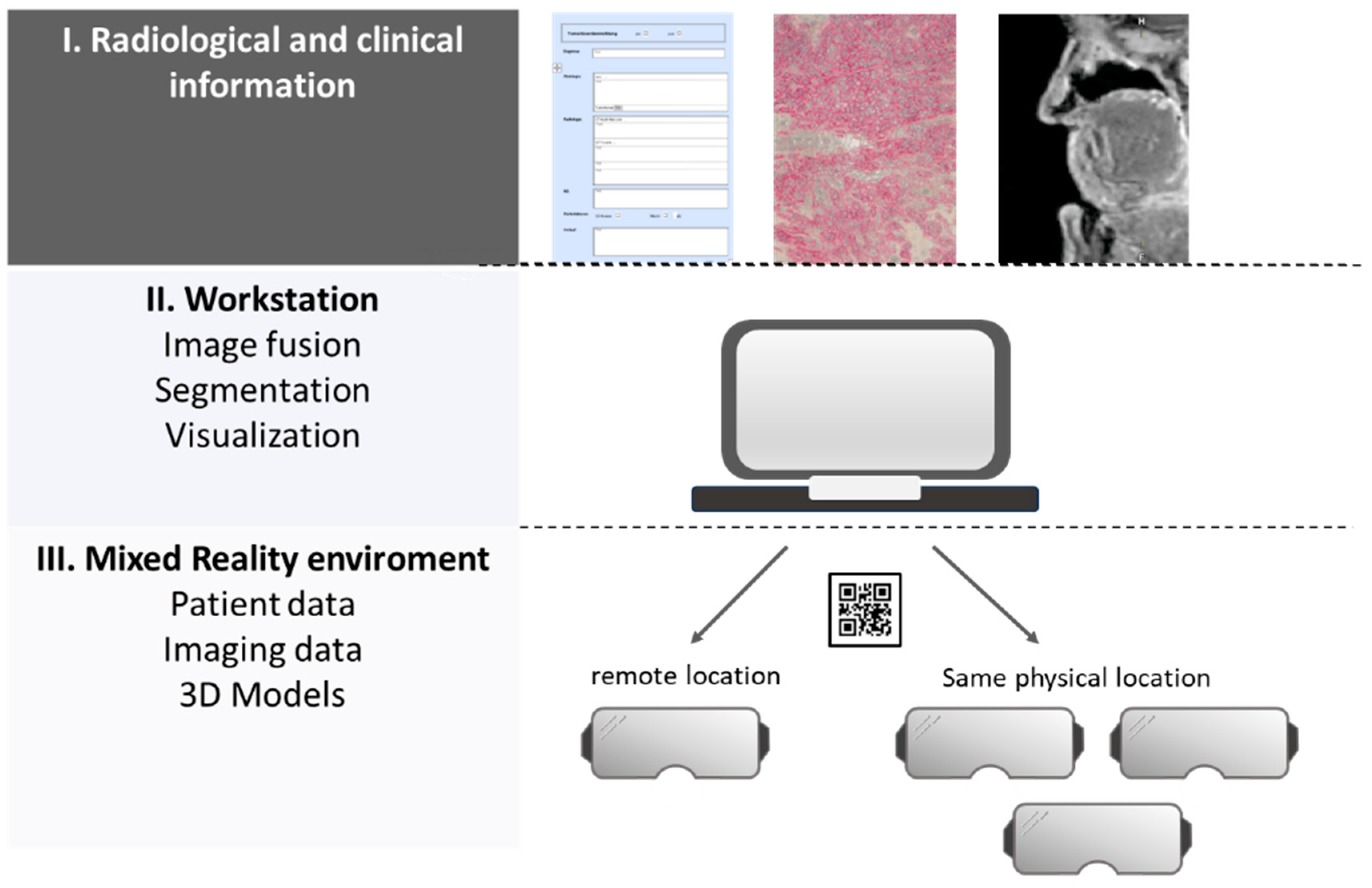

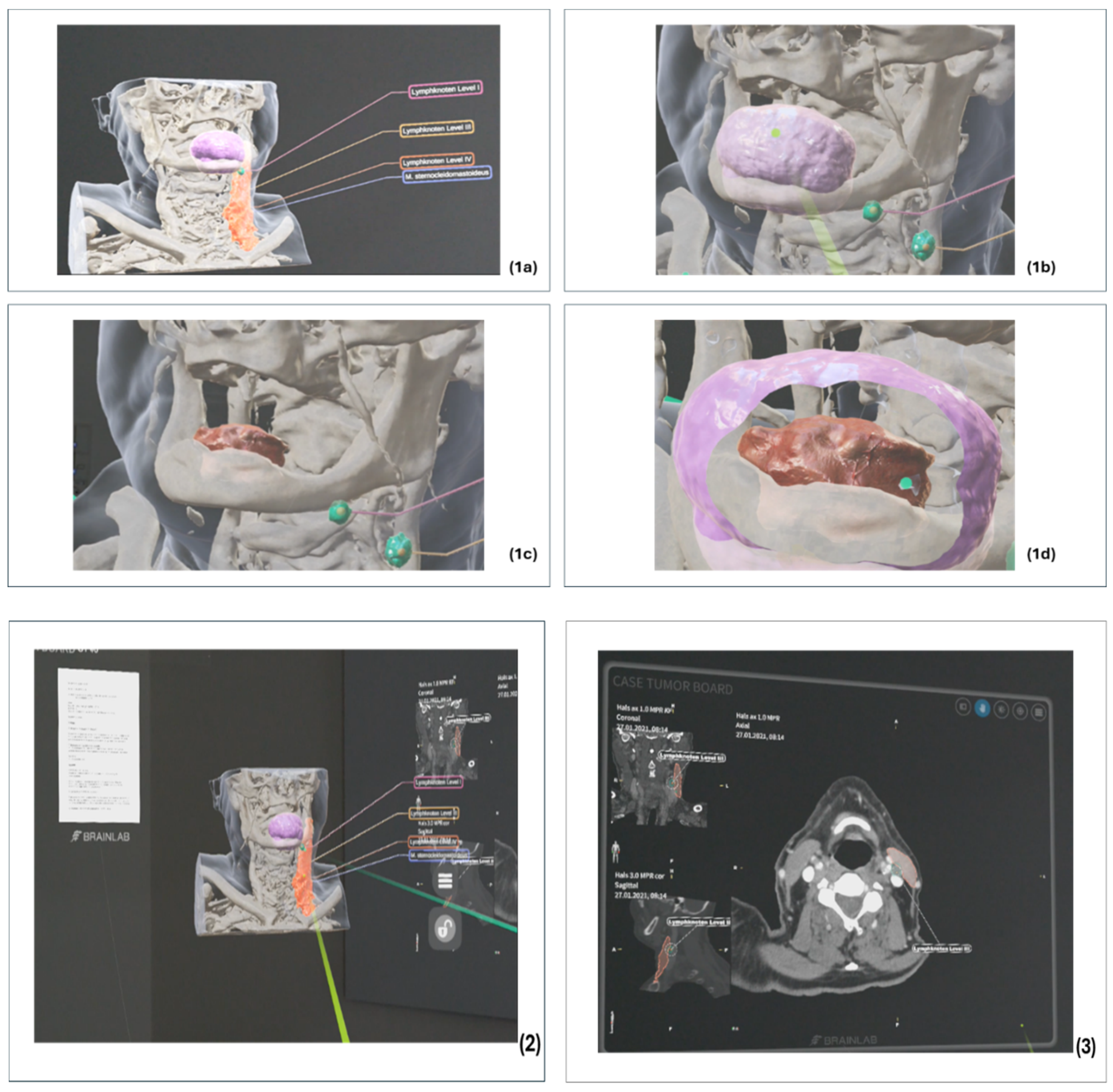

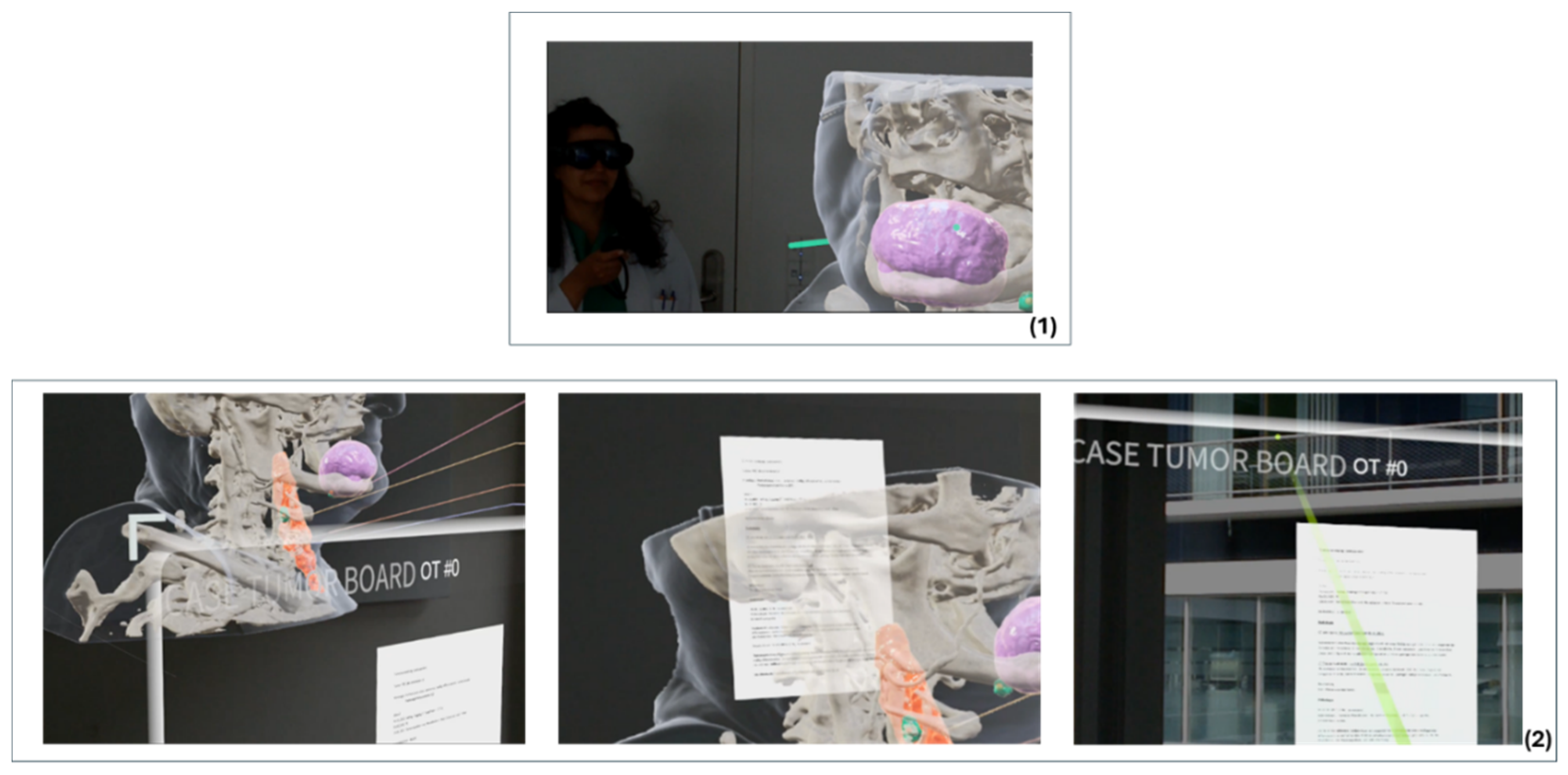

3.1. Technical Realisation and Implementation of an MR-HNTB

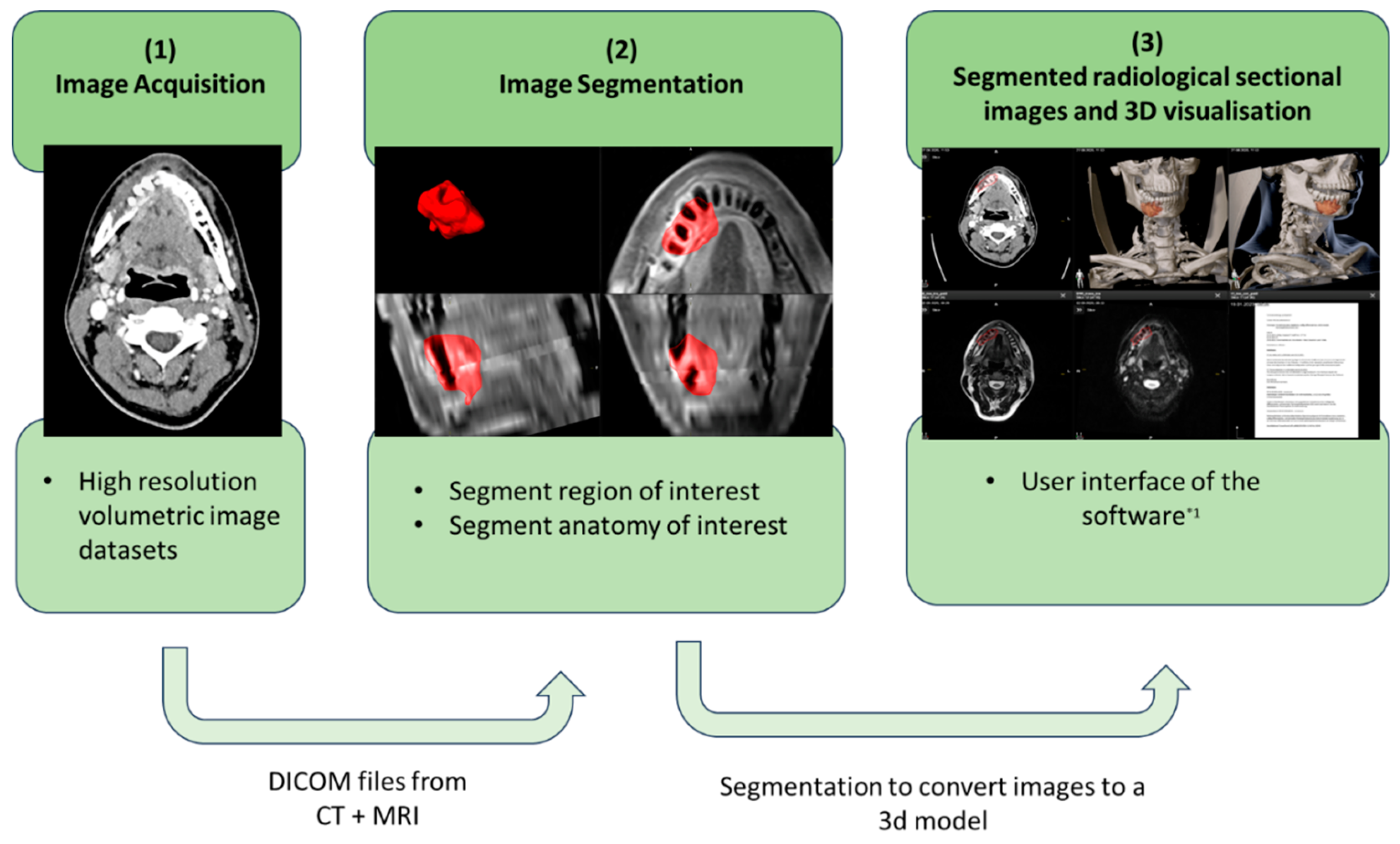

3.1.1. Preparation of the Radiological Cross-Sectional Imaging

3.1.2. Preparation of Clinical Findings and Sectional Imaging

3.1.3. Integration of Tools to Support Collaboration

3.2. Time Requirement for the Preparation of Case Discussions

3.3. Qualitative Evaluation of the Feedback Sessions and Audits

3.3.1. Qualitative Assessment of the Audits in the Analysis Phase

- Easy access to relevant findings

- Creation of a single presentation per case

- Integration of the various interdisciplinary requirements

- User-friendly and intuitive user interface

3.3.2. Qualitative Assessment of the Feedback Sessions in the Development Phase

3.3.3. Qualitative Evaluation of the Audits during the Test Phase

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Process or Quality Challenge | Technical Requirements |
|---|---|

| Specification | Value |
|---|---|
| Operating system | Lumin OS |
| Processor | Nvidia Parker SoC |
| GPU | Nvidia Pascal 256 CUDA |
| RAM | 8 GB |
| Storage (ROM) | 128 GB |
| Resolution | 1280 × 960 per eye |
| Frame rate | 122 Hz |
| Field of view | 50° diagonal, 40° horizontal and 30° vertical |
| Eye tracking | Yes |
| Weight | 316 g |
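
The resolution and field-of-view figures in the table can be combined into an approximate angular resolution, which is one way to judge how finely radiological detail can be rendered. The following is a minimal sketch, not part of the study's software: it assumes pixels are spread evenly across the field of view and treats the field of view as a flat rectangle, both of which are simplifications of real display optics. The function names are hypothetical.

```python
import math

# Values copied from the hardware table above.
RES_H_PX, RES_V_PX = 1280, 960       # pixels per eye
FOV_H_DEG, FOV_V_DEG = 40.0, 30.0    # horizontal and vertical field of view
FOV_DIAG_DEG = 50.0                  # diagonal field of view as listed


def pixels_per_degree(pixels: int, fov_deg: float) -> float:
    """Average pixel density, assuming pixels are evenly distributed (assumption)."""
    return pixels / fov_deg


def flat_diagonal(h_deg: float, v_deg: float) -> float:
    """Diagonal of a flat h x v rectangle; only an approximation for angles."""
    return math.hypot(h_deg, v_deg)


ppd_h = pixels_per_degree(RES_H_PX, FOV_H_DEG)
ppd_v = pixels_per_degree(RES_V_PX, FOV_V_DEG)
diag = flat_diagonal(FOV_H_DEG, FOV_V_DEG)

print(f"horizontal: {ppd_h:.1f} px/deg, vertical: {ppd_v:.1f} px/deg")
print(f"flat-rectangle diagonal: {diag:.1f} deg (table lists {FOV_DIAG_DEG:.1f} deg)")
```

Under these assumptions the horizontal and vertical densities coincide, because both the resolution and the field of view have a 4:3 aspect ratio, and the listed diagonal matches the flat-rectangle estimate.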

| Main Category | Subcategory |
|---|---|
| Structuring the tumour board | |
| Technical requirements/Software requirements | |
| Compliance with quality standards | |
| Providing the relevant information | |

| Main Category | Subcategory |
|---|---|
| Issue I | |
| Issue II | |
| Issue III | |

| Main Category | Subcategory |
|---|---|
| Positive feedback | Process |
| Negative feedback | Process |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Karnatz, N.; Schwerter, M.; Liu, S.; Parviz, A.; Wilkat, M.; Rana, M. Mixed Reality as a Digital Visualisation Solution for the Head and Neck Tumour Board: Application Creation and Implementation Study. Cancers 2024, 16, 1392. https://doi.org/10.3390/cancers16071392