Classification of Appearance Quality of Red Grape Based on Transfer Learning of Convolution Neural Network

Abstract

1. Introduction

2. Materials and Methods

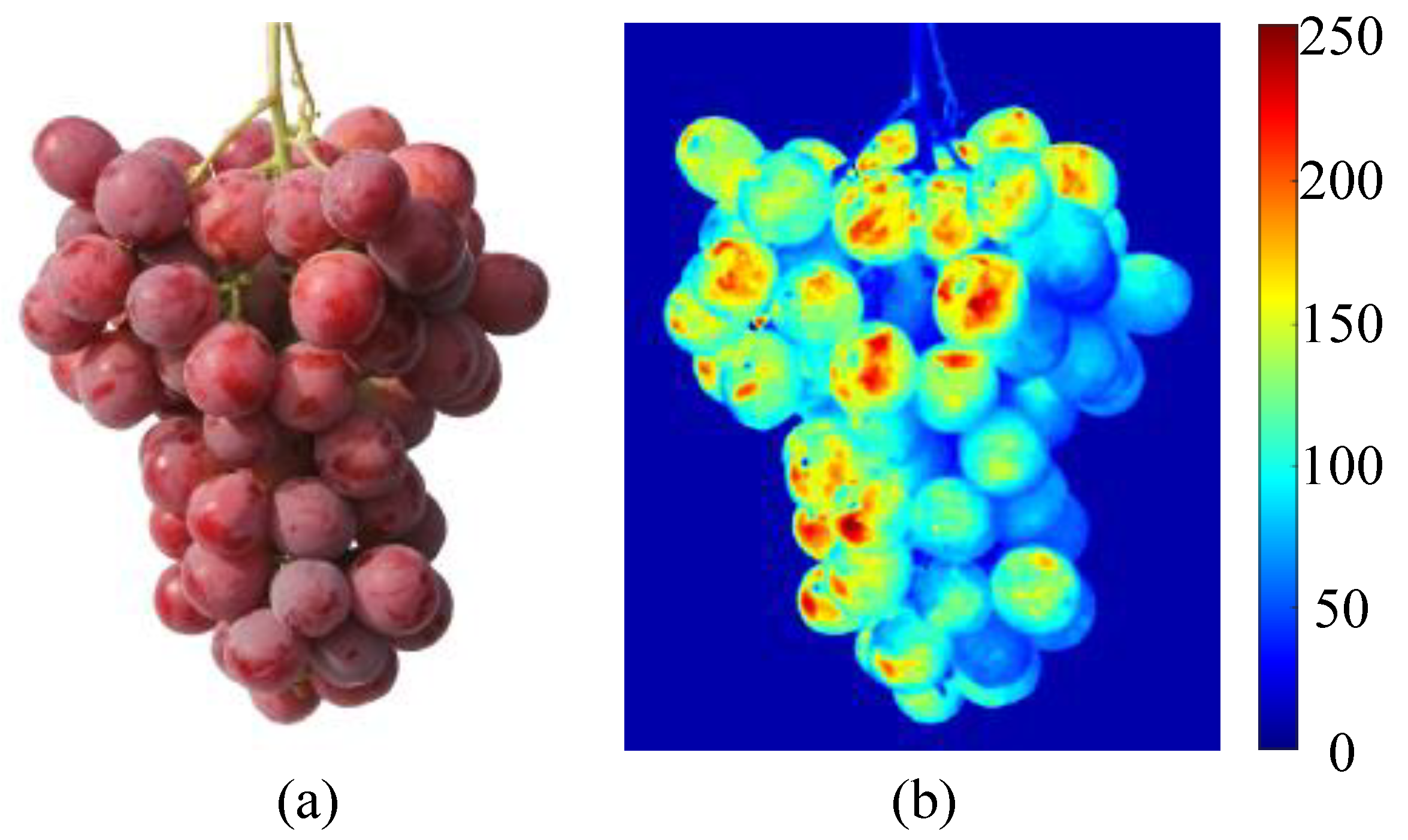

2.1. Image Acquisition of Red Grape Ear

2.2. Data Augmentation of Red Grape Cluster Images

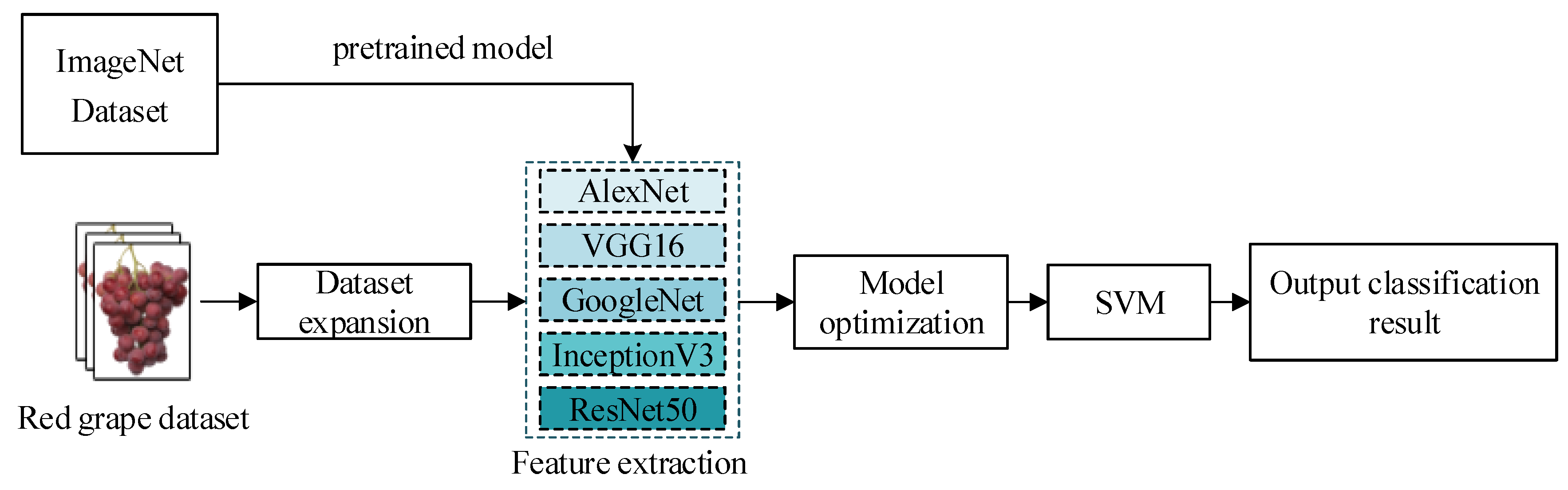

2.3. Construction Method of Appearance Quality Grading Model for Red Grapes

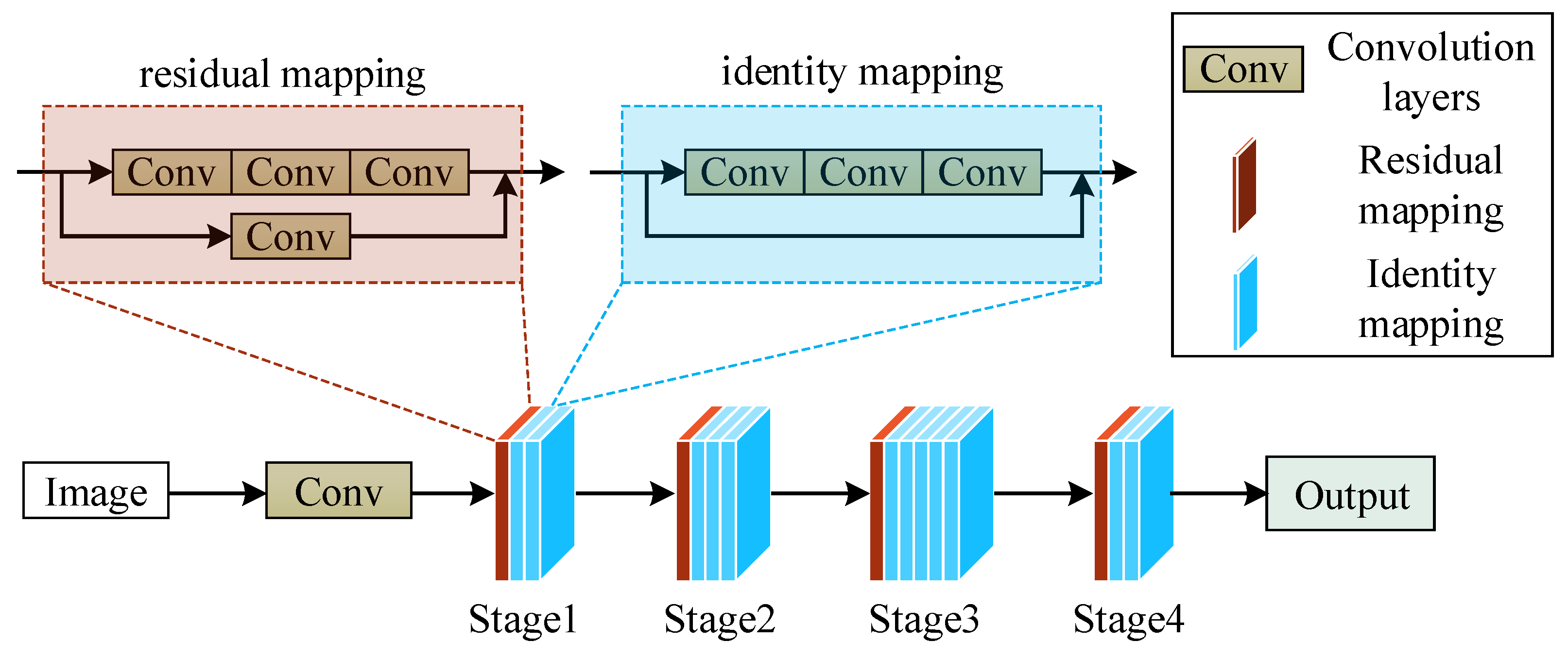

2.3.1. Transfer Learning

2.3.2. SVM Classifier

2.3.3. Red Grape Appearance Quality Grading Model Construction Process

2.4. Model Optimization

2.5. Model Evaluation Metrics

3. Results and Analysis

3.1. Performance Analysis of Pretrained Network Models

3.2. Analysis of the Factors Affecting the Performance of the ResNet50 Model

3.3. Performance Analysis of Feature Extraction Based on ResNet50 Network Model

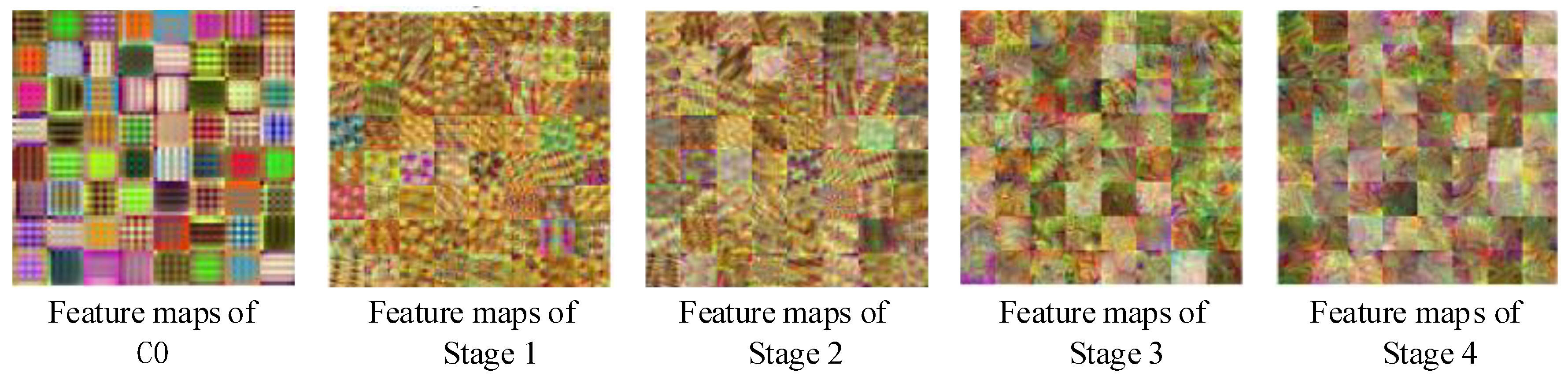

3.3.1. Performance Analysis of ResNet50 Network Feature Extraction Based on Feature Visualization

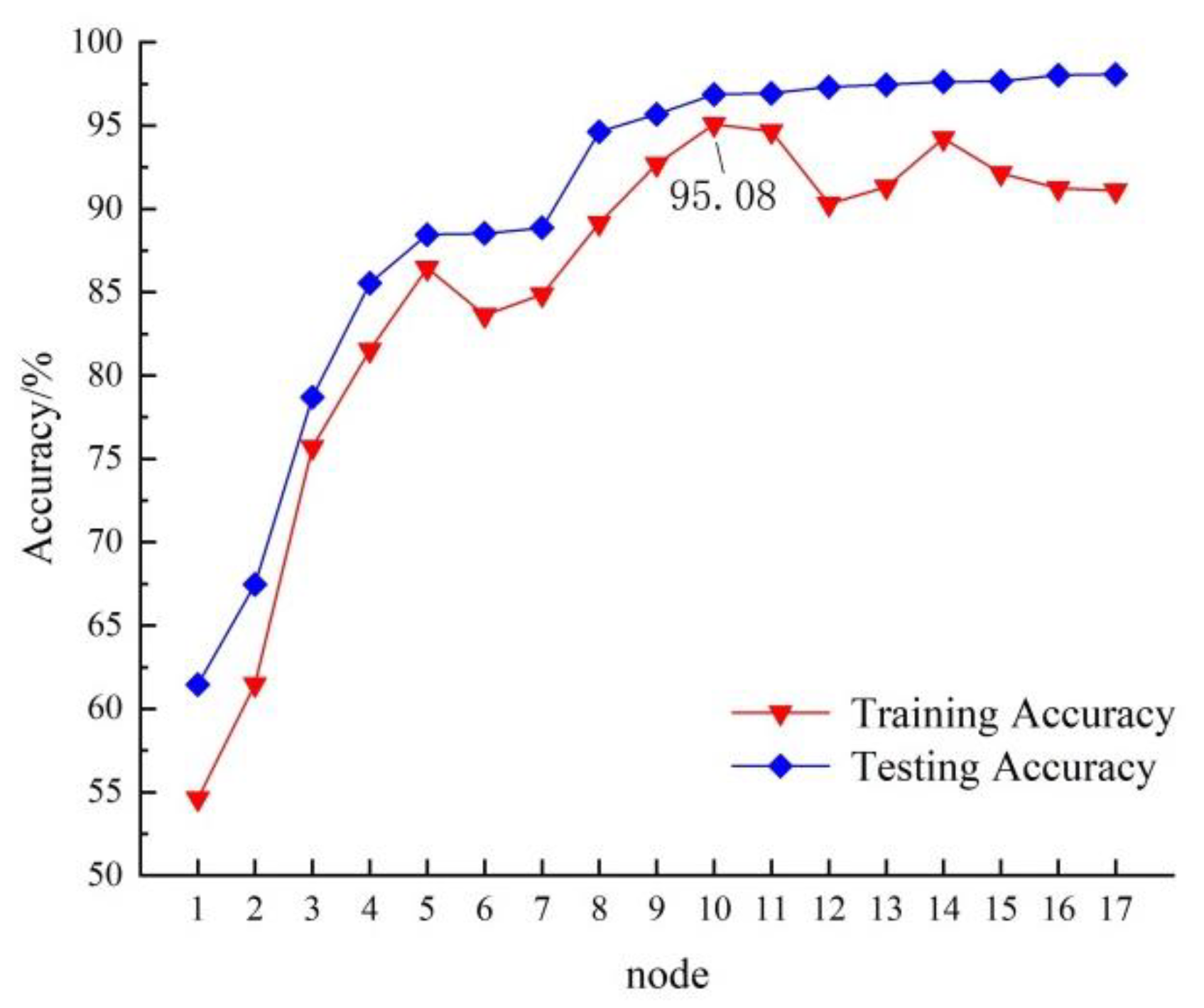

3.3.2. Analysis of Network Architecture and Classification Performance of Red Grapes

3.3.3. Improved Model Performance Analysis

4. Conclusions

- Using a model-based transfer learning method, five pre-trained network models (AlexNet, VGG16, GoogLeNet, ResNet50, and InceptionV3) were compared under the same hyperparameter settings. Of these, the ResNet50 network model was the most suitable for the red globe grape dataset: on the test set, its average classification accuracy reached 82.85% and its F1 value 82.69%.

- After hyperparameter optimization, with the learning rate set to 0.001 and training run for 10 epochs, the grading accuracy of the ResNet50 network model on the red table grape dataset reached 93.89%.

- The feature outputs of the intermediate convolutional layers of the ResNet50 network model were analyzed layer by layer and combined with an SVM classifier to construct an appearance-based quality grading model for red table grape clusters. Tested on the dataset, the constructed model reached an average classification accuracy of 95.08%. These results indicate that the deep network transfer learning algorithm proposed in this article has practical application value for grape cluster quality grading.

- In summary, this study proposes a deep network transfer learning algorithm for grape cluster quality grading that achieved promising results on the red table grape dataset. The method has the potential to enhance transparency in the fruit market, increase consumer trust, and support the development and sustainability of the industry. However, the study is limited to red grapes imaged with specialized acquisition equipment under specific indoor lighting conditions; the appearance quality grading of white grapes was not investigated, and automated grading of grape clusters under natural light remains an open problem. Future research will focus on developing an online grading model that assesses the appearance quality of various categories of field grapes under natural light. We will also work on hardware deployment of the proposed model, using knowledge distillation or pruning algorithms to make it practical, and apply it to field grading scenarios. To address the unbalanced experimental sample in this study, we will use image processing techniques such as generative adversarial networks (GANs) to balance the samples across grades and complete the construction and testing of a system for grading the quality of red grapes.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Cluster Grade | Original Images | Images from Data Augmentation | Dataset Division: Training Set | Dataset Division: Test Set |
|---|---|---|---|---|
| Grade I | 195 | 585 | 546 | 234 |
| Grade II | 120 | 360 | 336 | 144 |
| Grade III | 135 | 405 | 378 | 162 |
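
The counts in the table above can be checked for internal consistency with a short script. The threefold augmentation factor and the 70/30 training/test ratio used here are inferred from the numbers themselves, not stated in this section, so treat both as assumptions.

```python
# Per-grade image counts copied from the table:
# (original, from augmentation, training set, test set).
COUNTS = {
    "Grade I": (195, 585, 546, 234),
    "Grade II": (120, 360, 336, 144),
    "Grade III": (135, 405, 378, 162),
}


def check_split(original, augmented, train, test, train_ratio=0.7):
    """Summarize one grade's counts; train_ratio=0.7 is an inferred assumption."""
    total = original + augmented
    return {
        "total": total,
        "augmentation_factor": augmented / original,
        "split_is_complete": train + test == total,
        "train_matches_ratio": train == round(total * train_ratio),
    }


for grade, row in COUNTS.items():
    print(grade, check_split(*row))
```

For every grade the training and test sets together account for all original and augmented images, which suggests the split was made after augmentation.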

| Feature Extractor | Network Depth | Training Accuracy/% | Training Recall/% | Training F1-Score/% | Test Accuracy/% | Test Recall/% | Test F1-Score/% | Mean Detection Time/ms |
|---|---|---|---|---|---|---|---|---|
| AlexNet | 8 | 75.62 | 75.86 | 74.97 | 72.31 | 70.09 | 72.35 | 97.00 |
| VGG16 | 16 | 81.35 | 82.24 | 80.81 | 78.15 | 75.46 | 77.67 | 128.64 |
| GoogLeNet | 22 | 82.14 | 83.35 | 82.42 | 78.79 | 76.02 | 78.16 | 236.14 |
| InceptionV3 | 48 | 88.43 | 89.94 | 88.11 | 80.31 | 78.86 | 80.99 | 480.20 |
| ResNet50 | 50 | 86.74 | 87.43 | 85.98 | 82.85 | 80.31 | 82.69 | 241.20 |
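
Accuracy, recall, and F1-score figures like those in the table above are typically derived from a confusion matrix. The sketch below shows one common way to do this for a three-grade problem; it assumes macro-averaging over grades, which this section does not confirm, and the confusion matrix is a made-up example, not data from the study.

```python
def macro_metrics(confusion):
    """Compute accuracy and macro-averaged precision, recall, and F1.

    confusion[i][j] is the number of samples of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))
    precisions, recalls, f1s = [], [], []
    for i in range(n):
        tp = confusion[i][i]
        actual = sum(confusion[i])                          # samples truly in class i
        predicted = sum(confusion[j][i] for j in range(n))  # samples predicted as class i
        recall = tp / actual if actual else 0.0
        precision = tp / predicted if predicted else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Hypothetical confusion matrix for Grades I, II, III (illustration only).
example = [[8, 1, 1], [2, 7, 1], [0, 2, 8]]
print(macro_metrics(example))
```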

| Learning Rate | 5 Epochs | 10 Epochs | 15 Epochs | 20 Epochs | 30 Epochs | 40 Epochs |
|---|---|---|---|---|---|---|
| 0.1 | 55.77% | 65.46% | 67.31% | 69.23% | 88.46% | 88.96% |
| 0.01 | 82.85% | 93.89% | 94.22% | 94.35% | 93.36% | 93.31% |
| 0.001 | 85.12% | 92.31% | 93.99% | 92.31% | 92.46% | 92.21% |
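
One way to read a grid like the table above is to look for the cheapest setting whose accuracy is close to the best one, since extra epochs cost training time. The sketch below transcribes the values as printed in the table; the 0.5-percentage-point tolerance and the function name are illustrative assumptions, not the authors' selection criterion.

```python
EPOCHS = (5, 10, 15, 20, 30, 40)

# Accuracy in percent, keyed by learning rate, transcribed from the table.
ACCURACY = {
    0.1: (55.77, 65.46, 67.31, 69.23, 88.46, 88.96),
    0.01: (82.85, 93.89, 94.22, 94.35, 93.36, 93.31),
    0.001: (85.12, 92.31, 93.99, 92.31, 92.46, 92.21),
}


def cheapest_near_best(tolerance=0.5):
    """Return (learning_rate, epochs, accuracy) with the fewest epochs
    among cells within `tolerance` points of the best accuracy."""
    cells = [
        (lr, epochs, acc)
        for lr, row in ACCURACY.items()
        for epochs, acc in zip(EPOCHS, row)
    ]
    best = max(acc for _, _, acc in cells)
    near_best = [cell for cell in cells if cell[2] >= best - tolerance]
    # Prefer fewer epochs; break ties by higher accuracy.
    return min(near_best, key=lambda cell: (cell[1], -cell[2]))


print(cheapest_near_best())
```

With a tolerance of zero the function simply returns the highest-accuracy cell; a larger tolerance trades a little accuracy for shorter training.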

| Grading Methods | Training Accuracy/% | Training Recall/% | Training F1-Score/% | Test Accuracy/% | Test Recall/% | Test F1-Score/% |
|---|---|---|---|---|---|---|
| ResNet50 + softmax | 86.74 | 87.43 | 85.98 | 82.85 | 80.31 | 82.69 |
| ResNet50 + SVM | 96.88 | 95.36 | 96.43 | 95.08 | 94.88 | 96.78 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zha, Z.; Shi, D.; Chen, X.; Shi, H.; Wu, J. Classification of Appearance Quality of Red Grape Based on Transfer Learning of Convolution Neural Network. Agronomy 2023, 13, 2015. https://doi.org/10.3390/agronomy13082015
