Defective Pennywort Leaf Detection Using Machine Vision and Mask R-CNN Model

Abstract

1. Introduction

2. Materials and Methods

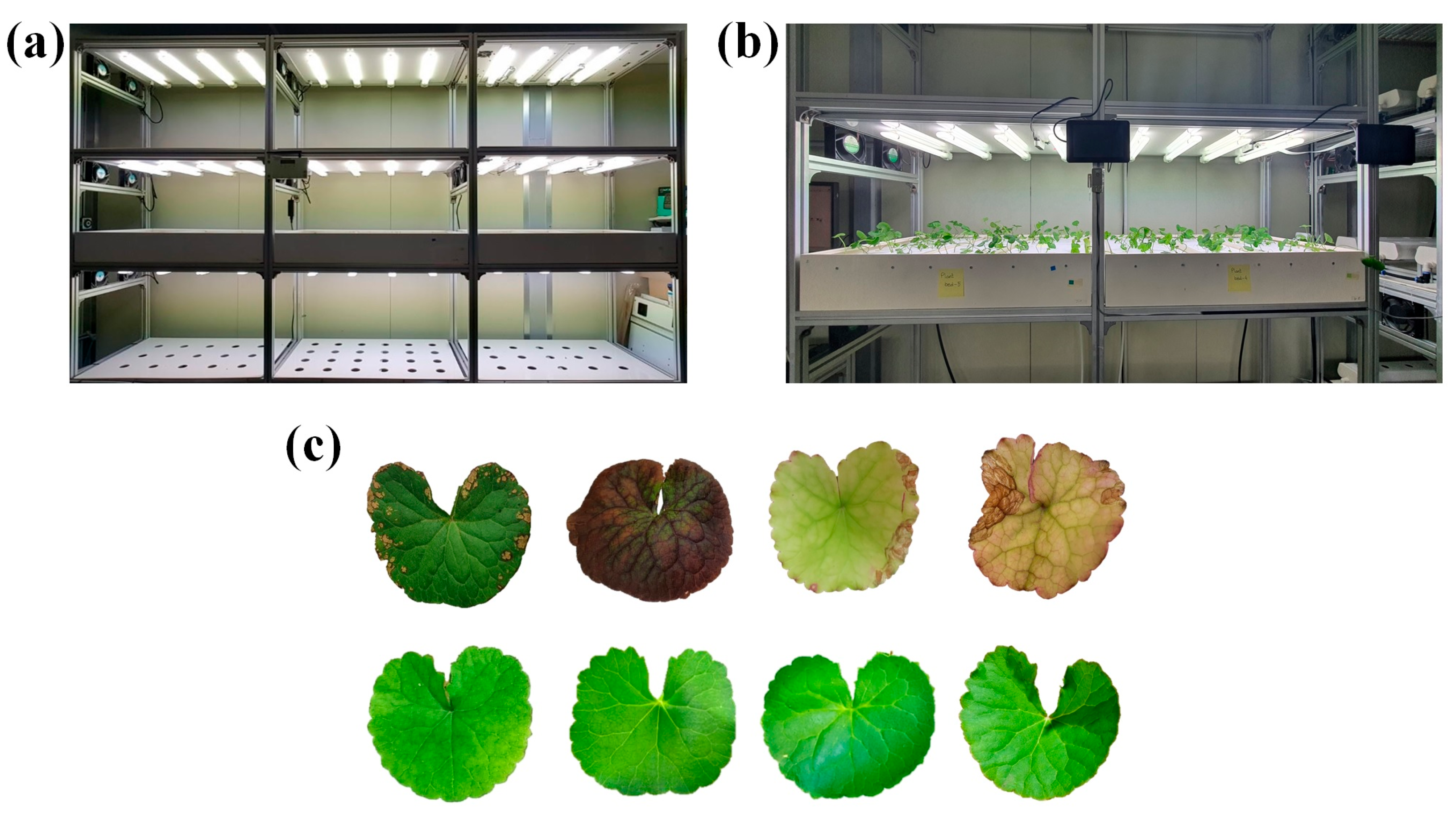

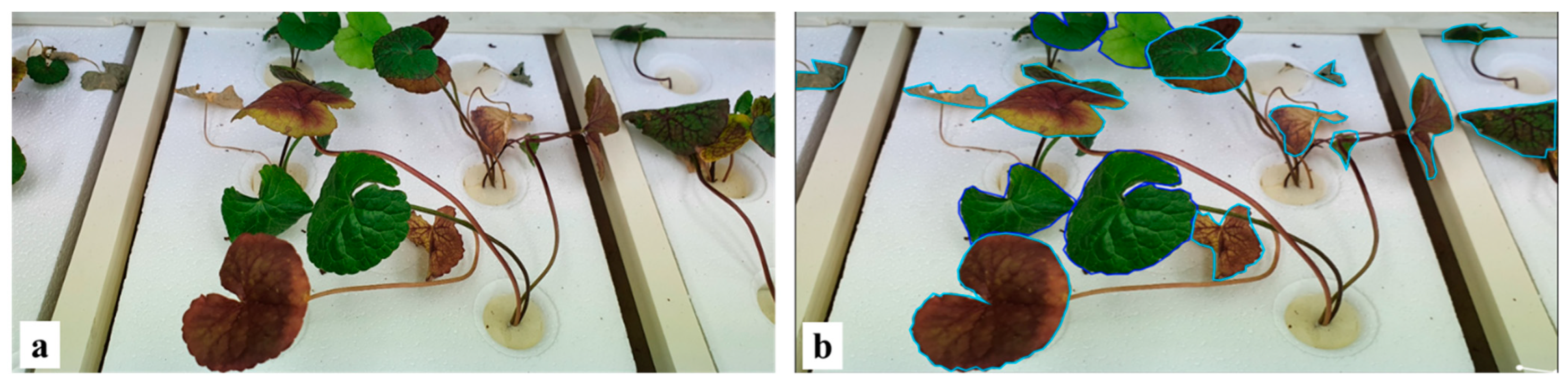

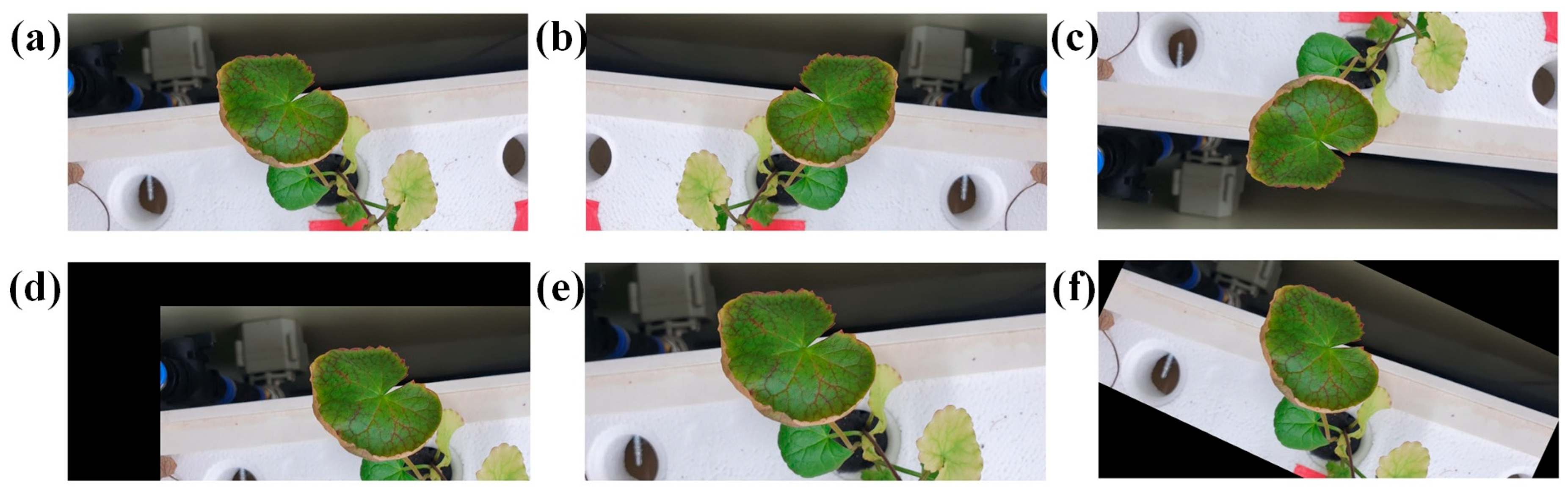

2.1. Experimental Site and Image Acquisition

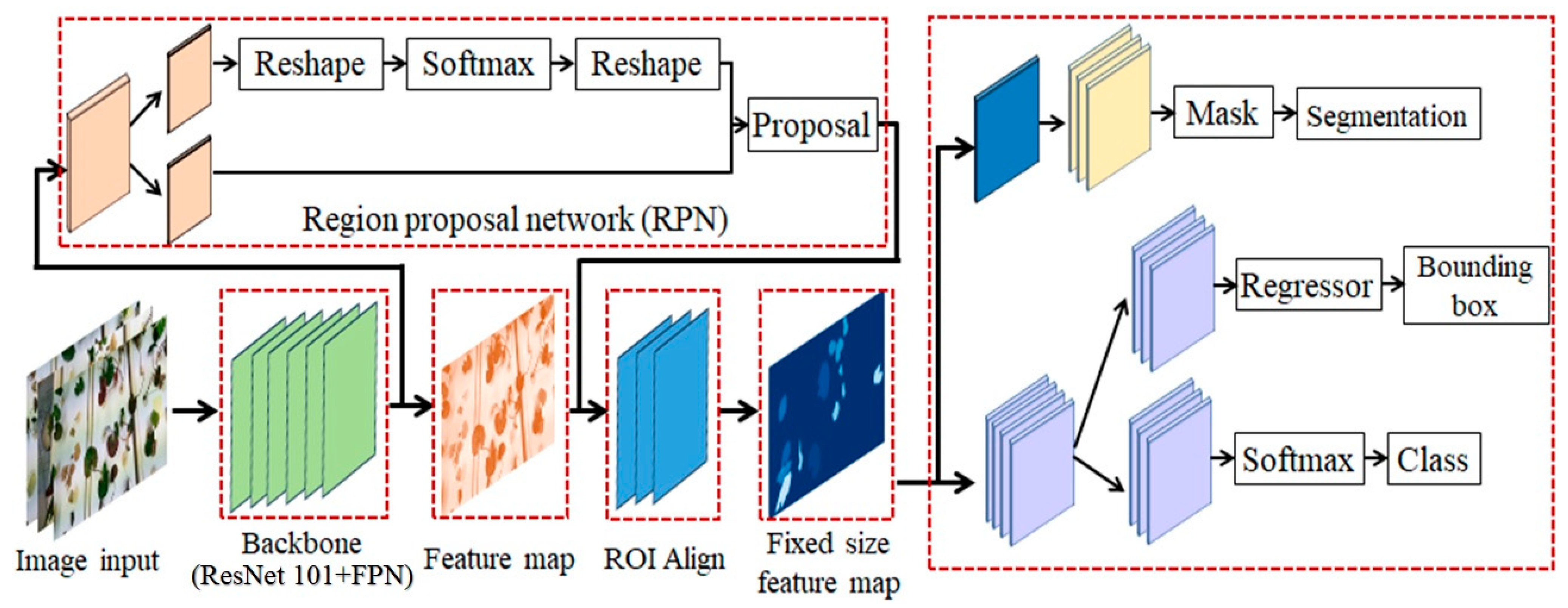

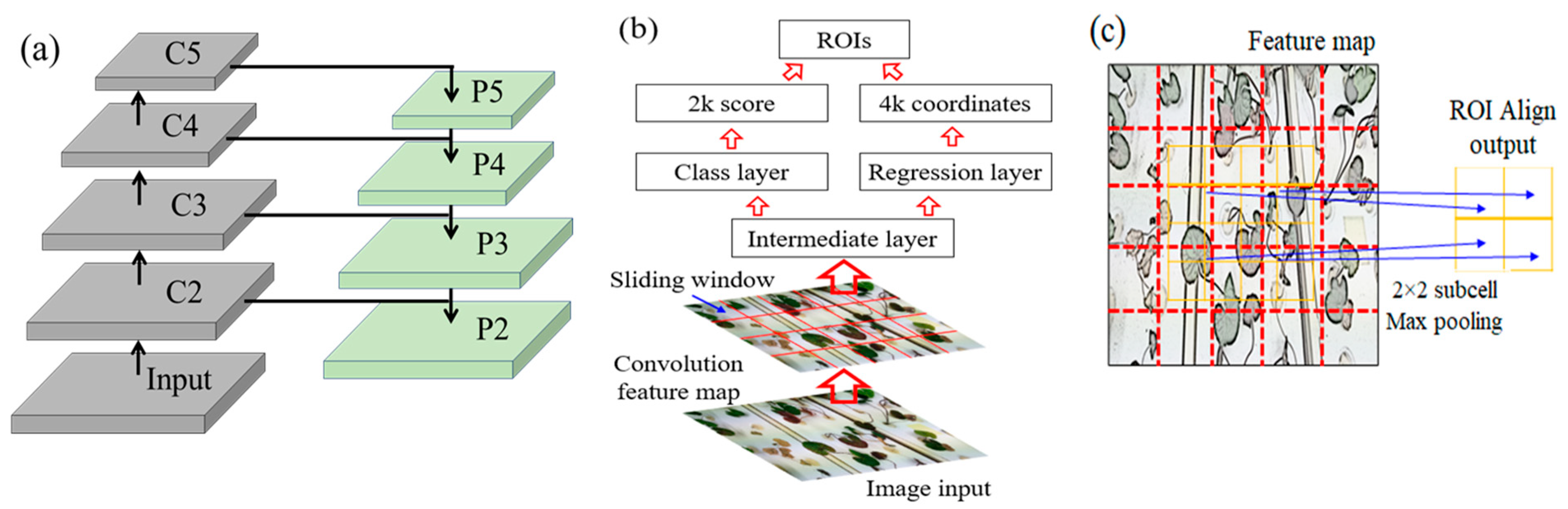

2.2. Mask R-CNN Model Structure
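Mask R-CNN extends Faster R-CNN with a parallel mask branch and replaces RoIPool with RoIAlign. RoIAlign reads feature values at fractional coordinates by bilinear interpolation instead of rounding region boundaries to the feature grid. The NumPy sketch below illustrates only that sampling step on a single-channel feature map. It assumes one sample point at the centre of each output bin, whereas the original design averages several samples per bin. `bilinear_sample` and `roi_align` are hypothetical names and are not the study's code.

```python
import numpy as np


def bilinear_sample(feat: np.ndarray, y: float, x: float) -> float:
    """Bilinearly interpolate a 2-D feature map at a fractional (y, x)."""
    h, w = feat.shape
    # Clamp so that the four neighbouring pixels stay inside the map.
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    top = (1 - dx) * feat[y0, x0] + dx * feat[y0, x1]
    bottom = (1 - dx) * feat[y1, x0] + dx * feat[y1, x1]
    return float((1 - dy) * top + dy * bottom)


def roi_align(feat: np.ndarray, box, out_size: int = 2) -> np.ndarray:
    """Pool a box (y1, x1, y2, x2), given in feature-map coordinates, into
    out_size x out_size bins, sampling each bin once at its centre.
    No coordinate is rounded to the grid (hypothetical simplified RoIAlign)."""
    y1, x1, y2, x2 = box
    bin_h = (y2 - y1) / out_size
    bin_w = (x2 - x1) / out_size
    out = np.zeros((out_size, out_size))
    for i in range(out_size):
        for j in range(out_size):
            cy = y1 + (i + 0.5) * bin_h
            cx = x1 + (j + 0.5) * bin_w
            out[i, j] = bilinear_sample(feat, cy, cx)
    return out


# Toy 4 x 4 feature map whose value at (y, x) is 4*y + x.
feat = np.arange(16, dtype=float).reshape(4, 4)
pooled = roi_align(feat, (0.5, 0.5, 2.5, 2.5))
print(pooled)
```

The toy feature map is linear in both coordinates, so bilinear interpolation is exact and the 2 × 2 output is [[5, 6], [9, 10]]. In a full model, the same operation runs independently on every channel of the backbone feature map.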

2.3. Improved Mask R-CNN Model Using Attention Modules

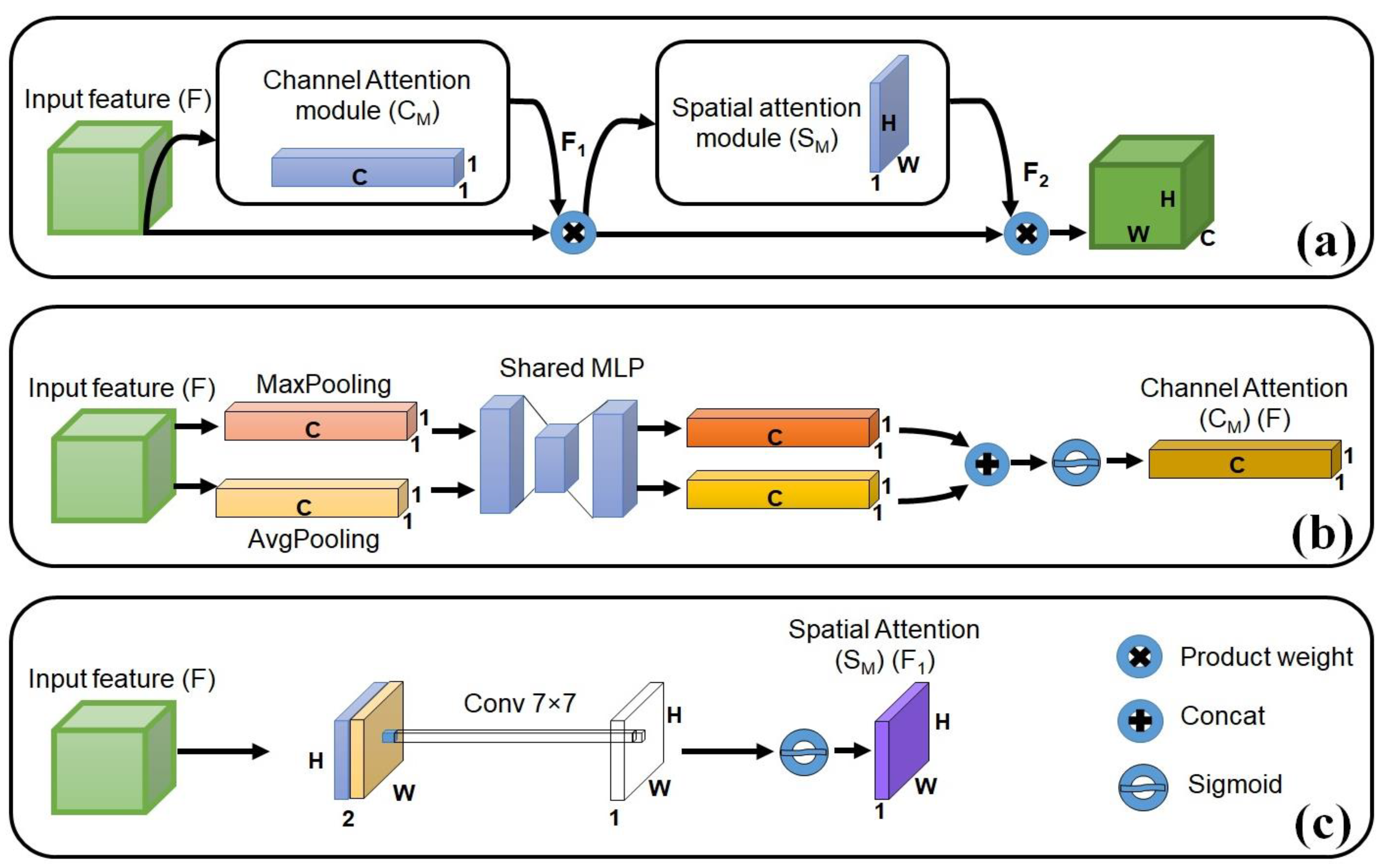

2.3.1. Convolutional Block Attention Module (CBAM)

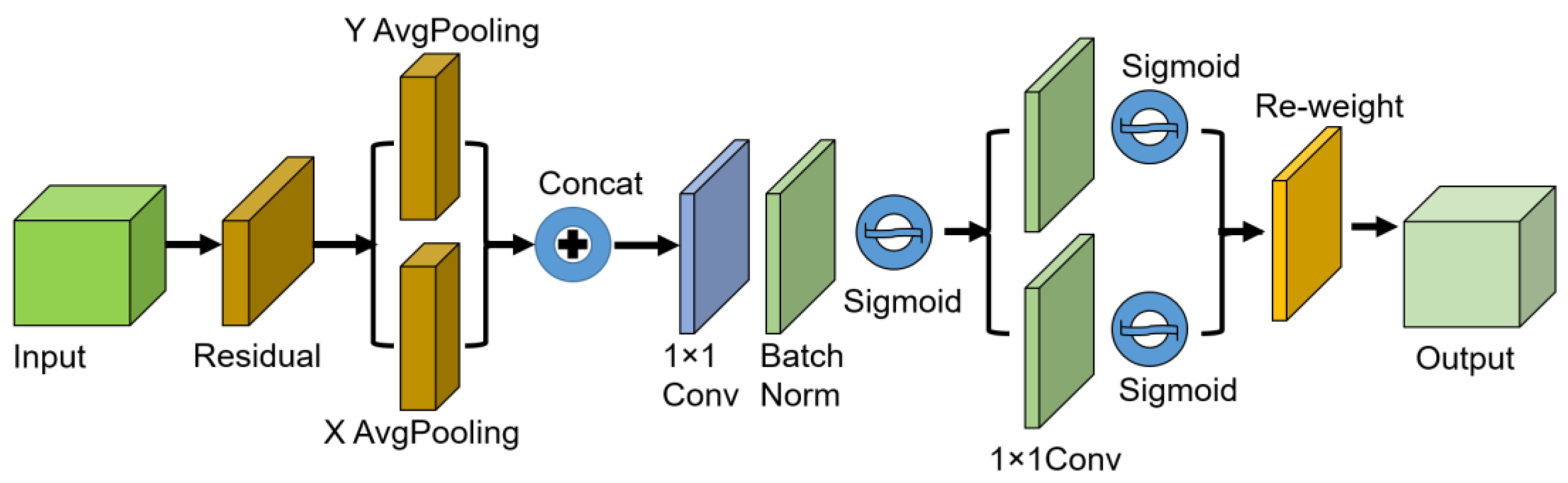
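CBAM applies channel attention followed by spatial attention. The channel weights come from a shared two-layer MLP applied to the average-pooled and max-pooled channel descriptors. The spatial weights come from a 7 × 7 convolution over the channel-wise average and max maps. Each set of weights is passed through a sigmoid. A minimal single-image NumPy forward pass is sketched below under several assumptions: the weights are random and untrained, there are no bias terms, and it uses the reduction ratio r = 16 and the 7 × 7 kernel of the original CBAM paper. The settings used in this study are not given here, and all function names are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(f, w1, w2):
    """f: (C, H, W); shared MLP weights w1: (C//r, C), w2: (C, C//r)."""
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)      # FC -> ReLU -> FC
    avg = f.mean(axis=(1, 2))                     # (C,) average-pooled
    mx = f.max(axis=(1, 2))                       # (C,) max-pooled
    return sigmoid(mlp(avg) + mlp(mx))[:, None, None]   # (C, 1, 1)


def spatial_attention(f, kernel):
    """kernel: (2, k, k) weights of a single-output k x k convolution."""
    pooled = np.stack([f.mean(axis=0), f.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))  # zero padding
    h, w = f.shape[1:]
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)[None, :, :]               # (1, H, W)


def cbam(f, w1, w2, kernel):
    f = f * channel_attention(f, w1, w2)          # refine channels first
    return f * spatial_attention(f, kernel)       # then spatial positions


rng = np.random.default_rng(0)
C, H, W, r = 32, 8, 8, 16
feature = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
kernel = rng.standard_normal((2, 7, 7)) * 0.1
refined = cbam(feature, w1, w2, kernel)
print(refined.shape)
```

Both attention maps lie in (0, 1), so CBAM can only re-weight activations and never amplifies them. The output keeps the input shape, which is why the module can be inserted into an existing backbone without other changes.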

2.3.2. Coordinate Attention (CA)
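Coordinate attention replaces global pooling with two one-dimensional poolings, one along the width and one along the height. As a result, each attention map keeps positional information in one direction while aggregating context along the other. The two pooled descriptors are concatenated and transformed by a shared 1 × 1 convolution and a non-linearity. They are then split again and mapped by two further 1 × 1 convolutions and sigmoids to height-wise and width-wise weights that rescale the input. The sketch below is a minimal NumPy version under these assumptions:

- the weights are random;
- 1 × 1 convolutions are written as matrix products;
- the batch normalisation of the original design is omitted;
- it uses a hard-swish non-linearity, although the activation used in this study is not stated;
- the reduction ratio r = 8 is chosen only for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def h_swish(z):
    # Hard-swish non-linearity: z * ReLU6(z + 3) / 6.
    return z * np.clip(z + 3.0, 0.0, 6.0) / 6.0


def coordinate_attention(x, w1, wh, ww):
    """x: (C, H, W); w1: (C//r, C); wh, ww: (C, C//r)."""
    c, h, w = x.shape
    z_h = x.mean(axis=2)                          # (C, H): pool along width
    z_w = x.mean(axis=1)                          # (C, W): pool along height
    f = h_swish(w1 @ np.concatenate([z_h, z_w], axis=1))  # (C//r, H + W)
    f_h, f_w = f[:, :h], f[:, h:]                 # split the two directions
    g_h = sigmoid(wh @ f_h)                       # (C, H) height-wise weights
    g_w = sigmoid(ww @ f_w)                       # (C, W) width-wise weights
    return x * g_h[:, :, None] * g_w[:, None, :]


rng = np.random.default_rng(1)
C, H, W, r = 32, 6, 10, 8
x = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
wh = rng.standard_normal((C, C // r)) * 0.1
ww = rng.standard_normal((C, C // r)) * 0.1
y = coordinate_attention(x, w1, wh, ww)
print(y.shape)
```

CBAM derives spatial weights from channel-pooled maps with a single convolution. Coordinate attention instead factorises the spatial weights into a row term and a column term, which keeps the extra cost linear in H + W.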

2.4. Evaluation Metrics
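The results are reported as precision, recall, F1 score, accuracy and mAP. Precision, recall and F1 follow from the counts of true positives (TP), false positives (FP) and false negatives (FN). A predicted leaf is commonly counted as a TP when its mask overlaps a ground-truth leaf with a sufficiently high intersection-over-union (IoU). The sketch below shows these standard definitions. The helper names are hypothetical, and the study's exact matching rules are not reproduced here.

```python
import numpy as np


def mask_iou(pred: np.ndarray, gt: np.ndarray) -> float:
    """Intersection-over-union of two boolean masks of the same shape."""
    inter = np.logical_and(pred, gt).sum()
    union = np.logical_or(pred, gt).sum()
    return float(inter / union) if union else 0.0


def precision_recall_f1(tp: int, fp: int, fn: int):
    """Precision = TP/(TP+FP), recall = TP/(TP+FN), F1 = their harmonic mean."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Two 4 x 4 masks that share one row of four pixels.
a = np.zeros((4, 4), dtype=bool)
a[:2] = True
b = np.zeros((4, 4), dtype=bool)
b[1:3] = True
print(mask_iou(a, b))                 # 4 shared pixels / 12 covered pixels
print(precision_recall_f1(9, 1, 2))   # 9 correct, 1 spurious, 2 missed leaves
```

Equivalently, F1 = 2TP / (2TP + FP + FN). For the counts above, this gives 18/21 ≈ 0.857.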

3. Results

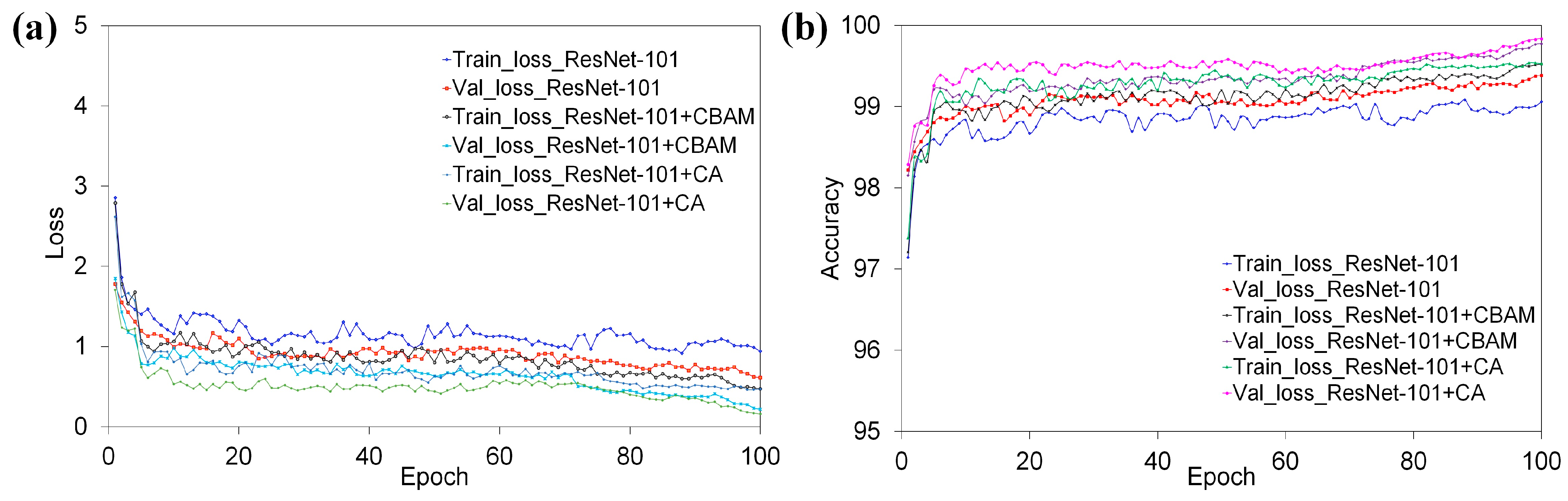

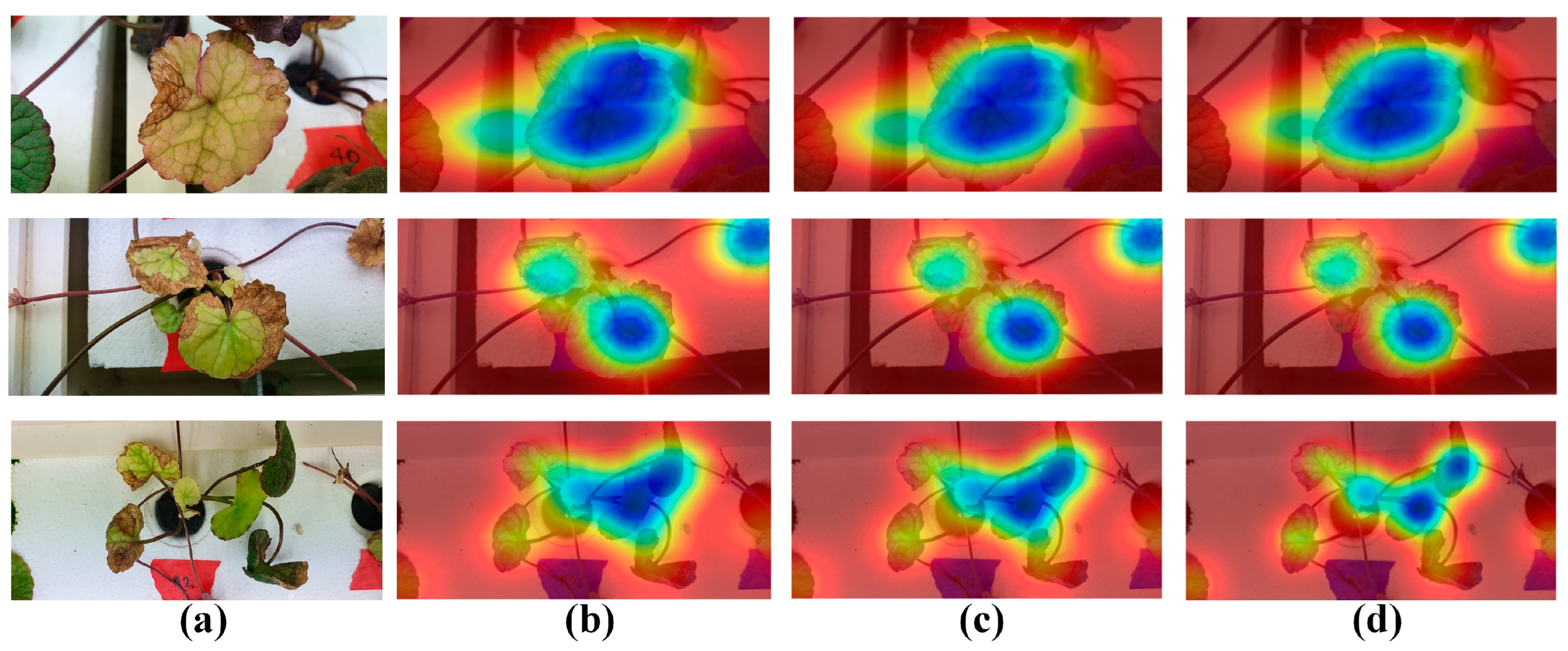

3.1. Trained Mask R-CNN Model

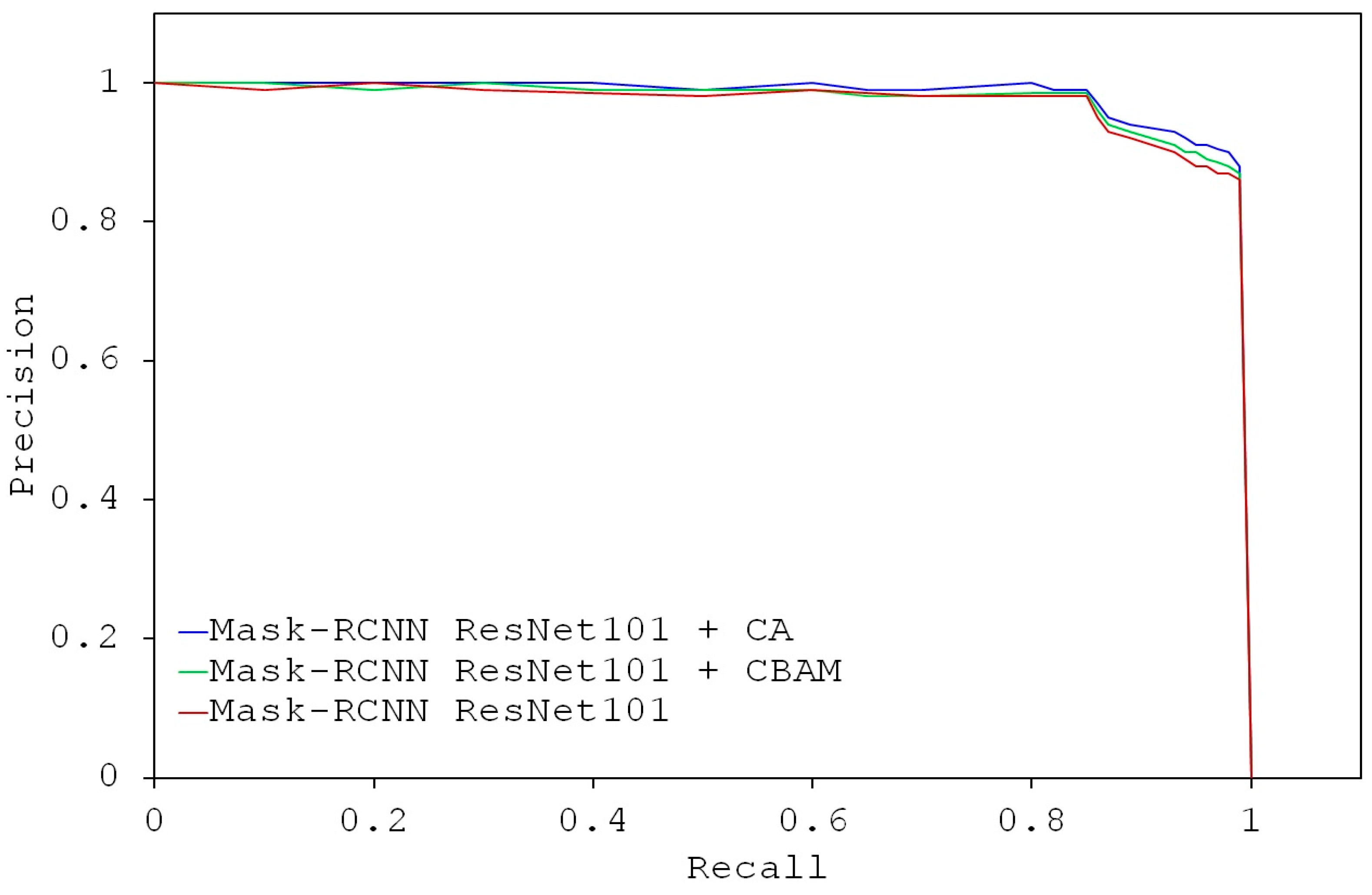

3.2. Performance Comparison of Mask R-CNN Models with Different Attention Mechanisms

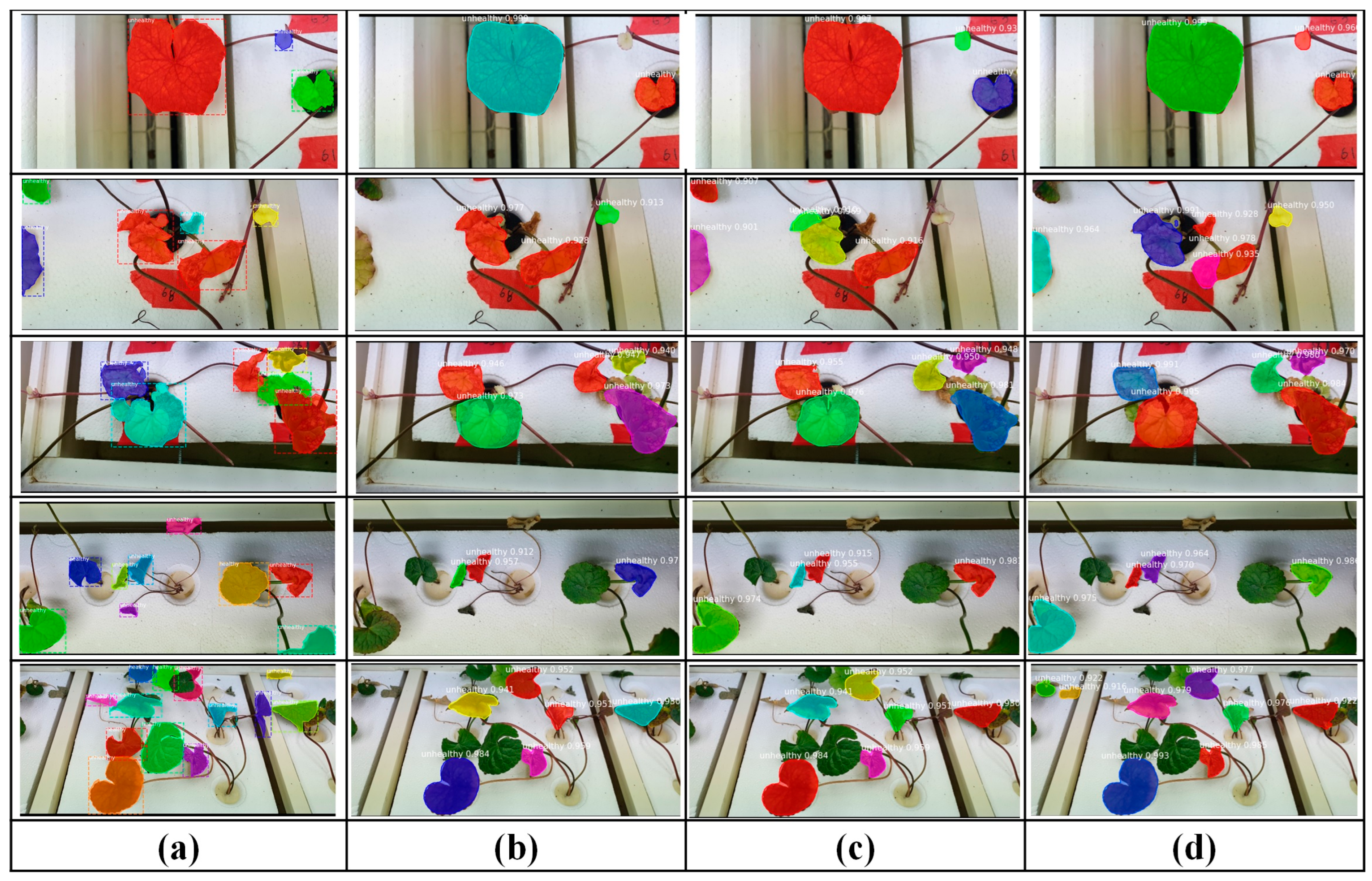

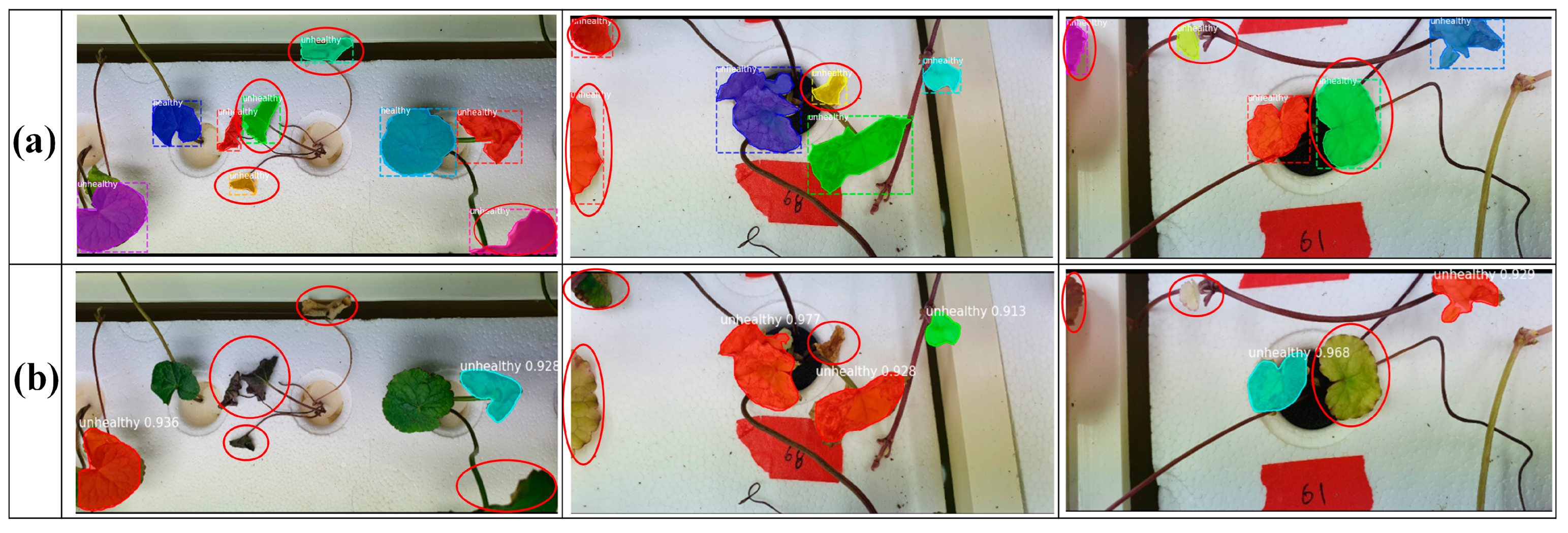

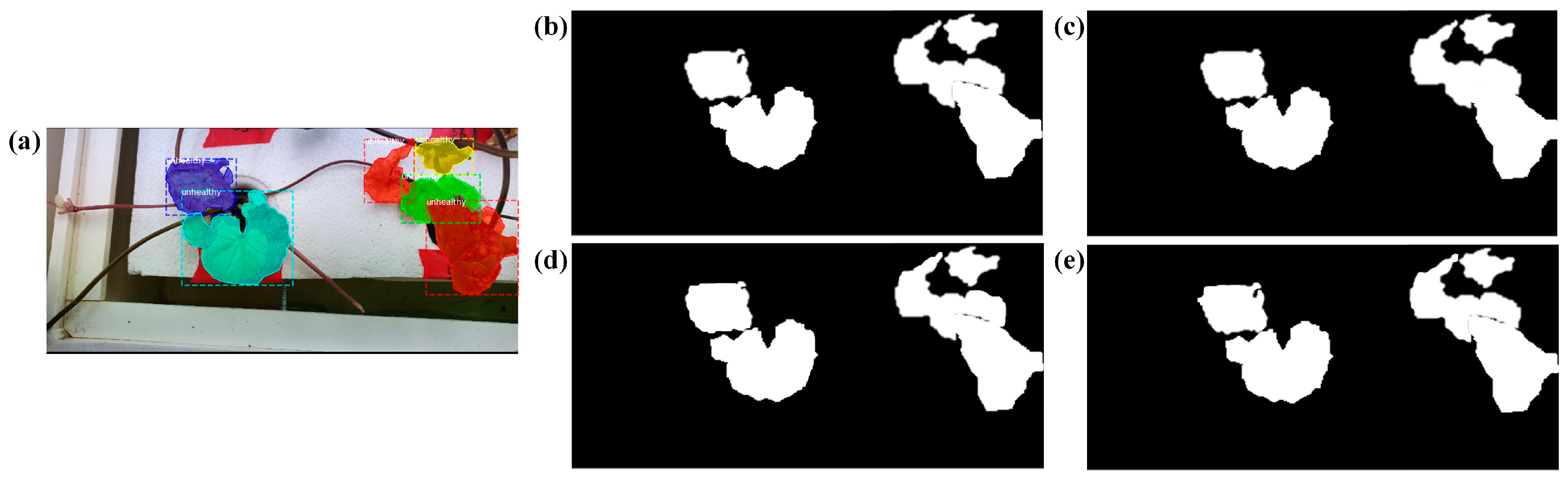

3.3. Defective Leaf Detection

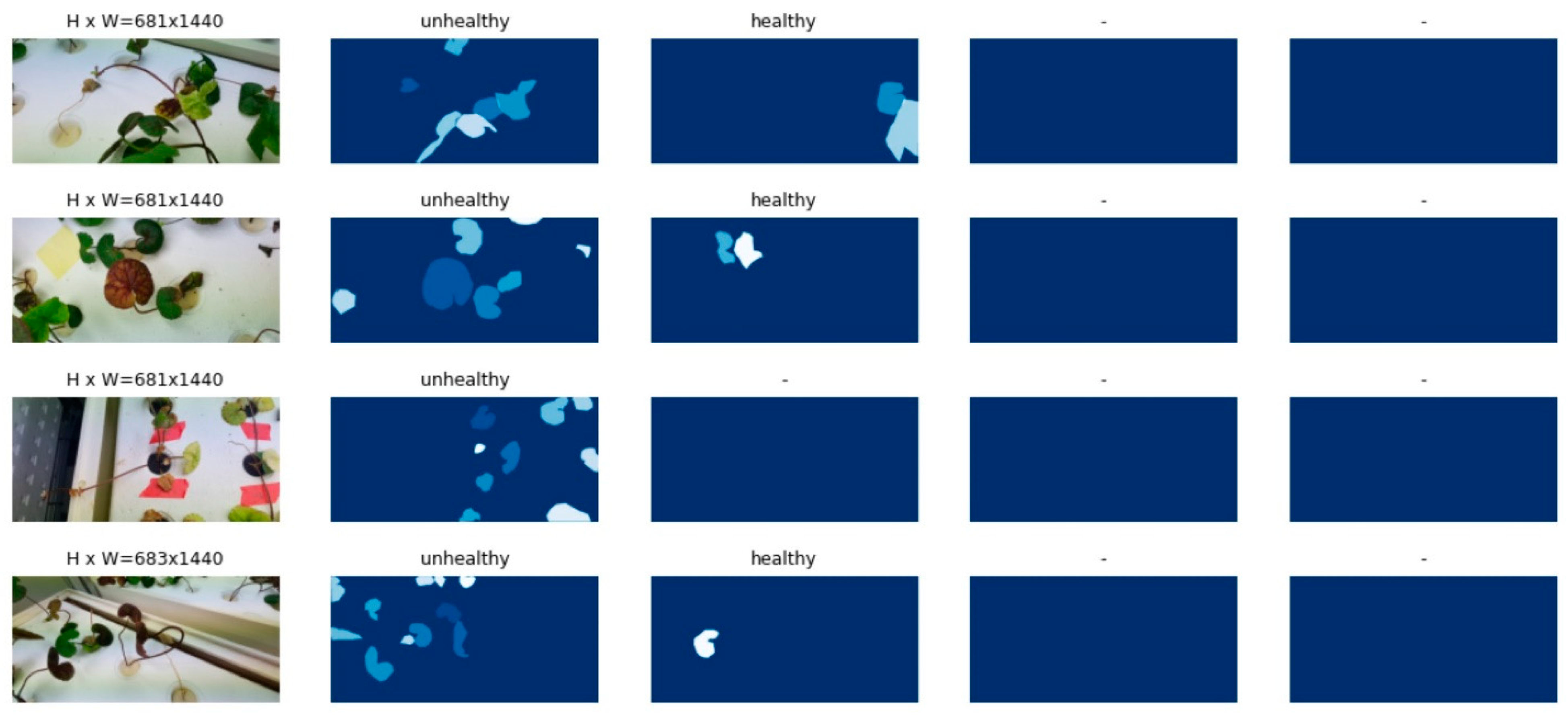

3.4. Visual Segmentation

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Model | mAP | mAP (IoU = 0.75) | Accuracy |
|---|---|---|---|
| Mask R-CNN ResNet-101 | 0.893 | 0.886 | 0.887 |
| Mask R-CNN ResNet-101+CBAM | 0.918 | 0.907 | 0.922 |
| Mask R-CNN ResNet-101+CA | 0.931 | 0.924 | 0.937 |
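The second mAP column in the table above most likely reports mAP at an IoU threshold of 0.75, which is stricter than the common 0.5. Average precision at a single IoU threshold is commonly computed in two steps. First, predictions are matched greedily to ground-truth instances in descending order of confidence. Second, the precision-recall curve is interpolated over all points. The sketch below illustrates this under stated assumptions: it takes a precomputed prediction-by-ground-truth IoU matrix, and `average_precision` is a hypothetical helper rather than the study's evaluation code.

```python
import numpy as np


def average_precision(scores, iou, iou_thr=0.75):
    """scores: (P,) prediction confidences for one class.
    iou: (P, G) IoU between each prediction and each ground-truth instance.
    Returns AP with all-point interpolation of the precision-recall curve."""
    scores = np.asarray(scores, dtype=float)
    iou = np.asarray(iou, dtype=float)
    n_gt = iou.shape[1]
    if n_gt == 0:
        return 0.0
    order = np.argsort(-scores)                   # highest confidence first
    matched = np.zeros(n_gt, dtype=bool)
    tp = np.zeros(len(scores))
    for rank, p in enumerate(order):
        candidates = np.where(~matched & (iou[p] >= iou_thr))[0]
        if candidates.size:                       # best still-unmatched GT
            best = candidates[np.argmax(iou[p, candidates])]
            matched[best] = True
            tp[rank] = 1.0
    cum_tp = np.cumsum(tp)
    recall = cum_tp / n_gt
    precision = cum_tp / np.arange(1, len(scores) + 1)
    # Precision envelope: make precision non-increasing in recall.
    precision = np.maximum.accumulate(precision[::-1])[::-1]
    recall_prev = np.concatenate([[0.0], recall[:-1]])
    return float(np.sum((recall - recall_prev) * precision))


scores = [0.9, 0.8, 0.6]
iou = [[0.7, 0.1],   # top prediction overlaps leaf 0 with IoU 0.7
       [0.7, 0.2],   # duplicate detection of leaf 0
       [0.0, 0.9]]   # good detection of leaf 1
print(average_precision(scores, iou, 0.5), average_precision(scores, iou, 0.75))
```

In this toy case, the same three predictions yield AP = 5/6 at IoU 0.5 but only 1/6 at IoU 0.75, because the top-scoring prediction no longer counts as a match. mAP then averages AP over classes. In COCO-style evaluation, it is also averaged over IoU thresholds from 0.50 to 0.95. Which averaging the study used is not stated in this part of the document.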

| Model | mAP | Accuracy |
|---|---|---|
| Mask R-CNN ResNet-101 | - | - |
| Mask R-CNN ResNet-101+CBAM | 4.8% | 7.3% |
| Mask R-CNN ResNet-101+CA | 5.8% | 8.7% |

| Model | Evaluation Parameter | Average | Best-Fit |
|---|---|---|---|
| Mask R-CNN ResNet-101 | Precision rate | 0.89 | 0.92 |
| | Recall rate | 0.87 | 0.90 |
| | F1 score | 0.89 | 0.91 |
| Mask R-CNN ResNet-101+CBAM | Precision rate | 0.92 | 0.93 |
| | Recall rate | 0.89 | 0.92 |
| | F1 score | 0.90 | 0.93 |
| Mask R-CNN ResNet-101+CA | Precision rate | 0.94 | 0.96 |
| | Recall rate | 0.90 | 0.93 |
| | F1 score | 0.92 | 0.94 |
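The F1 score is the harmonic mean of precision and recall, F1 = 2PR / (P + R). Recomputing it from the tabulated precision and recall gives a quick consistency check. Four of the six tabulated F1 values are reproduced exactly. The other two, the baseline average and the CBAM best-fit value, differ by 0.01. Such small differences are expected when P and R are rounded to two decimals. They are also expected when F1 is averaged per image rather than computed from averaged P and R, so they do not by themselves indicate an error. The dictionary below simply copies the table.

```python
# (precision, recall, F1) copied from the table above.
reported = {
    ("Mask R-CNN ResNet-101", "average"): (0.89, 0.87, 0.89),
    ("Mask R-CNN ResNet-101", "best-fit"): (0.92, 0.90, 0.91),
    ("Mask R-CNN ResNet-101+CBAM", "average"): (0.92, 0.89, 0.90),
    ("Mask R-CNN ResNet-101+CBAM", "best-fit"): (0.93, 0.92, 0.93),
    ("Mask R-CNN ResNet-101+CA", "average"): (0.94, 0.90, 0.92),
    ("Mask R-CNN ResNet-101+CA", "best-fit"): (0.96, 0.93, 0.94),
}


def f1(precision: float, recall: float) -> float:
    """F1 score: harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


for (model, kind), (p, r, f1_table) in reported.items():
    f1_calc = round(f1(p, r), 2)
    flag = "" if f1_calc == f1_table else "  (mismatch)"
    print(f"{model:28s} {kind:8s} recomputed {f1_calc:.2f}, table {f1_table:.2f}{flag}")
```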

| Model | mAP | Accuracy |
|---|---|---|
| BlendMask | 0.821 | 0.813 |
| SOLOv2 | 0.832 | 0.834 |
| YOLOv3 | 0.844 | 0.826 |
| Mask R-CNN ResNet-50 | 0.875 | 0.864 |
| Mask R-CNN ResNet-101 (this study) | 0.893 | 0.887 |
| Mask R-CNN ResNet-101+CBAM (this study) | 0.918 | 0.922 |
| Mask R-CNN ResNet-101+CA (this study) | 0.931 | 0.937 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chowdhury, M.; Reza, M.N.; Jin, H.; Islam, S.; Lee, G.-J.; Chung, S.-O. Defective Pennywort Leaf Detection Using Machine Vision and Mask R-CNN Model. Agronomy 2024, 14, 2313. https://doi.org/10.3390/agronomy14102313

Chowdhury M, Reza MN, Jin H, Islam S, Lee G-J, Chung S-O. Defective Pennywort Leaf Detection Using Machine Vision and Mask R-CNN Model. Agronomy. 2024; 14(10):2313. https://doi.org/10.3390/agronomy14102313

Chicago/Turabian Style: Chowdhury, Milon, Md Nasim Reza, Hongbin Jin, Sumaiya Islam, Geung-Joo Lee, and Sun-Ok Chung. 2024. "Defective Pennywort Leaf Detection Using Machine Vision and Mask R-CNN Model" Agronomy 14, no. 10: 2313. https://doi.org/10.3390/agronomy14102313

APA Style: Chowdhury, M., Reza, M. N., Jin, H., Islam, S., Lee, G.-J., & Chung, S.-O. (2024). Defective Pennywort Leaf Detection Using Machine Vision and Mask R-CNN Model. Agronomy, 14(10), 2313. https://doi.org/10.3390/agronomy14102313