Design and Test of Intelligent Farm Machinery Operation Control Platform for Unmanned Farms

Abstract

1. Introduction

2. Materials and Methods

2.1. Technical Routes

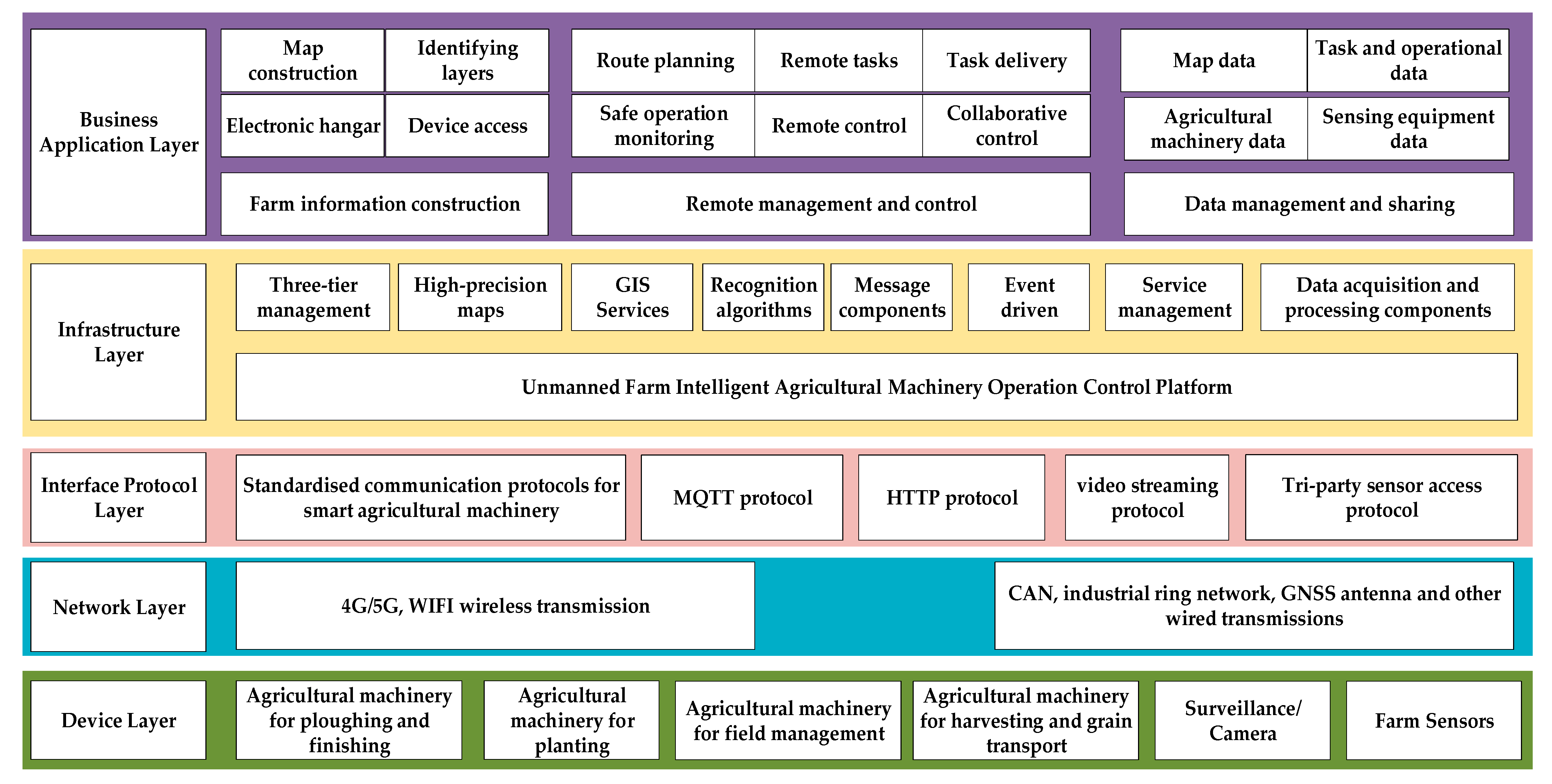

2.2. Platform Infrastructure

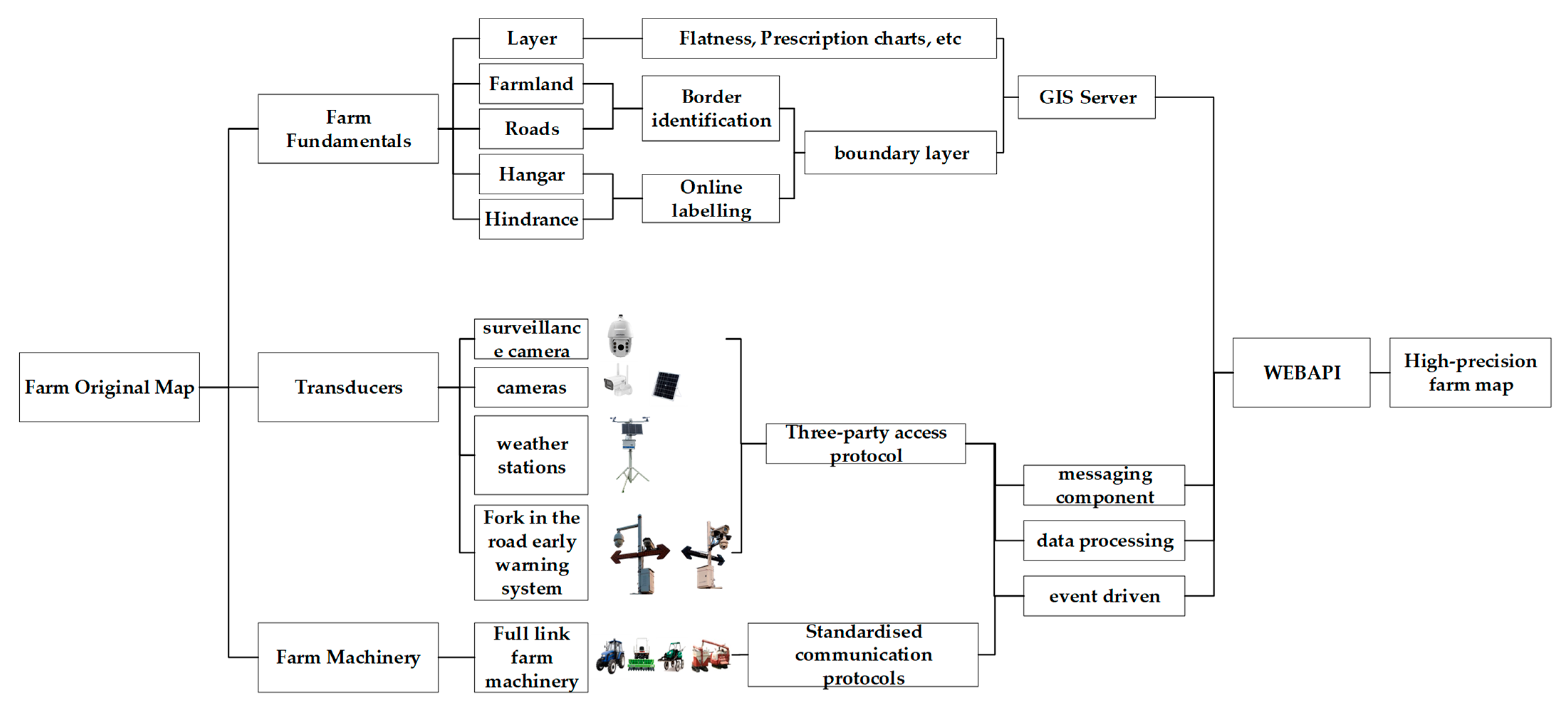

2.3. High-Precision Map Construction

2.3.1. Farm Image Acquisition and Original Map Construction

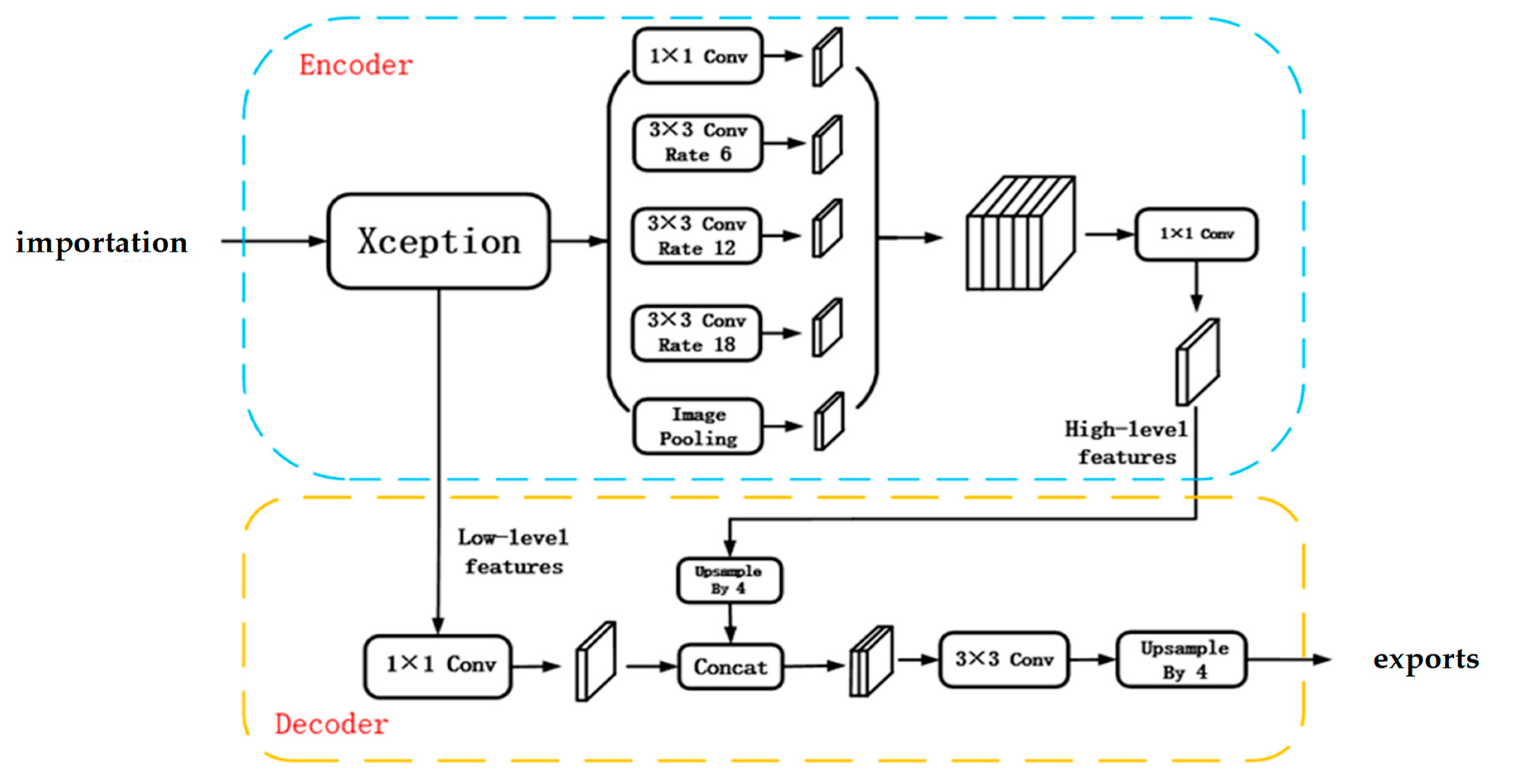

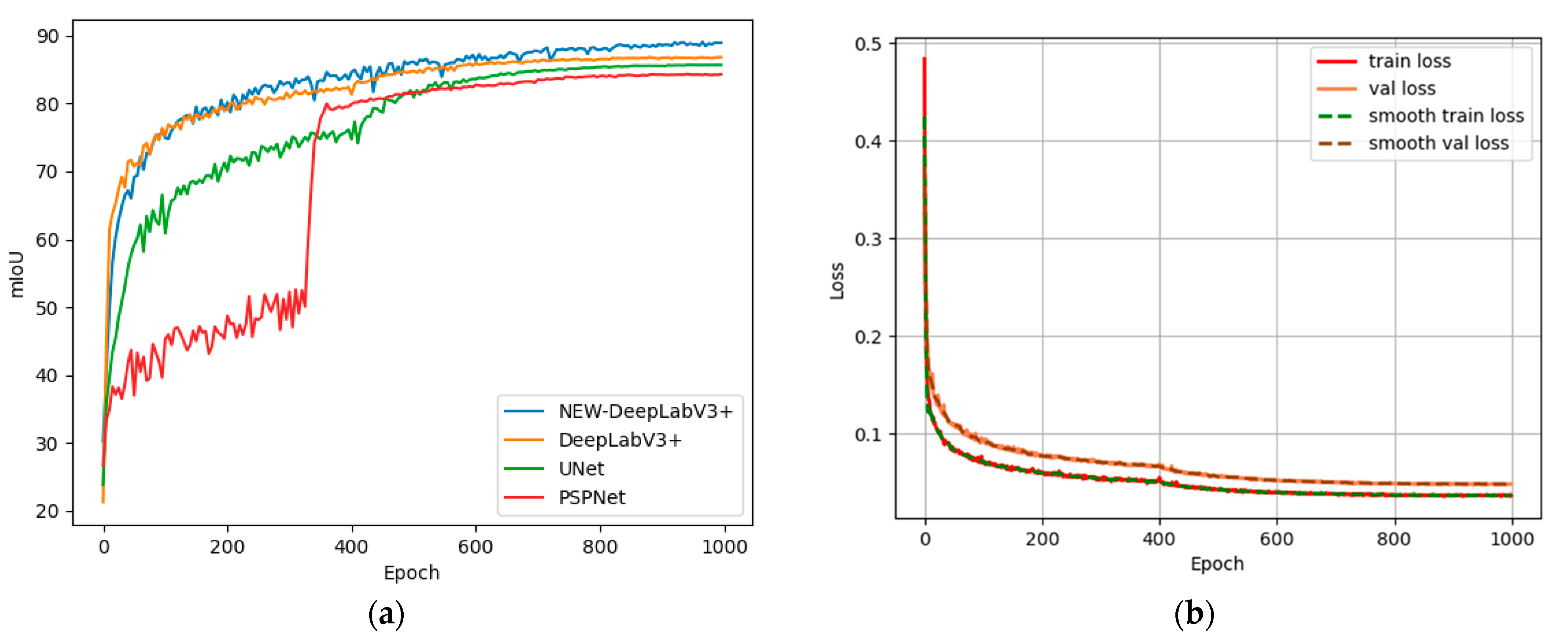

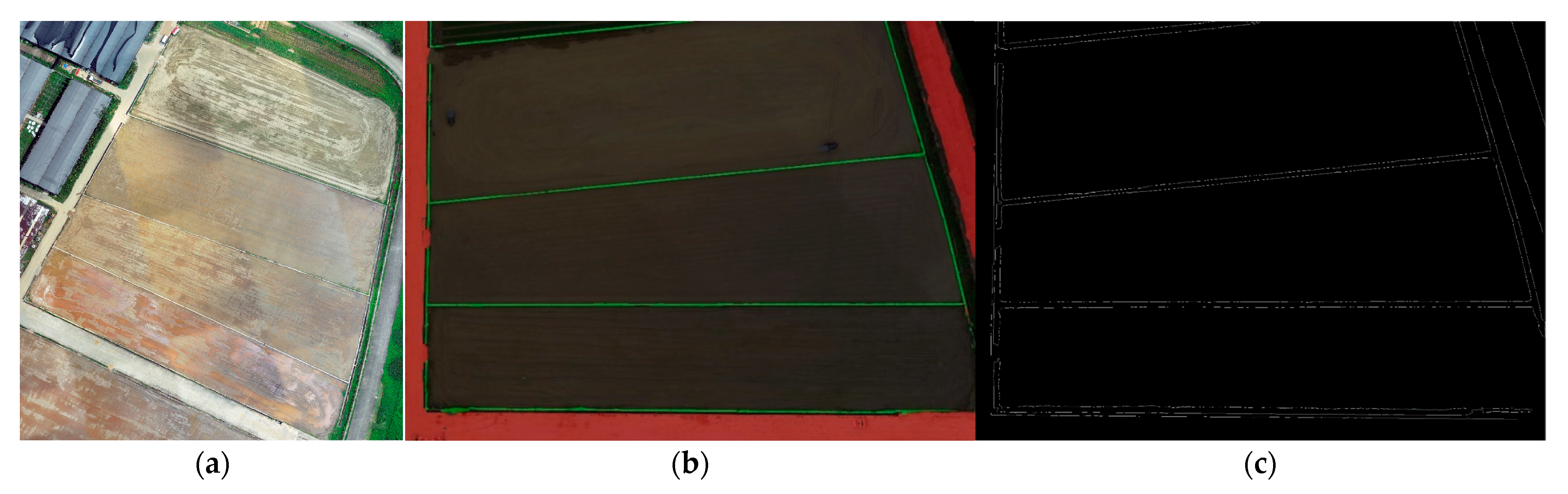

2.3.2. Identification of Field and Road Boundaries

- (1) Replacement of the backbone network
- (2) Introduction of the CBAM attention mechanism
- (3) Improvement of ASPP
- (4) Hybrid loss function
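The list names a hybrid loss function but does not spell out its terms here. A common choice for thin-structure segmentation such as field ridges pairs pixel-wise cross-entropy with Dice loss. The sketch below assumes that pairing and an equal weight `alpha = 0.5`, neither of which is confirmed by the text, and uses plain Python lists in place of tensors.

```python
import math
from typing import Sequence

EPS = 1e-7  # guards against log(0) and division by zero


def cross_entropy(probs: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Mean pixel-wise cross-entropy; probs[i] is the softmax output for pixel i."""
    total = 0.0
    for p, y in zip(probs, labels):
        total -= math.log(max(p[y], EPS))
    return total / len(labels)


def dice_loss(probs: Sequence[Sequence[float]], labels: Sequence[int], num_classes: int) -> float:
    """1 minus the soft Dice coefficient, averaged over classes."""
    dice_sum = 0.0
    for c in range(num_classes):
        intersection = sum(p[c] for p, y in zip(probs, labels) if y == c)
        predicted = sum(p[c] for p in probs)
        actual = sum(1 for y in labels if y == c)
        dice_sum += (2.0 * intersection + EPS) / (predicted + actual + EPS)
    return 1.0 - dice_sum / num_classes


def hybrid_loss(probs, labels, num_classes: int, alpha: float = 0.5) -> float:
    """alpha * cross-entropy + (1 - alpha) * Dice; alpha = 0.5 is an assumed weight."""
    ce = cross_entropy(probs, labels)
    dice = dice_loss(probs, labels, num_classes)
    return alpha * ce + (1.0 - alpha) * dice


# Toy example: 4 pixels, 3 classes (e.g. road, concrete ridge, earth ridge).
labels = [0, 1, 2, 2]
confident = [[0.9, 0.05, 0.05], [0.1, 0.8, 0.1], [0.05, 0.05, 0.9], [0.1, 0.1, 0.8]]
uncertain = [[0.4, 0.3, 0.3], [0.3, 0.4, 0.3], [0.3, 0.3, 0.4], [0.4, 0.3, 0.3]]
print(hybrid_loss(confident, labels, 3) < hybrid_loss(uncertain, labels, 3))  # True
```

In a training framework the same expression would be computed on batched softmax tensors; the Dice term helps with class imbalance, which matters when ridge pixels are a small share of each image block.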

2.3.3. Farm Information Map Integration

2.4. Remote Management and Control

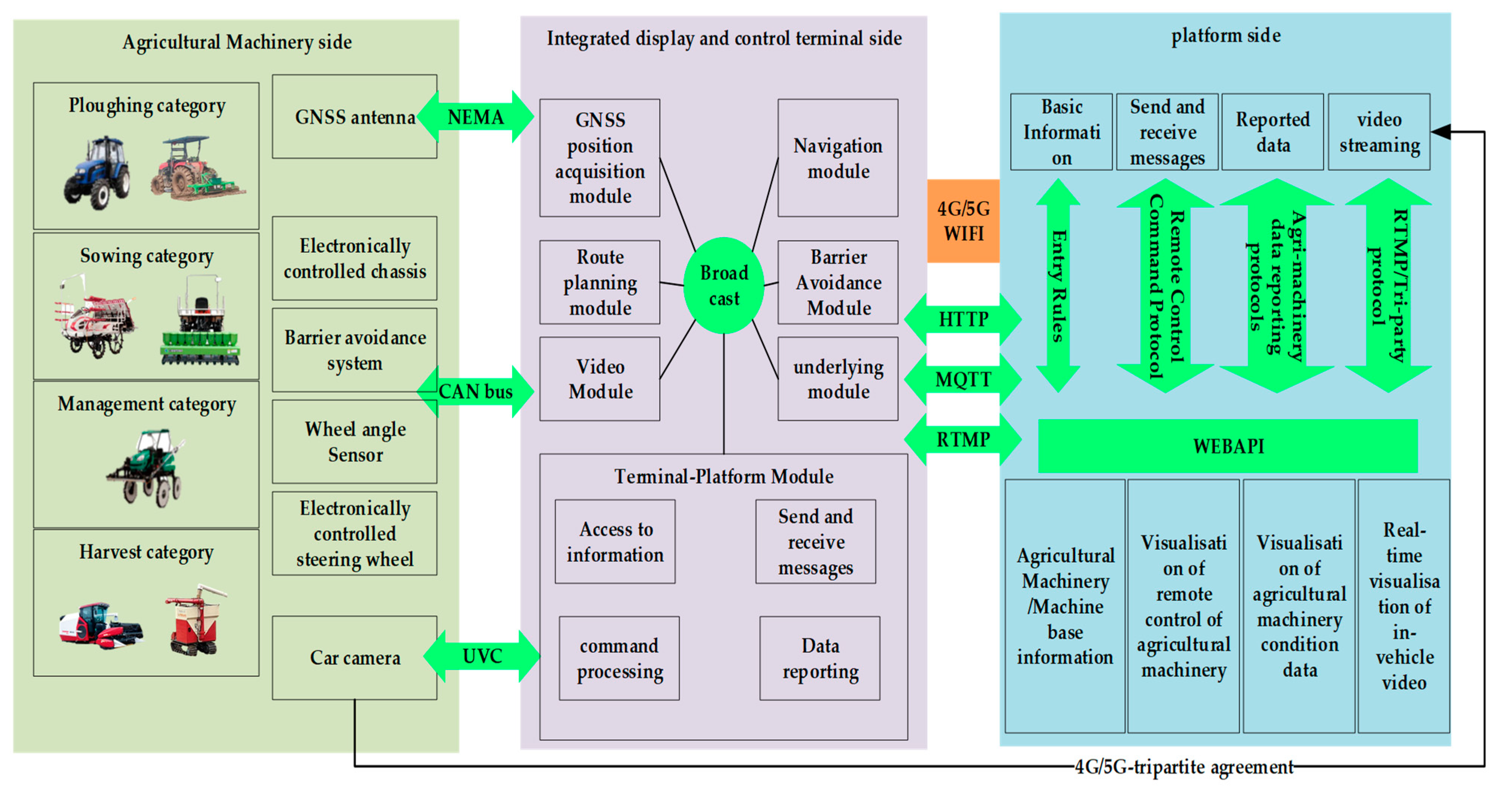

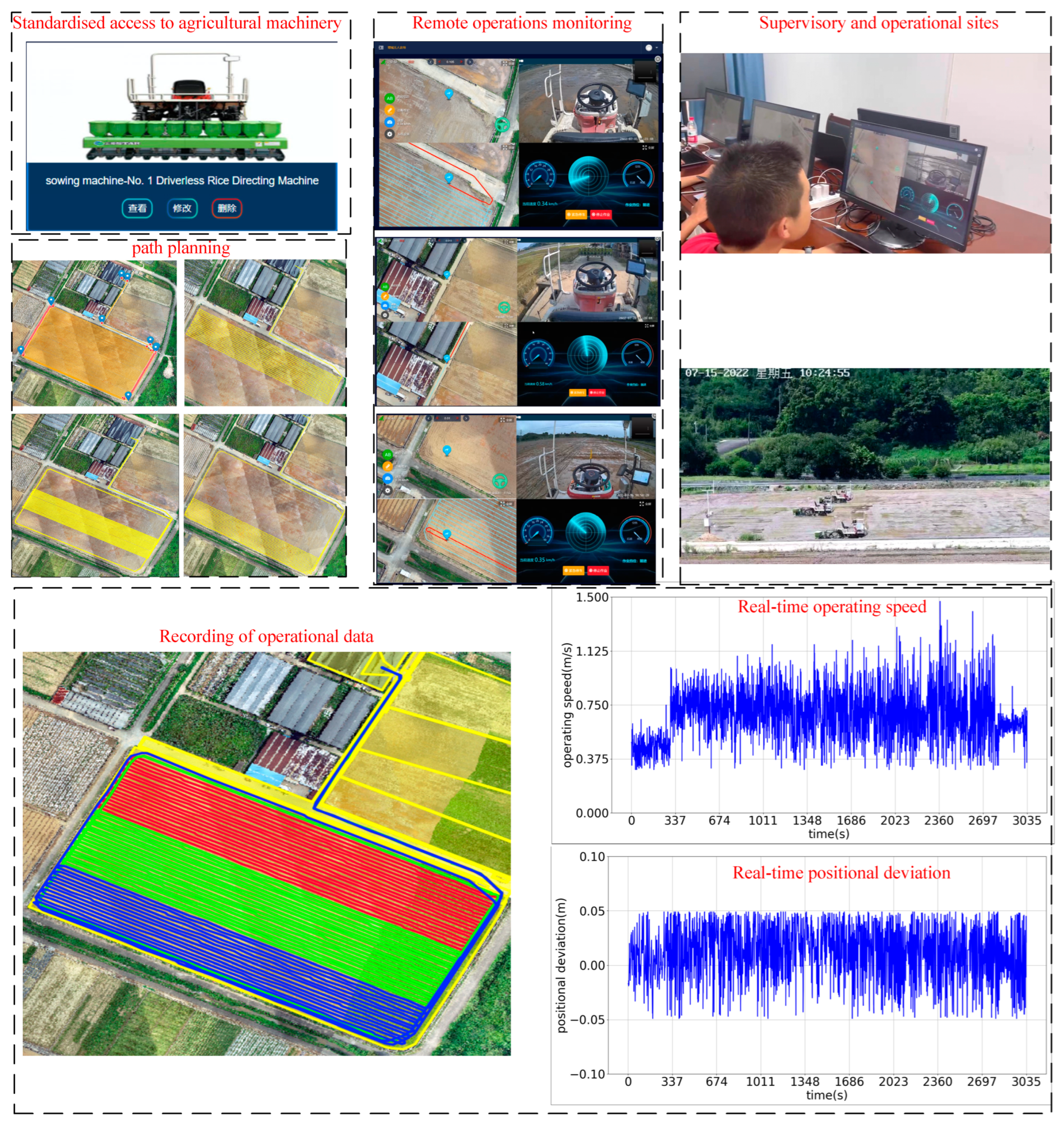

2.4.1. Standardized Access for Smart Farm Machinery

2.4.2. Remote Management and Control Model

- (1) Path planning
- (2) Remote tasks
- (3) Remote control
- (4) Safety system

2.4.3. Multi-Agricultural Machinery Management and Control

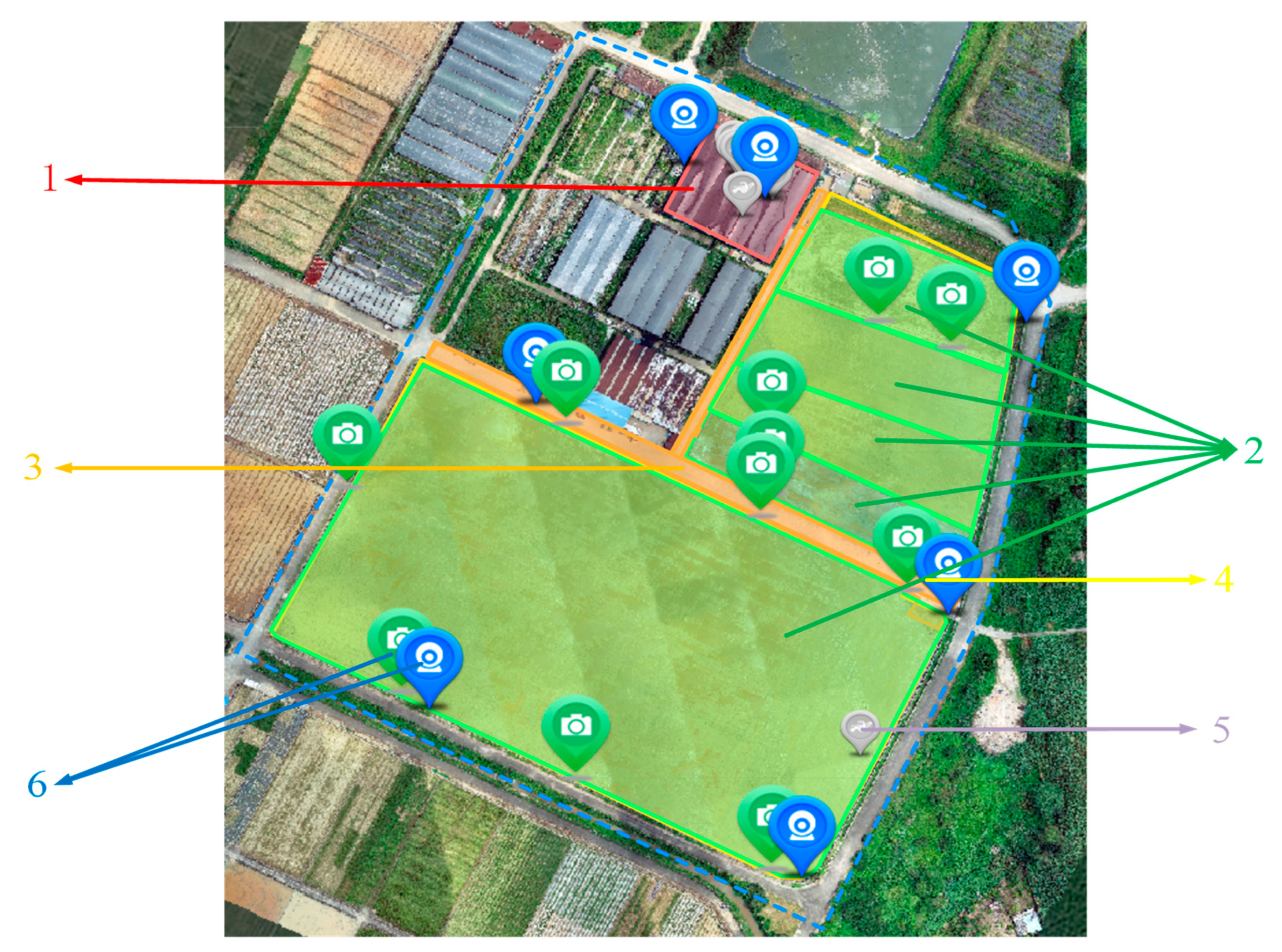

2.5. Experimental Area and Materials

- (1) High-precision map construction test materials
- (2) Remote control test materials

2.6. Experimental Design

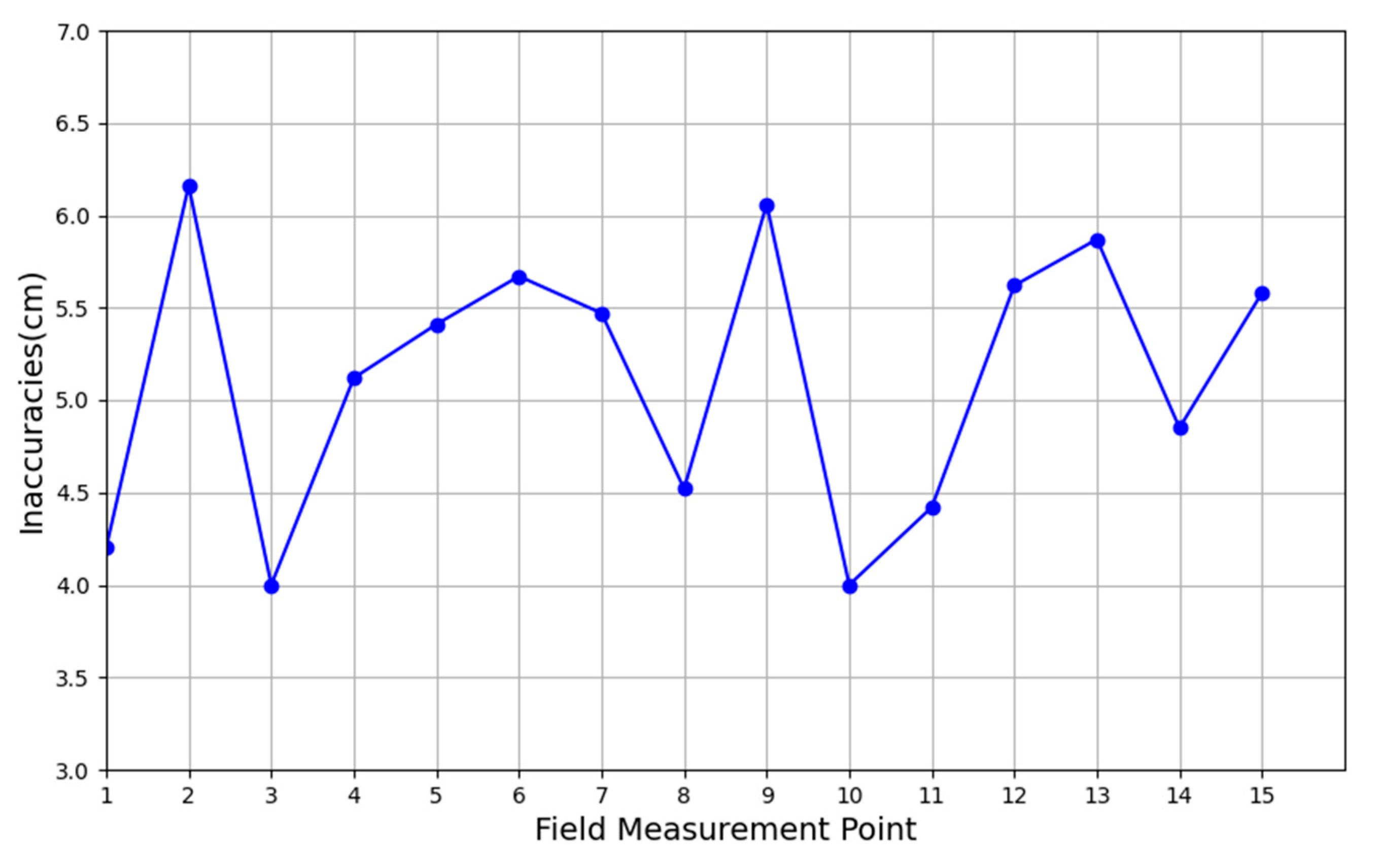

2.6.1. High-Precision Map Construction Test

2.6.2. Remote Control Experiment

3. Results

3.1. High-Precision Map Construction Results

3.2. Remote Management Test Results

4. Discussion

4.1. Analysis of Results

4.2. Platform Comparison

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Code | Meaning |
|---|---|
| 1 | Self-inspection of operations |
| 2 | Execution |
| 3 | Emergency stop |
| 4 | Stopping the job |
| 5 | Setting initial master–slave job parameters |
| 6 | Job delivery |
| 7 | Stop navigation |
| 8 | Start navigation |
| 9 | One-touch ignition |
| 10 | One-touch ignition off |
| 11 | Master notifies slave of arrival at the designated point |
| 12 | Slave notifies master of arrival at the designated point |
| 13 | Master notifies slave to return |
| 14 | Apparatus start |
| 15 | Machine stop |
| 16 | Emergency stop cancellation |
| 17 | Machine height adjustment |
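The table fixes the command vocabulary but not its wire format. As an illustration only, the sketch maps the numbers onto a Python `IntEnum` (the member names are hypothetical renderings of the Meaning column) and serializes a command as JSON, the kind of payload that could be published over MQTT. The field names `machine_id`, `cmd`, and `params` and the `height_cm` parameter are assumptions.

```python
import json
from enum import IntEnum
from typing import Optional, Tuple


class Command(IntEnum):
    """Command codes 1-17; names paraphrase the table's Meaning column."""
    SELF_INSPECTION = 1
    EXECUTION = 2
    EMERGENCY_STOP = 3
    STOP_JOB = 4
    SET_MASTER_SLAVE_PARAMS = 5
    JOB_DELIVERY = 6
    STOP_NAVIGATION = 7
    START_NAVIGATION = 8
    IGNITION_ON = 9
    IGNITION_OFF = 10
    MASTER_ARRIVED = 11
    SLAVE_ARRIVED = 12
    MASTER_ORDERS_RETURN = 13
    APPARATUS_START = 14
    MACHINE_STOP = 15
    CANCEL_EMERGENCY_STOP = 16
    HEIGHT_ADJUSTMENT = 17


def encode_command(machine_id: str, cmd: Command, params: Optional[dict] = None) -> str:
    """Serialize a command as JSON; the field names are hypothetical."""
    return json.dumps({"machine_id": machine_id, "cmd": int(cmd), "params": params or {}})


def decode_command(payload: str) -> Tuple[str, Command, dict]:
    """Parse a payload; Command(...) raises ValueError for an unknown code."""
    msg = json.loads(payload)
    return msg["machine_id"], Command(msg["cmd"]), msg["params"]


payload = encode_command("vehicle-1", Command.HEIGHT_ADJUSTMENT, {"height_cm": 15})
print(decode_command(payload))
```

Decoding through the enum rejects unknown codes, a cheap first check before a command reaches the machine's controller.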

| Procedure | Resource Requirement | Parameters/Versions | Function |
|---|---|---|---|
| Farm image acquisition and original map construction | DJI Phantom 4 RTK drone with remote controller | Camera lens: FOV 84°, 8.8 mm/24 mm; image resolution: 4864 × 3648 (4:3), 5472 × 3648 (3:2); photo format: JPEG; maximum flight speed: 50 km/h (positioning mode), 58 km/h (attitude mode); positioning accuracy: vertical 1.5 cm + 1 ppm (RMS), horizontal 1 cm + 1 ppm (RMS) | Acquisition of farm image data |
| | Mapping software and map servers | DJI Terra (version 3.6.6); ArcGIS Server (version 10.2) | Image stitching and map services |
| Identification of field and road boundaries | Farm map image dataset | Small image blocks of 512 × 512 pixels | Provide samples for model training |
| | Earth ridge, concrete ridge, and road boundary datasets | Label maps generated with Labelme annotation | Provide boundary datasets for model training |
| | Model training host | CPU: Intel Core i9-10980XE; GPU: NVIDIA RTX 3090 AERO; RAM: 32 GB; CUDA: 11.3; operating system: Ubuntu 20.04 | Provide the environment for model training and inference |
| Farm information map integration | WebGIS services | OpenLayers (version 7.4.0); Leaflet (version 1.9.4); ArcGIS API for JavaScript (version 3.17) | Front-end maps, layers, and function calls |
| | Farm element layers | Vector layers in TIF, SHP, and other formats | Visual annotation of different types of geographic information on the farm |
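The dataset row states that the stitched farm imagery was cut into 512 × 512 pixel blocks but not how image borders were handled. A minimal sketch, assuming the last row and column of tiles are shifted inward so every block is full-size (padding the border would be an equally valid choice); the function names are hypothetical:

```python
from typing import Iterator, List, Tuple


def _tile_starts(length: int, tile: int) -> List[int]:
    """Start offsets along one axis; the last tile is shifted back to end at the border."""
    if length < tile:
        raise ValueError("image side is smaller than the tile size")
    starts = list(range(0, length - tile + 1, tile))
    if starts[-1] + tile < length:
        starts.append(length - tile)  # overlapping final tile flush with the edge
    return starts


def tile_windows(width: int, height: int, tile: int = 512) -> Iterator[Tuple[int, int, int, int]]:
    """Yield (x, y, w, h) pixel windows that cover a width x height orthomosaic."""
    for y in _tile_starts(height, tile):
        for x in _tile_starts(width, tile):
            yield x, y, tile, tile


print(len(list(tile_windows(1200, 1000))))  # 6
```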

| Parameter | Intelligent RTK i70 |
|---|---|
| Data update rate | 1 Hz, 2 Hz, 5 Hz, 10 Hz |
| Communication interfaces | UHF antenna, RS232, USB, and others |
| Static accuracy | Horizontal: 2.5 mm + 1 ppm; vertical: 5.0 mm + 1 ppm |
| Dynamic accuracy | Horizontal: 8 mm + 1 ppm; vertical: 15 mm + 1 ppm |
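Both the drone and the i70 receiver quote accuracy as "a + b ppm", where the ppm term scales with the distance between rover and base station: 1 ppm adds 1 mm per kilometre of baseline. A small helper makes that arithmetic explicit; the 5 km baseline is an arbitrary example, not a value from the experiments.

```python
def rtk_accuracy_mm(base_mm: float, ppm: float, baseline_m: float) -> float:
    """Nominal 'a mm + b ppm' accuracy for a given rover-to-base distance.

    1 ppm of the baseline is 1e-6 * baseline_m metres, i.e. baseline_m / 1000 mm.
    """
    return base_mm + ppm * baseline_m / 1000.0


# Example baseline of 5 km (arbitrary, not from the test).
print(rtk_accuracy_mm(2.5, 1.0, 5000))  # static horizontal: 7.5
print(rtk_accuracy_mm(8.0, 1.0, 5000))  # dynamic horizontal: 13.0
```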

| Category | Data |
|---|---|
| AM | 1.32 m |
| Algorithm parameters | Path type: full-coverage proctor work; turning mode: bulb-shaped; whether to seal the circle: yes; whether to group: yes, 3 groups |
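The path-planning parameters describe full-coverage, back-and-forth field work. A minimal sketch of the straight passes for a rectangular field, assuming that the 1.32 m listed as AM is the working width and that the field is axis-aligned; the bulb-shaped headland turns, the sealing circuit, and the 3-way grouping are not modeled, and the function name is hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def boustrophedon_passes(field_width: float, field_length: float,
                         swath: float = 1.32) -> List[Tuple[Point, Point]]:
    """Back-and-forth passes covering the rectangle [0, field_width] x [0, field_length].

    Each pass is a (start, end) pair on a centerline parallel to the y axis;
    successive passes alternate direction, so the machine turns at the headland.
    """
    n = math.ceil(field_width / swath - 1e-9)  # passes needed to span the width
    passes = []
    for i in range(n):
        # clamp the last centerline so the implement stays inside the boundary
        x = min(swath / 2 + i * swath, field_width - swath / 2)
        start, end = (x, 0.0), (x, field_length)
        passes.append((start, end) if i % 2 == 0 else (end, start))
    return passes


for start, end in boustrophedon_passes(5.0, 100.0):
    print(start, "->", end)
```

A planner along these lines would then join consecutive pass ends with turn segments of the chosen shape.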

| Algorithm Model | Roads/% | Concrete Ridges/% | Earth Ridges/% | MPA/% |
|---|---|---|---|---|
| DeepLabV3+ | 96.13 | 88.62 | 84.65 | 91.51 |
| Improved DeepLabV3+ | 98.36 | 93.71 | 90.45 | 95.31 |
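Mean pixel accuracy (MPA) averages the per-class pixel accuracies taken from a confusion matrix. Averaging only the three listed class columns gives 89.80 and 94.17 rather than 91.51 and 95.31, so the reported MPA presumably covers at least one further class (for example background). The sketch below shows the metric itself on a toy matrix, not the authors' data:

```python
import math
from typing import List, Sequence


def class_pixel_accuracy(cm: Sequence[Sequence[int]]) -> List[float]:
    """Per-class pixel accuracy: cm[i][i] / sum(cm[i]).

    cm[i][j] counts pixels whose true class is i and predicted class is j.
    """
    return [row[i] / sum(row) if sum(row) else math.nan for i, row in enumerate(cm)]


def mean_pixel_accuracy(cm: Sequence[Sequence[int]]) -> float:
    """Average of per-class accuracies, skipping classes with no ground-truth pixels."""
    accs = [a for a in class_pixel_accuracy(cm) if not math.isnan(a)]
    return sum(accs) / len(accs)


# Toy 3-class confusion matrix (rows: ground truth, columns: prediction).
cm = [[90, 10, 0],
      [5, 80, 15],
      [0, 20, 80]]
print(class_pixel_accuracy(cm), mean_pixel_accuracy(cm))
```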

| Point | RTK Coordinates (x, y)/m | High-Precision Map Coordinates (x, y)/m | Error/cm |
|---|---|---|---|
| 1 | 2571374.215, 462724.598 | 2571374.192, 462724.618 | 3.05 |
| 2 | 2571269.245, 462938.651 | 2571269.221, 462938.668 | 2.94 |
| 3 | 2571381.101, 462990.638 | 2571381.081, 462990.656 | 2.69 |
| 4 | 2571489.807, 462785.193 | 2571489.785, 462785.214 | 3.04 |
| 5 | 2571450.663, 462886.902 | 2571450.638, 462886.922 | 3.20 |
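Recomputing the error column as the horizontal Euclidean distance between each RTK fix and the corresponding map coordinate, treating the coordinates as plane coordinates in metres, reproduces all five reported values, which suggests this is how the error was defined:

```python
import math
from typing import Tuple

Point = Tuple[float, float]

# (RTK coordinates, high-precision map coordinates) in metres, copied from the table
CHECKPOINTS = [
    ((2571374.215, 462724.598), (2571374.192, 462724.618)),
    ((2571269.245, 462938.651), (2571269.221, 462938.668)),
    ((2571381.101, 462990.638), (2571381.081, 462990.656)),
    ((2571489.807, 462785.193), (2571489.785, 462785.214)),
    ((2571450.663, 462886.902), (2571450.638, 462886.922)),
]


def planar_error_cm(measured: Point, mapped: Point) -> float:
    """Horizontal Euclidean distance between two plane coordinates, in centimetres."""
    return math.hypot(measured[0] - mapped[0], measured[1] - mapped[1]) * 100.0


errors = [planar_error_cm(rtk, hd) for rtk, hd in CHECKPOINTS]
print([round(e, 2) for e in errors])  # [3.05, 2.94, 2.69, 3.04, 3.2]
print(round(sum(errors) / len(errors), 2))
```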

| Reporting Frequency/Hz | Received Data Volume/Packets | Packet Loss Rate/% | Data Correctness/% | Real-Time/s |
|---|---|---|---|---|
| 0.5 | 100 | 0 | 100 | 2 |
| 1 | 100 | 0 | 100 | 1 |
| 2 | 95 | 5 | 96 | 0.5 |
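The Real-Time column equals the reporting interval 1/f, and the loss column equals (sent - received) / sent if each run sent 100 reports. The sketch below assumes that count; the sequence-number variant shows how a receiver could measure loss without knowing it in advance, using a hypothetical per-report counter.

```python
def packet_loss_rate(sent: int, received: int) -> float:
    """Share of reports that never arrived, in percent."""
    return (sent - received) * 100.0 / sent


def report_interval_s(freq_hz: float) -> float:
    """Time between consecutive reports at a given reporting frequency."""
    return 1.0 / freq_hz


def loss_from_sequence(seq_numbers, sent: int) -> float:
    """Loss rate when each report carries an incrementing sequence number.

    Duplicates are ignored; the sequence field itself is a hypothetical addition.
    """
    return packet_loss_rate(sent, len(set(seq_numbers)))


# Assumes 100 reports were sent per run, consistent with the table.
for freq, received in [(0.5, 100), (1, 100), (2, 95)]:
    print(freq, report_interval_s(freq), packet_loss_rate(100, received))
```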

| Agricultural Machinery | Average Navigation Tracking Error/cm | Average Trajectory and Path Error/cm | Relative Error/cm |
|---|---|---|---|
| Vehicle 1 | 3.3 | 5.5 | 2.2 |
| Vehicle 2 | 4.0 | 6.1 | 2.1 |
| Vehicle 3 | 3.1 | 4.3 | 2.2 |
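The section does not define how the tracking error was computed. One common definition, assumed here, is the mean distance from each logged RTK position to the nearest segment of the planned path. A sketch with a toy straight pass; the function names and sample points are hypothetical:

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def point_to_segment(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        return math.hypot(p[0] - a[0], p[1] - a[1])
    # projection parameter of p onto the segment, clamped to [0, 1]
    t = max(0.0, min(1.0, ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / seg_len2))
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def mean_tracking_error(trajectory: Sequence[Point], path: Sequence[Point]) -> float:
    """Mean distance from each logged position to the nearest segment of the planned path."""
    errors = [min(point_to_segment(p, path[i], path[i + 1]) for i in range(len(path) - 1))
              for p in trajectory]
    return sum(errors) / len(errors)


path = [(0.0, 0.0), (10.0, 0.0)]                  # planned straight pass, metres
track = [(1.0, 0.03), (5.0, -0.05), (9.0, 0.04)]  # logged positions, metres
print(mean_tracking_error(track, path) * 100)     # error in cm
```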

| Category | Data Protocol | Communication Protocol | Time/s |
|---|---|---|---|
| Trajectory updates | Agricultural machinery data reporting protocol | MQTT | 1 |
| Control commands | Remote control command protocol | MQTT | 0.28 |
| Vehicle-mounted camera | EZVIZ EZOPEN protocol | HTTP | 1.2 |
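The table reports transfer times per data type but not the measurement method. One simple approach, assumed here, stamps each message at send time and subtracts that stamp on receipt; this gives a meaningful one-way delay only if both clocks are synchronized (for example to GNSS time). The report fields are hypothetical, not the platform's reporting protocol:

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class TrajectoryReport:
    """One position report; every field name here is hypothetical."""
    machine_id: str
    x: float          # plane coordinate, metres
    y: float
    speed_mps: float
    sent_at: float    # sender timestamp, seconds since the epoch


def encode_report(report: TrajectoryReport) -> str:
    """Serialize a report as a JSON string (e.g. as an MQTT payload)."""
    return json.dumps(asdict(report))


def one_way_latency_s(payload: str, received_at: float) -> float:
    """Receive time minus send time; meaningful only if both clocks are synchronized."""
    return received_at - json.loads(payload)["sent_at"]


payload = encode_report(TrajectoryReport("vehicle-1", 2571374.215, 462724.598, 0.65, 1000.0))
print(one_way_latency_s(payload, 1001.0))  # 1.0
```

For control commands, timing the round trip from sending a command to receiving the machine's acknowledgement avoids the clock-synchronization requirement.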

| Category | Vehicle 1 | Vehicle 2 | Vehicle 3 |
|---|---|---|---|
| Degree of completion of operations/% | 100 | 100 | 100 |
| Average navigation error/cm | 3.8 | 4.6 | 4.8 |
| Average operation speed/(m/s) | 0.65 | 0.58 | 0.63 |
| Completion time/h | 2.2 | 2.5 | 2.4 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, P.; Yue, M.; Yang, L.; Luo, X.; He, J.; Man, Z.; Feng, D.; Liu, S.; Liang, C.; Deng, Y.; et al. Design and Test of Intelligent Farm Machinery Operation Control Platform for Unmanned Farms. Agronomy 2024, 14, 804. https://doi.org/10.3390/agronomy14040804
