Development of a Mango-Grading and -Sorting System Based on External Features, Using Machine Learning Algorithms

Abstract

1. Introduction

- (1) The proposed stacking ensemble method combines the strengths of several ML algorithms to increase the system’s prediction performance.

- (2) The proposed stacking ensemble method is successfully applied to classifying mangoes, thereby improving the efficiency of the entire mango distribution process.

- (3) The method can readily be applied to classifying other agricultural produce, such as sweet potatoes and tomatoes, and promotes research into intelligent methods and equipment for agriculture.

2. Materials and Methods

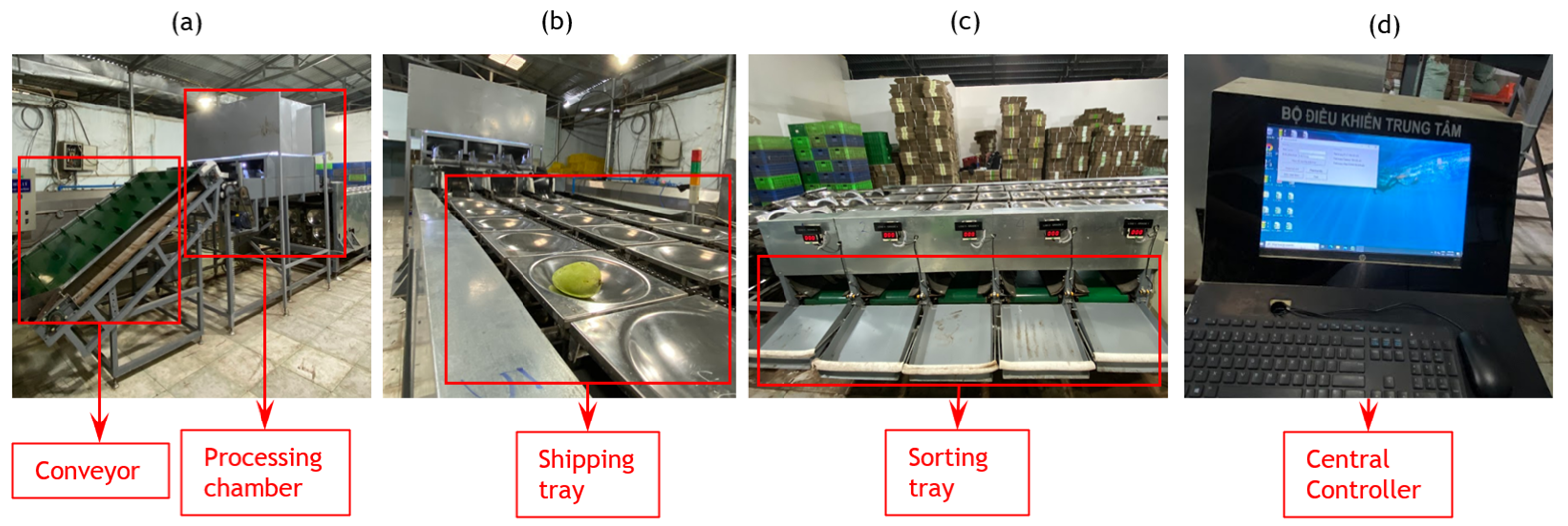

2.1. Structure of the Mango Sorting System

2.2. Extracting External Features of Mangoes Using Image Processing

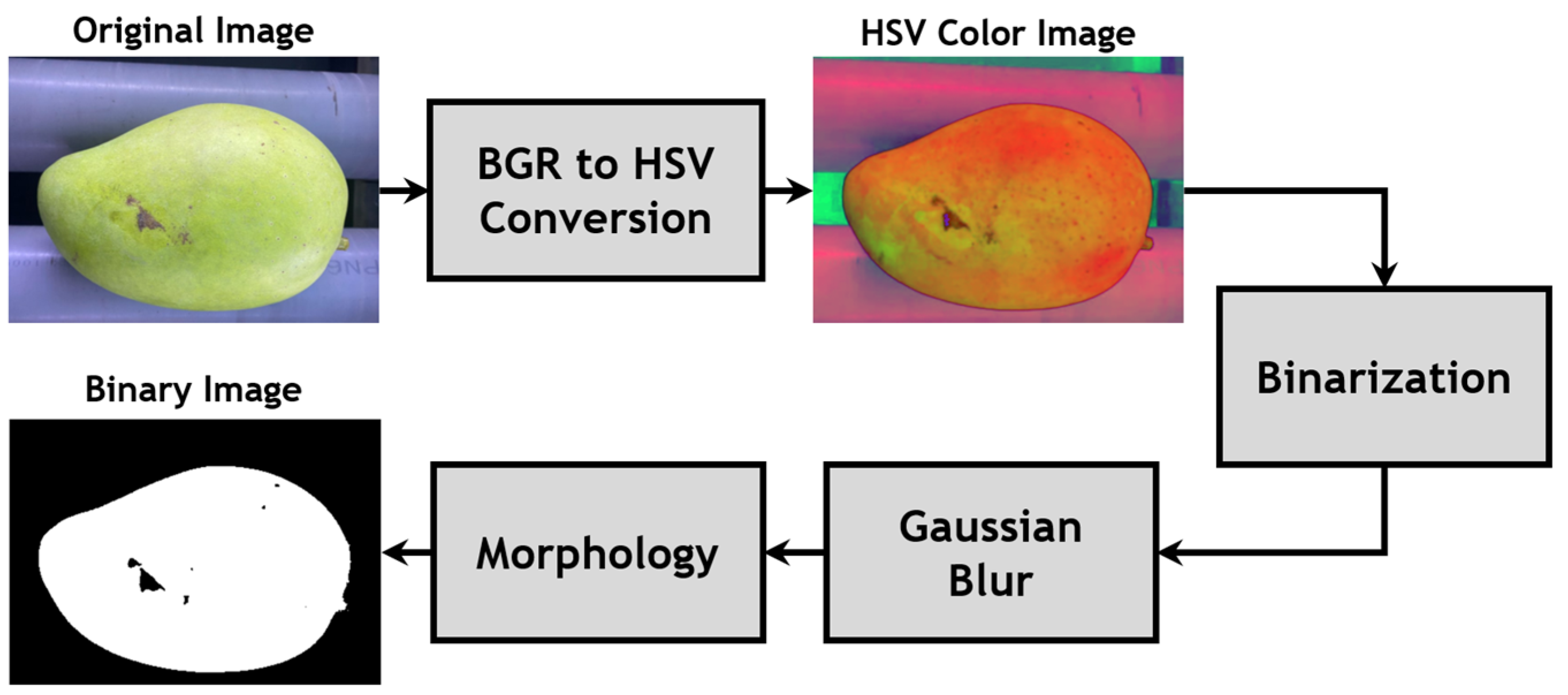

2.2.1. Mango Segmentation

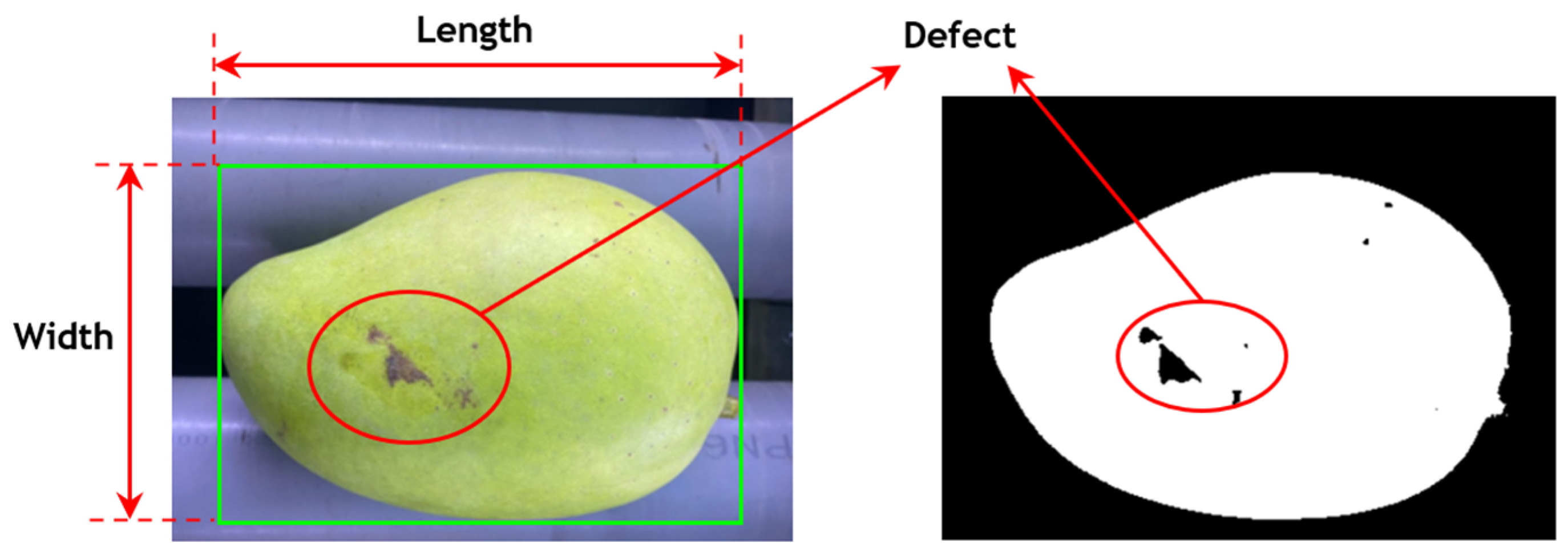

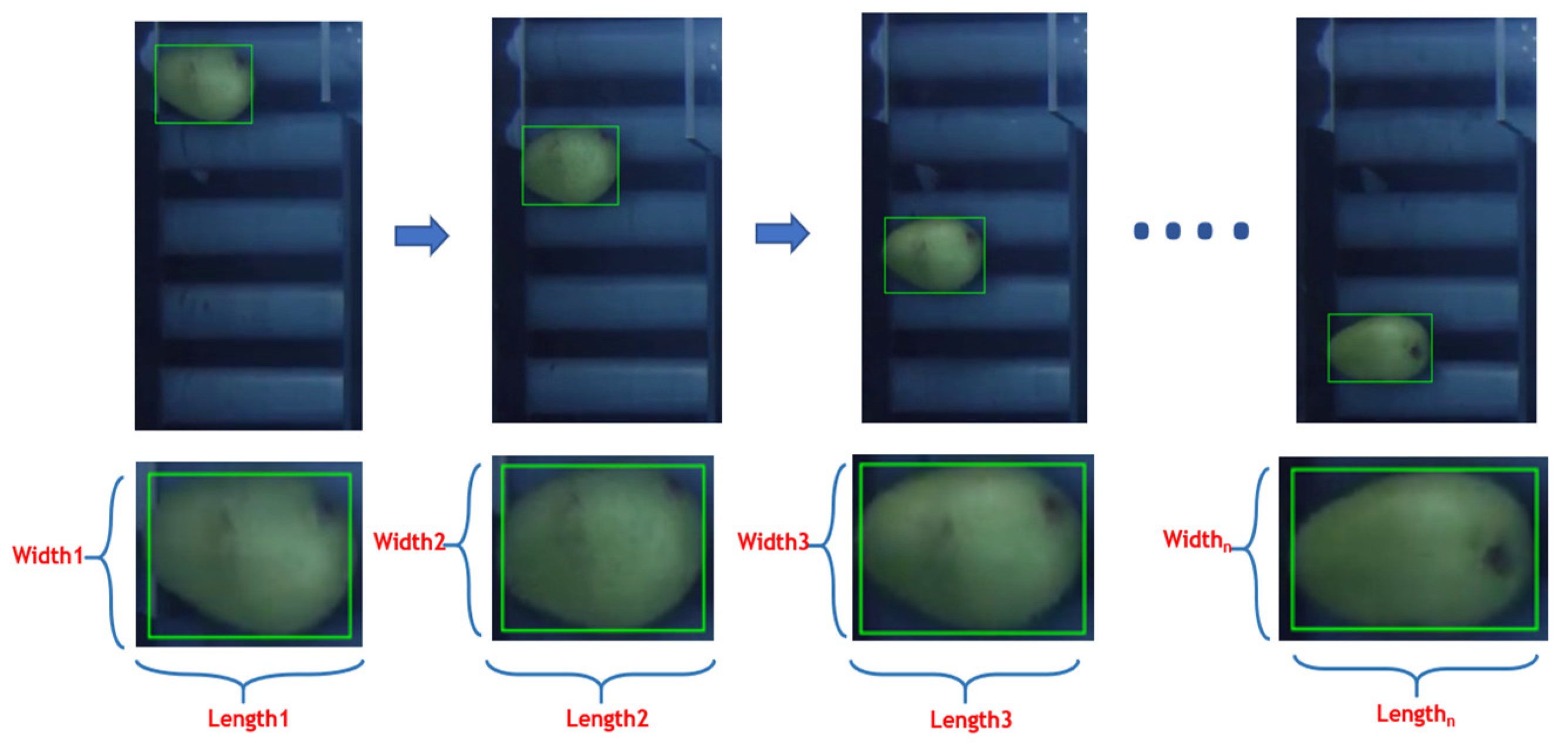

2.2.2. Mango External-Feature Extraction

2.2.3. Mango-Volume Estimation

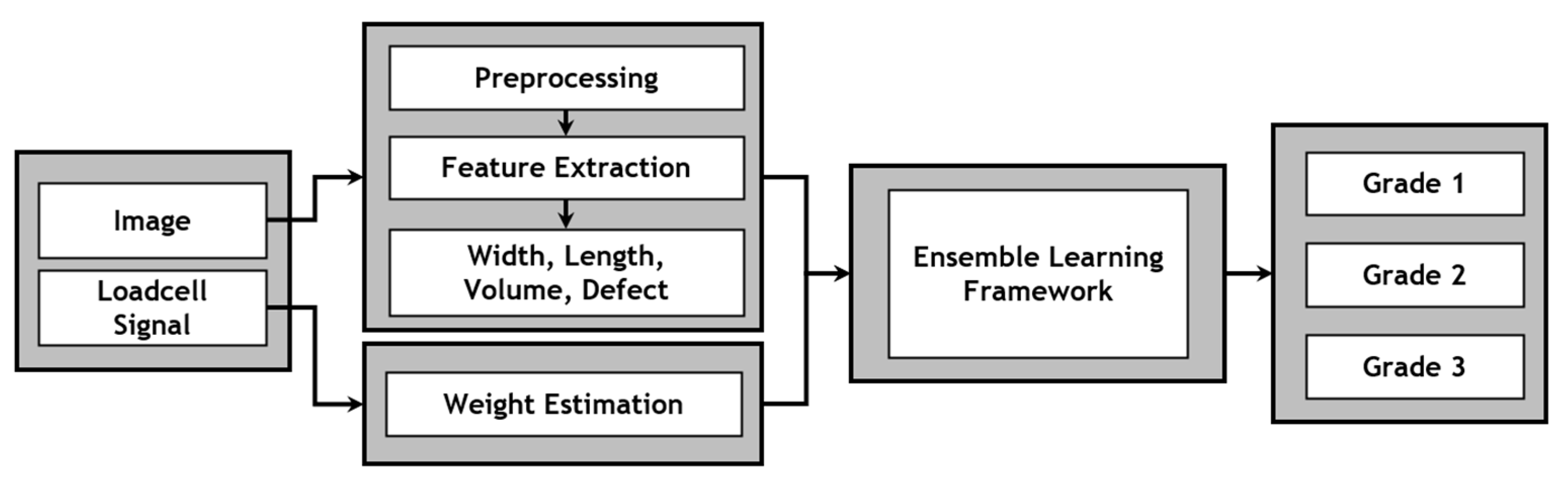

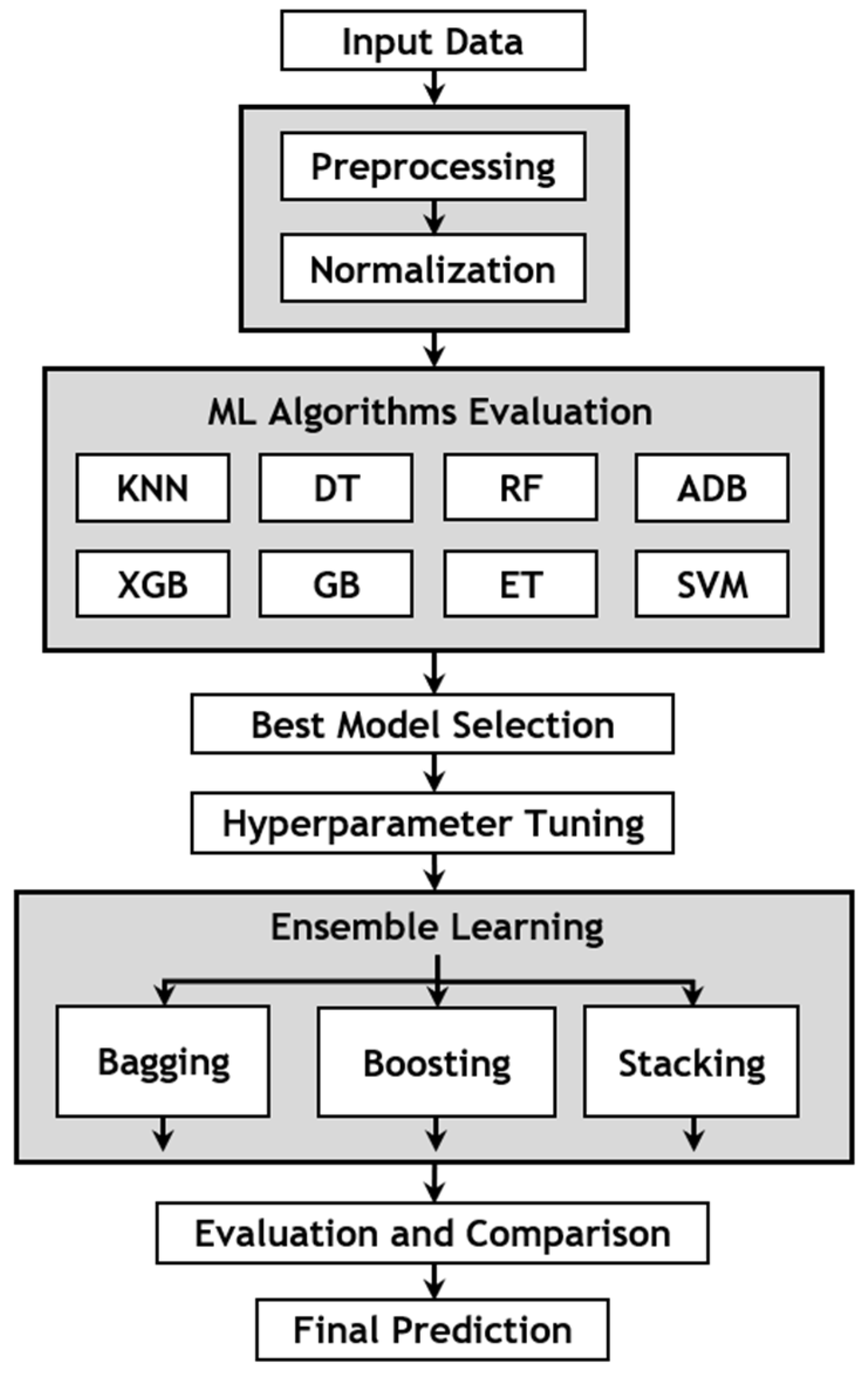

2.3. Proposed Method for Mango-Quality Classification

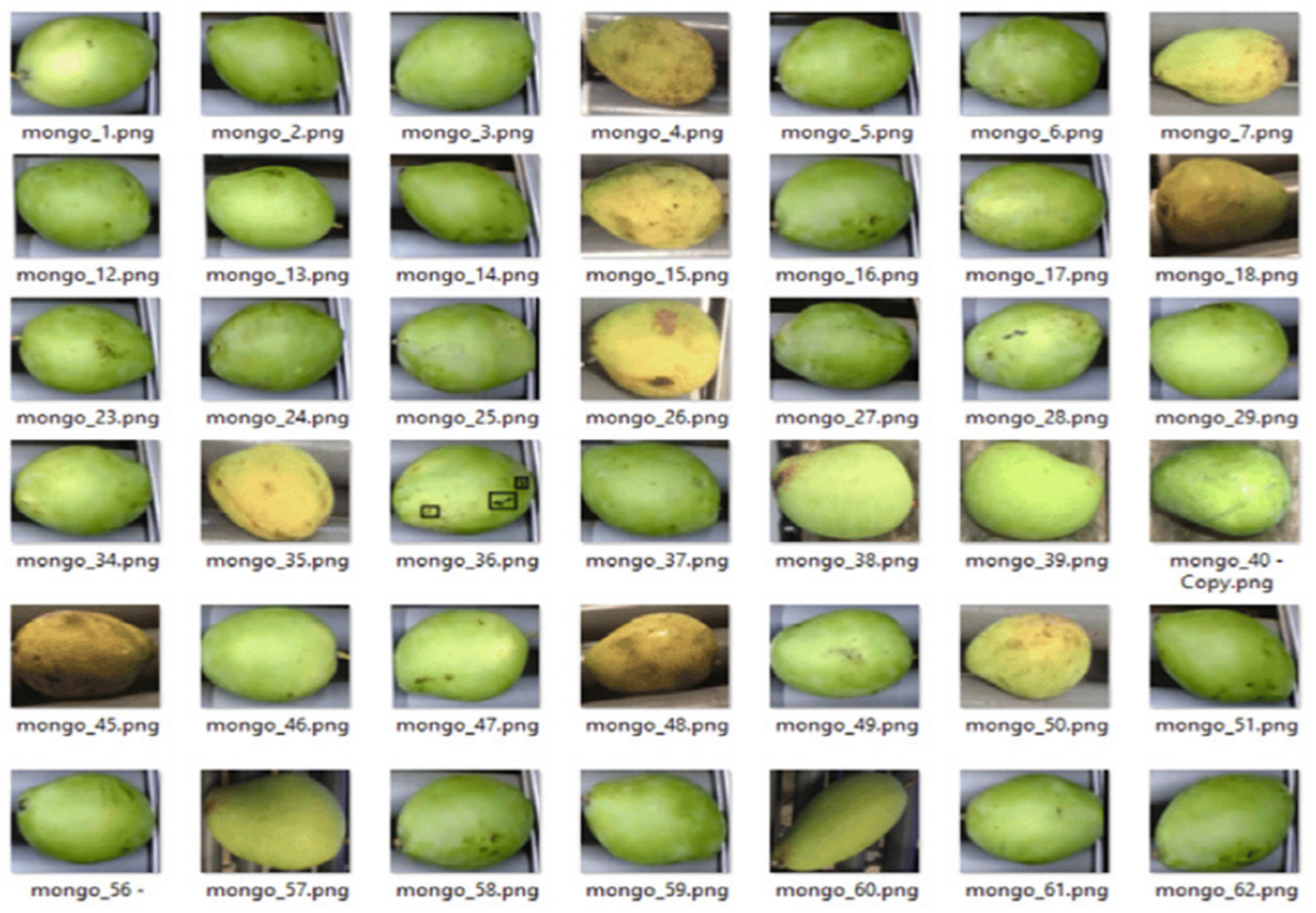

2.3.1. Data Collection

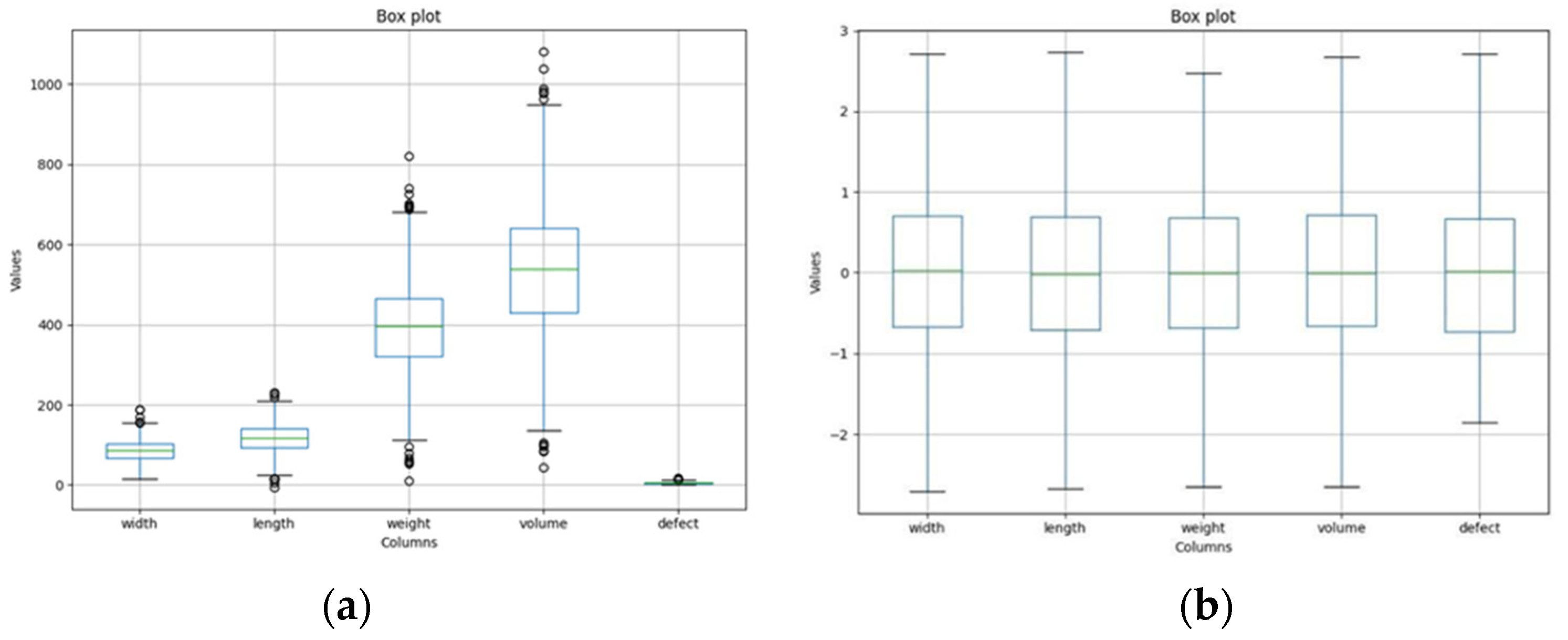

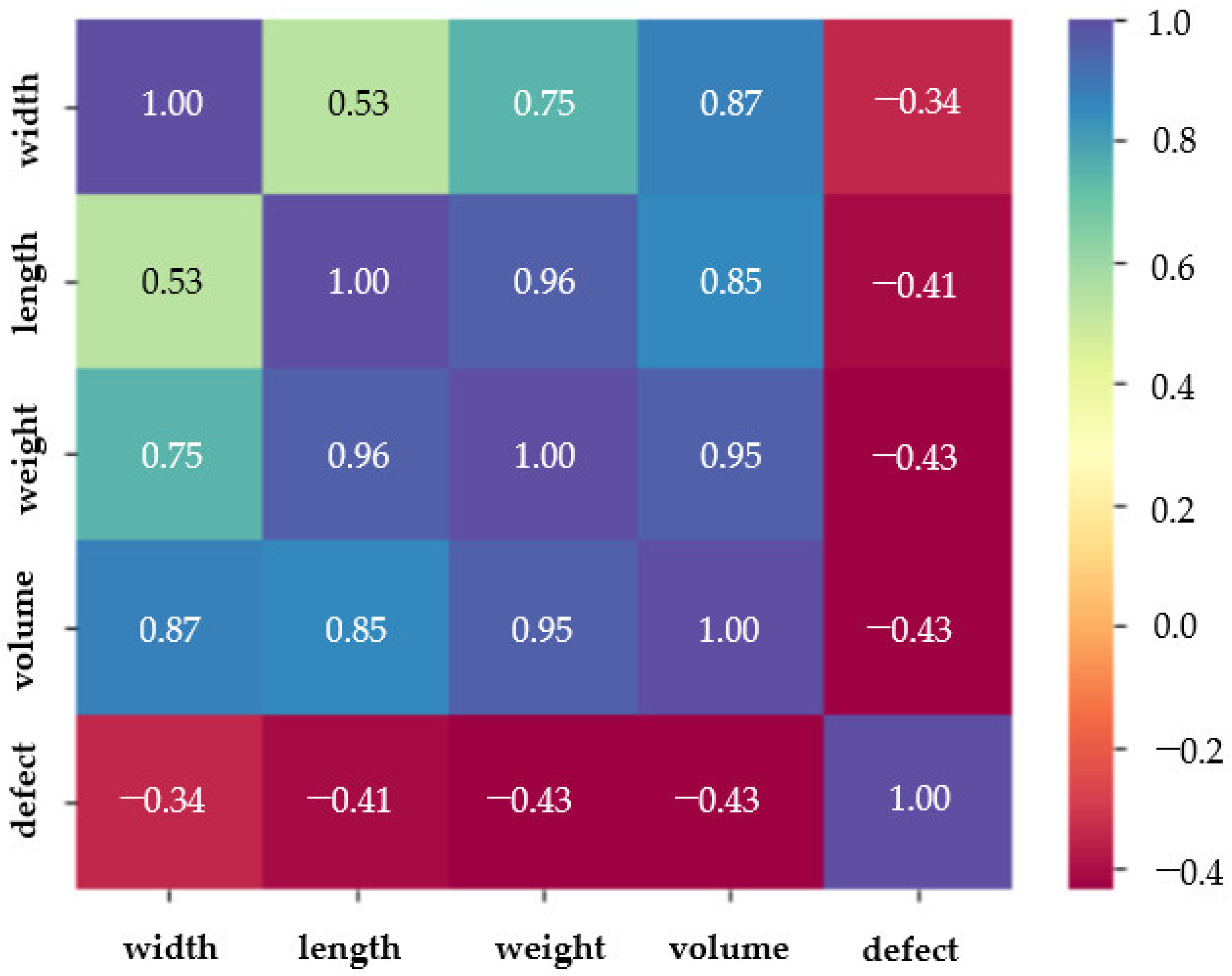

2.3.2. Data Preprocessing

2.3.3. Machine Learning Algorithms

2.3.4. Proposed Ensemble-Learning Method

2.3.5. Performance Evaluation

3. Results and Discussions

3.1. External-Feature Extraction Evaluation

3.2. Model Evaluation

3.3. Comparison with Different Studies

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Momin, M.; Rahman, M.; Sultana, M.; Igathinathane, C.; Ziauddin, A.; Grift, T. Geometry-based mass grading of mango fruits using image processing. Inf. Process. Agric. 2017, 4, 150–160.

2. Gururaj, N.; Vinod, V.; Vijayakumar, K. Deep grading of mangoes using convolutional neural network and computer vision. Multimed. Tools Appl. 2023, 82, 39525–39550.

3. Thong, N.D.; Thinh, N.T.; Cong, H.T. Mango sorting mechanical system combines image processing. In Proceedings of the 2019 7th International Conference on Control, Mechatronics and Automation (ICCMA), Delft, Netherlands, 6–8 November 2019; pp. 333–341.

4. Trieu, N.M.; Thinh, N.T. A study of combining knn and ann for classifying dragon fruits automatically. J. Image Graph. 2022, 10, 28–35.

5. Brezmes, J.; Fructuoso, M.; Llobet, E.; Vilanova, X.; Recasens, I.; Orts, J.; Saiz, G.; Correig, X. Evaluation of an electronic nose to assess fruit ripeness. IEEE Sens. J. 2005, 5, 97–108.

6. Matteoli, S.; Diani, M.; Massai, R.; Corsini, G.; Remorini, D. A spectroscopy-based approach for automated nondestructive maturity grading of peach fruits. IEEE Sens. J. 2015, 15, 5455–5464.

7. Nandi, C.S.; Tudu, B.; Koley, C. A machine vision technique for grading of harvested mangoes based on maturity and quality. IEEE Sens. J. 2016, 16, 6387–6396.

8. Sa’ad, F.; Ibrahim, M.; Shakaff, A.; Zakaria, A.; Abdullah, M. Shape and weight grading of mangoes using visible imaging. Comput. Electron. Agric. 2015, 115, 51–56.

9. Schulze, K.; Nagle, M.; Spreer, W.; Mahayothee, B.; Müller, J. Development and assessment of different modeling approaches for size-mass estimation of mango fruits (Mangifera indica L., cv. ‘Nam Dokmai’). Comput. Electron. Agric. 2015, 114, 269–276.

10. Mittal, S.; Dutta, M.K.; Issac, A. Non-destructive image processing based system for assessment of rice quality and defects for classification according to inferred commercial value. Measurement 2019, 148, 106969.

11. Cao, J.; Sun, T.; Zhang, W.; Zhong, M.; Huang, B.; Zhou, G.; Chai, X. An automated zizania quality grading method based on deep classification model. Comput. Electron. Agric. 2021, 183, 106004.

12. Huang, S.; Fan, X.; Sun, L.; Shen, Y.; Suo, X. Research on classification method of maize seed defect based on machine vision. J. Sens. 2019, 2019, 2716975.

13. Pérez-Borrero, I.; Marín-Santos, D.; Gegúndez-Arias, M.E.; Cortés-Ancos, E. A fast and accurate deep learning method for strawberry instance segmentation. Comput. Electron. Agric. 2020, 178, 105736.

14. Li, Z.; Yin, C.; Zhang, X. Crack Segmentation Extraction and Parameter Calculation of Asphalt Pavement Based on Image Processing. Sensors 2023, 23, 9161.

15. Ghazal, S.; Qureshi, W.S.; Khan, U.S.; Iqbal, J.; Rashid, N.; Tiwana, M.I. Analysis of visual features and classifiers for Fruit classification problem. Comput. Electron. Agric. 2021, 187, 106267.

16. Chithra, P.; Henila, M. Apple fruit sorting using novel thresholding and area calculation algorithms. Soft Comput. 2021, 25, 431–445.

17. Behera, S.K.; Rath, A.K.; Sethy, P.K. Maturity status classification of papaya fruits based on machine learning and transfer learning approach. Inf. Process. Agric. 2021, 8, 244–250.

18. T.K., B.; Annavarapu, C.S.R.; Bablani, A. Machine learning algorithms for social media analysis: A survey. Comput. Sci. Rev. 2021, 40, 100395.

19. Sen, P.C.; Hajra, M.; Ghosh, M. Supervised classification algorithms in machine learning: A survey and review. In Emerging Technology in Modelling and Graphics: Proceedings of IEM Graph 2018; Springer: Singapore, 2020; pp. 99–111.

20. Zhang, S. Cost-sensitive KNN classification. Neurocomputing 2020, 391, 234–242.

21. Ghiasi, M.M.; Zendehboudi, S. Application of decision tree-based ensemble learning in the classification of breast cancer. Comput. Biol. Med. 2021, 128, 104089.

22. Ileberi, E.; Sun, Y.; Wang, Z. Performance evaluation of machine learning methods for credit card fraud detection using SMOTE and AdaBoost. IEEE Access 2021, 9, 165286–165294.

23. Bentéjac, C.; Csörgő, A.; Martínez-Muñoz, G. A comparative analysis of gradient boosting algorithms. Artif. Intell. Rev. 2021, 54, 1937–1967.

24. Qiu, Y.; Zhou, J.; Khandelwal, M.; Yang, H.; Yang, P.; Li, C. Performance evaluation of hybrid WOA-XGBoost, GWO-XGBoost and BO-XGBoost models to predict blast-induced ground vibration. Eng. Comput. 2021, 38, 4145–4162.

25. Cui, S.; Yin, Y.; Wang, D.; Li, Z.; Wang, Y. A stacking-based ensemble learning method for earthquake casualty prediction. Appl. Soft Comput. 2021, 101, 107038.

26. Wei, H.; Chen, W.; Zhu, L.; Chu, X.; Liu, H.; Mu, Y.; Ma, Z. Improved Lightweight Mango Sorting Model Based on Visualization. Agriculture 2022, 12, 1467.

27. Long, N.T.M.; Thinh, N.T. Using machine learning to grade the mango’s quality based on external features captured by vision system. Appl. Sci. 2020, 10, 5775.

28. Wang, S.-H.; Chen, Y. Fruit category classification via an eight-layer convolutional neural network with parametric rectified linear unit and dropout technique. Multimed. Tools Appl. 2020, 79, 15117–15133.

29. Trieu, N.M.; Thinh, N.T. Quality classification of dragon fruits based on external performance using a convolutional neural network. Appl. Sci. 2021, 11, 10558.

30. Blasco, J.; Cubero, S.; Gómez-Sanchís, J.; Mira, P.; Moltó, E. Development of a machine for the automatic sorting of pomegranate (Punica granatum) arils based on computer vision. J. Food Eng. 2009, 90, 27–34.

31. Fan, S.; Li, J.; Zhang, Y.; Tian, X.; Wang, Q.; He, X.; Zhang, C.; Huang, W. On line detection of defective apples using computer vision system combined with deep learning methods. J. Food Eng. 2020, 286, 110102.

| Grade | Width (cm) | Length (cm) | Volume (mL) | Weight (g) | Defect (cm²) |
|---|---|---|---|---|---|
| 1 | 9–11 | 14.1–16 | 651–800 | 451–700 | 0–3 |
| 2 | 8–9 | 12.1–14 | 401–650 | 250–450 | 3–5 |
| 3 | 6–8 | 10–12 | 250–400 | 100–250 | >5 |
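
As an illustration only, the grading standard above can be read as a set of thresholds. The sketch below is not the classifier developed in this study. It assumes that each size feature is graded from the lower bounds in the table, that defect area is graded from its upper limits (a boundary value counts toward the better grade), and that the overall grade is the worst per-feature grade. The names `LOWER_BOUNDS`, `DEFECT_UPPER_CM2`, and `rule_based_grade` are hypothetical and introduced only for this example.

```python
# Lower bounds for grades 1 and 2, copied from the grading table.
# Anything below the grade-2 bound is treated as grade 3 (an assumption).
LOWER_BOUNDS = {
    "width_cm": (9.0, 8.0),
    "length_cm": (14.1, 12.1),
    "volume_ml": (651.0, 401.0),
    "weight_g": (451.0, 250.0),
}

# Upper defect-area limits (cm^2) for grades 1 and 2, from the same table.
DEFECT_UPPER_CM2 = (3.0, 5.0)


def feature_grade(name, value):
    """Grade one size feature by comparing it with the table's lower bounds."""
    grade1_min, grade2_min = LOWER_BOUNDS[name]
    if value >= grade1_min:
        return 1
    if value >= grade2_min:
        return 2
    return 3


def defect_grade(area_cm2):
    """Grade the defect area; smaller defects give a better grade."""
    grade1_max, grade2_max = DEFECT_UPPER_CM2
    if area_cm2 <= grade1_max:
        return 1
    if area_cm2 <= grade2_max:
        return 2
    return 3


def rule_based_grade(mango):
    """Overall grade = worst (largest) per-feature grade. Assumed rule."""
    grades = [feature_grade(name, mango[name]) for name in LOWER_BOUNDS]
    grades.append(defect_grade(mango["defect_cm2"]))
    return max(grades)


sample = {"width_cm": 10.0, "length_cm": 15.0, "volume_ml": 700.0,
          "weight_g": 500.0, "defect_cm2": 1.0}
print(rule_based_grade(sample))
```

A fixed rule like this cannot resolve mangoes whose features fall into different grade ranges in any principled way, which is one motivation for learning the decision from data instead.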

| | Width (cm) | Length (cm) | Weight (g) | Volume (mL) | Defect (cm²) |
|---|---|---|---|---|---|
| Count | 1300 | 1300 | 1300 | 1300 | 1300 |
| Mean | 85.4030 | 117.1955 | 393.8333 | 537.8166 | 5.1606 |
| Std | 26.3559 | 35.9526 | 114.3187 | 156.7680 | 2.8732 |
| Min | 16.8347 | −5.5648 | 10.68328 | 43.9788 | 0.0000 |
| 25% | 67.3743 | 93.5801 | 321.6118 | 429.6065 | 3.0858 |
| 50% | 85.7452 | 118.2014 | 397.1450 | 539.6910 | 5.1432 |
| 75% | 102.4258 | 140.2857 | 466.1139 | 641.6059 | 6.9783 |
| Max | 189.0237 | 230.4080 | 819.8204 | 1080.8878 | 16.5581 |

| Features | MAE | RMSE |
|---|---|---|
| Width | 0.5476 | 0.5584 |
| Length | 0.6295 | 0.6498 |
| Volume | 5.7834 | 5.9487 |
| Weight | 0.1598 | 0.1698 |
| Defect | 0.0318 | 0.0429 |
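
For readers unfamiliar with the two error measures in the table above, the following minimal sketch computes the mean absolute error (MAE) and root-mean-square error (RMSE) between measured and estimated feature values. The sample numbers are made up for illustration and are not data from this study.

```python
import math


def mae(actual, predicted):
    """Mean absolute error: average of |actual - predicted|."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root-mean-square error: square root of the mean squared difference."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical manual measurements vs. image-based estimates (illustrative only).
measured = [10.0, 12.0, 14.0]
estimated = [11.0, 12.0, 12.0]

print(mae(measured, estimated))
print(rmse(measured, estimated))
```

RMSE is never smaller than MAE for the same errors, and it grows faster when a few estimates are far off; each row of the table is consistent with this ordering.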

| ML Model | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|
| XGBoost | 0.98 | 0.94 | 0.96 | 0.97 |
| Random Forest | 0.97 | 0.95 | 0.96 | 0.96 |
| Extra Tree Classifier | 0.96 | 0.97 | 0.97 | 0.96 |
| Gradient Boosting | 0.93 | 0.96 | 0.94 | 0.95 |
| Support Vector Machine | 0.94 | 0.94 | 0.94 | 0.93 |
| AdaBoost | 0.93 | 0.91 | 0.91 | 0.92 |
| Decision Tree | 0.88 | 0.87 | 0.87 | 0.88 |
| K-Nearest Neighbors | 0.85 | 0.84 | 0.82 | 0.82 |
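
The four scores reported above can be computed from true and predicted grades as sketched below. This is a minimal illustration that assumes macro averaging over the three grades; the averaging scheme actually used for the table is not stated in this section, and the label lists are made up for the example.

```python
def macro_metrics(y_true, y_pred):
    """Return macro-averaged precision, recall, F1, and overall accuracy."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    precisions, recalls, f1s = [], [], []
    for c in labels:
        tp = sum(1 for t, p in pairs if t == c and p == c)  # true positives
        fp = sum(1 for t, p in pairs if t != c and p == c)  # false positives
        fn = sum(1 for t, p in pairs if t == c and p != c)  # false negatives
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    n = len(labels)
    return {
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
        "accuracy": sum(1 for t, p in pairs if t == p) / len(pairs),
    }


# Hypothetical grades for six mangoes (illustrative only).
true_grades = [1, 1, 2, 2, 3, 3]
predicted_grades = [1, 2, 2, 2, 3, 1]
scores = macro_metrics(true_grades, predicted_grades)
print(scores)
```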

| Method | Base Learner | Meta-Learner | Dataset | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|---|---|
| Bagging | XGB | - | Train | 0.9744 | 0.9836 | 0.9783 | 0.9793 |
| Bagging | XGB | - | Test | 0.9733 | 0.9785 | 0.9751 | 0.9726 |
| Boosting | XGB | - | Train | 0.9855 | 0.9890 | 0.9875 | 0.9885 |
| Boosting | XGB | - | Test | 0.9629 | 0.9819 | 0.9711 | 0.9756 |
| Stacking | RF, ET | XGB | Train | 0.9919 | 0.9938 | 0.9928 | 0.9932 |
| Stacking | RF, ET | XGB | Test | 0.9855 | 0.9901 | 0.9876 | 0.9863 |
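
To make the stacking row above more concrete, the sketch below shows the general mechanism in plain Python: base learners are trained on k−1 folds, their predictions on the held-out fold become meta-features, and a meta-learner is trained on those meta-features. It is a minimal illustration, not the study's implementation. The two base learners (nearest centroid and 1-nearest neighbour) and the lookup-table meta-learner are simple stand-ins for RF, ET, and XGB, and the width/length values are invented.

```python
from collections import Counter, defaultdict


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def train_centroid(X, y):
    """Stand-in base learner: nearest class centroid."""
    groups = defaultdict(list)
    for row, label in zip(X, y):
        groups[label].append(row)
    centroids = {c: [sum(col) / len(rows) for col in zip(*rows)]
                 for c, rows in groups.items()}
    return lambda row: min(centroids, key=lambda c: sq_dist(row, centroids[c]))


def train_1nn(X, y):
    """Stand-in base learner: 1-nearest neighbour."""
    data = list(zip(X, y))
    return lambda row: min(data, key=lambda pair: sq_dist(row, pair[0]))[1]


def train_lookup(meta_X, y):
    """Stand-in meta-learner: most frequent true label per prediction tuple."""
    table = defaultdict(Counter)
    for preds, label in zip(meta_X, y):
        table[tuple(preds)][label] += 1

    def predict(preds):
        key = tuple(preds)
        if key in table:
            return table[key].most_common(1)[0][0]
        return Counter(key).most_common(1)[0][0]  # unseen tuple: majority vote
    return predict


def fit_stacking(base_trainers, meta_trainer, X, y, k=5):
    n = len(X)
    meta_X = [[None] * len(base_trainers) for _ in range(n)]
    for fold in range(k):
        train_idx = [i for i in range(n) if i % k != fold]
        held_out = [i for i in range(n) if i % k == fold]
        X_tr = [X[i] for i in train_idx]
        y_tr = [y[i] for i in train_idx]
        for j, trainer in enumerate(base_trainers):
            model = trainer(X_tr, y_tr)
            for i in held_out:  # out-of-fold predictions become meta-features
                meta_X[i][j] = model(X[i])
    meta_model = meta_trainer(meta_X, y)
    base_models = [trainer(X, y) for trainer in base_trainers]  # refit on all data
    return lambda row: meta_model([m(row) for m in base_models])


# Invented (width cm, length cm) samples for grades 1, 2, and 3.
X = [[10.0, 15.0], [10.5, 15.5], [9.5, 14.5], [9.8, 15.2], [10.2, 14.8],
     [8.5, 13.0], [8.8, 13.5], [8.2, 12.5], [8.6, 13.2], [8.4, 12.8],
     [7.0, 11.0], [6.5, 10.5], [7.5, 11.5], [7.2, 11.2], [6.8, 10.8]]
y = [1] * 5 + [2] * 5 + [3] * 5

predict = fit_stacking([train_centroid, train_1nn], train_lookup, X, y, k=5)
print(predict([10.1, 15.1]), predict([8.5, 13.0]), predict([7.1, 11.1]))
```

Training the meta-learner only on out-of-fold predictions keeps it from seeing base-learner outputs on data those learners were fitted to, which is the usual reason stacking is set up this way.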

| Method | Target | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|
| CNN [26] | Mango | - | - | 0.9587 | 0.9564 |
| Random Forest [27] | Mango | 0.9801 | 0.9796 | 0.9803 | 0.981 |
| Eight-layer CNN [28] | Fruit | - | - | - | 0.9567 |
| Image processing + ANN [29] | Dragon fruit | - | - | - | 0.8310 |
| KNN+CNN [29] | Dragon fruit | - | - | - | 0.9285 |
| Proposed method | Mango | 0.9855 | 0.9901 | 0.9876 | 0.9863 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tai, N.D.; Lin, W.C.; Trieu, N.M.; Thinh, N.T. Development of a Mango-Grading and -Sorting System Based on External Features, Using Machine Learning Algorithms. Agronomy 2024, 14, 831. https://doi.org/10.3390/agronomy14040831
