SAW-YOLO: A Multi-Scale YOLO for Small Target Citrus Pests Detection

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection and Preprocessing

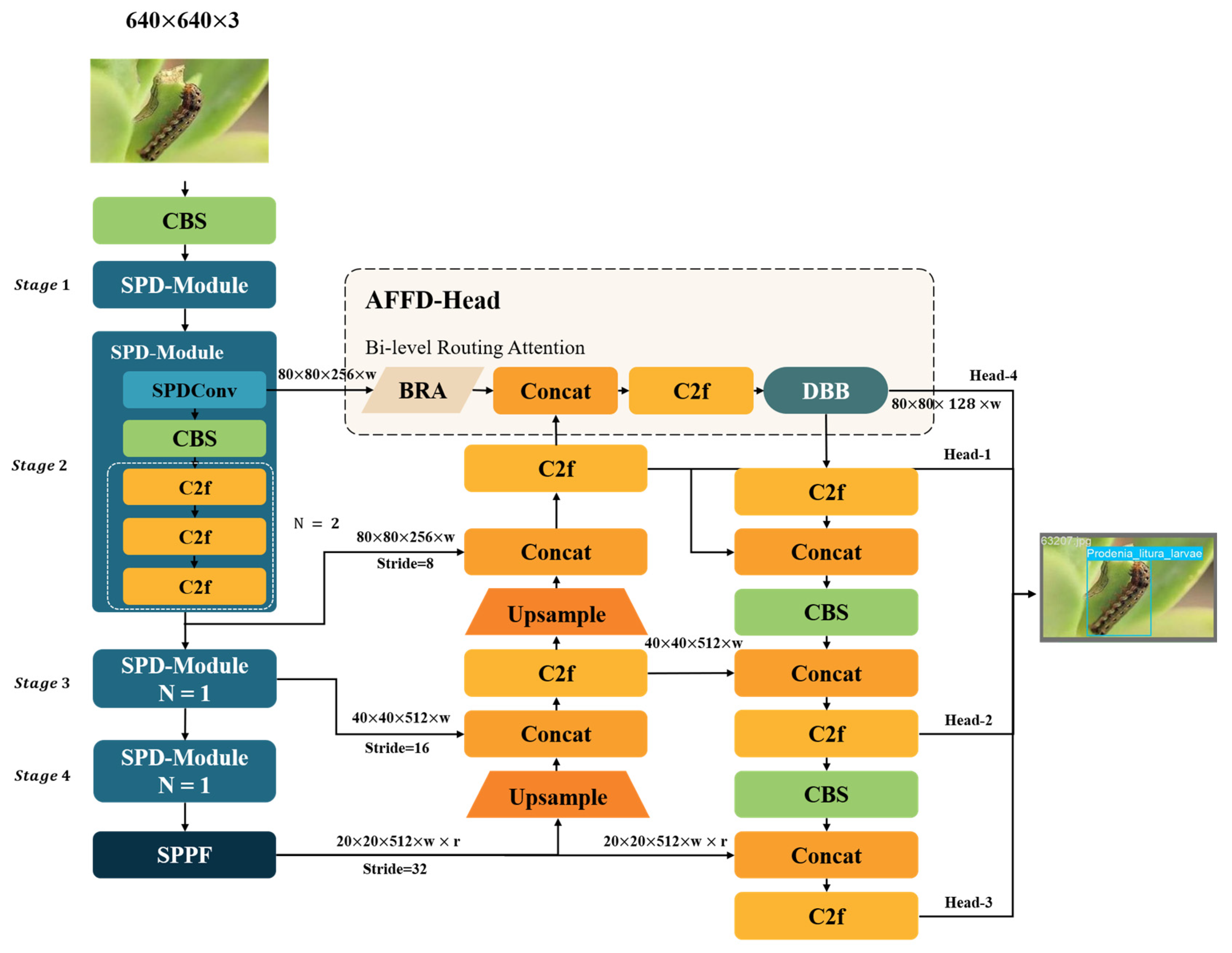

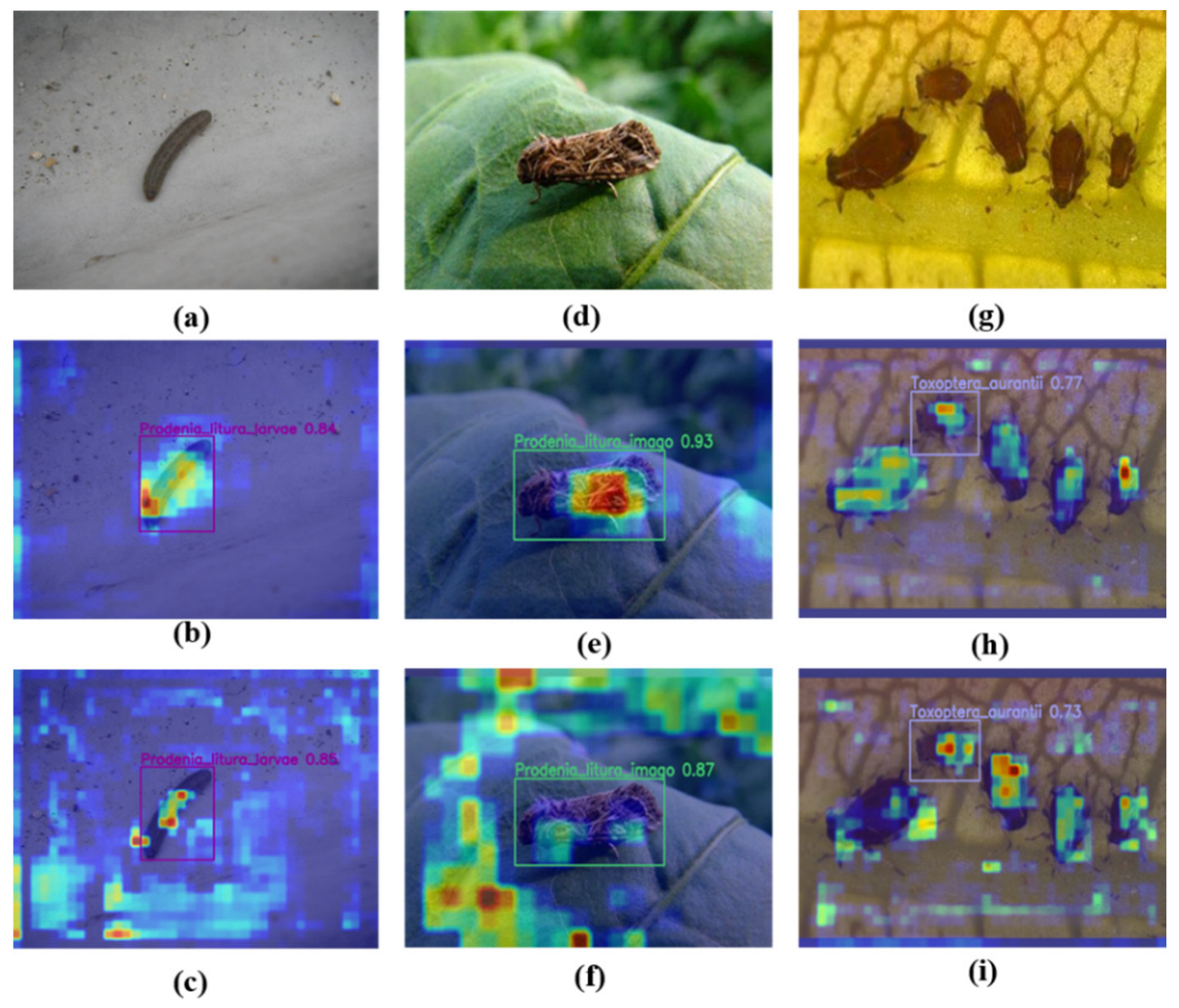

2.2. Model Design

2.2.1. The Structure of the YOLOv8 Model
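The YOLOv8 detection head does not regress box edges directly. In the Ultralytics implementation, each of the four side distances (left, top, right, bottom) from an anchor point is predicted as a discrete distribution over `reg_max` bins (16 by default) and decoded as that distribution's expected value, the scheme associated with Distribution Focal Loss. The NumPy sketch below illustrates this decoding for a single anchor point. It is a simplified illustration of the general mechanism, not SAW-YOLO's code, and the function names are hypothetical.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Numerically stable softmax along the given axis.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def decode_distances(logits: np.ndarray) -> np.ndarray:
    """logits: (4, reg_max) scores for the left, top, right and bottom distances.

    Returns the expected distance for each side, in grid-cell units.
    """
    reg_max = logits.shape[-1]
    probs = softmax(logits, axis=-1)
    bins = np.arange(reg_max, dtype=float)  # bin values 0 .. reg_max - 1
    return probs @ bins  # expectation over the bins -> shape (4,)


def decode_box(logits: np.ndarray, anchor_xy, stride: float) -> np.ndarray:
    # Turn (l, t, r, b) distances around an anchor point into an
    # (x1, y1, x2, y2) box in input-image pixels.
    l, t, r, b = decode_distances(logits)
    ax, ay = anchor_xy  # anchor centre in grid units, e.g. (col + 0.5, row + 0.5)
    return np.array([ax - l, ay - t, ax + r, ay + b]) * stride


# Example: a near one-hot distribution peaked at bin 2 for every side
logits = np.zeros((4, 16))
logits[:, 2] = 50.0
print(decode_box(logits, (10.5, 10.5), stride=8))
```

Predicting a distribution rather than a single value lets the head express uncertainty about ambiguous edges. A flat distribution decodes to the middle of the bin range (7.5 for 16 bins).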

2.2.2. Improvements to the YOLOv8n Model

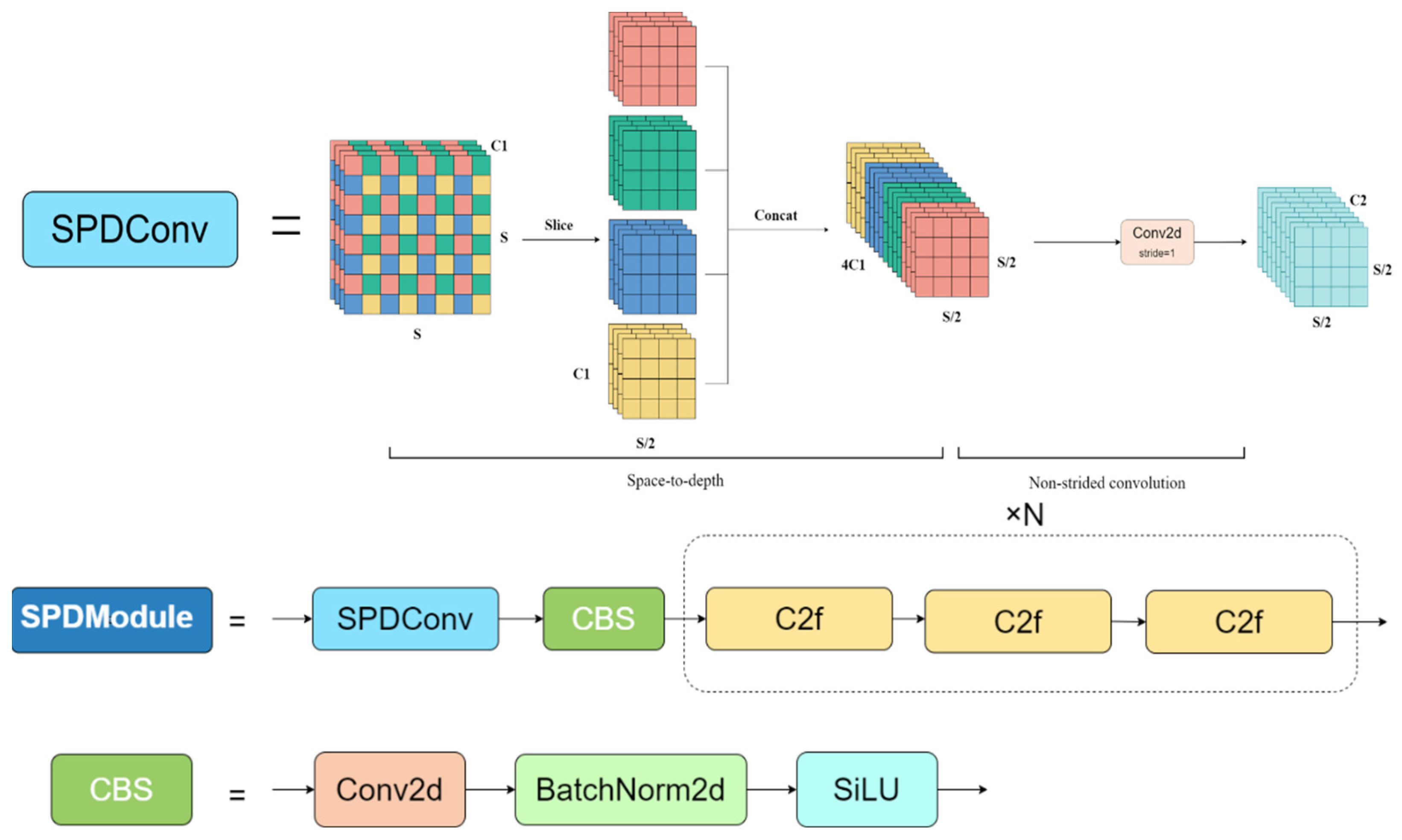

1. SPD Module
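This sketch assumes that the SPD module follows the space-to-depth convolution (SPD-Conv) of Sunkara and Luo, which is listed in the references. In that design, a strided convolution or pooling layer is replaced by a space-to-depth rearrangement followed by a non-strided convolution, so downsampling discards no pixels. The NumPy sketch covers only the rearrangement, for scale 2 on a channels-first (C, H, W) array. The function name is hypothetical, and the subsequent stride-1 convolution is omitted.

```python
import numpy as np


def space_to_depth(x: np.ndarray, scale: int = 2) -> np.ndarray:
    """Rearrange a (C, H, W) map into (C * scale**2, H // scale, W // scale).

    Each of the scale**2 sub-maps x[:, i::scale, j::scale] is stacked along the
    channel axis, so spatial resolution drops without discarding any values.
    The channel ordering of the sub-maps is a convention; a following
    convolution can learn any ordering.
    """
    _, h, w = x.shape
    if h % scale or w % scale:
        raise ValueError("H and W must be divisible by scale")
    sub_maps = [x[:, i::scale, j::scale] for i in range(scale) for j in range(scale)]
    return np.concatenate(sub_maps, axis=0)


x = np.arange(2 * 4 * 4).reshape(2, 4, 4)
y = space_to_depth(x)
print(y.shape)  # (8, 2, 2)
```

A stride-2 convolution would sample only a subset of positions, whereas here every input value survives in some output channel. That property is what makes the operation attractive for small targets such as mites and scale insects.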

2. Attention Feature Fusion Distribution Head (AFFD-Head)

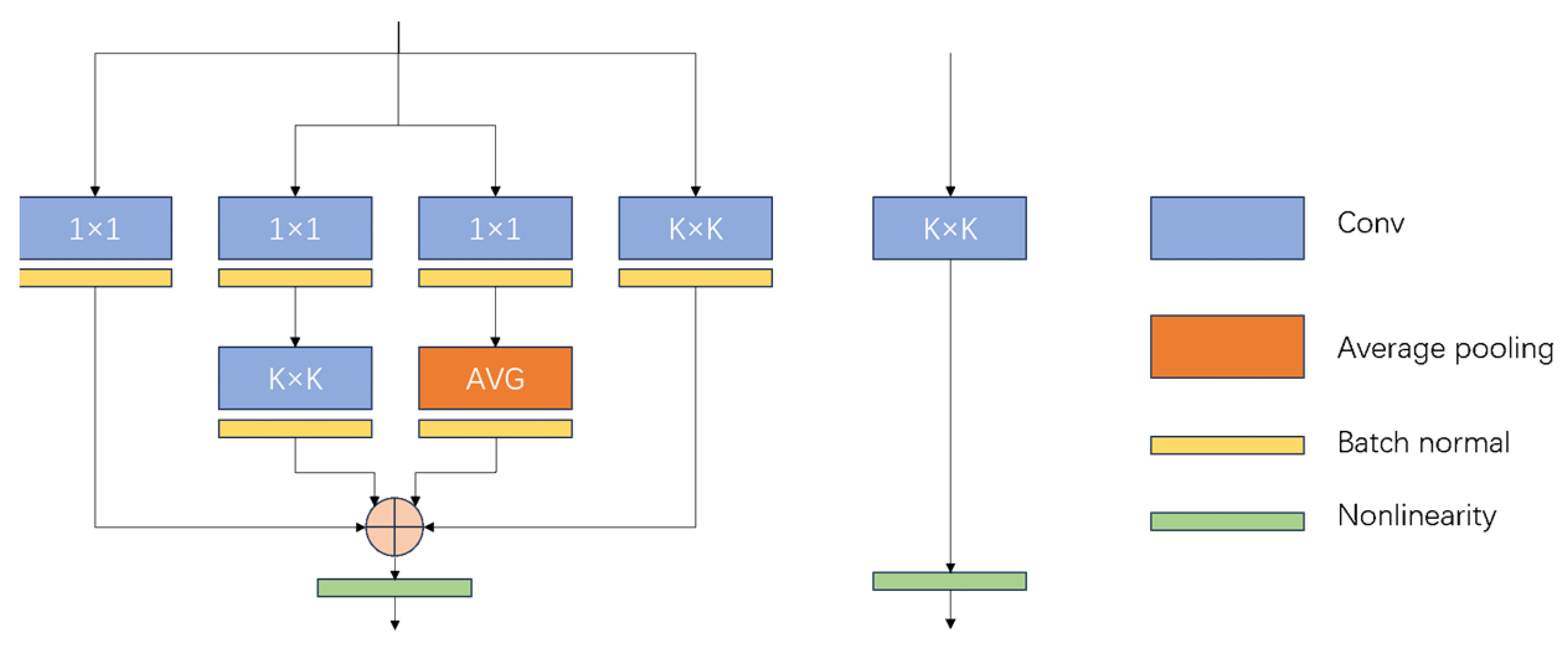

- 3.

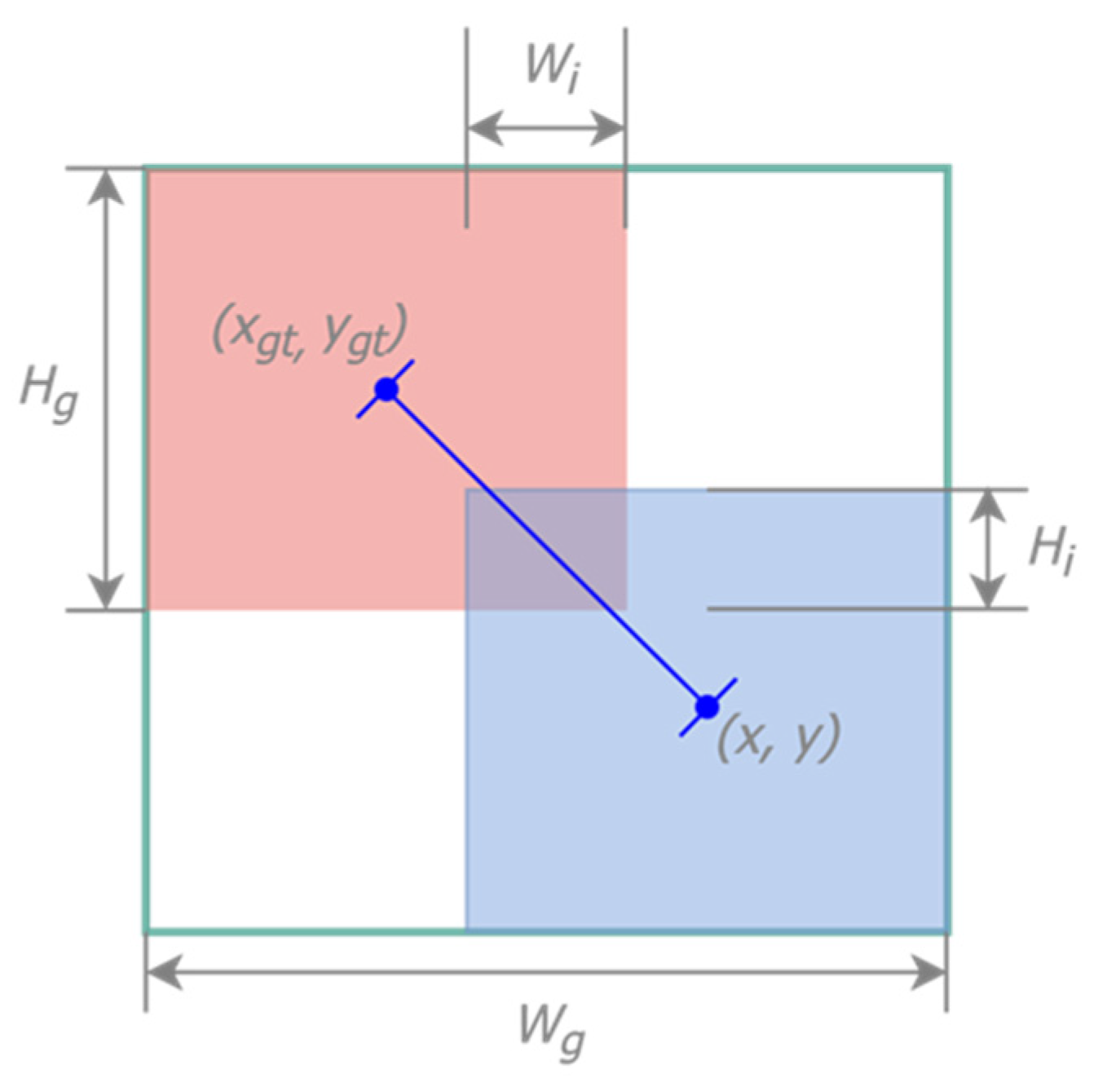

- Loss Function: Wise-IOUv3
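Wise-IoU v3 (Tong et al., in the references) multiplies an IoU loss by two factors. The first is a distance-based attention term. The second is a non-monotonic focusing coefficient computed from each box's "outlier degree" β, which is the box's IoU loss divided by a running mean of the IoU loss. The coefficient down-weights both very easy boxes and very poor (likely low-quality) ones. The NumPy sketch below follows the formulas of that paper. The hyperparameters α = 1.9 and δ = 3 and the fixed momentum of 0.01 are assumptions; the original ties the momentum to training length. Gradients are not modelled here, although in training the enclosing-box term and β are detached from the graph. The class and function names are hypothetical.

```python
import numpy as np

EPS = 1e-9


def box_iou(pred: np.ndarray, gt: np.ndarray) -> np.ndarray:
    # Element-wise IoU of (N, 4) boxes in (x1, y1, x2, y2) format.
    iw = np.clip(np.minimum(pred[:, 2], gt[:, 2]) - np.maximum(pred[:, 0], gt[:, 0]), 0, None)
    ih = np.clip(np.minimum(pred[:, 3], gt[:, 3]) - np.maximum(pred[:, 1], gt[:, 1]), 0, None)
    inter = iw * ih
    area_p = (pred[:, 2] - pred[:, 0]) * (pred[:, 3] - pred[:, 1])
    area_g = (gt[:, 2] - gt[:, 0]) * (gt[:, 3] - gt[:, 1])
    return inter / (area_p + area_g - inter + EPS)


class WiseIoUv3:
    """Hypothetical helper; alpha, delta and momentum values are assumptions."""

    def __init__(self, alpha: float = 1.9, delta: float = 3.0, momentum: float = 0.01):
        self.alpha, self.delta, self.momentum = alpha, delta, momentum
        self.iou_loss_mean = 1.0  # running mean of L_IoU (initial value assumed)

    def __call__(self, pred: np.ndarray, gt: np.ndarray) -> np.ndarray:
        l_iou = 1.0 - box_iou(pred, gt)  # L_IoU per box

        # R_WIoU = exp(centre distance^2 / (Wg^2 + Hg^2)), Wg/Hg from the enclosing box
        wg = np.maximum(pred[:, 2], gt[:, 2]) - np.minimum(pred[:, 0], gt[:, 0])
        hg = np.maximum(pred[:, 3], gt[:, 3]) - np.minimum(pred[:, 1], gt[:, 1])
        dx = (pred[:, 0] + pred[:, 2] - gt[:, 0] - gt[:, 2]) / 2
        dy = (pred[:, 1] + pred[:, 3] - gt[:, 1] - gt[:, 3]) / 2
        r_wiou = np.exp((dx ** 2 + dy ** 2) / (wg ** 2 + hg ** 2 + EPS))
        l_v1 = r_wiou * l_iou  # Wise-IoU v1

        # Update the running mean of L_IoU, then compute the outlier degree beta
        self.iou_loss_mean = (1 - self.momentum) * self.iou_loss_mean + self.momentum * l_iou.mean()
        beta = l_iou / (self.iou_loss_mean + EPS)

        # Non-monotonic focusing coefficient r = beta / (delta * alpha ** (beta - delta))
        r = beta / (self.delta * self.alpha ** (beta - self.delta))
        return r * l_v1  # per-box Wise-IoU v3 loss
```

A batch loss would be `WiseIoUv3()(pred, gt).mean()`. Because the helper keeps a running mean, the same instance should be reused across batches. When β equals δ the coefficient r is exactly 1.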

2.2.3. Training Environment and Evaluation Metrics
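Object detectors of this kind are conventionally evaluated with the following metrics:

- precision, P = TP/(TP + FP);
- recall, R = TP/(TP + FN);
- average precision (AP), the area under the precision-recall curve for one class;
- mAP, the mean of AP over classes (13 here), reported either at an IoU threshold of 0.5 (mAP50) or averaged over the thresholds 0.50:0.05:0.95 (mAP50-95).

The function below is a sketch of the AP step for one class, using all-point (continuous) interpolation. It assumes that detections have already been matched to ground truth at a chosen IoU threshold and sorted by descending confidence. The function name is hypothetical.

```python
import numpy as np


def average_precision(tp_flags, n_gt: int) -> float:
    """AP for one class.

    tp_flags: 1/0 per detection, sorted by descending confidence, where 1 means
              the detection matched a not-yet-claimed ground-truth box.
    n_gt:     number of ground-truth boxes of this class.
    """
    flags = np.asarray(tp_flags, dtype=float)
    tp = np.cumsum(flags)
    fp = np.cumsum(1.0 - flags)
    recall = tp / max(n_gt, 1)
    precision = tp / np.maximum(tp + fp, 1e-9)

    # Add sentinels, then make precision monotonically non-increasing (envelope)
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([1.0], precision, [0.0]))
    mpre = np.flip(np.maximum.accumulate(np.flip(mpre)))

    # Sum rectangle areas wherever recall changes
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


print(average_precision([1, 0, 1], n_gt=2))
```

In the example, the three ranked detections give recall (0.5, 0.5, 1.0) and precision (1, 0.5, 2/3), so AP = 0.5 × 1 + 0.5 × 2/3 ≈ 0.833. mAP50-95 repeats the matching and AP computation for each of the ten IoU thresholds and averages the results.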

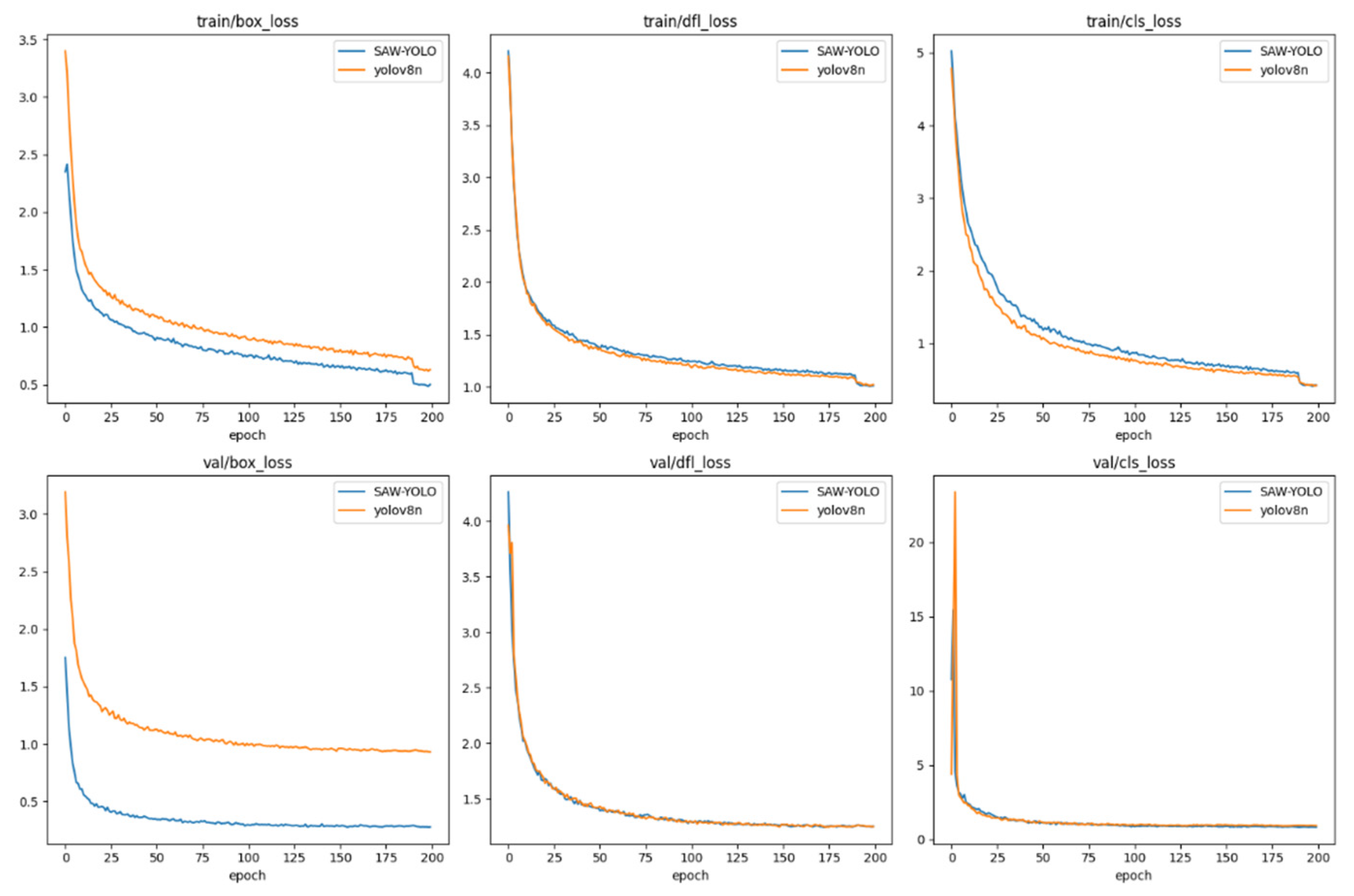

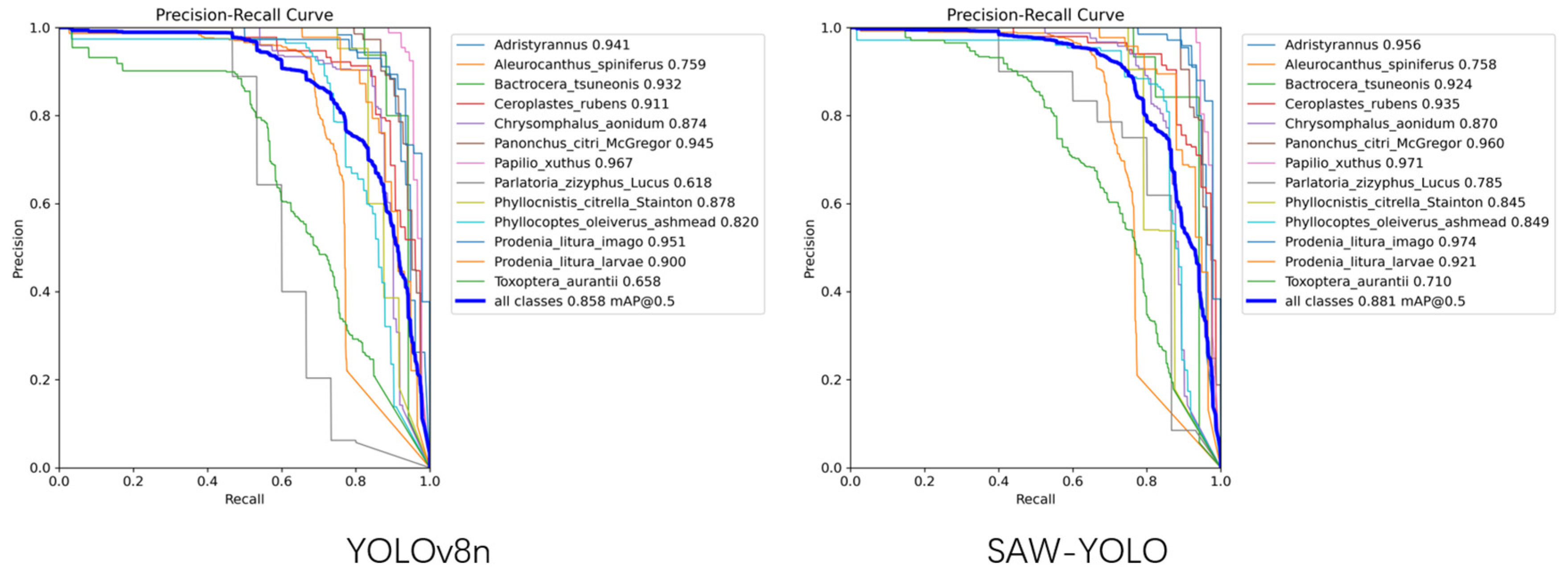

3. Results and Discussion

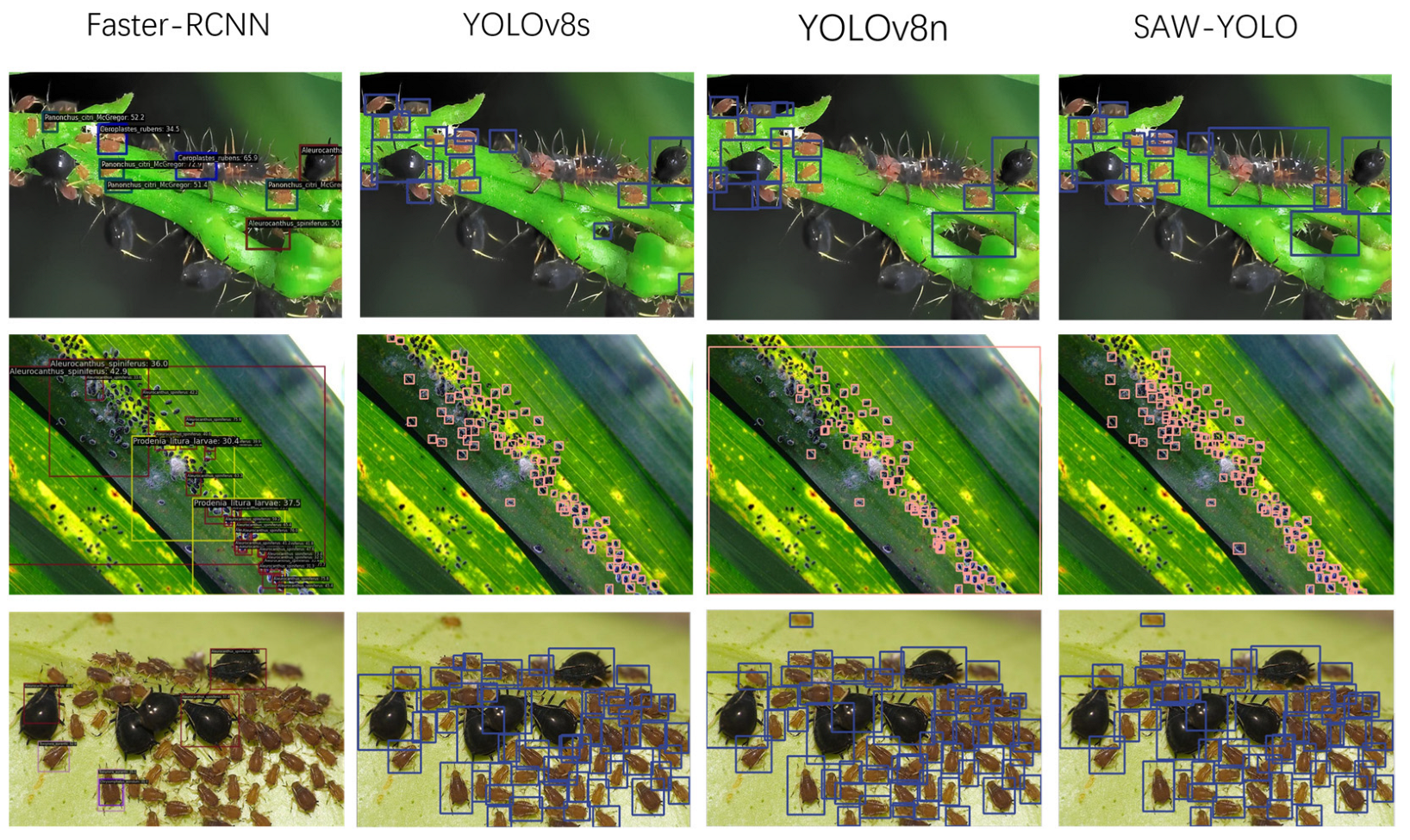

3.1. Comparison with Mainstream Models

Accuracy Comparison

3.2. Ablation Experiments

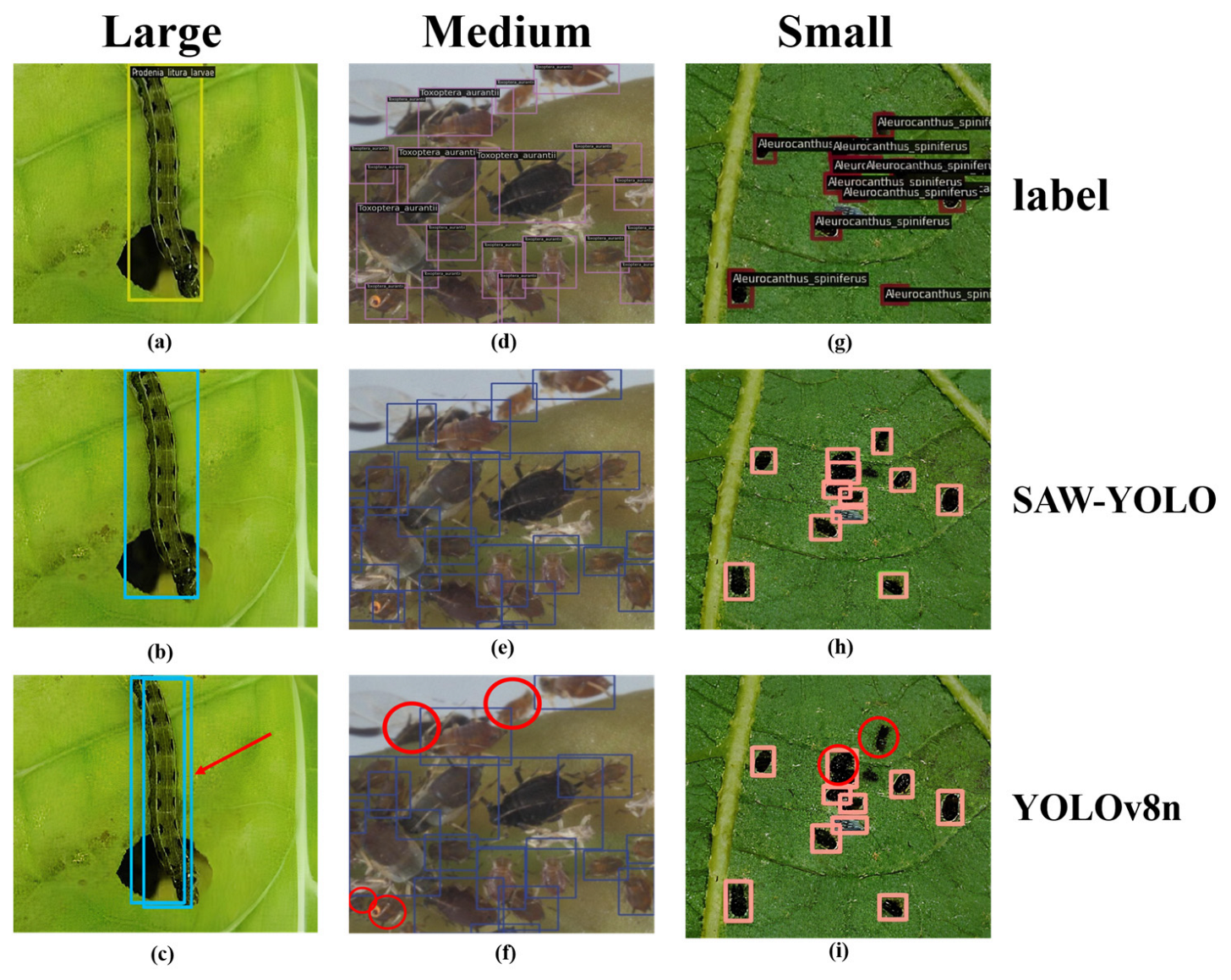

3.3. Performance of Multi-Scale Object Detection
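The Small, Medium and Large groupings appear to follow the COCO convention; the COCO paper is among the references. Under that convention an object is small if its area is below 32 × 32 pixels, medium up to 96 × 96 pixels, and large above that. The helper below assumes that axis-aligned box area in image pixels is used. The function name and the treatment of values exactly on a threshold are assumptions, since evaluation tools differ on boundary handling.

```python
def size_category(x1: float, y1: float, x2: float, y2: float) -> str:
    """Bucket a box by pixel area using COCO-style thresholds (assumed)."""
    area = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


print(size_category(0, 0, 20, 20), size_category(0, 0, 50, 50), size_category(0, 0, 100, 100))
```

Per-size AP is then computed exactly as overall AP, restricted to the ground-truth boxes (and matching detections) of one size bucket.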

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Van Der Ploeg, J.D. Peasant-Driven Agricultural Growth and Food Sovereignty. J. Peasant. Stud. 2014, 41, 999–1030.

- Fennell, J.T.; Fountain, M.T.; Paul, N.D. Direct Effects of Protective Cladding Material on Insect Pests in Crops. Crop Prot. 2019, 121, 147–156.

- Chen, K.; Tian, Z.; He, H.; Long, C.; Jiang, F. Bacillus Species as Potential Biocontrol Agents against Citrus Diseases. Biol. Control 2020, 151, 104419.

- Qiang, J. Detection of Citrus Pests in Double Backbone Network Based on Single Shot Multibox Detector. Comput. Electron. Agric. 2023, 212, 108158.

- Li, W.; Zheng, T.; Yang, Z.; Li, M.; Sun, C.; Yang, X. Classification and Detection of Insects from Field Images Using Deep Learning for Smart Pest Management: A Systematic Review. Ecol. Inform. 2021, 66, 101460.

- Preti, M.; Verheggen, F.; Angeli, S. Insect Pest Monitoring with Camera-Equipped Traps: Strengths and Limitations. J. Pest Sci. 2021, 94, 203–217.

- Dargan, S.; Kumar, M.; Ayyagari, M.R.; Kumar, G. A Survey of Deep Learning and Its Applications: A New Paradigm to Machine Learning. Arch. Computat. Methods Eng. 2020, 27, 1071–1092.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. arXiv 2018, arXiv:1703.06870.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer International Publishing: Heidelberg, Germany, 2016; Volume 9905, pp. 21–37.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Computer Vision–ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Heidelberg, Germany, 2020; Volume 12346, pp. 213–229. ISBN 978-3-030-58451-1.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. Available online: https://arxiv.org/abs/2103.14030v2 (accessed on 20 March 2023).

- Song, H.; Sun, D.; Chun, S.; Jampani, V.; Han, D.; Heo, B.; Kim, W.; Yang, M.-H. ViDT: An Efficient and Effective Fully Transformer-Based Object Detector. arXiv 2021, arXiv:2110.03921.

- Islam, S.; Elmekki, H.; Elsebai, A.; Bentahar, J.; Drawel, N.; Rjoub, G.; Pedrycz, W. A Comprehensive Survey on Applications of Transformers for Deep Learning Tasks. Expert Syst. Appl. 2024, 241, 122666.

- Du, X.; Cheng, H.; Ma, Z.; Lu, W.; Wang, M.; Meng, Z.; Jiang, C.; Hong, F. DSW-YOLO: A Detection Method for Ground-Planted Strawberry Fruits under Different Occlusion Levels. Comput. Electron. Agric. 2023, 214, 108304.

- Betti Sorbelli, F.; Palazzetti, L.; Pinotti, C.M. YOLO-Based Detection of Halyomorpha halys in Orchards Using RGB Cameras and Drones. Comput. Electron. Agric. 2023, 213, 108228.

- Yang, Y.; Xiao, Y.; Chen, Z.; Tang, D.; Li, Z.; Li, Z. FCBTYOLO: A Lightweight and High-Performance Fine Grain Detection Strategy for Rice Pests. IEEE Access 2023, 11, 101286–101295.

- Jia, X.; Jiang, X.; Li, Z.; Mu, J.; Wang, Y.; Niu, Y. Application of Deep Learning in Image Recognition of Citrus Pests. Agriculture 2023, 13, 1023.

- Syed-Ab-Rahman, S.F.; Hesamian, M.H.; Prasad, M. Citrus Disease Detection and Classification Using End-to-End Anchor-Based Deep Learning Model. Appl. Intell. 2022, 52, 927–938.

- Dai, F.; Wang, F.; Yang, D.; Lin, S.; Chen, X.; Lan, Y.; Deng, X. Detection Method of Citrus Psyllids With Field High-Definition Camera Based on Improved Cascade Region-Based Convolution Neural Networks. Front. Plant Sci. 2022, 12, 816272.

- Sunkara, R.; Luo, T. No More Strided Convolutions or Pooling: A New CNN Building Block for Low-Resolution Images and Small Objects; Springer Nature: Cham, Switzerland, 2022.

- Du, Y.; Zhong, S.; Fang, H.; Wang, N.; Liu, C.; Wu, D.; Sun, Y.; Xiang, M. Modeling Automatic Pavement Crack Object Detection and Pixel-Level Segmentation. Autom. Constr. 2023, 150, 104840.

- Zhu, L.; Wang, X.; Ke, Z.; Zhang, W.; Lau, R. BiFormer: Vision Transformer with Bi-Level Routing Attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

- Ding, X.; Zhang, X.; Han, J.; Ding, G. Diverse Branch Block: Building a Convolution as an Inception-like Unit. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

- Jocher, G. Ultralytics YOLO (Version 8.0.0) [Computer Software]. Available online: https://github.com/ultralytics (accessed on 10 January 2024).

- Wu, X.; Zhan, C.; Lai, Y.-K.; Cheng, M.-M.; Yang, J. IP102: A Large-Scale Benchmark Dataset for Insect Pest Recognition. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, June 2019; IEEE: New York, NY, USA, 2019; pp. 8779–8788.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision–ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Heidelberg, Germany, 2014; Volume 8693, pp. 740–755. ISBN 978-3-319-10601-4.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Jocher, G. YOLOv5 by Ultralytics (Version 7.0) [Computer Software]. 2020. Available online: https://zenodo.org/records/7347926 (accessed on 22 November 2022).

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430.

- Tong, Z.; Chen, Y.; Xu, Z.; Yu, R. Wise-IoU: Bounding Box Regression Loss with Dynamic Focusing Mechanism. arXiv 2023, arXiv:2301.10051.

- Tishby, N.; Zaslavsky, N. Deep Learning and the Information Bottleneck Principle. In IEEE Information Theory Workshop (ITW); IEEE: New York, NY, USA, 2015.

- Wang, C.-Y.; Yeh, I.-H.; Liao, H.-Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

- Wang, C.; He, W.; Nie, Y.; Guo, J.; Liu, C.; Han, K.; Wang, Y. Gold-YOLO: Efficient Object Detector via Gather-and-Distribute Mechanism. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023.

| Class | Species Name (Average Length, mm) | Training Samples | Validation Samples | Test Samples |
|---|---|---|---|---|
| 1 | Adristyrannus (96–106) | 277 | 76 | 44 |
| 2 | Aleurocanthus spiniferus (1–1.3) | 2164 | 596 | 365 |
| 3 | Bactrocera tsuneonis (9.9–12) | 104 | 17 | 20 |
| 4 | Ceroplastes rubens (1–2.5) | 236 | 75 | 22 |
| 5 | Chrysomphalus aonidum | 773 | 122 | 79 |
| 6 | Panonychus citri McGregor (1.5–2) | 346 | 83 | 26 |
| 7 | Papilio xuthus (3–40) | 290 | 90 | 48 |
| 8 | Parlatoria zizyphus Lucas (1.5–2) | 63 | 15 | 7 |
| 9 | Phyllocnistis citrella Stainton (0.5–4) | 62 | 24 | 15 |
| 10 | Phyllocoptes olives Ashmead (0.1–1) | 242 | 123 | 32 |
| 11 | Prodenia litura imago (14–20) | 133 | 46 | 19 |
| 12 | Prodenia litura larvae (14–40) | 225 | 58 | 36 |
| 13 | Toxoptera aurantii (0.2–0.5) | 552 | 264 | 75 |
| Small | | 1049 | 334 | 155 |
| Medium | | 2432 | 612 | 336 |
| Large | | 1986 | 643 | 297 |
| Total | | 5467 | 1589 | 788 |
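The per-class rows and the Small/Medium/Large rows of the table are two breakdowns of the same samples, and both sum to the Total row. The resulting split is close to 70/20/10. The short check below reproduces that arithmetic using only the table's values.

```python
# Per-class (training, validation, test) sample counts from the table, keyed by class index
class_counts = {
    1: (277, 76, 44), 2: (2164, 596, 365), 3: (104, 17, 20), 4: (236, 75, 22),
    5: (773, 122, 79), 6: (346, 83, 26), 7: (290, 90, 48), 8: (63, 15, 7),
    9: (62, 24, 15), 10: (242, 123, 32), 11: (133, 46, 19), 12: (225, 58, 36),
    13: (552, 264, 75),
}
# The same samples grouped by target size (Small / Medium / Large rows)
size_counts = {"Small": (1049, 334, 155), "Medium": (2432, 612, 336), "Large": (1986, 643, 297)}

# Column sums: zip(*rows) regroups the tuples into per-split columns
totals = tuple(sum(col) for col in zip(*class_counts.values()))
size_totals = tuple(sum(col) for col in zip(*size_counts.values()))
grand_total = sum(totals)
split_pct = [round(100 * n / grand_total, 1) for n in totals]

print(totals, size_totals, grand_total, split_pct)
# (5467, 1589, 788) (5467, 1589, 788) 7844 [69.7, 20.3, 10.0]
```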

| Models |  |  |  |  |
|---|---|---|---|---|
| SwinTransformer | 72.9 | 47.2 | 60.6 | 68.752 |
| SSD | 69.7 | 44.4 | 54.7 | 25.35 |
| Faster-RCNN | 78.3 | 45.8 | 55.5 | 51.753 |
| YOLOv3 | 89.1 | 76.1 | 78.1 | 61.588 |
| YOLOv5n | 87.5 | 70.2 | 79.2 | 3.247 |
| YOLOv6n | 88.3 | 72.5 | 78.7 | 4.239 |
| YOLOv8n | 87.0 | 71.1 | 77.9 | 3.157 |
| YOLOv8s | 89.4 | 75.7 | 79.5 | 11.141 |
| YOLOx-s | 81.2 | 63.2 | 64.3 | 8.942 |
| SAW-YOLO | 90.3 | 74.3 | 80.5 | 4.58 |

| Methods | SPDM | AFFD | WIoUv3 |  |  |  |  |  |
|---|---|---|---|---|---|---|---|---|
| YOLOv8 | | | | 87.0 | 71.1 | 3.16 | 120.8 | 6.2M |
| YOLOv8+SPDM | √ | | | 88.4 (+1.4) | 72.8 (+1.7) | 4.19 | 117.7 | 8.2M |
| YOLOv8+AFFD | | √ | | 87.3 (+0.3) | 71.2 (+0.1) | 3.43 | 81.9 | 6.9M |
| YOLOv8+WIoUv3 | | | √ | 88.6 (+1.6) | 72.1 (+1.0) | 3.16 | 124.8 | 6.2M |
| YOLOv8+S+A | √ | √ | | 89.1 (+2.1) | 73.7 (+2.6) | 4.58 | 81.8 | 8.8M |
| YOLOv8+S+W | √ | | √ | 89.3 (+2.3) | 73.1 (+2.0) | 4.19 | 98.4 | 8.2M |
| YOLOv8+A+W | | √ | √ | 88.2 (+1.2) | 72.5 (+1.4) | 3.43 | 89.0 | 6.9M |
| SAW-YOLO | √ | √ | √ | 90.3 (+3.3) | 74.3 (+3.2) | 4.58 | 82.9 | 8.8M |
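In the first two metric columns of the ablation table, the bracketed values are absolute gains, in percentage points, over the baseline YOLOv8 row. The following recomputes those gains from the table values as a consistency check.

```python
# First two metric columns of the ablation table
baseline = (87.0, 71.1)  # YOLOv8 row
variants = {
    "YOLOv8+SPDM": (88.4, 72.8),
    "YOLOv8+AFFD": (87.3, 71.2),
    "YOLOv8+WIoUv3": (88.6, 72.1),
    "YOLOv8+S+A": (89.1, 73.7),
    "YOLOv8+S+W": (89.3, 73.1),
    "YOLOv8+A+W": (88.2, 72.5),
    "SAW-YOLO": (90.3, 74.3),
}

# Gain over the baseline, rounded to one decimal as in the table
gains = {
    name: tuple(round(v - b, 1) for v, b in zip(vals, baseline))
    for name, vals in variants.items()
}
print(gains["SAW-YOLO"])  # (3.3, 3.2)
```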

| Models |  |  |  |  |
|---|---|---|---|---|
| SwinTransformer | 31.3 | 43.4 | 53.0 | 68.752 |
| Faster-RCNN | 26.3 | 41.9 | 51.4 | 51.753 |
| YOLOv3 | 47.9 | 68.48 | 85.0 | 61.588 |
| YOLOv5n | 28.6 | 70.1 | 80.6 | 3.247 |
| YOLOv6n | 41.6 | 66.7 | 81.7 | 4.239 |
| YOLOx-s | 30.6 | 51.1 | 63.0 | 8.942 |
| YOLOv8n | 37.8 | 60.1 | 76.0 | 3.157 |
| YOLOv8s | 43.0 | 70.3 | 84.5 | 11.141 |
| SAW-YOLO | 45.3 | 70.8 | 77.4 | 4.58 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, X.; Liang, J.; Yang, Y.; Li, Z.; Jia, X.; Pu, H.; Zhu, P. SAW-YOLO: A Multi-Scale YOLO for Small Target Citrus Pests Detection. Agronomy 2024, 14, 1571. https://doi.org/10.3390/agronomy14071571
