A Reinforcement Learning-Driven UAV-Based Smart Agriculture System for Extreme Weather Prediction

Abstract

1. Introduction

- Enhanced multi-UAV path-planning and coordination efficiency: A reinforcement learning-based dynamic path-planning and coordination algorithm is proposed. By integrating geographical information, real-time UAV communication, and task priority evaluation, the flight paths and collaborative strategies of multiple UAVs are optimized, improving the geographical uniformity and timeliness of data acquisition.

- Multi-source data fusion for improved meteorological monitoring: A multi-source data collaborative fusion mechanism is designed, integrating real-time UAV-acquired data with satellite and ground station data. By leveraging edge–cloud collaborative computing, data complementarity and comprehensiveness are enhanced, thereby improving monitoring accuracy and support capabilities under complex meteorological conditions.

- Lightweight intelligent early warning model for real-time extreme weather detection: A lightweight intelligent early warning model is introduced. Model pruning and parameter optimization make the model deployable on UAVs, while edge inference acceleration improves real-time data processing, supporting timely and accurate extreme weather warnings.

The proposed framework was validated through experiments and field tests. The results show that the proposed method outperforms traditional approaches in data acquisition efficiency, monitoring accuracy, and early warning timeliness. This study provides a feasible solution for intelligent multi-UAV monitoring under complex meteorological conditions and lays a technological foundation for the further development of the low-altitude economy.
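The lightweighting step named in the third contribution can be illustrated with a small sketch. It assumes magnitude-based pruning followed by symmetric int8 quantization; the pruning criterion, the sparsity level, and the function names `magnitude_prune` and `quantize_int8` are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero out roughly the `sparsity` fraction of weights with the smallest magnitude.

    Assumption: unstructured magnitude pruning. Ties at the threshold are
    all pruned, so slightly more than the requested fraction may be removed.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(round(sparsity * weights.size))  # number of weights to remove
    if k == 0:
        return weights.copy()
    # The k-th smallest absolute value acts as the pruning threshold.
    threshold = np.partition(np.abs(weights).ravel(), k - 1)[k - 1]
    pruned = weights.copy()
    pruned[np.abs(pruned) <= threshold] = 0.0
    return pruned


def quantize_int8(weights: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor int8 quantization; returns (int8 values, scale)."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


# Hypothetical 2x2 weight matrix, used only to exercise the functions.
w = np.array([[0.5, -0.1], [0.05, -0.8]])
w_pruned = magnitude_prune(w, sparsity=0.5)
w_q, w_scale = quantize_int8(w_pruned)
print(w_pruned)
print(w_q, w_scale)
```

Multiplying the int8 values by `scale` recovers an approximation of the pruned weights; the zeros introduced by pruning survive quantization exactly.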

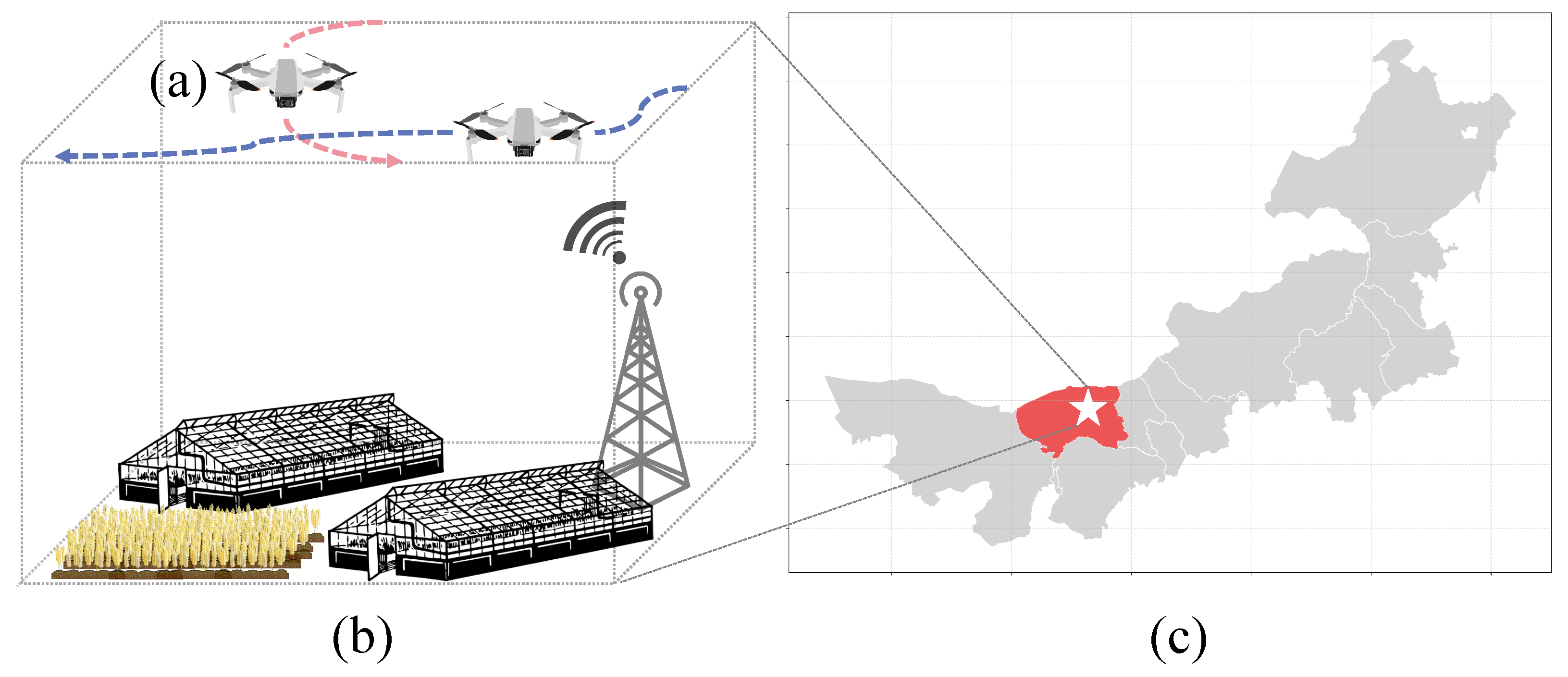

2. Materials and Methods

2.1. Materials Acquisition

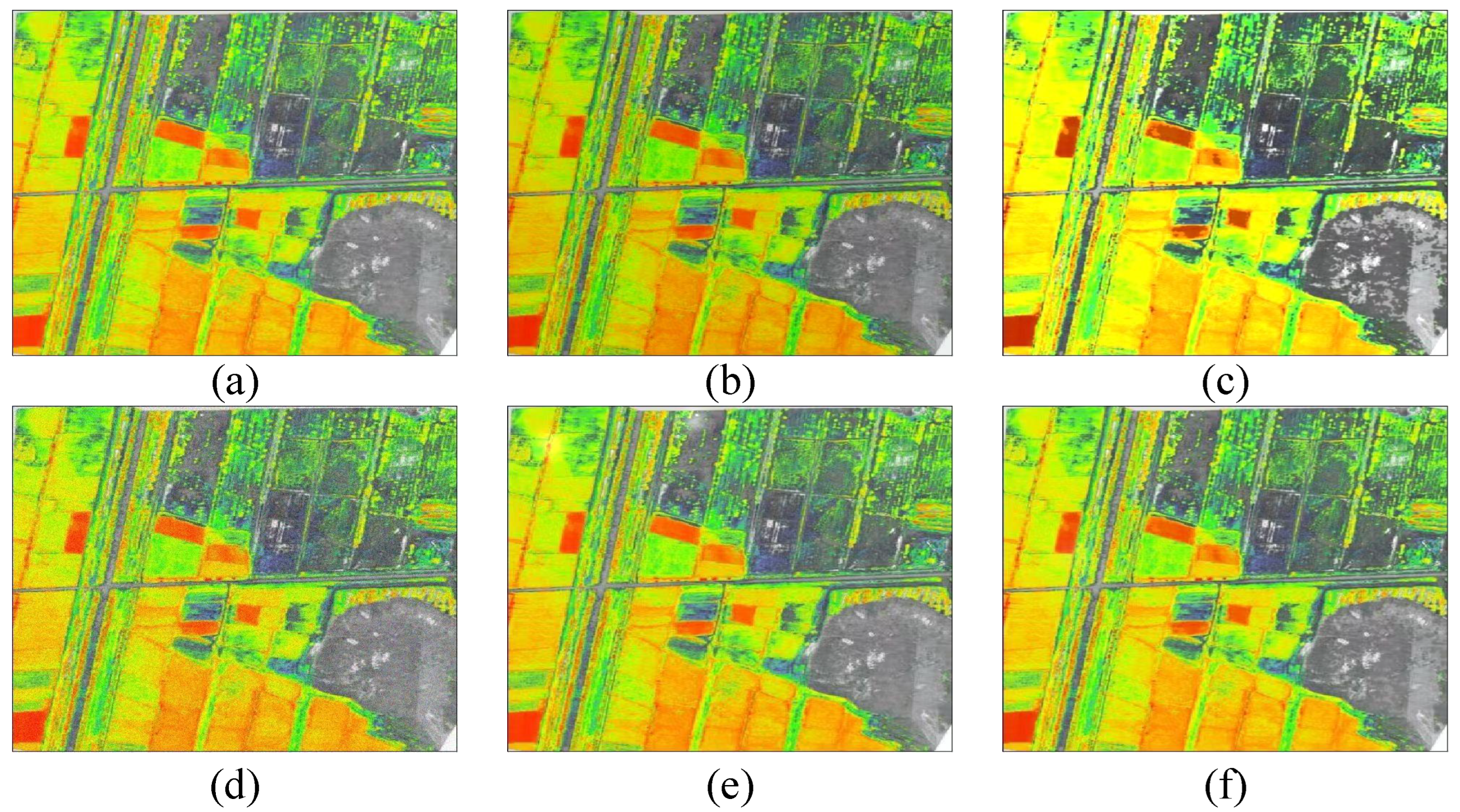

2.2. Data Preprocessing

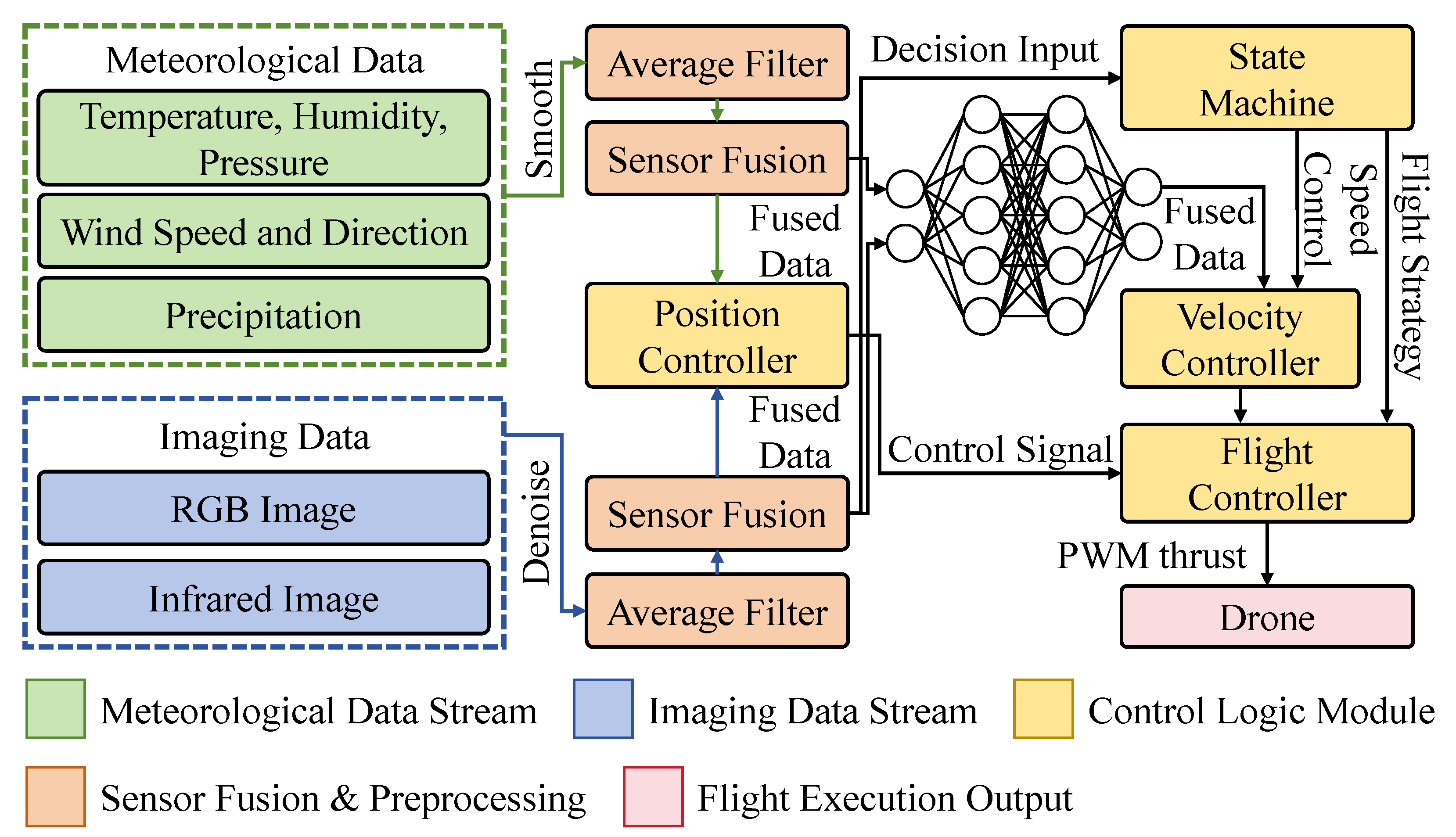

2.2.1. Sensor Data Preprocessing

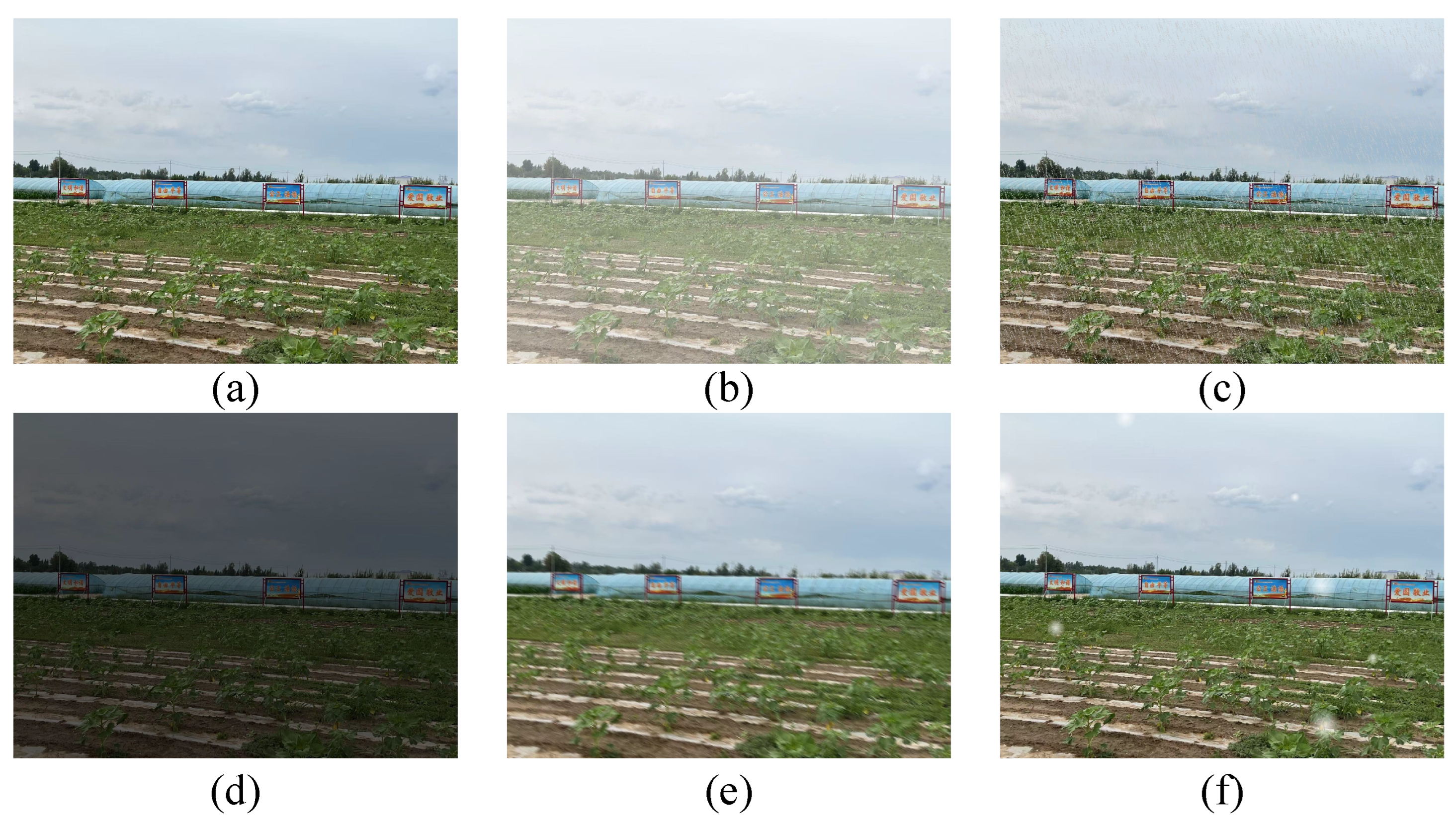

2.2.2. Image Data Preprocessing

2.3. Proposed Method

2.3.1. Overall

2.3.2. UAV Cruise Optimization Algorithm

2.3.3. Density-Aware Attention Mechanism

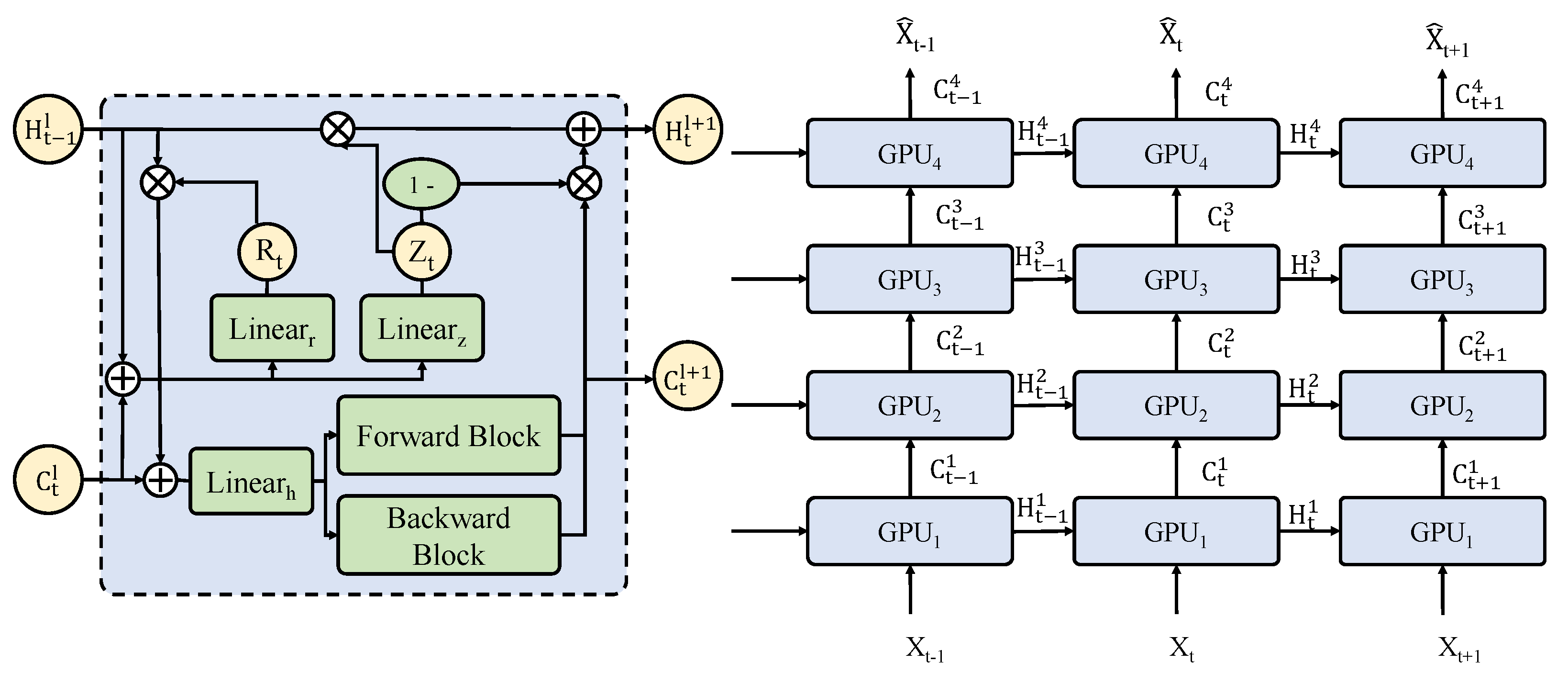

2.3.4. Lightweight Edge-Computing-Based Extreme Weather Early Warning Model

2.4. Experimental Setup

2.4.1. Hardware and Software Platform

2.4.2. Experimental Configuration

2.4.3. Evaluation Metrics

2.5. Baseline

3. Results and Discussion

3.1. Overall Performance of Different Models in Extreme Weather Prediction

3.2. Performance of Different Models in Various Extreme Weather Conditions

3.3. Reliability Testing of Results

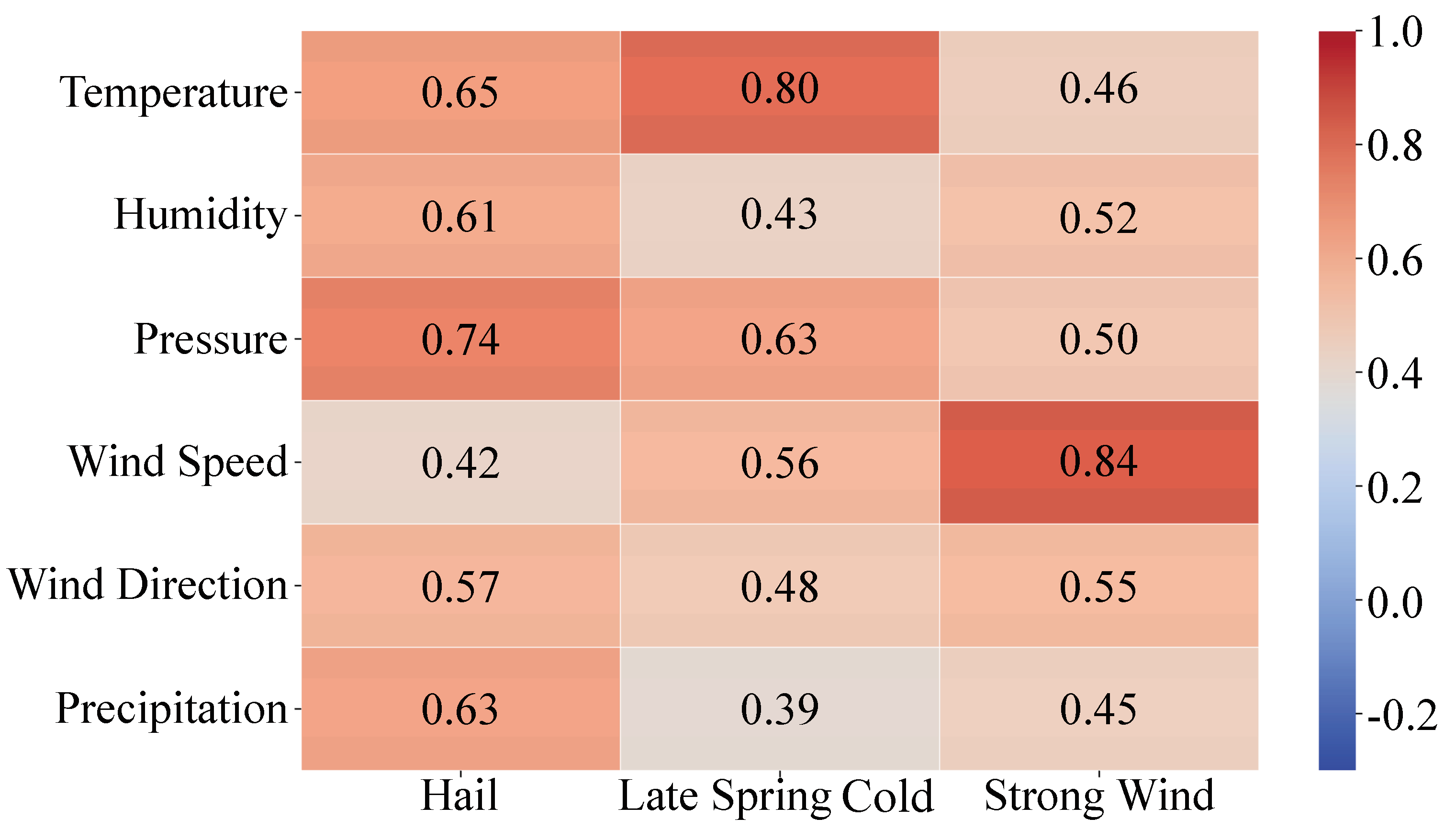

3.4. Correlation Between Different Meteorological Variables and Extreme Weather Events

3.5. Ablation Study on Different Attention Mechanisms

3.6. Ablation Study on Different Lightweighting Methods

3.7. Tests on Different Platforms

3.8. Limitations and Future Work

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Doornbos, J.; Bennin, K.E.; Babur, Ö.; Valente, J. Drone technologies: A tertiary systematic literature review on a decade of improvements. IEEE Access 2024, 12, 23220–23239.

2. Quero, C.O.; Martinez-Carranza, J. Unmanned aerial systems in search and rescue: A global perspective on current challenges and future applications. Int. J. Disaster Risk Reduct. 2025, 118, 105199.

3. Zhang, Y.; Wa, S.; Zhang, L.; Lv, C. Automatic plant disease detection based on tranvolution detection network with GAN modules using leaf images. Front. Plant Sci. 2022, 13, 875693.

4. Ullmann, I.; Bonfert, C.; Grathwohl, A.; Lahmeri, M.A.; Mustieles-Pérez, V.; Kanz, J.; Sterk, E.; Bormuth, F.; Ghasemi, R.; Fenske, P.; et al. Towards detecting climate change effects with UAV-borne imaging radars. IEEE J. Microwaves 2024, 4, 881–893.

5. Bayomi, N.; Fernandez, J.E. Eyes in the sky: Drones applications in the built environment under climate change challenges. Drones 2023, 7, 637.

6. Alvarez-Vanhard, E.; Corpetti, T.; Houet, T. UAV & satellite synergies for optical remote sensing applications: A literature review. Sci. Remote Sens. 2021, 3, 100019.

7. Ren, Z.; Zheng, H.; Chen, J.; Chen, T.; Xie, P.; Xu, Y.; Deng, J.; Wang, H.; Sun, M.; Jiao, W. Integrating UAV, UGV and UAV-UGV collaboration in future industrialized agriculture: Analysis, opportunities and challenges. Comput. Electron. Agric. 2024, 227, 109631.

8. Qu, C.; Sorbelli, F.B.; Singh, R.; Calyam, P.; Das, S.K. Environmentally-aware and energy-efficient multi-drone coordination and networking for disaster response. IEEE Trans. Netw. Serv. Manag. 2023, 20, 1093–1109.

9. Bello, A.B.; Navarro, F.; Raposo, J.; Miranda, M.; Zazo, A.; Álvarez, M. Fixed-wing UAV flight operation under harsh weather conditions: A case study in Livingston island glaciers, Antarctica. Drones 2022, 6, 384.

10. Sziroczak, D.; Rohacs, D.; Rohacs, J. Review of using small UAV based meteorological measurements for road weather management. Prog. Aerosp. Sci. 2022, 134, 100859.

11. Zhang, Y.; Wa, S.; Liu, Y.; Zhou, X.; Sun, P.; Ma, Q. High-accuracy detection of maize leaf diseases CNN based on multi-pathway activation function module. Remote Sens. 2021, 13, 4218.

12. McEnroe, P.; Wang, S.; Liyanage, M. A survey on the convergence of edge computing and AI for UAVs: Opportunities and challenges. IEEE Internet Things J. 2022, 9, 15435–15459.

13. Prasad, N.L.; Ramkumar, B. 3-D deployment and trajectory planning for relay based UAV assisted cooperative communication for emergency scenarios using Dijkstra’s algorithm. IEEE Trans. Veh. Technol. 2022, 72, 5049–5063.

14. Zhang, W.; Zhang, W. An efficient UAV localization technique based on particle swarm optimization. IEEE Trans. Veh. Technol. 2022, 71, 9544–9557.

15. Puente-Castro, A.; Rivero, D.; Pazos, A.; Fernandez-Blanco, E. A review of artificial intelligence applied to path planning in UAV swarms. Neural Comput. Appl. 2022, 34, 153–170.

16. Wang, J.; Zhao, Z.; Qu, J.; Chen, X. APPA-3D: An autonomous 3D path planning algorithm for UAVs in unknown complex environments. Sci. Rep. 2024, 14, 1231.

17. Nguyen, K.K.; Duong, T.Q.; Do-Duy, T.; Claussen, H.; Hanzo, L. 3D UAV trajectory and data collection optimisation via deep reinforcement learning. IEEE Trans. Commun. 2022, 70, 2358–2371.

18. Zhao, N.; Ye, Z.; Pei, Y.; Liang, Y.C.; Niyato, D. Multi-agent deep reinforcement learning for task offloading in UAV-assisted mobile edge computing. IEEE Trans. Wirel. Commun. 2022, 21, 6949–6960.

19. Box, G.E.; Jenkins, G.M.; Reinsel, G.C.; Ljung, G.M. Time Series Analysis: Forecasting and Control; John Wiley & Sons: Hoboken, NJ, USA, 2015.

20. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

21. Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. Adv. Neural Inf. Process. Syst. 2015, 28, 350.

22. Sønderby, C.K.; Espeholt, L.; Heek, J.; Dehghani, M.; Oliver, A.; Salimans, T.; Agrawal, S.; Hickey, J.; Kalchbrenner, N. MetNet: A neural weather model for precipitation forecasting. arXiv 2020, arXiv:2003.12140.

23. Venkatachalam, K.; Trojovský, P.; Pamucar, D.; Bacanin, N.; Simic, V. DWFH: An improved data-driven deep weather forecasting hybrid model using Transductive Long Short Term Memory (T-LSTM). Expert Syst. Appl. 2023, 213, 119270.

24. Fang, W.; Yuan, Z.; Wang, B. TMC-Net: A temporal multivariate correction network in temperature forecasting. Expert Syst. Appl. 2025, 274, 127015.

25. Ji, J.; He, J.; Lei, M.; Wang, M.; Tang, W. Spatio-temporal transformer network for weather forecasting. IEEE Trans. Big Data 2024, 11, 372–387.

| Parameter | Abbreviation | Measurement Unit |
|---|---|---|
| Temperature | Tair | °C |
| Humidity | Hum | % |
| Pressure | Pres | hPa |
| Wind speed | WS | m s⁻¹ |
| Wind direction | WD | ° |
| Precipitation | Prec | mm |
| Visible light image | Vis | - |
| Infrared thermal image | IR | - |
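The scalar parameters in the table above map naturally onto a typed record. The following is a minimal sketch of such a record with basic physical range checks; the class name `SensorRecord`, the field names, and the specific checks are assumptions made for illustration and are not taken from the paper's preprocessing pipeline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorRecord:
    """One scalar sensor sample, using the units listed in the parameter table."""

    tair_c: float    # Temperature (Tair), degrees Celsius
    hum_pct: float   # Relative humidity (Hum), percent
    pres_hpa: float  # Pressure (Pres), hPa
    ws_m_s: float    # Wind speed (WS), m/s
    wd_deg: float    # Wind direction (WD), degrees
    prec_mm: float   # Precipitation (Prec), mm

    def __post_init__(self) -> None:
        # Only physically impossible values are rejected here; plausibility
        # limits for a given region would be an additional assumption.
        if not 0.0 <= self.hum_pct <= 100.0:
            raise ValueError(f"humidity out of range: {self.hum_pct}")
        if not 0.0 <= self.wd_deg <= 360.0:
            raise ValueError(f"wind direction out of range: {self.wd_deg}")
        if self.ws_m_s < 0.0:
            raise ValueError(f"negative wind speed: {self.ws_m_s}")
        if self.prec_mm < 0.0:
            raise ValueError(f"negative precipitation: {self.prec_mm}")
        if self.pres_hpa <= 0.0:
            raise ValueError(f"non-positive pressure: {self.pres_hpa}")


# Hypothetical sample, used only to show construction.
sample = SensorRecord(tair_c=18.4, hum_pct=62.0, pres_hpa=1008.5,
                      ws_m_s=3.2, wd_deg=145.0, prec_mm=0.0)
print(sample)
```

The image modalities (Vis, IR) are left out of the record because the table gives them no unit; they would typically be stored as arrays alongside it.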

| Model | Precision | Recall | Accuracy | F1-Score |
|---|---|---|---|---|
| ARIMA [19] | 0.81 | 0.84 | 0.82 | 0.82 |
| LSTM [20] | 0.83 | 0.85 | 0.83 | 0.84 |
| METNet [22] | 0.85 | 0.86 | 0.84 | 0.85 |
| DWFH [23] | 0.85 | 0.87 | 0.86 | 0.86 |
| ConvLSTM [21] | 0.86 | 0.89 | 0.87 | 0.87 |
| TMC-Net [24] | 0.88 | 0.85 | 0.87 | 0.86 |
| STTN [25] | 0.91 | 0.89 | 0.90 | 0.90 |
| Proposed Method | 0.93 | 0.88 | 0.91 | 0.91 |
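As a quick sanity check on the table above, the F1-score can be recomputed from the reported precision and recall. The sketch below assumes the standard definition of F1 as the harmonic mean of precision and recall; the helper name `f1_score` and the `reported` dictionary are illustrative, and the numbers are copied from the table.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) as listed in the table above.
reported = {
    "ARIMA": (0.81, 0.84, 0.82),
    "LSTM": (0.83, 0.85, 0.84),
    "METNet": (0.85, 0.86, 0.85),
    "DWFH": (0.85, 0.87, 0.86),
    "ConvLSTM": (0.86, 0.89, 0.87),
    "TMC-Net": (0.88, 0.85, 0.86),
    "STTN": (0.91, 0.89, 0.90),
    "Proposed Method": (0.93, 0.88, 0.91),
}

# Absolute gap between the recomputed and the reported F1 for each model.
gaps = {name: abs(f1_score(p, r) - f1) for name, (p, r, f1) in reported.items()}

for name, (p, r, f1) in reported.items():
    print(f"{name:16s} recomputed F1 = {f1_score(p, r):.3f}  reported = {f1:.2f}")
```

Small gaps between recomputed and reported values are expected, since the inputs are already rounded to two decimals and the reported scores may be averaged over event classes.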

| Variable | Hail | Late Spring Cold | Strong Wind |
|---|---|---|---|
| Temperature | 0.65 | 0.80 | 0.46 |
| Humidity | 0.61 | 0.43 | 0.52 |
| Pressure | 0.74 | 0.63 | 0.50 |
| Wind Speed | 0.42 | 0.56 | 0.84 |
| Wind Direction | 0.57 | 0.48 | 0.55 |
| Precipitation | 0.63 | 0.39 | 0.45 |
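The table above reports a correlation between each meteorological variable and each event type, but this extract does not state which coefficient was used. The sketch below assumes a Pearson correlation between a continuous variable and a binary event indicator (equivalent to the point-biserial coefficient); the function name `event_correlation` and the sample readings are hypothetical.

```python
import numpy as np


def event_correlation(variable, event) -> float:
    """Pearson correlation between a continuous variable and a 0/1 event label."""
    x = np.asarray(variable, dtype=float)
    y = np.asarray(event, dtype=float)
    if x.shape != y.shape:
        raise ValueError("variable and event must have the same length")
    # A constant series has no defined correlation; report 0.0 instead of NaN.
    if x.std() == 0.0 or y.std() == 0.0:
        return 0.0
    return float(np.corrcoef(x, y)[0, 1])


# Hypothetical wind-speed readings (m/s) and strong-wind labels, not source data.
wind_speed = [3.1, 4.0, 12.5, 14.2, 2.8, 15.0, 5.5, 13.1]
strong_wind = [0, 0, 1, 1, 0, 1, 0, 1]

r = event_correlation(wind_speed, strong_wind)
print(f"correlation = {r:.2f}")
```

Because the table lists only positive values, the source may report magnitudes; taking `abs(r)` would reproduce that convention under the same assumption.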

| Attention Mechanism | Precision | Recall | Accuracy | F1-Score |
|---|---|---|---|---|
| Density-Aware Attention | 0.93 | 0.88 | 0.91 | 0.91 |
| Self-Attention | 0.86 | 0.82 | 0.85 | 0.84 |
| Channel Attention | 0.84 | 0.79 | 0.83 | 0.81 |
| Spatial Attention | 0.85 | 0.78 | 0.82 | 0.80 |
| Convolutional Block Attention | 0.83 | 0.77 | 0.81 | 0.79 |

| Platform | Model Version | Memory (MB) | Latency (ms) | FPS |
|---|---|---|---|---|
| Jetson Xavier NX | Original | 1032 | 36 | 21.8 |
| Jetson Xavier NX | Pruned + Quantized | 612 | 22 | 28.0 |
| Jetson Nano | Original | 896 | 82 | 4.1 |
| Jetson Nano | Pruned + Quantized | 544 | 47 | 13.7 |
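The effect of pruning and quantization in the table above is easier to compare across platforms as relative changes. The following sketch computes the memory reduction, latency reduction, and FPS gain for each platform from the tabulated values; the helper names and the `measurements` layout are illustrative assumptions.

```python
# (memory MB, latency ms, FPS) for each model version, copied from the table above.
measurements = {
    "Jetson Xavier NX": {"original": (1032, 36, 21.8), "compressed": (612, 22, 28.0)},
    "Jetson Nano": {"original": (896, 82, 4.1), "compressed": (544, 47, 13.7)},
}


def relative_reduction(before: float, after: float) -> float:
    """Fraction by which a quantity shrank, e.g. 0.4 means 40% lower."""
    return (before - after) / before


def summarize(data: dict) -> dict:
    """Per-platform memory reduction, latency reduction, and FPS gain factor."""
    summary = {}
    for platform, versions in data.items():
        mem0, lat0, fps0 = versions["original"]
        mem1, lat1, fps1 = versions["compressed"]
        summary[platform] = {
            "memory_reduction": relative_reduction(mem0, mem1),
            "latency_reduction": relative_reduction(lat0, lat1),
            "fps_gain": fps1 / fps0,
        }
    return summary


summary = summarize(measurements)
for platform, stats in summary.items():
    print(
        f"{platform}: memory -{stats['memory_reduction']:.1%}, "
        f"latency -{stats['latency_reduction']:.1%}, "
        f"FPS x{stats['fps_gain']:.2f}"
    )
```

On both platforms the compressed model uses roughly 40% less memory, while the FPS gain is much larger on the Jetson Nano than on the Xavier NX.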

| Model | Jetson Xavier NX | Jetson Nano | Huawei P50 | NVIDIA A100 |
|---|---|---|---|---|
| ARIMA | 39.1 | 21.5 | 18.2 | 122.4 |
| LSTM | 21.5 | 10.2 | 15.1 | 98.7 |
| METNet | 17.3 | 8.9 | 12.7 | 85.2 |
| DWFH | 19.6 | 9.4 | 13.8 | 89.5 |
| ConvLSTM | 14.8 | 6.3 | 11.4 | 73.2 |
| TMC-Net | 15.5 | 7.0 | 11.6 | 77.1 |
| Proposed Method | 28.0 | 13.7 | 20.4 | 94.5 |
| STTN | 12.6 | 5.8 | 10.9 | 67.4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hao, J.; Li, B.; Tang, W.; Liu, S.; Chang, Y.; Pan, J.; Tao, Y.; Lv, C. A Reinforcement Learning-Driven UAV-Based Smart Agriculture System for Extreme Weather Prediction. Agronomy 2025, 15, 964. https://doi.org/10.3390/agronomy15040964
