Evaluation of IPCC Models’ Performance in Simulating Late-Twentieth-Century Weather Patterns and Extreme Precipitation in Southeastern China

Abstract

1. Introduction

2. Data

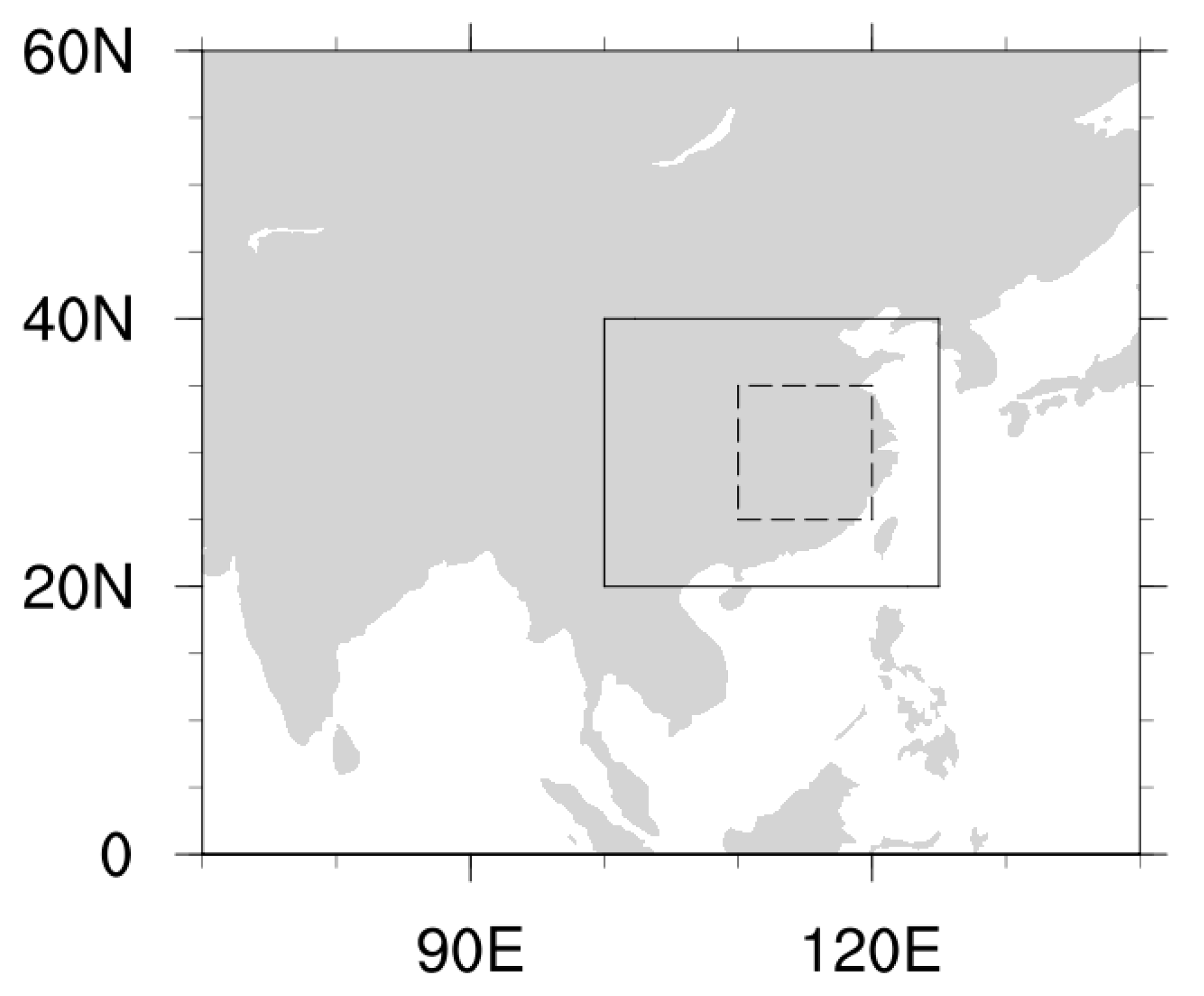

2.1. Analysis Domains

2.2. Validation Data

2.3. Model Output

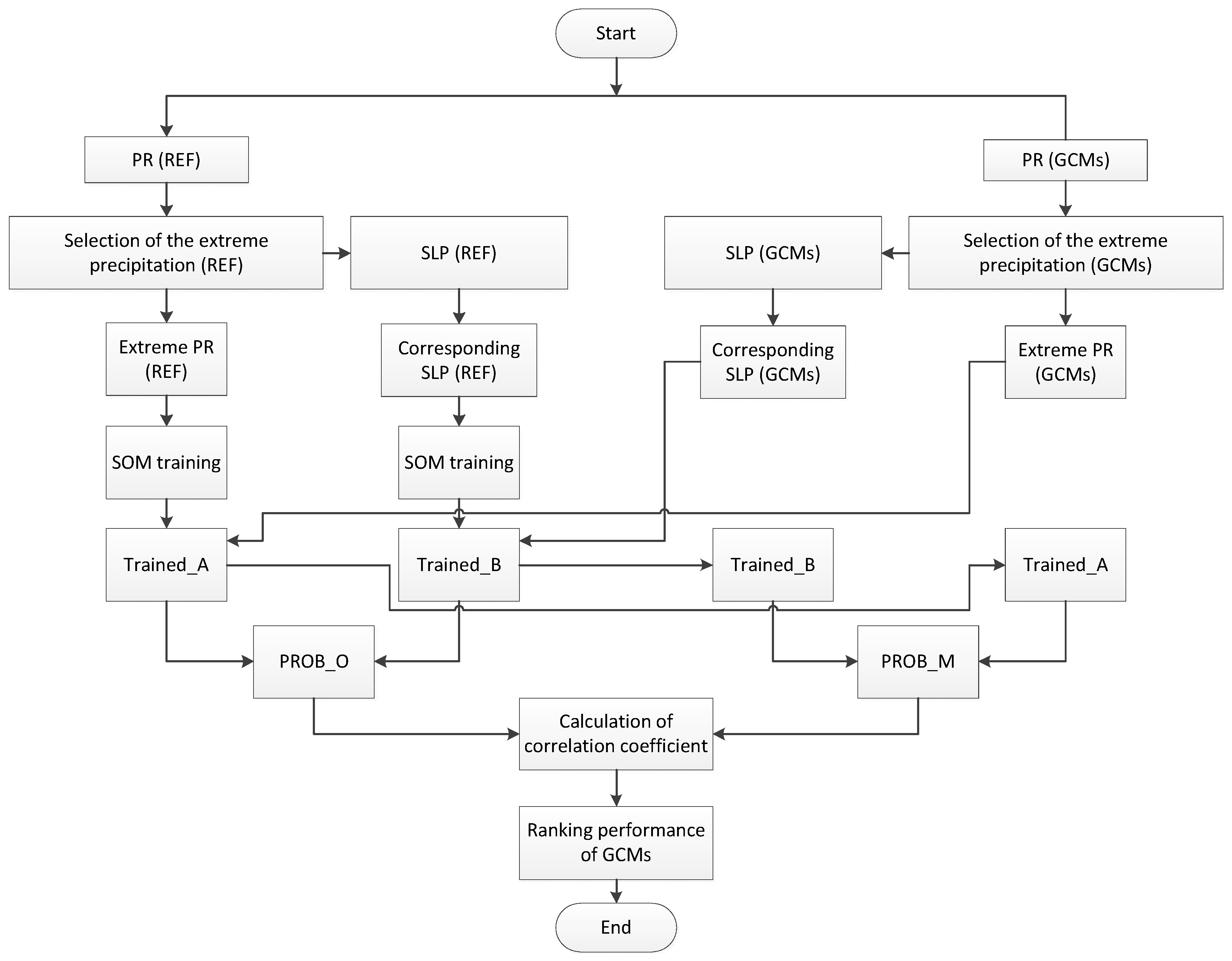

3. Methods

3.1. Classification of WT: Self-Organizing Maps (SOMs)
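As a rough illustration of how a self-organizing map (SOM) can turn a set of daily fields into weather types, here is a minimal pure-Python sketch. It is not the authors' code. The input vectors (for example, flattened daily MSLP anomaly fields), the linear learning-rate decay, the Gaussian neighbourhood and its shrinking radius are all assumptions, as are the helper names `train_som`, `classify` and `node_frequencies`. Each node's weight vector acts as one weather type, and each day is assigned to its best-matching node.

```python
import math
import random


def best_matching_unit(weights, x):
    """Grid position of the node whose weight vector is closest (Euclidean) to x."""
    return min(weights, key=lambda node: sum((w - v) ** 2 for w, v in zip(weights[node], x)))


def train_som(data, rows, cols, n_iter=2000, lr0=0.5, seed=0):
    """Train a rows x cols SOM on equal-length vectors (hypothetical helper).

    Returns a dict mapping grid positions (i, j) to weight vectors ("weather types").
    """
    rng = random.Random(seed)
    sigma0 = max(rows, cols) / 2.0
    # Initialise every node with a copy of a randomly drawn input sample.
    weights = {(i, j): list(rng.choice(data)) for i in range(rows) for j in range(cols)}
    for t in range(n_iter):
        frac = t / n_iter
        lr = lr0 * (1.0 - frac)                  # learning rate decays linearly towards 0
        sigma = max(sigma0 * (1.0 - frac), 0.5)  # neighbourhood radius shrinks, floored at 0.5
        x = rng.choice(data)
        bi, bj = best_matching_unit(weights, x)
        for (i, j), w in weights.items():
            # Gaussian neighbourhood on the SOM grid, centred on the best-matching unit.
            h = math.exp(-((i - bi) ** 2 + (j - bj) ** 2) / (2.0 * sigma ** 2))
            for k in range(len(w)):
                w[k] += lr * h * (x[k] - w[k])
    return weights


def classify(weights, data):
    """Assign each sample (day) to its best-matching node, i.e. its weather type."""
    return [best_matching_unit(weights, x) for x in data]


def node_frequencies(labels, rows, cols):
    """Relative frequency of each node; one quantity that can be compared between a GCM and reanalysis."""
    n = len(labels)
    return {(i, j): labels.count((i, j)) / n for i in range(rows) for j in range(cols)}
```

For example, with six toy "days" forming two well-separated groups, a 2 × 2 map places the two groups on different nodes:

```python
days = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [10.0, 10.0], [10.1, 10.0], [10.0, 10.1]]
som = train_som(days, 2, 2)
labels = classify(som, days)
freqs = node_frequencies(labels, 2, 2)
```

In a real application, the same trained map would be used to classify both the reanalysis days and the model days, so that node frequencies are directly comparable.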

3.2. Definition of Extreme Precipitation Patterns (EPPs)

3.3. Model Evaluation

3.4. Model Simulation Performance Ranking Metrics

- (a) Average correlation coefficient

- (b) Cumulative sum of the number of significant correlations

- (c) Comprehensive rating index
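Metrics (a) and (b) can be sketched in Python under several assumptions: each GCM supplies a set of paired series (model vs. reference, for example one pair per pattern), and correlation is Pearson's r. Significance is tested two-sided at α = 0.05 using the Fisher z-transform with a normal approximation rather than an exact t-test. Finally, the "cumulative sum" is taken across evaluation settings such as SOM sizes or reanalysis products. The function names are hypothetical, and metric (c) is not sketched because its definition is not given here.

```python
import math
from statistics import NormalDist, mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def is_significant(r, n, alpha=0.05):
    """Two-sided test of H0: rho = 0 via Fisher z (normal approximation, assumed here)."""
    r = max(min(r, 1.0 - 1e-12), -1.0 + 1e-12)  # keep atanh finite when |r| == 1
    z = math.atanh(r) * math.sqrt(n - 3)
    return abs(z) > NormalDist().inv_cdf(1.0 - alpha / 2.0)


def summarize(pairs, alpha=0.05):
    """pairs: list of (model_series, reference_series) for one GCM and one setting.

    Returns (average correlation coefficient, number of significant correlations).
    """
    rs = [pearson_r(m, o) for m, o in pairs]
    n_sig = sum(is_significant(r, len(o), alpha) for r, (_, o) in zip(rs, pairs))
    return mean(rs), n_sig


def cumulative_significant(settings, alpha=0.05):
    """Sum the significant-correlation counts over several evaluation settings."""
    return sum(summarize(pairs, alpha)[1] for pairs in settings)
```

A model whose series match the reference perfectly in one pair and are perfectly reversed in another gets an average r of 0 but two significant correlations. This shows why a count of significant correlations and an average coefficient can rank models differently.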

4. Results

4.1. Simulation of MSLP Temporal Patterns and PR Temporal Patterns

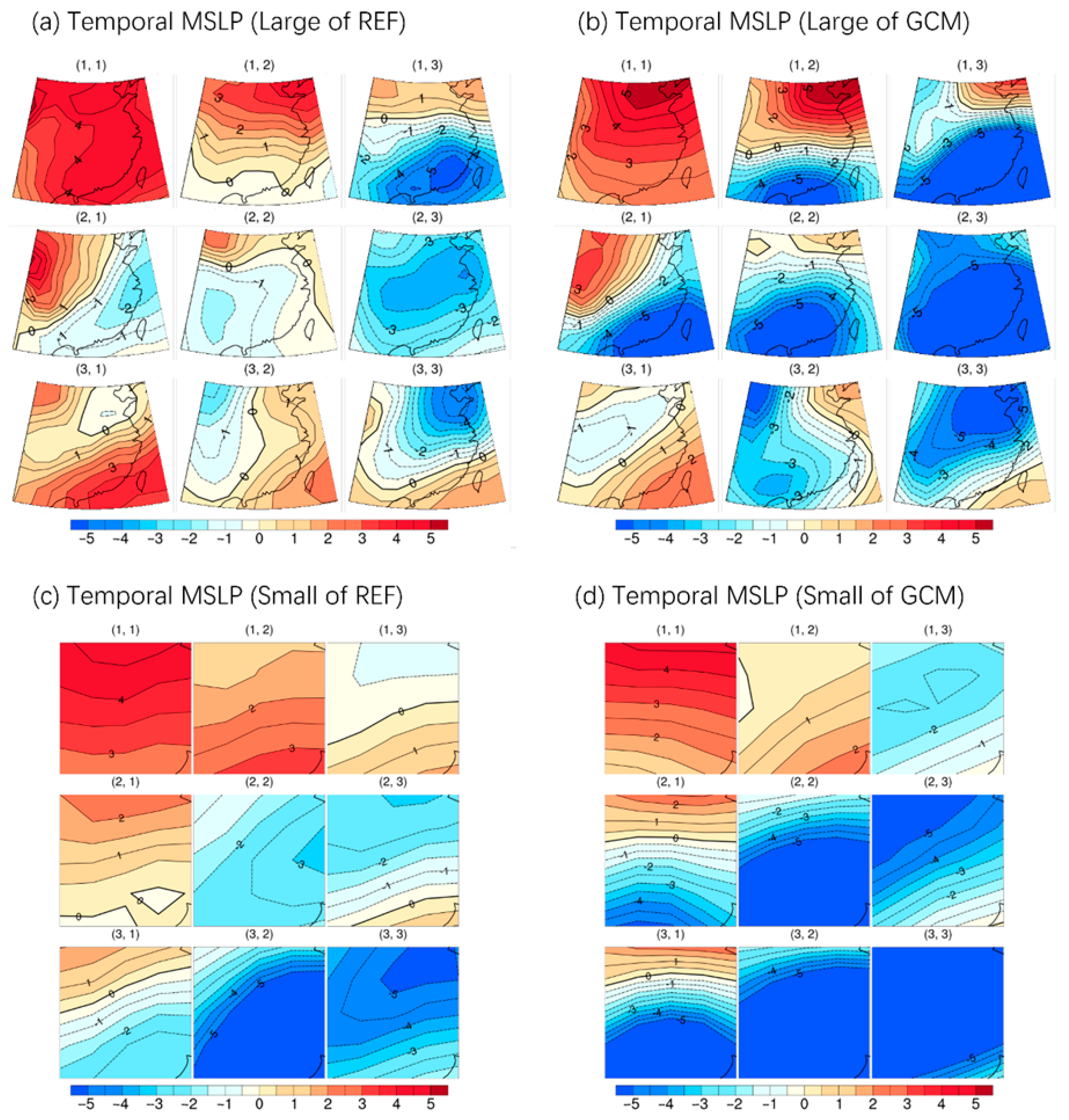

4.1.1. Characteristic Patterns of Mean Sea Level Pressure (MSLP)

4.1.2. PR Characteristic Patterns

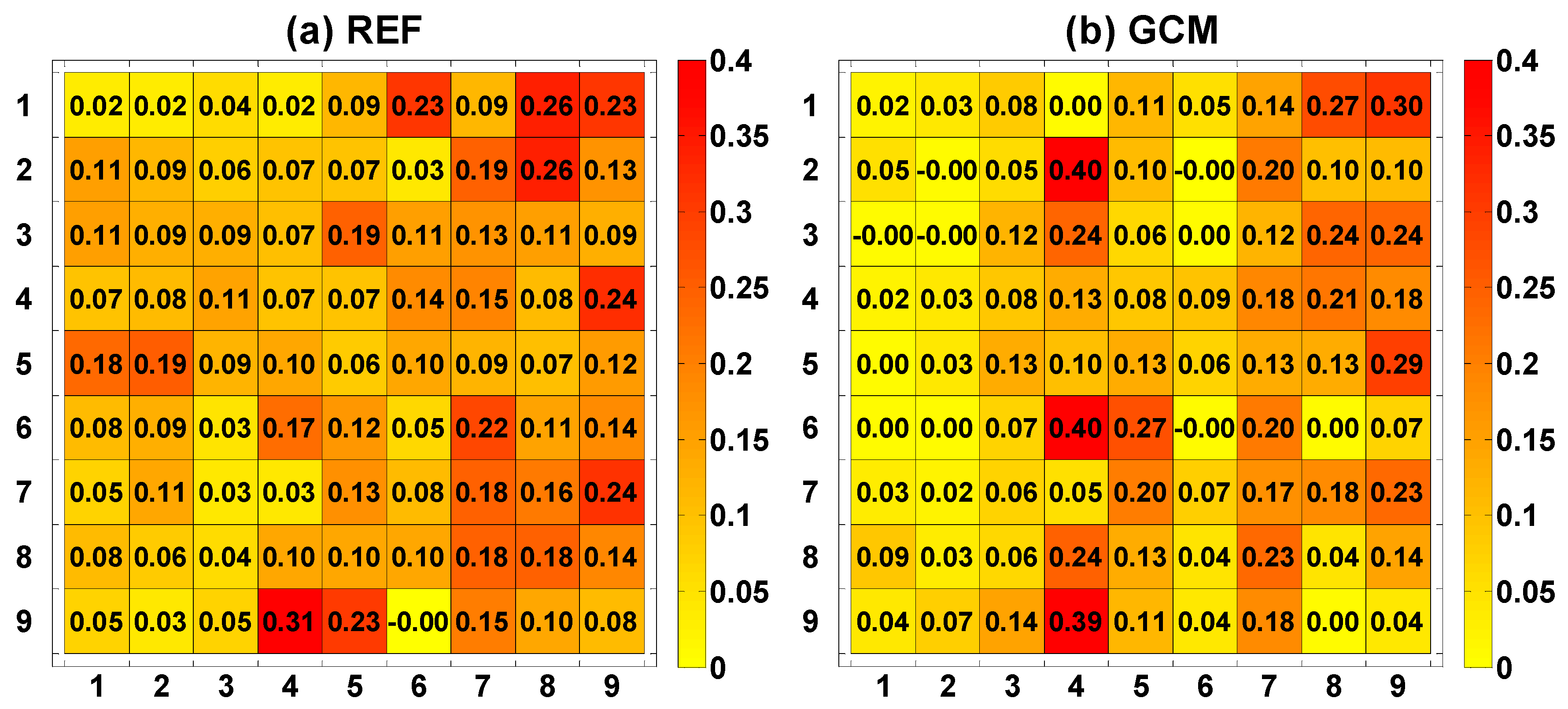

4.2. Simulation of Probability

4.3. Model Ranking

4.3.1. Impact of the Choice of Evaluation Indicators on the Assessment of Model Simulation Capacity

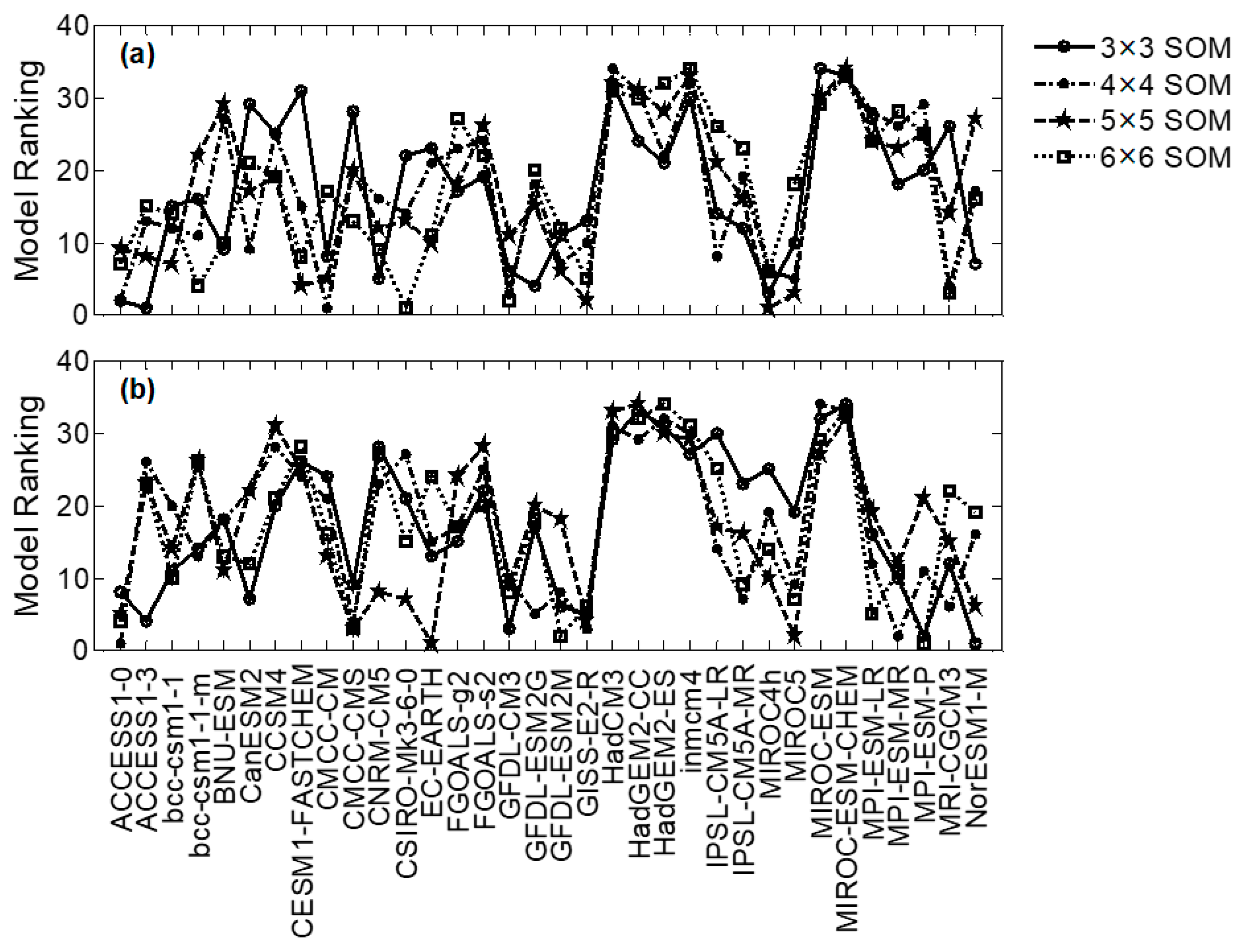

4.3.2. Impact of the Choice of SOM Sizes on the Assessment of Model Simulation Capability

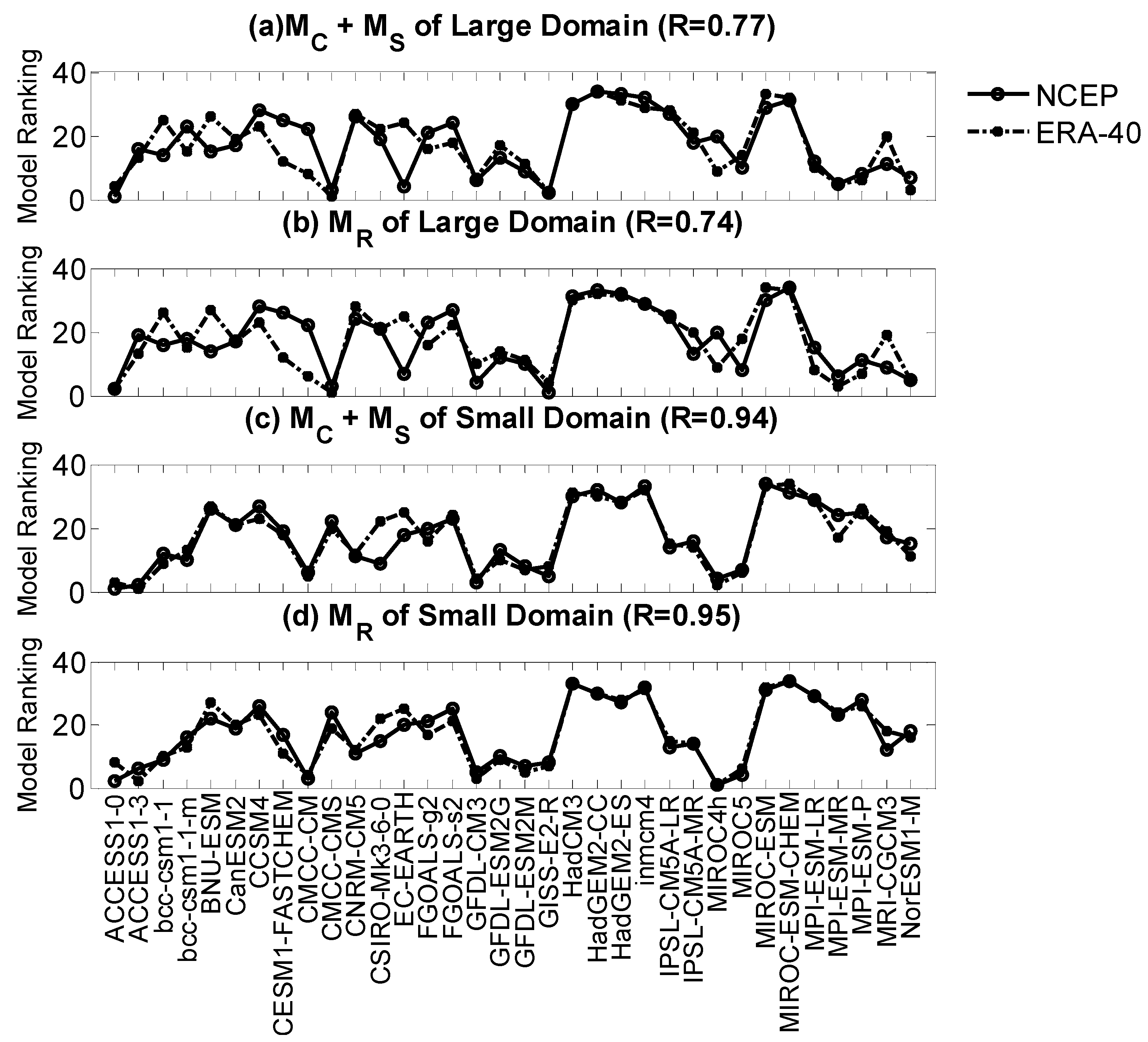

4.3.3. Impact of the Choice of Reanalysis Data on the Assessment of Model Simulation Capability

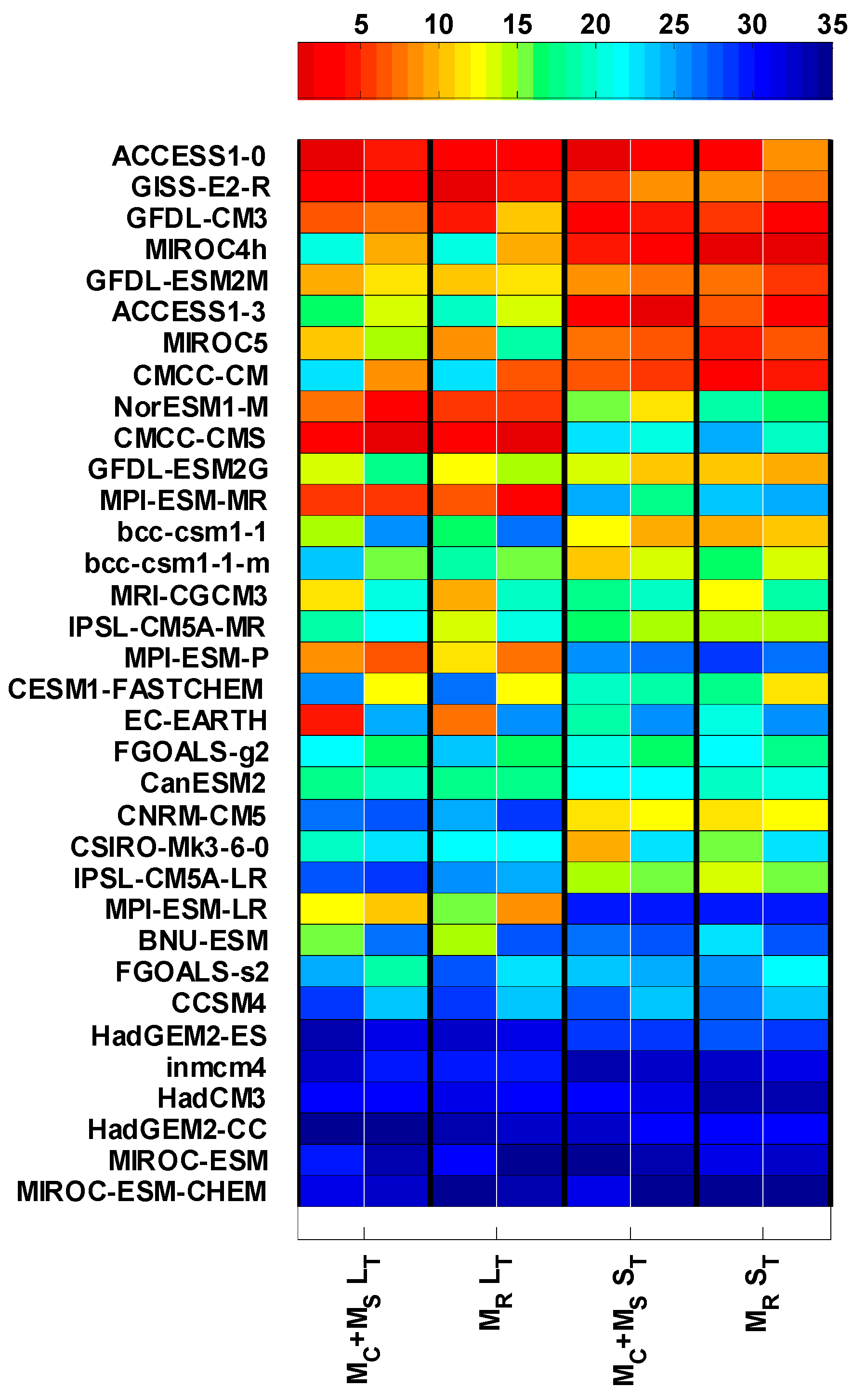

4.3.4. Model Ranking across All Measures

5. Discussion

6. Summary and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations


| GCMs | Institution | Resolution (lon × lat) |
|---|---|---|
| ACCESS1-0 | Commonwealth Scientific and Industrial Research Organisation and Bureau of Meteorology, Australia | 1.875° × 1.25° |
| ACCESS1-3 | Commonwealth Scientific and Industrial Research Organisation and Bureau of Meteorology, Australia | 1.875° × 1.25° |
| bcc-csm1-1 | Beijing Climate Center, China Meteorological Administration, China | 2.8° × ~2.8° |
| bcc-csm1-1-m | Beijing Climate Center, China Meteorological Administration, China | 1.125° × ~1.12° |
| BNU-ESM | Beijing Normal University, China | 2.8° × ~2.8° |
| CanESM2 | Canadian Centre for Climate Modelling and Analysis, Canada | 2.8° × ~2.8° |
| CCSM4 | National Center for Atmospheric Research (NCAR), USA | 1.25° × ~0.9° |
| CESM1-FASTCHEM | National Science Foundation/Department of Energy NCAR, USA | 1.25° × ~0.9° |
| CMCC-CM | Centro Euro-Mediterraneo per i Cambiamenti Climatici, Italy | 0.75° × ~0.75° |
| CMCC-CMS | Centro Euro-Mediterraneo per i Cambiamenti Climatici, Italy | 1.875° × ~1.875° |
| CNRM-CM5 | Centre National de Recherches Meteorologiques, Meteo-France, France | 1.4° × ~1.4° |
| CSIRO-Mk3-6-0 | Australian Commonwealth Scientific and Industrial Research Organization, Australia | 1.875° × ~1.875° |
| EC-EARTH | Royal Netherlands Meteorological Institute, The Netherlands | 1.125° × 1.125° |
| FGOALS-g2 | Institute of Atmospheric Physics, Chinese Academy of Sciences, China | 2.8° × ~1.65° |
| GFDL-CM3 | Geophysical Fluid Dynamics Laboratory, USA | 2.5° × 2.0° |
| GFDL-ESM2G | Geophysical Fluid Dynamics Laboratory, USA | 2.5° × ~2.0° |
| GFDL-ESM2M | Geophysical Fluid Dynamics Laboratory, USA | 2.5° × ~2.0° |
| GISS-E2-R | NASA Goddard Institute for Space Studies, USA | 2.5° × 2.0° |
| HadCM3 | Met Office Hadley Centre, UK | 3.75° × 2.5° |
| HadGEM2-CC | Met Office Hadley Centre, UK | 1.875° × 1.25° |
| HadGEM2-ES | Met Office Hadley Centre, UK | 1.875° × 1.25° |
| IPSL-CM5A-LR | Institut Pierre-Simon Laplace, France | 3.75° × ~1.895° |
| IPSL-CM5A-MR | Institut Pierre-Simon Laplace, France | 2.5° × ~1.27° |
| MIROC-ESM | Atmosphere and Ocean Research Institute (The University of Tokyo), National Institute for Environmental Studies, and Japan Agency for Marine-Earth Science and Technology (MIROC) | 2.8° × ~2.8° |
| MIROC-ESM-CHEM | AORI, NIES, JAMSTEC, Japan | 2.8° × ~2.8° |
| MIROC4h | AORI, NIES, JAMSTEC, Japan | ~0.56° × ~0.56° |
| MIROC5 | AORI, NIES, JAMSTEC, Japan | ~1.4° × 1.4° |
| MPI-ESM-LR | Max Planck Institute for Meteorology, Germany | 1.875° × ~1.875° |
| MPI-ESM-MR | Max Planck Institute for Meteorology, Germany | 1.875° × ~1.875° |
| MPI-ESM-P | Max Planck Institute for Meteorology, Germany | 1.875° × ~1.875° |
| MRI-CGCM3 | Meteorological Research Institute, Japan | 1.125° × ~1.125° |
| NorESM1-M | Norwegian Climate Centre, Norway | 2.5° × ~1.89° |

| GCM | Large: 3 × 3 (81) | Large: 4 × 4 (256) | Large: 5 × 5 (625) | Large: 6 × 6 (1296) | Small: 3 × 3 (81) | Small: 4 × 4 (256) | Small: 5 × 5 (625) | Small: 6 × 6 (1296) |
|---|---|---|---|---|---|---|---|---|
| ACCESS1-0 | 0.43 | 0.52 | 0.28 | 0.29 | 0.65 | 0.36 | 0.27 | 0.23 |
| ACCESS1-3 | 0.49 | 0.25 | 0.15 | 0.18 | 0.66 | 0.24 | 0.29 | 0.16 |
| bcc-csm1-1 | 0.42 | 0.30 | 0.20 | 0.23 | 0.32 | 0.25 | 0.29 | 0.16 |
| bcc-csm1-1-m | 0.39 | 0.34 | 0.12 | 0.15 | 0.29 | 0.26 | 0.21 | 0.28 |
| BNU-ESM | 0.36 | 0.32 | 0.22 | 0.21 | 0.36 | 0.05 | 0.05 | 0.17 |
| CanESM2 | 0.45 | 0.28 | 0.15 | 0.21 | 0.08 | 0.27 | 0.23 | 0.12 |
| CCSM4 | 0.34 | 0.12 | 0.07 | 0.19 | 0.15 | 0.08 | 0.22 | 0.14 |
| CESM1-FASTCHEM | 0.23 | 0.27 | 0.13 | 0.10 | −0.01 | 0.24 | 0.29 | 0.22 |
| CMCC-CM | 0.29 | 0.29 | 0.21 | 0.20 | 0.38 | 0.36 | 0.29 | 0.14 |
| CMCC-CMS | 0.42 | 0.45 | 0.30 | 0.29 | 0.10 | 0.16 | 0.22 | 0.16 |
| CNRM-CM5 | 0.18 | 0.28 | 0.23 | 0.13 | 0.45 | 0.19 | 0.27 | 0.19 |
| CSIRO-Mk3-6-0 | 0.32 | 0.23 | 0.26 | 0.20 | 0.23 | 0.24 | 0.25 | 0.37 |
| EC-EARTH | 0.39 | 0.33 | 0.49 | 0.17 | 0.18 | 0.15 | 0.27 | 0.17 |
| FGOALS-g2 | 0.38 | 0.32 | 0.14 | 0.20 | 0.28 | 0.11 | 0.23 | 0.09 |
| FGOALS-s2 | 0.32 | 0.25 | 0.10 | 0.19 | 0.25 | 0.10 | 0.15 | 0.11 |
| GFDL-CM3 | 0.50 | 0.37 | 0.23 | 0.23 | 0.42 | 0.34 | 0.27 | 0.34 |
| GFDL-ESM2G | 0.36 | 0.42 | 0.16 | 0.19 | 0.47 | 0.17 | 0.25 | 0.13 |
| GFDL-ESM2M | 0.47 | 0.37 | 0.17 | 0.31 | 0.35 | 0.29 | 0.29 | 0.16 |
| GISS-E2-R | 0.48 | 0.45 | 0.29 | 0.25 | 0.34 | 0.26 | 0.30 | 0.26 |
| HadCM3 | 0.17 | 0.06 | 0.03 | 0.06 | −0.05 | −0.20 | 0.00 | 0.03 |
| HadGEM2-CC | −0.02 | 0.09 | 0.02 | 0.04 | 0.16 | −0.04 | 0.01 | 0.04 |
| HadGEM2-ES | 0.11 | 0.06 | 0.07 | 0.04 | 0.24 | 0.14 | 0.07 | 0.02 |
| inmcm4 | 0.20 | 0.09 | 0.07 | 0.06 | 0.07 | −0.11 | −0.02 | −0.01 |
| IPSL-CM5A-LR | 0.14 | 0.34 | 0.19 | 0.15 | 0.32 | 0.28 | 0.22 | 0.10 |
| IPSL-CM5A-MR | 0.29 | 0.38 | 0.19 | 0.23 | 0.34 | 0.16 | 0.24 | 0.11 |
| MIROC4h | 0.28 | 0.31 | 0.23 | 0.21 | 0.47 | 0.31 | 0.32 | 0.25 |
| MIROC5 | 0.35 | 0.37 | 0.37 | 0.25 | 0.36 | 0.33 | 0.29 | 0.14 |
| MIROC-ESM | 0.09 | 0.00 | 0.10 | 0.09 | −0.16 | −0.04 | 0.01 | 0.05 |
| MIROC-ESM-CHEM | −0.25 | 0.01 | 0.05 | 0.04 | −0.11 | −0.17 | −0.07 | 0.00 |
| MPI-ESM-LR | 0.38 | 0.34 | 0.16 | 0.27 | 0.12 | −0.01 | 0.18 | 0.10 |
| MPI-ESM-MR | 0.42 | 0.48 | 0.21 | 0.23 | 0.26 | 0.07 | 0.19 | 0.08 |
| MPI-ESM-P | 0.51 | 0.35 | 0.16 | 0.31 | 0.25 | −0.03 | 0.16 | 0.10 |
| MRI-CGCM3 | 0.40 | 0.39 | 0.20 | 0.18 | 0.14 | 0.33 | 0.25 | 0.31 |
| NorESM1-M | 0.52 | 0.32 | 0.27 | 0.19 | 0.41 | 0.18 | 0.14 | 0.15 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Sun, X. Evaluation of IPCC Models’ Performance in Simulating Late-Twentieth-Century Weather Patterns and Extreme Precipitation in Southeastern China. Atmosphere 2023, 14, 1647. https://doi.org/10.3390/atmos14111647


Chicago/Turabian Style: Wang, Yongdi, and Xinyu Sun. 2023. "Evaluation of IPCC Models’ Performance in Simulating Late-Twentieth-Century Weather Patterns and Extreme Precipitation in Southeastern China" Atmosphere 14, no. 11: 1647. https://doi.org/10.3390/atmos14111647