ℓp-Norm-like Affine Projection Sign Algorithm for Sparse System to Ensure Robustness against Impulsive Noise

Abstract

1. Introduction

2. Original APSA
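
As a minimal sketch of the standard affine projection sign algorithm (APSA) recursion, in the form generally attributed to Shao, Zheng, and Benesty, the update computes the a priori error vector, projects the input matrix onto the signs of that error, and takes a normalized step. The function names `apsa_update` and `identify`, the argument layout, and the default values of `mu` and `delta` are illustrative assumptions and are not taken from this paper.

```python
import numpy as np

def apsa_update(w, X, d, mu=0.01, delta=1e-6):
    """One APSA iteration (sketch).

    w: (L,) current weight estimate.
    X: (L, M) matrix whose columns are the M most recent input vectors.
    d: (M,) the matching desired-signal samples.
    """
    e = d - X.T @ w                      # a priori error vector, shape (M,)
    x_s = X @ np.sign(e)                 # input matrix times the error signs, shape (L,)
    w_new = w + mu * x_s / np.sqrt(x_s @ x_s + delta)  # normalized sign step
    return w_new, e

def identify(x, d, L, M, mu=0.01, delta=1e-6):
    """Run the sketch over a whole record and return the final weights."""
    w = np.zeros(L)
    for k in range(L + M - 2, len(x)):
        # Column m holds [x[k-m], x[k-m-1], ..., x[k-m-L+1]].
        X = np.column_stack(
            [x[k - m - L + 1:k - m + 1][::-1] for m in range(M)]
        )
        d_vec = d[k - np.arange(M)]      # [d[k], d[k-1], ..., d[k-M+1]]
        w, _ = apsa_update(w, X, d_vec, mu, delta)
    return w
```

Because only the sign of each error sample enters the update, a single large outlier in `d` cannot enlarge the step, which is the usual explanation for the robustness of sign-type algorithms against impulsive noise. The step always has length close to `mu`, so the estimate settles into a neighborhood of the true system whose size scales with `mu` rather than converging exactly.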

3. Proposed ℓp-Norm-like APSA

4. Simulation Results

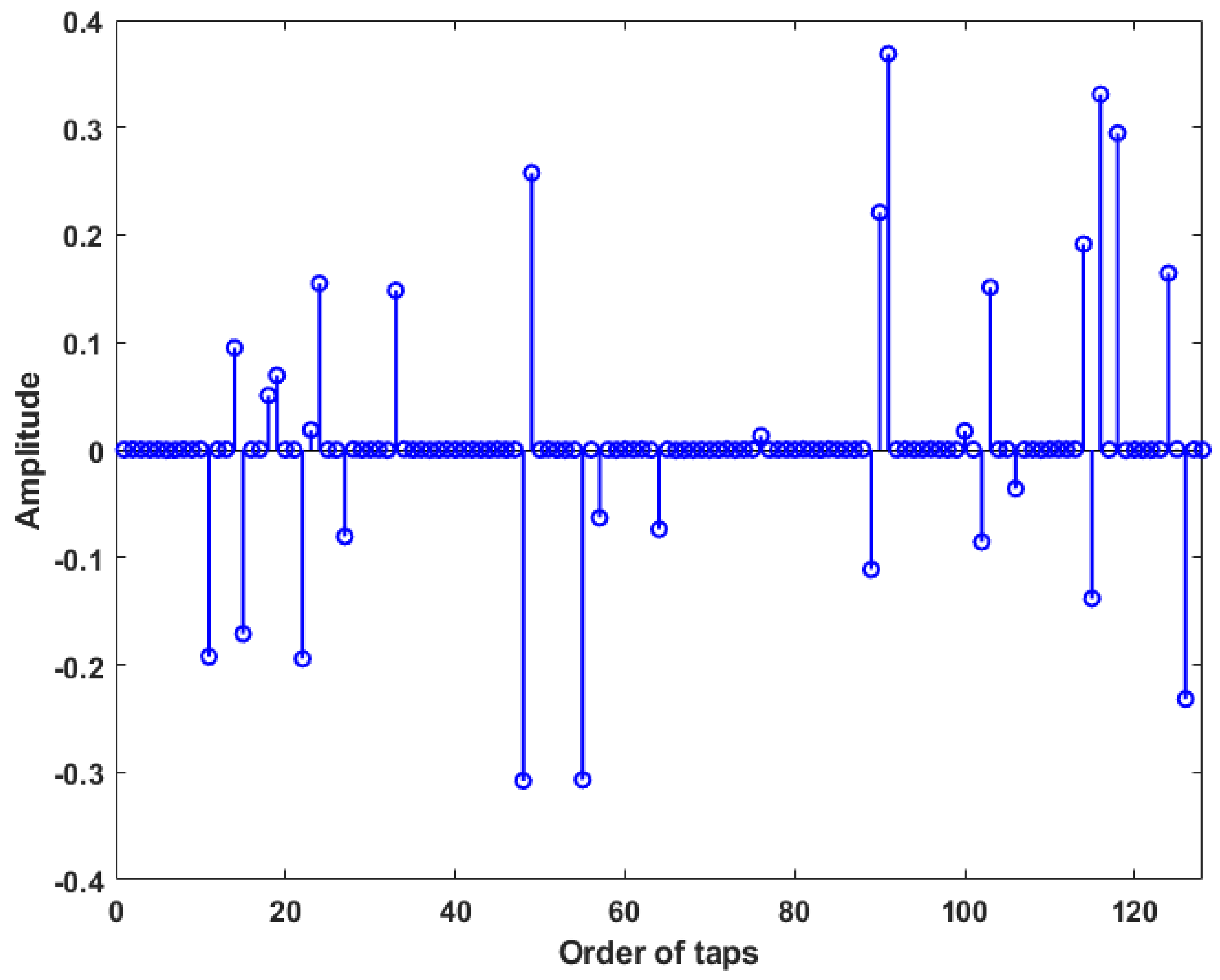

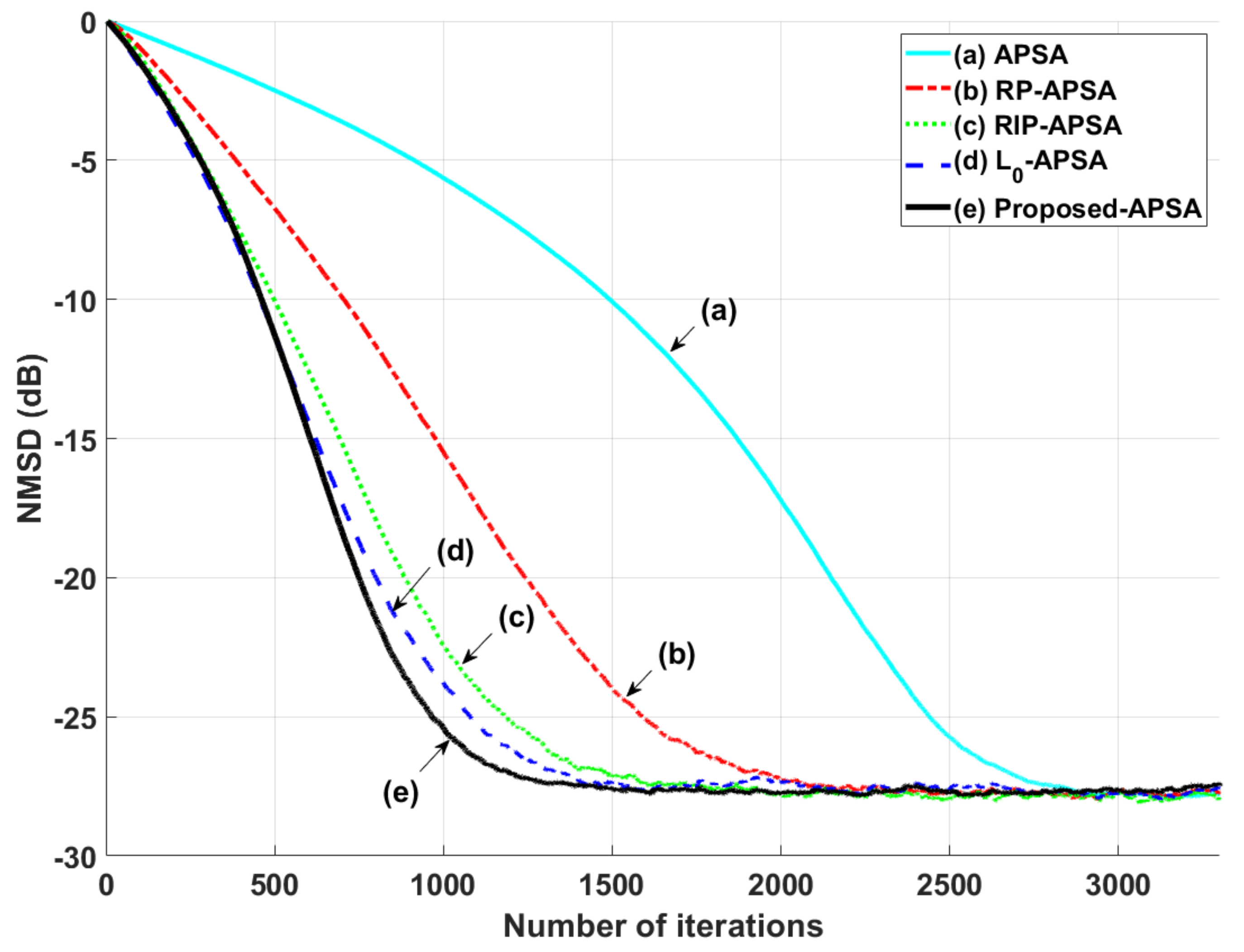

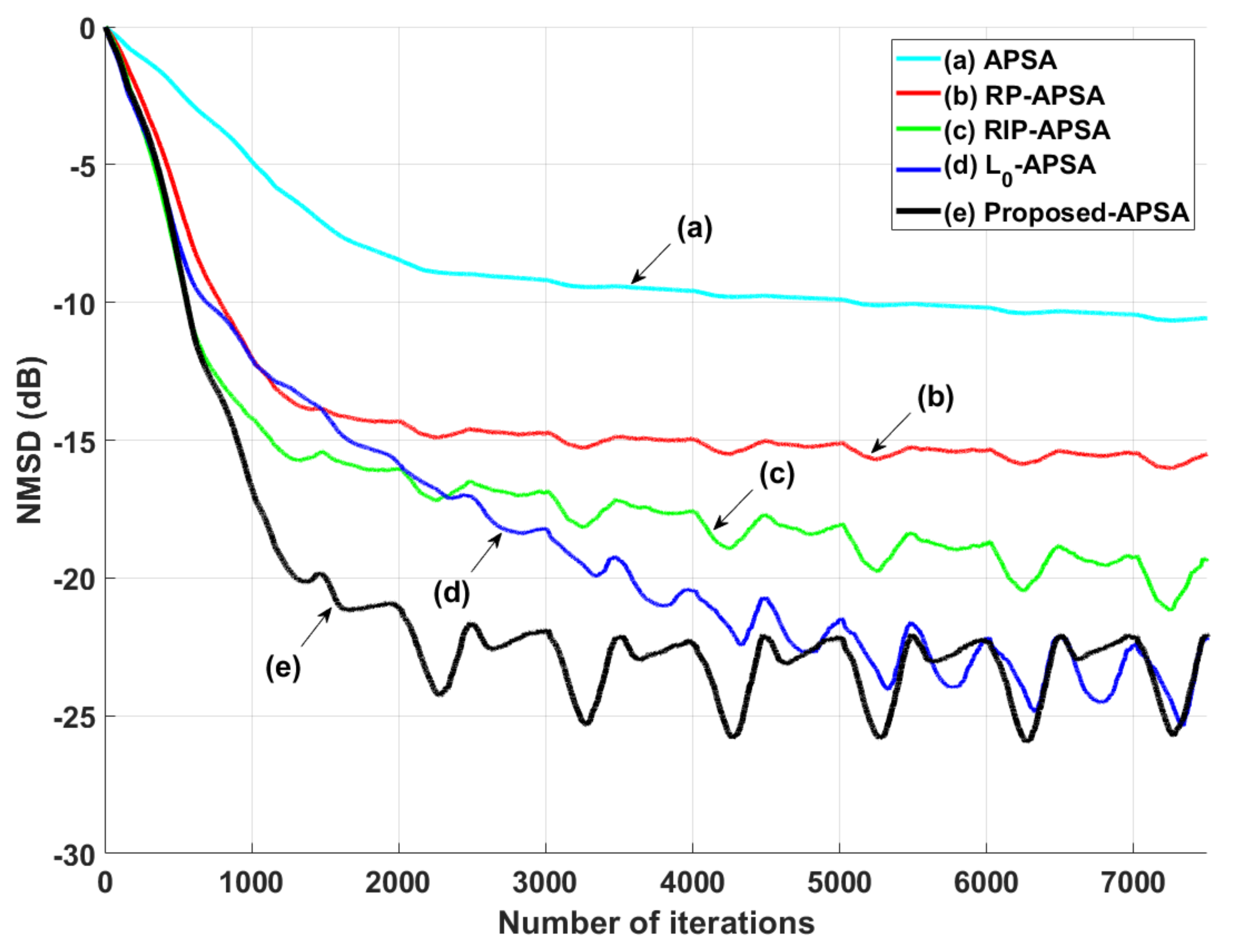

4.1. System Identification for Sparse System in Presence of Impulsive Noises

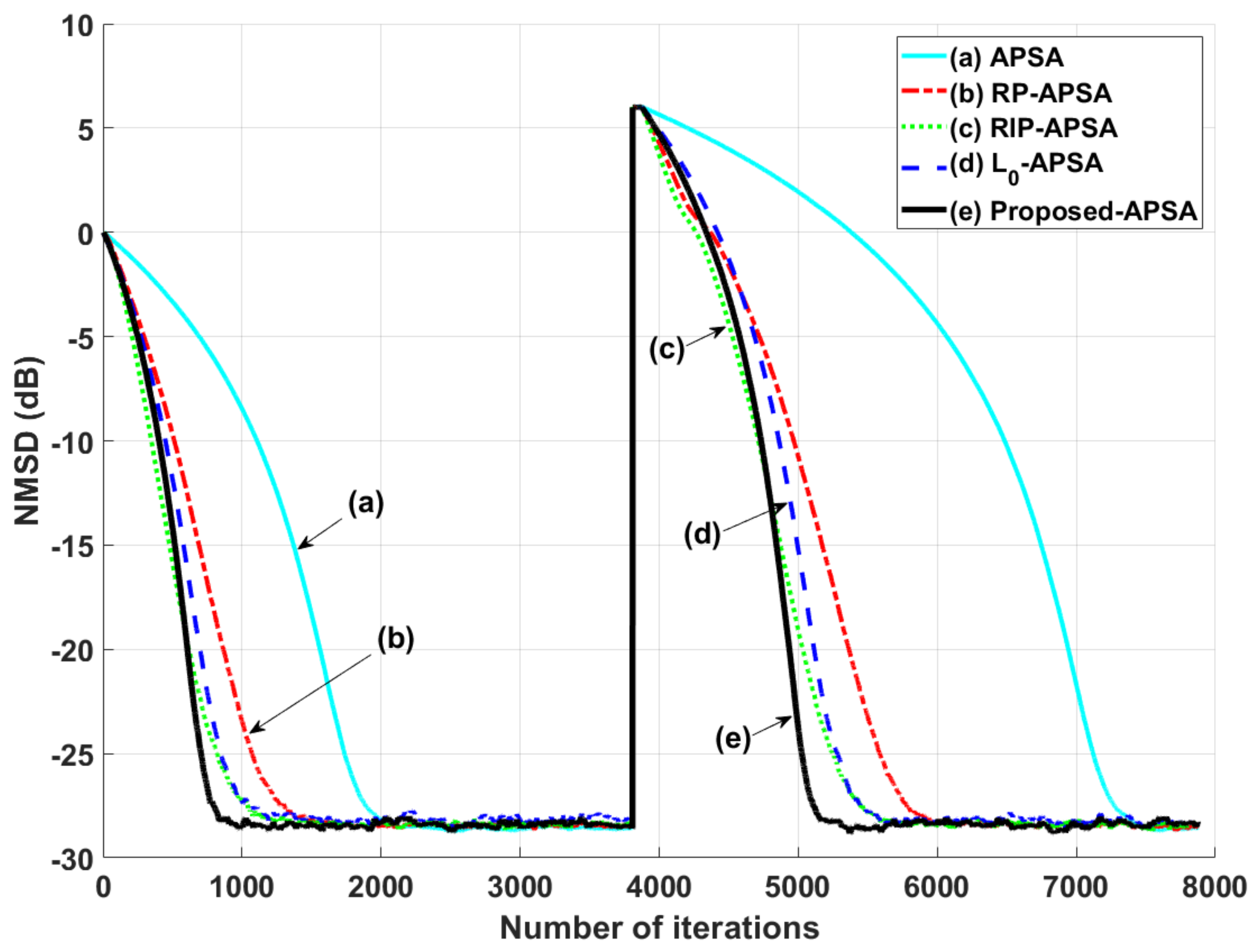

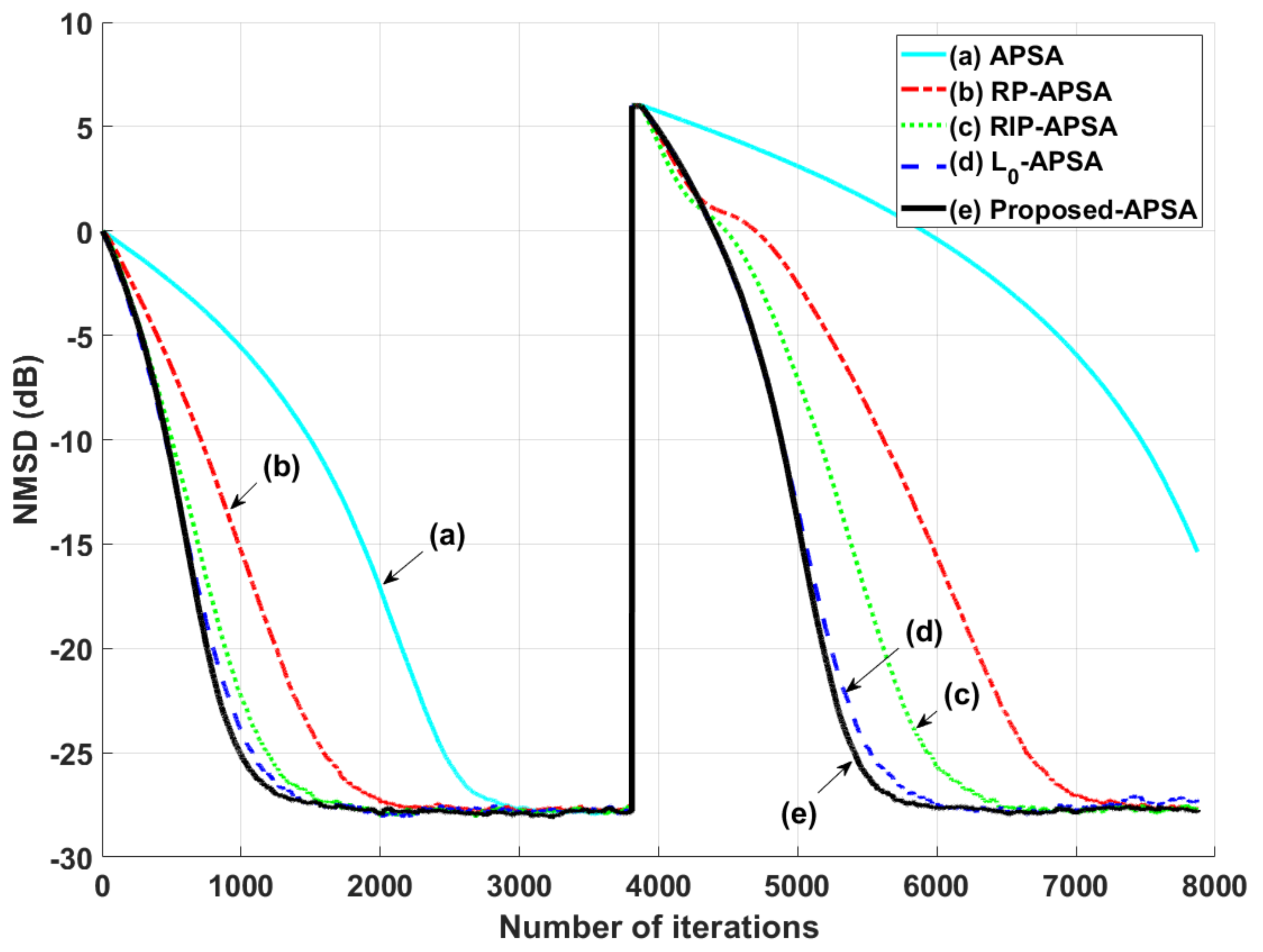

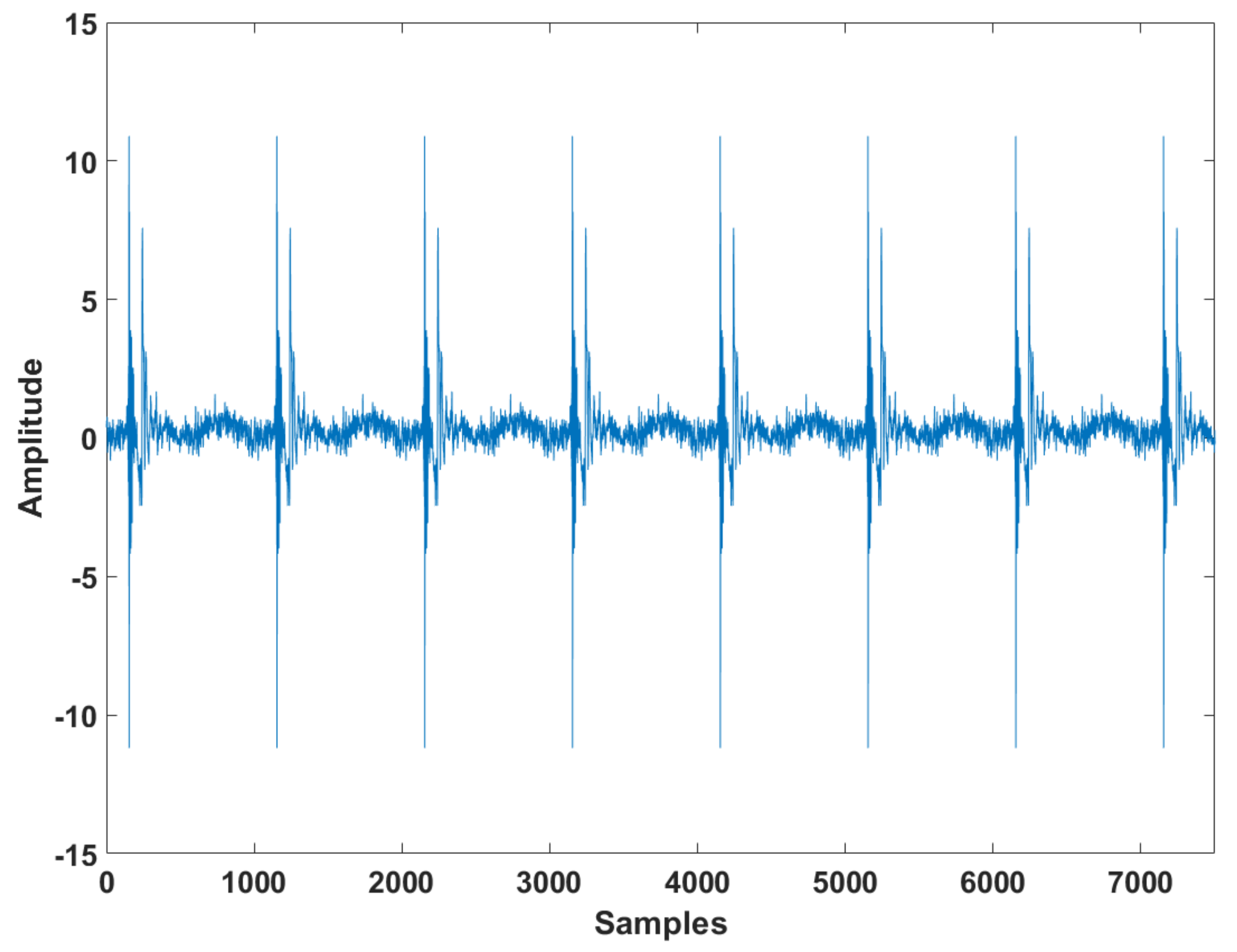

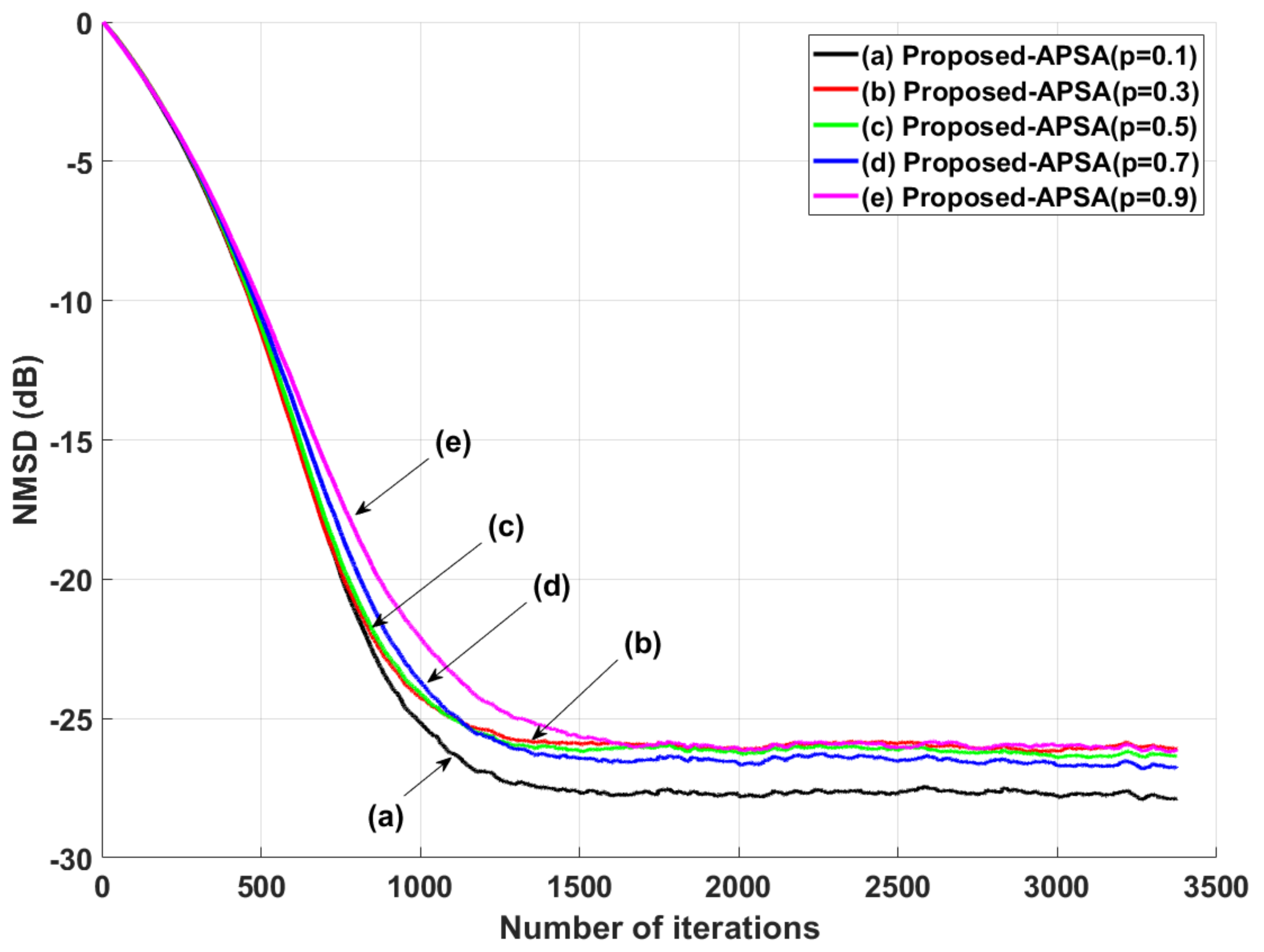

4.2. Speech Input Test Including a Double-Talk Situation

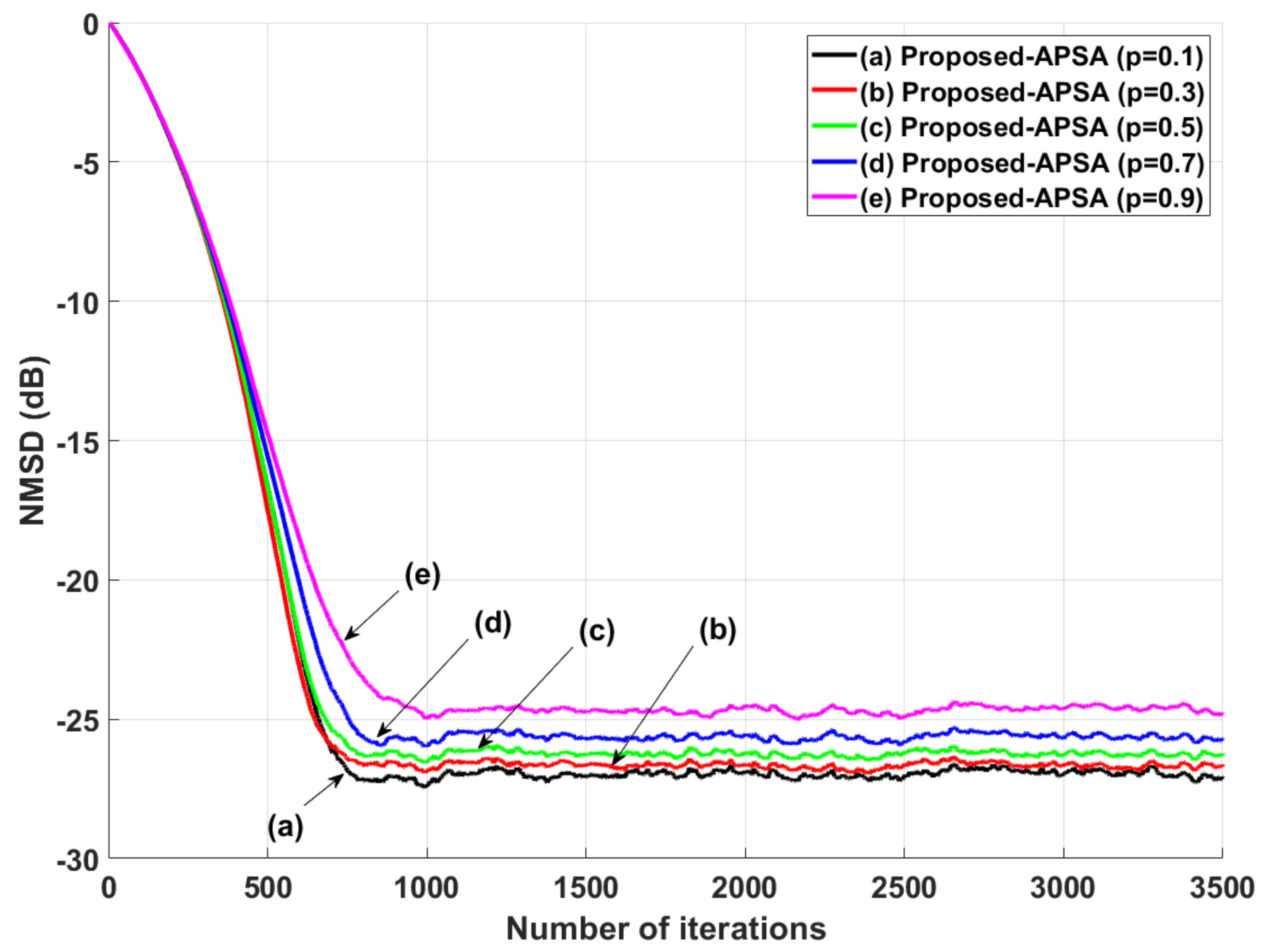

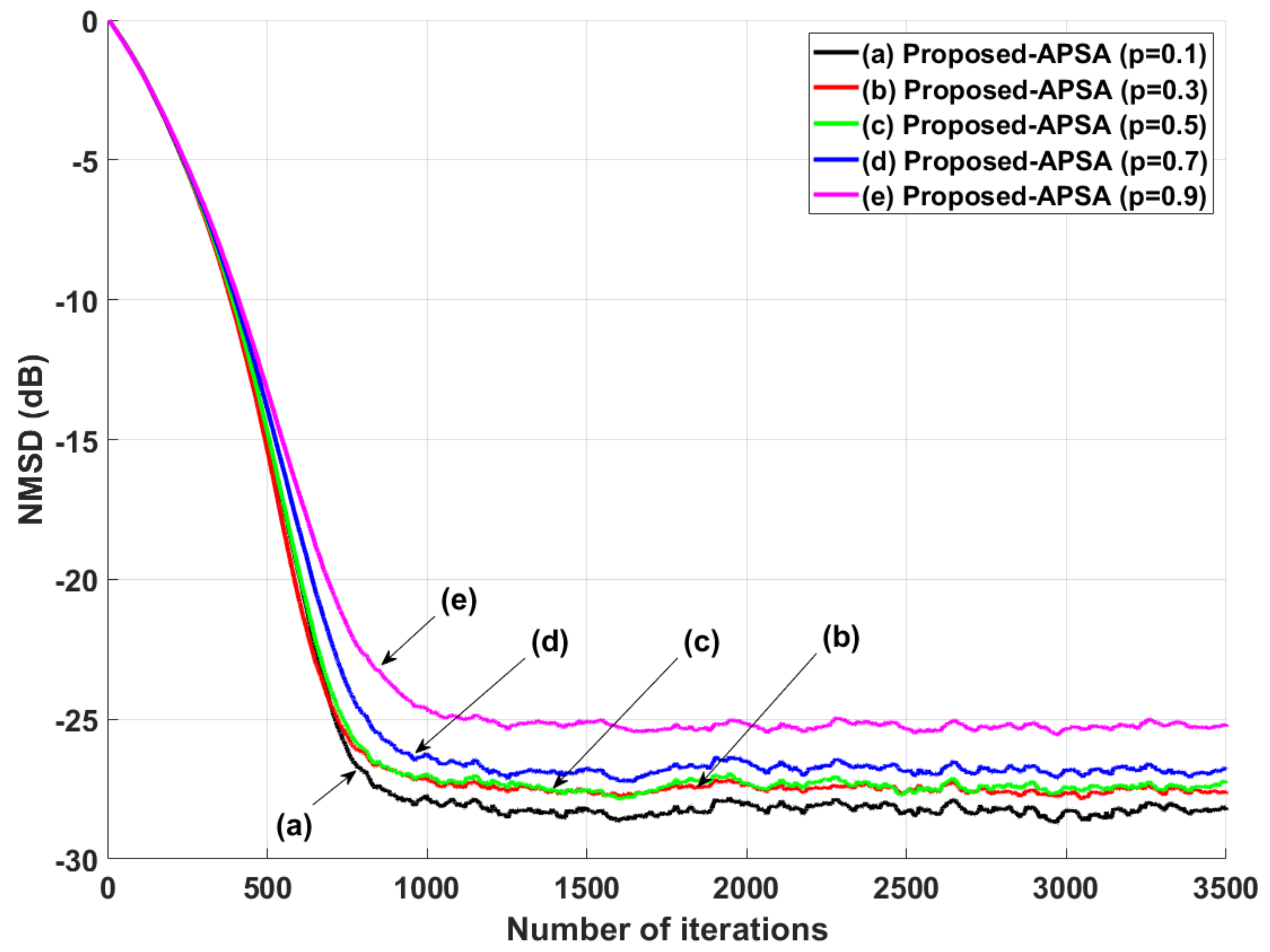

4.3. Practical Considerations for the p Parameter

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhang, R.; Zhao, H. A Novel Method for Online Extraction of Small-Angle Scattering Pulse Signals from Particles Based on Variable Forgetting Factor RLS Algorithm. Sensors 2021, 21, 5759.

- Qian, G.; Wang, S.; Lu, H.H.C. Maximum Total Complex Correntropy for Adaptive Filter. IEEE Trans. Signal Process. 2020, 68, 978–989.

- Shen, L.; Zakharov, Y.; Henson, B.; Morozs, N.; Mitchell, P.D. Adaptive filtering for full-duplex UWA systems with time-varying self-interference channel. IEEE Access 2020, 8, 187590–187604.

- Dogariu, L.-M.; Stanciu, C.L.; Elisei-Iliescu, C.; Paleologu, C.; Benesty, J.; Ciochina, S. Tensor-Based Adaptive Filtering Algorithms. Symmetry 2021, 13, 481.

- Kumar, K.; Pandey, R.; Karthik, M.L.N.S.; Bhattacharjee, S.S.; George, N.V. Robust and sparsity-aware adaptive filters: A Review. Signal Process. 2021, 189, 108276.

- Kivinen, J.; Warmuth, M.K.; Hassibi, B. The p-norm generalization of the LMS algorithm for adaptive filtering. IEEE Trans. Signal Process. 2006, 54, 1782–1793.

- Ozeki, K.; Umeda, T. An adaptive filtering algorithm using an orthogonal projection to an affine subspace and its properties. Electron. Commun. Jpn. 1984, 67, 19–27.

- Jiang, Z.; Li, Y.; Huang, X.; Jin, Z. A Sparsity-Aware Variable Kernel Width Proportionate Affine Projection Algorithm for Identifying Sparse Systems. Symmetry 2019, 11, 1218.

- Li, G.; Wang, G.; Dai, Y.; Sun, Q.; Yang, X.; Zhang, H. Affine projection mixed-norm algorithms for robust filtering. Signal Process. 2021, 187, 108153.

- Li, G.; Zhang, H.; Zhao, J. Modified Combined-Step-Size Affine Projection Sign Algorithms for Robust Adaptive Filtering in Impulsive Interference Environments. Symmetry 2020, 12, 385.

- Shao, T.; Zheng, Y.R.; Benesty, J. An affine projection sign algorithm robust against impulsive interference. IEEE Signal Process. Lett. 2010, 17, 327–330.

- Yang, Z.; Zheng, Y.R.; Grant, S.L. Proportionate affine projection sign algorithm for network echo cancellation. IEEE Trans. Audio Speech Lang. Process. 2011, 19, 2273–2284.

- Yoo, J.; Shin, J.; Choi, H.; Park, P. Improved affine projection sign algorithm for sparse system identification. Electron. Lett. 2012, 48, 927–929.

- Jin, J.; Mei, S. l0 Norm constraint LMS Algorithm for sparse system identification. IEEE Signal Process. Lett. 2009, 16, 774–777.

- Li, Y.; Cherednichenko, A.; Jiang, Z.; Shi, W.; Wu, J. A Novel Generalized Group-Sparse Mixture Adaptive Filtering Algorithm. Symmetry 2019, 11, 697.

- Li, Y.; Wang, Y.; Albu, F.; Jiang, J. A General Zero Attraction Proportionate Normalized Maximum Correntropy Criterion Algorithm for Sparse System Identification. Symmetry 2017, 9, 229.

- Li, Y.; Wang, Y.; Sun, L. A Proportionate Normalized Maximum Correntropy Criterion Algorithm with Correntropy Induced Metric Constraint for Identifying Sparse Systems. Symmetry 2018, 10, 683.

- Wu, F.; Tong, F. Gradient optimization p-norm-like constraint LMS algorithm for sparse system estimation. Signal Process. 2013, 93, 967–971.

- Wu, F.; Tong, F. Non-Uniform Norm Constraint LMS Algorithm for Sparse System Identification. IEEE Commun. Lett. 2013, 17, 385–388.

- Zayyani, H. Continuous mixed p-norm adaptive algorithm for system identification. IEEE Signal Process. Lett. 2014, 21, 1108–1110.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shin, J.; Kim, J.; Kim, T.-K.; Yoo, J. ℓp-Norm-like Affine Projection Sign Algorithm for Sparse System to Ensure Robustness against Impulsive Noise. Symmetry 2021, 13, 1916. https://doi.org/10.3390/sym13101916
