Abstract

In this paper, we introduce a sparse and symmetric matrix completion quasi-Newton model based on automatic differentiation for solving unconstrained optimization problems in which the sparsity structure of the Hessian is available. The proposed method belongs to the class of matrix completion quasi-Newton (MCQN) methods: it preserves the sparsity of the Hessian exactly and satisfies the quasi-Newton equation approximately. Under the usual assumptions, local and superlinear convergence are established. Numerical tests indicate that the new method is effective and, in terms of iteration counts, superior to the MCQN update with the Broyden–Fletcher–Goldfarb–Shanno (BFGS) method and to the limited-memory BFGS method.

Keywords:

symmetric quasi-Newton method; unconstrained optimization problems; matrix completion; automatic differentiation; superlinear convergence; Broyden–Fletcher–Goldfarb–Shanno method

MSC:

65K05; 90C06; 90C53

1. Introduction

We consider the unconstrained optimization problem

$$\min_{x \in \mathbb{R}^n} f(x), \tag{1}$$

where $f:\mathbb{R}^n \to \mathbb{R}$ is a twice continuously differentiable function, and $\nabla f(x)$ and $\nabla^2 f(x)$ denote the gradient and Hessian of $f$ at $x$, respectively. The first order necessary condition of (1) is

$$\nabla f(x) = 0,$$

which can be written as the symmetric nonlinear equations

$$g(x) = 0, \tag{2}$$

where $g:\mathbb{R}^n \to \mathbb{R}^n$ is a continuously differentiable mapping and the symmetry means that the Jacobian satisfies $J(x) = J(x)^T$. The symmetric nonlinear system (2) is closely related to many practical problems, such as the gradient mapping of unconstrained optimization problems, the Karush–Kuhn–Tucker (KKT) system of equality constrained optimization problems, the discretized two-point boundary value problem, and the saddle point problem [1,2,3,4,5].

For small or medium-scale problems, classical quasi-Newton methods enjoy superlinear convergence without computing the Hessian [6,7]. Let $x_k$ be the current iterate and $B_k$ be the symmetric approximation of the Hessian; then the iteration generated by quasi-Newton methods is

$$x_{k+1} = x_k + \alpha_k d_k,$$

where $\alpha_k$ is a step length obtained by some line search or other strategy. The search direction $d_k$ is obtained by solving the equations

$$B_k d_k = -\nabla f(x_k),$$

where the quasi-Newton matrix $B_k$ is an approximation of $\nabla^2 f(x_k)$ and satisfies the secant condition:

$$B_{k+1} s_k = y_k,$$

where $s_k = x_{k+1} - x_k$, $y_k = \nabla f(x_{k+1}) - \nabla f(x_k)$. The matrix $B_k$ can be updated by different update formulae. The Davidon–Fletcher–Powell (DFP) update,

$$B_{k+1} = \left(I - \frac{y_k s_k^T}{y_k^T s_k}\right) B_k \left(I - \frac{s_k y_k^T}{y_k^T s_k}\right) + \frac{y_k y_k^T}{y_k^T s_k},$$

was first proposed by Davidon [8] and developed by Fletcher and Powell [9]. The Broyden–Fletcher–Goldfarb–Shanno (BFGS) update,

$$B_{k+1} = B_k - \frac{B_k s_k s_k^T B_k}{s_k^T B_k s_k} + \frac{y_k y_k^T}{y_k^T s_k},$$

was proposed independently by Broyden [10], Fletcher [11], Goldfarb [12], and Shanno [13]. One can find more on the topic in references [14,15,16,17].
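As a minimal illustration of the secant condition and the BFGS update, the following sketch applies one update in plain Python and checks that the updated matrix maps the step to the gradient difference. The helper names (`mat_vec`, `dot`, `bfgs_update`) and the sample data are assumptions made for this example; the update used is the standard textbook form $B_{k+1} = B_k - B_k s_k s_k^T B_k/(s_k^T B_k s_k) + y_k y_k^T/(y_k^T s_k)$.

```python
def mat_vec(A, v):
    """Multiply a dense matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def dot(u, v):
    """Inner product of two vectors."""
    return sum(a * b for a, b in zip(u, v))


def bfgs_update(B, s, y):
    """One standard BFGS update of the symmetric Hessian approximation B.

    The curvature condition y^T s > 0 is required so that positive
    definiteness is preserved.
    """
    ys = dot(y, s)
    if ys <= 0.0:
        raise ValueError("curvature condition y^T s > 0 is violated")
    Bs = mat_vec(B, s)          # B is symmetric, so s^T B = (B s)^T
    sBs = dot(s, Bs)
    n = len(s)
    return [
        [B[i][j] - Bs[i] * Bs[j] / sBs + y[i] * y[j] / ys for j in range(n)]
        for i in range(n)
    ]


# Illustrative data (hypothetical): identity start, one step, one gradient change.
B0 = [[1.0, 0.0], [0.0, 1.0]]
s = [1.0, 0.5]
y = [2.0, 1.5]
B1 = bfgs_update(B0, s, y)

# Secant condition: B1 @ s should reproduce y up to rounding.
residual = [b - t for b, t in zip(mat_vec(B1, s), y)]
```

The residual vanishes by construction, because the first correction term removes $B_k s_k$ and the second adds $y_k$ when the update is applied to $s_k$.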

If we assume that , then using the Sherman–Morrison formula, we have the Broyden family update:

where is

When , we have a BFGS update. When , we have a DFP update.

However, a quasi-Newton method is not well suited to large-scale problems, because the full matrix $B_k$ must be stored. To overcome this drawback, the so-called sparse quasi-Newton methods [14] have received much attention. As early as 1970, Schubert [18] proposed a sparse Broyden rank-one method. Powell and Toint [19] and Toint [20] then studied the sparse quasi-Newton method.

Existing sparse quasi-Newton methods usually use a sparse symmetric matrix as an approximation of the Hessian so that both matrices take the same form or have similar structures. If the limited memory technique [21,22] is adopted, which stores only several vector pairs $(s_i, y_i)$ to construct a quasi-Newton matrix by updating the initial matrix $m$ times, the method can be widely used in practical optimization problems. On the other hand, many large-scale problems in scientific fields take the partially separable form

where function , is related to a few variables. For the partially separable unconstrained optimization problems, the partitioned BFGS method [23,24] was proposed and has better performance in practice. The partitioned BFGS method updates each matrix of each element function separately via BFGS updating and sums these matrices to construct the next quasi-Newton matrix . Since the size of x in is smaller than that of n, the matrix will be a small matrix, and then the matrix will be sparse. The quasi-Newton direction is the solution of the linear equations:

However, the partitioned BFGS method is guaranteed to preserve the positive definiteness of the quasi-Newton matrix only when each element function is convex, so it is implemented with the trust region strategy [25]. Recently, for partially separable nonlinear equations, Cao and Li [26] introduced two kinds of partitioned quasi-Newton methods and established their global and superlinear convergence.
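The assembly step of the partitioned approach can be sketched as follows: each element function contributes a small dense matrix over its own variables, and summing these contributions yields a sparse global matrix. The index sets, the element matrices, and the helper name `assemble` are hypothetical and chosen only for illustration; the sketch does not perform the per-element BFGS updates themselves.

```python
def assemble(n, elements):
    """Sum small element matrices into an n-by-n matrix.

    `elements` is a list of (indices, block) pairs, where `indices` lists
    the variables an element function depends on and `block` is its small
    symmetric matrix over exactly those variables.
    """
    B = [[0.0] * n for _ in range(n)]
    for indices, block in elements:
        for a, i in enumerate(indices):
            for b, j in enumerate(indices):
                B[i][j] += block[a][b]
    return B


# Hypothetical chain structure: element i couples variables i and i + 1.
n = 5
block = [[2.0, -1.0], [-1.0, 2.0]]
elements = [([i, i + 1], block) for i in range(n - 1)]
B = assemble(n, elements)

# Entries outside the tridiagonal band are never touched and stay zero.
nonzeros = sum(1 for i in range(n) for j in range(n) if B[i][j] != 0.0)
```

With this chain structure only the tridiagonal band is filled, so the storage grows linearly in the number of variables rather than quadratically.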

Another efficient sparse quasi-Newton method is designed to exploit the sparsity structures of the Hessian. We assume that for all ,

where . References [27,28] have proposed sparse quasi-Newton methods, where satisfies the secant equation

and sparse condition

simultaneously, where is an approximate inverse Hessian. Recently, Yamashita [29] proposed another type of matrix completion quasi-Newton (MCQN) update for solving problem (1) with a sparse Hessian and proved the local and superlinear convergence for MCQN updates with the DFP method. Reference [30] established the convergence of MCQN updates with all of Broyden’s convex family. However, global convergence analysis [31] was presented for two-dimensional functions with uniformly positive definite Hessians.

Another kind of quasi-Newton method for solving large scale unconstrained optimization problems is the diagonal quasi-Newton method, where the Hessian of an objective function is approximated by a diagonal matrix with positive elements. The first version was developed by Nazareth [32], where the quasi-Newton matrix satisfies the least change and weak secant condition [33]:

where $\|\cdot\|_F$ is the standard Frobenius norm. Recently, Andrei [34] developed a diagonal quasi-Newton method, where the diagonal elements satisfy the least change weak secant condition (3) and minimize the trace of the update. In addition, many other techniques, such as forward and central finite differences, the variational principle with a weighted norm, and the generalized Frobenius norm, can be used to derive different kinds of diagonal quasi-Newton methods [35,36,37]. Under the usual assumptions, the diagonal quasi-Newton method is linearly convergent. The authors of [38] applied a derivation technique similar to that of the DFP method and obtained a low-memory diagonal quasi-Newton method. Using the Armijo line search, they established global convergence and gave sufficient conditions for the method to be superlinearly convergent.
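To make the least change weak secant idea concrete, the sketch below updates a diagonal matrix by the smallest Frobenius-norm change that enforces $s^T B_{+} s = s^T y$. The closed form $b_{+} = b + \lambda\, s \circ s$ with $\lambda = (s^T y - s^T B s)/\sum_i s_i^4$ follows from the first-order optimality conditions of that problem. The function name and data are assumptions for illustration; this is not the exact scheme of [32] or [34], and it does not by itself enforce positive diagonal entries.

```python
def diagonal_weak_secant_update(b, s, y):
    """Least-change diagonal update under the weak secant condition.

    b, s, y are lists: the current diagonal, the step, and the gradient
    difference. Minimizing sum_i (b_new[i] - b[i])**2 subject to
    sum_i b_new[i] * s[i]**2 == s^T y gives b_new = b + lam * s**2.
    """
    s2 = [si * si for si in s]
    denom = sum(v * v for v in s2)           # sum_i s_i^4
    if denom == 0.0:
        return list(b)                       # zero step: nothing to update
    sty = sum(si * yi for si, yi in zip(s, y))
    sBs = sum(bi * v for bi, v in zip(b, s2))
    lam = (sty - sBs) / denom                # Lagrange multiplier
    return [bi + lam * v for bi, v in zip(b, s2)]


# Hypothetical data for illustration.
b = [1.0, 1.0, 1.0]
s = [1.0, 2.0, 0.0]
y = [3.0, 2.0, 1.0]
b_new = diagonal_weak_secant_update(b, s, y)
lhs = sum(bi * si * si for bi, si in zip(b_new, s))   # s^T B_new s
rhs = sum(si * yi for si, yi in zip(s, y))            # s^T y
```

Components with a zero step entry are left unchanged, which reflects the least change principle: the update only moves the diagonal where the step carries information.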

The main contribution of our paper is to propose a sparse quasi-Newton algorithm based on automatic differentiation for solving (1). Firstly, similarly to the derivation of BFGS update, we can perform a symmetric rank-two quasi-Newton update:

where and satisfying the adjoint tangent condition [39]

For an matrix, we denote , as A is positive definite. Then, when , if and only if , which means that the proposed update (4) keeps the positive definiteness, as in BFGS updating. Moreover, when is positive definite, the matrices updated by the proposed update (4) are positive definite for solving (1) with uniformly positive definite Hessians. In our work, we pay attention to ; then the proposed rank-two quasi-Newton update (4) method satisfies

which means that equals in the direction exactly. Several lemmas have been given to present the properties of the proposed rank-two quasi-Newton update formula. Secondly, combined with the idea of MCQN method [29], we propose a sparse and symmetric quasi-Newton algorithm for solving (1). Under appropriate conditions, local and superlinear convergence are established. Finally, our numerical results illustrate that the proposed algorithm has satisfying performance.

The paper is organized as follows. In Section 2, we introduce a symmetric rank-two quasi-Newton update based on automatic differentiation and prove several of its properties. In Section 3, by using the idea of matrix completion, we present a sparse quasi-Newton algorithm and show some of its properties. In Section 4, we prove the local and superlinear convergence of the algorithm proposed in Section 3. Numerical results are reported in Section 5 and indicate that the proposed algorithm performs well. Finally, we give the conclusion.

2. A New Symmetric Rank-Two Quasi–Newton Update

Similarly to the derivation of BFGS update, we will derive a new symmetric rank-two quasi-Newton update and show several lemmas. Let

where is a rank-two matrix and satisfies the condition

where and . Similarly to the derivation of BFGS, we have the following symmetric rank-two update:

If we denote and , then (6) can be expressed as

It can be seen that the update (6) involves the Hessian , but we do not need to compute them in practice. For given vectors x, s, and , we can get and exactly by the forward and reverse mode of automatic differentiation.
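To illustrate how a Hessian-vector product can be obtained without forming the Hessian, the sketch below pushes a forward-mode dual number through a hand-written gradient, which yields $\nabla^2 f(x)s$ as the directional derivative of $\nabla f$ along $s$. This is a toy stand-in for an automatic differentiation tool, not the tool used in the experiments; the `Dual` class, the test function $f(x) = x_0^2 x_1 + x_1^3$, and all names are assumptions made for this example.

```python
class Dual:
    """Number a + b*eps with eps**2 == 0; `dot` carries the derivative."""

    def __init__(self, val, dot=0.0):
        self.val = val
        self.dot = dot

    @staticmethod
    def lift(x):
        """Wrap a plain number as a constant dual number."""
        return x if isinstance(x, Dual) else Dual(float(x))

    def __add__(self, other):
        other = Dual.lift(other)
        return Dual(self.val + other.val, self.dot + other.dot)

    __radd__ = __add__

    def __mul__(self, other):
        other = Dual.lift(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.val * other.val,
                    self.dot * other.val + self.val * other.dot)

    __rmul__ = __mul__


def grad_f(x):
    """Gradient of f(x) = x0**2 * x1 + x1**3, written by hand here;
    a reverse-mode AD tool would normally supply this."""
    x0, x1 = x
    return [2 * x0 * x1, x0 * x0 + 3 * x1 * x1]


def hessian_vector_product(grad, x, s):
    """Directional derivative of grad at x along s, i.e. Hessian(x) @ s."""
    seeded = [Dual(xi, si) for xi, si in zip(x, s)]
    return [g.dot for g in grad(seeded)]


# At x = (1, 2) the Hessian is [[4, 2], [2, 12]], so H @ (1, 1) = (6, 14).
hv = hessian_vector_product(grad_f, [1.0, 2.0], [1.0, 1.0])
```

The cost is a small constant multiple of one gradient evaluation, which is why such products are attractive when the Hessian itself is too expensive to form.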

Next, several lemmas are presented.

Lemma 1.

We suppose that and is updated by (6); then if and only if .

Proof.

According to the condition (5), one has

If is positive definite, one has .

Let and . Then for , , it can be derived from (6) that

Since , there is a symmetric matrix such that . Then, by the Cauchy–Schwarz inequality, we have

where the equality holds if and only if , .
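The displayed update formulas are not reproduced in this text, so the sketch below assumes a BFGS-like rank-two form in which the gradient difference is replaced by the Hessian-vector product $w = \nabla^2 f(x_{k+1})\sigma_k$, that is, $B_{k+1} = B_k - B_k\sigma_k\sigma_k^T B_k/(\sigma_k^T B_k\sigma_k) + w w^T/(\sigma_k^T w)$. Under that assumption it checks the tangent condition $B_{k+1}\sigma_k = w$ and illustrates the statement of Lemma 1 on a 2×2 case. All names and data are hypothetical.

```python
def mat_vec(A, v):
    """Multiply a dense matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def dot(u, v):
    """Inner product of two vectors."""
    return sum(a * b for a, b in zip(u, v))


def rank_two_update(B, sigma, w):
    """Assumed BFGS-like update with w standing for Hessian(x_next) @ sigma."""
    Bs = mat_vec(B, sigma)
    sBs = dot(sigma, Bs)
    sw = dot(sigma, w)
    n = len(sigma)
    return [
        [B[i][j] - Bs[i] * Bs[j] / sBs + w[i] * w[j] / sw for j in range(n)]
        for i in range(n)
    ]


def is_positive_definite_2x2(A):
    """Sylvester's criterion for a symmetric 2x2 matrix."""
    return A[0][0] > 0 and A[0][0] * A[1][1] - A[0][1] * A[1][0] > 0


# Hypothetical data: a positive definite B and a Hessian at the new point.
B = [[2.0, 0.0], [0.0, 1.0]]
hessian_next = [[4.0, 1.0], [1.0, 3.0]]
sigma = [1.0, -1.0]
w = mat_vec(hessian_next, sigma)        # in practice supplied by AD
B_next = rank_two_update(B, sigma, w)

tangent_residual = [a - b for a, b in zip(mat_vec(B_next, sigma), w)]
curvature = dot(sigma, w)               # sigma^T Hessian(x_next) sigma
```

Here the curvature term is positive and the updated matrix passes the positive definiteness check, which is the direction of Lemma 1 that matters in practice.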

Lemma 2.

If we rewrite update Formula (7) as , where is symmetric and satisfies , then E is the solution of the following minimization problem:

where and W satisfies .

Proof.

A suitable Lagrangian function of the convex programming problem is

where and are Lagrange multipliers. Moreover,

or according to the symmetry and cyclic permutations, one has

Taking the transpose and accumulating eliminates to yield

and by and the nonsingularity of W we have that

Substituting (9) into and rewriting gives

Postmultiplying by gives

so we have

Substituting this into (9) gives the result (7). □

Lemma 3.

If and , then given by (6) solves the variational problem

Proof.

According to the definition of , where [40] is given by

so we have

We have the Lagrangian function

where and are the Lagrange multipliers. Moreover, one has

Transposing and adding in (12) gives

Combined with the tangent condition, we have that

and hence

and so

Combined with (6), one has the Formula (7).

In this paper, we set , so one has

which means that is an exact approximation to in direction . Then we have the symmetric rank-two update formula

It can be seen that can preserve the symmetry when is symmetric. If we denote , then we can obtain a similar Broyden convex family update formula:

where the parameter is defined as

The choice corresponds to the BFGS update

3. Algorithm and Related Properties

For the update formula (15), we adopt the idea of matrix completion. The next quasi-Newton matrix is the solution of the following minimization problem:

When is chordal, the minimization problem (18) can be solved by solving the problem

Then can be expressed as the sparse clique-factorization formula [29]. Then Algorithm 1 is stated as follows.

Algorithm 1 (Sparse Quasi-Newton Algorithm Based on Automatic Differentiation)

When the in step 3 is updated by Broyden’s class method, the method corresponds to the method in [29]. In the present paper, we focus on the MCQN update with , where is given by (15).
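A small worked instance may help fix the idea of positive definite matrix completion on a chordal pattern. For a 3×3 symmetric matrix whose entries are specified on the tridiagonal pattern, the maximum-determinant completion fills the missing corner entry so that the inverse has a zero in that position. The sketch below computes that entry and checks the zero through the corresponding cofactor. The function names and the numbers are assumptions for illustration, and the sketch does not implement the sparse clique-factorization formula of [29].

```python
def complete_tridiagonal_3x3(H):
    """Fill the unspecified (0, 2) and (2, 0) entries of a symmetric 3x3
    matrix given on the tridiagonal pattern.

    The maximum-determinant completion makes the (0, 2) entry of the
    inverse vanish, which gives h02 = h01 * h12 / h11.
    """
    h02 = H[0][1] * H[1][2] / H[1][1]
    C = [row[:] for row in H]
    C[0][2] = C[2][0] = h02
    return C


def det3(A):
    """Determinant of a 3x3 matrix by cofactor expansion along row 0."""
    return (A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
            - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
            + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]))


# Hypothetical specified entries; None marks the entries to be completed.
H = [[2.0, 1.0, None],
     [1.0, 2.0, 1.0],
     [None, 1.0, 2.0]]
C = complete_tridiagonal_3x3(H)

# The (0, 2) entry of inverse(C) is this cofactor divided by det(C).
cofactor_02 = C[0][1] * C[1][2] - C[1][1] * C[0][2]

# A different choice of the corner entry gives a smaller determinant.
perturbed = [row[:] for row in C]
perturbed[0][2] = perturbed[2][0] = C[0][2] + 0.3
```

The completed matrix is dense, but its inverse keeps the tridiagonal pattern, which is the property that lets a completion-based method work with sparse factors only.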

In what follows, we give some notation for the convenience of analysis. For a nonsingular matrix P satisfying

we let

where is given by (15). Then we can get from (15) that

where

Similarly to that in [30], we can assume that

According to [41] and (21), we have

and

Next, we establish a relation between and , which is very important in the establishment of the local and superlinear convergence of Algorithm 1.

Proposition 1.

For Algorithm 1, we have the following relation:

4. The Local and Superlinear Convergence

Based on the discussion in Section 3, we prove the local and superlinear convergence of Algorithm 1. First, we list the assumptions.

Assumption A1.

- (1) The function $f$ is twice continuously differentiable on Ω.

- (2) There exist two constants, and , satisfying

According to Assumption A1, we have constants and such that

We define

and get from (32) that

If we take , then one has from (34) that

Furthermore, it is easy to deduce that

where , , and . We define

and rewrite (29) as

As and , we can obtain from the above inequality and (36) that

Considering

one has

where and . Moreover, it follows from (40) that

where . Since and , it is obvious that , and

The theorem given below shows that Algorithm 1 converges locally and linearly, where the relation (42) plays an essential role.

Theorem 1.

Let Assumption A1 hold and let the sequence be generated by Algorithm 1 with , where is updated by (15). Then for any , there is a constant τ , , such that

Proof.

According to Lemma 4 in [29], there are constants and such that when , one has

and

where and . Define

We will prove the inequalities (43) and

hold for any by induction. By the Lipschitz continuity of , we have for ,

Then, when , it is easy to deduce (43) by (44) and (45). Moreover, when we take and substitute into (48), we can obtain

So we have that (43) and (47) hold for . Assume that (43) and (47) hold for ; then one has

and

Then by the definition of (46), one has

Combining (42) and (44), it can be seen that

Thus, we can get that (47) holds for all . This completes the proof. □

Based on the above discussion and the relation (42), we can show the superlinear convergence of Algorithm 1.

Theorem 2.

Let Assumption A1 hold and sequence be generated by Algorithm 1 with , where is updated by (15). Then there is a constant such that when , , one has

Then the sequence is superlinearly convergent.

Proof.

Let be defined as in (1), and for all k one has

It follows from (41) that

Summing the above inequality and combining (51) and (54), we can deduce

which means that the nonnegative constants , and all tend to zero when . Furthermore, according to the definition of (37), we have that

First, we have

For the case , one has , and ; and then (53) is true.

Moreover, it is easy to deduce that

We also have

For the case , one has , , ; then (53) is true by (56)–(58). Thus, the relation (53) holds for all k.

Next, we will show that (53) indicates that the sufficient condition [6]

holds. According to (47), one has that there is a constant such that , where denotes the eigenvalues of , . When we let , one has

and

When , since , then one has from (53) that

which is the well-known Dennis–Moré condition. Thus, we get the superlinear convergence. □
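For reference, the Dennis–Moré condition of [6] is commonly written in the following form, where $x^*$ denotes the limit of the iterates and $s_k = x_{k+1} - x_k$. The notation is the standard one and is assumed here rather than taken from the displays of this paper.

```latex
\lim_{k \to \infty}
\frac{\left\| \left( B_k - \nabla^2 f(x^*) \right) s_k \right\|}{\left\| s_k \right\|} = 0 .
```

Informally, the condition asks only that $B_k$ agree with the true Hessian along the step directions, not that $B_k$ converge to the Hessian as a matrix.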

5. Numerical Experiments

The performance in [29] shows that the MCQN update with the BFGS method has better numerical performance than the MCQN update with DFP method. Hence, we compare the numerical performance of Algorithm 1 with the MCQN update with BFGS method and the limited-memory BFGS method.

The 24 test problems with initial points are given in Table 1, which are from [29,42,43,44]. It can be seen that all the test problems have special Hessian structures such as band matrices, so the chordal extension of the sparsity could be obtained easily. Then in Algorithm 1 can be written as the sparse clique-factorization formula.

Table 1.

The test problems.

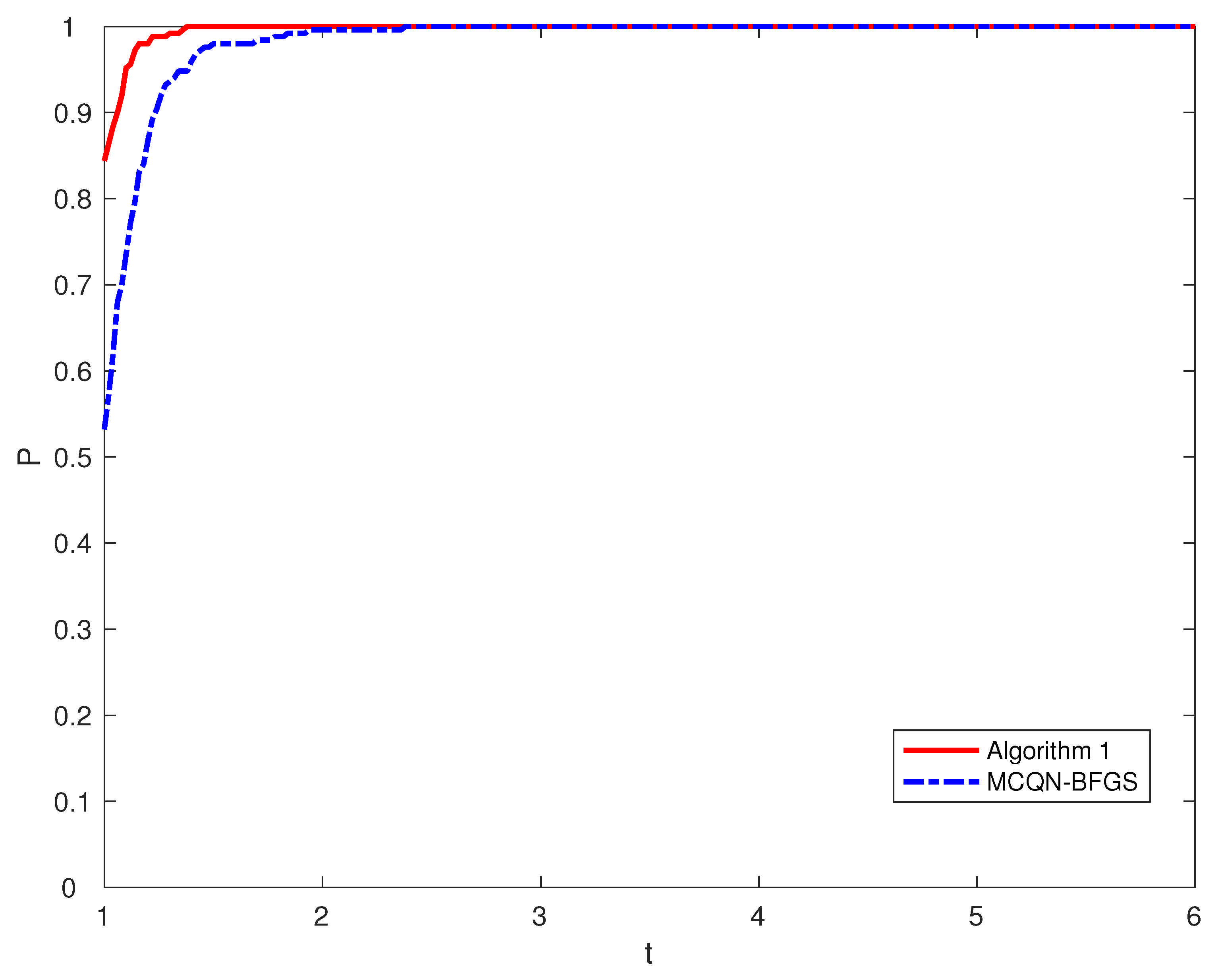

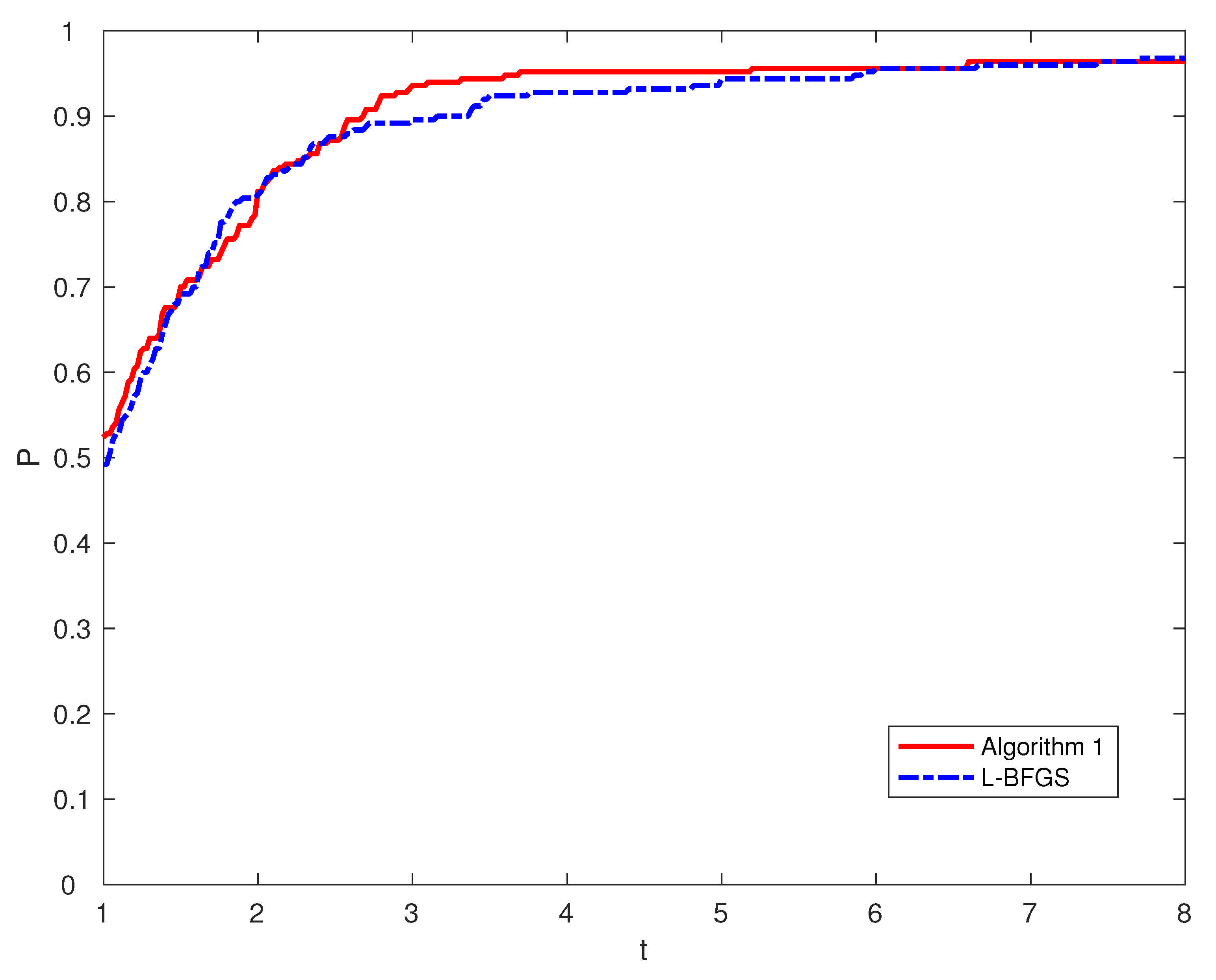

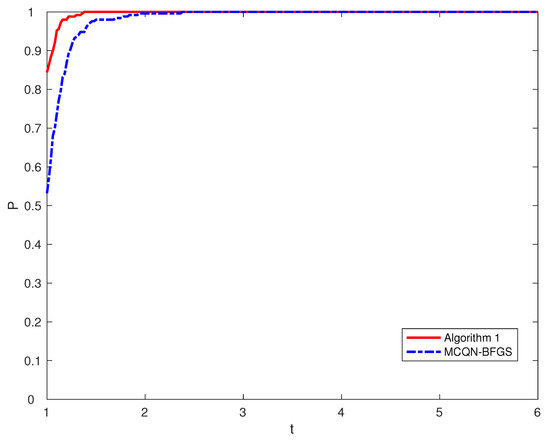

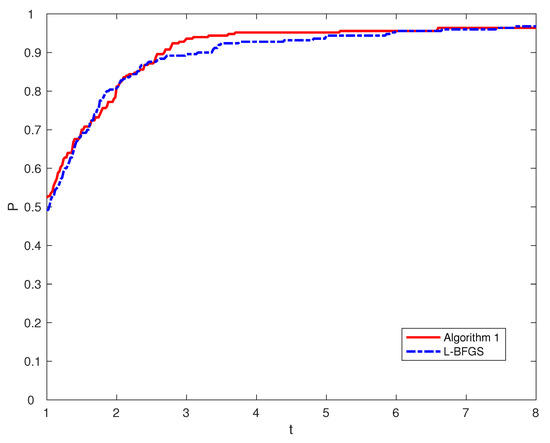

All the methods were coded in MATLAB R2016a on a Core (TM) i5 PC. The automatic differentiation was computed by ADMAT 2.0, which is available on the Cayuga Research GitHub page. Tables 1–4 and Figures 1 and 2 report the numerical performance of the three methods. For convenience, we use the following notation in our numerical results.

- Pro: the problems;

- Dim: the dimensions of the test problem;

- Init: the initial points;

- Method: the algorithm used to solve the problem;

- MCQN-BFGS: MCQN update with the BFGS method;

- L-BFGS: the limited-memory BFGS method.

Table 2.

Numbers of iterations for problems 1–12.

Table 3.

Numbers of iterations for problems 13–24.

Table 4.

Results of Dim = 1000 with different initial points.

Figure 1.

Performance profiles based on the numbers of iterations.

Figure 2.

Performance profiles based on the numbers of iterations.

We adopted the termination criterion as follows:

Firstly, we tested all three methods on the above 24 problems, whose dimensions are 10, 20, 50, 100, 200, 500, 1000, 2000, 5000, and 10,000. We set in the limited-memory BFGS method. Table 2 and Table 3 contain the numbers of iterations of the three methods for the test problems. In terms of the total number of iterations, Algorithm 1 outperformed the MCQN update with the BFGS method on 11 problems (2, 4, 5, 7, 9, 10, 12, 14, 18, 23, 24). Additionally, Algorithm 1 outperformed the limited-memory BFGS method on 13 problems (1, 2, 3, 7, 9, 12, 15, 16, 18, 19, 20, 21, 23).

For a more precise comparison, we adopted the performance profiles from [45], which are distribution functions of a performance metric. We denote by $P$ and $S$ the test set and the set of solvers, and by $n_p$ and $n_s$ the number of problems and the number of solvers, respectively. For solver $s \in S$ and problem $p \in P$, we define $t_{p,s}$ as the number of iterations or the number of function evaluations required to solve problem $p$ using solver $s$. Then, using the performance ratio

$$r_{p,s} = \frac{t_{p,s}}{\min\{t_{p,s} : s \in S\}},$$

we define

$$\rho_s(\tau) = \frac{1}{n_p}\,\mathrm{size}\{p \in P : r_{p,s} \le \tau\},$$

where $r_{p,s} \le r_M$ for some constant $r_M$ for all $p$ and $s$. The equality $r_{p,s} = r_M$ holds if and only if solver $s$ cannot solve problem $p$. Therefore, $\rho_s(\tau)$ is the probability for solver $s$ that its performance ratio $r_{p,s}$ is within a factor $\tau$ of the best possible ratio.
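The performance profile of [45] can be computed in a few lines. The sketch below takes a table of costs per problem and solver, forms each ratio as the solver's cost divided by the best cost on that problem, and returns for each solver the fraction of problems whose ratio is at most a given factor `tau`. The function name, the use of `None` to mark a failure, and the sample counts are assumptions for illustration and are not results from this paper.

```python
def performance_profile(costs, tau, failure=None):
    """Fraction of problems each solver handles within a factor tau of the best.

    `costs[p][s]` is the cost (for example, the iteration count) of solver s
    on problem p, or `failure` if the solver did not solve the problem.
    Every problem is assumed to be solved by at least one solver.
    """
    n_problems = len(costs)
    n_solvers = len(costs[0])
    counts = [0] * n_solvers
    for row in costs:
        best = min(c for c in row if c is not failure)
        for s, c in enumerate(row):
            # A failed run never counts, whatever the value of tau.
            if c is not failure and c / best <= tau:
                counts[s] += 1
    return [count / n_problems for count in counts]


# Hypothetical iteration counts: 4 problems (rows) and 2 solvers (columns).
costs = [
    [10, 12],
    [30, 15],
    [8, None],   # the second solver fails on this problem
    [20, 20],
]
rho_1 = performance_profile(costs, tau=1.0)   # share of problems won or tied
rho_2 = performance_profile(costs, tau=2.0)   # share solved within twice the best
```

The value at `tau = 1` measures how often a solver is the best, while the behavior for large `tau` measures robustness, so a curve that lies on top across the whole range indicates the preferable solver.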

Figure 1 compares the number of iterations of Algorithm 1 and the MCQN update with the BFGS method by using performance profiles. The top curve corresponds to Algorithm 1, which shows that Algorithm 1 performed better than the MCQN update with the BFGS method. Additionally, Figure 2 demonstrates that Algorithm 1 performed better than the limited-memory BFGS method.

Secondly, for a further comparison of Algorithm 1 and the MCQN update with the BFGS method, we tested five different initial points, , , , , and , where is specified in Table 1. The dimension of the test problems was 1000. Table 4 reports the number of iterations required by the two methods for the 24 test problems, which also demonstrates that Algorithm 1 was effective and superior to the MCQN update with the BFGS method.

6. Conclusions

In this paper, we presented a symmetric rank-two quasi-Newton update method based on an adjoint tangent condition for solving unconstrained optimization problems. Combined with the idea of matrix completion, we proposed a sparse quasi-Newton algorithm and established its local and superlinear convergence. Numerical results on 24 test problems indicate that, in terms of iteration counts, the proposed algorithm compares favorably with the MCQN update with the BFGS method and the limited-memory BFGS method, and that it can be used to solve large-scale unconstrained optimization problems.

Author Contributions

Conceptualization, H.C.; methodology, H.C. and X.A.; software, H.C. and X.A.; formal analysis, H.C.; writing—original draft preparation, H.C. and X.A.; writing—review and editing H.C. and X.A. All authors have read and agreed to the published version of the manuscript.

Funding

The work is supported by the National Natural Science Foundation of China, grant number 11701577; the Natural Science Foundation of Hunan Province, China, grant number 2020JJ5960; and the Scientific Research Foundation of Hunan Provincial Education Department, China, grant number 18C0253.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the research plan and all the code used in this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhou, W. A modified BFGS type quasi-Newton method with line search for symmetric nonlinear equations problems. J. Comput. Appl. Math. 2020, 367, 112454.

- Zhou, W. A globally convergent BFGS method for symmetric nonlinear equations. J. Ind. Manag. Optim. 2021.

- Zhou, W. A class of line search-type methods for nonsmooth convex regularized minimization. Soft Comput. 2021, 25, 7131–7141.

- Zhou, W.; Zhang, L. A modified Broyden-like quasi-Newton method for nonlinear equations. J. Comput. Appl. Math. 2020, 372, 112744.

- Sabi’u, J.; Muangchoo, K.; Shah, A.; Abubakar, A.B.; Jolaoso, L.O. A Modified PRP-CG Type Derivative-Free Algorithm with Optimal Choices for Solving Large-Scale Nonlinear Symmetric Equations. Symmetry 2021, 13, 234.

- Dennis, J.E.; Moré, J.J. A characterization of superlinear convergence and its application to quasi-Newton methods. Math. Comput. 1974, 28, 549–560.

- Dennis, J.E.; Moré, J.J. Quasi–Newton methods, motivation and theory. SIAM Rev. 1977, 19, 46–89.

- Davidon, W.C. Variable metric method for minimization. In Research Development Report ANL-5990; University of Chicago: Chicago, IL, USA, 1959.

- Fletcher, R.; Powell, M.J. A rapidly convergent descent method for minimization. Comput. J. 1963, 6, 163–168.

- Broyden, C.G. The convergence of a class of double-rank minimization algorithms 1. General considerations. IMA J. Appl. Math. 1970, 6, 76–90.

- Fletcher, R. A new approach to variable metric algorithms. Comput. J. 1970, 13, 317–322.

- Goldfarb, D. A family of variable-metric methods derived by variational means. Math. Comput. 1970, 24, 23–26.

- Shanno, D.F. Conditioning of quasi-Newton methods for function minimization. Math. Comput. 1970, 24, 647–656.

- Quasi–Newton Methods. In Optimization Theory and Methods; Springer Series in Optimization and Its Applications; Springer: Boston, MA, USA, 2006; Volume 1, pp. 203–301.

- Quasi–Newton Methods. In Numerical Optimization; Springer Series in Operations Research and Financial Engineering; Springer: New York, NY, USA, 2006.

- Sun, W.; Yuan, Y.X. Optimization Theory and Methods: Nonlinear Programming; Springer Science & Business Media: New York, NY, USA, 2006.

- Andrei, N. Continuous Nonlinear Optimization for Engineering Applications in GAMS Technology; Springer Optimization and Its Applications Series; Springer: Berlin, Germany, 2017; Volume 121.

- Schubert, L.K. Modification of a quasi-Newton method for nonlinear equations with a sparse Jacobian. Math. Comput. 1970, 24, 27–30.

- Powell, M.J.D.; Toint, P.L. On the estimation of sparse Hessian matrices. SIAM J. Numer. Anal. 1979, 16, 1060–1074.

- Toint, P. Towards an efficient sparsity exploiting Newton method for minimization. In Sparse Matrices and Their Uses; Academic Press: London, UK, 1981; pp. 57–88.

- Nocedal, J. Updating quasi-Newton matrices with limited storage. Math. Comput. 1980, 35, 773–782.

- Liu, D.C.; Nocedal, J. On the limited memory BFGS method for large scale optimization. Math. Program. 1989, 45, 503–528.

- Griewank, A.; Toint, P.L. Partitioned variable metric updates for large structured optimization problems. Numer. Math. 1982, 39, 119–137.

- Griewank, A.; Toint, P.L. Local convergence analysis for partitioned quasi-Newton updates. Numer. Math. 1982, 39, 429–448.

- Griewank, A. The global convergence of partitioned BFGS on problems with convex decompositions and Lipschitzian gradients. Math. Program. 1991, 50, 141–175.

- Cao, H.P.; Li, D.H. Partitioned quasi-Newton methods for sparse nonlinear equations. Comput. Optim. Appl. 2017, 66, 481–505.

- Toint, P.L. On sparse and symmetric matrix updating subject to a linear equation. Math. Comput. 1977, 31, 954–961.

- Fletcher, R. An optimal positive definite update for sparse Hessian matrices. SIAM J. Optim. 1995, 5, 192–218.

- Yamashita, N. Sparse quasi-Newton updates with positive definite matrix completion. Math. Program. 2008, 115, 1–30.

- Dai, Y.H.; Yamashita, N. Analysis of sparse quasi-Newton updates with positive definite matrix completion. J. Oper. Res. Soc. China 2014, 2, 39–56.

- Dai, Y.H.; Yamashita, N. Convergence analysis of sparse quasi-Newton updates with positive definite matrix completion for two-dimensional functions. Numer. Algebr. Control. Optim. 2011, 1, 61–69.

- Nazareth, J.L. If quasi-Newton then why not quasi-Cauchy. SIAG/Opt Views-and-News 1995, 6, 11–14.

- Dennis, J.E., Jr.; Wolkowicz, H. Sizing and least-change secant methods. SIAM J. Numer. Anal. 1993, 30, 1291–1314.

- Andrei, N. A diagonal quasi-Newton updating method for unconstrained optimization. Numer. Algorithms 2019, 81, 575–590.

- Andrei, N. A new diagonal quasi-Newton updating method with scaled forward finite differences directional derivative for unconstrained optimization. Numer. Funct. Anal. Optim. 2019, 40, 1467–1488.

- Andrei, N. Diagonal Approximation of the Hessian by Finite Differences for Unconstrained Optimization. J. Optim. Theory Appl. 2020, 185, 859–879.

- Andrei, N. A new accelerated diagonal quasi-Newton updating method with scaled forward finite differences directional derivative for unconstrained optimization. Optimization 2021, 70, 345–360.

- Leong, W.J.; Enshaei, S.; Kek, S.L. Diagonal quasi-Newton methods via least change updating principle with weighted Frobenius norm. Numer. Algorithms 2021, 86, 1225–1241.

- Schlenkrich, S.; Griewank, A.; Walther, A. On the local convergence of adjoint Broyden methods. Math. Program. 2010, 121, 221–247.

- Byrd, R.H.; Nocedal, J. A tool for the analysis of quasi-Newton methods with application to unconstrained minimization. SIAM J. Numer. Anal. 1989, 26, 727–739.

- Byrd, R.H.; Nocedal, J.; Yuan, Y.X. Global convergence of a class of quasi-Newton methods on convex problems. SIAM J. Numer. Anal. 1987, 24, 1171–1190.

- Moré, J.J.; Garbow, B.S.; Hillstrom, K.E. Testing unconstrained optimization software. ACM Trans. Math. Softw. (TOMS) 1981, 7, 17–41.

- Luksan, L.; Matonoha, C.; Vlcek, J. Modified CUTE Problems for Sparse Unconstrained Optimization; Technical Report 1081; Institute of Computer Science, Academy of Sciences of the Czech Republic: Prague, Czech Republic, 2010.

- Andrei, N. An unconstrained optimization test functions collection. Adv. Model. Optim. 2008, 10, 147–161.

- Dolan, E.D.; Moré, J.J. Benchmarking optimization software with performance profiles. Math. Program. 2002, 91, 201–213.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).