A Software Tool for Exploring the Relation between Diagnostic Accuracy and Measurement Uncertainty

Abstract

1. Introduction

- Sensitivity (Se), specificity (Sp), diagnostic odds ratio (DOR), and the likelihood ratios for a positive or a negative result (LR+ and LR−, respectively), which are defined conditionally on the true disease status [2] and are prevalence-invariant.

- Overall diagnostic accuracy (ODA), which is defined conditionally on the true disease status and is prevalence-dependent.

- Positive predictive and negative predictive value (PPV and NPV), which are defined conditionally on the test outcome and are prevalence-dependent.

- The expected loss for the testing procedure, for a true negative result, for a false negative result, for a true positive result and for a false positive result, defined on the same scale.

- The probabilities for a true negative result, for a false negative result, for a true positive result and for a false positive result.
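As an illustration of the measures listed above, the following minimal Python sketch computes them from the four cells of a 2×2 table using their standard textbook definitions. The function name `accuracy_measures` and the example counts are hypothetical and are not taken from the software described in this article.

```python
def accuracy_measures(tn, fn, tp, fp):
    """Standard diagnostic accuracy measures from 2x2 table counts.

    Illustrative only: no guard against empty cells (division by zero).
    """
    se = tp / (tp + fn)   # sensitivity: P(test positive | diseased)
    sp = tn / (tn + fp)   # specificity: P(test negative | non-diseased)
    total = tn + fn + tp + fp
    return {
        "Se": se,
        "Sp": sp,
        "PPV": tp / (tp + fp),        # conditional on a positive test
        "NPV": tn / (tn + fn),        # conditional on a negative test
        "ODA": (tp + tn) / total,     # overall diagnostic accuracy
        "LR+": se / (1 - sp),
        "LR-": (1 - se) / sp,
        "DOR": (tp * tn) / (fp * fn),
    }


# Hypothetical counts: 50 diseased and 950 non-diseased subjects.
measures = accuracy_measures(tn=850, fn=10, tp=40, fp=100)
```

With these counts, the diagnostic odds ratio equals the ratio LR+ / LR−, which is a quick consistency check on the definitions.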

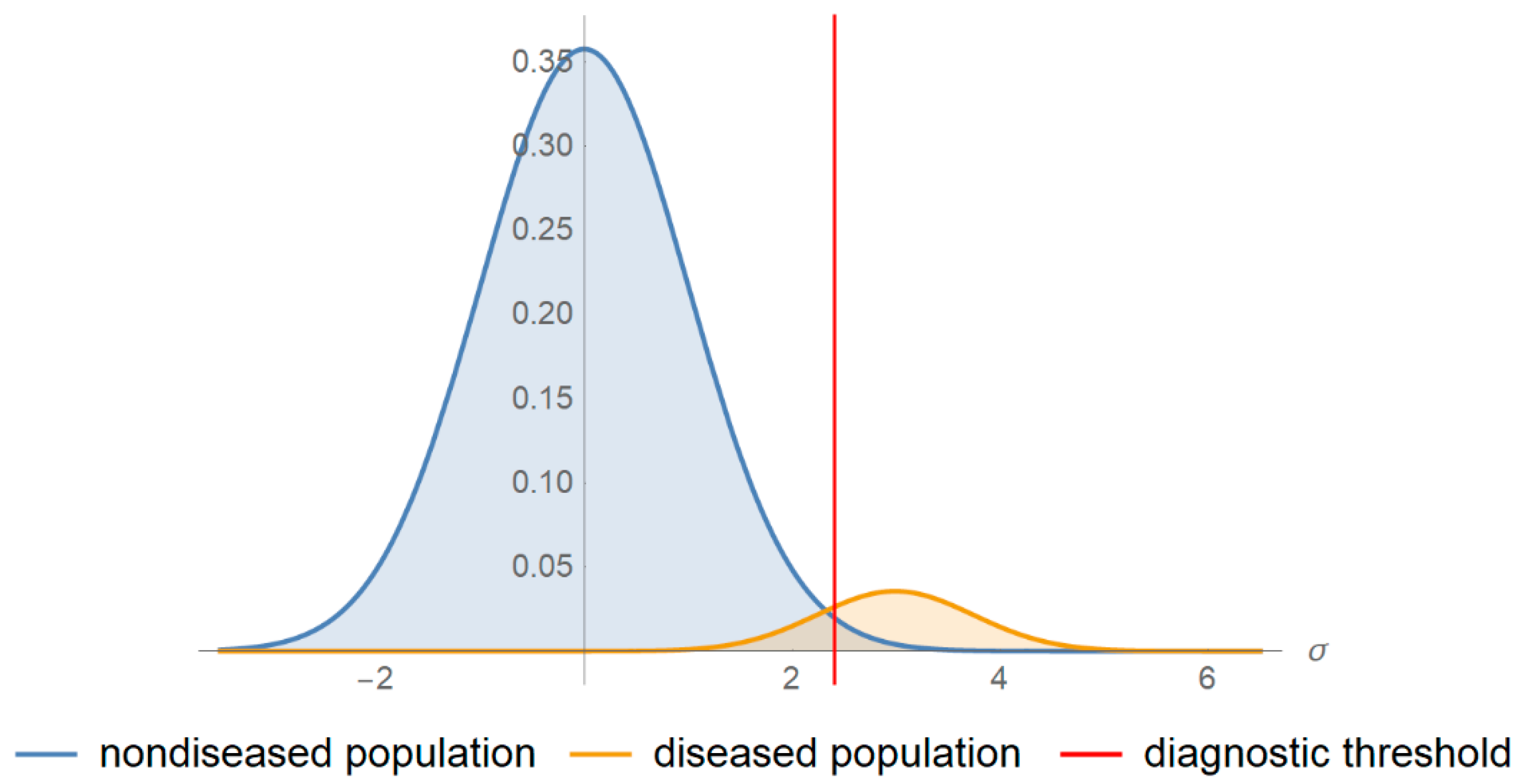

Relation between Diagnostic Accuracy and Measurement Uncertainty

2. Materials and Methods

- There is a reference (“gold standard”) diagnostic method that correctly classifies a subject as diseased or non-diseased [16].

- The parameters of the distributions of the measurand are known.

- The measurement uncertainty is normally distributed and homoscedastic in the diagnostic threshold’s range.

- If the measurement is above the threshold, the patient is classified as test-positive; otherwise, as test-negative.
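The assumptions above can be sketched in a few lines of Python. This is a minimal illustration, not the program's implementation: it assumes that the measurand is normally distributed in each population, that the measurement uncertainty `u` is independent of the measurand (so the variances add), and that all parameter values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def se_sp(threshold, mu_d, sd_d, mu_n, sd_n, u):
    """Sensitivity and specificity at a diagnostic threshold.

    mu_d, sd_d: mean and SD of the measurand in the diseased population
    mu_n, sd_n: mean and SD of the measurand in the non-diseased population
    u: standard measurement uncertainty (assumed normal and homoscedastic)
    """
    # Assumption: uncertainty is independent of the measurand, so variances add.
    measured_d = NormalDist(mu_d, sqrt(sd_d**2 + u**2))
    measured_n = NormalDist(mu_n, sqrt(sd_n**2 + u**2))
    se = 1.0 - measured_d.cdf(threshold)  # diseased measured above the threshold
    sp = measured_n.cdf(threshold)        # non-diseased measured at or below it
    return se, sp


# Hypothetical parameters: the same threshold with and without uncertainty.
se_exact, sp_exact = se_sp(1.5, mu_d=3.0, sd_d=1.0, mu_n=0.0, sd_n=1.0, u=0.0)
se_noisy, sp_noisy = se_sp(1.5, mu_d=3.0, sd_d=1.0, mu_n=0.0, sd_n=1.0, u=1.0)
```

In this example the threshold lies between the two population means, so increasing `u` widens both measurement distributions and lowers both sensitivity and specificity.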

3. Results

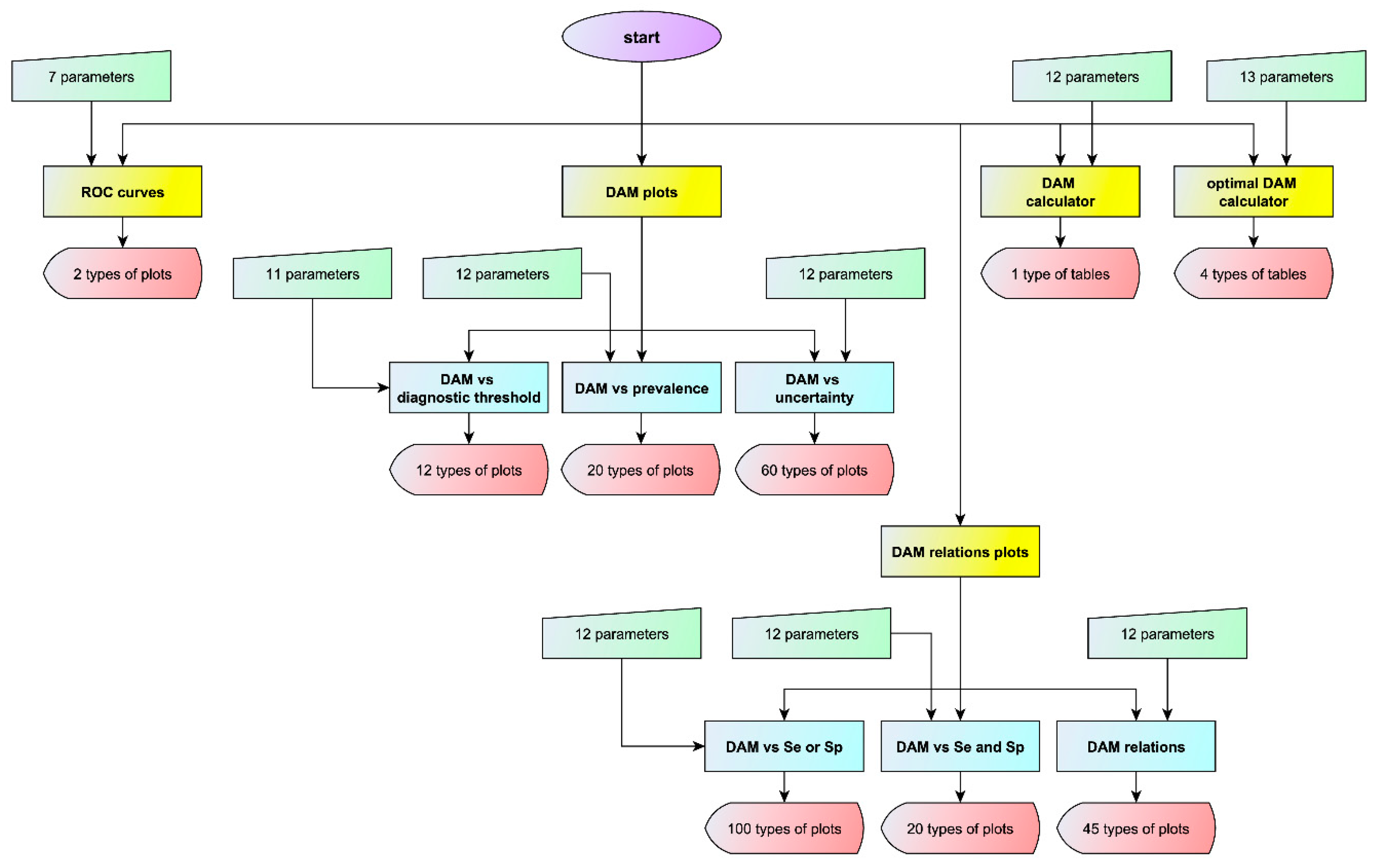

3.1. Interface of the Program

3.1.1. ROC Curves Module

3.1.2. Diagnostic Accuracy Measures Plots Module

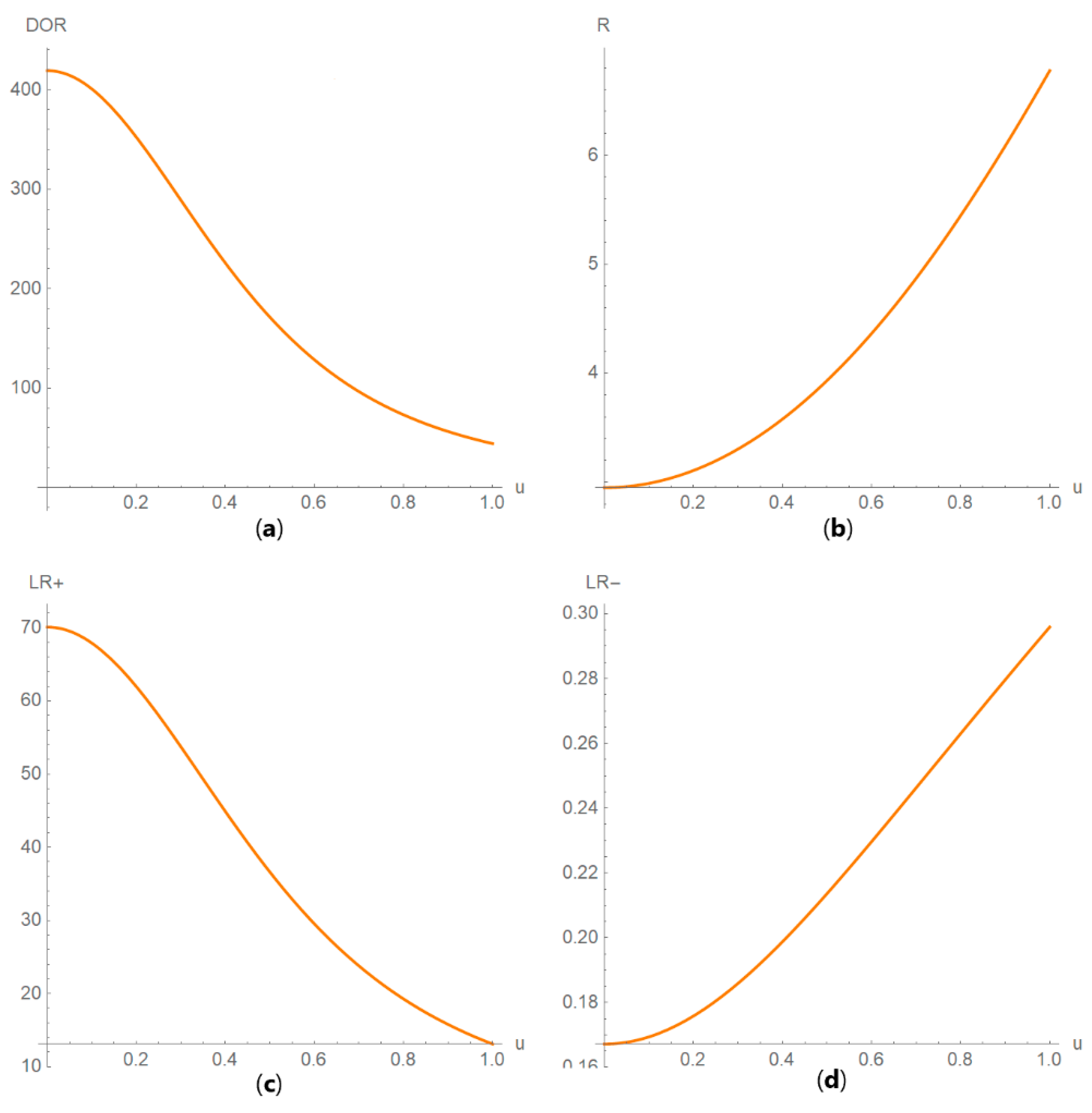

- Diagnostic accuracy measures against diagnostic threshold

- Diagnostic accuracy measures against prevalence

- Diagnostic accuracy measures against standard measurement uncertainty
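To illustrate the kind of relation such plots display, the sketch below computes the predictive values against prevalence for fixed sensitivity and specificity, using Bayes' theorem. The function name `predictive_values` and the numerical values are hypothetical; plotting is omitted.

```python
def predictive_values(se, sp, prevalence):
    """PPV and NPV from sensitivity, specificity, and prevalence."""
    p_tp = se * prevalence              # probability of a true positive
    p_fn = (1 - se) * prevalence        # probability of a false negative
    p_tn = sp * (1 - prevalence)        # probability of a true negative
    p_fp = (1 - sp) * (1 - prevalence)  # probability of a false positive
    ppv = p_tp / (p_tp + p_fp)
    npv = p_tn / (p_tn + p_fn)
    return ppv, npv


# Each tuple is (prevalence, PPV, NPV) for a test with Se = Sp = 0.9.
curve = [(v, *predictive_values(0.9, 0.9, v)) for v in (0.01, 0.1, 0.5)]
```

Sensitivity and specificity stay fixed across the sweep, while the PPV rises and the NPV falls as prevalence increases.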

3.1.3. Diagnostic Accuracy Measures Relations Plots Module

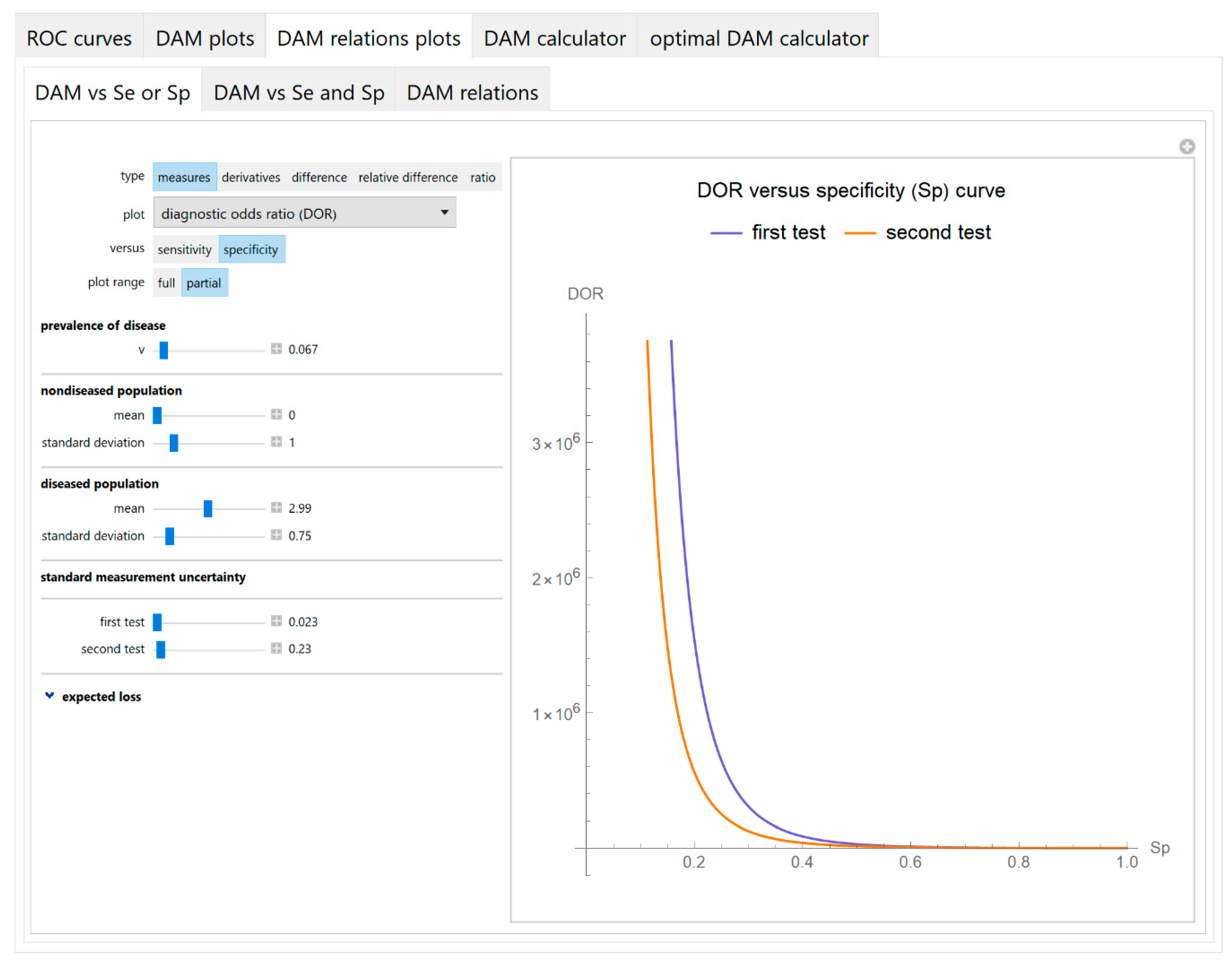

- Diagnostic accuracy measures against sensitivity or specificity

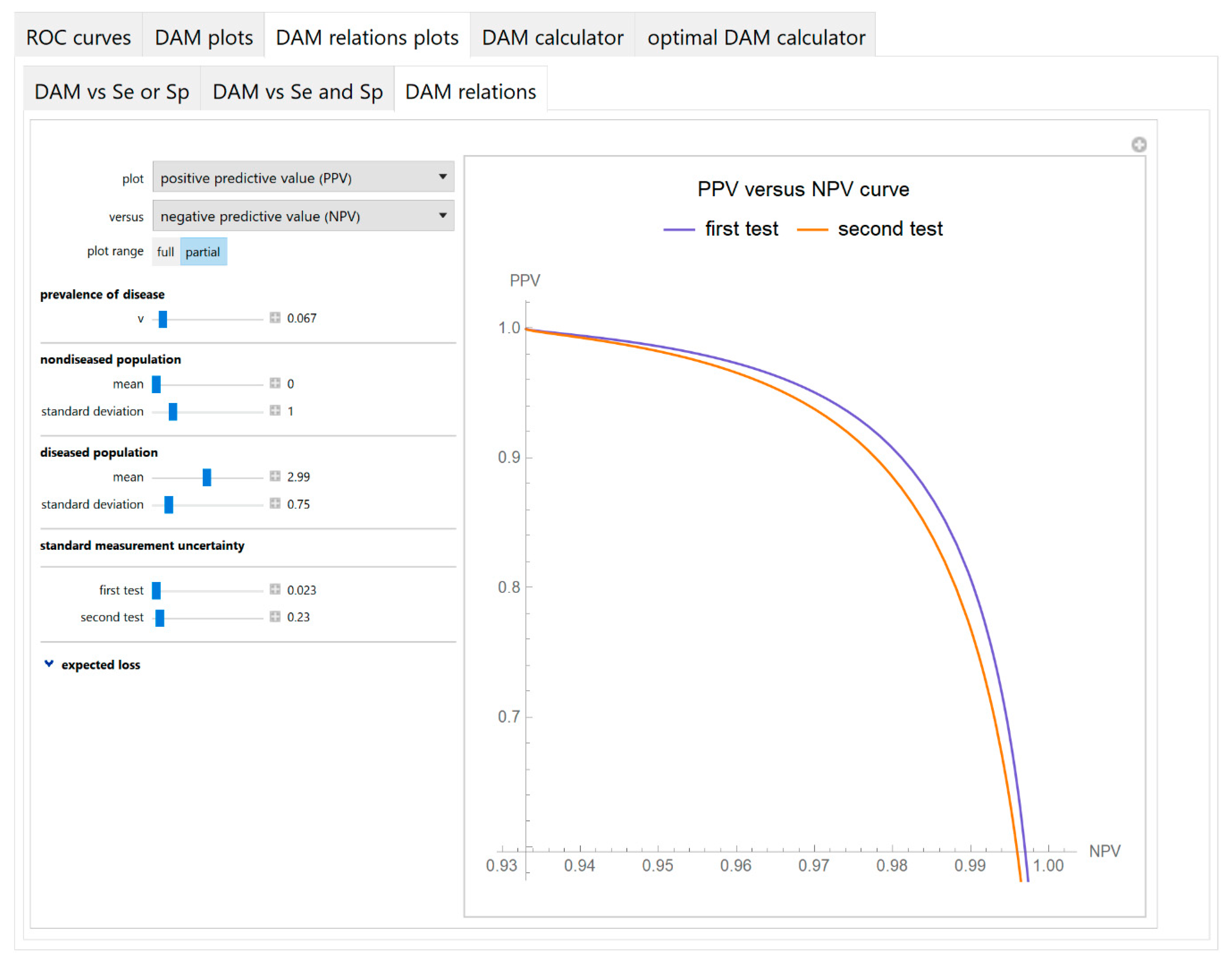

- Diagnostic accuracy measures against sensitivity and specificity

- Diagnostic accuracy measures relations

3.1.4. Diagnostic Accuracy Measures Calculator Module

3.1.5. Optimal Diagnostic Accuracy Measures Calculator Module

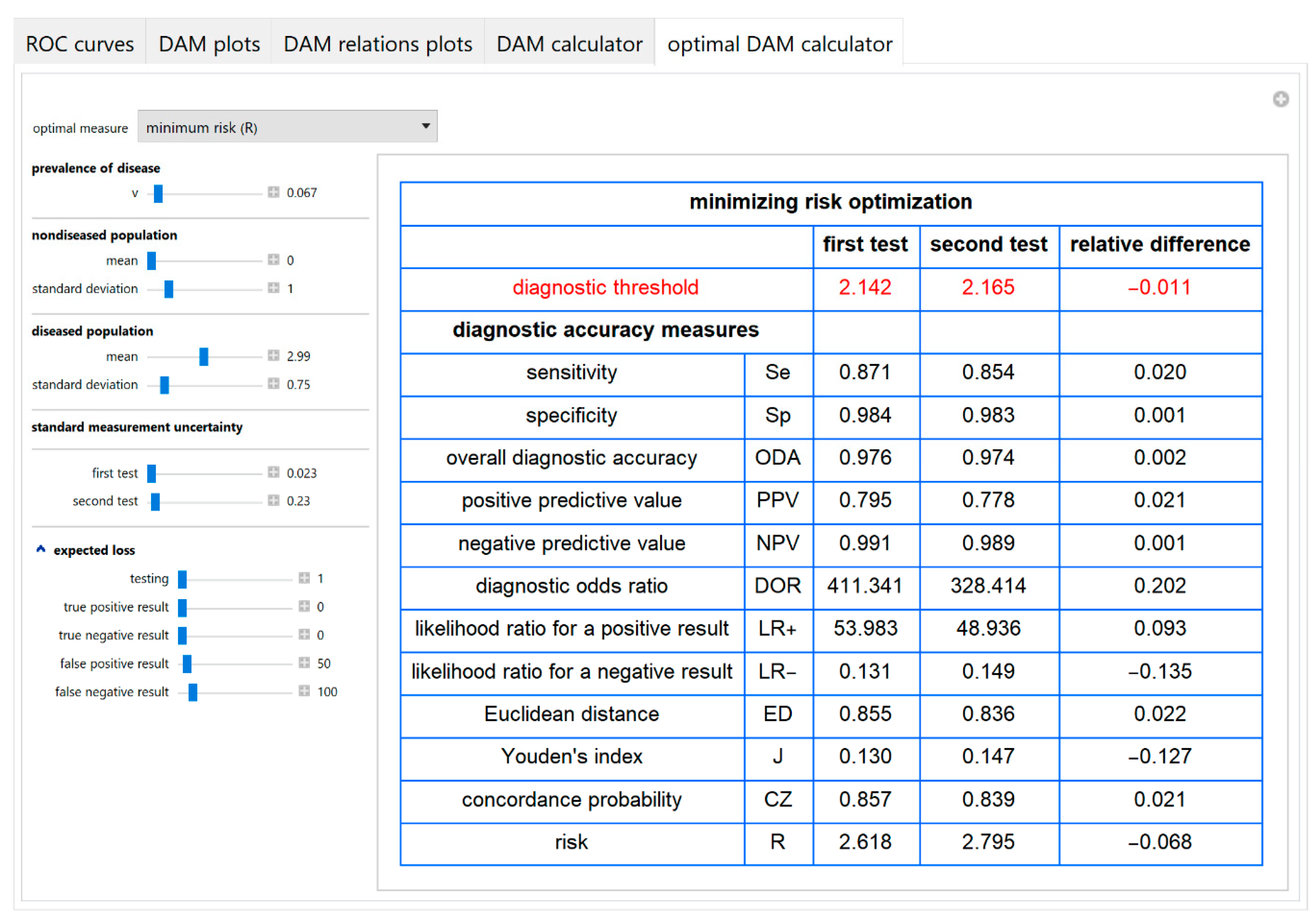
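As a rough sketch of what an optimal-threshold calculation involves, the Python code below searches a grid of thresholds for the one that maximizes Youden's index (J = Se + Sp − 1), minimizes the Euclidean distance of the ROC point from (0, 1), or maximizes the concordance probability (CZ = Se · Sp). The grid search, the function names, and the parameter values are assumptions made for illustration; the optimization method used by the program is not described here. Risk is omitted because it additionally requires the prevalence and the loss parameters.

```python
from math import hypot, sqrt
from statistics import NormalDist


def se_sp(threshold, mu_d, sd_d, mu_n, sd_n, u):
    """Sensitivity and specificity under a normal model with uncertainty u."""
    se = 1.0 - NormalDist(mu_d, sqrt(sd_d**2 + u**2)).cdf(threshold)
    sp = NormalDist(mu_n, sqrt(sd_n**2 + u**2)).cdf(threshold)
    return se, sp


def optimal_thresholds(mu_d, sd_d, mu_n, sd_n, u, steps=2000):
    """Grid-search the thresholds that optimize J, ED, and CZ."""
    lo, hi = mu_n - 4 * sd_n, mu_d + 4 * sd_d
    grid = [lo + (hi - lo) * i / steps for i in range(steps + 1)]

    def youden(t):
        se, sp = se_sp(t, mu_d, sd_d, mu_n, sd_n, u)
        return se + sp - 1.0

    def euclidean(t):
        se, sp = se_sp(t, mu_d, sd_d, mu_n, sd_n, u)
        return hypot(1.0 - se, 1.0 - sp)

    def concordance(t):
        se, sp = se_sp(t, mu_d, sd_d, mu_n, sd_n, u)
        return se * sp

    return {
        "J": max(grid, key=youden),
        "ED": min(grid, key=euclidean),
        "CZ": max(grid, key=concordance),
    }


# Hypothetical symmetric case: equal SDs, means at 0 and 2.
best = optimal_thresholds(mu_d=2.0, sd_d=1.0, mu_n=0.0, sd_n=1.0, u=0.5)
```

In this symmetric example all three criteria select the midpoint between the two means; with unequal standard deviations they generally differ.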

3.2. Illustrative Case Study

- In Table 5.

4. Discussion

- The existence of a “gold standard” diagnostic method. If a “gold standard” does not exist, there are alternative approaches for the estimation of diagnostic accuracy measures [42].

- The parameters of the distributions of the measurand are assumed to be known. In practice, they are estimated [43].

- The normality of either the measurements or their applicable transforms [17,18,44,45], an assumption that is usually valid. There is related literature on the distribution of measurements of diagnostic tests, in the context of reference intervals and diagnostic thresholds or clinical decision limits [46,47,48,49,50].

- The homoscedasticity of the measurement uncertainty in the diagnostic threshold’s range. If measurement uncertainty is heteroscedastic, thus skewing the measurement distribution, appropriate transformations may restore homoscedasticity [53].
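Several of the references cited above for transforms concern the Box–Cox transformation. A minimal sketch of its one-parameter form follows; the function name `box_cox` is hypothetical, and the choice of the parameter `lam` (for example by maximum likelihood) is not shown.

```python
from math import log


def box_cox(x, lam):
    """One-parameter Box-Cox transform of a strictly positive value x."""
    if x <= 0:
        raise ValueError("Box-Cox requires strictly positive values")
    if lam == 0:
        return log(x)             # limiting case as lam approaches 0
    return (x**lam - 1.0) / lam


# Hypothetical right-skewed measurements, transformed with lam = 0.5.
transformed = [box_cox(x, 0.5) for x in (1.0, 4.0, 9.0)]
```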

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

Appendix A

1. Populations

2. Test Outcomes

3. Diagnostic Accuracy Measures

4. Parameters

5. Expected Loss

6. Functions and Relations

Appendix B

- Software Availability and Requirements

References

1. Šimundić, A.-M. Measures of diagnostic accuracy: Basic definitions. EJIFCC 2009, 19, 203–211.

2. Shiu, S.-Y.; Gatsonis, C. The predictive receiver operating characteristic curve for the joint assessment of the positive and negative predictive values. Philos. Trans. A Math. Phys. Eng. Sci. 2008, 366, 2313–2333.

3. McNeil, B.J.; Hanley, J.A. Statistical approaches to the analysis of receiver operating characteristic (ROC) curves. Med. Decis. Mak. 1984, 4, 137–150.

4. Hanley, J.A.; McNeil, B.J. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology 1982, 143, 29–36.

5. Hilden, J. The area under the ROC curve and its competitors. Med. Decis. Mak. 1991, 11, 95–101.

6. Hatjimihail, A.T. The Area Over a Receiver Operating Characteristic (ROC) Curve as an Index of Diagnostic Inaccuracy: Wolfram Demonstrations Project. 2011. (updated 3/7/2011). Available online: https://demonstrations.wolfram.com/TheAreaOverAReceiverOperatingCharacteristicROCCurveAsAnIndex/ (accessed on 28 June 2020).

7. Youden, W.J. Index for rating diagnostic tests. Cancer 1950, 3, 32–35.

8. Hajian-Tilaki, K. The choice of methods in determining the optimal cut-off value for quantitative diagnostic test evaluation. Stat. Methods Med. Res. 2018, 27, 2374–2383.

9. Liu, X. Classification accuracy and cut point selection. Stat. Med. 2012, 31, 2676–2686.

10. Joint Committee for Guides in Metrology. Evaluation of Measurement Data—Guide to the Expression of Uncertainty in Measurement; Joint Committee for Guides in Metrology: Sèvres, Paris, France, 2008.

11. Kallner, A.; Boyd, J.C.; Duewer, D.L.; Giroud, C.; Hatjimihail, A.T.; Klee, G.G.; Lo, S.F.; Pennello, G.; Sogin, D.; Tholen, D.W.; et al. Expression of Measurement Uncertainty in Laboratory Medicine; Approved Guideline; Clinical and Laboratory Standards Institute: Annapolis Junction, MD, USA, 2012.

12. White, G.H. Basics of estimating measurement uncertainty. Clin. Biochem. Rev. 2008, 29 (Suppl. 1), S53–S60.

13. Oosterhuis, W.P.; Theodorsson, E. Total error vs. measurement uncertainty: Revolution or evolution? Clin. Chem. Lab. Med. 2016, 54, 235–239.

14. Smith, A.F.; Shinkins, B.; Hall, P.S.; Hulme, C.T.; Messenger, M.P. Toward a framework for outcome-based analytical performance specifications: A methodology review of indirect methods for evaluating the impact of measurement uncertainty on clinical outcomes. Clin. Chem. 2019, 65, 1363–1374.

15. Ceriotti, F.; Fernandez-Calle, P.; Klee, G.G.; Nordin, G.; Sandberg, S.; Streichert, T.; Vives-Corrons, J.-L.; Panteghini, M. Criteria for assigning laboratory measurands to models for analytical performance specifications defined in the 1st EFLM Strategic Conference. Clin. Chem. Lab. Med. 2017, 55, 189–194.

16. Bloch, D.A. Comparing two diagnostic tests against the same “Gold Standard” in the same sample. Biometrics 1997, 53, 73–85.

17. Sakia, R.M. The box-cox transformation technique: A review. J. R. Stat. Soc. Ser. D (Statistician) 1992, 41, 169–178.

18. Gillard, J. A generalised Box–Cox transformation for the parametric estimation of clinical reference intervals. J. Appl. Stat. 2012, 39, 2231–2245.

19. Wolfram, S. An Elementary Introduction to the Wolfram Language, 2nd ed.; Wolfram Media: Champaign, IL, USA, 2017; 340p.

20. Wolfram Research, Inc. Mathematica, Version 12.0; Wolfram Research: Champaign, IL, USA, 2019.

21. Hatjimihail, A.T. Receiver Operating Characteristic Curves and Uncertainty of Measurement: Wolfram Demonstrations Project. 2007. (updated 6/12/2007). Available online: https://demonstrations.wolfram.com/ReceiverOperatingCharacteristicCurvesAndUncertaintyOfMeasure/ (accessed on 28 June 2020).

22. Hatjimihail, A.T. Uncertainty of Measurement and Areas Over and Under the ROC Curves: Wolfram Demonstrations Project. 2009. (updated 4/20/2009). Available online: https://demonstrations.wolfram.com/UncertaintyOfMeasurementAndAreasOverAndUnderTheROCCurves/ (accessed on 28 June 2020).

23. Hatjimihail, A.T. Uncertainty of Measurement and Diagnostic Accuracy Measures: Wolfram Demonstrations Project. 2009. (updated 5/26/2009). Available online: https://demonstrations.wolfram.com/UncertaintyOfMeasurementAndDiagnosticAccuracyMeasures/ (accessed on 28 June 2020).

24. Chatzimichail, T. Analysis of Diagnostic Accuracy Measures: Wolfram Demonstrations Project. 2015. (updated 7/24/2015). Available online: https://demonstrations.wolfram.com/AnalysisOfDiagnosticAccuracyMeasures/ (accessed on 28 June 2020).

25. Chatzimichail, T. Calculator for Diagnostic Accuracy Measures: Wolfram Demonstrations Project. 2018. (updated 4/25/2018). Available online: https://demonstrations.wolfram.com/CalculatorForDiagnosticAccuracyMeasures/ (accessed on 28 June 2020).

26. Chatzimichail, T. Correlation of Positive and Negative Predictive Values of Diagnostic Tests: Wolfram Demonstrations Project. 2018. (updated 4/5/2018). Available online: https://demonstrations.wolfram.com/CorrelationOfPositiveAndNegativePredictiveValuesOfDiagnostic/ (accessed on 28 June 2020).

27. Chatzimichail, T.; Hatjimihail, A.T. Calculation of Diagnostic Accuracy Measures: Wolfram Demonstrations Project. 2018. (updated 6/22/2018). Available online: https://demonstrations.wolfram.com/CalculatorForDiagnosticAccuracyMeasures/ (accessed on 28 June 2020).

28. Lim, T.O.; Bakri, R.; Morad, Z.; Hamid, M.A. Bimodality in blood glucose distribution: Is it universal? Diabetes Care 2002, 25, 2212–2217.

29. American Diabetes Association. 2. Classification and diagnosis of diabetes: Standards of medical care in diabetes-2019. Diabetes Care 2019, 42 (Suppl. 1), S13–S28.

30. Kupchak, P.; Wu, A.H.B.; Ghani, F.; Newby, L.K.; Ohman, E.M.; Christenson, R.H. Influence of imprecision on ROC curve analysis for cardiac markers. Clin. Chem. 2006, 52, 752–753.

31. Kroll, M.H.; Biswas, B.; Budd, J.R.; Durham, P.; Gorman, R.T.; Gwise, T.E.; Pharmd, A.-B.H.; Hatjimihail, A.T.; Hilden, J.; Song, K. Assessment of the Diagnostic Accuracy of Laboratory Tests Using Receiver Operating Characteristic Curves; Approved Guideline, 2nd ed.; Clinical and Laboratory Standards Institute: Wayne, PA, USA, 2011.

32. Lippi, G.; Simundic, A.-M.; Plebani, M. Potential preanalytical and analytical vulnerabilities in the laboratory diagnosis of coronavirus disease 2019 (COVID-19). Clin. Chem. Lab. Med. 2020, 58, 1070–1076.

33. Tang, Y.-W.; Schmitz, J.E.; Persing, D.H.; Stratton, C.W. The laboratory diagnosis of COVID-19 Infection: Current issues and challenges. J. Clin. Microbiol. 2020, 58, e00512-20.

34. Deeks, J.J.; Dinnes, J.; Takwoingi, Y.; Davenport, C.; Leeflang, M.M.G.; Spijker, R.; Hooft, L.; van den Bruel, A.; Emperador, D.; Dittrich, S. Diagnosis of SARS-CoV-2 infection and COVID-19: Accuracy of signs and symptoms; molecular, antigen and antibody tests; and routine laboratory markers. Cochrane Database Syst. Rev. 2020, 26, 1896.

35. Infantino, M.; Grossi, V.; Lari, B.; Bambi, R.; Perri, A.; Manneschi, M.; Terenzi, G.; Liotti, I.; Ciotta, G.; Taddei, C.; et al. Diagnostic accuracy of an automated chemiluminescent immunoassay for anti-SARS-CoV-2 IgM and IgG antibodies: An Italian experience. J. Med. Virol. 2020.

36. Mahase, E. Covid-19: “Unacceptable” that antibody test claims cannot be scrutinised, say experts. BMJ 2020, 369, m2000.

37. Kontou, P.I.; Braliou, G.G.; Dimou, N.L.; Nikolopoulos, G.; Bagos, P.G. Antibody tests in detecting SARS-CoV-2 infection: A meta-analysis. Diagnostics (Basel) 2020, 10, 319.

38. Theodorsson, E. Uncertainty in measurement and total error: Tools for coping with diagnostic uncertainty. Clin. Lab. Med. 2017, 37, 15–34.

39. Padoan, A.; Sciacovelli, L.; Aita, A.; Antonelli, G.; Plebani, M. Measurement uncertainty in laboratory reports: A tool for improving the interpretation of test results. Clin. Biochem. 2018, 57, 41–47.

40. Aggarwal, R. Risk, complexity, decision making and patient care. JAMA Surg. 2018, 153, 208.

41. Hatjimihail, A.T. Estimation of the optimal statistical quality control sampling time intervals using a residual risk measure. PLoS ONE 2009, 4, e5770.

42. Collins, J.; Albert, P.S. Estimating diagnostic accuracy without a gold standard: A continued controversy. J. Biopharm. Stat. 2016, 26, 1078–1082.

43. Zhou, X.-H. Statistical Methods in Diagnostic Medicine; Wiley: Hoboken, NJ, USA, 2011.

44. Atkinson, A.B. The box-cox transformation: Review and extensions. Stat. Sci. 2020. (In Press). Available online: http://eprints.lse.ac.uk/103537/1/StatSciV4.pdf (accessed on 28 June 2020).

45. Box, G.E.P.; Cox, D.R. An analysis of transformations. J. R. Stat. Soc. Ser. B Stat. Methodol. 1964, 26, 211–243.

46. Solberg, H.E. Approved recommendation (1987) on the theory of reference values. Part 5. Statistical treatment of collected reference values. Determination of reference limits. Clin. Chim. Acta 1987, 170, S13–S32.

47. Pavlov, I.Y.; Wilson, A.R.; Delgado, J.C. Reference interval computation: Which method (not) to choose? Clin. Chim. Acta 2012, 413, 1107–1114.

48. Sikaris, K. Application of the stockholm hierarchy to defining the quality of reference intervals and clinical decision limits. Clin. Biochem. Rev. 2012, 33, 141–148.

49. Daly, C.H.; Liu, X.; Grey, V.L.; Hamid, J.S. A systematic review of statistical methods used in constructing pediatric reference intervals. Clin. Biochem. 2013, 46, 1220–1227.

50. Ozarda, Y.; Sikaris, K.; Streichert, T.; Macri, J.; IFCC Committee on Reference Intervals and Decision Limits (C-RIDL). Distinguishing reference intervals and clinical decision limits—A review by the IFCC Committee on Reference Intervals and Decision Limits. Crit. Rev. Clin. Lab. Sci. 2018, 55, 420–431.

51. Wilson, J.M.G.; Jungner, G. Principles and Practice of Screening for Disease; World Health Organization: Geneva, Switzerland, 1968; 163p.

52. Petersen, P.H.; Horder, M. 2.3 Clinical test evaluation. Unimodal and bimodal approaches. Scand. J. Clin. Lab. Investig. 1992, 52 (Suppl. 208), 51–57.

53. Analytical Methods Committee AN. Why do we need the uncertainty factor? Anal. Methods 2019, 11, 2105–2107.

| | | Populations | |
|---|---|---|---|
| | | Non-Diseased | Diseased |
| Test Results | Negative | true negative (TN) | false negative (FN) |
| | Positive | false positive (FP) | true positive (TP) |

| Measure | Natural Frequency Definition | Probability Definition |
|---|---|---|
| Sensitivity (Se) | | |
| Specificity (Sp) | | |
| Positive Predictive Value (PPV) | | |
| Negative Predictive Value (NPV) | | |
| Overall Diagnostic Accuracy (ODA) | | |
| Diagnostic Odds Ratio (DOR) | | |
| Likelihood Ratio for a Positive Result (LR+) | | |
| Likelihood Ratio for a Negative Result (LR−) | | |
| Youden’s Index (J) | | |
| Euclidean Distance (ED) | | |
| Concordance Probability (CZ) | | |
| Risk (R) | | |

| Measure | Definition |
|---|---|
| Positive Predictive Value (PPV) | |
| Negative Predictive Value (NPV) | |
| Overall Diagnostic Accuracy (ODA) | |
| Diagnostic Odds Ratio (DOR) | |
| Likelihood Ratio for a Positive Result (LR+) | |
| Likelihood Ratio for a Negative Result (LR−) | |
| Youden’s Index (J) | |
| Euclidean Distance (ED) | |
| Concordance Probability (CZ) | |
| Risk (R) | |

| Settings | Figure 12 | Figure 13 | Figure 14 | Figure 15 | Figure 16 | Figure 17 | Table 5 |
|---|---|---|---|---|---|---|---|
| | 2.99 | 2.99 | 2.99 | 2.99 | 2.99 | 2.99 | 2.99 |
| | 0.75 | 0.75 | 0.75 | 0.75 | 0.75 | 0.75 | 0.75 |
| | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 |
| | 0.067 | 0.067 | − | 0.067 | 0.067 | 0.067 | 0.067 |
| | 2.26 | 2.26 | 2.26 | − | − | − | |
| | − | − | 0.023 | 0.023 | 0.023 | 0.023 | 0.023 |
| | − | − | 0.23 | 0.23 | 0.23 | 0.23 | 0.23 |
| | − | − | 1 | − | 1 | 1 | 1 |
| | − | − | 0 | − | 0 | 0 | 0 |
| | − | − | 100 | − | 100 | 100 | 100 |
| | − | − | 0 | − | 0 | 0 | 0 |
| | − | − | 76 | − | 76 | 76 | 76 |

| | | | Optimal Diagnostic Threshold | | |
|---|---|---|---|---|---|
| | | | First Test | Second Test | Relative Difference |
| Optimizing DAM | Youden’s index | J | 1.637 | 1.623 | 0.009 |
| | Euclidean distance | ED | 1.676 | 1.663 | 0.008 |
| | Concordance probability | CZ | 1.640 | 1.627 | 0.008 |
| | Risk | R | 2.258 | 2.290 | −0.014 |
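The relative differences in the table above can be reproduced from the two threshold columns. The short Python check below assumes that the relative difference is defined as (first − second) / first; this definition is an assumption, although dividing by the second test's threshold gives the same values at three decimals.

```python
# Optimal thresholds (first test, second test) as reported in the table.
thresholds = {
    "J": (1.637, 1.623),
    "ED": (1.676, 1.663),
    "CZ": (1.640, 1.627),
    "R": (2.258, 2.290),
}

# Assumed definition: (first - second) / first, rounded to three decimals.
relative_difference = {
    name: round((first - second) / first, 3)
    for name, (first, second) in thresholds.items()
}
```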

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chatzimichail, T.; Hatjimihail, A.T. A Software Tool for Exploring the Relation between Diagnostic Accuracy and Measurement Uncertainty. Diagnostics 2020, 10, 610. https://doi.org/10.3390/diagnostics10090610