Abstract

The smart visualization of medical images (SVMI) model is based on multi-detector computed tomography (MDCT) data sets and can provide a clearer view of changes in the brain, such as tumors (expansive changes), bleeding, and ischemia, on native imaging (i.e., a non-contrast MDCT scan). The SVMI method provides a more precise representation of the brain image by hiding pixels that carry no information and by rescaling and coloring the range of pixels essential for detecting and visualizing the disease. In addition, SVMI can be used to avoid additional exposure of patients to ionizing radiation and to contrast media, whose administration can cause allergic reactions. The results of the SVMI model were compared with the final diagnosis of the disease, established after additional diagnostics and confirmed by neuroradiologists, who are highly trained physicians with many years of experience. The SVMI model presented here can optimize the use of material, medical, and human resources and has the potential for general application in medical training, education, and clinical research.

1. Introduction

Over the last decade, imaging systems and devices used in medical research have advanced significantly, for example in magnetic resonance imaging (MRI) [1], multi-detector computed tomography (MDCT) [2], and positron emission tomography (PET) [3]. In the last seven years, new technologies such as machine learning (ML) and artificial intelligence (AI), driven by the availability of large data sets (i.e., Big Data), have made remarkable progress in the ability of machines to process and manage data, including images [4], language [5], and speech [6]. Furthermore, the rapid development of computer science in collaboration with medicine can contribute to new algorithms for disease prediction and new methods of medical image processing. The healthcare sector can benefit significantly from these new information technologies and from the exponential growth of generated data (150 EB, where 1 EB = 10^18 bytes, in the United States alone, growing 48% annually [7,8]), as well as from new approaches to designing medical information systems.

In August 2018, the National Institutes of Health discussed gaps in medical imaging and defined key research priorities, including automated imaging methods, extraction of valid information from medical images, electronic phenotyping, and prospectively structured medical imaging reporting [9]. In addition, medical diagnostics can be improved using modern methods of visualizing large numbers of medical images, which can sometimes be difficult for human experts and specialists to classify. AI involving ML and Big Data also has the potential to improve clinical outcomes and further increase the value of medical images in ways yet to be defined, and it is considered a prerequisite for achieving physician-level performance [10,11,12]. However, the uncertainty and unreliability of results obtained by Big Data analysis may cause promising models to fail [13]. Despite the evident progress of AI applications in medical imaging and significant financial investment by large global companies in new technologies, radiologists are adopting AI slowly. In a 2020 study in the United States, 90% of 50 surveyed radiologists felt that AI-based enhancement of imaging quality would become mainstream within five years [14]. Still, as information technology and medical image processing devices advance exponentially, combined with the knowledge of physicians and researchers, computer-assisted intervention and diagnosis offer viable benefits.

Many researchers propose different approaches to analyzing medical images using available technologies and automatic processing systems. In the past few years, the analysis of medical images based on ML has gained significant importance in scientific research. In particular, with the progress of computer vision, researchers are encouraged to develop various systems for the analysis, correlation, and interpretation of medical images [15,16,17] such as convolutional neural networks for brain image segmentation [18,19,20,21]; for brain tumor detection and classification [22,23,24]; medical image registration, fusion, and annotation [25,26,27,28,29]; computer-aided diagnosis (CAD) systems [30,31,32,33,34,35]; and the automatic detection of micro-bleeds in a medical image [36,37,38].

This paper presents a new SVMI method for visualizing medical images with a new approach to medical image processing in neuroradiology and a more precise insight into patient brain changes by rescaling and coloring pixels of significance for displaying the disease. The motivation behind this study is the need for a comprehensive survey in the field of medical imaging visualization that approaches the issue from the educational perspective as a foundation of knowledge creation and dissemination in society.

The paper is organized as follows. After this introduction, which reviews the literature on different techniques and approaches to medical image processing, Section 2 presents the standard MDCT diagnostic method in neuroradiology and then describes our smart visualization (SVMI) method in detail, including the medical image processing steps. Section 3 gives a technological description of the developed SVMI model, followed by its evaluation and results. Section 4 is a discussion that includes the limitations and the theoretical and practical implications of this research. Finally, Section 5 gives our conclusions on the subject matter and outlines future work.

2. Materials and Methods

2.1. Standard Methods and Existing Problems

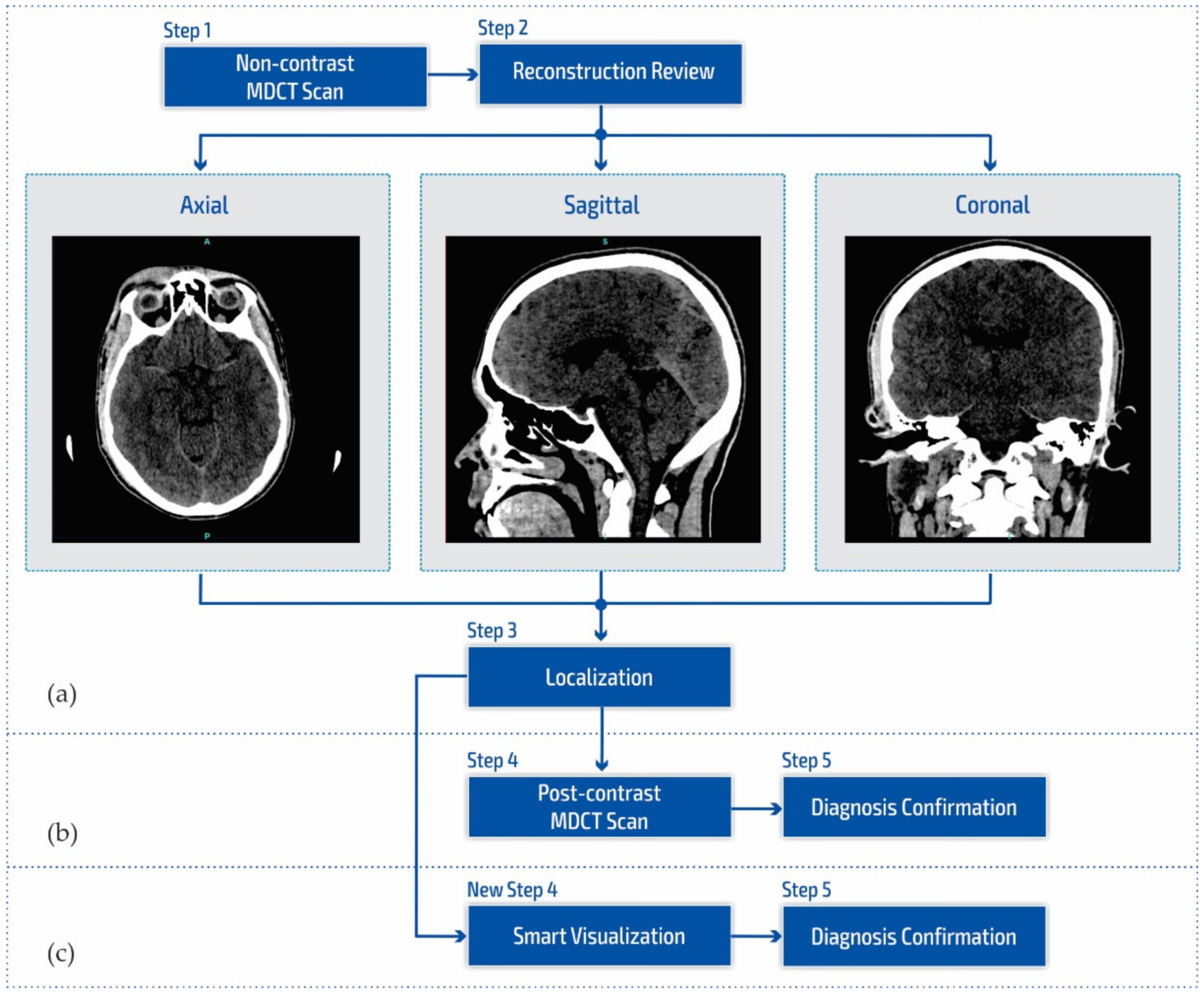

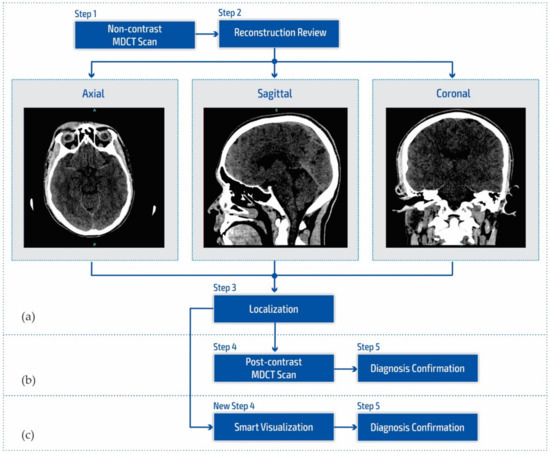

Figure 1a shows a block diagram of the standard neuroradiology diagnostic approach using multi-detector computed tomography. After a non-contrast MDCT scan of the endocranium (Figure 1a, Step 1), a neuroradiologist examines the reconstructions in all three planes (Figure 1a, Step 2): (a) axial, (b) coronal, and (c) sagittal, to determine whether there are pathological changes in density, classified as hyperdensity [39], hypodensity [40], or isodensity [41], and to accurately localize the region of interest (Figure 1a, Step 3).

Figure 1.

Block diagram of (a) the multi-detector computed tomography method, (b) the post-contrast MDCT diagnostics method, and (c) the smart visualization (SVMI) method.

After a pathological change has been found, the neuroradiologist needs post-contrast MDCT diagnostics (Figure 1b, Step 4) to characterize the changes in the brain and to reach a conclusion in the form of a differential diagnosis and medical determination (Figure 1b, Step 5). Unfortunately, rescanning exposes the patient to additional ionizing radiation, whose effects are cumulative. Side effects can also include allergic reactions to iodine that had not been identified previously. Moreover, iodinated contrast media cannot be used in patients with pre-existing, confirmed allergies; these patients are referred to MRI, which is not available to everyone and has both absolute and relative contraindications. With the goal of successfully diagnosing and treating brain diseases, current research in MDCT and MRI diagnostics aims to characterize brain diseases non-invasively [42,43,44,45,46]. However, multi-detector computed tomography has some advantages over MRI, enabling quantitative measurement of blood–brain barrier deterioration that may be associated with tumors and neovascularization [42,43,47,48].

Because of the conditions and limitations of Step 4 in Figure 1b, and to avoid post-contrast scanning of the patient, we propose a New Step 4 (Figure 1c) that performs the smart visualization of medical images (SVMI) and gives neurologists a more precise view of the localized changes in the patient’s brain in a selected cross-sectional plane and slice.

2.2. Smart Visualization Method (SVMI)

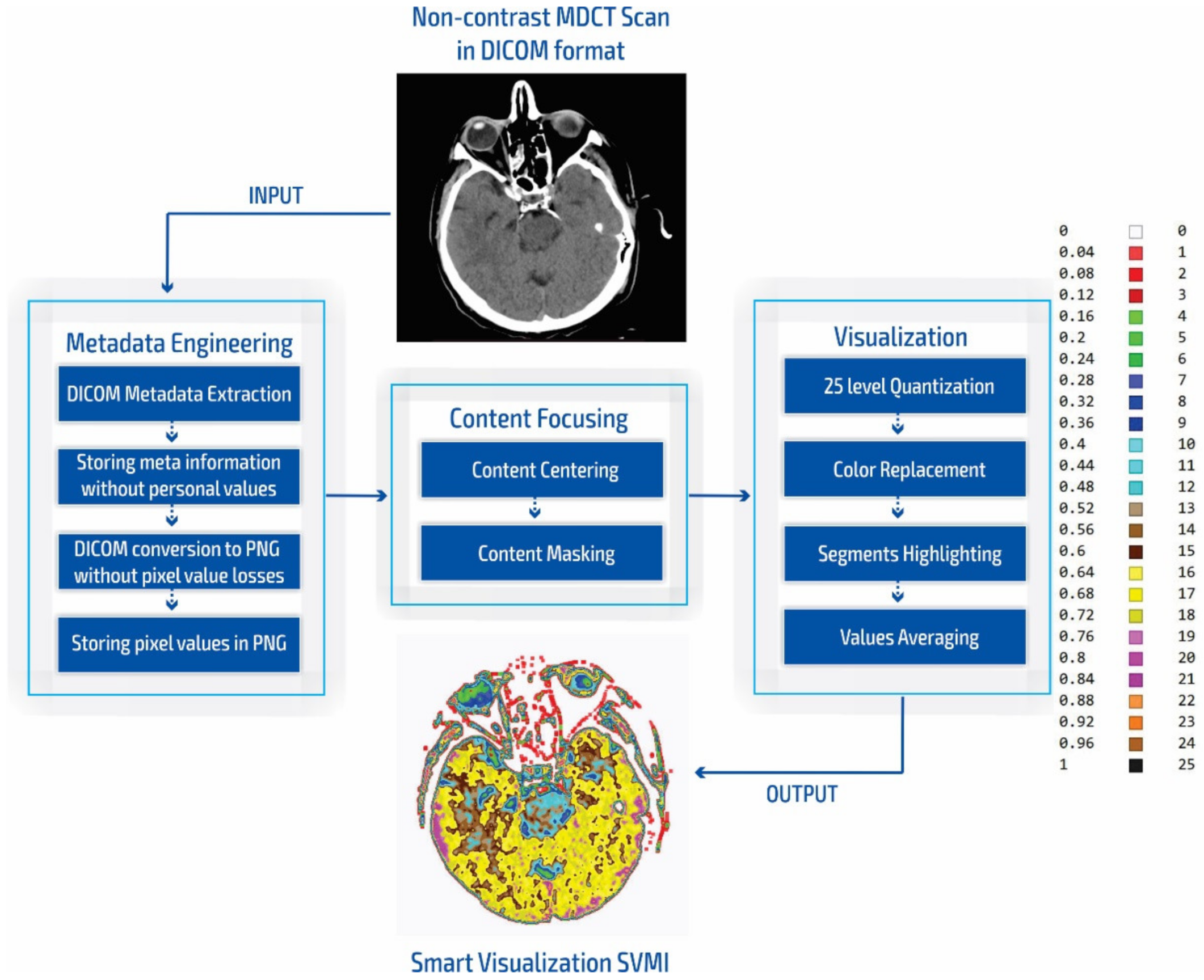

The smart visualization method in Figure 1c is shown through the developed SVMI-model flow diagram in Figure 2 and explained below.

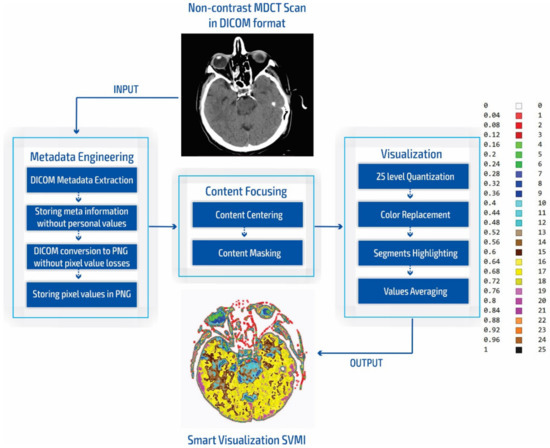

Figure 2.

SVMI model flow chart.

During our experiment, we compared a simulation of the standard practice of neuroradiologists, who adjust grayscale images to measure Hounsfield units, with our smart visualization model. The SVMI model uses the full 16-bit pixel data, converted from the DICOM format without loss and without personal metadata, which protects patients’ identities. Radiographs do not have absolute pixel values, whereas MDCT images can be quantified in absolute Hounsfield units, which allows comparison [11]. Furthermore, our masking of medical images allows rescaling of the pixel regions of the brain in which the neuroradiologist locates a possible change on unenhanced imaging. Accurate localization is essential for interpreting medical images and in urgent cases, including the quick confirmation of a diagnosis before a possible surgical approach. Previous studies that proposed algorithms producing maps of regions of interest [10,12,49,50] either did not confirm localization accuracy against neuroradiologists [10,12,50,51] or did not show reliable accuracy [49].
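The absolute Hounsfield scale mentioned above comes from the linear modality rescale defined by the DICOM standard: a stored integer value is converted as HU = value × Rescale Slope + Rescale Intercept. The minimal NumPy sketch below illustrates only this mapping; the slope and intercept defaults are assumptions for illustration, and the sketch is not taken from the SVMI software.

```python
import numpy as np

def to_hounsfield(stored: np.ndarray, slope: float = 1.0, intercept: float = -1024.0) -> np.ndarray:
    """Linear DICOM modality rescale: HU = stored * slope + intercept.

    `slope` and `intercept` stand for the Rescale Slope (0028,1053) and
    Rescale Intercept (0028,1052) of a given scan; the defaults are
    illustrative assumptions, not values from the paper's scanner.
    """
    # Convert to float first so unsigned 16-bit input cannot wrap around.
    return stored.astype(np.float64) * slope + intercept

# Hypothetical stored 16-bit values; a lossless export keeps them unchanged.
stored = np.array([[0, 1024], [1064, 2048]], dtype=np.uint16)
print(to_hounsfield(stored))
```

Because the mapping is linear, windowing on stored values and windowing on HU select the same pixels once the thresholds are converted with the same slope and intercept.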

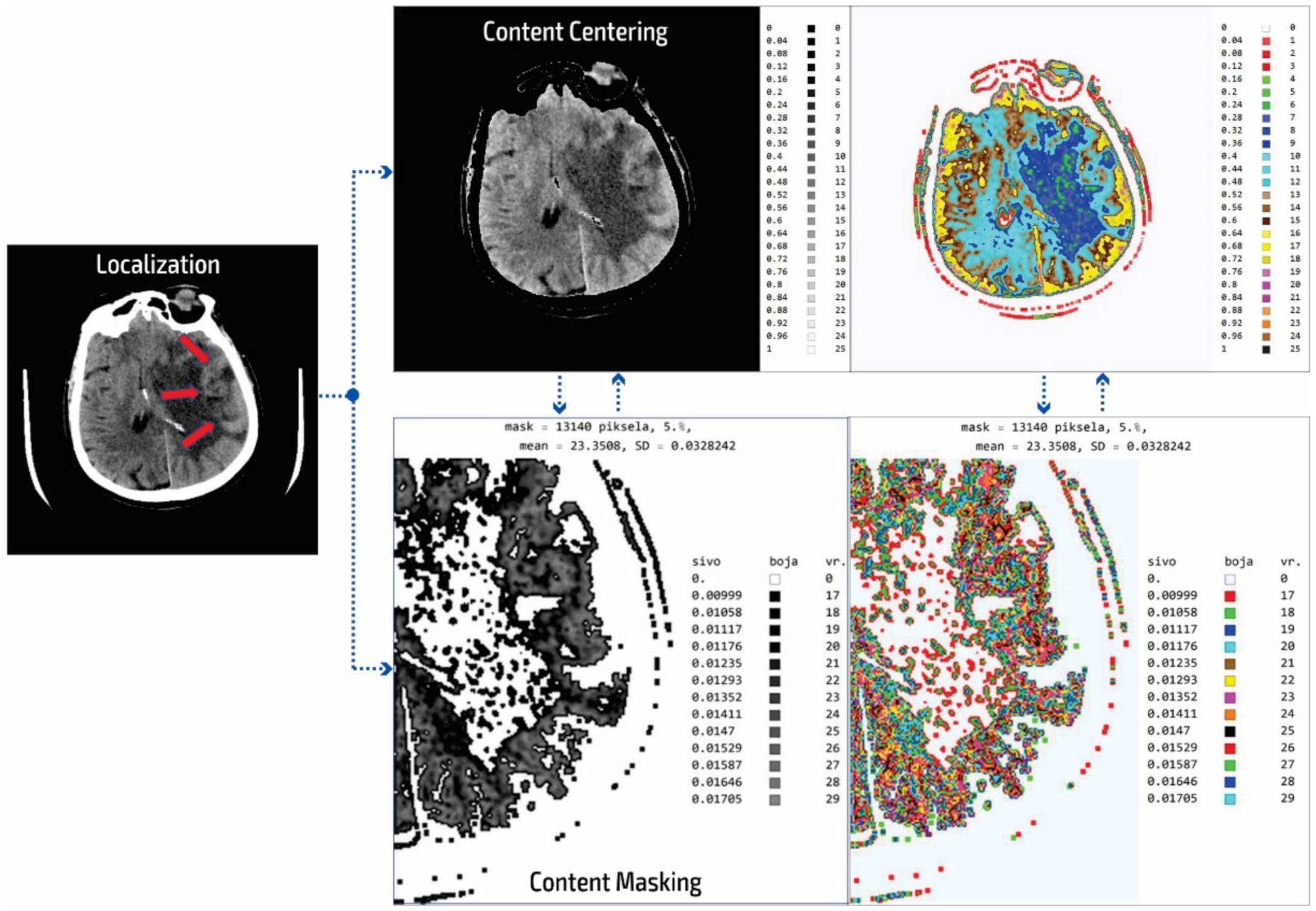

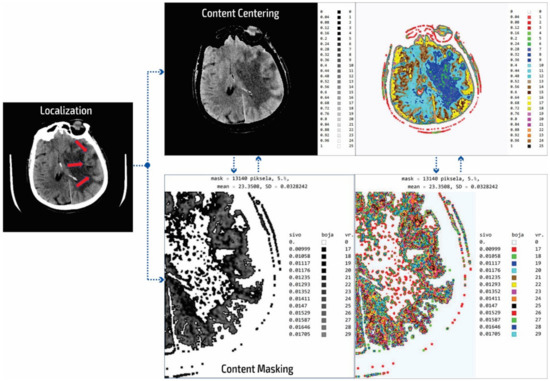

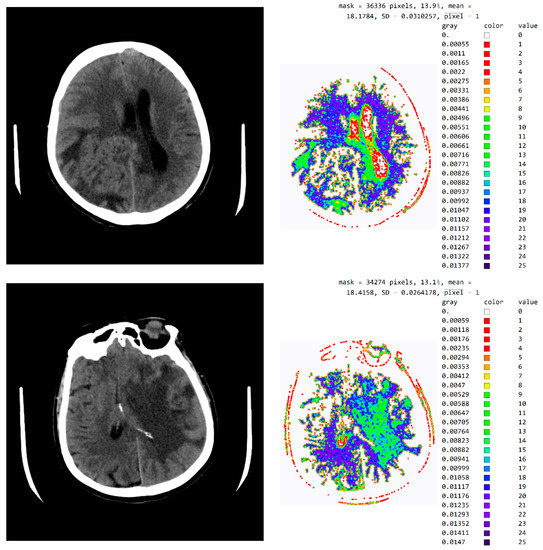

In our approach, when marking content and regions of interest, all values that do not contribute to the content are set to a pixel value of zero. Black levels (values of zero or slightly above zero), white levels (maximum values), and gray values outside the central part of the gray range are all set to zero so that they do not interfere with the physician’s conclusion while the central segment is observed. At the same time, bright parts such as bone do not affect the mean value of the observed region. The smart visualization and a summary of the system outputs of the developed SVMI model are shown in Figure 3.

Figure 3.

Summary of the system outputs.
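A minimal NumPy sketch of the masking rule described in this subsection, assuming the retained band is given by a lower and an upper integer threshold (the function names are hypothetical): values outside the band are set to zero, and the mean and standard deviation are computed only over the pixels that remain.

```python
import numpy as np

def mask_band(image: np.ndarray, lo: int, hi: int) -> np.ndarray:
    """Keep pixels with lo <= value <= hi and set all others to 0 (assumes lo >= 1)."""
    keep = (image >= lo) & (image <= hi)
    return np.where(keep, image, 0)

def masked_stats(masked: np.ndarray) -> tuple[float, float]:
    """Mean and standard deviation over retained (non-zero) pixels only,
    so zeroed background and bone do not bias the statistics."""
    values = masked[masked > 0].astype(np.float64)
    return float(values.mean()), float(values.std())

# Hypothetical pixels: background (0), very dark (2), soft tissue (20-30), bone (1500).
image = np.array([[0, 2, 20], [25, 30, 1500]], dtype=np.uint16)
masked = mask_band(image, 18, 42)
mean, std = masked_stats(masked)
```

In this toy example the mean of the retained pixels is 25.0; without the band mask, the mean of all non-zero pixels would be 315.4, dominated by the single bone-like pixel.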

After an experienced neuroradiologist has localized the appropriate level of a particular cross-section, the contents and regions of interest are centered and masked. A non-contrast MDCT scan stored in a DICOM file represents every pixel as an integer, and the useful information must be extracted from it. Therefore, only a band of gray values is observed: all values below a lower threshold and all values above an upper threshold are set to zero so that they do not affect the mean value of the selected segment. Adjacent pixel values are assigned different colors, so physicians do not need to distinguish close gray levels, because segments with similar pixel values appear in clearly different colors. By masking the content, a specific part can be selected (the upper, lower, left, or right third, or two-thirds, vertically and horizontally) so that only the part of interest is magnified. A color bar on the right shows the relative gray values from zero to one together with their colors. The minimum and maximum values can be chosen freely so that only the desired gray range is observed; all other values are set to zero and do not affect the mean and standard deviation. The assigned colors can be changed according to the physician’s preferences to give a clearer view of image segments with the same pixel value. The mean and variance are calculated for the selected range. Averaging can also be performed so that close ranges are separated more clearly from the rest of the pixels.
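The selection of a part of the image by thirds, followed by the mean and variance of the selected pixels, could look like the sketch below. `thirds_mask` is a hypothetical helper, and integer division is assumed when the image size is not a multiple of three.

```python
import numpy as np

def thirds_mask(shape: tuple[int, int], row_thirds=(0, 3), col_thirds=(0, 3)) -> np.ndarray:
    """Boolean mask keeping rows/columns between the given thirds of the image.

    row_thirds=(1, 3) drops the upper third, col_thirds=(0, 1) keeps only the
    left third, col_thirds=(0, 2) keeps the left two-thirds, and so on.
    """
    h, w = shape
    r0, r1 = h * row_thirds[0] // 3, h * row_thirds[1] // 3
    c0, c1 = w * col_thirds[0] // 3, w * col_thirds[1] // 3
    mask = np.zeros(shape, dtype=bool)
    mask[r0:r1, c0:c1] = True
    return mask

image = np.arange(1, 37).reshape(6, 6)            # hypothetical 6x6 image, all values > 0
region = np.where(thirds_mask(image.shape, row_thirds=(1, 3)), image, 0)
values = region[region > 0].astype(np.float64)    # only the selected, non-zero pixels
print(values.mean(), values.var())                # mean and variance of the selection
```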

A disadvantage of existing methods is that images may show pixels that contain no valuable information, so the areas of interest must be identified and unnecessary pixels hidden. Analysis of many images from the selected device showed that the images contained about 80 different integer values and that the valuable information was represented by 25 integer values. When the original image is normalized to the range from zero to one, these useful gray levels occupy only about 30% of the gray display range used by physicians, so the image should be rescaled so that the 25 adjacent integer values span the full range from zero to one. The algorithm first determines the central part of the image, which contains helpful information about soft tissue, and eliminates pixels outside the perimeter of this central part. Next, the smallest and largest integer pixel values representing soft tissue are determined, and all other pixel values are eliminated. Then the part of the soft tissue where an anomaly can be expected is selected. Only the useful part, with values from 1 to 25, is displayed as gray levels ranging from zero to one. In the content masking stage, all other parts (the background, bones, and darker parts of anomalies that are not in the focus of interest) are presented in white. The physician can select fewer than 25 gray levels to eliminate all other pixels that are not in the focus of interest. In the image of the content masking stage (Figure 3), the gray levels from 0.00999 to 0.01705 of the range from zero to one are selected; they correspond to the integer values 17 to 29 of the original image.

The usual approach to the analysis has two disadvantages: the gray levels occupy only 30% of the range from zero to one, and the analysis may include pixels that significantly increase the error in the display and in the statistics. For example, the region localized around a change in the patient’s brain may also contain darker parts that are not anomalies, as well as bone and other artifacts that do not represent soft tissue. Gray and colored pixel levels can be defined according to the selected range to show the color difference between adjacent levels. Sometimes additional processing is also needed, for example, averaging pixel values with neighboring values.
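The rescaling step can be sketched as follows, using the integer band 17 to 29 from the content masking example. Normalizing by the image maximum squeezes this band into a sliver of the 0 to 1 range (0.00999 to 0.01705 in the paper), whereas mapping lo..hi linearly onto 0..1 lets the selected levels use the full gray scale. The function name and the separate `keep` mask are assumptions of this sketch.

```python
import numpy as np

def rescale_band(image: np.ndarray, lo: int, hi: int) -> tuple[np.ndarray, np.ndarray]:
    """Map integer values lo..hi linearly onto 0..1.

    Returns (display, keep): `display` holds the rescaled gray levels (0 outside
    the band) and `keep` marks the pixels to draw; everything else can be
    rendered white, as in the content masking stage.
    """
    img = image.astype(np.int64)
    keep = (img >= lo) & (img <= hi)
    display = np.zeros(img.shape, dtype=np.float64)
    display[keep] = (img[keep] - lo) / (hi - lo)
    return display, keep

image = np.array([0, 17, 23, 29, 40], dtype=np.uint16)   # hypothetical pixel row
display, keep = rescale_band(image, 17, 29)
```

Keeping the mask separate avoids confusing a masked pixel (not drawn) with the lowest retained level (drawn as 0.0, i.e., black).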

It is important to note that this processing does not change the image content; all displayed pixel values correspond to the original image. The processing only makes the selected gray levels of the original image more visible. Statistics (mean value and standard deviation) are computed and shown only for the colored or gray parts of the images; for soft-tissue pixels without damaged parts and bone, for example, the mean value is 23.35.

3. Results

3.1. Technological Description

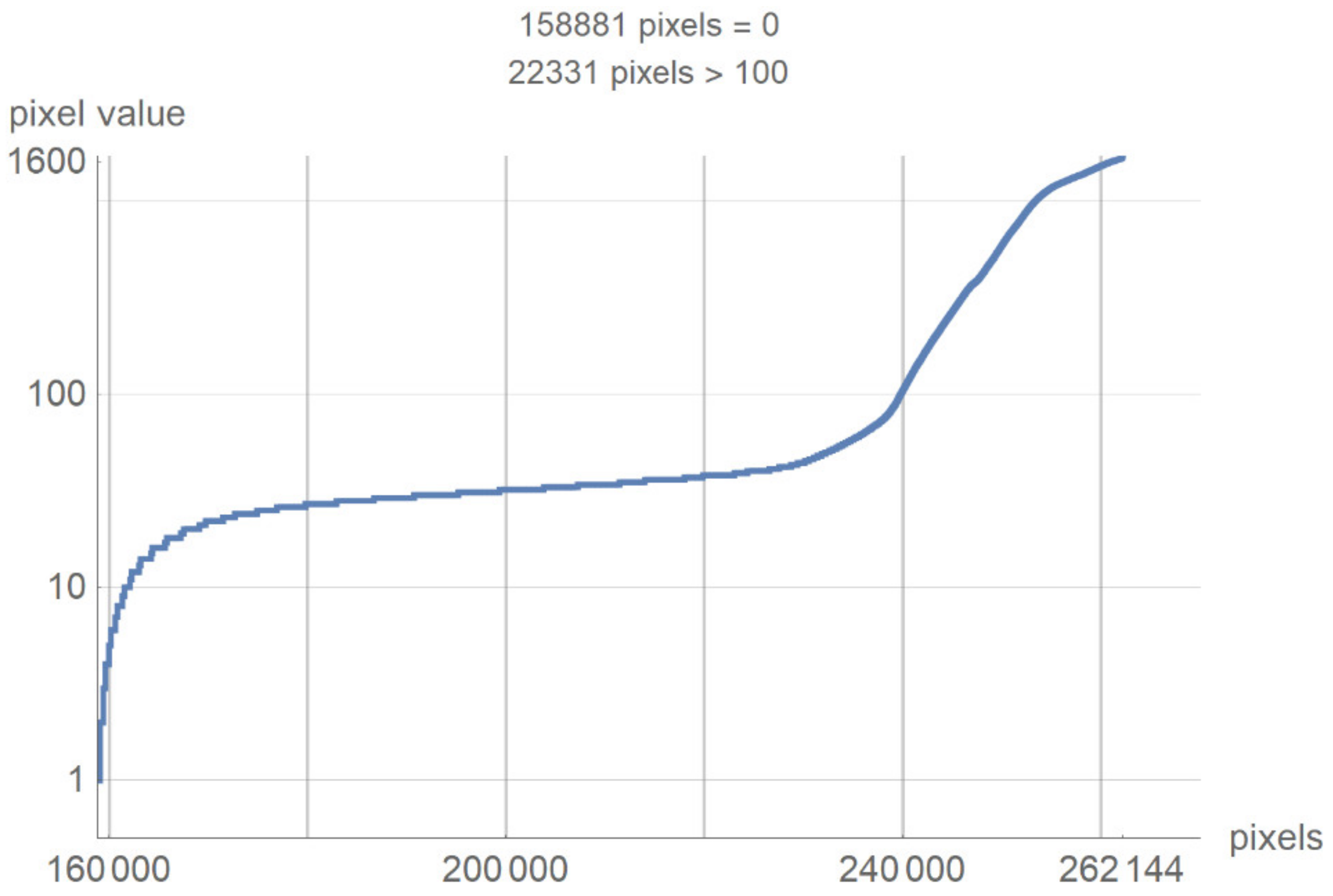

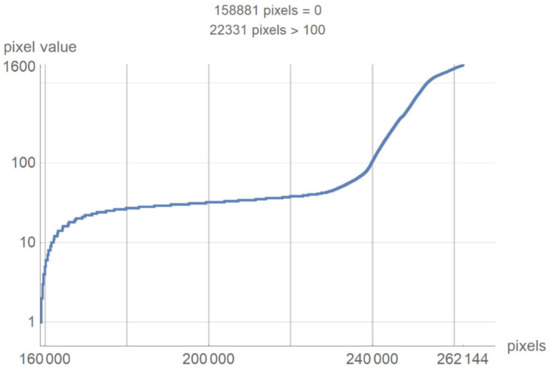

This section presents a technological description of the developed SVMI model, explaining how images are processed according to the proposed method. The image used in the survey questionnaire to evaluate the SVMI model was also selected for this illustration. It has 512 × 512 pixels, a total of 262,144 integer-valued pixels. Pixel values remain unchanged when the image format is converted: the analyzed PNG image has the same pixel values as the original, so no information is lost in processing. However, many pixels contain no valuable information, and the first task of the software is to eliminate all pixels that may be misinterpreted or confusing. For the analyzed image, for example, the number of zero-valued pixels was counted: of 262,144 pixels, as many as 158,881 (about 60.6%) had a value of zero. Useful pixels with small values (for example, 1, 2, 3, ...) are difficult to distinguish from zeros, so pixels that carry no information had to be eliminated before analysis by an expert. The number of pixels as a function of value is shown in Figure 4.

Figure 4.

The number of pixels as a function of value.
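Counting pixels per integer value, as in Figure 4, is a single `np.bincount` call. The sketch below uses a synthetic random image as a stand-in, so its counts will not match the paper's slice, where 158,881 of 262,144 pixels are zero and 22,331 exceed 100.

```python
import numpy as np

def value_counts(image: np.ndarray) -> np.ndarray:
    """counts[v] = number of pixels whose integer value equals v (Figure 4 style)."""
    return np.bincount(image.ravel())

# Hypothetical 512 x 512 stand-in: random values, with a quarter of the rows zeroed as "background".
rng = np.random.default_rng(0)
image = rng.integers(0, 200, size=(512, 512), dtype=np.uint16)
image[:128] = 0
counts = value_counts(image)
n_zero = int(counts[0])
n_above_100 = int(counts[101:].sum())
print(f"zero: {n_zero} ({n_zero / image.size:.1%}), >100: {n_above_100}")
```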

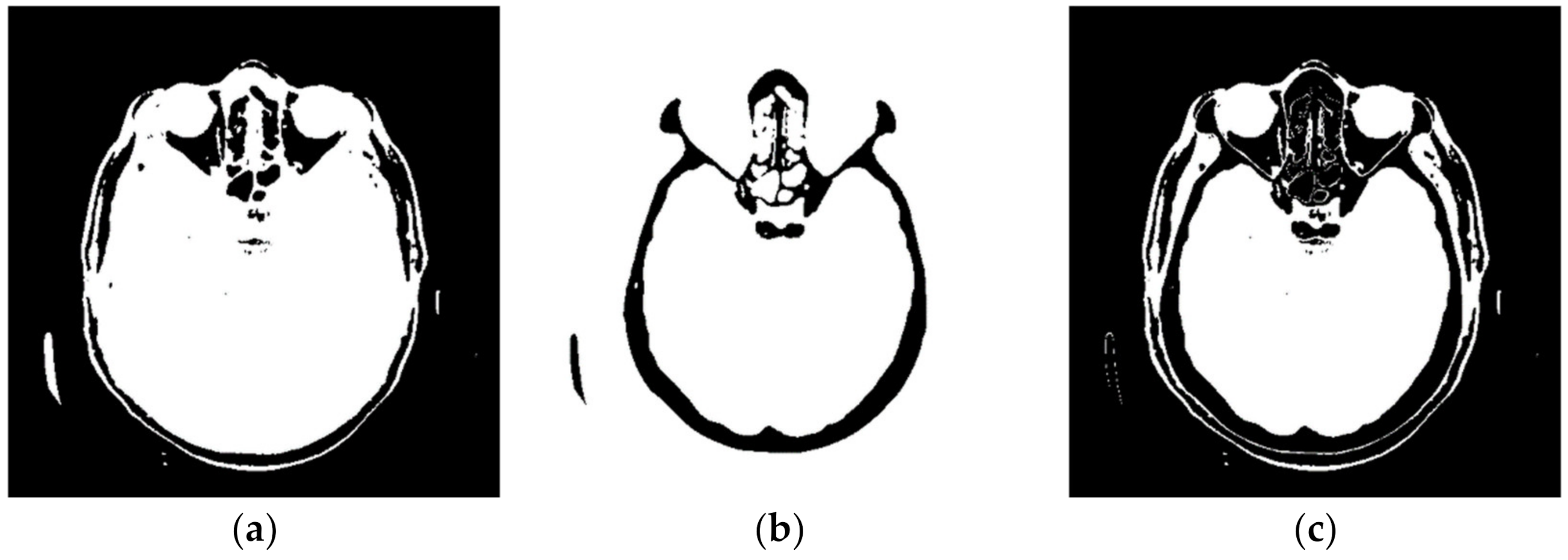

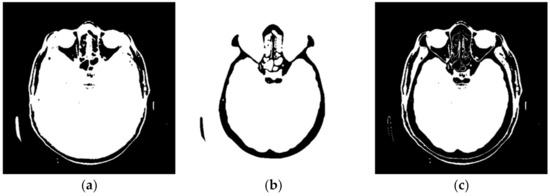

Figure 4 shows that many pixels have values between 10 and 100, that 22,331 pixels have values greater than 100, and that the largest pixel value is 1682. Some programs can automatically adjust the image display to highlight part of the image. For example, image adjustment can change pixel values so that more of the image content falls in the visible range, increasing the contrast or brightness of a region with many pixels, or correcting poor illumination or contrast. The purpose of this software is not to alter the image display without a specialist’s control, but to display accurately, on a linear scale, the part of the image that contains valuable information, and to remove pixels that do not contribute to the image content. Pixels with very high values make it impossible to display the valid pixels on a linear scale from zero to one and inflate the mean value of the informative pixels. One way to remove uninformative pixels is to combine the zero-valued pixels (Figure 5a) with the pixels whose values exceed 100 (Figure 5b) into a single pixel mask, shown in black in Figure 5c.

Figure 5.

Pixels that do not contain useful information: (a) black represents pixels with a value of 0; (b) black represents pixels with a value > 100; and (c) black represents pixels with a value of 0 or a value > 100.
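The combined mask of Figure 5c and the multiplication used for Figure 6 can be written as below; the threshold of 100 follows Figure 5, while the function name and sample values are hypothetical.

```python
import numpy as np

def informative_mask(image: np.ndarray, upper: int = 100) -> np.ndarray:
    """True (white in Figure 5c) for 0 < value <= upper; False (black) for
    value == 0 or value > upper."""
    return (image > 0) & (image <= upper)

image = np.array([[0, 5, 55], [101, 1682, 30]], dtype=np.uint16)   # hypothetical values
mask = informative_mask(image)
masked = image * mask        # "original image multiplied by the mask", as in Figure 6
```

Multiplying by a boolean mask keeps the retained values exactly as they are, which is why the masked image still carries the original pixel values.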

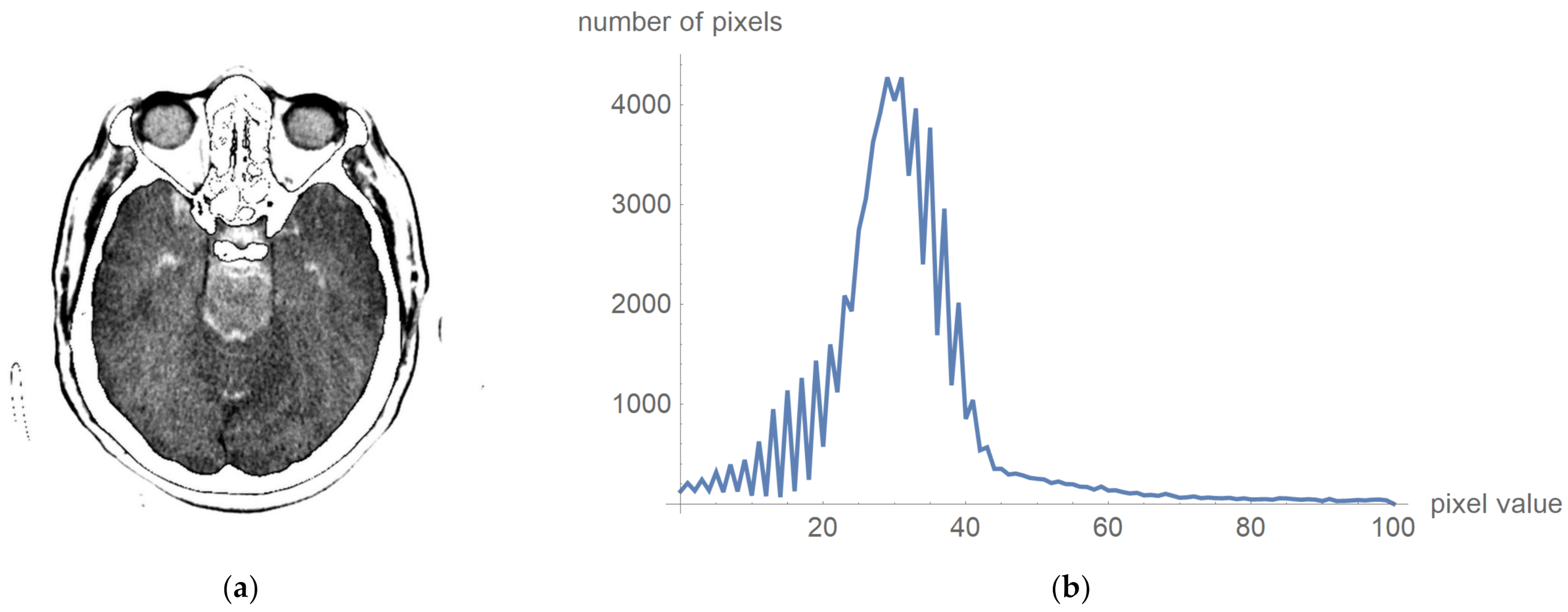

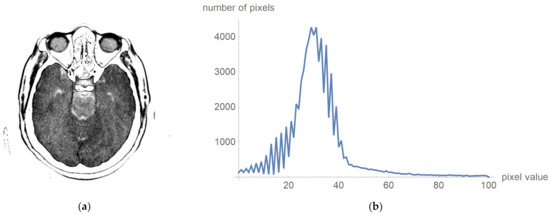

To show that valuable information is not lost, Figure 6 shows the original image multiplied by the mask from Figure 5c, together with its histogram. The negative is presented to make clear that this procedure does not damage valuable information. Figure 6a shows that the central part does not contain high-value pixels.

Figure 6.

Original image multiplied by the mask and its histogram: (a) the negative of the original image multiplied by the mask; and (b) its histogram for pixels with values greater than 0 and less than 100.

Figure 6b shows the histogram of pixel values greater than zero and less than 100. It indicates that, for visual analysis by a specialist, the display range can be limited to values from 5 to 55; that is, the upper threshold for pixel values does not need to be 100. To make individual pixel values easier to identify, we chose to display 24 different levels, and the expert chooses which range to observe. All other values are masked to zero and are not included in the mean value.

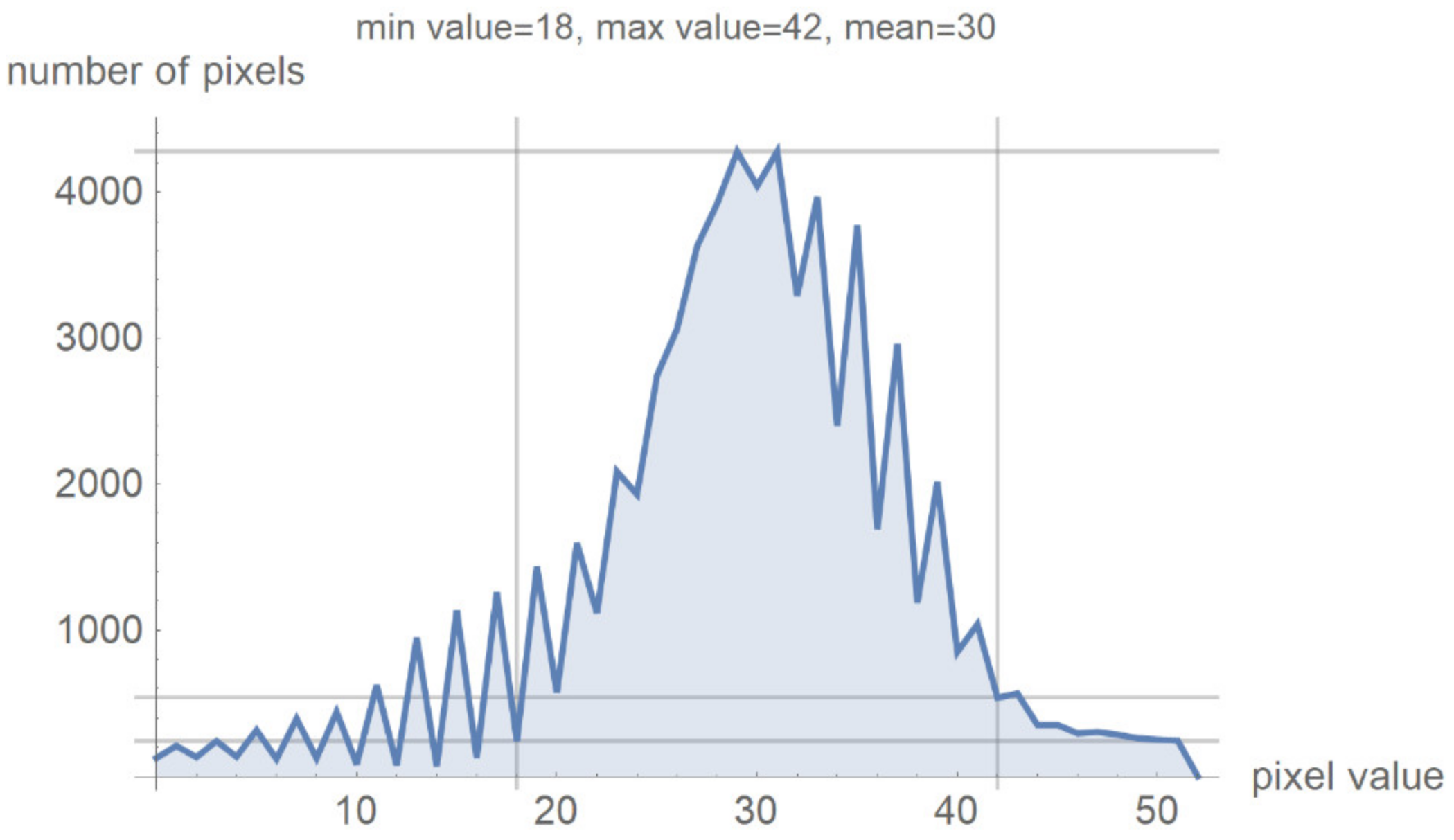

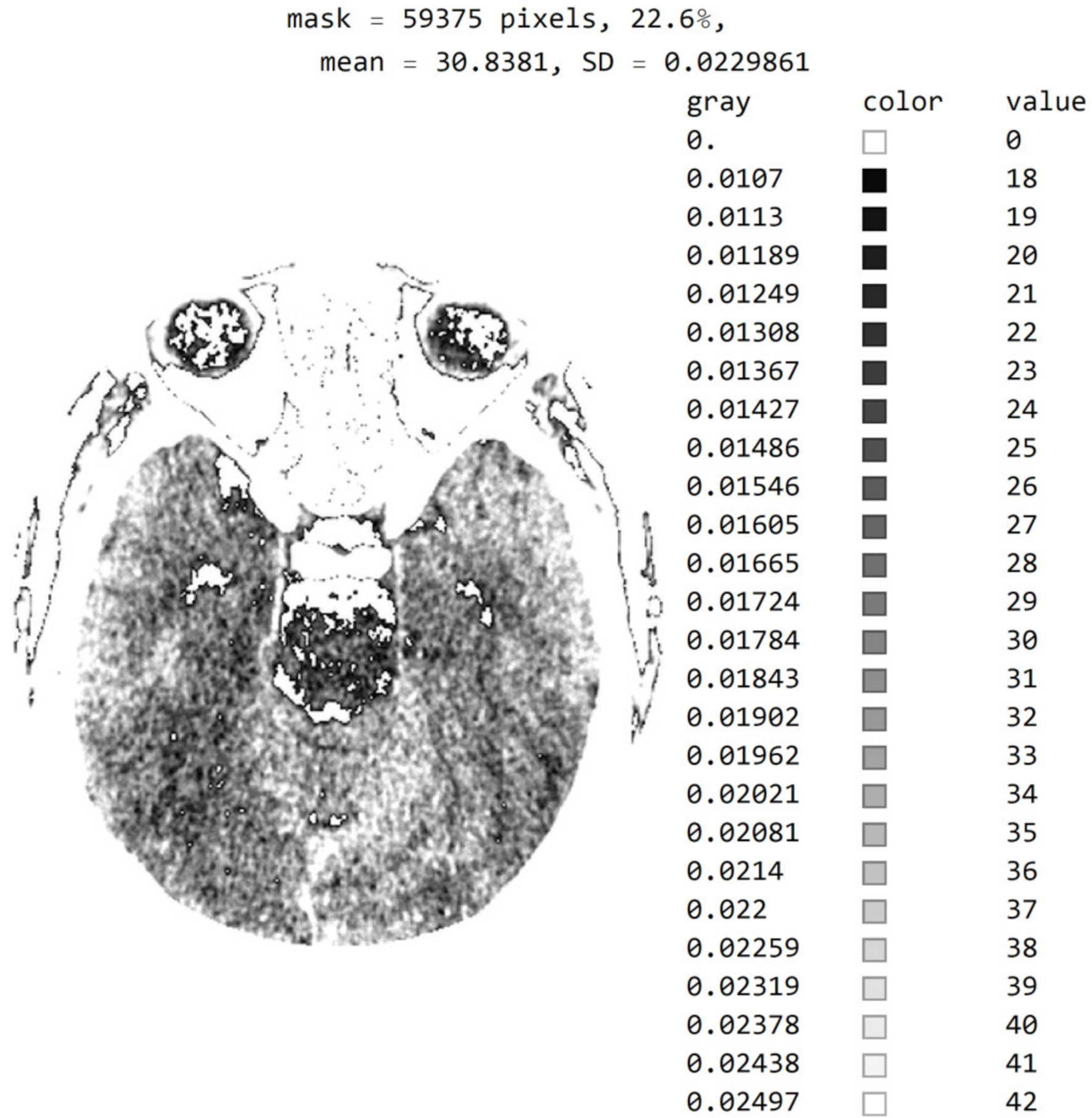

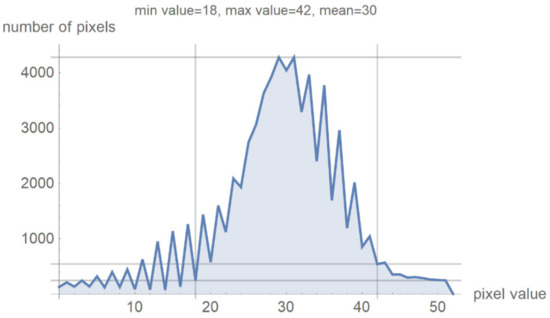

Figure 7 shows a histogram for 24 successive levels from the range [18, 42], for which a mean value of 30 was calculated. Based on this histogram, the specialist can select the range of pixel values to observe and mask all other values by setting them to 0.

Figure 7.

Image histogram for an arbitrarily selected range of up to 24 successive pixel values.
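Once the specialist picks a window such as [18, 42], the per-level counts and the window mean can be read directly off the histogram, since the mean equals the count-weighted average of the levels. A sketch with hypothetical names and values:

```python
import numpy as np

def window_histogram(image: np.ndarray, lo: int, hi: int):
    """Counts per integer level lo..hi and the mean of the pixels inside the window.

    Pixels outside [lo, hi] are treated as masked (set to 0) and do not enter the mean.
    Raises ValueError if no pixel falls inside the window.
    """
    levels = np.arange(lo, hi + 1)
    counts = np.bincount(image.ravel(), minlength=hi + 1)[lo:hi + 1]
    if counts.sum() == 0:
        raise ValueError("no pixels in the selected window")
    mean = float((levels * counts).sum() / counts.sum())
    return levels, counts, mean

image = np.array([[18, 18, 30], [42, 50, 0]], dtype=np.uint16)   # hypothetical values
levels, counts, mean = window_histogram(image, 18, 42)
```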

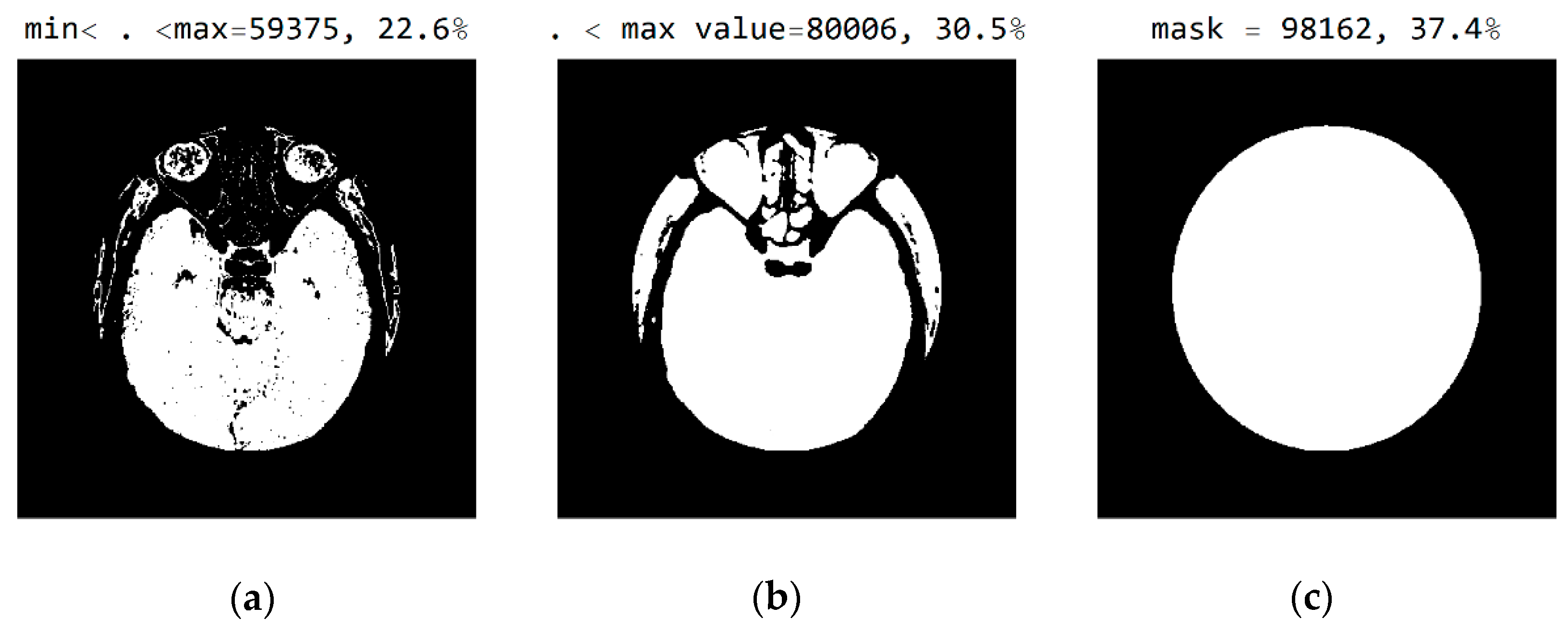

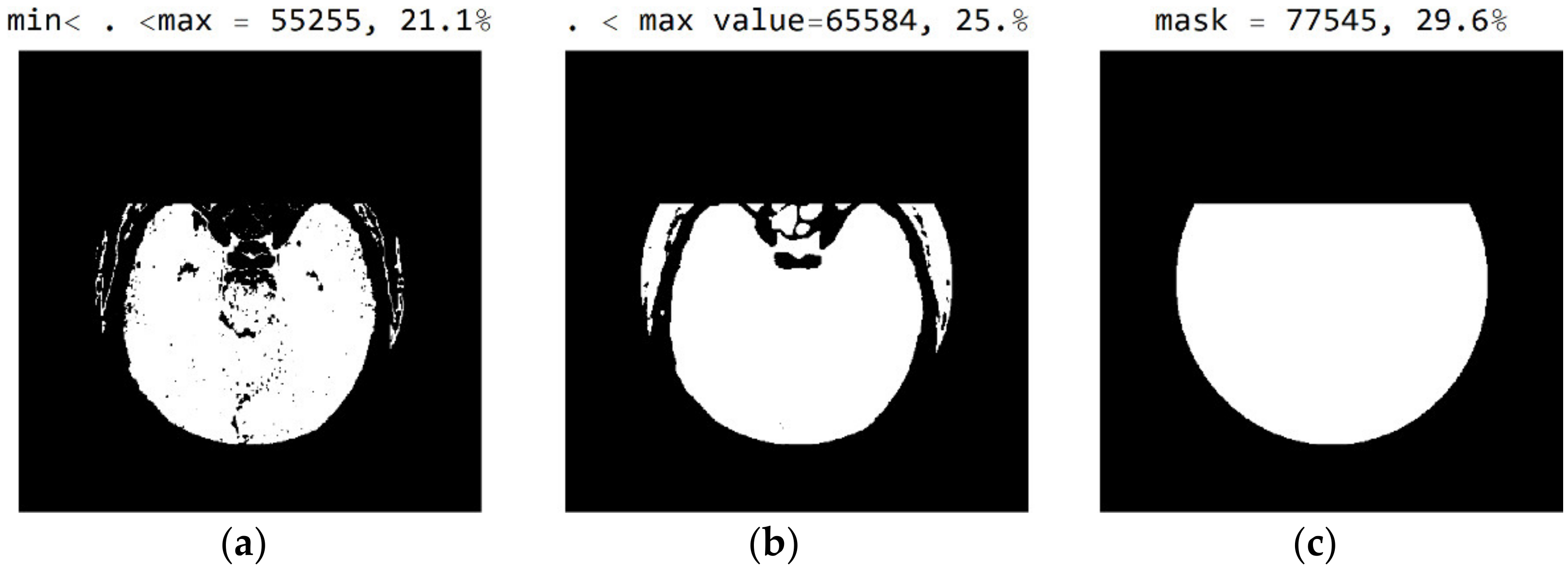

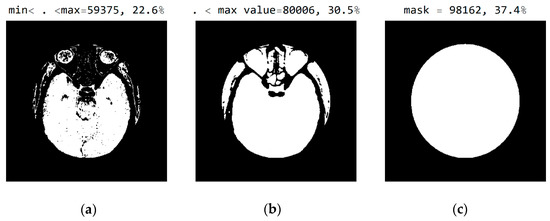

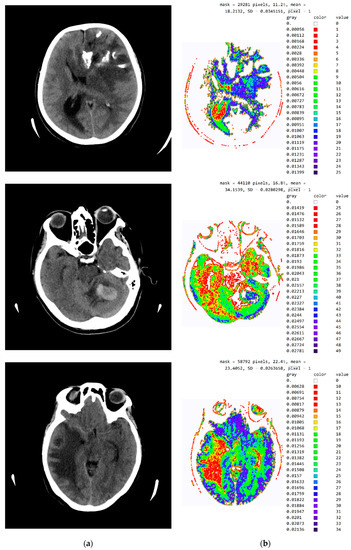

Figure 8 shows that the third image (Figure 8c), comprising the central part of the circular image, contains 37.4% of the total number of pixels; the second image (Figure 8b), comprising pixel values from 1 to 55, contains 30.5%; and the mask for the selected range of 24 successive pixel values (Figure 8a) contains 22.6%. By choosing the range of pixel values, the specialist can more easily identify the pixel values of interest and determine the type of disease.

Figure 8.

White represents pixels that will display the correct values: (a) in the range from minimum to the maximum selected value; (b) in the range of values from 1 to 55; and (c) in the central part of the image for the selected mask.
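The percentages quoted for Figure 8 are the share of all image pixels that each mask selects. In the sketch below, the circle radius and the random image are assumptions, so the printed shares are illustrative only; because the masks are nested, each share can only shrink.

```python
import numpy as np

def circular_mask(shape: tuple[int, int], radius_frac: float = 0.5) -> np.ndarray:
    """True inside a centred circle of radius radius_frac * min(height, width)."""
    h, w = shape
    yy, xx = np.ogrid[:h, :w]
    cy, cx = (h - 1) / 2, (w - 1) / 2
    r = radius_frac * min(h, w)
    return (yy - cy) ** 2 + (xx - cx) ** 2 <= r ** 2

def percent_of_total(mask: np.ndarray) -> float:
    """Share of all image pixels selected by a mask, in percent."""
    return 100.0 * mask.sum() / mask.size

rng = np.random.default_rng(1)
image = rng.integers(0, 120, size=(512, 512))          # hypothetical pixel values
centre = circular_mask(image.shape, radius_frac=0.35)  # radius is an assumption
band_1_55 = centre & (image >= 1) & (image <= 55)
band_sel = centre & (image >= 18) & (image <= 42)
for name, m in [("centre", centre), ("1..55", band_1_55), ("18..42", band_sel)]:
    print(f"{name}: {percent_of_total(m):.1f}%")
```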

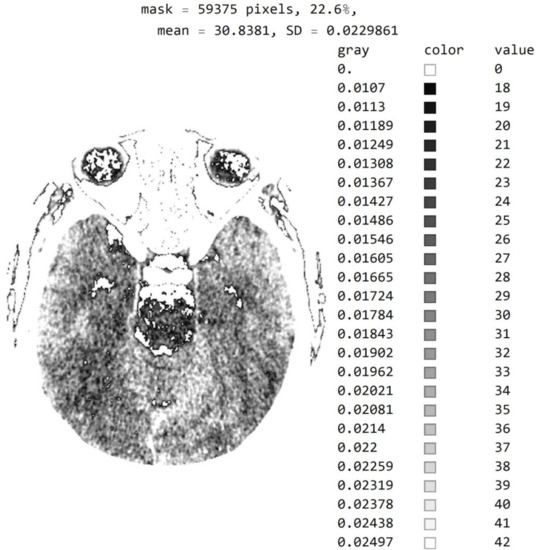

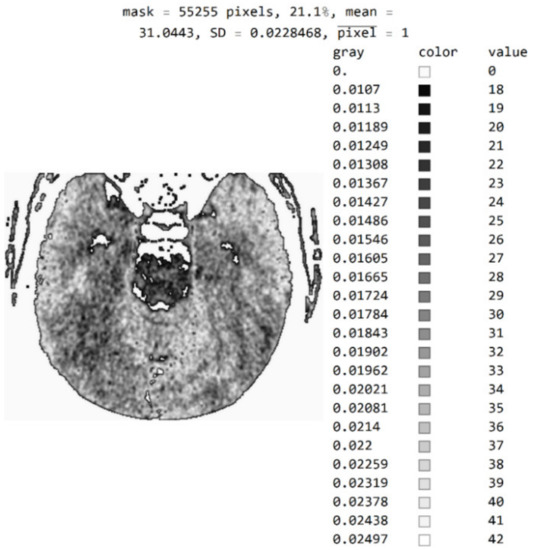

Figure 9 shows an image with exact integer values, with shades of gray scaled from black (pixel value 18) to white (pixel value 42); all other pixels outside this range are set to 0 (white in the picture). The far-right column shows the exact pixel values. If the full range from 0 to the maximum value of 1682 were mapped onto the gray range from 0 to 1, adjacent values would differ by only about 1/1682 ≈ 0.0006, which makes it impossible to determine differences between close values in the original image without an algorithm that highlights a range of values. In addition to displaying only pixels with values from 18 to 42, the image was rescaled, and the parts of the image outside the circular segment mask, which do not include valuable information, were not displayed. Because the specialist chooses the range of pixel values, Figure 9 also shows white parts that are not displayed but could contain valuable information. A specialist who considers pixels in the range from 1 to 55 useful in diagnosing the disease can choose that wider range instead.

Figure 9.

Image with exact integer values with shades of gray scaled from black (pixel value 18) to white (pixel value 42), with all other pixels outside this range shown as 0, i.e., white on the image.
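The display-resolution argument above can be made explicit. With a linear mapping of the full range 0 to 1682 onto gray levels 0 to 1, and with the window 18 to 42 mapped onto the same range, the gray-level step between adjacent integer values is:

```latex
\Delta_{\text{full}} = \frac{1}{1682} \approx 5.9 \times 10^{-4},
\qquad
\Delta_{\text{window}} = \frac{1}{42 - 18} = \frac{1}{24} \approx 4.2 \times 10^{-2},
\qquad
\frac{\Delta_{\text{window}}}{\Delta_{\text{full}}} = \frac{1682}{24} \approx 70.
```

Each integer step therefore becomes about 70 times larger on screen after the window is applied.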

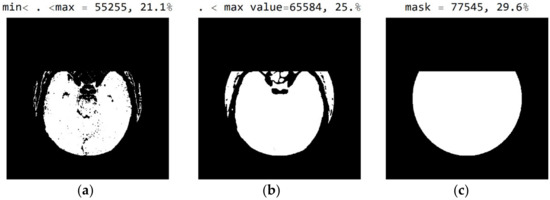

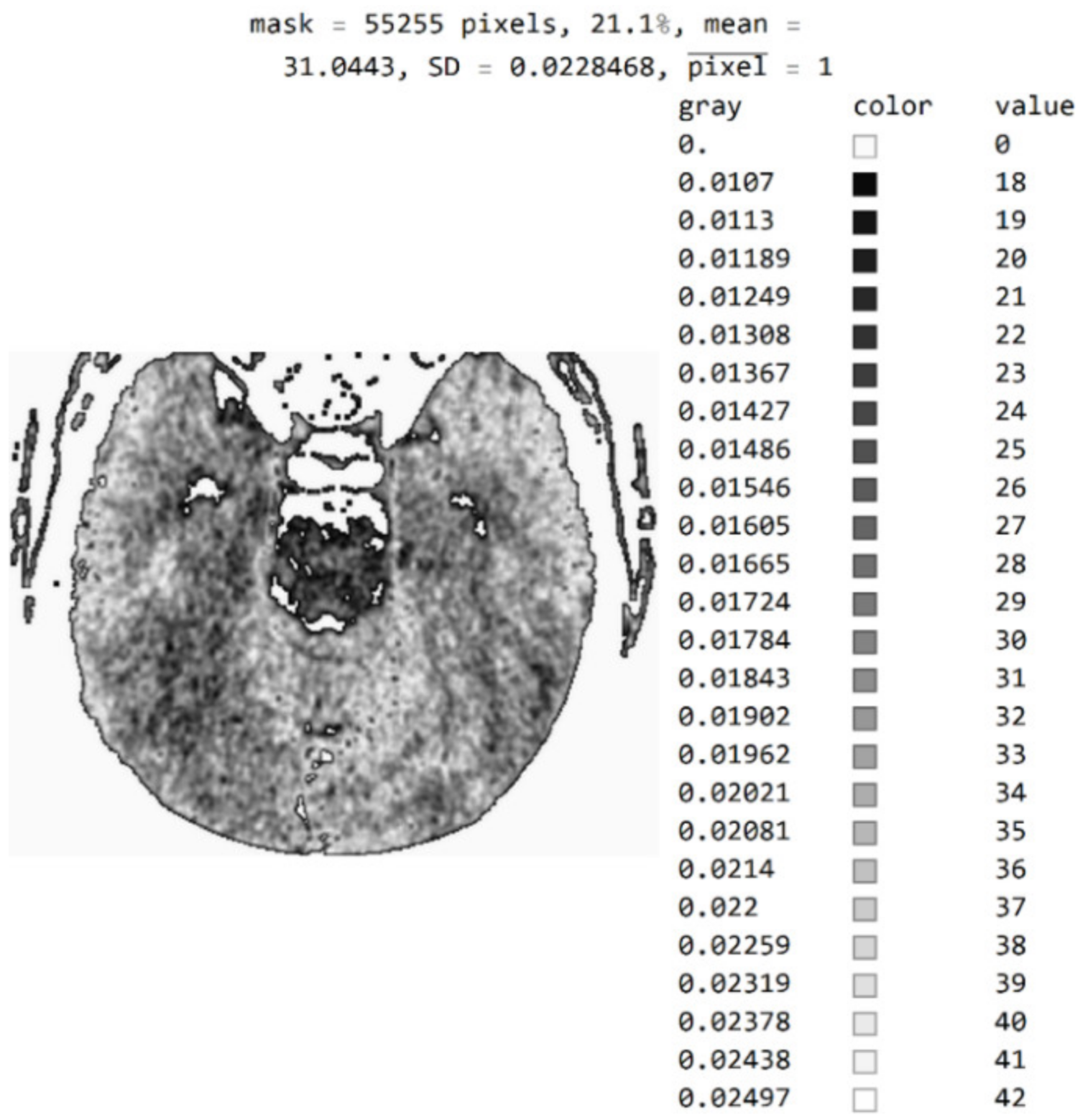

Figure 10a–c show pixel masks in which black pixels are set to zero so that only the white pixels are displayed. Compared with the masks in Figure 8, these masks additionally set all pixels in the upper third of the image (near the eyes) to zero. The new mask displays 29.6% of the total number of pixels (Figure 10c); the mask for the pixel range from 1 to 55 contains 25% of the pixels (Figure 10b); and the selected display range contains 21.1% of the total number of pixels (Figure 10a).

Figure 10.

White represents pixels that will display the correct values for the selected mask: (a) in the range from the minimum to the maximum selected value; (b) in the range of values from 1 to 55; and (c) in the part of the image for a selected mask.

By choosing the range of pixel values, the specialist can more easily identify the pixel values of interest to conclude what type of disease is presented.

Figure A1 in Appendix A shows an image with exact values of integer type, shades of gray scaled from black (pixel value 18) to white (pixel value 42), and with all other pixels outside this range shown as zero, i.e., white. The description is the same as in Figure 9. By choosing a mask, the specialist may choose not to show the part of the picture that, in their opinion, does not contribute to the conclusion about the type of disease.

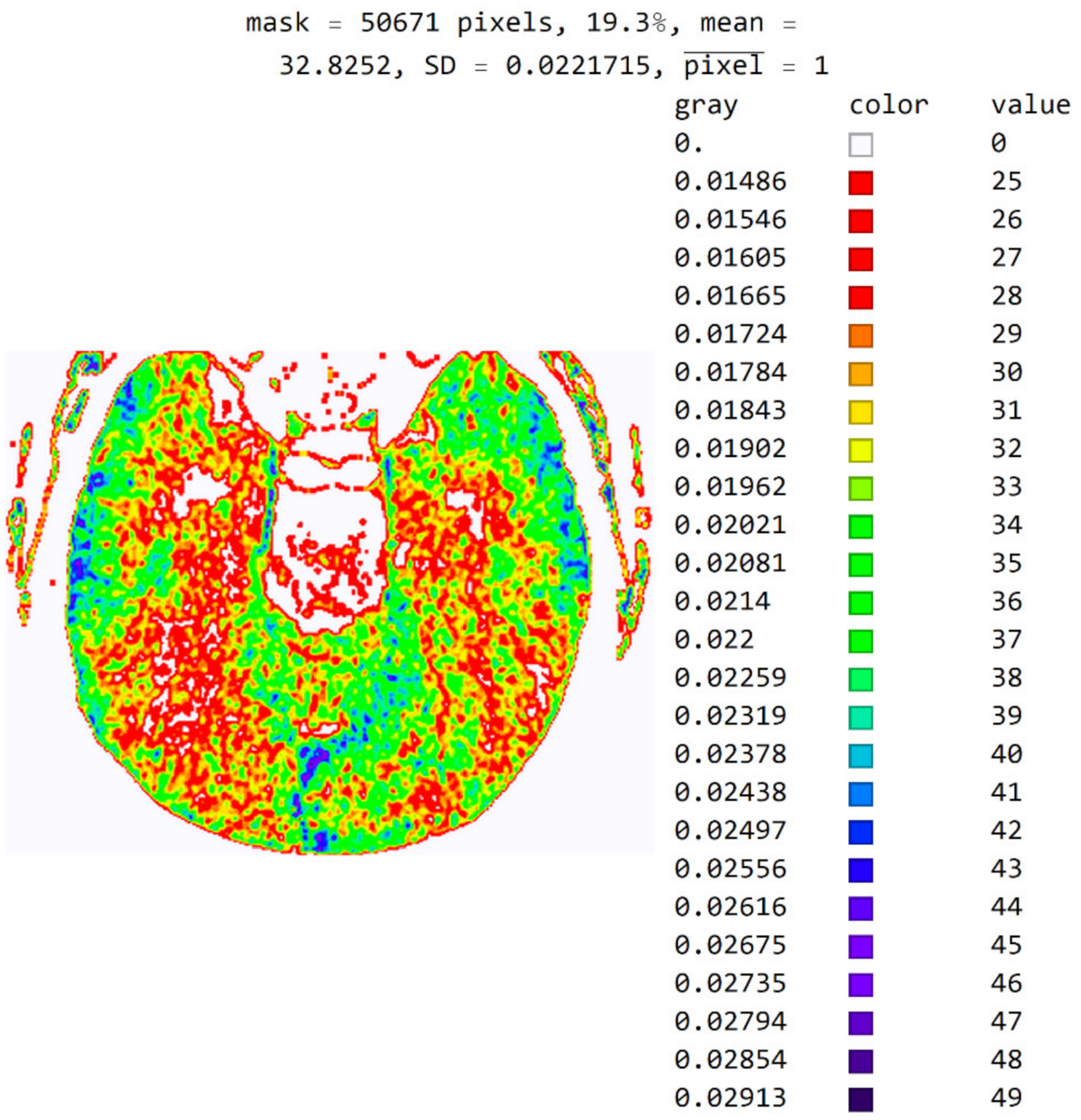

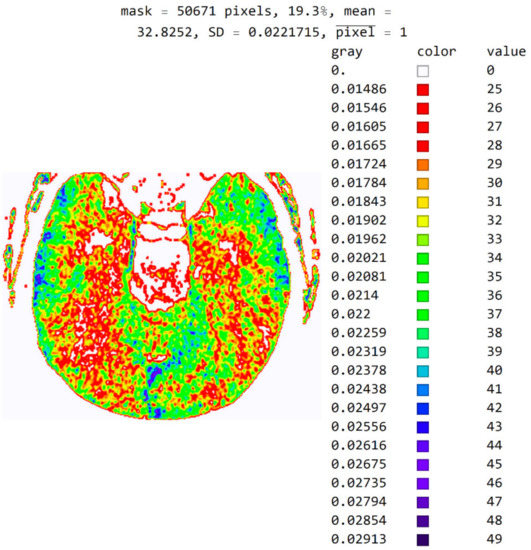

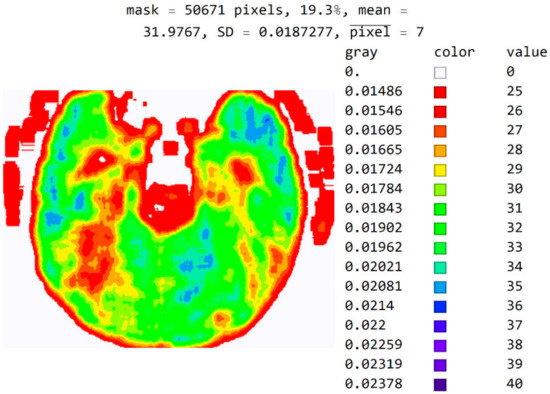

The software can also display pixels in color to help identify pixel values in an image. For example, Figure 11 uses a color map based on visible-light wavelengths, the range most commonly used in radiology (i.e., colors defined by light wavelength in nanometers, from the Physics-Oriented Color Schemes). The color map can be changed depending on what the specialist wants to emphasize when differentiating the values of adjacent pixels. In Figure 11, the minimum value was set to 25 instead of 18 because the specialist wanted to see where the pixel values greater than 25 are located. Only a small number of pixels have values greater than 40, and they do not contribute to recognizing the disease, so the specialist can choose a narrower range of values to display. Very fine differences between adjacent levels can hinder disease recognition, so it is desirable to perform averaging, in which each pixel receives the average of several adjacent pixels in the image.

Figure 11.

Image with the exact values of an integer type on the color map of the visible spectrum from pixel values 25 to 49. All other pixels outside this range have a value of 0 and are displayed in white.
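A visible-spectrum color map of the kind described above can be approximated by a piecewise-linear wavelength-to-RGB function. The sketch below uses a widely circulated approximation (often attributed to Dan Bruton), without the intensity fall-off near the spectrum ends, and maps the display range linearly onto 380 to 700 nm; both choices are assumptions and not necessarily the color scheme used in the SVMI software. Pixels outside the range are drawn white, as in Figure 11.

```python
import numpy as np

def wavelength_to_rgb(nm: float) -> tuple[float, float, float]:
    """Approximate RGB (0..1) for a visible wavelength in nm; black outside 380..780 nm."""
    if 380 <= nm < 440:
        return ((440 - nm) / (440 - 380), 0.0, 1.0)
    if 440 <= nm < 490:
        return (0.0, (nm - 440) / (490 - 440), 1.0)
    if 490 <= nm < 510:
        return (0.0, 1.0, (510 - nm) / (510 - 490))
    if 510 <= nm < 580:
        return ((nm - 510) / (580 - 510), 1.0, 0.0)
    if 580 <= nm < 645:
        return (1.0, (645 - nm) / (645 - 580), 0.0)
    if 645 <= nm <= 780:
        return (1.0, 0.0, 0.0)
    return (0.0, 0.0, 0.0)

def colorize(image: np.ndarray, lo: int, hi: int, nm_range=(380.0, 700.0)) -> np.ndarray:
    """RGB image: integer levels lo..hi coloured along the spectrum, other pixels white."""
    start, stop = nm_range
    lut = np.array([wavelength_to_rgb(start + (v - lo) / (hi - lo) * (stop - start))
                    for v in range(lo, hi + 1)])          # one colour per integer level
    rgb = np.ones(image.shape + (3,), dtype=np.float64)   # white background
    keep = (image >= lo) & (image <= hi)
    rgb[keep] = lut[image[keep].astype(np.int64) - lo]
    return rgb

demo = np.array([[25, 37, 49, 60]])   # hypothetical pixel values
rgb = colorize(demo, 25, 49)
```

A lookup table with one entry per integer level guarantees that adjacent levels always receive distinct colors, which is the property the text relies on.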

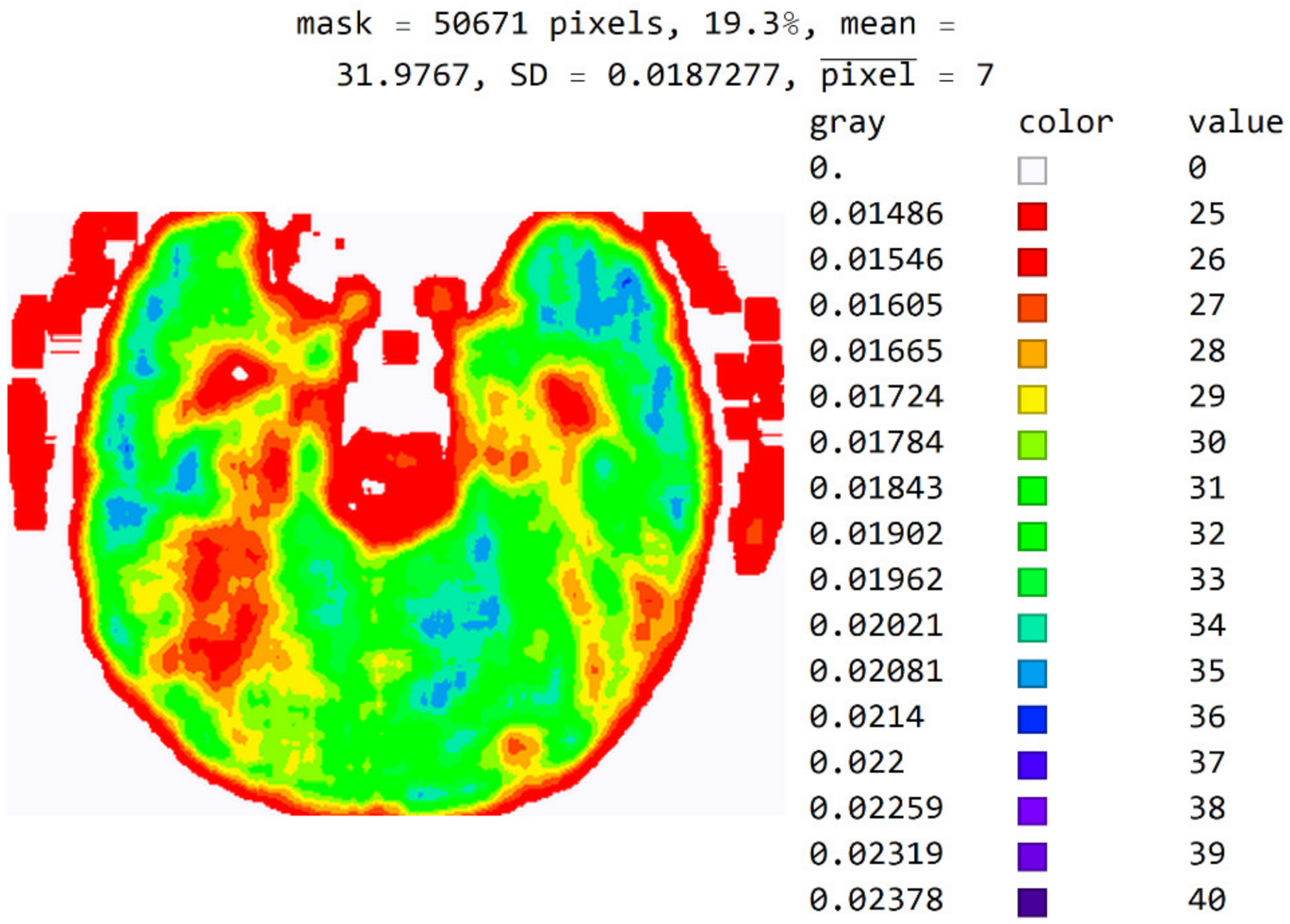

In Figure 12, each pixel was replaced by the mean value of seven adjacent pixels, with a display range of pixel values from 25 to 50. Figure 12 was used in an online questionnaire to evaluate the developed SVMI model. In the context of the practical education of MDs in the radiology residency training program, 95% of the surveyed physicians made the correct diagnosis after SVMI processing, and this diagnosis was confirmed by additional diagnostics.

Figure 12.

An image display with averaged seven adjacent pixels on a color map of the visible spectrum from pixel values 25 to 40. All other pixels outside this range have a value of 0 and are displayed in white.
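The text does not specify how the seven adjacent pixels are arranged. The sketch below assumes a horizontal window of seven pixels centred on each retained pixel and averages only over retained (non-zero) pixels, so that the zeroed mask does not darken the edges of the selection; a 2D neighbourhood would be an equally plausible reading.

```python
import numpy as np

def average_adjacent(masked: np.ndarray, k: int = 7) -> np.ndarray:
    """Average each retained (non-zero) pixel over a horizontal window of k pixels.

    Only retained pixels enter each mean; masked pixels stay 0. Assumes k is odd
    and every row has at least k pixels (np.convolve's "same" mode then keeps
    the row length and centres the window).
    """
    kernel = np.ones(k)
    values = masked.astype(np.float64)
    valid = (masked > 0).astype(np.float64)

    def row_sums(a: np.ndarray) -> np.ndarray:
        return np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="same"), 1, a)

    sums, counts = row_sums(values), row_sums(valid)
    out = np.zeros_like(values)
    keep = valid > 0
    out[keep] = sums[keep] / counts[keep]   # each kept pixel counts itself, so no division by 0
    return out

row = np.array([[0, 10, 20, 30, 40, 50, 60, 70, 0]])   # hypothetical masked row
smoothed = average_adjacent(row, k=7)
```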

The software is not intended for use with just one image during training and education. Instead, specialists should experiment with a wide range of observed values, different color maps, and different numbers of adjacent pixels for averaging, to gain better insight into the areas that can contribute to the correct medical decision.

Figure A2 in Appendix A shows how the image processed in shades of gray can be combined with the colored image: a segment obtained by processing and averaging is added to the gray-level image and marked in red.
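Combining the gray-level image with a red segment, as in Figure A2, amounts to stacking the gray image into three RGB channels and painting the segment pixels red. In this sketch the segment is just a boolean mask; how it is derived (for example, from averaged pixels within a chosen band) is an assumption left to the caller, and the function name is hypothetical.

```python
import numpy as np

def overlay_red(gray01: np.ndarray, segment: np.ndarray) -> np.ndarray:
    """Turn a 0..1 gray image into RGB and paint the pixels of `segment` pure red."""
    rgb = np.repeat(gray01[..., np.newaxis], 3, axis=-1).astype(np.float64)
    rgb[segment] = (1.0, 0.0, 0.0)
    return rgb

gray = np.array([[0.2, 0.8], [0.5, 0.0]])                 # hypothetical rescaled gray image
segment = np.array([[False, True], [False, False]])       # hypothetical averaged segment
rgb = overlay_red(gray, segment)
```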

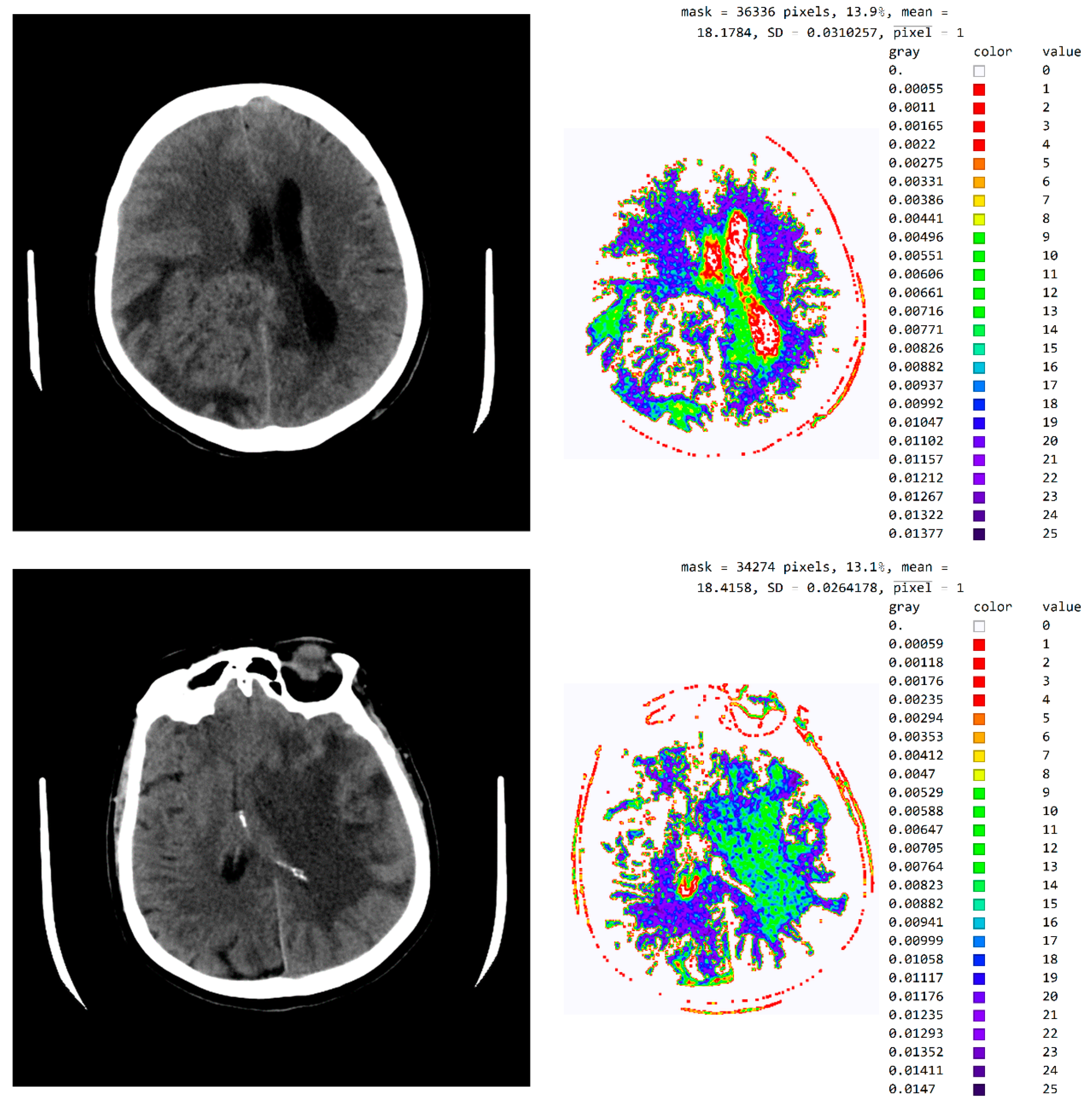

Figure A3 in Appendix A provides a tabular presentation of the various ranges of values and color maps in SVMI processing for different diagnosed brain diseases.

Because the specialist must select the pixel range for display, the color map, and the number of pixels for averaging, the analysis requires interactive parameter selection by the specialist. It cannot be used for automatic analysis and diagnosis of the disease.

3.2. Evaluation of the Developed SVMI Model

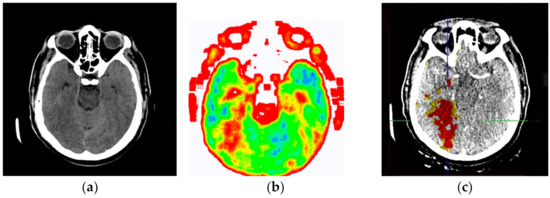

The developed model was evaluated through an online questionnaire to determine whether smart visualization of medical images (SVMI) can contribute to the education of MDs in the radiology residency training program and of undergraduate medical students. A total of twenty physicians from the Department of Emergency Neuroradiology gave their professional medical opinion in the questionnaire: nine were neuroradiology/radiology specialists (45%) and eleven were MDs in the radiology residency training program (55%). All physicians agreed to participate in the research and had no insight into the patient’s final diagnosis, which was established after complete diagnostic methods, including post-contrast MDCT of the endocranium, MDCT perfusion, and MDCT angiography (final diagnosis after all diagnostic methods provided by the protocol: occipital right, in the region of PCA vascularization, a zone of hypodensity without differentiation of the gray–white mass and with flattened sulci; differential diagnosis: acute ischemic stroke). A triple-blind review system was therefore applied: no participant knew the other physicians’ opinions (before or after), the patient’s official diagnosis, name, and surname, or the neuroradiologist who performed the examination. In addition, all survey participants were asked questions in the order in which the images appear, starting with Figure 13a.

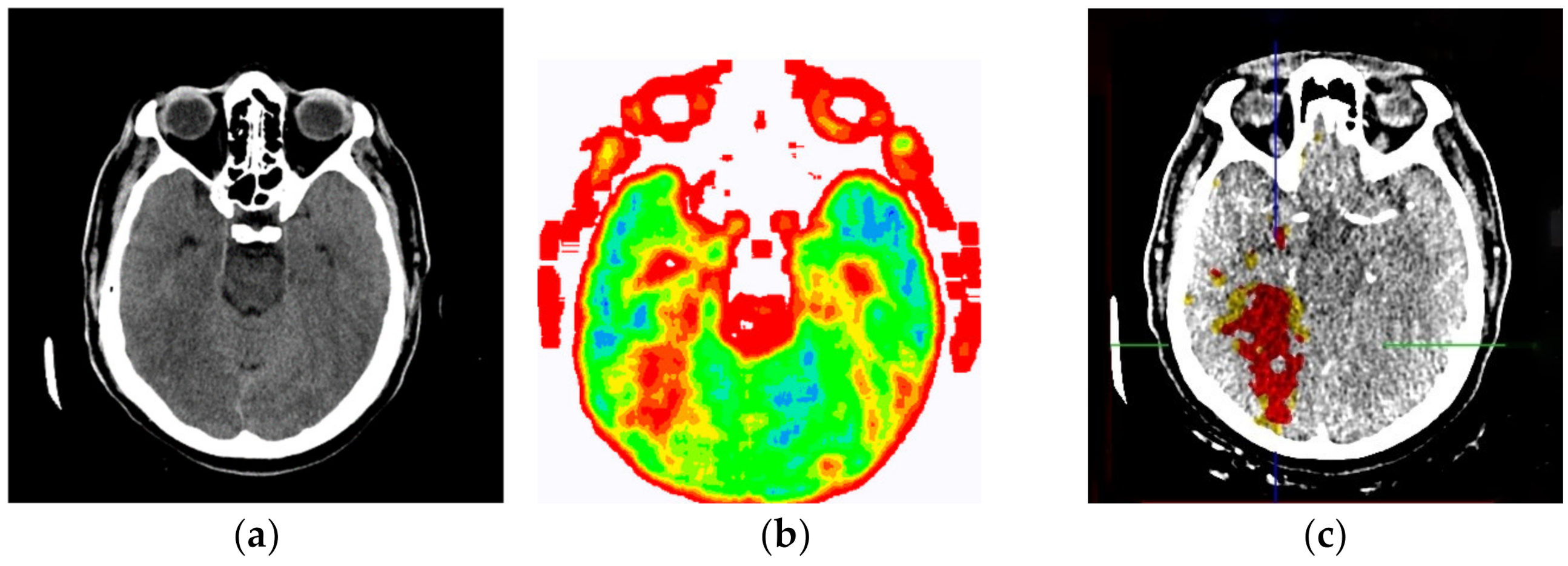

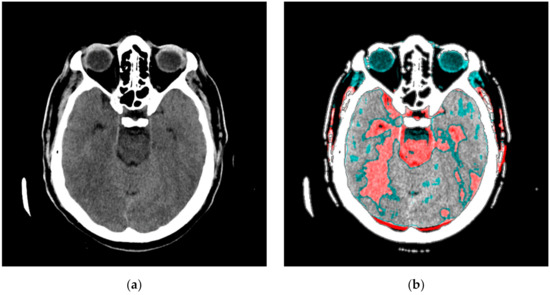

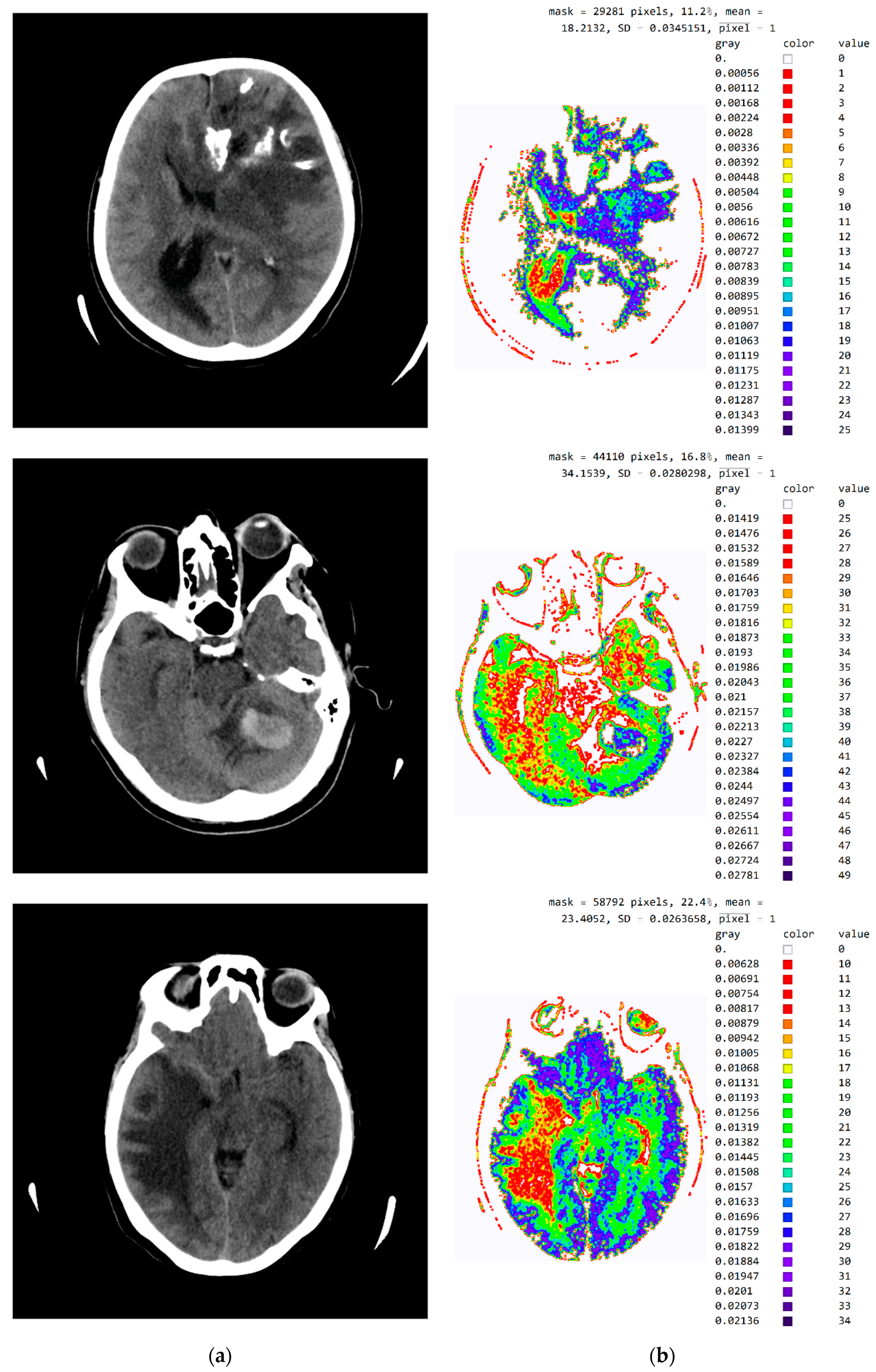

Figure 13.

(a) Non-contrast MDCT image of the endocranium; (b) SVMI of the non-contrast MDCT image of the endocranium; and (c) MDCT perfusion of the same cross-section.

Figure 13a shows a non-contrast MDCT image of the endocranium with a zone of hypodensity on the occipital right, without clear differentiation of the gray–white mass, which corresponds to an acute ischemic lesion. Figure 13b presents our smart visualization (SVMI) of the same non-contrast MDCT image and cross-section. Figure 13c, obtained by additional MDCT perfusion imaging, shows a perfusion deficit in the same region (occipital right), which confirms the diagnosis of acute ischemic stroke.

On the non-contrast MDCT image of the patient’s endocranium (Figure 13a), all nine radiology specialists (100%), whose experience in neuroradiology ranged from 3 to 15 years, noticed that changes in the brain had occurred. In contrast, based on the same image, 81.8% (nine of eleven) of the MDs in the radiology residency training program who completed the questionnaire did not notice the change, did not adequately characterize it, or did not give an adequate differential diagnosis. Table 1 shows the analysis of the physicians’ answers to the first question, based only on Figure 13a.

Table 1.

Analysis of the answers to the first question: Analyzing Figure 13a (non-contrast MDCT image of the patient’s endocranium), what is your opinion on whether there are changes in the brain, and if there are, what changes are possible?

After smart visualization (SVMI) of the non-contrast MDCT image of the endocranium (Figure 13b), 95% of all respondents (19 of 20) made an accurate diagnosis and confirmed the existence of pathological changes in density; only one MD in the radiology residency training program answered incorrectly. Table 2 shows the analysis of the physicians’ answers to the second question, based only on Figure 13b.

Table 2.

Analysis of the given answers to the second question: Analyzing Figure 13b (SVMI of the non-contrast MDCT image of the endocranium of the same patient and the same region and cross-section), in your opinion, what is the possible diagnosis?

Physicians’ confidence in their statements, based only on Figure 13b, was assessed on a five-point Likert scale. Table 3 shows the analysis of the answers to the third question.

Table 3.

Analysis of the answers given to the third question: Please express your position and confidence in the given opinion based on observation of Figure 13b.

Table 4 presents the physicians’ opinions on whether this smart visualization (SVMI) can contribute to the education of MDs in the radiology residency training program and undergraduate medical students.

Table 4.

Analysis of the given answers to the final question: By analyzing Figure 13b, does this smart visualization (SVMI) of the medical images of a patient’s brain contribute to the education of MDs in the radiology residency training program and undergraduate medical students?

In their responses, 60% of physicians stated that SVMI “can” contribute to education and 35% that “to some extent it can,” which indicates that smart visualization of medical images (SVMI) can contribute to education. Additionally, the proposed SVMI model follows the clinical protocols for further diagnostics and patient treatment: for confirmation, Figure 13c shows MDCT perfusion with a perfusion deficit, supporting the diagnosis of acute ischemic stroke.
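The reported percentages follow directly from the respondent counts in this section. The counts for the last question (12 and 7) are back-calculated from the reported 60% and 35% of 20 respondents, and the helper name is hypothetical.

```python
def share(count: int, total: int) -> float:
    """Percentage of respondents, rounded to one decimal place."""
    return round(100 * count / total, 1)

# Counts reported in Section 3.2 (twenty respondents: 9 specialists, 11 residents).
specialists, residents = 9, 11
total = specialists + residents
print(share(specialists, total), share(residents, total))  # group sizes
print(share(9, residents))     # residents who missed or misjudged the change on Figure 13a
print(share(19, total))        # correct diagnosis after SVMI (one incorrect answer)
print(share(12, total), share(7, total))  # "it can" vs "to some extent it can" (back-calculated)
```

Rounding nine of eleven to one decimal place gives 81.8%.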

4. Discussion

Given their knowledge and many years of experience, neuroradiologists who notice a change on the non-contrast image may have some degree of certainty about the type of change in the brain. However, an IV (intravenous) contrast agent is necessary to characterize the visualized pathological change in density. When existing medical documentation includes previous non-contrast and post-contrast examinations, neuroradiologists can understand what kind of change is being observed. In that case, contrast media are not needed for characterization, but only to assess disease progression, relapse, or recurrence (in post-operative conditions, after chemotherapy or radiation therapy). If the specialist has a report on the type of pathological change in density and no post-contrast imaging is needed (inpatient disease), using SVMI to educate MDs in the radiology residency training program and undergraduate medical students becomes particularly valuable.

Administering an IV contrast agent is necessary when changes are difficult to notice, because pathological changes opacify characteristically after contrast is applied. Pathological changes in density at the sub-centimeter level may show no indirect signs of their existence on a non-contrast MDCT scan. If a malignant disease is already known, contrast must be administered to determine the endocranial dissemination of the underlying condition. In patients with existing secondary deposits in the brain parenchyma and new neurological symptoms, re-administration of contrast medium can be avoided, and the proposed SVMI model could be applied to detect possible newly formed changes of the same type.

The weak point of the proposed method is the lack of automated diagnosis. Future improvements will therefore include (1) applying deep learning techniques to a large dataset already used by experts to train novices and (2) developing a graphical user interface (GUI) that simplifies the expert’s interaction with the software to obtain the desired output.

5. Conclusions

This paper presents a novel model for the smart visualization of medical images (SVMI) obtained through MDCT diagnostics, together with a method for their reconstruction and enhancement. The regenerated image of the human brain is suitable both for interpretation and as an accompanying diagnostic tool in radiology residency training programs and in undergraduate medical education. Neuroradiology education in particular can benefit from the proposed SVMI model in several ways. Moreover, the model visualizes specific cross-sections of interest in a way that mimics the workflow of neuroradiologists.

Radiology specialists with many years of experience, as well as MDs in the radiology residency training program, confirmed that the proposed model could contribute significantly both to residency training and to a more accurate visualization of the region of interest where changes in the brain occur. Of the surveyed physicians, 95% made a correct diagnosis after SVMI processing and confirmed that it could contribute to education in neuroradiology, providing reliable ground truth. To our knowledge, smart visualization of medical images at the cross-section level for the purpose of educating future radiologists has not been tested in previous research.

A balance between the complexity and quality of medical data obtained through MDCT diagnostics and the careful visualization of images with isolated segments of interest, adaptable to all medical applications in healthcare institutions, is essential for the future development of smart medical information systems. The proposed SVMI model offers a practical tool that could be widely adopted by physicians and healthcare providers.

Author Contributions

Conceptualization, A.S. and S.L.; Data curation, S.L.; Investigation, S.L.; Methodology, A.S. and M.L.-B.; Project administration, S.L. and V.K.; Resources, S.L. and V.K.; Software, A.S. and M.L.-B.; Validation, S.L.; Formal Analysis, A.S. and M.L.-B.; Visualization, A.S. and M.L.-B.; Supervision, A.S.; Writing—original draft, A.S.; Writing—review & editing, A.S., M.L.-B., S.L. and V.K.; Funding Acquisition, A.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable. The images presented in the paper contain no confidential personal data of patients, and individual rights are not infringed, as detailed in the manuscript. Personal identity details on the brain images (names, dates of birth, and identity numbers) are secured and fully anonymized, and the submission does not include images that could identify any person.

Data Availability Statement

The data presented in this study are available from the corresponding author upon reasonable request.

Acknowledgments

The authors wish to express gratitude to the radiology and neuroradiology specialists and MDs in the radiology residency training program of the Department of Emergency neuroradiology and musculoskeletal system of the University Clinical Center of Serbia, UKCS, and the Institute of Oncology and Radiology of Serbia for their expert medical opinion in evaluating the developed SVMI model.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Figure A1.

Image with exact integer values: shades of gray are scaled from black (pixel value 18) to white (pixel value 42), and all pixels outside this range are set to 0 and shown in white.
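
The windowing described in the Figure A1 caption can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the slice is available as a 2D NumPy array of pixel values, uses the caption's window of 18 to 42, and maps that range linearly onto display intensities from 0 (black) to 1 (white). The function name `svmi_window` and the parameter `outside_display` are hypothetical. Following the caption, pixels outside the window are treated as carrying no information and are rendered white.

```python
import numpy as np


def svmi_window(pixels, low=18, high=42, outside_display=1.0):
    """Hypothetical sketch of the Figure A1 windowing step.

    Pixels with values in [low, high] are mapped linearly to display
    intensities in [0, 1] (low -> black, high -> white). Pixels outside
    the window are replaced by `outside_display` (1.0 = white, as the
    Figure A1 caption describes). Returns the display image and the
    boolean in-window mask.
    """
    if high <= low:
        raise ValueError("high must be greater than low")
    values = np.asarray(pixels, dtype=float)
    inside = (values >= low) & (values <= high)
    # Start with every pixel set to the "hidden" display value.
    display = np.full(values.shape, outside_display, dtype=float)
    # Linear rescaling of the informative range onto [0, 1].
    display[inside] = (values[inside] - low) / (high - low)
    return display, inside
```

The result can be displayed with Matplotlib, for example `plt.imshow(gray, cmap="gray", vmin=0, vmax=1)`; fixing `vmin` and `vmax` prevents Matplotlib from re-stretching the contrast to the image's own minimum and maximum.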

Figure A2.

Grayscale image with the averaged part from processing shown in red: (a) grayscale display; (b) display with the averaged red part added.
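
The caption of Figure A2 does not specify what is averaged, so the following sketch implements only one possible reading, stated here as an assumption: a local mean (a 3 × 3 box filter) is computed over the raw pixel values, and pixels whose local mean falls inside a chosen sub-range are painted red on top of the grayscale display. The function names `box_mean` and `overlay_red`, the filter size, and the sub-range are hypothetical; the box filter is written in plain NumPy to keep the example self-contained.

```python
import numpy as np


def box_mean(image, size=3):
    """Local mean over a size x size neighbourhood, with edge padding."""
    if size % 2 != 1:
        raise ValueError("size must be odd")
    image = np.asarray(image, dtype=float)
    pad = size // 2
    padded = np.pad(image, pad, mode="edge")
    h, w = image.shape
    acc = np.zeros((h, w), dtype=float)
    # Sum the size*size shifted copies of the image, then divide.
    for dy in range(size):
        for dx in range(size):
            acc += padded[dy:dy + h, dx:dx + w]
    return acc / (size * size)


def overlay_red(gray, values, low, high, size=3):
    """Paint red where the local mean of `values` lies in [low, high].

    `gray` is a display image in [0, 1]; `values` holds the raw pixel
    values of the same slice. Both the averaging and the [low, high]
    sub-range are assumptions made for illustration.
    """
    avg = box_mean(values, size)
    mask = (avg >= low) & (avg <= high)
    # Replicate the grayscale channel into an RGB image.
    rgb = np.repeat(np.asarray(gray, dtype=float)[..., None], 3, axis=-1)
    rgb[mask] = (1.0, 0.0, 0.0)
    return rgb, mask
```

Averaging before thresholding suppresses isolated noisy pixels, so only spatially coherent regions are highlighted. If SciPy is available, `scipy.ndimage.uniform_filter(values, size=3, mode="nearest")` should give the same local means as `box_mean` up to floating-point rounding.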

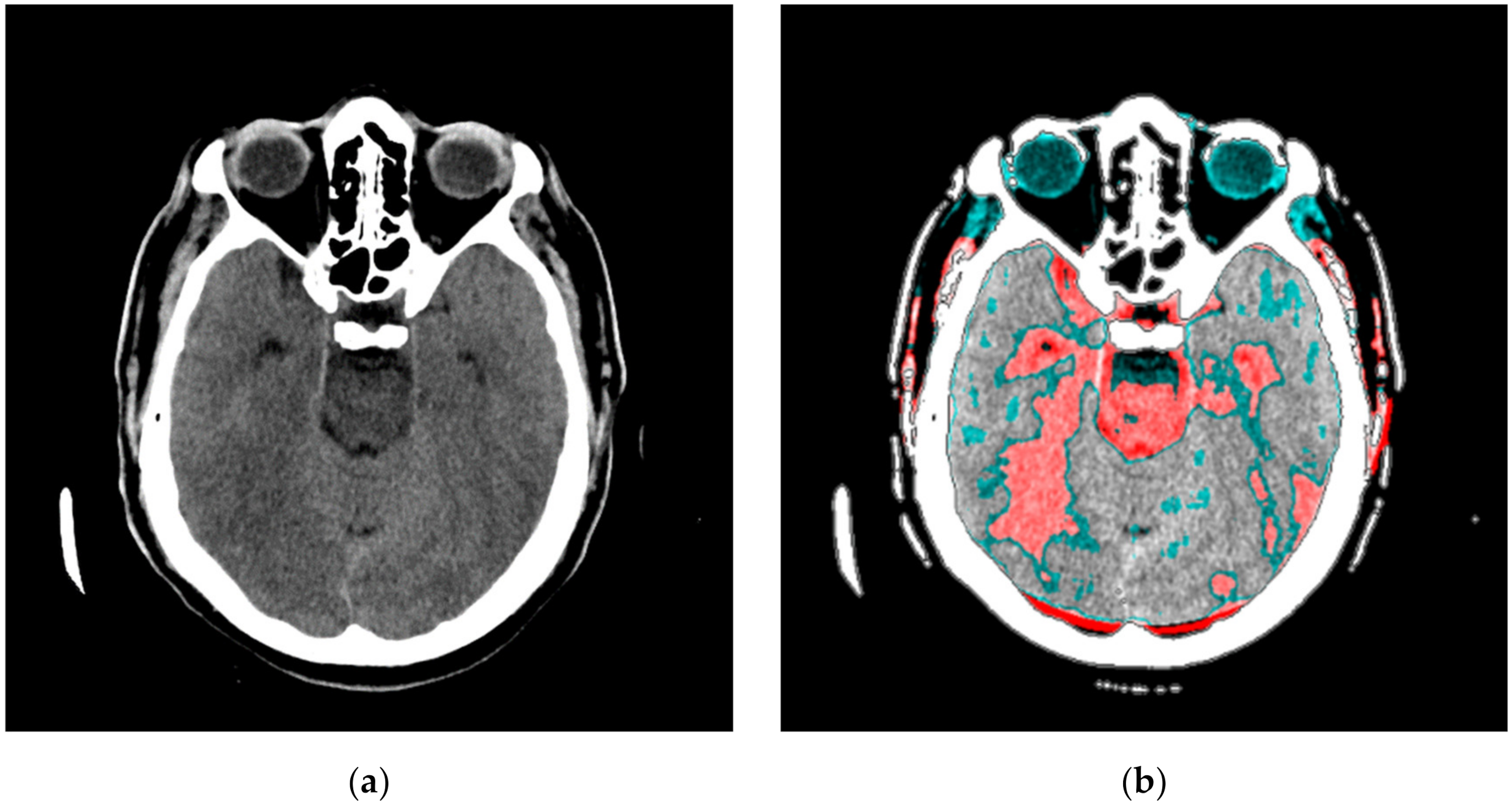

Figure A3.

SVMI processing of a non-contrast MDCT image: (a) non-contrast MDCT image; (b) smart visualization of medical images (SVMI).

References

- Campbell-Washburn, A.E.; Ramasawmy, R.; Restivo, M.C.; Bhattacharya, I.; Basar, B.; Herzka, D.A.; Hansen, M.S.; Rogers, T.; Bandettini, W.P.; McGuirt, D.R.; et al. Opportunities in Interventional and Diagnostic Imaging by Using High-Performance Low-Field-Strength MRI. Radiology 2019, 293, 384–393.

- Blanke, P.; Weir-McCall, J.R.; Achenbach, S.; Delgado, V.; Hausleiter, J.; Jilaihawi, H.; Marwan, M.; Nørgaard, B.L.; Piazza, N.; Schoenhagen, P.; et al. Computed tomography imaging in the context of transcatheter aortic valve implantation (TAVI)/transcatheter aortic valve replacement (TAVR): An expert consensus document of the Society of Cardiovascular Computed Tomography. JACC Cardiovasc. Imaging 2019, 12, 1–24.

- Dong, X.; Lei, Y.; Wang, T.; Higgins, K.; Liu, T.; Curran, W.J.; Mao, H.; Nye, J.A.; Yang, X. Deep learning-based attenuation correction in the absence of structural information for whole-body positron emission tomography imaging. Phys. Med. Biol. 2020, 65, 055011.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Hirschberg, J.; Manning, C.D. Advances in natural language processing. Science 2015, 349, 261–266.

- Hinton, G.; Deng, L.; Yu, D.; Dahl, G.E.; Mohamed, A.-R.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.N.; et al. Deep Neural Networks for Acoustic Modeling in Speech Recognition: The Shared Views of Four Research Groups. IEEE Signal Process. Mag. 2012, 29, 82–97.

- Minor, L.B. Harnessing the Power of Data in Health. In Health Trends Report; Stanford Medicine: Stanford, CA, USA, 2017; pp. 1–18.

- Esteva, A.; Robicquet, A.; Ramsundar, B.; Kuleshov, V.; DePristo, M.; Chou, K.; Cui, C.; Corrado, G.; Thrun, S.; Dean, J. A guide to deep learning in healthcare. Nat. Med. 2019, 25, 24–29.

- Langlotz, C.P.; Allen, B.; Erickson, B.J.; Kalpathy-Cramer, J.; Bigelow, K.; Cook, T.S.; Flanders, A.E.; Lungren, M.P.; Mendelson, D.S.; Rudie, J.D.; et al. A Roadmap for Foundational Research on Artificial Intelligence in Medical Imaging: From the 2018 NIH/RSNA/ACR/The Academy Workshop. Radiology 2019, 291, 781–791.

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

- Lee, H.; Yune, S.; Mansouri, M.; Kim, M.; Tajmir, S.H.; Guerrier, C.E.; Ebert, S.A.; Pomerantz, S.R.; Romero, J.M.; Kamalian, S.; et al. An explainable deep-learning algorithm for the detection of acute intracranial haemorrhage from small datasets. Nat. Biomed. Eng. 2019, 3, 173–182.

- Rajpurkar, P.; Irvin, J.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D.; Bagul, A.; Langlotz, C.; Shpanskaya, K.; et al. CheXNet: Radiologist-level pneumonia detection on chest X-rays with deep learning. arXiv 2017, arXiv:1711.05225.

- Lazer, D.; Kennedy, R.; King, G.; Vespignani, A. The Parable of Google Flu: Traps in Big Data Analysis. Science 2014, 343, 1203–1205.

- Alexander, A.; Jiang, A.; Ferreira, C.; Zurkiya, D. An Intelligent Future for Medical Imaging: A Market Outlook on Artificial Intelligence for Medical Imaging. J. Am. Coll. Radiol. 2020, 17, 165–170.

- Ursuleanu, T.; Luca, A.; Gheorghe, L.; Grigorovici, R.; Iancu, S.; Hlusneac, M.; Preda, C.; Grigorovici, A. Deep Learning Application for Analyzing of Constituents and Their Correlations in the Interpretations of Medical Images. Diagnostics 2021, 11, 1373.

- Dai, Y.; Gao, Y.; Liu, F. TransMed: Transformers Advance Multi-Modal Medical Image Classification. Diagnostics 2021, 11, 1384.

- Shen, D.; Wu, G.; Suk, H.-I. Deep Learning in Medical Image Analysis. Annu. Rev. Biomed. Eng. 2017, 19, 221–248.

- Rehman, M.U.; Cho, S.; Kim, J.; Chong, K.T. BrainSeg-Net: Brain Tumor MR Image Segmentation via Enhanced Encoder–Decoder Network. Diagnostics 2021, 11, 169.

- Zhang, W.; Li, R.; Deng, H.; Wang, L.; Lin, W.; Ji, S.; Shen, D. Deep convolutional neural networks for multi-modality isointense infant brain image segmentation. NeuroImage 2015, 108, 214–224.

- Kleesiek, J.; Urban, G.; Hubert, A.; Schwarz, D.; Maier-Hein, K.; Bendszus, M.; Biller, A. Deep MRI brain extraction: A 3D convolutional neural network for skull stripping. NeuroImage 2016, 129, 460–469.

- Pereira, S.; Pinto, A.; Alves, V.; Silva, C.A. Brain Tumor Segmentation Using Convolutional Neural Networks in MRI Images. IEEE Trans. Med. Imaging 2016, 35, 1240–1251.

- El Kader, I.A.; Xu, G.; Shuai, Z.; Saminu, S.; Javaid, I.; Ahmad, I.S.; Kamhi, S. Brain Tumor Detection and Classification on MR Images by a Deep Wavelet Auto-Encoder Model. Diagnostics 2021, 11, 1589.

- Latif, G.; Ben Brahim, G.; Iskandar, D.N.F.A.; Bashar, A.; Alghazo, J. Glioma Tumors’ Classification Using Deep-Neural-Network-Based Features with SVM Classifier. Diagnostics 2022, 12, 1018.

- Xie, Y.; Zaccagna, F.; Rundo, L.; Testa, C.; Agati, R.; Lodi, R.; Manners, D.N.; Tonon, C. Convolutional Neural Network Techniques for Brain Tumor Classification (from 2015 to 2022): Review, Challenges, and Future Perspectives. Diagnostics 2022, 12, 1850.

- VanBerlo, B.; Smith, D.; Tschirhart, J.; VanBerlo, B.; Wu, D.; Ford, A.; McCauley, J.; Wu, B.; Chaudhary, R.; Dave, C.; et al. Enhancing Annotation Efficiency with Machine Learning: Automated Partitioning of a Lung Ultrasound Dataset by View. Diagnostics 2022, 12, 2351.

- Wu, G.; Kim, M.; Wang, Q.; Munsell, B.C.; Shen, D. Scalable High-Performance Image Registration Framework by Unsupervised Deep Feature Representations Learning. IEEE Trans. Biomed. Eng. 2015, 63, 1505–1516.

- Suk, H.-I.; Lee, S.-W.; Shen, D. Hierarchical feature representation and multimodal fusion with deep learning for AD/MCI diagnosis. NeuroImage 2014, 101, 569–582.

- Shin, H.-C.; Roberts, K.; Lu, L.; Demner-Fushman, D.; Yao, J.; Summers, R.M. Learning to Read Chest X-rays: Recurrent Neural Cascade Model for Automated Image Annotation. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2497–2506.

- van Tulder, G.; de Bruijne, M. Combining Generative and Discriminative Representation Learning for Lung CT Analysis With Convolutional Restricted Boltzmann Machines. IEEE Trans. Med. Imaging 2016, 35, 1262–1272.

- Pang, X.; Zhao, Z.; Weng, Y. The Role and Impact of Deep Learning Methods in Computer-Aided Diagnosis Using Gastrointestinal Endoscopy. Diagnostics 2021, 11, 694.

- Inamdar, M.A.; Raghavendra, U.; Gudigar, A.; Chakole, Y.; Hegde, A.; Menon, G.R.; Barua, P.; Palmer, E.E.; Cheong, K.H.; Chan, W.Y.; et al. A Review on Computer Aided Diagnosis of Acute Brain Stroke. Sensors 2021, 21, 8507.

- Suk, H.-I.; Lee, S.-W.; Shen, D.; Alzheimer’s Disease Neuroimaging Initiative. Latent feature representation with stacked auto-encoder for AD/MCI diagnosis. Brain Struct. Funct. 2015, 220, 841–859.

- Elsharkawy, M.; Sharafeldeen, A.; Soliman, A.; Khalifa, F.; Ghazal, M.; El-Daydamony, E.; Atwan, A.; Sandhu, H.S.; El-Baz, A. A Novel Computer-Aided Diagnostic System for Early Detection of Diabetic Retinopathy Using 3D-OCT Higher-Order Spatial Appearance Model. Diagnostics 2022, 12, 461.

- Suk, H.-I.; Wee, C.-Y.; Lee, S.-W.; Shen, D. State-space model with deep learning for functional dynamics estimation in resting-state fMRI. NeuroImage 2016, 129, 292–307.

- Oza, P.; Sharma, P.; Patel, S.; Bruno, A. A Bottom-Up Review of Image Analysis Methods for Suspicious Region Detection in Mammograms. J. Imaging 2021, 7, 190.

- Dou, Q.; Chen, H.; Yu, L.; Zhao, L.; Qin, J.; Wang, D.; Mok, V.C.; Shi, L.; Heng, P.-A. Automatic Detection of Cerebral Microbleeds From MR Images via 3D Convolutional Neural Networks. IEEE Trans. Med. Imaging 2016, 35, 1182–1195.

- Chesebro, A.G.; Amarante, E.; Lao, P.J.; Meier, I.B.; Mayeux, R.; Brickman, A.M. Automated detection of cerebral microbleeds on T2*-weighted MRI. Sci. Rep. 2021, 11, 4004.

- Li, T.; Zou, Y.; Bai, P.; Li, S.; Wang, H.; Chen, X.; Meng, Z.; Kang, Z.; Zhou, G. Detecting cerebral microbleeds via deep learning with features enhancement by reusing ground truth. Comput. Methods Programs Biomed. 2021, 204, 106051.

- Tijssen, M.P.M.; Hofman, P.A.M.; Stadler, A.A.R.; Van Zwam, W.; De Graaf, R.; Van Oostenbrugge, R.J.; Klotz, E.; Wildberger, J.E.; Postma, A.A. The role of dual energy CT in differentiating between brain haemorrhage and contrast medium after mechanical revascularisation in acute ischaemic stroke. Eur. Radiol. 2014, 24, 834–840.

- Costine-Bartell, B.A.; McGuone, D.; Price, G.; Crawford, E.; Keeley, K.L.; Munoz-Pareja, J.; Dodge, C.P.; Staley, K.; Duhaime, A.C. Development of a model of hemispheric hypodensity (“big black brain”). J. Neurotrauma 2019, 36, 815–833.

- Dekeyzer, S.; Reich, A.; Othman, A.E.; Wiesmann, M.; Nikoubashman, O. Infarct fogging on immediate postinterventional CT: A not infrequent occurrence. Neuroradiology 2017, 59, 853–859.

- Borggrefe, J.; Gebest, M.P.; Hauger, M.; Ruess, D.; Mpotsaris, A.; Kabbasch, C.; Pennig, L.; Laukamp, K.R.; Goertz, L.; Kroeger, J.R.; et al. Differentiation of Intracerebral Tumor Entities with Quantitative Contrast Attenuation and Iodine Mapping in Dual-Layer Computed Tomography. Diagnostics 2022, 12, 2494.

- Yingying, L.; Zhe, Z.; Xiaochen, W.; Xiaomei, L.; Nan, J.; Shengjun, S. Dual-layer detector spectral CT: A new supplementary method for preoperative evaluation of glioma. Eur. J. Radiol. 2021, 138, 109649.

- Su, C.; Liu, C.; Zhao, L.; Jiang, J.; Zhang, J.; Li, S.; Zhu, W.; Wang, J. Amide Proton Transfer Imaging Allows Detection of Glioma Grades and Tumor Proliferation: Comparison with Ki-67 Expression and Proton MR Spectroscopy Imaging. Am. J. Neuroradiol. 2017, 38, 1702–1709.

- Paech, D.; Windschuh, J.; Oberhollenzer, J.; Dreher, C.; Sahm, F.; Meissner, J.-E.; Goerke, S.; Schuenke, P.; Zaiss, M.; Regnery, S.; et al. Assessing the predictability of IDH mutation and MGMT methylation status in glioma patients using relaxation-compensated multipool CEST MRI at 7.0 T. Neuro-Oncology 2018, 20, 1661–1671.

- Togao, O.; Yoshiura, T.; Keupp, J.; Hiwatashi, A.; Yamashita, K.; Kikuchi, K.; Suzuki, Y.; Suzuki, S.O.; Iwaki, T.; Hata, N.; et al. Amide proton transfer imaging of adult diffuse gliomas: Correlation with histopathological grades. Neuro-Oncology 2014, 16, 441–448.

- Cha, S. Neuroimaging in neuro-oncology. Neurotherapeutics 2009, 6, 465–477.

- Al-Okaili, R.N.; Krejza, J.; Woo, J.H.; Wolf, R.L.; O’Rourke, D.M.; Judy, K.D.; Poptani, H.; Melhem, E.R. Intraaxial brain masses: MR imaging–based diagnostic strategy—initial experience. Radiology 2007, 243, 539–550.

- Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-ray8: Hospital-scale chest X-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2097–2106.

- Poplin, R.; Varadarajan, A.V.; Blumer, K.; Liu, Y.; McConnell, M.V.; Corrado, G.S.; Peng, L.; Webster, D.R. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat. Biomed. Eng. 2018, 2, 158–164.

- Chilamkurthy, S.; Ghosh, R.; Tanamala, S.; Biviji, M.; Campeau, N.G.; Venugopal, V.K.; Mahajan, V.; Rao, P.; Warier, P. Deep learning algorithms for detection of critical findings in head CT scans: A retrospective study. Lancet 2018, 392, 2388–2396.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).