Scaphoid Fracture Detection by Using Convolutional Neural Network

Abstract

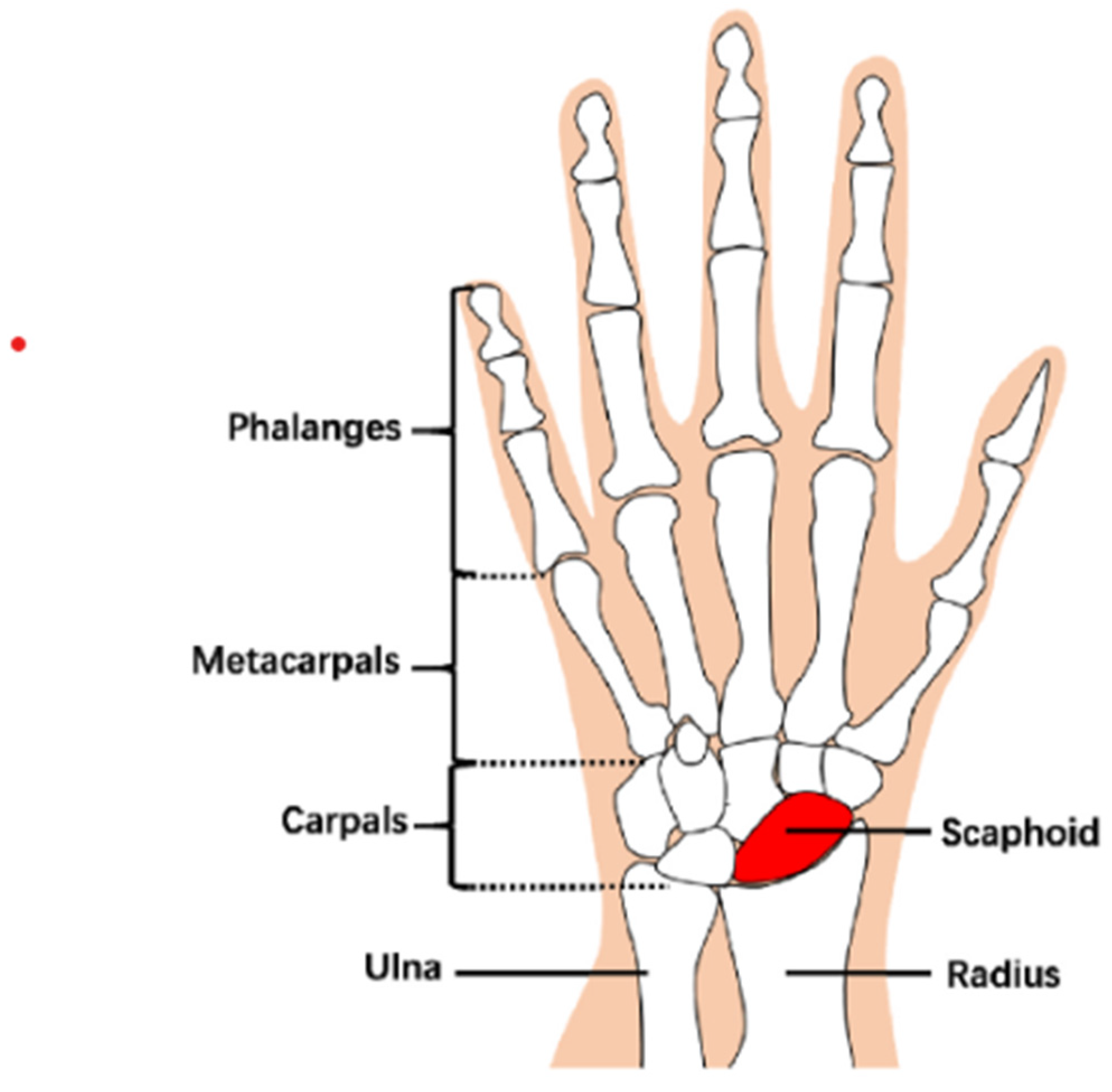

1. Introduction

- To increase detection accuracy, the proposed method uses two CNNs: one identifies the scaphoid area, and the other detects fractures of the scaphoid.

- Identifying the scaphoid area reduces the search space and the computation time for the subsequent fracture detection.

- The fracture detection CNN combines ResNet, a spatial feature pyramid (SSP), and a convolutional block attention module (CBAM). Experimental results showed that the proposed CNN achieved high detection performance.

2. Material and Method

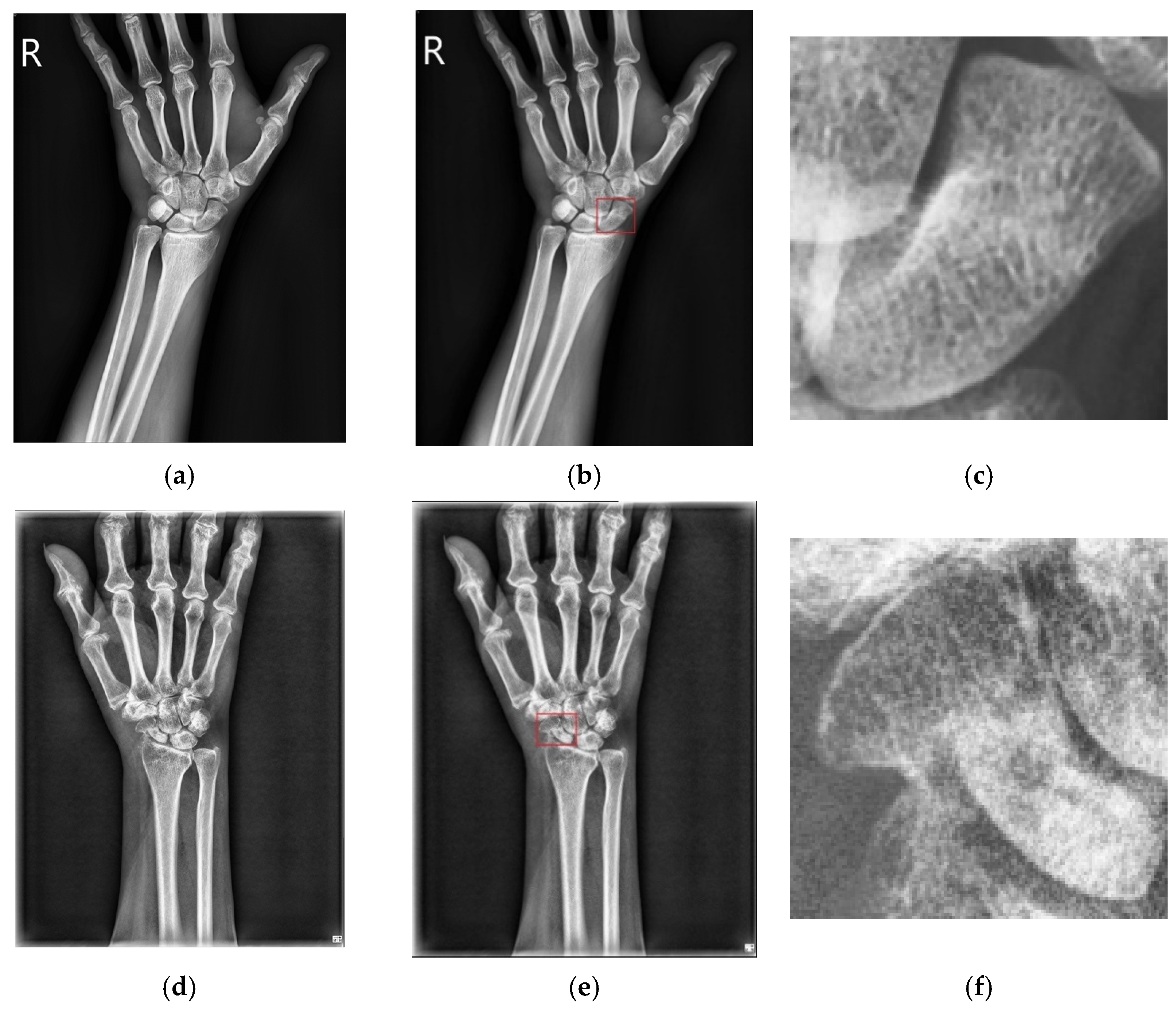

2.1. Data Collections and Implementation Environment

2.2. Methods

2.2.1. Scaphoid Area Detection

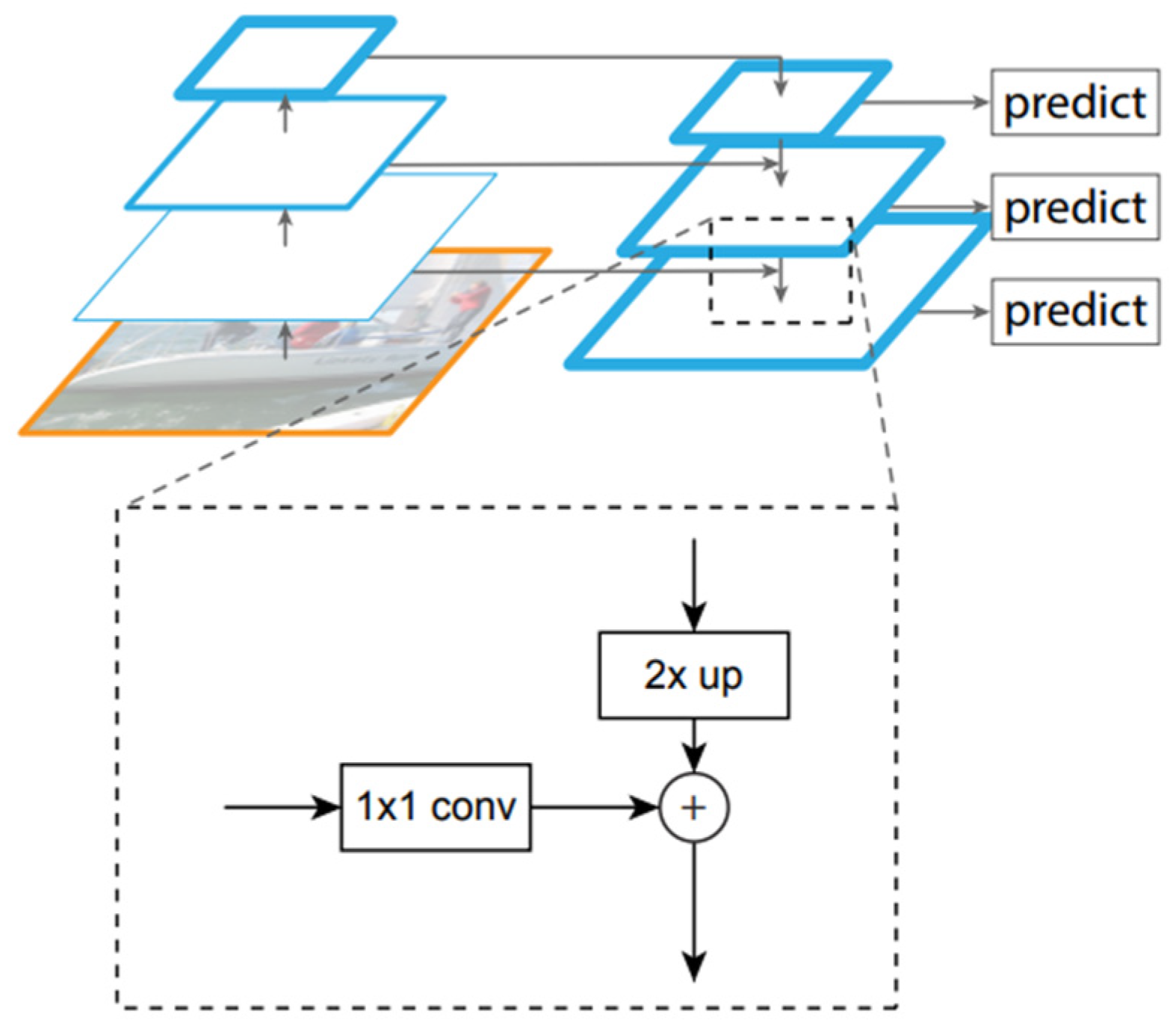

2.2.2. Fracture Area Detection

- Channel attention module. The input feature map F is aggregated over its spatial dimensions by average-pooling and max-pooling operations. The two resulting feature maps are denoted F_avg^c and F_max^c, each of size 1 × 1 × C. Each is passed independently through two fully connected layers, and the two outputs are merged into a single channel attention feature map by element-wise addition followed by a sigmoid operation.

- Spatial attention module. The channel attention feature map and the original feature map F are combined into a new feature map, which is then pooled along the channel axis by average-pooling and max-pooling operations. This yields two feature maps, F_avg^s and F_max^s, each of size H × W × 1. The two maps are concatenated into an H × W × 2 map, convolved with a 7 × 7 × 2 kernel, and passed through a sigmoid function.
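The two attention steps described above can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' implementation. It assumes the standard CBAM arrangement: the two fully connected layers share weights across both pooled descriptors, a ReLU sits between them, and the attention maps are applied to the features by element-wise multiplication. The names `W1`, `W2`, `kernel`, and the reduction ratio `r` are hypothetical, and the weights are random placeholders rather than trained values.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(F, W1, W2):
    """F: (H, W, C) feature map. Returns channel weights of shape (1, 1, C)."""
    avg_desc = F.mean(axis=(0, 1))  # average-pooling over H and W -> (C,)
    max_desc = F.max(axis=(0, 1))   # max-pooling over H and W -> (C,)

    def mlp(v):
        # Two fully connected layers with a ReLU in between (assumed).
        return np.maximum(v @ W1, 0.0) @ W2

    # Element-wise addition of the two branches, then sigmoid.
    return sigmoid(mlp(avg_desc) + mlp(max_desc)).reshape(1, 1, -1)

def spatial_attention(F, kernel):
    """F: (H, W, C) feature map, kernel: (7, 7, 2). Returns (H, W, 1) weights."""
    avg_map = F.mean(axis=2, keepdims=True)  # pool along channels -> (H, W, 1)
    max_map = F.max(axis=2, keepdims=True)   # pool along channels -> (H, W, 1)
    stacked = np.concatenate([avg_map, max_map], axis=2)  # (H, W, 2)

    k = kernel.shape[0]
    pad = k // 2
    # Zero-pad the spatial axes so the output keeps the H x W size.
    padded = np.pad(stacked, ((pad, pad), (pad, pad), (0, 0)))
    H, W, _ = stacked.shape
    out = np.empty((H, W, 1))
    for i in range(H):
        for j in range(W):
            out[i, j, 0] = np.sum(padded[i:i + k, j:j + k, :] * kernel)
    return sigmoid(out)

def cbam(F, W1, W2, kernel):
    """Apply channel attention, then spatial attention (multiplication assumed)."""
    F_c = F * channel_attention(F, W1, W2)        # broadcast over H and W
    return F_c * spatial_attention(F_c, kernel)   # broadcast over C

# Illustrative shapes only; none of these values come from the paper.
rng = np.random.default_rng(0)
H, W, C, r = 8, 8, 16, 4
F = rng.standard_normal((H, W, C))
W1 = rng.standard_normal((C, C // r)) * 0.1
W2 = rng.standard_normal((C // r, C)) * 0.1
kernel = rng.standard_normal((7, 7, 2)) * 0.1
refined = cbam(F, W1, W2, kernel)
print(refined.shape)  # (8, 8, 16)
```

The refined map has the same shape as the input, so a block of this kind can be placed between existing convolutional stages without changing the layers around it.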

2.2.3. Performance Evaluation

3. Results

3.1. Results of Scaphoid Detection

3.2. Fracture Detection and Classification

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Methods | Recall | Precision | Accuracy | Sensitivity | Specificity | AUC |
|---|---|---|---|---|---|---|
| Our proposed method | 0.789 | 0.894 | 0.853 | 0.789 | 0.900 | 0.920 |

| Methods | Recall | Precision | Accuracy | Sensitivity | Specificity | AUC |
|---|---|---|---|---|---|---|
| Our proposed method | 0.735 | 0.898 | 0.829 | 0.735 | 0.920 | 0.917 |
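The Recall and Sensitivity columns in the tables above hold identical values because both are defined as TP / (TP + FN). The sketch below shows how the threshold-based metrics follow from confusion-matrix counts. The function name `binary_metrics` and the counts in the usage lines are hypothetical and are not the study's data. AUC is omitted because it requires the per-sample scores rather than the counts.

```python
def binary_metrics(tp, fp, tn, fn):
    """Compute standard binary classification metrics from confusion-matrix counts."""
    recall = tp / (tp + fn)        # also called sensitivity
    precision = tp / (tp + fp)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    return {
        "recall": recall,
        "sensitivity": recall,     # same quantity as recall
        "precision": precision,
        "specificity": specificity,
        "accuracy": accuracy,
    }

# Hypothetical counts for illustration only.
metrics = binary_metrics(tp=8, fp=2, tn=18, fn=2)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```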

| Methods | Accurate Samples | Inaccurate Samples |
|---|---|---|
| Detection | 15 | 16 |
| Classification | 16 | 15 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, T.-H.; Horng, M.-H.; Li, R.-S.; Sun, Y.-N. Scaphoid Fracture Detection by Using Convolutional Neural Network. Diagnostics 2022, 12, 895. https://doi.org/10.3390/diagnostics12040895
