1. Introduction

Optical Coherence Tomography (OCT) has become the leading diagnostic tool in modern ophthalmology [

1,

2], providing various OCT-based biomarkers such as retinal thickness and volume in multiple zones, the presence of intraretinal cystoid fluid, subretinal fluid, alterations of outer retinal layers, and hyperreflective foci. However, this novel tool is not yet able to establish the progression of retinal diseases. Consequently, the ophthalmologist has to analyse the evolution of the condition based on the average thickness and volume on all seven layers and zones of the retina, which is most often a difficult and time-consuming task. Therefore, the human agent is not always able to identify patterns of disease evolution because of the huge number of retinal characteristics (considering all retinal zones, all layers, and the temporal information for each patient visit). Hence, early treatment is not always undergone, resulting in severe vision impairment or even blindness. This is one opportunity for the software agent to complement the ophthalmologist.

Age-related macular degeneration (AMD) is the leading cause of visual impairment and severe visual loss in developed countries. Early and intermediate AMD revolve around the accumulation of drusen and alteration of the retinal pigment epithelium (RPE). Later in the course of the disease, depending on the presence or absence of abnormal new vessels at the level of the RPE, the advanced-stage AMD can be either neovascular (also known as wet) or atrophic (also known as dry). It is at this advanced stage that the central vision starts to decline, gradually in the atrophic type and abruptly in the neovascular type. Despite it already being a major public health problem, the global prevalence of AMD is expected to further rise to 288 million by 2040 [

3], heavily increasing the socio-economic burden.

Our task is to forecast the evolution of retinal diseases, to help the ophthalmologist to determine when early treatment is needed in order to prevent significant visual loss.

The acquired data are made up of sequences of visits from multiple patients Age-related Macular Degeneration (AMD) that untreated at the appropriate time, may result in irreversible blindness. Each visit contains OCT B-scans and other retinal features, including visual acuity measurements. Technically, this is a time series forecasting task, where the time series analysis aims to help the ophthalmologist to more quickly understand the factors influencing the disease.

We are looking into two technical challenges. The first concerns how to learn from small-sized time series: there are only short sequences (1–5 visits) of medical observations for each patient. The second is how to reveal the learned model to the human agent, i.e., explainability. Aiming to develop a support system for ophthalmologists to discover trends in retinal diseases, good accuracy might not be enough. Therefore, the outcomes of the machine learning model used should be explainable, meaning that a resembling prediction system as needed must be able to allow the user to understand the decision-making process that led to the result. Besides the confidence, the ophthalmologist could be able to gain more knowledge too. For example, based on the medical condition prognosis and the logics behind the AI model, they would be able to determine whether some parts of the retina have a greater impact on the visual acuity or even whether past treatment had a greater influence.

2. The Need for Supporting the Expert’s Decision

Currently, ophthalmologists do not have sufficient data while analysing the OCT B-scans and previous VAs (visual acuity) to predict the evolution of both the retinal structure and vision in patients suffering from late neovascular AMD in order to personalise their treatment plan.

In their patient management, most clinical practices start with an induction phase comprising monthly intravitreal injections for 3 consecutive months. Following this phase, three different regimens have been developed:

In pro re nata (PRN), patients are followed each month and treatment is administered when there is presence of disease activity;

In treat and extend (T&E), the treatment is administered regardless of disease activity but the intertreatment interval is continuously increased;

In a fixed dosing regimen, the treatment is administered at fixed intervals.

Averaging to a bimonthly regimen, as explored by the VIEW study using Aflibercept [

4], might appear to be a good middle ground between the aforementioned strategies, but this might lead to over-treating some, with costly outcomes, while under-treating others with loss of vision, thus highlighting the need for a personalized approach—a perfect task for machine learning.

In clinical settings, doctors cannot quantify the amount of intraretinal fluid. They look at the cross-sectional B-scans from a qualitative point of view and then at the ETDRS macular grid to see the difference in volume and thickness from the previous examination. Even though there exist multiple clinical trials evaluating anti-VEGF efficacy, the results differ from those obtained in real-world settings [

5] and there is a heterogeneous response between individuals [

6] that necessitates tailored personalized treatment schedules.

In the treatment of nAMD, we aim at improving the patient’s VA, given that it is closely connected to their quality of life [

7] and their ability to maintain an independent lifestyle. If VA could be predicted using machine learning, we would see three different scenarios:

Cases where the VA improves. Having this valuable information and showing it to the patient could increase their willingness to undergo the proposed treatment, their compliance, and their psychological well-being, knowing that a great number of patients fear losing their sight after being diagnosed with exudative AMD [

8]. If the prediction could also foresee the best regimen (the number of doses and interval of administration), this could further improve the outcome.

Cases where the VA remains unchanged. Knowing that, in its natural history, AMD continues to deteriorate, keeping the current VA would still be considered a successful outcome.

Cases where the VA declines. In this scenario, future prediction algorithms should take into account the results for different types of anti-VEGF. We might see different responses depending on the chosen agent and administration regimen, and we could anticipate the ones decreasing the VA. If none of the current clinical agents could improve the patient’s vision, we could guide them to clinical trials testing upcoming new therapies [

9]. Another important aspect in this scenario would be to provide adequate mental health support, as recent evidence shows that if implemented at a time during low-vision rehabilitation, this reduces by half the incidence of depressive disorders among nAMD patients [

10].

3. Preparing the Data

3.1. Dataset Description

The dataset was provided by the Department of Ophthalmology of the "Iuliu Hatieganu" University of Medicine and Pharmacy in Cluj-Napoca. It contained 94 patients with age-related macular degeneration (AMD) There were 161 eyes included with more than one medical examination. Each patient had multiple visits, with each one having OCT scans (with XML files that contain retinal thickness and volume features) and the best-corrected visual acuity.

Figure 1 shows the third visit of a patient, right eye only. The image corresponds to a single slice from the OCT volume. This slice is the central one illustrated by the green arrow in the left column. Such OCT volumes include 26 slices (or B-scans).

There are some technical challenges that need to be addressed. First, AMD does not necessarily have the same evolution in both eyes, so we can consider having independent sequences for each eye. Second, the sequences of visits have different lengths and the visits take place between different time intervals. Third, the data may be inconsistent between the OCT scans, the retinal features generated by the Heidelberg Spectralis OCT, and the visual acuity values.

3.2. Inclusion/Exclusion Criteria

We included patients with late neuvascular AMD who had multiple visits consisting of an initial evaluation and follow-ups. Patients that were in a very advanced stage of the disease or who had surgeries were excluded. We did not include sequences that contained only one visit.

3.3. Selecting the Input Features

The retina is approximately 0.5 mm thick and lines the back of the eye. A circular field of approximately 6 mm around the fovea is considered the centre of the retina, called the macula. Using the Early Treatment Diabetic Retinopathy Study (ETDRS) macular grid within the OCT, we split the retina into 9 zones:

,

,

,

,

,

,

,

,

(see

Figure 2). Retinal direction is indicated by

S (superior),

I (inferior),

N (nasal), and

T (temporal). The diameters of the three circles are: central subfield (1 mm), inner ring (3 mm), and outer ring (6 mm). In OCT B-scans, the retina layers appear in the right part, as shown in

Figure 1.

For each visit, we recorded 3D OCT volumes (with different numbers of OCT B-scans) and fundus scans. For each retinal zone, there were two values: average thickness and volume. As a supplement, there were a central point average thickness value, the minimum and maximum central thickness values, and the total retinal volume. We also had visual acuity measurements for each visit. All of these features were generated by the Spectralis machine from Heidelberg Engineering, except for the visual acuity, which was measured at each visit using Snellen visual acuity charts.

For each medical observation, we had more OCT B-scans, depending on the Spectralis machine’s configuration (FAST, Dense, or Posterior Pole), which determines the number of cross-sectional scans. The machine’s configurations were not uniform, meaning that not all visits had the same number of images, and even so, they might not have been taken in exactly the same position of the eye. This might be an impediment if using the OCT images for prediction.

Moreover, we had an OCT fundus image for each examination and an XML file containing the retinal thickness and volume values in each of the 9 zones from the 3 circle diameters shown in

Figure 2. These concentric circle diameters were at 1, 3, and 6 mm from the centre. In the XML file from Listing 1, we had as well the total volume and average thickness of the retina, the minimum and maximum thickness, and the average thickness in the central point.

| Listing 1. Numerical features of the retina |

| <CentralThickness>0.262</CentralThickness> |

| <MinCentralThickness>0.225</MinCentralThickness> |

| <MaxCentralThickness>0.333</MaxCentralThickness> |

| <TotalVolume>5.664</TotalVolume> |

|

| <Zone> |

| ⌴<Name>C0</Name> |

| ⌴<AvgThickness>0.259</AvgThickness> |

| ⌴<Volume>0.203</Volume> |

| ⌴<ValidPixelPercentage>100</ValidPixelPercentage> |

| </Zone> |

| <Zone> |

| ⌴<Name>N1</Name> |

| ⌴<AvgThickness>0.299</AvgThickness> |

| ⌴<Volume>0.470</Volume> |

| ⌴<ValidPixelPercentage>100</ValidPixelPercentage> |

| </Zone> |

Other relevant numerical data included a variable specifying whether treatment was received or not at the visit, the date of the visit, and the visual acuity value. This visual acuity is our target variable.

3.4. The Target Variable—Visual Acuity

The visual function is measured using an ophthalmological examination called the visual acuity test. This test determines the smallest letters that a person can read on a chart, called the Snellen chart. The patient’s visual acuity (VA) is represented by a Snellen (Imperial) fraction based on the following formula: , where D is the distance at which a healthy person can discern the eye chart. Since LogMAR and decimal formats were mostly used, we converted the VA values into the decimal format, since it is the easiest to use and process. In most cases, this implied simply a division, while in other cases, we did not have exact values. Caused by inaccurate measurements, in some cases, we only had a range interval for the visual acuity. For these cases, we used the mean visual acuity value.

The missing visual acuity values were replaced with values that did not influence the sequence for the patient. Inconsistencies between the visual acuity data and OCT data were solved by keeping only data that were common to both of these, or keeping both pieces of data and replacing the missing values.

3.5. Normalising the Data

Nonetheless, the other retinal features ranged within different intervals, requiring normalisation, which is an important step for most machine learning algorithms. The min–max normalisation method was used because there were not many outliers and it also does not change the distribution of the data.

3.6. Handling the Irregular Time Intervals

An irregular time series is a temporal sequence in which the time intervals between the elements of the sequence are not equal. Similarly, in our data, the medical observations took place at different time intervals for each patient. This could be an issue in the task of predicting the future visual acuity for a patient.

Let the sequence of visits be , , …, and the task to predict the visual acuity for the visit be . We consider two approaches to predict the visual acuity for the next medical examination of a patient p as a function of all past n visits:

Time series resampling to change visit frequency such that they happen at equal time intervals: . After resampling, the missing values should be filled by interpolating the previous and following visits.

Consider the sequences of visits as they occur in reality and including the timestamps of the visits as features, as well as the timestamp with which to predict the next visual acuity:

Here, is the feature vector for visit i of patient p containing m features , is the visual acuity to be predicted at visit n for patient p.

3.7. Handling the Missing Values

For both previously mentioned cases, the input data can have missing values. Among the most commonly used methods for missing data replacement is linear interpolation, which does not have a significant influence on the dataset. To calculate feature

y for a visit at time

t, there must exist two visits at times

and

, with

, and both visits have valid (non-missing) features

and

.

This formula can be applied not only on irregular time series (having the timestamps as features), but also on resampled data. Other, more complex methods suggested in the literature include continuous time stochastic models such as GARCH or Kalman filters.

3.8. Removing Noise from OCT Scans

Given that some of the OCT images had salt and pepper noise, a median filter was applied. Using a median filter with a kernel of a specific size involves blurring the image by applying the kernel sequentially on it, and each time replacing the pixel from the centre of the kernel with the mean value of all the other pixels in the kernel. More complex deep learning methods for noise reduction consist of different types of CNNs.

3.9. Augmentation of Data

Patients did not have the same number of visits, resulting in sequences of different sizes. On the one hand, some clustering and classification methods to determine the evolution of AMD can be used with algorithms suitable for series of unequal length, such as Dynamic Time Warping. On the other hand, for regression techniques, equally sized sequences would be required.

In the dataset, the mean sequence length was 4; thus, it would be a good choice to generate series with at most 4 visits. For example, if a patient has the visits

,

,

,

,

and we want to generate a series of 3 visits, the initial sequence can be divided, thus obtaining three series:

,

,

,

,

,

, and

,

,

. The Sliding Window Algorithm is formalised in Algorithm 1.

| Algorithm 1. Sliding Window Algorithm for time series segmentation. |

Input: ts—multivariate time series of form , … k—the desired size for newly generated series Output: generated_ts—vector of all generated series - 1:

functionSlidingWindow(ts, k) - 2:

- 3:

- 4:

if then - 5:

return - 6:

else if then - 7:

- 8:

return - 9:

end if - 10:

- 11:

while do - 12:

- 13:

- 14:

while do - 15:

- 16:

- 17:

end while - 18:

- 19:

- 20:

end while - 21:

return - 22:

end function

|

3.10. Unsupervised OCT Feature Extraction

Given that we had grayscale images with size 512 × 496, it meant 253,952 pixels (features) for each image. To reduce the features, one can opt for: (i) resizing the images (e.g., to 256 × 256); (ii) extracting the region of interest (ROI); or (iii) applying one of the many algorithms for feature extraction. We opted to apply two algorithms: principal component analysis (PCA) and a convolutional autoencoder.

First, focusing on the statistical variance of the data, PCA is able to determine data components by finding their eigenvectors. The aim is to compute the eigenvectors by maximising the covariance between the features. Consequently, we could use PCA to choose the number of components that are able to capture the maximum amounts of variance from our images. To estimate this, the cumulative explained variance depending on the number of components needed to be determined. The explained variance ratio is represented by the fraction of variance of each component with respect to the total variance of all individual components. Thus, through the cumulative explained variance, we could determine the needed number of components for a specific percentage of variability, preferably the number of components that can describe the overall image set.

In

Figure 3, the curve quantifies how much of the total variance in approximately 12,000 dimensions is contained within the first

n components. Here, 99% of the images can be represented using 12,000 components, but 12,000 is still too large for the number of features. Being able to represent 80% of the data would also be a good percentage;

Figure 4 shows that, in this case, we would need slightly more than 250 components.

Second, autoencoders are a type of artificial neural network that have two major components: an encoder and a decoder. The encoder is used to generate a latent space representation as an embedding of the input, and the decoder learns to reconstruct the input based on the code.

An explainable method would be to make use of a convolutional autoencoder (CAE), which applies multiple convolutions on the images in the decoder and then learns to reconstruct them. Several studies [

11,

12] have shown that CAEs can obtain better results in medical imaging, focusing more on the relevant features, through a more natural decomposition in the latent space. It was also shown that, regarding choosing the code size of an autoencoder, the rules commonly used in PCA can also be applied to autoencoders.

Thereby, a convolutional autoencoder with the latent code size of 256 would be a good choice. The encoder performs multiple convolutions on the images, returning a vector of length 256, while the decoder learns to reconstruct the OCT input images during the training, updating all the weights.

Autoencoders were also used in the pipeline from [

13], firstly to encode the cross-sectional OCT scans and then to encode the obtained representation. Even though such a method could improve our results, the approach would not be exactly suitable because of our data inconsistencies. Each patient visit had a different number of OCT scans, depending on the mode used by the Spectralis system. Some research papers, however, try to predict disease evolution from OCT fundus scans. Using only the OCT fundus scans could be more manageable.

3.11. Selecting Numerical Features

Based on the existing research, some retinal layers and zones may have a greater influence on the visual function of people with AMD than others. However, we only had the numerical features of the retinal zones, while most studies use features specific to the retinal layers. Despite this, some zones have been shown to be more influential with respect to the visual function, i.e., zones such as the fovea or the closest ones to the fovea, so domain knowledge can be also used for feature selection. The current focus was to determine the zones that are most correlated to the visual acuity evolution, from a statistical point of view. We used two approaches, detailed below.

3.11.1. Univariate Analysis

In order to select which of these to use first of all, an analysis concerning the relationship between the retinal features and the visual acuity was performed. The correlation between series of features can be determined through correlation coefficients such as Pearson or Spearman. Both of these coefficient values can range between −1 and 1, values near 0 revealing no correlation or a weak correlation, while values closer to the endpoints of this interval suggest a stronger correlation. The difference between the Pearson coefficient (

r) and Spearman’s (

) is that the Pearson coefficient evaluates a linear correlation, while Spearman’s evaluates only a monotonic correlation. These coefficients were computed for two features

x and

y using Equations (

2) and (

3).

where

n is the number of observations for the features and

d is the difference between the two ranks of each observation. Based on Equations (

2) and (

3), we could determine the retinal attributes that had a greater effect on the change in the visual acuity. The results in

Table 1 show that even if there is not any strong correlation, the zones most correlated with the visual acuity are

(the fovea) and

(inner nasal zone). To investigate whether these features together had a higher impact, a multivariate analysis was required.

3.11.2. Multivariate Analysis

Besides using domain knowledge, there are several major ways to perform multivariate feature selection, including: (i) using a filter, (ii) using a wrapper, and (iii) embedded methods [

14].

First, the filter methods are used in the preprocessing pipeline, and they involve choosing the best features based on a specific ranking. For instance, the previous correlation coefficients can be applied, afterwards selecting the most strongly correlated values among them. The idea would be to choose more strongly correlated features, but, in our case, we did not have very strong correlations (see

Table 1). Another filter method would be to not use the variables that have a variance of 0, which denotes that they do not change in time. Such features can even worsen our models. However, having multiple time series in this case, the variance of the feature must be 0 for all the series so as to remove it, which is highly improbable.

Second, the wrapper methods use greedy algorithms to select the features that obtain the best results against a specific learning algorithm. One disadvantage could be that these methods are computationally expensive. An example of such a method is the Recursive Feature Elimination algorithm (RFE). It recursively removes features and retrains the chosen model, until the best performance is obtained. Along with the best performance, the method is interpretable too because it chooses the features based on the feature importance weight’s computation. The Recursive Feature Elimination technique with cross-validation was applied using the implementation from the scikit-learn library. The model used was a gradient boosted machine. The feature importance obtained for both the original data and for the resampled time series can be visualized in

Figure 5.

Third, embedded methods use learning algorithms that have their own feature selection methods built in. Some examples are lasso regularization in linear regression and random forest regression. These are machine learning algorithms typically used for regression, furthermore quantifying feature importance. Thus, they can be used for feature selection and even for post-hoc explainability after predictions.

We used the lasso regression implementation from scikit-learn. Cross-validation was applied when training the estimators, in order to obtain the best score. The features having weights equal to 0 were removed.

4. Running Experiments

4.1. Visual Acuity Forecasting

For predicting future visual acuities, we used multiple regression algorithms. Besides the classical linear regression, the focus was placed more on deep neural networks and ensembles, due to their greater performance. Gradient boosting, random forest, and extremely randomised trees regression algorithms were experimented with K-fold cross-validation, in order to obtain a more accurate evaluation of the algorithm’s performance.

We introduced a bidirectional wrapper over the recurrent layer, making the network a bidirectional recurrent neural network. Bidirectional RNNs are useful, especially in time series, since they are able to learn patterns from temporal sequences in both directions, thus helping in the prediction process. Moreover, the custom model can use any of the three previously mentioned RNN units. The proposed architecture is shown in

Figure 6.

The data to be fed to the network consist of the augmented time series 〈no_series, timesteps, no_features〉. Then, depending on the chosen type of network (based on the nn_type variable), the inputs go through the chosen recurrent layer. The LSTM layer uses an L1 regulariser with to regularise the input feature weights. As a regularisation method, and also to avoid overfitting, a dropout layer is used. Finally, the dense layer with one unit gives the predicted visual acuity. The sigmoid activation function is applied here in order to restrict the interval range of the visual acuity outcome, to be between 0 and 1.

The neural network models are trained using early stopping, meaning that there is not a fixed number of epochs, and the best-performing model is always saved. The loss is computed using Mean Squared Error (MSE), but other metrics are used as well: MAE, RMSE, RMSPE, or R.

4.2. Experimental Setup

The data consist of irregular time series of medical examinations, with varying lengths, having as variables: (i) the target—visual acuity values; (ii) the features—18 numerical OCT variables; (iii) the OCT images.

To obtain the image embeddings, a deep unsupervised dimensionality reduction method was used, specifically a convolutional autoencoder. All 14,337 OCT images were split into three subsets for training (60%), validation (20%), and testing (20%).

For clustering, we were able to use the original sequences, disregarding their irregularity due to the Dynamic Time Warping metric.

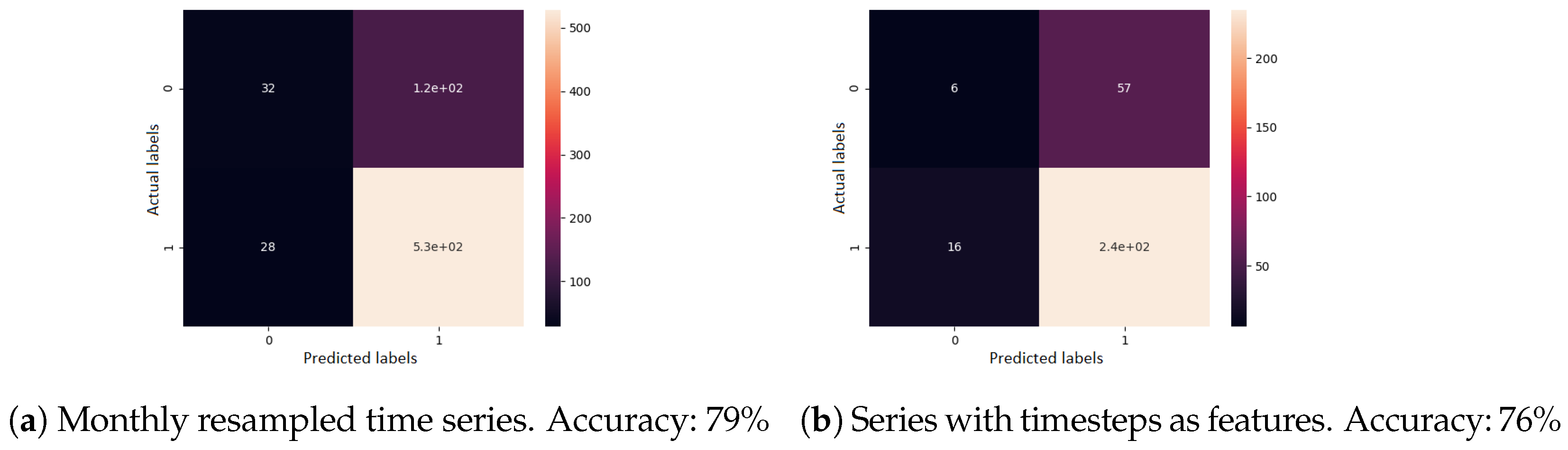

For time series classification and forecasting, the original 115 time series were preprocessed and augmented. Two main approaches were considered. First, the time series were resampled using interpolation, such that all time intervals were equal to 1 month. Second, we simply used the time interval values as features, represented as the number of months from the first visit in each sequence. The series were then segmented in order to obtain more series of the same size. Hence, time series of size 2, 3, and 4 were generated (see

Table 2).

After augmentation, the data were also split into 3 parts: training (60%), validation (30%), and testing (10%) data. Only 10% of the data were kept for testing, given that we had a small dataset. This applied only to the case of deep neural networks. For shallow machine learning algorithms, 10-fold cross validation was performed in all cases, having only train–test splits.

7. Conclusions

We targeted the progression of age-related macular degeneration by means of machine learning technologies, aiming at helping the ophthalmologist to determine when early treatment is needed. We collected a dataset containing 94 patients with AMD and there were 161 eyes included with more than one medical examination. We applied linear regression, gradient boosting, random forest and extremely randomised trees, a bidirectional recurrent neural network, an LSTM network, and a GRU network to handle technical challenges such as learning from small time series, handling different time intervals between visits, or learning from different numbers of visits for each patient (1–5 visits). For predicting the visual acuity, we conducted several experiments with different features. First, by considering only the previously measured visual acuity, the best accuracy of 0.96 was obtained based on a linear regression. Second, by considering numerical OCT features such as previous thickness and volume values in all retinal zones, the LSTM network reached the highest score (). Third, by considering the fundus scan images represented as embeddings obtained from the convolutional autoencoder, the accuracy was increased for all algorithms. The best forecasting results for visual acuity depend on the number of visits and features used for predictions, i.e., 0.99 for LSTM based on three visits (monthly resampled series) based on numerical OCT values, fundus images, and previous visual acuities. Shallow machine learning algorithms performed surprisingly well in this case, even better than more advanced methods such as deep neural networks.