Abstract

In December 2019, the novel coronavirus disease 2019 (COVID-19) emerged. Because it is highly contagious and no effective treatment was available, the only solution was to detect and isolate infected patients to break the chain of infection. The shortage of test kits and other drawbacks of laboratory tests motivated researchers to build automated diagnosis systems based on chest X-rays (CXRs) and CT scans. The works reviewed in this study couple AI with radiological image processing of raw chest X-ray and CT images to train various CNN models. They rely on transfer learning and on numerous types of binary and multi-class classification. The models are trained and validated on several datasets, whose attributes are also discussed. The results of the various algorithms are then compared using performance metrics such as accuracy, F1 score, and AUC. The major challenges in this research domain are the limited availability of COVID-19 image data and achieving high accuracy in predicting patient severity with deep learning, compared with well-established COVID-19 detection methods such as PCR tests. Automated detection systems based on CXR are reliable enough to help radiologists with initial screening and the immediate diagnosis of infected individuals. They are preferred because of their low cost, wide availability, and fast results.

1. Introduction

Coronavirus disease (COVID-19), caused by SARS-CoV-2, is one of the biggest challenges of the 21st century. The entire world is battling this virus, which had infected 182,302,122 people and taken the lives of 3,947,958 individuals worldwide as of 29 June 2021 [1]. Its origin remains undetermined. The WHO (World Health Organization) declared it a pandemic on 11 March 2020 because of its rapid spread across China and other countries within a few months.

It is a respiratory disease that is highly contagious across all age groups. Fever, sore throat, headache, cough, fatigue, and body pain are among its known symptoms. The period between infection and the onset of symptoms may range from 2 to 14 days. It spreads via airborne droplets, and infection occurs through direct or indirect contact with infected individuals. Although vaccines are now being developed and distributed, the challenge is still ongoing for countries with large populations such as India, where it would take years to give every individual two doses. Until then, social distancing and the isolation of infected patients are the main preventive measures for breaking the chain of infection.

The most widely used diagnostic method is the RT-PCR (Reverse Transcription-Polymerase Chain Reaction) test. These test kits are expensive and take 6 to 8 h to process a single sample. They also have high false-negative and false-positive rates; the false negatives in particular stem from their low sensitivity. Therefore, chest radiography, comprising chest X-rays (CXRs) and computed tomography (CT) scans, offers a possible complementary solution for detecting COVID-19 in its early stages. The wide availability of X-ray machines and CT rooms already installed in hospitals is an added advantage. In this study, CXRs were preferred over CT scans to avoid the need for CT room disinfection. Moreover, X-rays involve a lower dose of ionizing radiation and are cheaper than CT scans. Studies show that COVID-19 leaves radiological signatures that can be identified in chest X-rays. However, these signatures can only be interpreted and analyzed by expert radiologists, which increases the chance of error and delays COVID-19 detection. Hence, there is a need for an automated diagnosis system that processes CXR and CT images and produces reliable COVID-19 detection results.

Clinical reports suggest that ultrasound and chest CT perform better at excluding COVID-19 infection than at differentiating it from other respiratory diseases. Thoracic CT imaging is characterized by high specificity and low detection sensitivity for asymptomatic individuals.

Indeed, rather than relying on conventional quantitative CT values, COVID-19 can be distinguished from non-COVID-19 cases using radiomic features, which outperform classical quantitative CT in terms of precision, specificity, and sensitivity [2].

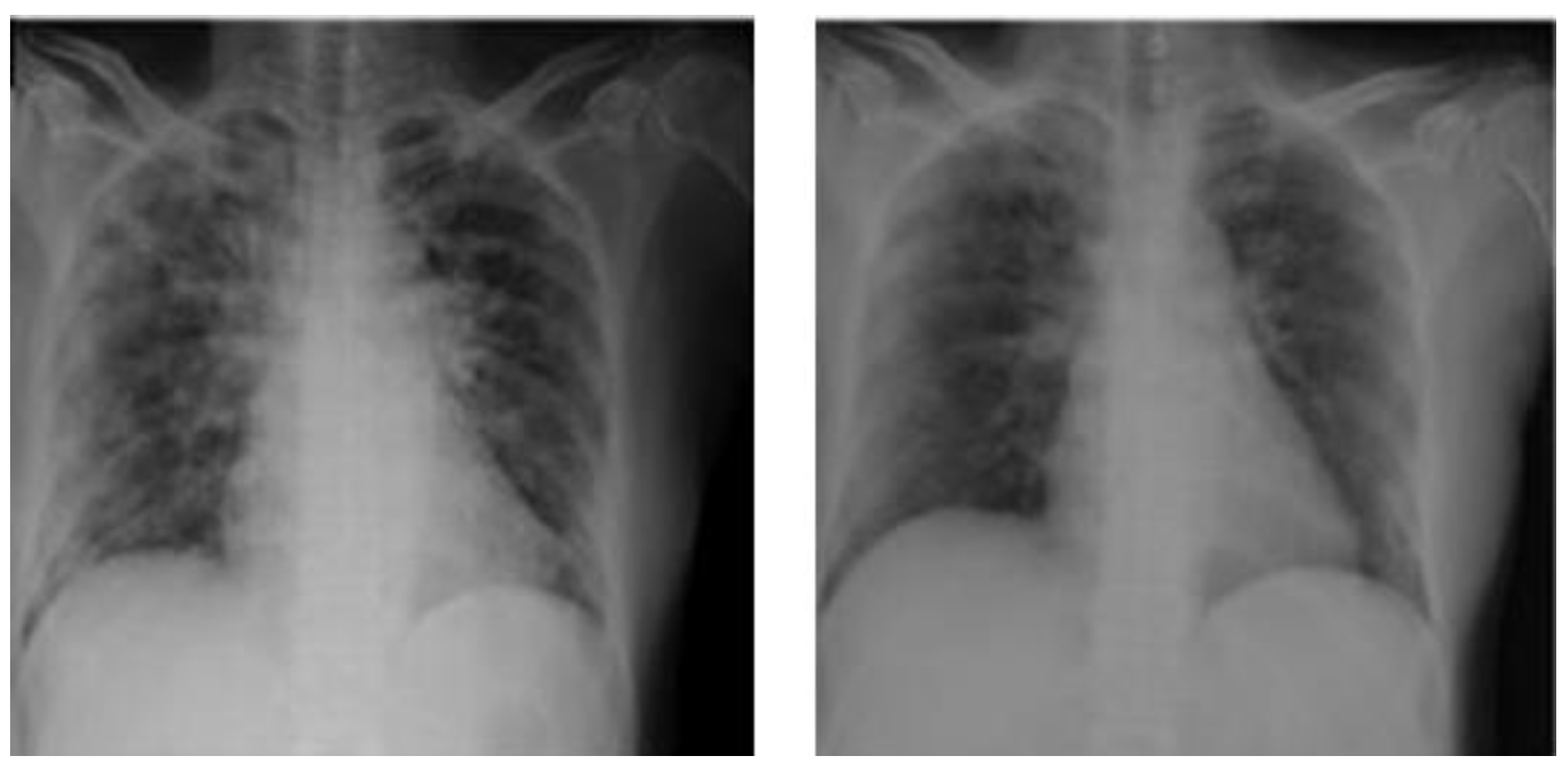

In chest radiography, X-rays are transmitted through the patient’s chest and captured by radiation detectors, from which medical images are reconstructed. Figure 1 shows the CXR findings of an infected chest. Such images are then examined and interpreted by expert radiologists. This manual process is prone to error and therefore lacks high sensitivity. Indeed, according to [3], the accuracy of radiologists’ reports when interpreting CXRs is not always perfect because of unavoidable errors, some systemic and some human. Artificial intelligence has proved valuable here because of its high accuracy and prediction rates. In medical imaging, AI (artificial intelligence) is used to analyze image data and group similar patterns based on their characteristic features. Recent studies have shown that it can be used to detect COVID-19 and similar lung diseases such as pneumonia.

Figure 1.

Ground glass opacities in CXR findings.

The entire medical community is focused on diagnosis, effective treatment, and containment strategies. This study focuses on advancing technological tools and solutions through various deep learning methodologies. Deep learning is advancing very rapidly and can solve a wide variety of problems across many sectors. In the healthcare sector, it is used to train CNN (convolutional neural network)-based models for the detection of COVID-19.

This study focuses on the detection of COVID-19 by applying deep learning techniques to CXR images. The remainder of the paper is organized as follows: Section 2 reviews recent related works. Section 3 describes the materials and methodologies used by researchers to produce classification results. Section 4 discusses the results using various performance metrics. Finally, Section 5 concludes and summarizes the studies.

2. Literature Review

Because the traditional PCR method takes six to eight hours to identify COVID-19 infection, recent studies proposing image classifier systems have aimed to provide medical professionals with an alternative rapid and low-cost method for identifying COVID-19 and other pneumonia infections.

CT scanning is a technique applied to symptomatic patients. Its use depends on determining how long after symptom onset CT or PCR should be performed. Indeed, if the symptoms indicate an infection while the genetic test for the coronavirus is negative, CT scanning can be used as an additional procedure [4].

According to [4], the sensitivity of RT-PCR is lower than that of CT. However, this sensitivity depends strongly on the type of sample material and the method used to carry out the genetic tests. Hence, under certain conditions, CT can properly be included in the COVID-19 diagnostic guidelines.

A CT scan is an imaging diagnostic procedure that combines X-rays and computer processing to produce cross-sectional images. Although it is more expensive than X-ray imaging, CT scanning is more accurate and can provide more detail in some cases.

Table 1 illustrates the relevant recent studies using deep learning models to detect COVID-19.

Table 1.

Recent studies proposing learning models for classifying images to detect COVID-19.

In what follows, we further investigate the studies mentioned in Table 1 to illustrate their advantages and drawbacks:

In [5], the authors use five transfer learning models to detect COVID-19 in lung CT scans. The study assesses the use of standard and contrast adaptive histogram equalization on lung scans. However, it does not demonstrate the efficiency and impact of these histogram equalization methods on the different learning models.
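Global histogram equalization, the standard variant assessed in [5], remaps grey levels through the image’s cumulative histogram so that intensities spread over the full dynamic range. The following is a minimal pure-Python sketch, assuming 8-bit greyscale pixels stored as a flat list; `equalize_histogram` is a hypothetical helper name, and the adaptive (tile-based) variant is not shown.

```python
from collections import Counter


def equalize_histogram(pixels, levels=256):
    """Global histogram equalization of a flat list of integer grey levels."""
    n = len(pixels)
    counts = Counter(pixels)
    # Cumulative distribution function over the grey levels that occur.
    cdf = {}
    running = 0
    for level in sorted(counts):
        running += counts[level]
        cdf[level] = running
    cdf_min = cdf[min(cdf)]
    if n == cdf_min:  # constant image: nothing to stretch
        return list(pixels)
    # Classic mapping: round((cdf(v) - cdf_min) / (n - cdf_min) * (levels - 1)).
    lut = {v: round((c - cdf_min) / (n - cdf_min) * (levels - 1)) for v, c in cdf.items()}
    return [lut[p] for p in pixels]
```

For example, `equalize_histogram([50, 50, 100, 150])` stretches the three grey levels to 0, 128, and 255.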

In [6], the authors build an X-ray image dataset and propose a semi-automated pre-processing model for pre-training deep learning models to detect COVID-19 and other diseases with known features. This model reduces noise in the X-ray images. The experimental tests indicate that even simple network models such as VGG19 become more accurate (by 83%).

The study in [7] introduces a 2D convolution technique to classify CXR lung images for COVID-19 detection. The dataset used is composed of 224 normal and COVID-19 images. Although the results show good computational speed, they are obtained on a small dataset with a limited number of features.

In [8], a ResNet-50 transfer learning model is used to classify COVID-19 CXR images. The high classification accuracy obtained (99.5%) suggests that the model could be used in clinical practice. However, this accuracy is obtained on a small dataset; more successful deep learning models could be built with larger datasets.

In [9], a concatenation of features extracted from two transfer learning models is used to detect COVID-19 from X-rays, CT scans, and two biomarkers. While achieving the same results, the proposed concatenation requires less computational time than the VGG16-ResNet50 concatenation. However, it has a high number of parameters. In addition, it can correctly predict positive and negative cases from only two positive or negative images, respectively.

In [10], a two-dense-layer model is proposed to detect COVID-19 from CXR images. Batch normalization is introduced in the second layer to avoid overfitting. The proposed dataset contains three image types: normal, pneumonia, and COVID-19 pneumonia. This dataset is used to assess the efficiency of the proposed model against the Xception, Inception V3, and ResNet50 models. The proposed model has lower loss and higher accuracy than the other models on both the validation and training data. However, one of its drawbacks is the considerable computational time needed to extract important features. Moreover, adding layers to the model would further increase the training time.

In [11], the authors collect a set of X-ray lung images to evaluate the severity of COVID-19 pneumonia. Bounding boxes are used to identify the diseased area. A Mask R-CNN could be added to the model to improve the accuracy of diseased-area detection.

The study in [12] aims to detect COVID-19 from a dataset of 2727 open-source chest radiography images using different pre-trained convolutional neural networks as learning models. The VGG-16 model achieves a better F1 score and produces fewer false positives and false negatives than DenseNet and VGG-19. However, the dataset should contain more varied images from different geographical regions, races, and age groups. Moreover, more models should be tested to obtain a comprehensive comparative analysis.

In [13], a CSEN recognition model, which combines the advantages of representation-based techniques and deep learning models, is used to detect COVID-19 pneumonia. Using training samples and a dictionary, the CSEN establishes a mapping between the query samples and their sparse support coefficients. The proposed CSEN-based system is computationally efficient in terms of memory and speed, but its performance degrades rapidly when data are scarce.

In [14], a MixMatch-based semi-supervised learning system is used to identify positive COVID-19 cases. The advantage of such a semi-supervised system is that it exploits unlabeled data, which are more readily available.

In [22], four pre-trained models are used to detect COVID-19 from a dataset composed of 5000 non-COVID X-ray images and 200 COVID X-ray images. However, a larger set of labeled COVID-19 images is needed to accurately estimate the performance of the tested models.

The authors of [23] address the classification and recognition of COVID-19 images using different pre-trained CNN models. The study concludes that ResNet-34 outperforms the other networks. However, it relies on binary classification (normal or infected by COVID-19) and does not address multi-class classification, which could make detection more accurate.

The study in [15] aims to identify COVID-19 using a feature fusion deep learning model. K-fold cross-validation tests confirm that this model is more accurate than other classification methods (CNN, SVM, KNN, and ANN). The method combines the features extracted by CNNs with those extracted by histograms of oriented gradients (HOG). The study concludes that choosing appropriate classifiers and feature selection methods is necessary for COVID-19 detection from X-ray images.
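In K-fold cross-validation, each sample is held out for testing exactly once while the model is trained on the remaining K − 1 folds. A minimal sketch of the index splitting, assuming contiguous folds without shuffling or class stratification (a real CXR study would normally shuffle and stratify); `k_fold_indices` is a hypothetical helper name.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        # Every index outside the current test fold is used for training.
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size
```

With 10 samples and K = 3, the test folds have 4, 3, and 3 samples, and together they cover every sample exactly once.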

In [16], the authors use a local dataset of X-ray and CT images. The introduced method, based on a deep learning model, achieves good results, but the dataset used is relatively small.

The authors of [17] propose a three-class (normal, pneumonia, and COVID-19) model to detect and classify X-ray images. It uses a transfer learning InceptionV3 model, with additional layers added to enhance it. The experimental tests indicate high performance for all three classes.

In [18], the authors propose a deep multi-layer neural network system named nCOVnet to detect the presence of COVID-19 from X-ray images. Despite the reported high accuracy of the introduced system, the latter relies on a limited training set.

The study in [19] introduces an (L, 2) transfer learning system to classify COVID-19 CT images, evaluated with a micro-averaged F1 score. Although the results show that the proposed system is efficient at detecting COVID-19 images, it has some drawbacks: data from different sources, such as CT data combined with CXR and historical data, are not easily handled. In addition, the dataset used is not clinically verified and is limited in categories.

In [20], a three-class deep LSTM system is introduced to detect COVID-19 from MCWS images. The dataset used is public, and comparing the introduced system with other learning methods gives excellent results. According to the authors, the accuracy can reach 100%.

The study in [21] proposes a DNN X-ray image classifier. Four classes (COVID-19, bacterial, viral, and healthy) and seven scenarios are considered. The results are promising compared with other deep transfer learning systems such as MobileNet, VGG16, and InceptionV4, and the model is robust to image noise.

To sum up, the main drawbacks of the previous investigations and recent studies regarding the classification and detection of COVID-19 from images are:

- The absence of scalability evaluation, which is important in estimating the model performance under real-world operation settings.

- Complexity analyses and statistical tests are not provided to assess the robustness of the results.

- The small sizes of datasets and non-variability of data in datasets.

- The absence of the consideration of multi-class classification: only binary (normal vs. infected) classifications are taken into consideration in most studies.

- The absence of hybridizations of deep learning models with other techniques such as evolutionary multi-objective optimization for more accuracy in detection.

Further discussion of learning models for COVID-19 detection from images can be found in recent surveys [24,25].

3. Materials and Methods

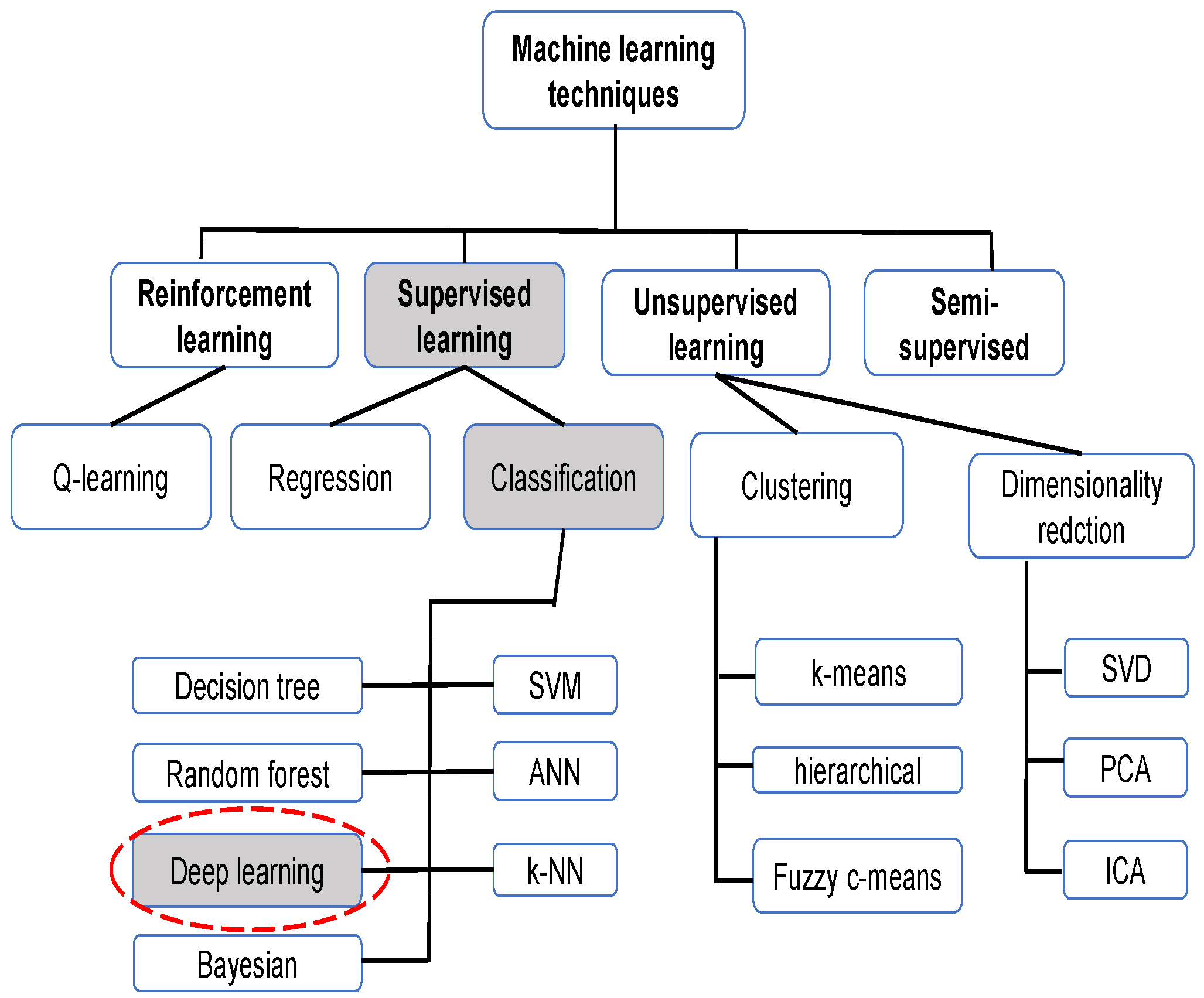

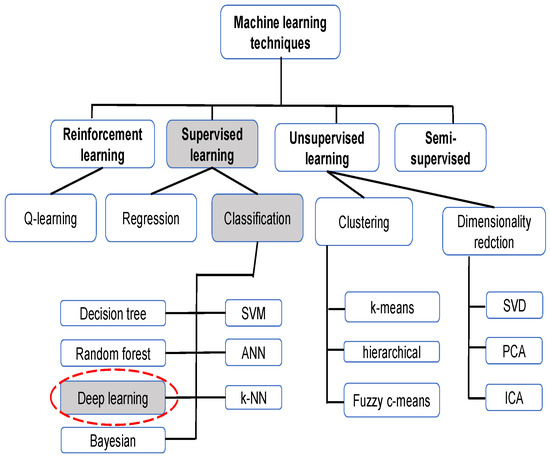

Machine learning, like optimization and other artificial intelligence methods, has proven very useful in solving complex real-world problems, whether in engineering [26,27,28,29] or in medicine [30,31], as in the case of COVID-19 detection. Figure 2 illustrates the main machine learning techniques used for CXRs.

Figure 2.

Machine learning techniques for CXR.

To understand and study the relationship between CXR imaging and deep learning frameworks for COVID-19 diagnosis, this paper reviews various publications and research articles published from March 2020 onwards. The sources used include ScienceDirect, Google Scholar, arXiv, IEEE, Springer, and ACM, and the search keywords included “coronaviruses”, “COVID-19 Diagnosis”, “Deep Learning”, “transfer learning”, “Chest Radiography”, and “CNN”. While this study mostly focuses on diagnosis using CXR images, some overlapping techniques for diagnosis based on chest CT images were also considered. Most of the research works used deep transfer learning from models pre-trained on the ImageNet dataset. The CNN architectures were trained with different hyperparameter settings. The following subsections provide an overview of the state-of-the-art approaches and datasets covered in this survey.

3.1. Types of Classification

The COVID-19 detection task was carried out by classifying the X-ray images using either binary classification (two classes) or multi-class classification (three or four classes). Each class was labeled as one of the following: “COVID-19”, “healthy”, “no-findings”, “viral pneumonia”, or “bacterial pneumonia”. In binary classification, one class was “COVID-19”, and the other class, “non-COVID”, could be any of the remaining four. The three-class labels were “COVID-19”, “pneumonia”, and “no-findings”. Most studies used binary or three-class classification. However, some studies proposed four classes: “COVID-19”, “viral pneumonia”, “bacterial pneumonia”, and “no-findings or healthy”. Formally, binary classification dichotomizes a practical problem by using a classification rule to divide the elements of a set into two classes (groups); when there are more than two classes, the process is called multi-class classification [32]. Binary classifiers can also be combined in several ways to handle multiple classes [33].
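Both directions described above are straightforward to express in code: collapsing multi-class labels into the binary “COVID-19” vs. “non-COVID” task, and building a multi-class decision from binary classifiers. The sketch below uses the one-vs-rest strategy (one of several, alongside one-vs-one) and assumes each class’s binary classifier already outputs a confidence score; the function names and the scores in the usage example are hypothetical.

```python
def to_binary_labels(labels, positive="COVID-19"):
    """Collapse multi-class labels into the binary COVID-19 vs. non-COVID task."""
    return [positive if y == positive else "non-COVID" for y in labels]


def one_vs_rest_predict(scores_per_class):
    """Pick the class whose 'class vs. rest' binary classifier is most confident.

    scores_per_class maps each label to the score of its own binary classifier.
    """
    return max(scores_per_class, key=scores_per_class.get)
```

For instance, scores of 0.7 for “COVID-19”, 0.2 for “viral pneumonia”, and 0.4 for “healthy” yield the label “COVID-19”.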

One limitation of binary classification is that only two outcomes are possible: “yes” or “no”. Binary classification can also misinterpret a patient’s infection status, leading to false negatives and false positives. A false negative occurs when an infected person is categorized as healthy; a false positive occurs when a healthy person is categorized as infected.
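The false negatives and false positives defined above, together with the correct predictions, form a binary confusion matrix from which the metrics used throughout this review follow. A minimal sketch, treating “COVID-19” as the positive class; `binary_metrics` is a hypothetical helper, and the counts in the usage note are made up rather than taken from any reviewed study.

```python
def binary_metrics(tp, fp, tn, fn):
    """Screening metrics from confusion-matrix counts (COVID-19 = positive class)."""
    sensitivity = tp / (tp + fn)   # recall: infected patients correctly flagged
    specificity = tn / (tn + fp)   # healthy patients correctly cleared
    precision = tp / (tp + fp)     # flagged patients who are truly infected
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {"sensitivity": sensitivity, "specificity": specificity,
            "precision": precision, "accuracy": accuracy, "f1": f1}
```

With 90 true positives, 20 false positives, 80 true negatives, and 10 false negatives, this gives a sensitivity of 0.9, a specificity of 0.8, an accuracy of 0.85, and an F1 score of about 0.857.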

A key issue in multi-class classification is imbalanced datasets, in which the classes are unequally represented. This unequal distribution can lower the performance of conventional machine learning techniques in predicting minority classes. Indeed, multi-class problems with imbalanced datasets are more challenging than binary problems with imbalanced datasets.
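One common remedy for class imbalance (not necessarily the one used by the reviewed studies; oversampling and augmentation are alternatives) is to weight each class’s loss inversely to its frequency. A minimal sketch, assuming the `n_samples / (n_classes * count)` heuristic that scikit-learn applies for its `"balanced"` class-weight option; `inverse_frequency_weights` is a hypothetical name, and the usage example reuses the 3000 normal and 100 COVID-positive split reported for [22].

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Per-class loss weights: n_samples / (n_classes * count(class))."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    # Rare classes get weights above 1, frequent classes below 1.
    return {cls: n / (k * c) for cls, c in counts.items()}
```

For 3000 normal and 100 COVID-19 images, each COVID-19 sample is weighted 15.5 and each normal sample about 0.52, so both classes contribute equally to the total loss.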

Most existing studies address the binary classification of COVID-19 [34,35,36], and very few propose multi-class classification [37,38]. The performance of multi-class techniques therefore still needs to be improved.

3.2. Deep Learning Architectures

Deep learning architectures can be summarized as follows. Artificial neural networks, one family of deep learning techniques, require massive amounts of data for computation and learn automatically from data instances. VGG is one of the classic convolutional neural network architectures. To increase network depth, VGG, for example, uses a filter analyzer, a fully connected layer, and a set of shared layers.

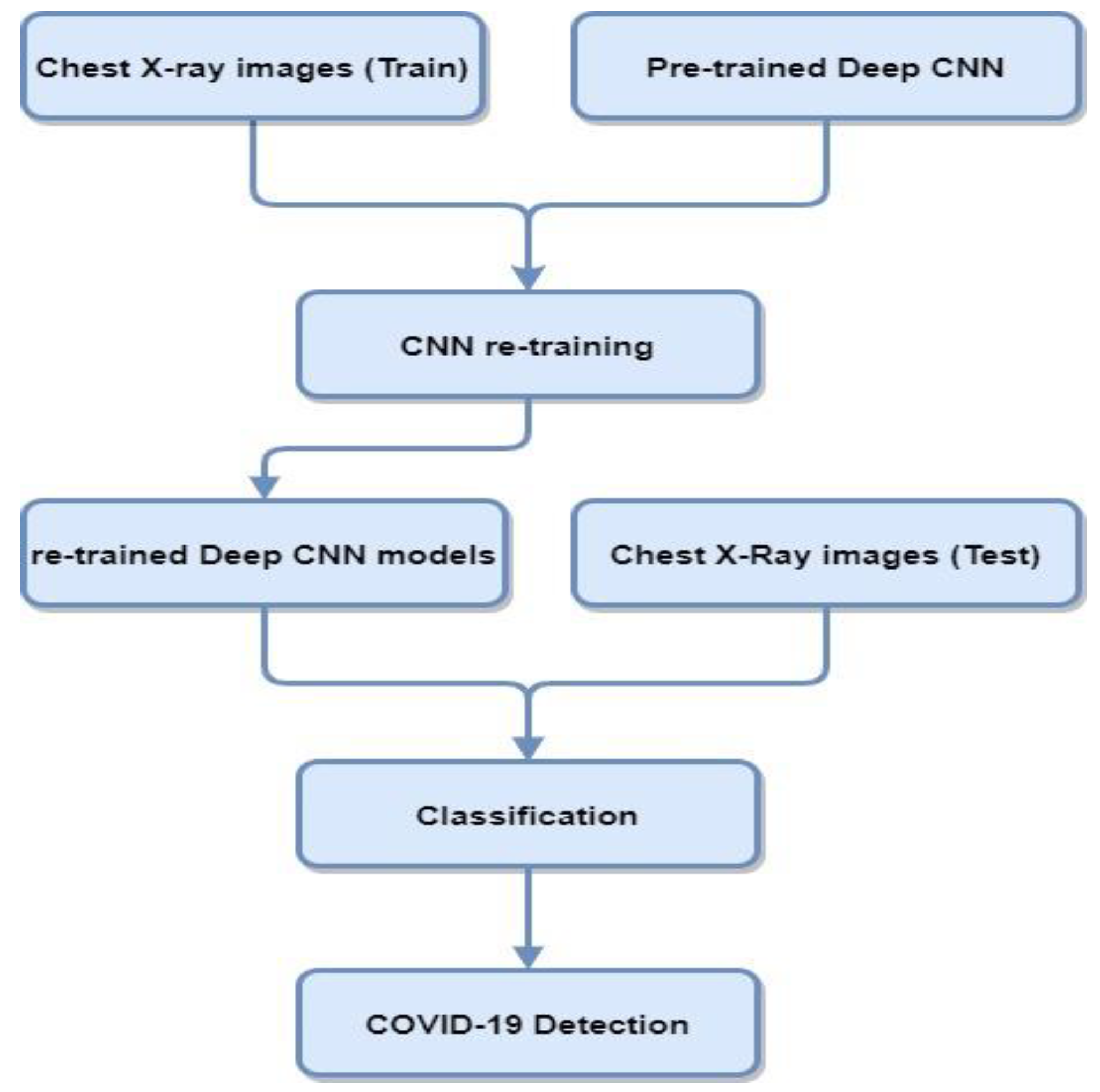

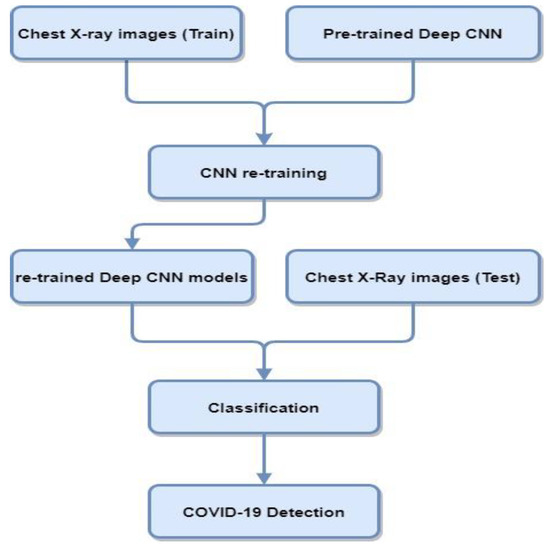

Deep transfer learning and CNNs are widely used in medical imaging applications. Transfer learning is used when the training set is too small to make training a model from scratch feasible. In transfer learning, pre-trained networks are fine-tuned; for COVID-19 diagnosis, such networks outperformed networks trained from scratch. Figure 3 shows a block diagram of the steps involved in classifying COVID-19 cases from CXR images using transfer learning with deep CNN architectures.

Figure 3.

Block diagram of deep CNN architectures using CXR for COVID-19 detection.

CNN architectures consist of convolution and pooling layers. They perform well in computer vision classification tasks and hence also in assessing medical images. The following subsections review various CNN architectures and the methodologies used to identify COVID-19 patients from raw X-ray images.

3.2.1. VGG

This neural network, proposed by the Visual Geometry Group (VGG) at the University of Oxford, performed very well in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2014. Its main variants have 16 or 19 weight layers (convolutional plus fully connected) and achieve good accuracy.

VGG16 is widely used for classification and detection in image processing. Known for its ease of use in transfer learning, it can classify images into a thousand distinct object categories, with a top-5 accuracy of up to 92.7% on ImageNet.

VGG-19 is a convolutional neural network 19 layers deep. In the same imaging context, a pre-trained version of the network, trained on more than a million images from the ImageNet database [39], can differentiate a thousand types of image objects.

However, because of the large width of its convolution layers, deploying VGG has high computational requirements in terms of both time and memory. For CXR images, VGG-16 extracts low-level features because of its small kernel size. An attention-based VGG-16 model [40] comprises four main modules: an attention module, a convolution module, fully connected layers, and a Softmax classifier.

This was implemented using a fine-tuning approach on other pre-trained networks. Three different datasets were used to train the model—D1 for triple-class, D2 for four-class, and D3 for five-class classification. The classification accuracy obtained for 18 parameters was 79.58%, 85.43%, and 87.49%, respectively.
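The Softmax classifier at the end of such models turns the final layer’s raw scores (logits) into class probabilities. A minimal sketch; subtracting the largest logit before exponentiating is a standard trick that leaves the result unchanged but avoids overflow, and the logits in the usage note are arbitrary.

```python
import math


def softmax(logits):
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]
```

For three classes with logits 2.0, 1.0, and 0.1, the probabilities are roughly 0.66, 0.24, and 0.10; the predicted class is the one with the highest probability.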

Rahaman [41] evaluated the VGG-19 model and obtained the highest testing accuracy (89.3%) among the CNN architectures tested. Another study [42] used VGG-16 and VGG-19 as feature extractors with an SVM classifier for COVID-19 detection, achieving 92.7% and 92.9% accuracy, respectively.

3.2.2. GoogleNet

GoogleNet, or Inception V1 [43], won the ILSVRC 2014 image classification challenge with a lower error rate than VGG. It consists of 1 × 1 convolutions, inception modules (IMs), and global average pooling. The convolution size is the same in each layer. The inception modules learn both spatial and cross-channel correlations. Each IM reduces dimensionality, as its output has fewer feature maps than its input. Moreover, IMs made it possible to train significantly deeper models by reducing the number of trainable parameters by up to 10 times. Other variants of GoogleNet, such as Inception V2, Inception-ResNet, Inception V3 [44], and Inception V4 [45], were developed by slightly modifying the inception module.
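The parameter saving from 1 × 1 convolutions can be checked with simple arithmetic. The sketch below compares a 5 × 5 convolution applied directly to 192 input channels with the same convolution preceded by a 1 × 1 reduction to 16 channels; these channel counts are chosen for illustration, and biases are ignored.

```python
def conv_weights(k, c_in, c_out):
    """Number of weights in a k x k convolution from c_in to c_out channels (no biases)."""
    return k * k * c_in * c_out


# 5x5 convolution applied directly to the 192-channel input.
direct = conv_weights(5, 192, 32)
# 1x1 reduction to 16 channels first, then the same 5x5 convolution.
bottleneck = conv_weights(1, 192, 16) + conv_weights(5, 16, 32)
print(direct, bottleneck, round(direct / bottleneck, 1))
```

Here the direct branch needs 153,600 weights and the bottlenecked one 15,872, a reduction of almost 10 times, in line with the figure quoted above.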

The authors of [46] performed binary and multi-class classification using GoogleNet and achieved an accuracy of 98.15% for the binary and 75.51% for the multi-class classification of COVID-19 cases. Similarly, the authors of [42] also used GoogleNet with an SVM classifier to obtain an accuracy of 93%.

3.2.3. AlexNet

This architecture requires less training time and fewer epochs than other pre-trained transfer learning models, and it gives outstanding results in image recognition and classification. It won the ILSVRC 2012 challenge on the ImageNet dataset. The network consists of 5 convolutional layers and 3 fully connected layers (two hidden and one output), giving a depth of 8. The input image size was 227 × 227, and the network has about 61 million parameters to fine-tune. Dropout was used to reduce overfitting and enabled the network to learn more robust features, and the ReLU activation function was used.
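The spatial size of each feature map follows from the standard output-size formula ⌊(W − K + 2P)/S⌋ + 1. As a check of the 227 × 227 input size quoted above, the sketch applies it to AlexNet’s first convolution (11 × 11 kernels, stride 4, no padding, as in the original AlexNet design) and to the 3 × 3, stride-2 max pooling that follows; `conv_output_size` is a hypothetical helper name.

```python
def conv_output_size(w, k, stride=1, padding=0):
    """Output width of a convolution or pooling layer: floor((W - K + 2P) / S) + 1."""
    return (w - k + 2 * padding) // stride + 1


after_conv1 = conv_output_size(227, 11, stride=4)        # 11x11 kernels, stride 4
after_pool1 = conv_output_size(after_conv1, 3, stride=2)  # overlapping 3x3 max pooling
print(after_conv1, after_pool1)
```

The 227 × 227 input thus yields 55 × 55 feature maps after the first convolution and 27 × 27 after pooling.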

The study in [47] reduced the original 1000 AlexNet classes to 3: COVID-19, normal, and abnormal. The model was trained on three datasets obtained from various open-source repositories and radiological society websites. Researchers further modified the original architecture and proposed four effective AlexNet models that detected and classified CXR images accurately [48]. In [46], AlexNet obtained 97.04% accuracy for binary classification and 63.27% for multi-class classification. The study in [35] used AlexNet for feature extraction in its DeTraC project and achieved 95.12% accuracy on three classes: COVID-19, SARS, and normal. Each class was decomposed into subclasses whose predictions were then reassembled into the final outcome.

3.2.4. MobileNet

This architecture uses depthwise separable convolutions to build lightweight neural networks for embedded and mobile applications. Its hyperparameters trade off accuracy against latency. The MobileNet-V2 version has 53 layers and about 3.4 million trainable parameters [49]; it consists of depthwise convolutions together with expansion and projection convolutions.
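The saving from depthwise separable convolutions can be quantified. A standard k × k convolution from M to N channels on an F × F output map costs k²·M·N·F² multiply-adds, whereas a depthwise k × k filter per input channel followed by a 1 × 1 pointwise convolution costs k²·M·F² + M·N·F², i.e., a fraction 1/N + 1/k² of the original cost. The sketch below simply evaluates both formulas; the layer sizes (3 × 3 kernel, 32 to 64 channels, 112 × 112 map) are illustrative, not taken from a reviewed study.

```python
def conv_mult_adds(k, m, n, f):
    """Standard k x k convolution, M -> N channels, F x F output: k*k*M*N*F*F."""
    return k * k * m * n * f * f


def separable_mult_adds(k, m, n, f):
    """Depthwise k x k filter per input channel, then a 1 x 1 pointwise convolution."""
    depthwise = k * k * m * f * f
    pointwise = m * n * f * f
    return depthwise + pointwise


std = conv_mult_adds(3, 32, 64, 112)
sep = separable_mult_adds(3, 32, 64, 112)
# The ratio sep / std equals 1/N + 1/k^2, here 1/64 + 1/9.
print(std, sep, round(std / sep, 2))
```

For these sizes the separable version needs roughly eight times fewer operations, and the saving grows with the number of output channels.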

For COVID-19 detection, MobileNet was used to achieve accuracies of 60% [50] and 96.30% [46]. However, for distinguishing COVID-19 from normal cases, the mean accuracy using MobileNet-V2 was 87.61%, and for COVID-19 and pneumonia, the mean accuracy was 97.87%. For three-class experiments, it resulted in 92.85% accuracy.

3.2.5. ResNet

In plain CNNs, increasing the network depth beyond a certain point increases the training error. ResNet solves this problem by introducing residual units. ResNet-18 and ResNet-34 use residual blocks of two layers, whereas ResNet-50/101/152 use three-layer (bottleneck) blocks. Each residual unit adds its input to its output through a shortcut connection, so the activations of earlier layers are reused and layers that do not contribute to the solution can effectively be skipped. Batch normalization and identity connections mitigate the vanishing gradient problem and improve performance.
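The residual idea can be written as y = ReLU(F(x) + x), where F is the small stack of layers inside the unit. The pure-Python sketch below uses dense layers in place of convolutions and omits batch normalization (both simplifications for brevity); with all weights at zero, the unit passes a non-negative input through unchanged, which is why extra residual units can easily approximate an identity mapping.

```python
def relu(v):
    return [max(0.0, x) for x in v]


def linear(weights, v):
    """Matrix-vector product; weights is a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]


def residual_block(x, w1, w2):
    """Basic two-layer residual unit: y = ReLU(F(x) + x), with F(x) = W2 ReLU(W1 x)."""
    f = linear(w2, relu(linear(w1, x)))
    # Identity shortcut: add the unit's input to the output of its layers.
    return relu([fi + xi for fi, xi in zip(f, x)])
```

For example, with zero weights the input [1.0, 2.0] comes out unchanged.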

ResNet has been observed to be one of the most widely used CNNs in COVID-19 diagnosis studies [51]. The authors of [52] used three of its variants: ResNet-18 (91% accuracy), ResNet-50 (95%), and ResNet-101 (89.2%). ResNet-18 and ResNet-50 were also trained on an imbalanced dataset containing 3000 normal and 100 COVID-positive CXR images, where ResNet-50 reached an 89.2% detection accuracy and both variants a 98% sensitivity [22].

3.2.6. Xception

Xception [53] stands for “Extreme Inception”. It replaces traditional convolutions with depthwise separable convolutions and, like ResNet, uses residual connections. It slightly outperformed Inception V3 on ImageNet and clearly outperformed it on JFT, an internal Google dataset of 350 million images and 17,000 classes. It learns spatial patterns and cross-channel patterns separately, and its depthwise separable convolutions greatly reduce the number of operations and hence the computational cost.

On ImageNet, Inception V3 achieved a top-1 accuracy of 78.2%, which Xception increased to 79%. The authors of [50] diagnosed COVID-19 using the Xception model, which achieved the highest precision in detecting COVID-positive cases among the deep learning classifiers tested. However, it did not perform well in classifying normal cases. It achieved a sensitivity of 0.894 and a precision of 0.830.

CoroNet [38] is another CNN model based on the Xception architecture, with two fully connected layers and a dropout layer at the end. It has 33,969,964 trainable parameters. Four-class, three-class, and two-class variants of CoroNet were proposed, pre-trained on the ImageNet dataset. For re-training, the optimizer in [49] was used with a batch size of 10 for 80 epochs. The mean accuracies were 89.6%, 95%, and 99% for the 4-class, 3-class, and 2-class variants, respectively.

3.2.7. DenseNet

DenseNet is similar to ResNet, with a few differences. It connects each layer to every subsequent layer by concatenating feature maps. Therefore, a network of n layers has n(n + 1)/2 direct connections. DenseNet is more efficient than other state-of-the-art CNN architectures such as ResNet in image classification, in terms of both parameters and computation. Because the layers are densely connected, each convolution generates fewer feature maps, and redundancy is lower because layers reuse and propagate features.
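The connection count follows from a short argument: the ℓ-th layer of a dense block receives the block input plus the outputs of the ℓ − 1 layers before it, i.e., ℓ incoming connections, and summing ℓ from 1 to n gives n(n + 1)/2. The same concatenation means layer ℓ sees k₀ + k(ℓ − 1) input channels, where k₀ is the number of channels entering the block and k is the growth rate (the number of feature maps each layer adds). A minimal sketch; the helper names and the values k₀ = 64 and k = 32 are illustrative assumptions.

```python
def dense_block_connections(n_layers):
    """Direct connections in a dense block, counting the block input: n(n + 1) / 2."""
    return n_layers * (n_layers + 1) // 2


def input_channels(layer_index, k0, growth_rate):
    """Channels entering layer l (1-based): the block input plus k maps from each earlier layer."""
    return k0 + growth_rate * (layer_index - 1)
```

A five-layer block therefore has 15 direct connections, and with k₀ = 64 and k = 32 its sixth layer would receive 224 channels.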

DenseNet was designed to mitigate the vanishing gradient problem in neural networks, that is, the loss of information before it reaches the final output layer because of long paths. Its versions are named after their number of layers: DenseNet-121, DenseNet-169, and DenseNet-201. It was the second most used architecture in the reviewed studies.

The study in [50] used DenseNet-201 to achieve 90% accuracy, which was later improved to 93.8% in [42]. Another application of DenseNet obtained a specificity of 75.1% [22]. The authors of [46] used DenseNet to achieve a multi-class classification accuracy of 93.46% and a binary classification accuracy of 98.75%. DenseNet is also used as a backbone in other COVID-19 diagnosis systems based on chest CT [54].

3.2.8. SENet

In 2017, the authors of [55] proposed the Squeeze-and-Excitation Network (SENet), which won the ILSVRC 2017 challenge. It reduced the top-5 error rate to 2.251%, surpassing the previous year’s winning entry. It models the relationships and interdependencies between the channels of a convolutional network. It introduces an additional computation unit, the SE block, which can be integrated into other CNNs such as ResNet, where it is added to every residual unit to improve performance. The resulting networks include SE-ResNet-50 and SE-Inception-ResNet-v2. Although this increased computational complexity, it yielded consistently better returns than increasing the depth of ResNet architectures. Experiments with non-residual networks such as VGG also showed improved performance.

The SE block is the main building component. It comprises three layers: a global average pooling layer that “squeezes” each feature map into a single value, followed by two dense layers (a reduction layer and an expansion layer with sigmoid output) that compute a weight for each channel. The SE block emphasizes cross-channel patterns rather than spatial ones and learns which feature maps tend to be active together. Its output retains the essential features and downscales irrelevant feature maps.
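A squeeze-and-excitation step can be sketched in a few lines: average each channel (squeeze), pass the channel averages through a reduction dense layer with ReLU and an expansion dense layer with sigmoid (excitation), and multiply each channel by its resulting weight. The pure-Python sketch below follows that order, assuming feature maps stored as nested lists (channels × rows × columns) and omitting biases; `w_reduce` and `w_expand` are hypothetical weight arguments.

```python
import math


def se_block(feature_maps, w_reduce, w_expand):
    """Squeeze-and-excitation on feature maps stored as [channel][row][column].

    w_reduce: (C // r) x C weights of the reduction dense layer (biases omitted).
    w_expand: C x (C // r) weights of the expansion dense layer (biases omitted).
    """
    # Squeeze: global average pooling turns each channel into one number.
    squeezed = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature_maps]
    # Excitation, step 1: reduction dense layer followed by ReLU.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w_reduce]
    # Excitation, step 2: expansion dense layer followed by sigmoid, giving weights in (0, 1).
    scales = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden)))) for row in w_expand]
    # Scale: multiply every value of each channel by that channel's weight.
    return [[[v * s for v in row] for row in ch] for ch, s in zip(feature_maps, scales)]
```

With all weights at zero, every channel weight is 0.5; strongly positive or negative expansion weights push a channel’s weight toward 1 (kept) or 0 (suppressed).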

The authors of [46] used SENet for the binary classification and multi-class classification of COVID-19 cases and obtained an accuracy of 98.89% and 94.39%, respectively. The study in [22] used this in place of ResNet and obtained 98% sensitivity and 92.9% specificity. The dataset used was highly imbalanced, and the specificity and sensitivity values for ResNet were 89.6% and 90.7%, respectively.

3.2.9. ShuffleNet

Other CNN architectures used include CapsNet, autoencoders, and ShuffleNet [56]. On the ImageNet classification task, ShuffleNet performed better than MobileNet and ran approximately 13 times faster than AlexNet with comparable accuracy. It uses pointwise group convolutions and channel shuffle operations to reduce computation while allowing information to flow across channel groups.
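The channel shuffle operation can be expressed as a reshape to (groups, channels per group), a transpose, and a flatten, so that the next group convolution receives channels from every previous group. A minimal sketch over channel indices; `channel_shuffle` is a hypothetical helper name, and a real implementation would permute feature-map tensors rather than index lists.

```python
def channel_shuffle(channels, groups):
    """Reorder channels as in ShuffleNet: reshape to (groups, n), transpose, flatten."""
    n = len(channels)
    if n % groups:
        raise ValueError("number of channels must be divisible by groups")
    per_group = n // groups
    # Element [g][i] of the reshaped view is channels[g * per_group + i];
    # iterating i in the outer loop and g in the inner loop reads the transpose.
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]
```

With six channels in three groups ([0, 1], [2, 3], [4, 5]), the shuffled order is [0, 2, 4, 1, 3, 5], so each new group of two mixes channels from different original groups.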

The authors of [57] extracted features automatically and fed them to four classifiers: KNN, random forest, SVM, and Softmax, obtaining accuracies of 99.35%, 80%, 95.81%, and 99.35%, respectively.

3.2.10. DarkCovidNet

DarkNet-19 is the classifier model that serves as the backbone of the real-time object detection system YOLO (You Only Look Once) [58]. Rather than designing the entire model from scratch, DarkCovidNet takes DarkNet-19 as its starting point, since the DarkNet classifier architecture has proven successful and efficient. Compared with the original DarkNet architecture, fewer layers and different filter sizes were used [59], and the number of filters was gradually increased from 8 to 16 to 32.

DarkNet-19 comprises 19 convolutional layers and five max-pooling layers. These are standard CNN layers with varying filter sizes, numbers of filters, and stride parameters.

The modified DarkCovidNet layout has 21 convolutional layers and 6 pooling layers. Each DarkNet (DN) layer consists of one convolutional layer with a 3 × 3 kernel and a stride of 1, followed by batch normalization and the Leaky ReLU activation function. Each triple convolution (tri Conv.) block consists of three such DN layers in sequence. Batch normalization is used to reduce the training time and stabilize the model. Leaky ReLU is a modification of ReLU with a negative slope of 0.1. Other activation functions such as sigmoid and ReLU could also be used, but the derivative of ReLU is zero for negative inputs and the derivative of the sigmoid approaches zero when the function saturates. Leaky ReLU mitigates these problems of vanishing gradients and dying neurons. The optimizer in [49] is used to update the weights with a cross-entropy loss function, and the learning rate is set to 1 × 10⁻³. Evaluated with various performance metrics, the model achieved 97.6% accuracy for binary classification and 88% for three-class classification.
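The per-layer arithmetic described above, batch normalization followed by Leaky ReLU with a slope of 0.1, can be sketched in plain Python. This is a simplified illustration under stated assumptions: batch normalization is shown on a one-dimensional batch of activations with its learnable scale and shift fixed at 1 and 0, the convolution itself is omitted, and the 3 × 3 convolution is assumed to use a padding of 1 so that a stride of 1 preserves the spatial size. All function names are hypothetical.

```python
import math


def leaky_relu(x, slope=0.1):
    return x if x > 0 else slope * x


def leaky_relu_grad(x, slope=0.1):
    # Never exactly zero, so a unit with negative input still receives updates.
    return 1.0 if x > 0 else slope


def relu_grad(x):
    # Zero for every negative input: a unit stuck there stops learning ("dying" neuron).
    return 1.0 if x > 0 else 0.0


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize a batch to zero mean and unit variance, then scale by gamma and shift by beta."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


def darknet_layer(values):
    """Hypothetical 1-D stand-in for the BN + Leaky ReLU stage that follows each 3x3 conv."""
    return [leaky_relu(v) for v in batch_norm(values)]


def conv_output_size(size, kernel=3, stride=1, padding=1):
    # Standard output-size formula; padding=1 is an assumption of this sketch.
    return (size + 2 * padding - kernel) // stride + 1
```

The gradient helpers show the difference the text describes: for a negative input, `relu_grad` returns 0, so no update flows through that unit, whereas `leaky_relu_grad` still returns 0.1.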

3.3. Comparing the Binary and Multi-Class Classification for COVID-19 Detection

So, which type of classification is more suitable for COVID-19 detection?

The study in [60] proposed diagnosing COVID-19 with a system called ECG-BiCoNet, which integrates two layers of deep features extracted from ECG data to distinguish COVID-19 cases from other cardiac conditions. ECG-BiCoNet was evaluated for both binary and multi-class classification, achieving accuracies of 98.8% and 91.73%, respectively. The results obtained with several classifiers, such as RF, SVM, and LDA, indicate that:


- Binary classification detects the cardiac variations caused by COVID-19 in ECG images and differentiates them from healthy ECG images. However, binary classification increased the computational load of training the models.


- Multi-class classification successfully detected COVID-19 cases. However, compared with binary classification, it was less able to detect other cardiac diseases and normal ECG images. Its computational cost is slightly higher than that of binary classification.

Another study [61] proposed both binary classification (COVID-19 infected or healthy) and multi-class classification (pneumonia, COVID-19 infection, or healthy). A 17-layer CNN model with filters of various sizes was trained on CXR images. The model achieved an accuracy of 98.08% for binary classification and 87.02% for multi-class classification.

4. Results and Discussion

The previous section listed some well-known works and CNN architectures proposed for detecting COVID-19 from CXR images. This section provides an in-depth analysis of, and insights into, the reviewed studies.

4.1. Datasets

The reviewed articles experimented with 13 different datasets. Table 2 summarizes these datasets with their names, number of images, image resolution, and references. Most of the images are in JPG, JPEG, or PNG format. The Cohen Image Collection [62], accessible from [63], is the most cited dataset and was used in almost 85% of the works. It was the first initiative to collect public COVID-19 clinical cases as images, gathering them from various websites and online publications to help researchers develop AI-based deep learning models. Because the images were collected from online publications rather than original medical records, their quality may be lower. As the largest prognostic dataset on COVID-19, it contains hundreds of frontal-view X-ray images, along with some lateral views, and serves as a reference for developing decision support and machine learning systems based on COVID-19 image processing. Such systems aim to predict patient survival and to interpret how the disease develops. The images are accompanied by metadata such as survival status, intubation status, time since the onset of initial symptoms, and hospital location.

Table 2.

Different COVID-19 CXR datasets used in the investigated works.

The COVID-19 Image Data Collection is called the “Montreal database” in some studies. The COVIDx dataset contains 48 COVID-19 images and is updated constantly [64]. The COVID-19 Radiography Database, winner of the COVID-19 Dataset Award, is compiled from several sources: the SIRM (Italian Society of Medical and Interventional Radiology) database, the Twitter COVID-19 CXR Dataset, the RSNA Pneumonia Detection Challenge dataset, Kaggle, and other online sources.

The Open-I repository is an open-access biomedical search engine maintained by the US National Library of Medicine, in which CXR images can be found together with their associated publications [76]. The Twitter CXR Dataset [72] was shared by a cardiothoracic radiologist in Spain on his Twitter account and consists of 135 images of SARS-CoV-2 infection. CXR-8 [68] contains frontal-view CXR images of more than 30,000 patients labeled with 14 thoracic diseases; the RSNA Pneumonia Detection Challenge dataset is derived from it. Most of these studies combined several datasets to enlarge the training set, so duplicate images can appear in the merged data. The datasets above are publicly available from various online repositories and platforms, with GitHub and Kaggle being the most used portals for storing and accessing them. The remaining datasets were obtained from local hospitals and cannot be accessed publicly.
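When datasets are merged, exact duplicates can at least be detected by hashing file contents. The sketch below uses Python's standard `hashlib` and assumes each dataset is a list of `(file_name, image_bytes)` pairs, a hypothetical representation chosen for illustration. It only catches byte-identical files; resized or re-encoded copies of the same radiograph would need a perceptual or feature-based comparison, which is not shown here.

```python
import hashlib


def merge_without_duplicates(*datasets):
    """Merge datasets of (file_name, image_bytes) pairs, keeping the first copy of each identical file."""
    seen_digests = set()
    merged = []
    for dataset in datasets:
        for file_name, image_bytes in dataset:
            # Identical bytes always produce the same SHA-256 digest.
            digest = hashlib.sha256(image_bytes).hexdigest()
            if digest not in seen_digests:
                seen_digests.add(digest)
                merged.append((file_name, image_bytes))
    return merged
```

If an image from one repository reappears in another under a different file name, only its first occurrence is kept, which also prevents the same image from landing in both the training and the test split.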

4.2. Performance Comparison

Despite numerous studies applying deep learning models to various datasets, identifying the most efficient architecture remains difficult. Differences in training and testing data further complicate comparing CNN models on standard performance metrics. Most works evaluated their models using accuracy, F1 score, specificity and sensitivity, the area under the ROC curve (AUC), and Kappa statistics.

The differences between COVID-19 datasets remain unresolved. A standard COVID-19 dataset should therefore be maintained by the research community so that every researcher can validate their models on it. Standard evaluation metrics should also be specified to ease comparison between models and to test their efficacy. Table 3 summarizes the results in terms of mean accuracy, F1 score, and AUC. Specificity, sensitivity (recall), and precision could also be used for comparison, but their values are bound to differ between two-class, three-class, and four-class classification.
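Since AUC is one of the summary metrics in Table 3, a small sketch may help clarify what it measures. The AUC equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The plain-Python function below computes it directly from that pairwise definition; it assumes binary labels coded as 1 (e.g., COVID-19) and 0, and it is quadratic in the number of samples, so it is meant for illustration rather than for large test sets.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 1/2."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))
```

For example, `roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])` returns 0.75, because three of the four positive/negative pairs are ranked correctly. Unlike accuracy, this value does not depend on choosing a decision threshold.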

Table 3.

Performance of reviewed detection models.

The metrics used are defined as follows:


- Accuracy is the most intuitive measure of performance: the proportion of correct predictions among all observations, so the higher the accuracy, the better the model performs. Accuracy is defined as A = (TP + TN)/(TP + TN + FP + FN), where TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives, respectively. For example, a model with A = 0.76 is approximately 76% accurate. However, accuracy is an effective measure only when the data are symmetric, that is, when the number of false negatives is comparable to the number of false positives. Hence, other performance metrics should be reported alongside accuracy.


- Precision is the ratio of correctly predicted positive observations to all predicted positive observations. It answers questions such as: in a disaster, of all the people predicted to have survived, how many actually survived? High precision means few false positives relative to true positives. Precision is defined as TP/(TP + FP).


- Sensitivity (recall) is the ratio of correctly predicted positive observations to all observations that actually belong to the positive class. It answers questions such as: of all the passengers who actually survived, how many did the model label as survivors? Sensitivity is defined as TP/(TP + FN).


- The F1 score is the harmonic mean of precision and sensitivity, so it takes both false positives and false negatives into account. When the cost of false negatives is very different from that of false positives, precision and recall must be considered together. If the class distribution is uneven, the F1 score is more useful than accuracy, whereas accuracy works best when false negatives and false positives have similar costs. The F1 score is defined as 2 × (Precision × Sensitivity)/(Precision + Sensitivity) [84].
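The formulas above can be checked with a short plain-Python sketch. `binary_metrics` computes them from confusion-matrix counts, together with specificity, which many reviewed works also report; returning 0 when a denominator is empty is a convention chosen for this sketch. `macro_f1` shows one common way to extend F1 to three- or four-class problems: compute a one-vs-rest F1 for each class and average them. Both function names are illustrative.

```python
def binary_metrics(tp, tn, fp, fn):
    """Accuracy, precision, sensitivity, specificity, and F1 from confusion-matrix counts."""
    def ratio(num, den):
        # Convention for this sketch: report 0.0 when the denominator is 0.
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    sensitivity = ratio(tp, tp + fn)  # also called recall
    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": ratio(tn, tn + fp),
        "f1": ratio(2 * precision * sensitivity, precision + sensitivity),
    }


def macro_f1(confusion):
    """Mean one-vs-rest F1; confusion[i][j] counts true class i predicted as class j."""
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    f1_scores = []
    for c in range(n_classes):
        tp = confusion[c][c]
        fp = sum(confusion[r][c] for r in range(n_classes)) - tp
        fn = sum(confusion[c]) - tp
        tn = total - tp - fp - fn
        f1_scores.append(binary_metrics(tp, tn, fp, fn)["f1"])
    return sum(f1_scores) / n_classes
```

For instance, on a hypothetical test set of 10 COVID-19 and 90 normal images, a model that labels every image as normal gives `binary_metrics(0, 90, 0, 10)`: an accuracy of 0.9 but a sensitivity and F1 score of 0, which is why accuracy alone is misleading on imbalanced data.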

The studies in [85,86] used CXR image databases and reported accuracies of 98.70%, 88.80%, 95.70%, and 99.90%.

4.3. Class Imbalance Problem

The major challenge faced in studies on COVID-19 is the limited availability of COVID-positive image datasets. As the above statistics show, the number of COVID-19 images is very small compared with the normal and pneumonia classes. This uneven distribution leads to the class imbalance problem. Some studies use data augmentation to enlarge the COVID-19 dataset [87]. Another solution is to take an equal number of images from each class; however, this shrinks the training set, and deep models such as ResNet do not perform well with little training data.
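Taking an equal number of images per class amounts to random undersampling. A minimal plain-Python sketch is shown below, assuming samples and labels are given as parallel lists and using a fixed seed for reproducibility (both assumptions of this illustration). Every class is cut down to the size of the smallest class, which also illustrates the drawback noted above: most of the majority-class images are discarded.

```python
import random
from collections import defaultdict


def undersample(samples, labels, seed=0):
    """Randomly keep as many samples per class as the smallest class has."""
    by_class = defaultdict(list)
    for sample, label in zip(samples, labels):
        by_class[label].append(sample)
    n_min = min(len(items) for items in by_class.values())
    rng = random.Random(seed)
    balanced = []
    for label, items in by_class.items():
        # Draw n_min samples without replacement from this class.
        balanced.extend((sample, label) for sample in rng.sample(items, n_min))
    rng.shuffle(balanced)
    return balanced
```

With 10 COVID-19, 60 normal, and 30 pneumonia images, the result contains 30 images: all 10 COVID-19 images plus 10 randomly chosen images from each of the other two classes.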

One study [52] proposed SMOTE (Synthetic Minority Oversampling Technique), an oversampling technique that creates synthetic minority-class samples by interpolating between existing minority samples and their nearest neighbors.
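The core idea of SMOTE can be sketched in a few lines of plain Python. This follows the standard formulation, in which each synthetic point lies at a random position on the segment between a minority sample and one of its k nearest minority neighbors; it is not necessarily the exact configuration used in [52]. It assumes the minority samples are numeric feature vectors, such as deep features extracted by a CNN, and the parameter names are illustrative.

```python
import math
import random


def smote(minority, n_new, k=5, seed=0):
    """Generate n_new synthetic points by interpolating minority samples toward their neighbors."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        x = minority[i]
        # The k nearest minority neighbors of x, excluding x itself.
        others = [minority[j] for j in range(len(minority)) if j != i]
        neighbors = sorted(others, key=lambda p: math.dist(p, x))[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # random position in [0, 1) along the segment x -> neighbor
        synthetic.append(tuple(a + gap * (b - a) for a, b in zip(x, neighbor)))
    return synthetic
```

Because every synthetic point is a convex combination of two real minority samples, it stays within the region the minority class already occupies; unlike simple duplication, it adds variety without copying existing samples verbatim.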

The number of COVID-19 images varies widely across studies, as does the total number of samples. Some studies used as few as 11 COVID-19 images and others as many as 1536, while the total number of images ranged from 50 to 224,316. AI researchers therefore used different techniques to tackle this problem [88]. The authors of [57] fixed the number of image samples at 310 per class, and the authors of [46,51] also used a fixed number of samples per class. The studies in [82,89] used a class-weighted cross-entropy loss function, while others emphasized cost-sensitive learning.
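A class-weighted cross-entropy loss, as mentioned for [82,89], can be sketched as follows. The exact weights used in those studies are not given here, so this sketch assumes the common "balanced" heuristic w_c = N/(K × n_c), where N is the number of samples, K the number of classes, and n_c the number of samples in class c (the same heuristic scikit-learn uses for `class_weight="balanced"`). Class labels are assumed to be integer ids that index each probability vector.

```python
import math
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by N / (K * n_c), so rarer classes receive larger weights."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


def weighted_cross_entropy(probs, labels, weights):
    """Average of -w_y * log(p_y) over the batch.

    probs[i] holds the predicted class probabilities for sample i, indexed by class id;
    labels[i] is the true class id of sample i.
    """
    total = 0.0
    for p, y in zip(probs, labels):
        total += -weights[y] * math.log(p[y])
    return total / len(labels)
```

With three normal images (class 0) and one COVID-19 image (class 1), the COVID-19 class receives weight 2.0 and the normal class about 0.67, so a poorly predicted COVID-19 image contributes far more to the loss than it would with uniform weights. Frameworks may normalize differently; for example, PyTorch's `CrossEntropyLoss` with class weights and mean reduction divides by the sum of the target weights rather than by the batch size.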

4.4. COVID-19 Severity Prediction

Another challenge faced in containing this pandemic is the inability to predict the severity of a patient diagnosed as COVID-positive. Based on symptom period analysis and past CXR records, researchers are working to predict the severity of patients in terms of COVID score.

Assessing the progression of the disease and its effect on the lungs could identify patients at high risk. They could then be treated with extra attention and care from the medical personnel. Deep learning techniques developed on CXR images of patients could assist doctors in tracking, assessing, and monitoring severity and progress and hence aid in efficiently triaging patients. One study [89] monitored patients and predicted whether their condition would improve or worsen in the coming days with an accuracy of 82.7%. The more deadly L- and H-type strains were also identified using DL architectures. Categorizing multiple scans of the same patient, extracting features using DL, and using embedded machine learning algorithms on these features were also used to monitor the condition and recovery of patients. GANs (Generative Adversarial Networks) have also given promising results in severity prediction.

5. Conclusions and Future Scope

This study investigated the diagnosis of COVID-19 cases using AI and deep learning techniques with CXR images given as input. An automated diagnosis system is needed to overcome the shortage of testing kits and speed up the screening process with the limited involvement of medical professionals. Numerous deep learning architectures proposed by researchers were reviewed and discussed.

The proposed models were validated on different datasets, most often the Cohen Image Collection, which is the most cited dataset. The attributes and descriptions of the datasets used were discussed. The models were then evaluated and compared using performance metrics such as accuracy, F1 score, and AUC. However, because COVID-19 CXR images are scarce, almost all datasets are highly imbalanced, so classification accuracy cannot be the only metric used to evaluate and compare these models. Short training time, a low error rate, and good performance with limited training data are also important considerations when developing these models.

Transfer learning improved results because the models are pre-trained rather than built from scratch. Studies differed in the number of classes used to identify COVID-19 cases. Binary classification used COVID-19 and normal classes, whereas multi-class classification was further divided into three-class, four-class, and five-class classification, in which viral and bacterial pneumonia cases were also separated from the COVID-19 and no-findings classes.

These methodologies need to be validated on larger datasets with specified standards and evaluation metrics before they are used in clinical practice. AI researchers should work closely with expert radiologists to analyze the results and to find a tradeoff between the deep features learned automatically and the features extracted using domain knowledge, in order to obtain an accurate diagnosis. Additionally, most of the reviewed works used data augmentation to overcome the lack of COVID-19 data. GANs could be used to generate new data as well as to predict patient severity based on symptom period analysis.

The detection of COVID-19 using radiological images and deep learning techniques appears very promising given the current challenges facing vaccination, namely public reluctance to be vaccinated and newly emerging COVID-19 variants that threaten vaccine efficacy.

These techniques could also be used to detect other chest-related illnesses, such as pneumonia and tuberculosis, in the near future. More diverse datasets could be used to increase the robustness and accuracy of the models. Cloud-backed mobile applications could be developed to assist the initial screening of patients. Radiological screening for COVID-19 diagnosis is an active research area, and sooner or later, the medical community will have to rely on these methods as the pandemic progresses. Furthermore, deep learning techniques could be combined with other intelligent AI methods, such as optimization algorithms [90,91], to improve the processing of radiological images for COVID-19 detection.

Author Contributions

Conceptualization, V.K. and M.A. (Malek Alrashidi); methodology, S.L., S.M. and M.A. (Mansoor Alghamdi); software, S.L., A.A. (Abdullah Almuhaimeed) and S.M.; validation, V.K., M.K. and M.A.A. (Majed Abdullah Alrowaily); formal analysis, V.K., I.A., A.A. (Ali Alshehri) and M.A.A. (Majed Abdullah Alrowaily); investigation, M.A. (Malek Alrashidi) and A.A. (Ali Alshehri); resources, M.A. (Mansoor Alghamdi) and M.A.A. (Majed Abdullah Alrowaily); data curation, A.A. (Abdullah Almuhaimeed) and A.A. (Ali Alshehri); writing—original draft preparation, S.L., M.K. and M.A. (Malek Alrashidi); writing—review and editing, S.M., M.A. (Mansoor Alghamdi) and I.A.; visualization, I.A.; supervision S.M., M.K. and A.A. (Abdullah Almuhaimeed); project administration M.A. (Mansoor Alghamdi), A.A. (Abdullah Almuhaimeed) and S.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- WHO. WHO COVID-19 Dashboard. 2021. Available online: https://covid19.who.int (accessed on 12 April 2022).

- Peng, S.; Pan, L.; Guo, Y.; Gong, B.; Huang, X.; Liu, S.; Huang, J.; Pu, H.; Zeng, J. Quantitative CT imaging features for COVID-19 evaluation: The ability to differentiate COVID-19 from non-COVID-19 (highly suspected) pneumonia patients during the epidemic period. PLoS ONE 2022, 17, e0256194.

- Brady, A.P. Error and discrepancy in radiology: Inevitable or avoidable? Insights Imaging 2016, 8, 171–182.

- Santura, I.; Kawalec, P.; Furman, M.; Bochenek, T. Chest computed tomography versus RT-PCR in early diagnostics of COVID-19—A systematic review with meta-analysis. Pol. J. Radiol. 2021, 86, 518–531.

- Lawton, S.; Viriri, S. Detection of COVID-19 from CT Lung Scans Using Transfer Learning. Comput. Intell. Neurosci. 2021, 2021, 5527923.

- Horry, M.J.; Chakraborty, S.; Paul, M.; Ulhaq, A.; Pradhan, B.; Saha, M.; Shukla, N. X-ray Image Based COVID-19 Detection Using Pre-trained Deep Learning Models. engrXiv 2020.

- Padma, T.; Kumari, C.U. Deep Learning Based Chest X-ray Image as a Diagnostic Tool for COVID-19. In Proceedings of the 2020 International Conference on Smart Electronics and Communication (ICOSEC), Trichy, India, 10–12 September 2020; pp. 589–592.

- Karhan, Z.; Akal, F. Covid-19 Classification Using Deep Learning in Chest X-ray Images. In Proceedings of the 2020 Medical Technologies Congress (TIPTEKNO), Antalya, Turkey, 19–20 November 2020; pp. 1–4.

- Hilmizen, N.; Bustamam, A.; Sarwinda, D. The Multimodal Deep Learning for Diagnosing COVID-19 Pneumonia from Chest CT-Scan and X-ray Images. In Proceedings of the 2020 3rd International Seminar on Research of Information Technology and Intelligent Systems (ISRITI), Yogyakarta, Indonesia, 10–11 December 2020; pp. 26–31.

- Santoso, F.Y.; Purnomo, H.D. A Modified Deep Convolutional Network for COVID-19 detection based on chest X-ray images. In Proceedings of the 2020 3rd International Seminar on Research of Information Technology and Intelligent Systems (ISRITI), Yogyakarta, Indonesia, 10–11 December 2020; pp. 700–704.

- Darapaneni, N.; Ranjane, S.; Satya, U.S.P.; Prashanth, D.; Reddy, M.H.; Paduri, A.R.; Adhi, A.K.; Madabhushanam, V. COVID 19 Severity of Pneumonia Analysis Using Chest X Rays. In Proceedings of the 2020 IEEE 15th International Conference on Industrial and Information Systems (ICIIS), Rupnagar, India, 26–28 November 2020; pp. 381–386.

- Kandhari, R.; Negi, M.; Bhatnagar, P.; Mangipudi, P. Use of Deep Learning Models to detect COVID-19 from Chest X-rays. In Proceedings of the 2021 International Conference on Computer Communication and Informatics (ICCCI), Coimbatore, India, 27–29 January 2021; pp. 1–5.

- Yamac, M.; Ahishali, M.; Degerli, A.; Kiranyaz, S.; Chowdhury, M.E.H.; Gabbouj, M. Convolutional Sparse Support Estimator-Based COVID-19 Recognition From X-ray Images. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 1810–1820.

- Calderon-Ramirez, S.; Giri, R.; Yang, S.; Moemeni, A.; Umana, M.; Elizondo, D.; Torrents-Barrena, J.; Molina-Cabello, M.A. Dealing with Scarce Labelled Data: Semi-supervised Deep Learning with Mix Match for Covid-19 Detection Using Chest X-ray Images. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 5294–5301.

- Alam, N.A.; Ahsan, M.; Based, A.; Haider, J.; Kowalski, M. COVID-19 Detection from Chest X-ray Images Using Feature Fusion and Deep Learning. Sensors 2021, 21, 1480.

- Gilanie, G.; Bajwa, U.I.; Waraich, M.M.; Asghar, M.; Kousar, R.; Kashif, A.; Aslam, R.S.; Qasim, M.M.; Rafique, H. Coronavirus (COVID-19) detection from chest radiology images using convolutional neural networks. Biomed. Signal Process. Control 2021, 66, 102490.

- Amin, H.; Darwish, A.; Hassanien, A.E. Classification of COVID19 X-ray Images Based on Transfer Learning InceptionV3 Deep Learning Model. In Digital Transformation and Emerging Technologies for Fighting COVID-19 Pandemic: Innovative Approaches; Studies in Systems, Decision and Control; Springer: Cham, Switzerland, 2021; Volume 322, pp. 111–119.

- Panwar, H.; Gupta, P.; Siddiqui, M.K.; Morales-Menendez, R.; Singh, V. Application of deep learning for fast detection of COVID-19 in X-rays using nCOVnet. Chaos Solitons Fractals 2020, 138, 109944.

- Wang, S.-H.; Nayak, D.R.; Guttery, D.S.; Zhang, X.; Zhang, Y.-D. COVID-19 classification by CCSHNet with deep fusion using transfer learning and discriminant correlation analysis. Inf. Fusion 2020, 68, 131–148.

- Demir, F. DeepCoroNet: A deep LSTM approach for automated detection of COVID-19 cases from chest X-ray images. Appl. Soft Comput. 2021, 103, 107160.

- Sheykhivand, S.; Mousavi, Z.; Mojtahedi, S.; Rezaii, T.Y.; Farzamnia, A.; Meshgini, S.; Saad, I. Developing an efficient deep neural network for automatic detection of COVID-19 using chest X-ray images. Alex. Eng. J. 2021, 60, 2885–2903.

- Minaee, S.; Kafieh, R.; Sonka, M.; Yazdani, S.; Soufi, G.J. Deep-COVID: Predicting COVID-19 from chest X-ray images using deep transfer learning. Med. Image Anal. 2020, 65, 101794.

- Nayak, S.R.; Nayak, D.R.; Sinha, U.; Arora, V.; Pachori, R.B. Application of deep learning techniques for detection of COVID-19 cases using chest X-ray images: A comprehensive study. Biomed. Signal Process. Control 2020, 64, 102365.

- Mohammad-Rahimi, H.; Nadimi, M.; Ghalyanchi-Langeroudi, A.; Taheri, M.; Ghafouri-Fard, S. Application of Machine Learning in Diagnosis of COVID-19 Through X-ray and CT Images: A Scoping Review. Front. Cardiovasc. Med. 2021, 8, 638011.

- Ghaderzadeh, M.; Asadi, F. Deep Learning in the Detection and Diagnosis of COVID-19 Using Radiology Modalities: A Systematic Review. J. Health Eng. 2021, 2021, 6677314.

- Mnasri, S.; Bossche, A.V.D.; Nasri, N.; Val, T. The 3D Redeployment of Nodes in Wireless Sensor Networks with Real Testbed Prototyping. In Ad-Hoc, Mobile, and Wireless Networks; Springer: Cham, Switzerland, 2017; Volume 10517, pp. 18–24.

- Hassanat, A.B.; Mnasri, S.; Aseeri, M.; Alhazmi, K.; Cheikhrouhou, O.; Altarawneh, G.; Alrashidi, M.; Tarawneh, A.S.; Almohammadi, K.; Almoamari, H. A Simulation Model for Forecasting COVID-19 Pandemic Spread: Analytical Results Based on the Current Saudi COVID-19 Data. Sustainability 2021, 13, 4888.

- Mnasri, S.; Zidi, K.; Ghedira, K. A heuristic approach based on the multi-agents negotiation for the resolution of the DDBAP. In Proceedings of the 4th International Conference on Metaheuristics and Nature Inspired Computing, Sousse, Tunisia, 27–31 October 2012.

- Mnasri, S.; Nasri, N.; Bossche, A.V.D.; Val, T. 3D indoor redeployment in IoT collection networks: A real prototyping using a hybrid PI-NSGA-III-VF. In Proceedings of the 2018 14th International Wireless Communications & Mobile Computing Conference (IWCMC), Limassol, Cyprus, 25–29 June 2018; pp. 780–785.

- Tarawneh, A.S.; Chetverikov, D.; Verma, C.; Hassanat, A.B. Stability and reduction of statistical features for image classification and retrieval: Preliminary results. In Proceedings of the 2018 9th International Conference on Information and Communication Systems (ICICS), Irbid, Jordan, 3–5 April 2018.

- Tarawneh, A.S.; Hassanat, A.B.A.; Almohammadi, K.; Chetverikov, D.; Bellinger, C. SMOTEFUNA: Synthetic Minority Over-Sampling Technique Based on Furthest Neighbour Algorithm. IEEE Access 2020, 8, 59069–59082.

- Grandini, M.; Bagli, E.; Visani, G. Metrics for Multi-Class Classification: An Overview. arXiv 2020, arXiv:2008.05756.

- Tewari, A.; Bartlett, P.L. On the Consistency of Multiclass Classification Methods. J. Mach. Learn. Res. 2005, 3559, 143–157.

- Umair, M.; Khan, M.S.; Ahmed, F.; Baothman, F.; Alqahtani, F.; Alian, M.; Ahmad, J. Detection of COVID-19 Using Transfer Learning and Grad-CAM Visualization on Indigenously Collected X-ray Dataset. Sensors 2021, 21, 5813.

- Abbas, A.; Abdelsamea, M.M.; Gaber, M.M. Classification of COVID-19 in chest X-ray images using DeTraC deep convolutional neural network. Appl. Intell. 2020, 51, 854–864.

- Wang, S.; Kang, B.; Ma, J.; Zeng, X.; Xiao, M.; Guo, J.; Cai, M.; Yang, J.; Li, Y.; Meng, X.; et al. A deep learning algorithm using CT images to screen for Corona Virus Disease (COVID-19). medRxiv 2020, 31, 6096–6104.

- Hussain, E.; Hasan, M.; Rahman, A.; Lee, I.; Tamanna, T.; Parvez, M.Z. CoroDet: A deep learning based classification for COVID-19 detection using chest X-ray images. Chaos Solitons Fractals 2020, 142, 110495.

- Khan, A.I.; Shah, J.L.; Bhat, M.M. CoroNet: A deep neural network for detection and diagnosis of COVID-19 from chest X-ray images. Comput. Methods Programs Biomed. 2020, 196, 105581.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Sitaula, C.; Hossain, M.B. Attention-based VGG-16 model for COVID-19 chest X-ray image classification. Appl. Intell. 2020, 51, 2850–2863.

- Rahaman, M.M.; Li, C.; Yao, Y.; Kulwa, F.; Rahman, M.A.; Wang, Q.; Qi, S.; Kong, F.; Zhu, X.; Zhao, X. Identification of COVID-19 samples from chest X-ray images using deep learning: A comparison of transfer learning approaches. J. X-ray Sci. Technol. 2020, 28, 821–839.

- Kumar, P.; Kumari, S. Detection of Coronavirus Disease (COVID-19) Based on Deep Features. Available online: https://www.preprints.org/Manuscript/202003.0300/V1 (accessed on 9 March 2020).

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 4278–4284.

- Rehman, A.; Naz, S.; Khan, A.; Zaib, A.; Razzak, I. Improving Coronavirus (COVID-19) Diagnosis Using Deep Transfer Learning. In Proceedings of the International Conference on Information Technology and Applications, Dubai, United Arab Emirates, 14–15 November 2021; Springer: Singapore, 2022; pp. 23–37.

- Salih, S.Q.; Abdulla, H.K.; Ahmed, Z.S.; Surameery, N.M.S.; Rashid, R.D. Modified AlexNet Convolution Neural Network For Covid-19 Detection Using Chest X-ray Images. Kurd. J. Appl. Res. 2020, 5, 119–130.

- Pham, T.D. Classification of COVID-19 chest X-rays with deep learning: New models or fine tuning? Health Inf. Sci. Syst. 2020, 9, 2.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861. Available online: http://arxiv.org/abs/1704.04861 (accessed on 17 May 2022).

- Hemdan, E.E.; Shouman, M.A.; Karar, M.E. COVIDXnet: A framework of deep learning classifiers to diagnose COVID19 in X-ray images. arXiv 2020, arXiv:2003.11055. Available online: http://arxiv.org/abs/2003.11055 (accessed on 16 June 2022).

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Kumar, R.; Arora, R.; Bansal, V.; Sahayasheela, V.J.; Buckchash, H.; Imran, J.; Narayanan, N.; Pandian, G.N.; Raman, B. Accurate prediction of COVID-19 using chest X-ray images through deep feature learning model with smote and machine learning classifiers. medRxiv 2020, 20063461.

- Chollet, F. Xception: Deep learning with depth wise separable convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

- Zhang, Y.-D.; Satapathy, S.C.; Zhang, X.; Wang, S.-H. COVID-19 Diagnosis via DenseNet and Optimization of Transfer Learning Setting. Cogn. Comput. 2021, 1–17.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. arXiv 2017, arXiv:1707.01083v2.

- Alqudah, A.; Qazan, S.; Alqudah, A. Automated systems for detection of COVID-19 using chest X-ray images and lightweight convolutional neural networks. Res. Sq. 2020, 24, 1207–1220.

- Redmon, J.; Farhadi, A. Yolo9000: Better, faster, stronger. arXiv 2017, arXiv:1612.08242.

- Mohammed, K.K. Automated Detection of COVID-19 Coronavirus Cases Using Deep Neural Networks with X-ray Images. Al-Azhar Univ. J. Virus Res. Stud. 2020, 2, 1207–1220.

- Attallah, O. ECG-BiCoNet: An ECG-based pipeline for COVID-19 diagnosis using Bi-Layers of deep features integration. Comput. Biol. Med. 2022, 142, 105210.

- Ozturk, T.; Talo, M.; Yildirim, E.A.; Baloglu, U.B.; Yildirim, O.; Acharya, U.R. Automated detection of COVID-19 cases using deep neural networks with X-ray images. Comput. Biol. Med. 2020, 121, 103792.

- Cohen, J.P.; Morrison, P.; Dao, L.; Roth, K.; Duong, T.Q.; Ghassemi, M. COVID-19 image data collection: Prospective predictions are the future. arXiv 2020, arXiv:2006.11988. Available online: http://arxiv.org/abs/2006.11988 (accessed on 30 June 2022).

- COVID-19 Dataset. Available online: https://github.com/ieee8023/covid-chestxray-dataset (accessed on 20 June 2022).

- COVID-19 Clinical Library. Available online: https://www.figure1.com/covid-19 (accessed on 20 July 2020).

- Wang, L.; Lin, Z.Q.; Wong, A. COVID-Net: A tailored deep convolutional neural network design for detection of COVID-19 cases from chest X-ray images. Sci. Rep. 2020, 10, 19549.

- Chowdhury, M.E.H.; Rahman, T.; Khandakar, A.; Mazhar, R.; Kadir, M.A.; Bin Mahbub, Z.; Islam, K.R.; Khan, M.S.; Iqbal, A.; Al Emadi, N.; et al. Can AI Help in Screening Viral and COVID-19 Pneumonia? arXiv 2020, arXiv:2003.13145. Available online: http://arxiv.org/abs/2003.13145 (accessed on 15 April 2022).

- Shiraishi, J.; Katsuragawa, S.; Ikezoe, J.; Matsumoto, T.; Kobayashi, T.; Komatsu, K.-I.; Matsui, M.; Fujita, H.; Kodera, Y.; Doi, K. Development of a digital image database for chest radiographs with and without a lung nodule: Receiver operating characteristic analysis of Radiologists’ detection of pulmonary nodules. Am. J. Roentgenol. 2000, 174, 71–74.

- RSNA Pneumonia Detection Challenge Dataset. Available online: https://www.kaggle.com/c/rsna-pneumonia-detection-challenge (accessed on 29 June 2022).

- Italian Society of Medical and Interventional Radiology (SIRM) COVID-19 DATABASE. Available online: https://www.sirm.org/en/category/articles/covid-19-database/ (accessed on 28 September 2020).

- Ballinger, J.; Murphy, A. Resonance and Radiofrequency. Available online: https://radiopaedia.org/ (accessed on 1 August 2022).

- Irvin, J.; Rajpurkar, P.; Ko, M.; Yu, Y.; Ciurea-Ilcus, S.; Chute, C.; Marklund, H.; Haghgoo, B.; Ball, R.; Shpanskaya, K.; et al. CheXpert: A Large Chest Radiograph Dataset with Uncertainty Labels and Expert Comparison. Proc. Conf. AAAI Artif. Intell. 2019, 33, 590–597.

- ChestImaging. Twitter COVID-19 CXR Dataset, @ChestImaging (Twitter Account), Cardiothoracic Radiologist. Available online: https://twitter.com/ChestImaging (accessed on 27 June 2020).

- Larxel. Pediatric Pneumonia Chest X-ray. Kaggle.com. Available online: https://www.kaggle.com/datasets/andrewmvd/pediatric-pneumonia-chest-xray (accessed on 27 June 2022).

- Kermany, D.; Zhang, K.; Goldbaum, M. Labeled Optical Coherence Tomography (OCT) and Chest X-ray Images for Classification. Mendeley Data. Available online: https://data.mendeley.com/datasets/rscbjbr9sj/2 (accessed on 14 December 2021).

- Kermany, D.S.; Goldbaum, M.; Cai, W.; Valentim, C.C.S.; Liang, H.; Baxter, S.L.; McKeown, A.; Yang, G.; Wu, X.; Yan, F.; et al. Identifying Medical Diagnoses and Treatable Diseases by Image-Based Deep Learning. Cell 2018, 172, 1122–1131.

- Open Access Biomedical Image Search Engine. National Library of Medicine: Bethesda, MD, USA. 2020. Available online: https://openi.nlm.nih.gov/ (accessed on 18 March 2020).

- Mangal, A.; Kalia, S.; Rajgopal, H.; Rangarajan, K.; Namboodiri, V.; Banerjee, S.; Arora, C. CovidAID: COVID-19 detection using chest X-ray. arXiv 2020, arXiv:2004.09803.

- Wehbe, R.M.; Sheng, J.; Dutta, S.; Chai, S.; Dravid, A.; Barutcu, S.; Wu, Y.; Cantrell, D.R.; Xiao, N.; Allen, B.D.; et al. DeepCOVID-XR: An Artificial Intelligence Algorithm to Detect COVID-19 on Chest Radiographs Trained and Tested on a Large US Clinical Dataset. Radiology 2020, 299, E167–E176.

- Afshar, P.; Heidarian, S.; Naderkhani, F.; Oikonomou, A.; Plataniotis, K.N.; Mohammadi, A. COVID-CAPS: A capsule network-based framework for identification of COVID-19 cases from X-ray images. Pattern Recognit. Lett. 2020, 138, 638–643.

- Bassi, P.R.A.S.; Attux, R. A deep convolutional neural network for COVID-19 detection using chest X-rays. Res. Biomed. Eng. 2021, 38, 139–148.

- Zhang, Y.; Niu, S.; Qiu, Z.; Wei, Y.; Zhao, P.; Yao, J.; Huang, J.; Wu, Q.; Tan, M. COVID-DA: Deep domain adaptation from typical pneumonia to COVID-19. arXiv 2020, arXiv:2005.01577.

- Khobahi, S.; Agarwal, C.; Soltanalian, M. CoroNet: A deep network architecture for semi-supervised task-based identification of COVID-19 from chest X-ray images. medRxiv 2020.

- Hira, S.; Bai, A.; Hira, S. An automatic approach based on CNN architecture to detect Covid-19 disease from chest X-ray images. Appl. Intell. 2020, 51, 2864–2889.

- Available online: https://blog.exsilio.com/all/accuracy-precision-recall-f1-score-interpretation-of-performance-measures (accessed on 27 June 2022).

- Aslan, M.F.; Unlersen, M.F.; Sabanci, K.; Durdu, A. CNN-based transfer learning–BiLSTM network: A novel approach for COVID-19 infection detection. Appl. Soft Comput. 2021, 98, 106912.

- Aslan, M.F.; Sabanci, K.; Durdu, A.; Unlersen, M.F. COVID-19 diagnosis using state-of-the-art CNN architecture features and Bayesian Optimization. Comput. Biol. Med. 2022, 142, 105244.

- Ucar, F.; Korkmaz, D. COVIDiagnosis-net: Deep Bayes-SqueezeNet based diagnosis of the coronavirus disease 2019 (COVID-19) from X-ray images. Med. Hypotheses 2020, 140, 109761.

- Rajaraman, S.; Antani, S. Training deep learning algorithms with weakly labeled pneumonia chest X-ray data for COVID-19 detection. medRxiv 2020.

- Duchesne, S.; Gourdeau, D.; Archambault, P.; Chartrand-Lefebvre, C.; Dieumegarde, L.; Forghani, R.; Gagné, C.; Hains, A.; Hornstein, D.; Le, H.; et al. Tracking and predicting COVID-19 radiological trajectory using deep learning on chest Xrays: Initial accuracy testing. medRxiv 2020.

- Abdallah, W.; Mnasri, S.; Nasri, N.; Val, T. Emergent IoT Wireless Technologies beyond the year 2020: A Comprehensive Comparative Analysis. In Proceedings of the 2020 International Conference on Computing and Information Technology (ICCIT-1441), Tabuk, Saudi Arabia, 9–10 September 2020; pp. 1–5.

- Tarawneh, A.S.; Hassanat, A.B.; Celik, C.; Chetverikov, D.; Rahman, M.S.; Verma, C. Deep Face Image Retrieval: A Comparative Study with Dictionary Learning. In Proceedings of the 2019 10th International Conference on Information and Communication Systems (ICICS), Irbid, Jordan, 11–13 June 2019; pp. 185–192.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).